Significance

Using transcranial magnetic stimulation and functional magnetic resonance imaging techniques, we demonstrate here that the transfer of perceptual learning from a task involving coherent motion to a task involving noisy motion can induce a functional substitution of V3A (one of the visual areas in the extrastriate visual cortex) for MT+ (middle temporal/medial superior temporal cortex) to process noisy motion. This finding suggests that perceptual learning in visually normal adults shapes the functional architecture of the brain in a much more pronounced way than previously believed. The effects of perceptual learning extend far beyond the retuning of specific neural populations that mediate performance of the trained task. Learning could dramatically modify the inherent functional specializations of visual cortical areas and dynamically reweight their contributions to perceptual decisions based on their representational qualities.

Keywords: perceptual learning, motion, psychophysics, transcranial magnetic stimulation, functional magnetic resonance imaging

Abstract

Training can improve performance of perceptual tasks. This phenomenon, known as perceptual learning, is strongest for the trained task and stimulus, leading to a widely accepted assumption that the associated neuronal plasticity is restricted to brain circuits that mediate performance of the trained task. Nevertheless, learning does transfer to other tasks and stimuli, implying the presence of more widespread plasticity. Here, we trained human subjects to discriminate the direction of coherent motion stimuli. The behavioral learning effect substantially transferred to noisy motion stimuli. We used transcranial magnetic stimulation (TMS) and functional magnetic resonance imaging (fMRI) to investigate the neural mechanisms underlying the transfer of learning. The TMS experiment revealed dissociable, causal contributions of V3A (one of the visual areas in the extrastriate visual cortex) and MT+ (middle temporal/medial superior temporal cortex) to coherent and noisy motion processing. Surprisingly, the contribution of MT+ to noisy motion processing was replaced by V3A after perceptual training. The fMRI experiment complemented and corroborated the TMS finding. Multivariate pattern analysis showed that, before training, among visual cortical areas, coherent and noisy motion was decoded most accurately in V3A and MT+, respectively. After training, both kinds of motion were decoded most accurately in V3A. Our findings demonstrate that the effects of perceptual learning extend far beyond the retuning of specific neural populations for the trained stimuli. Learning could dramatically modify the inherent functional specializations of visual cortical areas and dynamically reweight their contributions to perceptual decisions based on their representational qualities. These neural changes might serve as the neural substrate for the transfer of perceptual learning.

Perceptual learning, an enduring improvement in the performance of a sensory task resulting from practice, has been widely used as a model to study experience-dependent cortical plasticity in adults (1). However, at present, there is no consensus on the nature of the neural mechanisms underlying this type of learning. Perceptual learning is often specific to the physical properties of the trained stimulus, leading to the hypothesis that the underlying neural changes occur in sensory coding areas (2). Electrophysiological and brain imaging studies have shown that visual perceptual learning alters neural response properties in primary visual cortex (3, 4) and extrastriate areas including V4 (5) and MT+ (middle temporal/medial superior temporal cortex) (6), as well as object selective areas in the inferior temporal cortex (7, 8). An alternative hypothesis proposes that perceptual learning is mediated by downstream cortical areas that are responsible for attentional allocation and/or decision-making, such as the intraparietal sulcus (IPS) and anterior cingulate cortex (9, 10).

Learning is most beneficial when it enables generalized improvements in performance with other tasks and stimuli. Although specificity is one of the hallmarks of perceptual learning, transfer of learning to untrained stimuli and tasks does occur, to a greater or lesser extent (2). For example, visual perceptual learning of an orientation task involving clear displays (a Gabor patch) also improved performance of an orientation task involving noisy displays (a Gabor patch embedded in a random-noise mask) (11). Transfer of perceptual learning to untrained tasks indicates that neuronal plasticity accompanying perceptual learning is not restricted to brain circuits that mediate performance of the trained task, and perceptual training may lead to more widespread and profound plasticity than we previously believed. However, this issue has rarely been investigated. Almost all studies concerned with the neural basis of perceptual learning have used the same task and stimuli for training and testing. One exception is a study conducted by Chowdhury and DeAngelis (12). It is known that learning of fine depth discrimination in a clear display can transfer to coarse depth discrimination in a noisy display (13). Chowdhury and DeAngelis (12) examined the effect of fine depth discrimination training on the causal contribution of macaque MT to coarse depth discrimination. MT activity was essential for coarse depth discrimination before training. However, after training, inactivation of MT had no effect on coarse depth discrimination. This result is striking, but the neural substrate of learning transfer was not revealed.

Here, we performed a transcranial magnetic stimulation (TMS) experiment and a functional magnetic resonance imaging (fMRI) experiment, seeking to identify the neural mechanisms involved in the transfer of learning from a task involving coherent motion (i.e., a motion stimulus containing 100% signal) to a task involving noisy motion (i.e., a motion stimulus containing only 40% signal and 60% noise: 40% coherent motion). By testing with stimuli other than the trained stimulus, we uncovered much more profound functional changes in the brain than expected. Before training, V3A and MT+ were the dominant areas for the processing of coherent and noisy motion, respectively. Learning modified their inherent functional specializations, whereby V3A superseded MT+ as the dominant area for the processing of noisy motion after training. This change in functional specialization involving key areas within the cortical motion processing network served as the neural substrate for the transfer of motion perceptual learning.

Results

Perceptual Learning of Motion Direction Discrimination.

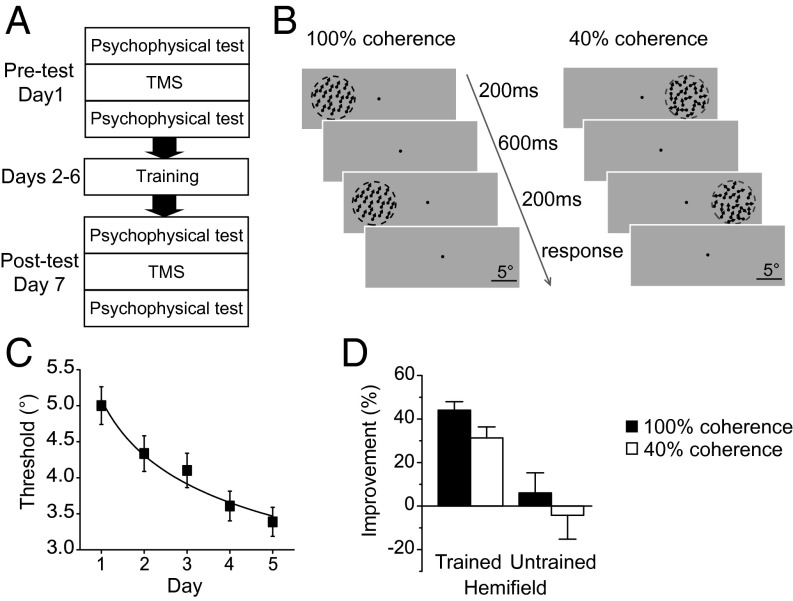

In our first experiment, we used TMS to identify the causal contributions of V3A and MT+ to coherent and noisy motion processing before and after training. We focused on V3A and MT+ because they are both pivotal areas in the cortical network that supports motion perception (14). Furthermore, both V3A and MT+ are bilateral, which allowed us to train one visual hemifield and leave the other hemifield untrained. The experiment consisted of three phases: pretraining test (Pre), motion direction discrimination training, and posttraining test (Post) (Fig. 1A).

Fig. 1.

The TMS experimental protocol and psychophysical results. (A) Experimental protocol. Subjects underwent five daily training sessions. The pre- and posttraining tests took place on the days immediately before and after training. In the tests, before and after TMS stimulation, motion direction discrimination thresholds at the 100% and 40% coherence levels were measured in both the left and right visual hemifields. (B) Schematic description of a two-alternative forced-choice trial within a QUEST staircase designed to measure motion direction discrimination thresholds at the 100% and 40% coherence levels. (C) Group mean learning curve. Motion direction discrimination thresholds are plotted as a function of training day. (D) Percentage improvements in motion direction discrimination performance after training. Error bars denote 1 SEM across subjects.

Psychophysical tests and TMS were performed on the days before (Pre) and after (Post) training. Motion direction discrimination thresholds were measured for each combination of stimulus type (100% coherent: the trained stimulus; 40% coherent: the untrained stimulus) and hemifield (trained and untrained) before and after TMS. TMS was delivered using an offline continuous theta burst stimulation (cTBS) protocol. cTBS induces cortical suppression for up to 60 min (15), which was enough time for all subjects to complete the motion direction discrimination threshold measures. Subjects were randomly assigned to receive TMS of V3A (n = 10) or MT+ (n = 10). Only the hemisphere that was contralateral to the trained hemifield was stimulated.

During training, subjects completed five daily motion direction discrimination training sessions. On each trial, two 100% coherent random dot kinematograms (RDKs) with slightly different directions were presented sequentially at 9° eccentricity in one visual hemifield (left or right). Within a two-alternative forced-choice task, subjects judged the change in direction from the first to the second RDK (clockwise or counterclockwise) (Fig. 1B). A QUEST staircase was used to adaptively control the angular size of the change in direction within each trial and provided an estimate of each subject’s 75% correct discrimination threshold.
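The adaptive logic of such a staircase can be illustrated with a simplified Bayesian procedure in the spirit of QUEST. This is a sketch only, not the QUEST implementation used in the study: the psychometric function, its slope, the flat prior, the threshold grid, and the simulated observer are all assumptions introduced for illustration.

```python
import random


def p_correct(delta_deg, threshold_deg, slope=3.5):
    """Assumed 2AFC psychometric function: 0.5 at delta = 0, 0.75 at delta = threshold."""
    exponent = min((delta_deg / threshold_deg) ** slope, 50.0)  # cap keeps p below 1
    return 1.0 - 0.5 * 2.0 ** (-exponent)


def run_staircase(true_threshold_deg, n_trials=40, seed=0):
    """Simulate an observer and track the posterior over the 75% correct threshold."""
    rng = random.Random(seed)
    # Hypothetical grid of candidate thresholds (deg), log-spaced from 0.5 to about 20.6.
    grid = [0.5 * 1.1 ** i for i in range(40)]
    posterior = [1.0 / len(grid)] * len(grid)  # flat prior (assumption)
    for _ in range(n_trials):
        # Test at the current posterior-mean threshold estimate.
        test_delta = sum(t * p for t, p in zip(grid, posterior))
        correct = rng.random() < p_correct(test_delta, true_threshold_deg)
        # Bayes update: likelihood of the observed response under each candidate.
        likelihood = [
            p_correct(test_delta, t) if correct else 1.0 - p_correct(test_delta, t)
            for t in grid
        ]
        posterior = [p * l for p, l in zip(posterior, likelihood)]
        total = sum(posterior)
        posterior = [p / total for p in posterior]
    estimate = sum(t * p for t, p in zip(grid, posterior))
    return estimate, posterior


estimate, posterior = run_staircase(true_threshold_deg=3.0)
print(f"Estimated 75% correct threshold: {estimate:.2f} deg")
```

Placing each trial at the current threshold estimate concentrates trials where the response is most informative, which is why adaptive procedures of this kind need relatively few trials per threshold.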

Similar to the original finding by Ball and Sekuler (16), subjects’ discrimination thresholds gradually decreased throughout training (Fig. 1C). The perceptual learning effect was quantified as the percentage change in performance from the pre-TMS psychophysical measures made at Pre to the pre-TMS measures made at Post (Fig. 1D). In the trained hemifield, training led to a significant decrease in discrimination threshold for both the trained stimulus [44%; t(19) = 11.46; P < 0.001] and the untrained stimulus [31%; t(19) = 5.95; P < 0.001]. The transfer from the trained to the untrained stimulus was substantial (71%, the percentage threshold decrease for the untrained stimulus/the percentage threshold decrease for the trained stimulus × 100%). However, little learning occurred in the untrained hemifield for either stimulus [both t(19) < 0.66; P > 0.05]. Note that none of the learning effects differed significantly between the V3A and MT+ stimulation groups [all t(18) < 1.11; P > 0.05].
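The two behavioral indices defined above reduce to simple arithmetic, sketched here. The threshold values are hypothetical and were chosen only so that the improvements come out at 44% and 31%; they are not data from the study.

```python
def percent_improvement(pre_threshold, post_threshold):
    """Percentage decrease in threshold from Pre to Post (positive = improvement)."""
    return (pre_threshold - post_threshold) / pre_threshold * 100.0


def transfer_index(untrained_improvement, trained_improvement):
    """Improvement for the untrained stimulus as a percentage of that for the trained one."""
    return untrained_improvement / trained_improvement * 100.0


# Hypothetical pre-TMS thresholds (deg) at Pre and Post in the trained hemifield.
trained_gain = percent_improvement(5.0, 2.8)     # 100% coherent (trained) stimulus
untrained_gain = percent_improvement(10.0, 6.9)  # 40% coherent (untrained) stimulus
transfer = transfer_index(untrained_gain, trained_gain)

print(f"trained: {trained_gain:.1f}%, untrained: {untrained_gain:.1f}%, "
      f"transfer: {transfer:.1f}%")
```

Applied to the rounded group percentages (31% and 44%), this ratio gives roughly 70%; the reported 71% presumably reflects unrounded values, although that is an assumption.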

A Double Dissociation Between the Causal Contributions of V3A and MT+ to Motion Processing Before Training.

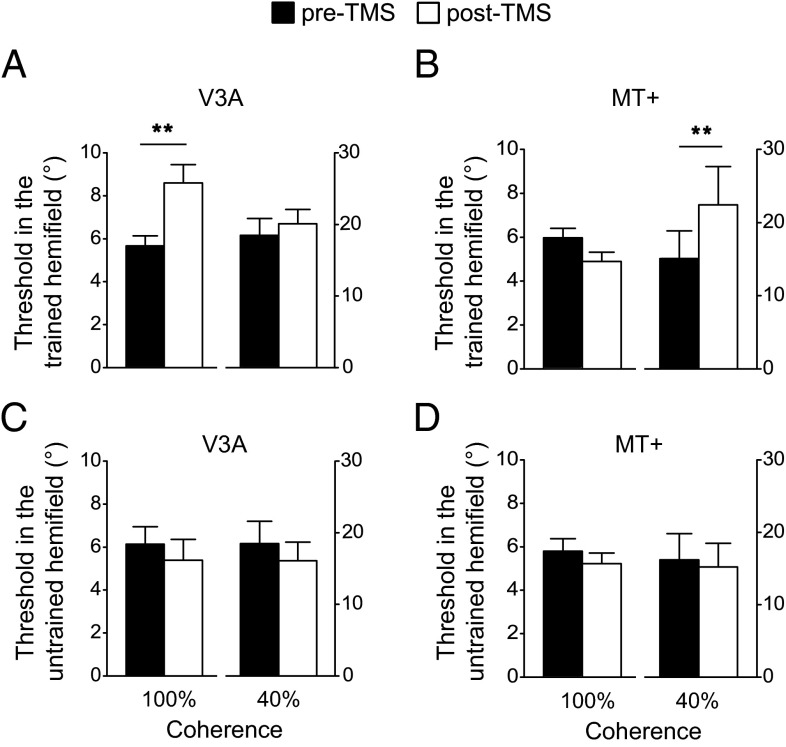

Before training, we found a double dissociation between the effects of TMS delivered to V3A and MT+. For each hemifield (trained and untrained) and each stimulation group (V3A and MT+), subjects’ motion discrimination thresholds were subjected to a two-way repeated-measures ANOVA with TMS (pre-TMS and post-TMS) and stimulus coherence level (100% and 40%) as within-subject factors (Fig. 2). Because the discrimination task with the 40% coherent RDK was much more difficult than that with the 100% coherent RDK, all the main effects of stimulus coherence level were significant. Therefore, here we focused on the main effects of TMS and the interactions between TMS and stimulus coherence level.
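For a 2 × 2 within-subject design such as this one, each ANOVA effect has one degree of freedom, so its F(1, n − 1) statistic equals the square of a one-sample t statistic computed on a per-subject contrast. The sketch below uses that equivalence; it is not the authors' analysis code, and the threshold values and function names are hypothetical.

```python
import math
from statistics import mean, stdev


def one_sample_t(values):
    """t statistic for testing whether the mean of `values` differs from zero."""
    return mean(values) / (stdev(values) / math.sqrt(len(values)))


def rm_anova_2x2(pre100, post100, pre40, post40):
    """F statistics for the TMS main effect and the TMS x coherence interaction.

    Each argument holds one threshold per subject, in the same subject order.
    """
    cells = list(zip(pre100, post100, pre40, post40))
    # Main effect of TMS: post-TMS minus pre-TMS, averaged over coherence levels.
    tms_contrast = [(b + d) / 2 - (a + c) / 2 for a, b, c, d in cells]
    # Interaction: TMS effect at 100% coherence minus TMS effect at 40% coherence.
    interaction_contrast = [(b - a) - (d - c) for a, b, c, d in cells]
    return {
        "F_tms": one_sample_t(tms_contrast) ** 2,
        "F_interaction": one_sample_t(interaction_contrast) ** 2,
        "df": (1, len(cells) - 1),
    }


# Hypothetical thresholds (deg) for five subjects.
result = rm_anova_2x2(
    pre100=[4.1, 3.8, 5.0, 4.4, 3.9],
    post100=[5.2, 4.9, 5.6, 5.8, 4.6],
    pre40=[9.8, 10.5, 8.9, 11.2, 9.4],
    post40=[10.1, 10.2, 9.3, 11.0, 9.9],
)
print(result)
```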

Fig. 2.

Motion direction discrimination thresholds before training. (A) Thresholds in the trained hemifield before and after TMS stimulation of V3A. (B) Thresholds in the trained hemifield before and after TMS stimulation of MT+. (C) Thresholds in the untrained hemifield before and after TMS stimulation of V3A. (D) Thresholds in the untrained hemifield before and after TMS stimulation of MT+. Asterisks indicate a significant difference (**P < 0.01) between pre-TMS and post-TMS thresholds. Error bars denote 1 SEM across subjects.

In the trained hemifield (contralateral to the hemisphere that received TMS), for the V3A stimulation group (Fig. 2A), the main effect of TMS was marginally significant [F(1,9) = 4.57; P = 0.06] and the interaction was significant [F(1,9) = 9.70; P < 0.05]. Paired t tests showed that after TMS, performance was impaired and discrimination thresholds were significantly elevated for the 100% coherent stimulus [t(9) = 3.30; P < 0.01]. However, performance for the 40% coherent stimulus was unaffected by TMS [t(9) = 1.29; P > 0.05]. For the MT+ stimulation group (Fig. 2B), we found the opposite pattern: The main effect of TMS and the interaction were significant [both F(1,9) > 10.32; P < 0.05]. After stimulation, discrimination thresholds were significantly elevated for the 40% coherent stimulus [t(9) = 3.71; P < 0.01], but not for the 100% coherent stimulus [t(9) = 2.24; P > 0.05]. These results demonstrated that V3A stimulation specifically impaired the processing of 100% coherent motion, whereas MT+ stimulation specifically impaired the processing of 40% coherent motion. This effect was highly specific to the trained hemifield. In particular, there was no significant main effect of TMS or interaction for either the V3A or MT+ stimulation group in the untrained hemifield [ipsilateral to the hemisphere that received TMS; all F(1,9) < 0.94; P > 0.05; Fig. 2 C and D].

Training Changes the Causal Contributions of V3A and MT+ to Motion Processing.

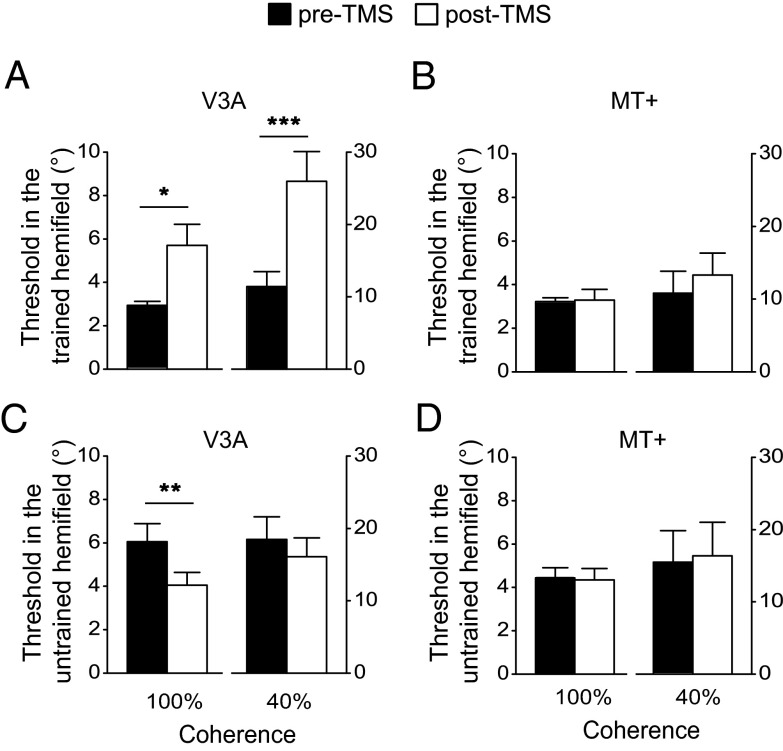

The same statistical analysis used for the pretraining data was applied to the posttraining data. In the trained hemifield, for the V3A stimulation group (Fig. 3A), the main effect of TMS and the interaction between TMS and stimulus coherence level were significant [both F(1,9) > 23.56; P < 0.01]. After TMS, subjects’ discrimination thresholds were significantly elevated for both the 100% and 40% coherent stimuli [both t(9) > 3.14; P < 0.05]. For the MT+ stimulation group (Fig. 3B), the main effect of TMS and the interaction were not significant [both F(1,9) < 3.27; P > 0.05]. These results demonstrated that, after training, TMS of V3A disrupted motion processing not only for the 100% coherent stimulus but also for the 40% coherent stimulus. Surprisingly, TMS of MT+ no longer had any effect on task performance for the 40% coherent stimulus, which was in sharp contrast to the pronounced TMS effect for this stimulus before training.

Fig. 3.

Motion direction discrimination thresholds after training. (A) Thresholds in the trained hemifield before and after TMS stimulation of V3A. (B) Thresholds in the trained hemifield before and after TMS stimulation of MT+. (C) Thresholds in the untrained hemifield before and after TMS stimulation of V3A. (D) Thresholds in the untrained hemifield before and after TMS stimulation of MT+. Asterisks indicate a significant difference (*P < 0.05, **P < 0.01, ***P < 0.001) between pre-TMS and post-TMS thresholds. Error bars denote 1 SEM across subjects.

In the untrained hemifield, for the V3A stimulation group (Fig. 3C), the interaction was not significant [F(1,9) = 0.07; P > 0.05], but the main effect of TMS was significant [F(1,9) = 13.08; P < 0.01]. After TMS, subjects’ discrimination thresholds decreased for the 100% coherent stimulus [t(9) = 3.58; P < 0.01]. This facilitation might reflect a TMS-induced disinhibition of contralateral cortical activity (17), which will be a topic for future investigation. For the MT+ stimulation group (Fig. 3D), the main effect of TMS and the interaction were not significant [both F(1,9) < 0.77; P > 0.05].

The TMS experiment demonstrated that before training, V3A and MT+ played causal and dissociable roles in the processing of the 100% and 40% coherent motion stimuli, respectively. Intriguingly, after training, the role of MT+ was replaced by V3A. A possible explanation for this phenomenon is that, before training, among visual cortical areas, the 100% and 40% coherent motion stimuli are best represented in V3A and MT+, respectively. However, after training, the representation of the 40% coherent motion stimulus in V3A was improved to the extent that this stimulus was better represented in V3A than in MT+. A natural consequence of neural representation change is representation readout/weight change by decision-making areas. If we assume that decision-making areas rely on the best stimulus representation, as posited by the lower-envelope principle on neural coding (18), then V3A would have superseded MT+ in supporting motion direction discrimination with the 40% coherent motion stimulus after training. We designed an fMRI experiment to test this hypothesis.

Training Effects on fMRI Decoding Accuracy in Visual Cortical Areas.

The fMRI experiment also had three phases: Pre, training, and Post. A group of 12 new subjects was recruited for this experiment. The training protocol and psychophysical measures of motion direction discrimination were identical to those used in the TMS experiment. Psychophysical tests and MRI scanning were performed at Pre and Post. Within the trained hemifield, training resulted in a significant decrease in discrimination threshold with both the trained stimulus [43%, t(11) = 8.15; P < 0.001] and the untrained stimulus [28%, t(11) = 5.95; P < 0.001]. The transfer from the trained to the untrained stimulus was substantial (66%). No significant improvement in performance occurred in the untrained hemifield [both t(11) < 1.41; P > 0.05], replicating the behavioral result in the TMS experiment.

During scanning, subjects viewed the 100% and 40% coherent stimuli in the trained hemifield. They performed the motion direction discrimination task with the stimuli that moved in the trained direction or in the direction orthogonal to the trained direction. To investigate how training modified the neural representation of the motion stimuli in visual cortical areas, especially in V3A and MT+, we used decoding analysis to classify the fMRI activation patterns evoked by the trained and orthogonal directions for the 100% and 40% coherent stimuli. Decoders were constructed by training linear support vector machines (SVMs) on multivoxel activation patterns in visual areas, and decoding accuracies were calculated after a leave-one-run-out cross-validation procedure. Independent SVMs were used on the pre- and posttraining data to maximize sensitivity to any learning-induced changes in the representation of the stimuli. To ensure that the representation information was fully extracted, all motion responsive voxels within each cortical area were included. Note that because of the limits of spatial resolution and sensitivity of fMRI, SVMs are not able to classify two directions with a very small near-threshold direction difference. Therefore, we could not perfectly match the stimuli used in the fMRI and psychophysical experiments. Instead, we trained decoders to classify two orthogonal directions and used decoding accuracy to quantify the representation quality. We reasoned that if training could improve the neural representations of the motion stimuli (especially in the trained direction), as suggested by the TMS and psychophysical results, it was possible that decoding accuracies for the orthogonal directions could be improved by training. Similar approaches have been used previously (19–21).
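The decoding procedure described above (a linear SVM evaluated with leave-one-run-out cross-validation) can be sketched with scikit-learn. This is an illustration on synthetic data, not the authors' pipeline: the number of runs, blocks, and voxels, the simulated signal, and the choice of scikit-learn are all assumptions.

```python
import numpy as np
from sklearn.model_selection import LeaveOneGroupOut, cross_val_score
from sklearn.svm import SVC

rng = np.random.default_rng(0)

# Hypothetical design: 8 runs, 10 blocks per run, 50 motion-responsive voxels in one area.
n_runs, blocks_per_run, n_voxels = 8, 10, 50
labels = np.tile(np.repeat([0, 1], blocks_per_run // 2), n_runs)  # 0 = trained, 1 = orthogonal
runs = np.repeat(np.arange(n_runs), blocks_per_run)               # run index of each block

# Synthetic multivoxel patterns: noise plus a weak direction-dependent signal.
direction_signal = 0.5 * rng.normal(size=n_voxels)
patterns = rng.normal(size=(labels.size, n_voxels)) + np.outer(labels - 0.5, direction_signal)

# Each fold trains on all runs but one and tests on the held-out run.
scores = cross_val_score(
    SVC(kernel="linear"), patterns, labels, groups=runs, cv=LeaveOneGroupOut()
)
decoding_accuracy = scores.mean()
print(f"Mean decoding accuracy over {len(scores)} folds: {decoding_accuracy:.2f}")
```

Holding out whole runs keeps training and test patterns from sharing run-specific noise, which would otherwise inflate the accuracy estimate. Running the procedure separately on pre- and posttraining data corresponds to the independent decoders described above.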

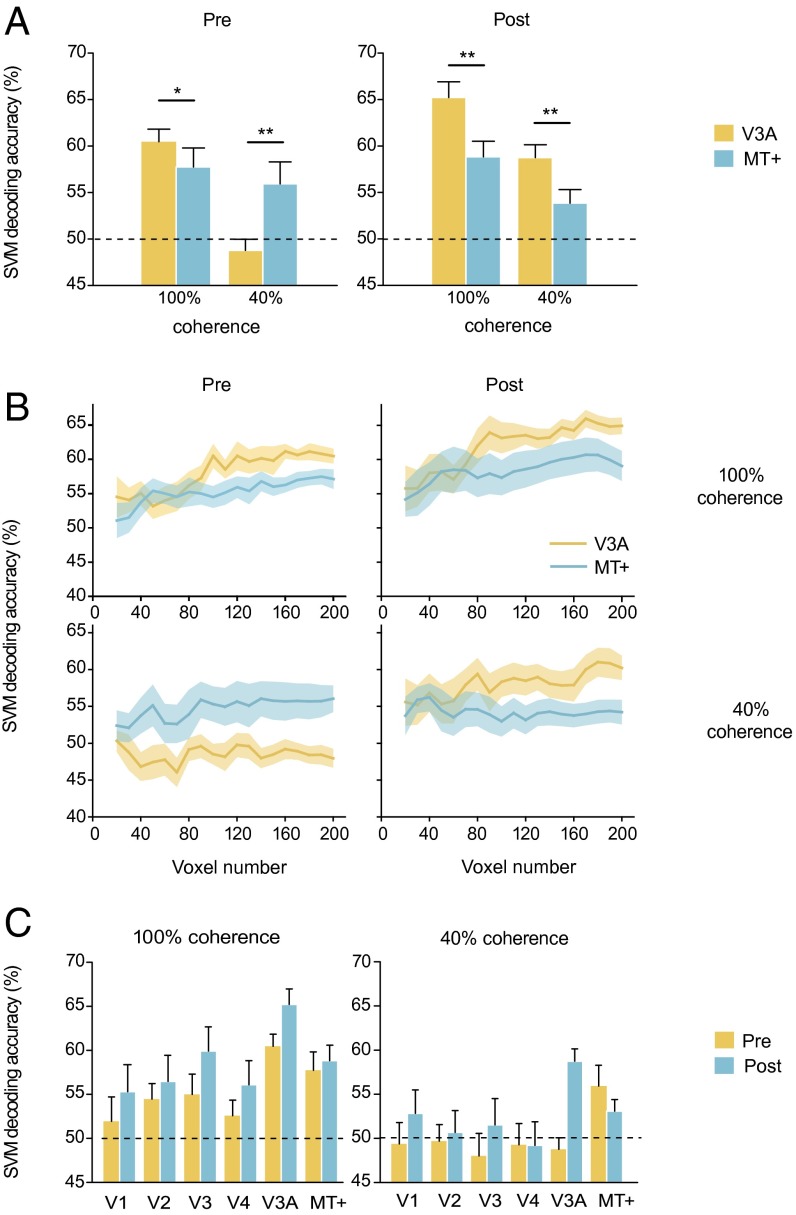

Before training, a repeated-measures ANOVA revealed a significant main effect of stimulus coherence level and a significant interaction between stimulus coherence level and area [V3A and MT+; both F(1,11) > 7.87; P < 0.05; Fig. 4A, Left]. For the 100% coherent motion, the decoding accuracy in V3A was higher than that in MT+ [t(11) = 2.49; P < 0.05], and both were above chance level [both t(11) > 3.75; P < 0.01]. For the 40% coherent motion, only the decoding accuracy in MT+ was above chance level [t(11) = 2.52; P < 0.05], and it was significantly higher than that in V3A [t(11) = 3.19; P < 0.01].

Fig. 4.

SVM decoding of the 100% and 40% coherent motion stimuli at Pre and Post. (A) Decoding accuracies in V3A and MT+. Asterisks indicate a significant difference (*P < 0.05, **P < 0.01) between V3A and MT+. (B) Decoding accuracies as a function of voxel number. (C) Decoding accuracies in visual cortical areas. Error bars and shaded regions denote 1 SEM across subjects.

After training, the decoding accuracies in V3A increased for both the 100% and 40% coherent motion [both t(11) > 3.09; P < 0.01]. ANOVA showed that the main effects of stimulus coherence level and area were significant [both F(1,11) > 11.32; P < 0.01; Fig. 4A, Right]. Furthermore, in stark contrast to the pretraining result, the decoding accuracies in V3A were higher than those in MT+, not only for the 100% coherent motion [t(11) = 2.85; P < 0.05] but also for the 40% coherent motion [t(11) = 3.51; P < 0.01]. Therefore, the classification abilities of these two areas before and after training were in accordance with their dissociable contributions to the 100% and 40% coherent motion processing revealed in the TMS experiment, supporting our hypothesis.

It should be pointed out that the decoding result did not depend on the number of selected voxels. For V3A and MT+, we selected 20–200 responsive voxels and performed the decoding analysis. The decoding performance generally improved as the voxel number increased. ANOVAs with factors of area (V3A and MT+) and voxel number (160–200) revealed significant main effects of area when conducted separately on data corresponding to each combination of stimulus coherence level (100% and 40%) and training [pretraining and posttraining; Fig. 4B; all F(1,11) > 4.92; P < 0.05].

In addition to V3A and MT+, we also investigated how training changed decoding accuracy in other visual cortical areas (Fig. 4C). For the 100% coherent motion, V3A had the highest decoding accuracy before and after training [paired t tests between V3A and other areas, all t(11) > 2.21; P < 0.05]. In addition to V3A and MT+, the decoding accuracies in V2 and V3 were also significantly above chance level before training [both t(11) > 2.26; P < 0.05]. Notably, only the decoding accuracy in V3A increased significantly after training [t(11) = 5.99; P < 0.01]. For the 40% coherent motion, MT+ was the only area with decoding accuracy that was significantly above chance level before training [t(11) = 2.52; P < 0.05]. However, after training, decoding accuracy in V3A increased dramatically [t(11) = 7.01; P < 0.01], allowing V3A to surpass MT+ and become the area with the highest decoding accuracy [paired t tests between V3A and other ROIs, all t(11) > 2.15; P < 0.05]. Taken together, these results suggest that decision-making areas in the brain rely on the visual area with the best decoding performance for the task at hand, and crucially, that this process is adaptive, whereby training-induced changes in decoding performance across visual areas are reflected in decision-making.

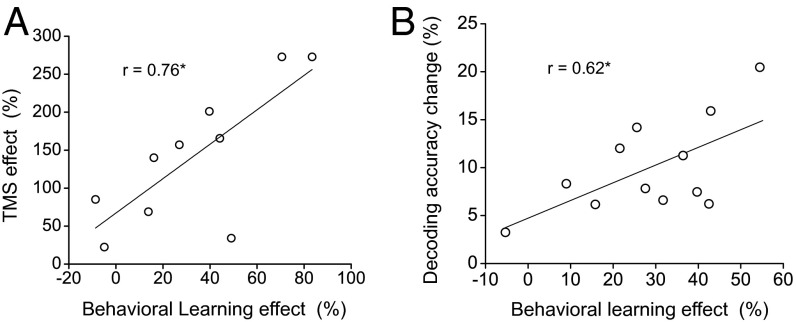

Correlations Among Psychophysical, TMS, and fMRI Effects.

To evaluate further the role of V3A in processing the 40% coherent motion after training, we calculated the correlation coefficients between the psychophysical and TMS/fMRI measures for the 40% coherent stimulus across individual subjects (Fig. 5). The coefficient between the behavioral learning effect and the posttraining TMS effect [(post-TMS threshold − pre-TMS threshold)/pre-TMS threshold × 100%] at V3A was 0.76 (P < 0.05), and the coefficient between the behavioral learning effect and the decoding accuracy change in V3A was 0.62 (P < 0.05), demonstrating a close relationship among the psychophysical, TMS, and fMRI effects. Specifically, the greater the improvement in direction discrimination of 40% coherent motion after training, the greater the involvement of V3A in the task. In addition, the correlation between behavioral learning and decoding accuracy change indicated that the use of orthogonal stimuli within the fMRI experiment allowed for the detection of learning-induced changes in stimulus representation.
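The TMS effect defined above and its across-subject correlation with the behavioral learning effect can be computed as in the following sketch. All numbers are hypothetical and serve only to show the calculation; they are not the study's data.

```python
import math


def tms_effect(pre_tms_threshold, post_tms_threshold):
    """Percentage change in threshold caused by TMS (positive = impairment)."""
    return (post_tms_threshold - pre_tms_threshold) / pre_tms_threshold * 100.0


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return covariance / (spread_x * spread_y)


# Hypothetical per-subject values at the 40% coherence level.
learning_effect = [12.0, 25.0, 31.0, 40.0, 18.0, 35.0]  # % threshold decrease after training
pre_tms = [6.0, 5.5, 5.0, 4.2, 6.4, 4.8]                # posttraining thresholds (deg)
post_tms = [6.3, 6.4, 6.1, 5.6, 6.9, 6.0]

effects = [tms_effect(a, b) for a, b in zip(pre_tms, post_tms)]
r = pearson_r(learning_effect, effects)
print(f"r = {r:.2f}")
```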

Fig. 5.

Correlations between the psychophysical and the TMS/fMRI measures at the 40% coherence level across individual subjects. (A) Correlation between the behavioral learning effect and the posttraining TMS effect at V3A. (B) Correlation between the behavioral learning effect and the decoding accuracy change in V3A. Asterisks indicate a significant correlation (*P < 0.05).

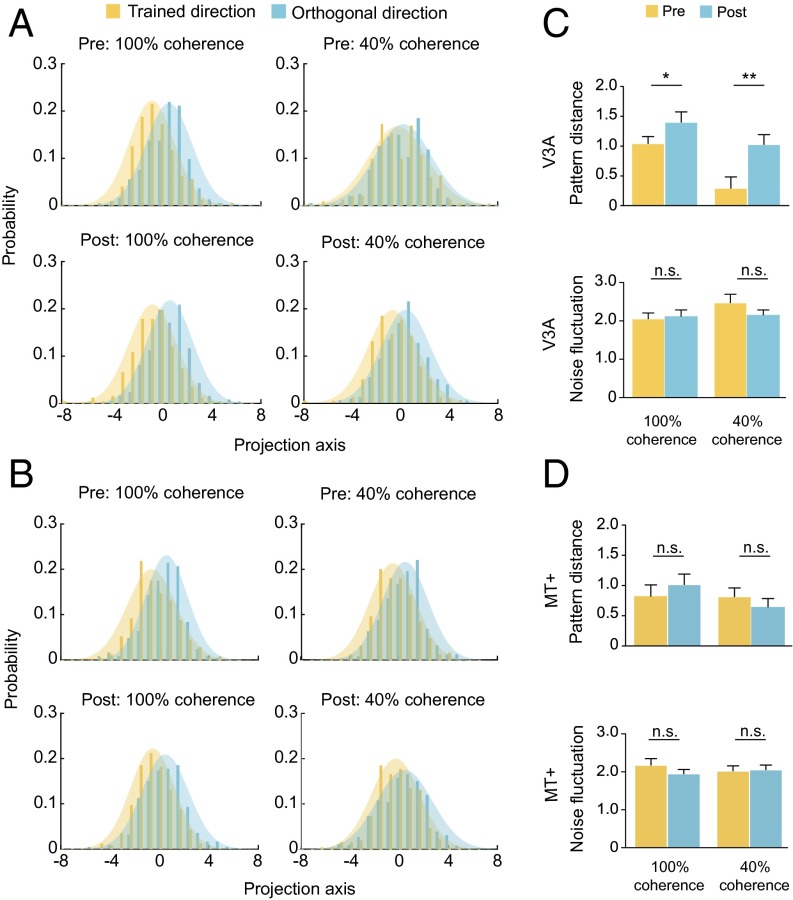

fMRI Linear Discriminant Analysis.

Training improved the decoding performance of V3A with the 40% coherent motion. Responses of V3A voxels to repeatedly presented motion blocks are noisy, fluctuating around a mean value. From the perspective of signal detection theory, there are two strategies to increase the signal-to-noise ratio to improve decoding performance: increasing the distance between the mean values of responses to the trained and the orthogonal directions, and decreasing the response fluctuations along the direction orthogonal to the decision line that separates the responses to the two directions (22). Here we asked which strategy V3A adopted during training.

Linear discriminant analysis (LDA) was used to project the multivoxel response patterns onto a linear discriminant dimension by weighting each voxel’s response to maximize the ratio of the between-direction (trained direction vs. orthogonal direction) variance to the within-direction variance. Using this method, we characterized the distributions of the two response patterns in the direction orthogonal to the decision line. In V3A, training reduced the overlap between the patterns evoked by the trained and the orthogonal directions for both the 100% and 40% coherent stimuli (Fig. 6A). We fitted the projected patterns with two Gaussians and compared the signal distance (i.e., the distance between the two Gaussians) and the noise fluctuation (i.e., the variance of the Gaussians) before and after learning. After training, we found significant increases in signal distance at both coherence levels [both t(11) > 2.49; P < 0.05], but no change in noise fluctuation [both t(11) < 1.39; P > 0.05; Fig. 6C]. In MT+, no change occurred in either signal distance or noise fluctuation (Fig. 6 B and D). Notably, the signal distance in V3A at the 40% coherence level, which was almost zero before training [t(11) = 1.57; P > 0.05], surpassed that in MT+ [t(11) = 3.09; P < 0.01] after training. These results confirmed the findings from the decoding analysis and demonstrated that perceptual training increased the pattern distance between the trained and the untrained (orthogonal) directions, rather than reducing the noise fluctuation of neural responses to the two directions.
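A minimal sketch of this projection, using Fisher's linear discriminant in NumPy, is given below. It is not the authors' implementation. The shrinkage regularization of the within-class covariance, the use of sample means and variances in place of an explicit Gaussian fit, and the synthetic patterns are all assumptions.

```python
import numpy as np


def lda_signal_and_noise(patterns_a, patterns_b, shrinkage=0.1):
    """Project two sets of patterns (samples x voxels) onto the Fisher discriminant.

    Returns the distance between the projected class means (signal distance)
    and the mean within-class variance of the projections (noise fluctuation).
    """
    mean_a, mean_b = patterns_a.mean(axis=0), patterns_b.mean(axis=0)
    centered = np.vstack([patterns_a - mean_a, patterns_b - mean_b])
    within_cov = centered.T @ centered / (len(centered) - 2)
    # Shrink toward a scaled identity so the covariance stays invertible (assumption).
    n_voxels = within_cov.shape[0]
    identity_target = np.trace(within_cov) / n_voxels * np.eye(n_voxels)
    within_cov = (1.0 - shrinkage) * within_cov + shrinkage * identity_target
    # Fisher weights maximize between-class relative to within-class variance.
    weights = np.linalg.solve(within_cov, mean_a - mean_b)
    weights /= np.linalg.norm(weights)
    proj_a, proj_b = patterns_a @ weights, patterns_b @ weights
    signal_distance = proj_a.mean() - proj_b.mean()
    noise_variance = (proj_a.var(ddof=1) + proj_b.var(ddof=1)) / 2.0
    return signal_distance, noise_variance


# Synthetic example: 40 blocks per direction, 30 voxels, weak mean difference.
rng = np.random.default_rng(1)
offset = 0.3 * rng.normal(size=30)
trained = rng.normal(size=(40, 30)) + offset
orthogonal = rng.normal(size=(40, 30))
signal_distance, noise_variance = lda_signal_and_noise(trained, orthogonal)
print(f"signal distance: {signal_distance:.2f}, noise variance: {noise_variance:.2f}")
```

Comparing these two quantities between Pre and Post separates a larger mean separation from a reduction in response variability, which is the distinction drawn in the text.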

Fig. 6.

LDA of fMRI response patterns to the trained and the orthogonal directions. (A and B) Projections of V3A and MT+ response patterns onto a linear discriminant dimension. (C and D) Signal distances and noise fluctuations in V3A and MT+. Asterisks indicate a significant difference (*P < 0.05, **P < 0.01) between the signal distances at Pre and Post. Error bars denote 1 SEM across subjects.

Discussion

Whether functional differences exist between V3A and MT+ has been a long-standing question in visual neuroscience. Most previous studies have found that V3A and MT+ exhibit similar functional properties when processing motion (23, 24). In contrast, Vaina and colleagues (25, 26) provided neuropsychological evidence indicating that V3A and MT+ are dominant in local and global motion processing, respectively. Recently, we found that perceptual training with 100% coherent motion increased the neural selectivity in V3A (21). We also have data showing that training with 40% coherent motion increased the neural selectivity in MT+. Together with the results in the current study, these findings point to dissociable roles of V3A and MT+ in coherent and noisy motion processing. In a coherent motion stimulus, the local motion direction of individual dots is the same as the global direction of the stimulus. The specialization of V3A in coherent motion processing might be a result of its greater capacity to process local motion signals than MT+, which is underpinned by its relatively small receptive field sizes and narrow tuning curves for motion direction (27, 28). In a noisy motion stimulus, only some dots move in the global direction, whereas others move in random directions and can be treated as noise. The MT+ specialization for noisy motion processing is believed to be a result of spatial pooling of local motion, which averages out motion noise to reveal the global motion direction (29).

In this study, a substantial transfer of learning occurred from coherent motion to noisy motion, consistent with other studies demonstrating that learning transferred from stimuli without noise to those with noise (11, 13). We speculate that the transfer reported here is a result of an improved representation of the trained motion direction within V3A combined with an increased resilience to the noise present in the noisy motion stimulus. It has been suggested that local motion processing is a primary limitation for global motion sensitivity (30), and that perceptual learning of global motion tasks reflects changes in local motion processing (31). Because 40% of the dots (i.e., the signal dots) in the noisy motion stimuli traveled in the trained motion direction, training with the coherent motion stimuli could enhance the ability of the visual system to use the direction information provided by these signal dots. At the neural level, training with coherent motion resulted in an improvement of local motion representation in V3A. This improvement was characterized by an increase in the pattern distance between the trained direction and the orthogonal directions, which may have made the representation of the trained direction more resistant to the noise present in the noisy motion stimulus. Together, these changes enabled V3A to outperform MT+ in identifying the global motion direction of the noisy motion stimuli.

The neural mechanisms of perceptual learning have been explained as changes in the tuning properties of neurons that provide sensory information and/or changes in how these sensory responses are routed to and weighted by decision-making areas (10, 21, 32). Our results suggest that both of these processes work in concert to improve task performance. Before training, motion decision-making areas (e.g., IPS) (21, 33) might put more weight on the signals from V3A and MT+ for the coherent and noisy motion, respectively. Training improved the representation of motion direction for both coherent and noisy motion stimuli within V3A. As a consequence, after training, the signals from V3A were weighted more heavily than those from any other visual cortical area for both kinds of motion. The popular reweighting theory of perceptual learning argues that perceptual learning is implemented by adjusting the weights between basic visual channels and decision-making areas. The visual channels are assumed to lie either within a single cortical area or across multiple cortical areas (34). In the context of the reweighting theory, our results suggest that reweighting can occur between different cortical areas for optimal decision-making. However, it is currently unknown whether training-induced changes in the relative contribution of V3A and MT+ to motion processing were associated with changes in connection “weights” between motion processing areas and decision-making areas, as assumed by the reweighting model. In our study, fMRI slices did not cover IPS. Therefore, we were not able to measure the connection weight changes. This issue should be investigated in the future.

Most perceptual learning studies trained and tested on the same task and stimuli, and assumed that the neural plasticity that accompanies learning is restricted to areas that mediate performance of the trained task. The functional substitution of V3A for MT+ in noisy motion processing induced by coherent motion training challenges this view and approach. Previously, studies of functional substitution or reorganization have mostly been restricted to subjects with chronic sensory disorders. For example, the “visual” cortex of blind individuals is active during tactile or auditory tasks (35, 36). In the area of perceptual learning, two studies attempted to investigate the functional substitution issue. Chowdhury and DeAngelis (12) found that fine depth discrimination training eliminated the causal contribution of MT to coarse depth discrimination. However, the visual areas that took responsibility for coarse depth discrimination after training were not identified. Using TMS, Chang et al. (37) demonstrated that perceptual training shifts the limits on perception from the posterior parietal cortex to the lateral occipital cortex (see also ref. 38). Here, we propose that perceptual learning in visually normal adults shapes the functional architecture of the brain in a much more pronounced way than previously believed. Importantly, this extensive cortical plasticity is only revealed when subjects are tested on untrained tasks and stimuli. In the future, investigating the neural mechanisms underpinning the transfer of perceptual learning will not only remarkably advance our understanding of the nature of brain plasticity but also help us develop effective rehabilitation protocols that may result in training-related functional improvements generalizing to everyday tasks through learning transfer.

Materials and Methods

Subjects.

Twenty subjects (11 female, 20–27 y old) participated in the TMS experiment, and 12 subjects (five female, 20–25 y old) participated in the fMRI experiment. They were naive to the purpose of the experiment and had never participated in a perceptual learning experiment before. All subjects were right-handed and had normal or corrected-to-normal vision. They had no known neurological or visual disorders. They gave written, informed consent in accordance with the procedures and protocols approved by the human subject review committee of Peking University. Detailed methods are provided in SI Materials and Methods.

SI Materials and Methods

MRI-Guided TMS Experiment.

Stimuli and protocol.

Visual stimuli were RDKs consisting of 400 dark dots (luminance: 0.021 cd/m²; diameter: 0.1°), which were presented against a gray background (luminance: 11.55 cd/m²). The dots moved at a velocity of 37°/s within a virtual circular area subtending 9° in diameter. The center of the aperture was positioned 9° horizontally to the left or right of the central fixation point (Fig. 1B). In the 100% motion coherence condition, all dots moved in the same direction. In the 40% motion coherence condition, 40% of the dots were assigned to be signal and moved in the same direction, and the rest of the dots were assigned to be noise and moved in random directions. In psychophysical tests (see below), the stimuli were presented on an IIYAMA HM204DT 22-inch monitor with a refresh rate of 100 Hz and a resolution of 1,024 × 768 pixels. Subjects viewed the stimuli at a distance of 60 cm with their head stabilized by a chin and head rest. They were asked to maintain fixation throughout the tests.
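The coherence manipulation described above can be illustrated with a minimal sketch that assigns one motion direction to each dot of a single RDK. The function name `assign_dot_directions`, the `seed` argument, and the choice to draw noise directions uniformly between 0° and 360° are assumptions made for illustration; they are not taken from the original stimulus code.

```python
import random

def assign_dot_directions(n_dots=400, coherence=0.4, signal_dir_deg=22.5, seed=None):
    """Return one motion direction (deg) per dot for a single RDK.

    A fraction `coherence` of the dots are signal dots that share
    `signal_dir_deg`; the remaining noise dots get random directions.
    Drawing noise directions uniformly between 0 and 360 deg is an assumption.
    """
    rng = random.Random(seed)
    n_signal = round(n_dots * coherence)
    directions = [signal_dir_deg] * n_signal
    directions += [rng.uniform(0.0, 360.0) for _ in range(n_dots - n_signal)]
    rng.shuffle(directions)  # mix signal and noise dots in the list
    return directions

dirs_40 = assign_dot_directions(coherence=0.4, seed=1)    # 40% coherence condition
dirs_100 = assign_dot_directions(coherence=1.0, seed=1)   # 100% coherence condition
```

With the default arguments, 160 of the 400 dots carry the signal direction in the 40% condition, and all 400 dots do so in the 100% condition.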

The TMS experiment consisted of three phases: pretraining test (Pre), motion direction discrimination training, and posttraining test (Post) (Fig. 1A). Pre and Post took place on the days immediately before and after training. At the beginning of the experiment, subjects were randomly assigned to two groups of 10 subjects, in which V3A and MT+ were stimulated by TMS, respectively.

During training, each subject performed a 100% coherent motion direction discrimination task at a direction of θ (22.5° or −67.5°; 0° was the upward direction) in either the left or the right visual hemifield. There were five daily training sessions, and the trained direction and hemifield were fixed for all sessions. Each session consisted of 20 QUEST staircases of 40 trials. On a trial, two RDKs with motion directions of θ ± Δθ were presented successively for 200 ms each and were separated by a 600-ms blank interval (Fig. 1B). The temporal order of these two RDKs was randomized. Subjects were asked to make a two-alternative forced-choice judgment of the direction of the second RDK relative to the first one (clockwise or counterclockwise). Feedback was provided after each response by brightening (correct response) or dimming (wrong response) the fixation point. The next trial began 1 s after feedback. Δθ varied trial by trial and was controlled by the QUEST staircase to estimate subjects’ discrimination thresholds at 75% correct. To measure the time course of the training effect (learning curve), discrimination thresholds from the 20 staircases within a session were averaged and then plotted as a function of training day. The learning curves were fitted with a power function.
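The fitting procedure for the learning curves is not specified beyond the use of a power function. As one possible sketch, a curve of the form threshold = a · day^b can be fitted by ordinary least squares on log-transformed values. The function name `fit_power_function` and the log-log fitting approach are assumptions (a nonlinear least-squares fit would be an equally plausible choice), and the thresholds below are synthetic values generated from a known power law, not data from the study.

```python
import math

def fit_power_function(days, thresholds):
    """Fit threshold = a * day**b by least squares on log-log values."""
    xs = [math.log(d) for d in days]
    ys = [math.log(t) for t in thresholds]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx                         # exponent (negative when thresholds fall)
    a = math.exp(mean_y - b * mean_x)     # threshold on training day 1
    return a, b

# Synthetic thresholds from a known power law, NOT data from the study.
days = [1, 2, 3, 4, 5]
synthetic_thresholds = [8.0 * d ** -0.5 for d in days]
a, b = fit_power_function(days, synthetic_thresholds)
```

Because the synthetic values follow an exact power law, the fit recovers the generating parameters (a = 8, b = −0.5) up to floating-point error; real thresholds would leave residuals.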

During the test phases, we measured TMS effects over V3A and MT+ on motion perception. TMS stimulation was always performed over the hemisphere contralateral to subjects’ trained hemifield. Before and after TMS stimulation, subjects completed four QUEST staircases of 40 trials at two coherence levels (100% and 40%) and two hemifields (left and right). Before the experiment, subjects practiced two staircases for each coherence level and each hemifield (eight staircases in total) to become familiarized with the stimuli and the experimental procedure.

MRI data acquisition.

MRI data were collected using a 3T Siemens Trio scanner with a 12-channel phase-array coil. In the scanner, the stimuli were back-projected via a video projector (refresh rate: 60 Hz; spatial resolution: 1,024 × 768) onto a translucent screen placed inside the scanner bore. Subjects viewed the stimuli through a mirror located above their eyes. The viewing distance was 83 cm. Blood oxygen level-dependent (BOLD) signals were measured with an echo-planar imaging sequence [echo time (TE): 30 ms; repetition time (TR): 2 s; FOV: 192 × 192 mm²; matrix: 64 × 64; flip angle: 90°; slice thickness: 3 mm; gap: 0 mm; number of slices: 33; slice orientation: axial]. fMRI slices covered the occipital lobe, most of the parietal lobe, and part of the temporal lobe. A high-resolution 3D structural data set (3D MPRAGE; 1 × 1 × 1 mm³ resolution) was collected in the same session before functional runs.

MRI data preprocessing.

MRI data were processed using BrainVoyager QX (Brain Innovation). The anatomical volume for each subject was transformed into anterior commissure–posterior commissure (AC–PC) space and then inflated. Functional volumes for each subject were preprocessed, including 3D motion correction, linear trend removal, and high-pass (0.015 Hz) filtering. The functional volumes were then aligned to the anatomical volume. The first 6 s of BOLD signals were discarded to minimize transient magnetic saturation effects.

Defining regions of interest.

Retinotopic visual areas (V1, V2, V3, V3A) were defined by a standard phase-encoded method developed by Engel et al. (39), in which subjects viewed a rotating wedge and an expanding ring that created traveling waves of neural activity in visual cortex. An independent block-design run was repeated twice to localize regions of interest (ROIs) in V3A and MT+. The localizer run contained eight moving dot blocks and eight stationary dot blocks of 12 s, interleaved with 17 blank blocks of 12 s. In the moving dot blocks, the stimulus was identical to that in the psychophysical experiment, except that each dot traveled back and forth along a randomly chosen direction, alternating once per second. For both the moving and stationary dot blocks, the dots were presented in the left visual hemifield in half of the blocks and in the right visual hemifield in the other half. A general linear model procedure was used for selecting ROIs. MT+ was identified as a set of significantly responsive voxels to moving dots (vs. stationary dots) within or near the occipital continuation of the inferior temporal sulcus. The ROIs in V3A and MT+ in each hemisphere were defined as voxels that responded more strongly to moving dots in the contralateral visual hemifield than those in the ipsilateral visual hemifield (P < 0.001, uncorrected).
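The block structure of the localizer run can be checked with a short sketch. The block counts, the 12-s block duration, and the 2-s TR come from the text; the strict alternation of moving and stationary blocks is an assumption, because the order of the stimulus blocks is not stated.

```python
N_MOVING, N_STATIONARY, N_BLANK = 8, 8, 17
BLOCK_S, TR_S = 12, 2

# Alternating moving/stationary blocks is an assumption; only the counts are given.
stimulus_blocks = ["moving", "stationary"] * N_MOVING

# Interleave: a blank block before, between, and after the stimulus blocks.
sequence = ["blank"]
for block in stimulus_blocks:
    sequence += [block, "blank"]

run_duration_s = len(sequence) * BLOCK_S   # total run length in seconds
n_volumes = run_duration_s // TR_S         # volumes acquired at TR = 2 s
```

Under these assumptions, each localizer run comprises 33 blocks, lasts 396 s, and yields 198 functional volumes before any initial volumes are discarded.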

TMS.

cTBS was delivered through a MagStim Super Rapid2 stimulator and a double 70-mm figure-of-eight coil. A train of 600 pulses, three pulses at 50 Hz every 200 ms, was delivered at 50% of the maximum stimulator output. cTBS was guided using individual subjects’ structural and functional MRI data and the Visor2 neuro-navigation system (Advanced Neuro Technology). The stimulation sites in V3A and MT+ were the voxels exhibiting the strongest BOLD activation (contralateral vs. ipsilateral moving dots). The coil was held over the scalp tangentially, with the handle oriented posteriorly toward the occiput parallel to the subject’s spine. The position of the coil was monitored through the course of the 40-s cTBS protocol.
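The stated pulse parameters are consistent with the 40-s duration of the protocol, as the following minimal sketch shows. The function name `ctbs_pulse_times` is hypothetical; the pulse count, burst size, intraburst frequency, and burst interval are the values given above.

```python
def ctbs_pulse_times(n_pulses=600, pulses_per_burst=3,
                     intra_burst_hz=50.0, burst_interval_s=0.2):
    """Return the onset time (s) of every pulse in the cTBS train."""
    n_bursts = n_pulses // pulses_per_burst
    times = []
    for burst in range(n_bursts):
        burst_onset = burst * burst_interval_s      # one burst every 200 ms
        for k in range(pulses_per_burst):
            times.append(burst_onset + k / intra_burst_hz)  # 20 ms between pulses
    return times

pulse_times = ctbs_pulse_times()
n_bursts = len(pulse_times) // 3
train_duration_s = n_bursts * 0.2   # 200 bursts x 0.2 s
```

The train contains 200 bursts, so it spans 200 × 0.2 s = 40 s, with the final pulse delivered at about 39.84 s.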

fMRI Experiment.

MRI data acquisition.

MRI data were collected using a 3T Siemens Prisma scanner with a 20-channel phase-array coil. In the scanner, the stimuli were back-projected via a video projector (refresh rate: 60 Hz; spatial resolution: 1,024 × 768) onto a translucent screen placed inside the scanner bore. Subjects viewed the stimuli through a mirror located above their eyes. The viewing distance was 70 cm. BOLD signals were measured with an echo-planar imaging sequence (TE: 30 ms; TR: 2 s; FOV: 152 × 152 mm²; matrix: 76 × 76; flip angle: 90°; slice thickness: 2 mm; gap: 0 mm; number of slices: 28; slice orientation: quasi-axial, roughly parallel to the calcarine sulcus). fMRI slices covered the visual cortex. A high-resolution 3D structural data set (3D MPRAGE; 1 × 1 × 1 mm³ resolution) was collected in the same session before functional runs.

Defining ROIs.

Retinotopic mapping and the motion localization protocols were the same as those used in the TMS experiment. The ROIs in V1–V4, V3A, and MT+ were contralateral to the trained stimulus (see below) and were defined as areas that showed greater activation to moving dots than stationary dots (P < 0.001, uncorrected).

Stimuli and protocol.

The fMRI experiment consisted of the same three phases as the TMS experiment. The stimuli and training protocol were identical to those used for the TMS experiment. At Pre and Post, subjects completed six QUEST staircases of 40 trials for two coherence levels (100% and 40%) and two hemifields (left and right). Then MRI scanning was conducted. During scanning, subjects performed the motion direction discrimination task at the trained direction (22.5°/−67.5°) and the direction orthogonal to the trained direction (−67.5°/22.5°). The stimuli were always presented in the trained hemifield at one of two coherence levels (100% and 40%). BOLD signals were measured in 11 functional runs. Each run contained eight stimulus blocks of 12 s, two blocks for each of four stimulus conditions (each combination of two directions and two coherence levels). Stimulus blocks were interleaved with 12-s fixation blocks. A stimulus block consisted of five trials. On a trial, two RDKs with motion directions of 22.5°/−67.5° ± 5° were presented successively for 200 ms each. They were separated by a 600-ms blank interval and were followed by a 1,400-ms blank interval. Similar to the psychophysical measurements, subjects were asked to make a two-alternative forced-choice judgment of the direction of the second RDK relative to the first one (clockwise or counterclockwise). Before the experiment, subjects practiced two staircases at each coherence level and each hemifield (eight staircases in total) to become familiarized with the stimuli and the experimental procedure.
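The trial and block timing during scanning can be verified with simple arithmetic, sketched below. All durations and counts are those stated above, and the 2-s TR is taken from the acquisition parameters; the constant names are introduced only for illustration.

```python
RDK_MS = 200                  # duration of each RDK
ISI_MS = 600                  # blank between the two RDKs
POST_TRIAL_BLANK_MS = 1400    # blank after the second RDK
TRIALS_PER_BLOCK = 5
N_RUNS = 11
BLOCKS_PER_CONDITION_PER_RUN = 2
N_CONDITIONS = 4              # 2 directions x 2 coherence levels
TR_S = 2

trial_ms = RDK_MS + ISI_MS + RDK_MS + POST_TRIAL_BLANK_MS
block_s = TRIALS_PER_BLOCK * trial_ms / 1000
stimulus_blocks_per_run = BLOCKS_PER_CONDITION_PER_RUN * N_CONDITIONS
blocks_per_condition = N_RUNS * BLOCKS_PER_CONDITION_PER_RUN
volumes_per_block = block_s / TR_S
```

Each trial lasts 2,400 ms, so five trials fill one 12-s block (six volumes). Across the 11 runs, each stimulus condition is presented in 22 blocks per test.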

fMRI data analysis.

A general linear model procedure was used to estimate beta values for individually responsive voxels in a stimulus block, resulting in 22 beta value patterns per test for each stimulus condition and ROI. The beta values in each pattern were converted to z-scores to remove run-to-run variance. For the decoding analysis, we trained linear support vector machine classifiers (www.csie.ntu.edu.tw/~cjlin/libsvm), using these patterns per ROI and calculated mean decoding accuracies after a leave-one-run-out cross-validation procedure. That is, for each coherence level, we trained binary classifiers (i.e., trained direction vs. orthogonal direction) on 20 training patterns and tested their accuracy on the remaining two patterns per stimulus condition and ROI using an 11-fold cross-validation procedure. The chance performance for the classifiers was 0.5. LDA was further used to find a set of optimal weights that projected the multidimensional beta values onto a 1D space (referred to as the LDA direction) by maximizing the ratio of the between-direction (trained direction vs. orthogonal direction) variance to the within-direction variance. We estimated the weights with 20 training patterns and projected the remaining two patterns onto the estimated LDA direction per stimulus condition and ROI, following an 11-fold cross-validation procedure. Because the number of dimensions was larger than the number of measures, a sparse LDA algorithm was implemented (40) to reduce dimensionality and to estimate a stable covariance matrix. For the data analyses in the study, unless otherwise stated, Bonferroni correction was applied with t tests and ANOVAs involving multiple comparisons.
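The leave-one-run-out scheme described above can be sketched as follows. This is an illustration of the cross-validation logic only: a nearest-class-mean classifier is substituted for the linear support vector machine so that the example needs no external library, the function names are hypothetical, and the patterns are synthetic values, not data from the study.

```python
import random
import statistics

def zscore(pattern):
    """Z-score one beta pattern across its voxels."""
    mean = statistics.fmean(pattern)
    sd = statistics.pstdev(pattern)
    return [(v - mean) / sd for v in pattern]

def nearest_mean_predict(train, test_pattern):
    """Stand-in for the linear SVM: pick the label with the closest class mean."""
    best_label, best_dist = None, float("inf")
    for label in sorted({lab for _, lab, _ in train}):
        members = [p for _, lab, p in train if lab == label]
        centroid = [statistics.fmean(col) for col in zip(*members)]
        dist = sum((a - b) ** 2 for a, b in zip(centroid, test_pattern))
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label

def leave_one_run_out_accuracy(samples):
    """samples: list of (run, label, pattern). Returns mean accuracy over folds."""
    runs = sorted({run for run, _, _ in samples})
    fold_accuracies = []
    for held_out in runs:
        train = [s for s in samples if s[0] != held_out]   # 10 runs
        test = [s for s in samples if s[0] == held_out]    # 1 held-out run
        correct = sum(nearest_mean_predict(train, p) == lab for _, lab, p in test)
        fold_accuracies.append(correct / len(test))
    return statistics.fmean(fold_accuracies)

# Synthetic patterns, NOT data from the study: 11 runs x 2 blocks x 2 directions.
rng = random.Random(0)
n_voxels = 30
templates = {"trained": [rng.gauss(0, 1) for _ in range(n_voxels)],
             "orthogonal": [rng.gauss(0, 1) for _ in range(n_voxels)]}
samples = []
for run in range(11):
    for label, template in templates.items():
        for _ in range(2):
            noisy = [t + rng.gauss(0, 0.5) for t in template]
            samples.append((run, label, zscore(noisy)))

accuracy = leave_one_run_out_accuracy(samples)
```

In each of the 11 folds, the classifier is trained on 20 patterns per direction and tested on the two held-out patterns per direction, which matches the split described in the text for a single coherence level and ROI.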

Acknowledgments

We thank Zhong-Lin Lu and Aaron Seitz for helpful comments. This work was supported by NSFC 31230029, MOST 2015CB351800, NSFC 31421003, NSFC 61527804, and NSFC 91224008. B.T. was supported by NSERC Grants RPIN-05394 and RGPAS-477166.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1524160113/-/DCSupplemental.

References

- 1. Watanabe T, Sasaki Y. Perceptual learning: Toward a comprehensive theory. Annu Rev Psychol. 2015;66:197–221. doi: 10.1146/annurev-psych-010814-015214.

- 2. Sagi D. Perceptual learning in Vision Research. Vision Res. 2011;51(13):1552–1566. doi: 10.1016/j.visres.2010.10.019.

- 3. Hua T, et al. Perceptual learning improves contrast sensitivity of V1 neurons in cats. Curr Biol. 2010;20(10):887–894. doi: 10.1016/j.cub.2010.03.066.

- 4. Schoups A, Vogels R, Qian N, Orban G. Practising orientation identification improves orientation coding in V1 neurons. Nature. 2001;412(6846):549–553. doi: 10.1038/35087601.

- 5. Adab HZ, Vogels R. Practicing coarse orientation discrimination improves orientation signals in macaque cortical area v4. Curr Biol. 2011;21(19):1661–1666. doi: 10.1016/j.cub.2011.08.037.

- 6. Zohary E, Celebrini S, Britten KH, Newsome WT. Neuronal plasticity that underlies improvement in perceptual performance. Science. 1994;263(5151):1289–1292. doi: 10.1126/science.8122114.

- 7. Bi T, Chen J, Zhou T, He Y, Fang F. Function and structure of human left fusiform cortex are closely associated with perceptual learning of faces. Curr Biol. 2014;24(2):222–227. doi: 10.1016/j.cub.2013.12.028.

- 8. Lim S, et al. Inferring learning rules from distributions of firing rates in cortical neurons. Nat Neurosci. 2015;18(12):1804–1810. doi: 10.1038/nn.4158.

- 9. Kahnt T, Grueschow M, Speck O, Haynes J-D. Perceptual learning and decision-making in human medial frontal cortex. Neuron. 2011;70(3):549–559. doi: 10.1016/j.neuron.2011.02.054.

- 10. Law C-T, Gold JI. Neural correlates of perceptual learning in a sensory-motor, but not a sensory, cortical area. Nat Neurosci. 2008;11(4):505–513. doi: 10.1038/nn2070.

- 11. Dosher BA, Lu Z-L. Perceptual learning in clear displays optimizes perceptual expertise: Learning the limiting process. Proc Natl Acad Sci USA. 2005;102(14):5286–5290. doi: 10.1073/pnas.0500492102.

- 12. Chowdhury SA, DeAngelis GC. Fine discrimination training alters the causal contribution of macaque area MT to depth perception. Neuron. 2008;60(2):367–377. doi: 10.1016/j.neuron.2008.08.023.

- 13. Chang DH, Kourtzi Z, Welchman AE. Mechanisms for extracting a signal from noise as revealed through the specificity and generality of task training. J Neurosci. 2013;33(27):10962–10971. doi: 10.1523/JNEUROSCI.0101-13.2013.

- 14. Tootell RB, et al. Functional analysis of V3A and related areas in human visual cortex. J Neurosci. 1997;17(18):7060–7078. doi: 10.1523/JNEUROSCI.17-18-07060.1997.

- 15. Huang Y-Z, Edwards MJ, Rounis E, Bhatia KP, Rothwell JC. Theta burst stimulation of the human motor cortex. Neuron. 2005;45(2):201–206. doi: 10.1016/j.neuron.2004.12.033.

- 16. Ball K, Sekuler R. Direction-specific improvement in motion discrimination. Vision Res. 1987;27(6):953–965. doi: 10.1016/0042-6989(87)90011-3.

- 17. Seyal M, Ro T, Rafal R. Increased sensitivity to ipsilateral cutaneous stimuli following transcranial magnetic stimulation of the parietal lobe. Ann Neurol. 1995;38(2):264–267. doi: 10.1002/ana.410380221.

- 18. Barlow HB. Single units and sensation: A neuron doctrine for perceptual psychology? Perception. 2009;38(6):795–798. doi: 10.1068/pmkbar.

- 19. Jehee JF, Ling S, Swisher JD, van Bergen RS, Tong F. Perceptual learning selectively refines orientation representations in early visual cortex. J Neurosci. 2012;32(47):16747–53a. doi: 10.1523/JNEUROSCI.6112-11.2012.

- 20. Shibata K, et al. Decoding reveals plasticity in V3A as a result of motion perceptual learning. PLoS One. 2012;7(8):e44003. doi: 10.1371/journal.pone.0044003.

- 21. Chen N, et al. Sharpened cortical tuning and enhanced cortico-cortical communication contribute to the long-term neural mechanisms of visual motion perceptual learning. Neuroimage. 2015;115:17–29. doi: 10.1016/j.neuroimage.2015.04.041.

- 22. Yan Y, et al. Perceptual training continuously refines neuronal population codes in primary visual cortex. Nat Neurosci. 2014;17(10):1380–1387. doi: 10.1038/nn.3805.

- 23. McKeefry DJ, Burton MP, Vakrou C, Barrett BT, Morland AB. Induced deficits in speed perception by transcranial magnetic stimulation of human cortical areas V5/MT+ and V3A. J Neurosci. 2008;28(27):6848–6857. doi: 10.1523/JNEUROSCI.1287-08.2008.

- 24. Harvey BM, Braddick OJ, Cowey A. Similar effects of repetitive transcranial magnetic stimulation of MT+ and a dorsomedial extrastriate site including V3A on pattern detection and position discrimination of rotating and radial motion patterns. J Vis. 2010;10(5):21. doi: 10.1167/10.5.21.

- 25. Vaina LM, et al. Can spatial and temporal motion integration compensate for deficits in local motion mechanisms? Neuropsychologia. 2003;41(13):1817–1836. doi: 10.1016/s0028-3932(03)00183-0.

- 26. Vaina LM, Cowey A, Jakab M, Kikinis R. Deficits of motion integration and segregation in patients with unilateral extrastriate lesions. Brain. 2005;128(Pt 9):2134–2145. doi: 10.1093/brain/awh573.

- 27. Wandell BA, Winawer J. Computational neuroimaging and population receptive fields. Trends Cogn Sci. 2015;19(6):349–357. doi: 10.1016/j.tics.2015.03.009.

- 28. Lee HA, Lee S-H. Hierarchy of direction-tuned motion adaptation in human visual cortex. J Neurophysiol. 2012;107(8):2163–2184. doi: 10.1152/jn.00923.2010.

- 29. Born RT, Bradley DC. Structure and function of visual area MT. Annu Rev Neurosci. 2005;28:157–189. doi: 10.1146/annurev.neuro.26.041002.131052.

- 30. Mareschal I, Bex PJ, Dakin SC. Local motion processing limits fine direction discrimination in the periphery. Vision Res. 2008;48(16):1719–1725. doi: 10.1016/j.visres.2008.05.003.

- 31. Nishina S, Kawato M, Watanabe T. Perceptual learning of global pattern motion occurs on the basis of local motion. J Vis. 2009;9(9):1–6. doi: 10.1167/9.9.15.

- 32. Dosher BA, Jeter P, Liu J, Lu Z-L. An integrated reweighting theory of perceptual learning. Proc Natl Acad Sci USA. 2013;110(33):13678–13683. doi: 10.1073/pnas.1312552110.

- 33. Kayser AS, Erickson DT, Buchsbaum BR, D’Esposito M. Neural representations of relevant and irrelevant features in perceptual decision making. J Neurosci. 2010;30(47):15778–15789. doi: 10.1523/JNEUROSCI.3163-10.2010.

- 34. Bejjanki VR, Beck JM, Lu ZL, Pouget A. Perceptual learning as improved probabilistic inference in early sensory areas. Nat Neurosci. 2011;14(5):642–648. doi: 10.1038/nn.2796.

- 35. Cheung S-H, Fang F, He S, Legge GE. Retinotopically specific reorganization of visual cortex for tactile pattern recognition. Curr Biol. 2009;19(7):596–601. doi: 10.1016/j.cub.2009.02.063.

- 36. Striem-Amit E, Cohen L, Dehaene S, Amedi A. Reading with sounds: Sensory substitution selectively activates the visual word form area in the blind. Neuron. 2012;76(3):640–652. doi: 10.1016/j.neuron.2012.08.026.

- 37. Chang DH, Mevorach C, Kourtzi Z, Welchman AE. Training transfers the limits on perception from parietal to ventral cortex. Curr Biol. 2014;24(20):2445–2450. doi: 10.1016/j.cub.2014.08.058.

- 38. Walsh V, Ashbridge E, Cowey A. Cortical plasticity in perceptual learning demonstrated by transcranial magnetic stimulation. Neuropsychologia. 1998;36(4):363–367. doi: 10.1016/s0028-3932(97)00113-9.

- 39. Engel SA, Glover GH, Wandell BA. Retinotopic organization in human visual cortex and the spatial precision of functional MRI. Cereb Cortex. 1997;7(2):181–192. doi: 10.1093/cercor/7.2.181.

- 40. Clemmensen L, Hastie T, Witten D, Ersbøll B. Sparse discriminant analysis. Technometrics. 2011;53(4):406–413.