Abstract

We give a mathematical framework for Exact Milestoning, a recently introduced algorithm for mapping a continuous time stochastic process into a Markov chain or semi-Markov process that can be efficiently simulated and analyzed. We generalize the setting of Exact Milestoning and give explicit bounds for the error in the Milestoning equation for mean first passage times.

Keywords: accelerated molecular dynamics, long-time dynamics, stationary distribution, semi-Markov processes

1. Introduction

Molecular Dynamics (MD) simulations, in which classical equations of motion are solved for molecular systems of significant complexity, have proven useful for interpreting and understanding many chemical and biological phenomena (for textbooks see [23, 12, 1]). However, a significant limitation of MD is the range of accessible time scales. Many molecular processes of interest occur on time scales significantly longer than those accessible to straightforward simulations. For example, permeation of molecules through membranes can take hours [6], while MD is usually restricted to the microsecond time scale. One approach to extend simulation times is to use faster hardware [24, 25, 21]. Other approaches focus on developing theories and algorithms for long time phenomena. The emphasis in developing algorithms has been on methodologies for activated processes with a single dominant barrier, such as Transition Path Sampling [8, 19]. Approaches for dynamics on rough energy landscapes and for more general and/or diffusive behavior have also been developed [22, 7, 26, 20]. The techniques of Exact Milestoning [3] and Milestoning [10] belong to the latter category.

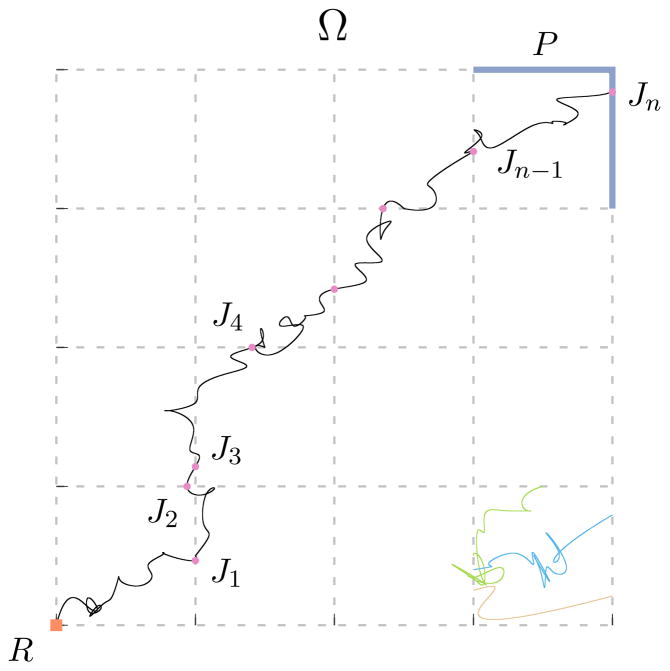

The acceleration in Milestoning is based on the use of a large number of short trajectories between certain hypersurfaces in phase space that we call milestones, instead of complete trajectories between reactants and products (see Figure 1). Short trajectories are trivial to parallelize, making the formulation efficient to use on modern computing resources. Moreover, using short trajectories initiated near bottlenecks of reactions allows for enhanced sampling of improbable but important events. This last enhancement can lead to an exponential speedup of the computations. The challenge is how to initialize the short trajectories and how to analyze the results to obtain the correct long time behavior.

Fig. 1.

Representation of the state space Ω and the milestones. Each milestone is an edge of one of the squares traced by dashed grey lines. The reactant state R is highlighted as a square dot in the left-bottom corner while the product state P is comprised of the two line segments shown in blue at the upper-right corner. A particular realization of a long trajectory appears as a continuous black line and the corresponding values of the process (Jn) are marked with circles. The individual trajectories shown in different colors near the bottom-right corner represent trajectory fragments as used in the Milestoning algorithm.

Milestoning uses an approximate distribution for the initial conditions of the trajectories at the hypersurfaces, typically the canonical distribution conditioned to be on the milestones. The results are then analyzed within the Milestoning theory. Exact Milestoning improves on this starting guess for the initial conditions by an iterative process that converges to the correct stationary distribution.

There are related approaches that use short trajectories to obtain information on long time kinetics, such as non-equilibrium umbrella sampling [28] and trajectory tilting [27]. However, the overall scope of these methods is more limited than that of Exact Milestoning, which provides a complete statistical mechanical picture of the system.

While Milestoning is an approximate procedure, it shares the same philosophy and core algorithm as Exact Milestoning. In both algorithms the state space Ω is coarse-grained by closed subsets called milestones. The short trajectories are run between nearby milestones and are terminated the first time they reach a milestone different from the one they were initiated on (see Figure 1). These trajectories, short in both time and space, should be contrasted with the long continuous trajectories from the reactant R to the product P in straightforward MD.

In the theoretical analysis of the short trajectories, Milestoning and Exact Milestoning emphasize the absolute flux. The absolute flux is always positive and is the number of trajectories that pass through a milestone per unit time. While we obtained time-dependent solutions for the kinetics in Milestoning in the past [10], more recently we have mostly used stationary solutions [3, 17]. In stationary systems the flux is time independent and can be used to define a probability measure after a proper normalization. Hence, it is possible to reformulate the algorithms of Milestoning and Exact Milestoning in terms of widely used concepts and tools of probability theory. This formulation, together with a rigorous mathematical framework, is provided in the present manuscript.

This article is organized as follows. In Section 2, we describe the setting for Exact Milestoning and introduce notation used throughout. In Section 3, we show existence of and convergence to a stationary flux under very general conditions. In Section 4 we state precisely the Exact Milestoning algorithm [3]. In Section 5, we establish conditions under which convergence to the stationary flux is consistent in the presence of numerical error (Lemma 5.2 and Theorem 5.3), and we give a natural upper bound for the numerical error arising in Exact Milestoning (Theorem 5.4). Finally, in Section 6 we consider some instructive examples.

2. Setup and notation

2.1. The dynamics and MFPT

In Milestoning we spatially coarse-grain a stochastic dynamics (Xt). The basic idea is to stop and start trajectories on certain interfaces, called milestones, and then reconstruct functions of (Xt) using these short trajectories, which can be efficiently simulated in parallel. We focus here on using Milestoning for the efficient computation of mean first passage times (MFPTs) of (Xt), although similar ideas can be used to compute other non-equilibrium quantities.

To make our arguments we need some assumptions on the process (Xt). We assume (Xt) is a time homogeneous strong Markov process with càdlàg paths taking values in a standard Borel space Ω. These assumptions allow us to stop and restart (Xt) on the milestones without knowing its history. In applications, usually (Xt) is Langevin or overdamped Langevin dynamics, and Ω is a subset of Euclidean space. More generally, (Xt) could be a diffusion and Ω = ℝd/ℤd could be a torus, or (Xt) could be a continuous time Markov chain on a discrete set Ω = ℕ.

We write ℙ, 𝔼 for all probability measures and expectations. ℙξ and ℙx will represent probabilities for a process starting at a distribution ξ or point x, respectively. We write ||·||TV for the total variation norm of signed measures. Our analysis below will mostly take place in an idealized setting where we assume infinite sampling on the milestones. In this setting, distributions are smooth (if the state space is continuous) and the total variation norm is the appropriate one. We omit explicit mention of sigma algebras and filtrations, which will always be the natural ones, and we will use the words distribution and probability measure interchangeably.

Recall that we are interested in computing the MFPT of (Xt) from a reactant set R to a product set P. Throughout we consider fixed disjoint product and reactant sets P, R ⊂ Ω. When R is not a single point, we will start (Xt) from a fixed probability measure ρ on R. If R is a single point, ρ = δR, the delta distribution at R. As discussed above, Milestoning allows for an efficient computation of the MFPT of (Xt) to P, starting at ρ. It is useful to think of P as a sink, and R as a source for (Xt). More precisely, we assume that when (Xt) reaches P, it immediately restarts on R according to ρ. Obviously, this assumption has no effect on the MFPT to P. It will be useful, however, for computational and theoretical considerations.

2.2. The milestones and semi-Markov viewpoint

Milestoning uses a set M ⊂ Ω to spatially parallelize the computation of the MFPT. Each point x ∈ M will belong to a milestone Mx ⊂ M. Thus, M is the union of all the milestones. We assume there are finitely many milestones, each of which is a closed set. Moreover, we assume that (Xt) passes through the intersection of two milestones with probability 0; thus, (Xt) can only cross one milestone at a time. This can be accomplished for Langevin or overdamped Langevin dynamics by, for example, taking the milestones to be codimension 1 manifolds whose pairwise intersections are codimension 2 or larger. The sets P and R will be two of the milestones. Typically, the milestones are hyperplanes which approximate the level sets of a reaction coordinate, or they are faces of cell boundaries of a Voronoi tessellation obtained by taking points along a high temperature trajectory for (Xt).

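Let X0 ~ ξ, where ξ denotes a generic probability measure on M. We now describe in detail the coarse-graining process. By following the sequence of milestones crossed by (Xt), we obtain a stochastic process defined on M as follows. Let θ0, θ1, θ2, . . . be stopping times for (Xt) defined by

$$\theta_0 = \inf\{t \ge 0 : X_t \in M\}, \qquad \theta_n = \inf\{t > \theta_{n-1} : X_t \in M \setminus M_{X_{\theta_{n-1}}}\}, \quad n \ge 1.$$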

Informally, θn corresponds to the time at which (Xt) crosses a milestone for the nth time. It is very important to note that θn corresponds to the first time for (Xt) to hit the “next” milestone, that is, a milestone different from the one (Xt) hit at time θn−1. Thus, when we refer to milestone crossings, we mean crossings of a milestone different from the last one crossed.

Define also τ0 = 0 and for n ≥ 1, τn = θn − θn−1. Informally, τn is the time between the (n − 1)th and nth milestone crossings. For n ≥ 0, define $J_n = X_{\theta_n}$ to be the sequence of milestone points visited. That is, Jn is the point on the nth milestone crossed by (Xt). Note that each Jn is a point on some milestone, not the label of a milestone. Thus, (Jn) lives on the possibly uncountable set M.
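To make the definitions of θn, τn and Jn concrete, here is a minimal sketch for a hypothetical one-dimensional setting in which each milestone is a single point and the path of (Xt) is only known at discrete times. The function name `milestone_crossings` and the linear interpolation of crossing times inside a time step are illustrative assumptions, not part of the method described here. The key rule from the text is enforced: a crossing is recorded only when the path reaches a milestone different from the last one crossed.

```python
import numpy as np

def milestone_crossings(times, path, levels):
    """Extract milestone indices J_n and crossing times theta_n from a sampled 1D path.

    Hypothetical setting: milestone i is the single point levels[i], and the path
    starts on a milestone (X_0 ~ xi on M). Re-crossing the last milestone is ignored.
    """
    levels = np.asarray(levels, dtype=float)
    last = int(np.argmin(np.abs(levels - path[0])))  # milestone containing X_0
    J, theta = [last], [float(times[0])]
    for k in range(len(path) - 1):
        a, b = float(path[k]), float(path[k + 1])
        if a == b:
            continue
        s = 0.0  # fraction of the segment [x_k, x_{k+1}] already scanned
        while True:
            frac = (levels - a) / (b - a)  # position of each level along the segment
            cand = [(frac[i], i) for i in range(len(levels))
                    if i != last and s < frac[i] <= 1.0]
            if not cand:
                break
            s, last = min(cand)  # next *new* milestone reached along the segment
            J.append(int(last))
            # Linear interpolation of the crossing time inside the time step
            theta.append(float(times[k] + s * (times[k + 1] - times[k])))
    return J, theta

J, theta = milestone_crossings([0, 1, 2, 3], [0.0, 0.3, 0.1, 0.6],
                               levels=[0.0, 0.25, 0.5, 0.75, 1.0])
tau = np.diff(theta)  # sojourn times tau_1, tau_2, ...
```

In this example the path re-crosses the milestone at 0.25 between times 1 and 2, but that is not recorded because it is the milestone last crossed, so J = [0, 1, 2].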

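As discussed above, due to our assumptions on M and (Xt), the joint law of Jn and τn depends only on Jn−1, that is,

$$\mathbb{P}(J_n \in dy,\ \tau_n \in dt \mid J_0, \ldots, J_{n-1},\ \tau_1, \ldots, \tau_{n-1}) = \mathbb{P}(J_n \in dy,\ \tau_n \in dt \mid J_{n-1}). \tag{2.1}$$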

Let (Yt) be the jump process on M defined by Yt = Jn if θn ≤ t < θn+1. Since Jn is the nth milestone point crossed by (Xt), and θn is the time at which (Xt) crossed this point, we see that (Yt) equals (Xt) at each milestone crossing. Thus, (Yt) can be considered a coarse-grained version of (Xt) which throws away the path of (Xt) between milestones, keeping only the endpoints. When (Yt) has a probability density, it corresponds to the density p(x, t) from [3] for the last milestone point passed. Due to (2.1), (Yt) is called semi-Markov. It has transition kernel K(x, dy, dt) given by

$$K(x, dy, dt) = \mathbb{P}(J_n \in dy,\ \tau_n \in dt \mid J_{n-1} = x) = \mathbb{P}_x(J_1 \in dy,\ \tau_1 \in dt).$$

We adopt the convention that the innermost integral corresponds to the first variable of integration appearing in the integrand. Note that if (Yt) and (Xt) start at the same distribution ξ on M, then they have the same MFPT to P. Thus, for the purposes of studying the MFPT, it suffices to consider the coarse-grained process (Yt). Since (Xt) restarts at ρ upon reaching P, so does (Yt), and so

$$K(x, dy, dt) = \rho(dy)\, \delta_0(dt), \qquad x \in P. \tag{2.2}$$

Following standard terminology in probability theory, we call (Jn) the jump chain of (Yt). It is a Markov chain with transition kernel

$$K(x, dy) = \int_{t \in [0, \infty)} K(x, dy, dt) = \mathbb{P}_x(J_1 \in dy).$$

2.3. Stopping times

Note that the sojourn times τn between milestones depend on the position of (Xt) on the (n − 1)th milestone crossed, at time t = θn−1. The following notation will thus be useful. For x ∈ M, we write

$$\tau_M^x := \inf\{t > 0 : Y_t \in M \setminus M_x\}, \qquad Y_0 = x.$$

This is the first time for (Yt) to hit some milestone other than Mx, starting at x ∈ M. In particular, if $X_{\theta_{n-1}} = x$ was the (n − 1)th crossing point, then the time until the next crossing, τn = θn − θn−1, has the same law as $\tau_M^x$. As we are concerned with first passage times to P, we will also use the notation

$$\tau_P := \inf\{t \ge 0 : X_t \in P\}.$$

In particular, the MFPT we are interested in is 𝔼ρ[τP]. As discussed above, the MFPT will be estimated via short trajectories between milestones. An important ingredient is the correct starting distribution for these trajectories. Exact Milestoning makes use of a stationary flux of (Xt) on the milestones, which corresponds to the stationary distribution (shown to exist below) of the jump chain (Jn). To study this distribution we also need the first hitting time of P for (Jn):

$$\sigma_P := \min\{n \ge 0 : J_n \in P\}.$$

Some assumption is required to guarantee the existence of a stationary flux. We adopt the following very general sufficient condition, which we assume holds throughout the remainder of the paper: 𝔼ξ[τP] and 𝔼ξ[σP] are finite for all probability measures ξ on M. This ensures that (Yt) reaches P in finite expected time and does not cross infinitely many milestones in finite time. In probabilistic language, the latter condition means that (Yt) is non-explosive. This sufficient condition can be readily verified in the standard settings for Milestoning discussed above. Under only this assumption, we give in Theorem 3.3 an exact Milestoning equation for 𝔼ρ[τP]. Moreover, we show in Section 4 how the equation leads to an efficient algorithm for computing 𝔼ρ[τP]. The exact equation we give is the same as in [3], except phrased in the semi-Markov framework defined above.

2.4. Dual action of kernels

We use the following notation for the expected value of a bounded function f : M → ℝ with respect to a probability measure ξ on M:

$$\xi f := \int_M f(x)\, \xi(dx).$$

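It will be useful to consider K(x, dy) as a stochastic kernel which operates on probability measures from the left and bounded functions on the right. More precisely, by a stochastic kernel we mean an operator P such that

$$\xi \mapsto \xi P, \quad \xi P(dy) := \int_M \xi(dx)\, P(x, dy), \qquad \text{and} \qquad f \mapsto Pf, \quad Pf(x) := \int_M P(x, dy)\, f(y),$$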

are maps preserving, respectively, the complete metric space ℘ of probability measures on M with the total variation norm, and the Banach space ℬ of bounded functions on M with the sup norm. Informally, ξK is the distribution of Jn, and ξKf is the expected value of f(Jn), given that Jn−1 was distributed according to ξ. In particular, Kn is a stochastic kernel for each n ≥ 0, with ℙξ(Jn ∈ ·) = ξKn and 𝔼ξ[f(Jn)] = ξKnf, where K0 = 1 is the identity operator.
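On a finite set of milestone points the dual action reduces to matrix algebra: a probability measure ξ is a row vector, a bounded function f is a column vector, ξK is a vector-matrix product and Kf a matrix-vector product. The three-point kernel below is a made-up example used only to illustrate the notation.

```python
import numpy as np

# Hypothetical 3-point milestone set; row x of K is the distribution K(x, .)
K = np.array([[0.0, 0.7, 0.3],
              [0.5, 0.0, 0.5],
              [1.0, 0.0, 0.0]])
xi = np.array([0.2, 0.5, 0.3])   # probability measure on M (row vector)
f = np.array([1.0, -2.0, 4.0])   # bounded function on M (column vector)

xiK = xi @ K   # left action: law of J_n when J_{n-1} ~ xi
Kf = K @ f     # right action: x -> E_x[f(J_1)]

def law_of_Jn(xi, K, n):
    """Return xi K^n, the law of J_n when J_0 ~ xi (K^0 is the identity)."""
    return xi @ np.linalg.matrix_power(K, n)

# (xi K) f and xi (K f) agree, and xi K is again a probability vector.
assert np.isclose(xiK @ f, xi @ Kf)
assert np.isclose(xiK.sum(), 1.0) and np.all(xiK >= 0)
```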

3. Invariant measure and MFPT

3.1. Stationary distribution on the milestones

The formula for the MFPT relies on a stationary distribution, which we denote by μ, for (Jn). Our μ is the same as the appropriately normalized stationary flux q in other Milestoning papers. We use the notation μ instead of q to emphasize that μ is a probability measure on M that may not have a density. We show in Theorem 3.1 below that μ exists. The proof of existence requires only the above assumption of finite MFPTs to P and non-explosivity of (Yt), along with the special source and sink structure of the dynamics implied by (2.2).

Theorem 3.1

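(Jn) has an invariant probability measure μ defined by

$$\mu(C) := \frac{1}{\mathbb{E}_\rho[\sigma_P] + 1}\, \mathbb{E}_\rho\left[\sum_{n=0}^{\sigma_P} \mathbb{1}_C(J_n)\right], \qquad C \subset M.$$

Proof

Define $\nu(C) := \mathbb{E}_\rho\big[\sum_{n=0}^{\sigma_P} \mathbb{1}_C(J_n)\big]$, so that ν(M) = 𝔼ρ[σP] + 1 < ∞ and μ = ν/ν(M), and observe that, by the Markov property,

$$\nu K(C) = \mathbb{E}_\rho\left[\sum_{n=0}^{\sigma_P} K(J_n, C)\right] = \mathbb{E}_\rho\left[\sum_{n=0}^{\sigma_P} \mathbb{1}_C(J_{n+1})\right].$$

If C ∩ R = ∅, by bounded convergence,

$$\nu K(C) = \mathbb{E}_\rho\left[\sum_{n=1}^{\sigma_P + 1} \mathbb{1}_C(J_n)\right] = \mathbb{E}_\rho\left[\sum_{n=0}^{\sigma_P} \mathbb{1}_C(J_n)\right] = \nu(C),$$

where the second equality uses ℙρ(J0 ∈ C) = 0 and Jn+1 ∉ R ⇒ σP ≠ n. If C ⊂ R, then, since $J_{\sigma_P + 1} \sim \rho$ by (2.2) and the strong Markov property,

$$\nu K(C) = \rho(C) + \mathbb{E}_\rho\left[\sum_{n=1}^{\sigma_P} \mathbb{1}_C(J_n)\right] = \mathbb{P}_\rho(J_0 \in C) + \mathbb{E}_\rho\left[\sum_{n=1}^{\sigma_P} \mathbb{1}_C(J_n)\right] = \nu(C).$$

Splitting a general C into C ∩ R and C \ R shows that νK = ν, so μ is invariant.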

We will show below that (Jn) converges to μ under appropriate conditions. In that case μ is unique and we will call μ the stationary measure. We emphasize that the formula on which Exact Milestoning is based, the equation in Theorem 3.3 below, holds true whether or not μ is unique. However, a successful application of the Exact Milestoning algorithm requires some technique for sampling μ. The algorithm we present (Algorithm 1 below) is based on convergence of (Jn) to μ. We consider two types of convergence in Theorems 3.4 and 3.5 below. Following Theorem 3.4 we also discuss a simple case where (Jn) may not converge.

Algorithm 1.

Exact Milestoning algorithm.

Input: Milestones M1, …, Mm, initial guess ξ, and tolerance ε > 0 for the absolute error in the MFPT.
Output: Estimates for μ, local MFPTs 𝔼μ[τM], and overall MFPT 𝔼ρ[τP].
μ^(0) ← ξ
T^(0) ← +∞
for all n = 1, 2, . . . do
  for i = 1 to m do
    Estimate $K_i^{(n-1)}$ and $\mathbb{E}_{\mu_i^{(n-1)}}[\tau_M]$
  end for
  Solve wᵀA = wᵀ (with $A_{ij} = K_{ij}^{(n-1)}(M_j)$ and $\sum_i w_i = 1$)
  for j = 1 to m do
    $\mu_j^{(n)} \leftarrow \sum_i w_i K_{ij}^{(n-1)}$
  end for
  Normalize μ^(n)
  $T^{(n)} \leftarrow \mu^{(n)}(P)^{-1}\, \mathbb{E}_{\mu^{(n-1)}}[\tau_M]$
  if |T^(n) − T^(n−1)| < ε then
    break
  end if
end for
return (μ^(n), 𝔼μ^(n−1)[τM], T^(n))

It is worth noting that the proof of Theorem 3.1 leads to the following representation of μ as a Neumann series. The representation is given in Corollary 3.2 below. In principle, this representation can be used to sample μ even when (Jn) does not converge to μ. The Neumann series is written in terms of the sub-stochastic transient kernel K̄ defined by

$$\bar K(x, dy) := \mathbb{1}_{M \setminus P}(x)\, K(x, dy). \tag{3.1}$$

Like K, the transient kernel K̄ acts from the right on the space ℬ of bounded functions, but its left action is on the larger Banach space of bounded signed measures with the total variation norm. Informally, K̄ corresponds to a version of (Jn) that is "killed" upon reaching P.

Corollary 3.2

We have

$$\mu = \frac{1}{\mathbb{E}_\rho[\sigma_P] + 1} \sum_{n=0}^{\infty} \rho \bar K^n. \tag{3.2}$$

Proof

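Recall that μ = ν/ν(M) where

$$\nu(C) = \mathbb{E}_\rho\left[\sum_{n=0}^{\sigma_P} \mathbb{1}_C(J_n)\right] = \sum_{n=0}^{\infty} \mathbb{P}_\rho(J_n \in C,\ \sigma_P \ge n).$$

Moreover, ℙρ(Jn ∈ C, σP ≥ n) = ρK̄ⁿ(C), because K̄ kills the chain once it has visited P, and so

$$\|\rho \bar K^n\|_{TV} = \mathbb{P}_\rho(\sigma_P \ge n), \tag{3.3}$$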

and the right hand side of (3.3) is summable since by assumption 𝔼ρ[σP] < ∞.
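On a finite state space, Corollary 3.2 can be checked directly: the series ρ Σn K̄ⁿ equals ρ(I − K̄)⁻¹, so ν is the solution of a linear system. The sketch below assumes a hypothetical one-dimensional chain of m milestones M1 = R, …, Mm = P, each reduced to a single point, with ρ = δR and symmetric hops between interior neighbors; these choices are made only for illustration.

```python
import numpy as np

def chain(m, p=0.5):
    """Hypothetical jump chain on m single-point milestones, R = 0, P = m - 1."""
    K = np.zeros((m, m))
    K[0, 1] = 1.0                      # from R the chain can only move forward
    for i in range(1, m - 1):
        K[i, i - 1], K[i, i + 1] = p, 1.0 - p
    K[m - 1, 0] = 1.0                  # reaching P restarts the chain at R (rho = delta_R)
    return K

def stationary_via_neumann(K, rho, P):
    """Return (mu, nu) with nu = rho sum_n Kbar^n = rho (I - Kbar)^{-1} and mu = nu / nu(M)."""
    Kbar = K.copy()
    Kbar[P, :] = 0.0                   # transient kernel (3.1): kill the chain on P
    n = len(rho)
    # nu (I - Kbar) = rho  <=>  (I - Kbar)^T nu^T = rho^T
    nu = np.linalg.solve((np.eye(n) - Kbar).T, rho)
    return nu / nu.sum(), nu

m = 5
K = chain(m)
rho = np.eye(m)[0]
P = np.zeros(m, dtype=bool)
P[-1] = True
mu, nu = stationary_via_neumann(K, rho, P)
```

Here ν(P) = 1 because each excursion from R visits P exactly once, so μ(P) = 1/(𝔼ρ[σP] + 1); for this symmetric walk 𝔼ρ[σP] = (m − 1)² = 16, giving μ(P) = 1/17.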

3.2. Milestoning equation for the MFPT

Equipped with an invariant measure μ, we are now able to state the Milestoning equation (3.4) for the MFPT. In Exact Milestoning, this equation is used to efficiently compute the MFPT. The algorithm is based on two principles: first, many trajectories can be simulated in parallel to estimate $\mathbb{E}_x[\tau_M^x]$ for various x; and second, the stationary distribution μ can be efficiently estimated through a technique based on power iteration. (See the right hand side of equation (3.4).) The gain in efficiency comes from the fact that the trajectories used to estimate $\mathbb{E}_x[\tau_M^x]$ are much shorter than trajectories from R to P. The second principle above depends somewhat on whether we have a good "initial guess" of μ. When (Xt) is Langevin dynamics, we have found that in some cases the canonical Gibbs distribution is a sufficiently good initial guess. See [3] and [4] for details and discussion.

Theorem 3.3

Let μ be defined as above. Then μ(P) > 0 and

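$$\mathbb{E}_\rho[\tau_P] = \frac{\mathbb{E}_\mu[\tau_M]}{\mu(P)}, \qquad \mathbb{E}_\mu[\tau_M] := \int_M \mathbb{E}_x[\tau_M^x]\, \mu(dx). \tag{3.4}$$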

Proof

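The assumption 𝔼ρ[σP] < ∞ shows that μ(P) > 0: indeed, P is visited exactly once among J0, …, JσP, so μ(P) = 1/(𝔼ρ[σP] + 1). For any x ∈ M,

$$\mathbb{E}_x[\tau_1] = \mathbb{E}_x[\tau_M^x], \qquad \text{with } \mathbb{E}_x[\tau_M^x] = 0 \text{ for } x \in P \text{ by (2.2)}.$$

Thus, since τP = τ1 + · · · + τσP when (Xt) starts on R, the strong Markov property gives

$$\mathbb{E}_\rho[\tau_P] = \mathbb{E}_\rho\left[\sum_{n=0}^{\sigma_P - 1} \mathbb{E}_{J_n}[\tau_M^{J_n}]\right] = \mathbb{E}_\rho\left[\sum_{n=0}^{\sigma_P} \mathbb{E}_{J_n}[\tau_M^{J_n}]\right] = (\mathbb{E}_\rho[\sigma_P] + 1)\, \mathbb{E}_\mu[\tau_M],$$

and so 𝔼ρ[τP] = 𝔼μ[τM]/μ(P).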
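Equation (3.4) can be sanity-checked on a finite toy model in which the mean sojourn time t(x) = 𝔼x[τ_M^x] is prescribed at each milestone point, with t = 0 on P because the restart at R is instantaneous. The sketch compares the Milestoning formula with the MFPT obtained by solving the usual linear system h = t + Qh on M \ P, where Q is K restricted to M \ P. The chain, the hop probability and the values of t are hypothetical.

```python
import numpy as np

def chain(m, p=0.5):
    """Hypothetical 1D milestone chain: R = 0, P = m - 1, P restarts at R."""
    K = np.zeros((m, m))
    K[0, 1] = 1.0
    for i in range(1, m - 1):
        K[i, i - 1], K[i, i + 1] = p, 1.0 - p
    K[m - 1, 0] = 1.0
    return K

def mfpt_direct(K, t, P):
    """E_x[tau_P] for every x outside P, from h = t + Q h with Q = K on M \\ P."""
    keep = ~P
    Q = K[np.ix_(keep, keep)]
    return np.linalg.solve(np.eye(keep.sum()) - Q, t[keep])

def mfpt_milestoning(K, t, P):
    """Equation (3.4): E_rho[tau_P] = E_mu[tau_M] / mu(P), with mu stationary for K."""
    n = len(t)
    A = K.T - np.eye(n)
    A[-1, :] = 1.0               # replace one balance equation by sum(mu) = 1
    b = np.zeros(n)
    b[-1] = 1.0
    mu = np.linalg.solve(A, b)
    return (mu @ t) / mu[P].sum()

m = 5
K = chain(m, p=0.4)
t = np.array([1.0, 2.0, 0.5, 3.0, 0.0])   # hypothetical local MFPTs; zero on P
P = np.zeros(m, dtype=bool)
P[-1] = True
direct = mfpt_direct(K, t, P)[0]           # start at R (rho = delta_R)
milestoning = mfpt_milestoning(K, t, P)
```

The two numbers agree up to round-off, which is the content of Theorem 3.3 in this finite setting.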

In Section 4 below we present the Exact Milestoning algorithm (Algorithm 1) recently used in [3] and [4]. The algorithm uses a technique which combines coarse-graining and power iteration to sample μ. The consistency of power iteration algorithms is justified by Theorem 3.4 below, where we show ξKn → μ as n → ∞. Although there is a range of possibilities for sampling μ (for example, algorithms based on (3.2) above or (3.7) below), we note that Algorithm 1 was shown to be efficient for computing the MFPT in the entropic barrier example of [3] and the random energy landscapes example of [4].

3.3. Convergence to stationarity

In this section we justify the consistency of power iteration-based methods for sampling μ by showing that ξKn converges to μ in total variation norm as n → ∞. The theorem requires an extra assumption – aperiodicity of the jump chain (Jn).

Theorem 3.4

Suppose that (Jn) is aperiodic in the following sense:

$$\gcd\{n \ge 1 : \mathbb{P}_\rho(\sigma_P + 1 = n) > 0\} = 1. \tag{3.5}$$

Then for all probability measures ξ on M,

$$\lim_{n \to \infty} \|\xi K^n - \mu\|_{TV} = 0. \tag{3.6}$$

In particular, μ is unique.

Proof

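We use a simple coupling argument. Let (Hn) be an independent copy of (Jn) and let J0 ~ ξ and H0 ~ μ. For n ≥ 0, let Sn (resp. Tn) be the times at which (Jn) (resp. (Hn)) hit P for the (n + 1)st time. Then Sn+1 − Sn, n ≥ 0, are iid random variables with finite expected value and nonlattice distribution, and (Sn+1 − Sn)n≥0 ~ (Tn+1 − Tn)n≥0. It follows that (Sn − Tn)n≥0 is a random walk with mean zero, nonlattice increments. Thus, its first time to hit 0 is finite almost surely. So

$$\zeta := \min\{n \ge 0 : J_n \in P \text{ and } H_n \in P\}$$

obeys ℙ(ζ ≥ n) → 0 as n → ∞. Note that, conditionally on ζ < n, Jn and Hn have the same law, since both chains restart according to ρ at time ζ. Thus, for every C ⊂ M,

$$|\mathbb{P}_\xi(J_n \in C) - \mathbb{P}_\mu(H_n \in C)| = |\mathbb{P}(J_n \in C,\ \zeta \ge n) - \mathbb{P}(H_n \in C,\ \zeta \ge n)| \le \mathbb{P}(\zeta \ge n).$$

Since μ is stationary for (Hn) we have ℙμ(Hn ∈ C) = μ(C). Now

$$\|\xi K^n - \mu\|_{TV} = 2 \sup_{C \subset M} |\mathbb{P}_\xi(J_n \in C) - \mu(C)| \le 2\, \mathbb{P}(\zeta \ge n) \to 0,$$

which establishes the convergence result. To see uniqueness, suppose ξ is another invariant probability measure for (Jn); then the last display becomes ||ξ − μ||TV ≤ 2ℙ(ζ ≥ n). Letting n → ∞ shows that ξ = μ.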

We now consider a class of problems where there is a smooth one-dimensional reaction coordinate ψ : Ω → [0, 1] tracking the progress of (Xt) from R to P. In this case ψ|R ≡ 0, ψ|P ≡ 1, the milestones M1, ..., Mm are disjoint level sets of ψ, and R = M1, P = Mm. The jump chain (Jn) can only hop between neighboring milestones, unless it is at P. That is, if Jn ∈ Mi for i ∉ {1, m}, then Jn+1 ∈ Mi−1 or Jn+1 ∈ Mi+1; if Jn ∈ M1 then Jn+1 ∈ M2; and if Jn ∈ Mm, then Jn+1 ∈ M1. Suppose that if Jn ∈ Mi for i ∉ {1, m}, then Jn+1 ∈ Mi−1 with probability in (0, 1). Such systems satisfy the aperiodicity assumption (3.5) if and only if m is odd. This is due to the fact that, starting at M1, (Jn) can reach Mm in either m − 1 or m + 1 steps with positive probability, so the return time to P can be either m or m + 2, and m and m + 2 are coprime when m is odd. On the other hand, if m is even then the conclusion of Theorem 3.4 cannot hold. To see this, let m be even and start (Jn) at an initial distribution supported on an odd-indexed milestone. Then after any even number of steps (Jn) is supported on the odd-indexed milestones, while after any odd number of steps (Jn) is supported on the even-indexed milestones.
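The parity argument can be observed numerically. The sketch below runs plain power iteration ξ ↦ ξK on the one-dimensional milestone chain just described, under the simplifying assumptions that each milestone is a single point, ρ = δ_{M1}, and interior hops are symmetric. It measures the total variation distance between two consecutive iterates after many steps.

```python
import numpy as np

def chain(m, p=0.5):
    """1D milestone chain: M_1 = R (index 0), M_m = P (index m - 1), P restarts at R."""
    K = np.zeros((m, m))
    K[0, 1] = 1.0
    for i in range(1, m - 1):
        K[i, i - 1], K[i, i + 1] = p, 1.0 - p
    K[m - 1, 0] = 1.0
    return K

def tv(a, b):
    """Total variation norm of the signed measure a - b (at most 2 for probabilities)."""
    return np.abs(a - b).sum()

def consecutive_gap(m, n=200):
    """||xi K^{n+1} - xi K^n||_TV for xi concentrated on M_1."""
    K = chain(m)
    xi = np.eye(m)[0]
    xiKn = xi @ np.linalg.matrix_power(K, n)
    return tv(xiKn, xiKn @ K)

gap_odd = consecutive_gap(5)    # m odd: the iterates converge, so the gap is ~0
gap_even = consecutive_gap(4)   # m even: supports alternate, so the gap stays at 2
```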

Theorem 3.4 establishes convergence of the time marginals of (Jn), that is, the distributions of Jn at fixed times n. (Jn) also converges in a time-averaged sense, even if (3.5) does not hold. More precisely, we have the following version of the Birkhoff ergodic theorem:

Theorem 3.5

Let J0 ~ ξ, with ξ a probability measure on M. For bounded f : M → ℝ,

$$\lim_{n \to \infty} \frac{1}{n} \sum_{k=0}^{n-1} f(J_k) = \mu f \quad \text{almost surely}. \tag{3.7}$$

Proof

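Let Sn be the times at which (Jn) hits P for the (n + 1)st time, and define

$$f_n := \sum_{k = S_n + 1}^{S_{n+1}} f(J_k), \qquad n \ge 0.$$

Note that fn, n ≥ 0, are iid. Let k(n) = max{k : Sk ≤ n} and write

$$\frac{1}{n} \sum_{j=0}^{n} f(J_j) = \frac{1}{n} \sum_{j=0}^{S_0} f(J_j) + \frac{1}{n} \sum_{i=0}^{k(n)-1} f_i + \frac{1}{n} \sum_{j = S_{k(n)} + 1}^{n} f(J_j).$$

Since (Jn) hits P in finite time a.s., n − Sk(n) and S0 are finite a.s. Thus,

$$\lim_{n \to \infty} \frac{1}{n} \sum_{j=0}^{n} f(J_j) = \lim_{n \to \infty} \frac{k(n)}{n} \cdot \frac{1}{k(n)} \sum_{i=0}^{k(n)-1} f_i.$$

Notice Rn := Sn+1 − Sn, n ≥ 0 are iid with finite expectation and

$$\mathbb{E}[R_0] = \mathbb{E}_\rho[\sigma_P] + 1 = \nu(M), \qquad \mathbb{E}[f_0] = \mathbb{E}_\rho\left[\sum_{n=0}^{\sigma_P} f(J_n)\right] = \nu f.$$

By the previous two displays and the law of large numbers, k(n)/n → 1/𝔼[R0] and

$$\lim_{n \to \infty} \frac{1}{n} \sum_{j=0}^{n} f(J_j) = \frac{\nu f}{\nu(M)} = \mu f \quad \text{a.s.},$$

with ν defined as in the proof of Theorem 3.1.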

Theorem 3.5 shows that problems in sampling μ arising from periodicity can be managed by averaging over time.
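A minimal illustration, assuming again the hypothetical one-dimensional chain with single-point milestones: for m = 4 the iterates ξKⁿ keep alternating between odd- and even-indexed milestones, but their running average (1/n) Σ_{k<n} ξKᵏ, which is the expected occupation measure in (3.7), approaches μ.

```python
import numpy as np

def chain(m, p=0.5):
    """1D milestone chain: R = 0, P = m - 1, P restarts at R."""
    K = np.zeros((m, m))
    K[0, 1] = 1.0
    for i in range(1, m - 1):
        K[i, i - 1], K[i, i + 1] = p, 1.0 - p
    K[m - 1, 0] = 1.0
    return K

def stationary(K):
    """Unique invariant probability vector of an irreducible stochastic matrix."""
    n = K.shape[0]
    A = K.T - np.eye(n)
    A[-1, :] = 1.0
    return np.linalg.solve(A, np.eye(n)[-1])

def cesaro_average(K, xi, n):
    """(1/n) sum_{k<n} xi K^k, the time-averaged law of J_0, ..., J_{n-1}."""
    total = np.zeros_like(xi)
    cur = xi.copy()
    for _ in range(n):
        total += cur
        cur = cur @ K
    return total / n

K = chain(4)                     # m even: xi K^n itself does not converge
mu = stationary(K)
xi = np.eye(4)[0]
err_avg = np.abs(cesaro_average(K, xi, 2000) - mu).sum()
err_last = np.abs(xi @ np.linalg.matrix_power(K, 2000) - mu).sum()
```

The averaged error decays like 1/n, while the error of the last iterate stays of order one.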

Markov chains for which the conclusion of Theorem 3.4 holds are called Harris ergodic. It is worth noting that Harris ergodicity, together with aperiodicity of the sojourn times, also leads to a limit for the time marginals of (Yt). More precisely, suppose (3.5) holds and for each x ∈ M \ P and y ∈ M, ℙx(τ1 ∈ · | J1 = y) is nonlattice. Then for any C ⊂ M and μ-a.e. x,

$$\lim_{t \to \infty} \mathbb{P}_x(Y_t \in C) = \frac{\int_C \mathbb{E}_y[\tau_M^y]\, \mu(dy)}{\mathbb{E}_\mu[\tau_M]}. \tag{3.8}$$

See [2] for details and proof. When the right hand side of (3.8) has a density, it is the same as the stationary probability density p(x) in [3] for the last milestone point passed.

4. Exact Milestoning algorithm

We now describe in detail an algorithm for sampling the stationary measure μ and the MFPT 𝔼ρ[τP], used successfully for instance in [3] and [4]. Let ξ be an initial guess for μ. For example, if (Xt) is Brownian or Langevin dynamics, we usually take ξ to be the canonical Gibbs distribution. We assume here that the conclusion of Theorem 3.4 holds. We write Mi for the distinct milestones, so that M = ∪iMi and Mi, Mj have disjoint interiors when i ≠ j. The algorithm will produce approximations

$$\mu^{(0)} = \xi,\ \mu^{(1)},\ \mu^{(2)},\ \ldots$$

of μ. Let $\mu_i^{(n)}$ be the non-normalized restriction of $\mu^{(n)}$ to Mi, and define

$$K_i^{(n)}(dy) := \frac{\mu_i^{(n)} K(dy)}{\mu_i^{(n)}(M_i)}.$$

(This is a slight abuse of notation, since $\mu_i^{(n)}$ is not a probability distribution: it is not normalized.) For C ⊂ Mj we will also use the notation

$$K_{ij}^{(n)}(C) := K_i^{(n)}(C).$$

Below we think of $\mu_i^{(n)}$ and $K_{ij}^{(n)}$ as either distributions or densities. The $K_{ij}^{(n)}$ are obtained from observations of trajectory fragments. A simple Monte Carlo scheme for approximating these distributions is as follows. Let x1, ..., xL be iid samples from the normalized distribution $\mu_i^{(n)}/\mu_i^{(n)}(M_i)$. From each sample point xℓ ∈ Mi, we simulate (Xt) until it hits a neighboring milestone, say at the point yℓ ∈ Mj. If we idealize by assuming the simulation of (Xt) is done exactly, then by Chebyshev's inequality, for every bounded f : M → ℝ and η > 0,

$$\mathbb{P}\left( \left| \frac{1}{L} \sum_{\ell=1}^{L} \delta_{y_\ell} f - K_i^{(n)} f \right| \ge \eta \right) \le \frac{\|f\|_\infty^2}{L \eta^2},$$

where δy is the Dirac delta distribution at y. We therefore write, for y ∈ Mj,

$$K_{ij}^{(n)}(y) \approx \frac{1}{L} \sum_{\ell :\, y_\ell \in M_j} \tilde\delta_{y_\ell}(y), \tag{4.1}$$

where δ̃z is either some suitable approximation to the identity at z, or simply a delta function at z. Thus, in Algorithm 1 we think of $\mu_j^{(n)}$ and $K_{ij}^{(n)}$ as either densities in the former case, or as distributions in the latter. The local mean first passage times, that is, the times between milestone crossings, are approximated by the sample means

$$\mathbb{E}_{\mu_i^{(n)}}[\tau_M] \approx \frac{1}{L} \sum_{\ell=1}^{L} \tau_\ell,$$

where τℓ is the duration of the trajectory fragment from xℓ to yℓ and $\mu_i^{(n)}$ is normalized.

It is important to realize that we do not need to store the full coordinates of each yℓ in memory. Instead, it suffices to use a data-structure that keeps track of the pairs (yℓ, Mj). The actual coordinates of each point can be written to disk and read from it as needed.
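The Monte Carlo estimates of the fragment kernel and of the local mean first passage time can be illustrated on a hypothetical one-dimensional example: the milestones are the points 0, 1/2 and 1, all fragments start on the milestone at 1/2, and the dynamics is a standard Brownian motion dXt = dBt integrated with the Euler-Maruyama scheme. For this toy problem the exact answers are known (each neighbor is hit with probability 1/2, and the mean exit time from (0, 1) started at 1/2 is (1/2)(1/2) = 1/4), so the sample means can be compared with them; the discrete-time stopping rule adds a small positive bias to the exit time. Only the pairs (milestone index, duration) are kept, in the spirit of the remark above.

```python
import numpy as np

def sample_fragments(x0, lower, upper, L, dt, rng):
    """Run L Euler-Maruyama fragments of dX = dB from x0 until they leave (lower, upper).

    Returns, for each fragment, the index of the milestone hit (0 = lower,
    1 = upper) and the fragment duration; only these pairs are stored.
    """
    x = np.full(L, float(x0))
    t = np.zeros(L)
    hit = np.full(L, -1)
    active = np.ones(L, dtype=bool)
    while active.any():
        idx = np.flatnonzero(active)
        x[idx] += np.sqrt(dt) * rng.standard_normal(idx.size)
        t[idx] += dt
        hit[idx[x[idx] <= lower]] = 0
        hit[idx[x[idx] >= upper]] = 1
        active = hit < 0
    return hit, t

rng = np.random.default_rng(1)
hit, t = sample_fragments(0.5, 0.0, 1.0, L=4000, dt=1e-4, rng=rng)
A_row = np.bincount(hit, minlength=2) / hit.size   # estimate of K_i(M_j) for both neighbors
local_mfpt = t.mean()                              # estimate of the local MFPT from this milestone
```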

The eigenvalue problem in Algorithm 1 involves a stochastic matrix that is sparse. Indeed, the i-th row corresponds to milestone Mi and has at most as many non-zero entries as Mi has neighboring milestones Mj. In practice, to solve the eigenvalue problem we can use efficient and accurate Krylov subspace solvers [15] such as Arnoldi iteration [18] to obtain w without computing all the other eigenvectors.
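For a large sparse matrix A, the weight vector can be obtained with an Arnoldi-type eigensolver instead of a dense eigendecomposition. The sketch below assumes SciPy is available and uses `scipy.sparse.linalg.eigs`, a wrapper around ARPACK's implicitly restarted Arnoldi method, on Aᵀ. It asks for the eigenvalue with largest real part, which for a stochastic matrix is 1; asking for the largest magnitude instead could return the eigenvalue −1 of a periodic chain. The test matrix is the hypothetical one-dimensional milestone chain, not data from this paper.

```python
import numpy as np
from scipy.sparse import csr_matrix
from scipy.sparse.linalg import eigs

def coarse_weights(A):
    """Return w with w^T A = w^T and sum(w) = 1 for a sparse stochastic matrix A."""
    vals, vecs = eigs(A.T, k=1, which="LR")   # Arnoldi on A^T, eigenvalue 1
    w = np.real(vecs[:, 0])
    return w / w.sum()                         # fixes both the scale and the sign

def chain_sparse(m, p=0.5):
    """Sparse 1D milestone chain: R = 0, P = m - 1, P restarts at R."""
    rows, cols, vals = [0, m - 1], [1, 0], [1.0, 1.0]
    for i in range(1, m - 1):
        rows += [i, i]
        cols += [i - 1, i + 1]
        vals += [p, 1.0 - p]
    return csr_matrix((vals, (rows, cols)), shape=(m, m))

A = chain_sparse(11)
w = coarse_weights(A)
```

For this chain w(P) = 1/((m − 1)² + 1) = 1/101, which gives an independent check of the solver.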

In Algorithm 1, if the weights $w_i = \mu^{(n-1)}(M_i)$ are used instead of the solution w to wᵀA = wᵀ, then the algorithm approximates μ by simple power iteration. The reason for defining the weights as the solution to wᵀA = wᵀ is practical: we have found that it gives faster convergence of the iterations, at no apparent cost to accuracy. It can be seen as a version of power iteration that uses coarse-graining. See [3, 4] for applications of the algorithm in Exact Milestoning and [15, 18] for related discussions.
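The weight update of Algorithm 1 can be sketched on a finite toy model in which every milestone Mi consists of a few points and the exact kernel K replaces the trajectory-fragment estimates, which amounts to assuming infinite sampling. The random kernel, the grouping of points into milestones and the function name `coarse_power_iteration` are hypothetical. The sketch shows the steps "form Ki, build A, solve wᵀA = wᵀ, recombine", and checks that the stationary μ of the fine chain is a fixed point of this update and is recovered from a uniform initial guess.

```python
import numpy as np

def coarse_power_iteration(K, groups, xi, n_iter=500):
    """Iterate the weight update of Algorithm 1 with exact (infinitely sampled) kernels.

    K      : (n, n) row-stochastic jump-chain kernel on the milestone points
    groups : list of index arrays; groups[i] holds the points of milestone M_i
    xi     : initial guess, a probability vector charging every milestone
    """
    m = len(groups)
    mu = np.asarray(xi, dtype=float)
    for _ in range(n_iter):
        # K_i: law of the next crossing point when starting from mu restricted to M_i
        Ki = np.array([mu[g] @ K[g] / mu[g].sum() for g in groups])          # (m, n)
        A = np.array([[Ki[i, groups[j]].sum() for j in range(m)] for i in range(m)])
        # Coarse weights: w^T A = w^T with sum(w) = 1
        B = A.T - np.eye(m)
        B[-1, :] = 1.0
        rhs = np.zeros(m)
        rhs[-1] = 1.0
        w = np.linalg.solve(B, rhs)
        mu = w @ Ki    # mu_j <- sum_i w_i K_ij; already sums to one
    return mu

rng = np.random.default_rng(0)
groups = [np.array([0, 1]), np.array([2, 3]), np.array([4, 5])]
K = rng.uniform(0.5, 1.5, size=(6, 6))
for g in groups:
    K[np.ix_(g, g)] = 0.0          # jumps always land on a different milestone
K /= K.sum(axis=1, keepdims=True)

# Reference: stationary vector of the fine chain
B = K.T - np.eye(6)
B[-1, :] = 1.0
mu_exact = np.linalg.solve(B, np.eye(6)[-1])
mu_cg = coarse_power_iteration(K, groups, np.full(6, 1 / 6))
```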

5. Error analysis

5.1. Stationary distribution error

In practice, due to time discretization error, we cannot generate trajectories exactly according to the transition kernel K. Instead, we can generate trajectories according to a numerical approximation Kε. We investigate here whether such schemes are consistent, that is, whether the powers of the approximation Kε of K converge to a distribution με ≈ μ. We emphasize that, even though we account for time discretization here, we still assume infinite sampling, and thus for a given x ∈ M, Kε(x, dy) may be a continuous distribution. See Section 5.2 below for related remarks and a discussion of how time discretization errors affect the Exact Milestoning estimate of the MFPT.
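The consistency question can be illustrated on a finite toy chain. In the sketch below a hypothetical numerical error is modeled by mixing K with a fixed stochastic matrix E, Kε = (1 − ε)K + εE, so that Kε − K = ε(E − K) is small in operator norm. For small ε the total variation distance between με and μ then shrinks roughly in proportion to ε. The choice of E (uniform rows) and of the one-dimensional chain are assumptions made only for illustration.

```python
import numpy as np

def chain(m, p=0.5):
    """1D milestone chain: R = 0, P = m - 1, P restarts at R."""
    K = np.zeros((m, m))
    K[0, 1] = 1.0
    for i in range(1, m - 1):
        K[i, i - 1], K[i, i + 1] = p, 1.0 - p
    K[m - 1, 0] = 1.0
    return K

def stationary(K):
    """Unique invariant probability vector of an irreducible stochastic matrix."""
    n = K.shape[0]
    A = K.T - np.eye(n)
    A[-1, :] = 1.0
    return np.linalg.solve(A, np.eye(n)[-1])

m = 5                              # m odd: (J_n) is aperiodic, hence geometrically ergodic
K = chain(m)
E = np.full((m, m), 1.0 / m)       # hypothetical "error" kernel
mu = stationary(K)
eps_values = [1e-2, 1e-3, 1e-4]
tv_errors = [np.abs(stationary((1 - e) * K + e * E) - mu).sum() for e in eps_values]
```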

The following theorem, restated from [11], establishes consistency of iteration schemes based on Theorem 3.4 when Kε is sufficiently close to K and (Jn) is geometrically ergodic. After the theorem, in Lemma 5.2 and Theorem 5.3 we give natural conditions for geometric ergodicity of (Jn).

Theorem 5.1

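Suppose (Jn) is geometrically ergodic: there exist κ ∈ (0, 1) and C > 0 such that

$$\|\xi K^n - \mu\|_{TV} \le C \kappa^n \quad \text{for all } \xi \in \wp \text{ and } n \ge 0.$$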

Let {Kε} be a family of stochastic kernels with K0 = K, assumed to act continuously on ℬ, such that

| (5.1) |

Then for each κ̂ ∈ (κ, 1), there is δ > 0 such that for each ε ∈ [0, δ), Kε has a unique invariant probability measure με, and

Geometric ergodicity is inconvenient to check directly. We give two sufficient conditions for geometric ergodicity of (Jn). The first condition is a uniform lower bound on the probability to reach P in N steps; see Lemma 5.2. We use this to obtain a strong Feller condition in Theorem 5.3. The latter is a very natural condition and is easy to verify in some cases, for instance when (Xt) is a nondegenerate diffusion and the milestones are sufficiently regular.

Lemma 5.2

Suppose that there exist λ ∈ (0, 1) and N ∈ ℕ such that for all x ∈ M, ℙx(JN−1 ∈ P) ≥ λ > 0. Then (Jn) is geometrically ergodic: for all ξ ∈ ℘ and n ≥ 0,

$$\|\xi K^n - \mu\|_{TV} \le 2 (1 - \lambda)^{\lfloor n/N \rfloor}.$$

Proof

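Let ξ1, ξ2 ∈ ℘, consider the signed measure ξ = ξ1 − ξ2, which satisfies ξ(M) = 0, and compute

$$\|\xi K^N\|_{TV} = \left\| \int_M \xi(dy) \big(K^N(y, \cdot) - \lambda \rho\big) \right\|_{TV} \le \int_M |\xi|(dy)\, \big\|K^N(y, \cdot) - \lambda \rho\big\|_{TV} = (1 - \lambda)\, \|\xi\|_{TV}.$$

The last equality uses the fact that KN(y, dz) − λρ(dz) is a positive measure of total mass 1 − λ, which holds because, by (2.2), KN(y, dz) ≥ ℙy(JN−1 ∈ P) ρ(dz) ≥ λρ(dz). This shows that KN is a contraction mapping on ℘ with contraction constant (1 − λ). Observe also that ||ξ1K − ξ2K||TV ≤ ||ξ1 − ξ2||TV. The result now follows from the contraction mapping theorem. See for instance Theorem 6.40 of [9].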

Note that the λ in Lemma 5.2 is a quantity that can be estimated, at least in principle, by running trajectories of (Xt) starting at x which cross N − 1 milestones before reaching P. However, this is likely impractical for the same reason direct estimation of the MFPT is impractical: the trajectories would be too long. One alternative would be to compute, for the coarse-grained Markov chain on {1, …, m} with transition matrix A, the probability of being at the index of P after N − 1 steps, and to use its minimum over starting indices i ∈ {1, …, m} as a proxy for λ. Even without a practical way to estimate λ, we believe the characterization of Lemma 5.2 is useful for understanding the convergence rate.
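For a chain with finitely many milestone points, λ can be computed exactly as minₓ ℙx(J_{N−1} ∈ P). The contraction argument in the proof of Lemma 5.2 gives ||ξKⁿ − μ||TV ≤ (1 − λ)^⌊n/N⌋ ||ξ − μ||TV ≤ 2(1 − λ)^⌊n/N⌋. The sketch below uses the hypothetical one-dimensional chain with m = 5 single-point milestones, where the coarse matrix A coincides with K so the proxy for λ is exact. It finds the smallest N with λ > 0 and checks the bound numerically.

```python
import numpy as np

def chain(m, p=0.5):
    """1D milestone chain: R = 0, P = m - 1; P restarts at R, i.e. rho = delta_R."""
    K = np.zeros((m, m))
    K[0, 1] = 1.0
    for i in range(1, m - 1):
        K[i, i - 1], K[i, i + 1] = p, 1.0 - p
    K[m - 1, 0] = 1.0
    return K

def doeblin_constant(K, P, N):
    """lambda = min_x P_x(J_{N-1} in P) for a finite jump chain."""
    return np.linalg.matrix_power(K, N - 1)[:, P].sum(axis=1).min()

m = 5
K = chain(m)
P = np.zeros(m, dtype=bool)
P[-1] = True
N = 1
while doeblin_constant(K, P, N) == 0.0:   # smallest N with a uniform lower bound
    N += 1
lam = doeblin_constant(K, P, N)

# Stationary distribution and the geometric bound 2 (1 - lambda)^{floor(n / N)}
B = K.T - np.eye(m)
B[-1, :] = 1.0
mu = np.linalg.solve(B, np.eye(m)[-1])
xi = np.eye(m)[0]
errors, bounds = [], []
for n in range(100):
    errors.append(np.abs(xi @ np.linalg.matrix_power(K, n) - mu).sum())
    bounds.append(2.0 * (1.0 - lam) ** (n // N))
```

For this chain the smallest admissible N is 10, because returning from P to P requires 5 + 2k steps and 8 steps is impossible.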

Lemma 5.2 leads to the following condition for geometric ergodicity of (Jn).

Theorem 5.3

Suppose that M is compact and (Jn) is a strong Feller chain which is aperiodic in the sense of (3.5). Then (Jn) is geometrically ergodic.

Proof

Let ε ∈ (0, μ(P)). By Theorem 3.4, for each x ∈ M there is Nx ∈ ℕ such that ℙx(Jn ∈ P) ≥ ε for all n ≥ Nx. Because (Jn) is strong Feller, the map x ↦ ℙx(Jn ∈ P) is continuous. By compactness of M, it follows that for any λ ∈ (0, ε) there is N ∈ ℕ such that ℙy(Jn ∈ P) ≥ λ for all y ∈ M and n ≥ N − 1. Lemma 5.2 now yields the result.

5.2. MFPT error

As discussed above, Equation 3.4 can be used to estimate the MFPT 𝔼ρ[τP] by sampling μ and the local MFPTs $\mathbb{E}_x[\tau_M^x]$. The error in this estimate has two sources. First, in general we only have an approximation μ̃ ≡ με of μ. The second source of error is in the sampling of $\mathbb{E}_x[\tau_M^x]$, due to the fact that we can only simulate a time discrete version (X̃nδt) of (Xt). In Theorem 5.4 below we give an explicit formula for the numerical error of the MFPT in terms of these two sources. We first need the following notation. Let $\tilde\tau_M^x$ be the minimum of all nδt > 0 such that the line segment between X̃nδt and X̃(n+1)δt intersects M \ Mx, where X̃0 = x, and define

We emphasize that Theorem 5.4 below gives an expression for the error in the original Milestoning as well as in Exact Milestoning.

Theorem 5.4

There exists a nonnegative function ϕ such that

| (5.2) |

where

Proof

Note that

where we have written . We may write

for the term depending only on time stepping error. Note that

Combining the last three expressions yields the result.

Recall that in the above we have ignored errors from finite sampling. We now discuss the implications of those errors. In the original Milestoning, μ̃ is the canonical Gibbs distribution on the milestones. In that setting, we can typically sample independently from μ̃ on the milestones. Thus, the central limit theorem implies that the true error in the Milestoning approximation of 𝔼ρ[τP] is bounded above with high probability by the right hand side of (5.2) plus a constant times $N^{-1/2}$, where N is the number of samples. An analogous argument applies to Exact Milestoning if μ̃ is sampled by simple power iteration. For our coarse-grained version of power iteration, however, we obtain samples of μ̃ which are not independent, and thus a more detailed analysis would be required to determine the additional error from finite sampling.

We do not analyze the time discretization error ϕ(δt) and instead refer the reader to [14] and references therein. Here we simply remark that, if (Xt) is a diffusion process, then under certain smoothness assumptions on the drift and diffusion coefficients of (Xt) and on M, we have $\phi(\delta t) = O(\delta t^{1/2})$ when (X̃nδt) is the standard Euler time discretization with time step δt. See [13] for details and proof and see [5, 16] for numerical schemes that mitigate time discretization error in the MFPTs.

6. Illustrative examples

In this section we present two examples of Milestoning on the torus Ω = ℝ2/ℤ2. The process (Xt) will be governed by the Brownian dynamics equation,

$$dX_t = -\nabla U(X_t)\, dt + \sqrt{2\beta^{-1}}\, dB_t,$$

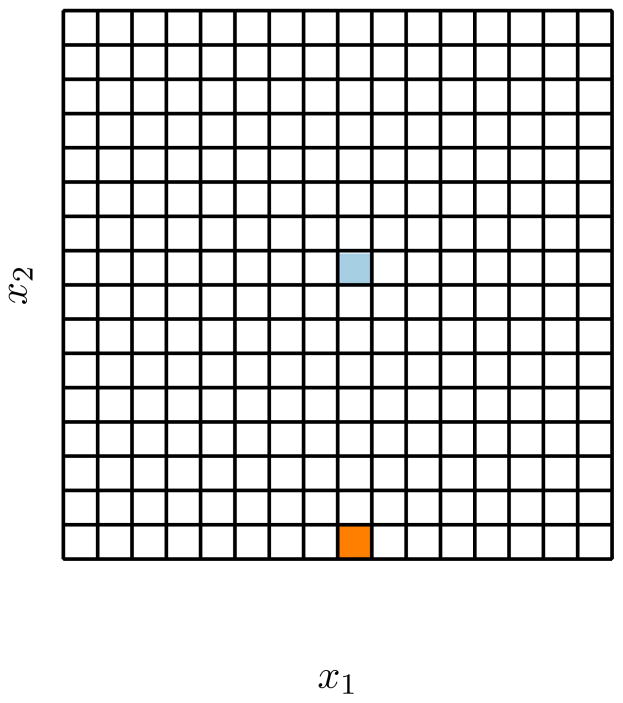

where U : Ω → ℝ is a smooth potential energy function, β > 0 is the inverse temperature, and (Bt) is a standard Brownian motion. For our computations, we consider a uniformly spaced mesh of milestones with fixed product and reactant sets P, R; see Figure 2.
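Trajectory fragments for these examples can be generated with a simple Euler-Maruyama discretization. The sketch below assumes the Brownian dynamics take the standard form dXt = −∇U(Xt) dt + (2β⁻¹)^{1/2} dBt and wraps positions back onto the unit torus after each step. The function name, step size and example potential U(x) = cos(2πx₁) + cos(2πx₂) are hypothetical, and milestone detection is omitted.

```python
import numpy as np

def euler_maruyama_torus(x0, grad_U, beta, dt, n_steps, rng):
    """Euler-Maruyama for dX = -grad U(X) dt + sqrt(2/beta) dB on the torus R^2/Z^2."""
    x = np.mod(np.asarray(x0, dtype=float), 1.0)
    path = np.empty((n_steps + 1, 2))
    path[0] = x
    noise_scale = np.sqrt(2.0 * dt / beta)
    for k in range(n_steps):
        x = x - grad_U(x) * dt + noise_scale * rng.standard_normal(2)
        x = np.mod(x, 1.0)          # periodic boundary conditions
        path[k + 1] = x
    return path

def grad_U(x):
    """Gradient of the hypothetical potential U(x) = cos(2 pi x_1) + cos(2 pi x_2)."""
    return -2.0 * np.pi * np.sin(2.0 * np.pi * x)

rng = np.random.default_rng(0)
path = euler_maruyama_torus([0.1, 0.9], grad_U, beta=1.0, dt=1e-4, n_steps=1000, rng=rng)
```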

Fig. 2.

Diagram showing the reactant state (orange square) and the product state (blue square) within the set of all milestones for the example in Section 6. Each milestone is an edge of one of the small squares in the diagram. (The total number of milestones has been decreased to enhance visibility.)

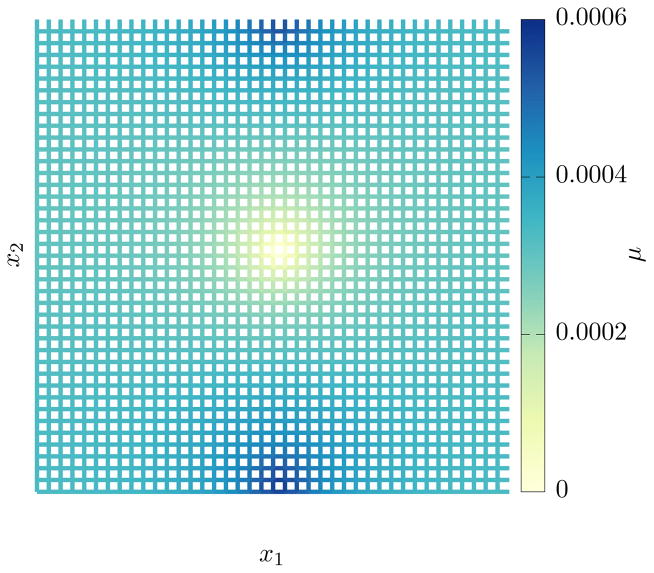

6.1. Constant potential energy

In the case where U is a constant function, the corresponding canonical Gibbs distribution, whose density is proportional to $e^{-\beta U}$, is uniform. However, the stationary distribution μ obtained in Milestoning is not uniform, because every trajectory reaching the product state P is immediately restarted in R according to ρ, as determined by (2.2). The resulting density of μ is shown in Figure 3.
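The effect of the restart can be reproduced in a toy model introduced here purely for illustration (it is not the two-dimensional example of the text): five milestones on a line, with reactant R = milestone 0 and product P = milestone 4. With a constant potential, a trajectory leaving an interior milestone is assumed equally likely to reach either neighbor; milestone 0 can only lead to milestone 1 (as if a reflecting wall sat just behind R), and a trajectory reaching P is restarted at R. Power iteration μ ← μK on this hypothetical transition matrix gives a stationary distribution that is far from uniform.

```python
def milestone_chain(m):
    """Transition matrix K[i][j] for m milestones on a line, R = 0, P = m - 1."""
    K = [[0.0] * m for _ in range(m)]
    K[0][1] = 1.0  # assumed reflecting wall behind R
    for i in range(1, m - 1):
        K[i][i - 1] = K[i][i + 1] = 0.5  # constant potential: symmetric hops
    K[m - 1][0] = 1.0  # reaching P restarts the trajectory at R
    return K


def stationary_by_power_iteration(K, iters=1000):
    """Iterate mu <- mu K from the uniform distribution."""
    m = len(K)
    mu = [1.0 / m] * m
    for _ in range(iters):
        mu = [sum(mu[i] * K[i][j] for i in range(m)) for j in range(m)]
    return mu


mu = stationary_by_power_iteration(milestone_chain(5))
print([round(p, 4) for p in mu])  # [0.2353, 0.3529, 0.2353, 0.1176, 0.0588]
```

The exact stationary vector is (4, 6, 4, 2, 1)/17: mass piles up near R, where restarted trajectories re-enter, and thins out toward P.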

Fig. 3.

Stationary distribution μ corresponding to a system with constant potential energy for the example in Section 6. There are 2 × 40 × 40 = 3200 milestones in total.

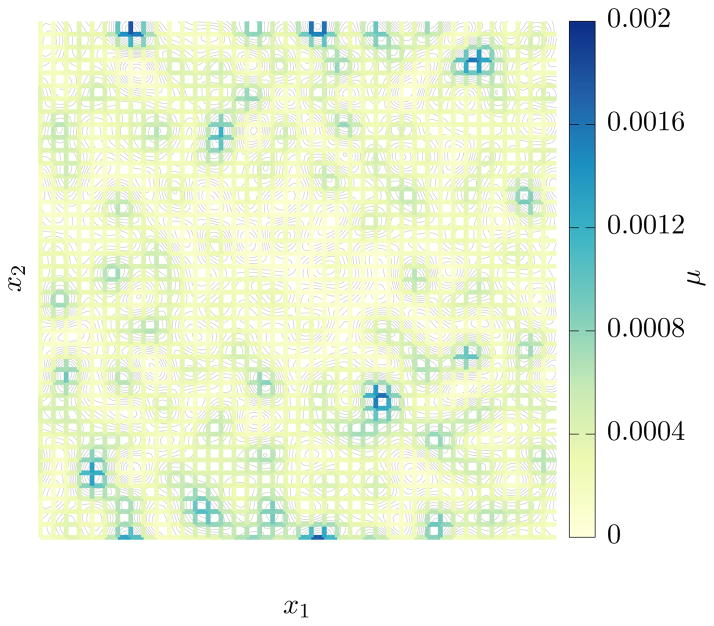

6.2. Rough energy landscape

In this case, we consider U of the form

(6.1)

where N is a parameter determining the maximum allowed frequency. The constants $C_{k_1,k_2}$ are given by

with $c_{k_1,k_2}$ uniformly distributed in the interval ( ). The random functions $f_{k_1,k_2}$ are defined as

Therefore, the potential energy function U is a random, sparse, periodic function on the two-dimensional torus. Figure 4 displays the stationary distribution for one particular realization of this random potential; (the density of) μ is concentrated in a few basins of attraction.

Fig. 4.

Stationary density μ on a rough energy landscape for the example in Section 6. The contour lines are the level sets of U.

Acknowledgments

This research was supported in part by NIH grant GM59796 and by Welch Foundation Grant No. F-1783.

References

- 1. Allen MP, Tildesley DJ. Computer Simulation of Liquids. Oxford Science Publications, Clarendon Press; 1989.

- 2. Alsmeyer G. On the Markov renewal theorem. Stochastic Processes and their Applications. 1994;50:37–56.

- 3. Bello-Rivas JM, Elber R. Exact milestoning. The Journal of Chemical Physics. 2015;142:094102. doi: 10.1063/1.4913399.

- 4. Bello-Rivas JM, Elber R. Simulations of thermodynamics and kinetics on rough energy landscapes with milestoning. 2015. doi: 10.1002/jcc.24039.

- 5. Bouchard B, Geiss S, Gobet E. First time to exit of a continuous Itô process: general moment estimates and $L^1$-convergence rate for discrete time approximations. 2013:1–31.

- 6. Cardenas AE, DeLeon KY, Hegefeld WA, Kuczera K, Jas GS, Elber R. Unassisted Transport of Block Tryptophan through DOPC Membrane: Experiment and Simulation. 2012. doi: 10.1021/jp2102447.

- 7. Chodera JD, Singhal N, Pande VS, Dill KA, Swope WC. Automatic discovery of metastable states for the construction of Markov models of macromolecular conformational dynamics. Journal of Chemical Physics. 2007;126. doi: 10.1063/1.2714538.

- 8. Dellago C, Bolhuis PG, Geissler PL. Transition Path Sampling. 2002;123. doi: 10.1146/annurev.physchem.53.082301.113146.

- 9. Douc R, Moulines E, Stoffer D. Nonlinear Time Series: Theory, Methods and Applications with R Examples. Chapman & Hall/CRC Texts in Statistical Science. Taylor & Francis; 2014.

- 10. Faradjian AK, Elber R. Computing time scales from reaction coordinates by milestoning. Journal of Chemical Physics. 2004;120:10880–10889. doi: 10.1063/1.1738640.

- 11. Ferré D, Hervé L, Ledoux J. Regular perturbation of V-geometrically ergodic Markov chains. Journal of Applied Probability. 2013;50:184–194.

- 12. Frenkel D, Smit B. Understanding Molecular Simulation: From Algorithms to Applications. 2002.

- 13. Gobet E, Menozzi S. Exact approximation rate of killed hypoelliptic diffusions using the discrete Euler scheme. Stochastic Processes and their Applications. 2004;112:201–223.

- 14. Gobet E, Menozzi S. Stopped diffusion processes: Boundary corrections and overshoot. Stochastic Processes and their Applications. 2010;120:130–162.

- 15. Golub GH, Van Loan CF. Matrix Computations. 4th ed. Johns Hopkins Studies in the Mathematical Sciences. Johns Hopkins University Press; Baltimore, MD: 2013.

- 16. Higham DJ, Mao X, Roj M, Song Q, Yin G. Mean Exit Times and the Multilevel Monte Carlo Method. SIAM/ASA Journal on Uncertainty Quantification. 1(1):2–18.

- 17. Kirmizialtin S, Elber R. Revisiting and computing reaction coordinates with Directional Milestoning. The Journal of Physical Chemistry A. 2011;115:6137–6148. doi: 10.1021/jp111093c.

- 18. Lehoucq RB, Sorensen DC, Yang C. ARPACK Users' Guide. Vol. 6 of Software, Environments, and Tools. Society for Industrial and Applied Mathematics (SIAM); Philadelphia, PA: 1998.

- 19. Metzner P, Schütte C, Vanden-Eijnden E. Transition Path Theory for Markov Jump Processes. 2009.

- 20. Moroni D, Bolhuis PG, Van Erp TS. Rate constants for diffusive processes by partial path sampling. Journal of Chemical Physics. 2004;120:4055–4065. doi: 10.1063/1.1644537.

- 21. Ruymgaart AP, Cardenas AE, Elber R. MOIL-opt: Energy-Conserving Molecular Dynamics on a GPU/CPU system. Journal of Chemical Theory and Computation. 2011;7:3072–3082. doi: 10.1021/ct200360f.

- 22. Sarich M, Noé F, Schütte C. On the Approximation Quality of Markov State Models. 2010.

- 23. Schlick T. Molecular Modeling and Simulation: An Interdisciplinary Guide. 2010.

- 24. Shaw DE, Chao JC, Eastwood MP, Gagliardo J, Grossman JP, Ho CR, Ierardi DJ, Kolossváry I, Klepeis JL, Layman T, McLeavey C, Deneroff MM, Moraes MA, Mueller R, Priest EC, Shan Y, Spengler J, Theobald M, Towles B, Wang SC, Dror RO, Kuskin JS, Larson RH, Salmon JK, Young C, Batson B, Bowers KJ. Anton, a special-purpose machine for molecular dynamics simulation. 2008.

- 25. Stone JE, Phillips JC, Freddolino PL, Hardy DJ, Trabuco LG, Schulten K. Accelerating molecular modeling applications with graphics processors. Journal of Computational Chemistry. 2007;28:2618–2640. doi: 10.1002/jcc.20829.

- 26. Swenson DWH, Bolhuis PG. A replica exchange transition interface sampling method with multiple interface sets for investigating networks of rare events. Journal of Chemical Physics. 2014;141. doi: 10.1063/1.4890037.

- 27. Vanden-Eijnden E, Venturoli M. Exact rate calculations by trajectory parallelization and tilting. The Journal of Chemical Physics. 2009;131:1–7. doi: 10.1063/1.3180821.

- 28. Warmflash A, Bhimalapuram P, Dinner AR. Umbrella sampling for nonequilibrium processes. The Journal of Chemical Physics. 2007;127:154112. doi: 10.1063/1.2784118.