Abstract

Face recognition usually takes place in a social context, where faces are surrounded by other stimuli. These can act as distracting flankers which impair recognition. Previous work has suggested that flankers expressing negative emotions distract more than positive ones. However, the various negative emotions differ in their relative impact and it is unclear whether all negative emotions are equally distracting. We investigated the impact of three negative (angry, fearful, sad) and one positive (happy) facial flanker conditions on target recognition in an emotion discrimination task. We examined the effect of the receiver’s gender, and the impact of two different temporal delays between flanker and target onset, as stimulus onset asynchrony is assumed to affect distractor strength. Participants identified and rated the emotional intensity of target faces surrounded by either face (emotional and neutral) or non-face flankers. Target faces were presented either simultaneously with the flankers, or delayed by 300 ms. Contrary to our hypothesis, negative flankers did not exert stronger distraction effects than positive or neutral flankers. However, happy flankers reduced recognition performance. Results of a follow-up experiment with a balanced number of emotion categories (one positive, one negative and one neutral flanker condition) suggest that the distraction effect of emotional flankers depends on the composition of the emotion categories. Additionally, congruency effects were found to be valence-specific and overruled by threat stimuli. Females responded more quickly and rated targets in happy flankers as less intense. This indicates a gender difference in emotion processing, with greater sensitivity to facial flankers in women. Targets were rated as more intense when they were presented without a temporal delay, possibly due to a stronger flanker contrast. 
These three experiments show that an exceptional processing of threat-related flanker stimuli depends on emotion category composition, which should be considered a mediating factor when examining emotional context effects.

Keywords: threat superiority, distractor, congruency, gender differences, emotional face processing, flanker, stimulus onset asynchrony

Introduction

Our ability to rapidly infer someone else’s mental state by deciphering their facial expression is an essential prerequisite for successful human social interactions, which serve adaptive purposes (Ekman and Friesen, 1971; Shariff and Tracy, 2011). Studies investigating facial emotion processing have typically employed standardized batteries of isolated static faces (Adolphs, 2002). But because isolated faces do not capture the complexity present in our everyday social interactions, researchers have begun to study contextual influences on face and emotion perception (Righart and de Gelder, 2008b; Van den Stock and de Gelder, 2012). Several studies have confirmed a biasing effect by contextual information originating from multiple sources, e.g., background scenes (Righart and de Gelder, 2006; den Stock et al., 2014), body expressions (Van den Stock et al., 2007), or faces (Lavie et al., 2003). Distraction effects caused by context are reported to be automatic and unintentional (Aviezer et al., 2011; Van den Stock and de Gelder, 2014), and stronger when contextual stimuli are of social relevance (Lavie et al., 2003).

Contextual stimuli communicating threat (e.g., anger or fear) seem to be advantageous in capturing attention, which might be explained by evolutionary mechanisms regarding the importance of threat detection. The latter is supported by results on the Face-in-the-Crowd (FITC) visual search task. This task requires participants to detect the unique emotional expression (e.g., anger) in a crowd of emotionally homogeneous distractor faces. In line with this, an angry target face is identified faster and more accurately when surrounded by neutral or emotional distractor faces compared to happy or neutral targets (Hansen and Hansen, 1988; Eastwood et al., 2001; Öhman et al., 2001). An alternate explanation for a threat-specific superiority suggests low-level perceptual differences responsible for “pop-out” effects. This assumes that angry faces draw attention due to their discontinuity with the lower face boundaries (chin, upward u-shape) compared to happy face shapes, which are more congruent with the general face boundaries (Coelho et al., 2010; Purcell and Stewart, 2010). The interpretation is mainly supported by studies using simple schematically drawn faces. Yet, the emotional impact of such schematic faces might be insufficient to induce any measurable attentional shift, which makes it difficult to draw conclusions (Schmidt and Schmidt, 2013).

Generally, most studies investigating whether or not threatening faces draw more attention than other emotion categories have used the FITC task. In contrast, the flanker task measures response interference when a centrally presented target stimulus is surrounded by different irrelevant flankers (Eriksen and Eriksen, 1974). Originally, the stimuli consisted of letters, but were extended to emotional faces (Fenske and Eastwood, 2003). Here, negative target faces captured subjects’ attention more than positive or neutral ones; hence flanker stimuli did not exert a comparable distraction in negative targets. This was explained by a narrowing of attention in stimuli with a negative valence. Results were, however, limited to one single negative emotion, i.e., sadness, which was compared to the attention capture of happy and neutral faces. It remains unclear whether the observed effect is generalizable to facial flankers – e.g., whether negative flanker faces capture more attention than happy or neutral ones (‘distractor hypothesis’). Furthermore, it is of interest whether all negative emotions distract to the same extent or whether this is most evident for stimuli signaling fear or anger (Stenberg et al., 1998).

To investigate whether specific emotions within the negative emotion range result in stronger interference effects, we used facial flankers of either one of three negative or one positive emotion, and assessed their effects on concurrently presented target faces. We hypothesized that emotion recognition performance would be slower and less precise for targets presented with threat-expressing flankers (anger and fear) than with flankers expressing other negative (sadness), positive or neutral emotions. This may be explained by the distractor hypothesis, stating that evolutionarily salient cues capture attention and distract from the target. It is, however, also conceivable that congruency effects influence the expected results: there is evidence that congruent target-flanker combinations predict better performance than incongruent ones (‘congruency hypothesis,’ Gelder et al., 2006; Kret and Gelder, 2010; Ito et al., 2012). Yet the picture is even more complex and suggests an interaction between valence and congruence: a few studies reported valence differences with stronger congruency effects in positive compared to negative target-flanker combinations (Fenske and Eastwood, 2003; Righart and de Gelder, 2008a,b).

The influence of emotional flanker faces may differ between males and females. On the one hand, women generally perform better in emotion recognition tasks and rate emotions as more intense (Fujita et al., 1991; Hall and Matsumoto, 2004; but see Barrett et al., 1998). On the other hand, women seem to pay more attention to peripheral stimuli, as evidenced by stronger cuing effects to neutral objects as well as greater distractibility by irrelevant facial features (Merritt et al., 2007; Stoet, 2010; Feng et al., 2011). More specifically, women are reported to be more influenced by positive emotional primes only (Donges et al., 2012). Based on these inconsistent results, we considered whether potential differences between men and women in recognition accuracy and reaction times are valence-specific.

Besides the emotional impact of the flanker, the temporal dynamics of stimulus presentation [i.e., the stimulus onset asynchrony (SOA) between flanker and target stimulus presentation] are known to influence target face processing. Previous work suggests that the interference effect is strongest when target and flanker are presented simultaneously and decreases rapidly with an increasing delay between flanker and target. With SOA greater than 200 ms, the distracting effect of preceding flankers is thought to be relatively small (Taylor, 1977; Botella et al., 2002). We therefore tested two different flanker-target timing modes. In Experiment 1, we presented flankers and targets simultaneously (SOA 0 ms). In Experiment 2, the onset of the flankers preceded the central targets by 300 ms (SOA 300 ms). We expected antecedent flankers to result in higher accuracy and intensity ratings of target faces due to a weaker interference effect, compared to a simultaneous onset of flanker and target (SOA 0 ms).

The aim of the present study was to investigate whether an influence of task-irrelevant facial flankers can be explained by the ‘distractor hypothesis’ (worse performance when targets are surrounded by threat-associated flankers). Our approach also enabled us to test whether results fit the ‘congruency hypothesis’ and to take into account potential valence-specificity for congruency. Gender differences as well as temporal dynamics (SOA) were additionally taken into consideration, due to their previously reported interfering influence.

Materials and Methods

Two separate experiments were conducted, one with flanker and target faces presented simultaneously (Experiment 1, SOA 0 ms), the other with the onset of flankers preceding the onset of the target (Experiment 2, SOA 300 ms). All other aspects (material and stimuli, task) were held constant across the two experiments. The studies were performed in accordance with the Declaration of Helsinki and approved by the local institutional review board (protocol number EK 040/12). Participants were informed about the study protocol and gave written informed consent. They were reimbursed with 10 Euro.

Material and Stimuli

Pictures of 10 male and 10 female characters were selected from a standardized stimulus set (Gur et al., 2002). They were comparable in age, emotion intensity, emotion valence, and visual luminance.

Stimuli in both experiments consisted of color pictures of a centrally presented face (‘target’) surrounded by six other faces (‘flanker’), all presented on gray background (Figure 1). Target and flanker faces each consisted of five different emotion categories (fearful, sad, angry, happy, and neutral). Additional ‘scrambled’ non-face flankers were included as a control condition (created with mosaic filter in Adobe® Photoshop® 6.0; for example stimuli see Supplementary Figure S1). The gender ratio of target faces and context faces was balanced.

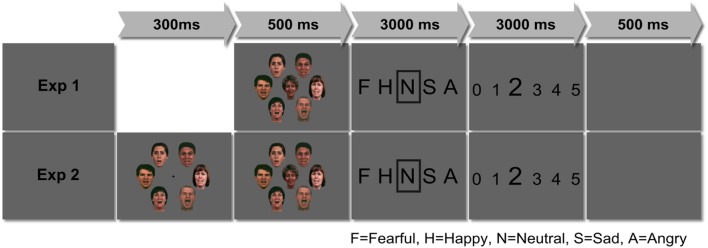

FIGURE 1.

Experimental design set-up. In Experiment 1, flanker-target combinations were presented simultaneously for 500 ms. In Experiment 2, flanker presentation preceded the target face by 300 ms; flankers and targets were then presented together for 500 ms.

All faces were adjusted to a size of 3.6 cm × 5.8 cm (3.9° × 6.26°). The target face was presented in the center of the screen, and the flanker faces were arranged in a circle with a diameter of 11.73 cm (12.7°). The distance from the midpoint of the target face to the midpoint of the flanker faces was 6.4° of visual angle at a viewing distance of 53 cm (compare Fox et al., 2000; Adamo et al., 2010a,b). Stimuli were presented using Presentation® (version 14, Neurobehavioral Systems, Inc., San Francisco, CA, USA).
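The reported visual angles follow from the stimulus sizes and the 53 cm viewing distance. As a minimal sketch (the function name `visual_angle_deg` is illustrative and not part of the original study materials), the standard formula θ = 2·arctan(s / 2d) can be checked as follows:

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle (in degrees) subtended by an object of the given size."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


VIEWING_DISTANCE_CM = 53  # viewing distance stated in the text

face_width = visual_angle_deg(3.6, VIEWING_DISTANCE_CM)
face_height = visual_angle_deg(5.8, VIEWING_DISTANCE_CM)
circle_diameter = visual_angle_deg(11.73, VIEWING_DISTANCE_CM)

print(f"face: {face_width:.2f} x {face_height:.2f} deg")
print(f"flanker circle diameter: {circle_diameter:.2f} deg")
```

Under this formula the face size comes out at roughly 3.9° × 6.26°, and the circle diameter at about 12.6°, close to the reported 12.7°.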

Each experiment consisted of 520 trials. A total of 100 different target faces (20 actors × 5 target emotions) were each shown with five different emotional flanker categories (e.g., a happy target face was surrounded by either happy, sad, neutral, angry, or fearful facial flankers). Additionally, 20 target faces were surrounded by ‘scrambled’ non-face flankers. Conditions were presented in a pseudo-randomized order. Experiments consisted of a 6 (five flanker emotions plus scrambled condition) × 5 (target emotion) design.
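The trial count can be reproduced by enumerating the design. The following is only a sketch: the assignment of target emotions to the 20 scrambled-flanker trials and the plain seeded shuffle are assumptions, since the text specifies neither which faces were paired with scrambled flankers nor the pseudo-randomization rule. All names (`build_trials`, `ACTORS`, `EMOTIONS`) are hypothetical.

```python
import itertools
import random

ACTORS = list(range(1, 21))  # 20 actors (10 male, 10 female)
EMOTIONS = ["fearful", "sad", "angry", "happy", "neutral"]


def build_trials(seed: int = 0) -> list[dict]:
    """Return a shuffled list of trials for one experiment."""
    # 20 actors x 5 target emotions x 5 flanker emotions = 500 face-flanker trials
    trials = [
        {"actor": actor, "target": target, "flanker": flanker}
        for actor, target, flanker in itertools.product(ACTORS, EMOTIONS, EMOTIONS)
    ]
    # Assumption: one scrambled-flanker trial per actor, cycling through the
    # target emotions (the text only states that there were 20 such trials).
    for i, actor in enumerate(ACTORS):
        target = EMOTIONS[i % len(EMOTIONS)]
        trials.append({"actor": actor, "target": target, "flanker": "scrambled"})
    # Assumption: a seeded shuffle stands in for the pseudo-randomized order.
    random.Random(seed).shuffle(trials)
    return trials


trials = build_trials()
n_congruent = sum(t["target"] == t["flanker"] for t in trials)
print(len(trials), n_congruent)
```

With these assumptions the list contains 520 trials, 100 of which are congruent (target and flanker emotion match).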

Task

In Experiment 1, target-flanker combinations were presented simultaneously for 500 ms (SOA 0 ms), followed by an emotion identification period of up to 3000 ms (Figure 1). In Experiment 2, the target-flanker presentation (500 ms) was preceded by the presentation of the flanker alone for 300 ms (SOA 300 ms). During this interval, a fixation cross was presented in the center of the facial flankers to announce the position of the subsequent target face and facilitate gaze fixation. After 300 ms, the fixation cross was replaced by the target face without any change in the surrounding flanker.

Apart from the difference in flanker-target presentation latency (SOA 0 ms vs. SOA 300 ms) the task was the same in both experiments: participants were instructed to identify the target face’s emotion (choosing from all presented emotions plus neutral) via keyboard button press. This was followed by a period of up to 3000 ms during which participants rated the intensity of the target emotion on a Likert scale ranging from 0 to 5. A blank screen was presented for 500 ms before the next trial onset. If participants chose ‘neutral,’ no intensity rating was prompted. Halfway through the experiment, participants could take a break for as long as they wanted.
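The trial structure described above can be summarized as a sequence of phases. This is a sketch for illustration only, not the original Presentation® script; the function name `trial_timeline` and the phase labels are hypothetical, and response windows are listed at their maximum length.

```python
def trial_timeline(soa_ms: int, chose_neutral: bool = False) -> list[tuple[str, int]]:
    """List the phases of one trial as (label, duration in ms)."""
    phases = []
    if soa_ms > 0:
        # Experiment 2: flankers alone, with a fixation cross at the target position
        phases.append(("flankers + fixation cross", soa_ms))
    phases.append(("flankers + target", 500))
    phases.append(("emotion identification (max)", 3000))
    if not chose_neutral:
        # No intensity rating was prompted after a 'neutral' response
        phases.append(("intensity rating (max)", 3000))
    phases.append(("blank screen", 500))
    return phases


def max_duration_ms(phases: list[tuple[str, int]]) -> int:
    """Upper bound on trial length if both response windows run out."""
    return sum(duration for _, duration in phases)


for soa in (0, 300):
    print(soa, max_duration_ms(trial_timeline(soa)))
```

A trial therefore lasts at most 7000 ms in Experiment 1 and 7300 ms in Experiment 2, and is shorter whenever participants respond before a window closes.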

Participants

Experiment 1 included 28 healthy adults (14 males, M age = 28.2 years, SD = 7.9 years). Originally, 29 participants took part in Experiment 1, but one participant was excluded due to incorrect button use. Experiment 2 included a separate sample of 28 participants (14 males, M age = 27.8 years, SD = 6.7 years). The two samples did not differ significantly in age (t(54) = 0.18, p = 0.86). Participants were recruited through local advertisements and were prescreened to confirm a negative life-time history of psychiatric disorder, neurological illness or current substance abuse (SKID light, Wittchen et al., 1997). All participants reported normal or corrected-to-normal vision.

Data Analysis

Trials with omitted responses (n = 164, 0.5%) were excluded from further analysis. All participants remained below the cut-off criterion for exclusion (10%, 52 responses; M omitted = 3.21, SD = 0.06). For each flanker emotion, relative frequencies of correctly recognized trials were computed. Three repeated measures generalized estimating equations (GEEs) were carried out in IBM® SPSS® (version 20). GEEs account for binomial distributions, correct for potential violations of normal distribution and for non-sphericity, and they enable modeling subject effects and conditions with an unequal number of trials. Each model contained data from both experiments. All models included the within-subject factor ‘flanker emotion’ (six levels), the between-subject factor ‘SOA’ (two levels), and the between-subject factor ‘gender’ (two levels). All main effects and interactions were tested for significance.
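The exclusion figures can be checked with simple arithmetic. The sketch below assumes that the percentage refers to all trials of both experiments (56 participants × 520 trials) and that a participant would be excluded when omissions exceed the cut-off; the text does not state whether the boundary itself counts. The helper `exceeds_cutoff` is hypothetical.

```python
N_PARTICIPANTS = 28 + 28        # Experiments 1 and 2
TRIALS_PER_PARTICIPANT = 520
OMITTED_TOTAL = 164
CUTOFF_PERCENT = 10

total_trials = N_PARTICIPANTS * TRIALS_PER_PARTICIPANT
omitted_share = OMITTED_TOTAL / total_trials
cutoff_trials = TRIALS_PER_PARTICIPANT * CUTOFF_PERCENT // 100


def exceeds_cutoff(n_omitted: int) -> bool:
    """Assumption: exclusion applies when omissions exceed the cut-off."""
    return n_omitted > cutoff_trials


print(f"{omitted_share:.2%} of {total_trials} trials omitted")
print(f"cut-off: {cutoff_trials} omitted responses per participant")
```

Under these assumptions the omitted share is about 0.56% of 29,120 trials, and the 10% criterion corresponds to 52 responses, as stated above.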

Three models analyzed emotion recognition accuracy, intensity ratings of correct trials (excluding trials with neutral targets since there existed no intensity ratings), and reaction times for correct trials, including the above-mentioned factors.

Three additional GEEs (analyzing recognition accuracy, intensity ratings, and reaction time) were calculated to test whether ‘congruency of flanker and target’ (within-subject factor, two levels) had an influence on the participants’ performance. The factor ‘SOA’ was also included in these models, to check for potential interactions (between-subjects factor, two levels).

All post-hoc pairwise comparisons were corrected for multiple comparisons using Bonferroni-correction.
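As an illustration of the correction, the sketch below multiplies each p-value by the number of comparisons and caps the result at 1. The p-values are hypothetical, and the assumption that all 15 pairs of the six flanker levels form one family of comparisons is ours; the analyses themselves were run in SPSS.

```python
from math import comb


def bonferroni(p_values: list[float]) -> list[float]:
    """Bonferroni-adjusted p-values: p * m, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Six flanker levels give 15 possible pairwise comparisons
n_pairs = comb(6, 2)

# Hypothetical uncorrected p-values, for illustration only
raw = [0.001, 0.02, 0.5]
print(n_pairs, bonferroni(raw))
```

A comparison survives the correction when its adjusted p-value stays below the chosen alpha level.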

Results

The overall emotion identification accuracy for the target faces was M = 77.43% (SEM = 0.13) in Experiment 1 and M = 76.55% (SEM = 0.12) in Experiment 2. The difference between the two experiments was not significant (t(54) = 0.51, p = 0.61). For confidence interval sizes of all results, please see Supplementary Tables S1–S6.

Flanker Emotion

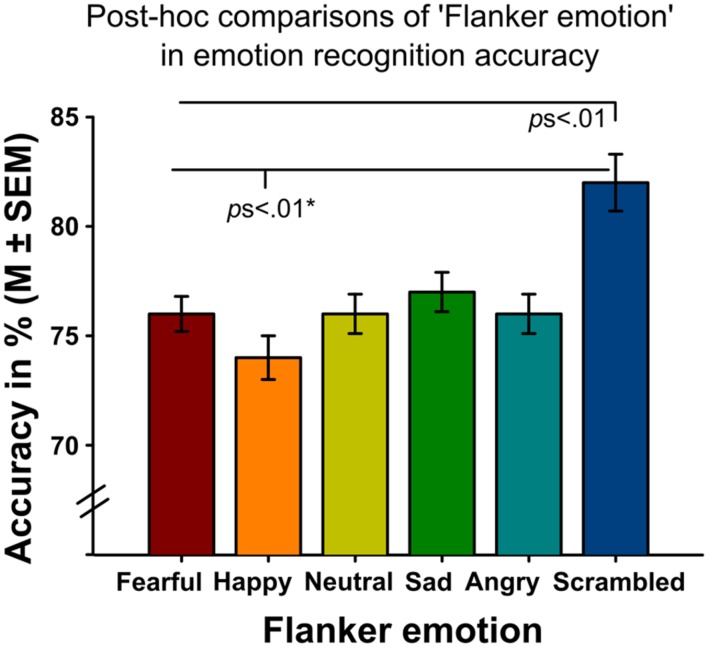

Analyses revealed significant main effects of ‘flanker emotion’ on emotion recognition accuracy (Wald-Chi2(5) = 46.109, p < 0.001, Figure 2), intensity ratings (Wald-Chi2(5) = 113.01, p < 0.001) and reaction times (Wald-Chi2(5) = 17.943, p = 0.003). Post-hoc pairwise comparisons indicated that targets surrounded by scrambled flankers were recognized better (all ps < 0.01), but more slowly (only the comparison between scrambled and sad flankers survived Bonferroni-correction, p = 0.039). Targets surrounded by scrambled flankers were additionally rated as more intense than targets surrounded by facial flankers (all ps < 0.01, Figure 3A). Target recognition was less accurate when surrounded by happy flankers (all ps < 0.01, except for angry vs. happy, p = 0.062).
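Wald chi-square statistics are conventionally referred to a chi-square distribution with the stated degrees of freedom. As a sketch, assuming this is how the reported p-values were obtained, the upper-tail probability for integer degrees of freedom can be computed with the standard library alone (the function name `chi2_sf` is illustrative):

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square variable with integer df."""
    if x < 0 or df < 1:
        raise ValueError("x must be >= 0 and df must be a positive integer")
    half = x / 2
    if df % 2 == 0:
        # Even df = 2m: exp(-x/2) * sum_{k=0}^{m-1} (x/2)^k / k!
        term, total = 1.0, 0.0
        for k in range(df // 2):
            if k > 0:
                term *= half / k
            total += term
        return math.exp(-half) * total
    # Odd df = 2m + 1:
    # erfc(sqrt(x/2)) + exp(-x/2) * sum_{k=1}^{m} (x/2)^(k - 1/2) / Gamma(k + 1/2)
    total = sum(
        half ** (k - 0.5) / math.gamma(k + 0.5) for k in range(1, df // 2 + 1)
    )
    return math.erfc(math.sqrt(half)) + math.exp(-half) * total


# Reaction-time main effect of 'flanker emotion' reported above
print(round(chi2_sf(17.943, 5), 3))
```

For the reaction-time effect (Wald-Chi2(5) = 17.943) this yields a value that rounds to 0.003, in line with the reported p-value.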

FIGURE 2.

Emotion recognition accuracy of target faces surrounded by different flanker emotions (x-axis) across both experiments. The highest emotion recognition accuracy was found for targets surrounded by scrambled flankers while targets surrounded by happy flankers showed the lowest emotion recognition accuracy. Note that the comparison of happy and angry flankers only reached statistical trend level.

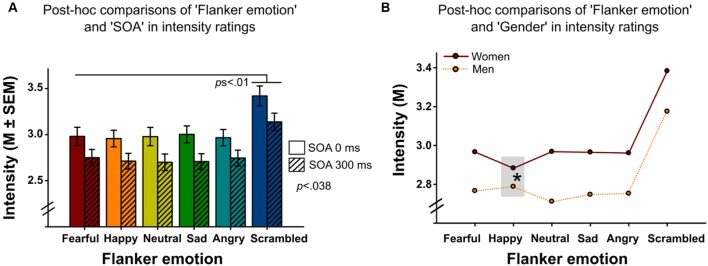

FIGURE 3.

(A) Emotion intensity ratings of target faces surrounded by different flanker emotions (x-axis) in Experiments 1 and 2. Delayed flanker-target presentations in Experiment 2 (SOA 300 ms) were associated with lower intensity ratings compared to simultaneous presentations in Experiment 1 (SOA 0 ms). Targets surrounded by scrambled flankers were rated as more intense than targets surrounded by facial flankers. (B) Mean intensity ratings of target faces surrounded by different flanker emotions (x-axis) in women and men. Females rated targets surrounded by happy flankers as significantly less intense than males did (post-hoc comparison of the interaction between the within-subject factor ‘flanker emotion’ and the between-subject factor ‘gender’ indicated by the asterisk).

Stimulus Onset Asynchrony

The main effect of ‘SOA’ reached significance for emotion intensity ratings (Wald-Chi2(1) = 4.297, p = 0.038, Figure 3A), with higher target intensity ratings following simultaneous (SOA 0 ms, Experiment 1) compared to antecedent (SOA 300 ms, Experiment 2) presentations. Other main effects did not reach significance (SOA on emotion recognition accuracy: Wald-Chi2(1) = 0.295, p = 0.59, SOA on reaction time: Wald-Chi2(1) = 0.8, p = 0.37).

Gender

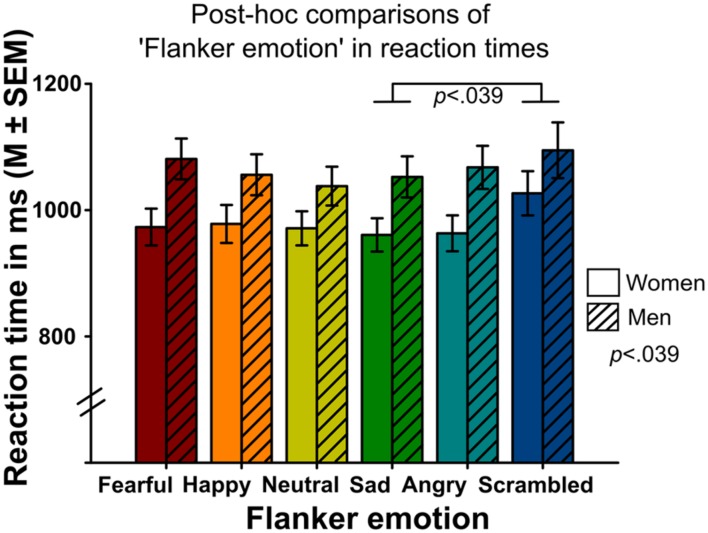

Analyses indicated a significant main effect of ‘gender’ on reaction time (Wald-Chi2(1) = 4.275, p = 0.039, Figure 4), with faster reaction times in women compared to men. Other main effects did not reach significance (gender effect on emotion recognition accuracy: Wald-Chi2(1) = 0.04, p = 0.84, gender effect on intensity ratings: Wald-Chi2(1) = 2.51, p = 0.11).

FIGURE 4.

Mean reaction times for correctly identified targets surrounded by different flanker emotions (x-axis) in women and men. Women were faster in emotion recognition than men (main effect of the between-subject factor ‘gender’). Targets in scrambled flankers were recognized more slowly than targets surrounded by sad flankers.

The interaction of ‘gender’ by ‘flanker emotion’ was also significant (Wald-Chi2(5) = 27.376, p < 0.001, Figure 3B). Women rated targets surrounded by happy flankers as least intense, while men rated them as second most intense. Other interactions did not reach significance.

Congruency

Neither the main effect of ‘congruency’ nor any of the interactions with ‘SOA’ reached significance when testing the influence of ‘congruency’ on emotion recognition accuracy, intensity ratings, and reaction time (emotion recognition: Wald-Chi2(1) = 1.31, p = 0.25, intensity ratings: Wald-Chi2(1) = 1.06, p = 0.30, reaction time: Wald-Chi2(1) = 2.87, p = 0.09).

All analyses were run additionally without the scrambled flanker condition and revealed similar results.

Experiment 3

The results from Experiments 1 and 2 raised the question of whether the unbalanced composition of negative and positive emotions (three negative flanker emotions, one positive, one neutral emotion) might be responsible for the unexpected, less accurate identification of targets in happy flankers. As the unbalanced composition was one of the major differences in comparison to earlier studies, we specifically assessed the effect of emotion category composition on target emotion recognition accuracy, intensity ratings, and reaction time in a valence-balanced design.

Methods

A third experiment was carried out using a balanced composition of emotion categories (one positive, one neutral, and one negative flanker condition, as well as scrambled non-face flankers). The experiment consisted of 480 trials. Happy, neutral, or fearful target faces were surrounded by either happy, neutral, fearful, or scrambled non-face flankers. In contrast to Experiments 1 and 2, participants rated the target emotion on a 7-point scale ranging from fearful through neutral to happy. Time until first button press was considered as an approximation of reaction time. First reactions <150 ms were excluded. Apart from these differences, material, design, and task were the same as in Experiment 2 (SOA 300 ms).

Experiment 3 included 29 healthy adults (15 males, M age = 26.1 years, SD = 5.37), conforming to the same inclusion criteria as in Experiments 1 and 2.

Three repeated measures GEEs with the within-subject factor ‘flanker emotion’ (four levels) were calculated (analyzing the outcome variables recognition accuracy, intensity ratings, and reaction time), as well as paired t-tests analyzing congruency effects (for the same outcome variables). Because hit rates follow a binomial distribution, emotion recognition accuracy was 2arcsin-transformed for the paired t-tests.
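The transformation step can be sketched as follows, assuming that ‘2arcsin’ denotes the common variance-stabilizing transform 2·arcsin(√p) for proportions. The hit rates below are hypothetical and serve only to show how transformed values would enter a paired t-statistic; the function names are illustrative.

```python
import math
import statistics


def arcsine_transform(p: float) -> float:
    """Variance-stabilizing transform for a proportion: 2 * arcsin(sqrt(p))."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    return 2 * math.asin(math.sqrt(p))


def paired_t(a: list[float], b: list[float]) -> float:
    """Paired t-statistic for two equally long lists (df = n - 1)."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    return statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))


# Hypothetical per-participant hit rates, for illustration only
congruent = [0.90, 0.85, 0.80, 0.95]
incongruent = [0.85, 0.80, 0.82, 0.88]

t_value = paired_t(
    [arcsine_transform(p) for p in congruent],
    [arcsine_transform(p) for p in incongruent],
)
print(f"t({len(congruent) - 1}) = {t_value:.2f}")
```

The transform maps proportions from [0, 1] onto [0, π] and stretches values near the boundaries, which is why it is often applied before t-tests on hit rates.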

Results

Flanker Emotion

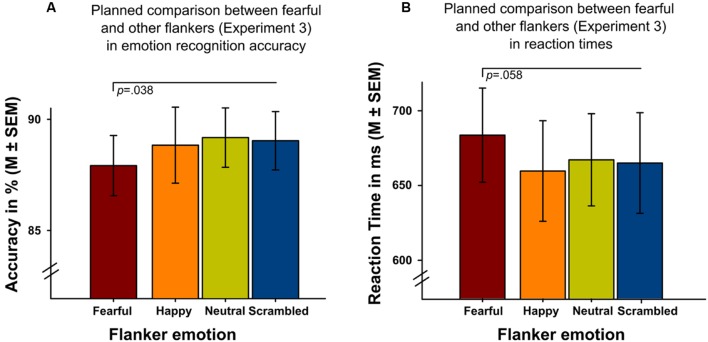

Analyses revealed a significant main effect of ‘flanker emotion’ on intensity ratings (Wald-Chi2(3) = 10.995, p = 0.012), replicating the results of the initial experiments, with higher intensity ratings of targets in scrambled flankers. Only the post-hoc comparison between scrambled and neutral flankers survived Bonferroni-correction (p = 0.006). Flanker emotion did not show significant main effects on emotion recognition accuracy (Wald-Chi2(3) = 6.027, p = 0.11) or reaction time (Wald-Chi2(3) = 4.296, p = 0.231) in a balanced design. On a descriptive level (Figure 5) it could be seen, however, that targets surrounded by fearful flanker faces were identified less accurately (Figure 5A) and more slowly (Figure 5B), compared to all other flanker conditions. Planned paired t-tests between targets surrounded by fearful flanker faces compared to all other flankers (average) were significant for emotion recognition accuracy (t(28) = 2.18, p = 0.038) and revealed a statistical trend for reaction time (t(28) = 1.98, p = 0.058) (Figure 5).

FIGURE 5.

Experiment 3 investigating emotion recognition accuracy and reaction times in target faces surrounded by four different flanker conditions (one positive, one negative, neutral and scrambled, x-axis). Planned t-tests showed (A) reduced emotion recognition accuracy (p = 0.038) and (B) longer reaction times (p = 0.058) in targets presented with fearful facial flankers compared to targets presented with other flankers.

Congruency

Results revealed that congruent target-flanker combinations were significantly better recognized than incongruent combinations (t(28) = 2.13, p = 0.042). Intensity ratings and reaction times did not differ significantly between congruent and incongruent combinations (intensity ratings: t(28) = 0.31, p = 0.76, reaction time: t(28) = 1.52, p = 0.139).

Additionally, we performed congruency analyses separately for each emotion as previous literature occasionally reported valence-specificity of congruency effects (Fenske and Eastwood, 2003; Righart and de Gelder, 2008a,b). Paired t-tests compared congruent and incongruent target-flanker combinations separately for happy and fearful target faces. Results did not reveal significant differences between fearful faces surrounded by congruent and incongruent facial flankers for emotion recognition accuracy (t(28) = 0.83, p = 0.413), intensity ratings (t(28) = 0.1, p = 0.924) or reaction time (t(28) = 0.57, p = 0.572). Happy targets, in contrast, were recognized faster (t(28) = 2.37, p = 0.025) and, at trend level, more accurately (t(28) = 1.85, p = 0.074) in congruent compared to incongruent conditions (intensity ratings: t(28) = 1.11, p = 0.662).

Discussion

Two behavioral experiments investigating emotional context effects revealed reduced emotion recognition accuracy and intensity ratings of target faces embedded in facial compared to scrambled non-face flankers. Reaction times were increased for targets associated with scrambled flankers. However, contrary to our expectations, negative flankers did not have a greater deleterious effect on the accuracy or speed of emotion recognition for target faces. Rather, it was the target faces that were surrounded by happy flankers which were identified least accurately. A follow-up study (Experiment 3) tested a balanced emotion category composition; here, the results on happy flankers were comparable to those elicited by other flanker conditions. Results of this experiment suggested worse emotion recognition performance for targets surrounded by fearful flankers.

Contrary to our expectations, delayed presentation of the target (SOA 300 ms, Experiment 2) did not result in higher recognition accuracy compared to simultaneous presentation (SOA 0 ms, Experiment 1). However, simultaneously presented targets were perceived as more intense. Our results, additionally, replicate and extend previously observed gender differences in facial emotion processing (faster reaction times, Hampson et al., 2006). They provide support for a female advantage not only in response to isolated facial expressions, but also when emotional targets are surrounded by social flankers. Gender differences interacted with flanker emotion. While both genders were less accurate in identifying targets surrounded by happy flankers, only women showed additional effects on their intensity ratings.

On the most general level, these findings indicate that social flankers are more distracting than non-meaningful scrambled ones. Although the instruction was to ignore the flankers, participants appear to have automatically reoriented their attention toward socially relevant stimuli, which may serve a function as salient social distractors (Lavie et al., 2003). In hindsight, one shortcoming of our design was the absence of a ‘target-only’ condition with no flankers. Future studies should include such a condition to directly investigate the non-specific distracting effects of flanker stimuli, by comparing social and non-social flanker conditions with a non-flanker control condition.

Contrary to our hypotheses, negative facial flankers did not reduce emotion recognition performance or intensity ratings of target faces. Surprisingly, happy flankers caused the lowest emotion recognition accuracy of target faces. This partly replicates previous results stating that happy faces attract more attention when they are compared to several negative faces (Calvo and Nummenmaa, 2008). Here, the authors had applied a visual search task with faces and the advantage of happy faces was attributed to deviating low-level features of happy compared to negative emotional faces. Our data suggest that the effect of emotional flankers may depend on the specific composition of presented emotions. When using several negative emotions and one positive emotion, as we did, the happy emotion stands out more than any of the negative ones and attracts more attention than the plurality of negative emotions. However, when only one negative expression is tested during the experiment, negative facial expressions are reported to be more effective in capturing attention than positive or neutral expressions (Fenske and Eastwood, 2003). To further test this assumption, we conducted a third experiment in which the composition of emotion categories was balanced (happy, fearful, neutral, and scrambled flankers). Here, happy flankers were found to be equally distracting. Directly comparing targets after fearful flankers to an average of all other conditions revealed a stronger distraction effect for the one negative flanker emotion (lower emotion recognition accuracy and longer reaction times). Even though the effect was small, the implications are consistent with the previously reported ‘distractor hypothesis.’ Even more, they extend an attention capture advantage of negative emotions from targets to facial flankers. 
To confirm our hypothesis regarding the countervailing effect of including several negative emotions, future studies should systematically vary the number of distinct emotions within the negative emotion category.

We further tested whether congruent flanker-target combinations increased target face recognition, intensity ratings, or decreased reaction time. Analyses revealed no differences between congruent and incongruent combinations in the first two experiments, potentially due to the unbalanced composition of emotion categories. Experiment 3, however, revealed better recognition of congruent target-flanker combinations and thereby supported the ‘congruency hypothesis.’ At least in some studies, congruency effects were stronger for positive target-flanker combinations while authors found only small congruency effects for negative targets (Fenske and Eastwood, 2003; Righart and de Gelder, 2008a,b). Following this idea, we compared congruent and incongruent target-flanker combinations in each emotion of Experiment 3 (happy, fearful). While happy faces were recognized better and faster in congruent happy flankers, there was no differential influence of congruent and incongruent flankers on fearful targets, thereby supporting earlier results. In general, congruent target-flanker combinations might predict better performance due to less response interference and a co-activation of similar neuron populations (Eriksen and Eriksen, 1974; Joubert et al., 2007; Righart and de Gelder, 2008b). Apparently, that holds for many non-affective stimuli as well as for emotions which do not require an immediate reaction (e.g., happy). Evolutionarily relevant emotions, though, might present an exception to this. There is some evidence that threat-related information might be processed differently via a subcortical pathway bypassing primary cortical areas, potentially in order to be rapidly aware of any danger (Garrido et al., 2012; Garvert et al., 2014). Hence, in a dangerous situation the processing of threat-related stimuli might be prioritized, no matter what. However, at this point assumptions remain speculative and should be followed up in further studies.

While the distraction effect of happy flankers was present for both genders, females rated target faces in happy flankers as less intense. This extends previous results of women being more influenced by neutral flanker stimuli (Merritt et al., 2007; Stoet, 2010) to social flankers. It further supports the assumption of females’ increased sensitivity to positive facial stimuli (Donges et al., 2012), even when the stimuli are irrelevant, as in the present study. Women also responded faster – a phenomenon previously associated with child-rearing and mother-child bonding. Our results thus support a female advantage in emotion processing (Hall, 1978; Hampson et al., 2006) and reinforce the idea that potential gender differences should be considered in any investigation of facial emotion processing.

With regard to the effects of SOA, our initial expectation was that preceding flanker presentation (SOA 300 ms, Experiment 2) would result in higher accuracy and intensity ratings of target faces compared to simultaneous presentation (SOA 0 ms, Experiment 1). However, we observed that target stimuli were rated as more intense when there was no delay between the flanker and the target (SOA 0 ms). We can only speculate at this point, but this could be explained, at least in part, by an amplification effect due to accumulated emotional information and a stronger contrast of the percept when multiple emotional faces are presented simultaneously (Biele and Grabowska, 2006). Yet, only a stepwise manipulation of the relative onsets of flanker and target stimuli could conclusively clarify this.

One limitation of this study, as noted, was the lack of a condition without surrounding flankers (‘target-only’). Such a condition would have allowed an assessment of the non-specific impact of flankers, both facial and non-facial, on target face processing, and should be included in future studies. It would also be interesting to assess the differential impact of all-male vs. all-female flankers on target face processing, including the potential interaction between flanker gender and receiver gender.

Summarizing our findings, in two behavioral experiments we demonstrated a reallocation of attention to unattended but salient social contexts, which influenced both emotion recognition accuracy and intensity ratings. This implies that social information is preferentially processed, even when it is located outside the attentional focus and causes distraction. We further argue that, apart from gender, the emotion category composition should be considered an important factor in studies investigating emotional context, especially when drawing conclusions about one specific emotion category. Lastly, the results of a third experiment suggest that threat-related flanker stimuli might be subject to priority processing of evolutionarily salient stimuli, which seems to ‘overrule’ a congruency effect. This might represent an adaptive mechanism that maximizes sensitivity to potential danger.

Author Contributions

BSH, CR, UH, FS, and BT designed the research, BSH performed the research, BSH and CR analyzed the data, BSH, CR, and UH wrote the paper, and all authors revised the manuscript and gave final approval of the version to be published.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Prof. K. Willmes-von-Hinckeldey and Dr. T. Kellermann for assistance with the statistical analysis and E. Smith for language editing.

Footnotes

Funding. This work was supported by the International Research Training Group (IRTG 1328) of the German Research Foundation (DFG). CR was supported by a post-doctoral fellowship of the German Academic Exchange Service (DAAD).

Supplementary Material

The Supplementary Material for this article can be found online at: http://journal.frontiersin.org/article/10.3389/fpsyg.2016.00712

References

- Adamo M., Pun C., Ferber S. (2010a). Multiple attentional control settings influence late attentional selection but do not provide an early attentional filter. Cogn. Neurosci. 1 102–110. 10.1080/17588921003646149

- Adamo M., Wozny S., Pratt J., Ferber S. (2010b). Parallel, independent attentional control settings for colors and shapes. Attent. Percept. Psychophys. 72 1730–1735. 10.3758/APP.72.7.1730

- Adolphs R. (2002). Recognizing emotion from facial expressions: psychological and neurological mechanisms. Behav. Cogn. Neurosci. Rev. 1 21–62. 10.1177/1534582302001001003

- Aviezer H., Bentin S., Dudarev V., Hassin R. R. (2011). The automaticity of emotional face-context integration. Emotion 11 1406–1414. 10.1037/a0023578

- Barrett L. F., Robin L., Pietromonaco P. R., Eyssell K. M. (1998). Are women the “more emotional” sex? Evidence from emotional experiences in social context. Cogn. Emot. 12 555–578. 10.1080/026999398379565

- Biele C., Grabowska A. (2006). Sex differences in perception of emotion intensity in dynamic and static facial expressions. Exp. Brain Res. 171 1–6. 10.1007/s00221-005-0254-0

- Botella J., Barriopedro M. I., Joula J. F. (2002). Temporal interactions between target and distractor processing: positive and negative priming effects. Psicológica 23 371–400. 10.1093/cercor/bhp036

- Calvo M. G., Nummenmaa L. (2008). Detection of emotional faces: salient physical features guide effective visual search. J. Exp. Psychol. Gen. 137 471–494. 10.1037/a0012771

- Coelho C. M., Cloete S., Wallis G. (2010). The face-in-the-crowd effect: when angry faces are just cross(es). J. Vis. 10 7.1–14. 10.1167/10.1.7

- den Stock J. V., Vandenbulcke M., Sinke C., de Gelder B. (2014). Affective scenes influence fear perception of individual body expressions. Hum. Brain Mapp. 35 492–502. 10.1002/hbm.22195

- Donges U.-S., Kersting A., Suslow T. (2012). Women’s greater ability to perceive happy facial emotion automatically: gender differences in affective priming. PLoS ONE 7:e41745 10.1371/journal.pone.0041745

- Eastwood J. D., Smilek D., Merikle P. M. (2001). Differential attentional guidance by unattended faces expressing positive and negative emotion. Percept. Psychophys. 63 1004–1013. 10.3758/BF03194519

- Ekman P., Friesen W. V. (1971). Constants across cultures in the face and emotion. J. Pers. Soc. Psychol. 17 124–129. 10.1037/h0030377

- Eriksen B. A., Eriksen C. W. (1974). Effects of noise letters upon identification of a target letter in a nonsearch task. Percept. Psychophys. 16 143–149. 10.3758/Bf03203267

- Feng Q., Zheng Y., Zhang X., Song Y., Luo Y.-J., Li Y., et al. (2011). Gender differences in visual reflexive attention shifting: evidence from an ERP study. Brain Res. 1401 59–65. 10.1016/j.brainres.2011.05.041

- Fenske M. J., Eastwood J. D. (2003). Modulation of focused attention by faces expressing emotion: evidence from flanker tasks. Emotion 3 327–343. 10.1037/1528-3542.3.4.327

- Fox E., Lester V., Russo R., Bowles R., Pichler A., Dutton K. (2000). Facial expressions of emotion: are angry faces detected more efficiently? Cogn. Emot. 14 61–92. 10.1080/026999300378996

- Fujita F., Diener E., Sandvik E. (1991). Gender differences in negative affect and well-being: the case for emotional intensity. J. Pers. Soc. Psychol. 61 427–434. 10.1037/0022-3514.61.3.427

- Garrido M. I., Barnes G. R., Sahani M., Dolan R. J. (2012). Functional evidence for a dual route to amygdala. Curr. Biol. 22 129–134. 10.1016/j.cub.2011.11.056

- Garvert M. M., Friston K. J., Dolan R. J., Garrido M. I. (2014). Subcortical amygdala pathways enable rapid face processing. Neuroimage 102 309–316. 10.1016/j.neuroimage.2014.07.047

- Gelder B. D., Meeren H. K. M., Righart R., Stock J. V. D., van de Riet W. A. C., Tamietto M. (2006). Beyond the face: exploring rapid influences of context on face processing. Prog. Brain Res. 155 37–48. 10.1016/S0079-6123(06)55003-4

- Gur R. C., Sara R., Hagendoorn M., Marom O., Hughett P., Macy L., et al. (2002). A method for obtaining 3-dimensional facial expressions and its standardization for use in neurocognitive studies. J. Neurosci. Methods 115 137–143. 10.1016/S0165-0270(02)00006-7

- Hall J. (1978). Gender effects in decoding nonverbal cues. Psychol. Bull. 85 845–857. 10.1037/0033-2909.85.4.845

- Hall J. A., Matsumoto D. (2004). Gender differences in judgments of multiple emotions from facial expressions. Emotion 4 201–206. 10.1037/1528-3542.4.2.201

- Hampson E., van Anders S. M., Mullin L. I. (2006). A female advantage in the recognition of emotional facial expressions: test of an evolutionary hypothesis. Evol. Hum. Behav. 27 401–416. 10.1016/j.evolhumbehav.2006.05.002

- Hansen C. H., Hansen R. D. (1988). Finding the face in the crowd: an anger superiority effect. J. Pers. Soc. Psychol. 54 917–924. 10.1037/0022-3514.54.6.917

- Ito K., Masuda T., Hioki K. (2012). Affective information in context and judgment of facial expression: cultural similarities and variations in context effects between North Americans and East Asians. J. Cross Cult. Psychol. 43 429–445. 10.1177/0022022110395139

- Joubert O. R., Rousselet G. A., Fize D., Fabre-Thorpe M. (2007). Processing scene context: fast categorization and object interference. Vision Res. 47 3286–3297. 10.1016/j.visres.2007.09.013

- Kret M., Gelder B. (2010). Social context influences recognition of bodily expressions. Exp. Brain Res. 203 169–180. 10.1007/s00221-010-2220-8

- Lavie N., Ro T., Russell C. (2003). The role of perceptual load in processing distractor faces. Psychol. Sci. 14 510–515. 10.1111/1467-9280.03453

- Merritt P., Hirshman E., Wharton W., Stangl B., Devlin J., Lenz A. (2007). Evidence for gender differences in visual selective attention. Pers. Individ. Dif. 43 597–609. 10.1016/j.paid.2007.01.016

- Öhman A., Lundqvist D., Esteves F. (2001). The face in the crowd revisited: a threat advantage with schematic stimuli. J. Pers. Soc. Psychol. 80 381–396. 10.1037/0022-3514.80.3.381

- Purcell D. G., Stewart A. L. (2010). Still another confounded face in the crowd. Atten. Percept. Psychophys. 72 2115–2127. 10.3758/App.72.8.2115

- Righart R., de Gelder B. (2006). Context influences early perceptual analysis of faces: an electrophysiological study. Cereb. Cortex 16 1249–1257. 10.1093/cercor/bhj066

- Righart R., de Gelder B. (2008a). Rapid influence of emotional scenes on encoding of facial expressions: an ERP study. Soc. Cogn. Affect. Neurosci. 3 270–278. 10.1093/scan/nsn021

- Righart R., de Gelder B. (2008b). Recognition of facial expressions is influenced by emotional scene gist. Cogn. Affect. Behav. Neurosci. 8 264–272. 10.3758/CABN.8.3.264

- Schmidt F., Schmidt T. (2013). No difference in flanker effects for sad and happy schematic faces: a parametric study of temporal parameters. Vis. Cogn. 21 382–398. 10.1080/13506285.2013.793221

- Shariff A. F., Tracy J. L. (2011). What are emotion expressions for? Curr. Dir. Psychol. Sci. 20 395–399. 10.1177/0963721411424739

- Stenberg G., Wiking S., Dahl M. (1998). Judging words at face value: interference in a word processing task reveals automatic processing of affective facial expressions. Cogn. Emot. 12 755–782. 10.1080/026999398379420

- Stoet G. (2010). Sex differences in the processing of flankers. Q. J. Exp. Psychol. 63 633–638. 10.1080/17470210903464253

- Taylor D. A. (1977). Time course of context effects. J. Exp. Psychol. Gen. 106 404–426. 10.1037//0096-3445.106.4.404

- Van den Stock J., de Gelder B. (2012). Emotional information in body and background hampers recognition memory for faces. Neurobiol. Learn. Mem. 97 321–325. 10.1016/j.nlm.2012.01.007

- Van den Stock J., de Gelder B. (2014). Face identity matching is influenced by emotions conveyed by face and body. Front. Hum. Neurosci. 8:53 10.3389/fnhum.2014.00053

- Van den Stock J., Righart R., De Gelder B. (2007). Body expressions influence recognition of emotions in the face and voice. Emotion 7 487–494. 10.1037/1528-3542.7.3.487

- Wittchen H.-U., Wunderlich U., Gruschwitz S., Zaudig M. (1997). Strukturiertes Klinisches Interview für DSM-IV, Achse-I (SKID-I). Göttingen: Hogrefe.
