Abstract

Objectives

The aim of this study was to determine an optimal approach to program combined acoustic plus electric (A+E) hearing devices in the same ear to maximize speech-recognition performance.

Design

Ten participants with at least 1 year of experience using Nucleus Hybrid (short electrode) A+E devices were evaluated across three different fitting conditions that varied in the frequency ranges assigned to the acoustically and electrically presented portions of the spectrum. Real-ear measurements were used to optimize the acoustic component for each participant, and the acoustic stimulation was then held constant across conditions. The lower boundary of the electric frequency range was systematically varied to create three conditions with respect to the upper boundary of the acoustic spectrum: Meet, Overlap, and Gap programming. Consonant recognition in quiet and speech recognition in competing-talker babble were evaluated after participants were given the opportunity to adapt by using the experimental programs in their typical everyday listening situations. Participants provided subjective ratings and evaluations for each fitting condition.

Results

There were no significant differences in performance between conditions (Meet, Overlap, Gap) for consonant recognition in quiet. A significant decrement in performance was measured for the Overlap fitting condition for speech recognition in babble. Subjective ratings indicated a significant preference for the Meet fitting regimen.

Conclusions

Participants using the Hybrid ipsilateral A+E device generally performed better when the acoustic and electric spectra were programmed to meet at a single frequency region, as opposed to a gap or overlap. Although there is no particular advantage for the Meet fitting strategy for recognition of consonants in quiet, the advantage becomes evident for speech recognition in competing-talker babble and in patient preferences.

INTRODUCTION

The combined acoustic plus electric (A+E) hearing approach is a treatment strategy for patients with bilateral, severe-to-profound, high-frequency sensorineural hearing loss who also have usable low-frequency hearing. In the past, providing assistance for these patients has been a challenge. Conventional amplification often proves ineffective or even detrimental (Ching et al. 1998; Hogan & Turner 1998). Also, these individuals do not typically meet the candidacy criteria for implantation with a traditional, long-electrode cochlear implant, because they have too much existing hearing. Whereas implantation with a traditional cochlear implant often results in complete loss of residual hearing in the implanted ear, the ipsilateral A+E approach uses a hearing-preservation electrode array and advanced surgical techniques that can enable the combined use of acoustic and electric stimulation in the same ear.

Some research groups have used a shorter, smaller electrode array surgically inserted into the basal end of the cochlea. These electrode arrays are specifically designed to minimize damage to intracochlear structures and preserve low-frequency residual hearing while allowing the high-frequency components of speech and environmental sounds to be coded electrically through the implant (Gantz & Turner 2003, 2004; Lenarz et al. 2006, 2009). Other groups have implanted standard-length electrode arrays partially inserted into the cochlea using “soft” surgical techniques aimed to minimize trauma to cochlear structures (von Ilberg et al. 1999; Skarzynski et al. 2003; Gstoettner et al. 2004; Kiefer et al. 2004; James et al. 2005). Helbig et al. (2011) presented results showing hearing preservation in a study using the MED-EL FLEXEAS electrode array at 24 mm in total length.

The rationale behind the ipsilateral A+E approach is to preserve the benefits of residual acoustic hearing while providing the missing high-frequency sounds via electric stimulation. Low-frequency acoustic hearing can contribute to speech cues, music recognition and appreciation, voice recognition, a more natural quality of speech perception, and improved speech understanding in background noise. As documented in numerous A+E studies, successful preservation of low-frequency hearing has made possible a synergistic combination (or integration) of acoustic and electric stimulation in the same ear which, together with the acoustic hearing of the contralateral ear, provides speech-recognition benefit in quiet and in background noise (Gantz & Turner 2003, 2004; Gstoettner et al. 2004, 2008; Turner et al. 2004, 2008a,b, 2010; Gantz et al. 2005, 2006, 2009; Kiefer et al. 2005; Lenarz et al. 2006, 2009; Helbig et al. 2011). Benefits are also reported for song/instrument recognition and localization tasks (Gfeller et al. 2006, 2007; Dorman & Gifford 2010; Dunn et al. 2010). Cochlear implant surgeons, researchers, audiologists, and implant manufacturers have recognized the importance of preserving residual hearing and continue to refine surgical techniques and electrode arrays toward this goal. However, research on how best to program combined A+E devices in the same ear to optimize speech recognition is currently somewhat limited and its findings conflict; this question is the topic of the present study.

Questions remain about methods for programming the hearing aid and cochlear implant in the same ear for these patients. How should the spectral information of the incoming acoustic signal be divided between acoustic processing (low-frequency) and electric processing (high-frequency)? Are there benefits or detriments associated with a gap or overlap between these delivery methods? Perhaps the acoustic and electric stimulation interfere with one another; if so, a gap might minimize any negative effects. Alternatively, in frequency regions with hearing loss, it may be more advantageous to use both modes of stimulation (i.e., overlap).

Simulation studies conducted with normal-hearing participants to investigate the effects of various combinations of acoustic and electric frequency allocations provided support for improvements in speech recognition from unprocessed low-frequency acoustic information (Gantz & Turner 2003; Turner et al. 2004; Dorman et al. 2005; Chang et al. 2006; Qin & Oxenham 2006). In general, these studies found that leaving a gap between the acoustic and simulated electric spectra did not yield the best performance. However, these simulations do not take into account sloping hearing losses and the accompanying issues related to providing acoustic speech to frequency regions with impaired auditory mechanisms. Simulation studies also do not investigate the possible interactions between acoustic and electric stimulation in the same ear, which may be detrimental (or helpful) to real patients, nor do they take learning into account, as most are acute-only studies.

For the ipsilateral A+E approach, the hearing aid used in the implanted ear is typically adjusted to amplify sounds up to a designated audiometric cut off where acoustic hearing is no longer believed to provide benefit (Kiefer et al. 2005; Lenarz et al. 2006; Vermeire et al. 2008; Gantz et al. 2009; Helbig et al. 2011). Alternative approaches for determining the amplification cut off have been proposed, which use a pitch scaling procedure (McDermott et al. 2009; Simpson et al. 2009) and pitch matching (Baumann et al. 2011).

The results have been mixed in the few studies that have examined the effects of different speech-processor frequency-to-channel allocations in actual patients who use ipsilateral A+E technology. In the work by Kiefer et al. (2005), cochlear implants for 13 participants were programmed for three conditions: a full frequency range of 300 to 5500 Hz, a frequency range of 650 to 5500 Hz, and a frequency range of 1000 to 5500 Hz. Hearing aids were fitted to amplify across 125 to 1000 Hz. Participants used all three maps for 2 to 3 weeks, after which consonant and vowel tests were given to determine an optimal electric frequency allocation. The authors reported that, for the majority of their participants, the full frequency range fitting was preferred and provided the best speech-perception results. Fraysse et al. (2006) compared two fitting programs for nine participants designated as ipsilateral electrical acoustic hearing users: a standard-frequency allocation map (with maximum overlap between acoustic and electric processing) and a high-frequency allocation map (with low-frequency processing only through the hearing aid and high-frequency processing through the implant). Participants were given 1 month of experience with each map and were allowed to switch between maps after 2 months. Seven of nine users expressed a preference for the minimum overlap program; however, speech-recognition scores for the two programs were reported to be approximately equivalent during the 1- to 3-month postactivation period. Vermeire et al. (2008) reported on four participants who were evaluated in eight different conditions varying the cochlear implant frequency ranges and hearing aid fittings. Participants were given 30 minutes to adjust to each new frequency range/hearing aid fitting program before sentence testing in noise. Their results indicated that reduced overlap between hearing aid and cochlear implant fitting ranges provided greater speech-recognition benefit in noise than full overlap. Simpson et al. (2009) compared two types of frequency-to-electrode fitting strategies for five participants. Two users were fitted with ipsilateral and contralateral hearing aids, and three participants with no useful postoperative aidable hearing in the implanted ear were fitted with a contralateral hearing aid alone. Two programs were compared: a full-overlap clinical default program and a program, created using a pitch scaling procedure, with neither overlap nor gap between acoustic and electric sensations. No significant difference between fitting strategies was found for word recognition in quiet or sentence recognition in noise.

Büchner et al. (2009) reported on eight participants in whom the implant speech processors were programmed for the full frequency range while the low-pass cutoff frequency of the acoustic input was varied systematically. Thus, various amounts of overlap were present in all the A+E testing conditions. Their results for sentence testing with competing-talker noise showed that providing low-frequency acoustic information even up to only 300 Hz, where acoustic speech alone was unintelligible, yielded a significant speech-perception improvement compared with electric alone. Increasing the cut off beyond 300 Hz did not further improve performance for these participants. In a pediatric case study by Uchanski et al. (2009), a baseline condition with electric processing only from 200 to 7000 Hz (no hearing aid in the implanted ear) was compared with a treatment condition (hearing aid used in the implanted ear) in which stimuli were processed acoustically only at frequencies below 400 Hz, both acoustically and electrically at 400 to 750 Hz (some overlap), and electrically only above 800 Hz. Their testing showed the same or slightly better speech recognition for the treatment condition than for the baseline condition for consonant-nucleus-consonant (CNC) words in quiet and for sentences in noise, and variable results for CNC words in noise. The participant expressed a preference for the treatment condition.

In summary, these findings do not provide a clear consensus on which method of fitting A+E devices in the same ear provides the most speech-recognition benefit for patients. It is worth noting that some of the studies provided minimal pretesting experience for the different conditions; some participants also used contralateral acoustic stimulation during testing; real-ear measurements were not always conducted to optimize acoustic amplification for the individual participants; and speech testing in noise was not conducted across all the studies.

When considering the design for the present study, the following four aspects were determined to be important for consideration and incorporation: (1) to recruit participants who had used ipsilateral A+E technology for at least 1 year, because research has shown that speech-recognition abilities can continue to improve over at least the first year after initial activation (Gantz & Turner 2003; Gantz et al. 2009); (2) to give participants an opportunity to use the experimental programs for a reasonably long duration in their typical everyday listening situations outside of the laboratory environment to allow some time for adaptation (Rosen et al. 1999; Fu et al. 2002; Fu & Galvin 2007; Reiss et al. 2008); (3) to use real-ear measurements with prescriptive guidelines to optimize the acoustic fitting and determine the cut off for the upper edge of acoustic amplification; and (4) to develop a fitting regimen based on each individual participant’s unique residual hearing thresholds (Vermeire et al. 2008).

This study aimed to determine an optimal method to program the combined A+E devices in the same ear to maximize speech-recognition performance. All patients were implanted with a Cochlear Nucleus Hybrid (short-electrode) cochlear implant. The general approach was to first optimize the acoustic component (i.e., hearing aid) of the signal based upon current best practices for fitting and verification of hearing aids, and then systematically vary the electric frequency-to-channel allocation programming for the Hybrid cochlear implant while holding the acoustic stimulation constant across conditions. We could therefore determine the effects of providing an “overlap,” “meet,” or “gap” between acoustic and electric hearing boundaries. Outcome measures were obtained for speech recognition in quiet and noise, and participants provided information regarding subjective preferences for these various options.

MATERIALS AND METHODS

Participants

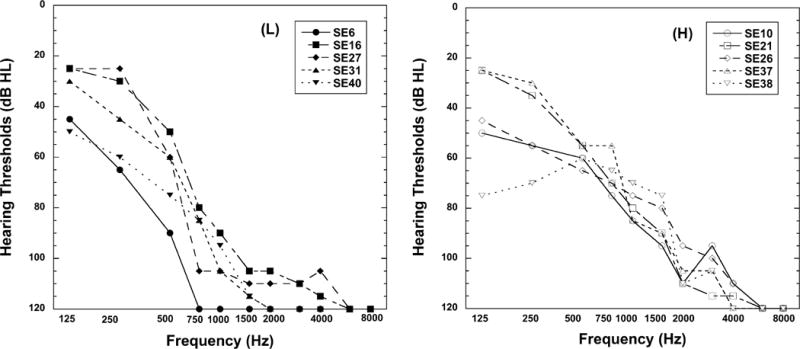

The Institutional Review Board at the University of Iowa approved this research, and the individuals were paid for their participation. Ten adults, aged 46 to 78 years (mean age = 61 years), with bilateral severe-to-profound, high-frequency sensorineural hearing loss who had been implanted with a Cochlear Nucleus Hybrid S8 or Nucleus Hybrid S12 cochlear implant participated in this study. The Nucleus Hybrid S8 is 10 mm in length with six active electrodes and two ground electrodes; the Nucleus Hybrid S12 is also 10 mm in length, with ten active electrodes and two ground electrodes. All participants used the Cochlear Nucleus Freedom Hybrid sound processor with acoustic component (i.e., hearing aid). This technology combines acoustic and electric stimulation in the same device. In their everyday listening, participants used an acoustic component and Hybrid cochlear implant in the ipsilateral ear and a conventional hearing aid in the contralateral ear. All participants (8 female, 2 male) were native speakers of American English and had at least 1 year of experience with the combined A+E technology. Demographic information for each individual is shown in Table 1, including age at time of testing, duration of high-frequency, severe-to-profound hearing loss before implantation, duration of ipsilateral A+E experience, implant type, stimulation rate, pulse width, and number of active electrodes. Hearing thresholds for each participant’s implanted ear at the time of this study are shown in Figure 1. The five participants (Fig. 1, left) with upper frequency acoustic component cut offs at or below 900 Hz are designated as low (L); the five participants (Fig. 1, right) with upper frequency cut offs above 900 Hz are designated as high (H). The 900-Hz boundary was chosen arbitrarily to divide the participants into two equal groups.

TABLE 1.

Participant demographics

| Participant | Age at Testing (yrs) | Duration of HF S/P HL (yrs) | Duration of A+E Use (yrs;mos) | Implant Type | Stimulation Rate/Channel (Hz) | Pulse Width (μsec) | Number of Active Electrodes |
|---|---|---|---|---|---|---|---|
| SE 6 | 55 | 2 | 7;0 | S8 | 1200 | 25 | 5 |
| SE 10 | 72 | 20 | 6;0 | S8 | 900 | 25 | 6 |
| SE 16 | 65 | 10 | 5;0 | S8 | 1200 | 25 | 6 |
| SE 21 | 54 | 15 | 4;0 | S8 | 1200 | 25 | 6 |
| SE 26 | 78 | 8 | 4;0 | S8 | 2400 | 12 | 6 |
| SE 27 | 59 | 4 | 4;0 | S8 | 1200 | 25 | 6 |
| SE 31 | 57 | 23 | 4;0 | S8 | 900 | 25 | 5 |
| SE 37 | 65 | 22 | 2;0 | S12 | 900 | 50 | 9 |
| SE 38 | 59 | 2 | 1;6 | S12 | 900 | 50 | 10 |
| SE 40 | 46 | 8 | 1;0 | S12 | 900 | 25 | 9 |

HF S/P HL, high-frequency severe-to-profound hearing loss; A+E, ipsilateral acoustic plus electric; S8, Cochlear Nucleus Hybrid S8 cochlear implant; S12, Cochlear Nucleus Hybrid S12 cochlear implant.

Fig. 1.

Hearing thresholds for the implanted ear of the 10 participants at the time of the study. Left: Five participants with upper frequency acoustic component cut offs at or below 900 Hz are designated as low (L). Right: Five participants with upper frequency acoustic component cut offs above 900 Hz are designated as high (H).

Test Conditions and Device Fitting

The experimental programs were derived from each individual participant’s clinical program. Parameters manipulated for this study were the acoustic component, the electric frequency allocation range, and threshold/comfort levels. Three different experimental program conditions were created: acoustic + electric meet (Meet), acoustic + electric overlap (Overlap), and acoustic + electric gap (Gap). The descriptors used to define the conditions (Meet, Overlap, Gap) in this study refer to spectral meet, overlap, and gap, not to place of stimulation along the cochlea. With the 10-mm electrode, direct physiologic overlap is highly unlikely; therefore, all the conditions most likely represent a physiological “gap.”

The acoustic component for the ipsilateral ear was programmed using the National Acoustic Laboratories’ nonlinear fitting procedure, version 1 (NAL-NL1) guidelines (Byrne et al. 2001). Real-ear aided responses were measured using an AudioScan Verifit Speechmap system with recorded speech stimuli presented at 65 dB SPL (average) and adjusted to match the NAL-NL1 targets (±7 dB). The average difference between target and real-ear response was 4.22 dB across participants and frequency bands. Maximum output was measured using an 85 dB SPL tone burst stimulus and maximized within the limits set by estimated uncomfortable listening level (UCL) parameters and each individual’s subjective report of comfort. The “upper edge of acoustic” for this study was defined as the upper frequency of the highest acoustic filter band in which real-ear measures came within ±7 dB of the NAL-NL1 target; the acoustic filter band(s) above this frequency region were disabled. The acoustic frequency response characteristics were held constant across the three experimental programs. To ensure that the acoustic component characteristics remained stable throughout the study, real-ear measures were repeated for each new condition. (Note that targets were not met for participant SE 6 because of severe hearing loss at 500 Hz.)
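The upper-edge rule can be sketched in a few lines of Python. This is a hypothetical illustration, not the logic of the Verifit system or the fitting software: the band frequencies and dB values are invented, and the sketch assumes the search runs upward from the lowest band and stops at the first band that misses target, so that band and everything above it are disabled. The deviation helper assumes that absolute target-minus-response differences were averaged.

```python
from dataclasses import dataclass
from typing import List, Optional

TOLERANCE_DB = 7.0  # +/-7 dB match-to-target criterion from the text


@dataclass
class Band:
    upper_hz: int       # upper frequency of an acoustic filter band (hypothetical)
    target_db: float    # NAL-NL1 target for 65 dB SPL speech (hypothetical)
    measured_db: float  # measured real-ear aided response (hypothetical)


def upper_edge_of_acoustic(bands: List[Band], tol: float = TOLERANCE_DB) -> Optional[int]:
    """Upper frequency of the highest band in the contiguous run, starting at the
    lowest band, whose real-ear response is within +/-tol dB of target.
    Returns None if even the lowest band misses target."""
    edge = None
    for band in sorted(bands, key=lambda b: b.upper_hz):
        if abs(band.measured_db - band.target_db) <= tol:
            edge = band.upper_hz
        else:
            break  # assumption: this band and all bands above it are disabled
    return edge


def enabled_bands(bands: List[Band], edge: Optional[int]) -> List[Band]:
    """Bands at or below the upper edge stay enabled; the rest are disabled."""
    if edge is None:
        return []
    return [b for b in bands if b.upper_hz <= edge]


def mean_abs_deviation_db(bands: List[Band]) -> float:
    """Average absolute difference between target and real-ear response."""
    if not bands:
        raise ValueError("no enabled bands")
    return sum(abs(b.target_db - b.measured_db) for b in bands) / len(bands)


# Hypothetical listener with a sloping loss: targets are missed above 770 Hz.
example = [
    Band(250, 60.0, 62.0),
    Band(530, 65.0, 61.0),
    Band(770, 68.0, 63.0),
    Band(1240, 70.0, 58.0),
    Band(1760, 72.0, 50.0),
]
edge = upper_edge_of_acoustic(example)
kept = enabled_bands(example, edge)
print(edge)                                  # 770
print(f"{mean_abs_deviation_db(kept):.2f}")  # 3.67
```

Stopping at the first miss, rather than taking the highest band that happens to meet target, keeps the enabled acoustic region contiguous; whether the study handled isolated in-tolerance bands above a miss this way is not stated.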

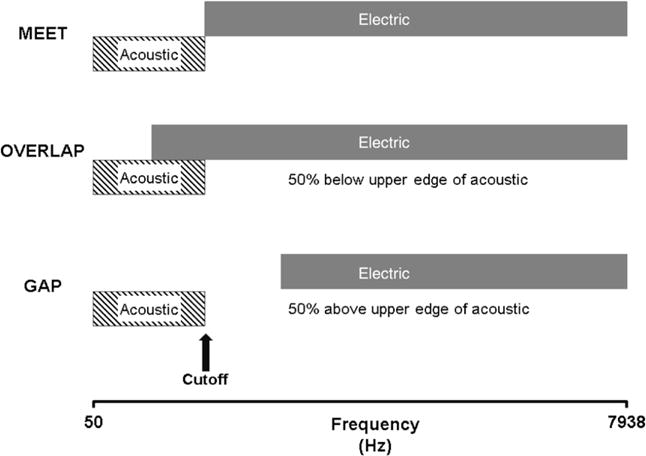

For the Meet condition, the electric lower frequency channel was mapped to begin at the upper edge of acoustic. For the Overlap condition, the electric lower frequency was chosen to begin at a frequency cut off that was 50% below each individual participant’s upper edge of acoustic. For the Gap condition, the electric lower frequency was chosen to begin at 50% above each individual participant’s upper edge of acoustic. Figure 2 illustrates the relationship between the acoustic and electric frequency regions for each of the experimental conditions. Table 2 (left side) lists the upper frequency acoustic cut offs and electric frequency ranges for the experimental programs and (right side of Table 2) the program that participants were accustomed to using before the beginning of this study (i.e., clinical program).
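The three allocation rules reduce to simple arithmetic on each participant’s upper edge of acoustic. The sketch below computes the electric range for each condition and checks it against the experimental columns of Table 2; the function name is hypothetical, and the sketch ignores any snapping of boundaries to the processor’s filter-bank frequencies.

```python
UPPER_ELECTRIC_HZ = 7938  # upper electric frequency shared by every program in Table 2


def electric_ranges(upper_edge_hz: int) -> dict:
    """Electric frequency range (low, high) in Hz for each experimental condition,
    given a participant's upper edge of acoustic."""
    return {
        "Meet": (upper_edge_hz, UPPER_ELECTRIC_HZ),                  # starts at the upper edge
        "Overlap": (round(upper_edge_hz * 0.5), UPPER_ELECTRIC_HZ),  # 50% below the upper edge
        "Gap": (round(upper_edge_hz * 1.5), UPPER_ELECTRIC_HZ),      # 50% above the upper edge
    }


# Experimental columns of Table 2: (upper edge, Overlap lower bound, Gap lower bound).
TABLE_2 = {
    "SE 6": (530, 265, 795),
    "SE 10": (1240, 620, 1860),
    "SE 16": (770, 385, 1155),
    "SE 21": (1240, 620, 1860),
    "SE 26": (1760, 880, 2640),
    "SE 27": (630, 315, 945),
    "SE 31": (630, 315, 945),
    "SE 37": (1240, 620, 1860),
    "SE 38": (1760, 880, 2640),
    "SE 40": (900, 450, 1350),
}

for pid, (edge, overlap_low, gap_low) in TABLE_2.items():
    ranges = electric_ranges(edge)
    assert ranges["Meet"][0] == edge, pid
    assert ranges["Overlap"][0] == overlap_low, pid
    assert ranges["Gap"][0] == gap_low, pid
print("all Table 2 rows reproduced")
```

All upper-edge values in Table 2 are even, so the rounding never has to break a tie.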

Fig. 2.

Diagram of the three experimental conditions. Meet, electric lower frequency begins at the upper edge of acoustic; Overlap, electric lower frequency begins at 50% below upper edge of acoustic; Gap, electric lower frequency begins at 50% above upper edge of acoustic.

TABLE 2.

Upper acoustic frequency and electric frequency ranges

| Participant | Experimental: Upper Edge of Acoustic (Hz) | Experimental: Acoustic + Electric Meet (Hz) | Experimental: Acoustic + Electric Overlap (Hz) | Experimental: Acoustic + Electric Gap (Hz) | Clinical: Upper Edge of Acoustic (Hz) | Clinical: Acoustic + Electric (Hz) | Clinical Program Similar To | Preferred Experimental Program |
|---|---|---|---|---|---|---|---|---|
| SE 6 | 530 | 530–7938 | 265–7938 | 795–7938 | 530 | 563–7938 | Meet | Meet |
| SE 10 | 1240 | 1240–7938 | 620–7938 | 1860–7938 | 1240 | 688–7938 | Overlap | Meet |
| SE 16 | 770 | 770–7938 | 385–7938 | 1155–7938 | ~770 | 563–7938 | Overlap | Meet and Overlap |
| SE 21 | 1240 | 1240–7938 | 620–7938 | 1860–7938 | ~530 | 688–7938 | Meet | Meet |
| SE 26 | 1760 | 1760–7938 | 880–7938 | 2640–7938 | 1760 | 1688–7938 | Meet | No preference |
| SE 27 | 630 | 630–7938 | 315–7938 | 945–7938 | 630 | 563–7938 | Meet | Meet |
| SE 31 | 630 | 630–7938 | 315–7938 | 945–7938 | 630 | 813–7938 | Gap | Meet |
| SE 37 | 1240 | 1240–7938 | 620–7938 | 1860–7938 | 1240 | 1063–7938 | Meet | No preference |
| SE 38 | 1760 | 1760–7938 | 880–7938 | 2640–7938 | 1760 | 1688–7938 | Meet | Meet |
| SE 40 | 900 | 900–7938 | 450–7938 | 1350–7938 | 900 | 813–7938 | Meet | Overlap |

The sound processors for participants with the Nucleus Hybrid S8 cochlear implant were programmed with Advanced Combination Encoder processing strategy with the number of maxima equal to the number of active channels (also known as continuous interleaved sampling strategy). Sound processors for participants with the Nucleus Hybrid S12 cochlear implant were programmed with Advanced Combination Encoder processing strategy with eight maxima. The threshold levels and the maximum comfortable levels were reassessed for each experimental program. The threshold level for each electrode was measured using a counted threshold procedure. Maximum comfort levels were established for each electrode using loudness scaling; these levels were confirmed by sweeping across the electrode array. Loudness balance between the acoustic and electric settings was checked using live speech to determine whether incoming speech with the combined devices sounded natural and balanced to each individual.
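To illustrate what “number of maxima” means, the sketch below shows only the per-frame channel-selection step of an n-of-m strategy such as ACE. The filter bank, envelope detection, and mapping of the selected envelopes onto each electrode’s threshold-to-comfort range are omitted, the envelope values are invented, and this is not Cochlear’s implementation.

```python
from typing import List, Sequence


def select_maxima(envelopes: Sequence[float], n_maxima: int) -> List[int]:
    """Indices (in channel order) of the n_maxima channels with the largest
    envelope values in one analysis frame; only these channels are stimulated."""
    if n_maxima <= 0:
        raise ValueError("n_maxima must be positive")
    if n_maxima >= len(envelopes):
        # Maxima equal to the number of active channels: every channel is
        # stimulated in every frame (the CIS-like setting used for S8 users).
        return list(range(len(envelopes)))
    ranked = sorted(range(len(envelopes)), key=lambda i: envelopes[i], reverse=True)
    return sorted(ranked[:n_maxima])


# Hypothetical frame for a 10-channel S12 map with 8 maxima: the two weakest
# channels (indices 0 and 4) are skipped in this frame.
s12_frame = [0.1, 0.9, 0.3, 0.7, 0.05, 0.6, 0.8, 0.2, 0.4, 0.5]
print(select_maxima(s12_frame, 8))  # [1, 2, 3, 5, 6, 7, 8, 9]

# Hypothetical frame for a 6-channel S8 map with 6 maxima: all channels selected.
s8_frame = [0.2, 0.4, 0.1, 0.3, 0.6, 0.5]
print(select_maxima(s8_frame, 6))   # [0, 1, 2, 3, 4, 5]
```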

This study included four testing sessions. During the first session, speech-perception data were collected with the clinical program that each participant used before the start of the study, and the first experimental program was created. After experience at home in their typical everyday listening situations using the first experimental program, participants returned to the lab for speech-perception testing. This format was repeated for each of the three experimental programs. Participants were instructed to use the experimental program during waking hours, or for whatever duration would be typical for them. Participants’ clinical programs were available for them to switch to at any time, but they were instructed to use the experimental program as much as possible. Test order of the experimental conditions was randomized across participants, and participants were blinded to the specific program type (Meet, Overlap, Gap) until they completed the study. For each condition, all but one participant had a minimum adaptation period of 11 days (average = 22 days). Because of traveling distance constraints, participants SE 38 and SE 31 participated in only three sessions; for one of their experimental usage phases, two experimental programs were used on alternate days for 17 days (SE 38) and 35 days (SE 31) before the participants returned for speech-perception testing.
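The text states only that test order was randomized across participants. The sketch below assumes the simplest scheme, an independent random permutation of the three conditions for each participant, rather than a counterbalanced design such as a Latin square. The function name and seed are hypothetical; the participant IDs come from Table 1.

```python
import random
from typing import Dict, List, Optional, Sequence

CONDITIONS = ("Meet", "Overlap", "Gap")


def assign_test_orders(participants: Sequence[str],
                       seed: Optional[int] = None) -> Dict[str, List[str]]:
    """Give each participant an independently shuffled order of the three conditions."""
    rng = random.Random(seed)  # seeded so that the assignment can be reproduced
    return {pid: rng.sample(CONDITIONS, k=len(CONDITIONS)) for pid in participants}


orders = assign_test_orders(["SE 6", "SE 10", "SE 16"], seed=2024)
for pid, order in orders.items():
    print(pid, " -> ".join(order))
```

With only 3! = 6 possible orders and 10 participants, independent shuffling can leave some orders over-represented. A counterbalanced assignment would avoid that, but the study does not say which approach was used.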

Test Measures

All speech-perception testing was performed in a sound-treated booth. The recorded acoustic stimuli were presented in a sound field environment with participants positioned at 0° azimuth, 1 m from the loudspeaker. The nonimplanted ear was plugged and muffed during testing.

Consonant recognition in quiet was measured using a closed-set /a/-consonant-/a/ format with 16 consonants spoken by four talkers (two male and two female) (Turner et al. 1995). The /a/-consonant-/a/ syllables and talkers were randomized, and a total of 128 test items were presented to each participant for each listening mode condition after a practice test. The stimuli were presented at a comfortable listening level for each individual participant with an average level of 72 dB SPL. Although the primary interest in this study was to assess performance for the ipsilateral A+E listening mode, both ipsilateral acoustic alone (A-alone) and electric alone (E-alone) were also measured at each session for consonant recognition in quiet. The consonant recognition in quiet test measured a participant’s perception of difficult speech items.

Speech recognition in babble was measured using a closed set of 12 randomized spondees spoken by a female talker in the presence of a competing-talker background noise (2 talkers: 1 female and 1 male). The speech and babble were presented from the same speaker. The 50% correct signal-to-noise ratio value for recognition of spondees-in-babble was acquired using an adaptive procedure to vary the background level (Turner et al. 2004). Spondees were presented at an average level of 69 dB SPL. Participants completed three runs after a practice test. The speech recognition in competing-talker babble test measured a participant’s resistance to background noise, one of the advantages of the A+E approach.
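The specific adaptive rule of Turner et al. (2004) is not described here. As a hedged illustration only, the sketch below uses a generic 1-down/1-up staircase, which converges on the 50%-correct point. The speech is held at the 69 dB SPL average level from the text, and SNR is changed by moving the babble level. The step size, reversal counts, simulated listener, and averaging of three runs are all assumptions.

```python
import math
import random
from typing import Callable, List

SPEECH_LEVEL_DB_SPL = 69.0  # average spondee level from the text


def adaptive_srt(respond: Callable[[float], bool], start_snr_db: float = 10.0,
                 step_db: float = 2.0, n_reversals: int = 8, n_discard: int = 2,
                 max_trials: int = 200) -> float:
    """1-down/1-up staircase on SNR. A correct response lowers the SNR (babble
    raised); an error raises it (babble lowered). Returns the mean SNR at the
    reversals kept after discarding the first n_discard."""
    snr = start_snr_db
    last_step = 0.0
    reversals: List[float] = []
    for _ in range(max_trials):
        step = -step_db if respond(snr) else step_db
        if last_step and (step > 0) != (last_step > 0):
            reversals.append(snr)  # direction changed: record a reversal
            if len(reversals) == n_reversals:
                break
        last_step = step
        snr += step
    kept = reversals[n_discard:]
    if not kept:
        raise ValueError("track ended before enough reversals were collected")
    return sum(kept) / len(kept)


def babble_level(snr_db: float) -> float:
    """Babble level (dB SPL) that yields a given SNR with speech held fixed."""
    return SPEECH_LEVEL_DB_SPL - snr_db


# Hypothetical simulated listener: logistic psychometric function with a
# 1-in-12 guessing floor (closed set of 12 spondees), midpoint at -2 dB SNR.
rng = random.Random(7)


def simulated_listener(snr_db: float) -> bool:
    p_correct = 1 / 12 + (11 / 12) / (1 + math.exp(-(snr_db + 2.0) / 1.5))
    return rng.random() < p_correct


# Assumption: the three runs are averaged into one SNR estimate.
runs = [adaptive_srt(simulated_listener) for _ in range(3)]
print(round(sum(runs) / len(runs), 1))
```

Because the simulated listener can guess correctly 1 time in 12, its 50%-correct point sits slightly below the logistic midpoint.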

To determine whether optimal allocations between acoustic and electric hearing depend upon degree of low-frequency residual hearing, the participants’ performance was evaluated by group. Those with acoustic component cut offs at or below 900 Hz are designated as low (L); those with cut offs above 900 Hz are designated as high (H) (see Fig. 1).
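Applying the 900-Hz boundary to the experimental upper-edge values in Table 2 reproduces the five/five split. The variable and function names below are illustrative only.

```python
UPPER_EDGE_OF_ACOUSTIC_HZ = {
    "SE 6": 530, "SE 10": 1240, "SE 16": 770, "SE 21": 1240, "SE 26": 1760,
    "SE 27": 630, "SE 31": 630, "SE 37": 1240, "SE 38": 1760, "SE 40": 900,
}
GROUP_BOUNDARY_HZ = 900  # cut offs at or below this value are "low"


def residual_hearing_group(upper_edge_hz: int) -> str:
    """'L' for cut offs at or below 900 Hz, 'H' for cut offs above 900 Hz."""
    return "L" if upper_edge_hz <= GROUP_BOUNDARY_HZ else "H"


groups = {pid: residual_hearing_group(e) for pid, e in UPPER_EDGE_OF_ACOUSTIC_HZ.items()}
low = [pid for pid, g in groups.items() if g == "L"]
high = [pid for pid, g in groups.items() if g == "H"]
print("L:", low)   # L: ['SE 6', 'SE 16', 'SE 27', 'SE 31', 'SE 40']
print("H:", high)  # H: ['SE 10', 'SE 21', 'SE 26', 'SE 37', 'SE 38']
```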

To gauge compliance, participants were sent home with a “program use log” and asked to keep track of approximately how many hours per day they used the different programs (experimental and clinical programs). Participants were asked to keep notes about their impressions of each experimental program and to provide subjective ratings for each program after the completion of each home adaptation period using a scale of 10 (very favorable) to 1 (very unfavorable).

RESULTS

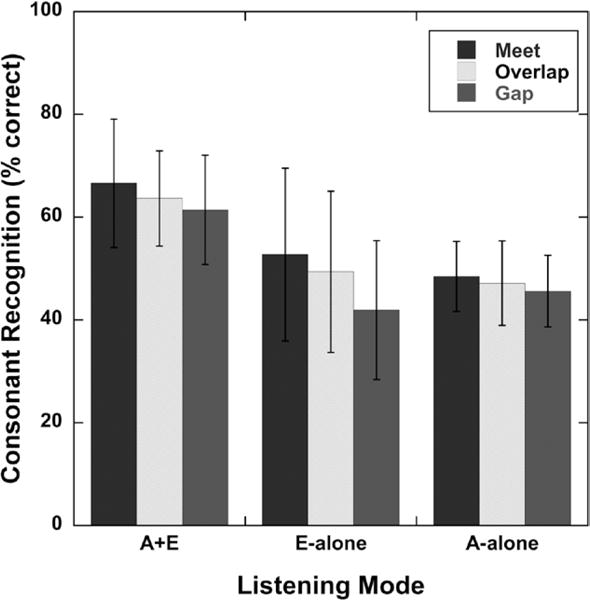

Consonant Recognition in Quiet

The results of a two-factor repeated measures analysis of variance (ANOVA), with condition and group as factors, indicated no significant effect of the allocation between acoustic and electric processing and no effect of group on consonant recognition in quiet for the ipsilateral A+E listening mode. There was no interaction between condition and group. Mean consonant-recognition scores in quiet for the three test conditions are plotted in Figure 3 (low and high groups were combined into a single group). These results suggest that programming the acoustic and electric frequency ranges to create Meet, Overlap, or Gap processing confers no advantage or disadvantage for consonant recognition in quiet.
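To show where the within-subject F ratio comes from, the sketch below computes a one-way repeated-measures ANOVA. It is a simplification of the study’s analysis: condition is the only factor (the between-subjects group factor is dropped), and neither p-values nor Tukey–Kramer post hoc tests are computed; a statistics package would normally handle those. The toy data are invented and are not study data. For k conditions and n participants the F statistic has (k - 1, (k - 1)(n - 1)) degrees of freedom, so 3 conditions and 10 participants give (2, 18).

```python
from typing import Sequence, Tuple


def rm_anova_oneway(scores: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """One-way repeated-measures ANOVA.
    scores[i][j] is participant i's score in condition j.
    Returns (F, df_condition, df_error)."""
    n = len(scores)     # participants
    k = len(scores[0])  # conditions
    grand = sum(sum(row) for row in scores) / (n * k)
    cond_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subj_means = [sum(row) / k for row in scores]

    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)  # removed from the error term
    ss_error = ss_total - ss_cond - ss_subj                  # condition x participant residual

    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    f_stat = (ss_cond / df_cond) / (ss_error / df_error)
    return f_stat, df_cond, df_error


# Toy data (not study data): 3 participants x 3 conditions.
toy = [[1, 2, 3], [2, 4, 3], [3, 3, 6]]
print(rm_anova_oneway(toy))  # (3.0, 2, 4)
```

Removing the between-participant sum of squares from the error term is what distinguishes the repeated-measures design from an ordinary one-way ANOVA. Large individual differences in overall performance therefore do not mask condition effects.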

Fig. 3.

Mean consonant recognition in quiet scores (percent correct) for each test condition (Meet, Overlap, Gap) and each listening mode. A+E, ipsilateral acoustic plus electric; E-alone, electric alone; A-alone, ipsilateral acoustic alone. Error bars represent ±1 SD.

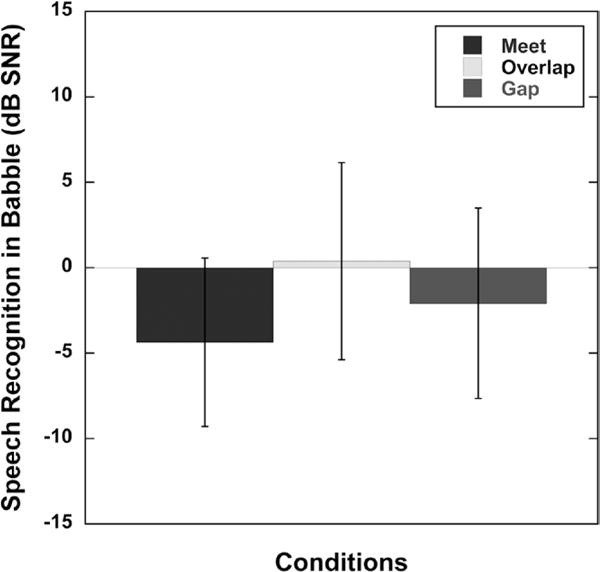

Speech Recognition in Babble

The results of a two-factor repeated measures ANOVA, with condition and group as factors, indicated a significant effect of the allocation between acoustic and electric processing on speech recognition in a babble background (F(2,18) = 6.93, p < 0.0059). There was no effect of group and no interaction between condition and group. Mean speech-recognition thresholds in babble for the three test conditions are plotted in Figure 4 (low and high groups were combined into a single group). This suggests that programming the acoustic and electric frequency ranges to create Meet, Overlap, or Gap processing can affect speech recognition in babble. Post hoc comparisons using a Tukey–Kramer adjustment (α = 0.05) revealed that performance in babble was significantly better in the Meet condition than in the Overlap condition (p = 0.0042).

Fig. 4.

Mean signal-to-noise ratio (dB SNR) for 50% correct speech recognition in babble for each test condition (Meet, Overlap, Gap) for the A+E listening mode. A+E, ipsilateral acoustic plus electric. Error bars represent ±1 SD.

Low Frequency Acoustic Cut Off Versus Higher Frequency Cut Off

As stated earlier, when participants’ performances were grouped and analyzed for acoustic cut offs at or below 900 Hz (L) versus those with cut offs above 900 Hz (H), there was no significant difference between the two groups. This would suggest that optimal allocations did not depend upon degree of low-frequency residual hearing or electrical responses from the cochlear implant, at least for the participant characteristics in this study.

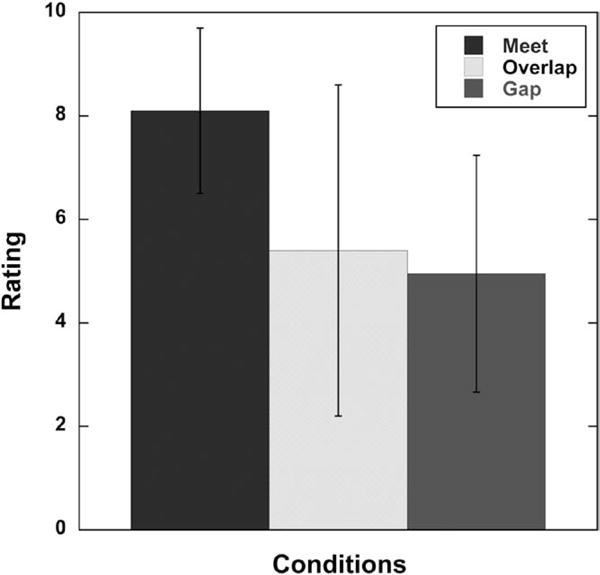

Subjective Ratings by Participants

The results of a two-factor repeated measures ANOVA, with condition and group as factors, indicated a significant effect of the allocation between acoustic and electric processing on participants’ subjective ratings (F(2,18) = 4.45, p < 0.0269). There was no effect of group and no interaction between condition and group. Mean subjective ratings by the participants for the three test conditions are plotted in Figure 5. These results suggest that programming the acoustic and electric frequency ranges to create Meet, Overlap, or Gap processing can affect subjective ratings. Post hoc comparisons using a Tukey–Kramer adjustment (α = 0.05) revealed that the Meet condition was rated significantly higher than the Gap condition (p = 0.0331). For the Meet condition, the mean rating across participants was 8.10 and the median rating was 8.00. Nine of 10 participants gave mostly positive or neutral subjective remarks such as “sounds natural,” “better clarity,” and “liked the program for listening to song lyrics and listening to TV.” One of 10 gave mostly negative remarks such as “nasal” sounding. For the Overlap condition, the mean rating across participants was 5.40 and the median rating was 5.50. Seven of 10 participants gave mostly negative subjective remarks such as “too much echo,” “sounds quacky,” and “distorted.” Three of 10 participants gave mostly positive or neutral subjective remarks such as “could hear better in the car” and “sounds similar to my clinical map.” For the Gap condition, the mean rating was 4.95 and the median rating was 5.00. Six of 10 participants gave mostly negative subjective remarks including “sounds like people are talking with marbles in their mouth,” “sounds kind of mushy,” “garbled,” and “thinner sound quality—almost tinny.” Four of 10 gave mostly positive or neutral remarks including “worked okay” and “pretty good.”

Fig. 5.

Mean subjective ratings by participants for each test condition (Meet, Overlap, Gap). Participant ratings for experimental programs using a scale of 10 (very favorable) to 1 (very unfavorable). Error bars represent ±1 SD.

DISCUSSION

This study explored whether there is an optimal approach to program the A+E devices in the same ear to maximize speech recognition for patients implanted with a Hybrid cochlear implant. The experimental conditions were devised based on the foundation of providing participants access to as much of their residual acoustic hearing as would be beneficial to them. The acoustic component stimulation was then held constant across all the conditions, and the electric frequency range programming was systematically varied to create Meet, Overlap, and Gap programming.

Instead of using a specified dB HL point on the audiogram, we used an objective, hearing-aid-based method to determine the upper edge of the acoustic range presented to each participant. Because ear canals differ in volume, shape, and resonance characteristics, real-ear measurements were used to adjust the output of the acoustic component to match NAL-NL1 targets. For example, an audiologist might achieve the prescriptive target for one individual whose audiometric threshold is 85 dB HL, whereas real-ear measurement for another individual with the same threshold at the same frequency may fall considerably short of (or exceed) the target. If only a specified dB HL point on the audiogram is used to set the upper edge of the acoustic range or the lower frequency boundary for electric stimulation, without taking real-ear measurements into account, an unintended gap or overlap can result between the acoustic and electric stimulation actually delivered.

We speculated that speech-perception performance in the A+E listening mode might be significantly degraded when a gap was created between acoustic and electric processing. For consonant recognition in quiet, however, this was not the case. Only in the E-alone listening mode did the narrower electric frequency range affect consonant recognition. This is expected: the Gap condition provides the narrowest electric frequency range of the three conditions, so in E-alone listening the available speech information is high-pass limited. After users were allowed to adapt, there was no significant difference among conditions for consonant recognition in quiet in the A+E listening mode, even with a moderate gap between acoustic and electric stimulation. This agrees with the findings of Lippmann (1996), who showed that normal-hearing listeners could maintain reasonable consonant recognition in quiet even when large amounts of mid-frequency speech energy were removed. Dorman et al. (2005) used acoustic simulations to examine speech-recognition performance as a function of the size of the frequency gap between acoustic and electric stimulation. Their results indicated that removing mid-frequency information has a greater effect on vowel identification and consonant place of articulation and little effect on consonant voicing and manner; however, the frequency gaps they used were much larger than those in the present study. We therefore theorize that for patients using Hybrid devices, when both low-frequency (acoustic) and high-frequency (electric) information is presented, a moderate spectral gap may not be particularly problematic for recognizing consonants in quiet.

In contrast, for speech recognition in babble, performance in the A+E listening mode was highest with Meet programming and poorest with Overlap programming. Why might Overlap programming be less effective in babble? Frequency resolution is better for acoustic hearing than for electric hearing (Henry et al. 2005), and understanding speech against a background of other talkers demands good frequency resolution. Overlap programming may therefore reduce the benefit of the better-resolved acoustic signal by superimposing an electric “masking” signal on the same frequency region.

Previous studies of listeners using acoustic and electric hearing devices in the same ear differ in design, which limits direct comparison. Our study contrasted Meet, Overlap (50% below the upper edge of acoustic), and Gap (50% above the upper edge of acoustic) conditions, whereas other studies compared full Overlap with variations of reduced Overlap. Our results generally agree with the principle demonstrated by Vermeire et al. (2008), whose participants performed consistently better with the reduced-Overlap fitting programs.
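The three fitting conditions can be expressed as a simple rule relating the lower boundary of the electric range to the upper edge of acoustic. This is a hypothetical sketch: it assumes that “50% below” and “50% above” mean scaling the edge frequency by 0.5 and 1.5, respectively, and the 700 Hz edge in the example is illustrative, not a value from Table 2.

```python
def electric_lower_boundaries(acoustic_upper_edge_hz: float) -> dict[str, float]:
    """Hypothetical helper: lower boundary (Hz) of the electric frequency range
    for each fitting condition, ASSUMING '50% below/above the upper edge of
    acoustic' means scaling the edge frequency by 0.5 and 1.5."""
    if acoustic_upper_edge_hz <= 0:
        raise ValueError("upper edge must be a positive frequency in Hz")
    return {
        "Meet": acoustic_upper_edge_hz,           # electric starts where acoustic stops
        "Overlap": 0.5 * acoustic_upper_edge_hz,  # electric extends below the acoustic edge
        "Gap": 1.5 * acoustic_upper_edge_hz,      # unstimulated band between the two
    }


# Illustrative edge of 700 Hz (not a participant value)
for name, lower_hz in electric_lower_boundaries(700.0).items():
    print(f"{name:8s} electric range starts at {lower_hz:.0f} Hz")
```

A real processor map assigns frequencies in discrete filter bands, so in practice the boundary would be rounded to an available band edge; the sketch ignores that constraint.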

As a group, participants reported a subjective preference for the Meet fitting regimen and described the Gap and Overlap programs as less natural sounding. Subjective ratings and comments could plausibly be related to the type of programming each individual was accustomed to using; this is one reason participants were given the opportunity to use the experimental programs in their everyday listening situations for a reasonable period. Table 2 (right side) shows, for each participant, the upper edge of acoustic and the electric frequency range of the clinical program. Recall that “upper edge of acoustic” was redefined for the present study. Table 2 also indicates whether each clinical program was similar to Meet, Overlap, or Gap, and each participant’s preferred experimental program based on subjective rating. For these participants, the preferred experimental program did not always match the type of program (Meet, Overlap, Gap) to which they were accustomed. The clinical programs for SE6, SE21, SE27, and SE38 were similar to Meet, and these participants preferred the experimental Meet program based on subjective ratings (note the differences between the clinical program and the experimental Meet program for SE21). Other participants (SE10, SE31, and SE40) preferred experimental programs different from their clinical program. Two participants (SE26 and SE37) rated all three experimental programs the same, and participant SE16 rated the Meet and Overlap programs the same.
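Deciding whether a clinical program is “similar to” Meet, Overlap, or Gap amounts to comparing its electric lower boundary with the upper edge of acoustic. The sketch below shows one way to make that comparison explicit; the function name and the 10% tolerance that counts as “Meet” are hypothetical choices, not criteria stated in this study.

```python
def classify_program(acoustic_upper_edge_hz: float,
                     electric_lower_hz: float,
                     tolerance: float = 0.10) -> str:
    """Hypothetical rule: label a program by the ratio of the electric lower
    boundary to the acoustic upper edge. Ratios within +/- tolerance of 1.0
    count as Meet (assumed threshold)."""
    if acoustic_upper_edge_hz <= 0 or electric_lower_hz <= 0:
        raise ValueError("frequencies must be positive (Hz)")
    ratio = electric_lower_hz / acoustic_upper_edge_hz
    if abs(ratio - 1.0) <= tolerance:
        return "Meet"
    # Electric starting below the acoustic edge double-covers a band; above it leaves a hole.
    return "Overlap" if ratio < 1.0 else "Gap"


# Illustrative (made-up) program settings, not values from Table 2
for edge, lower in [(700, 688), (700, 500), (700, 900)]:
    print(edge, lower, classify_program(edge, lower))
```

A ratio rather than an absolute difference in Hz is used so that the same tolerance behaves similarly for participants with low and high acoustic edges.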

A possible limitation of the present study is that only the Hybrid (short-electrode) device was used. In patients with a Hybrid cochlear implant, the most apical electrode typically lies at approximately the 4000 Hz place along the cochlea but is assigned speech frequencies near 700 Hz. There is thus a large spatial gap along the cochlea between the acoustic stimulation (approximately 700 Hz and below) and the electric stimulation (frequencies above 700 Hz). Neither electrophysiological nor behavioral masking (detection) measures have revealed interactions between electric and acoustic stimulation in any of our patients. Any interactions between acoustic and electric stimulation in these patients are therefore most likely limited to purely perceptual phenomena. For patients using longer electrode arrays with combined acoustic and electric stimulation, peripheral interactions may be more likely, although a search of the available literature revealed no reports of peripheral interactions with longer electrodes. In addition, the present study examined only the ipsilateral pathway; future research could investigate the combined condition (hearing aid in each ear plus implant).
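To give a sense of how large this spatial gap is, the Greenwood frequency-position function can convert both frequencies to cochlear place. This is an illustration with a swapped-in model: the study does not use the Greenwood function, and the constants are the commonly cited human values (A = 165.4 Hz, a = 0.06 per mm, k = 0.88, with distance measured in mm from the apex of a 35 mm cochlea).

```python
import math

# Greenwood human frequency-position function: f = A * (10**(a*x) - k),
# with x = distance from the apex in mm. Commonly cited human constants;
# these are assumptions for illustration, not values from this study.
A_HZ = 165.4
A_PER_MM = 0.06
K = 0.88


def place_from_apex_mm(freq_hz: float) -> float:
    """Inverse Greenwood function: cochlear place (mm from apex) for a frequency."""
    return math.log10(freq_hz / A_HZ + K) / A_PER_MM


acoustic_place = place_from_apex_mm(700.0)    # approximate upper edge of acoustic hearing
electrode_place = place_from_apex_mm(4000.0)  # approximate place of the most apical electrode
print(f"700 Hz place:  {acoustic_place:.1f} mm from apex")
print(f"4000 Hz place: {electrode_place:.1f} mm from apex")
print(f"separation:    {electrode_place - acoustic_place:.1f} mm")
```

Under these assumptions the 700 Hz and 4000 Hz places fall roughly 11.8 mm and 23.3 mm from the apex, about 11.5 mm apart. Cochlear length varies across individuals, so the figures are approximate.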

SUMMARY

When users were allowed to adapt, there were no significant differences in performance between conditions (Meet, Overlap, Gap) for consonant recognition in quiet. However, a significant decrement in performance was found in the Overlap condition for speech recognition in competing-talker babble. These results suggest that the Overlap method may not be optimal for the programming of ipsilateral acoustic plus electric devices. Subjective ratings indicated a significant preference for the Meet fitting regimen.

We recommend beginning the fitting process by optimizing the acoustic component with real-ear measures to determine the upper edge of acoustic (the highest frequency band at which prescriptive targets are matched). The lower frequency boundary of the electric allocation should then be selected so that a Meet fitting strategy is achieved.
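The recommended two-step procedure can be sketched in code. Everything here is hypothetical: the real-ear values are made up for illustration and are not participant data, the function names are ours, and treating a band as “matched” when the measured output comes within 5 dB of the NAL-NL1 target is an assumed tolerance rather than a criterion from this study.

```python
# Hypothetical real-ear data: frequency (Hz) -> (measured output, NAL-NL1 target), dB SPL.
# Made-up numbers for illustration only.
example_rem = {
    250: (60.0, 62.0),
    500: (65.0, 66.0),
    750: (63.0, 65.0),
    1000: (55.0, 68.0),
    1500: (50.0, 70.0),
}


def upper_edge_of_acoustic(rem: dict[int, tuple[float, float]],
                           tolerance_db: float = 5.0) -> int:
    """Highest frequency band whose measured output reaches the prescriptive
    target to within tolerance_db (assumed matching criterion)."""
    matched = [freq for freq, (measured, target) in rem.items()
               if measured >= target - tolerance_db]
    if not matched:
        raise ValueError("no band reaches the prescriptive target")
    return max(matched)


def meet_fitting(rem: dict[int, tuple[float, float]],
                 tolerance_db: float = 5.0) -> dict[str, int]:
    """Step 1: find the upper edge of acoustic from real-ear data.
    Step 2: start the electric allocation at that same frequency (Meet)."""
    edge = upper_edge_of_acoustic(rem, tolerance_db)
    return {"acoustic_upper_edge_hz": edge, "electric_lower_hz": edge}


print(meet_fitting(example_rem))
```

With these example values the upper edge falls at 750 Hz, so the electric allocation would start at 750 Hz. Taking the highest matched band, rather than stopping at the first unmatched one, is a literal reading of the definition in the text.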

Acknowledgments

The authors gratefully acknowledge Jacob Oleson for assistance with statistical analysis. The authors also thank Danielle Kelsay, Stephanie Fleckenstein, Ann Perreau, Julie Wieland, Anna Hong, and Aayesha Dhuldhoya for their contributions to this work. The authors are especially grateful to the subjects who generously gave their time to participate in this study.

Cochlear Corporation provided some of the equipment, including Nucleus Freedom Hybrid sound processors that participants used at home during data collection for this study. This work was supported in part by National Institute on Deafness and Other Communication Disorders grants 1 RC1DC010696 and 2 P50 DC000242.

Bruce J. Gantz serves as a consultant for Cochlear Corporation and Advanced Bionics. Dr. Gantz and the University of Iowa have a patent on the Hybrid cochlear implant but receive no royalties.

Footnotes

The remaining authors have no financial disclosures to report.

References

- Baumann U, Rader T, Helbig S, et al. Pitch matching psychometrics in electric acoustic stimulation. Ear Hear. 2011;32:656–662. doi: 10.1097/AUD.0b013e31821a4800.

- Büchner A, Schüssler M, Battmer RD, et al. Impact of low-frequency hearing. Audiol Neurootol. 2009;14(Suppl 1):8–13. doi: 10.1159/000206490.

- Byrne D, Dillon H, Ching T, et al. NAL-NL1 procedure for fitting nonlinear hearing aids: Characteristics and comparisons with other procedures. J Am Acad Audiol. 2001;12:37–51.

- Chang JE, Bai JY, Zeng FG. Unintelligible low-frequency sound enhances simulated cochlear-implant speech recognition in noise. IEEE Trans Biomed Eng. 2006;53(12 Pt 2):2598–2601. doi: 10.1109/TBME.2006.883793.

- Ching TY, Dillon H, Byrne D. Speech recognition of hearing-impaired listeners: Predictions from audibility and the limited role of high-frequency amplification. J Acoust Soc Am. 1998;103:1128–1140. doi: 10.1121/1.421224.

- Dorman MF, Gifford RH. Combining acoustic and electric stimulation in the service of speech recognition. Int J Audiol. 2010;49:912–919. doi: 10.3109/14992027.2010.509113.

- Dorman MF, Spahr AJ, Loizou PC, et al. Acoustic simulations of combined electric and acoustic hearing (EAS). Ear Hear. 2005;26:371–380. doi: 10.1097/00003446-200508000-00001.

- Dunn CC, Perreau A, Gantz B, et al. Benefits of localization and speech perception with multiple noise sources in listeners with a short-electrode cochlear implant. J Am Acad Audiol. 2010;21:44–51. doi: 10.3766/jaaa.21.1.6.

- Fraysse B, Macías AR, Sterkers O, et al. Residual hearing conservation and electroacoustic stimulation with the nucleus 24 contour advance cochlear implant. Otol Neurotol. 2006;27:624–633. doi: 10.1097/01.mao.0000226289.04048.0f.

- Fu QJ, Galvin JJ 3rd. Perceptual learning and auditory training in cochlear implant recipients. Trends Amplif. 2007;11:193–205. doi: 10.1177/1084713807301379.

- Fu QJ, Shannon RV, Galvin JJ 3rd. Perceptual learning following changes in the frequency-to-electrode assignment with the Nucleus-22 cochlear implant. J Acoust Soc Am. 2002;112:1664–1674. doi: 10.1121/1.1502901.

- Gantz BJ, Hansen MR, Turner CW, et al. Hybrid 10 clinical trial: Preliminary results. Audiol Neurootol. 2009;14(Suppl 1):32–38. doi: 10.1159/000206493.

- Gantz BJ, Turner CW. Combining acoustic and electrical hearing. Laryngoscope. 2003;113:1726–1730. doi: 10.1097/00005537-200310000-00012.

- Gantz BJ, Turner C. Combining acoustic and electrical speech processing: Iowa/Nucleus hybrid implant. Acta Otolaryngol. 2004;124:344–347. doi: 10.1080/00016480410016423.

- Gantz BJ, Turner C, Gfeller KE, et al. Preservation of hearing in cochlear implant surgery: Advantages of combined electrical and acoustical speech processing. Laryngoscope. 2005;115:796–802. doi: 10.1097/01.MLG.0000157695.07536.D2.

- Gantz BJ, Turner C, Gfeller KE. Acoustic plus electric speech processing: Preliminary results of a multicenter clinical trial of the Iowa/Nucleus Hybrid implant. Audiol Neurootol. 2006;11(Suppl 1):63–68. doi: 10.1159/000095616.

- Gfeller KE, Olszewski C, Turner C, et al. Music perception with cochlear implants and residual hearing. Audiol Neurootol. 2006;11(Suppl 1):12–15. doi: 10.1159/000095608.

- Gfeller K, Turner C, Oleson J, et al. Accuracy of cochlear implant recipients on pitch perception, melody recognition, and speech reception in noise. Ear Hear. 2007;28:412–423. doi: 10.1097/AUD.0b013e3180479318.

- Gstoettner W, Kiefer J, Baumgartner WD, et al. Hearing preservation in cochlear implantation for electric acoustic stimulation. Acta Otolaryngol. 2004;124:348–352. doi: 10.1080/00016480410016432.

- Gstoettner W, van de Heyning P, O’Connor AF, et al. Electric acoustic stimulation of the auditory system: Results of a multi-centre investigation. Acta Otolaryngol. 2008;128:968–975. doi: 10.1080/00016480701805471.

- Helbig S, Van de Heyning P, Kiefer J, et al. Combined electric acoustic stimulation with the PULSARCI(100) implant system using the FLEX(EAS) electrode array. Acta Otolaryngol. 2011;131:585–595. doi: 10.3109/00016489.2010.544327.

- Henry BA, Turner CW, Behrens A. Spectral peak resolution and speech recognition in quiet: Normal hearing, hearing impaired, and cochlear implant listeners. J Acoust Soc Am. 2005;118:1111–1121. doi: 10.1121/1.1944567.

- Hogan CA, Turner CW. High-frequency audibility: Benefits for hearing-impaired listeners. J Acoust Soc Am. 1998;104:432–441. doi: 10.1121/1.423247.

- James C, Albegger K, Battmer R, et al. Preservation of residual hearing with cochlear implantation: How and why. Acta Otolaryngol. 2005;125:481–491. doi: 10.1080/00016480510026197.

- Kiefer J, Gstoettner W, Baumgartner W, et al. Conservation of low-frequency hearing in cochlear implantation. Acta Otolaryngol. 2004;124:272–280. doi: 10.1080/00016480310000755a.

- Kiefer J, Pok M, Adunka O, et al. Combined electric and acoustic stimulation of the auditory system: Results of a clinical study. Audiol Neurootol. 2005;10:134–144. doi: 10.1159/000084023.

- Lenarz T, Stöver T, Buechner A, et al. Temporal bone results and hearing preservation with a new straight electrode. Audiol Neurootol. 2006;11(Suppl 1):34–41. doi: 10.1159/000095612.

- Lenarz T, Stöver T, Buechner A, et al. Hearing conservation surgery using the Hybrid-L electrode. Results from the first clinical trial at the Medical University of Hannover. Audiol Neurootol. 2009;14(Suppl 1):22–31. doi: 10.1159/000206492.

- Lippmann RP. Accurate consonant perception without mid-frequency speech energy. IEEE Trans Speech Audio Process. 1996;4:66–69.

- McDermott H, Sucher C, Simpson A. Electro-acoustic stimulation. Acoustic and electric pitch comparisons. Audiol Neurootol. 2009;14(Suppl 1):2–7. doi: 10.1159/000206489.

- Qin MK, Oxenham AJ. Effects of introducing unprocessed low-frequency information on the reception of envelope-vocoder processed speech. J Acoust Soc Am. 2006;119:2417–2426. doi: 10.1121/1.2178719.

- Reiss LA, Gantz BJ, Turner CW. Cochlear implant speech processor frequency allocations may influence pitch perception. Otol Neurotol. 2008;29:160–167. doi: 10.1097/mao.0b013e31815aedf4.

- Rosen S, Faulkner A, Wilkinson L. Adaptation by normal listeners to upward spectral shifts of speech: Implications for cochlear implants. J Acoust Soc Am. 1999;106:3629–3636. doi: 10.1121/1.428215.

- Simpson A, McDermott HJ, Dowell RC, et al. Comparison of two frequency-to-electrode maps for acoustic-electric stimulation. Int J Audiol. 2009;48:63–73. doi: 10.1080/14992020802452184.

- Skarzynski H, Lorens A, Piotrowska A. A new method of partial deafness treatment. Med Sci Monit. 2003;9:CS20–CS24.

- Turner CW, Gantz BJ, Karsten S, et al. Impact of hair cell preservation in cochlear implantation: Combined electric and acoustic hearing. Otol Neurotol. 2010;31:1227–1232. doi: 10.1097/MAO.0b013e3181f24005.

- Turner C, Gantz BJ, Reiss L. Integration of acoustic and electrical hearing. J Rehabil Res Dev. 2008a;45:769–778. doi: 10.1682/jrrd.2007.05.0065.

- Turner CW, Gantz BJ, Vidal C, et al. Speech recognition in noise for cochlear implant listeners: Benefits of residual acoustic hearing. J Acoust Soc Am. 2004;115:1729–1735. doi: 10.1121/1.1687425.

- Turner CW, Reiss LA, Gantz BJ. Combined acoustic and electric hearing: Preserving residual acoustic hearing. Hear Res. 2008b;242:164–171. doi: 10.1016/j.heares.2007.11.008.

- Turner CW, Souza PE, Forget LN. Use of temporal envelope cues in speech recognition by normal and hearing-impaired listeners. J Acoust Soc Am. 1995;97:2568–2576. doi: 10.1121/1.411911.

- Uchanski RM, Davidson LS, Quadrizius S, et al. Two ears and two (or more?) devices: A pediatric case study of bilateral profound hearing loss. Trends Amplif. 2009;13:107–123. doi: 10.1177/1084713809336423.

- Vermeire K, Anderson I, Flynn M, et al. The influence of different speech processor and hearing aid settings on speech perception outcomes in electric acoustic stimulation patients. Ear Hear. 2008;29:76–86. doi: 10.1097/AUD.0b013e31815d6326.

- von Ilberg C, Kiefer J, Tillein J, et al. Electric-acoustic stimulation of the auditory system. New technology for severe hearing loss. ORL J Otorhinolaryngol Relat Spec. 1999;61:334–340. doi: 10.1159/000027695.