Abstract

In this article, we extend the statistical detection performance evaluation of linear connectivity from Sameshima et al. (in: Slezak et al. (eds.) Lecture Notes in Computer Science, 2014) through new Monte Carlo simulations of three widely used toy models under different data record lengths. We evaluate a classic time domain multivariate Granger causality test, information partial directed coherence and information directed transfer function, and additionally include conditional multivariate Granger causality, whose behaviour was found to be anomalous.

Keywords: Partial directed coherence, Directed transfer function, Granger causality, Null hypothesis test performance, Conditional multivariate Granger causality

Introduction

This paper compares the statistical performance of linear connectivity detection [1] using four popular neural connectivity estimators. In addition to the classic Granger causality test (GCT) from [2], we employ our recently derived rigorous results [3, 4] on the asymptotic behaviour of information PDC (iPDC) and information DTF (iDTF) [5], which respectively generalize partial directed coherence (PDC) [6] and the directed transfer function (DTF) [7] so as to correctly describe coupling effect size. A fourth method, included in this extended version of [8], is the proposal of [9] (cMVGC) for detecting conditional Granger causality between pairs of time series; it is applied here using the authors' published MVGC package. Many recent papers [10–14] have compared contending connectivity estimation procedures. In fact, almost every new connectivity estimation procedure comes with some form of appraisal based on counting correct detection decisions. What sets the present effort apart, as the word statistical emphasizes, is that we focus on methods that have rigorous, theoretically derived asymptotic detection criteria.

In the comparisons, we used Monte Carlo simulations of three widely used toy models from the literature and examined how often the null hypothesis of no connectivity is rejected as a function of data record length, K. To complement the study, we also computed false positive (FP) and false negative (FN) test rates for each estimator. For the MVGC package, false detection rates were computed with and without the corrections recommended by its authors [9].
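To make the FP/FN bookkeeping concrete, here is a minimal sketch of how per-pair error rates can be tallied from the p values of repeated Monte Carlo trials. It assumes α = 1% (the level used throughout this study) and a known ground truth for each ordered pair; the function names are hypothetical and are not taken from the authors' MATLAB code.

```python
import math

ALPHA = 0.01  # significance level used in the study (alpha = 1%)


def neg_log10(p):
    """Map a p value onto the -log10 scale of the boxplot figures."""
    return -math.log10(p)


def error_rates(p_values, connection_exists, alpha=ALPHA):
    """Return (FP rate, FN rate) for one ordered pair of structures.

    p_values: one p value per Monte Carlo trial for the same pair.
    connection_exists: ground truth taken from the toy model equations.
    A trial rejects the null hypothesis of no connectivity when p < alpha,
    which is the same as -log10(p) > -log10(alpha) = 2 for alpha = 0.01.
    """
    n = len(p_values)
    rejections = sum(1 for p in p_values if p < alpha)
    if connection_exists:
        # A true connection that is not detected counts as a false negative.
        return 0.0, (n - rejections) / n
    # A rejection for an absent connection counts as a false positive.
    return rejections / n, 0.0


# Hypothetical p values from four trials of a pair with no true connection.
print(error_rates([0.5, 0.005, 0.2, 0.9], connection_exists=False))  # (0.25, 0.0)
```

Comparing p values directly with α avoids floating-point edge effects at the boundary; the −log10 transform is only needed for plotting.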

Methods and results

Monte Carlo simulations

Following our recently proposed information PDC and information DTF [5], and their corresponding rigorous asymptotic statistics (see [3] and [4] for details), we first gauged their statistical performance against that of the well-established time-domain GCT [2]. For further comparison, we added results of conditional connectivity detection obtained with the MVGC package [9]. Monte Carlo simulations were performed in MATLAB, using its normally distributed pseudorandom number generator to drive each system with uncorrelated, zero-mean, unit-variance innovation noise. To test the performance of the four connectivity estimators, for each toy model we selected record lengths from K = {100, 200, 500, 1000, 2000, 5000, 10000}, repeating 1000 simulations for each case. In each simulation, a burn-in set of 5000 initial data points was discarded to eliminate possible transients before the K samples of interest were retained. For GCT, iPDC and iDTF, we used the Nuttall–Strand algorithm for multivariate autoregressive (MAR) model estimation and the Akaike information criterion (AIC) for model order selection [15], while for cMVGC the Levinson–Wiggins–Robinson solution of the multivariate Yule–Walker equations was used, as is the package default [9]. The detection threshold was set at α = 1%. For iPDC and iDTF, p values were computed at 32 uniformly spaced normalized frequency points covering the whole frequency interval, and a connection between a given pair of structures was deemed detected if its p value was less than α at any frequency in the interval. This decision criterion is somewhat lax and tends to overestimate the presence of connectivity for iPDC and iDTF. In particular, one should expect iPDC to detect connectivity more often than GCT, i.e. more FPs are likely.
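The simulations themselves were run in MATLAB. As a language-neutral illustration of the burn-in step only, the following minimal Python sketch drives a hypothetical first-order bivariate model (`A_EXAMPLE`, not one of the three toy models, whose higher-order equations are given in the cited sources) with uncorrelated unit-variance Gaussian innovations, discards 5000 start-up samples and keeps the next K. `simulate_var1` and its parameters are illustrative names, not part of the authors' code.

```python
import random


def simulate_var1(coeffs, k, burn_in=5000, seed=None):
    """Simulate a VAR(1) process x(n) = A x(n-1) + w(n).

    coeffs: square list-of-lists A (hypothetical values below).
    w(n): uncorrelated, zero-mean, unit-variance Gaussian innovations.
    The first `burn_in` samples are discarded to remove start-up transients,
    and the following `k` samples are returned as a list of vectors.
    """
    rng = random.Random(seed)
    n_vars = len(coeffs)
    x = [0.0] * n_vars
    kept = []
    for n in range(burn_in + k):
        w = [rng.gauss(0.0, 1.0) for _ in range(n_vars)]
        # Build a new list each step so stored samples are never mutated.
        x = [sum(coeffs[i][j] * x[j] for j in range(n_vars)) + w[i]
             for i in range(n_vars)]
        if n >= burn_in:
            kept.append(x)
    return kept


# Hypothetical stable model: x1 drives x2, x2 does not drive x1.
A_EXAMPLE = [[0.5, 0.0],
             [0.4, 0.5]]

record = simulate_var1(A_EXAMPLE, k=200, seed=1)
```

Fixing the seed makes each Monte Carlo trial reproducible, which is what allows the same trials to be fed to every estimator for the trial-by-trial comparisons.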

The reader may access our open MATLAB codes for GCT and for both iPDC and iDTF asymptotic statistics used in this study at http://www.lcs.poli.usp.br/~baccala/BIHExtension2014/.

The Web site also contains the datasets of the simulation results and a copy of the exact version of the MVGC package used in the present comparisons [9]. This gives readers full access to the data and procedures, making it possible to cross-check and replay all results. Additional graphs and results available there may be consulted for details; only the overall representative behaviour is summarized here.

Next, we describe the toy models and the associated simulation results.

Model 1: Closed-loop model

It is an -variable model, borrowed from [16] (Fig. 1), with two completely disconnected substructures, {} and {, }, which share a common frequency of oscillation. The set of descriptive equations is

| 1 |

where stand for uncorrelated Gaussian innovations.

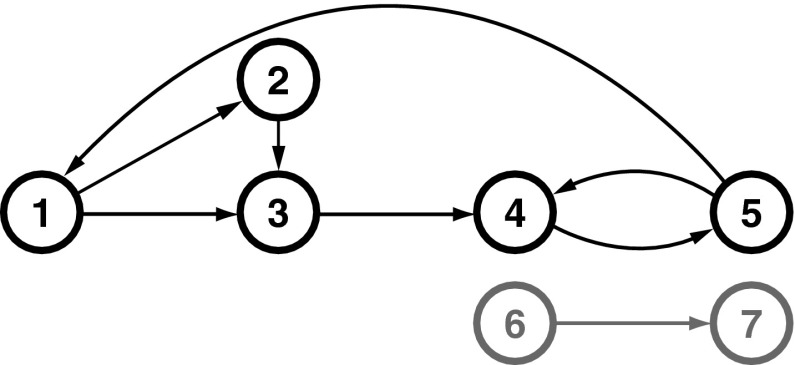

Fig. 1.

Diagram depicting the essential elements of Model 1 represented by Eq. 1 from [16]. The elements from to establish closed-loop connections, with short and long connected paths, while and are part of a completely separate substructure, i.e. disconnected from , but sharing a common frequency of oscillation

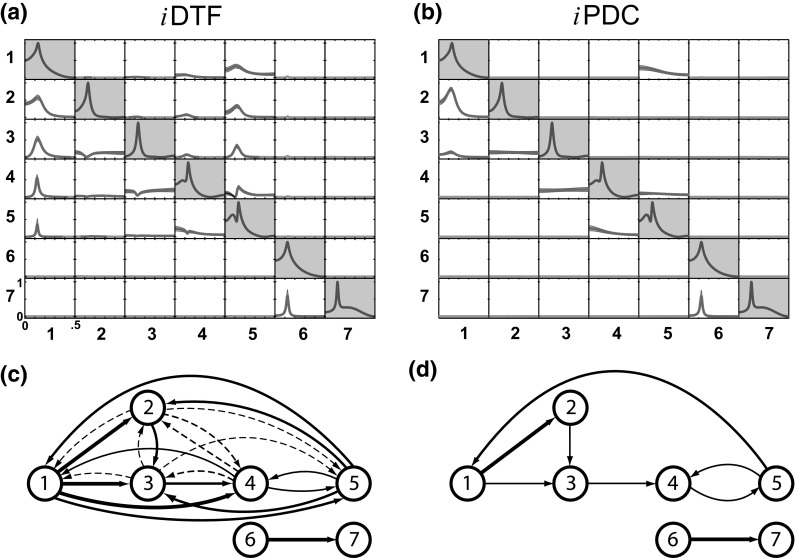

Results from a single-trial example of iDTF and iPDC connectivity estimation in the frequency domain are depicted in Fig. 2a, b, respectively, with values significant at α = 1% represented by red solid lines. The corresponding connectivity graphs are shown in Fig. 2c, d, where arrow thickness represents estimate magnitude. Note that iPDC reflects adjacent connections (Fig. 2b, d), while iDTF (Fig. 2a, c) represents the reachability aspects of the directed structure [17, 18]. Reachability refers to the net influence of one time series on another through all signal pathways, i.e. it measures how much of one series ends up influencing another.
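The adjacency versus reachability contrast can be seen directly in the original normalizations of PDC [6] (column, i.e. source, normalization of Ā(f)) and DTF [7] (row, i.e. target, normalization of H(f) = Ā(f)⁻¹). The minimal sketch below computes both for a hypothetical first-order chain x1 → x2 → x3. It deliberately omits the innovation-covariance weighting that defines iPDC and iDTF [5]; to our understanding the information forms coincide with these when the innovation covariance is the identity, but consult [5] for the general expressions. All function names are illustrative.

```python
import cmath
import math


def inverse(m):
    """Invert a small complex matrix by Gauss-Jordan elimination with pivoting."""
    n = len(m)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def a_bar(coeffs, f):
    """Abar(f) = I - A1 exp(-i 2 pi f) for a VAR(1); f is normalized frequency."""
    n = len(coeffs)
    z = cmath.exp(-2j * math.pi * f)
    return [[(1.0 if i == j else 0.0) - coeffs[i][j] * z for j in range(n)]
            for i in range(n)]


def pdc(coeffs, f):
    """PDC: |Abar_ij| divided by the norm of column j (source j -> target i)."""
    ab = a_bar(coeffs, f)
    n = len(ab)
    col = [math.sqrt(sum(abs(ab[k][j]) ** 2 for k in range(n))) for j in range(n)]
    return [[abs(ab[i][j]) / col[j] for j in range(n)] for i in range(n)]


def dtf(coeffs, f):
    """DTF: |H_ij| divided by the norm of row i, with H = Abar^-1."""
    h = inverse(a_bar(coeffs, f))
    n = len(h)
    row = [math.sqrt(sum(abs(h[i][k]) ** 2 for k in range(n))) for i in range(n)]
    return [[abs(h[i][j]) / row[i] for j in range(n)] for i in range(n)]


# Hypothetical chain x1 -> x2 -> x3 with no direct x1 -> x3 term.
A_CHAIN = [[0.5, 0.0, 0.0],
           [0.4, 0.5, 0.0],
           [0.0, 0.4, 0.5]]

pdc_chain = pdc(A_CHAIN, 0.0)  # pdc_chain[2][0] is 0: no direct link
dtf_chain = dtf(A_CHAIN, 0.0)  # dtf_chain[2][0] is about 0.45: x1 reaches x3 via x2
```

Indices follow the figure layout: the first index is the target (row) and the second the source (column).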

Fig. 2.

Single-trial results of (a) iDTF and (b) iPDC estimation for a simulation of Model 1 with K = 2000 points. In both (a) and (b), the main diagonal subplots with gray background contain power spectra, while each off-diagonal subplot shows the iDTF or iPDC measure in the frequency domain, with source variables in columns and target structures in rows; values significant at α = 1% are drawn in red and nonsignificant ones in green. (c) As theoretically expected, according to the iDTF estimate, all nodes of set can reach one another. (d) iPDC, by contrast, correctly exposes the connectivity pattern of immediately adjacent nodes, similar to GCT. See [17] for further discussion of the contrast between iDTF and iPDC. (Color figure online)

Granger causality test for Model 1

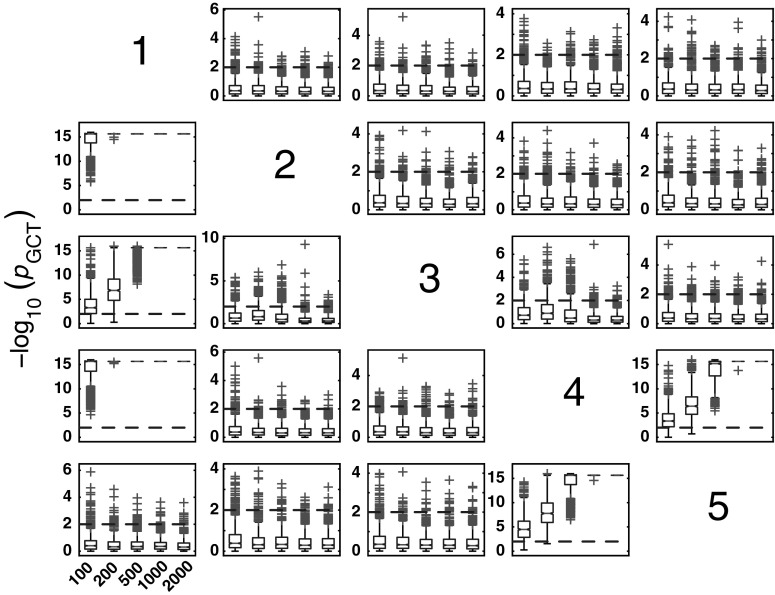

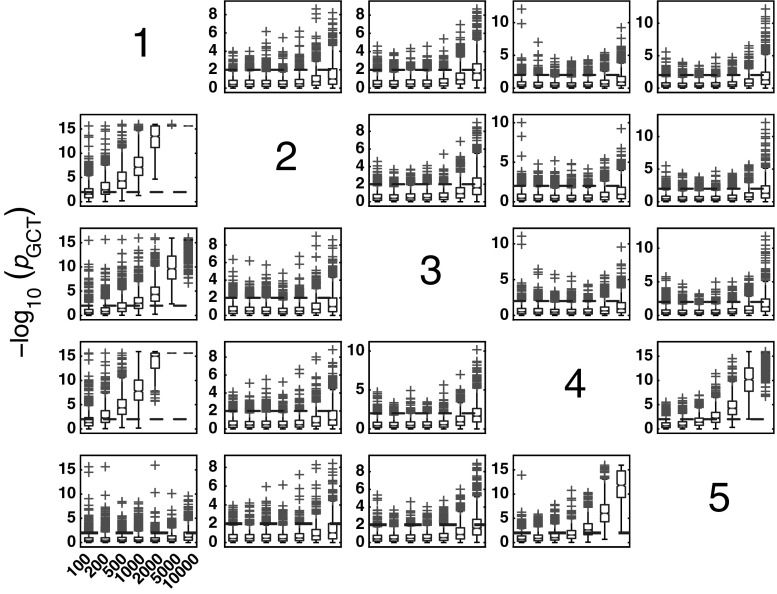

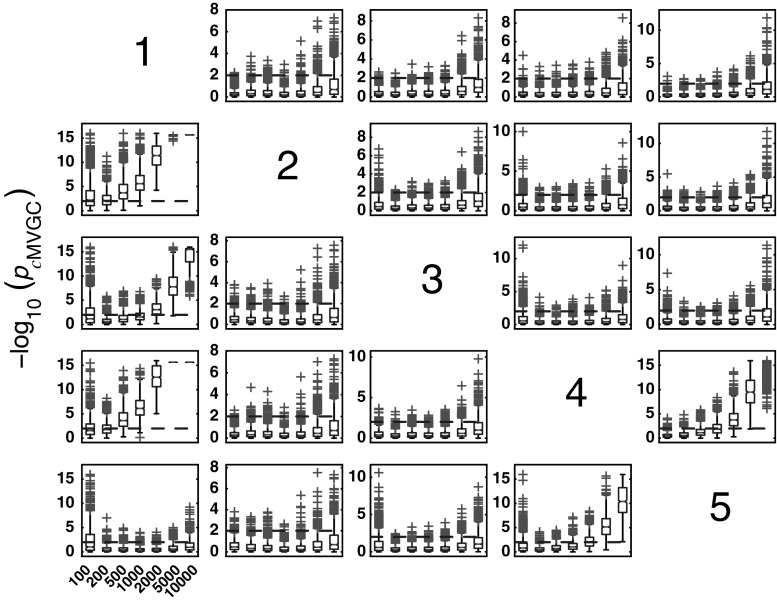

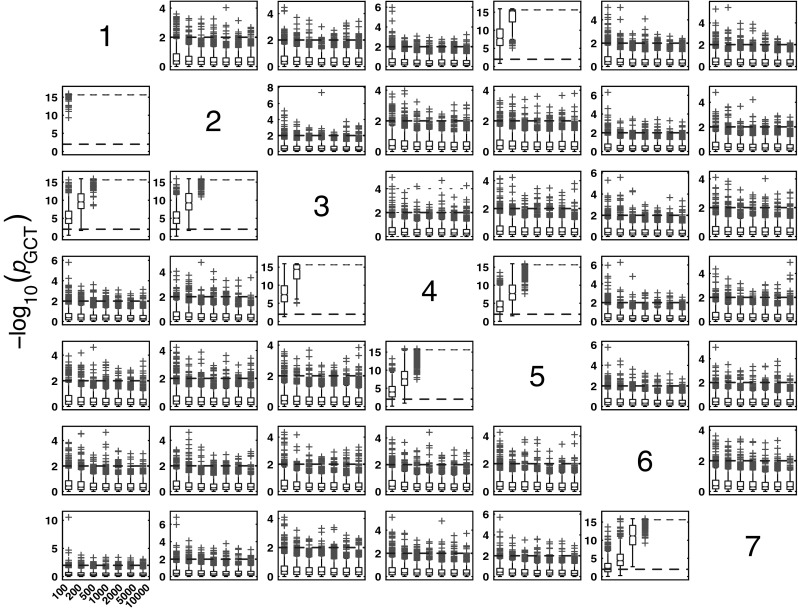

The boxplots of −log10(p value) in Fig. 3 summarize GCT performance for Model 1 and K = {100, 200, 500, 1000, 2000, 5000, 10000} data record lengths. As expected, for K > 200, it properly detects connectivity between adjacent structures with zero observed FNs for all pairs of existing connections.
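The study applies the classic multivariate test of [2] to the full MAR model. To show the mechanics of a Granger causality test with only the standard library, the minimal sketch below swaps in a simpler bivariate likelihood-ratio version with a single lag, assuming zero-mean data: the statistic T·ln(RSS_restricted/RSS_full) is referred to a χ² distribution with one degree of freedom. The hypothetical x1 → x2 model and all names are illustrative, not the authors' implementation.

```python
import math
import random


def rss_one(y, u):
    """Residual sum of squares of y regressed on u alone (no intercept)."""
    suu = sum(a * a for a in u)
    suy = sum(a * b for a, b in zip(u, y))
    return sum(b * b for b in y) - suy * suy / suu


def rss_two(y, u, v):
    """Residual sum of squares of y regressed on u and v (no intercept)."""
    suu = sum(a * a for a in u)
    svv = sum(a * a for a in v)
    suv = sum(a * b for a, b in zip(u, v))
    suy = sum(a * b for a, b in zip(u, y))
    svy = sum(a * b for a, b in zip(v, y))
    det = suu * svv - suv * suv
    beta_u = (svv * suy - suv * svy) / det
    beta_v = (suu * svy - suv * suy) / det
    return sum((c - beta_u * a - beta_v * b) ** 2 for a, b, c in zip(u, v, y))


def granger_p_value(source, target):
    """p value for H0: source does not Granger-cause target (bivariate, lag 1)."""
    y, y_lag, x_lag = target[1:], target[:-1], source[:-1]
    stat = len(y) * math.log(rss_one(y, y_lag) / rss_two(y, y_lag, x_lag))
    # Under H0, stat is asymptotically chi-square with 1 degree of freedom,
    # whose upper tail probability is erfc(sqrt(stat / 2)).
    return math.erfc(math.sqrt(max(stat, 0.0) / 2.0))


# Hypothetical bivariate model: x1 drives x2, x2 does not drive x1.
rng = random.Random(0)
x1, x2 = [0.0], [0.0]
for _ in range(5000 + 2000):  # 5000 burn-in samples, then K = 2000
    e1, e2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    new_x2 = 0.4 * x1[-1] + 0.5 * x2[-1] + e2
    x1.append(0.5 * x1[-1] + e1)
    x2.append(new_x2)
x1, x2 = x1[-2000:], x2[-2000:]

p_x1_to_x2 = granger_p_value(x1, x2)  # existing connection: tiny p value
p_x2_to_x1 = granger_p_value(x2, x1)  # null direction: below 0.01 in roughly 1% of runs
```

Repeating the last block over many seeds and counting p < 0.01 for the null direction is exactly the kind of Neyman–Pearson check that the boxplots summarize.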

Fig. 3.

In this and all similar figures that follow, subplots are arranged with source variables in columns and target structures in rows. Each subplot contains boxplots of the distribution of GCT −log10(p value) over 1000 Monte Carlo simulations for record lengths K = {100, 200, 500, 1000, 2000, 5000, 10000} along its x-axis. Since α = 0.01, values above 2 (dashed line) indicate rejection of the null hypothesis of absent connectivity. Red crosses indicate outliers of the p value distribution; those above the dashed line represent false positives (FPs) for nonexistent connections. (Color figure online)

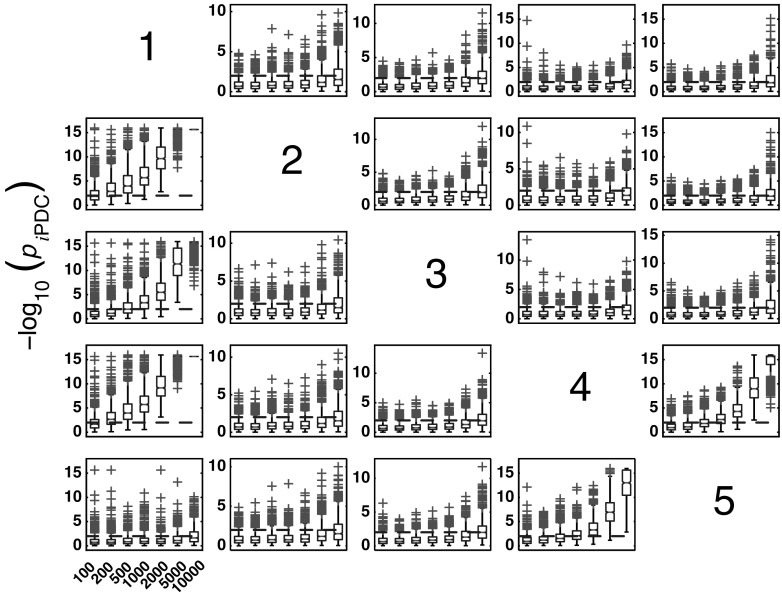

iPDC performance for Model 1

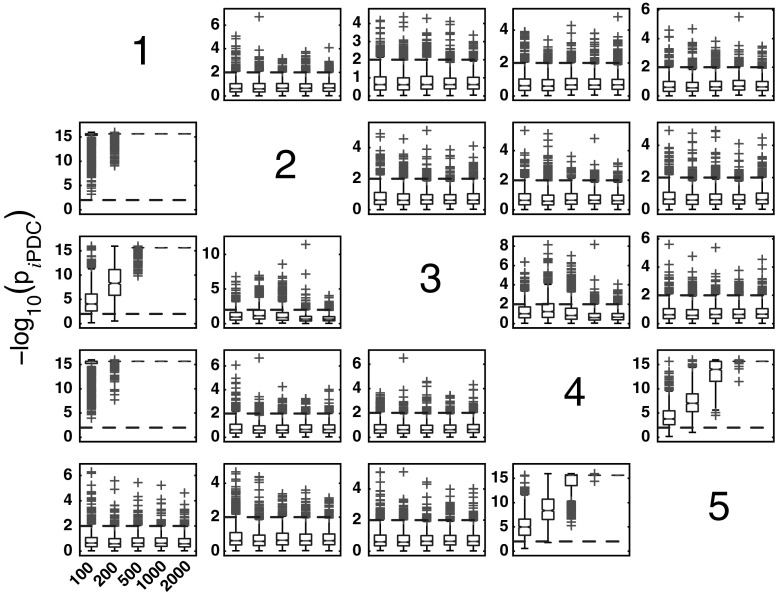

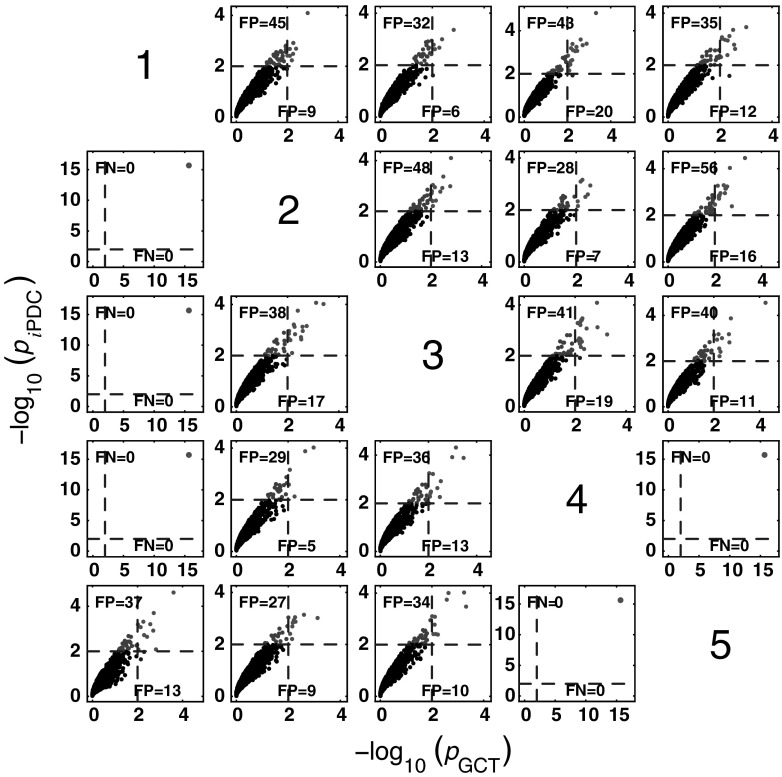

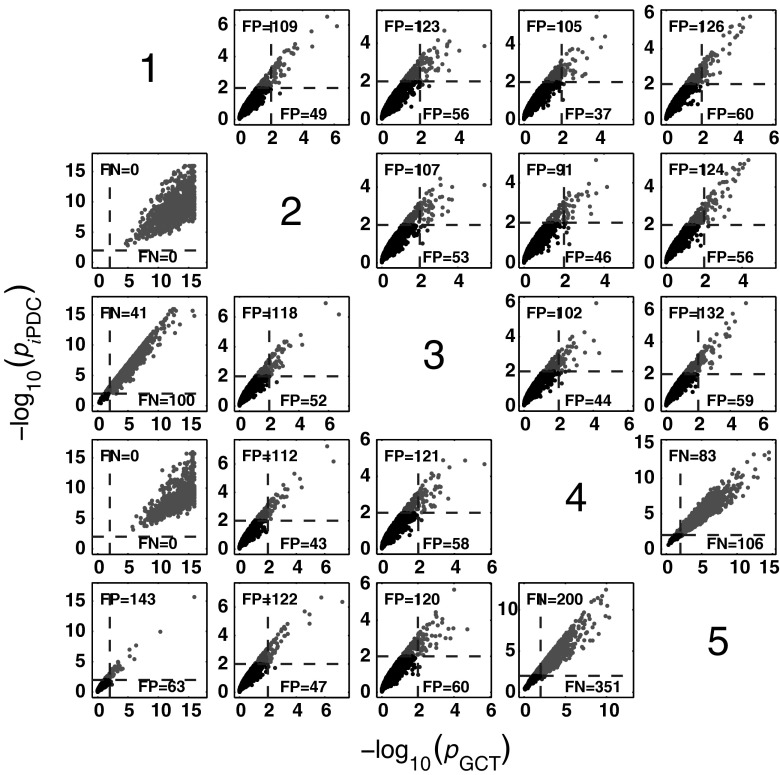

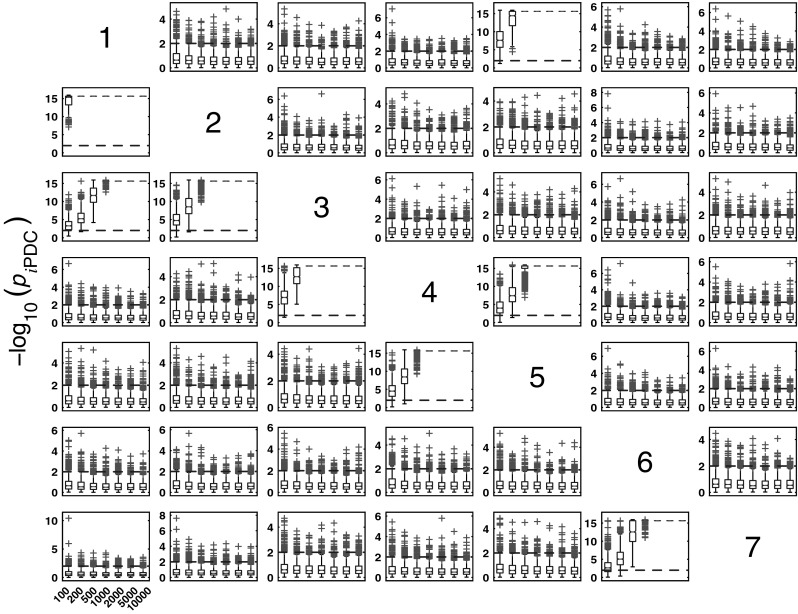

Figure 4 summarizes the asymptotic iPDC statistical performance for the same data and record lengths as for GCT in Fig. 3, showing similar performance (see also Figs. 5, 6). A closer comparison on identical trials for each estimator (Fig. 7, iPDC versus GCT, K = 2000) further reveals consistently higher FP values for iPDC. This is expected from the way the test was performed, since the iPDC decision is dictated by a single frequency above threshold. Consistent with the larger number of FPs for iPDC, the average slopes in Fig. 7 are above 45°.
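Why the any-frequency rule inflates FPs can be quantified with a rough reference figure. In the minimal sketch below, a connection is declared when the p value at any of the 32 tested frequencies is below α. If those 32 tests were independent, the null rejection probability would be 1 − (1 − α)^32, about 27.5% for α = 1%. Neighbouring frequencies are in fact strongly correlated, so this number is a pessimistic reference rather than a prediction; the observed iPDC FP rates are far lower. The names are hypothetical.

```python
ALPHA = 0.01
N_FREQ = 32  # number of uniformly spaced normalized frequencies tested in the study


def detect_any_frequency(p_values_over_freq, alpha=ALPHA):
    """Declare a connection if the p value at ANY tested frequency is below alpha."""
    return min(p_values_over_freq) < alpha


def fp_rate_if_independent(alpha=ALPHA, m=N_FREQ):
    """Null rejection probability of the any-frequency rule for m independent tests."""
    return 1.0 - (1.0 - alpha) ** m


print(round(fp_rate_if_independent(), 3))  # 0.275
```

With m = 1 the rule reduces to an ordinary level-α test, which is why the single-statistic GCT serves as the baseline.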

Fig. 4.

Model 1 boxplot performance summary of iPDC asymptotics. Most outliers for absent connections are above the threshold decision line.

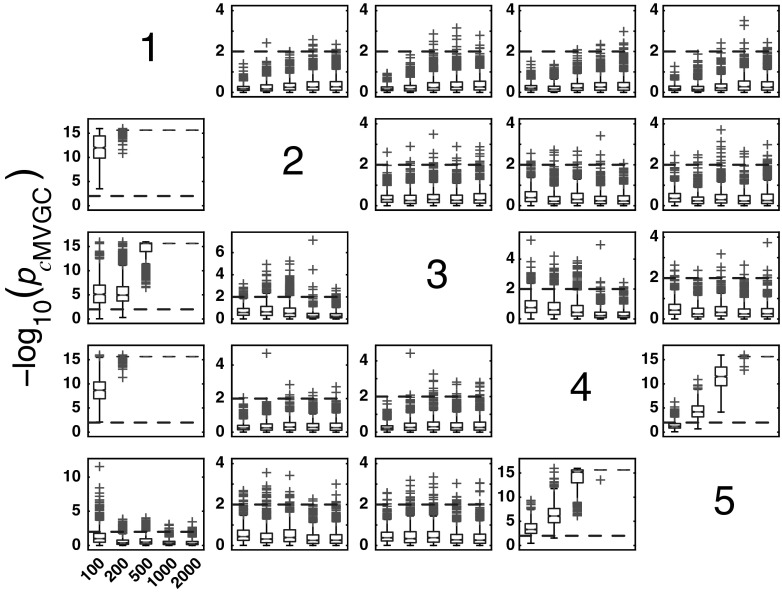

Fig. 5.

Model 1 boxplot performance summary of cMVGC asymptotics. Note that the red-cross outliers for the connections into and (first and sixth rows of subplots) are consistently below −log10(p value) = 2, while those from are consistently above it for all , something that is reflected in Fig. 8. (Color figure online)

Fig. 6.

Model 1 boxplot performance summary of iDTF asymptotics. Note that every node of set can reach every other node through directed paths, as depicted in Fig. 2c. Note also that FN rates decrease consistently for larger K.

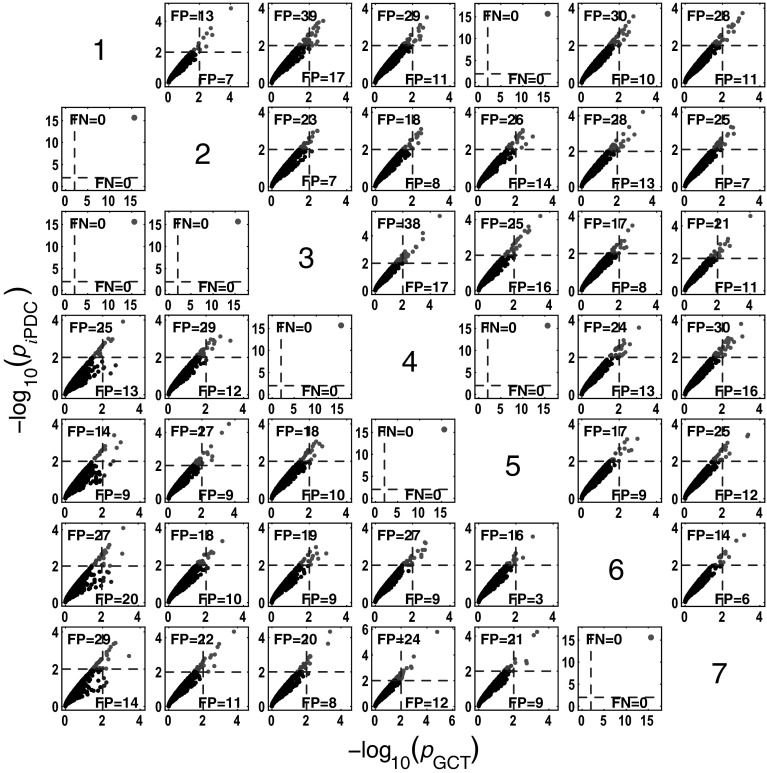

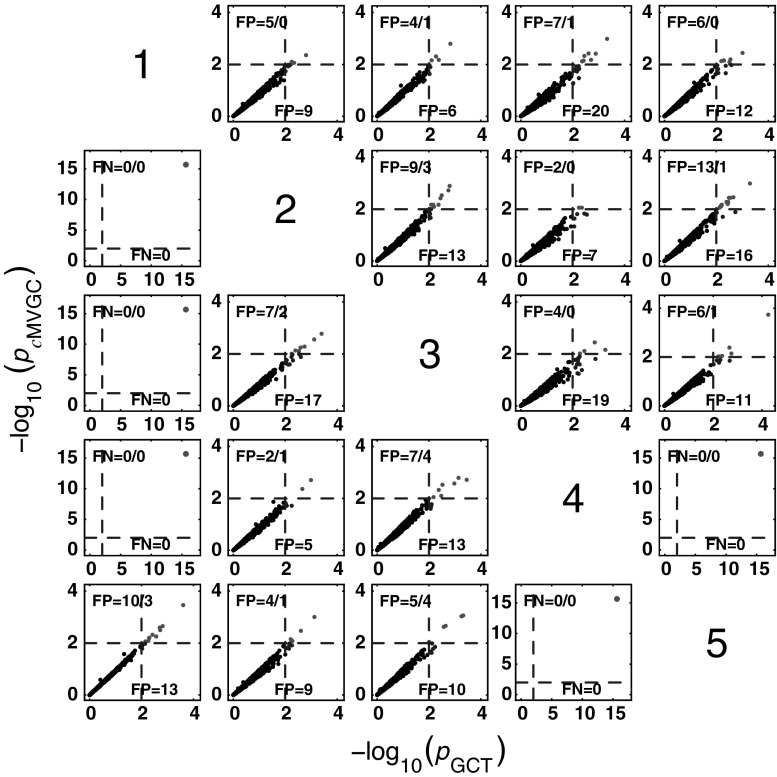

Fig. 7.

GCT and iPDC comparative connectivity detection performance (K = 2000), where the iPDC −log10(p value) of each of the 1000 simulations is plotted against the corresponding GCT −log10(p value). Results for all connections cluster slightly above the 45° line with (median; minimum; and maximum). The numbers of FP and FN detections over the 1000 simulations are also shown for iPDC (top left) and GCT (bottom right) in each subplot.

Note that, for conciseness, trial-by-trial comparisons are presented only against GCT; the behaviour of other pairs of methods is easy to infer. GCT was chosen as the reference because of its canonical behaviour in terms of the expected performance in the Neyman–Pearson hypothesis testing framework. Routines available on the Web site allow readers to examine comparisons between other pairs of methods.

cMVGC behaviour for Model 1

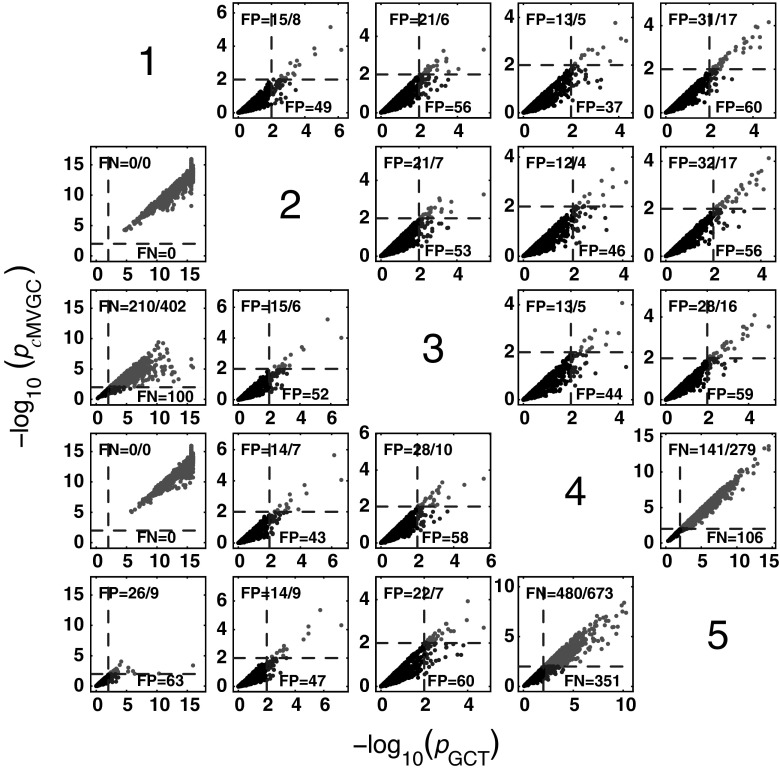

Figure 5 summarizes the performance of pairwise conditional MVGC in the form of boxplots. Asymptotically, they capture the structure of Fig. 1 despite differences compared to GCT and iPDC. These differences are easier to appreciate in the trial-by-trial comparison with GCT (Fig. 8, K = 2000), which shows that cMVGC's FP rates are sometimes well below the imposed α and become even more extreme after the corrections recommended by its authors [9]. Note how the point distributions in Fig. 8 hardly ever cluster around the 45° line for connections reaching the and oscillators. For connections leaving , the pattern is reversed. It is this failure to meet the preset α irrespective of which connection is under consideration that we call anomalous here.
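Whether an FP count out of 1000 null trials is compatible with α = 1% can be judged against the binomial distribution, whose mean here is 10. The minimal sketch below computes a central range of counts that a level-α test would produce with at least 95% probability, trimming at most 2.5% of probability mass from each tail. It is an illustrative check under the assumption of independent trials, not a procedure used in the study; the function name is hypothetical.

```python
import math


def binomial_interval(n=1000, alpha=0.01, coverage=0.95):
    """Central range (low, high) of FP counts expected out of n null trials.

    X ~ Binomial(n, alpha) is the number of rejections of a true null
    hypothesis; at most (1 - coverage) / 2 of probability mass is trimmed
    from each tail, so P(low <= X <= high) >= coverage.
    """
    pmf = [math.comb(n, k) * alpha ** k * (1 - alpha) ** (n - k)
           for k in range(n + 1)]
    tail = (1.0 - coverage) / 2.0
    cumulative, low = 0.0, 0
    while cumulative + pmf[low] <= tail:
        cumulative += pmf[low]
        low += 1
    cumulative, high = 0.0, n
    while cumulative + pmf[high] <= tail:
        cumulative += pmf[high]
        high -= 1
    return low, high


low, high = binomial_interval()
```

FP counts far outside this range centred on 10, whether near zero or markedly elevated, are hard to attribute to Monte Carlo variability alone.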

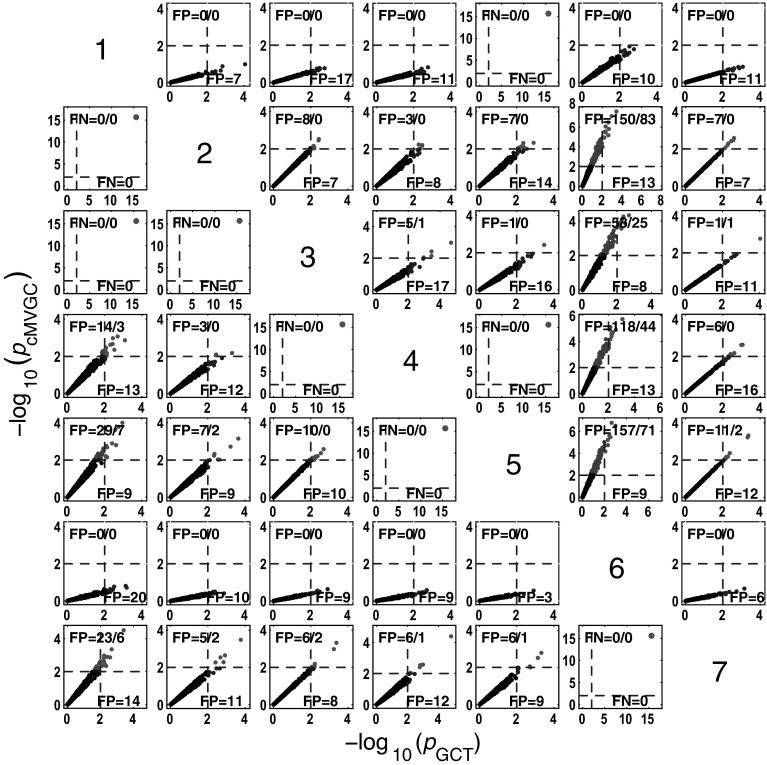

Fig. 8.

cMVGC versus GCT performance (K = 2000) clusters along lines with a large spread of coefficients (median ; minimum ; and maximum ), confirming cMVGC's abnormal behaviour. Connections out of are consistently clustered above the 45° line, in contrast with those reaching and , which consistently show low cMVGC FP values (top left) (except for ). cMVGC abnormality is apparent because the FP counts differ markedly from the 10 (out of 1000) expected for α = 1%. The cMVGC-corrected FP counts (after the slash) do not improve the situation.

iDTF performance for Model 1

Figure 6 summarizes the performance of the asymptotic statistics for iDTF. The boxplots clearly show that, for larger sample sizes, iDTF correctly detects the reachability structure shown in Fig. 2c. Note that the weakest and, in this case, farthest connection () requires longer record lengths for proper detection.
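The reachability structure that iDTF is expected to recover is the transitive closure of the adjacency graph that GCT and iPDC target. The minimal sketch below computes it with Warshall's algorithm for a hypothetical five-node graph (a three-node closed loop plus a separate two-node pair), not for Model 1 itself, whose equations are given in [16]. Indices follow the figure layout, with targets in rows and sources in columns; the names are illustrative.

```python
def reachability(adjacency):
    """Transitive closure of a directed graph (Warshall's algorithm).

    adjacency[i][j] is True when j -> i directly (sources in columns,
    targets in rows). The result marks j -> i whenever a directed path exists.
    """
    n = len(adjacency)
    reach = [list(row) for row in adjacency]
    for k in range(n):
        for i in range(n):
            for j in range(n):
                # A path j -> k followed by k -> i gives a path j -> i.
                if reach[k][j] and reach[i][k]:
                    reach[i][j] = True
    return reach


# Hypothetical graph: loop x1 -> x2 -> x3 -> x1 and a separate pair x4 -> x5.
N = 5
EXAMPLE = [[False] * N for _ in range(N)]
for source, target in [(0, 1), (1, 2), (2, 0), (3, 4)]:
    EXAMPLE[target][source] = True

closure = reachability(EXAMPLE)  # every loop node reaches every other; x4 reaches x5 only
```

Distant links in the closure correspond to long paths whose net influence is the product of several couplings, which is consistent with the observation that the farthest connection needs longer records to be detected.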

Model 2: Five-variable model

Model 2, introduced by [6], is graphically represented in Fig. 9, and its defining equations are

| 2 |

where , as before, stand for uncorrelated Gaussian innovations. Computations were performed for K = {100, 200, 500, 1000, 2000} long records over 1000 Monte Carlo repetitions.

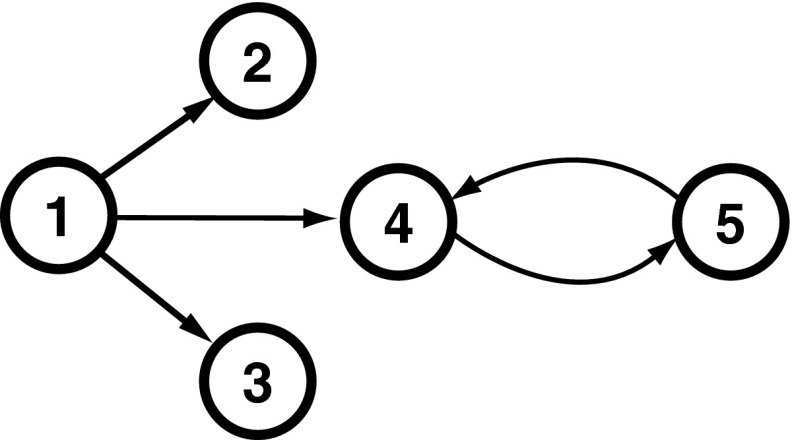

Fig. 9.

Diagram depicting the essential elements of Model 2 introduced by [6]

GCT performance

As before, GCT's performance for Model 2 improves with increasing record length (Fig. 10). At K = 200, GCT already performs well, with FN rates below 5 %, and overall FN rates fall below 2 % for K = 2000.

Fig. 10.

GCT performance for Model 2 and K = {100, 200, 500, 1000, 2000} data record lengths

iPDC performance

For Model 2, FN rates are practically negligible when K > 200 for GCT, iPDC and cMVGC alike (Figs. 10–12). Overall, the pattern of iPDC performance is similar to that of GCT, yet iPDC's FP rates are slightly higher than GCT's; for example, at K = 2000 they lie between 2.7 and 5.6 % (Fig. 13).

Fig. 11.

iPDC performance for Model 2 and K = {100, 200, 500, 1000, 2000} data record lengths

Fig. 12.

cMVGC performance for Model 2 and K = {100, 200, 500, 1000, 2000} data record lengths

Fig. 13.

Comparison of GCT and iPDC detection performance at K = 2000 time samples for Model 2

cMVGC asymptotic behaviour for Model 2

cMVGC performance for K = {100, 200, 500, 1000, 2000} is shown in Fig. 12. Compared with GCT (Fig. 14), cMVGC FPs are consistently lower than GCT's for K = 2000 and, as for the previous model, do not conform to the preset α for FP rates. This is also easy to appreciate for other values of K in Fig. 12, as most outliers (red crosses) for nonexistent connections lie below the −log10(p value) = 2 line.

Fig. 14.

Comparison of GCT and cMVGC detection performance at K = 2000 time samples for Model 2

Taking GCT as a reference, the trial-by-trial comparisons of iPDC and cMVGC (Figs. 13, 14) confirm the pattern of higher FP rates for the former, in contrast with FP rates below 1 % for cMVGC, with or without correction. This also suggests possible problems in how the MVGC package handles the FP rate. These may happen to be benign for MVGC in this example, but this is not the general case, since it does not hold for Model 1.

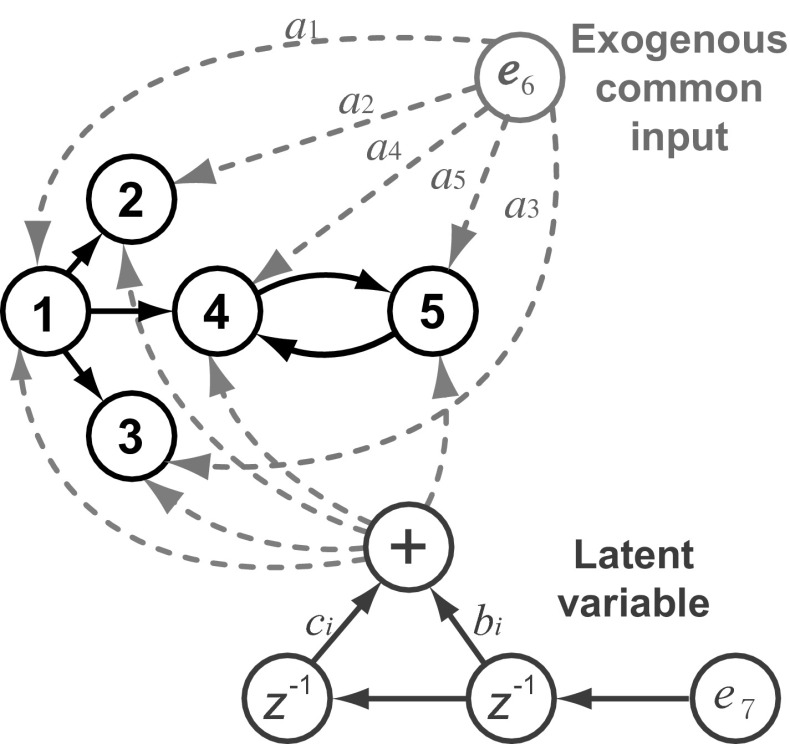

Model 3: Modified five-variable model

To further probe the statistical behaviour of GCT, iPDC and cMVGC, we simulated the modified five-channel toy Model 3, based on the model originally introduced in [6] under the variant formulation proposed by [19], reproduced here for reference (Fig. 15).

Fig. 15.

Diagram depicting the essential elements of model introduced by [19] modified from Model 2 [6]. For each simulation, the parameters were chosen randomly from a uniform distribution in interval, and all and , while the innovations, , were drawn from random variables with

The corresponding set of equations is

| 3 |

additionally containing the large exogenous input and the latent variable . In the simulations, were uncorrelated zero mean unit variance Gaussian innovation noises, and the parameters were chosen as and according to [19].

The proposal in [19] of introducing exogenous/latent variables is an interesting idea, as it allows investigating the influence of large common additive noise sources on the performance of GCT, iPDC and cMVGC. Here, to assess the impairment that the extra exogenous/latent variables may inflict on null hypothesis testing, we repeated the procedure not just under the same conditions as [19], but also over a broader range of data record lengths, K = {100, 200, 500, 1000, 2000, 5000, 10000}.

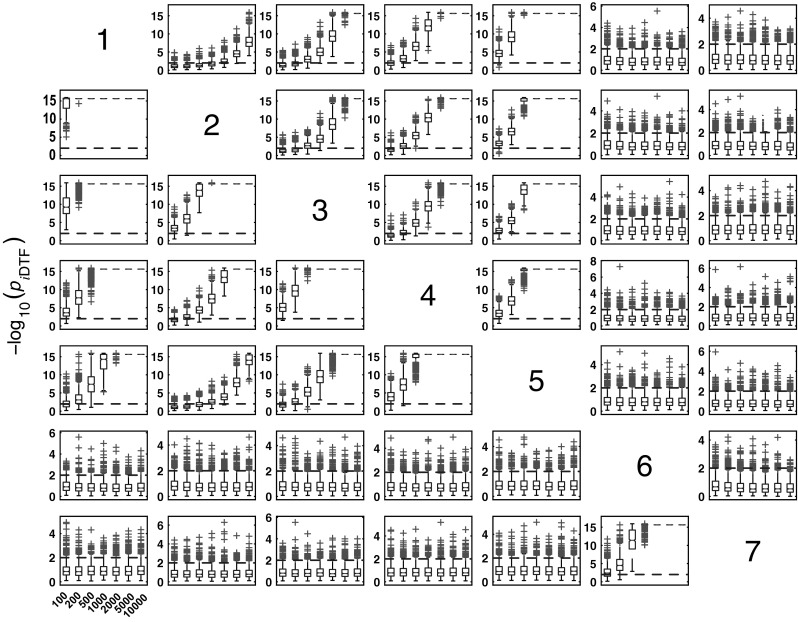

GCT performance in the presence of exogenous noise, Model 3

The GCT performance for Model 3 can be appreciated in Fig. 16. When compared with Model 2, GCT’s performance deteriorates in the presence of exogenous noises. Interestingly, its performance with respect to detecting existing connections increases with longer data records, while in the absence of connections, the FP rates increase sharply especially for the K = 10000 case. For K = 500, the overall FP rates are between 2.3 and 7.9 % with a median of 3.8 %. At , the latter rates grow to a range between 20.8 and 40.4 % with the median value of . FN rates are negligible.
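One generic way to see how apparent FP rates can grow with K, offered here as an illustration and not as the mechanism established in this study, is to suppose that an unmodelled common input induces a small, fixed spurious coupling. The standardized test statistic is then roughly Normal(√K·δ, 1) rather than Normal(0, 1), so a level-α test rejects more and more often as K grows. The minimal sketch below assumes this hypothetical normal approximation; δ (`effect`) and the function name are illustrative.

```python
from statistics import NormalDist


def rejection_probability(k, effect, alpha=0.01):
    """Rejection probability of a two-sided level-alpha z test.

    Hypothetical model: the standardized statistic is approximately
    Normal(sqrt(k) * effect, 1), where `effect` is a small fixed spurious
    coupling; effect = 0 recovers the nominal level alpha.
    """
    std = NormalDist()
    z = std.inv_cdf(1.0 - alpha / 2.0)
    shift = (k ** 0.5) * effect
    return (1.0 - std.cdf(z - shift)) + std.cdf(-z - shift)


for k in (500, 2000, 10000):
    print(k, round(rejection_probability(k, effect=0.02), 3))
```

The qualitative pattern, close to nominal at small K and increasingly inflated at large K, is what one would expect when, as noted in the Discussion, the model violates the assumptions behind the tests.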

Fig. 16.

GCT performance on Model 3 and K = {100, 200, 500, 1000, 2000, 5000, 10000} data record lengths

iPDC performance in the presence of exogenous noise

iPDC's performance in detecting connectivity is similar to GCT's (Figs. 16, 17). As noted before, iPDC tends to have higher FP rates than GCT, possibly because of the chosen frequency domain detection criterion, which takes a single frequency with a significant p value as indicative of a valid connection. Overall, FP rates range between 6.7 and 11.7 % (median ) at , increasing to the range (median ) at K = 10000.

Fig. 17.

iPDC performance on Model 3 and K = {100, 200, 500, 1000, 2000, 5000, 10000} data record lengths

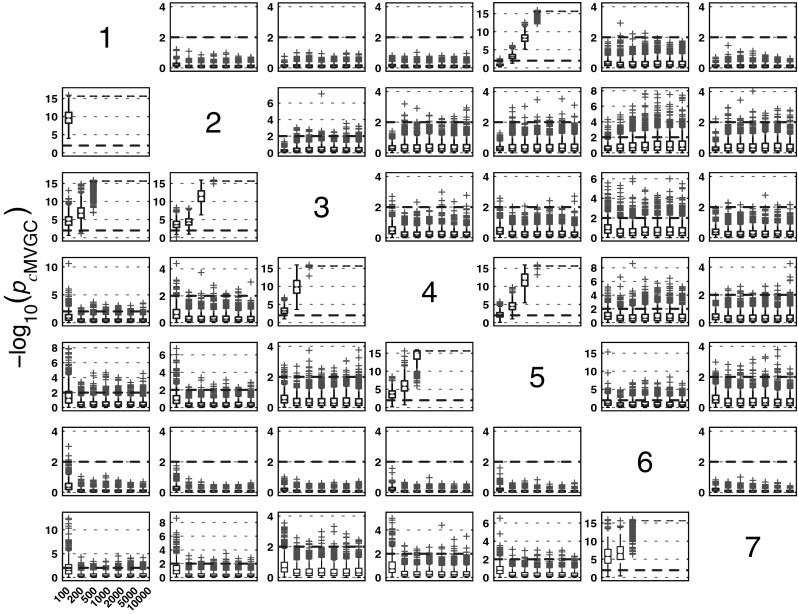

cMVGC performance for Model 3

Here (Fig. 18), the qualitative behaviour is the same as for the other estimators. However, as for Model 1, false decision rates are out of control: sometimes much below GCT's and sometimes well above them, irrespective of the corrections, which fail to restore the expected Neyman–Pearson behaviour. Again taking GCT as the reference, Fig. 19 shows that iPDC's results are similar to GCT's, with the same pattern of larger FP values, well above α = 1%. The corresponding comparison of cMVGC with GCT shows a bias towards lower cMVGC FPs (Fig. 20).

Fig. 18.

cMVGC performance on Model 3 and K = {100, 200, 500, 1000, 2000, 5000, 10000} data record lengths

Fig. 19.

Comparison of GCT and iPDC detection performance at time samples for Model 3

Fig. 20.

Comparison of GCT and cMVGC detection performance at time samples for Model 3

Discussion

This study presents simulation evidence on the performance of four statistical connectivity tests: two in the time domain (GCT and cMVGC) and two based on new frequency domain measures (iPDC and iDTF).

The reader should be reminded that the frequency domain tests, iDTF and iPDC, measure different aspects of connectivity and are not immediately comparable, as discussed at length in [17, 18]. This contrasts with GCT, iPDC and cMVGC, which are all geared towards describing the same aspect of connectivity, namely that between adjacent structures [17]. Among the tests in this latter class, GCT proved to be the most in accord with the expected Neyman–Pearson behaviour, in the sense that its observed FP rates agree with the preset value of α, which justifies its use as the reference in the trial-by-trial comparisons summarized herein.

Qualitatively, iPDC closely mirrors GCT behaviour and, predictably, produces higher FP rates as a consequence of how iPDC connectivity was detected, by deeming a single frequency above threshold sufficient for significance. Although the way iPDC is employed for testing could conceivably be improved, its use is recommended when frequency content is of physiological interest.

Added for comparison, cMVGC detection proved to be biased towards a reduction of the FP rates in many cases. By contrast, examination of its behaviour for other (available in more detail from our Web site) suggests that, for small , it tends to miss existing connections more often than the other methods.

Perhaps more striking and more important, however, in the sense of Neyman–Pearson detection with α compliance, is that test procedures are usually constructed to impart control over FP decisions, a condition that, according to the present observations, fails to be met by the cMVGC implementation from [9], which was used here without modification. It is also important to note that employing the decision corrections recommended by its authors [9] usually aggravates matters. It is this lack of compliance with Neyman–Pearson criteria that we termed anomalous. Whether this is due to a possible software glitch or reflects a more fundamental issue is unknown. In many instances, cMVGC produced fewer FPs, which is good in itself. This apparent quality, however, is counterbalanced by much worse performance for some links, as in Model 1, in sharp contrast to the other methods, whose results attain the prescribed α and are balanced across all connections to within the attainable accuracy of the Monte Carlo simulations.

Based on its good asymptotic control of FP observations, it is fair to suggest that, at least provisionally, GCT, as proposed by [2], be taken as a gold standard for detecting connectivity between adjacent structures and that iPDC and cMVGC should be used taking into account adequate forewarning of their present observed limitations.

The present Monte Carlo simulations showed good large-sample fit and robustness for Models 1 and 2. In the presence of large exogenous/latent variables (Model 3), we observed poor performance for large samples, possibly because MAR model estimation algorithms perform poorly under low signal-to-noise ratios, regardless of the statistical procedure (). In this regard, Model 3 deserves the special comment that its comparatively worse performance is not surprising since, strictly speaking, it violates the usual assumptions behind the development of all the detection procedures discussed herein.

Finally, we propose that the present methodology represents the seed of a potential tool for systematically comparing connectivity estimators. The reason for this is twofold: (a) the framework provides a standardized approach whereby comparisons can be made systematically, and (b) it may be used even in the absence of formally rigorous statistical criteria, i.e. even if only ad hoc decision rules are available, so it is not restricted to methods with theoretically well-established detection criteria. We plan to include bootstrap-based connectivity detection schemes in the same standardized framework for comparison purposes.

Acknowledgments

CNPq Grant 307163/2013-0 to L.A.B. and CNPq 309381/2012-6 and FAPESP 2014/12907-3 Grants to K.S. are also gratefully acknowledged, and the authors thank NAPNA - Núcleo de Neurociência Aplicada of the University of São Paulo. Part of this work was carried out during FAPESP Grant 2005/56464-9 (CInAPCe).

Biographies

Koichi Sameshima

is a graduate in Electrical Engineering and an M.D. from the University of São Paulo. He was trained in cognitive neuroscience, brain electrophysiology, and multivariate time series analysis at the University of São Paulo and the University of California at San Francisco. His research interests centre on neural plasticity, cognitive function, and information processing in the mammalian brain, studied through behavioural, electrophysiological, and computational neuroscience protocols. To functionally characterize collective multichannel neural activity and correlate it with animal or human behaviour, normal and pathological, he has also been seeking and developing robust and clinically useful methods and measures for brain dynamics staging, brain connectivity inference, etc.

Daniel Y. Takahashi

holds a B.Sc. in Applied and Computational Mathematics, an M.D., and a Ph.D. in Bioinformatics, all from the University of São Paulo. His main interest is to better understand how animal behaviours emerge from the interaction between brain, body, environment, and other animals. To achieve such understanding, he has been developing new methods for statistical inference of dynamic interactions and new animal models of social vocal communication.

Luiz A. Baccalá

after majoring in Electrical Engineering and Physics at the University of São Paulo (1983/1984), went on to obtain his M.Sc. at the same University (1991) by studying the time series evolution of bacterial resistance to antibiotics in a nosocomial environment. He has since been involved in statistical signal processing and analysis and obtained his Ph.D. from the University of Pennsylvania (1995) by proposing new statistical methods of communication channel identification and equalization. Since his return to his alma mater, he has taught courses in applied stochastic processes and advanced graduate-level statistical signal processing courses that include wavelet analysis and spectral estimation. His current research interests focus on multivariate time series methods for neural connectivity inference and on problems of inverse source determination using sensor arrays, including fMRI imaging and multi-electrode EEG processing.

References

- 1. Baccalá LA, Sameshima K (2014) Brain connectivity. In: Sameshima K, Baccalá LA (eds) Methods in brain connectivity inference through multivariate time series analysis. CRC Press, Boca Raton, pp 1–9

- 2. Lütkepohl H. New introduction to multiple time series analysis. New York: Springer; 2005.

- 3. Baccalá L, De Brito C, Takahashi D, Sameshima K. Unified asymptotic theory for all partial directed coherence forms. Philos Trans R Soc A. 2013;371:1–13. doi: 10.1098/rsta.2012.0158.

- 4. Baccalá LA, Takahashi DY, Sameshima K (2015) Consolidating a link centered neural connectivity framework with directed transfer function asymptotic. arXiv: q-bio.nc/1166340

- 5. Takahashi D, Baccalá L, Sameshima K (2010) Information theoretic interpretation of frequency domain connectivity measures. Biol Cybern 103:463–469

- 6. Baccalá LA, Sameshima K. Partial directed coherence: a new concept in neural structure determination. Biol Cybern. 2001;84:463–474. doi: 10.1007/PL00007990.

- 7. Kamiński M, Blinowska KJ. A new method of the description of the information flow in brain structures. Biol Cybern. 1991;65:203–210. doi: 10.1007/BF00198091.

- 8. Sameshima K, Takahashi DY, Baccalá LA. On the statistical performance of connectivity estimators in the frequency domain. In: Slezak D, Tan AH, Peters JF, Schwabe L, editors. Lecture Notes in Computer Science. Heidelberg: Springer; 2014. pp. 412–423.

- 9. Barnett L, Seth AK. The MVGC multivariate Granger causality toolbox: a new approach to Granger-causal inference. J Neurosci Methods. 2014;223:50–68. doi: 10.1016/j.jneumeth.2013.10.018.

- 10. Haufe S, Nikulin VV, Müller KR, Nolte G. A critical assessment of connectivity measures for EEG data: a simulation study. NeuroImage. 2013;64:120–133. doi: 10.1016/j.neuroimage.2012.09.036.

- 11. Wu MH, Frye RE, Zouridakis G. A comparison of multivariate causality based measures of effective connectivity. Comput Biol Med. 2011;41:1132–1141. doi: 10.1016/j.compbiomed.2011.06.007.

- 12. Florin E, Gross J, Pfeifer J, Fink GR, Timmermann L. Reliability of multivariate causality measures for neural data. J Neurosci Methods. 2011;198:344–358. doi: 10.1016/j.jneumeth.2011.04.005.

- 13. Fasoula A, Attal Y, Schwartz D. Comparative performance evaluation of data-driven causality measures applied to brain networks. J Neurosci Methods. 2013;215:170–189. doi: 10.1016/j.jneumeth.2013.02.021.

- 14. Astolfi L, Cincotti F, Mattia D, Marciani MG, Baccala LA, De Vico Fallani F, Salinari S, Ursino M, Zavaglia M, Ding L, Edgar JC, Miller GA, He B, Babiloni F. Comparison of different cortical connectivity estimators for high-resolution EEG recordings. Hum Brain Mapp. 2007;28:143–157. doi: 10.1002/hbm.20263.

- 15. Marple SL Jr (1987) Digital spectral analysis: with applications. Prentice Hall, Englewood Cliffs

- 16. Baccalá LA, Sameshima K. Overcoming the limitations of correlation analysis for many simultaneously processed neural structures. Prog Brain Res Adv Neural Popul Coding. 2001;130:33–47. doi: 10.1016/S0079-6123(01)30004-3.

- 17. Baccalá LA, Sameshima K. Causality and Influentiability: The need for distinct neural connectivity concepts. In: Slezak D, Tan AH, Peters JF, Schwabe L, editors. Lecture Notes in Computer Science. Heidelberg: Springer; 2014. pp. 424–435.

- 18. Baccalá LA, Sameshima K (2014) Multivariate time series brain connectivity: a sum up. In: Sameshima K, Baccalá LA (eds) Methods in brain connectivity inference through multivariate time series analysis. CRC Press, Boca Raton, pp 245–251

- 19. Guo S, Wu J, Ding M, Feng J. Uncovering interactions in the frequency domain. PLoS Comput Biol. 2008;4:e1000087. doi: 10.1371/journal.pcbi.1000087.