Abstract

This paper presents a systematic analysis, from a behavior analytic perspective, of procedures termed feedback. Although feedback procedures are widely reported in the discipline of psychology, including in the field of behavior analysis, feedback is neither consistently defined nor analyzed. Feedback is frequently treated as a principle of behavior; however, its effects are rarely analyzed in terms of well-established principles of learning and behavior analysis. On the assumption that the effectiveness of feedback procedures would be enhanced if their use were informed by these principles, we sought to provide a conceptually systematic account of feedback effects in terms of operant conditioning principles. In the first comprehensive review of this type, we compare feedback procedures with well-defined operant procedures. We also compare the functional relations that have been observed between parameters of consequence delivery and behavior under both feedback and operant procedures. The similarities observed in these analyses suggest that processes revealed in operant conditioning procedures are sufficient to explain the phenomena observed in studies on feedback.

Keywords: Feedback, Behavioral processes, Operant conditioning, Reinforcement, Punishment, Contingency

Feedback is a term commonly used within the discipline of psychology; however, it is neither consistently defined nor analyzed. In his influential commentary, Peterson (1982) pointed out numerous shortcomings in the use of feedback in the behavior analytic literature, including his observation that it is sometimes “treated as a principle of behavior” (p. 101), yet is no better than “professional slang” (p. 102). Weatherly and Malott (2008) observed that control of feedback effects by associative learning processes is often assumed, rather than determined, further pointing out that this occurs even when the parameters of feedback are unfavorable for associative learning. Similarly, Catania (1998) noted that while it is often presumed that feedback functions as a reinforcer (or punisher), such assumptions may be misleading. Imprecision and inconsistency in defining feedback at both the stimulus and procedural levels interfere with analyzing the specific function(s) that feedback serves. It has been suggested that feedback may function similarly to the following: (a) a reinforcer or punisher (Carpenter and Vul 2011; Cook and Dixon 2005; Slowiak, Dickinson, and Huitema 2011; Sulzer-Azaroff and Mayer 1991), (b) an instruction (Catania 1998; Hirst et al. 2013), (c) a guide (Salmoni et al. 1984), (d) a discriminative stimulus (Duncan and Bruwelheide 1985-1986; Roscoe et al. 2006; Sulzer-Azaroff and Mayer 1991), (e) a rule (Haas and Hayes 2006; Prue and Fairbank 1981; Ribes and Rodriguez 2001), (f) a conditioned reinforcer (Hayes et al. 1991; Kazdin 1989), and (g) a motivational (Johnson 2013; Salmoni et al. 1984) or establishing stimulus (Duncan and Bruwelheide 1985-1986).

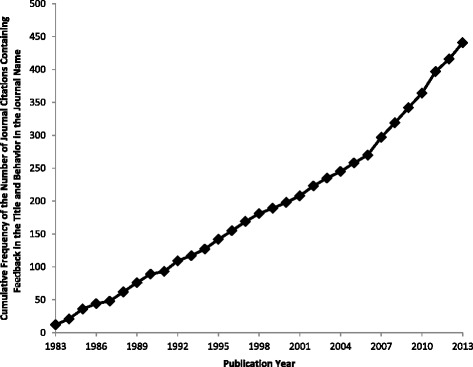

Peterson’s (1982) suggestion that the term be eradicated apparently was not well received. Between 1983 and 2013, following publication of that paper, 441 articles with feedback in the title and behavior in the journal name appeared in over 50 journals. Publication frequency was obtained from a search conducted on PsycINFO (July 29, 2014) using the search parameters listed in Table 1. In these studies, feedback was often implemented in the following areas: (a) behavioral skills training (to improve teaching, academic, and a variety of motor skills), (b) health-related behavior (increasing exercise time and habit cessation), and (c) organizational behavior management (to improve customer service, increase productivity, decrease absenteeism, and increase safe work habits). Table 1 lists the journals that collectively accounted for greater than 80 % (356) of the 441 publications identified in the search. The three journals that focus on organizational behavior collectively had the highest frequencies of articles, with the Journal of Organizational Behavior Management yielding the highest count of all journals searched. High frequencies were also observed for the Journal of Motor Behavior and the Journal of Applied Behavior Analysis. Cumulative frequency per year is plotted in Fig. 1. This function suggests that the rate of publication increased after 2006.

Table 1.

Citations by journal

| Journal title | Number of citations |
|---|---|
| Journal of Organizational Behavior Management | 82 |
| Journal of Motor Behavior | 56 |
| Organizational Behavior and Human Decision Processes | 50 |
| Journal of Applied Behavior Analysis | 42 |
| Computers in Human Behavior | 21 |
| Behavior Modification | 18 |
| Journal of Organizational Behavior (Journal of Occupational Behavior) | 16 |
| Behavioral Therapy | 15 |
| Games and Economic Behavior | 12 |
| Journal of Behavior Therapy and Experimental Psychiatry | 12 |
| Social Behavior and Personality | 12 |
| Law and Human Behavior | 11 |
| Small Group Research (Small Group Behavior) | 9 |

Frequency of citation was obtained from a search conducted on PsycINFO (July 29, 2014) using the following parameters: the term feedback appeared in the title and the word behavior appeared in the publication title; the source was limited to journal articles that were published between 1983 and 2013. Studies that did not include human participants were excluded. A total of 441 citations were identified, representing over 50 scholarly journals. Table 1 lists citation frequencies for the journals that collectively accounted for greater than 80 %, that is, 356 of the 441 publications identified in the search.

Fig. 1.

Cumulative frequency per year of journal articles containing the word feedback in the title, appearing in journals with behavior in the journal name

While admitting the wisdom of Peterson’s (1982) suggestion that behavior analysts avoid references to feedback, Duncan and Bruwelheide (1985-1986) point out that this strategy risks limiting the influence of behavior analytic scholarship on the research and practice of those who take a nonbehavior analytic approach to problems in business and industry. As an alternative, Duncan and Bruwelheide urged “a finer-grained analysis of feedback, focused on behavior functions” (p. 112). The present report adopts this recommendation. In the first comprehensive review of this type, we compare feedback at a procedural level with well-defined operant procedures, noting overlap and dissimilarities. We also compare functional relations between parameters of consequence delivery and behavior under both feedback and operant procedures. We reason that convergence of these functions will support the conceptually systematic assertion that the processes involved in operant conditioning are sufficient to account for the phenomena revealed in studies of feedback. As pointed out by Alvero et al. (2001) and others (e.g., Duncan and Bruwelheide 1985-1986; Johnson 2013; Normand et al. 1999), to the extent that behavior control by feedback can be attributed to basic learning processes, its application can be informed by the vast literature on variables that influence the effects of operant procedures.

In the absence of a consensus on defining feedback (Houmanfar 2013), and in the tradition of Skinner’s analysis of psychological terms (Skinner 1945; also see Schlinger 2013), an operational definition was adopted for this report in order to describe the minimal stimulus conditions likely to occasion emission of feedback as an utterance or as a written word. Although the objective was a definition that is applicable to a broad range of procedures, the manner in which both feedback and operant conditioning procedures could be programmed is potentially limitless; therefore, the present formulation focuses on that which is typical, as opposed to that which is conceivable. For this report, feedback is defined as presentation of an exteroceptive stimulus whose parameters vary as a function of parameters of antecedent responding. The feedback stimulus may vary along one or more dimensions with any number of parameters of responding—both quantitative and qualitative. It may describe characteristics of the immediately prior response or of a predetermined target response (goal) and possibly the relation between the two (i.e., whether they are members of the same response class or, if not, how they differ). It may also describe the contingency between responding and the consequences of responding. The feedback stimulus may be presented in a number of forms or modalities (e.g., verbal statements, tones, images) and may vary with a single response dimension or a combination of response dimensions. While the foregoing definition and specifications are based on those of previous authors, collectively, they are more comprehensive than those identified in the literature. The intention was to provide a framework within which the planned analysis could be conducted systematically and with precision for a variety of feedback procedures.

A Comparison of Feedback with Reinforcement and Punishment Procedures

In this section, procedures labeled feedback are compared at a procedural level with common operant conditioning procedures, and functions showing behavior change as a function of procedural parameters under feedback and operant procedures are examined. A number of authors have pointed out that feedback procedures are implemented in a manner that is analogous to reinforcement (or punishment) procedures (e.g., Duncan and Bruwelheide 1985-1986; Peterson 1982). Most notably, under both feedback and operant procedures, consequences of responding are contingent on properties of responding. Likely for this reason, feedback is often assumed to function as a reinforcer or punisher (Weatherly and Malott 2008; Miltenberger 2012), yet these behavior control processes are rarely established independently, as discussed in the following section.

Unlike feedback stimuli, reinforcers and punishers are defined and classified by their effects on parameters of the response class upon which they are contingent, including probability, rate, magnitude, and latency. Reinforcement is defined as an increase in response strength (increased rate, probability, or magnitude or decreases in latency of the response), whereas changes in the opposite direction are consistent with a stimulus functioning as a punisher. Both feedback and operant procedures are classified as positive or negative; however, these terms are used differently when referring to feedback versus to operant procedures. For reinforcement and punishment procedures, the terms refer to the sign (positive or negative) of the contingency between responding and consequences, whereas for feedback procedures, positive and negative usually refer to the content of the feedback stimulus (affirmative or corrective, respectively), not to the contingency between responding and the presentation of feedback. Feedback procedures are most often programmed analogously to positive reinforcement or positive punishment procedures in that feedback stimuli are typically presented, rather than removed, contingent upon responding.

In the following sections, we examine the overlap of feedback procedures with operant conditioning, focusing on the following: (a) identification of the a priori behavior-altering effects of the consequence, (b) parameters of consequence delivery, and (c) precision of the relation between target responding and its consequences.

A Priori Establishment of Behavior-Altering Effects of Consequential Stimuli

Operant and feedback procedures can be compared in terms of whether behavioral functions are identified prior to their implementation. Traditions differ for the two types of procedures, possibly owing to differing epistemologies. In the feedback literature, a control system model is often used to account for the behavior-altering effects of feedback. In general, control system models hold that feedback stimuli provide information about current performance that is compared by the learner with preestablished goals or other standards for performance. Discrepancies result in adjustment of performance or goals (for a description of this model, see Duncan and Bruwelheide 1985-1986). Under control system models, a priori establishment of feedback stimulus effectiveness would amount to determining that stimuli accurately indicate the relation between current behavior and performance goals or standards.

In the operant tradition, identification of effective response consequences is guided by the principle of transituationality (Meehl 1950). When a stimulus is determined to function as a reinforcer or punisher for at least one response prior to implementation of a behavior change procedure, its effectiveness in the latter procedure can be attributed to its previously identified reinforcer or punisher function. When such attribution is possible, the condition of transituationality has been achieved. To help achieve transituationality, and also enhance the efficacy of operant procedures, many researchers use stimulus preference assessments to identify potentially effective stimuli (e.g., Call et al. 2012; DeLeon et al. 2001; Kelly et al. 2014; Lee et al. 2010; Reed et al. 2009; Roane 2008; Vollmer et al. 2001). In these procedures, stimuli are presented individually or in pairs, with the relative proportion of trials on which each stimulus is approached taken as an index of preference for that stimulus (Fisher et al. 1992). Fisher et al. and others (e.g., Lee et al. 2010) have shown that preference assessments yield strong predictions regarding reinforcer efficacy. Graff and Karsten (2012) surveyed practitioners who serve individuals with developmental disabilities. Most behavior analysts (approximately 89 %) who responded reported using some form of stimulus preference assessment to determine effective consequences. This literature suggests that a priori establishment of potential reinforcer or punisher effectiveness of consequent stimuli is common among behavior analysts.
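As a minimal sketch of the preference index described above, the following Python function computes, for each stimulus, the proportion of trials on which it was approached out of the trials on which it was presented. The function name `approach_proportions`, the trial format, and the stimulus labels are hypothetical and chosen only for illustration; they are not taken from Fisher et al. (1992) or from any cited assessment protocol.

```python
from collections import Counter


def approach_proportions(trials):
    """Return the approach proportion for each stimulus.

    `trials` is a list of (stimuli, choice) pairs, where `stimuli` is a
    tuple of the stimuli presented on that trial (one item for single
    presentation, two for paired presentation) and `choice` is the
    stimulus approached, or None if no stimulus was approached.
    This trial format is an assumption made for illustration.
    """
    presented = Counter()
    approached = Counter()
    for stimuli, choice in trials:
        presented.update(stimuli)      # count every presentation
        if choice is not None:
            approached[choice] += 1    # count the approached stimulus
    # Proportion of presentations on which each stimulus was approached.
    return {s: approached[s] / presented[s] for s in presented}


# Hypothetical paired-presentation data with three stimuli.
example_trials = [
    (("A", "B"), "A"),
    (("A", "C"), "A"),
    (("B", "C"), "C"),
    (("A", "B"), "B"),
]
proportions = approach_proportions(example_trials)
ranking = sorted(proportions, key=proportions.get, reverse=True)
```

In this hypothetical data set, the stimulus with the highest approach proportion would be the first candidate to test as a reinforcer; the index itself predicts, but does not establish, reinforcer efficacy.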

The reinforcer or punisher functions of feedback stimuli generally are not established prior to their implementation (Duncan and Bruwelheide 1985-1986), thereby compromising analysis of these procedures in terms of reinforcement or punishment. Nonetheless, reinforcement is often posited as a basis for increases in responding when feedback is contingent upon the measured response, particularly when procedural parameters, such as timing of the feedback stimulus, match those of typical reinforcement procedures (e.g., Cook and Dixon 2005). The proposition that effects of feedback are reducible to reinforcement has been examined in the literature. For example, in a recent study, Johnson (2013) performed a component analysis in which effects of objective feedback—description of the previous day’s performance—and evaluative feedback—statements consistent with excellent, good, average, or poor performance on the previous day—were dissociated. Both types of feedback were associated with higher levels of performance in comparison to a no-feedback condition; however, when the two types were combined, performance was considerably higher than when either type was presented separately. The author reasoned that the evaluative feedback may function as an establishing or abolishing operation, controlling the effectiveness of objective feedback as a reinforcer or punisher. However, without a priori assessment of the behavior-altering function of the consequences presented in this and other studies of feedback, it cannot be determined if behavior change was mediated by reinforcement (or punishment) or by other processes controlled by response-contingent feedback (e.g., instruction, establishing or abolishing operations, discriminative stimulus control, or elicitation).

In the absence of direct evidence that feedback functions as a reinforcer, a review by Alvero et al. (2001) provides some indirect evidence. Those authors distinguished between feedback-alone procedures—the consequences of responding consisted only of information about the quality or quantity of prior responding—and procedures that included feedback plus consequences, where consequences could include such events as praise, money, and time off from work, that is, presumed reinforcers. In the literature reviewed by Alvero et al., consequences were included with the feedback stimulus in 34 of 64 applications; however, the authors did not indicate whether behavior-altering effects of the consequences were assessed independently of the feedback procedure. Interestingly, Alvero et al. found that feedback-alone procedures were consistently effective in 47 % of the 68 applications, while procedures that combined feedback with consequences (presumed reinforcers) were consistently effective in 58 % of the applications. In an earlier review of 126 experiments, Balcazar et al. (1985-1986) reported values of 28 and 52 % for feedback alone and feedback with consequences, respectively. Whether the positive effects of feedback-alone procedures can be attributed to reinforcement remains an open question.

Even if feedback stimuli do not include previously established reinforcers or punishers, it is likely that feedback stimuli function as some type of conditioned reinforcer or punisher (e.g., Duncan and Bruwelheide 1985-1986; Jerome and Sturmey 2014; Johnson and Dickinson 2012; Kazdin 1989; Peterson 1982). Conditioned reinforcers or punishers are established by means of a stimulus-stimulus contingency between a stimulus having no initial reinforcing or punishing effects and a primary reinforcer or punisher, or with an established conditioned reinforcer or punisher. Under feedback procedures, these behavior-controlling functions of feedback stimuli (e.g., “Good job” or “Your output is low”) may be present prior to introduction of training (Hayes et al. 1991; Roscoe et al. 2006), or they may be acquired during the feedback procedure, provided that a stimulus-stimulus contingency is arranged with an established reinforcer (or punisher) during the procedure.

As Alvero et al. (2001) noted, in many cases, when feedback stimuli are used, they are paired with presumed or established reinforcers or punishers during training. An experiment by Hayes et al. (1991) demonstrated an untrained behavior control effect of positive and negative verbal feedback stimuli (“correct” and “incorrect”) for correct and incorrect sorting responses, respectively, and transfer of that control to the arbitrary stimuli (i.e., stimuli having no measurable response-altering effect) with which the feedback stimuli were differentially paired during training on the sorting task. In contrast to the positive findings of Hayes et al., Slowiak et al. (2011) reported mixed results in a study of acquisition of conditioned reinforcer effectiveness of feedback stimuli that were correlated with monetary payments. In an interesting design, the authors assessed reinforcer effectiveness of feedback stimuli for two different responses. In the study, participants in each of two experimental conditions performed a simulated data entry task during which they could self-deliver objective feedback (statements describing the cumulative number of responses completed during the session, the number of correct responses, and mean rate of responding during the session). For one group, pay was presented contingent upon data entry performance (termed incentive pay); for these subjects, feedback therefore corresponded to amount of money earned, creating an opportunity for feedback to acquire a conditioned reinforcer function. For the other group, pay was not contingent upon performance. One measure of conditioned reinforcer effectiveness of the feedback stimuli was the rate at which participants self-delivered feedback by executing a computer keyboard response.
Participants in both groups self-delivered feedback frequently; however, this did not vary between conditions, suggesting that correspondence of feedback with pay did not differentially increase the reinforcing value of feedback (as a reinforcer for solicitation of feedback). On the other hand, level of performance on the data entry task was higher for participants receiving incentive pay. Conceivably, a differential conditioned reinforcing effect of feedback for data entry (but not for self-delivered feedback) was acquired as a function of correspondence between feedback and pay; alternatively, the payment contingency alone controlled the differences in data entry performance.

In summary, there are few direct data regarding the a priori effectiveness of feedback stimuli, though two literature reviews (Alvero et al. 2001; Balcazar et al. 1985-1986) indicate that feedback-alone procedures were ineffective in a rather large percentage of studies surveyed. Nonetheless, because the parameters of many effective feedback procedures overlap with those of operant procedures, it is likely that in some cases, feedback stimuli are functional reinforcers or punishers prior to training or become so as a result of stimulus-stimulus contingencies arranged between feedback stimuli and reinforcers or punishers under feedback procedures. These observations support design of feedback procedures that include the following: (1) a priori establishment of reinforcing or punishing effectiveness of feedback stimuli and (2) combining feedback with preestablished reinforcing or punishing stimuli.

Parameters of Consequence Delivery

Under operant and feedback procedures, presentation of reinforcement or punishment typically is controlled by a contingency (positive or negative) between responding and consequences. The contingency specifies the characteristics of responses (criterion or target responses) that are eligible for consequence delivery, a schedule of consequence delivery that stipulates the conditions under which criterion responding will occasion a consequence (reinforcer presentation or omission), characteristics of consequence(s) to be delivered, and precision of the relation between responding and consequences. In the following sections, effects of three families of parameters of consequence delivery for feedback and operant conditioning are discussed: (a) interval between target responding and consequence delivery, (b) probability of consequence delivery, and (c) precision of the relation between responding and consequences. As will be shown, the range of these parameters differs, in some cases markedly, between the two types of procedures; however, the functions relating these parameters to behavior change show considerable similarity between feedback and operant procedures.

Delay of Consequences for Responding

A thoroughly investigated parameter of both feedback and operant conditioning procedures is the response-consequence interval (delay to reinforcement, punishment, or feedback). In reinforcement and punishment procedures, the magnitude of operant responding is strongly and inversely related to delay, with intervals as short as 0.5 s causing deleterious effects (e.g., Grice 1948); however, this effect is mitigated if an exteroceptive stimulus is presented during the delay (e.g., Lattal 1984; Richards 1981; Schaal and Branch 1988). A different picture emerges for feedback procedures, in which the impact of delay of feedback on performance is inconsistent. In some cases, longer delays to feedback are associated with superior performance (e.g., Swinnen et al. 1990a, b). Maddox et al. (2003) found either no effect (experiment 1) or a deleterious effect of delay (experiment 2) on category learning tasks. In contrast, Northcraft et al. (2011) found that immediate feedback (versus feedback presented according to a fixed-time 6-min schedule) was associated with superior performance (number of units correctly completed) on a simulated college class scheduling task. In the area of semantic learning, results are mixed, with a large percentage of studies showing an advantage of delayed over immediate feedback (e.g., Smith and Kimball 2010). Salmoni et al. (1984), in a review of over 250 research reports on feedback and motor learning, pointed out that delay covaried with intertrial interval, the interval between feedback and the next opportunity to respond, or both. After accounting for these confounds, the authors concluded that there is little consistent evidence for an effect of feedback delay on acquisition or on post-acquisition performance. Metcalfe et al. (2009) targeted an analogous situation in the area of recall of educationally relevant material, where a test-retest paradigm was used. 
They report that in a large proportion of studies, manipulation of the interval between the response (first test) and feedback covaried with the interval between feedback and the next opportunity to respond (post-test). Under these conditions, the delay condition, in comparison with the immediate condition, provides subjects with the correct response at a shorter interval from the next opportunity to respond—a condition that likely would favor recall of the correct response, regardless of the delay between the initial response and presentation of feedback. Similarly to Salmoni et al., Metcalfe et al. pointed out that evidence of superiority of delayed versus immediate feedback can be determined only when the interval between feedback and the next opportunity to respond is controlled.

In summary, a consistent inverse relation between delay and learning exists for operant procedures, while the relation between delay and learning is inconsistent for feedback procedures and is complicated by vast procedural differences among studies that have investigated this parameter (cf. Carpenter and Vul 2011). Evidence of differential effects of delay under feedback versus operant procedures may signal a functional difference between the two types of procedures; however, the data admit of other interpretations, including learning-based accounts of delay effects that are applicable to both feedback and operant procedures (see Lattal 2010, for a comprehensive overview). Two reports have sought to account for the inconsistent effects of delay of feedback, and for the discrepancy between studies with humans versus animals, in terms of a single underlying mechanism. Costa and Boakes (2011) and Lieberman et al. (2008) based their studies on a version of Revusky’s (1971) concurrent interference theory. Revusky argued that deleterious effects of delay between responding and consequences can be attributed to incursion of other events, including the subject’s own behavior, during the delay interval. When consequences are immediate, the strengthening effects of reinforcement will be accrued primarily by the criterion response. When a delay intervenes between the criterion response and a consequence, responses subsequent to the criterion response may achieve greater contiguity with the consequence, possibly leading to strengthening of competing or irrelevant responses.

Although numerous reports point to differential parametric effects of consequence delay between feedback and operant procedures, the consistency of this generalization is challenged by reviews that have taken into account effects of extraneous and confounded procedural variables. Accordingly, comparative data provide insufficient basis for analyzing performance under these two procedures according to differing processes. On the contrary, there exists credible evidence that the functions relating response-consequence delay and behavior change under operant and feedback procedures are similar and, therefore, attributable to common behavioral processes. Correspondingly, similar procedures may be used to mitigate the effects of response-consequence delay for both feedback and reinforcement or punishment preparations (see, for example, Dickinson et al. 1996; Lurie and Swaminathan 2009; Metcalfe et al. 2009; Reeve et al. 1993; Stromer et al. 2000).

Probability of Consequence Delivery

Another important parametric difference between operant conditioning and feedback procedures is response-consequence probability, or p(consequence|response). Typically, in operant procedures, reinforcers are programmed with a probability of less than 1.00 following a defined target response, while for punishment procedures, a probability of 1.00 (a continuous schedule) may be used (Mazur 2006). For both reinforcement and punishment procedures, the probability of a consequence for all nontarget responses is zero. In contrast, under feedback procedures, in many instances, some form of feedback stimulus is presented as a consequence of all responses, both criterial and noncriterial (although see, for example, Maes 2003; and Murch 1969). As a result, suppression of noncriterial responding is accomplished differently under the two types of procedures: operant procedures often rely on extinction, whereas feedback procedures rely on negative feedback. Despite these systematic differences between feedback and operant procedures, the relation between behavior change and p(consequence|response) is similar for operant and feedback procedures.

It might be anticipated that speed of acquisition of criterion performance would covary with p(consequence|response), given that higher values are associated with a greater frequency of opportunities to learn. This relationship is well documented for both operant conditioning and feedback procedures; however, evidence indicates that when acquisition under operant (e.g., Williams 1989) and feedback procedures (Salmoni et al. 1984; however, see Winstein and Schmidt 1990) is expressed as a function of the number of obtained reinforcers, it does not vary with probability of reinforcement. For example, Williams showed that rats’ discrimination performance improved more rapidly as a function of number of trials when each correct response was reinforced than when the probability of reinforcement was 0.50. However, when discrimination performance was plotted as a function of number of reinforced trials, performance did not vary with probability of reinforcement. Perhaps more important is the observation that for both feedback and operant procedures, learning (defined as post-acquisition performance when consequence presentation is thinned or discontinued) is inversely related to p(consequence|response) during acquisition (Maas et al. 2008; Mackintosh 1974; Salmoni et al. 1984; Winstein and Schmidt 1990).
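The distinction between acquisition per trial and acquisition per obtained reinforcer can be illustrated with a small sketch. It assumes, purely for illustration, that every trial contains a criterion response, that the expected number of reinforcers after n trials is n × p(consequence|response), and that performance follows a hypothetical exponential learning curve driven only by the number of reinforcers. None of these assumptions, the function names, or the `rate` value come from Williams (1989) or any other cited study.

```python
import math


def expected_reinforcers(n_trials, p_consequence):
    """Expected number of reinforcers after n_trials criterion responses,
    each reinforced with probability p_consequence (illustrative model)."""
    return n_trials * p_consequence


def hypothetical_performance(n_reinforcers, rate=0.05):
    """Hypothetical learning curve: performance approaches 1.0 as the
    number of obtained reinforcers grows. The exponential form and the
    rate value are assumptions, not empirical estimates."""
    return 1.0 - math.exp(-rate * n_reinforcers)


# Plotted against trials, continuous reinforcement (p = 1.0) looks faster.
perf_p100_at_50_trials = hypothetical_performance(expected_reinforcers(50, 1.0))
perf_p050_at_50_trials = hypothetical_performance(expected_reinforcers(50, 0.5))

# Plotted against obtained reinforcers, the two conditions coincide:
# 100 trials at p = 0.5 yield the same expected reinforcer count as
# 50 trials at p = 1.0.
perf_p050_at_100_trials = hypothetical_performance(expected_reinforcers(100, 0.5))
```

Under these assumptions, the per-trial advantage of continuous reinforcement disappears once performance is indexed by reinforcers rather than trials, which is the pattern the paragraph above attributes to the acquisition data.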

The foregoing observations are comparable to the familiar partial reinforcement extinction effect (PREE) observed in operant conditioning preparations, where responding trained with p(reinforcement|response) <1.0 is associated with greater resistance to extinction, in comparison to training with continuous reinforcement (Mackintosh 1974; Lerman and Iwata 1996). Capaldi (1967) proposed that the PREE is a generalization decrement phenomenon where resistance to extinction of conditioned responding is a function of the extent to which the contexts of training and extinction differ. When probability of response consequence is low during training, context, defined in terms of rate of consequence presentation, is more similar between training and extinction phases in comparison to a condition with a high probability of consequence presentation during training (Mackintosh 1974; Shull and Grimes 2006). Salmoni et al. (1984) presented a generalization-based account for the effects of partial feedback, suggesting that insertion of blank trials (trials without feedback) promotes acquisition of control by task-related stimuli (in addition to those accompanying the programmed feedback events), such as proprioceptive stimuli arising from responding (response-produced feedback). A related argument was made by Little and Lewandowsky (2009) in the context of category learning tasks. Those authors proposed that continuous feedback is likely to promote selective attention to the relevant cues that distinguish categories, whereas probabilistic feedback “is likely to broaden people’s attention profile” (p. 1042). Data supporting this theory were obtained: participants receiving feedback with p < 1.0, versus continuous feedback, were more likely to learn relations between irrelevant (nondiagnostic) cues in a category, as measured during transfer testing (without feedback) following training.

In summary, there is convergence from a broad range of procedures and target responses regarding effects of p(consequence|response) under feedback and operant procedures. For both types of procedures, learning is improved by implementing partial versus continuous schedules of consequence delivery during training. Although speed of acquisition tends to vary directly with p(consequence|response) for both operant and feedback procedures, this effect is minimized when acquisition data are normalized by number of consequence presentations. A conceptually systematic analysis of these phenomena in terms of basic learning principles (Capaldi 1967; Salmoni et al. 1984) effectively accounts for the effects of p(reinforcement|response) under both operant and feedback procedures. In applied settings, decisions regarding probability of consequence delivery may be governed by factors other than measures of learning, such as a goal of maintaining on-task behavior; however, the data support implementation of partial versus continuous schedules of feedback or reinforcer delivery.
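The normalization by number of consequence presentations can be illustrated with a minimal Python sketch. The function name and the six-trial record are hypothetical and are not data from any study cited here; the sketch only shows how a per-trial record might be re-indexed so that performance is read against cumulative consequences rather than cumulative trials.

```python
from itertools import accumulate


def reindex_by_consequences(correct, consequence):
    """Return (cumulative consequences, cumulative correct) pairs,
    one pair for each trial on which a consequence was delivered."""
    if len(correct) != len(consequence):
        raise ValueError("records must have one entry per trial")
    cum_correct = list(accumulate(int(c) for c in correct))
    cum_conseq = list(accumulate(int(k) for k in consequence))
    return [
        (cum_conseq[i], cum_correct[i])
        for i, delivered in enumerate(consequence)
        if delivered
    ]


# Hypothetical record: 6 trials, consequence delivered on trials 1, 3, and 6.
correct = [1, 0, 1, 1, 0, 1]
consequence = [1, 0, 1, 0, 0, 1]
points = reindex_by_consequences(correct, consequence)
```

Plotting the second element of each pair against the first, separately for each value of p(consequence|response), would give the kind of normalized comparison described above.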

Precision of the Relation Between Responding and Consequences

A multidimensional parameter of feedback and operant procedures can be characterized as precision of the programmed relation between parameters of responding and parameters of the resulting consequences. Although the term precision is intuitively meaningful, we believe that research and practice involving feedback, and operant conditioning for that matter, would benefit by unpacking the term as it applies to different manipulations. We consider precision at two levels—descriptive and quantitative (statistical). At a descriptive level, precision is determined by the conventions for defining target and nontarget responding and by the parameters of consequences arranged for both types of responding. For example, in simple operant procedures, a single response class is defined (e.g., lever press) and a single type and level of consequence (e.g., standard food pellet) is presented response-contingently only for in-class responses, resulting in a narrow range of variance between parameters of responding and consequences (low precision). Conversely, in many feedback procedures, graded response criteria are defined and responses at each level of the gradient occasion some form of feedback; feedback often is free to vary across a range of values. These practices arrange high precision in the relation between responding and consequences. Precision can also be specified in terms of the statistical relation between responding and consequences (Hammond 1980). This measure can be derived from the conditional relations arranged between a target response and a given consequence. For example, if correct serves as a feedback stimulus, the statistical relation (contingency) is based on the probability of correct given a response [p(correct|response)] and the probability of correct given no response [p(correct|no response)]. The difference between these two conditional probabilities specifies the statistical relation between responding and the consequence; it ranges from −1.0 to +1.0. 
A value of zero indicates absence of a relationship; −1.0 describes a situation where responding and consequence are perfectly negatively related (a consequence is delivered only in the absence of a target response), and +1.0 describes the typical relation where the consequence is delivered only when a target response has been emitted.
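As a worked illustration, the statistical relation described above can be computed from four event counts. The following Python sketch is a minimal example under our own assumptions: the function name and argument names are hypothetical, and the counts in the usage lines are invented for illustration only.

```python
def contingency(n_resp_conseq, n_resp_none, n_noresp_conseq, n_noresp_none):
    """Difference between p(consequence | response) and
    p(consequence | no response), computed from event counts."""
    n_resp = n_resp_conseq + n_resp_none
    n_noresp = n_noresp_conseq + n_noresp_none
    if n_resp == 0 or n_noresp == 0:
        raise ValueError("need observations both with and without a response")
    return n_resp_conseq / n_resp - n_noresp_conseq / n_noresp


# Consequence delivered only after a target response.
perfect_positive = contingency(10, 0, 0, 10)
# Consequence delivered only in the absence of a target response.
perfect_negative = contingency(0, 10, 10, 0)
# Consequence equally likely with or without a response.
no_relation = contingency(5, 5, 5, 5)
```

The three usage lines correspond to the values of +1.0, −1.0, and zero described in the text.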

Manipulation of Precision Under Feedback Procedures

Many studies have investigated the relation between precision of feedback and measures of learning, where precision was manipulated by varying the grain (as in fine-grained) and specificity of the feedback events, of the response class, or both (e.g., Hunt 1961; Mangiapanello 2010; McGuigan 1959; Rogers 1974; Schori 1970). For example, Schori (1970) manipulated both precision of electrocutaneous feedback for errors and response specificity. Under the least precise condition (on/off), directional feedback was presented for errors when the participant’s response was outside of the relatively broadly defined target area; error magnitude was not presented. Under the intermediate condition, feedback was presented according to the procedure of the on/off condition; however, error magnitude feedback was also provided. Under the most precise condition (continuous), the target area was narrowly defined and directional and magnitude feedback were presented for errors. Schori found that performance (time on target) was highest under the intermediate condition. In a review of the literature, Salmoni et al. (1984) found that while many studies showed no effect of precision on performance during training, those that did report effects found a positive relation between performance and precision of feedback. A similar conclusion was drawn for effects of precision when measured in no-feedback transfer tests.

Manipulation of the statistical contingency under feedback procedures occurs most often in studies on the effects of feedback accuracy, though this type of manipulation is uncommon. Maes and van der Goot (2006) found that acquisition of a response variability requirement depended upon the contingency between correct responding and feedback. Two groups of participants were required to produce sequences of three responses using three keys numbered 1, 2, and 3. Under a response-contingent feedback condition, participants received positive feedback following emission of each response sequence that met a variability requirement. For each participant under a noncontingent feedback condition, presentation of positive feedback was yoked to the across-trial distribution of feedback received by a matched participant under the contingent feedback condition. Only under the response-contingent feedback condition was there a reliable increase in variability of response sequences.

Contingency also was manipulated in a study by Hirst et al. (2013) by varying the probability of inaccurate feedback across four levels. Participants performed a task involving matching nonsense names to nonsense figures. Following each naming response, feedback (correct or incorrect) was displayed below the shape. The probability of inaccurate feedback (presentation of a feedback stimulus that did not match the participant’s performance on a given trial) was 0, 25, 50, and 75 % across groups. Accuracy of performance varied inversely with probability of inaccurate feedback. In an intriguing study, Brosvic and Finizio (1995) varied feedback accuracy for participants exposed to the Müller-Lyer illusion. On each trial, groups of participants were presented with accurate, inaccurate, or no performance feedback on the magnitude and direction of error. Improvement of estimation accuracy over trials was greatest for participants under the accurate condition and less for those in inaccurate or no-feedback conditions.
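The link between feedback accuracy and statistical contingency can be made explicit with a short calculation. The Python sketch below rests on assumptions of our own, not on the analysis reported by Hirst et al. (2013): feedback is binary (correct or incorrect), an inaccurate trial presents the label opposite to the participant's actual performance, and contingency is taken as p(correct label|correct response) minus p(correct label|incorrect response). The function name is hypothetical.

```python
def feedback_contingency(p_inaccurate):
    """Contingency between response correctness and a 'correct' label,
    assuming inaccurate trials present the opposite label."""
    if not 0.0 <= p_inaccurate <= 1.0:
        raise ValueError("p_inaccurate must lie between 0 and 1")
    p_label_given_correct = 1.0 - p_inaccurate
    p_label_given_incorrect = p_inaccurate
    return p_label_given_correct - p_label_given_incorrect


# The four probabilities of inaccurate feedback described in the text.
levels = [0.0, 0.25, 0.5, 0.75]
values = [feedback_contingency(q) for q in levels]
```

Under these assumptions the contingency equals 1 − 2q, where q is the probability of inaccurate feedback: it falls to zero when half of the feedback is inaccurate and becomes negative beyond that point.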

Manipulation of Precision Under Operant Procedures

Manipulation of the statistical relation between responding and consequences is common under operant procedures, and a large body of basic research shows that measures of learning are directly related to statistical contingency (e.g., Boakes 1973; Powell and Kelly 1976; Schwartz and Williams 1972; though see Johnson and Dickinson 2012). In applied behavior analysis, contingency has been manipulated when the goal was to decrease level of reinforcer-maintained problem behavior, without altogether eliminating access to the reinforcer identified as maintaining problem responding (e.g., Carr et al. 2009; Rosales et al. 2010; Wallace et al. 2012).

Manipulation of precision by varying the definition of target responding or by manipulating the characteristics of consequences for responding has occurred infrequently under operant procedures, in comparison to feedback procedures. Definition of target responses or characteristics of consequences are not limited by the boundary conditions of the operant paradigm; rather, they are most likely guided by the research question. For some research questions, such as effects of prefeeding or conditioned reinforcement, a single response class and consequence relation likely would be programmed; however, under conditional discrimination procedures, consequences are programmed for two or more response classes (e.g., pressing two different levers); under choice procedures, differing consequences may be arranged for two or more response classes, and under shaping procedures, multiple response classes are differentially paired with consequences. We describe here two operant procedures where precision of the response-consequence relation approaches that of feedback procedures—conjugate schedules of reinforcement and response shaping.

Conjugate Reinforcement Schedules

Under conjugate schedules of reinforcement, parameters of a reinforcer (e.g., intensity) vary proportionally, and typically continuously, with parameters of responding (Morgan 2010). A classic example is provided by Rovee and Rovee (1969). In their study, young infants were placed on their backs with a mobile suspended out of reach above them. The authors noted that mobiles are effective reinforcers of behavior in infants. After baseline levels of kicking were recorded, a string was tied from the ankle to the mobile. A kick resulted in movement in the mobile. The more vigorous the kicking, the more the mobile moved. Over the course of the session, kick rate increased steadily relative to baseline. Similar findings have been reported for studies on conjugate procedures where the consequences of responding were described as feedback (for a review, see Rovee-Collier and Gekoski 1979).
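The procedural difference between a conjugate arrangement and an all-or-none arrangement can be sketched as two simple rules. The Python functions below are hypothetical illustrations; the gain, threshold, and magnitude parameters are assumptions introduced for the example and do not correspond to values from any cited study.

```python
def conjugate_consequence(response_intensity, gain=1.0):
    """Consequence magnitude varies proportionally with response intensity."""
    if response_intensity < 0:
        raise ValueError("response intensity cannot be negative")
    return gain * response_intensity


def discrete_consequence(response_intensity, threshold=1.0, magnitude=1.0):
    """A fixed consequence for any response meeting the criterion, else none."""
    return magnitude if response_intensity >= threshold else 0.0


# A vigorous response yields a larger consequence only under the conjugate rule.
weak, vigorous = 1.0, 3.0
conjugate_pair = (conjugate_consequence(weak), conjugate_consequence(vigorous))
discrete_pair = (discrete_consequence(weak), discrete_consequence(vigorous))
```

Under the conjugate rule every level of responding maps to a distinct consequence value, whereas the discrete rule collapses all in-class responses onto a single value.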

Conjugate schedules of reinforcement share procedural commonalities with feedback procedures. Under both, more than one response topography will occasion a consequence; indeed, under both, potentially all instances of responding may occasion consequence delivery. In contrast to conjugate reinforcement schedules, under feedback procedures, response consequences are not necessarily proportional to parameters of antecedent responding. Despite differing conventions for programming conjugate reinforcement versus feedback procedures, level of precision potentially can be manipulated equivalently for the two types of procedures. Only one study was identified that compared conjugate schedules to a schedule of lesser precision: Voltaire et al. (2005) found that rates of responding were higher under a conjugate schedule in comparison to a schedule of continuous reinforcement.

Response Shaping

Another operant conditioning procedure where level of precision can approach that of feedback procedures is response shaping. In shaping, as with feedback procedures, consequences for a number of response variants may be programmed. Under typical shaping procedures, the probability and topography of the responses in the individual’s repertoire are altered by means of the systematic and differential reinforcement of successive approximations of specified response variants. Initially, a wide range of response variants across one or more response continua occasion a reinforcer, and variants that are outside of the range do not. Once responding is consistently within the specified range, the range of response variants that are reinforced is gradually and systematically reduced (see Catania 1998). Feedback and shaping procedures share similarities and differences. Similar to shaping procedures, a number of response variants result in a consequence under feedback procedures (e.g., Hancock et al. 1992; Nosofsky and Stanton 2005; Pashler et al. 2005; Rakitin 2005). Unlike shaping procedures, more than one type of consequence is typically presented, each associated with a specific response variant or range of response variants. This results in greater precision for feedback versus operant shaping procedures.
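The progressive narrowing of the reinforced range under shaping can be sketched as a simple loop. This Python example is a minimal illustration under assumptions of our own: responses lie on a single numeric continuum, the reinforced range is centered on a target value, and the range is halved after three consecutive reinforced responses. The function name and all parameter values are hypothetical.

```python
def shape(responses, target, start_width, shrink=0.5, run_length=3):
    """Reinforce responses within `width` of the target, narrowing the
    range after `run_length` consecutive reinforced responses."""
    width = start_width
    streak = 0
    log = []
    for r in responses:
        reinforced = abs(r - target) <= width
        # Record the response, the range in force, and the outcome.
        log.append((r, width, reinforced))
        streak = streak + 1 if reinforced else 0
        if streak >= run_length:
            width *= shrink
            streak = 0
    return log


# Hypothetical response values on an arbitrary continuum.
log = shape([5, 6, 4, 5, 7, 5], target=5, start_width=2)
outcomes = [reinforced for _, _, reinforced in log]
```

In this record the fifth response (7) would have been reinforced under the initial range but goes unreinforced after the range narrows, which is the programmed extinction component of shaping.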

In an important procedural distinction, under shaping, but not typical feedback procedures, extinction for previously reinforced responding is programmed as closer approximations of the terminal response emerge. During extinction, responding can initially increase and become more variable, a phenomenon termed extinction-induced response variability (e.g., Eckerman and Lanson 1969; Morgan and Kelly 1996), after which the extinguished response topography diminishes (though likely remains in the repertoire as shown by Stokes and Balsam 1991). Shaping, particularly shaping by differential reinforcement of successive approximations, relies on extinction-induced variability to promote changes in responding (Eckerman et al. 1980). In contrast to shaping, feedback procedures rely on neither the absence of a consequence for certain response variants nor conditions of extinction to promote response variability. This is true even though there is evidence for extinction-induced variability under feedback preparations (Maes 2003).

In lieu of extinction as a means of producing variability during acquisition of new responses, other parameters of feedback procedures must be counted on to induce the response variability necessary to change behavior. One direct approach that has produced this result is instructing participants to alter their pattern of responding (Joyce and Chase 1990). Another possible variability-inducing mechanism under feedback procedures may be a change across trials in the relative valence of a feedback stimulus—either appetitive (positive) or aversive (negative). A given feedback stimulus (e.g., “good”) might be functionally positive when presented subsequent to occurrence of feedback stimuli that are less appetitive (e.g., “fair”). Conversely, good might be functionally negative when stimuli of a higher appetitive value (e.g., “very good”) have been presented. The foregoing argument is consistent with the phenomenon of behavioral contrast. Under the successive contrast procedure (Flaherty 1996), change in operant responding across trials varies with change in relative, rather than absolute, reinforcement magnitude. It should be noted, however, that based on the literature reviewed in the present paper, it is difficult to compare feedback to shaping and, furthermore, to detect the presence of contrast effects, because trial-by-trial analyses were not reported in the studies reviewed. The preceding statements are provided to suggest a direction for future research.

Summary of the Effects of Precision on Learning Under Feedback and Operant Procedures

We have presented research on the effects of precision of consequences under feedback and operant procedures. Descriptive precision depends on the number and specificity of responses eligible for consequence delivery and the number and specificity of the consequences that are delivered. By this characterization of precision, operant procedures are typically less precise, although the paradigmatic boundaries of these two types of procedures do not dictate this distinction. Studies of descriptive precision generally have shown a positive relationship between measures of learning and precision under both feedback and operant procedures. Effects of quantitative (or statistical) precision also are parallel between feedback and operant procedures, with performance varying as a direct function of precision. These converging findings argue for interpretation in terms of uniform controlling processes. A conceptually systematic approach based on the assumption that feedback and operant consequences have response-strengthening (or weakening) functions in common would argue that precise, as opposed to imprecise, feedback provides a learner with a higher density of learning opportunities, owing to provision of relevant consequences on a higher proportion of trials. This hypothesis is testable by studying rate of acquisition as a function of cumulative information delivered in successive trials. Information in this approach could be quantified by means of an information-theoretic analysis as cumulative bits of uncertainty reduction regarding correct responding (Jensen et al. 2013). 
This approach would be practicable given a relatively noncomplex target response—one with low uncertainty or entropy—such as touching a single spot in a 10 × 10 cell matrix where correct responding is determined only by two dimensions (horizontal and vertical), each with ten levels, versus executing a tennis serve—a response that is characterized by multiple dimensions, each with many levels, with correspondingly high entropy.
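The uncertainty associated with the matrix example can be computed directly. The Python sketch below is an illustration under our own assumption that all cells are equally likely to be correct before any feedback is delivered; it is not the specific method of Jensen et al. (2013), and the function names are hypothetical.

```python
import math


def uniform_entropy_bits(levels_per_dimension):
    """Entropy in bits when every combination of levels is equally likely."""
    n_alternatives = math.prod(levels_per_dimension)
    return math.log2(n_alternatives)


def bits_gained(n_before, n_after):
    """Uncertainty reduction when feedback narrows the set of candidate
    responses from n_before to n_after equally likely alternatives."""
    return math.log2(n_before) - math.log2(n_after)


# Two dimensions with ten levels each: 100 equally likely cells.
matrix_bits = uniform_entropy_bits([10, 10])
# Feedback that rules out half of the cells removes about one bit.
half_ruled_out = bits_gained(100, 50)
```

With 100 equally likely cells the initial uncertainty is log2(100), roughly 6.64 bits, so cumulative information delivered by feedback could be tracked against this ceiling. A response with many dimensions and many levels per dimension would have a correspondingly larger ceiling.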

Antecedent Stimulus Control by Operant and Feedback Procedures

Although feedback stimuli are presented as a consequence of behavior and have been shown to exert behavior control in a manner that overlaps with that of reinforcers and punishers, feedback may also exert antecedent control. This argument is particularly compelling when the response-feedback interval is long or feedback is presented in close temporal proximity to the next opportunity to respond, or both. Several authors have suggested that behavior control exerted by feedback stimuli may be attributable to their function as discriminative stimuli (e.g., Duncan and Bruwelheide 1985-1986; Peterson 1982; Prue and Fairbank 1981; Sulzer-Azaroff and Mayer 1991) or instructions (e.g., Catania 1998; Prue and Fairbank 1981). Feedback effects have also been attributed to motivating operations (e.g., Austin et al. 1999; Johnson 2013; Laraway et al. 2003; Wack et al. 2014). The following sections analyze the relation of feedback to these behavior-controlling functions.

Feedback and Discriminative Control

Antecedent stimuli acquire control over operant behavior when they participate in a contingent relation with a response-consequence contingency. In this three-term contingency, the value of the antecedent stimulus corresponds to the parameters of the response-consequence contingency, ranging from positive to null to negative. A positive discriminative stimulus (SD) is associated with a higher probability of a consequence given a specified response in comparison to the response-consequence contingency either in the absence of the SD or in the presence of another stimulus (SΔ). Stimuli, including feedback stimuli, may be classified as discriminative stimuli only when their behavior control function is established by a history of differential consequences in their presence versus absence (Michael 1982, 1993). In an additional procedural distinction between operant discrimination and feedback procedures, feedback stimuli, unlike discriminative stimuli, must vary as a function of properties of the immediately prior response.

Although feedback and operant discrimination procedures are procedurally distinct, they may be analogous at a functional level if it can be shown that feedback, though a consequence of responding, exerts antecedent control. This is not implausible, as a number of authors have presented data suggesting that delivery of primary or conditioned reinforcers may exert discriminative control if they are also differentially correlated with the probability of subsequent reinforcers, given responding (e.g., Bullock and Smith 1953; Davison and Baum 2006; Lattal 1975). Thus, if the value of a feedback stimulus (e.g., “too late,” “too early”) is predictive of a response-feedback relation, control by the feedback stimulus may be attributable to its function as a discriminative stimulus.

Several authors have pointed out that feedback implies two functions—one involving information about accuracy of performance and the other involving reinforcement (Crowell et al. 1988; Prue and Fairbank 1981; Roscoe et al. 2006). The former is expected to exert discriminative control and the latter consequential control. Roscoe et al. concluded that the extent to which a feedback event will serve both functions may depend upon the specificity of the feedback stimuli and their reinforcer potency. Consistent with the former, researchers have proposed that feedback has the greatest impact on responding when feedback stimuli are free to vary between positive and negative values. For example, Hogarth et al. (1991) reported that performance on a decision-making task was highest when both positive and negative feedback stimuli were presented, as opposed to when the probability of either mostly positive or mostly negative feedback was high following a response. Hogarth et al. stated that, “Positive feedback reinforces the use of existing strategies; negative feedback encourages the search for other strategies that might work better” (p. 748). Similar points have been made by Maddox et al. (2003). In more behavior analytic terms, positive feedback not only maintains behavior, but also functions as an SD for similar, subsequent responses. In contrast, negative feedback may function as an SΔ for similar, subsequent responses.

In the study of Roscoe et al. (2006), the two putative components of feedback function—reinforcement and discriminative—were experimentally dissociated. Research participants were trainees who were learning to conduct preference assessments, a procedure that encompasses a number of skills. The results showed no improvement in performance when money was presented in direct proportion to percent of correct responses, but without behavior-specific feedback, indicating that money supported neither a reinforcer nor a discriminative function, even though it provided information on level of correct responding. Presentation of behavior-specific feedback that included description of errors and how to correct them, but without response-contingent money, was associated with performance gains to near-perfect levels. In reconciling these findings with other studies that have demonstrated reinforcement effects of contingent money, the authors suggested that their participants’ prior training may have been insufficient to permit acquisition of criterion responding in the absence of behavior-specific feedback. Stated differently, presentation of money without behavior-specific feedback would be expected to nondifferentially strengthen all emitted responses—both correct and incorrect. Further, because nonoccurrence of reinforcement was also nondifferential, extinction-induced response variability would be expected following both correct and incorrect responding. In contrast, behavior-specific feedback stimuli would be differentially predictive of response consequence; that is, they would be discriminative stimuli and may also induce response variability by describing alternate forms of responding.

Historically, effects of primary reinforcers and punishers have been attributed to strengthening and weakening processes, respectively; however, recent analyses have proposed that such stimuli may also, or alternatively, exert behavior control via their signaling properties (e.g., Davison and Baum 2006; Gallistel and Gibbon 2002; Shahan 2010; Ward et al. 2013). Similarly, Salmoni et al. (1984) argued that feedback during acquisition guides responding but does not directly promote learning. Thus, primary reinforcers and punishers may share a guidance function with feedback stimuli. Of most relevance to the present paper is Shahan’s (2010) review of the functional properties of conditioned stimuli in operant procedures. Along with many earlier writers (e.g., Schuster 1969), Shahan concluded that conditioned reinforcer effects are not consistent with a reinforcer function; rather, an analysis similar to that of Salmoni et al. (1984) regarding feedback function suggests that so-called conditioned reinforcers function as “signposts” that guide responding.

In summary, although noncomparable at a formal procedural level, compelling evidence suggests that feedback stimuli may exert antecedent discriminative control. Analysis of feedback in terms of its potential discriminative stimulus function, in addition to its guidance, reinforcing, and variability-inducing functions, should provide practitioners with a richer set of parameters when implementing feedback procedures and the means of designing potentially more effective procedures.

Feedback and Instructional Control

Another antecedent procedure that may share properties with feedback is instruction. Instructions (or rules, or contingency-specifying stimuli) are antecedent stimuli that may be verbal or nonverbal and may specify the target behavior, the consequence, or identify the contingent relation between the two (e.g., “You can go to the movie tomorrow if you finish your homework today”). Feedback is procedurally similar to instruction in that a feedback stimulus also may describe (or imply) the target behavior, the consequence, or the contingent relation between the two. However, feedback differs from instructions because the form or content of feedback stimuli is determined by the form of a prior response (e.g., “I see that you are not doing your homework; if you wait until tomorrow, you may not be able to go to the movie”), whereas instructions need not adhere to this procedural characteristic.

In accord with Skinner’s analysis, instruction “substitutes verbal antecedents for natural contingencies” (Catania 1998, p. 265). Instructional control or rule-governed behavior (behavior controlled by verbal antecedents) may occur without direct experience of the specified consequence (Catania 1998, p. 265) and is only indirectly maintained by its consequences (Weatherly and Malott 2008; however, see Okouchi 1999, 2002). Accordingly, instructional control is distinct from discriminative stimulus and reinforcer control, both of which specify acquisition of stimulus function as a result of a two- or three-term contingency (Schlinger and Blakely 1987).

It is plausible that feedback stimuli that include contingency-specifying statements (e.g., “If you turn the handle more slowly next time, the latch will work more smoothly”) function in the same ways as instructions (Agnew and Redmon 1992; Hirst et al. 2013; Prue and Fairbank 1981). Weatherly and Malott (2008) pointed out that, in most instances, instructions are members of indirect contingencies in that, as stimuli, they may have no consistent temporal relation with the target behavior or its consequences. The same case may be made for delayed, contingency-specifying feedback stimuli. For example, Weatherly and Malott (2008) analyzed a procedure implemented by Eikenhout and Austin (2005) where the target response for employees of a department store was customer service behavior. Graphic feedback that included performance goals (contingency-specifying feedback) was publicly posted three times per week (delayed feedback), along with response definitions (instructions). Eikenhout and Austin did not analyze the intervention in terms of behavior processes; however, Weatherly and Malott pointed out that a common interpretation of such procedures is based on behavior control by delayed positive reinforcement or instruction, or both. They also pointed out that because presentation of the experimental stimulus events in Eikenhout and Austin’s study was not temporally contiguous with target responding, both of these analyses incorrectly implicate a direct-acting contingency. Nonetheless, Weatherly and Malott demonstrated that the procedure can be analyzed in an analogue fashion, employing the terms of a direct contingency. In their analysis, the opportunity for target responding (customer service behavior such as greeting and engaging in small talk) occurs when a customer is present in the sales area. 
Although there are no programmed immediate consequences for target responding, failure to respond prior to the customer’s leaving the sales floor occasions loss of an opportunity for delayed reinforcement (an increment on a publicly posted performance graph). Assuming that loss of an opportunity for reinforcement (customer leaves the sales floor) is an aversive event, the scenario represents a negative reinforcement (avoidance) procedure in which, in the presence of a customer on the sales floor (discriminative stimulus), emission of customer service behavior is negatively reinforced by avoidance of the undesired outcome: loss of an opportunity to receive reinforcement.

The foregoing analysis is analogical because the status of the presumed discriminative stimulus (customer on the sales floor) is not established by immediately delivered consequences for nonresponding; rather, it is established by instructions informing sales people that performance feedback will be posted thrice weekly. Weatherly and Malott posit that such contingency-specifying instructions establish self-generated rules that mediate the discriminative stimulus status of presence of a customer on the sales floor; for example, “There is a customer. If I do not interact with him correctly, my feedback chart will suffer.” There is considerable agreement that governance of behavior by rules, as opposed to direct-acting contingencies, predominates in control of human behavior (e.g., Catania et al. 1989; Malott 1989; Normand et al. 1999; however, see Svartdal 1995), suggesting that behavior analysts must not shy away from such indirect analyses. As Vaughan (1989) pointed out, analyses of complex behavior that rely on nontechnical application of behavioral terms may have heuristic value for research directed at determining the parameters influencing control by antecedent verbal stimuli.

Feedback and Motivating Operations

The term motivating operation refers to stimulus conditions that alter the effectiveness of response consequences and the behavior maintained by those consequences (Laraway et al. 2003). Unlike discriminative stimuli, motivating stimuli are not members of the three-term (stimulus-response-consequence) contingency programmed under operant procedures; rather, they are independently determined (either by an experimenter or clinician or as a function of other environmental parameters). A classic example is level of deprivation, a parameter that is independent of the three-term relation but likely controls its effectiveness. Several authors have argued that, though a member of a three-term contingency, feedback may also function as an establishing operation for the effectiveness of feedback itself. Empirical studies of this possibility do not exist; however, the following theoretical analyses provide plausible explanations of some research findings. For example, Slowiak et al. (2011) proposed that under feedback procedures, individuals may acquire a self-generated performance goal. Comparison of this goal against performance feedback may influence the effectiveness of the feedback stimulus as a function of the level of match between the stimulus and the self-generated goal. In another argument, Johnson (2013) proposed that feedback may exert an establishing function insofar as it signals to the individual that his or her performance is being monitored. Under that condition, effects of feedback (both positive and negative) on performance may be enhanced. The foregoing accounts are consistent with the empirically established effects of goal setting.

Summary and Implications

Our goals in developing this paper included provision of a comprehensive survey of procedural commonalities and distinctions between procedures labeled feedback and familiar operant conditioning procedures. The survey was intended as the basis for our second goal—assessing the extent to which processes revealed in operant conditioning procedures are sufficient to explain the phenomena observed in studies on feedback. We found that the parameters associated with typical feedback and operant conditioning implementations are, in many cases, the same. Where parametric values typically do not overlap (e.g., long response-feedback delays versus immediate reinforcement or punishment), they could do so in principle. Thus, it could be and has been argued on the basis of inductive reasoning that feedback and operant procedures are members of the same category—operant conditioning. This form of reasoning—if it looks like a duck, swims like a duck, and quacks like a duck, then it probably is a duck—is of course subject to alternate conclusions. For example, “If it looks like a duck, quacks like a duck, but needs batteries—you probably have the wrong abstraction” (Bailey 2009). However, our inductive argument is strengthened by our comparisons of the functional relations between procedural parameters and measures of learning under operant and feedback procedures. Across a broad range of procedures, we found convergence in these comparisons, consistent with control by common behavior processes under operant and feedback procedures. Clearly, the argument is still limited owing to absence of empirical studies in which functional relations are directly compared. Nonetheless, we propose that strength of the convergent data presented here supports the conceptually systematic assertion that feedback phenomena are reducible to operant conditioning.

There are other compelling empirical and theoretical arguments for analyzing feedback effects in terms of reinforcement, punishment, and/or antecedent control or for analyzing both types of effects according to another common function. In an ongoing debate regarding the function of stimuli presented in basic operant conditioning procedures, it has been proposed that nominal conditioned or primary reinforcers exert behavioral control, not by strengthening responses on which they are contingent, but by means of their signaling function (e.g., Davison and Baum 2006; Schuster 1969; Shahan 2010; Thrailkill and Shahan 2014). Interestingly, these analyses of primary and conditioned reinforcement are strongly paralleled in the feedback literature where the discriminative or informational function of feedback stimuli is emphasized (e.g., Johnson 2013; Kang et al. 2003; Salmoni et al. 1984).

It may be argued that despite somewhat mixed results and modest effect sizes (Kluger and DeNisi 1996), the predominant observation is that feedback works. Given that, one may question the necessity of determining why it works. To this position, Normand et al. (1999) provide a convincing retort, pointing out that positive effects of feedback may lead to a superstitious approach to intervention in which procedures are selected based merely on their prior successful application to similar problems, without regard to the relation between the programmed contingencies and the source(s) of control involved in the presenting problem. Though possibly effective by some measures, thereby encouraging their continued use, such applications may be excessive or inappropriate. They may also be insufficient, particularly with regard to maintenance of behavior change. A related point is put forward by Kluger and DeNisi (1996) who state that research on feedback interventions must focus on the processes involved in feedback effects, rather than on simply questioning whether feedback improves performance. The latter strategy, they suggest, will merely join 90 years of minimally successful attempts to answer that question.

More generally, behavior analysts strive for conceptual consistency in accounting for behavior control. In this way, we avoid category mistakes, in this case, considering operant conditioning and feedback as separate categories of behavior change procedures, each with its own laws. Based on this review, we hope that readers will agree that feedback is the name of an operant conditioning procedure in which there is presentation of an exteroceptive stimulus whose parameters vary as a function of parameters of antecedent responding, that is, the definition used in the present report.

Should behavior analysts refrain from using feedback to describe their work as suggested by Peterson (1982)? This is analogous to asking if we should avoid references to choice as the name of a procedure in which two or more discriminative stimuli are differentially associated with unique response-consequence contingencies. Feedback and choice are operant conditioning procedures, capable of being analyzed as such. We hope that this review will contribute to the conceptually systematic use of feedback.

Acknowledgments

We are grateful to Bruce Brown for his review of many earlier versions of this paper, as well as his feedback on the current version of this article. We also thank Claire Poulson for helping us to apply Gilbert Ryle’s epistemology to the problems raised in this paper. An earlier version of this manuscript benefited from critiques provided by students enrolled in a course on Scientific Writing and Inference offered by the CUNY PhD Program (Behavior Analysis Subprogram) in 2012.

References

- Agnew JL, Redmon WK. Contingency specifying stimuli: the role of “rules” in organizational behavior management. Journal of Organizational Behavior Management. 1992;12:67–76. doi: 10.1300/J075v12n02_04.

- Alvero AM, Bucklin BR, Austin J. An objective review of the effectiveness and essential characteristics of performance feedback in organizational settings (1985–1998). Journal of Organizational Behavior Management. 2001;21:3–29. doi: 10.1300/J075v21n01_02.

- Austin J, Carr JE, Agnew JL. The need for assessment of maintaining variables in OBM. Journal of Organizational Behavior Management. 1999;19:59–87. doi: 10.1300/J075v19n02_05.

- Bailey, D. (2009). SOLID development principles—in motivational pictures. http://lostechies.com/derickbailey/2009/02/11/solid-development-principles-in-motivational-pictures/. Accessed 11 February 2009.

- Balcazar, F., Hopkins, B., & Suarez, Y. (1985-1986). A critical, objective review of performance feedback. Journal of Organizational Behavior Management, 7, 65–75.

- Boakes RA. Response decrements produced by extinction and by response-independent reinforcement. Journal of the Experimental Analysis of Behavior. 1973;19:293–302. doi: 10.1901/jeab.1973.19-293.

- Brosvic, G. M., & Finizio, S. (1995). Inaccurate feedback and performance on the Müller-Lyer illusion. Perceptual and Motor Skills, 80(3 Pt 1), 896–898.

- Bullock DH, Smith WC. An effect of repeated conditioning-extinction upon operant strength. Journal of Experimental Psychology. 1953;46:349–352. doi: 10.1037/h0054544.

- Call NA, Trosclair-Lasserre NM, Findley AJ, Reavis AR, Shillingsburg MA. Correspondence between single versus daily preference assessment outcomes and reinforcer efficacy under progressive-ratio schedules. Journal of Applied Behavior Analysis. 2012;45:763–777. doi: 10.1901/jaba.2012.45-763.

- Capaldi EJ. A sequential hypothesis of instrumental learning. In: Spence KW, Spence JT, editors. The psychology of learning and motivation. New York: Academic Press; 1967. pp. 67–156.

- Carr JE, Severtson JM, Lepper TL. Noncontingent reinforcement is an empirically supported treatment for problem behavior exhibited by individuals with developmental disabilities. Research in Developmental Disabilities. 2009;30:44–57. doi: 10.1016/j.ridd.2008.03.002.

- Carpenter SK, Vul E. Delaying feedback by three seconds benefits retention of face–name pairs: the role of active anticipatory processing. Memory & Cognition. 2011;39:1211–1221. doi: 10.3758/s13421-011-0092-1.

- Catania AC. Learning. 4th ed. Upper Saddle River, NJ: Prentice Hall; 1998.

- Catania AC, Shimoff E, Matthews B. An experimental analysis of rule-governed behavior. In: Hayes SC, editor. Rule-governed behavior: cognition, contingencies, and instructional control. New York, NY: Plenum Press; 1989. pp. 119–150.

- Cook T, Dixon MR. Performance feedback and probabilistic bonus contingencies among employees in a human service organization. Journal of Organizational Behavior Management. 2005;25:45–63. doi: 10.1300/J075v25n03_04.

- Costa DSJ, Boakes RA. Varying temporal contiguity and interference in a human avoidance task. Journal of Experimental Psychology: Animal Behavior Processes. 2011;37:71–78. doi: 10.1037/a0021192.

- Crowell CR, Anderson DC, Abel DM, Sergio JP. Task clarification, performance feedback, and social praise: procedures for improving the customer service of bank tellers. Journal of Applied Behavior Analysis. 1988;21:65–71. doi: 10.1901/jaba.1988.21-65.

- Davison M, Baum WM. Do conditional reinforcers count? Journal of the Experimental Analysis of Behavior. 2006;86:269–283. doi: 10.1901/jeab.2006.56-05.

- Deleon IG, Fisher WW, Rodriguez-Catter V, Maglieri K, Herman K, Marhefka J. Examination of relative reinforcement effects of stimuli identified through pretreatment and daily brief preference assessments. Journal of Applied Behavior Analysis. 2001;34:463–473. doi: 10.1901/jaba.2001.34-463.

- Dickinson A, Watt A, Varga ZI. Context conditioning and free-operant acquisition under delayed reinforcement. The Quarterly Journal of Experimental Psychology. 1996;49B:97–110.

- Duncan, P. K., & Bruwelheide, L. R. (1985-1986). Feedback: use and possible behavioral functions. Journal of Organizational Behavior Management, 7, 91–114.

- Eckerman DL, Hienz RD, Stern S. Shaping the location of a pigeon’s peck: effect of rate and size of shaping steps. Journal of the Experimental Analysis of Behavior. 1980;33:299–310. doi: 10.1901/jeab.1980.33-299.

- Eckerman DL, Lanson RN. Variability of response location for pigeons responding under continuous reinforcement, intermittent reinforcement, and extinction. Journal of the Experimental Analysis of Behavior. 1969;12:73–80. doi: 10.1901/jeab.1969.12-73.

- Eikenhout N, Austin J. Using goals, feedback, reinforcement, and a performance matrix to improve customer service in a large department store. Journal of Organizational Behavior Management. 2005;24:27–62. doi: 10.1300/J075v24n03_02.

- Fisher W, Piazza CC, Bowman LG, Hagopian LP, Owens JC, Slevin I. A comparison of two approaches for identifying reinforcers for persons with severe and profound disabilities. Journal of Applied Behavior Analysis. 1992;25:491–498. doi: 10.1901/jaba.1992.25-491.

- Flaherty CF. Incentive relativity. New York, NY: Cambridge University Press; 1996.

- Gallistel CR, Gibbon J. The symbolic foundations of conditioned behavior. Mahwah, NJ: Lawrence Erlbaum Associates; 2002.

- Graff RB, Karsten AM. Assessing preferences of individuals with developmental disabilities: a survey of current practices. Behavior Analysis in Practice. 2012;5:37–48. doi: 10.1007/BF03391822.

- Grice GR. The relation of secondary reinforcement to delayed reward in visual discrimination learning. Journal of Experimental Psychology. 1948;38:1–16. doi: 10.1037/h0061016.

- Haas JR, Hayes SC. When knowing you are doing well hinders performance: exploring the interaction between rules and feedback. Journal of Organizational Behavior Management. 2006;26:91–111. doi: 10.1300/J075v26n01_04.

- Hammond LJ. The effect of contingency on the appetitive conditioning of free-operant behavior. Journal of the Experimental Analysis of Behavior. 1980;34:297–304. doi: 10.1901/jeab.1980.34-297.

- Hancock TE, Stock WA, Kulhavy RW. Predicting feedback effects from response-certitude estimates. Bulletin of the Psychonomic Society. 1992;30:173–176. doi: 10.3758/BF03330431.

- Hayes SC, Kohlenberg BS, Hayes LJ. The transfer of specific and general consequential functions through simple and conditional equivalence relations. Journal of the Experimental Analysis of Behavior. 1991;56:119–137. doi: 10.1901/jeab.1991.56-119.

- Hirst JM, DiGennaro Reed FD, Reed DD. Effects of varying feedback accuracy on task acquisition: a computerized translational study. Journal of Behavioral Education. 2013;22:1–15. doi: 10.1007/s10864-012-9162-0.

- Hogarth RM, Gibbs BJ, McKenzie CRM, Marquis MA. Learning from feedback: exactingness and incentives. Journal of Experimental Psychology: Learning, Memory & Cognition. 1991;17:734–752. doi: 10.1037//0278-7393.17.4.734.

- Houmanfar R. Performance feedback: from component analysis to application. Journal of Organizational Behavior Management. 2013;33:85–88. doi: 10.1080/01608061.2013.787002.

- Hunt DP. The effect of the precision of informational feedback on human tracking performance. Human Factors. 1961;3:77–85.

- Jensen G, Ward RD, Balsam PD. Information: theory, brain, and behavior. Journal of the Experimental Analysis of Behavior. 2013;100:408–431. doi: 10.1002/jeab.49.

- Jerome J, Sturmey P. The effects of pairing non-preferred staff with preferred stimuli on increasing the reinforcing value of non-preferred staff attention. Research in Developmental Disabilities. 2014;35:849–860. doi: 10.1016/j.ridd.2014.01.014.

- Johnson DA. A component analysis of the impact of evaluative and objective feedback on performance. Journal of Organizational Behavior Management. 2013;33:89–103. doi: 10.1080/01608061.2013.785879.

- Johnson DA, Dickinson AM. Using postfeedback delays to improve retention of computer-based instruction. The Psychological Record. 2012;62:485–496.

- Joyce JH, Chase PN. Effects of response variability on the sensitivity of rule-governed behavior. Journal of the Experimental Analysis of Behavior. 1990;54:251–262. doi: 10.1901/jeab.1990.54-251.

- Kang K, Oah S, Dickinson AM. The relative effects of different frequencies of feedback on work performance: a simulation. Journal of Organizational Behavior Management. 2003;23:21–53. doi: 10.1300/J075v23n04_02.

- Kazdin AE. Behavior modification in applied settings. 4th ed. Pacific Grove, CA: Brooks/Cole; 1989.