Abstract

We used whole-head magnetoencephalography (MEG) to investigate cortical activity during two oromotor activities foundational to speech production. Thirteen adults performed mouth-opening and phoneme (/pa/) production tasks in response to a visual cue. Jaw movements were tracked with an ultrasound-emitting device. Trials were time-locked both to stimulus onset and to the peak of jaw displacement. An event-related beamformer source reconstruction algorithm was used to detect areas of cortical activity for each condition, and the beamformer output was submitted to iterative K-means clustering analyses. The time course of neural activity at each cluster centroid was computed for each individual and condition. Peaks were identified and their latencies submitted to statistical analysis to reveal the relative timing of activity in each brain region. Stimulus-locked activations for the mouth open task progressed from left cuneus to left frontal areas and then to right pre-central gyrus. Phoneme generation revealed the same sequence but with bilateral frontal activation. When time-locked to jaw displacement, the mouth open condition showed left frontal followed by right frontal-temporal activation, whereas phoneme generation showed a more complex sequence of bilateral temporal and frontal areas. This study combined three approaches (beamforming, clustering and jaw tracking) to demonstrate the temporal progression of neural activations that underlie the motor control of two simple oromotor tasks. These findings have implications for understanding clinical conditions with deficits in articulatory control or motor speech planning.

Keywords: MEG, motor planning, speech production

INTRODUCTION

Speech production is a complex human motor behaviour that requires the seamless integration of many individual components of several motor systems, including the articulatory control of oromotor structures. Non-speech mouth movements also require coordination of these same muscles and, presumably, similar neural pathways. However, the neural control of oromotor processes for both speech and non-speech tasks is not well understood.

Neuroimaging studies have contrasted cortical activation patterns during speech and non-speech oromotor tasks. fMRI studies reported that speech movements were associated with greater activation in left primary motor cortex [31] and right cerebellum [20], whereas non-speech tasks were associated with bilateral, symmetric cortical and cerebellar activation.

Converging evidence from diffusion tensor tractography and fMRI [27] demonstrated that cortical areas involved in vocal motor control are engaged bilaterally during volitional respiratory control but show left-lateralized functional involvement during syllable production. The authors suggest that the motor cortex provides a common bilateral structural network for basic voluntary vocal motor tasks, on top of which a left-lateralized functional network for complex voluntary vocal behaviours is overlaid. Clinical practice builds on the related assumption that speech and non-speech oral movements share neural substrates, so that treatment with non-speech oral motor exercises will facilitate the recovery of speech sound production [21]. However, this assumption has not been fully examined.

Whole-head magnetoencephalography (MEG) offers the ability to study the spatiotemporal dynamics of speech motor production in humans but, thus far, it has been used primarily to study language comprehension (for a review see [23]). A primary challenge in using MEG to study the neural basis of speech production is the presence of artefacts generated by oromotor structures, which can overwhelm the MEG signal. To address this challenge, many groups developed silent, or covert, expressive language tasks. Using dipole analysis and a silent word stem completion task, Dhond and colleagues [8] reported early activation of occipital sensory areas, followed by left-lateralized spread to posterior temporal and then insulo-opercular regions, with homologous right-hemisphere regions becoming involved later to produce bilateral activation. Increasingly complex language tasks have identified neural areas involved in phonological processing [29], imaginary speech articulation [15], action naming [4], object naming [16] and word reading [30]. Investigations of the spatiotemporal distribution of oscillatory desynchronization have identified comparable neural areas involved in syntactic processing during word reading [13] and in verb generation [19]. Furthermore, MEG has shown that observation and imitation of lip movements activate, in sequence, occipital, temporal, parietal, inferior frontal and primary motor areas [18]. While silent tasks have addressed the significant artefact problem, they have, unfortunately, also limited the study of the oromotor planning and motor control involved in language production.

A few studies have used overt language production. The first MEG study of expressive language subtracted pure visual sensory conditions from overt language conditions and successfully identified left inferior frontal regions [24]. With an overt naming task, areas of neural preparation for language production were identified in bilateral inferior frontal and left precentral regions [12]. A recent MEG study examined the intention to speak and found that, in addition to the expected frontal-temporal areas, parietal cortex played a key role in monitoring speech intention [5].

Lesion studies have demonstrated that the left precentral gyrus of the insula is a critical brain area for the coordination of complex articulatory movements [10], and that this area is not implicated when patients generate words with minimal articulatory complexity [1]. MEG has also been used to examine motor cortex involvement. Focussing on changes in the 20 Hz beta frequency band, the predominant oscillatory rhythm of sensorimotor cortex, Salmelin and Sams [25] examined MEG activity accompanying verbal and non-verbal lip and tongue movements. They reported that, while both hemispheres are active, the left motor face area acts as a primary control centre and the right homologous area plays a subordinate role. In another study comparing speech and non-speech mouth movements, Saarinen and colleagues [22] reported that left-hemisphere 20 Hz suppression preceded right-hemisphere suppression for both speech and non-speech productions. Furthermore, consistent with the lesion studies cited above, left-hemisphere 20 Hz suppression in motor cortex increased with word sequence length and motor demands [22].

While the extant MEG literature has primarily used dipole source analysis to identify neural regions, we propose here an analysis strategy employing source reconstruction based on spatial filtering. Specifically, event-related beamforming (ERB) offers a different approach to the analysis of whole-head MEG data: it can identify multiple sources without a priori specification of their number or location [6]. In addition, the ERB approach has been shown to suppress artefacts successfully [7]. Beyond applying ERB to whole-head MEG data, we introduce a novel clustering analysis to reduce the ERB data, and we use an innovative jaw-tracking method to time-lock our epochs. Thus, in the current study, we apply new methods to investigate the sequence of neural activations involved in two simple oromotor activities that are foundational to the motor control of speech production.

MATERIAL AND METHODS

Subjects

Thirteen right-handed adults (6 females; mean age 28 years) participated. None reported a history of neurological, speech-language, or hearing difficulties.

Tasks and Stimuli

Participants performed an oromotor task and a basic speech task that shared the same initial bilabial movement. The former involved opening and closing the mouth; the latter involved saying the phoneme /pa/ aloud. Both movements were practised and made as uniform and stereotyped as possible. Subjects completed 115 trials of each movement in separate conditions. Each trial was cued by the appearance of a small circle on a screen, with a variable inter-stimulus interval of 3500–3900 ms.

Data Acquisition

Magnetoencephalography (MEG)

MEG data were acquired using a whole-head 151-channel CTF system. Data were recorded continuously at a sampling rate of 2500 Hz with a DC–200 Hz bandpass filter. Head movement was kept below 5 mm. A T1-weighted 3D SPGR MRI was obtained at 1.5 T.

Jaw Motion

To track 3-dimensional jaw displacement simultaneously with MEG recordings, we used an ultrasonic tracking system (100Hz sampling rate) with a small light-weight emitting device attached just below the bottom lip at midline (Zebris Medical GmbH, Germany).

Data Analysis

Continuous MEG data were bandpass filtered from 1 to 30 Hz and epoched in two ways: (1) time-locked to the onset of the visual cue (‘cue’), with cue onset as time zero; and (2) time-locked to maximal jaw displacement (‘jaw’), identified as the largest deflection of the Zebris ultrasonic emitter, with maximal displacement as time zero. ‘Cue’ epochs spanned −0.5 to +1.0 s. For the ‘jaw’ epochs, the time of maximal jaw displacement during each movement was marked and data were epoched from −1.0 to +0.5 s.
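The two epoching schemes can be sketched in Python with NumPy. This is a minimal illustration, not the authors' pipeline: it assumes that the Zebris and MEG recordings share a common time zero, that displacement is the Euclidean distance of the emitter from a resting position, and `peak_jaw_time` and `epoch` are hypothetical helper names. Note that at the 100 Hz jaw sampling rate the detected peak falls on a 10 ms grid, so the marked time can differ from the true peak by up to ±5 ms.

```python
import numpy as np

MEG_FS = 2500.0  # MEG sampling rate in Hz (from the Methods)
JAW_FS = 100.0   # Zebris sampling rate in Hz (from the Methods)


def peak_jaw_time(jaw_xyz, rest_xyz, search_start_s, search_stop_s):
    """Time (s) of maximal 3-D jaw displacement inside a search window.

    jaw_xyz: (n_samples, 3) emitter positions sampled at JAW_FS, assumed to
    share time zero with the MEG recording. rest_xyz: (3,) resting position
    used as the displacement reference (an assumption).
    """
    i0 = int(round(search_start_s * JAW_FS))
    i1 = int(round(search_stop_s * JAW_FS))
    displacement = np.linalg.norm(jaw_xyz[i0:i1] - rest_xyz, axis=1)
    return (i0 + int(np.argmax(displacement))) / JAW_FS


def epoch(meg, event_times_s, tmin_s, tmax_s):
    """Cut continuous (n_channels, n_times) data into (n_events, n_channels, n_win)."""
    offset = int(round(tmin_s * MEG_FS))
    n_win = int(round((tmax_s - tmin_s) * MEG_FS)) + 1
    kept = []
    for t in event_times_s:
        start = int(round(t * MEG_FS)) + offset
        if start < 0 or start + n_win > meg.shape[1]:
            continue  # drop events whose window runs off the recording
        kept.append(meg[:, start:start + n_win])
    return np.stack(kept)
```

For example, `epoch(meg, cue_times_s, -0.5, 1.0)` would yield the ‘cue’ epochs and `epoch(meg, jaw_times_s, -1.0, 0.5)` the ‘jaw’ epochs, where `cue_times_s` and `jaw_times_s` are hypothetical arrays of event times in seconds.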

An event-related beamformer (ERB; [6]) algorithm was used to compute time-locked neural activity over the whole head at 5 mm spatial resolution. ERB images were created at 5 ms increments, co-registered to individual MRIs, and normalized to the MNI template using SPM2. Images were averaged across subjects for each condition (mouth open and /pa/) for both stimulus-locked (‘cue’) and movement-locked (‘jaw’) activity. A threshold of 85% of the maximum activation was then applied at each time slice, retaining only activations whose magnitude was within 15% of that maximum.
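For readers unfamiliar with beamforming, a generic scalar LCMV (linearly constrained minimum variance) beamformer applied to trial-averaged data is sketched below, followed by the 85%-of-maximum threshold. This is an assumption-laden stand-in for the ERB of [6], which differs in details such as how source orientation is chosen; the lead fields (with fixed orientation), the regularisation fraction and the white-noise model used for pseudo-Z normalisation are all hypothetical here.

```python
import numpy as np


def lcmv_weights(cov, leadfields, reg=0.05):
    """Scalar LCMV beamformer weights, one column per source location.

    cov: (n_chan, n_chan) data covariance estimated from single-trial epochs.
    leadfields: (n_chan, n_src) forward fields, source orientation assumed known.
    reg: Tikhonov regularisation as a fraction of mean sensor variance (assumed).
    """
    n_chan = cov.shape[0]
    cov_reg = cov + reg * np.trace(cov) / n_chan * np.eye(n_chan)
    cinv_l = np.linalg.solve(cov_reg, leadfields)     # C^-1 l for every source
    gain = np.einsum("cs,cs->s", leadfields, cinv_l)  # l^T C^-1 l per source
    return cinv_l / gain                              # enforces w^T l = 1


def event_related_image(weights, evoked, noise_var=1.0):
    """Project the trial-averaged field through the weights, in pseudo-Z units.

    Normalises by the projected power of white sensor noise with variance
    `noise_var` (a simplifying assumption).
    """
    source = weights.T @ evoked                                   # (n_src, n_times)
    noise_sd = np.sqrt(noise_var * np.sum(weights ** 2, axis=0))  # (n_src,)
    return source / noise_sd[:, None]


def threshold_slice(image_slice, fraction=0.85):
    """Indices of locations at or above `fraction` of this slice's maximum |value|."""
    magnitude = np.abs(image_slice)
    return np.flatnonzero(magnitude >= fraction * magnitude.max())
```

The unit-gain constraint passes activity from each target location unchanged while the inverse covariance suppresses correlated activity from elsewhere, which is why beamformers can attenuate artefacts without specifying the number of sources in advance.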

At each time point between cue onset (‘cue’) and peak movement (‘jaw’), all brain areas with supra-threshold activations were identified. These data were then pooled to produce, for each condition, five-dimensional plots of all supra-threshold activations (three spatial coordinates, latency and magnitude). Single-point activations were removed by manually sorting the data by brain area and number of peaks. In addition, visual inspection revealed that the beamformer localized sources of jaw-movement artefact just inferior to the cortical boundaries in the jaw area, extending posteriorly; the brainstem and cerebellum were therefore excluded from further analyses.
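The pooling and removal of single-point activations were done manually in this study. A hypothetical automated version is sketched below, assuming each supra-threshold voxel has already been given an anatomical label (for example, from an atlas lookup not shown here) and treating an area as a single-point activation if it is supra-threshold at only one time point.

```python
# Structures excluded because jaw-movement artefact leaked into them.
EXCLUDED = {"brainstem", "cerebellum"}


def pool_activations(slices):
    """Pool supra-threshold hits into 5-D rows and drop single-point activations.

    slices: iterable of (latency_ms, hits), where each hit is
    (area_label, x, y, z, magnitude). Returns rows of
    (area_label, x, y, z, latency_ms, magnitude).
    """
    rows = [
        (area, x, y, z, latency, magnitude)
        for latency, hits in slices
        for area, x, y, z, magnitude in hits
        if area not in EXCLUDED
    ]
    # Count the distinct time points at which each area is supra-threshold;
    # areas seen at only one time point are discarded (hypothetical rule).
    latencies_per_area = {}
    for row in rows:
        latencies_per_area.setdefault(row[0], set()).add(row[4])
    return [row for row in rows if len(latencies_per_area[row[0]]) > 1]
```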

Data Reduction and Statistical Analysis

The Talairach coordinates of all brain areas remaining in the raster plot were submitted to an iterative K-means clustering analysis. K-means clustering is an unsupervised learning algorithm that partitions data points into clusters [26,28]. This iterative partitioning minimized the sum, over all clusters, of the within-cluster sums of absolute differences (point-to-centroid city-block distances). To improve the chance that the algorithm converged to a global rather than a local minimum, the Talairach coordinates of the largest activation in each brain area were used as the starting cluster centroids, or seed points. For each cluster identified, a centroid was computed as the component-wise median of the points in that cluster. Data points within 1.5 cluster standard deviations of their centroid were retained; for points located more than 1.5 SD from the centroid, a new seed point was added and the data were resubmitted to the clustering analysis. This was repeated until all data points were located within 1.5 SD of a cluster centroid. This procedure automated the identification of clusters and was sensitive enough to differentiate adjacent clusters.
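The clustering step can be sketched as follows. With city-block distance and component-wise median centroids, the partitioning is what is often called K-medians; the description above matches the 'cityblock' option of MATLAB's `kmeans`, although the software actually used is not stated. The definition of the "cluster standard deviation" is also not given, so this sketch assumes it is the root-mean-square city-block distance of a cluster's members to its centroid, adds one seed (the most distant outlier) per pass, and caps the number of clusters so that the loop always terminates.

```python
import numpy as np


def assign(points, centroids):
    """Label each point with its nearest centroid under city-block distance."""
    d = np.abs(points[:, None, :] - centroids[None, :, :]).sum(axis=2)
    return d.argmin(axis=1)


def kmedians(points, seeds, n_iter=100):
    """K-means with city-block distance and component-wise median centroids."""
    centroids = np.asarray(seeds, float).copy()
    for _ in range(n_iter):
        labels = assign(points, centroids)
        new = np.array([
            np.median(points[labels == k], axis=0) if np.any(labels == k) else centroids[k]
            for k in range(len(centroids))
        ])
        if np.allclose(new, centroids):
            break
        centroids = new
    return assign(points, centroids), centroids


def iterative_clustering(points, seeds, sd_factor=1.5, max_clusters=50):
    """Re-seed and re-cluster until no point lies > sd_factor SDs from its centroid."""
    points = np.asarray(points, float)
    seeds = np.asarray(seeds, float)
    while True:
        labels, centroids = kmedians(points, seeds)
        d = np.abs(points - centroids[labels]).sum(axis=1)  # distance to own centroid
        outlier = np.zeros(len(points), dtype=bool)
        for k in range(len(centroids)):
            members = labels == k
            if members.sum() > 1:
                sd = np.sqrt(np.mean(d[members] ** 2))  # assumed "cluster SD"
                outlier |= members & (d > sd_factor * sd)
        if not outlier.any() or len(centroids) >= max_clusters:
            return labels, centroids
        worst = np.argmax(np.where(outlier, d, -np.inf))  # most distant outlier
        seeds = np.vstack([centroids, points[worst]])
```

Seeding from the largest activation in each area, as in the study, would mean passing those coordinates as `seeds`; the re-seeding loop then only splits clusters that contain distant points.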

The coordinates of the cluster centroids were identified and unwarped back to each individual’s MRI. Time-course data (i.e., a virtual sensor) of the neural activity at each centroid location were computed for each subject, and a grand-averaged source waveform was computed for each centroid location and smoothed by bandpass filtering between 1 and 30 Hz. A z-test (p<0.01) on signal magnitude identified virtual sensor locations with significant activations; virtual sensors with no significant peaks were excluded from further discussion. Peaks in the individual time courses at the remaining locations were then picked manually, and their latencies were submitted to one-way ANOVAs with brain region as a factor. Tukey post-hoc analyses were conducted to identify significantly different latencies. The spatiotemporal time course for each condition was obtained by mapping the significant peaks across brain areas.
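The significance test and peak selection can be illustrated with a short sketch. It assumes the z-score is computed against a pre-stimulus baseline of the same waveform and uses the two-tailed critical value of 2.576 for p<0.01; the baseline and search windows are hypothetical defaults, and the automated peak selection stands in for the manual peak picking used in the study. For ‘jaw’-locked waveforms, a baseline window early in the −1.0 s epoch would be needed instead.

```python
import numpy as np

Z_CRIT = 2.576  # two-tailed critical z for p < 0.01


def significant_peak(waveform, times_ms, baseline=(-500.0, 0.0), window=(0.0, 500.0)):
    """Return (latency_ms, z) of the largest |z| inside `window`, or None.

    z is computed relative to the mean and SD of the waveform within
    `baseline` (an assumed normalisation); the peak is significant if |z| > Z_CRIT.
    """
    waveform = np.asarray(waveform, float)
    times_ms = np.asarray(times_ms, float)
    in_base = (times_ms >= baseline[0]) & (times_ms < baseline[1])
    z = (waveform - waveform[in_base].mean()) / waveform[in_base].std(ddof=1)
    idx = np.flatnonzero((times_ms >= window[0]) & (times_ms <= window[1]))
    best = idx[np.argmax(np.abs(z[idx]))]
    if abs(z[best]) <= Z_CRIT:
        return None
    return float(times_ms[best]), float(z[best])
```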

RESULTS

Grand-average response times between ‘cue’ and ‘jaw’ were 456 ± 26.5 ms (SEM) for mouth open and 531 ± 28.5 ms (SEM) for /pa/. The /pa/ productions were significantly slower than mouth opening (p<0.05).

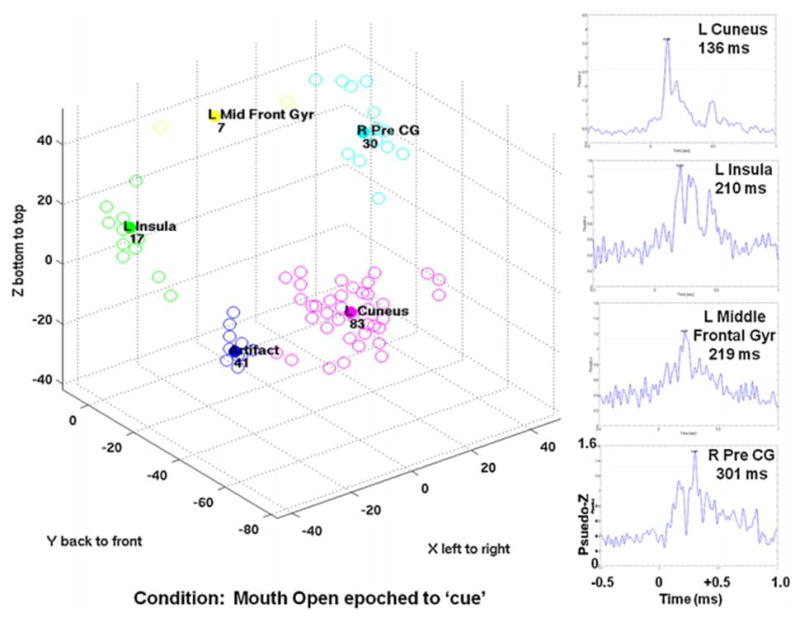

Figure 1 shows the cluster results and thresholded significant virtual sensors for the mouth open condition time-locked to stimulus onset (‘cue’). Cluster analysis identified 5 regions, one of which was the mouth artefact. All 4 remaining brain regions showed significant virtual sensor peak magnitudes (see Table 1). The ANOVA showed a significant effect of location (F(3,44)=222.9; p<0.001), and post-hoc analyses (see Table 2) revealed that only the left insula and left MFG did not differ significantly. Thus, activation progressed from left cuneus to left frontal areas to right pre-central gyrus.

Figure 1.

Results from the mouth open condition, time-locked to stimulus onset (‘cue’). A three-dimensional plot of the cluster analysis results is shown on the bottom left, with all clusters labelled. On the right, virtual sensor time courses reconstructed at each cluster centroid location are shown, with the significant peak of each labelled.

Table 1.

Centroid locations and latencies for peaks above 95th percentile for each of the four conditions.

| Mouth open, ‘cue’ | Latency (ms) | Mouth open, ‘jaw’ | Latency (ms) | /pa/, ‘cue’ | Latency (ms) | /pa/, ‘jaw’ | Latency (ms) |
|---|---|---|---|---|---|---|---|
| L cuneus | 136 | L IFG | −74 | L cuneus | 133 | L uncus | −120 |
| L insula | 210 | L Pre CG | −70 | R insula | 176 | L STG | −118 |
| L MFG | 219 | R STG | −54 | L MFG | 206 | R IFG | −115 |
| R Pre CG | 301 | R Post CG | −54 | R IFG | 256 | R Pre CG | −110 |
| | | R Pre CG | −53 | R Pre CG | 262 | R STG | −96 |
| | | L IFG | −37 | | | L IFG | −90 |
| | | L Pre CG | −24 | | | R IFG | .64 |

L, left; R, right; MFG, middle frontal gyrus; IFG, inferior frontal gyrus; STG, superior temporal gyrus; Pre CG, pre-central gyrus; Post CG, post-central gyrus.

Table 2.

Results from post hoc analyses performed on locations identified as significant by ANOVA.

Mouth open, ‘cue’

| | L cuneus | L insula | L MFG |
|---|---|---|---|
| L insula | p < 0.005 | | |
| L MFG | p < 0.005 | ns | |
| R Pre CG | p < 0.005 | p < 0.005 | p < 0.005 |

Mouth open, ‘jaw’

| | L IFG | L Pre CG | R STG | R Post CG |
|---|---|---|---|---|
| L Pre CG | ns | | | |
| R STG | p < 0.005 | ns | | |
| R Post CG | p < 0.005 | ns | ns | |
| R Pre CG | p < 0.005 | ns | ns | ns |

/pa/, ‘cue’

| | L cuneus | R insula | L MFG | R IFG |
|---|---|---|---|---|
| R insula | p < 0.005 | | | |
| L MFG | p < 0.005 | ns | | |
| R IFG | p < 0.005 | p < 0.005 | p < 0.005 | |
| R Pre CG | p < 0.005 | p < 0.005 | p < 0.005 | ns |

/pa/, ‘jaw’

| | L uncus | L STG | R IFG | R Pre CG | R STG |
|---|---|---|---|---|---|
| L STG | ns | | | | |
| R IFG | ns | ns | | | |
| R Pre CG | ns | ns | ns | | |
| R STG | p < 0.05 | ns | ns | ns | |
| L IFG | p < 0.05 | p < 0.05 | p < 0.05 | ns | ns |

L, left; R, right; MFG, middle frontal gyrus; IFG, inferior frontal gyrus; STG, superior temporal gyrus; Pre CG, pre-central gyrus; Post CG, post-central gyrus.

For generation of the phoneme /pa/ time-locked to stimulus onset (‘cue’), cluster analysis identified 8 clusters, but only 5 virtual sensor magnitudes were significant (p<0.01; see Table 1). In addition to the areas seen with mouth open, /pa/ also involved the right inferior frontal gyrus (F(4,55)=4.5; p<0.001; see Table 2). Again, activations proceeded from posterior to anterior along a ventral pathway, but bilateral frontal areas were included.

When time-locked to maximal jaw deflection (‘jaw’), the neural areas of interest are active before maximal deflection, which is defined as time zero; their latencies are therefore negative. For mouth open time-locked to ‘jaw’, cluster analysis identified 9 clusters, with 5 virtual sensor peaks reaching significance for magnitude (p<0.01; see Table 1). Although the ANOVA was significant (F(4,55)=4.17; p<0.005), post-hoc testing revealed fewer differences (see Table 2). Overall, activation progressed from left frontal-motor to right temporal-motor areas.

For /pa/ production time-locked to maximal jaw deflection (‘jaw’), cluster analysis identified 9 clusters, although only 6 peaks had significant magnitude (p<0.01; see Table 1). The ANOVA showed a main effect (F(5,66)=2.69; p<0.03), and post-hoc analyses revealed a more complicated pattern (see Table 2). Activations progressed from left temporal / right frontal to right temporal / left frontal areas. In both /pa/ conditions, the right inferior frontal gyrus was involved.
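The F statistics reported above come from one-way ANOVAs, whose degrees of freedom are k − 1 and N − k for k brain regions and N peak latencies in total; for instance, F(3,44) corresponds to 4 regions and 48 latencies. A minimal NumPy sketch of the F computation follows. It is illustrative only: p-values and Tukey post-hoc comparisons would in practice come from a statistics package (for example, `scipy.stats.f_oneway` and `scipy.stats.tukey_hsd`), which are not used here.

```python
import numpy as np


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of 1-D latency samples."""
    groups = [np.asarray(g, float) for g in groups]
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = np.concatenate(groups).mean()
    ss_between = sum(len(g) * (g.mean() - grand_mean) ** 2 for g in groups)
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```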

DISCUSSION

Using MEG spatial filtering techniques, clustering analysis and a jaw-tracking device, we identified neural patterns of activation for two oromotor tasks that were similar in initial motor movement but differed in the goal of motor output (speech versus non-speech). Phoneme production appeared to recruit additional brain areas involved in phoneme selection and the articulatory control of speech, while probably relying on similar sensorimotor control areas. By contrasting stimulus- and response-locked averages of brain activity, we could examine both neural areas that responded to the stimulus to initiate the response and motor control areas active during response production. These findings raise a number of points for discussion.

A meta-analysis [14] of behavioural and ERP word production studies compiled timing information and identified the following sequence of activations: visual recognition involving occipital areas and object conceptualization in ventrotemporal areas from 0–175 ms; access to the mental lexicon, associated with left middle temporal gyrus, from 175–250 ms; phonological code retrieval involving left posterior middle and superior temporal gyri (Wernicke’s area) at 250–330 ms; and finally, oral output preparation engaging left inferior frontal gyrus (Broca’s area) and bilateral sensorimotor areas after 330 ms. Another MEG study [12] found faster timing: primary sensory (auditory or visual) cortex between 75 and 130 ms, association cortices (inferior and superior temporal gyri) between 150 and 240 ms, and bilateral inferior frontal and left precentral regions at 220 ms. Our results showed the same sequence of activations with comparable timing, from sensory to association and planning to motor areas. In both tasks, motor areas were activated in the 250–300 ms range. Given mean latencies of maximal jaw deflection of 456 ms and 531 ms for mouth open and /pa/, respectively, precentral gyrus activation at 250–300 ms preceded peak jaw displacement by roughly 150–270 ms, consistent with motor preparation.

Interestingly, the slight increase in task demands for phoneme production, compared with the simpler mouth open task, recruited additional right frontal areas. Although we expected greater left inferior frontal involvement in an expressive language task, right homologous activation has been reported in other tasks involving articulatory control [25]. Indeed, these data concur with an MEG study of insular cortex involvement during imaginary speech articulation [15], which found right-dominant activation when verbal responses were easier and thus more ‘automatic’. The simple phoneme /pa/ used in our study may likewise have been highly automated and therefore more right-hemisphere dominant. Conversely, in the averages time-locked to jaw displacement, we saw significant left inferior frontal activation. When time-locked to jaw displacement, the activated neural areas were most likely related to motor planning and motor control systems [14,17]. These include bilateral superior temporal gyri (BA 38 and 22) and bilateral pre-motor areas (BA 6). In addition, bilateral inferior frontal gyri (BA 9) are part of the dorsolateral prefrontal cortices and are involved in maintaining attention to task. Although these motor areas were identified in the jaw-locked epochs, fewer neural areas were generally identified this way than with stimulus onset as the epoch trigger. This is consistent with findings for 20 Hz modulation during speech and non-speech mouth movements [22]: those authors compared visual cue onset with EMG onset as triggers and found that stimulus-locked analyses produced more reliable activations. This may reflect difficulty in reproducing the exact EMG onset across trials and, in the current experiment, difficulty in marking the exact maximal jaw displacement across trials.
The fast and tightly linked progression of activations in the motor system suggests that even millisecond variability in the manual marking of triggers might be sufficient to obscure the neural response through latency jitter. Further, visual inspection of the virtual sensor data suggests a cascade of activations in these sensorimotor and motor control areas that remain active after maximal jaw displacement and are probably related to feedback and response monitoring functions [32]. This is more pronounced in the /pa/ condition, perhaps because of the slightly more complex nature of the task.
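The latency-jitter argument can be illustrated with a toy simulation: averaging identical, brief responses that are shifted by small trial-to-trial latency errors lowers and broadens the peak of the average. The Gaussian response shape, its 10 ms width and the jitter ranges below are illustrative assumptions, not values measured in this study.

```python
import numpy as np


def averaged_peak(jitters_ms, width_ms=10.0, fs=2500.0):
    """Peak of the average of unit Gaussian responses shifted by the given latencies.

    Each trial is a Gaussian of standard deviation `width_ms` centred at its
    jitter; the function returns the maximum of the across-trial average.
    """
    t = np.arange(-200.0, 200.0, 1000.0 / fs)  # time axis in ms at the MEG rate
    shifts = np.asarray(jitters_ms, float)[:, None]
    trials = np.exp(-((t[None, :] - shifts) ** 2) / (2 * width_ms ** 2))
    return trials.mean(axis=0).max()
```

In this toy model, jitter spread evenly over ±20 ms (`np.linspace(-20, 20, 41)`) reduces the averaged peak to roughly 60% of the single-trial amplitude, whereas ±5 ms (comparable to the quantisation of a 100 Hz jaw signal) costs only about 5%.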

It is important to note that right and left frontal regions were activated in both the speech and non-speech tasks in our study; we found no clear left-hemisphere dominance for the speech task. This is consistent with more recent suggestions in the literature that left-greater-than-right asymmetries may depend on stimulation paradigms and task parameters [9]. We did observe almost exclusively right pre-central gyrus activation. Reports of left-hemisphere motor cortex suppression for verbal tasks, compared with bilateral suppression for non-verbal tasks [22,25], may explain the right pre-central gyrus activations seen in our study. Alternatively, Bozic and colleagues [2] propose that the general perceptual and cognitive aspects of speech are controlled by a bilateral distributed network, whereas the specific grammatical aspects of language, unique to humans, are subserved by the left hemisphere.

Finally, our findings suggest that both ventral and dorsal pathways may be recruited for simple speech tasks. While it is agreed that both pathways connect language centres in the brain, their exact contributions remain a matter of debate [11]; the dorsal pathway is thought to support more complex syntactic functions, whereas the ventral pathway supports comprehension (see [3]). In the current study, the spatiotemporal sequence following stimulus onset involved the ventral pathway, while the sequence preceding maximal jaw displacement involved the dorsal pathway. The fast transitions between these areas cannot be captured by traditional methods such as PET and fMRI, nor by ERPs, owing to their inability to adequately resolve dorsal versus ventral activations or to dissociate them from the auditory response.

In summary, by applying event-related beamforming to MEG data time-locked to stimulus onset, we identified the time course of neural activations involved in stimulus processing and response selection. By back-averaging to jaw displacement, we identified movement-related neural activations for two oromotor tasks that underlie language production. Much of the extant neuroimaging literature has focused on delineating the neural activations involved in language processing. The current study takes this a step further by examining the neural processes underlying the motor control of speech and non-speech movements, which may have important implications for the study of language disorders (e.g., dyspraxia) that may involve underlying deficits in articulatory control and in the motor programming of speech production.

Acknowledgments

This research was funded by a Canadian Institutes of Health Research operating grant (CIHR MOP-89961).

References

- 1. Baldo JV, Wilkins DP, Ogar J, Willock S, Dronkers NF. Role of the precentral gyrus of the insula in complex articulation. Cortex. 2011;47:800–807. doi: 10.1016/j.cortex.2010.07.001.

- 2. Bozic M, Tyler LK, Ives DT, Randall B, Marslen-Wilson W. Bihemispheric foundations for human speech comprehension. PNAS. 2010;107(40):17439–17444. doi: 10.1073/pnas.1000531107.

- 3. Brauer J, Anwander A, Friederici AD. Neuroanatomical prerequisites for language functions in the maturing brain. Cereb Cortex. 2011;21:459–466. doi: 10.1093/cercor/bhq108.

- 4. Breier JI, Papanicolaou AC. Spatiotemporal patterns of brain activation during an action naming task using magnetoencephalography. J Clin Neurophysiol. 2008;25:7–12. doi: 10.1097/WNP.0b013e318163ccd5.

- 5. Carota F, Posada A, Harquel S, Delpuech C, Bertrand O, Sirigu A. Neural dynamics of the intention to speak. Cereb Cortex. 2010;20:1891–1897. doi: 10.1093/cercor/bhp255.

- 6. Cheyne D, Bakhtazad L, Gaetz W. Spatiotemporal mapping of cortical activity accompanying voluntary movements using an event-related beamforming approach. Hum Brain Mapp. 2006;27:213–229. doi: 10.1002/hbm.20178.

- 7. Cheyne D, Bostan AC, Gaetz W, Pang EW. Event-related beamforming: a robust method for presurgical functional mapping using MEG. Clin Neurophysiol. 2007;118:1691–1704. doi: 10.1016/j.clinph.2007.05.064.

- 8. Dhond RP, Buckner RL, Dale AM, Marinkovic K, Halgren E. Spatiotemporal maps of brain activity underlying word generation and their modification during repetition priming. J Neurosci. 2001;21:3564–3571. doi: 10.1523/JNEUROSCI.21-10-03564.2001.

- 9. Démonet J-F, Thierry G, Cardebat D. Renewal of the neurophysiology of language: functional neuroimaging. Physiol Rev. 2005;85:49–95. doi: 10.1152/physrev.00049.2003.

- 10. Dronkers NF. A new brain region for coordinating speech articulation. Nature. 1996;384:159–161. doi: 10.1038/384159a0.

- 11. Friederici AD. Pathways to language: fibre tracts in the human brain. Trends Cogn Sci. 2009;13:175–181. doi: 10.1016/j.tics.2009.01.001.

- 12. Herdman AT, Pang EW, Ressel V, Gaetz W, Cheyne D. Task-related modulation of early cortical responses during language production: an event-related synthetic aperture magnetometry study. Cereb Cortex. 2007;17:2536–2543. doi: 10.1093/cercor/bhl159.

- 13. Ihara A, Hirata M, Sakihara K, Izumi H, Takahashi Y, Kono K, Imaoka H, Osaki Y, Kato A, Yoshimine T, Yorifuji S. Gamma-band desynchronization in language areas reflects syntactic process of words. Neurosci Lett. 2003;339:135–138. doi: 10.1016/s0304-3940(03)00005-3.

- 14. Indefrey P, Levelt WJM. The spatial and temporal signatures of word production components. Cognition. 2004;92:101–144. doi: 10.1016/j.cognition.2002.06.001.

- 15. Kato Y, Muramatsu T, Kato M, Shintani M, Kashima H. Activation of right insular cortex during imaginary speech articulation. NeuroReport. 2007;18(5):505–509. doi: 10.1097/WNR.0b013e3280586862.

- 16. Liljeström M, Hultén A, Parkkonen L, Salmelin R. Comparing MEG and fMRI views to naming actions and objects. Hum Brain Mapp. 2009;30:1845–1856. doi: 10.1002/hbm.20785.

- 17. McArdle JJ, Mari Z, Pursley RH, Schulz GM, Braun AR. Electrophysiological evidence of functional integration between the language and motor systems in the brain: a study of the speech bereitschaftspotential. Clin Neurophysiol. 2009;120:275–284. doi: 10.1016/j.clinph.2008.10.159.

- 18. Nishitani N, Hari R. Viewing lip forms: cortical dynamics. Neuron. 2002;36:1211–1220. doi: 10.1016/s0896-6273(02)01089-9.

- 19. Pang EW, Wang F, Malone M, Kadis DS, Donner EJ. Localization of Broca’s area using verb generation tasks in the MEG: validation against fMRI. Neurosci Lett. 2011;490:215–219. doi: 10.1016/j.neulet.2010.12.055.

- 20. Riecker A, Ackermann H, Wildgruber D, Dogil G, Grodd W. Opposite hemispheric lateralization effects during speaking and singing at motor cortex, insula and cerebellum. NeuroReport. 2000;11:1997–2000. doi: 10.1097/00001756-200006260-00038.

- 21. Rosenfeld Johnson S. Oral-motor exercises for speech clarity. Talk Tools; Tucson, Arizona: 1999.

- 22. Saarinen T, Laaksonen H, Parviainen T, Salmelin R. Motor cortex dynamics in visuomotor production of speech and non-speech mouth movements. Cereb Cortex. 2006;16:212–222. doi: 10.1093/cercor/bhi099.

- 23. Salmelin R. Clinical neurophysiology of language: the MEG approach. Clin Neurophysiol. 2007;118:237–254. doi: 10.1016/j.clinph.2006.07.316.

- 24. Salmelin R, Hari R, Lounasmaa OV, Sams M. Dynamics of brain activation during picture naming. Nature. 1994;368:463–465. doi: 10.1038/368463a0.

- 25. Salmelin R, Sams M. Motor cortex involvement during verbal versus non-verbal lip and tongue movements. Hum Brain Mapp. 2002;16:81–91. doi: 10.1002/hbm.10031.

- 26. Seber GAF. Multivariate Observations. John Wiley & Sons, Inc; Hoboken, New Jersey: 1984.

- 27. Simonyan K, Ostuni J, Ludlow CL, Horwitz B. Functional but not structural networks of the human laryngeal motor cortex show left hemispheric lateralization during syllable but not breathing production. J Neurosci. 2009;29:14912–14923. doi: 10.1523/JNEUROSCI.4897-09.2009.

- 28. Späth H. Cluster Dissection and Analysis: Theory, FORTRAN Programs, Examples. Goldschmidt J, translator. Halsted Press; New York, New York: 1985.

- 29. Vihla M, Laine M, Salmelin R. Cortical dynamics of visual/semantic vs. phonological analysis in picture confrontation. NeuroImage. 2006;33:732–738. doi: 10.1016/j.neuroimage.2006.06.040.

- 30. Wheat KL, Cornelissen PL, Frost SJ, Hansen PC. During visual word recognition, phonology is accessed within 100 ms and may be mediated by a speech production code: evidence from magnetoencephalography. J Neurosci. 2010;30:5229–5233. doi: 10.1523/JNEUROSCI.4448-09.2010.

- 31. Wildgruber D, Ackermann H, Klose U, Kardatzki B, Grodd W. Functional lateralization of speech production in the primary motor cortex: a fMRI study. NeuroReport. 1996;7:2791–2795. doi: 10.1097/00001756-199611040-00077.

- 32. Wilson SM, Saygin AP, Sereno MI, Iacoboni M. Listening to speech activates motor areas involved in speech production. Nat Neurosci. 2004;7(7):701–702. doi: 10.1038/nn1263.