Abstract

Survey institutes have recently changed their measurement of generalized trust from the standard dichotomous scale to an 11-point scale. Additionally, numerous survey institutes use different question wordings: where most rely on the standard, fully balanced question (asking if “most people can be trusted or that you need to be very careful in dealing with people”), some use minimally balanced questions, asking only if it is “possible to trust people.” By using two survey-embedded experiments, one with 12,009 self-selected respondents and the other with a probability sample of 2,947 respondents, this study evaluates the generalized trust question in terms of question wording and number of scale points used. Results show that, contrary to the more commonly used standard question format (used, for example, by the American National Election Studies and the General Social Survey), generalized trust is best measured with a minimally balanced question wording accompanied by either a seven- or an 11-point scale.

Introduction

Generalized trust is often seen as one of the most important societal factors that makes democracy work (Putnam 1993; Uslaner 2002; Nannestad 2008). The importance of how to measure this concept is therefore evident (Glaeser et al. 2000; Reeskens and Hooghe 2008; Dinesen 2011). In surveys, generalized trust has often been measured using the standard question “Generally speaking, would you say that most people can be trusted or that you need to be very careful in dealing with people?” The question is accompanied by a dichotomous scale with the two alternatives “You can’t be too careful” and “Most people can be trusted.” This measurement is used, for example, by the American General Social Survey (GSS), the World Values Survey institute (WVS), and the American National Election Studies (ANES).

In contrast, in the discussion on how to measure attitudes and values in surveys, something of a consensus has emerged that such concepts should be measured with several-point scales (Alwin and Krosnick 1991; Krosnick and Fabrigar 1997). Longer scales, unlike dichotomous ones, allow respondents’ attitudes, which are believed to range latently along continuums, to be assessed more accurately. As a result, many survey institutes have changed their generalized trust measure from a dichotomous to a several-point scale.

In addition to the discussion of what number of scale points to use, the wording of the generalized trust question also differs between survey institutes, each using a slightly different wording. While some use a fully balanced question wording, stating both viewpoints “need to be very careful” and “most people can be trusted,” others use versions closer to a minimally balanced question wording, mentioning only a single viewpoint and instead balancing the question by adding “can be trusted” or “cannot be trusted” to the accompanying scale.

In response to these changes and differences, Uslaner (2012a) tries to defend the usage of the standard generalized trust question (i.e., the question used in the GSS, ANES, and the WVS) accompanied by a dichotomous scale. He argues that longer scales invite respondents to engage in satisficing behavior instead of answering the question attentively. This defense does, however, stand in contrast to what some of the prevailing survey methodology theories have previously recommended (Schuman and Presser 1981; Krosnick and Fabrigar 1997).

To gain additional insight in this area, this study applies survey methodology theories to determine how to best measure generalized trust. For this purpose, we use two survey-embedded experiments, which compare the different wordings and the different number of scale points and assess the differences in terms of the correlations with other concepts related to generalized trust; that is, testing concurrent validity (see Krosnick and Fabrigar 1997). By employing a randomized experimental design, this article (in contrast to previous studies; see Uslaner [2012a, 2012b]) is able to accurately assess the differences between various wordings of the generalized trust question and determine whether the dichotomous or the various several-point scales perform better.

The article first presents a brief overview of the prevailing theories on how to measure attitudes in survey research. This is followed by a discussion on why generalized trust might be an exception to other attitudes, which is then followed by a description of the data and the experimental design. Finally, results from the experiments are presented, followed by concluding remarks.

A Theory on Respondents’ Responses: Optimizers versus Satisficers

When a respondent answers an attitude question, the researcher generally wants the answer to be as representative as possible of the attitude being measured. According to the theory on optimizers versus satisficers (Tourangeau 1984; Krosnick 1991, 1999), the respondent has to go through four different cognitive processes. First, the respondent has to interpret and understand the question. Second, the respondent has to search his or her memory for relevant information. Third, the retrieved information must be integrated into a single judgment. Finally, this judgment must be translated into one of the responses provided in the questionnaire (Krosnick 1999). All four processes are cognitively demanding, but when they are thoroughly followed, the latent attitude will be correctly assessed and the respondent is said to be optimizing the response (Krosnick 1999). On the other hand, when the respondent does not put enough effort into these processes, the respondent might give a suboptimal answer or even a random one. If so, the respondent is said to be satisficing (Krosnick 1999, 548). According to Krosnick (1999, 548), satisficing is more likely to occur “(a) the greater the task difficulty, (b) the lower the respondent’s ability, and (c) the lower the respondent’s motivation to optimize.” With respect to the evaluation of an individual question, the researcher’s main obligation is to minimize its task difficulty in order to enable the respondent to optimize.

Reducing Task Difficulty

A number of factors can decrease task difficulty. First, the use of fewer and easier-to-understand words can reduce the difficulty in understanding the intent of the question (e.g., Krosnick 1991). A study by Shaeffer et al. (2005) compared a fully balanced question wording of an attitude measurement (mentioning both endpoints and perspectives in the question) to a minimally balanced question wording of that same attitude (mentioning only one perspective but balancing it by stating both competing viewpoints; for example, “should or should not”). They found that minimally balanced wording produces measurements equally valid to those with the fully balanced wording (Shaeffer et al. 2005). A minimally balanced wording thus reduces the space, words, and time a question requires, compared to a fully balanced wording, without an apparent loss of validity.

In our study, we analyze two different generalized trust question wordings used by several survey institutes. Specifically, we use the wording from the standard trust question (most notably used in the GSS, WVS, and ANES) and the wording used by the Swedish Society, Opinion, Media Institute (SOM). The standard trust question wording (“Generally speaking, would you say that most people can be trusted or that you need to be very careful in dealing with people?”) can be said to come closer to the fully balanced type, naming both “people can be trusted” and “need to be very careful in dealing with people.” The SOM measures generalized trust with a question that comes closer to being minimally balanced, asking, “In your opinion, to what extent is it generally possible to trust people?” and balancing it instead by naming both endpoints of the scale to range from (0) “people cannot generally be trusted” to (10) “people can generally be trusted.” Throughout this article, the two different variations of the wordings will be referred to as the “fully balanced” and the “minimally balanced” question wording, respectively. If the results from Shaeffer et al. (2005) extend to the generalized trust question, the minimally balanced wording should perform as well as the fully balanced wording, but with the added benefit of being shorter and thus possibly lowering the task difficulty.

Additionally, both the number and the wording of the scale points have been shown to be correlated with task difficulty. In this respect, Krosnick and Fabrigar (1997) claim that introducing more scale points will help respondents translate their attitudes into answers. Since attitudes are believed to range along a continuum instead of existing only as a “yes or no” in the minds of the respondents, providing a categorical response scale with multiple alternatives will enable respondents to more accurately represent their true attitude (Krosnick and Fabrigar 1997). If respondents are provided only a limited number of responses, they might not be able to correctly represent their attitude; thus, the cognitive difficulty and the frustration with the question might increase. However, increasing the number of scale points ad infinitum might mean even greater task difficulty, since the respondents might find it troublesome to interpret and differentiate between the points on a detailed scale. Krosnick and Fabrigar (1997, 146) concluded that the optimum number of response categories seems to be seven. When evaluating the generalized trust questions with the different number of scale points, we should, if Krosnick and Fabrigar (1997) are correct in their general advice, end up with the most valid measurement when using the seven-point scale and the least valid measurement when using the dichotomous scale.

Is Generalized Trust an Exception?

The questions of whether generalized trust can be measured by survey questions at all, and of how comparable the trust survey questions are across groups and countries, have been discussed quite extensively (Glaeser et al. 2000; Reeskens and Hooghe 2008). However, the generalized trust survey questions have shown strong test-retest stability at the aggregate level and high correlations with factors theoretically related to generalized trust, and they seem to measure the same latent constructs across groups and countries (Nannestad 2008, 418–19; Dinesen 2011). Hence, the survey questions have been accepted as actually measuring some notion of the theoretical concept of generalized trust. This article, therefore, focuses on the more specific questions of what wordings and number of scale points to use when measuring generalized trust in surveys.

Also, accepting that generalized trust can be validly measured through surveys, Uslaner (2012a) contradicts the advice from Krosnick and Fabrigar (1997) and Reeskens and Hooghe (2008) by defending the dichotomous generalized trust scale. Although he agrees that generalized trust might not be a dichotomous attitude, Uslaner still claims that one should use the fully balanced question wording with a dichotomous scale, since providing respondents with a midpoint (i.e., an odd number of scale points) could make them flee toward the middle of the scale. This is in line with Krosnick’s (1991, 1999) argument that respondents, when satisficing, search for cues within a question that make it easier to answer, and one such cue could be an easily defended midpoint or a neither/nor alternative. However, empirically, results regarding a midpoint have been both negative and positive (Schuman and Presser 1981; Alwin and Krosnick 1991; Andrews 1984; Krosnick and Fabrigar 1997). If Uslaner’s (2012a) argument is true, adding more scale points will just make the trust question harder to answer and will decrease the validity of the measurement.

Nevertheless, Uslaner’s (2012a, 2012b) studies suffer from at least two general problems. First, one of his studies, which examines the Romanian Citizenship, Involvement, Democracy (CID) data, does not contain any randomization process (Uslaner 2012a). Instead, all respondents received the dichotomous scale version, and later in the survey they all received the 11-point scale version. Second, in the ANES 2006 pilot study, Uslaner (2012b) compared the fully balanced standard trust question wording accompanied by a dichotomous scale with a differently worded generalized trust question accompanied by a five-point scale. With such a design, it is impossible to differentiate between the effects of the wording and the scale. In contrast to this, our article uses a 2 × 3 full factorial experimental design, comparing two different wordings and three different scales to evaluate whether survey methodology theories also apply to the generalized trust question. 1

Hypotheses

Stemming from the survey methodology theories, we pose our hypotheses concerning the generalized trust question:

H1: The minimally balanced question wording should produce an equally valid measurement as the fully balanced question wording.

H2: Including more scale points should increase the validity of the measurement; hence, both the seven-point and the 11-point scales should outperform the dichotomous scale.

Design and Data

To improve our understanding of how to best measure generalized trust, we use two studies conducted by the Laboratory of Opinion Research (LORE) at the University of Gothenburg, Sweden, during the fall of 2012 (see Martinsson, Andreasson, and Pettersson 2013).

STUDY 1—SELF-SELECTED SAMPLE

Study 1 is a survey-embedded experiment on a web-based panel consisting of 12,009 self-selected Swedish citizens. This panel has existed since 2010 and focuses on political attitudes. In the fifth wave of the panel, we included an experiment, randomizing the respondents into six groups: three groups received the fully balanced question wording, and three groups received the minimally balanced wording. Each of the fully balanced and minimally balanced wording groups was also randomized to receive a dichotomous scale, a seven-point scale, or an 11-point scale. All scales, including the dichotomous, had labeled endpoints based on their respective question wordings (see table 1).

Table 1.

Experimental Groups with Exact Question Wording, Accompanied Number of Scale Points, and Labeled Endpoints

| Group | Question wording | Scale points | First endpoint label | Second endpoint label |
|---|---|---|---|---|
| Group 1: Minimally Balanced | “In your opinion, to what extent is it generally possible to trust people?” | Dichotomous | People cannot generally be trusted | People can generally be trusted |
| Group 2: Fully Balanced (Original Scale) | “Generally speaking, would you say that most people can be trusted or that you need to be very careful in dealing with people?” | Dichotomous | Most people can be trusted | Need to be very careful in dealing with people |
| Group 3: Minimally Balanced | “In your opinion, to what extent is it generally possible to trust people?” | 1 to 7 (seven points) | People cannot generally be trusted | People can generally be trusted |
| Group 4: Fully Balanced | “Generally speaking, would you say that most people can be trusted or that you need to be very careful in dealing with people?” | 1 to 7 (seven points) | Need to be very careful in dealing with people | Most people can be trusted |
| Group 5: Minimally Balanced (Original Scale) | “In your opinion, to what extent is it generally possible to trust people?” | 0 to 10 (11 points) | People cannot generally be trusted | People can generally be trusted |
| Group 6: Fully Balanced | “Generally speaking, would you say that most people can be trusted or that you need to be very careful in dealing with people?” | 0 to 10 (11 points) | Need to be very careful in dealing with people | Most people can be trusted |

Note.—Study 1 includes all groups, and study 2 includes groups 1 and 5.
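The 2 × 3 assignment described for study 1 can be sketched in a few lines. The article does not say how LORE implemented the randomization, so the simple (unrestricted) random assignment below, the `assign` helper, and the seed are assumptions made purely for illustration.

```python
import random
from collections import Counter
from itertools import product

WORDINGS = ("minimally balanced", "fully balanced")
SCALE_POINTS = (2, 7, 11)

# The six cells of the 2 x 3 design. The order is arbitrary and does not
# follow the group numbering used in table 1.
CELLS = list(product(WORDINGS, SCALE_POINTS))


def assign(respondent_ids, seed=0):
    """Hypothetical helper: give each respondent one cell, uniformly at random."""
    rng = random.Random(seed)
    return {rid: rng.choice(CELLS) for rid in respondent_ids}


# 12,009 is the net sample size reported for study 1.
assignment = assign(range(12009))
cell_sizes = Counter(assignment.values())
for cell, size in sorted(cell_sizes.items()):
    print(cell, size)
```

With simple random assignment the cell sizes differ slightly by chance; a blocked design would equalize them, but nothing in the text indicates which approach was used.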

STUDY 2—PROBABILITY SAMPLE

Study 2 is a web-based, survey-embedded experiment similar to study 1, but is based on a probability sample of 2,947 Swedish citizens who were receiving their first survey. The survey included an experiment randomizing the sample into two different groups: both groups received the minimally balanced wording, but with either a dichotomous scale or an 11-point scale. The probability sample in study 2 will increase the external validity of the findings in study 1 as well as rectify the potential problem of the participants in study 1 becoming trained panelists by receiving their fifth survey. 2

As a result of these two studies and designs, and in contrast to previous research on how to measure generalized trust, we are able to assess how the wordings affect the validity of the measurements and which number of scale points produces the most valid measurement. Additional information about the samples, response rates, and effectiveness of the random assignment can be found in appendix A.

Evaluating the Generalized Trust Question: Concurrent Validity

Following the evaluation criteria of Shaeffer et al. (2005), this article evaluates the generalized trust question by using concurrent validity. Concurrent validity (sometimes referred to as predictive or correlational validity) is the degree to which a given measure can predict other variables it empirically and theoretically should be related to (Krosnick and Fabrigar 1997; see also Ciuk and Jacoby 2014). In other words, if one type of question wording or number of scale points shows stronger relationships with factors theoretically or logically related to generalized trust, it would suggest that that particular wording or scale is performing better. If a specific question wording is less suited for measuring generalized trust, be it because the wording is harder to interpret or cognitively more difficult to answer, the question will perform less effectively, hence decreasing the relationships between the question and the other factors. The same goes for evaluating the number of scale points, since a more valid scale would produce stronger relationships (Krosnick and Fabrigar 1997; Shaeffer et al. 2005; Dinesen 2011).

Embedded in the upcoming analyses is the assumption that a stronger relationship between the target measure (generalized trust) and the concurrent validity measures is evidence of a more valid approach to measuring the generalized trust concept. A potential problem that might occur with this specific way of analyzing validity would be if the true relationship/correlation between generalized trust and the concurrent validity measures were to actually be weak (e.g., r = 0.15). In such a case, strong relationships would actually correspond to lower validity. However, since we have chosen only concurrent validity measures that should at least be moderately correlated with generalized trust (between 0.4 and 0.6), and since the strongest correlation and marginal effects found in the upcoming analyses do not exceed such a value, we do not see this as a real problem in our case (see online appendix C, table 2).
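The logic behind this criterion can be illustrated with a small simulation. The sketch below is not based on the study's data: it assumes a normally distributed latent attitude, a criterion variable correlated with it at about 0.5, and equal-width response categories, all of which are illustrative assumptions. It only shows why a coarser scale would be expected to yield weaker observed correlations.

```python
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def coarsen(value, points, lo=-3.0, hi=3.0):
    """Map a latent value onto `points` equal-width categories, scaled to 0-1."""
    clipped = min(max(value, lo), hi)
    index = min(int((clipped - lo) / (hi - lo) * points), points - 1)
    return index / (points - 1)


def simulate(n=5000, true_r=0.5, seed=1):
    """Correlate coarsened versions of a latent attitude with a criterion."""
    rng = random.Random(seed)
    latent = [rng.gauss(0.0, 1.0) for _ in range(n)]
    noise_sd = (1.0 - true_r ** 2) ** 0.5
    criterion = [true_r * t + noise_sd * rng.gauss(0.0, 1.0) for t in latent]
    return {points: pearson([coarsen(t, points) for t in latent], criterion)
            for points in (2, 7, 11)}


correlations = simulate()
for points, r in correlations.items():
    print(f"{points:>2}-point scale: r = {r:.3f}")
```

Under these assumptions the dichotomized measure correlates less strongly with the criterion than the seven- and 11-point versions, because splitting a continuum into two categories discards information about the latent attitude.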

The variables that have previously been found to be both theoretically and empirically related to generalized trust, and which are used to assess the concurrent validity in this article, are trust in institutions (Rothstein and Stolle 2008), satisfaction with democracy (Zmerli and Newton 2008), trust in politicians (Zmerli and Newton 2008), life satisfaction (Sønderskov 2011), and education (Huang, van den Brink, and Groot 2011). The exact wording of these questions is presented in appendix B. 3

Results: Question Wordings

We start the analysis by evaluating the two different question wordings. The analysis will be based only on the six groups in study 1, since study 2 does not include this treatment. 4

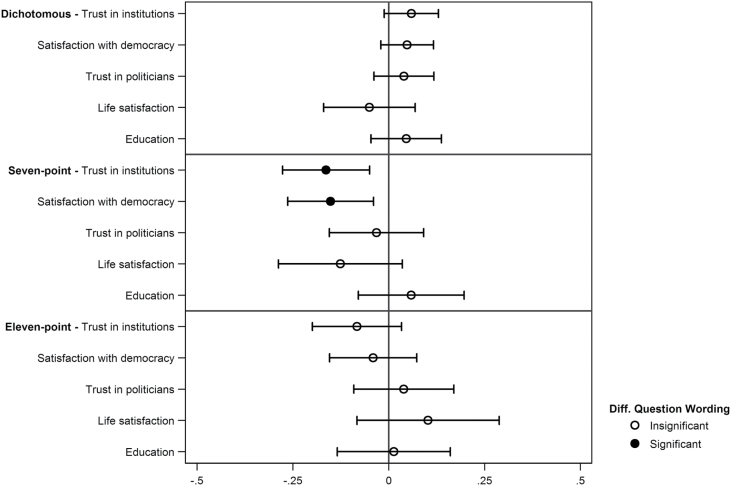

To evaluate the concurrent validity of the different question wordings, we performed OLS regressions with the variables that generalized trust should predict as dependent variables. To measure whether the strength of the relationships is significantly different from each other, a dummy variable for the question wording (0 = the minimally balanced wording, 1 = the fully balanced wording) is interacted with the generalized trust variable (cf. Shaeffer et al. 2005, 421). By performing such an analysis, large enough differences, in terms of concurrent validity between the different groups, will produce statistically significant interaction coefficients, with the sign of the coefficient telling which of the groups is performing better. The sign and statistical significance of the interaction term are important, since we argue that a statistically stronger relationship is evidence of a more valid question wording. The generalized trust variable is normalized to range from 0 to 1, irrespective of the original scale. Doing so will produce comparable coefficients in all the regressions. The interaction coefficients are presented in figure 1.
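A minimal sketch of this setup follows, using noise-free, made-up data so that the coefficients are easy to read. The helper names (`normalize`, `ols`), the seven-point example, and the slopes of 0.5 and 0.4 are all hypothetical; the authors' actual estimation software is not stated in the text.

```python
def normalize(raw, lowest, highest):
    """Rescale a raw scale value to the 0-1 range, whatever the original scale."""
    return (raw - lowest) / (highest - lowest)


def ols(X, y):
    """Ordinary least squares by solving the normal equations (X'X) b = X'y."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)]
         + [sum(row[i] * yi for row, yi in zip(X, y))]
         for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Hypothetical data: a seven-point trust item (1-7) in both wording groups,
# with a slope of 0.5 under the minimally balanced wording (dummy = 0) and
# 0.4 under the fully balanced wording (dummy = 1).
rows, outcome = [], []
for fully_balanced, slope in ((0, 0.5), (1, 0.4)):
    for raw in range(1, 8):
        trust = normalize(raw, 1, 7)
        # Columns: intercept, trust, wording dummy, trust x wording.
        rows.append([1.0, trust, float(fully_balanced), trust * fully_balanced])
        outcome.append(0.2 + slope * trust)

intercept, b_trust, b_wording, b_interaction = ols(rows, outcome)
print(round(b_trust, 3), round(b_interaction, 3))
```

In this toy example the interaction coefficient equals the difference between the two slopes, which is the quantity the significance test on the interaction term evaluates. With 0 = minimally balanced and 1 = fully balanced, a negative interaction means the relationship is weaker under the fully balanced wording.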

Figure 1.

Effect of Question Wording.

The interaction of generalized trust and question wording from the OLS regressions on five different generalized trust related variables. Study 1: Self-Selected Sample (15 interaction coefficients with 95% confidence intervals). Significant = p < 0.05. Interaction coefficient of generalized trust × experiment group (0 = minimally balanced, 1 = fully balanced). All variables are normalized to range from 0 to 1. The full models can be found in online appendix C, table 3.

Source.—Citizen Panel V.

When evaluating the concurrent validity, only two out of the 15 regression models show significant differences for the two question wordings, and then only when accompanied by the seven-point scale. In these two models, the minimally balanced wording outperforms the fully balanced (standard) wording with interaction coefficients of 0.15 and 0.16 (see online appendix C, table 3). Hence, the results speak slightly in favor of the minimally balanced wording, especially when it is accompanied by a seven-point scale. Furthermore, even though the fully balanced wording is designed to have a dichotomous scale, the minimally balanced wording (designed for an 11-point scale) performs just as well when using a dichotomous scale.

The inclusion of an interaction term in a model may, however, introduce collinearity, which inflates the standard errors of the coefficients. This implies that a strategy based solely on testing the statistical significance of the interaction coefficient may be incomplete. For this reason, we have also calculated a joint test of significance (F-test) to determine whether the addition of the interaction variable causes a significant increase in the fit of the model. Here, we simply follow Hamilton (1992) and compare a constrained bivariate model that uses only generalized trust as an independent variable without the interaction term to the more complex unconstrained model with the interaction included. The equation for the F-test is as follows:

$$F = \frac{(SSR_{\text{reduced}} - SSR_{\text{full}}) / (df_{\text{reduced}} - df_{\text{full}})}{SSR_{\text{full}} / df_{\text{full}}} \tag{1}$$

where $SSR_{\text{reduced}}$ is the sum of the squared residuals for the constrained bivariate model, $SSR_{\text{full}}$ is the sum of the squared residuals for the unconstrained full model including the interaction term, $df_{\text{reduced}}$ is the degrees of freedom for the bivariate model, and $df_{\text{full}}$ is the degrees of freedom for the interaction model. An F-value exceeding the threshold value of 3.00 (for α = 0.05) would therefore suggest that the interaction term significantly improves the fit of the model. 5
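As a worked illustration, the test can be computed from the four quantities defined above. The function below assumes the standard incremental F-test form, and the residual sums of squares and degrees of freedom in the example are invented numbers, not values from the study.

```python
def incremental_f(ssr_reduced, ssr_full, df_reduced, df_full):
    """F-statistic for comparing a constrained model with an unconstrained one.

    ssr_* are residual sums of squares and df_* are residual degrees of
    freedom of the reduced (constrained) and full (unconstrained) models.
    """
    numerator = (ssr_reduced - ssr_full) / (df_reduced - df_full)
    denominator = ssr_full / df_full
    return numerator / denominator


# Hypothetical example: the full model adds two parameters and lowers the
# residual sum of squares from 105.0 to 100.0.
f_value = incremental_f(ssr_reduced=105.0, ssr_full=100.0,
                        df_reduced=1998, df_full=1996)
print(f"F = {f_value:.1f}")  # F = 49.9
print("exceeds 3.00 threshold:", f_value > 3.00)
```

Here the numerator is 5.0 / 2 = 2.5 and the denominator is 100.0 / 1996, so the improvement in fit is far above the 3.00 threshold mentioned in the text.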

In our models, the goodness-of-fit is significantly increased in both groups having a significant interaction coefficient, while the rest of the models, in line with what was expected, do not show a significant improvement to the fit (see online appendix C, table 3). Summing up, changing the wording does not produce clear differences in the concurrent validity. However, when differences were found, the analysis favors the minimally balanced question wording.

Results: Number of Scale Points

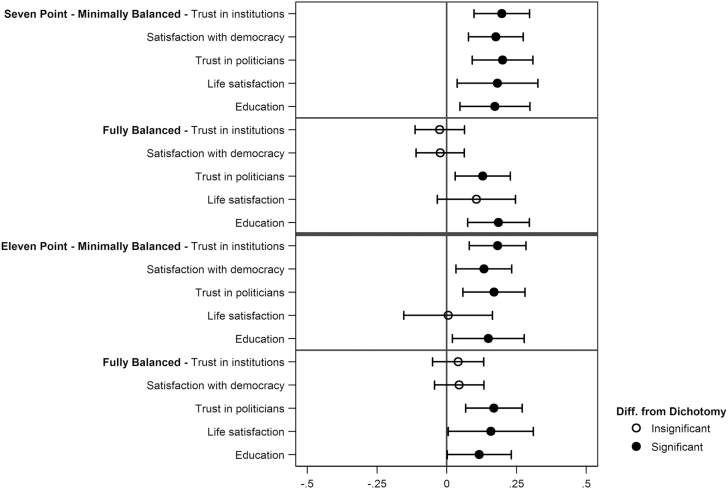

To evaluate whether one scale performs better than the other, we used the same concurrent validity criteria and regression method as above, but instead the dummy variables that are interacted with generalized trust are based on the number of scale points the respondents received, with the dichotomous scale as reference group. As above, the generalized trust variable is normalized to range from 0 to 1 irrespective of the original scale, producing comparable coefficients in all the regressions. Again, the sign of the interaction coefficient informs us which of the groups are performing better, and again, the F-test between the constrained and the unconstrained models is analyzed to see whether the interaction effects significantly improve the fit of the model. When evaluating the scales, we used the six groups in study 1 (self-selected sample) and the two groups in study 2 (probability sample). The results are presented in figures 2 and 3.

Figure 2.

Effect of Number of Scale Points.

The interaction of generalized trust and the number of scale points from the OLS regressions on five different generalized trust related variables. Study 1: Self-Selected Sample (20 interaction coefficients with 95% confidence intervals). Significant = p < 0.05. Interaction coefficient of generalized trust × experiment group (dichotomous as reference group). All variables are normalized to range from 0 to 1. The full models can be found in online appendix C, table 4.

Source.—Citizen Panel V.

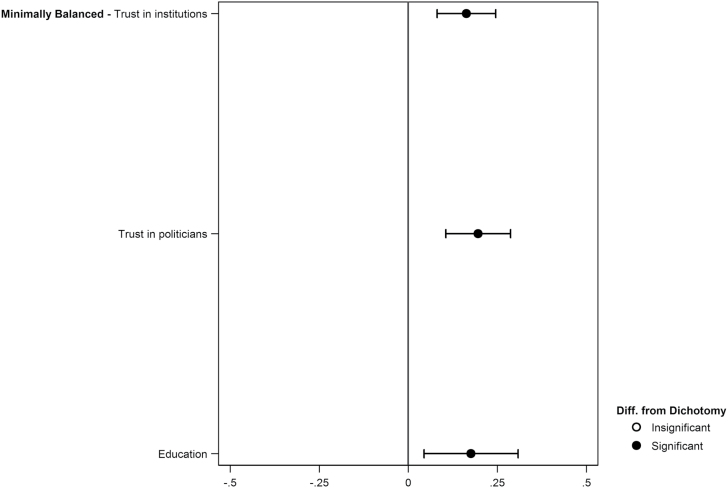

Figure 3.

Effect of Number of Scale Points.

The interaction of generalized trust and the eleven-point scale from the OLS regressions on three different generalized trust related variables. Study 2: Probability Sample (3 interaction coefficients with 95% confidence intervals). Significant = p < 0.05. Interaction coefficient of generalized trust × experiment group (0 = dichotomous, 1 = eleven-point). All variables are normalized to range from 0 to 1. In study 2, measurements of life satisfaction and satisfaction with democracy were not included. The full models can be found in online appendix C, table 5.

Source.—Probability Sample.

In study 1, when evaluating the differences in the concurrent validity between the dichotomy and the seven-point scale, seven out of the 10 regression models showed significant differences between the groups, and in all seven of these models the seven-point scale outperformed the dichotomous scale with interaction coefficients between 0.13 and 0.20 (see figure 2). When comparing the dichotomous scale to the 11-point scale, seven out of the 10 regression models showed significant differences between the groups, and in all seven of these models the 11-point scale outperformed the dichotomy with interaction coefficients between 0.13 and 0.18 (see figure 2). No significant effects exist between the seven- and 11-point scales. Hence, none of these analyses speak in favor of using the dichotomous scale over the longer scales. 6

When testing the difference in the goodness-of-fit (F-test) between the constrained and the unconstrained models, all but one model having a significant interaction coefficient showed a significant increase in the goodness-of-fit (threshold F-value = 2.60 for α = 0.05). Showing an insignificant increase in the goodness-of-fit while having a significant interaction coefficient suggests that collinearity might be a problem by inflating the standard errors of the coefficients. However, this would be of little concern, given that the interaction coefficient of that model is actually already significant (see online appendix C, table 4). In addition, one model, having an insignificant interaction coefficient (on the fully balanced question wording), shows a significant increase in the goodness-of-fit. In this model, the insignificant interaction coefficient for the 11-point scale showed an increase in the concurrent validity, while the seven-point scale showed a decrease when compared to the dichotomous scale. Regardless, in sum, the general result is that the several-point scales are more valid than the dichotomous scale in measuring generalized trust.

Study 2 contained a survey-embedded experiment that was similar to study 1 but based on a probability sample receiving their first survey. In this sample, the two groups received the minimally balanced question wording accompanied by either a dichotomous or an 11-point scale. The results are presented in figure 3.

In study 2, the 11-point scale outperformed the dichotomous scale in all the regression models with interaction coefficients between 0.16 and 0.20 (see figure 3). All models also showed a significant increase in the goodness-of-fit (F-test) compared to the constrained model (see online appendix C, table 5). In other words, the effects found in the self-selected sample are replicated and perhaps even more clearly represented in the probability sample. 7

Summing up, increasing the number of scale points produces more valid measurements than forcing respondents to choose between two response categories. Hence, the benefits of using the dichotomous scale that Uslaner (2012a, 2012b) perceived do not seem to exist when comparing the various number of scale points with the same wording. 8

Conclusions

In this article, we have compared generalized trust questions in terms of question wording and number of scale points by using a randomized experimental approach on one self-selected sample and one probability sample. We evaluated these question designs in terms of correlations with other concepts related to generalized trust; that is, testing concurrent validity.

Regarding question wording, the minimally balanced wording slightly outperformed the fully balanced standard generalized trust question wording. Hence, decreasing the number of words in the generalized trust question seems to somewhat increase the validity of the measurement, perhaps because task difficulty is reduced. An alternative or perhaps complementary explanation could be that the fully balanced wording is not balanced in an effective way, making it slightly harder for respondents to answer it validly. Since the endpoints of the scale are worded “need to be very careful in dealing with people” and “most people can be trusted,” it might invoke two different attitudes at the same time, while the minimally balanced wording is easier to attribute to just one and the same attitudinal continuum.

Regarding the number of scale points, the seven-point and 11-point scales consistently outperformed the dichotomous counterpart. In line with Krosnick and Fabrigar (1997), increasing the number of scale points produced more valid measurements, as well as increased the detail in which the latent attitude could be measured.

When evaluating the generalized trust question using survey methodology theory, generalized trust does not seem to be an exception from other attitudes. On the contrary, previous survey methodology theories shed light on how to measure this concept, and our study further strengthens the theories on how to generally measure attitudes in surveys. Contradicting Uslaner’s (2012a, 2012b) defense of a fully balanced question wording accompanied by a dichotomous scale, this article therefore has two recommendations when measuring generalized trust as well as other attitudes in surveys: (1) Use a minimally balanced wording. This produces an equally valid measurement but reduces the number of words used, making it easier for the respondent to understand the question. In addition, (2) do not use a dichotomous scale. Using a several-point scale provides a more valid as well as more substantively detailed measurement.

Supplementary Data

Supplementary data are freely available online at http://poq.oxfordjournals.org/.

Appendix A. Sample Description

STUDY 1—SELF-SELECTED SAMPLE

The sample is mainly a self-recruited sample of Swedish citizens, with some respondents (12 percent) being recruited from various probability samples of the Swedish population (Martinsson et al. 2013).

Field period: November 12–December 12, 2012. Gross sample size: 12,604. Net sample size: 12,009. E-mail bounce backs: 595. Coverage rate/Absorption rate: 95 percent. Responses: 7,918. Partial responses: 38. AAPOR response rate (RR1): 63 percent.
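The sample figures reported above can be checked with a few lines of arithmetic. The following is only an illustrative sketch: the variable names are hypothetical, and using the gross sample as the denominator of the response rate is an assumption that happens to reproduce the reported 63 percent, not a statement of how the rate was actually calculated.

```python
# Figures reported for study 1 (variable names are illustrative).
gross_sample = 12_604
bounce_backs = 595
responses = 7_918

# Net sample: invitations that did not bounce.
net_sample = gross_sample - bounce_backs

# Coverage rate: share of the gross sample that could be reached.
coverage_rate = net_sample / gross_sample

# ASSUMPTION: complete responses divided by the gross sample size.
response_rate = responses / gross_sample

print(net_sample)                   # 12009
print(round(coverage_rate * 100))   # 95
print(round(response_rate * 100))   # 63
```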

STUDY 2—PROBABILITY SAMPLE

The sample is a probability sample of 18- to 70-year-old Swedish citizens, based on Statens Personadressregister (SPAR), which is a full register of all persons registered as residents in Sweden. They were recruited through a postcard invitation, sent to their home address, which contained a specified log-in for the web survey.

Field period: November 14, 2012–February 21, 2013. Gross sample size: 29,000. Responses: 2,947. Partial responses: 123. AAPOR response rate (RR1): 10 percent. Gross response rate: 10 percent. The dropout analyses show significant differences in age (not answered M = 42.78, SD = 15.13; answered M = 48.54, SD = 15.01; t = –19.38, p < 0.001) and gender (not answered M = 0.51, SD = 0.50; answered M = 0.48, SD = 0.50; t = 2.63, p = 0.001); however, no significant difference could be found for living in a large city (not answered M = 0.10, SD = 0.30; answered M = 0.11, SD = 0.32; t = –1.88, p = 0.06). The external validity of study 2 might be somewhat compromised due to the low response rate. Compared with a self-selected sample, however, its external validity is most likely higher.
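A dropout comparison of this kind can be approximated from the summary statistics alone. The sketch below computes a Welch-type t statistic for the age difference; the group sizes are an assumption (inferred from the gross sample and the number of responses), and the exact test used in the analyses is not stated, so the result (about –19.7) is close to, but not identical with, the reported value.

```python
import math

def welch_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Welch's t statistic for two independent groups, from summary statistics."""
    standard_error = math.sqrt(sd_a**2 / n_a + sd_b**2 / n_b)
    return (mean_a - mean_b) / standard_error

# ASSUMPTION: group sizes inferred from the gross sample (29,000)
# and the number of responses (2,947); the actual sizes may differ.
n_answered = 2_947
n_not_answered = 29_000 - n_answered

# Age: not answered (M = 42.78, SD = 15.13) vs. answered (M = 48.54, SD = 15.01).
t_age = welch_t(42.78, 15.13, n_not_answered, 48.54, 15.01, n_answered)
print(round(t_age, 1))
```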

Effectiveness of Random Assignment

Because respondents were randomly assigned, the experimental groups should not differ systematically from one another. Nevertheless, we test the effectiveness of the randomization by comparing the groups on all variables used in the upcoming analysis as well as on the available demographic factors of gender and age.

Study 1:

As expected, in terms of gender (F = 1.15, p = 0.33), age (F = 1.91, p = 0.09), satisfaction with democracy (F = 0.52, p = 0.76), life satisfaction (F = 0.75, p = 0.59), trust in politicians (F = 1.18, p = 0.32), trust in institutions (F = 0.81, p = 0.54), and education (F = 0.33, p = 0.90), the randomization was successful. Hence, all groups in study 1 were effectively randomized.

Study 2:

Randomization in study 2 was successful in terms of gender (F = 0.65, p = 0.42), age (F = 0.19, p = 0.66), trust in politicians (F = 0.34, p = 0.56), trust in institutions (F = 0.46, p = 0.46), and education (F = 1.70, p = 0.19). Hence, all groups in study 2 were effectively randomized.
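Balance checks like these compare group means with an F-test. A minimal sketch of a one-way ANOVA F statistic is given below, assuming that this is the kind of test behind the reported values; the function name and the ages are made up for illustration and are not data from either study.

```python
def one_way_anova_f(groups):
    """F statistic from a one-way ANOVA across experimental groups."""
    all_values = [value for group in groups for value in group]
    grand_mean = sum(all_values) / len(all_values)

    # Between-group sum of squares: group means around the grand mean.
    ss_between = sum(
        len(group) * (sum(group) / len(group) - grand_mean) ** 2
        for group in groups
    )
    # Within-group sum of squares: observations around their group mean.
    ss_within = sum(
        (value - sum(group) / len(group)) ** 2
        for group in groups
        for value in group
    )
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)

# Made-up ages for three hypothetical experimental groups.
ages_by_group = [
    [34, 45, 52, 61],
    [38, 44, 50, 63],
    [36, 47, 49, 60],
]
f_age = one_way_anova_f(ages_by_group)
print(f_age)
```

A small F value (with a correspondingly large p-value) indicates that the groups do not differ more than chance would produce, which is what successful randomization implies.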

Appendix B. Measurements

The criterion variables used to assess the concurrent validity of the measurements in this study are the following:

Life Satisfaction:

Measured by the question “All things considered, how satisfied with your life as a whole are you these days?” with response alternatives coded as (1) completely dissatisfied, (2) somewhat dissatisfied, (3) somewhat satisfied, and (4) completely satisfied. The variable was recoded to range from 0 (completely dissatisfied) to 1 (completely satisfied).
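The recoding to a 0–1 range, used for this and the other criterion variables, can be sketched as a linear rescaling. The function name is hypothetical, and equal spacing between the intermediate categories is an assumption, since only the endpoints of the recoded scale are stated.

```python
def rescale_unit(value, low, high):
    """Linearly map a response code from [low, high] onto [0, 1]."""
    return (value - low) / (high - low)

# Four-point life satisfaction item:
# 1 = completely dissatisfied, 4 = completely satisfied.
# ASSUMPTION: intermediate categories are equally spaced (1/3 and 2/3).
life_satisfaction = [rescale_unit(code, 1, 4) for code in (1, 2, 3, 4)]
print(life_satisfaction)
```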

Trust in Institutions:

An index composed of five questions asking, “In general, how much confidence do you have in the following institutions?” with the categories government, parliament, European Union (EU), local government board, and local council, with the original scale ranging from (1) very little confidence, (2) little confidence, (3) neither little nor some confidence, (4) some confidence, to (5) very much confidence. Combining these variables into an index yields a Cronbach’s alpha of 0.82 (study 1) and 0.81 (study 2), and the scale was recoded to range from 0 (very little confidence) to 1 (very much confidence).

Satisfaction with Democracy:

An index composed of four questions asking, “Generally speaking, how satisfied are you with the way democracy works in” the categories EU, Sweden, the region where you live, and the municipality where you live, with the original scale of (1) completely dissatisfied, (2) somewhat dissatisfied, (3) somewhat satisfied, and (4) completely satisfied. Combining these variables into an index yields a Cronbach’s alpha of 0.79, and the scale was recoded to range from 0 (completely dissatisfied) to 1 (completely satisfied).
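The reliability coefficient reported for the indices follows the standard formula for Cronbach’s alpha, which relates the sum of the item variances to the variance of the summed score. A minimal sketch, with a hypothetical function name and made-up responses rather than data from either study:

```python
from statistics import variance

def cronbach_alpha(items):
    """Cronbach's alpha; `items` holds one equal-length response list per item."""
    k = len(items)
    item_variance_sum = sum(variance(item) for item in items)
    # Each respondent's total score across the k items.
    totals = [sum(responses) for responses in zip(*items)]
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))

# Made-up responses from five respondents to three items.
items = [
    [1, 2, 3, 4, 5],
    [2, 2, 3, 4, 4],
    [1, 3, 3, 5, 5],
]
alpha = cronbach_alpha(items)
print(round(alpha, 2))
```

Values closer to 1 indicate that the items vary together, which is the justification for summarizing them in a single index.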

Trust in Politicians:

Measured by the question “Generally speaking, how much trust do you have in Swedish politicians?” with response alternatives ranging from (1) very little confidence, (2) little confidence, (3) neither little nor some confidence, (4) some confidence, to (5) very much confidence. The variable was recoded to range from 0 (very little confidence) to 1 (very much confidence).

Education:

Measured by the question “What is your highest finished degree?” with response alternatives (1) not completed elementary school, (2) elementary school, (3) studies at upper-secondary school, (4) degree from upper-secondary school, (5) studies after upper-secondary but not at university, (6) studies at university, (7) degree from university, and (8) PhD or graduate degree. The variable was recoded into three categories: elementary school (original categories 1–2, recoded to 0), upper secondary (original categories 3–5, recoded to 0.5), and university (original categories 6–8, recoded to 1).
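The three-category recoding of education described above amounts to a simple lookup. The sketch below uses hypothetical names and only restates the mapping given in the text.

```python
# Original eight-category codes mapped to the three recoded values.
EDUCATION_RECODE = {
    1: 0.0, 2: 0.0,          # elementary school
    3: 0.5, 4: 0.5, 5: 0.5,  # upper secondary
    6: 1.0, 7: 1.0, 8: 1.0,  # university
}

def recode_education(original_code):
    """Return the recoded education value for an original category (1 to 8)."""
    return EDUCATION_RECODE[original_code]

print([recode_education(code) for code in range(1, 9)])
```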

Given that some survey institutes allow the respondents to use a “don’t know” (DK) option when using the fully balanced question accompanied with a dichotomous scale (e.g., the WVS), we also varied whether each combination of question wording and number of scale points was accompanied with a DK option. By doing so, we can safely conclude that the differences between the groups are not due to the respondents being unable to opt out from taking a stance, which could potentially have implications for, most notably, the dichotomous scale. However, including a DK option did not affect the results presented in this article; hence, the results from the groups receiving a DK option are presented only in online appendix D.

Additionally, we replicated the experiment conducted in study 2 on a third sample using a mixed probability and self-selected sample of 1,545 Swedish citizens. The effects found in studies 1 and 2 were replicated in the third study (study 3), hence increasing the robustness of this article’s findings. For a full presentation of study 3, see online appendix E.

In study 2, the measurements of life satisfaction and satisfaction with democracy were not included.

All analyses in this article were conducted using Stata 12 SE.

A comparable strategy is used by Ciuk and Jacoby (2014), in which they also compare the amount of explained variance for a constrained and an unconstrained model. The added benefit of their method, as opposed to analyzing only the F-test, is that, while the F-test informs us whether the difference in goodness-of-fit between the two models is statistically significant, their method also shows the increase in the actual explained variance (R²). However, in our analyses, we focus only on the F-test, since it tells us, not only whether linearity (R²) has increased, but also whether it has done so in a statistically significant manner. It should be noted, however, that in this article, all analyses that show significant F-tests also correspond to an increase, albeit small, in explained variance (adjusted R²). Hence, for those models having a significant interaction term, the explained variance has also increased. The constrained and unconstrained models’ adjusted R² and the F-test statistic can be found in online appendix C, table 3.
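A comparison between a constrained and an unconstrained model of this kind is commonly carried out with an incremental F-test on the residual sums of squares. The following is a minimal sketch, assuming nested ordinary least squares models; the function name, parameter names, and numbers are illustrative and are not taken from the article's analyses.

```python
def nested_f_statistic(rss_constrained, rss_unconstrained,
                       extra_params, n_obs, n_params_unconstrained):
    """Incremental F statistic for two nested least-squares models.

    rss_*: residual sums of squares of the two models.
    extra_params: number of parameters added in the unconstrained model.
    n_params_unconstrained: parameters in the unconstrained model,
        including the intercept.
    """
    numerator = (rss_constrained - rss_unconstrained) / extra_params
    denominator = rss_unconstrained / (n_obs - n_params_unconstrained)
    return numerator / denominator

# Made-up example: adding one interaction term lowers the residual
# sum of squares from 120 to 100 with 103 observations.
f_value = nested_f_statistic(120.0, 100.0, extra_params=1,
                             n_obs=103, n_params_unconstrained=3)
print(f_value)  # 20.0
```

A significant F value means that the added term improves the fit by more than chance alone would be expected to, which necessarily coincides with a higher unadjusted R² for the unconstrained model.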

When including a DK option, the differences between the dichotomous scale and the seven-point scale are not as evident (only two out of the 10 regression models show significantly better results for the seven-point scale; see online appendix D). However, the inclusion of a DK option does not lower the differences between the dichotomous scale and the 11-point scale.

When including a DK option, the 11-point scale outperformed the dichotomy in all regression models as well (see online appendix D, table 8).

The results shown here are also replicated in study 3 with the mixed probability and self-selected sample (see online appendix E).

References

- Alwin Duane F., Krosnick Jon A. 1991. “The Reliability of Survey Attitude Measurement: The Influence of Question and Respondent Attributes.” Sociological Methods and Research 20:139–81.

- Andrews Frank M. 1984. “Construct Validity and Error Components of Survey Measures: A Structural Modeling Approach.” Public Opinion Quarterly 48:409–42.

- Ciuk David J., Jacoby William G. 2014. “Checking for Systematic Value Preferences Using the Method of Triads.” Political Psychology. doi: 10.1111/pops.12202.

- Dinesen Peter T. 2011. “A Note on the Measurement of Generalized Trust of Immigrants and Natives.” Social Indicators Research 103:169–77.

- Glaeser Edward L., Laibson David I., Scheinkman José A., Soutter Christine L. 2000. “Measuring Trust.” Quarterly Journal of Economics 115:811–46.

- Hamilton Lawrence C. 1992. Regression with Graphics: A Second Course in Applied Statistics. Belmont, CA: Brooks/Cole.

- Huang Jian, van den Brink Henriëtte Maassen, Groot Wim. 2011. “College Education and Social Trust: An Evidence-Based Study on the Causal Mechanisms.” Social Indicators Research 104:287–310.

- Krosnick Jon A. 1991. “Response Strategies for Coping with the Cognitive Demands of Attitude Measures in Surveys.” Applied Cognitive Psychology 5:213–36.

- ———. 1999. “Survey Research.” Annual Review of Psychology 50:537–67.

- Krosnick Jon A., Fabrigar Leandre R. 1997. “Designing Rating Scales for Effective Measurement in Surveys.” In Survey Measurement and Process Quality, edited by Lyberg Lars, Biemer Paul, Collins Martin, Leeuw Edith de, Dippo Cathryn, Schwarz Norbert, Trewin Dennis, 141–64. New York: John Wiley & Sons.

- Martinsson Johan, Andreasson Maria, Pettersson Liza. 2013. Technical Report Citizen Panel 5–2012. Gothenburg: University of Gothenburg, LORE, 16 October. Available at http://www.lore.gu.se/digitalAssets/1455/1455265_technical-report-citizen-panel-5.pdf.

- Nannestad Peter. 2008. “What Have We Learned about Generalized Trust, If Anything?” Annual Review of Political Science 11:413–36.

- Putnam Robert D. 1993. Making Democracy Work. Princeton, NJ: Princeton University Press.

- Reeskens Tim, Hooghe Marc. 2008. “Cross-Cultural Measurement Equivalence of Generalized Trust. Evidence from the European Social Survey (2002 and 2004).” Social Indicators Research 85:515–32.

- Rothstein Bo, Stolle Dietlind. 2008. “The State and Social Capital: An Institutional Theory of Generalized Trust.” Comparative Politics 40:441–67.

- Schuman Howard, Presser Stanley. 1981. Questions and Answers in Attitude Surveys: Experiments on Question Form, Wording, and Context. New York: Academic Press.

- Shaeffer Eric M., Krosnick Jon A., Langer Gary E., Merkle Daniel M. 2005. “Comparing the Quality of Data Obtained by Minimally Balanced and Fully Balanced Attitude Questions.” Public Opinion Quarterly 69:417–28.

- Sønderskov Kim. 2011. “Does Generalized Social Trust Lead to Associational Membership? Unravelling a Bowl of Well-Tossed Spaghetti.” European Sociological Review 27:419–34.

- Tourangeau Roger. 1984. “Cognitive Sciences and Survey Methods.” In Cognitive Aspects of Survey Methodology: Building a Bridge between Disciplines, edited by Jabine Thomas B., Straf Miron L., Tanur Judith M., Tourangeau Roger, 73–100. Washington, DC: National Academies Press.

- Uslaner Erik M. 2002. The Moral Foundations of Trust. Cambridge: Cambridge University Press.

- ———. 2012a. “Measuring Generalized Trust: In Defense of the ‘Standard’ Question.” In Handbook of Research Methods on Trust, edited by Lyon Fergus, Möllering Guido, Saunders Mark N. K., 72–84. Cheltenham, UK: Edward Elgar Publishing Limited.

- ———. 2012b. “Generalized Trust Questions.” In Improving Public Opinion Surveys: Interdisciplinary Innovation and the American National Election Studies, edited by Aldrich John H. and McGraw Kathleen M., 101–14. Princeton, NJ: Princeton University Press.

- Zmerli Sonja, Newton Ken. 2008. “Social Trust and Attitudes Toward Democracy.” Public Opinion Quarterly 72:706–24.
