Abstract

Purpose

Medical school admissions committees are increasingly considering noncognitive measures like emotional intelligence (EI) in evaluating potential applicants. This study explored whether scores on an EI abilities test at admissions predicted future academic performance in medical school to determine whether EI could be used in making admissions decisions.

Method

The authors invited all University of Ottawa medical school applicants offered an interview in 2006 and 2007 to complete the Mayer–Salovey–Caruso EI Test (MSCEIT) at the time of their interview and again at matriculation. Among students who matriculated, 105 (2006) and 101 (2007) had completed the MSCEIT at interview, and 120 and 106, respectively, completed it at matriculation. To determine predictive validity, they correlated MSCEIT scores with scores on written examinations and objective structured clinical examinations (OSCEs) administered during the four-year program. They also correlated MSCEIT scores with the number of nominations for excellence in clinical performance and failures recorded over the four years.

Results

The authors found no significant correlations between MSCEIT scores and written examination scores or number of failures. The correlations between MSCEIT scores and total OSCE scores ranged from 0.01 to 0.35; only MSCEIT scores at matriculation and OSCE year 4 scores for the 2007 cohort were significantly correlated. Correlations between MSCEIT scores and clinical nominations were low (range 0.12–0.28); only the correlation between MSCEIT scores at matriculation and number of clinical nominations for the 2007 cohort was statistically significant.

Conclusions

EI, as measured by an abilities test at admissions, does not appear to reliably predict future academic performance. Future studies should define the role of EI in admissions decisions.

Medical school admissions committees are tasked with selecting the most suitable candidates for entrance to their programs. Traditionally, admissions committees have focused on cognitive measures, including grade point average (GPA), the Medical College Admission Test in North America, and the Graduate Medical School Admissions Test in the United Kingdom and Australia. These cognitive measures have demonstrated predictive validity and reliability.1 Although admissions committees have long acknowledged the importance of cognitive skills in assessing applicants, they have more recently come to recognize the importance of noncognitive skills as well.2 Competency frameworks reflect this broader view. The Accreditation Council for Graduate Medical Education’s six core competencies are patient care, medical knowledge, practice-based learning and improvement, interpersonal and communication skills, professionalism, and systems-based practice.3 In Canada, the CanMEDS framework includes not only medical expert and scholar roles but also professional, manager, health advocate, communicator, and collaborator roles.4

To better understand applicants’ noncognitive skills, such as their communication, interpersonal, and professionalism abilities, researchers have suggested exploring the construct of emotional intelligence (EI).5 EI is defined as the ability to monitor one’s own and others’ emotions, to discriminate among them, and to use this information to guide thinking and actions.6 Outside health care, EI has been linked to individuals’ academic success, social skills, job satisfaction, and improved interpersonal relations.7,8 Within health care, EI is considered important because understanding patients’ emotions and controlling one’s own emotions are essential to maintaining effective doctor–patient relationships and to working successfully in teams. EI may also be relevant to the competencies of professionalism and systems-based practice, which require good communication skills and teamwork.9 A recent systematic review of studies with empirical data on EI in physicians or medical students revealed that higher EI scores contributed to improved doctor–patient relationships, increased empathy, and improved teamwork and communication skills, as well as better stress management, organizational commitment, and leadership skills.9 Researchers have also specifically studied how to increase EI in medical students.10 Together, these findings suggest that using EI in admissions decisions could have value.

However, very few studies have examined the use of EI in assessing applicants to medical school or in predicting their future performance. Carr,11 for example, found no association between EI and traditional admissions criteria. Leddy and colleagues12 found similar results: EI at admissions, as measured by the Mayer–Salovey–Caruso Emotional Intelligence Test (MSCEIT), was not related to GPA, interview scores, or autobiographical sketch scores. If admissions committees are to use EI in high-stakes decision making, we need more information regarding the measure’s predictive validity. Specifically, we must understand whether EI correlates with future academic performance and, if so, what type of performance EI predicts.

EI comprises four distinct yet related abilities: perceiving emotions, using emotions, understanding emotions, and managing emotions.5 To communicate effectively with patients, physicians must build rapport, trust, and an ethical therapeutic relationship, as well as accurately elicit and synthesize relevant information.4 Such communication requires a complex process in which physicians perceive their patients’ emotions, manage their own reactions, and use those emotions to facilitate their performance.5 Recently, McNaughton13 suggested that EI is a skill that is observable and measurable through assessment methods such as the objective structured clinical examination (OSCE). In an OSCE, students must communicate effectively with standardized patients while skillfully acquiring information. Thus, we expected that a student’s EI would correlate with his or her future performance on a clinical skills test, such as an OSCE, but not with his or her future performance on written examinations of knowledge.

The method used to assess EI also matters. In a recent systematic review of EI studies in medicine, all included studies measured EI using self-report questionnaires.9 Many authors have raised significant concerns about relying on scores based on individuals’ subjective judgments of their own abilities, because individuals could readily inflate their scores in a high-stakes environment such as the admissions process. Several authors demonstrated that faking on self-report tests changed the rank order of applicants.14,15 Some authors suggest that measuring EI with an abilities test, such as the MSCEIT v2.0, is preferable.11,16 Such a test directly assesses an individual’s ability to perceive emotions accurately, use emotions to facilitate thought, understand emotions, and manage emotions. It also has robust psychometric properties, is affordable, and is available in several languages.17

No gold standard exists for measuring EI, and experts in the field disagree on the construct of EI, how to measure it, and the reliability and validity of measurement tools.18 In addition, some have argued that the lack of conceptual clarity around the construct itself limits what we can interpret from EI scores.18 Thus, further research is needed to improve our understanding of this challenging construct.

The purpose of our study was to explore the use of EI as a measure of applicants’ noncognitive skills at admissions. We focused on determining the degree to which scores on an abilities test of EI predicted future academic performance on assessments of students’ cognitive and noncognitive skills in medical school.

Method

Admissions process

The University of Ottawa Medical School annually selects 160 applicants for admission from a large pool (mean n = 2,900). We described this process in detail in a previous study.12 In summary, the admissions committee offers approximately 18% of applicants an interview on the basis of their weighted GPA (wGPA) and autobiographical sketch score. The committee calculates the wGPA by weighting applicants’ GPAs in their three most recent years of full-time undergraduate study in a 3:2:1 ratio and then sets a cutoff score. For applicants who meet the cutoff, trained faculty assess the autobiographical sketches in six areas: education, employment, volunteering, extracurricular activities, awards, and research contributions. Three raters then independently interview and score each selected applicant, and each applicant’s final score is determined through an iterative process.
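The wGPA step can be illustrated with a short Python sketch. The source gives only the 3:2:1 ratio; the assumptions that the weight of 3 applies to the most recent year and that the weighted sum is divided by the sum of the weights are ours, and the example GPAs and cutoff are hypothetical.

```python
# Illustrative sketch of a 3:2:1 weighted GPA (wGPA).
# ASSUMPTIONS (not stated in the source): the weight of 3 goes to the most
# recent year, and the result is normalized by the sum of the weights so it
# stays on the same scale as the input GPAs.

WEIGHTS = (3, 2, 1)  # most recent year first


def weighted_gpa(yearly_gpas: tuple[float, float, float]) -> float:
    """Return the 3:2:1 weighted mean of three yearly GPAs (most recent first)."""
    if len(yearly_gpas) != len(WEIGHTS):
        raise ValueError("expected GPAs for exactly three years")
    total = sum(w * g for w, g in zip(WEIGHTS, yearly_gpas))
    return total / sum(WEIGHTS)


def meets_cutoff(yearly_gpas: tuple[float, float, float], cutoff: float) -> bool:
    """Screen an applicant against a (hypothetical) wGPA cutoff."""
    return weighted_gpa(yearly_gpas) >= cutoff


if __name__ == "__main__":
    # Hypothetical applicant on a 4.0 scale: most recent year 3.9, then 3.6, then 3.3.
    print(round(weighted_gpa((3.9, 3.6, 3.3)), 2))  # 3.7
    print(meets_cutoff((3.9, 3.6, 3.3), cutoff=3.75))  # False
```

Under these assumptions, weighting recent years more heavily rewards an upward trend: the same three GPAs in reverse order (3.3, 3.6, 3.9) would give a wGPA of 3.5.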

Participants

We invited all applicants in 2006 and 2007 who were offered an interview to participate in the study. Those who agreed completed the MSCEIT v2.0 in English or French immediately after their admissions interview in March 2006 or March 2007, approximately six months before potential matriculation. We then invited participants who were accepted to the university to complete the MSCEIT v2.0 again at matriculation. We proctored all test administrations, and an outside vendor, Multi-Health Systems Inc., scored the completed tests.

MSCEIT v2.0

The MSCEIT v2.0 is an abilities-based measure of EI that consists of 141 items and is accessible online.17 Each item is scored by a general consensus method: a respondent’s answer receives a score equal to the proportion of a reference sample of the adult population (n = 5,000) that endorsed the same answer. The respondent’s raw item scores are compiled to generate a total EI score, which is reported on a scale similar to that of intelligence tests, with a reference mean of 100 and a standard deviation of 15. An individual with an MSCEIT score above 115 is thought to demonstrate a high level of EI, whereas someone with a score below 85 is considered to have a level of EI that could potentially cause interpersonal problems.
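The general consensus method and the conversion to an IQ-style scale can be sketched in Python. This is a simplified illustration, not the publisher’s scoring algorithm, which Multi-Health Systems performs and which is not described here. The answer-option proportions, the use of a plain mean to combine item scores into a raw total, and the reference mean and standard deviation used for rescaling are all hypothetical values chosen for the example.

```python
# Simplified sketch of consensus scoring and standard-score conversion.
# HYPOTHETICAL: the reference proportions, the use of a plain mean to combine
# items, and the reference mean/SD of raw scores are illustrative only.
from statistics import mean


def consensus_item_score(answer: str, reference_proportions: dict[str, float]) -> float:
    """Credit an answer with the share of the reference sample that chose it."""
    return reference_proportions.get(answer, 0.0)


def raw_score(answers: list[str], keys: list[dict[str, float]]) -> float:
    """Combine item scores into a raw total (here: their mean)."""
    if len(answers) != len(keys):
        raise ValueError("one reference key is needed per answered item")
    return mean(consensus_item_score(a, k) for a, k in zip(answers, keys))


def standard_score(raw: float, ref_mean: float, ref_sd: float) -> float:
    """Rescale a raw score to a reference mean of 100 and an SD of 15."""
    return 100 + 15 * (raw - ref_mean) / ref_sd


def interpret(score: float) -> str:
    """Apply the thresholds described in the text (above 115, below 85)."""
    if score > 115:
        return "high EI"
    if score < 85:
        return "EI level that could potentially cause interpersonal problems"
    return "within the usual range"


if __name__ == "__main__":
    keys = [
        {"a": 0.10, "b": 0.60, "c": 0.30},  # item 1: most of the sample chose "b"
        {"a": 0.55, "b": 0.25, "c": 0.20},  # item 2: most of the sample chose "a"
    ]
    raw = raw_score(["b", "a"], keys)  # (0.60 + 0.55) / 2 = 0.575
    score = standard_score(raw, ref_mean=0.50, ref_sd=0.05)
    print(round(score, 1), interpret(score))  # 122.5 high EI
```

Consensus scoring rewards agreement with the reference group rather than a single "correct" key, which is why an answer chosen by 60% of the sample earns more credit than one chosen by 10%.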

Written examinations of knowledge

At the end of each of 13 preclerkship learning blocks, all students completed written examinations of multiple-choice and short-answer questions to assess their knowledge. A team of content experts constructed the examinations and linked content to specific learning objectives. Faculty generated each student’s overall score for each block by combining the student’s scores on the written, laboratory, and individual and population health examinations. In this study, we calculated participants’ mean overall scores for the six year 1 and seven year 2 learning blocks.

During clerkships, faculty also assessed students’ knowledge using written examinations. They calculated each student’s overall score for each rotation as a composite of their scores on the multiple-choice and short-answer sections. In this study, we calculated participants’ mean overall score for the eight core clerkship rotations in year 3.

OSCEs

Over the course of their four-year program, all medical students completed three OSCEs, one in each of years 2, 3, and 4. Each OSCE consisted of 9 or 10 stations that tested students’ clinical skills, such as history taking, physical examination, and management, and covered content from family medicine, internal medicine, psychiatry, pediatrics, surgery, and obstetrics–gynecology. Each station was seven minutes in length and was scored by a physician examiner who used a standardized content checklist and a communication rating scale. Content experts designed the stations, and a committee of chief examiners—four faculty members with expertise in OSCE case writing—reviewed them. All physician examiners contributed to the standard-setting process using the modified borderline group method.19

The communication rating scale used to score students’ performance included four items—(1) interpersonal skills (listens carefully; treats patient as an equal); (2) interviewing skills (uses words patient can understand; organized; does not interrupt; allows patient to explain); (3) patient education (provides clear, complete information; encourages patient to ask questions; answers questions clearly; confirms patient’s understanding/opinion); and (4) response to emotional issues (recognizes, accepts, and discusses emotional issues; controls own emotional state). Physician examiners rated each student on each item using a 5-point scale from poor to excellent, for a maximum potential score of 20 points. The physician examiners were faculty members who regularly taught medical students and participated in an orientation and training prior to the examination. Almost all had previous experience as OSCE examiners. Trained standardized patients portrayed the cases but were not involved in the scoring. Each OSCE station score comprised the mean checklist score (worth 80% of the total score) and the communication rating scale (worth 20% of the total score). The scores for each station were added to provide an overall OSCE score.
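The station and total scoring rules above can be expressed as a small Python sketch. It assumes that the checklist score is the percentage of checklist items performed, that the four communication items are rated 1 to 5 (giving the stated maximum of 20), and that both components are put on a 0 to 100 scale before the 80/20 weighting; the source does not spell out these details, and the example ratings are invented.

```python
# Sketch of OSCE scoring: 80% checklist + 20% communication per station,
# station scores summed into an overall OSCE score.
# ASSUMPTIONS: checklist scored as % of items done; communication items rated
# 1-5; both components rescaled to 0-100 before weighting.

CHECKLIST_WEIGHT = 0.80
COMMUNICATION_WEIGHT = 0.20
COMMUNICATION_MAX = 20  # four items x 5 points


def communication_total(ratings: list[int]) -> int:
    """Sum the four communication ratings (interpersonal, interviewing,
    patient education, response to emotional issues)."""
    if len(ratings) != 4 or any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("expected four ratings, each from 1 to 5")
    return sum(ratings)


def station_score(items_done: int, items_total: int, ratings: list[int]) -> float:
    """Weighted station score on a 0-100 scale."""
    checklist_pct = 100 * items_done / items_total
    communication_pct = 100 * communication_total(ratings) / COMMUNICATION_MAX
    return CHECKLIST_WEIGHT * checklist_pct + COMMUNICATION_WEIGHT * communication_pct


def overall_osce_score(stations: list[tuple[int, int, list[int]]]) -> float:
    """Add the station scores, as the text describes."""
    return sum(station_score(done, total, ratings) for done, total, ratings in stations)


if __name__ == "__main__":
    stations = [
        (15, 20, [4, 4, 4, 4]),  # 75% checklist, 16/20 communication -> 76.0
        (18, 20, [5, 4, 5, 4]),  # 90% checklist, 18/20 communication -> 90.0
    ]
    total = overall_osce_score(stations)
    print(round(total, 1), round(total / len(stations), 1))  # 166.0 83.0
```

Dividing the summed total by the number of stations (our assumption, not a step the text describes) returns it to a 0 to 100 scale, which makes examinations with 9 and 10 stations comparable.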

Clinical nominations and failures

In every clerkship rotation, preceptors could recognize students for their outstanding clinical performance on the basis of the preceptor’s impression of the student’s knowledge, skills, and behavior in providing patient care and the student’s role as a member of the health care team. On the basis of the aggregate information from all preceptors for that rotation, the clerkship director made the final decision regarding each nomination on the medical student performance evaluation (MSPE). In our study, we tallied the number of nominations over the two years of clerkship documented in each student’s MSPE and any failures on written examinations, OSCEs, or preceptor evaluations over the four years of medical school.
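The two outcome variables described above can be sketched as follows. The record layout (the `Assessment` class, its fields, and the per-rotation list of nomination flags) is hypothetical and not taken from the MSPE. Coding the presence of any failure as 0 or 1 is one way to correlate it with MSCEIT scores; a Pearson correlation with a binary variable is the point-biserial correlation.

```python
# Sketch of the per-student outcome tallies used in the analysis.
# HYPOTHETICAL record layout; the source does not describe how MSPE and
# assessment records are stored.
from dataclasses import dataclass


@dataclass(frozen=True)
class Assessment:
    kind: str     # "written", "osce", or "preceptor"
    year: int     # program year, 1-4
    passed: bool


def nomination_count(rotations_nominated: list[bool]) -> int:
    """Number of clerkship rotations in which the student was nominated."""
    return sum(rotations_nominated)


def any_failure(assessments: list[Assessment]) -> int:
    """1 if any assessment over the four years was failed, else 0."""
    return int(any(not a.passed for a in assessments))


if __name__ == "__main__":
    record = [
        Assessment("written", 1, True),
        Assessment("osce", 3, False),
        Assessment("preceptor", 4, True),
    ]
    print(nomination_count([True, False, True, False]), any_failure(record))  # 2 1
```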

Analysis

We calculated descriptive statistics and Pearson correlations between participants’ MSCEIT scores and their written examination scores, OSCE scores, and communication rating scale scores. With a potential sample size of 160 participants in each cohort, the study would have had enough power to detect a correlation as low as 0.22. We analyzed the two cohorts separately because examinations and assessments could differ from one academic year to the next. To control the familywise error rate across multiple correlations, we applied a Bonferroni correction to the set of analyses within each cohort. All data were anonymized, and participants’ personal information was protected. The Ottawa Hospital research ethics board approved our study.
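The power statement and the Bonferroni correction can be checked with a short Python sketch based on the Fisher z transformation of r. The source does not report the power or significance level behind the figure of 0.22; under the conventional assumptions of 80% power and a two-sided α of .05 (our assumption), this approximation gives a minimum detectable correlation of about 0.22 for n = 160. The number of correlations in each Bonferroni family is not stated, so `m` is a parameter, and the value 10 below is purely hypothetical.

```python
# Fisher z sketch for: (1) the smallest correlation detectable at a given n,
# (2) an approximate two-sided p-value for r, and (3) a Bonferroni threshold.
# ASSUMPTIONS: 80% power and two-sided alpha = .05 (conventional defaults);
# Fisher z is a normal approximation, not an exact t test.
from math import atanh, sqrt, tanh
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def min_detectable_r(n: int, alpha: float = 0.05, power: float = 0.80) -> float:
    """Smallest population correlation detectable with the given power."""
    z_alpha = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_beta = _STD_NORMAL.inv_cdf(power)
    return tanh((z_alpha + z_beta) / sqrt(n - 3))


def r_p_value(r: float, n: int) -> float:
    """Approximate two-sided p-value for H0: rho = 0 (requires |r| < 1)."""
    z = atanh(r) * sqrt(n - 3)
    return 2 * (1 - _STD_NORMAL.cdf(abs(z)))


def bonferroni_alpha(alpha: float, m: int) -> float:
    """Per-test threshold that keeps the familywise error rate at or below alpha."""
    return alpha / m


if __name__ == "__main__":
    print(round(min_detectable_r(160), 2))  # 0.22
    print(round(min_detectable_r(105), 2))  # 0.27
    p = r_p_value(0.35, 106)
    print(p < bonferroni_alpha(0.05, 10))   # True, with a hypothetical m = 10
```

Because a Bonferroni correction lowers the per-test α, it also raises the minimum detectable r at a given n; evaluating `min_detectable_r(160, alpha=bonferroni_alpha(0.05, m))` shows the size of that effect for any assumed m.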

Results

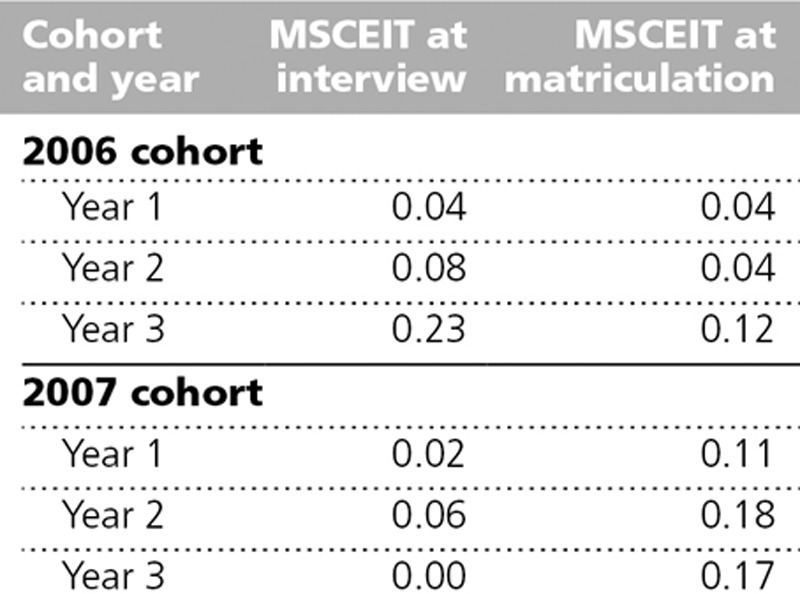

In 2006, 70% of applicants (333/475) completed the MSCEIT; in 2007, 67% (326/490) did. Participants were similar to nonparticipants in gender and eventual offer of admission. Among students admitted to the university, participation was 70% (105/151) at interview and 79% (120/151) at matriculation for the 2006 cohort, and 66% (101/153) at interview and 69% (106/153) at matriculation for the 2007 cohort. In Table 1, we include the MSCEIT scores for both cohorts at both time points (interview and matriculation). For the 2006 and 2007 cohorts, respectively, the split-half reliability coefficients for the MSCEIT were 0.87 and 0.89, and the Cronbach alphas were 0.86 and 0.87. MSCEIT scores all fell within the “usual” range, as described by the test developers, with no significant differences between the cohorts.

Table 1.

Mean (Standard Deviation [SD]), Minimum, and Maximum Scores on the Mayer–Salovey–Caruso Emotional Intelligence Test (MSCEIT), Written Examinations, and Objective Structured Clinical Examinations (OSCEs), University of Ottawa Faculty of Medicine

Table 1 also includes the mean, standard deviation, minimum, and maximum scores for the written and OSCE examinations and the communication rating scale. The year 1, 2, and 3 written examination scores fell within the expected range. The year 2, 3, and 4 OSCE scores demonstrated typical means ranging from 67% to 80%, and the distribution of scores was similar to that in previous years.

Table 2 includes the results of our correlation analysis between students’ MSCEIT scores and their written examination scores. We found no significant correlations between scores on the MSCEIT and those on the written examinations for either cohort.

Table 2.

Correlations Between Scores on the Mayer–Salovey–Caruso Emotional Intelligence Test (MSCEIT) and on Written Examinations, University of Ottawa Faculty of Medicine

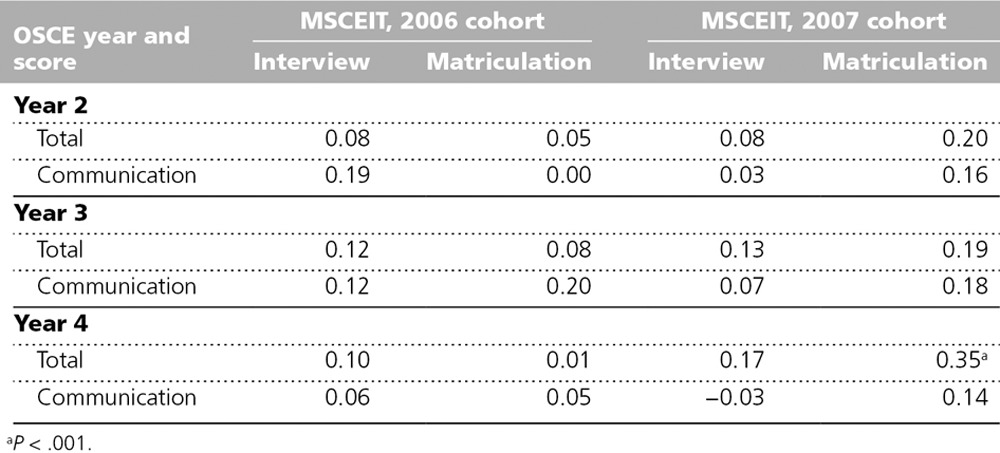

Table 3 includes the results of our correlation analysis between students’ MSCEIT scores and their OSCE scores. For the 2006 cohort, we found no significant correlation between total OSCE scores and MSCEIT scores or between communication subscores and MSCEIT scores. For the 2007 cohort, the pattern was similar, with largely small and nonsignificant correlations. The only statistically significant correlation was a positive one between the year 4 total OSCE score and the MSCEIT score at matriculation (r = 0.35, P < .001).

Table 3.

Correlations Between Scores on the Mayer–Salovey–Caruso Emotional Intelligence Test (MSCEIT) and on the Objective Structured Clinical Examinations (OSCEs), University of Ottawa Faculty of Medicine

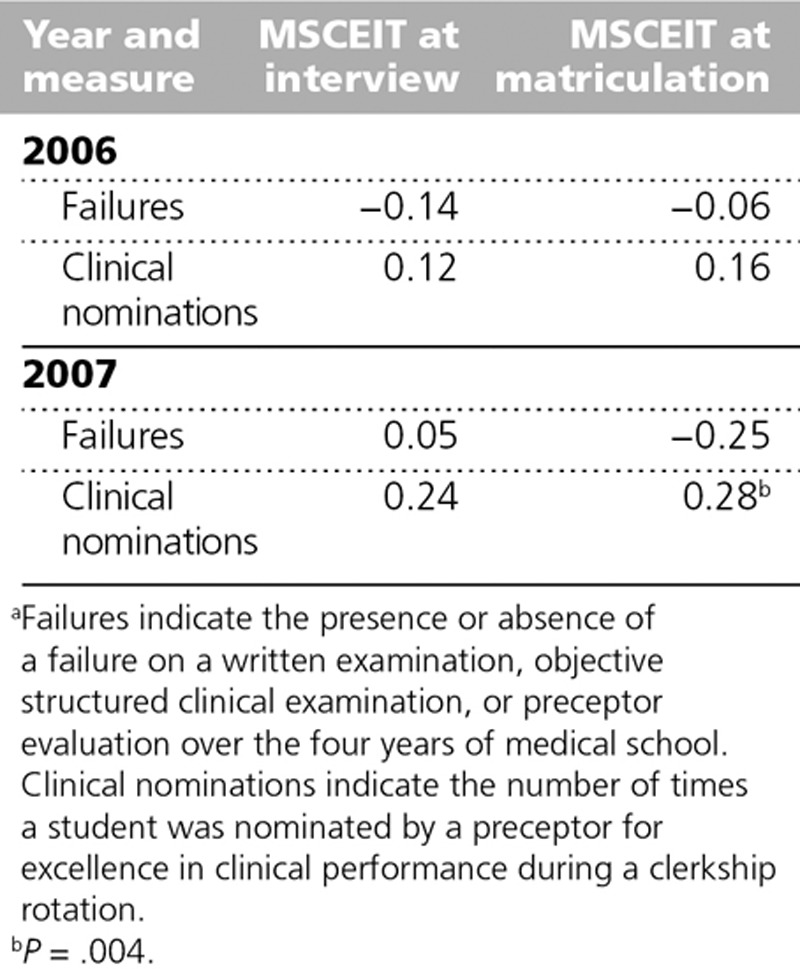

In the 2006 cohort, 37 participants (26%) never received a clinical nomination, 39 (28%) received a single clinical nomination, and 65 (46%) received two or more nominations. In the 2007 cohort, 39 participants (28%) never received a nomination, 39 (28%) received a single nomination, and 63 (45%) received two or more nominations. We found only one significant correlation between MSCEIT scores and clinical nominations—between MSCEIT scores at matriculation and clinical nominations for the 2007 cohort (see Table 4). The total number of failures on any examination (i.e., written, OSCE, and preceptor) was 26/140 (19%) for the 2006 cohort and 14/141 (10%) for the 2007 cohort. We found no significant correlations in either cohort between MSCEIT scores and the presence or absence of a failure over four years of medical school (see Table 4).
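The reported percentages follow from the counts in the text by simple rounding, as the short Python check below shows. Rounding each category separately is why the 2007 nomination percentages (28%, 28%, 45%) sum to 101%. None of the counts here are new; the function name is illustrative.

```python
# Reproduce the reported percentages from the counts given in the text.
# Nomination categories: none, one, two or more.


def rounded_percentages(*counts: int) -> list[int]:
    """Percentage of the total in each category, rounded to whole numbers."""
    total = sum(counts)
    return [round(100 * c / total) for c in counts]


if __name__ == "__main__":
    print(rounded_percentages(37, 39, 65))  # 2006 cohort: [26, 28, 46]
    print(rounded_percentages(39, 39, 63))  # 2007 cohort: [28, 28, 45]
    print(rounded_percentages(26, 140 - 26))  # 2006 failures: [19, 81]
```

Python’s `round` uses round-half-to-even, but none of these values falls exactly on a .5 boundary, so the result matches conventional rounding.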

Table 4.

Correlations Between Scores on the Mayer–Salovey–Caruso Emotional Intelligence Test (MSCEIT) and Failures or Clinical Nominations, University of Ottawa Faculty of Medicine

Discussion

In this study, we sought to determine whether EI measured at admissions predicted subsequent cognitive and noncognitive skills in medical school. We found no statistically significant correlations between EI and written examination scores. This finding did not surprise us, because we did not expect EI to predict performance on a purely cognitive test. Other studies have reported similar findings, but because they measured EI with self-report tools, their results might not have held for an abilities test such as the one we used.9

If EI is necessary for successful doctor–patient relationships, then students with higher EI scores would be expected to have higher clinical skills scores, including measures of communication. Our findings did not support this prediction. We found no correlation between EI and OSCE scores or communication scores for the 2006 cohort. For the 2007 cohort, we found a weak to moderate correlation between EI at matriculation and year 4 OSCE scores. In neither cohort, however, did MSCEIT scores at interview, the time point relevant to admissions decisions, predict students’ OSCE performance. A previous study examined the correlation between EI and OSCE scores and found a modest correlation (range 0.17–0.20) for communication skills.20 Two key differences in methodology may explain the difference in outcomes. In that study, the authors measured communication skills using a checklist of three dichotomously scored items, and they measured EI using the Trait Meta-Mood Scale and Davis’ Interpersonal Reactivity Index, two self-report tools.20 In our study, we used a more comprehensive communication rating scale with four items (interpersonal skills, interviewing skills, patient education, and response to emotional issues), each rated on a 5-point scale rather than scored dichotomously. In addition, we assessed EI with an abilities test, which some authors consider a better measure of the EI construct.11

Finally, we examined the correlation between MSCEIT scores and clinical nominations, which are a measure of excellence in clinical performance. We would argue that such excellence requires many skills related to EI, such as communication, teamwork, and professionalism. Yet, we found no significant correlations between MSCEIT scores and clinical nominations, except for a weak correlation between MSCEIT scores at matriculation and clinical nominations for the 2007 cohort.

Limitations

Our study has a number of limitations. First, self-selection may have biased our samples, as applicants and students were not required to participate. Second, admissions procedures and assessment tools vary among medical schools; because we conducted our study at a single institution, our findings may not generalize to other schools. In addition, controversy exists regarding both the construct of EI and its predictive validity. Some authors have argued that the lack of conceptual clarity informing different models and measures of EI should lead to caution in considering EI as a measure of success.18 Nevertheless, we designed our study to be rigorous: we measured EI in two separate cohorts, at two time points, with an abilities test, and compared the scores with standard measures of cognitive and clinical skills.

Conclusions

In conclusion, we found that EI did not correlate with written examination scores or examination failures and that it correlated inconsistently with OSCE scores and nominations for excellence in clinical performance. Thus, EI, as measured by an abilities test at admissions, does not appear to reliably predict future academic performance during medical school. To further explore the use of EI at admissions, the next phase of our study will compare EI with other academic attributes, such as professionalism, need for examination deferral or leave of absence, and need for assistance from the student affairs or wellness office.

Acknowledgments: The authors wish to thank T. Rainville, D. Clary, and M-H Urro for their administrative assistance, as well as Luanne Waddell and Vikki McHugh from the Ottawa Exam Center for their invaluable support.

Footnotes

Funding/Support: The Ontario Ministry of Health and Long-Term Care supported this study with a grant.

Other disclosures: None reported.

Ethical approval: The Ottawa Hospital research ethics board approved this study.

Previous presentations: The findings from this study were shared during an oral presentation at the Canadian Conference on Medical Education, Banff, Alberta, Canada, April 2012.

References

1. Shulruf B, Poole P, Wang GY, Rudland J, Wilkinson T. How well do selection tools predict performance later in a medical programme? Adv Health Sci Educ Theory Pract. 2012;17:615–626. doi: 10.1007/s10459-011-9324-1.

2. Kirch DG. AAMC President’s Address 2011: The New Excellence. Presented at: Association of American Medical Colleges Annual Meeting; November 6, 2011; Denver, Colo. https://www.aamc.org/download/266128/data/2011presidentsaddress.pdf. Accessed November 26, 2013.

3. Accreditation Council for Graduate Medical Education. ACGME Common Program Requirements. 2010. http://acgme-2010standards.org/pdf/Common_Program_Requirements_07012011.pdf. Accessed April 30, 2013. [No longer available.]

4. Frank JR. The CanMEDS 2005 Physician Competency Framework. Ottawa, Ontario, Canada: Royal College of Physicians and Surgeons of Canada; 2005. http://www.royalcollege.ca/portal/page/portal/rc/common/documents/canmeds/resources/publications/framework_full_e.pdf. Accessed November 26, 2013.

5. Grewal D, Davidson HA. Emotional intelligence and graduate medical education. JAMA. 2008;300:1200–1202. doi: 10.1001/jama.300.10.1200.

6. Mayer JD, Salovey P, Caruso DR. Emotional intelligence: New ability or eclectic traits? Am Psychol. 2008;63:503–517. doi: 10.1037/0003-066X.63.6.503.

7. Van Rooy DL, Viswesvaran C. Emotional intelligence: A meta-analytic investigation of predictive validity and nomological net. J Vocat Behav. 2004;65:71–95.

8. Jordan PJ, Ashkanasy NM, Härtel CEJ, Hooper GS. Workgroup emotional intelligence. Scale development and relationship to team process effectiveness and goal focus. Hum Resource Manag Rev. 2002;12:195–214.

9. Arora S, Ashrafian H, Davis R, Athanasiou T, Darzi A, Sevdalis N. Emotional intelligence in medicine: A systematic review through the context of the ACGME competencies. Med Educ. 2010;44:749–764. doi: 10.1111/j.1365-2923.2010.03709.x.

10. Cherry MG, Fletcher I, O’Sullivan H, Shaw N. What impact do structured educational sessions to increase emotional intelligence have on medical students? BEME guide no. 17. Med Teach. 2012;34:11–19. doi: 10.3109/0142159X.2011.614293.

11. Carr SE. Emotional intelligence in medical students: Does it correlate with selection measures? Med Educ. 2009;43:1069–1077. doi: 10.1111/j.1365-2923.2009.03496.x.

12. Leddy JJ, Moineau G, Puddester D, Wood TJ, Humphrey-Murto S. Does an emotional intelligence test correlate with traditional measures used to determine medical school admission? Acad Med. 2011;86(10 suppl):S39–S41. doi: 10.1097/ACM.0b013e31822a6df6.

13. McNaughton N. Discourse(s) of emotion within medical education: The ever-present absence. Med Educ. 2013;47:71–79. doi: 10.1111/j.1365-2923.2012.04329.x.

14. Grubb WL 3rd, McDaniel MA. The fakability of Bar-On’s Emotional Quotient Inventory Short Form: Catch me if you can. Hum Perform. 2007;20:43–59.

15. Hartman NS, Grubb WL 3rd. Deliberate faking on personality and emotional intelligence measures. Psychol Rep. 2011;108:120–138. doi: 10.2466/03.09.28.PR0.108.1.120-138.

16. Daus CS, Ashkanasy NM. The case for the ability-based model of emotional intelligence in organizational behavior. J Organ Behav. 2005;26:453–466.

17. Mayer JD, Salovey P, Caruso DR, Sitarenios G. Measuring emotional intelligence with the MSCEIT V2.0. Emotion. 2003;3:97–105. doi: 10.1037/1528-3542.3.1.97.

18. Lewis NJ, Rees CE, Hudson JN, Bleakley A. Emotional intelligence medical education: Measuring the unmeasurable? Adv Health Sci Educ Theory Pract. 2005;10:339–355. doi: 10.1007/s10459-005-4861-0.

19. Dauphinee WD, Blackmore DE, Smee S, Rothman AI, Reznick R. Using the judgments of physician examiners in setting the standards for a national multi-center high stakes OSCE. Adv Health Sci Educ Theory Pract. 1997;2:201–211. doi: 10.1023/A:1009768127620.

20. Stratton TD, Elam CL, Murphy-Spencer AE, Quinlivan SL. Emotional intelligence and clinical skills: Preliminary results from a comprehensive clinical performance examination. Acad Med. 2005;80(10 suppl):S34–S37. doi: 10.1097/00001888-200510001-00012.