Abstract

Purpose

Team-based learning (TBL), a structured form of small-group learning, has gained popularity in medical education in recent years. A growing number of medical schools have adopted TBL in a variety of combinations and permutations across a diversity of settings, learners, and content areas. The authors conducted this systematic review to establish the extent, design, and practice of TBL programs within medical schools to inform curriculum planners and education designers.

Method

The authors searched the MEDLINE, PubMed, Web of Knowledge, and ERIC databases for articles on TBL in undergraduate medical education published between 2002 and 2012. They selected and reviewed articles that included original research on TBL programs and assessed the articles according to the seven core TBL design elements (team formation, readiness assurance, immediate feedback, sequencing of in-class problem solving, the four S’s [significant problem, same problem, specific choice, and simultaneous reporting], incentive structure, and peer review) described in established guidelines.

Results

The authors identified 20 articles that satisfied the inclusion criteria. They found significant variability across the articles in terms of the application of the seven core design elements and the depth with which they were described. The majority of the articles, however, reported that TBL provided a positive learning experience for students.

Conclusions

In the future, faculty should adhere to a standardized TBL framework to better understand the impact and relative merits of each feature of their program.

Parmelee and colleagues1 defined team-based learning (TBL) as “an active learning and small group instructional strategy that provides students with opportunities to apply conceptual knowledge through a sequence of activities that includes individual work, team work, and immediate feedback.” Although TBL can be applied to both large (>100 students) and small classes (<25 students), it generally involves multiple groups of five to seven students.1 In its purest format, TBL is highly structured and requires the implementation of specific steps and recommended core design elements.1

Originally developed more than 20 years ago by Dr. Larry Michaelsen for use in business schools, TBL has gained popularity in medical education in recent years.1 A growing number of medical schools have adopted TBL in some format.2,3 Haidet and colleagues4 found a variety of combinations and permutations of TBL across a diversity of settings, learners, and content areas in health sciences education. They subsequently proposed a set of guidelines for standardizing the way in which TBL is both reported and critiqued in the medical and health sciences literature. Using this standardized framework for both the implementation and reporting of TBL will ensure its fidelity as a learning strategy and will provide a greater opportunity for others to replicate the activities and gauge outcomes.4 According to these guidelines, in addition to outlining the scope (class size, subject, etc.) of the program, researchers should also report on the “seven core design elements that underlie the TBL method.”4 These elements are team formation, readiness assurance (RA), immediate feedback, sequencing of in-class problem solving, the four S’s (significant problem, same problem, specific choice, and simultaneous reporting), incentive structure, and peer review.

Despite the increasing number of publications providing a significant evidence base for TBL, no published systematic review has detailed the extent of TBL within medical schools. Therefore, the aim of this review was to summarize the published evidence regarding the extent, design, and practice of TBL programs within medical schools to inform curriculum planners and education designers, particularly those who are considering modifications to current teaching pedagogies and TBL strategies.

Method

In this review, we defined medical students as students enrolled in undergraduate or graduate-entry university medical programs that lead to the qualification of medical doctor. Our search strategy included combinations of the following search terms: medicine; medical education; medical education, undergraduate; team-based learning; team learning; and TBL. We searched the MEDLINE, PubMed, Web of Knowledge, and ERIC databases because of their known indexing of publications in medicine and education. We also searched the reference lists of all identified articles. We limited our search to original articles published between 2002 and 2012.

Because the primary focus of our review was articles reporting on undergraduate medical education, we excluded articles reporting on postgraduate medical education, including resident training, continuing medical education, and professional development. We also excluded TBL programs in nursing and the other health sciences. Because we were interested in which components of TBL programs were implemented and how, we included articles that reported on modified TBL programs, provided the researchers considered TBL the primary teaching method. We excluded articles that presented multimethod delivery models, in which TBL was just one component of the overall teaching method, if the TBL components were not clearly defined or could not be clearly differentiated from the other delivery methods. Finally, we excluded expert opinions and other commentaries that did not contain original research on TBL programs.

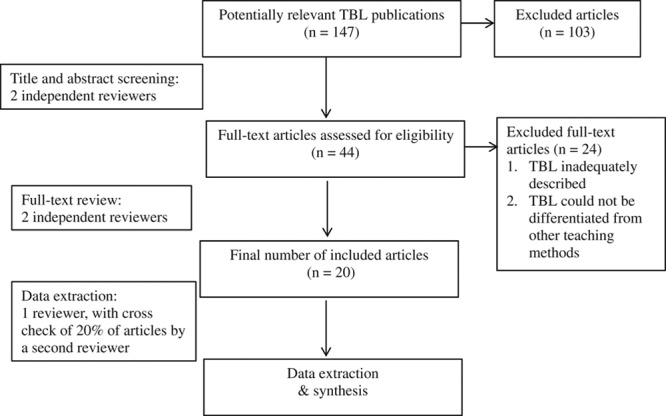

Our literature search yielded 147 potential publications on TBL in undergraduate medical programs (see Figure 1 for our complete search and study selection strategy). Following an initial review of the titles and abstracts for relevancy and removal of duplicate results, we had a total of 44 citations. Two coauthors (A.W.B. and D.M.M.) then independently appraised these 44 full-text articles for relevance. From this appraisal, we excluded 24 articles because the TBL program was inadequately described or we could not differentiate it from the other teaching methods assessed. The same two coauthors (A.W.B. and D.M.M.) then analyzed the remaining 20 articles using Haidet and colleagues’4 guidelines for reporting TBL activities.

Figure 1. Flowchart of the literature search and study selection process in a systematic review of the literature on team-based learning (TBL) programs in medical education published between 2002 and 2012.

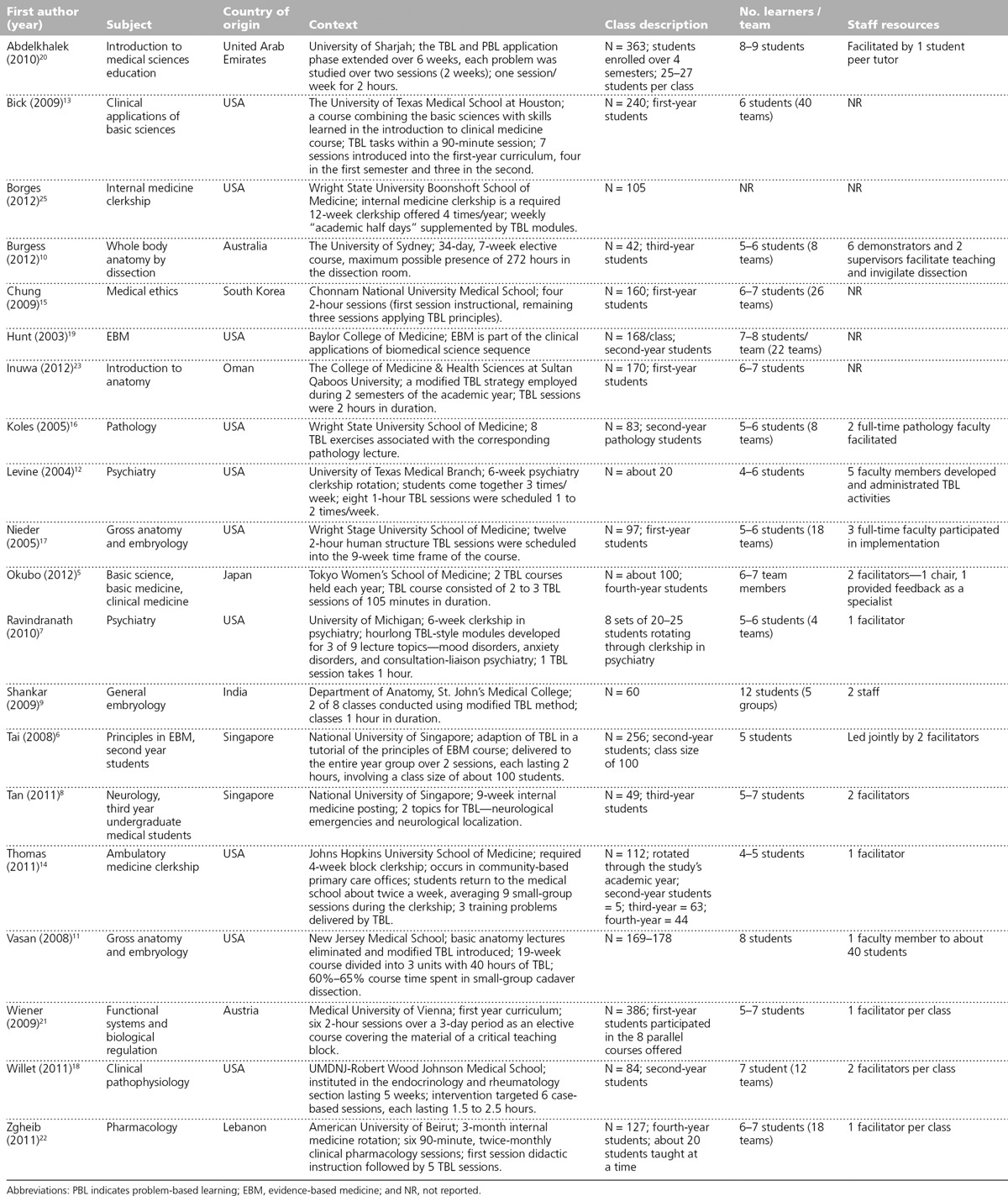

From this full-text analysis, we extracted data related to the implementation context and scope of the TBL program (see Appendix 1), including the program subject, country of implementation, scope of the program (i.e., single session, series, entire course, etc.), class description (i.e., stage of training, class size, etc.), number of learners per team, and staff resource allocation.

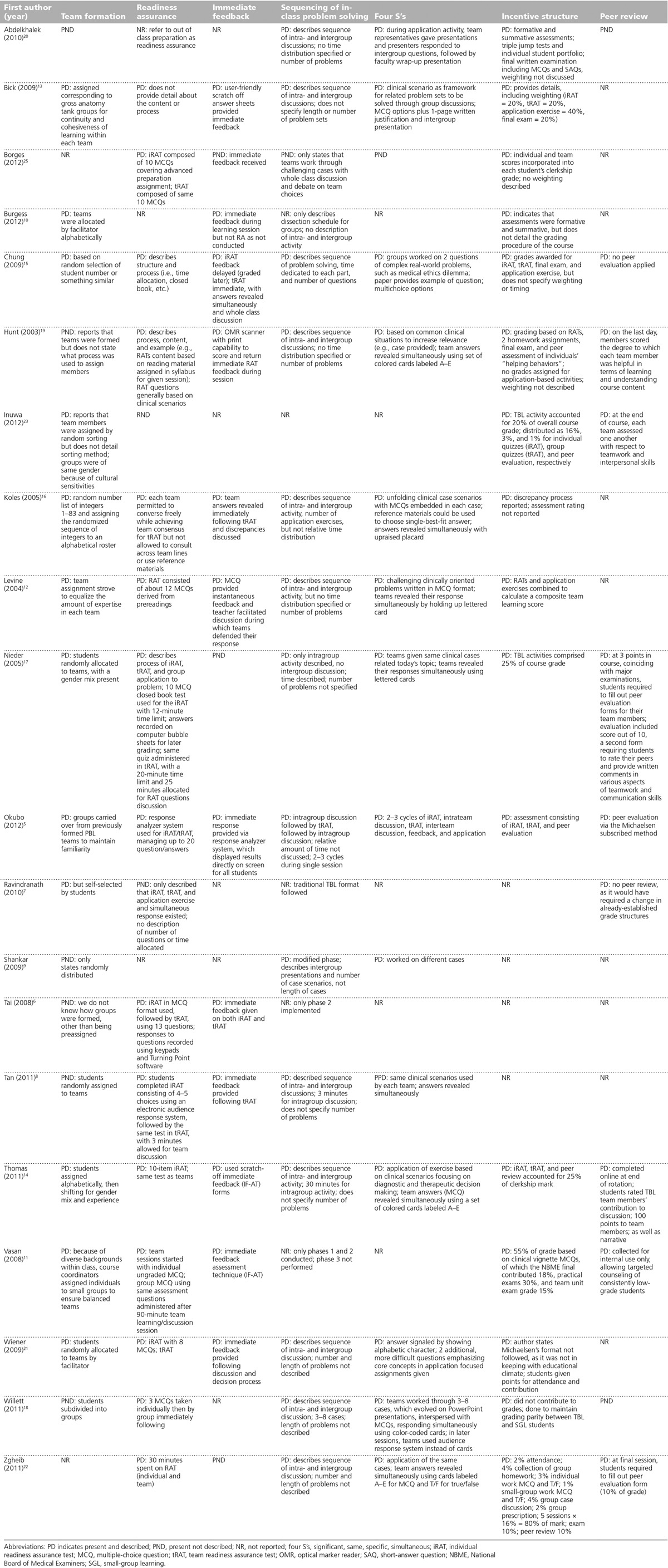

We then used the guidelines developed by Haidet and colleagues4 in critiquing the body of evidence on TBL. We appraised articles by applying these guidelines to determine the extent to which the study applied the seven core TBL design elements: team formation, RA, immediate feedback, sequencing of in-class problem solving, the four S’s, incentive structure, and peer review. We rated each of these elements as present and described, present and partially described, present but not described, or not reported, and recorded a summary of the relevant implementation details.
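The four-level rating scheme described above lends itself to a simple structured record. The following is a minimal sketch, not the procedure used in this review: the names `CORE_ELEMENTS`, `Rating`, and `appraise` are hypothetical, and the example article is invented for illustration only.

```python
from collections import Counter
from enum import Enum

# The seven core design elements named in the reporting guidelines.
CORE_ELEMENTS = [
    "team formation",
    "readiness assurance",
    "immediate feedback",
    "sequencing of in-class problem solving",
    "the four S's",
    "incentive structure",
    "peer review",
]


class Rating(Enum):
    """The four rating levels applied to each design element."""
    PRESENT_DESCRIBED = "present and described"
    PRESENT_PARTIAL = "present and partially described"
    PRESENT_NOT_DESCRIBED = "present but not described"
    NOT_REPORTED = "not reported"


def appraise(ratings):
    """Check that every element is rated, then count each rating level."""
    missing = [e for e in CORE_ELEMENTS if e not in ratings]
    if missing:
        raise ValueError(f"unrated elements: {missing}")
    return Counter(ratings[e] for e in CORE_ELEMENTS)


# Hypothetical article (assumption): six elements fully described,
# peer review not reported. Not taken from the review's data.
example = {e: Rating.PRESENT_DESCRIBED for e in CORE_ELEMENTS}
example["peer review"] = Rating.NOT_REPORTED
summary = appraise(example)
```

Requiring a rating for all seven elements makes "not reported" an explicit finding rather than a silent gap, which mirrors the distinction the rating scheme draws.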

We considered a classic TBL program one that included three phases: (1) advanced preparation, (2) RA, and (3) application exercise.2 We considered TBL programs to be modified if they did not include one or more of the three phases or if one or more of the phases was significantly different from the implementation approach described by the TBL guide from the Association for Medical Education in Europe.1

Results

Of the 20 articles we included in our final review, 14 described a classic TBL program and 6 described modified ones. The studies were conducted in 10 different countries, with seven universities in the United States representing half (10) of the studies. Singapore was the next most commonly represented country, with two studies, both at the National University of Singapore. Other countries represented, with one study each, were Australia, the United Arab Emirates, South Korea, Oman, Japan, India, Austria, and Lebanon. See Appendix 1 for more details about the 20 included articles, such as context, class description, number of learners, and staff resource allocation.

Context of TBL programs

TBL programs were implemented in a wide range of undergraduate medical curricula, including multiple disciplines and content areas, such as the basic sciences, medical ethics, neurology, pharmacology, anatomy, evidence-based medicine, ambulatory care, psychiatry, pathology, and physiology. However, TBL programs were more commonly applied during the preclinical years (14/20) than the clinical years (6/20).

Scope

TBL programs ranged from just two to three sessions of a single course,5–7 or sessions on specific topics within a course,8,9 to entire courses of at least eight sessions.10,11 In the majority of programs, each TBL session was one and a half to two hours long.

Class and team size

Class sizes ranged from 20 students in a psychiatry clerkship12 to 240 in a basic sciences clinical application course.13 The number of students per team ranged from 4 members14 to 12 members.9

Appendix 2 summarizes the 20 studies in terms of Haidet and colleagues’4 reporting guidelines.

Team formation

Random or alphabetical allocations were the most commonly described methods of team formation. Authors described two random allocation methods—the random selection of student numbers15 and the use of a random list of integers assigned to an alphabetical roster.16 In addition to applying a random allocation strategy, Nieder and colleagues17 reported using measures to ensure gender mix, whereas Thomas and Bowen14 reported using measures to ensure both gender and experience mix.

Other methods included simple team allocation within existing team membership, such as current problem-based learning teams,13 or carrying over membership from historic teams.5 Despite recommendations to not allow students to self-select their groups,4 one article reported using this method.7
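One of the random allocation methods reported in this section, assigning random integers to an alphabetical roster, could be implemented roughly as follows. This is a sketch under stated assumptions rather than any cited author's procedure: the function name `allocate_teams`, the default target team size of six, and the round-robin dealing step are all illustrative choices.

```python
import random


def allocate_teams(roster, team_size=6, seed=None):
    """Assign students to teams by random integers on an alphabetical roster.

    team_size is a target (assumption); actual teams differ by at most one.
    """
    rng = random.Random(seed)
    ordered = sorted(roster)  # alphabetical roster
    # Draw a random permutation of integers, one per student.
    keys = rng.sample(range(len(ordered)), len(ordered))
    # Reorder the roster by the integer each student received.
    shuffled = [name for _, name in sorted(zip(keys, ordered))]
    n_teams = max(1, round(len(shuffled) / team_size))
    teams = [[] for _ in range(n_teams)]
    # Deal students out in turn so team sizes stay balanced.
    for i, name in enumerate(shuffled):
        teams[i % n_teams].append(name)
    return teams


# Hypothetical class of 20 students.
students = [f"student_{i:02d}" for i in range(20)]
teams = allocate_teams(students, team_size=6, seed=1)
```

A purely random draw of this kind does not guarantee a mix of gender or experience on each team; balancing such characteristics would need an additional stratification step.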

Readiness assurance

RA was assessed at both the individual student and team level. The majority of the articles (15/20) reported and described the RA process, which largely was in line with recommendations.1,4 All individual readiness assurance tests (iRATs) and team readiness assurance tests (tRATs) included multiple-choice questions (MCQs), with the same questions used for both tests. Although Willett and colleagues18 reported using as few as three MCQs, the number typically ranged from 10 to 13. In the few instances where the authors described the time allocation for RA, it varied considerably, from 12 to 30 minutes.

Immediate feedback

Eleven articles reported that faculty provided immediate feedback following the tRAT using a class discussion, which also addressed disagreements among students, and between faculty and students, regarding the correct answers to the tRAT. The remaining articles either did not report or did not provide a description of the immediate feedback design element. Alternative methods of providing immediate feedback included the immediate feedback assessment technique, which involved scratch-off answer sheets11,13,14; electronic audience response systems, which displayed results directly on a screen for all students to see5,8; and a scanner with print capability to score and return individual and group readiness assessment tests during the session.19

Sequencing of in-class problem solving

The majority of articles clearly discussed the sequence of intra- and intergroup discussion but did not provide detail on the length or number of problems. Only one15 provided a detailed explanation of the sequence, number of problems, and time allocation, along with an example of an application exercise question. Nieder and colleagues17 discussed intragroup activity only; their program did not include intergroup discussion during the team application of the problem (tAPP).17 Three articles described the use of group presentations during the application phase.9,13,20 Abdelkhalek and colleagues20 claimed that presentation-generated discussion and feedback satisfied the intergroup discussion element.

The four S’s

In terms of significance, several authors emphasized that the application questions required higher-level thinking and problem-solving skills than the essential knowledge recall questions typically applied during the RA process.15,16,21 Often, problems were based on complex real-world issues or common clinical scenarios to increase relevance. Next, in all but one case,9 teams worked on the same problem. In addition, MCQs were by and large the method of specific choice used for teams to indicate their single-best-fit answer. Finally, the majority of the articles described the use of simultaneous reporting. Color-coded and lettered placards were commonly used so teams could simultaneously reveal their responses. In addition to MCQs, one program used true/false questions requiring simultaneous responses.22 Bick and colleagues13 reported that, in addition to simultaneous reporting using MCQs, teams were required to submit a written one-page justification for their choice.

Incentive structure

Three articles provided detailed descriptions of incentive structures, including the weighting of grades.13,22,23 For example, Bick and colleagues13 described the following weighting structure—iRAT 20%, tRAT 20%, tAPP 40%, and final examination 20%, not including the peer review mark. The majority of authors stated that grades were awarded, but they did not provide a description of the mark distribution. Burgess and colleagues10 indicated that they used formative and summative assessments but did not detail the grading procedure for the course. Although they awarded points for attendance at TBL activities, Wiener and colleagues21 reported that they did not follow an incentive structure because it was not in keeping with their educational climate.
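To make the weighting arithmetic concrete, the sketch below applies the percentages reported by Bick and colleagues (iRAT 20%, tRAT 20%, tAPP 40%, final examination 20%) to a set of component scores. The function name `course_grade`, the 0 to 100 score scale, and the example scores are assumptions made for illustration; the peer review mark is left out, as in the reported structure.

```python
# Weights as whole percentages, taken from the structure reported above.
WEIGHTS = {"iRAT": 20, "tRAT": 20, "tAPP": 40, "final": 20}


def course_grade(scores, weights=WEIGHTS):
    """Return the weighted course grade for scores given on a 0-100 scale."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must sum to 100%")
    missing = [k for k in weights if k not in scores]
    if missing:
        raise ValueError(f"missing component scores: {missing}")
    return sum(weights[k] * scores[k] for k in weights) / 100


# Hypothetical student scores (assumption, not data from any study).
scores = {"iRAT": 70, "tRAT": 90, "tAPP": 85, "final": 80}
grade = course_grade(scores)  # (1400 + 1800 + 3400 + 1600) / 100 = 82.0
```

Under this weighting, team-level components (tRAT and tAPP) account for 60% of the grade, which illustrates how an incentive structure can reward collaboration as well as individual preparation.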

Peer review

Ravindranath and colleagues7 stated that they did not implement a peer review as it would have required a change in the established grading structure, and Chung and colleagues15 acknowledged that they did not use a peer review either. Several other articles reported evaluating various parameters of peer review, including helpfulness of team members in terms of learning and understanding19,22,23 and team members’ teamwork and interpersonal skills.23 Although peer review typically took place on the last day of the course, Nieder and colleagues17 reported a peer review that occurred at three points throughout the course coinciding with major examinations and that included a score out of 10 points plus written comments covering teamwork and communication skills. In the TBL program described by Thomas and Bowen,14 students completed a peer review online at the end of the rotation, which involved the rating of team members’ contributions to discussion by distributing 100 points across the team members and providing narrative feedback.
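The point-distribution form of peer review, in which each student divides 100 points among team members, can be checked and tallied as sketched below. The details are assumptions: the source does not say whether students rated themselves, so this sketch excludes self-rating, and the function names and the four-member example team are hypothetical.

```python
def validate_allocation(rater, team, points, total=100):
    """Check one student's distribution of points across teammates."""
    expected = set(team) - {rater}  # assumption: no self-rating
    if set(points) != expected:
        raise ValueError(f"{rater} must rate exactly: {sorted(expected)}")
    if any(p < 0 for p in points.values()):
        raise ValueError("points cannot be negative")
    if sum(points.values()) != total:
        raise ValueError(f"{rater} must distribute exactly {total} points")


def points_received(team, allocations):
    """Sum the points each team member received from all peers."""
    totals = {member: 0 for member in team}
    for rater, points in allocations.items():
        validate_allocation(rater, team, points)
        for peer, p in points.items():
            totals[peer] += p
    return totals


# Hypothetical four-member team and allocations (illustration only).
team = ["A", "B", "C", "D"]
allocations = {
    "A": {"B": 40, "C": 30, "D": 30},
    "B": {"A": 50, "C": 25, "D": 25},
    "C": {"A": 30, "B": 40, "D": 30},
    "D": {"A": 20, "B": 50, "C": 30},
}
totals = points_received(team, allocations)
```

Because every rater hands out the same fixed total, a member's received points show relative contribution within the team; narrative feedback would be collected separately.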

Discussion

TBL is a relatively new pedagogy in medical education.24 As a learning tool, it enables a large group of students to take part in small-group learning experiences without a large number of faculty. Students are attracted to the active and collaborative approach of TBL, whereas faculty are interested in its integrated approach to developing students’ professionalism skills, such as leadership, communication, and teamwork.20 Moreover, with an increasing number of medical students and a decreasing number of teaching staff, the implementation of TBL programs offers resource-saving measures for medical schools. From the studies that we reviewed, we learned that the impact of TBL has been assessed by various outcomes, including student knowledge acquisition, student perception, and faculty perception.

Context of TBL programs

We attribute the dominance of TBL programs in the preclinical years to two factors—preclinical students often are placed in larger groups during this phase of their training, and the resource application of small-group learning could provide the greatest measurable benefit during this period. When faculty used TBL during a clinical rotation or clerkship, it was during scheduled teaching periods, such as when students returned to campus for blocks of teaching14 or weekly academic half-days.25 We found no reports of observed bias within the preclinical years of training, with examples of TBL distributed throughout that period.

Scope

We found a large range in the number of TBL sessions (from two to at least eight). Searle and colleagues2 suggested that a negative bias might result when exposure to TBL is so minimal (i.e., through only a few sessions) that the benefits of the method (e.g., increased in-class engagement and application of content) may not be realized.

Class and team size

Wiener and colleagues21 reported that undersized teams (i.e., two students) scored significantly lower than regular-sized teams (i.e., five to seven students) on RA tests, suggesting that an optimal number of team members to engage students in discussion may exist. According to Michaelsen and colleagues,26 teams should consist of between five and seven students—small enough to develop process and maximize team dynamics, yet large enough to include sufficient intellectual resources and discussion.

Similar to Haidet and colleagues,4 we found substantial variability across the articles we reviewed in terms of the application of the seven core design elements and the depth with which they were described.

Team formation

The majority of programs aimed to promote continuity of learning and cohesiveness of teams. The random allocation methods described in the articles we reviewed likely were the most closely aligned with Michaelsen and Richards’24 ideals for team allocation: that students should be assigned to teams by the facilitator using a transparent process, giving each team a diverse mix of students and ensuring that no preexisting social or friendship-based groupings are formed. Although random and alphabetical allocation methods are likely to prevent teams of friends from self-forming, these methods may not adequately achieve the preferred diversity of learner characteristics. Levine and colleagues12 and Thomas and Bowen,14 for example, noted that, in addition to using a random allocation method, they tried to equalize the expertise and gender mix on each team. In contrast, despite Michaelsen and Sweet’s27 warning that such an allocation method could threaten the group’s overall development, Ravindranath and colleagues7 reported that their students self-selected onto teams. In addition, although guidelines1 recommend that teams “stay together as long as possible,” the same authors7 re-formed their teams at the start of each TBL session, which did not allow for the establishment and development of team dynamics.

Readiness assurance

In the articles we reviewed, RA tests typically included questions from the assigned readings to determine students’ preparation, comprehension, and readiness for applying the assigned content. As Koles and colleagues16 acknowledged, the RA process held students individually accountable for their preparation, and, when they failed to prepare, it affected both their individual and their team’s learning and performance. In addition, Nieder and colleagues17 reported that, by using a TBL approach in a gross anatomy and embryology course, faculty spent less time in class covering the basic factual material. We anticipate that, by testing the knowledge of individuals and then the knowledge of the team, students will come to class prepared, motivated by not wanting to let their team down, thus freeing up class time for in-class problem-solving activities.

Immediate feedback

The majority of the articles we reviewed noted that faculty provided immediate feedback to students. Michaelsen and Sweet27 describe immediate feedback following the tRAT as being inherent to the TBL process to provide students with an understanding of their content knowledge and application ability. In addition, providing immediate feedback encourages competition between both individuals and teams, is key to knowledge acquisition and retention, and affects team development.27,28

Sequencing of in-class problem solving

During the problem-solving activities, students had the opportunity to apply their knowledge of course content by working in teams to solve complex, real-life problems. As Parmelee and colleagues1 noted, students must interpret, analyze, and synthesize information to make a specific choice during the activity, and they must defend their choice to the class if necessary. Although some have described the tAPP as the heart of TBL,4 this design element was rarely mentioned in detail in the articles we reviewed.

The four S’s

The four S’s principle should guide the content, structure, and process of the TBL program—the problem needs to be significant, all teams need to have the same problem to solve, and they need to provide a specific choice in their answer, which they and the other teams need to report simultaneously. The standard use of MCQs differentiates TBL from other action-based learning techniques, such as case-based learning,16 that use open-ended questions. Burgess and colleagues,10 who applied TBL in an anatomy dissection course, did not report the use of two S’s—specific choice and simultaneous reporting. We hypothesize that their program did not incorporate these two features because it was lab based and involved hands-on cadaver dissection, rather than a paper-based scenario.

Incentive structure

Assessment has a large effect on students’ achievement of course objectives, and, in TBL, it is designed to maximize both individuals’ out-of-class preparation and team collaboration.4 Yet, in many of the articles we reviewed, the authors did not clearly describe the incentive structure. In one, they noted that they had not applied an incentive structure.21 Michaelsen and Sweet27 argued that an effective grading system is necessary to provide rewards for both individual contributions and effective teamwork and to allay students’ concerns about grading for group work. Thus, grades should be given for iRAT, tRAT, tAPP, and peer review.1

Peer review

Peer review provides an incentive for students to positively contribute to group learning and problem solving.4 In addition, Parmelee and colleagues1 recommended that, as part of the TBL process, students contribute to the grades of other students by providing qualitative and quantitative feedback to other team members. However, almost half of the articles did not report on peer review. Having not used peer review in their TBL program, Chung and colleagues15 suggested that, if they had incorporated it, intra- and intergroup discussion may have improved. Indeed, Michaelsen29 considered peer assessment to be one of the key components of TBL, because it helps to ensure student accountability. In addition, other articles in the medical education literature report that the practice of giving feedback allows students to develop professional competencies and prepare for their professional lives as clinicians with peer review responsibilities.30,31

Limitations

Although we attempted to capture all available and relevant articles, we may have overlooked some as we only included articles written in English and our search strategy may not have been comprehensive. In addition, as the implementation of TBL programs did not gain popularity within medical education until 2001,1 we have reported on only 10 years of data in this review. Thus, we may have missed earlier descriptions of TBL within medical education. However, it is unlikely that we have missed a substantial number of such publications.

Conclusions

The purpose of our systematic review was to gain a better understanding of the extent and design of TBL programs in medical schools. We used Haidet and colleagues’4 TBL reporting guidelines in our appraisal of the published literature. Although Parmelee and Michaelsen noted that TBL “works best when all of the components are included in the design and implementation,”3 our review revealed extensive variations in the design, implementation, and reporting of TBL programs. In addition, Haidet and colleagues4 argued that the higher the fidelity of the TBL program, the greater the opportunity for faculty to understand, critique, replicate, and compare learning outcomes. Thus, in the future, faculty should adhere to a standardized TBL framework, researching and reporting on their program’s outcomes, to better understand the impact and relative merits of each feature of their program.

Appendix 1

Characteristics of the 20 Studies of Team-Based Learning (TBL) Programs in Medical Schools, Identified in a Review of the Literature Published Between 2002 and 2012

Appendix 2

The Seven Core Design Elements4 of Team-Based Learning (TBL) Programs in Medical Schools, Identified in a Review of the Literature Published Between 2002 and 2012

Footnotes

Funding/Support: None reported.

Other disclosures: None reported.

Ethical approval: Reported as not applicable.

References

- 1. Parmelee D, Michaelsen LK, Cook S, Hudes PD. Team-based learning: A practical guide: AMEE guide no. 65. Med Teach. 2012;34:e275–e287. doi: 10.3109/0142159X.2012.651179.

- 2. Searle NS, Haidet P, Kelly PA, Schneider VF, Seidel CL, Richards BF. Team learning in medical education: Initial experiences at ten institutions. Acad Med. 2003;78(10 suppl):S55–S58. doi: 10.1097/00001888-200310001-00018.

- 3. Parmelee DX, Michaelsen LK. Twelve tips for doing effective team-based learning (TBL). Med Teach. 2010;32:118–122. doi: 10.3109/01421590903548562.

- 4. Haidet P, Levine RE, Parmelee DX, et al. Perspective: Guidelines for reporting team-based learning activities in the medical and health sciences education literature. Acad Med. 2012;87:292–299. doi: 10.1097/ACM.0b013e318244759e.

- 5. Okubo Y, Ishiguro N, Suganuma T, et al. Team-based learning, a learning strategy for clinical reasoning, in students with problem-based learning tutorial experiences. Tohoku J Exp Med. 2012;227:23–29. doi: 10.1620/tjem.227.23.

- 6. Tai BC, Koh WP. Does team learning motivate students’ engagement in an evidence-based medicine course? Ann Acad Med Singapore. 2008;37:1019–1023.

- 7. Ravindranath D, Gay TL, Riba MB. Trainees as teachers in team-based learning. Acad Psychiatry. 2010;34:294–297. doi: 10.1176/appi.ap.34.4.294.

- 8. Tan NC, Kandiah N, Chan YH, Umapathi T, Lee SH, Tan K. A controlled study of team-based learning for undergraduate clinical neurology education. BMC Med Educ. 2011;11:91. doi: 10.1186/1472-6920-11-91.

- 9. Shankar N, Roopa R. Evaluation of a modified team based learning method for teaching general embryology to 1st year medical graduate students. Indian J Med Sci. 2009;63:4–12.

- 10. Burgess AW, Ramsey-Stewart G, May J, Mellis C. Team-based learning methods in teaching topographical anatomy by dissection. ANZ J Surg. 2012;82:457–460. doi: 10.1111/j.1445-2197.2012.06077.x.

- 11. Vasan NS, DeFouw DO, Holland BK. Modified use of team-based learning for effective delivery of medical gross anatomy and embryology. Anat Sci Educ. 2008;1:3–9. doi: 10.1002/ase.5.

- 12. Levine RE, O’Boyle M, Haidet P, et al. Transforming a clinical clerkship with team learning. Teach Learn Med. 2004;16:270–275. doi: 10.1207/s15328015tlm1603_9.

- 13. Bick RJ, Oakes JL, Actor JK, et al. Integrative teaching: Problem solving and integration of basic science concepts into clinical scenarios using team-based learning. Med Sci Educ. 2009;19:26–34.

- 14. Thomas PA, Bowen CW. A controlled trial of team-based learning in an ambulatory medicine clerkship for medical students. Teach Learn Med. 2011;23:31–36. doi: 10.1080/10401334.2011.536888.

- 15. Chung EK, Rhee JA, Baik YH, A OS. The effect of team-based learning in medical ethics education. Med Teach. 2009;31:1013–1017. doi: 10.3109/01421590802590553.

- 16. Koles P, Nelson S, Stolfi A, Parmelee D, Destephen D. Active learning in a year 2 pathology curriculum. Med Educ. 2005;39:1045–1055. doi: 10.1111/j.1365-2929.2005.02248.x.

- 17. Nieder GL, Parmelee DX, Stolfi A, Hudes PD. Team-based learning in a medical gross anatomy and embryology course. Clin Anat. 2005;18:56–63. doi: 10.1002/ca.20040.

- 18. Willett LR, Rosevear GC, Kim S. A trial of team-based versus small-group learning for second-year medical students: Does the size of the small group make a difference? Teach Learn Med. 2011;23:28–30. doi: 10.1080/10401334.2011.536756.

- 19. Hunt DP, Haidet P, Coverdale JH, Richards B. The effect of using team learning in an evidence-based medicine course for medical students. Teach Learn Med. 2003;15:131–139. doi: 10.1207/S15328015TLM1502_11.

- 20. Abdelkhalek N, Hussein A, Gibbs T, Hamdy H. Using team-based learning to prepare medical students for future problem-based learning. Med Teach. 2010;32:123–129. doi: 10.3109/01421590903548539.

- 21. Wiener H, Plass H, Marz R. Team-based learning in intensive course format for first-year medical students. Croat Med J. 2009;50:69–76. doi: 10.3325/cmj.2009.50.69.

- 22. Zgheib NK, Simaan JA, Sabra R. Using team-based learning to teach clinical pharmacology in medical school: Student satisfaction and improved performance. J Clin Pharmacol. 2011;51:1101–1111. doi: 10.1177/0091270010375428.

- 23. Inuwa IM. Perceptions and attitudes of first-year medical students on a modified team-based learning (TBL) strategy in anatomy. Sultan Qaboos Univ Med J. 2012;12:336–343. doi: 10.12816/0003148.

- 24. Michaelsen L, Richards B. Drawing conclusions from the team-learning literature in health-sciences education: A commentary. Teach Learn Med. 2005;17:85–88. doi: 10.1207/s15328015tlm1701_15.

- 25. Borges NJ, Kirkham K, Deardorff AS, Moore JA. Development of emotional intelligence in a team-based learning internal medicine clerkship. Med Teach. 2012;34:802–806. doi: 10.3109/0142159X.2012.687121.

- 26. Michaelsen L, Parmelee D, McMahon KK, Levine RE. Team-Based Learning for Health Professions Education. Sterling, Va: Stylus; 2007.

- 27. Michaelsen LK, Sweet M. The essential elements of team-based learning. New Dir Teach Learn. 2008;116:7–27.

- 28. Hattie J, Timperley H. The power of feedback. Rev Educ Res. 2007;77:81–112.

- 29. Michaelsen LK. Getting started with team-based learning. In: Michaelsen LK, Knight AB, Fink LD, editors. Team-Based Learning: A Transformative Use of Small Groups in College Teaching. Sterling, Va: Stylus; 2004.

- 30. Cushing A, Abbott S, Lothian D, Hall A, Westwood OM. Peer feedback as an aid to learning—what do we want? Feedback. When do we want it? Now! Med Teach. 2011;33:e105–e112. doi: 10.3109/0142159X.2011.542522.

- 31. Arnold L, Shue CK, Kalishman S, et al. Can there be a single system for peer assessment of professionalism among medical students? A multi-institutional study. Acad Med. 2007;82:578–586. doi: 10.1097/ACM.0b013e3180555d4e.