Abstract

Development of the visual system typically proceeds in concert with the development of audition. One result is that the visual system of profoundly deaf individuals differs from that of those with typical auditory systems. While past research has suggested deaf people have enhanced attention in the visual periphery, it is still unclear whether or not this enhancement entails deficits in central vision. Profoundly deaf and typically hearing adults were administered a variant of the useful field of view task that independently assessed performance on concurrent central and peripheral tasks. Identification of a foveated target was impaired by a concurrent selective peripheral attention task, more so in profoundly deaf adults than in the typically hearing. Previous findings of enhanced performance on the peripheral task were not replicated. These data are discussed in terms of flexible allocation of spatial attention targeted towards perceived task demands, and support a modified “division of labor” hypothesis whereby attentional resources co-opted to process peripheral space result in reduced resources in the central visual field.

A fundamental property of the human brain is its plasticity—the ability to reorganize in the face of new or altered experiences. In the case of individuals who are born severe-to-profoundly deaf (a hearing loss greater than 75 dB in the better ear) it is now well known that the visual system can compensate for a lack of auditory input (Bavelier, Dye, & Hauser, 2006; Pavani & Bottari, 2012). There now exists a substantial body of research suggesting that individuals who are born profoundly deaf are better able than their hearing peers to process information in the visual periphery. Evidence for this has come from several studies which have reported differences in orienting of visual attention (Bosworth & Dobkins, 2002; Bottari et al., 2008; Colmenero, Catena, Fuentes, & Ramos, 2004; Parasnis & Samar, 1985; but see Dye, Baril, & Bavelier, 2007), leading to the suggestion that deafness may result in very rapid shifts of visual attention in response to exogenous cues (Colmenero et al., 2004). Other researchers have examined the size of the attentional fields of deaf observers, measuring how widely attentional resources are distributed to the periphery. For example, Stevens and Neville (2006) used kinetic perimetry to look at the size of visual fields within which deaf and hearing adults could detect a target. They reported larger visual fields in deaf adults than in hearing controls. Buckley, Codina, Bhardwaj, and Pascalis (2010) used another form of perimetry with the same results—deaf adults could detect the appearance of a target further out into the periphery than could hearing adults. Taken together, this body of research has provided compelling evidence for enhanced processing of objects in the visual periphery as a result of early, profound deafness.

None of these studies, however, assessed the fate of stimuli in the central visual field under conditions where task demands required responses to peripheral stimulation. In one of the earliest studies of visual processing in the deaf, Loke and Song (1991) reported that deaf adolescents were faster than hearing adolescents at detecting the onset of peripheral targets at 25° of visual angle from fixation, but did not differ in how quickly they detected centrally presented targets. In their study, central and peripheral targets were never presented together. Each target appeared in isolation, with its central or peripheral location determined randomly from trial to trial. Quittner, Smith, Osberger, Mitchell, and Katz (1994) and Smith, Quittner, Osberger, and Miyamoto (1998) used continuous performance tests to examine how well deaf children could attend to sequences of visual stimuli presented at fixation. Their finding that deaf children were less able than hearing children to isolate target sequences within long streams of visual information led them to suggest that deafness results in a deficit in visual selective attention. This deficit was attributed either to a lack of auditory information and poor subsequent multisensory integration (see Conway, Pisoni, & Kronenberger, 2009, for a more recent formulation of this hypothesis) or to a redistribution of finite attentional resources across the visual field, allowing the visual system to take on the additional function of monitoring peripheral space normally performed by the auditory system (the division of labor hypothesis; Mitchell, 1996). In another study that involved both central and peripheral stimuli, Proksch and Bavelier (2002) used a perceptual load task to investigate the fate of spatial attention that “spilled over” from a visual search task. They replicated the finding that, for hearing individuals, leftover attentional resources are devoted to processing information at fixation rather than in the periphery.
In other words, by default, hearing people allocate their visual attention to the spatial location they are fixating (Beck & Lavie, 2005). In contrast, deaf individuals appeared to devote leftover resources to the visual periphery, suggesting that deafness changes the default distribution of visuospatial attention across the visual field. A more recent study by Dye, Hauser, and Bavelier (2009) used a variant of the useful field of view task (UFOV; Ball, Beard, Roenker, Miller, & Griggs, 1988) in which observers were presented with a complex, transient visual display that contained a central target (at fixation), a peripheral target (at 20° of visual angle, in one of eight possible locations), and a field of distracters (Figure 2). Deaf and hearing participants, some of whom used American Sign Language (ASL) and some of whom did not, were asked both to identify the central target (two-alternative forced choice) and to localize the peripheral target on a touchscreen display. An adaptive three-up/one-down staircase procedure was employed to estimate a threshold: the length of time the (backward-masked) display needed to remain on the screen for the observer to be 79.4% accurate on both tasks. The data revealed that deaf observers, whether or not they used ASL, had lower thresholds for localizing the peripheral target than hearing observers; that is, deaf observers needed shorter stimulus durations to localize the target with the same level of accuracy. This may reflect more veridical or robust visual representations resulting from increased allocation of attentional resources to the visual periphery. The effect of using ASL was not statistically significant, although there was a trend for signers, deaf or hearing, to also have lower thresholds. The result was interpreted as reflecting enhanced peripheral visual attention in the deaf, allocated to potential peripheral target locations. However, Dye et al. did not compute thresholds for central task performance during this task.
Rather, trials where the central target was incorrectly identified were ignored in the adaptive staircase procedure, and the thresholds were computed solely on the basis of peripheral localization performance on “center correct” trials. This procedure therefore produced a single threshold estimate, which was the time required to perform the peripheral localization when the central discrimination was always correct.

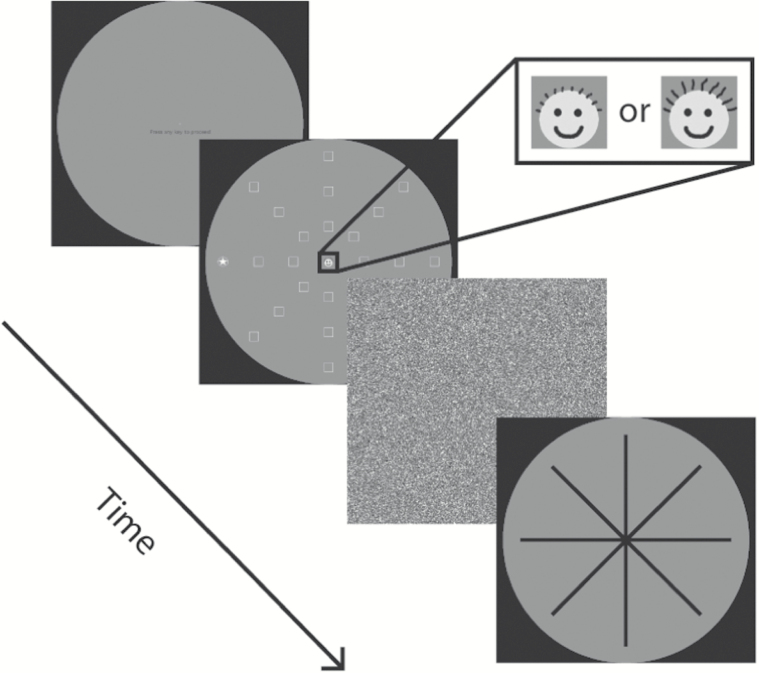

Figure 2.

The dual task with distracters required participants to make a fine discrimination judgment based upon emoticon faces presented at fixation and then indicate the peripheral location of a simultaneously presented target at 20° of visual angle. The peripheral target was embedded in a field of distracters (white squares), ensuring that the target did not “pop out” and that selective attention was required for successful localization.

An important question that remains unanswered, therefore, is whether enhanced peripheral processing comes at the cost of impaired processing of centrally presented information. That is, does deafness bring about a trade-off between processing of central and peripheral information—as predicted by a limited-resource model such as the division of labor hypothesis—or is the fate of centrally presented information independent from enhanced peripheral processing in deaf individuals? The nature of a trade-off, if any, between central and peripheral processing has significant implications for the development of theory to explain the changes in visual functions observed in deaf individuals.

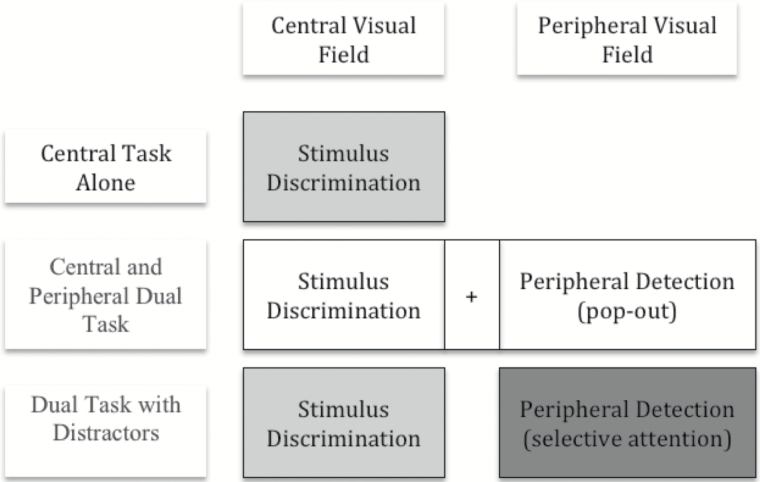

In the study reported here, a modified version of the UFOV was administered to adults with either severe-to-profound deafness or normal hearing. Unlike in the Dye et al. (2009) study, thresholds were computed separately for the central and for the peripheral task through the use of interleaved staircases. In all, three tasks were administered (Figure 2). The first task, central task alone, consisted only of a two-AFC discrimination task and provided a baseline measure of discrimination between two objects presented in the central visual field. The second task, central and peripheral dual task, also produced a single measure, this time of an observer’s ability to discriminate between centrally presented objects while simultaneously localizing a visual transient appearing in the periphery. The third and final task, dual task with distracters, yielded two measures from two concurrent tasks: discrimination between centrally presented objects, and selection and localization of a peripheral visual transient embedded in a field of distracter items.

Based upon previous studies, we predicted that deaf adults would outperform hearing adults in the peripheral detection (selective attention) task (shaded dark in Figure 1). In addition, as a direct test of the division-of-labor hypothesis, we predicted that enhanced performance on this attentionally demanding peripheral task by deaf observers would result in reduced performance on the central discrimination task. Specifically, we compared stimulus discrimination performance (shaded light in Figure 1) in the central task alone and dual task with distracters conditions, predicting that there would be a larger drop in performance from the former to the latter for deaf participants than for hearing participants.

Figure 1.

The experimental design generated four separate threshold measures. The central task alone provided a stimulus discrimination threshold for the central target presented in isolation. The central and peripheral dual task condition yielded a single, combined threshold for performing the stimulus discrimination at the same time as localizing a peripheral target in the absence of any distracters. The final dual task with distracters produced two thresholds: one was for central stimulus discrimination while also performing the concurrent peripheral task, and the other was a threshold for the peripheral task when also performing the concurrent central task. The peripheral detection threshold from this latter task provided the measure of enhanced peripheral visual selective attention, and a comparison of the two stimulus discrimination thresholds provided a measure of the “cost” to central processing performance of that enhancement.

Materials and Methods

Participants

All participants were adults aged 18–40 years, right handed, with no reported visual deficit, neurological condition, or learning disability. In addition, none of the participants played action video games for more than 5 hr per week (see Green & Bavelier, 2003, 2006, for information about the effect of playing such games on selective visual attention). Informed consent was obtained from all participants. This study had ethical approval from the Institutional Review Boards at all collaborating institutions. All participants were paid $10 as compensation for the 30 min required to complete the study.

Deaf Participants

The deaf participants (N = 16) all had severe-to-profound hearing losses (75 dB HL or greater in the better ear) and reported the onset of hearing loss to be from birth or during the first 6 months of life. The mean reported hearing loss was 91 dB (SD = 11 dB). Two participants had received a unilateral cochlear implant (at ages 11 and 13 years), although both reported that they no longer used the device. There were 5 males and 11 females, with a mean age of 22.2 years. Based upon the Hollingshead four factor index of socioeconomic status (SES; Hollingshead, 1975), the mean SES of the deaf participants was 39 (SD = 10). All deaf participants reported communicating primarily using ASL, although only eight learned the language as infants from deaf parents. (Information about age of first exposure to ASL was not obtained. As always when testing deaf individuals, it is difficult to disentangle the effects of deafness from those of using a visual-gestural language like ASL.) A deaf research assistant conducted testing in ASL.

Hearing Participants

None of the hearing participants (N = 18) reported any hearing loss, and none were familiar with ASL. There were 8 males and 10 females, with a mean age of 20.6 years, which did not significantly differ from that of the deaf group [t(32) = 1.38, p > .05]. The mean Hollingshead SES score of the hearing participants was 51 (SD = 9), which was significantly higher than that of the deaf group [t(32) = 3.54, p = .001]. As a result, SES was included as a covariate in all statistical analyses. A hearing research assistant, trained in the same lab as the research assistant who tested the deaf participants, conducted testing in English.
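As a rough check on the SES comparison, the independent-samples t statistic can be recomputed from the rounded summary statistics reported above (deaf: M = 39, SD = 10, n = 16; hearing: M = 51, SD = 9, n = 18). The sketch below assumes a pooled-variance (Student) t test. That assumption is consistent with the reported df of 32 = 16 + 18 − 2, but the text does not state which test was used. The function name `pooled_t` is hypothetical. Because the inputs are rounded, the result (about 3.7) only approximates the reported t(32) = 3.54.

```python
import math


def pooled_t(m1: float, sd1: float, n1: int,
             m2: float, sd2: float, n2: int) -> tuple[float, int]:
    """Student's independent-samples t from summary statistics (equal variances assumed)."""
    df = n1 + n2 - 2
    # Pooled variance weights each group's variance by its own degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m2 - m1) / se, df


# Rounded SES summaries from the Participants section (deaf first, hearing second).
t, df = pooled_t(39, 10, 16, 51, 9, 18)
print(f"t({df}) = {t:.2f}")
```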

Design

Participants were administered three versions of the UFOV task that required them to make responses to central and peripheral targets embedded within a visual display.

The central target was a yellow emoticon “smiley” face subtending 2.0° of visual angle. The face had either “short” or “long” hair (Figure 2) and was located in the center of a circular gray field with a diameter of 45° of visual angle. “Short” targets had a hair length of 0.16° of visual angle, and “long” targets had a hair length of 0.27° of visual angle. Participants were required to make a two-AFC identification and to report the identity of the target either verbally (hearing participants) or in ASL (deaf participants).

The peripheral target was a five-pointed star in a circle located at 20° of visual angle from the center of the same circular gray field (and located near the edge of that circular field). The circle had a diameter of 2.0° of visual angle. On each trial containing a peripheral target, a single such target appeared at one of the cardinal (0°, 90°, 180°, 270°) or inter-cardinal (45°, 135°, 225°, 315°) locations around the edge of the gray circle (Figure 2). The peripheral task required participants to touch the screen in order to report the location of the peripheral target.
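To make the stimulus geometry concrete, the sketch below converts the reported visual angles into approximate on-screen distances. It assumes the 30 cm viewing distance given in the Procedure and a flat screen perpendicular to the line of sight at fixation. The helper names are hypothetical. The sizes use the standard relation size = 2·d·tan(θ/2) for an object centered on fixation, and the peripheral positions use offset = d·tan(eccentricity). The sketch ignores the slight on-screen enlargement of peripheral items on a flat display.

```python
import math

VIEWING_DISTANCE_CM = 30.0  # from the Procedure; assumed fixed by the head- and chin-rest


def size_cm(angle_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """On-screen extent of an object subtending angle_deg, centered on the line of sight."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def peripheral_position_cm(eccentricity_deg: float, polar_deg: float,
                           distance_cm: float = VIEWING_DISTANCE_CM) -> tuple[float, float]:
    """(x, y) offset from fixation of an item at the given eccentricity and polar angle."""
    r = distance_cm * math.tan(math.radians(eccentricity_deg))
    return (r * math.cos(math.radians(polar_deg)), r * math.sin(math.radians(polar_deg)))


# Cardinal and inter-cardinal target locations at 20 degrees eccentricity.
locations = {a: peripheral_position_cm(20.0, a) for a in range(0, 360, 45)}

print(f"central face (2.0 deg): {size_cm(2.0):.2f} cm")
print(f"short vs. long hair: {size_cm(0.16) * 10:.2f} mm vs. {size_cm(0.27) * 10:.2f} mm")
print(f"gray field (45 deg diameter): {size_cm(45.0):.1f} cm")
for angle, (x, y) in locations.items():
    print(f"{angle:3d} deg -> x = {x:6.2f} cm, y = {y:6.2f} cm")
```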

A nonparametric adaptive method was used, whereby the length of time that a target was on the screen (before being masked) was determined by accuracy on preceding trials. This adaptive method, the same as that reported by Dye et al. (2009), was a transformed up-down staircase method (Levitt, 1970; Treutwein, 1995). In order to estimate the stimulus duration required for a participant to achieve 79.4% accuracy, a three-up/one-down staircase was used (Levitt, 1970). After three consecutive correct responses, the stimulus duration was reduced by one frame (approximately 16.7 ms at the 60 Hz vertical refresh rate), and after one incorrect response it was increased by the same amount. The threshold was estimated by averaging the stimulus duration at the last eight reversals of the staircase (Wetherill & Levitt, 1965).
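The 79.4% target follows from Levitt's (1970) analysis of transformed up-down rules. The argument is sketched here under the simplifying assumption that the probability p of a correct response is fixed at a given duration. The track shortens the display only after three consecutive correct responses, which happens with probability p³. It settles around the duration at which a shortening run and a lengthening run are equally likely:

$$
p^{3} = 1 - p^{3} \;\Longrightarrow\; p^{3} = \tfrac{1}{2} \;\Longrightarrow\; p = 2^{-1/3} \approx 0.794
$$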

Central Task Alone

In the first of three tasks, only the central target appeared on each trial. The starting screen consisted of the gray circular field in the middle of the screen, positioned over a black background. Prompted by a fixation square at the center of the gray circle, the participant initiated each trial with a press of the space bar. On each trial, the fixation square was replaced by the central target (initial stimulus duration was nine frames), which was itself then replaced by a white noise mask subtending the whole screen. The identity (“long” or “short”) of the central target was determined randomly for each trial. The adaptive staircase procedure continued until either (a) 72 trials were completed, (b) 12 reversals in the direction of the staircase had occurred, or (c) 10 consecutive trials with stimulus duration of one frame were responded to correctly. Each participant’s threshold was estimated by averaging the stimulus duration at the last eight reversals in the observed staircase. This threshold provided an estimate of each participant’s ability to attend to and successfully identify a visual target at fixation with 79.4% accuracy.
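The staircase logic described above can be sketched as a short simulation. This is a minimal illustration under stated assumptions, not the software used in the study. The observer model (`weibull_observer`), its parameters, and the handling of runs that end with fewer than eight reversals are hypothetical. Durations are tracked in whole frames and converted to milliseconds at 60 Hz. The start value (nine frames), the 72-trial cap, the 12-reversal stop, the ten-correct-at-one-frame stop, and averaging over the last eight reversals all follow the text.

```python
import math
import random
from typing import Callable, List, Optional, Tuple

FRAME_MS = 1000.0 / 60.0  # duration of one frame at a 60 Hz refresh rate


def run_staircase(respond: Callable[[int], bool], start_frames: int = 9,
                  max_trials: int = 72, max_reversals: int = 12,
                  floor_run: int = 10, n_avg: int = 8) -> Tuple[float, List[int]]:
    """Three correct in a row -> display one frame shorter; one error -> one frame longer.

    Returns (threshold in ms, durations in frames at which the track reversed).
    """
    duration = start_frames
    correct_in_row = 0
    last_step = 0            # -1: last change shortened the display; +1: lengthened it
    reversals: List[int] = []
    floor_streak = 0         # consecutive correct responses at the one-frame floor

    for _ in range(max_trials):                        # rule (a): at most 72 trials
        correct = respond(duration)
        floor_streak = floor_streak + 1 if (correct and duration == 1) else 0

        step = 0
        if correct:
            correct_in_row += 1
            if correct_in_row == 3:
                step, correct_in_row = -1, 0
        else:
            step, correct_in_row = 1, 0

        if step != 0:
            if last_step != 0 and step != last_step:
                reversals.append(duration)             # the track changed direction here
            last_step = step
            duration = max(1, duration + step)         # never shorter than one frame

        if len(reversals) >= max_reversals or floor_streak >= floor_run:
            break                                      # rules (b) and (c)

    # Assumption: with fewer than n_avg reversals, average those available;
    # with none at all, fall back to the final duration.
    tail = reversals[-n_avg:] or [duration]
    return sum(tail) / len(tail) * FRAME_MS, reversals


def weibull_observer(threshold_frames: float = 2.0, slope: float = 2.0,
                     rng: Optional[random.Random] = None) -> Callable[[int], bool]:
    """Hypothetical 2AFC observer: 50% guessing, rising toward 100% with duration."""
    rng = rng or random.Random()

    def respond(frames: int) -> bool:
        p = 0.5 + 0.5 * (1.0 - math.exp(-((frames / threshold_frames) ** slope)))
        return rng.random() < p

    return respond


threshold_ms, revs = run_staircase(weibull_observer(rng=random.Random(1)))
print(f"{len(revs)} reversals; threshold = {threshold_ms:.1f} ms")
```

Because the observer model is invented, the printed threshold says nothing about the participants. The sketch only shows how the termination rules and the reversal averaging interact.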

Central and Peripheral Dual Task

The second task differed from the central task alone by the addition of the peripheral target. The central and peripheral targets appeared simultaneously, and participants were instructed to first respond to the central target (“long” or “short”) and then respond to the peripheral target by pointing to its location on the screen. The identity of the central target and the peripheral target location were determined randomly for each trial. The initial stimulus duration was nine frames, and the adaptive staircase determined the duration of subsequent stimulus displays. For the purposes of the staircase procedure, a trial was considered correct if the participant correctly identified the central target and correctly pointed to the location of the peripheral target. If either response was incorrect, then the trial was considered to be incorrect. The termination rule was the same as for the central task alone. The threshold was computed in the same way as for the central task alone, providing an estimate of the stimulus duration required for each participant to successfully perform both tasks at the same time with 79.4% accuracy. Performance on this task was compared with performance in the central task alone condition, in order to determine whether dual task demands affected deaf and hearing participants disproportionately. Because this task produced a single threshold derived from performance on both tasks, it could not be compared with thresholds obtained from the dual task with distracters condition, where central and peripheral thresholds were assessed independently.

Dual Task With Distracters

On the third and final task, each trial again contained both a central and a peripheral target. In addition, distracters were located along each of the eight response axes on every trial (Figure 2). The distracters were white line drawings of squares, each subtending 2.0° of visual angle. Each trial was sampled from one of two independent staircases. For the central identification staircase, a trial was scored correct when the central target was correctly identified and the peripheral target was accurately localized. It was scored incorrect when the central target was misidentified but the peripheral target was accurately localized. All trials on which the peripheral target was incorrectly localized were ignored. For the peripheral localization staircase, the inverse was true. Trials on which the central identification was incorrect were ignored, and the remaining trials were classified as correct if the peripheral localization was also accurate, and incorrect otherwise. Thus, the central identification threshold was not influenced by incorrect peripheral localization, and the peripheral localization threshold was not influenced by incorrect central identification. Rather than completing one staircase and then the other, the two staircases were interleaved, such that the staircase from which the next trial was selected was determined randomly on each trial. Thus, if a participant prioritized the central task, the central identification staircase would converge on a shorter duration, whereas the independent peripheral localization staircase would converge on a much longer duration (and vice versa).
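The classification rules for the two interleaved staircases amount to a small decision table. The sketch below encodes them, returning `None` for trials that a staircase ignores. The function names and string labels are hypothetical. It covers only trial scoring and random staircase selection, not stimulus display or response collection. Per the text, both staircases stay in rotation until both have terminated, and extra trials drawn from the one that finished first are simply not used.

```python
import random
from typing import Optional

STAIRCASES = ("central", "peripheral")


def score_trial(staircase: str, central_ok: bool, peripheral_ok: bool) -> Optional[bool]:
    """Return True/False to update the chosen staircase, or None if the trial is ignored."""
    if staircase == "central":
        # Central staircase: only trials with a correct peripheral localization count.
        return central_ok if peripheral_ok else None
    if staircase == "peripheral":
        # Peripheral staircase: only trials with a correct central identification count.
        return peripheral_ok if central_ok else None
    raise ValueError(f"unknown staircase: {staircase!r}")


def pick_staircase(rng: random.Random) -> str:
    """Interleaving: the staircase for the next trial is chosen at random."""
    return rng.choice(STAIRCASES)


# Every combination of central/peripheral accuracy, for both staircases.
for name in STAIRCASES:
    for c in (True, False):
        for p in (True, False):
            print(f"{name:10s} central_ok={c!s:5} peripheral_ok={p!s:5} -> {score_trial(name, c, p)}")
```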

The termination rule for both staircases was the same as for the central task alone, although the procedure continued to sample from both staircases until the termination criteria had been met for both. Additional trials for the staircase that terminated first were ignored when computing thresholds. The stimulus durations at the last eight reversals were averaged for each staircase to provide two thresholds. One was an estimate of the stimulus duration required for 79.4% central identification accuracy (computed only from trials on which the peripheral target was correctly localized). The other was the corresponding estimate for 79.4% peripheral localization accuracy (computed only from trials on which the central target was correctly identified).

Procedure

After obtaining written informed consent, participants were seated and asked to keep their head still in a head- and chin-rest positioned 30 cm from a touchscreen display in order to maintain the appropriate visual angles. The central task alone was administered first, and participants were instructed to maintain fixation on a small square at the center of the screen. On each trial, the fixation square was replaced with the central target and participants were asked to speak (hearing) or sign (deaf) the identity of the target (“short” or “long”). The experimenter entered their response via a keyboard. Next, the central and peripheral dual task was administered, with participants instructed to first respond with the identity of the central target, and then touch the location on the screen where they thought the peripheral target had appeared. The dual task with distracters was the final task administered. Participants were instructed to ignore the white squares on the screen and to respond as they had for the central and peripheral dual task: first with the identity of the central target, and then with the location of the peripheral target. For all tasks, participants were told to respond as accurately and as quickly as possible.

Results

Performance in the Absence of Distracters

Thresholds from the central task only and central and peripheral dual task conditions were log10 transformed and entered into a two-way mixed ANOVA with task (central only, central plus peripheral) as a within-subjects factor and group (deaf, hearing) as a between-subjects factor. SES was included as a covariate in this and all other analyses, as the deaf participants had lower self-reported socioeconomic backgrounds than the hearing participants. The main effect of task was not statistically significant, F(1, 31) = 0.74, p = .396, partial η² = .02. Nor did the effect of task differ as a function of group (Task × Group interaction), F(1, 31) = 2.44, p = .128, partial η² = .07. Finally, the thresholds of deaf participants did not significantly differ from those of hearing participants, F(1, 31) = 0.12, p = .737, partial η² < .01. The untransformed thresholds (in milliseconds, for comparison with Dye et al., 2009) are reported in Table 1A.

Table 1.

| Task | Statistic | Deaf (N = 16) | Hearing (N = 18) |
|---|---|---|---|
| A. No distracters | | | |
| Central task only | M (SD) | 26 (14) | 29 (10) |
| | 95% CI | 19–34 | 23–34 |
| Central plus peripheral task | M (SD) | 45 (25) | 38 (8) |
| | 95% CI | 31–58 | 34–42 |
| B. With distracters | | | |
| Central task | M (SD) | 63 (40) | 48 (19) |
| | 95% CI | 42–85 | 38–57 |
| Peripheral task | M (SD) | 121 (28) | 105 (35) |
| | 95% CI | 106–136 | 87–122 |

Note. Values are untransformed stimulus-duration thresholds in milliseconds.
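Table 1 does not state how its 95% confidence intervals were computed. Assuming conventional t-based intervals, M ± t(0.975, n − 1) · SD/√n, the tabled values can be approximately reproduced from the means and SDs. The sketch below does this for the peripheral task with distracters. The critical t values are standard table values for df = 15 and df = 17, hard-coded to keep the example dependency-free, and `t_interval` is a hypothetical helper. Discrepancies of about 1 ms are expected because the tabled means and SDs are rounded.

```python
import math

# Two-tailed 95% critical values of Student's t (97.5th percentile) for df = 15 and df = 17.
T_CRIT = {15: 2.131, 17: 2.110}


def t_interval(mean: float, sd: float, n: int) -> tuple[float, float]:
    """95% confidence interval for a mean from its SD and sample size."""
    half_width = T_CRIT[n - 1] * sd / math.sqrt(n)
    return mean - half_width, mean + half_width


lo, hi = t_interval(121, 28, 16)   # deaf, peripheral task with distracters
print(f"deaf peripheral: {lo:.0f}-{hi:.0f} ms")
lo, hi = t_interval(105, 35, 18)   # hearing, peripheral task with distracters
print(f"hearing peripheral: {lo:.0f}-{hi:.0f} ms")
```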

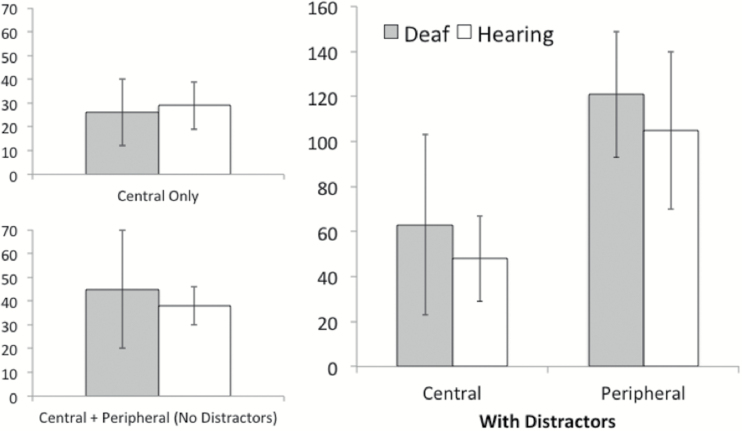

Effect of Distracters on Central Identification

In order to examine the effect of a concurrent peripheral load on central target identification, the difference was computed between the central task alone threshold and the central task threshold when performing the dual task with distracters (Table 1A,B and Figure 3). This difference score represents the increase in threshold (performance cost) due to concurrently localizing a peripheral target in a field of distracters. (Due to the blocked nature of the design and the fixed order of administration, the difference score may also partially represent a fatigue effect. It is assumed here that fatigue is random and does not differ as a function of group.) The difference scores were log10 transformed and entered into a planned comparison with group (deaf, hearing) as a between-subjects factor. There was a significant difference between the deaf and hearing groups in how much the concurrent peripheral localization task impaired central identification performance, F(1, 31) = 7.72, p = .009, partial η² = .20. For deaf participants, the mean difference score was 24 ms (SD = 16), and for hearing participants it was 13 ms (SD = 9).

Figure 3.

The left panel shows the thresholds (M ± SD in milliseconds) for the central-only (top) and central and peripheral dual task (bottom) conditions. Higher thresholds indicate that a longer stimulus duration was required in order to achieve 79.4% accuracy. The performance of deaf and hearing participants did not differ on these tasks, and the increase in threshold observed by adding a peripheral task was not statistically significant. The right panel displays the central task and peripheral task thresholds obtained in the dual task with distracters condition. Contrary to expectations, the deaf and hearing participants did not differ in the magnitude of their peripheral localization thresholds. However, as predicted by the division-of-labor hypothesis, the central task thresholds of the deaf participants were more elevated (compared to the central-only condition) than those of the hearing participants.

Effect of Deafness on Peripheral Localization With Distracters

Finally, we sought to determine whether the decrease in central identification performance under peripheral load was accompanied by an improvement in peripheral localization in the dual task with distracters condition (Table 1B and Figure 3). Contrary to expectations, a planned comparison of log10-transformed peripheral localization thresholds revealed no significant difference between the deaf and hearing participants, F(1, 31) = 1.62, p = .212, partial η² = .05. The mean threshold for deaf participants was 121 ms (SD = 28), and for hearing participants it was 105 ms (SD = 35).
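Partial η² is fully determined by an F ratio and its degrees of freedom. Since η²p = SS_effect / (SS_effect + SS_error) and F = (SS_effect/df_effect) / (SS_error/df_error), it follows that η²p = F·df_effect / (F·df_effect + df_error). The short sketch below uses this identity to check the effect sizes reported in the Results against their F values. Every test here has df = (1, 31), consistent with 34 participants, two groups, and one covariate (SES). Each computed value rounds to the reported one.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared implied by an F ratio: F*df1 / (F*df1 + df2)."""
    return f * df_effect / (f * df_effect + df_error)


# F values reported in the Results (all df = 1, 31) with the reported effect sizes.
reported = [
    ("task, no distracters", 0.74, ".02"),
    ("task x group, no distracters", 2.44, ".07"),
    ("group, no distracters", 0.12, "< .01"),
    ("group, central cost", 7.72, ".20"),
    ("group, peripheral threshold", 1.62, ".05"),
]

for label, f, eta_text in reported:
    eta = partial_eta_squared(f, 1, 31)
    print(f"{label:30s} F(1, 31) = {f:4.2f} -> {eta:.3f} (reported {eta_text})")
```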

Discussion

The primary aim of the current study was to look for the existence of a trade-off between central and peripheral processing in deaf and hearing adults. Specifically, it sought to determine whether enhanced peripheral selective attention in deaf adults, compared with hearing adults, was at the expense of attention to information presented at fixation. Such a trade-off would be predicted by studies such as Proksch and Bavelier (2002), where deaf adults were more susceptible to response-interference from peripheral than from central distracters. Previous research by Dye et al. (2009) reported evidence of enhanced peripheral selective attention in deaf adults using a variant of the UFOV, but they could not determine whether or not there was a cost incurred for centrally presented targets. This study disentangled performance on a central identification task and a concurrent peripheral localization task by using separate, interleaved adaptive staircases to determine thresholds for each task.

Deaf and hearing adults were equally able to perform the central identification in isolation, suggesting that any performance differences cannot be attributed to a simple inability to process or attend to targets presented at fixation. When participants performed that central identification task while also localizing a simultaneously presented peripheral target, in the absence of distracters, thresholds increased slightly, but the increases were not statistically significant given the current sample size. This suggests that the additional task of localizing a peripheral target did not reliably affect central discrimination performance for either deaf or hearing participants. The threshold for the dual task presumably reflects an upper limit on performance determined by whichever task was more challenging. The small increase in threshold beyond that obtained for the central task alone could be attributed to a more demanding peripheral localization task or to the requirement to process two spatially distinct stimuli at the same time. The current study cannot distinguish between these possibilities.

Adding a field of distracter shapes to the stimulus displays did result in a significant increase in the size of thresholds obtained. Central identification thresholds were compared for two conditions: central task alone, and dual task with distracters. The increase in stimulus duration threshold resulting from adding distracters and a peripheral task was greater for deaf than for hearing participants. However, and contrary to expectations, this was not accompanied by superior deaf performance on the peripheral localization in a field of distracters. Thus, the current study failed to replicate the original finding reported by Dye et al. (2009).

While differences in spatial task performance between deaf and hearing populations have often been explained in terms of differences in the spatial allocation of attention, it is also possible that perceptual differences play an important role. One possibility is that, by virtue of deafness, the visual system of deaf individuals reorganizes such that the distribution of receptive field (RF) sizes as a function of eccentricity changes. Thus, rather than a monotonic increase in RF size with increasing eccentricity (Freeman & Simoncelli, 2011), the function may be nonlinear, or linear with a different slope. If RFs in peripheral vision are smaller in deaf than in hearing observers, deaf observers should have an easier time resolving the peripheral target from distracters, because it is less likely that the peripheral target and a distracter would occupy the same RF. Conversely, they may be more susceptible to crowding in central vision. There, RFs that are relatively larger than those of hearing observers would make it more likely that the central target occupies the same RF as distracters. The current study supports the latter interpretation, but not the former: enhanced peripheral localization in the face of distracters was not observed. It seems unlikely that an account based only upon changes in RF size can explain the pattern of findings across studies, although it is a hypothesis that should be more carefully tested in future studies. An alternative to a perceptual explanation is one that posits differences in visual attention resulting from deafness. Can an attentional account explain the lack of a peripheral advantage in this study compared with previous work, most notably that reported by Dye et al. (2009)? One potentially important difference between the two studies is that here participants were explicitly instructed to identify the central target first, before indicating the location of the peripheral target.
Thus, the task demands may have biased them towards prioritizing the central target. Such a prioritization may have manifested itself by enhancing visual attention at an appropriate spatial scale for the central identification task (Hopf et al., 2006) at the expense of enhancing attention at the scale required for the peripheral localization task. According to the data reported by Freeman and Simoncelli (2011), the difference in hair length of the central target at 0° (0.16° vs. 0.27° of visual angle) would require attention to operate at the level of individual RFs within V1 (primary visual cortex). For the peripheral target at 20° eccentricity, it is more likely that attention would need to operate at sites in V2 or V4 (higher level visual areas). Prioritizing a response to the central target may therefore have led to an attentional bias in V1 that precluded attention to the periphery at higher levels of the visual system. The presence of the peripheral task may still have led to some attentional resources being allocated to visual selection in the periphery. For the deaf participants, these resources may have been insufficient to bring about better peripheral localization thresholds than those observed in the hearing participants, but enough to draw resources away from the central identification task. Under this account, the effect of deafness is to enable flexibility in the allocation of attention across the visual field. Task instructions, however, may have left the deaf participants in a visual “no man’s land.”

What then is the fate of the division-of-labor hypothesis? The hypothesis may well characterize the relative performance of deaf individuals under specific conditions, namely those in which processing of the visual periphery is prioritized over processing of information at fixation. Future research will need to carefully assess whether deafness-related changes are perceptual, attentional, or both, and how flexibly the deaf visual system responds to specific task demands. The answers will have important implications for reasoning about the visual performance of deaf individuals across tasks that require fine discrimination in central vision (e.g., reading), processing of information in peripheral vision (e.g., signing), or constant, flexible reallocation of resources (e.g., driving).

It is important to note other potential sources of the discrepancies in findings between this and previous studies. The deaf population is highly heterogeneous (Dye & Bavelier, 2013), with a range of etiologies of hearing loss, differing communication preferences (visual and auditory), and varied educational backgrounds. Future studies will also need to carefully document potential confounding factors such as IQ, age of first exposure to natural language, and childhood education, as these are likely to play an important role in shaping visual attention in deaf individuals.

Funding

This study was supported by grants from the National Institutes of Health (NIDCD R01 DC004418) and the National Science Foundation Science of Learning Center VL2 (SBE-0541953).

Conflicts of Interest

No conflicts of interest were reported.

Acknowledgments

Thanks to Ryan Barrett and Kim Scanlon for help in testing the participants.

References

- Ball, K. K., Beard, B. L., Roenker, D. L., Miller, R. L., & Griggs, D. S. (1988). Age and visual search: Expanding the useful field of view. Journal of the Optical Society of America A, Optics and Image Science, 5, 2210–2219. doi:10.1364/JOSAA.5.002210

- Bavelier, D., Dye, M. W., & Hauser, P. C. (2006). Do deaf individuals see better? Trends in Cognitive Sciences, 10, 512–518. doi:10.1016/j.tics.2006.09.006

- Beck, D. M., & Lavie, N. (2005). Look here but ignore what you see: Effects of distractors at fixation. Journal of Experimental Psychology: Human Perception and Performance, 31, 592–607. doi:10.1037/0096-1523.31.3.592

- Bosworth, R. G., & Dobkins, K. R. (2002). The effects of spatial attention on motion processing in deaf signers, hearing signers, and hearing nonsigners. Brain and Cognition, 49, 152–169. doi:10.1006/brcg.2001.1497

- Bottari, D., Turatto, M., Bonfioli, F., Abbadessa, C., Selmi, S., Beltrame, M. A., & Pavani, F. (2008). Change blindness in profoundly deaf individuals and cochlear implant recipients. Brain Research, 1242, 209–218. doi:10.1016/j.brainres.2008.05.041

- Buckley, D., Codina, C., Bhardwaj, P., & Pascalis, O. (2010). Action video game players and deaf observers have larger Goldmann visual fields. Vision Research, 50, 548–556. doi:10.1016/j.visres.2009.11.018

- Colmenero, C., Catena, A., Fuentes, L., & Ramos, M. (2004). Mechanisms of visuospatial orienting in deafness. European Journal of Cognitive Psychology, 16, 791–805. doi:10.1080/09541440340000312

- Conway, C. M., Pisoni, D. B., & Kronenberger, W. G. (2009). The importance of sound for cognitive sequencing abilities: The auditory scaffolding hypothesis. Current Directions in Psychological Science, 18, 275–279. doi:10.1111/j.1467-8721.2009.01651.x

- Dye, M. W., Baril, D. E., & Bavelier, D. (2007). Which aspects of visual attention are changed by deafness? The case of the Attentional Network Test. Neuropsychologia, 45, 1801–1811. doi:10.1016/j.neuropsychologia.2006.12.019

- Dye, M. W., Hauser, P. C., & Bavelier, D. (2009). Is visual selective attention in deaf individuals enhanced or deficient? The case of the useful field of view. PLOS One, 4, e5640. doi:10.1371/journal.pone.0005640

- Dye, M. W. G., & Bavelier, D. (2013). Visual attention in deaf humans: A neuroplasticity perspective. In A. Kral, R. R. Fay, & A. N. Popper (Eds.), Springer handbook of auditory research: Deafness. New York, NY: Springer.

- Freeman, J., & Simoncelli, E. P. (2011). Metamers of the ventral stream. Nature Neuroscience, 14, 1195–1201. doi:10.1038/nn.2889

- Green, C. S., & Bavelier, D. (2003). Action video game modifies visual selective attention. Nature, 423, 534–537.

- Green, C. S., & Bavelier, D. (2006). Effects of action video game playing on the spatial distribution of visual selective attention. Journal of Experimental Psychology: Human Perception and Performance, 32, 1465–1478. doi:10.1037/0096-1523.32.6.1465

- Hollingshead, A. B. (1975). Four factor index of social status. New Haven, CT: Yale University.

- Hopf, J.-M., Luck, S. J., Boelmans, K., Schoenfeld, M. A., Boehler, C. N., Rieger, J., & Heinze, H.-J. (2006). The neural site of attention matches the spatial scale of perception. The Journal of Neuroscience, 26, 3532–3540. doi:10.1523/JNEUROSCI.4510-05.2006

- Levitt, H. (1970). Transformed up-down methods in psychoacoustics. The Journal of the Acoustical Society of America, 49, 467–477.

- Loke, W. H., & Song, S. (1991). Central and peripheral visual processing in hearing and nonhearing individuals. Bulletin of the Psychonomic Society, 29, 437–440.

- Mitchell, T. V. (1996). How audition shapes visual attention (PhD dissertation). Indiana University, Bloomington, IN.

- Parasnis, I., & Samar, V. J. (1985). Parafoveal attention in congenitally deaf and hearing young adults. Brain and Cognition, 4, 313–327. doi:10.1016/0278-2626(85)90024-7

- Pavani, F., & Bottari, D. (2012). Visual abilities in individuals with profound deafness: A critical review. In M. M. Murray & M. T. Wallace (Eds.), The neural bases of multisensory processes. Boca Raton, FL: CRC Press.

- Proksch, J., & Bavelier, D. (2002). Changes in the spatial distribution of visual attention after early deafness. Journal of Cognitive Neuroscience, 14, 687–701. doi:10.1162/08989290260138591

- Quittner, A. L., Smith, L. B., Osberger, M. J., Mitchell, T., & Katz, D. (1994). The impact of audition on the development of visual attention. Psychological Science, 5, 347–353. doi:10.1111/j.1467-9280.1994.tb00284.x

- Smith, L. B., Quittner, A. L., Osberger, M. J., & Miyamoto, R. (1998). Audition and visual attention: The developmental trajectory in deaf and hearing populations. Developmental Psychology, 34, 840–850. doi:10.1037/0012-1649.34.5.840

- Stevens, C., & Neville, H. (2006). Neuroplasticity as a double-edged sword: Deaf enhancements and dyslexic deficits in motion processing. Journal of Cognitive Neuroscience, 18, 701–714. doi:10.1162/jocn.2006.18.5.701

- Treutwein, B. (1995). Adaptive psychophysical procedures. Vision Research, 35, 2503–2522. doi:10.1016/0042-6989(95)00016-X

- Wetherill, G. B., & Levitt, H. (1965). Sequential estimation of points on a psychometric function. British Journal of Mathematical and Statistical Psychology, 18, 1–10.