Abstract

Background and Objective

Laser photocoagulation is a mainstay or adjuvant treatment for a variety of common retinal diseases. Automated laser photocoagulation during intraocular surgery has not yet been established. The authors introduce an automated laser photocoagulation system for intraocular surgery, based on a novel handheld instrument. The goals of the system are to enhance accuracy and efficiency and improve safety.

Materials and Methods

Triple-ring patterns are introduced as a typical arrangement for the treatment of proliferative retinopathy and registered to a preoperative fundus image. In total, 32 target locations are specified along the circumferences of three rings having diameters of 1, 2, and 3 mm, with a burn spacing of 600 μm. Given the initial system calibration, the retinal surface is reconstructed using stereo vision, and the targets specified on the preoperative image are registered with the control system. During automated operation, the laser probe attached to the manipulator of the active handheld instrument is deflected as needed via visual servoing in order to correct the error between the aiming beam and a specified target, regardless of any erroneous handle motion by the surgeon. A constant distance of the laser probe from the retinal surface is maintained in order to yield consistent size of burns and ensure safety during operation. Real-time tracking of anatomical features enables compensation for any movement of the eye. A graphical overlay system within the operating microscope provides the surgeon with guidance cues for automated operation. Two retinal surgeons performed automated and manual trials in an artificial model of the eye, with each trial repeated three times. For the automated trials, various targeting thresholds (50–200 μm) were used to automatically trigger laser firing. In manual operation, fixed repetition rates were used, with frequencies of 1.0–2.5 Hz. The power of the 532 nm laser was set at 3.0 W with a duration of 20 ms. After completion of each trial, the speed of operation and placement error of burns were measured. The performance of the automated laser photocoagulation was compared with manual operation, using interpolated data for equivalent firing rates from 1.0 to 1.75 Hz.

Results

In automated trials, average error increased from 45 ± 27 to 60 ± 37 μm as the targeting threshold varied from 50 to 200 μm, while average firing rate significantly increased from 0.69 to 1.71 Hz. The average error in the manual trials increased from 102 ± 67 to 174 ± 98 μm as firing rate increased from 1.0 to 2.5 Hz. Compared to the manual trials, the average error in the automated trials was reduced by 53.0–56.4%, resulting in statistically significant differences (P ≤ 10⁻²⁰) for all equivalent frequencies (1.0–1.75 Hz). The depth of the laser tip in the automated trials was consistently maintained within 18 ± 2 μm root-mean-square (RMS) of its initial position, whereas it significantly varied in the manual trials, yielding an error of 296 ± 30 μm RMS. At high firing rates in manual trials, such as at 2.5 Hz, laser photocoagulation is marginally attained, yielding failed burns of 30% over the entire pattern, whereas no failed burns are found in automated trials. Relatively regular burn sizes are attained in the automated trials by the depth servoing of the laser tip, while burn sizes in the manual trials vary considerably. Automated avoidance of blood vessels was also successfully demonstrated, utilizing the retina-tracking feature to identify avoidance zones.

Conclusion

Automated intraocular laser surgery can improve the accuracy of photocoagulation while ensuring safety during operation. This paper provides an initial demonstration of the technique under reasonably realistic laboratory conditions; development of a clinically applicable system requires further work.

Keywords: retinal photocoagulation, robotics, visual servoing, micromanipulation

INTRODUCTION

Laser photocoagulation is an established treatment for a variety of retinal conditions, including proliferative retinopathy, most commonly diabetic retinopathy [1,2]; retinal breaks, whose sealing is important in the successful repair of retinal detachment [3]; macular edema [4,5]; and retinal vascular lesions [6,7], to name a few. The intraoperative goals of laser treatment include treatment of ischemic retina to allow regression of retinal neovascularization, formation of a chorioretinal scar around retinal breaks, treatment of leaking vascular lesions, and stimulation of the retinal pigment epithelium to reduce retinal edema.

Grid laser photocoagulation is used to directly treat a specific target region, usually in the macula, for treatment of macular edema [5]. Focal laser photocoagulation treats blood-vessel-specific lesions or a small area of the retina with a limited number of laser burns. For instance, this method can be applied to treat retinal breaks [3] or macular edema due to diabetes [4,5] or retinal vein occlusion [6,7]. On the other hand, panretinal (or scatter) laser photocoagulation delivers hundreds of laser burns to a wider area of the retina, usually in the regions outside the macula [1]. It is generally used as a treatment for any cause of proliferative retinopathy, the most common being proliferative diabetic retinopathy, in order to lower the production of proangiogenic and vascular hyperpermeability modulators, most notably VEGF, that may be responsible for generation of abnormal blood vessels (neovascularization). Retinal neovascularization is the precursor to the most serious vision-threatening complications of proliferative retinopathy.

For optimal clinical outcomes, those procedures require high accuracy in terms of the location and size of laser spots, with delivery of the proper amount of energy [8]. However, surgeons often encounter complications due to the limited maneuverability of surgical instruments, hand tremor, and poor visualization of surgical targets during operation. This may lead to irregular laser burns on the retinal surface and/or inadvertent coagulation of healthy retina or other ocular structures, which can result in an unintended reduction in macular function, visual field defects, or choroidal neovascularization [9]. Application of laser to unintended regions such as the optic nerve and the fovea has potential to cause permanent central vision loss [10]. In addition, long treatment sessions may impose discomfort and tedium on both patients and ophthalmologists [11,12].

Hence, automated approaches have been introduced into laser photocoagulation in order to improve treatment accuracy, increase patient safety, and reduce operating time [11–13]. Initially, the feasibility of an automated laser delivery system was demonstrated to place multiple lesions of predetermined sizes into known locations in the retina [13]. For automation, a tracking feature is essential to achieve high accuracy while compensating for the considerable movement of the eye, since the eye cannot be completely immobilized. Later research efforts led to the development of hybrid retinal tracking for automated laser photocoagulation, incorporating global tracking with digital imaging and high-speed analog local tracking [14,15]. Finally, a computer-guided retinal laser surgery system has been realized, utilizing digital fundus imaging in real time, and also controlling lesion depth [12,16]. Blumenkranz et al. also introduced a semiautomated system in which the ophthalmologist has control over the treatment at all times [11]. The semiautomated system still retains the primary advantages of the automated system, while rapidly delivering up to 50 pulses with shorter pulse duration on predefined spots, using a galvanometric scanner. The semiautomated system is kept relatively simple and inexpensive by eliminating certain features that are necessary in the automated system, such as retinal tracking and automated lesion reflectance feedback. Such systems, now commercially available as Navilas® and PASCAL, have been increasingly adopted in the clinic [8,16–18]. However, these systems treat through the pupil and are designed for use in the outpatient clinic rather than the operating room.

In order to bring such benefits of automated laser photocoagulation to intraocular surgery performed during pars plana vitrectomy [19], we presented semiautomated laser photocoagulation using an active handheld instrument known as “Micron” [20]. The semiautomated system corrected error between the preoperative target and the current beam location by deflecting the laser probe attached to Micron. However, the system was only able to correct error within a few hundred microns of the target due to its limited range of motion. The operator was thus required to deliberately move the laser probe in a raster-scan fashion in order to apply the full pattern of burns; i.e., the operator provided gross motion, while Micron provided fine motion. The distance of the tool from the retinal surface had to be manually regulated, relying on the operator’s depth perception, once again due to the limited range of motion of the active instrument. Furthermore, the limited degrees of freedom (DOF) hindered the use of the instrument through a fulcrum such as a sclerotomy in an intact eye. Accordingly, tests were performed only in “open sky” fashion on fixed surfaces, without tracking of anatomy [20].

Recently, we proposed an automated laser photocoagulation system using an improved prototype of Micron in order to overcome these drawbacks [21]. The new handheld instrument incorporates 6-DOF manipulation and provides a much greater range of motion, which enables automated scanning of the laser probe over the entire pattern area and accommodates use through a sclerotomy [22]. As a result, the feasibility of automated intraocular photocoagulation was demonstrated in a model eye, while tracking the movement of the retina and also maintaining a constant standoff distance from the retina.

However, further developments are still required to apply the automated system in real vitreoretinal surgery. For instance, any large error in initial calibration may lead to the failure of servoing, because the control system relies primarily on the calibration and corresponding reconstruction of the target surface. Moreover, the automated operation is susceptible to actuator saturation, since hand–eye coordination is interrupted; during the several seconds of automated execution, the operator can easily drift to the edge of the reachable workspace without knowing it.

To address these issues, this paper presents a new control scheme, based on visual servoing, that minimizes the error in the image plane instead of relying on a reconstructed 3D surface. A custom-built graphical overlay system in the operating microscope is also used, in order to maintain hand–eye coordination during automated execution. In addition, intraoperative anatomical tracking is utilized in automatic enforcement of avoidance zones, in order to prevent regions such as retinal vessels or the fovea from being mistakenly photocoagulated. This paper describes the development of the new system and evaluates its effectiveness when used by trained vitreoretinal surgeons in a realistic model eye through a 23-gauge cannula-based sclerotomy.

MATERIALS AND METHODS

System Setup

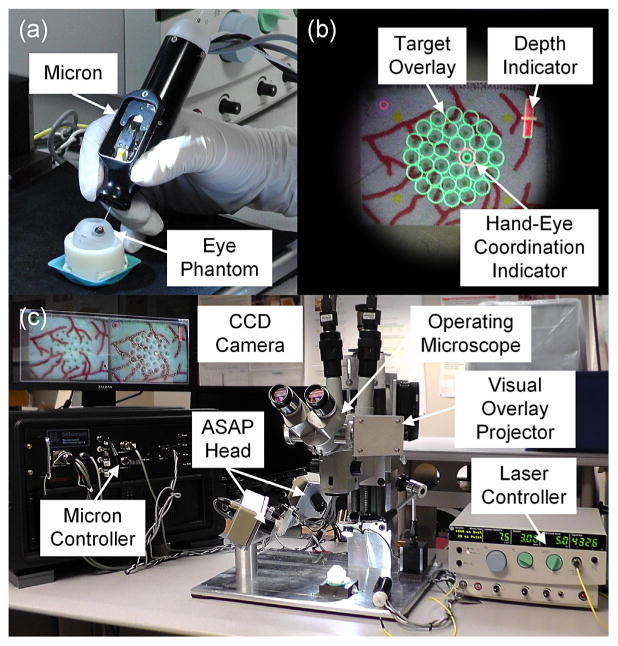

The automated laser photocoagulation system features the 6-DOF handheld instrument, Micron, as shown in Figure 1a, in which the laser probe is automatically manipulated to treat preoperatively specified targets via a vision processing system. The new Micron prototype incorporates a miniature Gough–Stewart platform actuated by six piezoelectric linear motors (SQUIGGLE® SQL-RV-1.8, New Scale Technologies, Inc.) [22]. The manipulator provides 6-DOF motion of the end-effector within a cylindrical workspace 4 mm long and 4 mm in diameter, while allowing for a remote center of motion (RCM) at the point of entry through the sclera, enabling it to execute automated scanning for intraoperative optical coherence tomography (OCT) [23] or patterned laser photocoagulation [21]. For control, 6-DOF Micron senses the position and orientation of both the tool tip and the handle, using a custom-built optical tracking system (apparatus to sense accuracy of position or “ASAP”) [24]. The handheld instrument is equipped with two sets of infrared LEDs for the optical tracking system: one set on the tool mount and the other on the handle (visible in Fig. 1a). The position and orientation are retrieved by detecting the pose of each LED panel at a sampling frequency of 1 kHz over a 27-cm³ workspace; ASAP performs this tracking with less than 10 μm RMS noise. Given the goal position of the tool tip and RCM, individual motors are controlled in real time, treating undesirable hand motion (e.g., tremor) as a control disturbance.

Fig. 1.

Automated intraocular laser surgery system. (a) Handheld instrument (Micron) and eye phantom. (b) Graphical overlays shown through the right eyepiece of the operating microscope. The green circles indicate the targets. The red bar at the upper right corner is the depth indicator representing the height of the laser probe from the retinal surface. The red circle on a target is the guidance cue to maintain the hand–eye coordination. The location and size of the circle represent the lateral and vertical positions of the laser probe, respectively. (c) Overall system setup, including the Micron controller, the operating microscope, the graphical overlay system, and the laser controller.

The vision system consists of a stereo operating microscope (Zeiss OPMI®1, Carl Zeiss AG, Germany) with variable magnification (4–25×), two CCD cameras (Flea®2, Point Grey Research, Richmond, BC, Canada), and a desktop PC. The vision system calculates the goal position of the laser tip to minimize error between the target and the detected aiming beam and delivers the goal position to the Micron controller. The system also detects tip positions in 2D images for an initial calibration between the vision system and the Micron controller. A pair of images streams to the PC at 30 Hz with 800 × 600 resolution for further processing, which is synchronized with the Micron controller via an external trigger provided by one of the cameras.

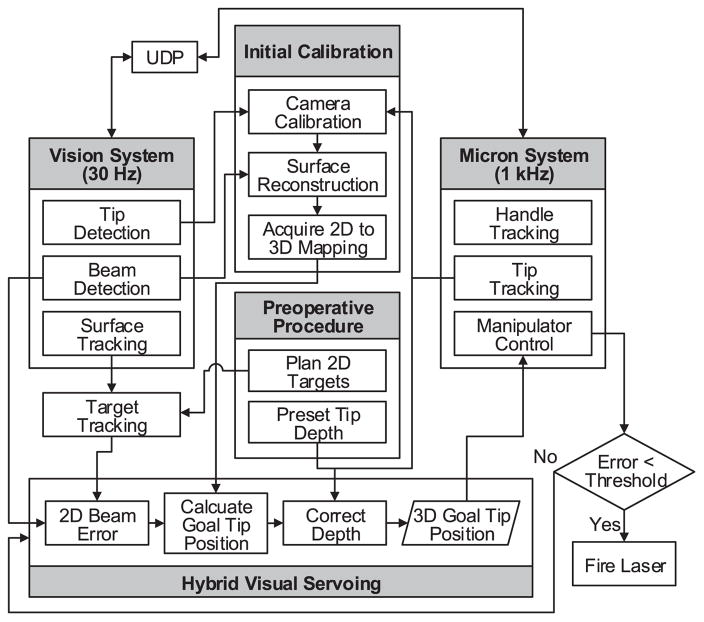

During operation, error between the target and the aiming beam is minimized by displacement of the laser tip via visual servoing. As the aiming beam reaches the target within a specified tolerance or threshold, the laser is triggered. Once the vision system detects the laser firing, the next target is assigned and the procedure is repeated until completion of photocoagulation of all targets. Figure 1c shows the overall setup with the Micron, vision, and laser systems. The overall control flow is depicted in Figure 2.
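The firing logic described above can be summarized as a small loop. The following is a minimal sketch under stated assumptions, not the authors' implementation: the function name `run_pattern`, the list-of-tuples input format, and the simplification that laser firing is detected in the same frame as the trigger are all hypothetical.

```python
import math


def run_pattern(targets, beam_track, threshold_um):
    """Simulate threshold-triggered firing over a sequence of targets.

    targets:      list of (x, y) target positions in micrometers
    beam_track:   iterable of (x, y) detected aiming-beam positions, one per frame
    threshold_um: targeting threshold in micrometers
    Returns a list of (target_index, beam_position), one entry per firing.

    Assumption: firing is detected immediately, so the next target is
    assigned right away and checked from the following frame on.
    """
    fired = []
    idx = 0
    for beam in beam_track:
        if idx >= len(targets):
            break  # every target has been treated
        tx, ty = targets[idx]
        err = math.hypot(beam[0] - tx, beam[1] - ty)
        if err <= threshold_um:
            fired.append((idx, beam))
            idx += 1  # assign the next target
    return fired
```

In the real system the servo loop drives the beam toward the current target between frames; here the beam track is simply given as input so that the trigger logic can be read in isolation.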

Fig. 2.

Block diagram of the system, showing data and execution flow.

For the automated treatment, any custom pattern can be introduced for a set of targets through the user interface, in terms of shape, size, and target spacing. The targets are first placed on a preoperative image and then updated with respect to the movement of the eye by tracking blood vessels on the retinal surface. The tracking feature is essential in order for the automated laser photocoagulation to compensate for movement of the eye in its socket during operation [14,15]. For example, vitreoretinal surgeons often manipulate the eye with a surgical tool to explore the region of interest due to the limited field of view of operating microscopes; the patient (often sedated but not under general anesthesia) may also move their head and, rarely, the eye. Accordingly, voluntary/involuntary motion is inevitably introduced to the eyeball during operation, which would lead to failure of preoperative registration. To tackle this issue, we have developed an algorithm, called “eyeSLAM,” to both map and localize retinal vessels by temporally fusing and registering detected vessels in a sequence of frames [25].

In addition, the new automated system is also equipped with a monocular graphical overlay system in the operating microscope [26], since surgeons are much more accustomed to operating with the microscope. Overlaid graphical cues include the preoperative targets, the distance of the laser tip from the retinal surface, and instructive cues to maintain hand–eye coordination. The locations of the targets are displayed as a set of circles and the distance is represented as an indication bar, as shown in Figure 1b. To maintain hand–eye coordination, it also provides the displacement of the handle with respect to its initial position when automated execution was begun. As long as the operator keeps the position within a certain range of the initial position, the automated system can correct the errors and control the depth of the tool without reaching the edge of the workspace. To enhance perception of the displacement in 3D, it is represented in the display as a circle whose location and diameter correspond to lateral and vertical displacements, respectively (a larger circle indicates greater height, closer to the viewer, and vice versa).

System Calibration

To utilize vision information in control, we first register a pair of cameras in the stereo microscope to the Micron control system. Once the 2D camera coordinates are registered to the Micron control coordinates in 3D (called herein the “ASAP coordinates”), we can then define a target surface in the ASAP coordinates by matching multiple correspondences detected in the cameras. These procedures are accomplished in a single step by sweeping the laser probe above the retinal surface while detecting the 2D positions of the tip and aiming beam in images.

For the registration, a set of 2D tip positions, in the left and right images, are matched with the corresponding 3D ASAP tip positions. We thus obtain camera calibration (or projection) matrices to map a point in 3D to projected points in 2D in both left and right images using a direct linear transformation (DLT) method [27]. Given the camera calibration, we also obtain a point cloud in 3D by triangulating multiple pairs of aiming beams detected on the left and right images, which belongs to the retinal surface. The resulting surface is then modeled as a plane in the ASAP space, by applying a linear least squares fit to the point cloud. Since only a relatively small area is observed through the microscope, compared to the entire retinal surface, the planar assumption does hold approximately in the area of interest, yielding error less than 100 μm.
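The planar surface model can be illustrated with a short least-squares fit. This is a minimal sketch, assuming the plane is parametrized as z = a·x + b·y + c (which presumes the surface is not close to vertical in the chosen frame) and that the triangulated points are already available; the function names `fit_plane` and `max_residual` are hypothetical and the text does not specify the authors' parametrization.

```python
def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points):
    """Least-squares fit of z = a*x + b*y + c to (x, y, z) points.

    Solves the 3x3 normal equations with Cramer's rule and returns (a, b, c).
    """
    n = float(len(points))
    sx = sum(x for x, _, _ in points)
    sy = sum(y for _, y, _ in points)
    sz = sum(z for _, _, z in points)
    sxx = sum(x * x for x, _, _ in points)
    syy = sum(y * y for _, y, _ in points)
    sxy = sum(x * y for x, y, _ in points)
    sxz = sum(x * z for x, _, z in points)
    syz = sum(y * z for _, y, z in points)
    A = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    det = _det3(A)
    if abs(det) < 1e-12:
        raise ValueError("degenerate point cloud: cannot fit a plane")
    coeffs = []
    for col in range(3):
        M = [row[:] for row in A]
        for r in range(3):
            M[r][col] = rhs[r]  # replace one column with the right-hand side
        coeffs.append(_det3(M) / det)
    return tuple(coeffs)


def max_residual(points, coeffs):
    """Largest absolute deviation in z of the points from the fitted plane."""
    a, b, c = coeffs
    return max(abs(z - (a * x + b * y + c)) for x, y, z in points)
```

A check such as `max_residual(points, fit_plane(points))` gives a quick indication of how well the planar assumption holds for a given point cloud.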

In addition to the system calibration for control, we also need to register the visual overlay system attached to the microscope with the vision system. This calibration is to find a mapping between a virtual image projected in the right eyepiece and an actual image captured by the right CCD camera. As a result, virtual images created by the PC are overlaid on the right eyepiece with the targets, as shown in Figure 1b.

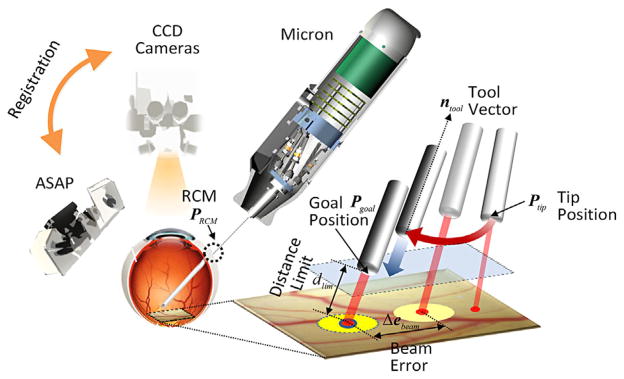

Control Principle

We introduce a hybrid control scheme for automated laser photocoagulation. The 3-DOF motion of the laser tip is decoupled into two components: the 2-DOF planar motion parallel to the target plane and the 1-DOF motion along the axis of the laser probe. The decoupled 2-DOF motion is then controlled via image-based visual servoing, to locate the laser aiming beam onto a target position using a monocular camera. The 1-DOF axial motion is then controlled to maintain the constant standoff of the laser probe from the estimated retinal surface in the ASAP coordinates.

For the image-based visual servoing, we first need to find an interaction matrix which maps differential motion in a task space to the corresponding motion in an image space. Herein, the task space defined at the ASAP coordinates is subject to the plane that models the retinal surface. The 2-DOF planar motion of the laser tip is thus allowed above the task space, where the tip motion is assumed to be parallel to the task space within a small range of motion.

The interaction matrix is derived by taking differential motions along the principal vectors of the retinal surface in 3D and their projections on image coordinates in 2D, using one of the camera calibration matrices. Accordingly, the inverse of the interaction matrix allows mapping of a differential motion in the image space to a planar motion in the task space. The inverse matrix can also be decomposed by the magnitude and direction of mapping between the image and task plane coordinates. It is noted that the scale factors can be substituted by zoom factors for a corresponding magnification of the operating microscope, while preserving the direction of motion. Hence, it would be useful for accommodating zoom optics frequently used in intraocular surgery.

Given the interaction matrix, error between a target and the aiming beam in the image coordinates is transformed to differential motion in the task plane. We then set the actual motion of the laser tip subject to minimizing the error, while accounting for the offset of the tool tip from the estimated surface. Accordingly, the actual displacement of the laser tip is defined by scaling down the differential motion in the task plane by the ratio of the lever arms pivoting around an RCM. Herein, small angular motion is assumed, because the displacement of the aiming beam is much smaller than the distance of the laser tip from the RCM. Finally, we obtain the differential motion of the laser tip in 3D, corresponding to an error between the aiming beam and the target position, using the orthonormal bases of the plane described in the ASAP coordinates. As a result, the 2D error between the current beam position and target can be minimized via the visual servoing loop, applying a proportional-derivative (PD) controller.
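The mapping from image error to tip motion described in the last few paragraphs can be sketched as follows. This is an illustrative sketch under stated assumptions rather than the authors' controller: the interaction matrix is approximated by finite differences through a 3×4 projection matrix, the lever-arm scaling is taken as the ratio of the RCM-to-tip distance to the RCM-to-beam-spot distance, and all function names and gain values (`project`, `interaction_matrix`, `tip_motion`, `pd_command`, `kp`, `kd`) are hypothetical.

```python
def project(P, X):
    """Project 3D point X through a 3x4 camera matrix P to pixel coordinates."""
    h = [r[0] * X[0] + r[1] * X[1] + r[2] * X[2] + r[3] for r in P]
    return (h[0] / h[2], h[1] / h[2])


def interaction_matrix(P, origin, u, v, delta=1.0):
    """2x2 matrix mapping motion along the plane axes u, v to image motion.

    Built by finite differences: step `delta` along each in-plane unit
    vector and observe the change of the projected point.
    """
    base = project(P, origin)
    cols = []
    for axis in (u, v):
        moved = [origin[i] + delta * axis[i] for i in range(3)]
        q = project(P, moved)
        cols.append(((q[0] - base[0]) / delta, (q[1] - base[1]) / delta))
    return [[cols[0][0], cols[1][0]],
            [cols[0][1], cols[1][1]]]


def pd_command(err, prev_err, dt, kp, kd):
    """Proportional-derivative term on the 2D image error (hypothetical gains)."""
    return tuple(kp * e + kd * (e - pe) / dt for e, pe in zip(err, prev_err))


def tip_motion(J, err_px, u, v, lever_tip, lever_beam):
    """Map an image-space error to a 3D displacement of the laser tip.

    J:          2x2 interaction matrix from interaction_matrix()
    err_px:     (ex, ey) error between target and aiming beam, in pixels
    lever_tip:  distance from the RCM to the laser tip (assumed known)
    lever_beam: distance from the RCM to the beam spot on the surface
    """
    det = J[0][0] * J[1][1] - J[0][1] * J[1][0]
    # Inverse of the 2x2 matrix applied to the image error.
    a = (J[1][1] * err_px[0] - J[0][1] * err_px[1]) / det
    b = (-J[1][0] * err_px[0] + J[0][0] * err_px[1]) / det
    # Small-angle assumption: the tip moves less than the beam spot.
    scale = lever_tip / lever_beam
    return tuple(scale * (a * u[i] + b * v[i]) for i in range(3))
```

In a servo loop, the output of `pd_command` would be passed as `err_px` to `tip_motion` at each frame, so that the commanded tip displacement shrinks as the aiming beam approaches the target.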

Although the controller brings the aiming beam to the target, one degree of freedom remains along the axis of the tool: any arbitrary distance between the laser tip and the retinal surface can be set without changing the incident position of the aiming beam. Hence, this 1-DOF motion is regulated to maintain a predefined distance between the laser tip and the retinal surface. Finally, we incorporate the depth-limiting feature by adjusting the goal position of the laser probe tip defined by the visual servoing loop, along the axis of the tool. To enforce the depth limit, the operator uses the initial position of the laser tip as the limit during automated operation. The control procedure is depicted in Figure 3.
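The axial regulation can be illustrated geometrically: sliding the goal position along the tool axis leaves the beam's incident point unchanged while setting the standoff. The sketch below assumes the standoff is measured along the tool axis and that the surface is the fitted plane written as n·x = d; the function name `regulate_standoff` and its parameters are hypothetical.

```python
def regulate_standoff(goal_tip, tool_axis, plane_normal, plane_offset, standoff):
    """Slide goal_tip along tool_axis so the tip sits `standoff` from the plane.

    goal_tip:     (x, y, z) tip goal from the lateral servo loop
    tool_axis:    unit vector pointing from the tip toward the surface
    plane_normal, plane_offset: plane defined by dot(plane_normal, x) = plane_offset
    standoff:     desired tip-to-surface distance, measured along the tool axis
    """
    def dot(a, b):
        return sum(ai * bi for ai, bi in zip(a, b))

    denom = dot(plane_normal, tool_axis)
    if abs(denom) < 1e-9:
        raise ValueError("tool axis is parallel to the surface plane")
    # Distance along the axis from the current goal to the plane.
    t = (plane_offset - dot(plane_normal, goal_tip)) / denom
    shift = t - standoff  # positive: move toward the surface
    return tuple(g + shift * a for g, a in zip(goal_tip, tool_axis))
```

For example, with the plane z = 0, a goal at (0, 0, 400) and a tool axis of (0.6, 0, -0.8), a standoff of 300 moves the goal to (120, 0, 240); the beam still strikes the plane at (300, 0, 0).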

Fig. 3.

Visualization of the control procedure. The 2D error in laser beam position is minimized by image-based visual servo control parallel to the retinal surface, while maintaining the predefined distance of the laser tip from the retinal surface along the axis of the probe. During automated delivery of a laser pattern, the remote center of motion (RCM) is manipulated by the surgeon, but the laser tip position is automatically controlled.

Experimental Protocol

We used a model eye made of a hollow polypropylene ball with 25-mm diameter, in order to evaluate the performance of the automated system on moving targets. The portion presenting the cornea was open, and the sclerotomy locations for insertion of a light-pipe and the tool were formed by rubber patches. The model eye can freely rotate in a ball cup treated with water-based lubricant (glycerin and hydroxyethyl cellulose), as shown in Figure 1a. A slip of paper was attached to the inner surface of the model eye, as a target surface for burns; the colored background of the paper is a good absorptive material for the laser, yielding distinct black burns [20].

Multiple ring patterns were introduced in our experiments, as a typical arrangement for the treatment of proliferative retinopathy [5]. The targets were uniformly placed 600 μm apart around the circumference of rings 1, 2, and 3 mm in diameter. The spacing was selected for clear visibility of burns without overlap; a smaller (or larger) spacing would also be possible in practice. The arrangement thus provided 5, 11, and 16 targets on each circle, respectively, for a total of 32 targets per trial. As an indication of the targets, 200-μm green dots were printed on the paper slide so that they were used for targeting cues in manual trials. In addition to the circular arrays of targets, artificial blood vessels were also printed on the paper. This artificial vasculature allows tracking the movement of the eye, based on the eyeSLAM algorithm [25]. We also introduced four fiducial markers outside the targets to align the preoperative targets with the printed green dots. After completion of automated trials, the markers were postoperatively re-aligned to provide the ground truth for further evaluation. Hence, we evaluated errors caused by both control and tracking performance.
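The triple-ring layout can be generated in a few lines. This is a minimal sketch: the ring diameters and per-ring target counts (5, 11, and 16) are taken from the text, while the function names, the choice of starting angle, and the use of equal angular steps are assumptions for illustration.

```python
import math


def ring_targets(diameters_um=(1000.0, 2000.0, 3000.0), counts=(5, 11, 16)):
    """Return (x, y) target positions in micrometers, evenly spaced on each ring."""
    targets = []
    for d, n in zip(diameters_um, counts):
        r = d / 2.0
        for k in range(n):
            ang = 2.0 * math.pi * k / n  # equal angular steps, starting at 0
            targets.append((r * math.cos(ang), r * math.sin(ang)))
    return targets


def arc_spacing_um(diameter_um, count):
    """Arc length between neighboring targets on one ring."""
    return math.pi * diameter_um / count
```

With these counts, the arc spacing on the three rings comes out at roughly 570 to 630 μm, which is consistent with the nominal 600-μm spacing once the count on each ring is constrained to an integer.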

For laser photocoagulation, an Iridex 23-gauge EndoProbe was attached to the tool adaptor of Micron and an Iridex Iriderm Diolite 532nm Laser (Iridex, Mountain View, CA) was interfaced with the Micron controller. To burn the targets on a paper slide, the power of the laser was set at 3.0 W with a duration of 20 ms.

We evaluated the performance of the automated system, comparing with manual operation, under a board-approved protocol. Two retinal surgeons participated in the experiments; one had prior experience with Micron, whereas the other did not. Both surgeons have completed residency in ophthalmology and certified vitreoretinal fellowship, and have been in full-time practice performing hundreds of vitreoretinal procedures per year. One surgeon has 40 years of surgical experience, while the other has 8.

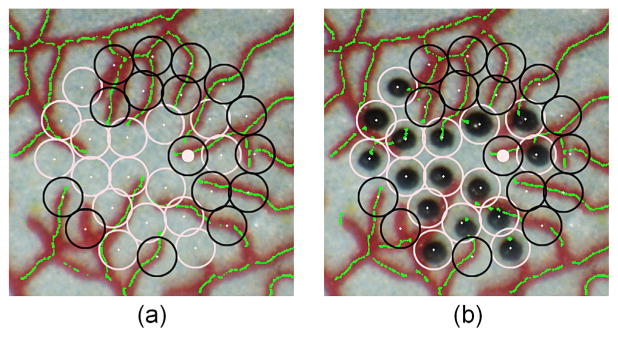

For automated operation, the targeting thresholds to trigger laser firing were set in a range of 50–200 μm, in order to investigate the effectiveness of the operation in terms of accuracy and execution time. In addition, in each trial, the initial distance between the laser tip and the tissue was used as the depth setpoint for that trial. For evaluation of targeting accuracy, all targets printed on each paper slide were subjected to treatment, without regard to any blood vessels. (The blood-vessel avoidance capability was demonstrated only in Fig. 9. In the accuracy evaluation, the vasculature is important in that it allows the system to track eye movement and keep the target registered to the retina.) In manual operation, the laser was fired at a fixed rate, regardless of convergence on the target, as is typical in intraocular laser surgery. In line with typical clinical settings, the firing rates were in the range 1.0–2.5 Hz with a step increment of 0.5 Hz. Each test condition was repeated three times.

Fig. 9.

Automated avoidance of blood vessels in automated laser photocoagulation. (a) Target placement before the photocoagulation. Empty black circles show avoided targets within 200 μm of a vessel. The green lines represent blood vessels automatically identified by the eyeSLAM algorithm. (b) After completion of the automated laser photocoagulation.

To demonstrate the vessel-avoidance feature, we applied the eyeSLAM algorithm, capable of identifying and tracking blood vessels. A triple-ring pattern was placed in the model eye, but the system was programmed not to treat any target within a distance of 200 μm from the vessels identified. This limit of 200 μm was selected based on the size of a laser burn and the targeting threshold; since the radius of a burn is approximately 150 μm and the targeting threshold was set to be at least 50 μm, the edge of the burn could potentially touch a blood vessel located 200 μm from the center of a target. In practice, the limit can be set to any distance.
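The avoidance rule amounts to a distance test between each target and the tracked vessels. The sketch below assumes that vessels are available as polylines of (x, y) points in micrometers; that representation, the function names, and the inclusive comparison are hypothetical choices, and the eyeSLAM output format is not described in this section.

```python
import math


def point_segment_distance(p, a, b):
    """Shortest distance from point p to the segment a-b (all 2D tuples)."""
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        return math.hypot(p[0] - ax, p[1] - ay)
    # Projection parameter of p onto the segment, clamped to [0, 1].
    t = ((p[0] - ax) * dx + (p[1] - ay) * dy) / seg_len2
    t = max(0.0, min(1.0, t))
    return math.hypot(p[0] - (ax + t * dx), p[1] - (ay + t * dy))


def split_by_avoidance(targets, vessel_polylines, limit_um=200.0):
    """Split targets into (treated, avoided) using a vessel distance limit."""
    treated, avoided = [], []
    for tgt in targets:
        near = any(
            point_segment_distance(tgt, line[i], line[i + 1]) <= limit_um
            for line in vessel_polylines
            for i in range(len(line) - 1)
        )
        (avoided if near else treated).append(tgt)
    return treated, avoided
```

Because the vessel map moves with the eye, such a test would need to be re-evaluated against the tracked vessel positions rather than computed once on the preoperative image.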

Data Analysis

To evaluate the accuracy of operation for each trial, the resulting image was binarized to identify black dots, and then underwent K-means clustering to find the centroid of each burn. Nearest-neighbor matching was then used to find corresponding targets. Since preoperative targets are subject to movement during operation, the targets were re-aligned after completion of each task for the error analysis, using the fiducial markers printed on the paper slide. This allowed us to evaluate the overall error, composed of both positioning and tracking errors, by calculating the 2D distance between the target and actual burn locations. In addition to the 2D targeting error, fluctuation in the axial distance of the laser tip with respect to a reference plane was investigated during operation, where the reference plane was fitted to the 3D trajectory of the probe tip. The execution time of each trial was also measured and represented as an equivalent frequency of burns per second.

We collected the data from 48 trials in total, given that the two subjects repeated each of the four test conditions of the automated and manual operation modes three times. As a result, 6 frequency measurements and 192 burn errors were obtained for each test condition and then averaged for further analysis.

Two-sample t-tests were conducted to examine statistical differences between automated and manual operation, and also differences between the subjects in each control setting. Since frequencies obtained from the automated trials were not necessarily equal to those used in the manual trials, the average error and standard deviation were interpolated within a frequency range of 1.0–1.75 Hz with a 0.25-Hz step size in order to run the t-tests under equivalent frequency settings.
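The interpolate-then-test procedure can be sketched with summary statistics alone. The example below is illustrative only: it interpolates linearly between the two endpoint values quoted in the abstract (45 ± 27 μm at 0.69 Hz and 60 ± 37 μm at 1.71 Hz), so it does not use the intermediate data points and will not reproduce the paper's exact figures. It also uses Welch's unequal-variance statistic with a normal approximation to the P value, which is a stand-in for whichever t-test variant the authors applied. The function names are hypothetical.

```python
import math


def lerp(x, x0, y0, x1, y1):
    """Linear interpolation of y at x between (x0, y0) and (x1, y1)."""
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def welch_from_stats(mean1, sd1, n1, mean2, sd2, n2):
    """Welch t statistic and a two-sided P value from summary statistics.

    The P value uses a normal approximation, which is reasonable only for
    large samples; it is not an exact Student's t probability.
    """
    se = math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)
    t = (mean1 - mean2) / se
    p = math.erfc(abs(t) / math.sqrt(2.0))
    return t, p


# Automated error interpolated to 1.0 Hz from the two reported endpoints.
auto_mean = lerp(1.0, 0.69, 45.0, 1.71, 60.0)
auto_sd = lerp(1.0, 0.69, 27.0, 1.71, 37.0)

# Manual error at 1.0 Hz as reported (102 +/- 67 um); 192 burns per condition.
t_stat, p_value = welch_from_stats(102.0, 67.0, 192, auto_mean, auto_sd, 192)
```

Interpolating the standard deviation linearly, as done here, mirrors the description in the text but is itself an approximation; a test on the raw per-burn errors would be preferable whenever they are available.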

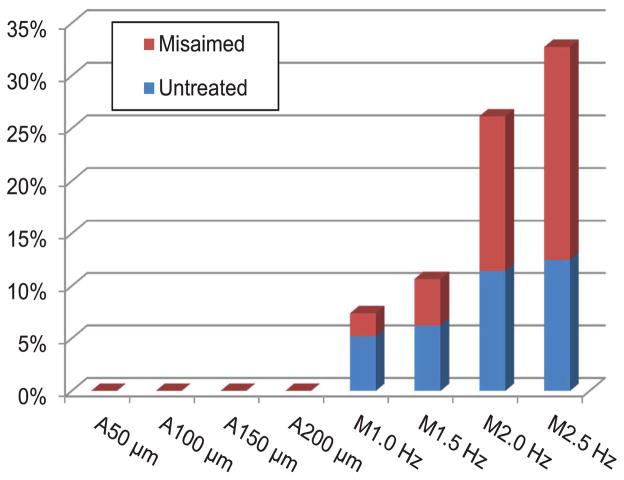

In addition to the error analysis, we dealt with invalid or spurious burns, which were found particularly in manual trials. For example, the operator sometimes failed to burn a target due to lack of energy delivered from the laser probe because the probe was too far from the retinal surface; this is noted as “untreated” in our analysis. Moreover, we call any burn “misaimed” if the error is greater than half of the target spacing (i.e., 300 μm) or if multiple burns are aggregated around a single target, such that a misaimed burn would be totally off from an originally aimed target, or would be closer to other targets than the aimed target. Thus, only detectable one-to-one correspondences between burns and targets were taken into account in the data analysis.
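The matching and exclusion rules can be expressed compactly. The following sketch assumes that burn centroids and re-aligned targets are already available as (x, y) coordinates in micrometers; it labels any target with no matched burn as untreated, which is a simplification of the definition given above, and the function name `classify_burns` is hypothetical.

```python
import math
from collections import defaultdict


def classify_burns(targets, burns, spacing_um=600.0):
    """Match burns to nearest targets and apply the exclusion rules.

    Returns (valid, misaimed, untreated):
      valid     - dict mapping target index to the 2D error of its single burn
      misaimed  - list of burns that are too far off or share a target
      untreated - list of target indices with no matched burn
    """
    limit = spacing_um / 2.0
    by_target = defaultdict(list)
    for burn in burns:
        dists = [math.hypot(burn[0] - t[0], burn[1] - t[1]) for t in targets]
        j = min(range(len(targets)), key=dists.__getitem__)  # nearest target
        by_target[j].append((burn, dists[j]))

    valid, misaimed, untreated = {}, [], []
    for j in range(len(targets)):
        hits = by_target.get(j, [])
        if not hits:
            untreated.append(j)
        elif len(hits) == 1 and hits[0][1] <= limit:
            valid[j] = hits[0][1]  # one-to-one correspondence
        else:
            misaimed.extend(burn for burn, _ in hits)
    return valid, misaimed, untreated
```

Only the errors in `valid` would enter the mean and standard deviation, matching the statement that only one-to-one correspondences were analyzed.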

RESULTS

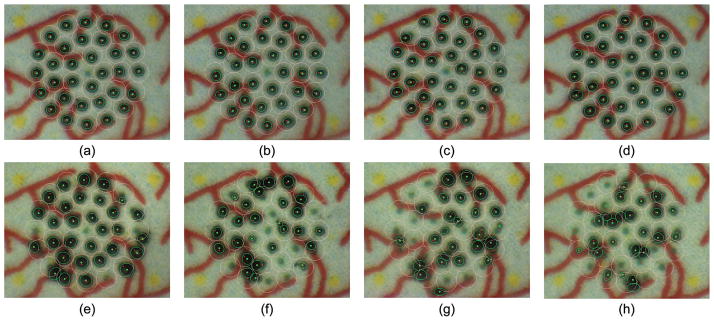

Figure 4 shows representative results from automated and manual trials. In the automated trials, burns of visibly regular size are attained, whereas the sizes vary considerably in the manual trials because of the difficulty in maintaining a consistent distance from the target surface.

Fig. 4.

Results from eye phantom trials. The top row (a–d) presents automated trials with targeting tolerances of (a) 50 μm, (b) 100 μm, (c) 150 μm, and (d) 200 μm. The bottom row (e–h) shows manual trials with laser repeat rates of (e) 1.0 Hz, (f) 1.5 Hz, (g) 2.0 Hz, and (h) 2.5 Hz.

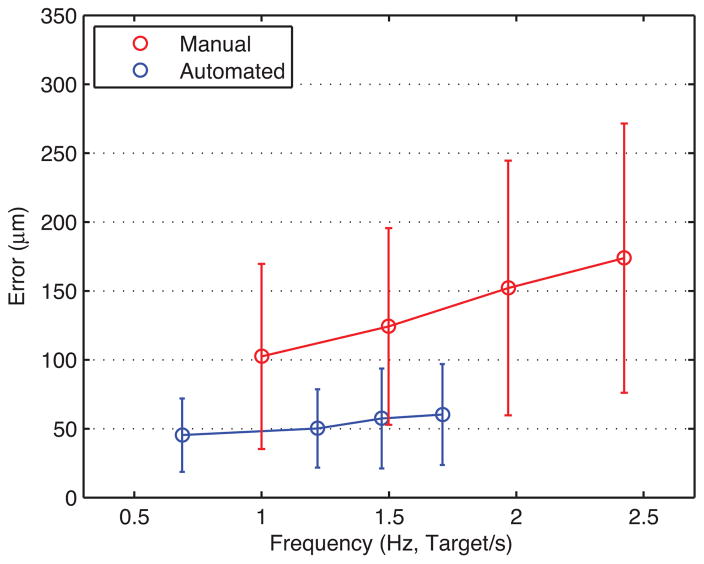

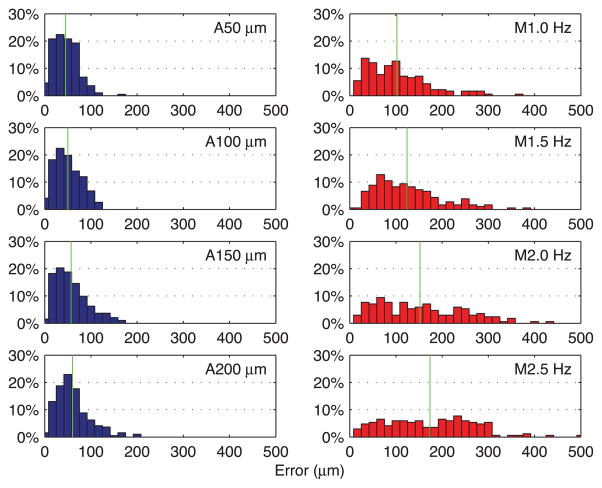

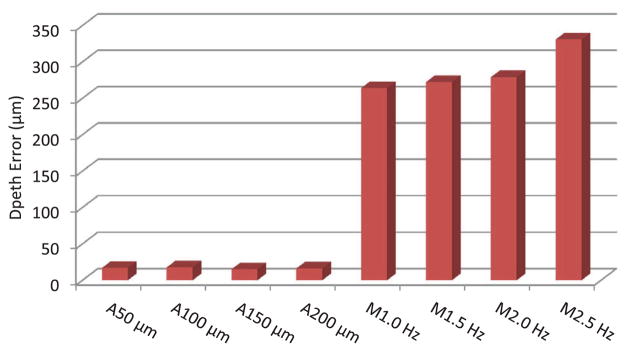

Figure 5 presents average error from automated trials in blue, with error bars indicating standard deviation. The average error gradually increases from 45 ± 27 to 60 ± 37 μm as the targeting threshold increases from 50 to 200 μm, while execution time drops from 46.6 to 18.7 s (i.e., effective frequency increases from 0.69 to 1.71 Hz). The two surgeons showed similar performance in the automated trials, regardless of prior experience with Micron. The difference in average error between the subjects for each threshold setting is found to be between 2 and 9 μm, which is negligible compared to the standard deviation of overall average error. Hence, there is no statistically significant difference between the subjects in the automated trials (P ranges from 0.10 to 0.64). Figure 6 shows the normalized histograms of error for the total 192 burns measured in each setting. According to the distributions, the errors in automated operation are tightly clustered around the average, shown as a vertical green line. During the automated trials, the distance of the laser tip was kept constant with an error of 18 ± 2 μm RMS on average, and no significant variation in depth error over the different targeting thresholds was observed, as shown in Figure 7.
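The conversion from execution time to effective frequency is a simple ratio of the 32 targets per trial to the measured time. A minimal sketch, with a hypothetical function name:

```python
def effective_frequency_hz(n_burns, execution_time_s):
    """Equivalent firing rate: number of burns divided by execution time."""
    return n_burns / execution_time_s


# Values reported in the text: 32 targets, 46.6 s and 18.7 s.
slow = round(effective_frequency_hz(32, 46.6), 2)  # 0.69
fast = round(effective_frequency_hz(32, 18.7), 2)  # 1.71
```

These values agree with the reported effective frequencies of 0.69 and 1.71 Hz.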

Fig. 5.

Overall mean error for automated and manual trials at various speeds. Error bars indicate standard deviation of the total 192 burns at each setting. The effective frequency of automated operation increased from 0.69 to 1.71 targets/s as the targeting threshold increased from 50 to 200 μm.

Fig. 6.

Normalized histograms of error for automated and manual trials. The left column shows the normalized histograms of error obtained in the automated trials. The right column shows the normalized histograms of error for the manual trials. A green vertical line indicates the mean of each distribution.

Fig. 7.

Depth fluctuation during time of operation for automated and manual trials.

The error is sometimes noticeably lower than the specified targeting threshold because of internal latency within the laser system between triggering and actual firing of the laser. The trigger is issued when the aiming beam enters the specified targeting zone, but the laser probe remains under control during the delay (about 180 ms), so the aiming beam can move closer to the target before the laser fires. The 200-μm threshold allows the fastest operation, with an average error slightly greater than at lower thresholds. Even the largest error in the automated trials is still acceptable in laser photocoagulation, compared to the size of the laser spot (200–400 μm). The execution time is reduced markedly, by 20.3 s, when the threshold is increased from 50 to 100 μm; the reduction is less than 5.0 s between each of the higher thresholds. This is because hand tremor becomes a prominent source of error when the threshold is as low as 50 μm. To satisfy such a small threshold, the control system tends to reset the laser trigger and repeat it, which increases execution time.

The average error in the manual trials rises much more rapidly than in the automated trials, from 102 ± 67 to 174 ± 98 μm, as the repetition rate increases (shown as the red line in Fig. 5). As in the automated trials, we found no statistically significant difference between the subjects in the manual trials (P ranges from 0.16 to 0.41), except at 1.0 Hz, where the average error differed by 30 μm (P = 0.003). Compared to the distribution of error obtained in the automated trials, the errors in manual operation are more widely spread over the error range of interest, as seen on the right side of Figure 6. The average depth error in the manual trials was 296 ± 30 μm, which is considerably larger than the error attained in the automated trials. Moreover, the depth error increases slightly, from 269 to 339 μm, as the repetition rate increases from 1.0 to 2.5 Hz, as presented in Figure 7.

Erroneous outcomes (untreated and misaimed burns) become pronounced in the manual trials as the firing rate increases, whereas no such erroneous burns are found in any of the automated trials, as shown in Figure 8. Hence, manual operation is only marginally feasible at the higher repetition rates, because there is insufficient time to maneuver the laser probe precisely and to adjust the height of the tool above the retinal surface. As a result, for example, in the manual trials at a firing rate of 2.5 Hz, erroneous burns represent 30% of the entire pattern. Interestingly, inter-surgeon variation in erroneous outcomes is noticeable. For the surgeon who had longer clinical experience and prior experience with Micron, an untreated burn is observed only at 2.5 Hz. On the other hand, for the surgeon with shorter clinical experience and without Micron experience, 10–24% of the entire pattern was untreated at the various repetition rates. The analysis details for automated and manual trials are summarized in Table 1.

Fig. 8.

Proportion of erroneous outcomes among all targets. The blue bar indicates the percentage of targets left untreated due to lack of energy delivered to them. The red bar stacked on the blue bar shows the percentage of misaimed burns, either yielding excessive error or aggregating around a single target.

TABLE 1.

Summary of Experimental Results

| Trial | Control setting | Execution time (s) | Frequency (targets/s) | Error (μm) | Untreated rate | Misaimed rate | P-value between subjects |
|---|---|---|---|---|---|---|---|
| Automated | 50 μm | 46.55 | 0.69 | 45 ± 27 | 0.0% | 0.0% | 0.643 |
| Automated | 100 μm | 26.25 | 1.22 | 50 ± 28 | 0.0% | 0.0% | 0.162 |
| Automated | 150 μm | 21.75 | 1.47 | 57 ± 36 | 0.0% | 0.0% | 0.169 |
| Automated | 200 μm | 18.72 | 1.71 | 60 ± 37 | 0.0% | 0.0% | 0.101 |
| Manual | 1.0 Hz | 32.01 | 1.00 | 102 ± 67 | 5.2% | 2.1% | 0.003 |
| Manual | 1.5 Hz | 21.35 | 1.50 | 124 ± 71 | 6.3% | 4.7% | 0.300 |
| Manual | 2.0 Hz | 16.26 | 1.97 | 152 ± 92 | 11.5% | 15.0% | 0.411 |
| Manual | 2.5 Hz | 13.20 | 2.42 | 174 ± 98 | 12.5% | 20.1% | 0.164 |
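
The effective frequencies follow directly from the execution times: each trial covers the 32 targets of the triple-ring pattern, so the frequency is the target count divided by the execution time. The short check below is an illustrative calculation from the tabulated times, not the authors' code; the function name is hypothetical.

```python
N_TARGETS = 32  # targets in the triple-ring pattern


def effective_frequency(execution_time_s, n_targets=N_TARGETS):
    """Targets completed per second for one full pattern."""
    return n_targets / execution_time_s


# Execution times (s) of the automated trials, keyed by targeting threshold (um).
automated_times_s = {50: 46.55, 100: 26.25, 150: 21.75, 200: 18.72}

automated_freq = {
    threshold_um: round(effective_frequency(t), 2)
    for threshold_um, t in automated_times_s.items()
}
for threshold_um, freq in automated_freq.items():
    print(f"{threshold_um} um threshold: {freq:.2f} targets/s")
```

For the 200-μm threshold this gives 32 / 18.72 ≈ 1.71 targets/s, matching the value quoted in the text and in the Figure 5 caption.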

Given the interpolated data at the equivalent frequency settings, the average error in the automated trials is reduced by 53.0–56.4% compared to the manual trials, resulting in statistically significant differences for all equivalent frequencies (P ranges from 10⁻²⁰ to 10⁻²⁴). The interpolated data and analysis results are given in Table 2.

TABLE 2.

Comparison of Automated and Manual Trials With the Interpolated Data at Equivalent Frequencies

| Equivalent frequency (Hz) | Automated error (μm) | Manual error (μm) | Error reduction (%) | P-value |
|---|---|---|---|---|
| 1.00 | 48 ± 28 | 102 ± 67 | 53.0 | 3.29 × 10⁻²⁰ |
| 1.25 | 51 ± 29 | 113 ± 69 | 55.0 | 1.16 × 10⁻²³ |
| 1.50 | 58 ± 36 | 124 ± 71 | 53.6 | 4.13 × 10⁻²⁴ |
| 1.75 | 61 ± 37 | 139 ± 83 | 56.4 | 9.17 × 10⁻²⁵ |
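
As a rough illustration of how values at equivalent frequencies can be obtained, the sketch below linearly interpolates the mean errors of Table 1 over the effective frequency and then computes the relative error reduction. Linear interpolation of the rounded means is an assumption made here for illustration; the interpolation method applied to the underlying burn data is not stated in this section, and the helper names are hypothetical.

```python
N_TARGETS = 32


def interpolate(x, xs, ys):
    """Piecewise-linear interpolation; xs must be sorted in ascending order."""
    if not xs[0] <= x <= xs[-1]:
        raise ValueError("x lies outside the measured range; no extrapolation")
    for i in range(len(xs) - 1):
        if xs[i] <= x <= xs[i + 1]:
            slope = (ys[i + 1] - ys[i]) / (xs[i + 1] - xs[i])
            return ys[i] + slope * (x - xs[i])


def error_reduction_pct(automated_um, manual_um):
    """Relative reduction of the automated error with respect to manual."""
    return 100.0 * (manual_um - automated_um) / manual_um


# Table 1: execution times (s) and mean errors (um), in order of setting.
auto_times_s, auto_err_um = [46.55, 26.25, 21.75, 18.72], [45, 50, 57, 60]
manual_times_s, manual_err_um = [32.01, 21.35, 16.26, 13.20], [102, 124, 152, 174]

auto_freq = [N_TARGETS / t for t in auto_times_s]
manual_freq = [N_TARGETS / t for t in manual_times_s]

for f in (1.00, 1.25):
    a = interpolate(f, auto_freq, auto_err_um)
    m = interpolate(f, manual_freq, manual_err_um)
    print(f"{f:.2f} Hz: automated {a:.0f} um, manual {m:.0f} um, "
          f"reduction {error_reduction_pct(a, m):.1f}%")
```

At 1.00 and 1.25 Hz this reproduces the tabulated means and reductions to within rounding. The sketch deliberately refuses to extrapolate, so it does not cover 1.75 Hz, which lies just above the fastest automated frequency computed from the execution times.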

Finally, the result of the avoidance feature is demonstrated in Figure 9, where white circles mark the targeted locations to be burned and black circles mark the target locations to be avoided. The 200-μm threshold set for the demonstration caused half of the initial targets to be bypassed.

DISCUSSION

The system presented in this study demonstrates automated laser photocoagulation for intraocular surgery using a novel 6-DOF active handheld instrument. The newly developed manipulator enables automated scanning of the laser probe over a range of several millimeters, maintaining a consistent distance of the probe from the retinal surface, especially along the axis of the probe. The additional DOF in manipulation accommodate operation through a sclerotomy, such that the laser tip can be controlled independently of the movement of the fulcrum. Real-time tracking of anatomical features is used to compensate for eye movement during intraocular operation and to localize specified avoidance zones automatically. The graphical overlay system allows the surgeon to operate the system in a user-friendly fashion while viewing directly through the operating microscope. The automated system greatly improves the accuracy of laser photocoagulation compared to conventional manual operation, showing statistically significant differences in average error. In addition, the automated depth regulation of the system yields consistent burn sizes while ensuring safety during operation. For instance, the depth-limiting feature contributes to complete treatment of all targets without inter-surgeon variation. In contrast, in manual operation, targets were left untreated because of failure to maintain a consistent distance from the tissue. Furthermore, as shown in Figure 9, automated avoidance of critical anatomy was demonstrated by localizing and tracking blood vessels; this can enhance safety when operating near critical structures such as the fovea.

Compared to the semiautomated system using the 3-DOF Micron [20], the accuracy is significantly improved in terms of both absolute mean error and error reduction relative to manual operation. For instance, at an effective firing rate of 1.0 Hz, the mean error is reduced from 129 ± 69 to 48 ± 28 μm by the automated system, resulting in a 53.0% error reduction compared to manual operation, whereas the semiautomated system reduced the error by only 22.3% [20]. Moreover, automated depth regulation is available only in the new automated system; the semiautomated system lacked this feature due to the limited workspace of the manipulator used.

We also found that the new system slightly improves accuracy compared to the initial prototype [21], reducing the error from 65 to 50 μm, while addressing the issues that arose from the use of position-based servo control and the lack of a graphical overlay through the microscope.

Further experiments will be performed on biological tissues ex vivo and in vivo. To apply the automated system to such tissues, we are developing a new calibration method that is suitable for the complex optics of the eye in vivo and that incorporates a supplementary contact lens to provide a wide-angle view during operation. In addition, the avoidance feature could be improved by applying a path-planning algorithm to attain an optimal path for control, resulting in reduced operation time and enhanced safety [28].

Acknowledgments

Contract grant sponsor: U. S. National Institutes of Health; Contract grant number: R01 EB000526; Contract grant sponsor: Kwanjeong Educational Foundation.

The authors would like to thank Jisu Kim of the Department of Statistics at Carnegie Mellon University for assistance with statistical analysis. This work was supported by the U. S. National Institutes of Health (grant no. R01 EB000526) and the Kwanjeong Educational Foundation.

Footnotes

Conflict of Interest Disclosures: All authors have completed and submitted the ICMJE Form for Disclosure of Potential Conflicts of Interest and none were reported.

References

1. Diabetic Retinopathy Study Research Group. Photocoagulation treatment of proliferative diabetic retinopathy: Clinical application of diabetic retinopathy study (DRS) findings, DRS report number 8. Ophthalmology. 1981;88(7):583–600.
2. Mohamed Q, Gillies M, Wong T. Management of diabetic retinopathy: A systematic review. J Am Med Assoc. 2007;298(8):902–916. doi: 10.1001/jama.298.8.902.
3. Sternberg P, Han DP, Yeo JH, Barr CC, Lewis H, Williams GA, Mieler WF. Photocoagulation to prevent retinal detachment in acute retinal necrosis. Ophthalmology. 1988;95:1389–1393. doi: 10.1016/s0161-6420(88)32999-4.
4. Early Treatment Diabetic Retinopathy Study Research Group. Photocoagulation for diabetic macular edema. Early treatment diabetic retinopathy study report number 1. Arch Ophthalmol. 1985;103(12):1796–1806.
5. Bandello F, Lanzetta P, Menchini U. When and how to do a grid laser for diabetic macular edema. Doc Ophthalmol. 1999;97:415–419. doi: 10.1023/a:1002499920673.
6. The Branch Vein Occlusion Study Group. Argon laser photocoagulation for macular edema in branch vein occlusion. Am J Ophthalmol. 1984;98:271–282. doi: 10.1016/0002-9394(84)90316-7.
7. Pielen A, Feltgen N, Isserstedt C, Callizo J, Junker B, Schmucker C. Efficacy and safety of intravitreal therapy in macular edema due to branch and central retinal vein occlusion: A systematic review. PLoS ONE. 2013;8(10):1–21. doi: 10.1371/journal.pone.0078538.
8. Chhablani J, Mathai A, Rani P, Gupta V, Fernando Arevalo J, Kozak I. Comparison of conventional pattern and novel navigated panretinal photocoagulation in proliferative diabetic retinopathy. Investig Ophthalmol Vis Sci. 2014;55:3432–3438. doi: 10.1167/iovs.14-13936.
9. Leaver P, Williams C. Argon laser photocoagulation in the treatment of central serous retinopathy. Br J Ophthalmol. 1979;63:674–677. doi: 10.1136/bjo.63.10.674.
10. Frank RN. Retinal laser photocoagulation: Benefits and risks. Vision Res. 1980;20(12):1073–1081. doi: 10.1016/0042-6989(80)90044-9.
11. Blumenkranz MS, Yellachich D, Andersen DE, Wiltberger MW, Mordaunt D, Marcellino GR, Palanker D. Semiautomated patterned scanning laser for retinal photocoagulation. Retina. 2006;26(3):370–376. doi: 10.1097/00006982-200603000-00024.
12. Wright CHG, Barrett SF, Welch AJ. Design and development of a computer-assisted retinal laser surgery system. J Biomed Opt. 2006;11(4):041127. doi: 10.1117/1.2342465.
13. Markow MS, Yang Y, Welch AJ, Rylander HG, Weinberg WS. An automated laser system for eye surgery. IEEE Eng Med Biol Mag. 1989;8(4):24–29. doi: 10.1109/51.45953.
14. Wright CHG, Ferguson RD, Rylander HG III, Welch AJ, Barrett SF. Hybrid approach to retinal tracking and laser aiming for photocoagulation. J Biomed Opt. 1997;2(2):195–203. doi: 10.1117/12.268964.
15. Naess E, Molvik T, Ludwig D, Barrett S, Legowski S, Wright C, de Graaf P. Computer-assisted laser photocoagulation of the retina—A hybrid tracking approach. J Biomed Opt. 2002;7(2):179–189. doi: 10.1117/1.1461831.
16. Kozak I, Oster SF, Cortes MA, Dowell D, Hartmann K, Kim JS, Freeman WR. Clinical evaluation and treatment accuracy in diabetic macular edema using navigated laser photocoagulator NAVILAS. Ophthalmology. 2011;118:1119–1124. doi: 10.1016/j.ophtha.2010.10.007.
17. Chappelow AV, Tan K, Waheed NK, Kaiser PK. Panretinal photocoagulation for proliferative diabetic retinopathy: Pattern scan laser versus argon laser. Am J Ophthalmol. 2012;153(1):137–142. doi: 10.1016/j.ajo.2011.05.035.
18. Sanghvi C, McLauchlan R, Delgado C, Young L, Charles SJ, Marcellino G, Stanga PE. Initial experience with the Pascal photocoagulator: A pilot study of 75 procedures. Br J Ophthalmol. 2008;92:1061–1064. doi: 10.1136/bjo.2008.139568.
19. Wilkes SR. Current therapy of diabetic retinopathy: Laser and vitreoretinal surgery. J Natl Med Assoc. 1993;85:841–847.
20. Becker BC, MacLachlan RA, Lobes LA, Riviere CN. Semiautomated intraocular laser surgery using handheld instruments. Lasers Surg Med. 2010;42(3):264–273. doi: 10.1002/lsm.20897.
21. Yang S, MacLachlan RA, Riviere CN. Toward automated intraocular laser surgery using a handheld micromanipulator. Proc IEEE/RSJ Int Conf Intell Robot Syst. 2014:1302–1307. doi: 10.1109/IROS.2014.6942725.
22. Yang S, MacLachlan RA, Riviere CN. Manipulator design and operation of a six-degree-of-freedom handheld tremor-canceling microsurgical instrument. IEEE/ASME Trans Mechatronics. 2015;20(2):761–772. doi: 10.1109/TMECH.2014.2320858.
23. Yang S, Balicki M, MacLachlan RA, Liu X, Kang JU, Taylor RH, Riviere CN. Optical coherence tomography scanning with a handheld vitreoretinal micromanipulator. Proc 34th Annu Int Conf IEEE Eng Med Biol Soc. 2012:948–951. doi: 10.1109/EMBC.2012.6346089.
24. MacLachlan RA, Riviere CN. High-speed microscale optical tracking using digital frequency-domain multiplexing. IEEE Trans Instrum Meas. 2009;58(6):1991–2001. doi: 10.1109/TIM.2008.2006132.
25. Becker BC, Riviere CN. Real-time retinal vessel mapping and localization for intraocular surgery. Proc IEEE Int Conf Robot Autom. 2013:5360–5365. doi: 10.1109/ICRA.2013.6631345.
26. Rodriguez Palma S, Becker BC, Lobes LA, Riviere CN. Comparative evaluation of monocular augmented-reality display for surgical microscopes. Proc 34th Annu Int Conf IEEE Eng Med Biol Soc. 2012:1409–1412. doi: 10.1109/EMBC.2012.6346203.
27. Hartley R, Zisserman A. Multiple view geometry in computer vision. New York: Cambridge University Press; 2003.
28. Brunenberg EJ, Vilanova A, Visser-Vandewalle V, Temel Y, Ackermans L, Platel B, ter Haar Romeny BM. Automatic trajectory planning for deep brain stimulation: A feasibility study. Lect Notes Comput Sci. 2007;4791:584–592. doi: 10.1007/978-3-540-75757-3_71.