Abstract

This report describes algorithms for fitting certain curves and surfaces to points in three dimensions. All fits are based on orthogonal distance regression. The algorithms were developed as reference software for the National Institute of Standards and Technology’s Algorithm Testing System, which has been used for 5 years by NIST and by members of the American Society of Mechanical Engineers’ B89.4.10 standards committee. The Algorithm Testing System itself is described only briefly; the main part of this paper covers the general linear algebra, numerical analysis, and optimization methods it employs. Most of the fitting routines rely on the Levenberg-Marquardt optimization routine.

Keywords: coordinate measuring machine, curve fitting, least-squares fitting, Levenberg-Marquardt, orthogonal distance regression, surface fitting

1. Introduction

Mathematical software, particularly curve and surface fitting routines, is a critical component of coordinate metrology systems. An important but difficult task is to assess the performance of such routines [1,2]. The National Institute of Standards and Technology has developed a software package, the NIST Algorithm Testing System (ATS), that can assist in assessing the performance of geometry-fitting routines [3]. This system has been aiding in the development of a U. S. standard (American Society of Mechanical Engineers (ASME) B89.4.10) for software performance evaluation of coordinate measuring systems [4]. The ATS is also the basis of NIST’s Algorithm Testing and Evaluation Program for Coordinate Measuring Systems (ATEP-CMS) [5,6].

The ATS incorporates three core modules: a data generator [7] for defining and generating test data sets, a collection of reference algorithms which provides a performance baseline for fitting algorithms, and a comparator for analyzing the results of fitting algorithms versus the reference algorithms. This paper concentrates on the development of highly accurate reference algorithms.

The paper is organized as follows. We first introduce notation and certain key functions and derivatives that will be used throughout the paper. Section 2 describes fitting algorithms for linear geometries—planes and lines. Lagrange multipliers [8] are used in solving the constrained minimization problems, which are developed into standard eigenvector problems. Section 3 deals with nonlinear geometry fitting. We use an unconstrained optimization method that requires derivatives of appropriate distance functions. These required functions and derivatives are provided for the reader. Appendix A gives an outline of the unconstrained optimization algorithm (Levenberg-Marquardt) that is modified to allow for normalization of fitting parameters within the routine. Appendix B gives, for all the geometries, the appropriate derivatives needed to create a valuable check for a local minimum.

1.1 Notation and Preliminary Remarks

Assume we are fitting a set of data points, {xi}, i = 1,2,…, N, that have been translated so that their centroid is the origin. Usually scalar quantities are represented in plain type and matrix or vector quantities with boldface type. Other notation:

| x = (x,y,z) | A point in 3-dimensional space. |

| |·| | The Euclidean (L2) norm. E. g., . |

| xi = (xi,yi,zi) | The ith data point. |

| The centroid of the data,

(Note: These and all other sums in this paper are taken from i = 1,2,…,N.) |

|

| A = (A,B,C) | Direction numbers that specify an orientation, A ≠ 0. |

| a = (a,b,c) | Direction cosines that specify an orientation. Note: |a| = 1. An orientation’s direction numbers can be converted into direction cosines by: a = A/|A|. |

| J | The objective function. J is the sum of the squares of the distances from the data points to the geometry. . |

| M | The N × 3 matrix containing the data points: |

| ∇ | The gradient of a scalar function. E.g., . |

For each geometry, we show the defining parameters, the equation for the orthogonal distance from a single data point to the geometry, the objective function, and a brief description of the steps in the calculation.

2. Linear Geometries

Linear geometries (lines and planes) are solved using Lagrange multipliers on a constrained minimization problem. Both cases reduce to a rather simple eigen-problem.

2.1 Plane Fitting

Defining parameters:

x—a point on the plane.

a—the direction cosines of the normal to the plane.

Distance equation:

Objective function:

Description:

The centroid of the data must lie on the least-squares plane. This can be seen because ∇J = 0 at the least squares solution, yielding . Multiplying by 1/N gives . Distributing the summation gives , which is to say , i.e., lies on the least-squares plane. Since by assumption the data points have been translated to the origin, and since the centroid of the data must be a point on the least squares plane, we can set x = 0.

The direction of the fitted plane, a, can be found by solving the constrained minimization problem, namely, minimizing J subject to the constraint that |a| = 1. Define a function, G = |a|2 − 1, so that the problem is to minimize J subject to the constraint that G = 0. The method of Lagrange multipliers [8] tells us that the minimum occurs at a point where ∇J = λ∇G, for some real number λ. (Here, a, b, and c are treated as independent variables, since the constraint is accounted for in G. Therefore, ∇ = (∂/∂a, ∂/∂b, ∂/∂c).) But ∇G = 2a, and ∇J = 2(M⊤M)a, yielding the eigen-problem, (M⊤M)a = λa, referred to as the normal equations.

This 3 × 3 eigenvector problem can be easily solved using well-established routines (e.g., Jacobi iterations [9]). However, we note that the eigenvectors of M⊤M are also the singular vectors (from the singular value decomposition) of M [9]. This allows us to gain numerical stability by applying the singular value decomposition (SVD) to M without ever computing M⊤M, which is the method implemented in the ATS.

Finally, we must determine how to select the correct eigenvector (i.e., singular vector) of the three produced by the SVD. The normal equations can be written as follows:

Multiplying these three equations by a, b, and c, respectively, then summing the equations gives .

But the sum on the left is just the objective function, i.e., the sum of the squares of the residuals. Thus the value of λ is the objective function, hence the correct eigenvector for the least-squares solution corresponds to the smallest eigenvalue. When using the SVD, we choose the singular vector corresponding to the smallest singular value, since under these conditions the singular values are the square roots of the eigenvalues [10].

2.2 Line Fitting

Defining parameters:

x—a point on the line.

a—the direction cosines of the line.

Distance equation:

d(xi) = d(xi, x,a) = |a × (xi − x)| (which also equals where × denotes the vector cross product.

Objective function:

Description:

Since the data has been translated to the origin, and since the centroid of the data must be a point on the least squares plane (similar to the case above with the least squares plane), we set x = 0.

The direction a can be found by following the same strategy as in the case of a plane. For line fitting, the normal equations are M⊤Ma = λa just as in the case of plane fitting. Once again, the correct eigenvector must be chosen to minimize the sum-of-squares of the residuals. As shown with planes, we obtain λ = Σ(a·x)2, so J = − λ + ∑|xi|2, meaning that J is minimized when λ is maximized. Thus the correct eigenvector choice is the one corresponding to the largest eigenvalue. As in the case of plane fitting, numerical stability is gained by finding the eigenvectors of M through the SVD, rather than by solving the normal equations. Since the singular values are the square roots of the eigenvalues [10], we choose the eigenvector corresponding to the largest singular value.

3. Nonlinear Geometries

3.1 Utility Functions f and g

The line and plane distance functions arise quite often in this paper, thus we define them here, calling them f and g respectively, giving necessary derivatives, which are used throughout the rest of this paper. We compute the nonlinear fits using unconstrained minimization algorithms, so we define the line and plane distance functions in terms of direction numbers rather than direction cosines.

Let g(xi,x,A) denote the distance from the point, xi, to the plane defined by the point, x, and the normal direction, a = A/|A|. The value of g is given by: gi = g(xi,x,A) = a·(xi − x) = a(xi − x) + b(yi − y) + c(zi − z).

Let f(xi,x,A) denote the distance from the point, xi, to the line defined by the point, x, and the direction, a = A/|A|. The value of f is given by: fi = f(xi,x,A) = |a × (xi − x)|. That is,

This expression for f is used because of its numerical stability. One should note that f could also be expressed (for derivative calculations) as .

Note: A, B, and C are independent variables, whereas a, b, and c are not, because the constraint a2 + b2 + c2 = 1 causes a, b, and c to depend on each other. When dealing with the nonlinear geometries we treat f and g as functions dependent on A, as opposed to a, in order to use unconstrained minimization algorithms. Treating f and g as functions dependent on a would force us to restrict ourselves to using constrained minimization solvers. In the linear cases, we did solve constrained minimization problems. So when we differentiate with respect to A, for example, we treat a, b, and c all as functions of A, B, and C (e.g., . This yields the following array of derivatives:

These algorithms normalize A at every step, so for simplicity of expressing derivatives, assume |A| = 1. A remains unconstrained; we just assume it happens to have unit magnitude.

The derivatives for fi and gi are then

The above derivatives of fi are undefined when fi = 0 (i.e., when xi is on the line.) In this rarely needed case the gradient is given by:

For cylinders, cones, and tori, the line associated with f is the geometry’s axis. For cones the plane associated with g is the plane through the point, x, perpendicular to the axis. For tori, the plane associated with g is the plane perpendicular to the axis that divides the torus in half.

3.2 Choice of Algorithm

Good optimization algorithms can readily be found to minimize the objective function, J. Usually such an algorithm will require an initial guess, along with partial derivatives, either of J itself or of the distance function, di. Both sets of derivatives are given in this paper (those for J in the appendix). These should enable a reader to implement a least-squares algorithm even if the optimization algorithm used differs from the author’s choice, which follows.

In the ATS, nonlinear geometries are fit in an unconstrained manner using the Levenberg-Marquardt algorithm. The algorithm requires an initial guess as well as the first derivatives of the distance function. In practice it converges quickly and accurately even with a wide range of initial guesses. Details of this algorithm are given in appendix A. Additionally, the code allows us to normalize the fitting parameters after every iteration of the algorithm. For each geometry we list the distance and objective functions, the appropriate derivatives, and the parameter normalization we use.

3.3 Sphere Fitting

Defining parameters:

x—the center of the sphere.

r—the radius of the sphere.

Distance equation:

Objective function:

Normalization:

(None)

Derivatives:

3.4 Two-Dimensional Circle Fitting

This case is simply the sphere fit (above) restricted to two-dimensions:

Defining parameters:

x—the x-coordinate of the center of the circle.

y—the y-coordinate of the center of the circle.

r—the radius of the circle.

Distance equation:

Objective function:

Normalization:

(None)

Derivatives:

3.5 Three-Dimensional Circle Fitting

Defining parameters:

x—the center of the circle.

A—the direction numbers of the normal to circle’s plane.

r—the radius of the circle.

Distance equation:

Objective function:

Normalization:

| A ← A/|A| | (Here and elsewhere, “←” denotes assignment of value. In this case, the value of A is replaced by the value A/|A|.) |

Derivatives:

Description:

We use a multi-step process to accomplish 3D circle fitting:

Compute the least-squares plane of the data.

Rotate the data such that the least-squares plane is the x-y plane.

Project the rotated data points onto the x-y plane.

Compute the 2D circle fit in the x-y plane.

Rotate back to the original orientation.

Perform a full 3D minimization search over all the parameters.

Some coordinate measuring system software packages stop at step (5) and report the orientation, center, and radius as the least-squares circle in 3D. This approach is valid when the projection onto the plane is done simply to compensate for measurement errors on points which would otherwise be coplanar. But this method does not in general produce the circle yielding the least sum-of-squares possible (even though it is usually a good approximation.) In order to achieve the true 3D least-squares fit, the circle computed at step (5) is used as an initial guess in the Levenberg-Marquardt algorithm, which optimizes over all the parameters simultaneously [step (6)].

Step (2) is carried out using the appropriate rotation matrix to rotate the direction, a, to the z-direction, namely,

If c < 0, a is replaced with − a, thus rotating the direction to the minus z-direction (which is adequate for our purposes.) Step (5) is carried out using the appropriate rotation matrix to rotate the z-direction to the direction, a. Namely,

3.6 Cylinder Fitting

Defining parameters:

x—a point on the cylinder axis.

A—the direction numbers of the cylinder axis.

r—the radius of the cylinder.

Distance equation:

Objective function:

Normalization:

A ← A/|A|

X ← (point on axis closest to origin)

Derivatives:

3.7 Cone Fitting

Defining parameters:

x—a point on the cone axis (not the apex).

A—the direction numbers of the cone axis (pointing toward the apex).

s—the orthogonal distance from the point, x, to the cone.

ψ —the cone’s apex semi-angle.

Distance equation:

Objective function:

Normalization:

A ← A/|A|

x ← (point on axis closest to origin)

ψ ← ψ (mod 2π)

if ψ > π then [ψ ← ψ (mod π); A ← − A]

if then ψ ← π – ψ

if s < 0 then [s ← − s; A ← − A]

Derivatives:

3.8 Torus Fitting

Defining parameters:

x—the torus center.

A—the direction numbers of the torus axis.

r—the major radius.

R—the minor radius.

Distance equation:

Objective function:

Normalization:

A ← A/|A|

Derivatives:

4. Discussion

The algorithms have been implemented in the ATS and have been used for 5 years by NIST and by members of the ASME B89.4.10 Working Group. In general they have performed extremely well. They have successfully solved a number of difficult fitting problems that could not be solved by many commercial software packages used on Coordinate Measuring Systems (CMSs). (Some of the most difficult problems are cylinders or cones sampled over a small patch.) The ATS algorithms have an extremely broad range of convergence. Failure to converge has only been observed for pathological fitting problems (e.g., fitting a circle to collinear points). Special checks can detect most of these situations.

The ATS algorithms are generally robust. For most fits, a good starting guess is not required to reach the global minimum. This is due, in part, to the careful choice of fitting parameters, the use of certain constraints, and, for cylinders and cones, the technique of restarting a search after an initial solution is found.

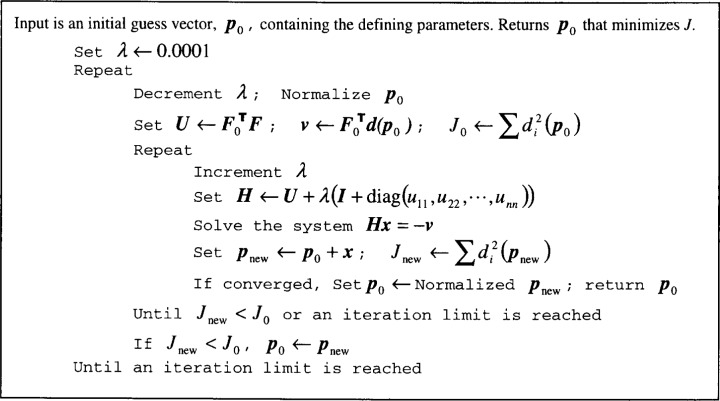

Fig. 1.

Levenberg-Marquardt algorithm.

Biography

About the author: Craig Shakarji is a mathematician in the Manufacturing Systems Integration Division of the NIST Manufacturing Engineering Laboratory. The National Institute of Standards and Technology is an agency of the Technology Administration, U.S. Department of Commerce.

5. Appendix A. The Levenberg-Marquardt Algorithm

The Levenberg-Marquardt algorithm [11] finds the vector, p, that minimizes an objective function, , where di(p) is the distance from the point, xi, to the geometry defined by the vector of parameters, p. In the case of a cylinder, for example, p = (x, A, r) = (x, y, z, A, B, C, r) and di(p) = fi − r. One derivation of the algorithm [12] begins by approximating J as a linear function of p, :

where ∇di(p0) is the gradient of di(p) evaluated at an initial guess, p0. This approximation is valid within a certain trust region radius. The derivation then considers how to minimize . A search direction is calculated based on , and a search is made in that direction within the limits of the trust region radius for a point, pnew, such that J(pnew) < J(p0). When pnew is found, it becomes the new p0 for another iteration of the above process.

The basis for the algorithm is the result that the solution, p*, to each iteration can be expressed as p* = p(λ) = − (F0⊤F0 + λD⊤D)−1 F0⊤d(p0) where F0 is a matrix having ∇di(p0) as its ith row, D is an appropriate weighting matrix, d(p0) is the vector of residuals, di(p0), and λ ≥ 0 is a variable called the Levenberg-Marquardt parameter. This parameter can be considered the Lagrange multiplier for the constraint that each search be limited to the trust region radius.

The Levenberg-Marquardt algorithm is presented in the figure below. The matrix F0⊤F0 + λD⊤D is named H and the vector F0⊤d(p0) is called v. The algorithm includes a modification suggested by Nash [11], which is to use a weighting matrix defined so that D⊤D is the identity matrix plus the diagonal of F0⊤F0. Nash’s use of the identity in the definition of D forces H to be positive definite. (Note that D is never calculated.) Since H is also symmetric, the system Hx = − v can be reliably solved using the Cholesky decomposition [9]. We chose 10 and 0.04 as factors with which to increment and decrement λ respectively. Finally, note that we normalize the parameter vector, p, at every iteration. However, normalization of p never changes the value of the objective function, J(p). This routine is the basis of the ATS implementation of the Levenberg-Marquardt algorithm.

6. Appendix B. Gradient Test for Correctness

One helpful check is to compute the gradient of the objective function at the computed minimum. The correct least-squares solution yields ΔJ = 0. Also, these derivatives are important because several minimization algorithms require that these derivatives be included. The definitions of f and g are kept the same as they were in Sec. 3. For every nonlinear geometry, assume the same definition for the objective function, J, as specified in Sec. 3. For the linear geometries, the objective functions are here defined in terms of unconstrained parameters.

Planes

Lines

Spheres

2D Circles

3D Circles

Cylinders

Cones

Tori

7. References

- 1.Hopp TH. Manufacturing Review. 4. Vol. 6. American Society of Mechanical Engineers; New York: 1993. Computational Metrology; pp. 295–304. [Google Scholar]

- 2.Walker R. CMM Form Tolerance Algorithm Testing, GIDEP Alert X1-A-88-01, Government-Industry Data Exchange Program. Department of Defense; Washington, DC; 1988. [Google Scholar]

- 3.Rosenfeld DA. User’s Guide for the Algorithm Testing System Version 20, NISTIR 5674. National Institute of Standards and Technology; Gaithersburg, MD: 1995. [Google Scholar]

- 4.ASME . Methods for Performance Evaluation of Coordinate Measuring System Software, B89.4.10-199x, DRAFT. American Society of Mechanical Engineers; New York: 1998. [Google Scholar]

- 5.Diaz C, Hopp TH. NIST Special Test Service: The Algorithm Testing and Evaluation Program for Coordinate Measuring Systems, NISTSP 250-41. National Institute of Standards and Technology; Gaithersburg, MD: to be published. [Google Scholar]

- 6.Diaz C, Hopp TH. Testing Coordinate Measuring Systems Software; Proceedings of ASQC Measurement Quality Conference; October 26–27; Gaithersburg, MD: National Institutes of Standards and Technology; 1993. [Google Scholar]

- 7.Algeo MEA, Hopp TH. Form Error Models of the NIST Algorithm Testing System. National Institute of Standards and Technology; Gaithersburg, MD: Jan, 1992. (NISTIR 4740). [Google Scholar]

- 8.Apostol TM. Calculus. Second Edition. II. John Wiley & Sons, Inc; New York: 1969. pp. 314–318. [Google Scholar]

- 9.Golub GH, van Loan CF. Matrix Computations. The Johns Hopkins University Press; Baltimore, MD: 1983. [Google Scholar]

- 10.Stewart GW. Matrix Computations. Academic Press Inc; Orlando, FL: 1973. p. 320. [Google Scholar]

- 11.Nash JC. Compact Numerical Methods for Computers: Linear Algebra and Function Minimisation. Adam Hilger, Ltd; Bristol, England: 1979. [Google Scholar]

- 12.Moré JJ. In: Dold, Eckmann, editors. The Levenberg-Marquardt Algorithm: Implementation and Theory; Numerical Analysis: Proc Biennial Conf on Numerical Analysis; Dundee, Scotland. 1977. [Google Scholar]