Abstract

Decisions take time, and as a rule more difficult decisions take more time. But this only raises the question of what consumes the time. For decisions informed by a sequence of samples of evidence, the answer is straightforward: more samples are available with more time. Indeed the speed and accuracy of such decisions are explained by the accumulation of evidence to a threshold or bound. However, the same framework seems to apply to decisions that are not obviously informed by sequences of evidence samples. Here we proffer the hypothesis that the sequential character of such tasks involves retrieval of evidence from memory. We explore this hypothesis by focusing on value-based decisions and argue that mnemonic processes can account for regularities in choice and decision time. We speculate on the neural mechanisms that link sampling of evidence from memory to circuits that represent the accumulated evidence bearing on a choice. We propose that memory processes may contribute to a wider class of decisions that conform to the regularities of choice-reaction time predicted by the sequential sampling framework.

Introduction

Most decisions necessitate deliberation over samples of evidence, leading to commitment to a proposition. Often the deliberation takes the form of integration or accumulation, and the commitment is simply a threshold applied to the neural representation of cumulative evidence, a scheme generically termed sequential sampling with optional stopping. This simple idea explains the tradeoff between decision speed and accuracy, as well as a variety of other regularities in perceptual decisions, such as confidence (Gold and Shadlen, 2007; Kiani et al., 2014; Smith and Ratcliff, 2004).

For some perceptual decisions, such as the direction of motion of dots on a screen, the source of the evidence that is integrated is well established: a stream of noisy data (moving dots) represented by neurons in the visual cortex. However, many decisions involve more complex evaluation of preferences, reward, or memories. Interestingly, many such decisions also conform to the regularities of sequential sampling models (Bogacz and Gurney, 2007; Krajbich et al., 2010; Krajbich et al., 2012; Krajbich and Rangel, 2011; Polanía et al., 2014; Ratcliff, 1978; Wiecki and Frank, 2013). Yet, for these decisions, the nature of the evidence samples is mysterious. This is especially apparent in decisions presumed to rest on internal evidence about the options, such as their value. In that case, one must ask: what constitutes the samples of evidence about value, and why would they be accumulated?

We propose that in many value-based decisions, samples are derived by querying memory for past experiences and by leveraging memory for the past to engage in prospective reasoning processes to provide evidence to inform the decision. The central hypothesis is that sequential memory retrieval enters decision making in the same way that motion transduction provides the information for integration in association areas toward a perceptual decision.

Here we will review the evidence supporting this hypothesis. We first review existing data regarding the accuracy and timing of perceptual decisions, and then value-based decisions. Next, we review existing evidence pointing to a role for memory in value-based decisions in general. Finally, we discuss a working framework for neurobiological mechanisms supporting circuit-level interactions by which sampled evidence from memory can influence value-based decisions and actions. Our speculations are at most rudimentary, but they begin to expose the sequential character of the operation and suggest putative neural mechanisms.

Evidence accumulation in perceptual decisions

The speed and accuracy of some perceptual decisions suggest that a decision is made when an accumulation of evidence reaches a threshold level in support of one of the alternatives (Gold and Shadlen, 2007; Smith and Ratcliff, 2004). A well-studied example solicits a binary decision about the net direction of dynamic random dots (Figure 1). The task itself must be solved by integrating, as a function of space and time, low-level sensory information whose impact on sensory neurons is known.

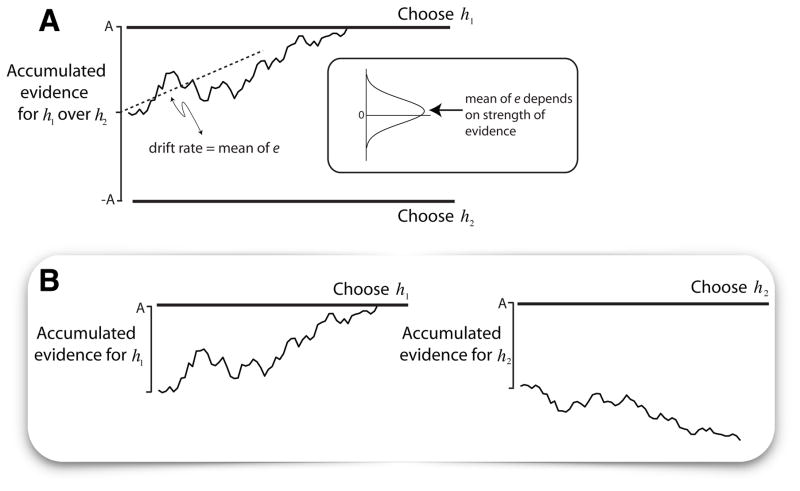

Figure 1. Bounded evidence accumulation framework explains the relationship between choice and deliberation time.

The decision is based on sequential samples of evidence until a stopping criterion is met, yielding a choice. A. Drift-diffusion with symmetric bounds applied to a binary decision. This is the simplest example of sequential sampling with optional stopping, equivalent to a biased random walk with symmetric absorbing bounds. The momentary evidence is regarded as a statistically stationary source of signal plus noise (Gaussian distribution; mean μ, standard deviation σ) sampled in infinitesimal steps, δt. The resultant drift-diffusion process (noisy trace) is a decision variable that terminates at ±A (the bounds), which stop the process. If the process terminates at the upper or the lower bound, the choice is for h1 or h2, respectively. B. Competing accumulators. The process is viewed as a race between two or more processes, each representing the accumulation of evidence for one of the choice alternatives. The architecture is more consistent with neural processes and has a natural extension to decisions between N>2 alternatives. The process in A is a special case, in which evidence for h1 equals evidence against h2 (e.g., Gaussian distributions with opposite means and perfectly anticorrelated noise). (reprinted from Gold & Shadlen 2007, with permission).
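The special case noted in the caption (a race whose two accumulators receive perfectly anticorrelated evidence) can be checked directly. The sketch below is illustrative only: the parameter names `mu`, `sigma`, `A`, and `dt` and their values are assumptions, not values from the cited studies. It feeds an identical noisy evidence stream to a symmetric-bound drift-diffusion process and to a two-accumulator race and confirms that both terminate with the same choice on the same step.

```python
import math
import random


def evidence_stream(mu, sigma, dt, seed):
    """Yield momentary evidence: signal mu*dt plus Gaussian noise with variance sigma**2 * dt."""
    rng = random.Random(seed)
    while True:
        yield mu * dt + rng.gauss(0.0, sigma * math.sqrt(dt))


def drift_diffusion(stream, A, max_steps=100_000):
    """Panel A: one decision variable with absorbing bounds at +A (h1) and -A (h2)."""
    x = 0.0
    for step, e in enumerate(stream, start=1):
        x += e
        if x >= A:
            return "h1", step
        if x <= -A:
            return "h2", step
        if step >= max_steps:
            return None, step


def race(stream, A, max_steps=100_000):
    """Panel B: two accumulators race to a common bound A.

    The evidence for h2 is exactly the negative of the evidence for h1,
    the special case in which the race reduces to panel A.
    """
    x1 = x2 = 0.0
    for step, e in enumerate(stream, start=1):
        x1 += e   # accumulated evidence for h1
        x2 -= e   # accumulated evidence for h2 (perfectly anticorrelated)
        if x1 >= A:
            return "h1", step
        if x2 >= A:
            return "h2", step
        if step >= max_steps:
            return None, step


if __name__ == "__main__":
    for seed in range(5):
        ddm = drift_diffusion(evidence_stream(0.5, 1.0, 0.001, seed), A=1.0)
        rc = race(evidence_stream(0.5, 1.0, 0.001, seed), A=1.0)
        print(seed, ddm, rc)
```

With uncorrelated or only partially anticorrelated inputs the two architectures would diverge, which is why the race is the more general form.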

In the random dot motion task, it is known that the firing rates of direction-selective neurons in the visual cortex (area MT/V5) exhibit a roughly linear increase (or decrease) as a function of the strength of motion in their preferred (or anti-preferred) direction. The average firing rate from a pool of neurons sharing similar direction preferences provides a time-varying signal that can be compared to an average of another, opposing pool. This difference can be positive or negative, reflecting the momentary evidence in favor of one direction and against the other. The idea is that neurons downstream of these sensory neurons represent in their firing rate a constant plus the accumulation, or time integral, of the noisy momentary evidence. This neural signal is referred to as a decision variable, because application of a simple threshold serves as a criterion to terminate the decision process and declare the choice (e.g., right or left). When a monkey indicates its decision with an eye movement, a neural representation of the decision variable is evident in the lateral intraparietal area (LIP) and other oculomotor association areas (for reviews see Gold and Shadlen, 2007; Shadlen and Kiani, 2013).
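The account in this paragraph can be sketched in a few lines. Everything quantitative here is a hypothetical placeholder: the baseline rate `R0`, the slope `K` relating pool firing rate to motion strength, the bin width, the bound, and the Gaussian approximation to pooled spike counts are assumptions chosen for illustration, not measurements from MT or LIP. The momentary evidence is the difference between the two pools' spike counts in each bin, the decision variable is its running sum, and a bound terminates the decision.

```python
import math
import random

R0 = 20.0     # hypothetical baseline rate of each MT pool (spikes/s)
K = 30.0      # hypothetical change in pool rate per unit motion strength (spikes/s)
DT = 0.01     # bin width (s)
BOUND = 10.0  # hypothetical bound on the accumulated spike-count difference


def pool_count(rate, rng):
    """Gaussian approximation to a Poisson-like pooled spike count in one bin."""
    mean = max(rate, 0.0) * DT
    return rng.gauss(mean, math.sqrt(mean))


def one_trial(coherence, rng, max_bins=10_000):
    """Return (chose_true_direction, decision_time_s) for one trial."""
    r_pref = R0 + K * coherence   # pool preferring the true direction: rate rises
    r_anti = R0 - K * coherence   # opposing pool: rate falls
    dv = 0.0
    for n in range(1, max_bins + 1):
        dv += pool_count(r_pref, rng) - pool_count(r_anti, rng)  # momentary evidence
        if abs(dv) >= BOUND:
            return dv > 0, n * DT
    return dv > 0, max_bins * DT


def summarize(coherence, n_trials=300, seed=1):
    """Proportion correct and mean decision time over simulated trials."""
    rng = random.Random(seed)
    trials = [one_trial(coherence, rng) for _ in range(n_trials)]
    accuracy = sum(correct for correct, _ in trials) / n_trials
    mean_dt = sum(t for _, t in trials) / n_trials
    return accuracy, mean_dt


if __name__ == "__main__":
    for coh in (0.032, 0.128, 0.512):
        acc, mdt = summarize(coh)
        print(f"coherence {coh:.3f}: accuracy {acc:.2f}, mean decision time {mdt:.2f} s")
```

Weaker motion yields both lower accuracy and longer decision times from the same bound, which is the signature regularity the framework explains.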

The explanatory power of bounded evidence accumulation and its consilience with neural mechanisms is remarkable. However, it is important to acknowledge certain convenient but contrived features of this task, which challenge simple application or extrapolation to other decisions. First, unlike most perceptual decisions, the motion task can only be solved by integration of a sequence of independent samples. Many perceptual tasks might benefit from integration of evidence across space, but the evidence does not arrive as a temporal stream (e.g., whether one segment of a curve is connected to another). One can entertain the notion that spatial integration takes time (i.e., that it is a dynamic process), but there is no reason, a priori, to believe that the process would involve the accumulation of sequential samples that arrive over many tenths of seconds (but see Zylberberg et al., 2010). Second, as we discuss next, for non-perceptual decisions the source of evidence is much more diverse; thus, not only is it unclear why such decisions should require temporal integration, but the very definition of momentary evidence, and where it comes from, is unknown.

Accuracy and timing of value-based decisions

Many decisions we encounter do not involve evidence about the state of a percept or a proposition but require a choice between options that differ in their potential value, for example, deciding which stock to purchase or which snack to choose. In a typical value-based decision task, participants are first asked to rate their preference for a series of items, such as snacks, and then asked to make a series of decisions between pairs of snacks. Unlike perceptual decisions, value-based decisions often do not pose a choice between an objectively correct versus incorrect option, nor do they require integration over time in any obvious way. Instead, the decision rests on subjective preferences and predictions about the subjective value of each option—which stock is predicted to be most lucrative, which snack item is likely to be more enjoyable—rather than the objective data bearing on a proposition about a sensory feature.

Yet, despite these differences, recent studies suggest that the process of comparing internal representations of subjective value also conforms to the bounded evidence accumulation framework (Chau et al., 2014; Hunt et al., 2012; Krajbich et al., 2010; Krajbich et al., 2012; Krajbich and Rangel, 2011; Polanía et al., 2014). Both accuracy and reaction time are explained by a common mechanism that accumulates evidence favoring the relative value of one item versus the other and terminates at a criterion level. Thus, there are intriguing parallels between perceptual and value-based decisions, both of which appear consistent with the bounded accumulation of samples of evidence (Figure 2). These parallels also raise a critical open question: why should any evidence require accumulation if there is a simple mapping between highly discriminable options and their subjective values? In perceptual decisions that support evidence-accumulation accounts, the stimulus itself supplies a stream of evidence, and even then integration over more than a tenth of a second is observed only for difficult (e.g., near-threshold) decisions.
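The claim that one mechanism explains both accuracy and reaction time can be made concrete with the standard closed-form expressions for a drift-diffusion process with bounds at ±A and an unbiased starting point. A common modeling assumption in value-based work, adopted here for illustration, is that drift is proportional to the rated value difference, μ = κΔV. Then P(choose item 1) = 1 / (1 + exp(−2μA/σ²)) and the mean decision time is (A/μ)·tanh(μA/σ²), which tends to A²/σ² as ΔV approaches 0. The names `kappa`, `A`, `sigma`, and `t_nd` (non-decision time) and their default values are hypothetical.

```python
import math


def ddm_predictions(delta_v, kappa=1.0, A=1.0, sigma=1.0, t_nd=0.3):
    """Choice probability and mean RT for a symmetric-bound drift-diffusion model.

    delta_v: value of item 1 minus value of item 2 (scaled to drift by kappa).
    Returns (probability of choosing item 1, mean reaction time in s).
    """
    mu = kappa * delta_v                      # drift rate
    if abs(mu) < 1e-12:                       # limit of both expressions as mu -> 0
        return 0.5, A**2 / sigma**2 + t_nd
    p_item1 = 1.0 / (1.0 + math.exp(-2.0 * mu * A / sigma**2))
    mean_dt = (A / mu) * math.tanh(mu * A / sigma**2)
    return p_item1, mean_dt + t_nd


if __name__ == "__main__":
    for dv in (0.0, 0.5, 1.0, 2.0, 3.0):
        p, rt = ddm_predictions(dv)
        print(f"dV={dv:.1f}  P(item 1)={p:.3f}  mean RT={rt:.3f} s")
```

A single parameter set thus predicts that near-indifferent choices are both closest to chance and slowest.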

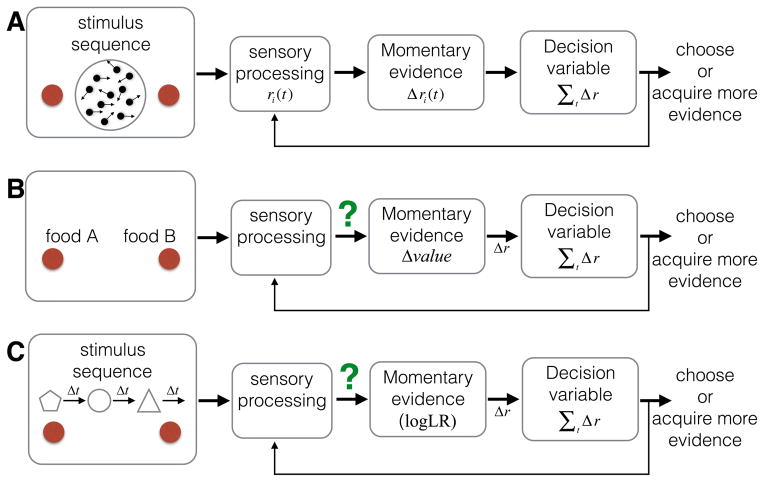

Figure 2. Similarities and differences among three types of decisions.

Each is displayed as a series of events in time (left to right): stimuli are presented on a display monitor; the subject makes a binary decision, and, when ready, communicates the decision with a response. A. Perceptual decision. The subject decides whether the net direction of a dynamic, random-dot display is leftward or rightward. B. Value-based decision. The subject decides which of two snack items she prefers. The subjective values associated with the individual items are ascertained separately before the experiment. C. Decision from symbolic associations. The subject decides whether the left or right option is more likely to be rewarded, based on a sequence of shapes that appear near the center of the display. Each shape represents a different amount of evidence favoring one or the other option. In both A and C, the display furnishes more evidence with time (i.e., sequential samples), whereas in B, all the evidence in the display is presented at once. In A, sensory processes give rise to momentary evidence, which can be accumulated in a decision variable. Both B and C require an additional step because the stimuli alone don’t contain the relevant information. We hypothesize that the stimuli elicit an association or memory retrieval process to derive their symbolic meaning or subjective value as momentary evidence.

For value-based decisions, it is unclear both what constitutes the sequence of samples of value (momentary evidence) and how (or why) these samples are accumulated into a decision variable. We hypothesize that the momentary evidence derives from memory, and that accuracy and reaction time in even simple value-based decisions conform to sequential sampling models because such decisions depend on the retrieval of relevant samples from memory to make predictions about the outcome of each option. These predictions bear on the relative value of the options and thus yield momentary evidence bearing on the decision. Crucially, as explained below, there are reasons to think that these retrievals must update a decision variable sequentially.
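A toy version of this hypothesis helps make it concrete. In the sketch below, each snack is represented only by a small, hypothetical store of remembered outcome values; on each step one memory is retrieved at random for each item, the difference between the two retrieved values serves as momentary evidence, and the running sum is compared with a bound. Retrieval with replacement and independence between steps are simplifying assumptions, not claims about how episodic retrieval actually works.

```python
import random

# Hypothetical remembered outcomes (e.g., enjoyment of past episodes with each snack).
MEMORIES = {
    "snack_high": [5, 6, 4, 7, 5, 3],   # mean 5
    "snack_mid":  [4, 5, 3, 6, 4, 2],   # mean 4
    "snack_low":  [1, 2, 3, 2, 1, 3],   # mean 2
}


def choose_by_retrieval(item_a, item_b, bound, rng, max_steps=1_000):
    """Accumulate differences between retrieved memories until |DV| reaches bound.

    Returns (chosen item, number of retrieval steps).
    """
    dv = 0.0
    for step in range(1, max_steps + 1):
        sample_a = rng.choice(MEMORIES[item_a])   # one retrieval per item
        sample_b = rng.choice(MEMORIES[item_b])
        dv += sample_a - sample_b                 # momentary evidence
        if dv >= bound:
            return item_a, step
        if dv <= -bound:
            return item_b, step
    return (item_a if dv > 0 else item_b), max_steps


def run(item_a, item_b, bound=6.0, n_trials=2_000, seed=0):
    """Proportion of trials choosing item_a and mean number of retrieval steps."""
    rng = random.Random(seed)
    results = [choose_by_retrieval(item_a, item_b, bound, rng) for _ in range(n_trials)]
    p_a = sum(choice == item_a for choice, _ in results) / n_trials
    mean_steps = sum(steps for _, steps in results) / n_trials
    return p_a, mean_steps


if __name__ == "__main__":
    print("easy pair:", run("snack_high", "snack_low"))
    print("hard pair:", run("snack_high", "snack_mid"))
```

Items whose remembered outcomes overlap more require more retrievals and produce more "errors" relative to their average value, mirroring the difficulty effects seen in value-based choice.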

Memory and value-based decisions

Building on past events to make predictions about possible future outcomes is, arguably, exactly what memory is for. Indeed, emerging evidence indicates that memory plays an essential role in at least some kinds of value-based decisions, particularly those that rely on the integration of information across distinct past events or those that depend on prospection about multi-step events leading to outcomes (Barron et al., 2013; Bornstein and Daw, 2013; Doll et al., 2015; Shohamy and Daw, 2015; Wimmer and Shohamy, 2012).

For example, having experienced that A is more valuable than B and, separately, that B is more valuable than C, animals and humans tend to choose A over C, even if they have never experienced this precise combination of choice options. Decisions on this transitive inference task (Dusek and Eichenbaum, 1997; Eichenbaum, 2000; Greene et al., 2006; Heckers et al., 2004; Preston et al., 2004) and others like it, such as acquired equivalence (Grice and Davis, 1960; Shohamy and Wagner, 2008) or sensory preconditioning (Brogden, 1939; Brogden, 1947; Wimmer and Shohamy, 2012), require the integration of distinct past episodes. Another example of the role of memory in integration comes from studies asking people to make choices about new, never-experienced foods, which are made up of combinations of familiar foods (e.g., “tea-jelly” or “avocado-milkshake”; Barron et al., 2013).
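The logical structure of transitive inference can be illustrated, very loosely, as a search that chains separately stored premises. The sketch below is not a model of hippocampal function; the premise list and the breadth-first search are illustrative assumptions. It shows only that a novel pair (e.g., B vs. D in a five-item series) can be resolved solely by retrieving and linking episodes that were learned separately.

```python
from collections import deque


def prefers(premises, x, y):
    """True if a chain of remembered premises (winner, loser) ranks x above y."""
    beats = {}
    for winner, loser in premises:
        beats.setdefault(winner, set()).add(loser)
    frontier, seen = deque([x]), {x}
    while frontier:                      # breadth-first search over remembered pairs
        item = frontier.popleft()
        for loser in beats.get(item, ()):
            if loser == y:
                return True
            if loser not in seen:
                seen.add(loser)
                frontier.append(loser)
    return False


# Premise pairs experienced separately during training (hypothetical series A > B > C > D > E).
PREMISES = [("A", "B"), ("B", "C"), ("C", "D"), ("D", "E")]

if __name__ == "__main__":
    print(prefers(PREMISES, "B", "D"))   # novel pair, never presented together
    print(prefers(PREMISES, "D", "B"))
```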

In all these tasks, decisions about novel choice options depend on the integration of past memories. Moreover, such choices involve memory mechanisms in the hippocampus (Figure 3) and the ventromedial prefrontal cortex (vmPFC) (Barron et al., 2013; Camille et al., 2011; Gerraty et al., 2014; Rudebeck and Murray, 2011; Zeithamova et al., 2012). Interestingly, patients with anterograde amnesia, who have difficulty forming new memories, also have difficulty imagining future events (Hassabis et al., 2007) and learning to predict rewarding outcomes (Foerde et al., 2013; Hopkins et al., 2004; Palombo et al., 2015). These observations suggest that in addition to its central role in episodic memory, the hippocampus also contributes to value-based decisions.

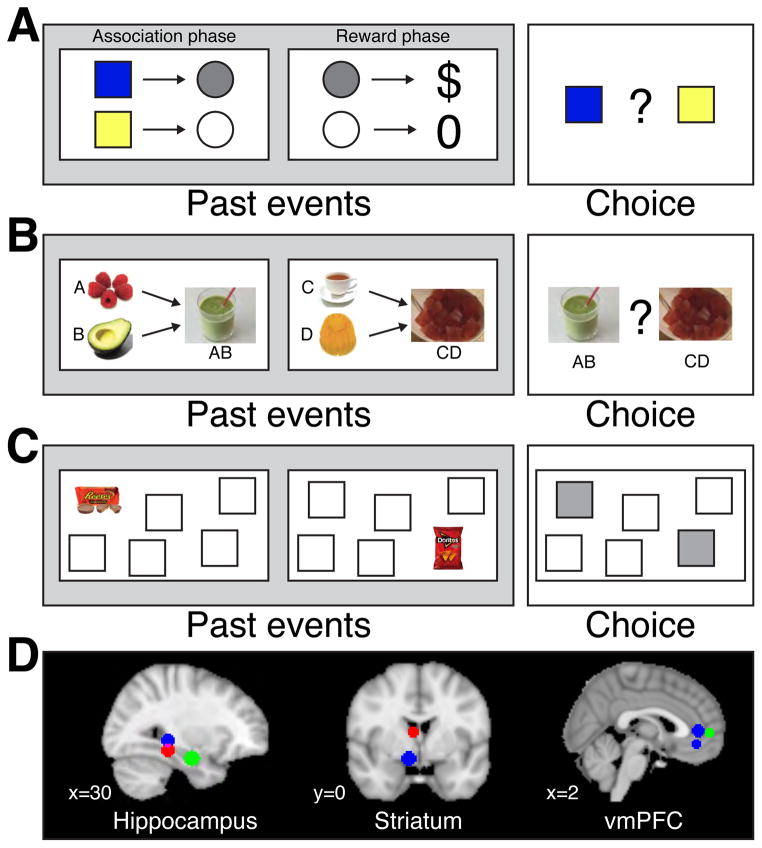

Figure 3. Memory contributions to value-based decisions are related to BOLD activity in the hippocampus, striatum, and vmPFC.

Three human fMRI studies assess brain regions involved in value-based decisions that draw on memory. A. Decisions based on transfer of reward value across related memories (following Wimmer and Shohamy, 2012). In this “Sensory Preconditioning” task, participants first learn to associate pairs of stimuli with each other (e.g., squares with circles of different colors), without any rewards (Association phase). Next, they learn that one stimulus (e.g., the grey circle) leads to monetary reward, while another (e.g., the white circle) leads to no reward (Reward phase). Finally, participants are asked to make a decision between two neutral stimuli, neither of which has been rewarded before (e.g., blue vs. yellow squares; Choice phase). Participants often prefer the blue square to the yellow square or other neutral and equally familiar stimuli, suggesting they have integrated the reward value with the blue square because of the memory associating the blue square with the rewarded grey circle. The tendency to show this choice behavior is correlated with BOLD activity in the hippocampus and functional connectivity between the hippocampus and the striatum. These sorts of tasks allow experimenters to measure spontaneous memory-based decisions, without soliciting an explicit memory or rewarding it. In actual experiments, all stimuli are controlled for familiarity, likability, value, etc. B. Decisions about new food combinations involve retrieval of memories (following Barron et al., 2013). In this task, foods are first evaluated separately (e.g., raspberries, avocado, tea, jelly). Then, participants learn to associate each food with random shapes (e.g., Asian characters; not shown here). Finally, participants are presented with a series of choices between two configurations of abstract shapes, which represent a new configuration of foods (e.g., raspberry-avocado shake vs. tea-jelly). These new choices, which involve retrieval and integration of two previously experienced stimuli, are correlated with activity in the hippocampus and in the vmPFC. C. Decisions about preferred snacks elicit retrieval of spatial memories (following Gluth et al., 2015). After participants rate their subjective preference for a series of snack items (not shown), they learn a series of associations between snacks and spatial locations on the screen. Some associations are trained twice as often as others, creating memories that are relatively strong or weak. Participants are then probed to make choices between two locations, choices that require retrieval of the memory for the location-snack association. Choice accuracy and reaction times conform to bounded evidence accumulation and are affected by the strength of the memory. Choice value on this task is correlated with BOLD activity in the hippocampus and in vmPFC. D. Overlay of regions in the hippocampus, striatum and vmPFC where memory and value signals were reported for the studies illustrated in A (red), B (green) and C (blue). Across studies, activation in the hippocampus, striatum and vmPFC is related to the use of memories to guide decisions. These common patterns raise questions about the neural mechanisms and pathways by which memories are used to influence value representations and decisions.

In addition to its role in memory, the hippocampus also plays a role in predicting outcomes, via simulation of future events. For example, when rats perform maze-navigation tasks, hippocampal neuronal activity at key decision points appears to encode future positions en route to food reward (Johnson and Redish, 2007; Olafsdottir et al., 2015; Pfeiffer and Foster, 2013). This implies that such decisions involve prospection about the expected outcome. This sort of neuronal “preplay” activity is associated with a pattern of hippocampal activity known as sharp wave ripples, a rapid sequence of firing that appears to reflect both reactivation of previous trajectories (Buzsaki, 1986, 2007; Foster and Wilson, 2006; Lee and Wilson, 2002), as well as memory-based prospection of future trajectories (Pfeiffer and Foster, 2013; Singer et al., 2013; see Yu and Frank, 2015 for review). Similar anticipatory representations have been shown with fMRI in humans during statistical learning tasks (Schapiro et al., 2012), as well as in reward-based decision tasks (Doll et al., 2015). In humans, the hippocampus appears to be involved in decisions that involve “episodic future thinking” (Addis et al., 2007; Hassabis et al., 2007; Schacter et al., 2007; Schacter et al., 2012), which includes imagining a specific future reward-related episode (Palombo et al., 2015; Peters and Buchel, 2010).

A recent study provided a more direct test of the link between memory retrieval, evidence accumulation, and value-based decisions. Gluth and colleagues (2015) studied value-based decisions that depended on associative memory between a valued snack item and a spatial location on the screen. These memory-guided decisions were associated with BOLD activity in the hippocampus and the vmPFC, suggesting cooperative engagement of memory and value regions in the brain. Moreover, reaction time and accuracy on this task conform to models of bounded evidence accumulation, thus providing evidence for a link between memory, value-based decisions and bounded evidence accumulation.

Together, these studies indicate that memory contributes to value-based decisions. Our hypothesis is that recall of a memory leads to the assignment of value, which in turn furnishes the momentary evidence for a value-based decision. But if memories are indeed retrieved to provide evidence towards a decision, why should the process unfold sequentially, and how does the process result in a change in a decision variable? We believe these questions are one and the same. We can begin to glimpse an answer by looking at a variation of a perceptual decision involving reasoning from a sequence of symbols. This is a convenient example because it shares features of the random dot motion task while inviting consideration of value-based associations and the use of memory retrieval as an update of a decision variable.

Linking memory to sequential sampling

In the “symbols” task (Kira et al., 2015), highly discriminable shapes appear and disappear in sequence until the decision maker (a rhesus monkey) terminates the decision with an eye movement to a choice target (Figure 2C). The shapes have symbolic meaning because each confers a unique weight of evidence bearing on which choice is rewarded. By design, these weights are spaced linearly in units of log likelihood ratio (logLR), and the monkeys appear to learn these assignments approximately. The choices and reaction times conform to the predictions of bounded evidence accumulation, and the firing rates of neurons in area LIP reflect the running accumulation of evidence for and against the choice target in the neuron’s response field. Moreover, as in the motion task, the decision process appears to terminate when a critical level of firing rate is achieved, suggesting application of a threshold (or bound) by downstream circuits (see Kira et al., 2015). The similarity to the random dot motion task is contrived by imposing a sequential structure on the task and by associating the outcome of the decision with an eye movement. This is why the representation of accumulating evidence (i.e., a decision variable) in LIP is analogous in the two tasks.
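Because each shape carries a fixed weight of evidence, the decision variable in this task has a simple form. Writing w_i for the logLR assigned to the i-th shape shown, and T_in and T_out for the targets inside and outside the neuron's response field (notation introduced here for illustration), and assuming natural logarithms, equal prior probability for the two targets, and shapes drawn independently given the rewarded target:

```latex
\mathrm{logLR}_n = \sum_{i=1}^{n} w_i ,
\qquad
w_i = \ln \frac{P(\text{shape}_i \mid T_{\mathrm{in}}\ \text{rewarded})}{P(\text{shape}_i \mid T_{\mathrm{out}}\ \text{rewarded})}

P(T_{\mathrm{in}}\ \text{rewarded} \mid \text{shape}_1, \ldots, \text{shape}_n)
  = \frac{1}{1 + e^{-\mathrm{logLR}_n}}
```

Under these assumptions, a bound at ±B on the cumulative logLR amounts to stopping when the belief in one target reaches 1/(1 + e^{-B}). If the weights are expressed in base-10 units, e is replaced by 10.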

The analogy breaks down, however, when contemplating the momentary evidence. In the symbols task, the momentary evidence derives from the identity of the shapes, which, like visual motion, undergo processing in extrastriate visual cortex (e.g., areas V4 and IT). This processing is presumably responsible for differentiating the pentagon, say, from the other shapes, but the momentary evidence we seek is a quantity suitable to update a decision variable bearing on the choice-target alternatives. This step is effectively a memory retrieval, in the sense that it must depend on a pre-learned association between the shape and its assigned weight. The monkey has learned to associate each shape with a positive or negative “weight of evidence” such that when a shape appears, it leads to an incremental change in the firing rate of LIP neurons that represent the cumulative logLR in favor of the target in its response field. The memory retrieval is, in essence, the update of a decision variable based on the associated cue.
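The retrieval step described here can be written as a table lookup followed by a rate increment. In this sketch the shape names, the weights (linearly spaced, as the task design requires, but with values chosen here for illustration), the gain relating logLR to firing rate, the baseline, and the bound are all hypothetical; only the structure (retrieve a learned weight, convert it to ΔFiringRate, add it to a persistent rate, stop at a bound) follows the text.

```python
# Hypothetical learned associations: shape -> weight of evidence (logLR favoring T_in).
SHAPE_WEIGHTS = {
    "pentagon": 0.9, "triangle": 0.7, "circle": 0.5, "square": 0.3,
    "star": -0.3, "cross": -0.5, "diamond": -0.7, "hexagon": -0.9,
}
BASELINE = 20.0     # hypothetical starting rate of an LIP-like neuron (spikes/s)
GAIN = 10.0         # hypothetical spikes/s per unit logLR
BOUND_LOGLR = 2.0   # hypothetical termination level in cumulative logLR


def delta_firing_rate(shape):
    """'Memory retrieval': look up the learned weight and convert it to a rate increment."""
    return GAIN * SHAPE_WEIGHTS[shape]


def run_trial(shapes):
    """Update a persistent firing rate shape by shape until it reaches a bound.

    Returns (choice or None, number of shapes used, final rate).
    """
    rate = BASELINE
    for n, shape in enumerate(shapes, start=1):
        rate += delta_firing_rate(shape)
        cumulative_loglr = (rate - BASELINE) / GAIN
        if cumulative_loglr >= BOUND_LOGLR:
            return "T_in", n, rate
        if cumulative_loglr <= -BOUND_LOGLR:
            return "T_out", n, rate
    return None, len(shapes), rate


if __name__ == "__main__":
    print(run_trial(["pentagon", "star", "triangle", "circle", "square"]))
    print(run_trial(["hexagon", "diamond", "cross"]))
```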

Viewed from this perspective, the symbols task (Figure 2C) supplies a conceptual bridge between perceptual and value-based decisions. It involves an update of a decision variable, not from an operation on the visual representation but from a learned association between a cue and the likelihood that a choice will be rewarded, as if instructing LIP neurons to increment or decrement their discharge by an amount (ΔFiringRate) associated with a shape. We are suggesting that a similar operation occurs in value-based decisions. Value-based decisions also involve choices between symbolic stimuli and also necessitate memory retrieval to update a decision variable. However, memory retrieval in the service of value-based decisions need not be restricted to simple associative memories but is likely to also involve episodic memory retrieval and prospection.

Putative neural mechanisms

We next speculate on the neural mechanisms whereby evidence sampled from memory might update a decision variable. We will attempt to draw an analogy between the symbols task and a value-based decision between snack items (Figure 4). For concreteness, we assume that the choice is to be communicated by a simple action, such as an eye movement to one or another target. In both tasks, the visual system leads to recognition of the objects, be it a shape or the two snack items, and in both cases, this information must lead to a ΔFiringRate instruction to update a decision variable, namely the cumulative evidence in favor of choosing the left or right choice target. Moreover, in both cases, the visual representations are insufficient for the decisions. Instead, the value associated with the objects must be retrieved.

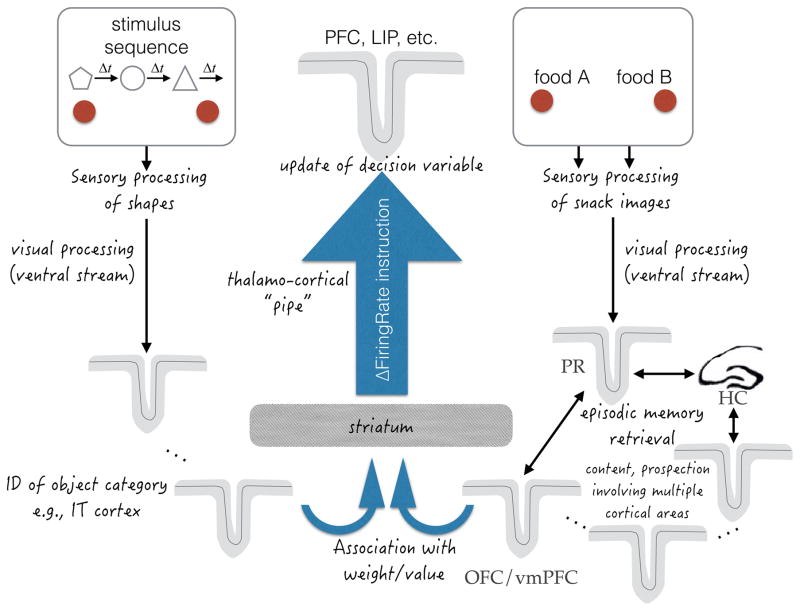

Figure 4. Putative neural mechanisms involved in updating a decision variable using memory.

The symbols task (left) and, per our hypothesis, the snacks task (right) use memory to update a decision variable. The diagram is intended to explain why the updating of a decision variable is likely to be sequential in general, when evidence is derived from memory. In both tasks, visual information is processed to identify the shapes and snack items. This information must lead to an update of a decision variable represented by neurons in associative cortex with the capacity to represent cumulative evidence. This includes area LIP when the choice is communicated by an eye movement, but there are many areas of association cortex that are likely to represent the evolving decision variable. The update of the decision variable is effectively an instruction to increment or decrement the firing rate of neurons that represent the choice targets (provisional plans to select one or the other) by an amount, ΔFiringRate. The ΔFiringRate instruction is informed by a memory retrieval process, which is likely to involve the striatum. In the symbols task this is an association between the shape that is currently displayed and a learned weight (logLR value; Fig. 2C). In the snacks task it is likely to involve episodic memory, which leads to a value association represented in the vmPFC/OFC. Notice that there are many possible sources of evidence in the symbols task and potentially many more in the snacks task. Yet there is limited access to the sites of the decision variable (thalamo-cortical “pipe”). Thus, access to this pipe is likely to be sequential, even when the evidence is not supplied as a sequence. Anatomical labels and arrows should be viewed as hypothetical and not necessarily direct. PR, perirhinal cortex; HC, hippocampus.
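The bottleneck argument in this caption can be illustrated with a deliberately simple simulation. Assume, hypothetically, several sources whose evidence is all ready at time zero (for example, different retrieved facts about a snack), but a pipe that admits only one ΔFiringRate update per tick, taken round-robin across sources. Decision time then scales with the number of updates needed to reach the bound, even though nothing about the evidence itself is sequential. The source names, update values, bound, and 50 ms tick are illustrative assumptions.

```python
from collections import deque


def decide_through_pipe(sources, bound, tick_ms=50):
    """Serially route parallel evidence into one decision variable.

    sources: dict mapping a source name to the ΔFiringRate updates it could
    supply; all updates are available at t = 0. Positive values favor 'left'.
    Returns (choice or None, elapsed time in ms).
    """
    queues = {name: deque(updates) for name, updates in sources.items()}
    dv, ticks = 0.0, 0
    while any(queues.values()):
        for queue in queues.values():    # round-robin access to the pipe
            if not queue:
                continue
            dv += queue.popleft()        # only one update passes per tick
            ticks += 1
            if abs(dv) >= bound:
                return ("left" if dv > 0 else "right"), ticks * tick_ms
    return None, ticks * tick_ms


# Hypothetical evidence sources bearing on a choice between two snacks.
STRONG = {"calories": [3.0, 3.0, 3.0], "last_meal": [2.0, 2.0, 2.0]}
WEAK = {"calories": [1.0, -0.5, 1.0, 1.0], "last_meal": [0.5, 1.0, 0.5, 1.0]}

if __name__ == "__main__":
    print(decide_through_pipe(STRONG, bound=5.0))
    print(decide_through_pipe(WEAK, bound=5.0))
```

Because the weak sources need more serial updates to reach the same bound, that decision takes longer even though every source was available at once.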

The striatum is likely to play a prominent role in both tasks. The striatum has been implicated in the association between objects and value in the service of action selection (Hikosaka et al., 2014). More broadly, the striatum is thought to play an important role in the integration of evidence to guide behavior (Bogacz and Gurney, 2007; Ding, 2015; Wiecki and Frank, 2013; Yu and Frank, 2015). Recent studies also highlight a role for the striatum in the retrieval of episodic memories, especially when they bear on a goal (Badre et al., 2014; Clos et al., 2015; Scimeca and Badre, 2012; Speer et al., 2014). The striatum is also well known to support the incremental updating of value representations that is essential for more habitual (rather than episodic) forms of learning (Daw et al., 2006; Eichenbaum and Cohen, 2001; Foerde and Shohamy, 2011; Glimcher and Fehr, 2013; Shohamy, 2011). Thus, the striatum is ideally positioned to funnel value-relevant information from memory to update cortical regions controlling decisions and actions (Kemp and Powell, 1971; Lee et al., 2015; Znamenskiy and Zador, 2013).
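The incremental updating of value representations attributed to the striatum is often formalized with a delta rule of the Rescorla–Wagner type, in which a cached value moves a fraction α of the way toward each new outcome. This textbook rule is offered as a stand-in for illustration, not as a model proposed in the studies cited; the learning rate and outcomes below are hypothetical. Unlike episodic retrieval, the cached value summarizes all past outcomes in a single number, weighting recent ones most heavily.

```python
def delta_rule(outcomes, alpha=0.1, v0=0.0):
    """Return the value estimate after each outcome under V <- V + alpha * (r - V)."""
    v = v0
    history = []
    for r in outcomes:
        prediction_error = r - v        # outcome minus current expectation
        v += alpha * prediction_error   # move a fraction alpha toward the outcome
        history.append(v)
    return history


if __name__ == "__main__":
    # Ten rewarded trials followed by ten unrewarded trials (hypothetical).
    values = delta_rule([1.0] * 10 + [0.0] * 10, alpha=0.2)
    print([round(v, 3) for v in values])
```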

For simple, highly learned associations, the striatum may well be the site where a visual representation is converted to a ΔFiringRate instruction. This is an attractive idea for the symbols task in monkeys, which requires many tens of thousands of trials to learn. For that matter, even the motion task could exploit the striatum to convert activity of direction-selective neurons to ΔFiringRate (Ding and Gold, 2012, 2013). In monkeys, simple associations between a food object and its rewarding value are thought to be mediated via an interaction between the rhinal cortices and the vmPFC/OFC (Clark et al., 2013; Eldridge et al., 2016), and this representation of value could exploit the striatum for conversion to a ΔFiringRate instruction.

However, in the snacks task, and in value-based decisions in general, we hypothesize a role for more sophisticated memory systems involved in the retrieval of episodic information and in using episodic memory to prospect about the future. Humans (and perhaps monkeys too) are likely to think about the food items, remembering a variety of features, guided perhaps by the particular comparison (Constantino and Daw, 2010). For example, they might recall calorie content, which, depending on satiety, might favor one of the items; or they might play out scenarios in which the items were last consumed or imagine the near future based on those past memories. Some of these memories might be prompted by the particular comparison (e.g., freshness, time of year) or the absence of contrast on another dimension (e.g., similar sweetness). Retrieval of such memories depends on the hippocampus, surrounding medial temporal lobe (MTL) cortex, and interactions between the MTL and prefrontal cortical mechanisms (Cohen et al., 1993; Davachi, 2006; Eichenbaum and Cohen, 2001; Gordon et al., 2014; Hutchinson et al., 2015; Hutchinson et al., 2014; King et al., 2015; Mitchell and Johnson, 2009; Rissman and Wagner, 2012; Rugg and Vilberg, 2013). Indeed, these circuits are well positioned to link memory to decisions. BOLD activity in these regions has been shown to relate to trial-by-trial variability in RT and memory accuracy, and to support cortical reinstatement as a putative source of evidence during decisions guided by episodic memory (e.g., Gordon et al., 2014; Kahn et al., 2004; Nyberg et al., 2000; Wheeler et al., 2000). Thus, memory retrieval can furnish information to guide many sorts of decisions, including decisions about memory itself (a topic we return to later). In the case of value-based decisions, we speculate that this process leads to the retrieval of information that bears on value.

The updating of value via retrieved memories is likely to involve the vmPFC and OFC. Although the precise contributions of these regions remain controversial, converging evidence suggests that both areas construct representations that guide value-based decisions (Camille et al., 2011; Jones et al., 2012; Kable and Glimcher, 2009; Padoa-Schioppa and Assad, 2006; Rangel et al., 2008; Rudebeck and Murray, 2011; Strait et al., 2014; Wilson et al., 2014). In human neuroimaging, BOLD activity in the vmPFC is related to subjective value and to choice behavior across many domains, including food, money, and social stimuli (Bartra et al., 2013; Clithero and Rangel, 2014; Hare et al., 2008; Hare et al., 2011; Kable and Glimcher, 2007; Krajbich et al., 2010; Krajbich et al., 2015). Of particular interest for considering the role of memory retrieval, it has been suggested that OFC represents not just reward value but also the identity of a specific reward (Klein-Flugge et al., 2013).

At the resolution of single neuron recordings in primates, there appear to be a variety of intermingled representations in the OFC, including representation of the item after it has been chosen, the relative value of the chosen item and the relative value of items offered (Padoa-Schioppa, 2011; Padoa-Schioppa and Assad, 2006). These last “offered value” neurons appear to represent momentary evidence (Conen and Padoa-Schioppa, 2015), because their firing rates modulate transiently and in a graded fashion as a function of the relative value. They do not represent a decision variable, however, because (i) their activity does not reflect the choice itself and (ii) the dynamics of the firing rate modulation appear to be identical regardless of the relative value (so they do not represent the integrated value). “Offered value” neurons could supply momentary evidence to an integrator, but they would require additional activations to provide a stream of independent samples. A related class of neurons in macaque vmPFC (area 14) appear to represent relative value of items bearing on choice, but they too have transient responses (Strait et al., 2014). Other “reward preference” neurons in OFC exhibit persistent (i.e., sustained) activity predictive of reward (e.g., Saez et al., 2015; Tremblay and Schultz, 1999). Their persistent activity does not suggest an accumulation of evidence toward valuation, but the outcome of such valuation, hence reward expectation (Schoenbaum and Roesch, 2005; Tremblay and Schultz, 2000). These neurons are also unlikely to supply a stream of independent samples of momentary evidence because the noise associated with persistent activity tends to be correlated over long time scales (Murray et al., 2014).
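The point about persistent activity can be quantified with a standard result on averaging autocorrelated noise. Modeling the noise as a stationary first-order autoregressive process with lag-1 correlation ρ (an assumption made here for illustration, not the model of the cited work), the variance of the mean of n samples is (σ²/n²)[n + 2 Σ_{k=1}^{n−1} (n − k) ρ^k]. Dividing σ² by this variance gives an effective number of independent samples: with ρ near 1, reading out a persistent signal many times yields little more information than reading it a few times.

```python
def variance_of_mean(n, rho, var=1.0):
    """Variance of the average of n samples from a stationary AR(1) process.

    rho: lag-1 autocorrelation (lag-k correlation is rho**k); var: per-sample variance.
    """
    total = float(n)                     # the n diagonal (same-sample) terms
    for k in range(1, n):
        total += 2 * (n - k) * rho**k    # covariance between samples k apart
    return var * total / n**2


def effective_samples(n, rho):
    """Number of independent samples that would give the same variance of the mean."""
    return 1.0 / variance_of_mean(n, rho, var=1.0)


if __name__ == "__main__":
    for rho in (0.0, 0.5, 0.9, 0.95):
        print(f"rho={rho:.2f}: 100 samples act like {effective_samples(100, rho):.1f} independent ones")
```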

The representation of the decision variable likely depends on the required response. When a value-based decision requires a saccadic choice, neurons in area LIP appear to reflect both the decision outcome and its difficulty (e.g., relative value) (Dorris and Glimcher, 2004; Platt and Glimcher, 1999; Rorie et al., 2010; Sugrue et al., 2005), that is, a quantity like a decision variable. As in perceptual decisions, this representation is not unique to LIP; it is also found in parietal and prefrontal areas associated with planning responses by other effectors (Andersen and Cui, 2009; Kubanek and Snyder, 2015; Leon and Shadlen, 1999; Schultz, 2000; Snyder et al., 1997). The critical point is not which association areas are involved in a particular task but that areas like LIP represent a decision variable because they connect many possible inputs (here viewed as sources of evidence) to a potential plan of action, that is, to the outcome of the decision.

More generally, the representation of any decision variable must reside in circuits with the capacity to hold, increment and decrement signals, that is, to represent an integral of discrete, independent samples of momentary evidence (i.e., ΔFiringRate). Activity in these circuits must not be corrupted or overwritten by incoming information, nor can it precipitate immediate action. These are the association areas (e.g., LIP) whose neurons exhibit persistent activity, the substrate of working memory, planning, directing attention and decision making (Funahashi et al., 1989; Fuster, 1973; Fuster and Alexander, 1971; Shadlen and Kiani, 2013). It is useful to think of these areas as directed toward some outcome or provisional plan (e.g., a possible eye movement in the case of LIP), but this does not exclude more abstract representations of relative value itself, independent of action, in the persistent activity of neurons in the vmPFC or elsewhere (e.g., Boorman and Rushworth, 2009; Chau et al., 2014; Hunt et al., 2012; Kolling et al., 2012; Padoa-Schioppa and Rustichini, 2014). Like the sensory areas involved in evidence acquisition, the circuits that establish provisional plans, strategies, rules and beliefs in propositions (Cui and Andersen, 2011; Duncan, 2010; Gnadt and Andersen, 1988; Li et al., 2015; Pastor-Bernier et al., 2012; Rushworth et al., 2012; Wallis et al., 2001), that is, the circuits that form decisions, are also arranged in parallel (Cisek, 2007; Cisek, 2012; Shadlen et al., 2008).
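The computational requirement (hold, increment, decrement, and commit at a threshold) can be written down in a few lines. This is a minimal, abstract sketch of sequential sampling with optional stopping, not a model of LIP circuitry: the Gaussian samples, the bound of 5, and the function names are illustrative assumptions.

```python
import random


def accumulate_to_bound(samples, bound):
    """Integrate signed samples of momentary evidence until the total reaches +bound or -bound.

    A positive sample increments the total, a negative one decrements it, and a zero
    sample holds it. Returns (choice, n_samples, trajectory), where choice is +1 or -1,
    or None if the samples run out before either bound is reached.
    """
    total = 0.0
    trajectory = [total]
    for n, delta in enumerate(samples, start=1):
        total += delta
        trajectory.append(total)
        if total >= bound:
            return +1, n, trajectory
        if total <= -bound:
            return -1, n, trajectory
    return None, len(trajectory) - 1, trajectory


def gaussian_samples(drift, sd, rng):
    """Endless stream of noisy evidence samples; the mean (drift) sets the difficulty."""
    while True:
        yield rng.gauss(drift, sd)


def simulate(drift, bound=5.0, sd=1.0, n_trials=2000, seed=1):
    """Return (proportion of +1 choices, mean number of samples) over simulated trials."""
    rng = random.Random(seed)
    n_plus, n_total = 0, 0
    for _ in range(n_trials):
        choice, n, _ = accumulate_to_bound(gaussian_samples(drift, sd, rng), bound)
        n_plus += choice == +1
        n_total += n
    return n_plus / n_trials, n_total / n_trials


if __name__ == "__main__":
    for drift in (0.05, 0.3):  # weak versus strong evidence favoring the +1 choice
        accuracy, mean_samples = simulate(drift)
        print(f"drift {drift}: P(+1) = {accuracy:.2f}, mean samples = {mean_samples:.1f}")
```

With these hypothetical settings, weaker evidence (smaller `drift`) yields both lower accuracy and more samples before commitment, the speed-accuracy regularity the framework is meant to capture.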

Parallel processing and sequential updating

What, then, accounts for the observation that many decisions appear to evolve sequentially from multiple samples, despite the likelihood that such samples can be obtained more or less simultaneously? We suggest that it is the connectivity from the many possible sources of evidence to any one site of a decision variable: the matchmaking, as it were, between sources of evidence and what the brain does with that evidence. Many possible sources of evidence could bear on a decision, yet it seems unlikely that each association area receives direct projections from all possible sites, perceptual or mnemonic, that could process such evidence. Rather, the diverse sources must affect clusters of neurons in the association cortex through a relatively small number of connections, which we construe as limited-bandwidth information conduits or thalamocortical “pipes”. We imagine these pipes to be arranged in parallel, in one-to-one correspondence with the association areas or with clusters of neurons within these areas. We employ this metaphor as a reminder that the anatomical substrates we mention here are only speculative.

Figure 4 illustrates one such pipe, which connects neurons in area LIP to the variety of inputs that could bear on a decision communicated with an eye movement. The key insight is that one pipe can collect input from many acquisition sources. For example, in the symbols task (Kira et al., 2015), the eight possible shapes, shown at four possible locations, must somehow affect the same neurons in LIP. Consequently, access must involve switching between sources, in this case between the ΔFiringRate instructions associated with each of the shapes. It is hard to imagine a mechanism that would allow these diverse instructions simultaneous access to the same LIP neurons. Related constraints have been proposed to explain the psychological refractory period and the broad necessity for serial processing in cognitive operations (Anderson et al., 2004; Zylberberg et al., 2011). Of course, the access problem need not be solved at the projection to LIP. Another cortical area (e.g., dorsolateral prefrontal cortex) could solve the problem and stream the solution to LIP, but the problem of access would merely shift to that area.
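The access problem can be illustrated with a toy model in which every cue is evaluated in parallel, so its ΔFiringRate instruction exists from the outset, yet a single channel passes only one instruction to the integrator per access. The random access order, the 50 ms access time, and the cue weights are hypothetical; the point is only that elapsed time scales with the number of accesses, not with when the cues became available.

```python
import random


def serial_pipe_decision(cue_weights, bound, rng, access_time_ms=50.0):
    """Integrate cues that are all available at once but reach the integrator one at a time.

    cue_weights: signed evidence carried by each cue (its ΔFiringRate instruction).
    The single channel grants access to one cue per step, in random order (hypothetical).
    Integration stops when the running total reaches +/- bound or the cues are exhausted.
    access_time_ms is a hypothetical duration per access.
    Returns (choice, n_accesses, elapsed_ms); choice is +1, -1, or 0 for a tie.
    """
    order = list(range(len(cue_weights)))
    rng.shuffle(order)  # order in which the sources win access to the pipe
    total = 0.0
    n_accesses = 0
    for idx in order:
        total += cue_weights[idx]
        n_accesses += 1
        if abs(total) >= bound:
            break
    choice = (total > 0) - (total < 0)  # sign of the accumulated evidence
    return choice, n_accesses, n_accesses * access_time_ms


if __name__ == "__main__":
    rng = random.Random(0)
    weights = [0.9, 0.3, -0.5, 0.7]  # hypothetical weights for four simultaneously shown cues
    for bound in (0.5, 1.0, float("inf")):
        print(bound, serial_pipe_decision(weights, bound, rng))
```

Replacing the loop with a single step that adds all weights at once would model the hypothetical alternative of unlimited, convergent access; the serial version is what makes decision time depend on how much integration is required.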

Resolving the access problem is likely to involve circuitry that can control the inputs to cortical areas that represent the decision variable. The different sources of information must be converted to a ΔFiringRate instruction by converging on structures that control the cortex. We suggested above that the striatum is a natural candidate for such conversion; its access to the cortex is via the thalamus, either directly (e.g., to prefrontal and inferotemporal areas; Kemp and Powell, 1971; Middleton and Strick, 1996) or indirectly, via cortico-cortical and subcortico-thalamo-cortical connections (e.g., superior colliculus to pulvinar to LIP; see also Haber and Calzavara, 2009).

Given that we are speculating about controlling the cortical circuit (transmitting an instruction) rather than transmitting information, the accessory thalamic nuclei (e.g., dorsal pulvinar) and the thalamic matrix (Jones, 2001) are likely to play a role. Like the intralaminar thalamus, these thalamic projections target supragranular cortex, especially layer 1. This is an attractive target because control signals ought to influence how the circuit processes information without contaminating the information being processed. For example, some classes of inhibitory neurons appear to modulate the activity of pyramidal cells in deeper layers, and input to the distal apical dendrites of deeper pyramidal cells can affect how these cells integrate other inputs throughout their dendritic tree (Jiang et al., 2013; Larkum, 2013a; Larkum, 2013b). Layer 1 is also where long-range cortico-cortical feedback projections terminate (Felleman and Van Essen, 1991; Rockland and Pandya, 1979). Thus, thalamic input with broad arborization could plausibly target specific circuits by intersecting with feedback and other inputs (Roth et al., 2016), including the persistent calcium signals in the apical dendrites of pyramidal cells that are activated by another source (e.g., visual input representing the location of a choice target). These are mere speculations, but they lend plausibility to the suggestion that a narrow-bandwidth channel could “instruct” cortical neurons to increment, decrement, or hold their current firing rate and thus represent a decision variable.

To summarize, we are suggesting that memory retrieval could lead to an update of a decision variable that guides value-based choice. This process must be at least partly sequential, because even if memories and decisions are evaluated in parallel, access to the sites of the decision variable is limited. This perspective can begin to explain why memory retrieval, recall and even prospective thought processes would contribute to decision time.

Conclusions and Future Directions

Our exposition exploits three types of simple, laboratory-based decisions. Two are sequential by design; two make use of memory. The symbols task, because it incorporates both qualities, offers a bridge between the more extensively studied motion and snacks tasks. It seems reasonable to wonder whether even the random-dot motion task makes use of associative memory. Even perceptual decisions might involve a more circuitous set of steps, with more in common with memory retrieval than previously imagined.

We do not mean to imply that the three tasks depicted in Figure 2 use identical mechanisms. For example, value-based decisions seem likely to exploit a race architecture differently from perceptual decisions about motion direction. In the latter case, evidence for (or against) rightward motion is evidence against (or for) leftward motion, so the races are strongly anticorrelated. (Indeed, they are often depicted on a single graph with an upper and a lower termination bound.) The races are likely to be more independent in value-based decisions, because a memory conferring evidence for or against one of the snack items may have little bearing on the evidence about the other item. This idea might be related to the observation that the object under scrutiny exerts greater influence in some value-based choices (Krajbich et al., 2010; Krajbich et al., 2012).
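The difference between anticorrelated and independent races can be sketched as two accumulators whose per-step noise has a tunable correlation `rho`. Gaussian increments, the shared bound, and the drift values are assumptions for illustration only. With `rho = -1` and opposite drifts, the two races are exact mirror images, which is why they can be drawn on one graph with two bounds; with `rho = 0`, each item's evidence accumulates on its own.

```python
import math
import random


def race(mu_a, mu_b, rho, bound=10.0, sigma=1.0, rng=None, max_steps=100_000):
    """Two accumulators race to a common bound; the first to reach it sets the choice.

    Each step adds mean evidence (mu_a, mu_b) plus Gaussian noise whose correlation
    between the two races is rho. rho = -1 with mu_b = -mu_a makes the races mirror
    images, equivalent to one accumulator with an upper and a lower bound.
    Returns (choice, n_steps); choice is "A", "B", or None if max_steps is reached.
    """
    if rng is None:
        rng = random.Random()
    a = b = 0.0
    for step in range(1, max_steps + 1):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        noise_b = rho * z1 + math.sqrt(1.0 - rho ** 2) * z2  # corr(z1, noise_b) = rho
        a += mu_a + sigma * z1
        b += mu_b + sigma * noise_b
        if a >= bound or b >= bound:
            return ("A" if a >= b else "B"), step
    return None, max_steps


def summarize(mu_a, mu_b, rho, n_trials=2000, seed=0):
    """Proportion of A choices and mean number of steps over simulated trials."""
    rng = random.Random(seed)
    outcomes = [race(mu_a, mu_b, rho, rng=rng) for _ in range(n_trials)]
    p_a = sum(choice == "A" for choice, _ in outcomes) / n_trials
    mean_steps = sum(steps for _, steps in outcomes) / n_trials
    return p_a, mean_steps


if __name__ == "__main__":
    # Motion-like: evidence for A is evidence against B (anticorrelated races).
    print("motion-like", summarize(0.1, -0.1, rho=-1.0))
    # Value-like: each item has its own, largely independent, evidence stream.
    print("value-like ", summarize(0.3, 0.2, rho=0.0))
```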

We also do not mean to imply that all memory-based decisions use identical circuits. For example, some disconnection studies imply that retrieval of value can bypass the striatum (e.g., Clark et al., 2013; Eldridge et al., 2016). Further, specific subregions of OFC and vmPFC are likely to support distinct aspects of value-guided decisions and to connect with the MTL through distinct circuits. For example, some types of object-value learning appear to depend on the central part of the OFC (Rudebeck and Murray, 2011), an area that may be distinct from the frontal regions implicated in value representation in humans (Mackey and Petrides, 2010, 2014; Neubert et al., 2015). One speculative possibility is that these different circuits support two different kinds of decisions: those that involve retrieval of a specific, well-learned value association and those that involve integration of learned associations to support new decisions, inferences, and prospection.

Although our core argument is about how memory can be used to affect value-based decisions, it is interesting to speculate about how these ideas apply to other kinds of decisions. In particular, the constraints of the hypothesized circuits are relevant to any decision about evidence that comprises several sources that must compete for access to the same narrow-bandwidth “pipe”. This would apply even to perceptual decisions that involve sampling by means other than memory (e.g., attention). We are not suggesting that all decisions require sequential sampling. Many decisions are based on habits or on just one sample of evidence, and many others rely on parallel acquisition of multiple samples and rapid integration. The necessity for sequential processing arises when diverse sources of evidence bear on the same neural targets: the same decision variable or the same anatomical site. Even here, there are no doubt exceptions. Multimodal areas that combine disparate sources of evidence, such as vestibular and visual information (e.g., VIP or MST), would obviate the necessity for sequential processing, assuming these areas acquire their information in parallel.

Sequential sampling likely also plays a role in decisions pertaining to memory itself, e.g., the decision about whether an encountered cue is old or new, or whether a retrieval search was successful, an idea that goes back at least to Ratcliff (1990) and Raaijmakers and Shiffrin (1992). Ratcliff used random walk and diffusion models to explain response times in decisions about whether an item, presented among foils, was included in a previously memorized set. Interestingly, in Ratcliff’s view, as in ours, the sequential nature of the task lies in the formation of a decision variable (the mental comparisons between object features), not in the retrieval step itself. As with most proponents of random walk and diffusion models (e.g., Laming, 1968; Link, 1992), sequential processing is assumed, or inferred from long decision times. This is not to say that memory retrieval itself takes no time, but a single memory retrieval is accomplished very rapidly (possibly within the duration of a sharp-wave ripple, on the order of 100 ms; Buzsaki, 1986), so it is unlikely to account for long decision times.
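The logic of inferring sequential accumulation from decision times can be illustrated with the standard closed-form expressions for an unbiased drift-diffusion model with symmetric bounds at ±B, drift μ, and diffusion variance σ² per unit time: P(reach +B) = 1 / (1 + exp(-2μB/σ²)) and mean decision time = (B/μ)·tanh(μB/σ²). The sketch below evaluates them. It is a simplification: Ratcliff's recognition-memory model includes further parameters (e.g., trial-to-trial variability in drift) that are omitted here, and treating a weaker memory match as a smaller drift is our illustrative assumption.

```python
import math


def ddm_predictions(drift, bound, sigma=1.0):
    """Closed-form predictions of an unbiased drift-diffusion model.

    Evidence starts at 0 and diffuses with mean rate `drift` and variance sigma**2 per
    unit time until it reaches +bound or -bound. Returns (probability of reaching +bound,
    mean decision time). With drift > 0, reaching +bound is the correct response.
    """
    if drift == 0:
        return 0.5, bound ** 2 / sigma ** 2  # limit of the expressions below as drift -> 0
    k = drift * bound / sigma ** 2
    p_upper = 1.0 / (1.0 + math.exp(-2.0 * k))
    mean_dt = (bound / drift) * math.tanh(k)
    return p_upper, mean_dt


if __name__ == "__main__":
    for drift in (0.0, 0.5, 1.0, 2.0):  # smaller drift stands in for weaker evidence
        p, t = ddm_predictions(drift, bound=1.0)
        print(f"drift {drift:.1f}: P(correct) = {p:.3f}, mean decision time = {t:.3f}")
```

Weaker evidence produces both more errors and longer decision times, so long decision times alone are the kind of signature from which sequential accumulation has traditionally been inferred.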

The hypotheses outlined here are speculative, but they suggest several new avenues for empirical work. For example, there is evidence that memory retrieval supports value-based decisions when value depends explicitly on memory (Gluth et al., 2015), but the extent to which memory retrieval accounts for reaction times in decisions between already familiar items remains unknown. Future studies could test this by combining fMRI, modeling and behavior in typical value-based decision tasks (e.g., deciding between two familiar snacks). If sequential memory retrieval supports this process, we would predict that trial-by-trial BOLD activity, particularly in the hippocampus and perhaps also in the striatum, will account for variance in trial-by-trial reaction time and accuracy. This prediction could be tested in more physiological detail by recording from neurons in the hippocampus during value-based decisions. We would predict that putative physiological correlates of retrieval (e.g., sharp-wave ripple events; Yu and Frank, 2015) should be observed even during simple value-based decisions and should vary with reaction time.

Causal evidence for memory contributions to value-based decisions could be obtained by testing patients with hippocampal damage. There are few studies of value-based decisions in individuals with memory impairments. Nonetheless, several recent findings suggest such patients are impaired at reward-based learning and decisions (Foerde et al., 2013; Palombo et al., 2015). A critical prediction from our proposed framework is that patients with memory loss will display abnormalities in reaction time and accuracy of value-based decisions, even in tasks that do not depend overtly on memory retrieval. Similarly, our speculation that the striatum plays a key role in updating value predicts that patients with striatal dysfunction, such as Parkinson’s disease, should also display abnormalities in reaction time and accuracy of decisions. Patients with Parkinson’s disease are known to have impaired learning of value. Recent data suggest that they are also impaired at making decisions based on value, separate from learning, consistent with our hypothesis (Shiner et al., 2012; Smittenaar et al., 2012).

Beyond value-based decisions, a central prediction of our framework is that sequential sampling is needed for any decision that depends on the integration of multiple sources of evidence. For example, we predict that if the cues from the “symbols” task (Kira et al., 2015) were presented simultaneously rather than sequentially, the decision would still require sequential updating of the decision variable (reflected in long reaction times). Notably, experiments using a similar probabilistic task in humans do present the cues simultaneously, and analysis of choice data suggests that human participants integrate information across these simultaneously presented cues (Gluck et al., 2002; Meeter et al., 2006; Shohamy et al., 2004). We predict that reaction times in such tasks should increase with the amount of integration required, reflecting a process of sequential sampling.

The idea that memory and prospection guide value-based decisions invites reconsideration of other features of value-based decisions that contrast with perceptual decisions. Perceptual decisions are about a state of the world, whereas relative value is in the mind of the decider. If the evidence bearing on preference involves memory and prospection, then the values of items might change as one pits them against different items; moreover, the order in which memory is probed will affect which choice is made (Weber and Johnson, 2006; Weber et al., 2007). This implies that the notion of correct and incorrect is not only subjective but also constructed, and therefore influenced by how memories are encoded, represented, and retrieved. This idea might also offer insight into the phenomenon of stochastic preference with repeated exposures (Agranov and Ortoleva, forthcoming).
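Order dependence follows naturally from optional stopping. In the toy example below, each retrieved memory is assumed to contribute signed evidence (positive favors item A, negative favors item B), and deliberation stops at a bound; the memories, their values, and the bound are hypothetical. This is not Weber and Johnson's query-theory model, only an illustration of how the same set of memories, probed in a different order, can yield a different choice.

```python
def choose_by_probing(memories, bound):
    """Sum signed memory samples in retrieval order; commit once |total| reaches bound.

    Positive values favor item A, negative values favor item B (hypothetical coding).
    If the memories run out first, choose by the sign of the total (None for a tie).
    Returns (choice, n_memories_probed).
    """
    total = 0.0
    for n, value in enumerate(memories, start=1):
        total += value
        if total >= bound:
            return "A", n
        if total <= -bound:
            return "B", n
    if total == 0:
        return None, len(memories)
    return ("A" if total > 0 else "B"), len(memories)


if __name__ == "__main__":
    memories = [0.6, 0.5, -0.4, -0.4, -0.4]  # hypothetical memories bearing on the choice
    print(choose_by_probing(memories, bound=1.0))                  # -> ('A', 2)
    print(choose_by_probing(list(reversed(memories)), bound=1.0))  # -> ('B', 3)
```

The forward order commits to A even though the full set of memories sums slightly in favor of B.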

The use of memory might help explain a peculiar feature of decisions between two high-value items, for which the rational choice is to take either without wasting time on deliberation, although deciders may nonetheless deliberate. To wit, memory and prospection have the potential to introduce new dimensions of comparison that did not affect an initial assessment of value (e.g., via auction). Finally, from this perspective, the rule for terminating a decision might be more complicated, involving an assessment of whether further deliberation is likely to yield a result that outperforms the current bias. This formulation is related to the application of dynamic programming to establish termination criteria in simple perceptual decisions (Drugowitsch et al., 2012; Rao, 2010). The difference is that for perceptual decisions the approach yields an optimal policy, such as collapsing decision bounds, given the desiderata (Drugowitsch et al., 2012), whereas in value-based decisions the value of future deliberation might be estimated approximately, on the fly.
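One way to approximate the value of further deliberation on the fly is a myopic, one-step value-of-information rule: continue only if the expected improvement from one more sample exceeds its cost. The sketch below assumes a Gaussian belief about the value difference d = v_A - v_B, Gaussian noise on each sample, and a fixed cost per sample; all of these, and the function names, are our assumptions. It is not the dynamic-programming solution of Drugowitsch et al. (2012), which optimizes over all future samples rather than just the next one.

```python
import math


def normal_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def normal_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def expected_max_with_zero(mean, var):
    """E[max(X, 0)] for X ~ Normal(mean, var)."""
    if var <= 0:
        return max(mean, 0.0)
    sd = math.sqrt(var)
    z = mean / sd
    return mean * normal_cdf(z) + sd * normal_pdf(z)


def value_of_one_more_sample(mean, var, noise_var):
    """Myopic value of information about d = v_A - v_B.

    Belief: d ~ Normal(mean, var). Choosing now earns max(mean, 0) relative to taking B.
    One more sample x ~ Normal(d, noise_var) moves the posterior mean by a Normal amount
    with variance var - posterior_var, so the expected payoff after sampling is
    E[max(new_mean, 0)].
    """
    if var <= 0:
        return 0.0  # no remaining uncertainty, so nothing to gain
    posterior_var = 1.0 / (1.0 / var + 1.0 / noise_var)
    spread = var - posterior_var  # variance of the predicted change in the belief mean
    return expected_max_with_zero(mean, spread) - max(mean, 0.0)


def keep_deliberating(mean, var, noise_var, cost_per_sample):
    """Continue sampling only if the expected gain from one more sample exceeds its cost."""
    return value_of_one_more_sample(mean, var, noise_var) > cost_per_sample


if __name__ == "__main__":
    for mean in (0.0, 0.5, 2.0):
        voi = value_of_one_more_sample(mean, var=1.0, noise_var=1.0)
        print(f"belief mean {mean}: value of one more sample = {voi:.4f}")
```

In this toy rule the worth of another sample depends on how uncertain the decider remains about the value difference, not on how valuable the items are. If memory and prospection keep introducing new dimensions of comparison, and thus keep that uncertainty high, the rule will favor further deliberation even between two high-value items.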

In summary, the hypothesis we propose here is that many value-based decisions involve sampling of value-relevant evidence from memory to inform the decision. We speculate on the reasons for the sequential nature of this process, proposing that circuit-level constraints prohibit parallel or convergent updating of the neural responses that represent the accumulation of evidence, that is, a decision variable. This constraint on how information can come to bear on the decision-making process should be considered independently of the mechanism of parallel memory retrieval itself, especially its dynamics. That said, conceiving of memory retrieval, at the neural level, as a process that updates a decision variable might guide understanding of memory retrieval in its own right.

Acknowledgments

We thank Akram Bakkour for helpful discussion of ideas, Rui Costa, Peter Strick, Yael Niv, Matthew Rushworth and Daniel Salzman for advice and discussion, and Naomi Odean, Lucy Owen, Mehdi Sanayei, NaYoung So, and Ariel Zylberberg for feedback on an earlier draft of the manuscript. The collaboration is supported by the McKnight Foundation Memory Disorders Award. The work is also supported by grants from NIDA, NSF and the Templeton Foundation (to DS); HHMI, HFSP and NEI (to MNS).

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Addis DR, Wong AT, Schacter DL. Remembering the past and imagining the future: common and distinct neural substrates during event construction and elaboration. Neuropsychologia. 2007;45:1363–1377. doi: 10.1016/j.neuropsychologia.2006.10.016.

- Agranov M, Ortoleva P. Stochastic Choice and Preferences for Randomization. JPE. forthcoming.

- Andersen RA, Cui H. Intention, action planning, and decision making in parietal-frontal circuits. Neuron. 2009;63:568–583. doi: 10.1016/j.neuron.2009.08.028.

- Anderson JR, Bothell D, Byrne MD, Douglass S, Lebiere C, Qin Y. An Integrated Theory of the Mind. Psychological Review. 2004:1036–1060. doi: 10.1037/0033-295X.111.4.1036.

- Badre D, Lebrecht S, Pagliaccio D, Long NM, Scimeca JM. Ventral striatum and the evaluation of memory retrieval strategies. J Cogn Neurosci. 2014;26:1928–1948. doi: 10.1162/jocn_a_00596.

- Barron HC, Dolan RJ, Behrens TEJ. Online evaluation of novel choices by simultaneous representation of multiple memories. Nature neuroscience. 2013;16:1492–1498. doi: 10.1038/nn.3515.

- Bartra O, McGuire JT, Kable JW. The valuation system: a coordinate-based meta-analysis of BOLD fMRI experiments examining neural correlates of subjective value. Neuroimage. 2013;76:412–427. doi: 10.1016/j.neuroimage.2013.02.063.

- Bogacz R, Gurney K. The basal ganglia and cortex implement optimal decision making between alternative actions. Neural computation. 2007;19:442–477. doi: 10.1162/neco.2007.19.2.442.

- Boorman ED, Rushworth MF. Conceptual representation and the making of new decisions. Neuron. 2009;63:721–723. doi: 10.1016/j.neuron.2009.09.014.

- Bornstein AM, Daw ND. Cortical and hippocampal correlates of deliberation during model-based decisions for rewards in humans. PLoS Comput Biol. 2013;9:e1003387. doi: 10.1371/journal.pcbi.1003387.

- Brogden W. Sensory pre-conditioning. Journal of Experimental Psychology. 1939;25:323. doi: 10.1037/h0058465.

- Brogden WJ. Sensory pre-conditioning of human subjects. J Exp Psychol. 1947;37:527–539. doi: 10.1037/h0058465.

- Buzsaki G. Hippocampal sharp waves: their origin and significance. Brain research. 1986;398:242–252. doi: 10.1016/0006-8993(86)91483-6.

- Buzsaki G. The structure of consciousness. Nature. 2007;446:267. doi: 10.1038/446267a.

- Camille N, Griffiths CA, Vo K, Fellows LK, Kable JW. Ventromedial frontal lobe damage disrupts value maximization in humans. J Neurosci. 2011;31:7527–7532. doi: 10.1523/JNEUROSCI.6527-10.2011.

- Chau BK, Kolling N, Hunt LT, Walton ME, Rushworth MF. A neural mechanism underlying failure of optimal choice with multiple alternatives. Nat Neurosci. 2014;17:463–470. doi: 10.1038/nn.3649.

- Cisek P. Cortical mechanisms of action selection: the affordance competition hypothesis. Philos Trans R Soc Lond, B, Biol Sci. 2007:1585–1599. doi: 10.1098/rstb.2007.2054.

- Cisek P. Making decisions through a distributed consensus. Curr Opin Neurobiol. 2012:927–936. doi: 10.1016/j.conb.2012.05.007.

- Clark AM, Bouret S, Young AM, Murray EA, Richmond BJ. Interaction between orbital prefrontal and rhinal cortex is required for normal estimates of expected value. J Neurosci. 2013;33:1833–1845. doi: 10.1523/JNEUROSCI.3605-12.2013.

- Clithero JA, Rangel A. Informatic parcellation of the network involved in the computation of subjective value. Soc Cogn Affect Neurosci. 2014;9:1289–1302. doi: 10.1093/scan/nst106.

- Clos M, Schwarze U, Gluth S, Bunzeck N, Sommer T. Goal- and retrieval-dependent activity in the striatum during memory recognition. Neuropsychologia. 2015;72:1–11. doi: 10.1016/j.neuropsychologia.2015.04.011.

- Cohen NJ, Eichenbaum H. Memory, Amnesia, and the Hippocampal System. MIT Press; Cambridge, MA: 1993.

- Conen KE, Padoa-Schioppa C. Neuronal variability in orbitofrontal cortex during economic decisions. J Neurophysiol. 2015;114:1367–1381. doi: 10.1152/jn.00231.2015.

- Constantino SM, Daw ND. A closer look at choice. Nat Neurosci. 2010;13:1153–1154. doi: 10.1038/nn1010-1153.

- Cui H, Andersen RA. Different representations of potential and selected motor plans by distinct parietal areas. J Neurosci. 2011;31:18130–18136. doi: 10.1523/JNEUROSCI.6247-10.2011.

- Davachi L. Item, context and relational episodic encoding in humans. Curr Opin Neurobiol. 2006;16:693–700. doi: 10.1016/j.conb.2006.10.012.

- Daw ND, O’Doherty JP, Dayan P, Seymour B, Dolan RJ. Cortical substrates for exploratory decisions in humans. Nature. 2006;441:876–879. doi: 10.1038/nature04766.

- Ding L. Distinct dynamics of ramping activity in the frontal cortex and caudate nucleus in monkeys. J Neurophysiol. 2015;114:1850–1861. doi: 10.1152/jn.00395.2015.

- Ding L, Gold JI. Neural correlates of perceptual decision making before, during, and after decision commitment in monkey frontal eye field. Cereb Cortex. 2012;22:1052–1067. doi: 10.1093/cercor/bhr178.

- Ding L, Gold JI. The basal ganglia’s contributions to perceptual decision making. Neuron. 2013;79:640–649. doi: 10.1016/j.neuron.2013.07.042.

- Doll BB, Duncan KD, Simon DA, Shohamy D, Daw ND. Model-based choices involve prospective neural activity. Nature neuroscience. 2015. doi: 10.1038/nn.3981.

- Dorris MC, Glimcher PW. Activity in posterior parietal cortex is correlated with the relative subjective desirability of action. Neuron. 2004;44:365–378. doi: 10.1016/j.neuron.2004.09.009.

- Drugowitsch J, Moreno-Bote R, Churchland AK, Shadlen MN, Pouget A. The cost of accumulating evidence in perceptual decision making. J Neurosci. 2012;32:3612–3628. doi: 10.1523/JNEUROSCI.4010-11.2012.

- Duncan J. The multiple-demand (MD) system of the primate brain: mental programs for intelligent behaviour. Trends in cognitive sciences. 2010;14:172–179. doi: 10.1016/j.tics.2010.01.004.

- Dusek JA, Eichenbaum H. The hippocampus and memory for orderly stimulus relations. Proc Natl Acad Sci U S A. 1997;94:7109–7114. doi: 10.1073/pnas.94.13.7109.

- Eichenbaum H. A cortical-hippocampal system for declarative memory. Nat Rev Neurosci. 2000;1:41–50. doi: 10.1038/35036213.

- Eichenbaum H, Cohen NJ. From conditioning to conscious recollection: memory systems of the brain. New York: Oxford University Press; 2001.

- Eldridge MA, Lerchner W, Saunders RC, Kaneko H, Krausz KW, Gonzalez FJ, Ji B, Higuchi M, Minamimoto T, Richmond BJ. Chemogenetic disconnection of monkey orbitofrontal and rhinal cortex reversibly disrupts reward value. Nat Neurosci. 2016;19:37–39. doi: 10.1038/nn.4192.

- Felleman D, Van Essen D. Distributed hierarchical processing in the primate cerebral cortex. Cerebral Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a.

- Foerde K, Race E, Verfaellie M, Shohamy D. A role for the medial temporal lobe in feedback-driven learning: evidence from amnesia. Journal of Neuroscience. 2013;33:5698–5704. doi: 10.1523/JNEUROSCI.5217-12.2013.

- Foerde K, Shohamy D. The role of the basal ganglia in learning and memory: insight from Parkinson’s disease. Neurobiology of learning and memory. 2011;96:624–636. doi: 10.1016/j.nlm.2011.08.006.

- Foster DJ, Wilson MA. Reverse replay of behavioural sequences in hippocampal place cells during the awake state. Nature. 2006;440:680–683. doi: 10.1038/nature04587.

- Funahashi S, Bruce C, Goldman-Rakic P. Mnemonic coding of visual space in the monkey’s dorsolateral prefrontal cortex. J Neurophysiol. 1989;61:331–349. doi: 10.1152/jn.1989.61.2.331.

- Fuster JM. Unit activity in prefrontal cortex during delayed-response performance: neuronal correlates of transient memory. J Neurophysiol. 1973;36:61–78. doi: 10.1152/jn.1973.36.1.61.

- Fuster JM, Alexander GE. Neuron activity related to short-term memory. Science. 1971;173:652–654. doi: 10.1126/science.173.3997.652.

- Gerraty RT, Davidow JY, Wimmer GE, Kahn I, Shohamy D. Transfer of learning relates to intrinsic connectivity between hippocampus, ventromedial prefrontal cortex, and large-scale networks. J Neurosci. 2014;34:11297–11303. doi: 10.1523/JNEUROSCI.0185-14.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Glimcher PW, Fehr E. Neuroeconomics: Decision making and the brain. Academic Press; 2013. [Google Scholar]

- Gluck MA, Shohamy D, Myers C. How do people solve the “weather prediction” task?: individual variability in strategies for probabilistic category learning. Learning & memory. 2002;9:408–418. doi: 10.1101/lm.45202. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gluth S, Sommer T, Rieskamp J, Buchel C. Effective Connectivity between Hippocampus and Ventromedial Prefrontal Cortex Controls Preferential Choices from Memory. Neuron. 2015;86:1078–1090. doi: 10.1016/j.neuron.2015.04.023. [DOI] [PubMed] [Google Scholar]

- Gnadt JW, Andersen RA. Memory related motor planning activity in posterior parietal cortex of macaque. Experimental brain research. 1988;70:216–220. doi: 10.1007/BF00271862. [DOI] [PubMed] [Google Scholar]

- Gold JI, Shadlen MN. The neural basis of decision making. Annu Rev Neurosci. 2007;30:535–574. doi: 10.1146/annurev.neuro.29.051605.113038. [DOI] [PubMed] [Google Scholar]

- Gordon AM, Rissman J, Kiani R, Wagner AD. Cortical reinstatement mediates the relationship between content-specific encoding activity and subsequent recollection decisions. Cereb Cortex. 2014;24:3350–3364. doi: 10.1093/cercor/bht194. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Greene AJ, Gross WL, Elsinger CL, Rao SM. An FMRI analysis of the human hippocampus: inference, context, and task awareness. J Cogn Neurosci. 2006;18:1156–1173. doi: 10.1162/jocn.2006.18.7.1156. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Grice GR, Davis JD. Effect of concurrent responses on the evocation and generalization of the conditioned eyeblink. J Exp Psychol. 1960;59:391–395. doi: 10.1037/h0044981. [DOI] [PubMed] [Google Scholar]

- Haber SN, Calzavara R. The cortico-basal ganglia integrative network: the role of the thalamus. Brain Res Bull. 2009:69–74. doi: 10.1016/j.brainresbull.2008.09.013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hare T, O’doherty J, Camerer C, Schultz W, Rangel A. Dissociating the Role of the Orbitofrontal Cortex and the Striatum in the Computation of Goal Values and Prediction Errors. Journal of Neuroscience. 2008;28:5623. doi: 10.1523/JNEUROSCI.1309-08.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hare TA, Schultz W, Camerer CF, O'doherty JP, Rangel A. Transformation of stimulus value signals into motor commands during simple choice. Proceedings of the National Academy of Sciences. 2011;108:18120–18125. doi: 10.1073/pnas.1109322108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hassabis D, Kumaran D, Vann SD, Maguire EA. Patients with hippocampal amnesia cannot imagine new experiences. Proc Natl Acad Sci U S A. 2007;104:1726–1731. doi: 10.1073/pnas.0610561104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Heckers S, Zalesak M, Weiss AP, Ditman T, Titone D. Hippocampal activation during transitive inference in humans. Hippocampus. 2004;14:153–162. doi: 10.1002/hipo.10189. [DOI] [PubMed] [Google Scholar]

- Hikosaka O, Kim HF, Yasuda M, Yamamoto S. Basal ganglia circuits for reward value-guided behavior. Annu Rev Neurosci. 2014;37:289–306. doi: 10.1146/annurev-neuro-071013-013924. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hopkins RO, Myers CE, Shohamy D, Grossman S, Gluck M. Impaired probabilistic category learning in hypoxic subjects with hippocampal damage. Neuropsychologia. 2004;42:524–535. doi: 10.1016/j.neuropsychologia.2003.09.005.

- Hunt LT, Kolling N, Soltani A, Woolrich MW, Rushworth MF, Behrens TE. Mechanisms underlying cortical activity during value-guided choice. Nat Neurosci. 2012;15:470–476. doi: 10.1038/nn.3017.

- Hutchinson JB, Uncapher MR, Wagner AD. Increased functional connectivity between dorsal posterior parietal and ventral occipitotemporal cortex during uncertain memory decisions. Neurobiol Learn Mem. 2015;117:71–83. doi: 10.1016/j.nlm.2014.04.015.

- Hutchinson JB, Uncapher MR, Weiner KS, Bressler DW, Silver MA, Preston AR, Wagner AD. Functional heterogeneity in posterior parietal cortex across attention and episodic memory retrieval. Cereb Cortex. 2014;24:49–66. doi: 10.1093/cercor/bhs278.

- Jiang X, Wang G, Lee AJ, Stornetta RL, Zhu JJ. The organization of two new cortical interneuronal circuits. Nat Neurosci. 2013;16:210–218. doi: 10.1038/nn.3305.

- Johnson A, Redish AD. Neural ensembles in CA3 transiently encode paths forward of the animal at a decision point. J Neurosci. 2007;27:12176–12189. doi: 10.1523/JNEUROSCI.3761-07.2007.

- Jones EG. The thalamic matrix and thalamocortical synchrony. Trends Neurosci. 2001;24:595–601. doi: 10.1016/s0166-2236(00)01922-6.

- Jones JL, Esber GR, McDannald MA, Gruber AJ, Hernandez A, Mirenzi A, Schoenbaum G. Orbitofrontal cortex supports behavior and learning using inferred but not cached values. Science. 2012;338:953–956. doi: 10.1126/science.1227489.

- Kable JW, Glimcher PW. The neural correlates of subjective value during intertemporal choice. Nat Neurosci. 2007;10:1625–1633. doi: 10.1038/nn2007.

- Kable JW, Glimcher PW. The neurobiology of decision: consensus and controversy. Neuron. 2009;63:733–745. doi: 10.1016/j.neuron.2009.09.003.

- Kahn I, Davachi L, Wagner AD. Functional-neuroanatomic correlates of recollection: implications for models of recognition memory. J Neurosci. 2004;24:4172–4180. doi: 10.1523/JNEUROSCI.0624-04.2004.

- Kemp JM, Powell TP. The connexions of the striatum and globus pallidus: synthesis and speculation. Philos Trans R Soc Lond B Biol Sci. 1971;262:441–457. doi: 10.1098/rstb.1971.0106.

- Kiani R, Corthell L, Shadlen MN. Choice certainty is informed by both evidence and decision time. Neuron. 2014;84:1329–1342. doi: 10.1016/j.neuron.2014.12.015.

- King DR, de Chastelaine M, Elward RL, Wang TH, Rugg MD. Recollection-related increases in functional connectivity predict individual differences in memory accuracy. J Neurosci. 2015;35:1763–1772. doi: 10.1523/JNEUROSCI.3219-14.2015.

- Kira S, Yang T, Shadlen MN. A neural implementation of Wald’s sequential probability ratio test. Neuron. 2015;85:861–873. doi: 10.1016/j.neuron.2015.01.007.

- Klein-Flugge MC, Barron HC, Brodersen KH, Dolan RJ, Behrens TE. Segregated encoding of reward-identity and stimulus-reward associations in human orbitofrontal cortex. J Neurosci. 2013;33:3202–3211. doi: 10.1523/JNEUROSCI.2532-12.2013.

- Kolling N, Behrens TE, Mars RB, Rushworth MF. Neural mechanisms of foraging. Science. 2012;336:95–98. doi: 10.1126/science.1216930.

- Krajbich I, Armel C, Rangel A. Visual fixations and the computation and comparison of value in simple choice. Nat Neurosci. 2010;13:1292–1298. doi: 10.1038/nn.2635.

- Krajbich I, Bartling B, Hare T, Fehr E. Rethinking fast and slow based on a critique of reaction-time reverse inference. Nat Commun. 2015;6:7455. doi: 10.1038/ncomms8455.

- Krajbich I, Lu D, Camerer C, Rangel A. The attentional drift-diffusion model extends to simple purchasing decisions. Front Psychol. 2012;3:193. doi: 10.3389/fpsyg.2012.00193.

- Krajbich I, Rangel A. Multialternative drift-diffusion model predicts the relationship between visual fixations and choice in value-based decisions. Proc Natl Acad Sci U S A. 2011. doi: 10.1073/pnas.1101328108.

- Kubanek J, Snyder LH. Reward-based decision signals in parietal cortex are partially embodied. J Neurosci. 2015;35:4869–4881. doi: 10.1523/JNEUROSCI.4618-14.2015.

- Laming DRJ. Information Theory of Choice-Reaction Times. London: Academic Press; 1968.

- Larkum M. A cellular mechanism for cortical associations: an organizing principle for the cerebral cortex. Trends Neurosci. 2013a;36:141–151. doi: 10.1016/j.tins.2012.11.006.

- Larkum ME. The yin and yang of cortical layer 1. Nat Neurosci. 2013b;16:114–115. doi: 10.1038/nn.3317.

- Lee AK, Wilson MA. Memory of sequential experience in the hippocampus during slow wave sleep. Neuron. 2002;36:1183–1194. doi: 10.1016/s0896-6273(02)01096-6.

- Lee AM, Tai LH, Zador A, Wilbrecht L. Between the primate and ‘reptilian’ brain: Rodent models demonstrate the role of corticostriatal circuits in decision making. Neuroscience. 2015;296:66–74. doi: 10.1016/j.neuroscience.2014.12.042.

- Leon MI, Shadlen MN. Effect of expected reward magnitude on the response of neurons in the dorsolateral prefrontal cortex of the macaque. Neuron. 1999;24:415–425. doi: 10.1016/s0896-6273(00)80854-5.

- Li N, Chen TW, Guo ZV, Gerfen CR, Svoboda K. A motor cortex circuit for motor planning and movement. Nature. 2015;519:51–56. doi: 10.1038/nature14178.

- Link SW. The Wave Theory of Difference and Similarity. Hillsdale, NJ: Lawrence Erlbaum Associates; 1992.

- Mackey S, Petrides M. Quantitative demonstration of comparable architectonic areas within the ventromedial and lateral orbital frontal cortex in the human and the macaque monkey brains. Eur J Neurosci. 2010;32:1940–1950. doi: 10.1111/j.1460-9568.2010.07465.x.

- Mackey S, Petrides M. Architecture and morphology of the human ventromedial prefrontal cortex. Eur J Neurosci. 2014;40:2777–2796. doi: 10.1111/ejn.12654.

- Meeter M, Myers CE, Shohamy D, Hopkins RO, Gluck MA. Strategies in probabilistic categorization: results from a new way of analyzing performance. Learn Mem. 2006;13:230–239. doi: 10.1101/lm.43006.

- Middleton FA, Strick PL. The temporal lobe is a target of output from the basal ganglia. Proc Natl Acad Sci U S A. 1996;93:8683–8687. doi: 10.1073/pnas.93.16.8683.

- Mitchell KJ, Johnson MK. Source monitoring 15 years later: what have we learned from fMRI about the neural mechanisms of source memory? Psychol Bull. 2009;135:638–677. doi: 10.1037/a0015849.

- Murray JD, Bernacchia A, Freedman DJ, Romo R, Wallis JD, Cai X, Padoa-Schioppa C, Pasternak T, Seo H, Lee D, Wang XJ. A hierarchy of intrinsic timescales across primate cortex. Nat Neurosci. 2014;17:1661–1663. doi: 10.1038/nn.3862.

- Neubert FX, Mars RB, Sallet J, Rushworth MF. Connectivity reveals relationship of brain areas for reward-guided learning and decision making in human and monkey frontal cortex. Proc Natl Acad Sci U S A. 2015;112:E2695–2704. doi: 10.1073/pnas.1410767112.

- Nyberg L, Habib R, McIntosh AR, Tulving E. Reactivation of encoding-related brain activity during memory retrieval. Proc Natl Acad Sci U S A. 2000;97:11120–11124. doi: 10.1073/pnas.97.20.11120.

- Olafsdottir HF, Barry C, Saleem AB, Hassabis D, Spiers HJ. Hippocampal place cells construct reward related sequences through unexplored space. Elife. 2015;4:e06063. doi: 10.7554/eLife.06063.

- Padoa-Schioppa C. Neurobiology of economic choice: a good-based model. Annu Rev Neurosci. 2011;34:333–359. doi: 10.1146/annurev-neuro-061010-113648.

- Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- Padoa-Schioppa C, Rustichini A. Rational attention and adaptive coding: a puzzle and a solution. Am Econ Rev. 2014;104:507–513. doi: 10.1257/aer.104.5.507.

- Palombo DJ, Keane MM, Verfaellie M. The medial temporal lobes are critical for reward-based decision making under conditions that promote episodic future thinking. Hippocampus. 2015;25:345–353. doi: 10.1002/hipo.22376.

- Pastor-Bernier A, Tremblay E, Cisek P. Dorsal premotor cortex is involved in switching motor plans. Front Neuroeng. 2012;5:5. doi: 10.3389/fneng.2012.00005.

- Peters J, Buchel C. Episodic future thinking reduces reward delay discounting through an enhancement of prefrontal-mediotemporal interactions. Neuron. 2010;66:138–148. doi: 10.1016/j.neuron.2010.03.026.

- Pfeiffer BE, Foster DJ. Hippocampal place-cell sequences depict future paths to remembered goals. Nature. 2013;497:74–79. doi: 10.1038/nature12112.

- Platt ML, Glimcher PW. Neural correlates of decision variables in parietal cortex. Nature. 1999;400:233–238. doi: 10.1038/22268.

- Polanía R, Krajbich I, Grueschow M, Ruff CC. Neural oscillations and synchronization differentially support evidence accumulation in perceptual and value-based decision making. Neuron. 2014;82:709–720. doi: 10.1016/j.neuron.2014.03.014.

- Preston AR, Shrager Y, Dudukovic NM, Gabrieli JD. Hippocampal contribution to the novel use of relational information in declarative memory. Hippocampus. 2004;14:148–152. doi: 10.1002/hipo.20009.

- Raaijmakers JG, Shiffrin RM. Models for recall and recognition. Annu Rev Psychol. 1992;43:205–234. doi: 10.1146/annurev.ps.43.020192.001225.

- Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci. 2008;9:545–556. doi: 10.1038/nrn2357.

- Rao RPN. Decision making under uncertainty: a neural model based on partially observable Markov decision processes. Front Comput Neurosci. 2010. doi: 10.3389/fncom.2010.00146.

- Ratcliff R. A theory of memory retrieval. Psychol Rev. 1978;85:59–108.

- Ratcliff R, Clark SE, Shiffrin RM. List-strength effect: I. Data and discussion. J Exp Psychol Learn Mem Cogn. 1990:163–178.

- Rissman J, Wagner AD. Distributed representations in memory: insights from functional brain imaging. Annu Rev Psychol. 2012;63:101–128. doi: 10.1146/annurev-psych-120710-100344.

- Rockland KS, Pandya DN. Laminar origins and terminations of cortical connections of the occipital lobe in the rhesus monkey. Brain Res. 1979;179:3–20. doi: 10.1016/0006-8993(79)90485-2.

- Rorie AE, Gao J, McClelland JL, Newsome WT. Integration of sensory and reward information during perceptual decision-making in lateral intraparietal cortex (LIP) of the macaque monkey. PLoS ONE. 2010;5:e9308. doi: 10.1371/journal.pone.0009308.

- Roth MM, Dahmen JC, Muir DR, Imhof F, Martini FJ, Hofer SB. Thalamic nuclei convey diverse contextual information to layer 1 of visual cortex. Nat Neurosci. 2016;19:299–307. doi: 10.1038/nn.4197.

- Rudebeck PH, Murray EA. Dissociable effects of subtotal lesions within the macaque orbital prefrontal cortex on reward-guided behavior. J Neurosci. 2011;31:10569–10578. doi: 10.1523/JNEUROSCI.0091-11.2011.

- Rugg MD, Vilberg KL. Brain networks underlying episodic memory retrieval. Curr Opin Neurobiol. 2013;23:255–260. doi: 10.1016/j.conb.2012.11.005.