Abstract

We evaluated a brief multiple-stimulus without replacement (MSWO) preference assessment conducted in video format with four children with autism. Specifically, we compared the results of a video-based MSWO with those of a tangible MSWO. Toys identified as highly preferred (HP) in the video-based MSWO were also HP in the tangible MSWO for three of four participants, and the overall correlation between video-based and tangible MSWO results across participants was strong and statistically significant. Therefore, video-based MSWOs may be an accurate complement to tangible MSWOs for children with autism.

Keywords: Preference assessment, Technology, Autism

Effective reinforcement strategies are the cornerstone of early intensive behavioral intervention (EIBI) programs for children with autism. Because effective reinforcement is so important, it is critical that practitioners use strategies that accurately identify reinforcers. The multiple-stimulus without replacement (MSWO) preference assessment, developed by DeLeon and Iwata (1996), is one strategy practitioners may use to identify potential reinforcers. In an MSWO, multiple items are presented simultaneously in front of the student. After the student selects an item, he or she has an opportunity to play with or consume it, and that item is not returned to the array. The remaining items are then re-arranged, and the student is given another opportunity to make a selection. This process continues until the student has selected all items.
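The without-replacement logic described above can be sketched as a simple loop. This is a minimal illustration rather than a clinical protocol: the `choose` callback is a hypothetical stand-in for the student’s selection, and a random shuffle is our assumption for how the remaining items are re-arranged between trials.

```python
import random
from typing import Callable, List, Sequence


def run_mswo(items: Sequence[str],
             choose: Callable[[List[str]], str],
             rng: random.Random) -> List[str]:
    """Run one MSWO administration and return the items in selection order.

    `choose` is a hypothetical stand-in for the student: it receives the
    current array and returns the item selected on that trial.
    """
    remaining = list(items)
    rng.shuffle(remaining)                 # initial arrangement of the array
    selection_order: List[str] = []
    while remaining:                       # one trial per item still in the array
        picked = choose(list(remaining))   # student selects from the current array
        if picked not in remaining:
            raise ValueError(f"{picked!r} is not in the current array")
        selection_order.append(picked)     # brief access to the item would occur here
        remaining.remove(picked)           # without replacement: item leaves the array
        rng.shuffle(remaining)             # re-arrange the items that are left
    return selection_order


# Usage: a simulated student who always picks the item highest on a fixed list.
preference = ["puzzle", "ball", "book"]
order = run_mswo(["ball", "book", "puzzle"],
                 choose=lambda array: min(array, key=preference.index),
                 rng=random.Random(0))
print(order)  # ['puzzle', 'ball', 'book']
```

Because the simulated student’s choice depends only on which items remain, the selection order is the same no matter how the array is shuffled; with a real student, position effects are one reason the items are re-arranged.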

A recent review of preference assessment research by Kang et al. (2013) suggests that highly preferred (HP) items identified in MSWOs are likely to function as reinforcers. In addition to this predictive validity, MSWOs may take less time to complete than other types of preference assessments, such as the paired-stimulus preference assessment (Fisher et al. 1992), and they provide complete information about how a student’s preference for each item compares with his or her preference for the other items (Karsten et al. 2011). The brief MSWO (Carr et al. 2000) further reduced administration time. Because of this efficiency and its accuracy in identifying potential reinforcers, the brief MSWO is arguably an optimal form of preference assessment for most students in EIBI settings (see Karsten et al. 2011 for a decision-making model for selecting an appropriate form of preference assessment for an individual with autism).

Despite the advantages of the brief MSWO, researchers have also noted its limitations. Whereas a paired-stimulus preference assessment presents only two items at a time, a brief MSWO presents, at least initially, more than two items. Depending on the overall size of the array and the form of the stimuli (e.g., large toys or activities), administration may be difficult or impractical (Kang et al. 2013). To mitigate this issue, practitioners may depict stimuli in video format. Although this solution may seem logical, video-based MSWOs have not been systematically evaluated; the only published study to examine video-based preference assessments used a paired-stimulus format. Specifically, Snyder et al. (2012) compared the results of a tangible paired-stimulus preference assessment with those of a video-based paired-stimulus preference assessment with six participants. In the video-based assessment, participants were shown two videos, each played on a separate DVD player and each depicting an individual interacting with a tangible item. The two assessments yielded relatively strong correlations, meaning that items ranked as HP in the tangible paired-stimulus assessment were likely to be ranked as HP in the video-based assessment.

Peterson (2014) noted that video-based preference assessments allow practitioners to capture the active characteristics of each item or activity and to depict stimuli within their appropriate context. Given this benefit, and the convenience and prevalence of electronic devices in behavioral programming for children with autism (see Kagohara et al. 2013), video-based MSWOs may allow practitioners to mitigate drawbacks of the assessment (e.g., difficulty presenting large items or activities) while presenting stimuli on a commonly used electronic device (e.g., an iPad). Therefore, the purpose of this study was to extend Snyder et al. (2012) and Carr et al. (2000) by comparing the results of a brief MSWO conducted in video format (V-MSWO) with those of a brief MSWO conducted in tangible format (T-MSWO). Understanding the extent to which brief V-MSWO results correspond to T-MSWO results may provide preliminary information about whether a V-MSWO accurately identifies highly preferred toys for children with autism.

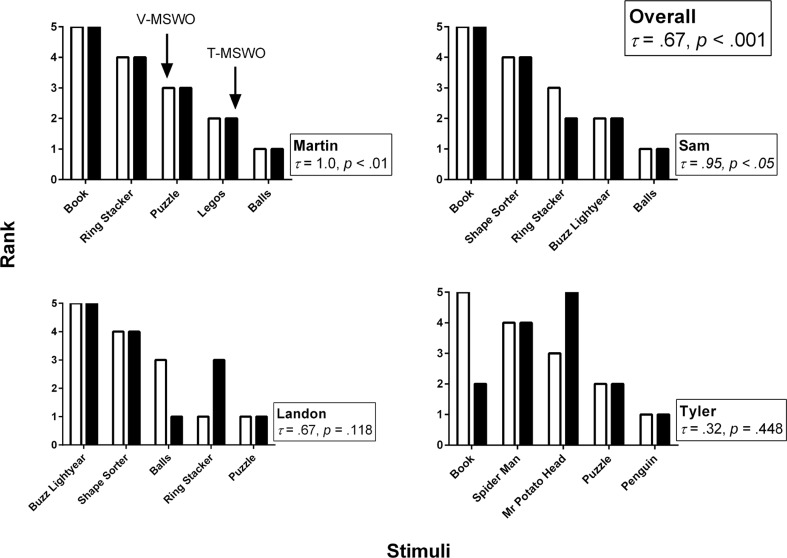

Preference assessments were conducted with four participants. For Martin, Sam, and Tyler, the HP toy identified in the V-MSWO was the same as the HP toy identified in the T-MSWO (see Fig. 1). Landon’s V-MSWO and T-MSWO each yielded two toys tied as HP, and one of them (the puzzle) was HP in both assessments. Using Kendall’s tau, we found strong and statistically significant correlations between V-MSWO and T-MSWO results for Martin (τ = 1.0, p < 0.01) and Sam (τ = 0.95, p < 0.05). Landon’s results were strongly correlated, but the correlation was not statistically significant (τ = 0.67, p = 0.118), and Tyler’s results showed a moderate, non-significant correlation (τ = 0.32, p = 0.448). Across participants, however, the overall correlation between assessments was strong and statistically significant (τ = 0.67, p < 0.001).

Fig. 1.

The results of the V-MSWO and T-MSWO assessments

The results of this study provide preliminary support that brief MSWOs conducted in an electronic, video-based format may accurately identify HP toys for young children with autism. For Martin, Sam, and Tyler, the HP toy identified in the V-MSWO was the same as the HP toy identified in the T-MSWO, and one of Landon’s two HP toys matched across assessments. The results also provide preliminary support that overall V-MSWO results may correspond to T-MSWO results: correlations between assessments were strong for three participants (Martin, Sam, and Landon), and the correlation across all participants was strong and statistically significant. Therefore, a V-MSWO may be an effective strategy for identifying preferred toys for children with autism.

Despite the strong overall correlation between V-MSWO and T-MSWO results, concordance between Tyler’s assessments was relatively low. This may have been due to a lack of pre-requisite skills. Clevenger and Graff (2005) reported that picture-to-object and object-to-picture matching skills may be necessary for accurate pictorial preference assessments; however, it is not clear whether these findings generalize to preference assessments in video format. The order of conditions may also have affected Tyler’s results, although condition order was randomized and sequence effects were not observed for the other three participants. Finally, momentary changes in preference may have been responsible for the differences between the two assessment formats.

When implementing brief V-MSWOs in practice, we make the following recommendations. First, practitioners should evaluate whether or not each student has the appropriate visual discrimination, picture-to-object, and object-to-picture matching skills. If the student cannot discriminate between videos or make an accurate selection, assessment results may not be representative of student preference. Second, we recommend practitioners create videos that capture the critical features of the toy with minimal distractions in order to increase the probability that each student attends to the toy’s relevant features. Third, we recommend practitioners routinely conduct preference assessments in order to account for changes in student preference that may occur over time.

Because the brief T-MSWO described by Carr et al. (2000) has been shown to accurately identify stimuli that may function as reinforcers, the V-MSWO should not be considered a replacement for it until the V-MSWO has been evaluated across additional participants and contexts, and in experiments that include a reinforcer assessment to determine whether HP toys identified through the V-MSWO actually function as reinforcers. Therefore, a final suggestion is that practitioners conduct both V-MSWOs and T-MSWOs (an already well-established assessment) to systematically evaluate whether the results are similar across assessments. If both assessments identify the same HP toys, the video-based assessment may be an appropriate complement to the T-MSWO for that particular student. Additional matches between assessments (e.g., for the second- and third-ranked toys) may further suggest that the V-MSWO is a suitable complement to the T-MSWO.
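The side-by-side check suggested above could be recorded with a small helper such as the following. It is a sketch under our own assumptions: each ranking is a dictionary mapping toy names to ranks (1 = HP, tied toys share a rank), an “HP match” means at least one toy is HP in both assessments (as with Landon’s puzzle), and a “same-rank match” means a toy received the identical rank in both. The function names and the toy rankings in the usage lines are hypothetical.

```python
from typing import Dict, List, Set


def hp_toys(ranks: Dict[str, int]) -> Set[str]:
    """Toys sharing the top rank (ties allowed, e.g., two toys both ranked 1)."""
    best = min(ranks.values())
    return {toy for toy, rank in ranks.items() if rank == best}


def compare_assessments(v_ranks: Dict[str, int],
                        t_ranks: Dict[str, int]) -> Dict[str, object]:
    """Summarize agreement between one student's V-MSWO and T-MSWO rankings."""
    if set(v_ranks) != set(t_ranks):
        raise ValueError("both assessments must rank the same toys")
    shared_hp = hp_toys(v_ranks) & hp_toys(t_ranks)
    # Toys placed at exactly the same rank in both formats.
    same_rank: List[str] = sorted(t for t in v_ranks if v_ranks[t] == t_ranks[t])
    return {"shared_hp": sorted(shared_hp),
            "hp_match": bool(shared_hp),
            "same_rank": same_rank}


# Usage with hypothetical rankings for one student.
v = {"puzzle": 1, "ball": 2, "book": 3, "car": 4, "drum": 5}
t = {"puzzle": 1, "ball": 3, "book": 2, "car": 4, "drum": 5}
result = compare_assessments(v, t)
print(result["hp_match"], result["same_rank"])  # True ['car', 'drum', 'puzzle']
```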

Method

We recruited four participants for this study: Landon (6 years), Tyler (12 years), Sam (4 years), and Martin (6 years), each of whom had received an autism diagnosis from an outside agency. All participants consistently scored within Level 3 of the Verbal Behavior Milestones Assessment and Placement Program (VB-MAPP; Sundberg 2008). Participants could reliably discriminate among five pictures and were proficient in picture-to-object and object-to-picture matching. Finally, all participants had the motor skills to touch a toy, touch a video of a toy on an iPad, and play with each toy.

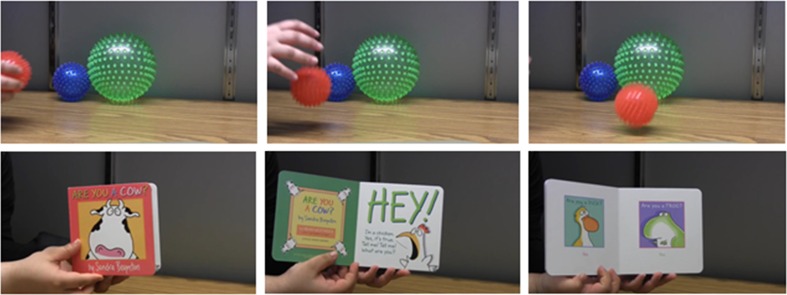

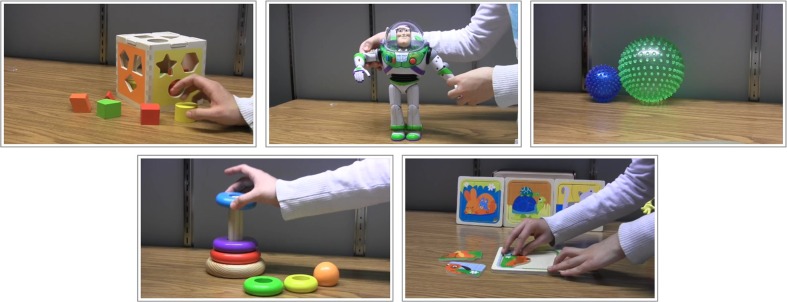

We conducted all research sessions at a local autism center in a small room with a table and two chairs. Before the study began, we interviewed each participant’s therapists to identify five toys for that participant’s preference assessments. We then recorded a video of each toy that depicted its critical features. For example, the ball video showed a hand bouncing the ball on a table, and the book video showed a hand turning each page. To increase the probability that participants attended to the relevant features of each video, the toy always remained the focus, and only the research assistant’s hands were visible. Videos were approximately 10 s long (see Fig. 2 for three screenshots from each of the ball and book videos). Next, we uploaded the videos to an Apple iPad (4th generation) and created a file in the Keynote application for each participant. In each file, we embedded videos of that participant’s toys, resized them to approximately 300 × 170 pixels, and arranged them in two rows (3 × 2; see Fig. 3).

Fig. 2.

Three screenshots from the videos depicting the ball (top row) and book (bottom row)

Fig. 3.

Videos embedded in Keynote

We exposed each participant to each of his five identified toys to ensure he was familiar with the toys’ relevant features. We then conducted three pairs of V-MSWO and T-MSWO assessments. Each pair consisted of one five-item, five-trial V-MSWO and one five-item, five-trial T-MSWO. Within each pair, we used a random number generator to determine which assessment format was conducted first.
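The randomization step can be sketched as an independent coin flip for each pair. The article states only that a random number generator was used; the 50/50 rule, the function name `condition_orders`, and the seeded `random.Random` instance are illustrative assumptions.

```python
import random
from typing import List, Tuple


def condition_orders(n_pairs: int, rng: random.Random) -> List[Tuple[str, str]]:
    """Randomly decide, for each pair, whether the V-MSWO or the T-MSWO runs first."""
    orders: List[Tuple[str, str]] = []
    for _ in range(n_pairs):
        if rng.random() < 0.5:             # simulated coin flip for this pair
            orders.append(("V-MSWO", "T-MSWO"))
        else:
            orders.append(("T-MSWO", "V-MSWO"))
    return orders


# Usage: schedule three pairs for one participant (seed fixed for reproducibility).
for pair_number, (first, second) in enumerate(condition_orders(3, random.Random(42)), start=1):
    print(f"Pair {pair_number}: {first} then {second}")
```

Drawing each pair independently means the same format can come first in all three pairs; a practitioner who wants the formats balanced would need a constrained schedule instead.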

We conducted the V-MSWO as follows. On the first trial, we presented the participant with five videos arranged in two rows (3 × 2) in Keynote on the iPad. All videos played simultaneously and looped continuously, and all physical toys were out of the participant’s sight. We said, “Touch the one you want,” and the participant had 10 s to make a selection. If the participant touched a video within 10 s of the instruction, we recorded the name of the corresponding toy; if he did not, we repeated the instruction. When the participant touched a video, we immediately gave him the corresponding toy. The participant had up to 30 s to play with the toy, and we ignored all of his attempts to interact with us. After 30 s elapsed or the participant finished playing with the toy (whichever happened first), we said, “My turn,” and took the toy back. We then deleted the chosen video and re-arranged the remaining four videos (2 × 2). The second trial, in which the participant chose among the four remaining videos, was otherwise identical to the first. This process continued for the third, fourth, and fifth trials, in which we arranged the remaining videos, in quasi-random order, in two rows (2 × 1), one row (two videos), and one row (one video), respectively.

The T-MSWO was conducted in the same way as the V-MSWO, except that we presented the toys themselves in a single semicircle in front of the participant, with the toys equidistant from one another.

After the third pair of preference assessments, we ranked the toys separately for each format. For each toy, we summed the number of trials on which it was available across the three assessments of that format (equivalently, the number of the trial on which it was selected in each assessment), and we ranked the toys from 1 (HP; lowest sum) to 5 (least preferred; highest sum). To assess the correlation between V-MSWO and T-MSWO rankings, we calculated Kendall’s tau for each participant. Finally, we computed an overall correlation between V-MSWO and T-MSWO rankings across participants to examine the extent to which assessment results corresponded.
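The ranking and correlation steps can be sketched in plain Python. This is an illustration under stated assumptions, not the authors’ analysis code: the selection orders are hypothetical, tied sums share the lowest rank they span (so two toys can both be HP, as in Landon’s assessments), and we use the tie-corrected tau-b variant because the article does not say which variant of Kendall’s tau was computed. A statistics package such as SciPy (`scipy.stats.kendalltau`) also reports p-values. The article does not state how the across-participant coefficient was pooled; passing every participant’s paired ranks to a single call is one possibility.

```python
from itertools import combinations
from math import sqrt
from typing import Dict, List, Sequence


def rank_by_trial_sums(sessions: List[List[str]]) -> Dict[str, int]:
    """Rank toys from one format's MSWO sessions (1 = HP).

    Each session lists toys in selection order; a toy selected on trial k was
    available for k trials. Sums are taken across sessions, the lowest sum gets
    rank 1, and tied sums share the lowest rank they span.
    """
    totals: Dict[str, int] = {}
    for order in sessions:
        for trial, toy in enumerate(order, start=1):
            totals[toy] = totals.get(toy, 0) + trial
    sorted_sums = sorted(totals.values())
    return {toy: sorted_sums.index(total) + 1 for toy, total in totals.items()}


def kendall_tau_b(x: Sequence[float], y: Sequence[float]) -> float:
    """Kendall's tau-b between two paired rankings (tie-corrected)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired rankings of equal length >= 2")
    concordant = discordant = ties_x_only = ties_y_only = 0
    for i, j in combinations(range(len(x)), 2):
        dx, dy = x[i] - x[j], y[i] - y[j]
        if dx == 0 and dy == 0:
            continue                        # tied in both rankings: ignored
        if dx == 0:
            ties_x_only += 1
        elif dy == 0:
            ties_y_only += 1
        elif dx * dy > 0:
            concordant += 1
        else:
            discordant += 1
    denom = sqrt((concordant + discordant + ties_x_only) *
                 (concordant + discordant + ties_y_only))
    if denom == 0:
        raise ValueError("tau-b is undefined when a ranking is entirely tied")
    return (concordant - discordant) / denom


# Usage with hypothetical selection orders (three sessions per format).
v_sessions = [["puzzle", "ball", "book", "car", "drum"]] * 3
t_sessions = [["puzzle", "book", "ball", "car", "drum"],
              ["book", "puzzle", "ball", "drum", "car"],
              ["puzzle", "ball", "book", "drum", "car"]]
v_ranks = rank_by_trial_sums(v_sessions)
t_ranks = rank_by_trial_sums(t_sessions)
toys = sorted(v_ranks)                      # assumes both formats ranked the same toys
tau = kendall_tau_b([v_ranks[t] for t in toys], [t_ranks[t] for t in toys])
print(round(tau, 2))  # 0.6
```

Here the two hypothetical rankings disagree only on the order of ball and book and on the order of car and drum, giving 8 concordant and 2 discordant pairs out of 10, so τ = (8 − 2) / 10 = 0.6.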

To assess the reliability of data collection, a second observer recorded the toy each participant selected on every trial. We calculated interobserver agreement (IOA) by dividing the number of agreements by the number of opportunities for agreement and multiplying by 100; IOA was 100%. A second observer also measured treatment fidelity on every trial by scoring whether the researcher said, “Touch the one you want,” allowed the participant to play with the selected toy for 30 s, and re-arranged the materials or videos after the trial. We calculated treatment fidelity by dividing the number of correctly implemented steps by the total number of opportunities and multiplying by 100; treatment fidelity was 100%.
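Both reliability measures are simple percentages, so they can be computed directly from trial-level records. The record formats below (one list of selected toy names per observer, and one list of True/False checklist items per trial) and the function names are assumptions for illustration.

```python
from typing import Sequence


def trial_by_trial_ioa(primary: Sequence[str], secondary: Sequence[str]) -> float:
    """Percentage of trials on which both observers recorded the same selected toy."""
    if len(primary) != len(secondary) or not primary:
        raise ValueError("observers must score the same, non-zero number of trials")
    agreements = sum(a == b for a, b in zip(primary, secondary))
    return agreements / len(primary) * 100


def fidelity_percentage(checklists: Sequence[Sequence[bool]]) -> float:
    """Percentage of scored procedural steps that were implemented correctly.

    Each inner sequence is one trial's checklist, e.g. [instruction delivered,
    access to the toy provided, materials re-arranged].
    """
    occurrences = sum(sum(trial) for trial in checklists)
    opportunities = sum(len(trial) for trial in checklists)
    if opportunities == 0:
        raise ValueError("no fidelity opportunities were scored")
    return occurrences / opportunities * 100


# Usage with hypothetical data from two trials.
print(trial_by_trial_ioa(["ball", "book"], ["ball", "car"]))          # 50.0
print(fidelity_percentage([[True, True, True], [True, False, True]]))  # about 83.3
```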


Implications for Practitioners

1. Visual discrimination, picture-to-object, and object-to-picture matching skills may be important pre-requisite skills for video-based MSWOs

2. Develop videos that capture the critical features of each toy while minimizing visual and auditory distractions

3. Routinely conduct video-based MSWOs to account for momentary changes in preference

4. Periodically conduct tangible MSWOs to determine if results are similar to video-based MSWOs

References

- Carr JE, Nicolson AC, Higbee TS. Evaluation of a brief multiple-stimulus preference assessment in a naturalistic context. Journal of Applied Behavior Analysis. 2000;33:353–357. doi: 10.1901/jaba.2000.33-353.

- Clevenger TM, Graff RB. Assessing object-to-picture and picture-to-object matching as prerequisite skills for pictorial preference assessments. Journal of Applied Behavior Analysis. 2005;38:543–547. doi: 10.1901/jaba.2005.161-04.

- DeLeon IG, Iwata BA. Evaluation of a multiple-stimulus presentation format for assessing reinforcer preferences. Journal of Applied Behavior Analysis. 1996;29:519–533. doi: 10.1901/jaba.1996.29-519.

- Fisher W, Piazza CC, Bowman LG, Hagopian LP, Owens JC, Slevin I. A comparison of two approaches for identifying reinforcers for persons with severe and profound disabilities. Journal of Applied Behavior Analysis. 1992;25:491–498. doi: 10.1901/jaba.1992.25-491.

- Kagohara D, van der Meer L, Ramdoss S, O’Reilly M, Lancioni G, Davis T, Rispoli M, Lang R, Marschik P, Sutherland D, Green V, Sigafoos J. Using iPods and iPads in teaching programs for individuals with developmental disabilities: a systematic review. Research in Developmental Disabilities. 2013;34:147–156. doi: 10.1016/j.ridd.2012.07.027.

- Kang S, O’Reilly M, Lancioni G, Falcomata TS, Sigafoos J, Xu Z. Comparison of the predictive validity and consistency among preference assessment procedures: a review of the literature. Research in Developmental Disabilities. 2013;34:1125–1133. doi: 10.1016/j.ridd.2012.12.021.

- Karsten AM, Carr JE, Lepper TL. Description of a practitioner model for identifying preferred stimuli with individuals with autism spectrum disorder. Behavior Modification. 2011;35:347–369. doi: 10.1177/0145445511405184.

- Peterson RN. The effectiveness of a video-based preference assessment in identifying socially reinforcing stimuli [Master’s thesis]. 2014. Retrieved from http://digitalcommons.usu.edu/etd/2296.

- Snyder K, Higbee TS, Dayton E. Preliminary investigation of a video-based stimulus preference assessment. Journal of Applied Behavior Analysis. 2012;45:413–418. doi: 10.1901/jaba.2012.45-413.

- Sundberg ML. Verbal behavior milestones assessment and placement program: the VB-MAPP. Concord: AVB Press; 2008.