Abstract

Nonhuman primate and human studies have suggested that populations of neurons in the posterior parietal cortex (PPC) may represent high-level aspects of action planning that can be used to control external devices as part of a brain-machine interface. However, there is no direct neuron-recording evidence that human PPC is involved in action planning, and the suitability of these signals for neuroprosthetic control has not been tested. We recorded neural population activity with arrays of microelectrodes implanted in the PPC of a tetraplegic subject. Motor imagery could be decoded from these neural populations, including imagined goals, trajectories, and types of movement. These findings indicate that the PPC of humans represents high-level, cognitive aspects of action and that the PPC can be a rich source for cognitive control signals for neural prosthetics that assist paralyzed patients.

The posterior parietal cortex (PPC) in humans and nonhuman primates (NHPs) is situated between sensory and motor cortices and is involved in high-level aspects of motor behavior (1, 2). Lesions to this region do not produce motor weakness or primary sensory deficits but rather more complex sensorimotor losses, including deficits in the rehearsal of movements (i.e., motor imagery) (3–7). The activity of PPC neurons recorded in NHPs reflects the movement plans of the animals, and they can generate these signals to control cursors on computer screens without making any movements (8–10). It is tempting to speculate that the animals have learned to use motor imagery for this “brain control” task, but it is of course not possible to ask the animals directly. These brain control results are promising for neural prosthetics because imagined movements would be a versatile and intuitive method for controlling external devices (11). We find that motor imagery recorded from populations of human PPC neurons can be used to control the trajectories and goals of a robotic limb or computer cursor. Also, the activity is often specific for the imagined effector (right or left limb), which holds promise for bimanual control of robotic limbs.

A 32-year-old tetraplegic subject, EGS, was implanted with two microelectrode arrays on 17 April 2013. He had a complete lesion of the spinal cord at cervical level C3-4, sustained 10 years earlier, with paralysis of all limbs. Using functional magnetic resonance imaging (fMRI), we asked EGS to imagine reaching and grasping. These imagined movements activated separate regions of the left hemisphere of the PPC (fig. S1). A reach area on the superior parietal lobule (putative human area 5d) and a grasp area at the junction of the intraparietal and postcentral sulci (putative human anterior intraparietal area, AIP) were chosen for implantation of 96-channel electrode arrays. Recordings were made over more than 21 months with no adverse events related to the implanted devices. Spike activity was recorded and used to control external devices, including a 17-degree-of-freedom robotic limb and a cursor in two dimensions (2D) or 3D on a computer screen.

Recordings began 16 days after implantation. The subject could control the activity of single cells through imagining particular actions. An example of volitional control is shown in movie S1. The cell is activated when EGS imagines moving his hand to his mouth but not for movements with similar gross characteristics such as imagined movements of the hand to the chin or ear. Another example (movie S2) shows EGS increasing the activity of a different cell by imagining rotation of his shoulder, and decreasing activity by imagining touching his nose. In many cases, the subject could exert volitional control of single neurons by imagining simple movements of the upper arm, elbow, wrist, or hand.

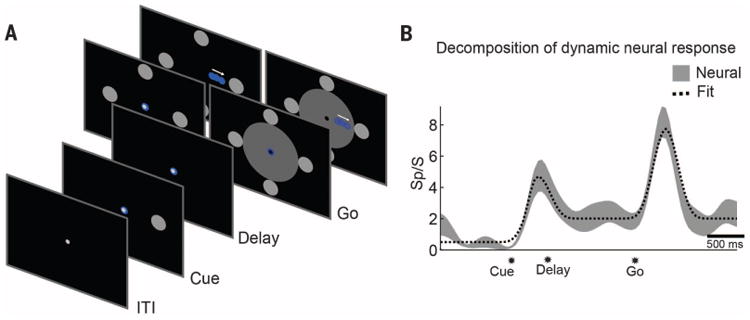

We found that EGS's neurons coded both the goal and imagined trajectory of movements. To characterize these forms of spatial tuning, we used a masked memory reach paradigm (MMR, Fig. 1A). In the task, EGS imagined a continuous reaching movement to a spatially cued target after a delay period during which the goal was removed from the screen. On some trials, motion of the cursor was blocked from view by using a mask. This allowed us to characterize spatial tuning for goals and trajectories (Fig. 1B) while controlling for visual confounds.

Fig. 1. Goal and trajectory coding in the PPC.

(A) The masked memory reach task was used to quantify goal and trajectory tuning in the PPC by dissociating their respective tuning in time. EGS imagined a continuous reaching movement to spatially cued targets after a delay period. Motion of the cursor was occluded from view by using a mask in interleaved trials. (B) Goal and trajectory fitting. Average neural response (±SE) of a sample neuron over the duration of a trial, along with a linear model reconstruction of the time course. The linear model included components for the transient early visual response, sustained goal tuning, and transient trajectory tuning. The significance of the fit coefficients was used to determine population tuning to goal and trajectory (see Fig. 2).
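The linear model reconstruction in (B) can be illustrated with a minimal sketch. It assumes boxcar regressors for the three components and hypothetical epoch times (`cue_on`, `delay_on`, `move_on`, `move_off`, in seconds); the regressor shapes, the epoch boundaries, and the function names are illustrative assumptions, not the model specification used in the study.

```python
import numpy as np

def build_design_matrix(t, cue_on=0.0, delay_on=0.5, move_on=2.0, move_off=3.5):
    """Baseline column plus three boxcar regressors (all epoch times are assumed)."""
    visual = ((t >= cue_on) & (t < delay_on)).astype(float)       # transient early visual response
    goal = ((t >= cue_on) & (t < move_off)).astype(float)         # sustained goal tuning
    trajectory = ((t >= move_on) & (t < move_off)).astype(float)  # transient trajectory tuning
    baseline = np.ones_like(t)
    return np.column_stack([baseline, visual, goal, trajectory])

def fit_time_course(rate, X):
    """Ordinary least-squares fit of a firing-rate time course to the regressors."""
    coef, *_ = np.linalg.lstsq(X, rate, rcond=None)
    return coef, X @ coef

# Synthetic check: a noiseless time course built from known coefficients
t = np.arange(-1.0, 4.0, 0.05)
X = build_design_matrix(t)
true_coef = np.array([5.0, 3.0, 2.0, 4.0])
coef, reconstruction = fit_time_course(X @ true_coef, X)
```

In a sketch like this, a unit would be labeled goal tuned or trajectory tuned according to whether the corresponding coefficient differs significantly from zero; the significance test itself is not shown.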

The number of recorded units was relatively constant through time, but units would appear and disappear on individual channels over the course of hours, days, or weeks (fig. S2). This allowed us to sample the functional properties of a large population of PPC neurons. From 124 spatially tuned units recorded across 7 days with the MMR task, 19% coded the goal of movement exclusively, 54% coded the trajectory of the movement exclusively, and 27% coded both goal and trajectory (Fig. 2A). Goal-tuned units supported accurate classification of spatial targets (>90% classification with as few as 30 units), representing the first known instance of decoding high-level motor intentions from human neuronal populations (Fig. 2B). The goal encoding was rapid with significant classification (shuffle test) occurring within 190 ms of cue presentation and remaining high during the delay period in which there was no visual goal present (Fig. 2C). Similarly, this population of neurons enabled reconstructions of the moment-to-moment velocity of the effector (Fig. 2D) with coefficient of determination (R²) comparable to those reported for offline reconstructions of velocity in human M1 studies [e.g., (12, 13); see also fig. S3]. In other tasks, trajectory-tuned units supported instantaneous volitional control of an anthropomorphic robotic limb at its endpoint (see movie S3).

Fig. 2. Neurons in PPC encode both the goal and trajectory of movements.

(A) The pie chart indicates the proportion of units that encode trajectory exclusively, goal exclusively, or mixed goal and trajectory. Insets show the activity (mean ± SE) for three example neurons. The lighter hue indicates response to the direction evoking maximal response; the darker hue indicates response for the opposite direction. Data taken from masked trials to avoid visual confounds (Fig. 1A). (B) Small populations of informative units allow accurate classification of motor goals from delay-period activity (when no visible target is present). Using a greedy algorithm, an optimized neural population for data combined across multiple days shows that >90% classification is possible with fewer than 30 units. (C) Temporal dynamics of goal representation. Offline analysis depicting accuracy of target classification through time [300-ms sliding window, 95% confidence interval (CI)]. Significant classification occurs within 190 ms of target presentation. (D) Similar to (B) but for trajectory reconstructions. All data taken from the MMR task (Fig. 1A).
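The greedy construction of an optimized population mentioned in (B) can be sketched as forward selection: at each step, add the unit that most improves cross-validated classification. The nearest-class-mean classifier, the leave-one-out scheme, and the function names below are assumptions chosen for brevity; the classifier and validation procedure used in the study are not described in this text.

```python
import numpy as np

def loo_accuracy(X, y):
    """Leave-one-out accuracy of a nearest-class-mean classifier.
    X: trials x units firing rates; y: integer target labels."""
    n = len(y)
    correct = 0
    for i in range(n):
        train = np.arange(n) != i                      # hold out trial i
        classes = np.unique(y[train])
        means = np.array([X[train & (y == c)].mean(axis=0) for c in classes])
        dists = np.linalg.norm(means - X[i], axis=1)   # distance to each class mean
        correct += classes[np.argmin(dists)] == y[i]
    return correct / n

def greedy_select(X, y, max_units):
    """Forward selection: repeatedly add the unit that maximizes accuracy."""
    chosen, curve = [], []
    remaining = list(range(X.shape[1]))
    while remaining and len(chosen) < max_units:
        scores = [loo_accuracy(X[:, chosen + [u]], y) for u in remaining]
        best = int(np.argmax(scores))
        chosen.append(remaining.pop(best))
        curve.append(scores[best])
    return chosen, curve

# Toy data: six trials, three units, only unit 1 carries target information
X = np.zeros((6, 3))
X[:, 1] = [0, 0, 0, 10, 10, 10]
y = np.array([0, 0, 0, 1, 1, 1])
chosen, curve = greedy_select(X, y, max_units=1)
```

Plotting `curve` against the number of selected units gives a curve of the same kind as the one in (B), although selecting and evaluating units on the same trials, as this toy example does, gives an optimistic estimate.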

In the MMR task, goal tuning was not directly used by the subject to control the computer interface; only the trajectory of the cursor was under brain control. To verify that goal-tuned units could support direct selection of spatial targets in closed-loop brain control, we used a direct goal classification (DGC) task (Fig. 3A). Target classification was performed by using neural activity taken during a delay period, after the visual cue was extinguished, so that neural activity was more likely to reflect intent. Online classification accuracy was significant (shuffle test); however, similar to the MMR task, aggregating neurons across days improved classification accuracy by providing a better selection of well-tuned units (Fig. 3, C and D). Goal decoding accuracy was enhanced despite the presence of more targets (six versus four) when the subject controlled the closed-loop interface using goal activity as compared to trajectory activity (Fig. 3C). Consistent with the idea that spatially tuned neural activity reflected volitional intent, decode accuracy was maintained whether the target was cued by a flashed stimulus or cued symbolically (Fig. 3, B and D).
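A shuffle test of the kind referred to above can be sketched as a label-permutation test: the score obtained with the true target labels is compared with the distribution of scores obtained after randomly reassigning labels to trials. The toy score function, the number of shuffles, and all names below are illustrative assumptions; in practice the score would be the cross-validated classification accuracy.

```python
import numpy as np

def mean_difference(x, y):
    """Toy score: absolute difference between the two class means."""
    return abs(x[y == 1].mean() - x[y == 0].mean())

def shuffle_test(score_fn, x, y, n_shuffles=1000, seed=0):
    """Permutation p-value for score_fn(x, y) under shuffled labels."""
    rng = np.random.default_rng(seed)
    observed = score_fn(x, y)
    # Null distribution: same data, labels randomly permuted across trials
    null = np.array([score_fn(x, rng.permutation(y)) for _ in range(n_shuffles)])
    # Add-one correction keeps the estimated p-value strictly above zero
    p_value = (1 + np.sum(null >= observed)) / (1 + n_shuffles)
    return observed, p_value

# Toy example: firing rates that separate two targets perfectly
x = np.array([0.0] * 10 + [1.0] * 10)
y = np.array([0] * 10 + [1] * 10)
observed, p_value = shuffle_test(mean_difference, x, y, n_shuffles=200)
```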

Fig. 3. Goal decoding.

(A) Direct goal classification (DGC) task. EGS was instructed to intend motion toward a cued target through a delay period after the target was removed from the screen. Neural activity from the final 500 ms of the delay period was used to decode the location of the spatial target. EGS was awarded points depending on the relative location of the decoded and cued target. The decoded target location was presented at the end of each trial. (B) Symbolic task. A target grid was presented along with a number indicating the current target. The cue was removed during the delay period. A series of tones was used to cue the start and end of movements. Multiple effectors were tested in interleaved blocks. Catch trials provided a means to ensure that EGS was, on average, engaged in the task. (C) Estimated classification accuracy (mean with 95% CI) for variable population sizes. Populations were constructed by using randomly sampled units from the recorded population for the MMR and DGC tasks. Chance based on number of potential targets (MMR: four targets; DGC: six targets). (D) Greedy dropping curves show that high classification accuracy is possible whether targets are cued directly (A) or symbolically (B). Best: best single day performance; Combo: performance when combining data across days.

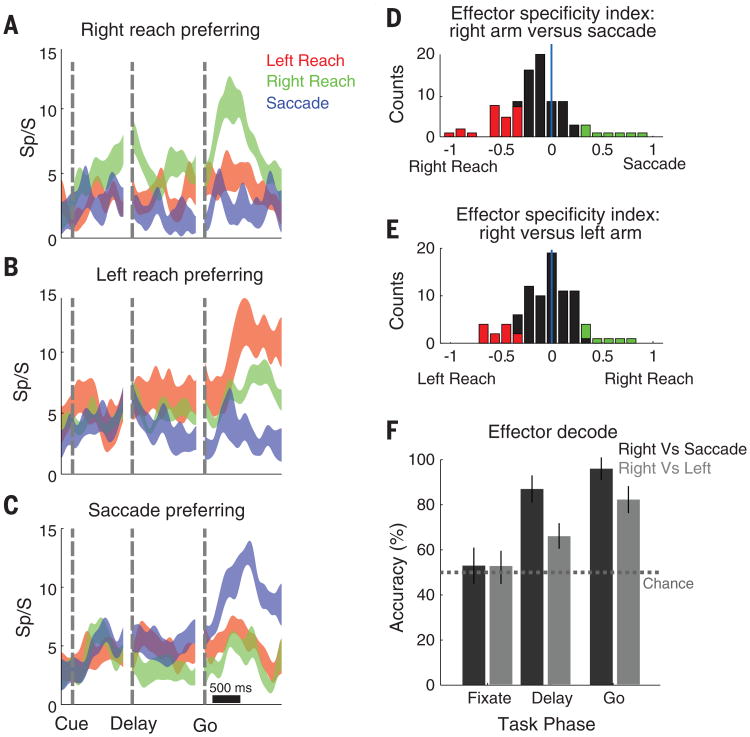

To what degree was the spatially tuned activity specific for imagined actions of the limb? Does the activity reflect the intentions to move a specific limb, or more general spatial processes? Effector specificity was tested by asking EGS to imagine moving his left or right arm, or make actual eye movements in the symbolically cued delayed movement paradigm (Fig. 3B). We found cells that showed specificity for each effector (Fig. 4, A to C). Although the degree of specificity varied for individual units, the population showed a strong bias for imagined reaches versus saccades (Student's t test, P < 0.05, Fig. 4D). Whereas some neurons showed a high degree of specificity for the left and right limb, many reach-selective neurons were bimanual, as they frequently showed no bias for which limb EGS imagined using (Fig. 4E). The population response provided sufficient information to decode which effector EGS imagined using on a given trial (Fig. 4F).

Fig. 4. Effector specificity in PPC.

(A) Unit showing preferential activation to imagined movements of the right arm. Each trace shows the neural firing rate (mean ± SE) for the movement direction evoking the maximal response for each effector. (B and C) Same as (A), but for left arm and saccade-preferring neurons. (D) Population analysis. The degree of effector specificity varied across the population. Effector specificity was quantified with a specificity index based on the normalized depth of modulation (DM) for reaches versus saccades. The specificity index for units that were spatially tuned to at least one effector is shown as a histogram. Colored bars indicate a significant preference for an effector. (E) Same as (D) but for imagined right arm versus left arm movements. (F) The effector used to perform the task could be decoded from the neural population (mean with 95% CI).
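The exact form of the specificity index is not given in this text. One common choice for an index based on normalized depth of modulation is a contrast ratio, (DM_a − DM_b) / (DM_a + DM_b), which ranges from −1 (modulation only for effector b) to +1 (modulation only for effector a). The sketch below assumes that form and defines DM as the range of mean firing rates across movement directions; both definitions are assumptions made for illustration.

```python
import numpy as np

def depth_of_modulation(rates_by_direction):
    """DM taken here as max minus min mean firing rate across directions (assumed definition)."""
    r = np.asarray(rates_by_direction, dtype=float)
    return r.max() - r.min()

def specificity_index(dm_a, dm_b):
    """Contrast index in [-1, 1]; positive values favor effector a (assumed form)."""
    total = dm_a + dm_b
    return 0.0 if total == 0 else (dm_a - dm_b) / total

# Hypothetical unit: strongly modulated for imagined reaches, weakly for saccades
dm_reach = depth_of_modulation([5.0, 20.0, 10.0, 8.0])     # 15 spikes/s
dm_saccade = depth_of_modulation([9.0, 11.0, 10.0, 10.0])  # 2 spikes/s
index = specificity_index(dm_reach, dm_saccade)            # 13/17, reach preferring
```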

The results show the coding of motor imagery in the human PPC at the level of single neurons and the encoding of goals and trajectories by populations of human PPC neurons. Moreover, many cells showed effector specificity, being active for imagining left-arm or right-arm movements or making actual eye movements. These results tie together NHP and human research and point to similar sensorimotor functions of the PPC in both species.

It could be argued that the results reflect visual attention rather than motor imagery. The voluntary activation of single neurons with specific imagined movements (e.g., movement of the hand to the mouth) without any visual stimulation argues against this sensory interpretation. The effector specificity also cannot be easily explained by a simple attention hypothesis.

The neural activity in delayed goal tasks is very similar to the persistent activity seen with planning in the NHP literature and attributed to the animals' intent (14). The PPC in NHPs codes both trajectory and goal information (15). The dynamics of this trajectory signal in NHPs, when compared to the kinematics of the co-occurring limb movements, suggest that the signal is a forward model of the limb movement, that is, an internal monitor of the movement command that allows the intended movement to be matched with the actual movement for online correction (15). Deficits in online control in humans with PPC lesions have led investigators to propose that the PPC uses these forward models (16). If the trajectory signal is indeed a forward model, then EGS can generate this forward model through imagery without actually moving his limbs.

Effector specificity at the single-neuron level has been routinely reported in the PPC of NHPs (17). In NHPs, there is a map of intentions with areas selective for eye (lateral intraparietal area, LIP), limb (parietal reach region, PRR, and area 5d), and grasp (anterior intraparietal region, AIP) movements (1). Bimanual activity (left and right limb) from single PRR neurons has been reported with qualitatively similar results in the NHP (18). Control of two limbs across the spectrum of human behavior is challenging and requires both independent and coordinated movement between the limbs. One possibility is that units showing effector-specific and bimanual tuning could play complementary roles in independent and coordinated movements; however, more direct evidence in which EGS attempts various bimanual actions is necessary to fully test the potential for controlling two limbs from the PPC.

We have focused on the representation of motor intentions in the human PPC. Some cells appeared to code comparatively simple motor intentions, whereas others coded coordinated ethologically meaningful actions. One unexplored possibility is that the PPC also encodes nonmotor intentions such as the desire to turn on the television, or preheat the oven. As the world becomes increasingly connected through technology, the possibility of directly decoding nonmotor intentions to control one's environment may alter approaches to brain-machine interfaces (BMIs).

Neurons that constituted the recorded population would frequently change (fig. S2). This finding presents challenges for the widespread adoption of BMIs that can be addressed through a variety of techniques. One approach is the use of robust and adaptive decoding algorithms that can adapt alongside the changing neural population [e.g., (19)]. In the long term, the development of chronic recording technologies that can stably maintain recordings should be a priority.

This study shows that the human PPC can be a source of signals for neuroprosthetic applications in humans. The high-level cognitive aspects of movement imagery have several advantages for neuroprosthetics. The goal encoding can lead to very rapid readout of the intended movement (Fig. 2C). The PPC encodes both the goal and trajectory, which in NHPs improves decoding of movement goals when the two streams of information are combined in decoders (10). The bimanual representation of the limbs may allow the operation of two robotic limbs with recordings made from one hemisphere. In terms of usefulness for neuroprosthetics, it is difficult to directly compare the performance of PPC to previous studies of M1. In NHP studies, M1 has been shown to be a rich source of neural signals correlated with the trajectory of limb movements (20). In previous human M1 recordings, primarily the trajectory was decoded (12,13,21,22). The reported offline trajectory reconstructions from M1 populations are comparable to the values we achieved from PPC neurons (Fig. 2D) (12,13). The other aspects of encoding, e.g., goals and effectors, have not yet been examined in detail in human M1. However, it can be concluded from our study that the PPC is a good candidate for future clinical applications as it contains signals both overlapping and likely complementary to those found in M1.

Acknowledgments

We thank EGS for his unwavering dedication and enthusiasm, which made this study possible. We acknowledge V. Shcherbatyuk for computer assistance; T. Yao, A. Berumen, and S. Oviedo for administrative support; K. Durkin for nursing assistance; and our colleagues at the Applied Physics Laboratory at Johns Hopkins and at Blackrock Microsystems for technical support. This work was supported by the NIH under grants EY013337, EY015545, and P50 MH942581A; the Boswell Foundation; The Center for Neurorestoration at the University of Southern California; and Defense Department contract N66001-10-4056. All primary behavioral and neurophysiological data are archived in the Division of Biology and Biological Engineering at the California Institute of Technology.

References and Notes

- 1. Andersen RA, Buneo CA. Annu Rev Neurosci. 2002;25:189–220. doi: 10.1146/annurev.neuro.25.112701.142922.

- 2. Culham JC, Cavina-Pratesi C, Singhal A. Neuropsychologia. 2006;44:2668–2684. doi: 10.1016/j.neuropsychologia.2005.11.003.

- 3. Balint R. Monatsschr Psychiatr Neurol. 1909;25:51–81.

- 4. Perenin MT, Vighetto A. Brain. 1988;111:643–674. doi: 10.1093/brain/111.3.643.

- 5. Goodale MA, Milner AD. Trends Neurosci. 1992;15:20–25. doi: 10.1016/0166-2236(92)90344-8.

- 6. Sirigu A, et al. Science. 1996;273:1564–1568. doi: 10.1126/science.273.5281.1564.

- 7. Pisella L, et al. Nat Neurosci. 2000;3:729–736. doi: 10.1038/76694.

- 8. Musallam S, Corneil BD, Greger B, Scherberger H, Andersen RA. Science. 2004;305:258–262. doi: 10.1126/science.1097938.

- 9. Hauschild M, Mulliken GH, Fineman I, Loeb GE, Andersen RA. Proc Natl Acad Sci USA. 2012;109:17075–17080. doi: 10.1073/pnas.1215092109.

- 10. Mulliken GH, Musallam S, Andersen RA. J Neurosci. 2008;28:12913–12926. doi: 10.1523/JNEUROSCI.1463-08.2008.

- 11. Andersen RA, Kellis S, Klaes C, Aflalo T. Curr Biol. 2014;24:R885–R897. doi: 10.1016/j.cub.2014.07.068.

- 12. Truccolo W, Friehs GM, Donoghue JP, Hochberg LR. J Neurosci. 2008;28:1163–1178. doi: 10.1523/JNEUROSCI.4415-07.2008.

- 13. Kim SP, Simeral JD, Hochberg LR, Donoghue JP, Black MJ. J Neural Eng. 2008;5:455–476. doi: 10.1088/1741-2560/5/4/010.

- 14. Gnadt JW, Andersen RA. Exp Brain Res. 1988;70:216–220. doi: 10.1007/BF00271862.

- 15. Mulliken GH, Musallam S, Andersen RA. Proc Natl Acad Sci USA. 2008;105:8170–8177. doi: 10.1073/pnas.0802602105.

- 16. Wolpert DM, Goodbody SJ, Husain M. Nat Neurosci. 1998;1:529–533. doi: 10.1038/2245.

- 17. Snyder LH, Batista AP, Andersen RA. Nature. 1997;386:167–170. doi: 10.1038/386167a0.

- 18. Chang SWC, Dickinson AR, Snyder LH. J Neurosci. 2008;28:6128–6140. doi: 10.1523/JNEUROSCI.1442-08.2008.

- 19. Dangi S, et al. Neural Comput. 2014;26:1811–1839. doi: 10.1162/NECO_a_00632.

- 20. Georgopoulos AP, Kalaska JF, Caminiti R, Massey JT. J Neurosci. 1982;2:1527–1537. doi: 10.1523/JNEUROSCI.02-11-01527.1982.

- 21. Hochberg LR, et al. Nature. 2006;442:164–171. doi: 10.1038/nature04970.

- 22. Collinger JL, et al. Lancet. 2013;381:557–564. doi: 10.1016/S0140-6736(12)61816-9.
