Abstract

The purpose of the present paper is to assess the efficacy of confidence intervals for Rosenthal's fail-safe number. Although Rosenthal's estimator is widely used by researchers, its statistical properties are largely unexplored. First, we developed statistical theory that allowed us to produce confidence intervals for Rosenthal's fail-safe number. The theory distinguishes whether the number of studies analysed in a meta-analysis is fixed or random; each case produces different variance estimators. For a given number of studies and a given distribution, we provided five variance estimators. Confidence intervals are examined with a normal approximation and a nonparametric bootstrap. The accuracy of the different confidence interval estimates was then tested by simulation under different distributional assumptions. The half normal distribution variance estimator has the best probability coverage. Finally, we provide a table of lower confidence limits for Rosenthal's estimator.

1. Introduction

Meta-analysis refers to methods focused on contrasting and combining results from different studies, in the hope of identifying patterns among study results, sources of disagreement among those results, or other interesting relationships that may come to light in the context of multiple studies [1]. In its simplest form, this is normally done by identification of a common measure of effect size, of which a weighted average might be the output of a meta-analysis. The weighting might be related to sample sizes within the individual studies [2, 3]. More generally there are other differences between the studies that need to be allowed for, but the general aim of a meta-analysis is to more powerfully estimate the true effect size as opposed to a less precise effect size derived in a single study under a given single set of assumptions and conditions [4]. For reviews on meta-analysis models, see [2, 5, 6]. Meta-analysis can be applied to various effect sizes collected from individual studies. These include odds ratios and relative risks; standardized mean difference, Cohen's d, Hedges' g, and Glass's Δ; correlation coefficient and relative metrics; sensitivity and specificity from diagnostic accuracy studies; and P-values. For more comprehensive reviews see Rosenthal [7], Hedges and Olkin [8], and Cooper et al. [9].

2. Publication Bias

Publication bias is a threat to any research that attempts to use the published literature, and its potential presence is perhaps the greatest threat to the validity of a meta-analysis [10]. Publication bias exists because research with statistically significant results is more likely to be submitted and published than work with null or nonsignificant results. This issue was memorably termed as the file-drawer problem by Rosenthal [11]; nonsignificant results are stored in file drawers without ever being published. In addition to publication bias, other related types of bias exist including pipeline bias, subjective reporting bias, duplicate reporting bias, or language bias (see [12–15] for definitions and examples).

The implication of these various types of bias is that combining only the identified published studies uncritically may lead to an incorrect, usually overoptimistic, conclusion [10, 16]. The ability to detect publication bias in a given field is a key strength of meta-analysis because identification of publication bias will challenge the validity of common views in that area and will spur further investigations [17]. There are two types of statistical procedures for dealing with publication bias in meta-analysis: methods for identifying the existence of publication bias and methods for assessing the impact of publication bias [16]. The first includes the funnel plot (and other visualisation methods such as the normal quantile plot) and regression/correlation-based tests, while the second includes the fail-safe (also called file-drawer) number, the trim and fill method, and selection model approaches [10, 14, 18]. Recent approaches include the test for excess significance [19] and the p-curve [20].

The most commonly used method is the visual inspection of a funnel plot. This assumes that the results from smaller studies will be more widely spread around the mean effect because of larger random error. The next most frequent method used to assess publication bias is Rosenthal's fail-safe number [21]. Two recent reviews examining the assessment of publication bias in psychology and ecology reported that funnel plots were the most frequently used (24% and 40% resp.), followed by Rosenthal's fail-safe number (22% and 30%, resp.).

2.1. Assessing Publication Bias by Computing the Number of Unpublished Studies

Assessing publication bias can be performed by trying to estimate the number of unpublished studies in the given area a meta-analysis is studying. The fail-safe number represents the number of studies required to refute significant meta-analytic means. Although apparently intuitive, it is in reality difficult to interpret, not only because the number of data points (i.e., sample size) for each of the k studies is not defined, but also because no benchmarks regarding the fail-safe number exist, unlike Cohen's benchmarks for effect size statistics [22]. Moreover, the various versions of the fail-safe number have been heavily criticised, mainly because such numbers are often misused and misinterpreted [23]. The main reason for the criticism is that the number of studies can vary greatly depending on which method is used to estimate the fail-safe N.

Although Rosenthal's fail-safe number of publication bias was proposed as early as 1979 and is frequently cited in the literature [11] (over 2000 citations), little attention has been given to the statistical properties of this estimator. This is the aim of the present paper, which is discussed in further detail in Section 3.

Rosenthal [11] introduced what he called the file drawer problem. His concern was that some statistically nonsignificant studies may be missing from an analysis (i.e., placed in a file drawer) and that these studies, if included, would nullify the observed effect. By nullify, he meant to reduce the effect to a level not statistically significantly different from zero. Rosenthal suggested that rather than speculate on whether the file drawer problem existed, the actual number of studies that would be required to nullify the effect could be calculated [26]. This method calculates the significance of multiple studies by calculating the significance of the mean of the standard normal deviates of each study. Rosenthal's method calculates the number of additional studies N R, with the mean null result necessary to reduce the combined significance to a desired α level (usually 0.05).

The necessary prerequisite is that each study examines a directional null hypothesis such that the effect sizes $\theta_i$ from each study are examined under $\theta_i \le 0$ (or $\theta_i \ge 0$). Then the null hypothesis of Stouffer's test [27] is

$$H_0: \theta_1 = \theta_2 = \cdots = \theta_k = 0. \tag{1}$$

The test statistic for this is

$$Z_S = \frac{\sum_{i=1}^{k} z_i}{\sqrt{k}}, \tag{2}$$

with $z_i = \theta_i / s_i$, where $s_i$ are the standard errors of $\theta_i$. Under the null hypothesis we have $Z_S \sim N(0,1)$ [7].

So we get that the number of additional studies $N_R$, with mean null result necessary to reduce the combined significance to a desired α level (usually 0.05 [7, 11]), is found after solving

$$\frac{\sum_{i=1}^{k} Z_i}{\sqrt{N_R + k}} = Z_\alpha. \tag{3}$$

So, $N_R$ is calculated as

$$N_R = \frac{\left(\sum_{i=1}^{k} Z_i\right)^2}{Z_\alpha^2} - k, \tag{4}$$

where k is the number of studies and $Z_\alpha$ is the one-tailed Z score associated with the desired α level of significance. Rosenthal further suggested that if $N_R > 5k + 10$, the likelihood of publication bias would be minimal.
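As a minimal sketch of this calculation, the following Python function computes the fail-safe number as the squared sum of the z-scores divided by the squared one-tailed critical value, minus k, and checks it against the 5k + 10 rule of thumb. The function name `rosenthal_failsafe` and the example z-scores are hypothetical and chosen only for illustration.

```python
from statistics import NormalDist


def rosenthal_failsafe(z_scores, alpha=0.05):
    """Fail-safe number N_R = (sum of Z_i)^2 / Z_alpha^2 - k."""
    k = len(z_scores)
    # One-tailed critical value, roughly 1.645 for alpha = 0.05.
    z_alpha = NormalDist().inv_cdf(1 - alpha)
    return sum(z_scores) ** 2 / z_alpha ** 2 - k


# Hypothetical z-scores from k = 5 studies (illustrative values only).
z_scores = [2.1, 1.7, 2.5, 0.9, 1.3]
k = len(z_scores)
n_r = rosenthal_failsafe(z_scores)
print(f"N_R = {n_r:.2f}; exceeds 5k + 10 = {5 * k + 10}: {n_r > 5 * k + 10}")
```

By construction, adding the returned number of null studies to the k observed ones brings the combined Stouffer statistic down to exactly the critical value.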

Cooper [28] and Cooper and Rosenthal [29] called this number the fail-safe sample size or fail-safe number. If this number is relatively small, then there is cause for concern. If this number is large, one might be more confident that the effect, although possibly inflated by the exclusion of some studies, is, nevertheless, not zero [30]. This approach is limited in two important ways [26, 31]. First, it assumes that the association in the hidden studies is zero, rather than considering the possibility that some of the studies could have an effect in the reverse direction or an effect that is small but not zero. Therefore, the number of studies required to nullify the effect may be different than the fail-safe number, either larger or smaller. Second, this approach focuses on statistical significance rather than practical or substantive significance (effect sizes). That is, it may allow one to assert that the mean correlation is not zero, but it does not provide an estimate of what the correlation might be (how it has changed in size) after the missing studies are included [23, 32–34]. However, for many fields it remains the gold standard to assess publication bias, since its presentation is conceptually simple and eloquent. In addition, it is computationally easy to perform.

Iyengar and Greenhouse [12] proposed an alternative formula for Rosenthal's fail-safe number, in which the sum of the unpublished studies' standard variates is not zero. In this case the number of unpublished studies $n_\alpha$ is approached through the following equation:

$$\frac{\sum_{i=1}^{k} Z_i + n_\alpha M(\alpha)}{\sqrt{n_\alpha + k}} = z_\alpha, \tag{5}$$

where $M(\alpha) = -\phi(z_\alpha)/\Phi(z_\alpha)$ (this results immediately from the definition of the truncated normal distribution) and α is the desired level of significance. The authors justify this by assuming that the unpublished studies follow a truncated normal distribution with $x \le z_\alpha$. $\Phi(\cdot)$ and $\phi(\cdot)$ denote the cumulative distribution function (CDF) and probability density function (PDF), respectively, of a standard normal distribution.
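The following sketch illustrates how such an equation could be solved numerically. It assumes the equation has the form (ΣZ_i + n·M(α)) / sqrt(n + k) = z_α, which is our reading of the Iyengar and Greenhouse approach and should be checked against [12]. The function name and the bisection solver are illustrative choices, not part of the original method.

```python
from statistics import NormalDist


def iyengar_greenhouse_failsafe(z_scores, alpha=0.05):
    """Solve (S + n*M) / sqrt(n + k) = z_alpha for n by bisection.

    Assumed form of the equation; M is the mean of a standard normal
    truncated to x <= z_alpha, that is, M = -pdf(z_alpha) / cdf(z_alpha).
    """
    std = NormalDist()
    k = len(z_scores)
    s = sum(z_scores)
    z_alpha = std.inv_cdf(1 - alpha)
    m = -std.pdf(z_alpha) / std.cdf(z_alpha)

    def gap(n):
        return (s + n * m) / (n + k) ** 0.5 - z_alpha

    if gap(0.0) <= 0:
        # The combined result is already nonsignificant.
        return 0.0
    lo, hi = 0.0, 1.0
    while gap(hi) > 0:  # expand until the root is bracketed
        hi *= 2
    for _ in range(200):  # gap() is decreasing on the bracket
        mid = (lo + hi) / 2
        if gap(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


# Hypothetical z-scores (illustrative values only).
n_alpha = iyengar_greenhouse_failsafe([2.1, 1.7, 2.5, 0.9, 1.3])
print(f"n_alpha = {n_alpha:.2f}")
```

Because the hidden studies are assumed to have a negative mean z-score, fewer of them are needed to nullify the effect than under Rosenthal's zero-mean assumption.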

There are certain other fail-safe numbers which have been described, but their explanation goes beyond the scope of the present article [35]. Duval and Tweedie [36, 37] present three different estimators for the number of missing studies and the method to calculate this has been named Trim and Fill Method. Orwin's [38] approach is very similar to Rosenthal's [11] approach without considering the normal variates but taking Cohen's d [22] to compute a fail-safe number. Rosenberg's fail-safe number is very similar to Rosenthal's and Orwin's fail-safe number [39]. Its difference is that it takes into account the meta-analytic estimate under investigation by incorporating individual weights per study. Gleser and Olkin [40] proposed a model under which the number of unpublished studies in a field where a meta-analysis is undertaken could be estimated. The maximum likelihood estimator of their fail-safe number only needs the number of studies and the maximum P value of the studies. Finally, the Eberly and Casella fail-safe number assumes a Bayesian methodology which aims to estimate the number of unpublished studies in a certain field where a meta-analysis is undertaken [41].

The aim of the present paper is to study the statistical properties of Rosenthal's [11] fail-safe number. In the next section we introduce the statistical theory for computing confidence intervals for Rosenthal's [11] fail-safe number. We initially compute the probability distribution function of $\hat{N}_R$, which gives formulas for variance and expectation; next, we suggest distributional assumptions for the standard normal variates used in Rosenthal's fail-safe number and finally suggest confidence intervals.

3. Statistical Theory

The estimator of unpublished studies is approached through Rosenthal's formula:

$$\hat{N}_R = \frac{\left(\sum_{i=1}^{k} Z_i\right)^2}{Z_\alpha^2} - k. \tag{6}$$

Let $Z_i$, $i = 1, 2, \ldots, k$, be i.i.d. random variables with $E[Z_i] = \mu$ and $\operatorname{Var}[Z_i] = \sigma^2$. We discern two cases:

- (a) k is fixed, or
- (b) k is random, assuming additionally that k ~ Pois(λ). This is reasonable since the number of studies included in a meta-analysis is like observing counts. Other distributions might be assumed, such as the Gamma distribution, but this would require more information or assumptions to compute the parameters of the distribution.

In both cases, estimators of μ, σ 2, and λ can be calculated without distributional assumptions for the Z i with the method of moments or with distributional assumptions regarding the Z i.

3.1. Probability Distribution Function of $\hat{N}_R$

3.1.1. Fixed k

We compute the PDF of $\hat{N}_R$ by following the next steps.

Step 1 . —

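$Z_1, Z_2, \ldots, Z_k$ in the formula of the estimator (6) are i.i.d. with $E[Z_i] = \mu$ and $\operatorname{Var}[Z_i] = \sigma^2$. Let $S = \sum_{i=1}^{k} Z_i$; according to the Lindeberg-Lévy Central Limit Theorem [42], we have approximately

$$S \sim N(k\mu, k\sigma^2). \tag{7}$$

So the PDF of $S$ is

$$f_S(s) = \frac{1}{\sqrt{2\pi k\sigma^2}} \exp\left(-\frac{(s - k\mu)^2}{2k\sigma^2}\right). \tag{8}$$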

Step 2 . —

The PDF of Rosenthal's $\hat{N}_R$ can be retrieved from a truncated version of (8). From (3), we get that

$$\frac{S}{Z_\alpha} = \sqrt{N_R + k}. \tag{9}$$

We advocate that Rosenthal's equations (3) and (9) implicitly impose two conditions which must be taken into account when we seek to estimate the distribution of $N_R$:

$$S \ge 0, \tag{10}$$

$$N_R \ge 0. \tag{11}$$

Expression (10) is justified by the fact that the right-hand side of (9) is nonnegative, so then $S \ge 0$. Expression (11) is justified by the fact that $N_R$ expresses the number of studies, so it must be at least 0. Hence, expressions (10) and (11) are satisfied when $S$ is a truncated normal random variable.

The truncated normal distribution is a probability distribution related to the normal distribution. Given a normally distributed random variable $X$ with mean $\mu_t$ and variance $\sigma_t^2$, let $X \in (a, b)$, $-\infty \le a < b \le \infty$. Then $X$ conditional on $a < X < b$ has a truncated normal distribution with PDF $f_X(x) = \dfrac{(1/\sigma_t)\,\phi((x - \mu_t)/\sigma_t)}{\Phi((b - \mu_t)/\sigma_t) - \Phi((a - \mu_t)/\sigma_t)}$ for $a \le x \le b$ and $f_X(x) = 0$ otherwise [43].

Let it be S *, such that . So the PDF of S * then becomes

(12) where .

Then, we have

| (13) |

The characteristic function is

| (14) |

where , , σ 1 2 = Z α 2/(Z α 2 − 2kσ 2 it).

From (14) we get

| (15) |

where .

Also,

| (16) |

where

| (17) |

Proofs for expressions (14), (15), and (16) are given in the Appendix.

Comments. Consider the following:

- (1)

- (2) A limiting element of this computation is that $\hat{N}_R$ takes discrete values because it describes a number of studies, but it has been described by a continuous distribution.

3.1.2. Random k

It is assumed that k ~ Pois(λ). So, taking into account the result for the distribution of $\hat{N}_R$ for a fixed k, we get that the joint distribution of k and $\hat{N}_R$ is

| (21) |

3.2. Expectation and Variance for Rosenthal's Estimator

- (a) When k is fixed, expressions (19) and (20) denote the expectation and variance, respectively, for $\hat{N}_R$. This is derived from the PDF of $\hat{N}_R$; an additional proof without reference to the PDF is given in the Appendix.
- (b) When k is random with k ~ Pois(λ), the expectation and variance of $\hat{N}_R$ are

(22)

Proofs are given in the Appendix.
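As a rough cross-check that does not use the truncated PDF, the first two moments of $\hat{N}_R$ for fixed k can be sketched directly from the normal approximation $S \sim N(k\mu, k\sigma^2)$ with $S = \sum_{i=1}^{k} Z_i$. The sketch ignores the truncation discussed in Section 3.1.1 and uses the identity $\operatorname{Var}[X^2] = 2v^2 + 4m^2 v$ for $X \sim N(m, v)$, so it is an approximation under stated assumptions and need not coincide term by term with the expressions derived in the paper.

```latex
\begin{align*}
E[S^2] &= \operatorname{Var}[S] + (E[S])^2 = k\sigma^2 + k^2\mu^2, \\
E[\hat{N}_R] &= \frac{E[S^2]}{Z_\alpha^2} - k
  \approx \frac{k(\sigma^2 + k\mu^2)}{Z_\alpha^2} - k, \\
\operatorname{Var}[S^2] &\approx 2(k\sigma^2)^2 + 4(k\mu)^2(k\sigma^2)
  = 2k^2\sigma^2(\sigma^2 + 2k\mu^2), \\
\operatorname{Var}[\hat{N}_R] &= \frac{\operatorname{Var}[S^2]}{Z_\alpha^4}
  \approx \frac{2k^2\sigma^2(\sigma^2 + 2k\mu^2)}{Z_\alpha^4}.
\end{align*}
```

The expectation line is exact for any i.i.d. $Z_i$ with finite variance; only the variance line relies on the normality of $S$.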

3.3. Estimators for μ, σ 2, and λ

Having now computed a formula for the variance which is necessary for a confidence interval, we need to estimate μ, σ 2, and λ. In both cases, estimators of μ, σ 2, and λ can be calculated without distributional assumptions for the Z i with the method of moments or with distributional assumptions regarding the Z i.

3.3.1. Method of Moments [44]

When k is fixed, we have

| (23) |

When k is random, we have

| (24) |

3.3.2. Distributional Assumptions for the Z i

If we suppose that the Z i follow a distribution, we would replace the values of μ and σ 2 with their distributional values. Below we consider special cases.

Standard Normal Distribution. The Z i follow a standard normal distribution; that is, Z i ~ N(0,1). This is the original assumption for the Z i [11]. In this case we have

| (25) |

Although the origin of the Z i is from the standard normal distribution, the studies in a meta-analysis are a selected sample of published studies. For this reason, the next distribution is suggested as better.

Half Normal Distribution. Here we propose that the $Z_i$ follow a half normal distribution HN(0,1), which is a special case of the folded normal distribution. Before we explain the rationale for this distribution, a definition of this type of distribution is provided. A half normal distribution is also a special case of a truncated normal distribution.

Definition 1 . —

The folded normal distribution is a probability distribution related to the normal distribution. Given a normally distributed random variable X with mean μ f and variance σ f 2, the random variable Y = |X| has a folded normal distribution [43, 45, 46].

Remark 2 . —

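The folded normal distribution has the following properties:

- (a) probability density function (PDF):

$$f_Y(y) = \frac{1}{\sigma_f\sqrt{2\pi}}\left[\exp\left(-\frac{(y - \mu_f)^2}{2\sigma_f^2}\right) + \exp\left(-\frac{(y + \mu_f)^2}{2\sigma_f^2}\right)\right], \quad y \ge 0; \tag{26}$$

- (b) expectation and variance:

$$E[Y] = \sigma_f\sqrt{\frac{2}{\pi}}\exp\left(-\frac{\mu_f^2}{2\sigma_f^2}\right) + \mu_f\left[1 - 2\Phi\left(-\frac{\mu_f}{\sigma_f}\right)\right], \quad \operatorname{Var}[Y] = \mu_f^2 + \sigma_f^2 - (E[Y])^2. \tag{27}$$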

Remark 3 . —

When μ f = 0, the distribution of Y is a half normal distribution. This distribution is identical to the truncated normal distribution, with left truncation point 0 and no right truncation point. For this distribution we have the following.

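- (a) $f_Y(y) = \dfrac{\sqrt{2}}{\sigma_f\sqrt{\pi}}\exp\left(-\dfrac{y^2}{2\sigma_f^2}\right)$, for $y \ge 0$.
- (b) $E[Y] = \sigma_f\sqrt{2/\pi}$, $\operatorname{Var}[Y] = \sigma_f^2(1 - 2/\pi)$.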

Assumption 4 . —

The Z i in Rosenthal's estimator N R are derived from a half normal distribution, based on a normal distribution N(0,1).

Support. When a researcher begins to perform a meta-analysis, the sample of studies is drawn from those studies that are already published. The sample is therefore most likely biased by some sort of selection bias, produced via a specific selection process [47]. Thus, although Rosenthal's $N_R$ is studied under the assumption that all $Z_i$ are drawn from the normal distribution, they are in essence drawn from a truncated normal distribution. This has been commented on by Iyengar and Greenhouse [12] and Schonemann and Scargle [48]. But at which point is this distribution truncated? We would like to advocate that the half normal distribution, based on a normal distribution N(0,1), is the one best representing the $Z_i$ Rosenthal uses to compute his fail-safe $N_R$. The reasons for this are as follows.

- (1) Firstly, assuming that all $Z_i$ are of the same sign does not impede the significance of the results from each study. That is, the test is significant when either $Z_i > Z_{\alpha/2}$ or $Z_i < Z_{1-\alpha/2}$ occurs.
- (2) However, when a researcher begins to perform a meta-analysis of studies, many times $Z_i$ can be either positive or negative. Although this is true, when the researcher is interested in doing a meta-analysis, usually the $Z_i$ that have been published are indicative of a significant effect of the same direction (thus $Z_i$ have the same sign) or are at least indicative of such an association without being statistically significant, thus producing $Z_i$ of the same sign but not producing significance (e.g., the confidence interval of the effect might include the null value).
- (3) There will definitely be studies that produce a totally opposite effect, thus producing an effect of opposite direction, but these will definitely be a minority of the studies. Also there is the case that these other signed $Z_i$ are not significant.

Hence, in this case

| (28) |

Skew Normal Distribution. Here we propose that the Z i follow a skew normal distribution; that is, Z i ~ SN(ξ, ω, α).

Definition 5 . —

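The skew normal distribution is a continuous probability distribution that generalises the normal distribution to allow for nonzero skewness. A random variable $X$ follows a univariate skew normal distribution with location parameter $\xi \in \mathbb{R}$, scale parameter $\omega \in \mathbb{R}^+$, and skewness parameter $\alpha \in \mathbb{R}$ [49], if it has the density

$$f(x) = \frac{2}{\omega}\,\phi\left(\frac{x - \xi}{\omega}\right)\Phi\left(\alpha\,\frac{x - \xi}{\omega}\right), \quad x \in \mathbb{R}. \tag{29}$$

Note that if α = 0, the density of $X$ reduces to that of $N(\xi, \omega^2)$.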

Remark 1 . —

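The expectation and variance of $X$ are [49]

$$E[X] = \xi + \omega\delta\sqrt{\frac{2}{\pi}}, \quad \operatorname{Var}[X] = \omega^2\left(1 - \frac{2\delta^2}{\pi}\right), \quad \text{where } \delta = \frac{\alpha}{\sqrt{1 + \alpha^2}}. \tag{30}$$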

Remark 2 . —

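The method of moments estimators for ξ, ω, and δ are [50, 51]

$$\hat{\xi} = m_1 - a_1\left(\frac{m_3}{b_1}\right)^{1/3}, \quad \hat{\omega}^2 = m_2 + a_1^2\left(\frac{m_3}{b_1}\right)^{2/3}, \quad \hat{\delta} = \left[a_1^2 + m_2\left(\frac{b_1}{m_3}\right)^{2/3}\right]^{-1/2}, \tag{31}$$

where $a_1 = \sqrt{2/\pi}$, $b_1 = (4/\pi - 1)a_1$, $m_1 = n^{-1}\sum_{i=1}^{n} X_i$, $m_2 = n^{-1}\sum_{i=1}^{n}(X_i - m_1)^2$, and $m_3 = n^{-1}\sum_{i=1}^{n}(X_i - m_1)^3$. The sign of $\hat{\delta}$ is taken to be the sign of $m_3$.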
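A minimal sketch of these moment estimators follows. It is obtained by matching the sample mean, variance, and third central moment to the skew normal values $\xi + \omega\delta a_1$, $\omega^2(1 - a_1^2\delta^2)$, and $b_1(\omega\delta)^3$, with $a_1 = \sqrt{2/\pi}$. The function name `skew_normal_mom` and the sample values are hypothetical, and the estimate of δ can fall outside (−1, 1) in small samples.

```python
import math


def skew_normal_mom(x):
    """Method-of-moments estimates (xi, omega, delta) for a skew normal sample."""
    n = len(x)
    m1 = sum(x) / n
    m2 = sum((v - m1) ** 2 for v in x) / n
    m3 = sum((v - m1) ** 3 for v in x) / n
    a1 = math.sqrt(2 / math.pi)
    b1 = (4 / math.pi - 1) * a1
    # Signed cube root of m3 / b1 estimates the product omega * delta,
    # so the sign of delta follows the sign of m3.
    ratio = m3 / b1
    omega_delta = math.copysign(abs(ratio) ** (1 / 3), ratio)
    xi = m1 - a1 * omega_delta
    omega = math.sqrt(m2 + a1 ** 2 * omega_delta ** 2)
    delta = omega_delta / omega
    return xi, omega, delta


# Hypothetical right-skewed sample (illustrative values only).
sample = [0.2, 1.5, 0.7, 2.9, 1.1, 0.4, 3.6, 0.9]
xi_hat, omega_hat, delta_hat = skew_normal_mom(sample)
print(xi_hat, omega_hat, delta_hat)
```

Plugging the estimates back into the moment formulas reproduces the three sample moments, which is a convenient sanity check on any implementation.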

Explanation. The skew normal distribution allows for a dynamic way to fit the available Z-scores. The ambiguity over whether the standard deviates from each study derive from a normal or a truncated normal distribution creates the possibility that the distribution could be a skew normal, with the skewness being attributed to the fact that we are including only the published Z-scores in the estimation of Rosenthal's [11] estimator.

Hence, in this case and taking the method of moments estimators of ξ, ω, and δ, we get

| (32) |

where $a_1 = \sqrt{2/\pi}$, $b_1 = (4/\pi - 1)a_1$, $m_1 = n^{-1}\sum_{i=1}^{n} Z_i$, $m_2 = n^{-1}\sum_{i=1}^{n}(Z_i - m_1)^2$, and $m_3 = n^{-1}\sum_{i=1}^{n}(Z_i - m_1)^3$.

3.4. Methods for Confidence Intervals

3.4.1. Normal Approximation

In the previous section, formulas for computing the variance of $\hat{N}_R$ were derived. We compute asymptotic $100(1 - \alpha)\%$ confidence intervals for $N_R$ as

$$\hat{N}_R \pm Z_{1-\alpha/2}\sqrt{\operatorname{Var}[\hat{N}_R]}, \tag{33}$$

where $Z_{1-\alpha/2}$ is the $(1 - \alpha/2)$th quantile of the standard normal distribution.

The variance of $\hat{N}_R$ for a given set of values $Z_i$ depends firstly on whether the number of studies k is fixed or random and secondly on whether the estimators of μ, $\sigma^2$, and λ are derived from the method of moments or from the distributional assumptions.
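The following sketch shows one way such an interval could be computed for fixed k with distributional values of μ and σ². It assumes the variance approximation 2k²σ²(σ² + 2kμ²)/Z_α⁴, which follows from treating the sum of the z-scores as normal and ignoring truncation; this is our simplification and may differ from the variance expressions derived in the paper. All function names and the example z-scores are hypothetical.

```python
import math
from statistics import NormalDist


def failsafe_moments_fixed_k(k, mu, sigma2, alpha=0.05):
    """Approximate mean and variance of the fail-safe estimator for fixed k.

    Assumes the sum of z-scores is N(k*mu, k*sigma2), with no truncation.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha)
    mean = k * (sigma2 + k * mu ** 2) / z_alpha ** 2 - k
    var = 2 * k ** 2 * sigma2 * (sigma2 + 2 * k * mu ** 2) / z_alpha ** 4
    return mean, var


def normal_approx_ci(z_scores, mu, sigma2, alpha=0.05, level=0.95):
    """Point estimate and normal approximation confidence interval."""
    k = len(z_scores)
    z_alpha = NormalDist().inv_cdf(1 - alpha)
    n_hat = sum(z_scores) ** 2 / z_alpha ** 2 - k
    _, var = failsafe_moments_fixed_k(k, mu, sigma2, alpha)
    q = NormalDist().inv_cdf(1 - (1 - level) / 2)
    half_width = q * math.sqrt(var)
    return n_hat, (n_hat - half_width, n_hat + half_width)


# Half normal HN(0,1) values of mu and sigma^2.
HN_MU = math.sqrt(2 / math.pi)
HN_SIGMA2 = 1 - 2 / math.pi

# Hypothetical z-scores (illustrative values only).
n_hat, (lower, upper) = normal_approx_ci([2.1, 1.7, 2.5, 0.9, 1.3], HN_MU, HN_SIGMA2)
print(f"N_R = {n_hat:.2f}, 95% CI = ({lower:.2f}, {upper:.2f})")
```

Note that `alpha` is the significance level used inside the fail-safe formula, while `level` is the confidence level of the interval; the two are kept separate on purpose.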

3.4.2. Nonparametric Bootstrap

Bootstrap is a well-known resampling methodology for obtaining nonparametric confidence intervals of a parameter [52, 53]. In most statistical problems one needs an estimator of a parameter of interest as well as some assessment of its variability. In many such problems the estimators are complicated functionals of the empirical distribution function and it is difficult to derive trustworthy analytical variance estimates for them. The primary objective of this technique is to estimate the sampling distribution of a statistic. Essentially, bootstrap is a method that mimics the process of sampling from a population, like one does in Monte Carlo simulations, but instead drawing samples from the observed sampling data. The tool of this mimic process is the Monte Carlo algorithm of Efron [54]. This process is explained properly by Efron and Tibshirani [55] and Davison and Hinkley [56], who also noted that bootstrap confidence intervals are approximate, yet better than the standard ones. Nevertheless, they do not try to replace the theoretical ones and bootstrap is not a substitute for precise parametric results, but rather a way to reasonably proceed when such results are unavailable.

Nonparametric resampling makes no assumptions concerning the distribution of, or model for, the data [57]. Our data are assumed to be a vector $Z_{obs}$ of k independent observations, and we are interested in a confidence interval for $\hat{N}_R$. The general algorithm for a nonparametric bootstrap is as follows.

- (1) Sample k observations randomly with replacement from $Z_{obs}$ to obtain a bootstrap data set, denoted by $Z^*$.
- (2) Calculate the bootstrap version of the statistic of interest, $\hat{N}_R^*$.
- (3) Repeat steps (1) and (2) several times, say B, to obtain an estimate of the bootstrap distribution.

In our case

- (1) draw a random sample of size k, with replacement, from the initial sample of $Z_i$,
- (2) compute $N_R^*$ from this sample,
- (3) repeat these steps B times.

Then the bootstrap estimator of N R is

| (34) |

From this we can compute also confidence intervals for N R_bootstrap.
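The resampling steps can be sketched as follows. As assumptions of this sketch, the bootstrap estimator is taken to be the mean of the B replicates and the interval is a normal-type interval built from the bootstrap standard error; the text does not fix these details, and a percentile interval would be an equally plausible reading. The function names and example z-scores are hypothetical.

```python
import random
from statistics import NormalDist, mean, stdev


def failsafe_number(z_scores, z_alpha):
    """Fail-safe number (sum of Z_i)^2 / Z_alpha^2 - k for one sample."""
    return sum(z_scores) ** 2 / z_alpha ** 2 - len(z_scores)


def bootstrap_failsafe(z_obs, B=1000, alpha=0.05, level=0.95, seed=None):
    """Nonparametric bootstrap estimate and interval for the fail-safe number."""
    rng = random.Random(seed)
    z_alpha = NormalDist().inv_cdf(1 - alpha)
    k = len(z_obs)
    replicates = []
    for _ in range(B):
        # Steps (1) and (2): resample k z-scores with replacement, recompute.
        resample = rng.choices(z_obs, k=k)
        replicates.append(failsafe_number(resample, z_alpha))
    estimate = mean(replicates)   # assumed form of the bootstrap estimator
    std_error = stdev(replicates)  # bootstrap standard error
    q = NormalDist().inv_cdf(1 - (1 - level) / 2)
    return estimate, (estimate - q * std_error, estimate + q * std_error)


# Hypothetical z-scores (illustrative values only).
estimate, interval = bootstrap_failsafe([2.1, 1.7, 2.5, 0.9, 1.3], B=1000, seed=1)
print(estimate, interval)
```

Passing a seed makes the resampling reproducible, which helps when comparing the bootstrap interval with the normal approximation on the same data.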

In the next section, we investigate these theoretical aspects with simulations and examples.

4. Simulations and Results

The method for simulations is as follows.

- (1) Initially we draw random numbers from the following distributions:
  - (a) standard normal distribution,
  - (b) half normal distribution HN(0,1),
  - (c) skew normal distribution with negative skewness SN(δ = −0.5, ξ = 0, ω = 1),
  - (d) skew normal distribution with positive skewness SN(δ = 0.5, ξ = 0, ω = 1).
- (2) The number of values drawn from each distribution represents the number of studies in a meta-analysis; we have chosen k = 5, 15, 30, and 50. When k is assumed to be random, the parameter λ is set equal to the values chosen for the simulation, that is, 5, 15, 30, and 50, respectively.
- (3) We compute the normal approximation confidence interval with the formulas described in Section 3 and the bootstrap confidence interval. We also discern whether the number of studies is fixed or random. For the computation of the bootstrap confidence interval, we generate 1,000 bootstrap samples each time. We also study the performance of the different distributional estimators in cases where the distributional assumption is not met, thus comparing each of the six confidence interval estimators under all four distributions.
- (4) We compute the coverage probability by comparing with the true value of Rosenthal's fail-safe number. When the number of studies is fixed the true value of Rosenthal's number is

(35)

When the number of studies is random [from a Poisson(λ) distribution] the true value of Rosenthal's number is

(36)

We repeat the above procedure 10,000 times for each setting. Our alpha level is set at 5%.

This process is shown schematically in Table 1. All simulations were performed in R and the code is shown in the Supplementary Materials (see Supplementary Materials available online at http://dx.doi.org/10.1155/2014/825383).
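As a small-scale illustration of one cell of this plan (fixed k, $Z_i$ drawn from HN(0,1), distribution-based interval), the following sketch estimates the coverage probability by simulation. It assumes that the true value is the expectation k(σ² + kμ²)/Z_α² − k and that the variance is 2k²σ²(σ² + 2kμ²)/Z_α⁴, both taken from the untruncated normal approximation. These are our assumptions for illustration, and the authors' R code in the Supplementary Materials remains the reference implementation.

```python
import math
import random
from statistics import NormalDist


def simulate_coverage(k=30, reps=2000, alpha=0.05, level=0.95, seed=0):
    """Coverage of the distribution-based interval, fixed k, half normal Z_i."""
    rng = random.Random(seed)
    std = NormalDist()
    z_alpha = std.inv_cdf(1 - alpha)
    q = std.inv_cdf(1 - (1 - level) / 2)
    mu = math.sqrt(2 / math.pi)   # mean of HN(0,1)
    sigma2 = 1 - 2 / math.pi      # variance of HN(0,1)
    # Assumed true value and standard deviation (untruncated approximation).
    true_value = k * (sigma2 + k * mu ** 2) / z_alpha ** 2 - k
    sd = math.sqrt(2 * k ** 2 * sigma2 * (sigma2 + 2 * k * mu ** 2)) / z_alpha ** 2
    hits = 0
    for _ in range(reps):
        z = [abs(rng.gauss(0, 1)) for _ in range(k)]  # half normal draws
        n_hat = sum(z) ** 2 / z_alpha ** 2 - k
        if abs(n_hat - true_value) <= q * sd:
            hits += 1
    return hits / reps


coverage = simulate_coverage(k=30, reps=2000, seed=0)
print(f"estimated coverage: {coverage:.3f}")
```

With far fewer replications than the 10,000 used in the paper, the estimate carries visible Monte Carlo error, so it should be read as indicative only.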

Table 1.

Schematic table for simulation plan.

| Simulation setting | Normal approximation CI: distributional variance formula | Normal approximation CI: moments variance formula | Bootstrap confidence interval | Real value of $N_R$ |
|---|---|---|---|---|
| k = 5, 15, 30, 50 studies. Draw $Z_i$ from N(0,1), HN(0,1), SN(δ = −0.5, ξ = 0, ω = 1), SN(δ = 0.5, ξ = 0, ω = 1) | Fixed k (distributional values of μ and σ² as listed below) | Fixed | Using the $N_R^*$ we compute the $N_{R\_bootstrap}$ and, respectively, the standard error needed to compute the confidence interval | Fixed k (distributional values of μ and σ² as listed below) |
| As above | Random k (distributional values of μ and σ² as listed below; λ = 5, 15, 30, 50) | Random | Using the $N_R^*$ we compute the $N_{R\_bootstrap}$ and, respectively, the standard error needed to compute the confidence interval | Random k (distributional values of μ and σ² as listed below; λ = 5, 15, 30, 50) |

Distributional values: standard normal distribution, μ = 0, σ² = 1; half normal distribution, μ = √(2/π), σ² = 1 − 2/π; skew normal with negative skewness, μ = −0.5√(2/π), σ² = 1 − 1/(2π); skew normal with positive skewness, μ = 0.5√(2/π), σ² = 1 − 1/(2π).

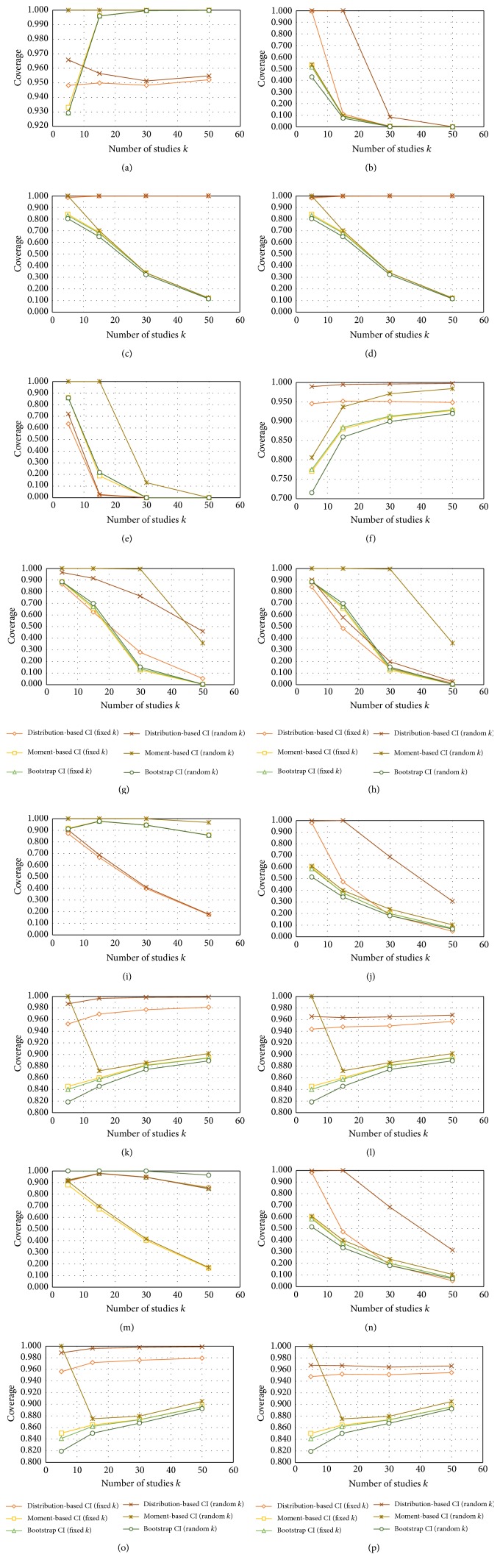

We observe from Table 2 and Figure 1 that the bootstrap confidence intervals perform the poorest, whether the number of studies is considered fixed or random. The only case in which they perform acceptably is when the distribution is half normal and the number of studies is fixed. The moment estimators of variance perform either poorly or too conservatively in all cases, with coverages being under 90% or near 100%. The most acceptable confidence intervals for Rosenthal's estimator appear to be those of the distribution-based method, and they are much better for a fixed number of studies than for a random number of studies. We also observe that, for the distribution-based confidence intervals in the fixed category, the half normal distribution HN(0,1) produces coverages that are all close to 95% (0.945 to 0.952). This is stable across all numbers of studies in a meta-analysis. When the distributional assumption is not met the coverage is poor, except for the cases of the positive and negative skewness skew normal distributions, which perform similarly, possibly due to symmetry.

Table 2.

Probability coverage of the different methods for confidence intervals (CI) according to the number of studies k. The table is organised as follows: the $Z_i$ are drawn from four different distributions (standard normal distribution, half normal distribution, skew normal with negative skewness, and skew normal with positive skewness).

Column groups give the distribution from which the values of μ and σ² are taken: N = standard normal distribution; HN = half normal distribution HN(0, 1); SN− = skew normal distribution with negative skewness SN(δ = −0.5, ξ = 0, ω = 1); SN+ = skew normal distribution with positive skewness SN(δ = 0.5, ξ = 0, ω = 1).

| Draw $Z_i$ from | Number of studies | CI method | N, k = 5 | N, k = 15 | N, k = 30 | N, k = 50 | HN, k = 5 | HN, k = 15 | HN, k = 30 | HN, k = 50 | SN−, k = 5 | SN−, k = 15 | SN−, k = 30 | SN−, k = 50 | SN+, k = 5 | SN+, k = 15 | SN+, k = 30 | SN+, k = 50 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Standard normal distribution | Fixed k | Distribution Based CI | 0.948 | 0.950 | 0.948 | 0.952 | 0.994 | 0.110 | 0.002 | 0.000 | 0.985 | 0.999 | 1.000 | 1.000 | 0.982 | 0.998 | 1.000 | 1.000 |
| Standard normal distribution | Fixed k | Moments Based CI | 0.933 | 0.996 | 1.000 | 1.000 | 0.529 | 0.088 | 0.005 | 0.000 | 0.842 | 0.686 | 0.337 | 0.120 | 0.842 | 0.686 | 0.337 | 0.120 |
| Standard normal distribution | Fixed k | Bootstrap CI | 0.929 | 0.996 | 1.000 | 1.000 | 0.514 | 0.089 | 0.005 | 0.000 | 0.830 | 0.680 | 0.337 | 0.120 | 0.830 | 0.680 | 0.337 | 0.120 |
| Standard normal distribution | Random k | Distribution Based CI | 0.966 | 0.956 | 0.951 | 0.955 | 0.999 | 1.000 | 0.084 | 0.001 | 0.998 | 1.000 | 1.000 | 1.000 | 0.990 | 0.999 | 1.000 | 1.000 |
| Standard normal distribution | Random k | Moments Based CI | 1.000 | 1.000 | 1.000 | 1.000 | 0.535 | 0.094 | 0.006 | 0.000 | 1.000 | 0.702 | 0.338 | 0.122 | 1.000 | 0.702 | 0.338 | 0.122 |
| Standard normal distribution | Random k | Bootstrap CI | 0.929 | 0.996 | 1.000 | 1.000 | 0.429 | 0.074 | 0.004 | 0.000 | 0.804 | 0.649 | 0.322 | 0.115 | 0.804 | 0.649 | 0.322 | 0.115 |
| Half normal distribution HN(0, 1) | Fixed k | Distribution Based CI | 0.635 | 0.021 | 0.000 | 0.000 | 0.945 | 0.952 | 0.951 | 0.948 | 0.864 | 0.624 | 0.279 | 0.053 | 0.841 | 0.483 | 0.142 | 0.014 |
| Half normal distribution HN(0, 1) | Fixed k | Moments Based CI | 0.861 | 0.187 | 0.000 | 0.000 | 0.771 | 0.880 | 0.911 | 0.927 | 0.885 | 0.657 | 0.126 | 0.003 | 0.885 | 0.657 | 0.126 | 0.003 |
| Half normal distribution HN(0, 1) | Fixed k | Bootstrap CI | 0.858 | 0.217 | 0.000 | 0.000 | 0.775 | 0.884 | 0.913 | 0.929 | 0.887 | 0.672 | 0.138 | 0.003 | 0.887 | 0.672 | 0.138 | 0.003 |
| Half normal distribution HN(0, 1) | Random k | Distribution Based CI | 0.720 | 0.027 | 0.000 | 0.000 | 0.989 | 0.995 | 0.996 | 0.997 | 0.966 | 0.915 | 0.762 | 0.459 | 0.901 | 0.578 | 0.198 | 0.027 |
| Half normal distribution HN(0, 1) | Random k | Moments Based CI | 1.000 | 1.000 | 0.130 | 0.000 | 0.806 | 0.937 | 0.971 | 0.984 | 1.000 | 1.000 | 0.995 | 0.358 | 1.000 | 1.000 | 0.995 | 0.358 |
| Half normal distribution HN(0, 1) | Random k | Bootstrap CI | 0.858 | 0.217 | 0.000 | 0.000 | 0.715 | 0.859 | 0.899 | 0.920 | 0.885 | 0.698 | 0.152 | 0.004 | 0.885 | 0.698 | 0.152 | 0.004 |
| Skew normal distribution with negative skewness SN(δ = −0.5, ξ = 0, ω = 1) | Fixed k | Distribution Based CI | 0.872 | 0.666 | 0.399 | 0.174 | 0.980 | 0.472 | 0.184 | 0.048 | 0.953 | 0.970 | 0.977 | 0.981 | 0.944 | 0.948 | 0.949 | 0.957 |
| Skew normal distribution with negative skewness SN(δ = −0.5, ξ = 0, ω = 1) | Fixed k | Moments Based CI | 0.917 | 0.979 | 0.944 | 0.858 | 0.597 | 0.375 | 0.200 | 0.074 | 0.845 | 0.860 | 0.882 | 0.895 | 0.845 | 0.860 | 0.882 | 0.895 |
| Skew normal distribution with negative skewness SN(δ = −0.5, ξ = 0, ω = 1) | Fixed k | Bootstrap CI | 0.912 | 0.978 | 0.945 | 0.857 | 0.586 | 0.377 | 0.199 | 0.074 | 0.840 | 0.857 | 0.881 | 0.894 | 0.840 | 0.857 | 0.881 | 0.894 |
| Skew normal distribution with negative skewness SN(δ = −0.5, ξ = 0, ω = 1) | Random k | Distribution Based CI | 0.903 | 0.688 | 0.409 | 0.178 | 0.996 | 1.000 | 0.687 | 0.306 | 0.987 | 0.997 | 0.998 | 0.999 | 0.965 | 0.964 | 0.965 | 0.968 |
| Skew normal distribution with negative skewness SN(δ = −0.5, ξ = 0, ω = 1) | Random k | Moments Based CI | 1.000 | 1.000 | 0.999 | 0.968 | 0.609 | 0.399 | 0.237 | 0.103 | 1.000 | 0.872 | 0.886 | 0.902 | 1.000 | 0.872 | 0.886 | 0.902 |
| Skew normal distribution with negative skewness SN(δ = −0.5, ξ = 0, ω = 1) | Random k | Bootstrap CI | 0.912 | 0.978 | 0.945 | 0.857 | 0.514 | 0.342 | 0.181 | 0.066 | 0.818 | 0.845 | 0.874 | 0.889 | 0.818 | 0.845 | 0.874 | 0.889 |
| Skew normal distribution with positive skewness SN(δ = 0.5, ξ = 0, ω = 1) | Fixed k | Distribution Based CI | 0.880 | 0.673 | 0.402 | 0.164 | 0.982 | 0.471 | 0.186 | 0.050 | 0.956 | 0.972 | 0.976 | 0.979 | 0.948 | 0.952 | 0.951 | 0.955 |
| Skew normal distribution with positive skewness SN(δ = 0.5, ξ = 0, ω = 1) | Fixed k | Moments Based CI | 0.923 | 0.980 | 0.947 | 0.852 | 0.596 | 0.372 | 0.201 | 0.076 | 0.850 | 0.865 | 0.874 | 0.896 | 0.850 | 0.865 | 0.874 | 0.896 |
| Skew normal distribution with positive skewness SN(δ = 0.5, ξ = 0, ω = 1) | Fixed k | Bootstrap CI | 0.918 | 0.978 | 0.946 | 0.846 | 0.583 | 0.372 | 0.200 | 0.077 | 0.841 | 0.862 | 0.873 | 0.896 | 0.841 | 0.862 | 0.873 | 0.896 |
| Skew normal distribution with positive skewness SN(δ = 0.5, ξ = 0, ω = 1) | Random k | Distribution Based CI | 0.911 | 0.696 | 0.415 | 0.169 | 0.996 | 1.000 | 0.683 | 0.314 | 0.989 | 0.996 | 0.998 | 0.999 | 0.967 | 0.967 | 0.964 | 0.966 |
| Skew normal distribution with positive skewness SN(δ = 0.5, ξ = 0, ω = 1) | Random k | Moments Based CI | 1.000 | 1.000 | 0.999 | 0.964 | 0.606 | 0.399 | 0.236 | 0.105 | 1.000 | 0.875 | 0.880 | 0.905 | 1.000 | 0.875 | 0.880 | 0.905 |
| Skew normal distribution with positive skewness SN(δ = 0.5, ξ = 0, ω = 1) | Random k | Bootstrap CI | 0.918 | 0.978 | 0.946 | 0.846 | 0.514 | 0.335 | 0.180 | 0.068 | 0.819 | 0.850 | 0.868 | 0.893 | 0.819 | 0.850 | 0.868 | 0.893 |

Figure 1.

This figure shows the probability coverage of the different methods for confidence intervals (CI) according to the number of studies $k$. The figure is organised as follows: the $Z_i$ are drawn from four different distributions (standard normal distribution, half normal distribution, skew normal with negative skewness, and skew normal with positive skewness), which are depicted in panels (a)–(d), (e)–(h), (i)–(l), and (m)–(p), respectively. The values of $\mu$ and $\sigma^2$ used for the variance correspond to the standard normal distribution (panels (a), (e), (i), and (m)), the half normal distribution ((b), (f), (j), and (n)), the skew normal with negative skewness ((c), (g), (k), and (o)), and the skew normal with positive skewness ((d), (h), (l), and (p)).

In the following sections, we give two examples and present lower confidence limits for testing whether $N_R > 5k + 10$, the rule of thumb suggested by Rosenthal [11]. For these limits we use only the variance for a fixed number of studies, with the $Z_i$ drawn from a half normal distribution HN(0, 1).

5. Examples

In this section, we present two examples of meta-analyses from the literature. The first study is a meta-analysis of the effect of probiotics for preventing antibiotic-associated diarrhoea and included 63 studies [24]. The second meta-analysis comes from the psychological literature and is a meta-analysis examining reward, cooperation, and punishment, including analysis of 148 effect sizes [25]. For each meta-analysis, we computed Rosenthal's fail-safe number and the respective confidence interval with the methods described above (Table 3).

Table 3.

Confidence intervals for the examples of meta-analyses.

| Study | Fixed number of studies: distribution based CI | Fixed number of studies: moment based CI | Random number of studies: distribution based CI | Random number of studies: moment based CI | Bootstrap based CI |
|---|---|---|---|---|---|
| Study 1 [24], Rosenthal's $N_R = 2124$ | (2060, 2188) | (788, 3460) | (2059, 2189) | (369, 3879) | (740, 3508) |
| Study 2 [25], Rosenthal's $N_R = 73860$ | (73709, 74012) | (51618, 96102) | (73707, 74013) | (40976, 106745) | (51662, 96059) |

Both fail-safe numbers exceed Rosenthal's rule of thumb. In the first example, however, the lower confidence limit falls as low as 369, which only slightly surpasses the rule of thumb (5 · 63 + 10 = 325 in this case). This is not the case in the second example. Hence the confidence interval, and especially its lower limit, is important for establishing whether the fail-safe number surpasses the rule of thumb.
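The comparison with the rule of thumb can be reproduced in a few lines of Python. This is a minimal sketch: the lower limits are copied from Table 3, $k = 63$ and $k = 148$ are taken from the study descriptions above (treating the 148 effect sizes of the second meta-analysis as $k$ is an assumption), and the helper names `rule_of_thumb` and `margins` are illustrative.

```python
# Lower confidence limits copied from Table 3, in the column order:
# fixed/distribution, fixed/moment, random/distribution, random/moment, bootstrap.
LOWER_LIMITS = {
    "Study 1 [24]": (63, [2060, 788, 2059, 369, 740]),
    "Study 2 [25]": (148, [73709, 51618, 73707, 40976, 51662]),
}


def rule_of_thumb(k: int) -> int:
    """Rosenthal's threshold 5k + 10 for k included studies."""
    return 5 * k + 10


def margins(k: int, lower_limits: list[int]) -> list[int]:
    """Distance of each lower limit above the threshold (negative if below)."""
    threshold = rule_of_thumb(k)
    return [limit - threshold for limit in lower_limits]


for label, (k, limits) in LOWER_LIMITS.items():
    smallest = min(margins(k, limits))
    print(f"{label}: threshold {rule_of_thumb(k)}, smallest margin {smallest}")
```

For the first study the smallest margin is 369 − 325 = 44, whereas every lower limit of the second study lies far above its threshold.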

In the next section, we present a table of values that researchers can consult to judge whether their fail-safe number truly exceeds the rule of thumb.

6. Suggested Confidence Limits for $N_R$

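We wish to test, at a given level of significance and using the estimator $\hat{N}_R$, whether $N_R > 5k + 10$, which is the rule of thumb suggested by Rosenthal. We formulate a hypothesis test according to which

$$H_0: N_R \le 5k + 10 \quad \text{versus} \quad H_1: N_R > 5k + 10. \tag{37}$$

An asymptotic test statistic for this is

$$T = \frac{\hat{N}_R - (5k + 10)}{\sqrt{\operatorname{Var}[\hat{N}_R]}} \xrightarrow{d} N(0, 1) \tag{38}$$

under the null hypothesis.

So we reject the null hypothesis if $T > Z_\alpha$, that is, if $\hat{N}_R > 5k + 10 + Z_\alpha \sqrt{\operatorname{Var}[\hat{N}_R]}$, where $Z_\alpha$ is the upper $\alpha$ quantile of the standard normal distribution.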

Table 4 gives the values of the estimated $N_R$ above which we are 95% confident that $N_R > 5k + 10$. For example, if a researcher performs a meta-analysis of 25 studies, the rule of thumb suggests that there is no publication bias when the fail-safe number exceeds 5 · 25 + 10 = 135 studies. The present approach and the values of Table 4 suggest that we can be 95% confident of this only when the estimated $N_R$ exceeds 209 studies. This approach therefore allows inference about Rosenthal's $N_R$ and is slightly more conservative, which is useful given that Rosenthal's fail-safe number is known to overestimate the number of studies needed to overturn a result.
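One way to approximate such cutoffs numerically is sketched below. It rests on several assumptions that are not spelled out at this point in the text: Rosenthal's estimator is taken as $\hat{N}_R = S^2/Z_\alpha^2 - k$ with $S = \sum Z_i$, the sum $S$ is treated as $N(k\mu, k\sigma^2)$ for a fixed number of studies, the $Z_i$ follow HN(0, 1) so that $\mu = \sqrt{2/\pi}$ and $\sigma^2 = 1 - 2/\pi$, the same $Z_\alpha \approx 1.645$ is used in the estimator and in the test, and results are rounded to the nearest integer. The function names are illustrative.

```python
from math import pi, sqrt
from statistics import NormalDist

# One-sided 5% critical value of the standard normal, about 1.645 (assumed level).
Z_ALPHA = NormalDist().inv_cdf(0.95)

# Mean and variance of a half normal HN(0, 1) variable.
MU = sqrt(2 / pi)
SIGMA2 = 1 - 2 / pi


def var_fail_safe_fixed_k(k: int, mu: float = MU, sigma2: float = SIGMA2) -> float:
    """Variance of S^2 / Z_alpha^2 - k when S ~ N(k*mu, k*sigma2) and k is fixed."""
    var_s_squared = 4 * k**3 * mu**2 * sigma2 + 2 * k**2 * sigma2**2
    return var_s_squared / Z_ALPHA**4


def cutoff(k: int) -> int:
    """Approximate one-sided 95% cutoff for the estimated N_R, rounded."""
    threshold = 5 * k + 10
    return round(threshold + Z_ALPHA * sqrt(var_fail_safe_fixed_k(k)))


for k in (25, 100, 160):
    print(k, cutoff(k))
```

Under these assumptions the sketch returns 209 for $k = 25$, 1096 for $k = 100$, and 1995 for $k = 160$, in agreement with Table 4. It does not reproduce the tabulated values for small $k$ (for instance, it gives 79 rather than 93 for $k = 10$), so Table 4 remains the reference.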

Table 4.

95% one-sided confidence limits above which the estimated $N_R$ is significantly higher than $5k + 10$, the rule of thumb suggested by Rosenthal [11]. $k$ represents the number of studies included in a meta-analysis. We use the variance for a fixed number of studies when the $Z_i$ are drawn from a half normal distribution HN(0, 1), as this performed best in the simulations.

| k | Cutoff point | k | Cutoff point | k | Cutoff point | k | Cutoff point |

|---|---|---|---|---|---|---|---|

| 1 | 17 | 41 | 369 | 81 | 842 | 121 | 1394 |

| 2 | 26 | 42 | 380 | 82 | 855 | 122 | 1409 |

| 3 | 35 | 43 | 390 | 83 | 868 | 123 | 1424 |

| 4 | 45 | 44 | 401 | 84 | 881 | 124 | 1438 |

| 5 | 54 | 45 | 412 | 85 | 894 | 125 | 1453 |

| 6 | 63 | 46 | 423 | 86 | 907 | 126 | 1468 |

| 7 | 71 | 47 | 434 | 87 | 920 | 127 | 1483 |

| 8 | 79 | 48 | 445 | 88 | 934 | 128 | 1498 |

| 9 | 86 | 49 | 456 | 89 | 947 | 129 | 1513 |

| 10 | 93 | 50 | 467 | 90 | 960 | 130 | 1528 |

| 11 | 99 | 51 | 479 | 91 | 973 | 131 | 1543 |

| 12 | 106 | 52 | 490 | 92 | 987 | 132 | 1558 |

| 13 | 112 | 53 | 501 | 93 | 1000 | 133 | 1573 |

| 14 | 118 | 54 | 513 | 94 | 1014 | 134 | 1588 |

| 15 | 125 | 55 | 524 | 95 | 1027 | 135 | 1603 |

| 16 | 132 | 56 | 536 | 96 | 1041 | 136 | 1619 |

| 17 | 140 | 57 | 547 | 97 | 1055 | 137 | 1634 |

| 18 | 147 | 58 | 559 | 98 | 1068 | 138 | 1649 |

| 19 | 155 | 59 | 571 | 99 | 1082 | 139 | 1664 |

| 20 | 164 | 60 | 582 | 100 | 1096 | 140 | 1680 |

| 21 | 172 | 61 | 594 | 101 | 1109 | 141 | 1695 |

| 22 | 181 | 62 | 606 | 102 | 1123 | 142 | 1711 |

| 23 | 190 | 63 | 618 | 103 | 1137 | 143 | 1726 |

| 24 | 199 | 64 | 630 | 104 | 1151 | 144 | 1742 |

| 25 | 209 | 65 | 642 | 105 | 1165 | 145 | 1757 |

| 26 | 218 | 66 | 654 | 106 | 1179 | 146 | 1773 |

| 27 | 228 | 67 | 666 | 107 | 1193 | 147 | 1788 |

| 28 | 237 | 68 | 679 | 108 | 1207 | 148 | 1804 |

| 29 | 247 | 69 | 691 | 109 | 1221 | 149 | 1820 |

| 30 | 257 | 70 | 703 | 110 | 1236 | 150 | 1835 |

| 31 | 266 | 71 | 716 | 111 | 1250 | 151 | 1851 |

| 32 | 276 | 72 | 728 | 112 | 1264 | 152 | 1867 |

| 33 | 286 | 73 | 740 | 113 | 1278 | 153 | 1883 |

| 34 | 296 | 74 | 753 | 114 | 1293 | 154 | 1899 |

| 35 | 307 | 75 | 766 | 115 | 1307 | 155 | 1915 |

| 36 | 317 | 76 | 778 | 116 | 1322 | 156 | 1931 |

| 37 | 327 | 77 | 791 | 117 | 1336 | 157 | 1947 |

| 38 | 338 | 78 | 804 | 118 | 1351 | 158 | 1963 |

| 39 | 348 | 79 | 816 | 119 | 1365 | 159 | 1979 |

| 40 | 358 | 80 | 829 | 120 | 1380 | 160 | 1995 |

7. Discussion and Conclusion

The purpose of the present paper was to assess the efficacy of confidence intervals for Rosenthal's fail-safe number. We first defined publication bias and gave an overview of the available literature on fail-safe calculations in meta-analysis. Although Rosenthal's estimator is widely used by researchers, its properties and usefulness have been questioned [48, 58].

The original contributions of the present paper are its theoretical and empirical results. First, we developed statistical theory that allows confidence intervals to be produced for Rosenthal's fail-safe number, distinguishing between a fixed and a random number of studies in the meta-analysis. Each case produces different variance estimators. For a given number of studies and a given distribution, we provided five variance estimators: moment-based and distribution-based estimators for a fixed number of studies, the same two for a random number of studies, and a bootstrap estimator. Secondly, we examined four distributions under which to simulate and test the variance estimators, namely, the standard normal distribution, the half normal distribution, a positive skew normal distribution, and a negative skew normal distribution. These four distributions were chosen as closest to the nature of the $Z_i$. The half normal distribution variance estimator appears to give the best coverage for the confidence intervals, which might support the hypothesis that the $Z_i$ are drawn from a half normal distribution. Thirdly, we provide a table of lower confidence limits for Rosenthal's estimator.

The limitations of the study stem primarily from the flaws associated with Rosenthal's estimator, which usually overestimates the number of negative studies needed to disprove the result. However, its magnitude can still give an indication of the absence of publication bias. Another possible limitation comes from the simulation design: in the present paper we tried only two parameter values for the skew normal distribution, and more could be examined.

The implications of this research for applied researchers in psychology, medicine, and the social sciences, the fields that predominantly use Rosenthal's fail-safe number, are immediate. First, Table 4 provides an accessible reference that researchers can consult to apply this more conservative rule for Rosenthal's number. Secondly, the formulas for the variance estimators are all available to researchers, so they can compute normal approximation confidence intervals on their own. A future step is to develop an R package or a Stata program that performs these computations quickly and efficiently and to make it publicly available. This will allow widespread use of these techniques.

In conclusion, the present study is the first in the literature to study the statistical properties of Rosenthal's fail-safe number. Statistical theory and simulations were presented, and tables for applied researchers were provided. Despite its limitations, Rosenthal's fail-safe number can be a trustworthy way to assess publication bias, especially when used with the more conservative limits proposed in the present paper.

Supplementary Material

The Supplementary Materials contain the simulation code used in R and the Z-values from the meta-analyses used as examples in Section 5.

Appendices

A. Proofs for Expressions (19), (20), and (22)

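(a) Fixed k. $Z_1, Z_2, \ldots, Z_i, \ldots, Z_k$ in the formula of the estimator (9) are i.i.d. with $E[Z_i] = \mu$ and $\operatorname{Var}[Z_i] = \sigma^2$. Let $S = \sum_{i=1}^{k} Z_i$; then, according to the Lindeberg-Lévy Central Limit Theorem [42], we have

$$\sqrt{k}\left(\frac{S}{k} - \mu\right) \xrightarrow{d} N(0, \sigma^2), \quad \text{so that approximately} \quad S \sim N(k\mu, k\sigma^2). \tag{A.1}$$

So we have

$$E[S] = k\mu, \qquad \operatorname{Var}[S] = k\sigma^2. \tag{A.2}$$

Then, from (6) we get

$$\operatorname{Var}[\hat{N}_R] = \operatorname{Var}\left[\frac{S^2}{Z_\alpha^2} - k\right] = \frac{\operatorname{Var}[S^2]}{Z_\alpha^4} = \frac{E[S^4] - \left(E[S^2]\right)^2}{Z_\alpha^4}. \tag{A.3}$$

Now we seek to compute $E[S^4]$ and $E[S^2]$. For this we need the moments of the normal distribution, which are given in Table 5 [59].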

Table 5.

Moments of the normal distribution with mean $\mu$ and variance $\sigma^2$.

| Order | Noncentral moment | Central moment |
|---|---|---|
| 1 | $\mu$ | 0 |
| 2 | $\mu^2 + \sigma^2$ | $\sigma^2$ |
| 3 | $\mu^3 + 3\mu\sigma^2$ | 0 |
| 4 | $\mu^4 + 6\mu^2\sigma^2 + 3\sigma^4$ | $3\sigma^4$ |
| 5 | $\mu^5 + 10\mu^3\sigma^2 + 15\mu\sigma^4$ | 0 |

So

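$$E[S^2] = k^2\mu^2 + k\sigma^2, \qquad E[S^4] = k^4\mu^4 + 6k^3\mu^2\sigma^2 + 3k^2\sigma^4, \qquad \operatorname{Var}[\hat{N}_R] = \frac{2k^2\sigma^2\left(2k\mu^2 + \sigma^2\right)}{Z_\alpha^4}. \tag{A.4}$$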
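The algebra behind the fixed-k variance can be checked numerically. The sketch below combines the second and fourth noncentral moments from Table 5 for $S \sim N(k\mu, k\sigma^2)$ and compares $E[S^4] - (E[S^2])^2$ with the simplified expression $2k^2\sigma^2(2k\mu^2 + \sigma^2)$. It is a verification aid only; the function names and the example values of $k$, $\mu$, and $\sigma^2$ are illustrative assumptions.

```python
from math import isclose


def normal_raw_moment_2(m: float, v: float) -> float:
    """Second noncentral moment of N(m, v), as listed in Table 5."""
    return m**2 + v


def normal_raw_moment_4(m: float, v: float) -> float:
    """Fourth noncentral moment of N(m, v), as listed in Table 5."""
    return m**4 + 6 * m**2 * v + 3 * v**2


def var_s_squared_from_moments(k: int, mu: float, sigma2: float) -> float:
    """Var[S^2] = E[S^4] - (E[S^2])^2 with S ~ N(k*mu, k*sigma2)."""
    m, v = k * mu, k * sigma2
    return normal_raw_moment_4(m, v) - normal_raw_moment_2(m, v) ** 2


def var_s_squared_closed_form(k: int, mu: float, sigma2: float) -> float:
    """Simplified expression 2 k^2 sigma2 (2 k mu^2 + sigma2)."""
    return 2 * k**2 * sigma2 * (2 * k * mu**2 + sigma2)


# Illustrative parameter values; the two expressions should agree for each.
for k, mu, sigma2 in [(5, 0.0, 1.0), (25, 0.8, 0.36), (100, -0.3, 2.0)]:
    lhs = var_s_squared_from_moments(k, mu, sigma2)
    rhs = var_s_squared_closed_form(k, mu, sigma2)
    print(k, mu, sigma2, lhs, rhs, isclose(lhs, rhs, rel_tol=1e-9))
```

Dividing either quantity by $Z_\alpha^4$ gives the variance of the fail-safe estimator under the normal approximation.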

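(b) Random k. In this approach, we additionally assume that $k \sim \text{Pois}(\lambda)$, so that $S$ follows a compound Poisson distribution [60]. Hence, from the law of total expectation and the law of total variance [44], we get

$$E[S] = E\big[E[S \mid k]\big] = \lambda\mu, \qquad \operatorname{Var}[S] = E\big[\operatorname{Var}[S \mid k]\big] + \operatorname{Var}\big[E[S \mid k]\big] = \lambda\left(\mu^2 + \sigma^2\right). \tag{A.5}$$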
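For a compound Poisson sum, the two laws give the standard results $E[S] = \lambda\mu$ and $\operatorname{Var}[S] = \lambda(\mu^2 + \sigma^2)$. The simulation below is a rough numerical illustration of these two moments, not part of the proof. It assumes $Z_i \sim$ HN(0, 1), for which $\mu^2 + \sigma^2 = 1$, and $\lambda = 20$; the Poisson sampler uses Knuth's multiplication method only to keep the sketch within the standard library, and all function names are illustrative.

```python
import math
import random
from statistics import fmean, pvariance


def sample_poisson(lam: float, rng: random.Random) -> int:
    """Draw one Poisson(lam) variate with Knuth's multiplication method."""
    limit = math.exp(-lam)
    count, product = 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def sample_compound_sum(lam: float, rng: random.Random) -> float:
    """Draw S = Z_1 + ... + Z_k with k ~ Pois(lam) and Z_i ~ HN(0, 1)."""
    k = sample_poisson(lam, rng)
    return sum(abs(rng.gauss(0.0, 1.0)) for _ in range(k))


def simulate(lam: float, n_draws: int, seed: int = 1) -> tuple[float, float]:
    """Return the empirical mean and variance of S over n_draws replicates."""
    rng = random.Random(seed)
    draws = [sample_compound_sum(lam, rng) for _ in range(n_draws)]
    return fmean(draws), pvariance(draws)


lam = 20.0
mu, sigma2 = math.sqrt(2 / math.pi), 1 - 2 / math.pi  # HN(0, 1) moments
mean_hat, var_hat = simulate(lam, n_draws=20_000)
print("mean:", mean_hat, "theory:", lam * mu)
print("variance:", var_hat, "theory:", lam * (mu**2 + sigma2))
```

With 20,000 replicates the empirical mean and variance should lie close to the theoretical values of about 15.96 and 20, up to Monte Carlo error.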

Thus, from (6) we get

| (A.6) |

To compute the final variance, it is more convenient to compute each component separately.

We will need the moments of a Poisson distribution [60], which are given in Table 6.

Table 6.

Moments of the Poisson distribution with parameter λ.

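| Order | Noncentral moment | Central moment |
|---|---|---|
| 1 | $\lambda$ | 0 |
| 2 | $\lambda + \lambda^2$ | $\lambda$ |
| 3 | $\lambda + 3\lambda^2 + \lambda^3$ | $\lambda$ |
| 4 | $\lambda + 7\lambda^2 + 6\lambda^3 + \lambda^4$ | $\lambda + 3\lambda^2$ |
| 5 | $\lambda + 15\lambda^2 + 25\lambda^3 + 10\lambda^4 + \lambda^5$ | $\lambda + 10\lambda^2$ |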

We then have

| (A.7) |

So

| (A.8) |

Also

| (A.9) |

Hence, we finally have

| (A.10) |

B. Proof of Expression (14): The Characteristic Function

From (13) we have that

| (B.1) |

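because $\Phi(+\infty) - \Phi\left(\frac{-\mu_1 - \lambda^*}{\sigma_1}\right) = 1 - \Phi\left(-\frac{\mu_1 + \lambda^*}{\sigma_1}\right) = \Phi\left(\frac{\mu_1 + \lambda^*}{\sigma_1}\right)$.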

C. Proof of Expressions (15) and (16)

The cumulant generating function is

| (C.1) |

For simplicity, let $\Delta = (\mu_1 + \lambda^*)/\sigma_1$. Then

| (C.2) |

with .

Then, g′(0) leads to (15).

Next

| (C.3) |

Then g′′(0) leads to (16).

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

References

- 1. Borenstein M., Hedges L. V., Higgins J. P. T., Rothstein H. R. Introduction to Meta-Analysis. 2nd ed. Chichester, UK: John Wiley & Sons; 2011.
- 2. Hedges L. V., Vevea J. L. Fixed- and random-effects models in meta-analysis. Psychological Methods. 1998;3(4):486–504. doi: 10.1037/1082-989X.3.4.486.
- 3. Whitehead A., Whitehead J. A general parametric approach to the meta-analysis of randomized clinical trials. Statistics in Medicine. 1991;10(11):1665–1677. doi: 10.1002/sim.4780101105.
- 4. Rothman K. J., Greenland S., Lash T. L. Modern Epidemiology. Philadelphia, Pa, USA: Lippincott Williams & Wilkins; 2008.
- 5. Normand S. L. T. Meta-analysis: formulating, evaluating, combining, and reporting. Statistics in Medicine. 1999;18(3):321–359. doi: 10.1002/(sici)1097-0258(19990215)18:3<321::aid-sim28>3.0.co;2-p.
- 6. Brockwell S. E., Gordon I. R. A comparison of statistical methods for meta-analysis. Statistics in Medicine. 2001;20(6):825–840. doi: 10.1002/sim.650.
- 7. Rosenthal R. Combining results of independent studies. Psychological Bulletin. 1978;85(1):185–193. doi: 10.1037/0033-2909.85.1.185.
- 8. Hedges L. V., Olkin I. Statistical Methods for Meta-Analysis. New York, NY, USA: Academic Press; 1985.
- 9. Cooper H., Hedges L. V., Valentine J. C. Handbook of Research Synthesis and Meta-Analysis. 2nd ed. New York, NY, USA: Russell Sage Foundation; 2009.
- 10. Sutton A. J., Song F., Gilbody S. M., Abrams K. R. Modelling publication bias in meta-analysis: a review. Statistical Methods in Medical Research. 2000;9(5):421–445. doi: 10.1191/096228000701555244.
- 11. Rosenthal R. The file drawer problem and tolerance for null results. Psychological Bulletin. 1979;86(3):638–641. doi: 10.1037/0033-2909.86.3.638.
- 12. Iyengar S., Greenhouse J. B. Selection models and the file drawer problem. Statistical Science. 1988;3(1):109–117.
- 13. Begg C. B., Berlin J. A. Publication bias: a problem in interpreting medical data (C/R: pp. 445-463). Journal of the Royal Statistical Society Series A: Statistics in Society. 1988;151(3):445–463.
- 14. Thornton A., Lee P. Publication bias in meta-analysis: its causes and consequences. Journal of Clinical Epidemiology. 2000;53(2):207–216. doi: 10.1016/S0895-4356(99)00161-4.
- 15. Kromrey J. D., Rendina-Gobioff G. On knowing what we do not know: an empirical comparison of methods to detect publication bias in meta-analysis. Educational and Psychological Measurement. 2006;66(3):357–373. doi: 10.1177/0013164405278585.
- 16. Rothstein H. R., Sutton A. J., Borenstein M. Publication Bias in Meta-Analysis: Prevention, Assessment and Adjustments. Chichester, UK: John Wiley & Sons; 2006.
- 17. Nakagawa S., Santos E. S. A. Methodological issues and advances in biological meta-analysis. Evolutionary Ecology. 2012;26(5):1253–1274. doi: 10.1007/s10682-012-9555-5.
- 18. Kepes S., Banks G., Oh I.-S. Avoiding bias in publication bias research: the value of “null” findings. Journal of Business and Psychology. 2014;29(2):183–203. doi: 10.1007/s10869-012-9279-0.
- 19. Ioannidis J. P. A., Trikalinos T. A. An exploratory test for an excess of significant findings. Clinical Trials. 2007;4(3):245–253. doi: 10.1177/1740774507079441.
- 20. Simonsohn U., Nelson L. D., Simmons J. P. P-curve: a key to the file-drawer. Journal of Experimental Psychology: General. 2014;143(2):534–547. doi: 10.1037/a0033242.
- 21. Ferguson C. J., Brannick M. T. Publication bias in psychological science: prevalence, methods for identifying and controlling, and implications for the use of meta-analyses. Psychological Methods. 2012;17(1):120–128. doi: 10.1037/a0024445.
- 22. Cohen J. Statistical Power Analysis for the Behavioral Sciences. Hillsdale, NJ, USA: Lawrence Erlbaum; 1988.
- 23. Becker B. J. The failsafe N or file-drawer number. In: Rothstein H. R., Sutton A. J., Borenstein M., editors. Publication Bias in Meta-Analysis: Prevention, Assessment and Adjustments. Chichester, UK: John Wiley & Sons; 2005. pp. 111–126.
- 24. Hempel S., Newberry S. J., Maher A. R., et al. Probiotics for the prevention and treatment of antibiotic-associated diarrhea: a systematic review and meta-analysis. The Journal of the American Medical Association. 2012;307(18):1959–1969. doi: 10.1001/jama.2012.3507.
- 25. Balliet D., Mulder L. B., van Lange P. A. M. Reward, punishment, and cooperation: a meta-analysis. Psychological Bulletin. 2011;137(4):594–615. doi: 10.1037/a0023489.
- 26. McDaniel M. A., Rothstein H. R., Whetzel D. L. Publication bias: a case study of four test vendors. Personnel Psychology. 2006;59(4):927–953. doi: 10.1111/j.1744-6570.2006.00059.x.
- 27. Stouffer S. A., Suchman E. A., De Vinney L. C., et al. The American Soldier: Adjustment During Army Life. Vol. 1. Oxford, UK: Princeton University Press; 1949. (Studies in social psychology in World War II).
- 28. Cooper H. M. Statistically combining independent studies: a meta-analysis of sex differences in conformity research. Journal of Personality and Social Psychology. 1979;37(1):131–146. doi: 10.1037//0022-3514.37.1.131.
- 29. Cooper H. M., Rosenthal R. Statistical versus traditional procedures for summarizing research findings. Psychological Bulletin. 1980;87(3):442–449. doi: 10.1037/0033-2909.87.3.442.
- 30. Zakzanis K. K. Statistics to tell the truth, the whole truth, and nothing but the truth: formulae, illustrative numerical examples, and heuristic interpretation of effect size analyses for neuropsychological researchers. Archives of Clinical Neuropsychology. 2001;16(7):653–667. doi: 10.1016/S0887-6177(00)00076-7.
- 31. Hsu L. M. Fail-safe Ns for one- versus two-tailed tests lead to different conclusions about publication bias. Understanding Statistics. 2002;1(2):85–100.
- 32. Becker B. J. Combining significance levels. In: Cooper H., Hedges L. V., editors. The Handbook of Research Synthesis. New York, NY, USA: Russell Sage; 1994. pp. 215–230.
- 33. Aguinis H., Pierce C. A., Bosco F. A., Dalton D. R., Dalton C. M. Debunking myths and urban legends about meta-analysis. Organizational Research Methods. 2011;14(2):306–331. doi: 10.1177/1094428110375720.
- 34. Mullen B., Muellerleile P., Bryant B. Cumulative meta-analysis: a consideration of indicators of sufficiency and stability. Personality and Social Psychology Bulletin. 2001;27(11):1450–1462. doi: 10.1177/01461672012711006.
- 35. Carson K. P., Schriesheim C. A., Kinicki A. J. The usefulness of the “fail-safe” statistic in meta-analysis. Educational and Psychological Measurement. 1990;50(2):233–243.
- 36. Duval S., Tweedie R. A nonparametric “trim and fill” method of accounting for publication bias in meta-analysis. Journal of the American Statistical Association. 2000;95(449):89–98. doi: 10.2307/2669529.
- 37. Duval S., Tweedie R. Trim and fill: a simple funnel-plot-based method of testing and adjusting for publication bias in meta-analysis. Biometrics. 2000;56(2):455–463. doi: 10.1111/j.0006-341X.2000.00455.x.
- 38. Orwin R. G. A fail-safe N for effect size in meta-analysis. Journal of Educational Statistics. 1983;8(2):157–159.
- 39. Rosenberg M. S. The file-drawer problem revisited: a general weighted method for calculating fail-safe numbers in meta-analysis. Evolution. 2005;59(2):464–468. doi: 10.1111/j.0014-3820.2005.tb01004.x.
- 40. Gleser L. J., Olkin I. Models for estimating the number of unpublished studies. Statistics in Medicine. 1996;15(23):2493–2507. doi: 10.1002/(SICI)1097-0258(19961215)15:23<2493::AID-SIM381>3.0.CO;2-C.
- 41. Eberly L. E., Casella G. Bayesian estimation of the number of unseen studies in a meta-analysis. Journal of Official Statistics. 1999;15(4):477–494.
- 42. Lindeberg J. W. Eine neue herleitung des exponentialgesetzes in der Wahrscheinlichkeitsrechnung. Mathematische Zeitschrift. 1922;15(1):211–225. doi: 10.1007/BF01494395.
- 43. Johnson N. L., Kotz S., Balakrishnan N. Continuous Univariate Distributions. 2nd ed. Vol. 2. New York, NY, USA: John Wiley & Sons; 1994.
- 44. Casella G., Berger R. L. Statistical Inference. 2nd ed. Pacific Grove, Calif, USA: Duxbury/Thomson Learning; 2002.
- 45. Elandt R. C. The folded normal distribution: two methods of estimating parameters from moments. Technometrics. 1961;3(4):551–562.
- 46. Leone F. C., Nelson L. S., Nottingham R. B. The folded normal distribution. Technometrics. 1961;3(4):543–550.
- 47. Mavridis D., Sutton A., Cipriani A., Salanti G. A fully Bayesian application of the Copas selection model for publication bias extended to network meta-analysis. Statistics in Medicine. 2013;32(1):51–66. doi: 10.1002/sim.5494.
- 48. Schonemann P. H., Scargle J. D. A generalized publication bias model. Chinese Journal of Psychology. 2008;50(1):21–29.
- 49. Azzalini A. A class of distributions which includes the normal ones. Scandinavian Journal of Statistics. 1985;12(2):171–178.
- 50. Lin T. I., Lee J. C., Yen S. Y. Finite mixture modelling using the skew normal distribution. Statistica Sinica. 2007;17(3):909–927.
- 51. Arnold B. C., Beaver R. J., Groeneveld R. A., Meeker W. Q. The nontruncated marginal of a truncated bivariate normal distribution. Psychometrika. 1993;58(3):471–488. doi: 10.1007/BF02294652.
- 52. Frangos C. C. An updated bibliography on the jackknife method. Communications in Statistics - Theory and Methods. 1987;16(6):1543–1584. doi: 10.1080/03610928708829454.
- 53. Tsagris M., Elmatzoglou I., Frangos C. C. The assessment of performance of correlation estimates in discrete bivariate distributions using bootstrap methodology. Communications in Statistics - Theory and Methods. 2012;41(1):138–152. doi: 10.1080/03610926.2010.521281.
- 54. Efron B. Bootstrap methods: another look at the jackknife. The Annals of Statistics. 1979;7(1):1–26. doi: 10.1214/aos/1176344552.
- 55. Efron B., Tibshirani R. J. An Introduction to the Bootstrap. Boca Raton, Fla, USA: Chapman & Hall; 1993.
- 56. Davison A. C., Hinkley D. V. Bootstrap Methods and Their Application. New York, NY, USA: Cambridge University Press; 1997.
- 57. Carpenter J., Bithell J. Bootstrap confidence intervals: when, which, what? A practical guide for medical statisticians. Statistics in Medicine. 2000;19(9):1141–1164. doi: 10.1002/(sici)1097-0258(20000515)19:9<1141::aid-sim479>3.0.co;2-f.
- 58. Scargle J. D. Publication bias: the “File-Drawer” problem in scientific inference. Journal of Scientific Exploration. 2000;14(1):91–106.
- 59. Patel J. K., Read C. B. In: Handbook of the Normal Distribution. 2nd ed. Owen D. B., Schucany W. R., editors. New York, NY, USA: Marcel Dekker; 1982. (Statistics: Textbooks and Monographs).
- 60. Haight F. A. Handbook of the Poisson Distribution. New York, NY, USA: Wiley; 1967.
