Abstract

Converging evidence suggests that the fusiform gyrus is involved in the processing of both faces and words. We used fMRI to investigate the extent to which words and faces share a common neural representation in this region of the brain. In Experiment 1, a univariate analysis revealed regions in the fusiform gyrus that were selective only for faces and other regions that were selective only for words. However, we also found regions that showed both word-selective and face-selective responses, particularly in the left hemisphere. We then used a multivariate analysis to measure the pattern of response to faces and words. Despite the overlap in regional responses, we found distinct patterns of response to faces and words in both the left and right fusiform gyrus. In Experiment 2, fMR adaptation was used to determine whether information about familiar faces and names is integrated in the fusiform gyrus. Distinct regions of the fusiform gyrus showed adaptation to either familiar faces or familiar names. However, there was no adaptation to sequences of faces and names with the same identity. Taken together, these results provide evidence for distinct, but overlapping, neural representations for words and faces in the fusiform gyrus.

Keywords: face, FFA, name, VWFA, word

Introduction

The ability to recognize written words is a relatively recent development in human evolution. As a result, the neural processes involved in reading are unlikely to be facilitated by innate neural mechanisms dedicated to written word processing, but instead involve preexisting neural mechanisms that can be adapted to the demands of reading (Dehaene and Cohen 2007, 2011). In contrast to words, neural processing of faces is often thought to be highly conserved during evolution and to involve specialized neural circuits (Polk et al. 2007; Zhu et al. 2010). Although previous studies have reported selective responses to both printed words and faces in what appear to be similar regions of occipito-temporal cortex (Kanwisher et al. 1997; Cohen et al. 2000), it remains unclear to what extent the neural processing of words is spatially and functionally distinct from that of faces.

Two opposing accounts have been offered to explain how the occipital–temporal cortex is functionally optimized to process different types of visual information, such as words and faces (Behrmann and Plaut 2013). The domain-specific approach proposes that discrete cortical regions process specific categories of visual information (Kanwisher 2010). An alternative perspective, the domain-general approach, proposes a distributed and overlapping representation of visual information along the occipital–temporal lobe (Haxby et al. 2001).

Support for a domain-specific representation has often been considered to come from neuropsychological studies. For example, selective lesions to the fusiform gyrus can result in severe deficits in face recognition (prosopagnosia) but leave printed word recognition relatively intact, whereas other lesions result in severe deficits to word recognition (pure alexia) but leave face recognition relatively intact [Farah 1991; Behrmann et al. 1992; for reviews, see Barton (2011) and Susilo and Duchaine (2013)]. Further support for domain specificity is found in fMRI studies that have demonstrated that a discrete neural region along the fusiform gyrus (fusiform face area, FFA) responds selectively to faces compared with non-face objects (Puce et al. 1995; Kanwisher et al. 1997), whereas another region in the fusiform gyrus (visual word form area, VWFA) responds more to visually presented words compared with a range of control stimuli (Cohen et al. 2000; Cohen and Dehaene 2004; Baker et al. 2007).

However, other evidence supports a domain-general neural organization. For example, individuals with prosopagnosia after damage to the fusiform gyrus are not only impaired at face recognition, but can also show some degree of impairment in recognizing words (Behrmann and Plaut 2014). Similarly, individuals with alexia after lesions to the VWFA region are not only impaired at word recognition, but can also be impaired at recognizing numbers (Starrfelt and Behrmann 2014), objects (Behrmann et al. 1998), and even faces (Behrmann and Plaut 2014; Roberts et al. 2012). fMRI studies using multivariate analysis methods also provide some support for domain-general processing by showing that overlapping patterns of response across the entire ventral stream may be important for discriminating between object categories (Haxby et al. 2001). The potential importance of the whole pattern of neural response is demonstrated by the fact that particular object categories can still be discriminated when the most category-selective regions are removed from the analysis.

Taken together, the existing literature is therefore unclear as to whether the neural representation of words is spatially and functionally distinct from the processing of other visual categories. To address this issue, we directly compared the neural responses to words and faces in the fusiform gyrus. In Experiment 1, we compared the fMRI responses to words and faces with the responses to a range of control stimuli. Using a univariate analysis, we determined whether face-selective and word-selective responses overlapped within the fusiform gyrus. A domain-specific organization predicts that there should be no overlap between the responses to words and faces. In contrast, a domain-general perspective predicts overlapping representations that reflect some form of shared neural processing. We then complemented this univariate approach with a multivariate pattern analysis (MVPA) to determine whether the patterns of response to faces and words were distinct within the fusiform gyrus. In Experiment 2, we used an fMR-adaptation paradigm to determine whether information about words and faces is functionally integrated in this region of the brain. We first compared the responses to blocks of same versus different faces, and to blocks of same versus different names, to find regions showing adaptation to each stimulus type. We then compared the response to blocks of interleaved familiar faces and names with the same identity with the response to blocks of familiar faces and names with different identities. Our prediction was that if information about words and faces is integrated in the fusiform gyrus, then we should observe adaptation when the faces and names have the same identity.

Methods

Participants

Twenty participants (9 females; mean age, 24) took part in both Experiment 1 and Experiment 2. All participants were right-handed and had normal or corrected-to-normal vision. All participants provided written informed consent. The study was approved by the YNiC Ethics Committee at the University of York.

Experiment 1

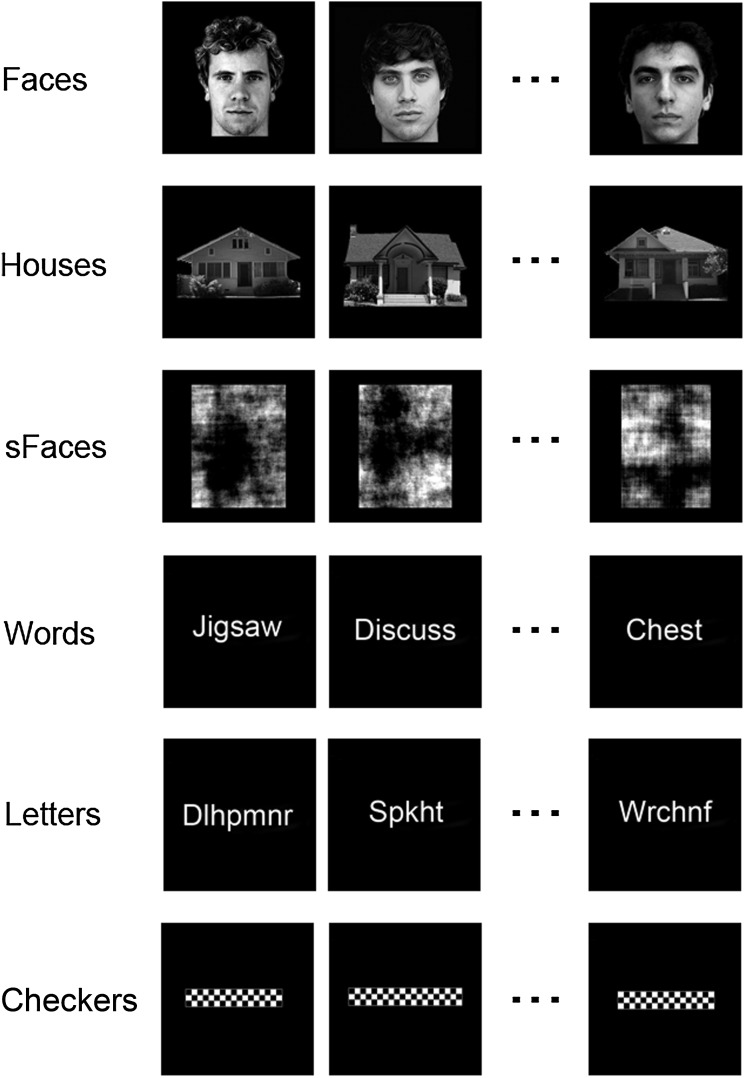

Participants viewed blocks of images in the following 6 conditions: (1) “faces,” (2) “houses,” (3) “scrambled faces,” (4) “words,” (5) “letter-strings,” and (6) “checkerboards” (Fig. 1). Face images were selected from the Psychological Image Collection at Stirling University (http://pics.psych.stir.ac.uk) and other internet sources. Faces displayed neutral expressions, were forward facing, and were counterbalanced for gender. Faces were approximately 7 × 10° in size. Images of houses were taken from a variety of internet sources and were approximately 13 × 7° in size. Scrambled faces were phase-scrambled versions of the face images. Words were selected from 4 categories: animals, body parts, objects, and verbs. Words varied in length: short words (3–5 letters, ∼6°), medium words (6–8 letters, ∼11°), and long words (8+ letters, ≥12°). To generate letter-strings, the vowels were removed from the original words and replaced with consonants. Words and letter-strings were presented in Arial font at size 40 (height ∼3.5°). Checkerboards were generated to match the visual extent of the words. Images were back-projected onto a screen within the bore of the scanner, 57 cm from the participants' eyes.

Figure 1.

Examples of the stimuli from each of the experimental conditions in Experiment 1.

Images were presented in a block design with 9 images per block. Each image was presented for 800 ms with a 200-ms black screen interstimulus interval. Each stimulus block was separated by a 9-s period in which a white fixation cross was displayed on a black background. Each condition was repeated 5 times, giving a total of 30 blocks. To ensure that participants maintained attention throughout the experiment, they had to detect a red dot superimposed on the faces, houses, scrambled faces, and checkerboards, or a red letter in the words and letter-strings. No significant differences in red dot detection were evident across experimental conditions (accuracy: 98%, F(1,19) = 2.77, P = 0.11; response time (RT): 606 ms, F(1,19) = 0.10).
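The block timing above determines the length of each run. The following minimal Python sketch works through that arithmetic; it assumes that every stimulus block is followed by exactly one 9-s fixation period and that the repetition time is the 3 s given under Imaging Parameters, and it ignores any extra fixation at the start or end of the run that is not described here. The function name `run_timing` is hypothetical.

```python
# Minimal sketch of the block-design timing arithmetic (illustrative only).
# Assumptions: one fixation period follows every stimulus block; TR = 3 s.

def run_timing(images_per_block, repeats, n_conditions=6,
               stim_ms=800, isi_ms=200, fixation_s=9.0, tr_s=3.0):
    """Return (block duration in s, number of blocks, run duration in s, volumes)."""
    block_s = images_per_block * (stim_ms + isi_ms) / 1000.0
    n_blocks = n_conditions * repeats
    run_s = n_blocks * (block_s + fixation_s)
    return block_s, n_blocks, run_s, run_s / tr_s


# Experiment 1: 9 images per block, 6 conditions x 5 repeats.
print(run_timing(images_per_block=9, repeats=5))  # (9.0, 30, 540.0, 180.0)
```

Under the same assumptions, `run_timing(images_per_block=6, repeats=8)` describes Experiment 2: 6-s blocks, 48 blocks, and a 720-s run.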

Experiment 2

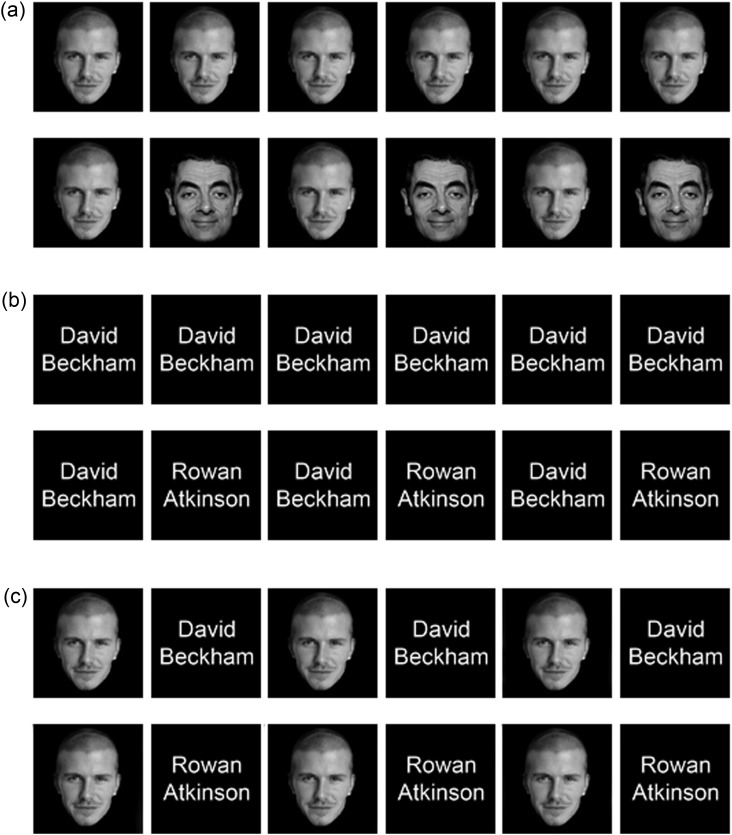

There were 6 conditions in Experiment 2: (1) “face same,” (2) “face different,” (3) “name same,” (4) “name different,” (5) “face + name same,” and (6) “face + name different” (Fig. 2). Face images were grayscale images of 8 famous male identities (Rowan Atkinson, David Beckham, Tom Cruise, Robert DeNiro, Tom Hanks, Gary Lineker, Brad Pitt, and Bruce Willis). Faces were approximately 8 × 12° in size. The name stimuli were the written names of the 8 selected celebrities presented in Arial font (∼13 × 6°). All participants were tested to confirm that they were very familiar with the identities used in this study.

Figure 2.

Examples of stimuli from the experimental conditions in Experiment 2: (a) same-face and different-face conditions, (b) same-name and different-name conditions, and (c) same-identity face + name and different-identity face + name conditions.

Images were presented in a block design with 6 images per block. In the “same” conditions, images within a block represented the same identity. In the “different” conditions, images from 2 different identities alternated within a block. The “face + name” conditions involved interleaved presentations of faces and names. Within a block, images were presented for 800 ms and separated by a black screen presented for 200 ms. Stimulus blocks were separated by a 9-s fixation cross. Each condition was repeated 8 times in a counterbalanced order, giving a total of 48 blocks. The participants' task was to detect a red dot or red letter superimposed on one of the images in the block. No significant differences in red dot detection were evident across experimental conditions (accuracy: 97%, F(1,19) = 0.35; RT: 619 ms, F(1,19) = 1.99, P = 0.17).
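The structure of the 6 block types can be sketched as repeating patterns of (stimulus type, identity) pairs. This is a minimal illustration only: it assumes that “different” blocks strictly alternate two identities starting with the first, that “face + name” blocks start with a face, and that the different-identity face + name block pairs one identity's face with another identity's name throughout. These orderings, and the names `PATTERNS` and `make_block`, are hypothetical rather than the study's stimulus code, and the sketch tracks identity only, not which photograph was shown.

```python
from itertools import cycle, islice

IMAGES_PER_BLOCK = 6

# Hypothetical encoding: each condition is a short (stimulus type, identity)
# pattern that is repeated until the block holds IMAGES_PER_BLOCK items.
PATTERNS = {
    "face same": lambda a, b: [("face", a)],
    "face different": lambda a, b: [("face", a), ("face", b)],
    "name same": lambda a, b: [("name", a)],
    "name different": lambda a, b: [("name", a), ("name", b)],
    "face + name same": lambda a, b: [("face", a), ("name", a)],
    "face + name different": lambda a, b: [("face", a), ("name", b)],
}


def make_block(condition, identity_a, identity_b):
    """Return the stimulus sequence for one block by cycling the condition's pattern."""
    pattern = PATTERNS[condition](identity_a, identity_b)
    return list(islice(cycle(pattern), IMAGES_PER_BLOCK))


block = make_block("face + name different", "David Beckham", "Rowan Atkinson")
print(block[:2])  # [('face', 'David Beckham'), ('name', 'Rowan Atkinson')]
```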

Imaging Parameters

All imaging experiments were performed using a GE 3-Tesla HD Excite MRI scanner at York Neuroimaging Centre at the University of York. A Magnex head-dedicated gradient insert coil was used in conjunction with a birdcage radiofrequency coil tuned to 127.4 MHz. A gradient-echo echo-planar imaging (EPI) sequence was used to collect data from 38 contiguous axial slices (repetition time = 3 s, echo time = 25 ms, field of view = 28 × 28 cm, matrix size = 128 × 128, slice thickness = 4 mm). These images were coregistered onto a T1-weighted anatomical image (1 × 1 × 1 mm) from each participant. To improve registration, an additional T1-weighted image was acquired in the same plane as the EPI slices.

fMRI Analysis

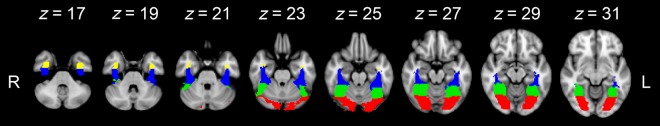

Univariate analysis of the fMRI data was performed with FEAT v5.98 (http://www.fmrib.ox.ac.uk/fsl). In both experimental scans, the initial 9 s of data were removed to reduce the effects of magnetic saturation. Motion correction (MCFLIRT, FSL) was applied, followed by temporal high-pass filtering (Gaussian-weighted least-squares straight line fitting, sigma = 50 s). Spatial smoothing was applied with a Gaussian kernel of 6 mm full width at half maximum (FWHM). Individual participant data were entered into a higher-level group analysis using a mixed-effects design (FLAME, http://www.fmrib.ox.ac.uk/fsl). Functional data were first registered to a high-resolution T1-weighted anatomical image and then onto the standard MNI brain (ICBM152). In both experiments, we were interested in characterizing the neural response to faces and words/names along the fusiform gyrus. To achieve this, we generated a region of interest mask of the fusiform gyrus at the group level using the Harvard Oxford Atlas. To ensure that the mask encompassed the full anatomical extent of the fusiform gyrus, 4 regions were selected from the atlas: (1) occipital fusiform, (2) occipital temporal fusiform, (3) posterior fusiform, and (4) anterior fusiform. These 4 masks were combined into a single anatomical fusiform mask, which was used for subsequent analysis (Fig. 3).
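The preprocessing parameters above imply a few conversions that are easy to check. The following minimal Python sketch assumes only the values reported here and the 3-s repetition time given under Imaging Parameters. It computes the number of volumes covered by the 9-s discard, the standard deviation of the 6-mm FWHM smoothing kernel (sigma = FWHM / (2√(2 ln 2))), and the 50-s high-pass sigma expressed in volumes. It is illustrative arithmetic, not FEAT's internal code, and the function names are hypothetical.

```python
import math

TR_S = 3.0            # repetition time (Imaging Parameters)
DISCARD_S = 9.0       # initial data removed to reduce saturation effects
SMOOTH_FWHM_MM = 6.0  # Gaussian smoothing kernel, full width at half maximum
HP_SIGMA_S = 50.0     # high-pass filter sigma, as reported


def volumes_to_discard(discard_s, tr_s):
    """Number of whole volumes spanned by the discarded initial period."""
    return int(round(discard_s / tr_s))


def fwhm_to_sigma(fwhm):
    """Standard deviation of a Gaussian with the given FWHM."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def sigma_seconds_to_volumes(sigma_s, tr_s):
    """Express a temporal filter sigma in volumes instead of seconds."""
    return sigma_s / tr_s


print(volumes_to_discard(DISCARD_S, TR_S))                   # 3
print(round(fwhm_to_sigma(SMOOTH_FWHM_MM), 3))               # 2.548
print(round(sigma_seconds_to_volumes(HP_SIGMA_S, TR_S), 2))  # 16.67
```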

Figure 3.

Fusiform gyrus mask. The analysis was restricted to a bilateral fusiform mask generated by combining the following masks from the Harvard Oxford Atlas in MNI space: (1) occipital fusiform (red), (2) occipital temporal fusiform (green), (3) posterior fusiform (blue), and (4) anterior fusiform (yellow). This mask was back-transformed into each participant's EPI space, and analyses were performed in the individual's EPI space.
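Combining the 4 atlas regions amounts to a voxelwise union. The sketch below assumes the Harvard Oxford regions are available as probabilistic maps (values in percent) on a common grid and binarizes them with a hypothetical threshold, since no atlas threshold is reported; the toy 5-voxel maps are made up, and the back-transformation into each participant's EPI space is a separate registration step that is not shown.

```python
import numpy as np

PROB_THRESHOLD = 25.0  # hypothetical; no atlas threshold is reported in the text


def combine_fusiform_masks(prob_maps, threshold=PROB_THRESHOLD):
    """Binarize each probabilistic atlas map and return their voxelwise union."""
    binary = [np.asarray(p) >= threshold for p in prob_maps.values()]
    return np.logical_or.reduce(binary)


# Toy 5-voxel "atlas" maps (probabilities in percent), made up for illustration.
prob_maps = {
    "occipital fusiform": np.array([80, 10, 0, 0, 0]),
    "occipital temporal fusiform": np.array([30, 60, 5, 0, 0]),
    "posterior fusiform": np.array([0, 20, 70, 10, 0]),
    "anterior fusiform": np.array([0, 0, 10, 40, 5]),
}
mask = combine_fusiform_masks(prob_maps)
print(mask.tolist())  # [True, True, True, True, False]
```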

In Experiment 1, we first used a univariate approach to determine whether the neural responses to faces and words in the right and left fusiform gyrus were spatially distinct, that is, whether voxels within the fusiform gyrus showed overlapping responses to faces and words or whether the responses were spatially segregated. Statistical maps of face-selective voxels were generated at the individual level using the following contrasts: “faces > houses” and “faces > scrambled faces.” Houses were used as a control stimulus because they form a different object category whose exemplars vary at the subordinate level. Scrambled faces were used as a control stimulus because they share many of the low-level properties of faces, but are not perceived as faces. Statistical maps of word-selective voxels were generated at the individual level using the following contrasts: “words > letter-strings” and “words > checkerboards.” Letter-strings were used as a control stimulus because they contain letters, but do not form words. Checkerboards were used as a control stimulus because they control for low-level activation of the visual field.
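Each contrast above can be written as a weight vector over the condition regressors; for a given voxel, the contrast of parameter estimates is the dot product of that vector with the voxel's parameter estimates. The sketch below assumes a model with one regressor per condition in the order listed and uses made-up parameter estimates. FEAT additionally divides each contrast by its standard error to obtain t and Z statistics, which is not reproduced here.

```python
import numpy as np

# Assumed regressor order: one regressor per Experiment 1 condition.
CONDITIONS = ["faces", "houses", "scrambled faces",
              "words", "letter-strings", "checkerboards"]


def contrast(positive, negative):
    """Weight vector for a 'positive > negative' contrast."""
    c = np.zeros(len(CONDITIONS))
    c[CONDITIONS.index(positive)] = 1.0
    c[CONDITIONS.index(negative)] = -1.0
    return c


CONTRASTS = {
    "faces > houses": contrast("faces", "houses"),
    "faces > scrambled faces": contrast("faces", "scrambled faces"),
    "words > letter-strings": contrast("words", "letter-strings"),
    "words > checkerboards": contrast("words", "checkerboards"),
}

# Made-up parameter estimates: rows are voxels, columns follow CONDITIONS.
betas = np.array([
    [2.0, 0.5, 0.4, 0.6, 0.5, 0.2],  # face-preferring voxel
    [0.5, 0.4, 0.3, 1.8, 0.9, 0.3],  # word-preferring voxel
    [1.5, 0.3, 0.2, 1.4, 0.6, 0.2],  # voxel responding to both
])
copes = {name: betas @ c for name, c in CONTRASTS.items()}
print(np.round(copes["faces > houses"], 2).tolist())  # [1.5, 0.1, 1.2]
```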

Individual statistical maps were entered into a higher-level group analysis. To quantify the differences between these statistical maps, each map was thresholded at Z > 2.3. The 2 face-selective statistical maps were then combined to produce a face-selective statistical map, and the 2 word-selective statistical maps were combined to produce a word-selective statistical map. These maps were compared to calculate the number of voxels within the right and left fusiform gyrus that showed a face-selective response, a word-selective response, or both a face-selective and a word-selective response.
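The thresholding and overlap count described above can be sketched as follows. This is a minimal illustration that assumes “combined” means a voxelwise union of the 2 thresholded maps for each category, and that percentages are taken relative to all voxels in one hemisphere's fusiform mask; the function name and toy Z values are hypothetical.

```python
import numpy as np

Z_THRESHOLD = 2.3


def selectivity_overlap(z_maps, mask, threshold=Z_THRESHOLD):
    """Percentage of mask voxels that are face-only, word-only, or both.

    Assumes the two contrasts for each category are combined by union.
    """
    mask = np.asarray(mask, dtype=bool)
    sig = {name: (np.asarray(z) > threshold) & mask for name, z in z_maps.items()}
    face = sig["faces > houses"] | sig["faces > scrambled faces"]
    word = sig["words > letter-strings"] | sig["words > checkerboards"]
    n_mask = np.count_nonzero(mask)
    return {
        "face only": 100.0 * np.count_nonzero(face & ~word) / n_mask,
        "word only": 100.0 * np.count_nonzero(word & ~face) / n_mask,
        "both": 100.0 * np.count_nonzero(face & word) / n_mask,
    }


# Toy Z values for 10 voxels inside one hemisphere's fusiform mask.
z_maps = {
    "faces > houses":          [3.0, 2.5, 0.0, 0.0, 3.1, 0.0, 2.35, 0.0, 0.0, 0.0],
    "faces > scrambled faces": [0.0, 0.0, 2.4, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    "words > letter-strings":  [0.0, 0.0, 0.0, 2.8, 2.6, 0.0, 0.0, 0.0, 0.0, 0.0],
    "words > checkerboards":   [0.0, 0.0, 2.9, 0.0, 0.0, 2.31, 0.0, 0.0, 0.0, 0.0],
}
result = selectivity_overlap(z_maps, mask=np.ones(10, dtype=bool))
print(result)  # {'face only': 30.0, 'word only': 20.0, 'both': 20.0}
```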

Next, we used multivariate pattern analysis (MVPA) to investigate whether the patterns of neural response to faces and words were functionally distinct. MVPA was performed at the individual level and restricted to the fusiform mask. The parameter estimates for words and faces from the univariate analysis were normalized by subtracting the mean response across all conditions. Pattern analyses were then performed using the PyMVPA toolbox (http://www.pymvpa.org/; Hanke et al. 2009). To determine the reliability of the data within individual participants, the parameter estimates for faces and words were correlated between odd (1, 3) and even (2, 4) blocks across all voxels in the fusiform gyrus mask (Haxby et al. 2001). This allowed us to determine whether the correlations between the patterns of response to faces in odd and even blocks, or between the patterns of response to words in odd and even blocks (within-category correlations), were higher than those between the patterns of response to faces and words across odd and even blocks (between-category correlations). Fisher's z-transformation was applied to the within-category and between-category correlations prior to further statistical analyses.
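The split-half correlation procedure (Haxby et al. 2001) described above can be sketched in a few lines of NumPy. This is not PyMVPA code: the function names are hypothetical, and averaging the 2 between-category pairings (odd faces with even words, odd words with even faces) is an assumption about how a single between-category value is formed. The synthetic data at the end only illustrate the expected ordering, within-category greater than between-category, when category patterns are reliable across halves.

```python
import numpy as np


def normalize(betas):
    """Subtract each voxel's mean response across all conditions (one data half)."""
    mean = np.mean(np.vstack(list(betas.values())), axis=0)
    return {cond: np.asarray(b, dtype=float) - mean for cond, b in betas.items()}


def fisher_r(a, b):
    """Pearson correlation between two voxel patterns, Fisher z-transformed."""
    return float(np.arctanh(np.corrcoef(a, b)[0, 1]))


def split_half_similarity(odd, even):
    """Within- and between-category correlations between odd and even blocks.

    odd, even: dicts mapping condition name -> 1-D array of parameter
    estimates (one value per voxel in the mask).
    """
    o, e = normalize(odd), normalize(even)
    return {
        "within faces": fisher_r(o["faces"], e["faces"]),
        "within words": fisher_r(o["words"], e["words"]),
        # Assumption: the two between-category pairings are averaged.
        "between": 0.5 * (fisher_r(o["faces"], e["words"])
                          + fisher_r(o["words"], e["faces"])),
    }


# Synthetic example: stable face and word patterns plus noise in each half.
rng = np.random.default_rng(0)
n_vox = 500
face_pattern, word_pattern = rng.normal(size=(2, n_vox))
conditions = ["faces", "houses", "scrambled faces",
              "words", "letter-strings", "checkerboards"]


def simulate_half():
    half = {c: rng.normal(size=n_vox) for c in conditions}
    half["faces"] = face_pattern + 0.5 * rng.normal(size=n_vox)
    half["words"] = word_pattern + 0.5 * rng.normal(size=n_vox)
    return half


result = split_half_similarity(simulate_half(), simulate_half())
```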

In Experiment 2, an fMR-adaptation paradigm was used to determine the selectivity of responses to faces and names within the fusiform gyrus (Grill-Spector et al. 1999; Grill-Spector and Malach 2001). We used the following contrasts: (1) “different-face > same-face,” (2) “different-name > same-name,” and (3) “different-face + name > same-face + name.” In this way, we could investigate whether voxels within the fusiform gyrus demonstrated adaptation to: (1) faces, (2) names, or (3) faces + names. Statistical maps were thresholded at Z > 2.3.
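Each adaptation contrast compares a “different” condition with the corresponding “same” condition, so a positive value means a larger response when the stimulus changes, that is, adaptation to repetition. The sketch below assumes one regressor per Experiment 2 condition in the order listed in the Methods and uses made-up parameter estimates for a single voxel; in the actual analysis a voxel would additionally need Z > 2.3 for the contrast, which requires the variance estimates that FEAT computes.

```python
import numpy as np

# Assumed regressor order: one regressor per Experiment 2 condition.
CONDITIONS_EXP2 = ["face same", "face different", "name same", "name different",
                   "face + name same", "face + name different"]


def different_minus_same(stimulus):
    """Weight vector for the 'different > same' contrast of one stimulus type."""
    c = np.zeros(len(CONDITIONS_EXP2))
    c[CONDITIONS_EXP2.index(f"{stimulus} different")] = 1.0
    c[CONDITIONS_EXP2.index(f"{stimulus} same")] = -1.0
    return c


ADAPTATION_CONTRASTS = {s: different_minus_same(s)
                        for s in ("face", "name", "face + name")}

# Made-up parameter estimates for one voxel, in CONDITIONS_EXP2 order.
betas = np.array([0.6, 1.1, 0.5, 0.55, 0.8, 0.8])
effects = {s: float(betas @ c) for s, c in ADAPTATION_CONTRASTS.items()}
print({s: round(v, 2) for s, v in effects.items()})
# {'face': 0.5, 'name': 0.05, 'face + name': 0.0}
```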

Results

Experiment 1

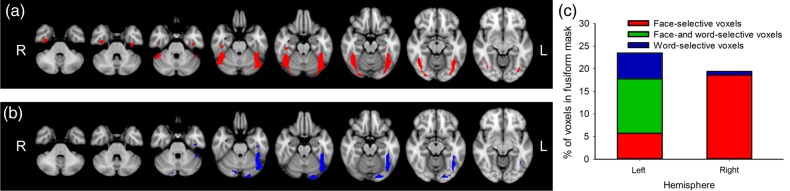

In Experiment 1, we first used a univariate approach to characterize the pattern of response in the left and right fusiform gyrus to faces and words. Face-selective voxels were defined by combining statistically significant voxels from the following contrasts: faces > houses, faces > scrambled faces. Word-selective voxels were defined by combining statistically significant voxels from the following contrasts: words > letter-strings, words > checkerboards. Figure 4a,b shows face-selective and word-selective voxels within the fusiform gyrus. Face-selective voxels were found within both the left and the right fusiform gyrus, whereas word-selective voxels were found almost exclusively in the left fusiform gyrus. Next, we quantified the extent to which the neural responses to faces and words were distinct. Figure 4c shows the percentage of voxels in the right and left fusiform gyrus that responded selectively to faces, to words, or to both faces and words. In the left fusiform gyrus, similar numbers of voxels responded selectively to only faces (5.8%) or only words (5.7%). However, most active voxels within the left fusiform gyrus responded to both faces and words (12.0%). This shows a predominantly overlapping representation of faces and words in the left fusiform gyrus. In contrast, the right fusiform gyrus contained more face-selective voxels (18.6%) than word-selective voxels (0.8%). Moreover, no voxels in the right fusiform gyrus showed a significant response to both faces and words. Supplementary Table 1 summarizes the number of significant and overlapping voxels at different threshold levels. Supplementary Figure 1 shows the proportion of significant voxels in different anatomical regions of the fusiform gyrus (Fig. 3), indicating that most significant voxels were found in the posterior regions of the fusiform gyrus.

Figure 4.

Face-selective and word-selective voxels in the fusiform gyrus. (a) Voxels within the fusiform gyrus that responded selectively to faces are shown in red. (b) Voxels within the fusiform gyrus that responded selectively to words are shown in blue. (c) Percentage of voxels within the fusiform gyrus that responded selectively to faces (red), selectively to words (blue), and to both faces and words (green). This shows predominantly overlapping responses to faces and words in the left fusiform gyrus. However, in the right fusiform gyrus, the response is predominantly to faces. Statistical images were thresholded at Z > 2.3.

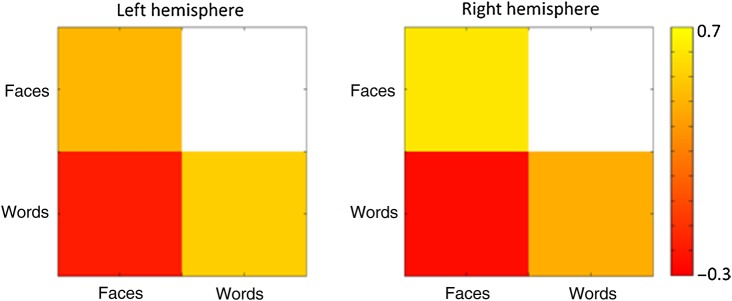

Next, we used MVPA to ask whether the patterns of response to faces and words were distinct. Correlation-based MVPA methods (Haxby et al. 2001) were used to compare the patterns of neural response to words and faces in the left and right fusiform gyrus. Figure 5 shows the similarity in the pattern of response to words and faces in the fusiform gyrus. The within-category correlation for faces was greater than the between-category correlation between faces and words in both the left (t(19) = 5.8, P < 0.0001) and right (t(19) = 6.9, P < 0.0001) hemisphere. Similarly, the within-category correlation for words was greater than the between-category correlation between faces and words in both the left (t(19) = 6.3, P < 0.0001) and right (t(19) = 4.8, P < 0.0001) hemisphere. Supplementary Figure 2 shows this analysis for each subregion of the fusiform gyrus, indicating that distinct patterns of response were evident in the more posterior regions of the fusiform gyrus.
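The comparisons above are paired t-tests across the 20 participants, hence 19 degrees of freedom. A minimal sketch of the statistic applied to Fisher-transformed correlations follows; the function name and toy values are hypothetical, and `scipy.stats.ttest_rel` would give the same t value together with a P value.

```python
import math

import numpy as np


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for matched samples x and y."""
    d = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    n = d.size
    t = d.mean() / (d.std(ddof=1) / math.sqrt(n))
    return float(t), n - 1


# Toy Fisher-z values for 3 participants (made up); 20 participants give t(19).
within_z = [3.0, 4.0, 5.0]
between_z = [2.0, 2.0, 2.0]
t, df = paired_t(within_z, between_z)
print(round(t, 3), df)  # 3.464 2
```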

Figure 5.

MVPA showing the within-category and between-category correlations for the patterns of response to words and faces in the fusiform gyrus mask. Correlations were based on the data from odd and even blocks. There were distinct patterns of response to both faces and words in both the right and the left fusiform gyrus.

Next, we determined whether distinct patterns of neural response to words and faces were evident when the analysis was restricted to regions of the fusiform gyrus that were both face-selective and word-selective. Supplementary Figure 3 shows that there were distinct patterns of response to both categories. The within-category correlation for faces was greater than the between-category correlation between faces and words (t(19) = 5.69, P < 0.0001). Similarly, the within-category correlation for words was greater than the between-category correlation between faces and words (t(19) = 4.93, P < 0.0001). To complement this analysis, we also asked whether distinct patterns to faces and words were evident in regions that were selective only for faces or only for words. In regions selective only for faces, the within-category correlation for faces was greater than the between-category correlation between faces and words (t(19) = 9.237, P < 0.0001), as was the within-category correlation for words (t(19) = 8.223, P < 0.0001). In regions selective only for words, the within-category correlation for faces was greater than the between-category correlation between faces and words (t(19) = 3.838, P = 0.001), as was the within-category correlation for words (t(19) = 5.037, P < 0.0001).

Experiment 2

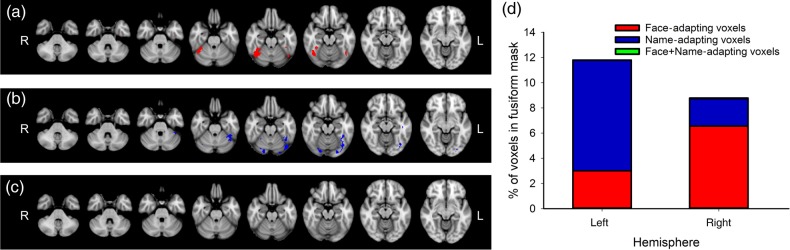

In Experiment 2, fMR adaptation was used to measure the functional selectivity of the response to faces and names. Figure 6 shows the location of voxels that showed adaptation to faces and names within the fusiform gyrus. Adaptation to faces was defined by voxels that responded more to “different-face” blocks than to “same-face” blocks. Adaptation to names was defined by voxels that responded more to “different-name” blocks than to “same-name” blocks. At a threshold of Z > 2.3, adaptation to faces (different-face > same-face) was found in 6.6% of voxels in the right fusiform gyrus and 3.0% of voxels in the left fusiform gyrus. In contrast to the right-lateralized adaptation to faces, adaptation to names (different-name > same-name) was found in 8.6% of voxels in the left fusiform gyrus, but in only 2.2% of voxels in the right fusiform gyrus. To determine whether adaptation to faces and names occurred in the same voxels, we compared the overlap in adaptation. Although adaptation to both faces and names occurred in the more posterior regions of the fusiform gyrus, we found minimal overlap in the adaptation (left hemisphere: 1.9%; right hemisphere: 0%). Supplementary Table 2 shows the percentage of significant voxels at different threshold levels. Supplementary Figure 4 shows this analysis for each subregion of the fusiform gyrus, indicating that significant voxels were more evident in the posterior regions of the fusiform gyrus.

Figure 6.

Adaptation to faces and words in the fusiform gyrus. (a) Voxels within the fusiform gyrus showing adaptation to familiar faces are shown in red. (b) Voxels within the fusiform gyrus showing adaptation to familiar names are shown in blue. (c) Voxels within the fusiform gyrus showing adaptation to familiar faces + names are shown in green. (d) Percentage of voxels within the fusiform gyrus that showed adaptation to faces (red), adaptation to names (blue), and faces + names (green). There was bilateral adaptation to both faces and names; however, we found no significant adaptation to blocks of faces + names. Statistical images were thresholded at Z > 2.3.

Next, we asked whether information about faces and names is integrated in the fusiform gyrus by determining whether there are neural responses based on a common identity indicated by the person's face or name. To address this question, we compared the response to blocks of stimuli involving a familiar face and familiar name with the same identity (e.g., David Beckham's face and David Beckham's name) with the response to blocks involving a familiar face and familiar name with different identities (e.g., David Beckham's face and Rowan Atkinson's name). Supplementary Table 2 presents the percentage of significant voxels at different threshold levels. Fewer than 1% of voxels showed adaptation to the face + name condition, even at the lowest threshold (P < 0.05, uncorrected).
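The thresholds in this paper are reported both as Z values (Z > 2.3) and as P values (P < 0.05, uncorrected). Assuming one-tailed tests, which suit directional contrasts such as different > same, the two can be converted with the standard normal distribution. A minimal sketch using Python's standard library follows; the function names are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def z_to_one_tailed_p(z):
    """Upper-tail probability of a standard normal Z value."""
    return 1.0 - STD_NORMAL.cdf(z)


def one_tailed_p_to_z(p):
    """Z value whose upper-tail probability equals p."""
    return STD_NORMAL.inv_cdf(1.0 - p)


print(round(z_to_one_tailed_p(2.3), 4))   # 0.0107
print(round(one_tailed_p_to_z(0.05), 3))  # 1.645
```

Under this assumption, the Z > 2.3 threshold used in the main analyses corresponds to an uncorrected one-tailed P of about 0.011, so P < 0.05 is the more lenient of the two.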

Discussion

The aim of this study was to determine the extent to which the neural representations of words and faces are spatially and functionally distinct within the fusiform gyrus. In Experiment 1, we found regions of the posterior fusiform gyrus that were selective only for words or only for faces, and other regions that were selective for both words and faces. MVPA showed distinct patterns of neural response to words and faces within the fusiform gyrus. In Experiment 2, we used an fMR-adaptation paradigm to explore the integration of information about familiar faces and names. There was minimal overlap between adaptation to faces and adaptation to names in the fusiform gyrus. There was also little evidence for adaptation to sequences of faces and names with the same identity, suggesting that information about familiar faces and names is not integrated at this stage of processing.

Our results show that there are spatially distinct regions of the fusiform gyrus that are only selective for faces or words. In Experiment 1, we compared the response to faces and words with different control conditions. Face-selective regions were found predominantly in the right hemisphere. In contrast, word-selective regions were predominantly found in the left hemisphere. In Experiment 2, we also found regions of the fusiform gyrus that only adapted to either familiar faces or to familiar names. Adaptation to familiar faces was found in both hemispheres, but was biased toward the right hemisphere. In contrast to faces, adaptation to names was lateralized toward the left hemisphere. The finding that there are distinct face-selective and word-selective regions in the fusiform gyrus is relevant to neuropsychological studies that have reported cases of prosopagnosia or alexia in which either face or word recognition can be differentially impaired [Farah 1991; Behrmann et al. 1992; for reviews, see Barton (2011) and Susilo and Duchaine (2013)]. It is conceivable that lesions that only disrupt regions of the fusiform gyrus that are selective for faces would lead to relatively pure cases of prosopagnosia, whereas lesions to regions of the fusiform gyrus that are selective for words would result in relatively pure cases of alexia. It is interesting to note that the hemispheric bias for deficits affecting words and faces in the neuropsychological evidence is also reflected in the pattern of fMRI response.

However, we also found regions of the fusiform gyrus that were both face-selective and word-selective. In both Experiments 1 and 2, there were voxels in the left hemisphere that showed selectivity (Experiment 1) or adaptation (Experiment 2) for both words and faces. These results suggest that words and faces may, to some degree, share common neural processing. This spatial overlap is not predicted by a strict domain-specific organization in which discrete neural regions respond selectively to specific categories of visual information. Instead, the overlap we show is consistent with a domain-general perspective in which common neural processes contribute to the visual processing of faces and words. Evidence from neuropsychological case studies is also relevant here, as testing of patients reveals that lesions within the fusiform gyrus rarely lead to completely circumscribed deficits in specific visual categories (Behrmann and Plaut 2013). For example, individuals with prosopagnosia after damage to the fusiform gyrus are not only severely impaired at face recognition, but can also show less dramatic impairments in recognizing words (Behrmann and Plaut 2014). Similarly, individuals with pure alexia after lesions to the VWFA region are not only impaired at word recognition, but can also be impaired to some degree at recognizing faces (Behrmann and Plaut 2014; Roberts et al. 2012).

To probe the extent to which overlapping patterns of response can discriminate between words and faces, we performed an MVPA using all the voxels in the fusiform gyrus. We found that the pattern of response could be used to discriminate both words and faces. In contrast to our univariate analysis, the discrimination (reliability) of the patterns of response to words and faces did not differ significantly between the right and left hemispheres. Based on the lateralized responses to faces and words that we and others have reported, a domain-specific account would predict that the pattern of response to faces would be more distinct in the right hemisphere and the pattern of response to words would be more distinct in the left hemisphere. However, we found distinct patterns of response to words even in the right hemisphere, which shows very little selectivity for words [see also Nestor et al. (2012)]. This favors the domain-general interpretation and is consistent with the finding that patterns of fMRI response can distinguish between different object categories even when the most selective voxels are removed from the analysis (Haxby et al. 2001). Finally, we asked whether there were distinct patterns of response to words and faces in regions that were both face-selective and word-selective. Our results show that even in these regions it was possible to discriminate between faces and words, offering strong support for the domain-general position.

Although we were able to demonstrate spatial overlap in the response to faces and words in the fusiform gyrus, an important question concerns whether information from words and faces is functionally integrated at this stage of processing. In Experiment 2, we directly tested whether information about faces and words is combined in the fusiform gyrus, by looking at the response to familiar faces and names. Models of person recognition (Bruce and Young 1986; Burton et al. 1990) suggest that information about faces and names is integrated through a common person identity node. Accordingly, we asked whether this integration of face and name information could be found in the fusiform gyrus. To do this, we compared the response to sequences of faces and names that have the same identity with sequences in which the identity is different. We found no difference in the response between these 2 conditions in the fusiform gyrus. Thus, our data fail to provide any evidence for the functional integration of words and faces at this stage of cortical processing.

A variety of evidence has suggested that patterns of response in the ventral visual pathway are linked to the categorical or semantic information that the images convey (Chao et al. 1999; Kriegeskorte et al. 2008; Connolly et al. 2012). The lack of integration of responses to familiar names and faces suggests that the neural representation in the fusiform gyrus may not be based on the semantic properties of visual information. Indeed, it is unclear from these studies how selectivity for high-level properties in the ventral visual pathway might arise from the image-based representations found in early stages of visual processing (Andrews et al. 2015). We recently proposed a solution to this problem by showing that the image properties of visual objects can predict patterns of response in the ventral visual pathway (Rice et al. 2014; Watson et al. 2014). These findings suggest that the similarities and differences in the patterns of response to words and faces reported in this study may reflect the relative similarities in the image properties of these object categories.

In conclusion, we found a bilateral representation of faces and words in the fusiform gyrus, with a bias toward the left hemisphere for words and toward the right hemisphere for faces. Although some regions of the fusiform gyrus were selective only for words or only for faces, MVPA showed distinct patterns of response and overlapping neural representations for words and faces, which is consistent with a domain-general representation. Finally, we found no evidence for the integration of face and word information in the fusiform gyrus.

Funding

This work was supported by a grant from the Wellcome Trust (WT087720MA).

Supplementary Material

Notes

We thank Alex Mitchell, David Watson, Hollie Byrne, Martyna Slezak, and Zeynep Guder for their help on this project. Conflict of Interest: None declared.

References

- Andrews TJ, Watson DM, Rice GE, Hartley T. 2015. Low-level properties of natural images predict topographic patterns of neural response in the ventral visual pathway. J Vis. 15(7):3, 1–12.

- Baker CI, Liu J, Wald LL, Kwong KK, Benner T, Kanwisher N. 2007. Visual word processing and experiential origins of functional selectivity in human extrastriate cortex. Proc Natl Acad Sci USA. 104:9087–9092.

- Barton J. 2011. Disorders of higher visual function. Curr Opin Neurol. 24:1–5.

- Behrmann M, Plaut DC. 2013. Distributed circuits, not circumscribed centres, mediate visual recognition. Trends Cogn Sci. 17:210–219.

- Behrmann M, Plaut DC. 2014. Bilateral hemispheric processing of words and faces: evidence from word impairments in prosopagnosia and face impairments in pure alexia. Cereb Cortex. 24:1102–1118.

- Behrmann M, Plaut DC, Nelson JA. 1998. Visual complexity in letter-by-letter reading: pure alexia is not so pure. Neuropsychologia. 36:1115–1132.

- Behrmann M, Winocur G, Moscovitch M. 1992. Dissociation between mental imagery and object recognition in a brain damaged patient. Nature. 359:636–637.

- Bruce V, Young A. 1986. Understanding face recognition. Br J Psychol. 77:305–327.

- Burton M, Bruce V, Johnston RA. 1990. Understanding face recognition with an interactive activation model. Br J Psychol. 81:361–380.

- Chao L, Haxby JV, Martin A. 1999. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nat Neurosci. 2:913–919.

- Cohen L, Dehaene S. 2004. Specialization within the ventral stream: the case for the visual word form area. Neuroimage. 22:466–476.

- Cohen L, Dehaene S, Naccache L, Lehéricy S, Dehaene-Lambertz G, Hénaff MA, Michel F. 2000. The visual word form area: spatial and temporal characterization of an initial stage of reading in normal subjects and posterior split-brain patients. Brain. 123:291–307.

- Connolly AC, Guntupalli JS, Gors J, Hanke M, Halchenko YO, Wu Y, Abdi H, Haxby JV. 2012. Representation of biological classes in the human brain. J Cogn Neurosci. 24:868–877.

- Dehaene S, Cohen L. 2007. Cultural recycling of cortical maps. Neuron. 56:384–398.

- Dehaene S, Cohen L. 2011. The unique role of the visual word form area in reading. Trends Cogn Sci. 15:254–262.

- Farah MJ. 1991. Patterns of co-occurrence among the associative agnosias: implications for visual object representation. Cogn Neuropsychol. 8:1–19.

- Grill-Spector K, Kushnir T, Edelman S, Avidan G, Itzchak Y, Malach R. 1999. Differential processing of objects under various viewing conditions in the human lateral occipital complex. Neuron. 24:187–203.

- Grill-Spector K, Malach R. 2001. fMR-adaptation: a tool for studying the functional properties of human cortical neurons. Acta Psychol. 107:293–321.

- Hanke M, Halchenko YO, Sederberg PB, Olivetti E, Fründ I, Rieger JW, Herrmann CS, Haxby JV, Pollmann S. 2009. PyMVPA: a unifying approach to the analysis of neuroscientific data. Front Neuroinform. 3:3.

- Haxby JV, Gobbini M, Furey M, Ishai A, Schouten J, Pietrini P. 2001. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 293:2425–2430.

- Kanwisher N. 2010. Functional specificity in the human brain: a window into the functional architecture of the mind. Proc Natl Acad Sci USA. 107:11163–11170.

- Kanwisher N, McDermott J, Chun MM. 1997. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 17:4302–4311.

- Kriegeskorte N, Mur M, Ruff DA, Kiani R, Bodurka J, Esteky H, Tanaka K, Bandettini PA. 2008. Matching categorical object representations in inferior temporal cortex of man and monkey. Neuron. 60:1126–1141.

- Nestor A, Behrmann M, Plaut DC. 2012. The neural basis of visual word form processing: a multivariate investigation. Cereb Cortex. 23:1673–1684.

- Polk TA, Park J, Smith MR, Park DC. 2007. Nature versus nurture in ventral visual cortex: a functional magnetic resonance imaging study of twins. J Neurosci. 27:13921–13925.

- Puce A, Allison T, Gore JC, McCarthy G. 1995. Face-sensitive regions in human extrastriate cortex studied by functional MRI. J Neurophysiol. 74:1192–1199.

- Rice GE, Watson DM, Hartley T, Andrews TJ. 2014. Low-level image properties of visual objects predict patterns of neural response across category-selective regions of the ventral visual pathway. J Neurosci. 34:8837–8844.

- Roberts DJ, Woollams AM, Kim E, Beeson PM, Rapcsak SZ, Lambon Ralph MA. 2012. Efficient visual object and word recognition relies on high spatial frequency coding in the left posterior fusiform gyrus: evidence from a case-series of patients with ventral occipitotemporal cortex damage. Cereb Cortex. 23:2568–2580.

- Starrfelt R, Behrmann M. 2011. Number reading in pure alexia: a review. Neuropsychologia. 49:2283–2298.

- Susilo T, Duchaine B. 2013. Dissociations between faces and words: comment on Behrmann and Plaut. Trends Cogn Sci. 17:545.

- Watson DM, Hartley T, Andrews TJ. 2014. Patterns of response to visual scenes are linked to the low-level properties of the image. Neuroimage. 99:402–410.

- Zhu Q, Song Y, Hu S, Li X, Tian M, Zhen Z, Dong Q, Kanwisher N, Liu J. 2010. Heritability of the specific cognitive ability of face perception. Curr Biol. 20:137–142.
