Abstract

Background

Narrative data entry pervades computerized health information systems and serves as a key component in collecting patient-related information in electronic health records and patient safety event reporting systems. The quality and efficiency of clinical data entry are critical to arriving at an optimal diagnosis and treatment. The application of text prediction holds potential for enhancing human performance of data entry in reporting patient safety events.

Objective

This study examined two text prediction functions intended to increase the efficiency and data quality of text data entry in reporting patient safety events.

Methods

The study employed a two-group randomized design with 52 nurses. The nurses were randomly assigned to a treatment group or a control group and asked to report five patient fall cases in Chinese using a web-based test system, with or without the prediction functions, respectively. T-tests, Chi-squared tests and a linear regression model were applied to evaluate the differences in free-text data entry outcomes between the groups.

Results

While both groups of participants exhibited a good capacity for accomplishing the assigned task of reporting patient falls, the treatment group showed an overall increase of 70.5% in text generation rate, an increase of 34.1% in reporting comprehensiveness score and a reduction of 14.5 percentage points in the non-adherence rate of the comment field. The treatment group also showed an increasing text generation rate over time, whereas no such effect was observed in the control group.

Conclusion

As an initial investigation of the effectiveness of text prediction functions in reporting patient safety events, this study found text prediction to be an effective strategy for assisting reporters in generating complementary free text when reporting a patient safety event. The strategy may also be effective in other clinical areas where free-text entries are required.

Keywords: Data Entry, Text Prediction, Usability Evaluation, two-group randomized design

1. Introduction

Many attempts have been made to investigate the difficulties of clinical data entry in order to promote the acceptance and quality-in-use of clinical information systems[1–4]. With advances in non-narrative entry templates and natural language processing techniques, structured entries are increasingly applied in clinical information systems because of their interoperability and reusability. However, given the complexity of healthcare, missing essential fields and insufficient options in structured entries are still common. Structured entries have also been criticized for their lack of flexibility and expressiveness in clinical communication. Therefore, unstructured narrative data entry plays an indispensable role in clinical data entry.

For patient safety event reporting, narrative entry has been the prevalent and dominant format. However, it is now transitioning into a supplement to the non-narrative forms released by national and international organizations[5, 6]. The narrative comment field is intended to collect case details beyond the structured entries. Previous studies showed that voluntary reporters usually ignored this field or described events with inaccurate and incomplete terms and sentences[7, 8]. Two major barriers have been identified: the multi-tasking and busy nature of healthcare, and reporters' lack of the language or knowledge needed to describe patient safety events in detail[9]. Removing these barriers and achieving quality-in-use requires a user-centered design process that embraces cultural, strategic and technical considerations[10]. We proposed to examine the use of text prediction in the narrative fields for reporting patient safety events.

Text prediction, also known as word, sentence or context prediction, originated in augmentative and alternative communication (AAC) to increase text generation rates for people with motor or speech impairments[11, 12]. Advances in natural language processing techniques have brought text prediction into a broader scope of daily computing activities, such as mobile computing[13] and radiography reports[14]. Studies on text prediction have focused on optimization[15] and impact[16]. Focusing on impact evaluation, this study addresses three basic concerns regarding text prediction in reporting patient safety events, namely whether text prediction would increase (1) reporters' engagement in the comment field; (2) the quality of narrative entry, which is highly valuable for generating actionable knowledge yet has received little attention; and (3) the efficiency of reporting patient safety events, which remains unclear given mixed results across fields[16]. We employed a two-group randomized experiment to examine the impact of text prediction on these three aspects.

2. Background

This study is grounded in a user-centered design of patient safety event reporting systems. Reporting systems in use suffer from underreporting[17] and low-quality reports[8, 18], although efforts at all levels have been made for years to improve them[19, 20]. Prior to this study, we consecutively conducted heuristic evaluation, cognitive task analysis and think-aloud user testing[4, 7, 21, 22], which revealed interface representational issues and indicated that introducing text prediction holds promise for increasing the efficiency and quality of event reporting. Therefore, text prediction functions for data entry were proposed to bridge the information gaps induced by competing tasks in the work domain and by the language or knowledge needed for quality reporting[23].

In this study, we prototyped and examined two prediction functions aimed at facilitating free-text data entry. The process may be helpful for introducing and customizing domain-specific, predefined drop-down lists, as well as for optimizing text prediction accuracy and interface representation.

3. Methods and Materials

3.1. Participants

Potential candidates who were nurses experienced in reporting and analyzing patient safety events at Tianjin First Central Hospital (TFCH) in Tianjin, China, were identified and invited to participate in the study. Two candidates were on leave of absence during the study period, and three did not feel confident operating computers. As a result, the remaining 52 nurses from 21 clinical departments enrolled in the study. All nurses were female, aged between 30 and 52 years. On average, they had approximately 20 years of nursing experience and had reported patient safety events for at least four years since the implementation of a citywide computerized reporting system in 2009. None of them had used the interfaces of this study before. Each participant signed an informed consent form approved by the Ethics Committee at TFCH (No. E2012022K). This study was also approved by the Institutional Review Board at the University of Texas Health Science Center at Houston (No. HSC-SBMI-12-0767).

3.2. Interfaces

Two experimental interfaces were developed to control the configurations and data collection. Each interface had an English and a Chinese version. The contents and layouts of the two interfaces were identical, presenting the same 13 multiple-choice questions (MCQs)[24] and a narrative field for collecting case details. The MCQs and narrative field were developed based on the Common Formats released by AHRQ. A sample of MCQs is shown in Figure 2. The only difference between the two experimental interfaces was the provision of text prediction functions in the narrative field. During the experiment, participants had to complete the MCQs before the comment field. We added branching logic to the MCQs so that only relevant questions were displayed and irrelevant questions were skipped, based on the response to a branching question. For example, when 'assisted fall' was selected, all questions associated with 'unassisted fall' were skipped. The branching also helped prepare the prediction functions.
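The branching rule can be sketched as a lookup from each conditional question to the answer that enables it. This is a minimal Python sketch under stated assumptions: the question identifiers (`fall_type`, `assisted_by`, `unassisted_observed`, `injury`) and the rules are hypothetical stand-ins for the study's 13 MCQs, chosen only to mirror the 'assisted fall' example.

```python
# Hypothetical question IDs and branch rules, for illustration only.
QUESTIONS = ["fall_type", "assisted_by", "unassisted_observed", "injury"]

# question -> (branching question, answer that enables it)
CONDITIONS = {
    "assisted_by": ("fall_type", "assisted"),
    "unassisted_observed": ("fall_type", "unassisted"),
}


def visible_questions(answers: dict) -> list:
    """Return the questions to display given the answers so far.

    Questions without a condition are always shown; conditional ones are
    shown only when their branching question has the enabling answer.
    """
    shown = []
    for question in QUESTIONS:
        condition = CONDITIONS.get(question)
        if condition is None or answers.get(condition[0]) == condition[1]:
            shown.append(question)
    return shown


print(visible_questions({"fall_type": "assisted"}))
```

Selecting 'assisted' hides `unassisted_observed`, which is the skipping behavior described above; keeping the rules in a table rather than in nested `if` statements makes it easy to add branches as the question set grows.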

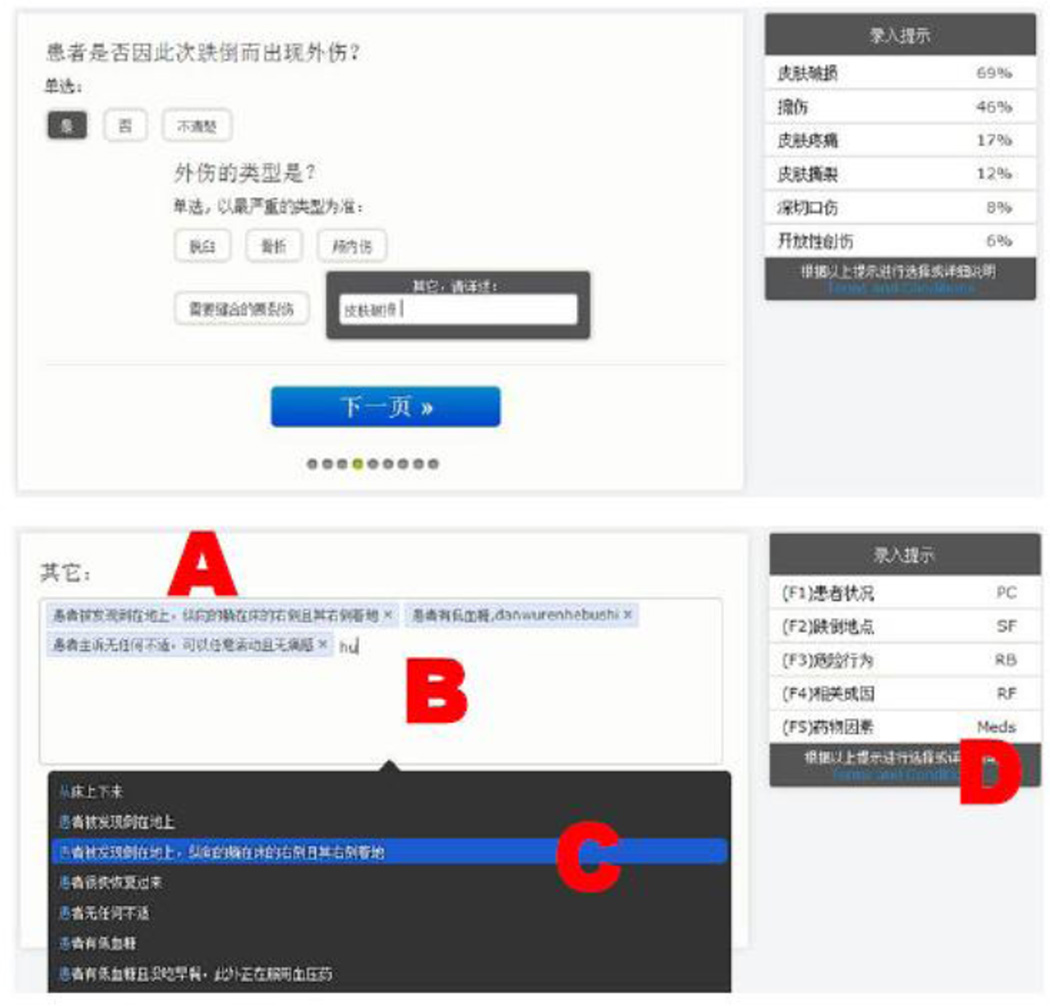

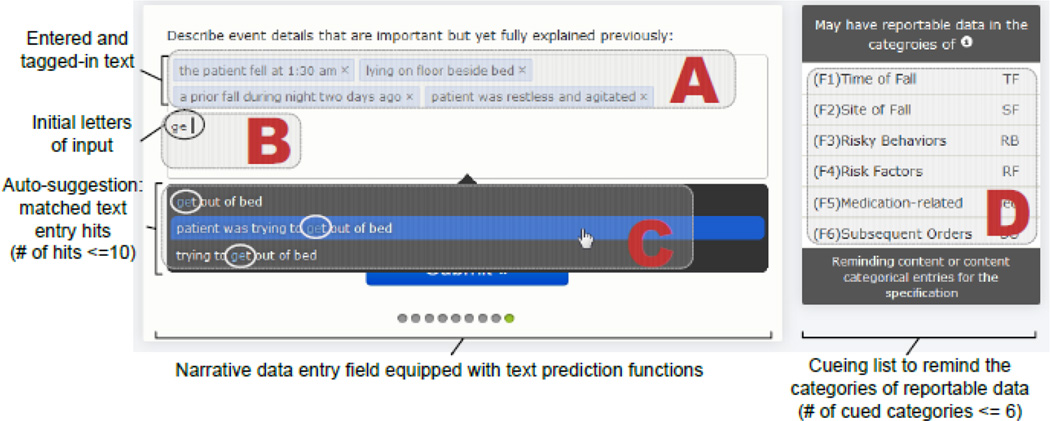

Figure 2.

The layout of the Chinese equivalent of Figure 1, based on domain experts' review. This version of the interface was used at Tianjin First Central Hospital, where the randomized tests were conducted.

Figure 1 illustrates the English interface and Figure 2 the Chinese interface, each showing the narrative comment field with the text prediction functions. The interfaces with prediction functions were developed using PHP 5.2.6, JavaScript and MySQL 5.0.51b, plus a JavaScript library (jQuery 1.7[25]) and two open-source modules (SlidesJS[26] and Tag-it[27]).

Figure 1.

The layout of the interface elements associated with the narrative field and the two text prediction functions, cueing list (CL) and autosuggestion (AS). CL (box D) served as a reminder of the key characteristics of reportable data associated with the event. Matching AS entries were triggered and listed as entry options when the initial letters of a description were typed (boxes B and C). Matched letters and the cursor line were highlighted in blue, as shown in box C. The reporter was expected to select one of the entries and modify it where necessary.

Following the experimental designs in peer-reviewed publications[16, 28], the predicted text items were manually selected per case from a pool of entries automatically generated from a collection of fall cases. Two domain experts (X.L. & Y.S.) reviewed all testing cases, then transcribed and categorized the key elements in colloquial language, in both Chinese characters and Pinyin. These contents were populated into a text entry dictionary, which served as the pool of AS and CL items. A Soundex-based phonetic matching function of MySQL was employed for AS to retrieve and present matched entries from the dictionary. The number of listed items for AS and CL was capped at ten, a trade-off balancing inspection effort against prediction sensitivity[15]. On the treatment interface, where AS and CL were offered, participants could choose from the predicted entries and modify them. On the control interface, where no prediction was offered, participants had to key in narratives using a standard QWERTY keyboard.
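The AS lookup described above can be sketched as phonetic matching over Pinyin keys with a ten-item cap. This is a minimal Python sketch, not the study's MySQL implementation: it uses standard American Soundex, whereas MySQL's `SOUNDEX()` is a variant that can return strings longer than four characters, so matches may differ. The dictionary entries, the `suggest` helper, and the combination of prefix and phonetic matching are hypothetical illustrations.

```python
MAX_SUGGESTIONS = 10  # the study listed at most ten AS/CL items

_CODES = {ch: digit
          for letters, digit in (("bfpv", "1"), ("cgjkqsxz", "2"), ("dt", "3"),
                                 ("l", "4"), ("mn", "5"), ("r", "6"))
          for ch in letters}


def soundex(word: str) -> str:
    """Standard American Soundex over the ASCII letters of `word` (e.g. Pinyin)."""
    letters = [c for c in word.lower() if "a" <= c <= "z"]
    if not letters:
        return ""
    result = letters[0].upper()
    prev = _CODES.get(letters[0], "")
    for ch in letters[1:]:
        code = _CODES.get(ch, "")
        if code and code != prev:
            result += code
        if ch not in "hw":  # h/w do not separate equal codes; vowels do
            prev = code
    return (result + "000")[:4]


# Hypothetical dictionary entries: (Pinyin key, Chinese phrase).
DICTIONARY = [
    ("diedao", "跌倒"),            # fall
    ("ruce", "如厕"),              # going to the toilet
    ("chuanglan", "床栏"),         # bed rail
    ("dimianshihua", "地面湿滑"),  # slippery floor
    ("touyun", "头晕"),            # dizziness
]


def suggest(typed: str, entries=DICTIONARY, limit: int = MAX_SUGGESTIONS) -> list:
    """Return up to `limit` phrases whose Pinyin starts with, or sounds like, `typed`."""
    key = typed.strip().lower()
    if not key:
        return []
    code = soundex(key)
    matches = [phrase for pinyin, phrase in entries
               if pinyin.startswith(key) or (code and soundex(pinyin) == code)]
    return matches[:limit]


print(suggest("d"))       # prefix match
print(suggest("dedao"))   # phonetic match despite the typo
```

Prefix matching covers the "initial letters" trigger, and the Soundex comparison tolerates small misspellings such as `dedao` for `diedao`. Soundex was designed for English surnames, so on Pinyin it is a coarse filter that relies on the short, capped list for precision.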

3.3. Testing cases

Each participant was asked to report five cases in a randomized sequence. The cases were selected from two sources: a repository of 346 de-identified fall cases[18] and a publicly accessible online database of Morbidity and Mortality cases (Web M&M)[29]. The cases were translated into Chinese, rephrased and reviewed by two domain experts to ensure quality, readability and consistent complexity. A narrative excerpt from one of the cases is shown below.

“… patient was alert and oriented X3 (person, time and location) upon assessment, and instructed upon admittance not to getting up without assistance. He had been sleeping and attempted to get up to go to the bathroom. He forgot to call the staff to have plexipulses (a device) undone, and tripped on plexi tubing and attempted to catch himself on the overhead bars. He landed on the floor…”

3.4. Experimental design

Using a permuted-block algorithm with random blocks[30], the fifty-two participants were randomly assigned to two groups: twenty-five to the control group and twenty-seven to the treatment group. For each participant, the presentation sequence of the five cases was randomly determined at the time of allocation. Following a stratified randomization scheme, one case was fixed at the first position and the other four were fully randomized. Prior to each test session, there was a mandatory training session combining verbal instructions and hands-on practice. All participants were trained on both the control and treatment interfaces until they felt comfortable with them. After completing the experiment, each participant was briefly surveyed for comments or suggestions on the reporting test.
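The allocation and case ordering can be sketched as follows. This is a minimal Python sketch assuming two arms and block sizes drawn from (2, 4); the source does not report the block sizes, and the case labels `C1` to `C5` and the seeds are hypothetical.

```python
import random


def permuted_block_allocation(n, arms=("control", "treatment"),
                              block_sizes=(2, 4), seed=None):
    """Assign n participants to arms in randomly permuted, balanced blocks.

    Block sizes are drawn at random from `block_sizes` (each a multiple of
    the number of arms); the final block is truncated to reach exactly n.
    """
    rng = random.Random(seed)
    allocation = []
    while len(allocation) < n:
        size = rng.choice(block_sizes)
        block = [arms[i % len(arms)] for i in range(size)]  # equal arms per block
        rng.shuffle(block)
        allocation.extend(block)
    return allocation[:n]


def case_sequence(cases, first, rng):
    """Keep `first` at position one and shuffle the remaining cases."""
    rest = [c for c in cases if c != first]
    rng.shuffle(rest)
    return [first] + rest


rng = random.Random(2012)
groups = permuted_block_allocation(52, seed=2012)
orders = [case_sequence(["C1", "C2", "C3", "C4", "C5"], "C1", rng) for _ in groups]
print(groups.count("control"), groups.count("treatment"))
```

Every complete block is balanced, and varying the block size makes the next assignment harder to guess. Because the final block may be truncated, the arm sizes can differ slightly.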

In practice, when a patient safety event occurs, a reporter initiates a report based on a witness's word-of-mouth account. Similarly, the interfaces presented a case on the first page. Participants were expected to read the case description and then report it from recall. Pauses and questions during the timed sessions were discouraged except when participants were in transition between reports. Keystroke-level operations, including mouse clicks and keystrokes, were timed and logged into a MySQL database. All reporting sessions were recorded using Camtasia Studio® 7 for data reconciliation.

3.5. The processing of data

We first analyzed the completion time and response accuracy on the MCQs through SQL queries of the logged keystrokes. A t-test was conducted to examine whether performance on this identical part, which preceded text data entry, differed significantly between the groups. We then analyzed ten dependent measures (shown in Table I) of text data entry to investigate each participant's performance and variation in terms of efficiency, effectiveness and engagement. Whereas nine measures were retrieved from the logged data, report comprehensiveness was measured through a blind review by two domain experts, who rated the de-identified narrative comments of both groups. As illustrated in Section (A) of Figure 1, the narrative comments were segmented by a pipeline symbol "|", which was added when participants pressed the <Enter> key to conclude a description. The experts independently graded each text chunk with a binary score of one or zero. A score of one indicated that a chunk was event-related and not a duplicate; such a chunk contributed one point to the report's overall comprehensiveness score. Overall comprehensiveness scores ranged from one to twelve in the study. Rating differences larger than one point were resolved by consensus within the research team. The mean comprehensiveness score was used to represent report comprehensiveness.
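The chunk-based scoring can be sketched as below. This is a minimal Python sketch assuming that a report's score is the mean of the two experts' totals and that reports whose totals differ by more than one point are flagged for consensus. The helper names and the sample comment are hypothetical.

```python
def split_chunks(comment: str) -> list:
    """Split a narrative comment into text chunks at the '|' separators."""
    return [chunk.strip() for chunk in comment.split("|") if chunk.strip()]


def report_comprehensiveness(scores_a, scores_b, threshold=1):
    """Combine two experts' binary chunk scores for one report.

    Each score is 1 (event-related, non-duplicate chunk) or 0. Returns the mean
    of the two totals and whether the totals differ by more than `threshold`,
    i.e. whether the report needs to be resolved by consensus.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("both experts must score every chunk")
    total_a, total_b = sum(scores_a), sum(scores_b)
    return (total_a + total_b) / 2, abs(total_a - total_b) > threshold


chunks = split_chunks("found on floor | tripped on tubing | tripped on tubing | no injury")
print(len(chunks), report_comprehensiveness([1, 1, 0, 1], [1, 1, 0, 0]))
```

In this example the duplicated chunk scores zero for both experts. Their totals (3 and 2) differ by only one point, so no consensus step is triggered.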

Table I.

Dependent measures on efficiency, effectiveness, engagement and autosuggestion

| Measures | Data collection | Evaluating dimensions | Methods |
|---|---|---|---|
| Efficiency-related | | | |
| Completion time | Recorded at the millisecond level by interfaces | Time length of completing a narrative comment | Descriptive statistics, and t-test |
| Keystrokes | Recorded by interfaces | Keystroke counts of completing a comment | Descriptive statistics, and t-test |
| Text generation rate | Text length divided by completion time | The speed of text generation, in letters/second | Descriptive statistics, and t-test |
| Effectiveness-related | | | |
| Text length | Recorded and calculated at the unit of letter | Text length (in letters) of a narrative comment | Descriptive statistics, and t-test |
| Text chunks | As demonstrated in Figure 1, pressing <Enter> resulted in a tag in the text segment, i.e. a text chunk | Number of text chunks in a comment field | Descriptive statistics |
| Chunk length | Text length divided by number of text chunks | Mean length of text chunks in a comment | Descriptive statistics |
| Reporting comprehensiveness | A blind review by two experts; reached an agreement when score difference > 1 | Number of event characteristics described in the text | Expert review, descriptive statistics and t-test |
| Engagement-related | | | |
| Non-adherence rate | Number of unanswered comment fields divided by number of comment fields in each group | Proportion of narrative comment fields that were ignored | Descriptive statistics, and Chi-squared test |
| AutoSuggestion (AS)-related | | | |
| Influenced chunks by AS | Identified text contained in original AS | Number of text chunks that accepted the text suggested by AS | Descriptive statistics |
| AS influential rate | Number of influenced chunks divided by the number of total text chunks in a comment | Percentage of text chunks containing text selected via AS rather than keyed in | Descriptive statistics |
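The per-comment measures in Table I can be computed from a logged comment as sketched below. This is a minimal Python sketch: the `CommentLog` record and its field names are hypothetical, and text length is assumed to count characters within chunks, excluding the "|" separators and surrounding whitespace.

```python
from dataclasses import dataclass


@dataclass
class CommentLog:
    """Hypothetical per-comment log record; field names are illustrative."""
    text: str          # narrative comment with chunks separated by '|'
    seconds: float     # completion time of the comment field
    keystrokes: int    # keystrokes while composing the comment
    as_chunks: int     # chunks containing text accepted from AS


def comment_measures(log: CommentLog) -> dict:
    """Per-comment measures following Table I; assumes a non-blank comment."""
    chunks = [c.strip() for c in log.text.split("|") if c.strip()]
    length = sum(len(c) for c in chunks)  # characters, separators excluded
    return {
        "keystrokes": log.keystrokes,
        "text_length": length,
        "text_chunks": len(chunks),
        "text_generation_rate": length / log.seconds,   # letters per second
        "chunk_length": length / len(chunks),
        "as_influential_rate": log.as_chunks / len(chunks),
    }


def non_adherence_rate(comments: list) -> float:
    """Share of comment fields left blank within a group."""
    return sum(1 for c in comments if not c.strip()) / len(comments)


example = CommentLog("病人跌倒|床栏未拉起|无外伤", seconds=10.0, keystrokes=30, as_chunks=2)
print(comment_measures(example))
```

Blank comments are excluded from the per-comment measures, as in Table II. They enter only the group-level non-adherence rate, which for the control group is 20/125.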

To examine the impact of the text prediction functions (CL and AS) on participants' performance, t-tests and Chi-squared tests were conducted between the two groups. Kernel density estimation was applied to examine the distributions of text generation rate and reporting comprehensiveness of the narrative comments between the groups. A linear regression model was also used to examine the interactions between the measures. All statistical computations were performed using built-in MySQL functions and RStudio version 0.97.

4. Results

All participants, each reporting five cases, successfully completed the experiment, generating 260 reports. There were 25 participants in the control group and 27 in the treatment group, accounting for 125 and 135 reports, respectively. The mean ages of participants were 43.6±5.8 and 41.1±6.6 years in the control and treatment groups, respectively. The 260 reports contained 2,849 MCQ answers and 238 unstructured narrative comments. The completion time of the MCQs was 131.0±50.0 versus 129.5±45.7 seconds, and the response accuracy was 79.4% versus 79.2%, in the control and treatment groups, respectively. No significant differences between the groups were found in these variables according to t-tests at a significance level of 0.05. Of the 238 unstructured narrative comments, 105 were contributed by the control group and the remaining 133 by the treatment group. The numbers of ignored comment fields were 20 and 2 in the control and treatment groups, respectively. The detailed findings on narrative comment fields are shown in Table II.

Table II.

Participants’ performance on the narrative comment field between groups

Samples were adjusted by excluding blank fields, except for the non-adherence rate, which is computed over all reports.

| Measures | Control (N=105) | Treatment (N=133) | Variation | p-value |
|---|---|---|---|---|
| Efficiency-related | | | | |
| Completion time (seconds) | 139.6±99.6 | 142.9±82.2 | ↑ 2.3% | 0.782 |
| Keystrokes | 144.9±110.7 | 104.0±86.9 | ↓ 28.2% | 0.002 |
| Text generation rate (letters/second) | 0.95±0.35 | 1.62±0.99 | ↑ 70.5% | <0.001 |
| Effectiveness-related | | | | |
| Text length (letters) | 127.9±96.6 | 185.1±86.4 | ↑ 44.7% | <0.001 |
| Text chunks | 4.1±2.5 | 5.4±2.5 | ↑ 31.7% | <0.001 |
| Chunk length (letters) | 30.3±13.1 | 37.7±18.6 | ↑ 24.4% | <0.001 |
| Reporting comprehensiveness | 3.8±2.3 | 5.1±2.4 | ↑ 34.2% | <0.001 |
| Engagement-related | | | | |
| Non-adherence rate | 20/125 (16.0%) | 2/135 (1.5%) | ↓ 14.5 percentage points | <0.001 |
| AS-related | | | | |
| Influenced chunks (N=120) | - | 3.8±1.9 | - | - |
| Influential rate | - | 66.9% | - | - |

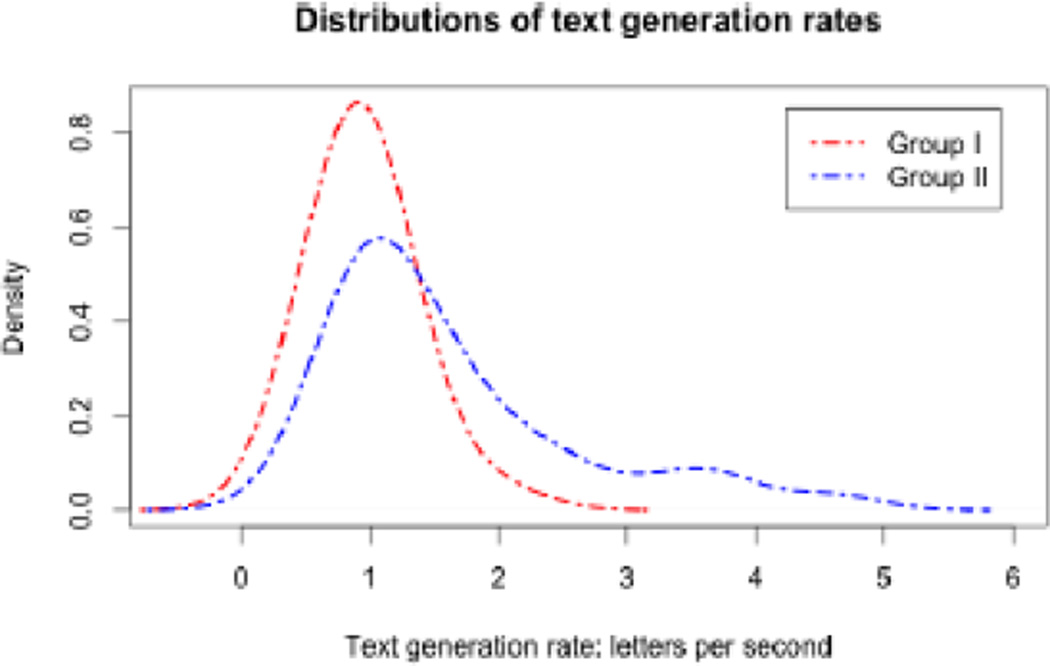

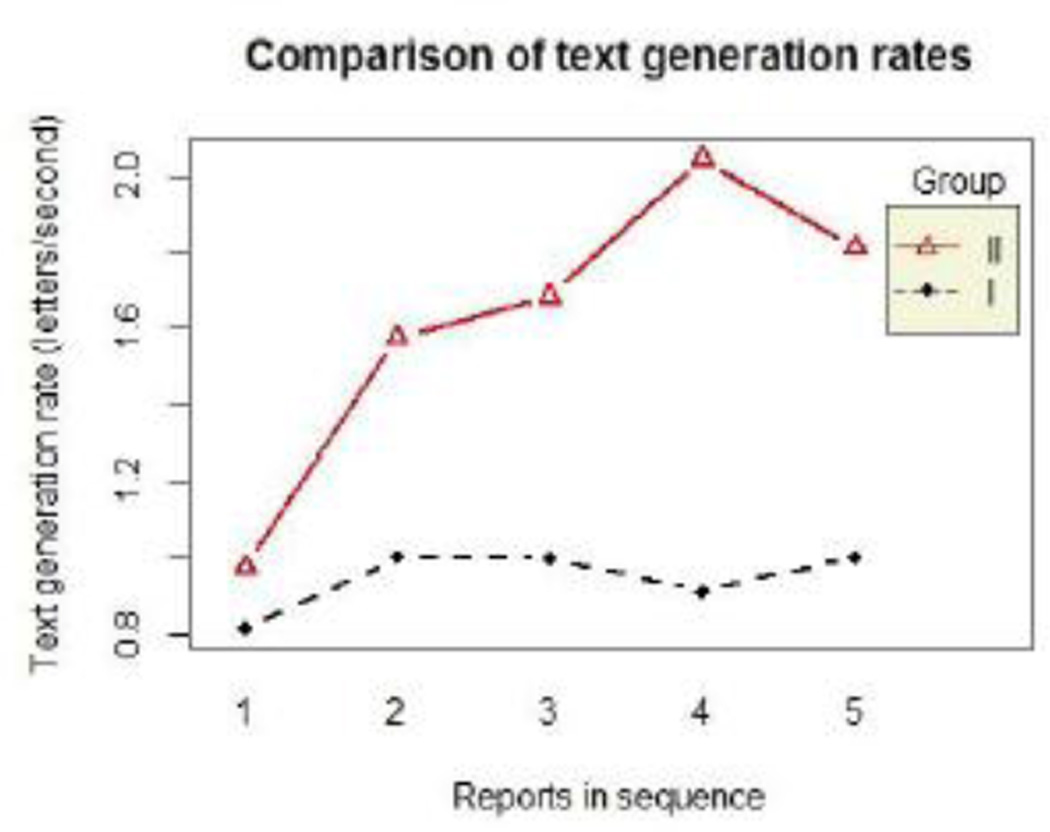

Efficiency – text generation rate

According to Table II, the completion time of the narrative comments did not differ significantly between the groups (p = 0.782). However, participants in the treatment group produced 44.7% more text with 28.2% fewer keystrokes than those in the control group, resulting in a 70.5% increase in text generation rate, a significant improvement in reporting efficiency at the 0.01 significance level. Figure 3 compares the distributions of text generation rates between the two groups and shows that text prediction helped participants in the treatment group reach a higher text generation rate (p < 0.01).
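The Variation and p-value columns of Table II can be approximately re-derived from the reported summary statistics. This is a minimal Python sketch, not the authors' R analysis. It assumes a Student's two-sample t-test with pooled variance (the source does not name the variant) and approximates the t distribution with a normal distribution, which is close at roughly 236 degrees of freedom. Because the inputs are rounded, the results are approximate.

```python
from math import sqrt
from statistics import NormalDist

# (mean, SD, n) for control and treatment, taken from Table II
ROWS = {
    "completion_time_s": ((139.6, 99.6, 105), (142.9, 82.2, 133)),
    "keystrokes": ((144.9, 110.7, 105), (104.0, 86.9, 133)),
    "text_generation_rate": ((0.95, 0.35, 105), (1.62, 0.99, 133)),
}


def compare(control, treatment):
    """Relative change (%) and a pooled-variance two-sample t statistic.

    The two-sided p-value uses a normal approximation to the t distribution,
    which is close at the ~236 degrees of freedom available here.
    """
    (m1, s1, n1), (m2, s2, n2) = control, treatment
    change = (m2 - m1) / m1 * 100
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    t = (m2 - m1) / sqrt(pooled_var * (1 / n1 + 1 / n2))
    p = 2 * (1 - NormalDist().cdf(abs(t)))
    return change, t, p


for name, (control, treatment) in ROWS.items():
    change, t, p = compare(control, treatment)
    print(f"{name}: {change:+.1f}%  t = {t:.2f}  p ≈ {p:.3f}")
```

For an exact t-distribution p-value, SciPy's `scipy.stats.ttest_ind_from_stats` accepts the same means, standard deviations and sample sizes. The completion-time and keystroke rows should land close to the reported 0.782 and 0.002.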

Figure 3.

Text generation rates in the control group (I) and treatment group (II)

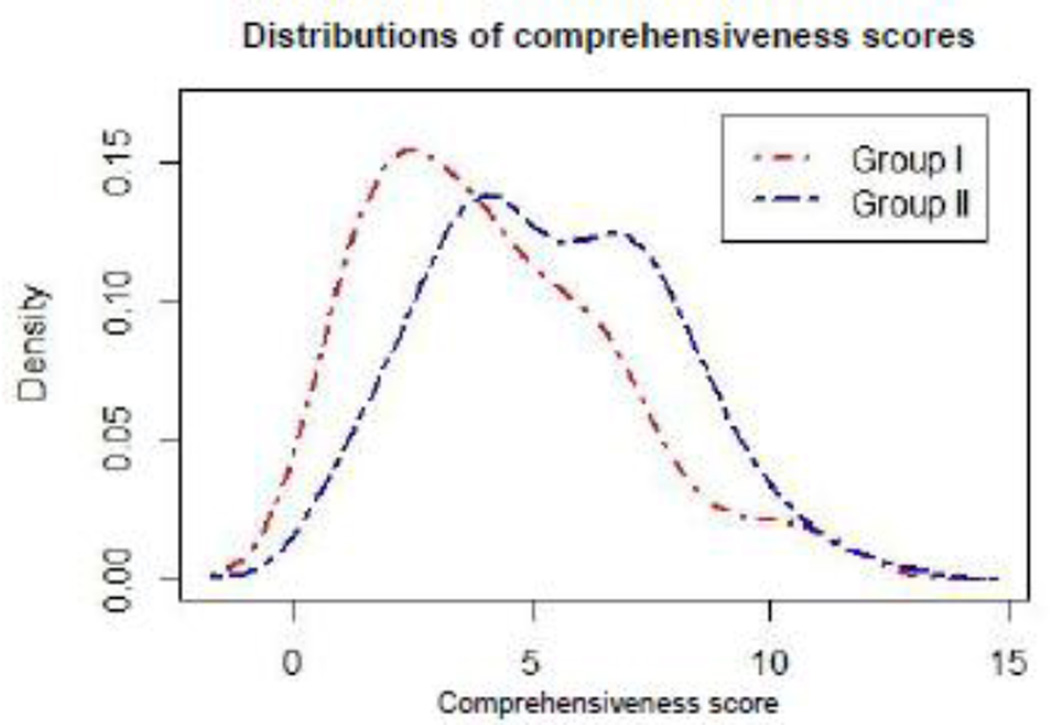

Effectiveness – reporting comprehensiveness

The number of text chunks (5.4±2.5) and the mean chunk length (37.7±18.6) in the treatment group were significantly (p < 0.001) greater than those in the control group (4.1±2.5 and 30.3±13.1). Most text chunks were scored as qualified in the comprehensiveness review, yielding mean reporting comprehensiveness scores of 5.1±2.4 in the treatment group and 3.8±2.3 in the control group. Taking the comprehensiveness score as the numerator and the number of text chunks as the denominator, the approximate qualified text rate was 92.7% versus 94.5% (p = 0.984) in the control and treatment groups, respectively. Figure 4 illustrates the distribution of scores between the groups. The difference in comprehensiveness scores is statistically significant, indicating that the prediction functions were an effective intervention.
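The qualified text rate can be worked through with the rounded group means from Table II. This is an approximation that assumes the rate is the ratio of the group means. The reported 94.5% for the treatment group was presumably computed from unrounded values or per report.

$$
\text{qualified text rate} = \frac{\text{comprehensiveness score}}{\text{number of text chunks}}, \qquad
\frac{3.8}{4.1} \approx 0.927 \;(\text{control}), \qquad
\frac{5.1}{5.4} \approx 0.944 \;(\text{treatment})
$$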

Figure 4.

Completeness scores in the control group (I) and treatment group (II)

Participant’s engagement – non-adherence rate

The Chi-squared test identified a significant difference in the non-adherence rates of the narrative comment field between the groups. In the control group, 20 of 125 comment fields were left blank, whereas only 2 of 135 were left blank in the treatment group. The lower non-adherence rate indicates that participants in the treatment group were more actively engaged in describing event details. Such an effect was expected because of the contribution of CL in the treatment group.
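The Chi-squared comparison can be reproduced from the counts reported above. This is a minimal standard-library Python sketch of Pearson's test for a 2×2 table. With one degree of freedom, the p-value equals `erfc(sqrt(x / 2))`. The source does not state whether Yates' continuity correction was used, so the sketch makes it optional.

```python
from math import erfc, sqrt


def chi_squared_2x2(table, yates=True):
    """Pearson chi-squared test for a 2x2 table, optionally with Yates' correction.

    Returns (statistic, p-value); with one degree of freedom the survival
    function is erfc(sqrt(x / 2)).
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    rows, cols = (a + b, c + d), (a + c, b + d)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = rows[i] * cols[j] / n
            diff = abs(observed - expected)
            if yates:
                diff = max(diff - 0.5, 0.0)
            stat += diff ** 2 / expected
    return stat, erfc(sqrt(stat / 2))


# Rows: control, treatment; columns: blank, completed comment fields
stat, p = chi_squared_2x2([[20, 105], [2, 133]])
print(f"chi2 = {stat:.2f}, p = {p:.1e}")
```

The difference is significant at p < 0.001 with or without the correction. SciPy's `scipy.stats.chi2_contingency` performs the same test and applies Yates' correction to 2×2 tables by default.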

AS – utility and influence rate

Among the 133 narrative comments generated in the treatment group, AS was used 460 times across 120 comments, giving an AS utility rate of 90.2%. On average, AS influenced 3.8±1.9 text chunks per comment, with an overall influential rate of 66.9%. The regression analysis showed that this influential rate increased as the experiment proceeded (p = 0.029). Meanwhile, the text generation rate in the treatment group increased at a steady pace, as shown in Figure 5. In contrast, the text generation rates in the control group remained relatively flat throughout the reporting sessions.

Figure 5.

Text generation rates varying as the experiment proceeded in the control group (I) and treatment group (II)

5. Discussion

Clinicians working under time constraints are usually expected to enter documentation data in a timely and efficient manner[31, 32]. The comprehensiveness of entered data is critical to clinicians' decision-making and to the creation of actionable knowledge. To meet this expectation in patient safety event reporting, we introduced two text prediction functions, CL and AS, attached to the narrative comment field that is pervasive in patient safety reporting systems. A two-group randomized design examined the impact of the prediction functions on participant performance in terms of efficiency, effectiveness and engagement. The results are positive and may guide the design and optimization of reporting systems toward a safer healthcare system.

5.1. Reporting efficiency

One major finding is that text prediction can improve participants' efficiency, which is critical for busy clinicians. The study examined three efficiency measures: completion time, keystrokes and text generation rate. Within a similar period of time, the treatment group produced much more text than the control group, which translated into a higher text generation rate. The upward trend of text generation rate in the treatment group suggests that the benefits of the prediction functions become more manifest as users adapt to them.

In the treatment group, participants generated more text with 28.2% fewer keystrokes, consistent with study results in a variety of fields[14, 33]. Nevertheless, introducing prediction also increases cognitive load, eye gaze movements and the total number of mouse clicks, which has led to mixed results, so keystroke savings alone may not adequately explain the increased efficiency in this study[34–36]. As health information systems migrate from desktops to mobile terminals, the value of keystroke savings would be amplified, because keystrokes on on-screen keyboards usually cost more time than those on regular computer keyboards.

5.2. Reporting comprehensiveness

The prediction functions CL and AS contributed to the comprehensiveness scores of narrative comments by cueing frequent characteristic categories, sentences and terms associated with the event, thereby increasing the breadth and depth of comments. The functions served as mnemonic tips that transform a pure recall process into a mixed effort of recall and recognition. Consequently, participants in the treatment group generated more information in terms of text length, number of text chunks and comprehensiveness scores.

The qualified text rate measures the percentage of valid and useful text that reporters generated. We noted that typographical errors and imprecise phrases were inevitable in the text generated by both groups; however, the treatment group tended to have fewer such issues than the control group. Although the qualified text rate in this study was merely an estimate, the cueing functions used in the treatment group suggest a supportive application for event reporting systems.

5.3. Participant’s engagement

In contrast to our previous study, which showed a 73.3% non-adherence rate among inexperienced users[7], the experienced users in both groups of this study were much more engaged in generating comments. We believe that experienced participants possess better knowledge and reporting skills, which help them identify the essence of reports and complete the case descriptions in depth.

As shown in Table II, the non-adherence rate was 16.0% in the control group and 1.5% in the treatment group, which we attribute to the introduction of CL in the comment field. In the post-experiment survey, some participants in the control group explained their non-adherence as (1) a slip of unintentionally skipping the field; (2) having no clues for further describing event characteristics; and (3) memory fade. As a remedy, the dynamic display of CL may have altered the optional nature of the comment field or drawn participants' conscious attention[37] to it. The dynamic CL conveyed a compelling message signaling the importance of filling in the field.

5.4. Limitations and Future Research

The investigated comment field served as a complementary component: a single narrative data field functioning as a catch-all after MCQs on the same topic. As a result, the findings may not be fully applicable to free-text fields that are not preceded by structured entries. Patient safety topics can also be more complex when they extend beyond a single event type.

The high-quality CL and AS entries in this study were prepared manually, and the same quality may not be achievable at scale when a fully automatic approach based on case similarity and event frequency is employed. Expert review of CL is therefore highly recommended to reach the quality and completeness effect presented in this study. Likewise, the AS entries carefully prepared by domain experts may not represent those created by an automatic approach, and further studies developing domain-specific AS should include an expert review process to ensure performance and user experience.

The appropriate number of predicted listings may vary from one setting to another. In general, a longer list costs a reporter more time to skim or inspect, and thus increases the chance of missing correct responses when time is limited. We are interested in further investigating whether there is a trade-off between the accuracy and length of the predicted listings and how the trade-off would impact participants' performance. For this reason, the increased comprehensiveness can only be partially credited to the introduced AS and CL functions.

Although the text generation rate and accuracy increased, the AS function may become a constraint that limits participants' breadth of recall and thinking at certain points. This is because, when predefined options are provided, as in MCQs, event classifications and error types, busy users tend to recognize or ignore choices rather than specify answers[8, 38, 39]. Future research should therefore address this open question of balancing speed and quality.

6. Conclusion

Text data entry, an indispensable format in clinical information systems, was shown in a two-group randomized experiment to be enhanced by text prediction functions in terms of efficiency, data quality and engagement. This study demonstrated the value of text prediction functions in facilitating text data entry by experienced domain users reporting patient safety events. The quality of event reporting plays a key role in learning from events. Simply counting reports without insight into the quality of their content beyond the structured data fields may undermine the learning value of the reports.

Highlights of this study.

A two-group randomized study testing the usability of two proposed text prediction functions in patient safety event reporting

52 experienced nurses in a top-level hospital in China participated in the experiment

Text prediction promoted users' engagement with the narrative comment field

Text prediction improved efficiency by increasing the text generation rate

Text prediction improved data completeness

Summary Table.

Known on the topic

Text data entry is indispensable to quality care delivery but suffers from low data quality and low user engagement

Text prediction is widely used in mobile computing.

Our contribution

Text prediction can increase the efficiency, data quality and user engagement of text data entry if the functions are properly designed;

Text prediction can still help experienced users enhance their performance;

An initial investigation of text prediction functions in support of documentation for reporting patient safety events.

Acknowledgments

The authors express heartfelt thanks to Xiao Liu, RN and Ying Sun, RN at the Office of Nursing at Tianjin First Central Hospital in China, who helped review and edit the testing cases, derived proper text predictor responses, scheduled the testing sessions, and graded the subjects' commentary data. This project was supported in part by a grant on patient safety from the University of Texas System and a grant from AHRQ (grant number 1R01HS022895).


Authors’ Contributions

Yang Gong: Responsible for study design, data collection and analysis; co-authored the manuscript with review and revision responsibilities.

Lei Hua: Responsible for the conceptualization, study design, prototype development, testing session observation, data collection and analysis, co-authored the manuscript.

Shen Wang: Organized and coordinated with the study participants, assisted in preparing testing cases, data analysis and had review responsibilities.

Conflicts of Interest

We declare there are no conflicts of interest involved in the research.

References

- 1.McDonald CJ. The barriers to electronic medical record systems and how to overcome them. Journal of the American Medical Informatics Association. 1997 May 1;4(3):213–221. doi: 10.1136/jamia.1997.0040213. 1997. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Kaplan B. Reducing barriers to physician data entry for computer-based patient records. Topics in health information management. 1994 Aug;15(1):24–34. PubMed PMID: 10135720. Epub 1994/07/07. eng. [PubMed] [Google Scholar]

- 3. Walsh SH. The clinician's perspective on electronic health records and how they can affect patient care. BMJ. 2004 May;328(7449):1184–1187. doi: 10.1136/bmj.328.7449.1184.

- 4. Hua L, Wang S, Gong Y. Text prediction on structured data entry in healthcare: a two-group randomized usability study measuring the prediction impact on user performance. Applied Clinical Informatics. 2014;5(1):249–263. doi: 10.4338/ACI-2013-11-RA-0095. PMID: 24734137; PMCID: PMC3974259.

- 5. AHRQ. Users' guide: AHRQ Common Formats for patient safety organizations. Agency for Healthcare Research and Quality; 2008.

- 6. Towards an international classification for patient safety: the conceptual framework. 2009. doi: 10.1093/intqhc/mzn054. Available from: http://library.tue.nl/csp/dare/LinkToRepository.csp?recordnumber=657653.

- 7. Hua L, Gong Y. Design of a user-centered voluntary patient safety reporting system: understanding the time and response variances by retrospective think-aloud protocols. Studies in Health Technology and Informatics. 2013;192:729–733. PMID: 23920653.

- 8. Gong Y. Data consistency in a voluntary medical incident reporting system. Journal of Medical Systems. 2011 Aug;35(4):609–615. doi: 10.1007/s10916-009-9398-y. PMID: 20703528.

- 9. Holden RJ, Karsh BT. A review of medical error reporting system design considerations and a proposed cross-level systems research framework. Human Factors. 2007 Apr;49(2):257–276. doi: 10.1518/001872007X312487. PMID: 17447667.

- 10. Klein G. Sources of power: how people make decisions. Revised ed. The MIT Press; 1999.

- 11. Beukelman D, Mirenda P. Augmentative and alternative communication. 2005.

- 12. Garay-Vitoria N, Abascal J. Text prediction systems: a survey. Universal Access in the Information Society. 2006;4(3):188–203.

- 13. MacKenzie IS, Soukoreff RW. Text entry for mobile computing: models and methods, theory and practice. Human-Computer Interaction. 2002;17(2–3):147–198.

- 14. Eng J, Eisner JM. Informatics in radiology (infoRAD): radiology report entry with automatic phrase completion driven by language modeling. Radiographics. 2004 Sep;24(5):1493–1501. doi: 10.1148/rg.245035197.

- 15. Hunnicutt S, Carlberger J. Improving word prediction using Markov models and heuristic methods. Augmentative and Alternative Communication. 2001;17(4):255–264.

- 16. Koester HH, Levine SP. Learning and performance of able-bodied individuals using scanning systems with and without word prediction. Assistive Technology. 1994;6(1):42–53. doi: 10.1080/10400435.1994.10132226. PMID: 10147209.

- 17. Kim J, Bates DW. Results of a survey on medical error reporting systems in Korean hospitals. International Journal of Medical Informatics. 2006 Feb;75(2):148–155. doi: 10.1016/j.ijmedinf.2005.06.005. PMID: 16095963.

- 18. Gong Y. Terminology in a voluntary medical incident reporting system: a human-centered perspective. In: Proceedings of the 1st ACM International Health Informatics Symposium; Arlington, Virginia, USA. ACM; 2010. pp. 2–7.

- 19. Rosenthal J, Takach M. 2007 guide to state adverse event reporting systems. National Academy for State Health Policy; 2007.

- 20. Kohn LT, Corrigan JM, Donaldson MS, editors. To err is human: building a safer health system. Report of the Committee on Quality of Health Care in America, Institute of Medicine. Washington, DC: National Academy Press; 1999.

- 21. Hua L, Gong Y. Developing a user-centered voluntary medical incident reporting system. Studies in Health Technology and Informatics. 2010;160(Pt 1):203–207.

- 22. Hua L, Gong Y. Usability evaluation of a voluntary patient safety reporting system: understanding the difference between predicted and observed time values by retrospective think-aloud protocols. In: Kurosu M, editor. Human-Computer Interaction: Applications and Services. Lecture Notes in Computer Science, vol. 8005. Berlin, Heidelberg: Springer; 2013. pp. 94–100.

- 23. Hua L, Gong Y. Information gaps in reporting patient falls: the challenges and technical solutions. Studies in Health Technology and Informatics. 2013;194:113–118. PMID: 23941941.

- 24. AHRQ. Common Formats. 2011. Available from: http://www.pso.ahrq.gov/formats/commonfmt.htm.

- 25. jQuery. Available from: http://jquery.com/

- 26. SlidesJS. Available from: http://www.slidesjs.com

- 27. Ehlke A, Challand S, Schmidt T, Carneiro L. Tag-it. Available from: http://aehlke.github.io/tag-it/

- 28. Higginbotham DJ, Bisantz AM, Sunm M, Adams K, Yik F. The effect of context priming and task type on augmentative communication performance. Augmentative and Alternative Communication. 2009;25(1):19–31. doi: 10.1080/07434610802131869.

- 29. AHRQ. Web M&M: Morbidity and Mortality Rounds on the Web: cases & commentaries. [cited 2011 12/4]. Available from: http://www.webmm.ahrq.gov/home.aspx.

- 30. Matts JP, Lachin JM. Properties of permuted-block randomization in clinical trials. Controlled Clinical Trials. 1988;9(4):327–344. doi: 10.1016/0197-2456(88)90047-5.

- 31. Allan J, Englebright J. Patient-centered documentation: an effective and efficient use of clinical information systems. Journal of Nursing Administration. 2000;30(2):90–95. doi: 10.1097/00005110-200002000-00006.

- 32. Poissant L, Pereira J, Tamblyn R, Kawasumi Y. The impact of electronic health records on time efficiency of physicians and nurses: a systematic review. Journal of the American Medical Informatics Association. 2005 Sep;12(5):505–516. doi: 10.1197/jamia.M1700.

- 33. Tuttle MS, Olson NE, Keck KD, Cole WG, Erlbaum MS, Sherertz DD, et al. Metaphrase: an aid to the clinical conceptualization and formalization of patient problems in healthcare enterprises. Methods of Information in Medicine. 1998;37(4–5):373–383.

- 34. Light J, Lindsay P, Siegel L, Parnes P. The effects of message encoding techniques on recall by literate adults using AAC systems. Augmentative and Alternative Communication. 1990;6(3):184–201.

- 35. Koester HH, Levine SP. Effect of a word prediction feature on user performance. Augmentative and Alternative Communication. 1996;12(3):155–168.

- 36. Goodenough-Trepagnier C, Rosen M. Predictive assessment for communication aid prescription: motor-determined maximum communication rate. In: The vocally impaired: clinical practice and research. 1988. pp. 167–185.

- 37. Norman DA. Cognitive artifacts. In: Designing interaction. Cambridge University Press; 1991. pp. 17–38.

- 38. Tamuz M, Harrison MI. Improving patient safety in hospitals: contributions of high-reliability theory and normal accident theory. Health Services Research. 2006;41(4 Pt 2):1654–1676. doi: 10.1111/j.1475-6773.2006.00570.x.

- 39. Tamuz M, Thomas E, Franchois K. Defining and classifying medical error: lessons for patient safety reporting systems. Quality and Safety in Health Care. 2004;13(1):13–20. doi: 10.1136/qshc.2002.003376.