Abstract

PReMiuM is a recently developed R package for Bayesian clustering using a Dirichlet process mixture model. This model is an alternative to regression models, non-parametrically linking a response vector to covariate data through cluster membership (Molitor, Papathomas, Jerrett, and Richardson 2010). The package allows binary, categorical, count and continuous responses, as well as continuous and discrete covariates. Additionally, predictions may be made for the response, and missing values for the covariates are handled. Several samplers and label switching moves are implemented, along with diagnostic tools to assess convergence. A number of R functions for post-processing of the output are also provided. Beyond fitting mixtures, it may also be of interest to determine which covariates actively drive the mixture components; the package implements this as variable selection.

Keywords: profile regression, clustering, Dirichlet process mixture model

1. Introduction

Profile regression is an alternative to regression models when one wishes to make inference beyond main effects for datasets with potentially correlated covariates. In particular, profile regression non-parametrically links a response vector to covariate data through cluster membership (Molitor et al. 2010). We have implemented this method in the R (R Core Team 2014) package PReMiuM (Hastie, Liverani, and Richardson 2015).

PReMiuM performs Bayesian clustering using a Dirichlet process mixture model and allows binary, categorical, count and continuous responses, as well as continuous and discrete covariates. Moreover, predictions may be made for the response, and missing values for the covariates are handled. Several samplers and label switching moves are implemented, along with diagnostic tools to assess convergence. A number of R functions for post-processing of the output are also provided. Beyond fitting mixtures, it may also be of interest to determine which covariates actively drive the mixture components; the package implements this as variable selection.

In order to demonstrate the PReMiuM package, it is helpful to present an overview of the Dirichlet process. We begin this section by re-familiarizing the reader with such a process, introducing notation that we shall call upon throughout the paper. Formally, let (Ω, 𝓕, P) be a probability space comprising a state space Ω with associated σ-field 𝓕 and a probability measure P. We say that a probability measure P follows a Dirichlet process with concentration parameter α and base distribution PΘ0 parametrized by Θ0, written P ~ DP(α, PΘ0) if

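$$\big(P(A_1), P(A_2), \ldots, P(A_r)\big) \sim \mathrm{Dirichlet}\big(\alpha P_{\Theta_0}(A_1), \alpha P_{\Theta_0}(A_2), \ldots, \alpha P_{\Theta_0}(A_r)\big) \tag{1}$$

for all A1, A2, …, Ar ∈ 𝓕 such that Ai ∩ Aj = ∅ for all i ≠ j and A1 ∪ A2 ∪ … ∪ Ar = Ω.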

1.1. The stick-breaking construction

Although the definition in Equation 1 is rather abstract, the existence of such a process has been proved in a variety of ways, using a number of different formulations (Ferguson 1973; Blackwell and MacQueen 1973). In this paper we focus on Dirichlet process mixture models (DPMM), based upon the following simplified constructive definition of the Dirichlet process, due to Sethuraman (1994). If

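$$\begin{aligned}
P &= \sum_{c=1}^{\infty} \psi_c\, \delta_{\Theta_c}, \\
\Theta_c &\sim P_{\Theta_0}, \qquad c \in \mathbb{Z}^+, \\
\psi_1 &= V_1, \qquad \psi_c = V_c \prod_{l=1}^{c-1} (1 - V_l), \qquad c > 1, \\
V_c &\sim \mathrm{Beta}(1, \alpha), \qquad c \in \mathbb{Z}^+,
\end{aligned} \tag{2}$$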

where δx denotes the Dirac delta function concentrated at x and Θc is independent of Vc for c ∈ ℤ+, then P ~ DP(α, PΘ0). This formulation for V and ψ is known as a stick-breaking distribution. Importantly, the distribution P is discrete, because draws from P can only take the values in the set {Θc : c ∈ ℤ+}.

As noted by many authors (for example, Ishwaran and James 2001; Kalli, Griffin, and Walker 2011), it is possible to extend the above formulation to more general stick-breaking formulations, for example allowing Vc ~ Beta(ac, bc) independently, resulting in a generalized Dirichlet process such as the two-parameter Poisson-Dirichlet process (Pitman and Yor 1997). The methods and results that we propose within this paper hold for such generalized processes, but at present the package is only coded to implement the Dirichlet process in Equation 2 and the Poisson-Dirichlet process with Vc ~ Beta(1 − d, α + cd), where d ∈ [0, 1) and α > −d (the latter condition guarantees α + cd > 0 for every c ≥ 1). The Dirichlet process is the special case d = 0 of the Poisson-Dirichlet process.
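The stick-breaking construction translates directly into code. The following is a minimal Python sketch, not PReMiuM's implementation (whose samplers are written in C++): the function name, the truncation at K sticks and the Normal(0, 1) base distribution in the usage lines are illustrative assumptions. Setting d = 0 gives the Dirichlet process weights of Equation 2, while 0 < d < 1 gives the Poisson-Dirichlet weights.

```python
import random


def stick_breaking_weights(alpha, K, d=0.0, rng=random):
    """Return the first K stick-breaking weights psi_1..psi_K and the leftover mass.

    V_c ~ Beta(1 - d, alpha + c * d) independently; d = 0 gives the Dirichlet
    process, V_c ~ Beta(1, alpha). Requires 0 <= d < 1 and alpha > -d.
    """
    if not (0.0 <= d < 1.0 and alpha > -d):
        raise ValueError("need 0 <= d < 1 and alpha > -d")
    weights = []
    remaining = 1.0  # prod_{l < c} (1 - V_l): length of stick still unbroken
    for c in range(1, K + 1):
        v = rng.betavariate(1.0 - d, alpha + c * d)
        weights.append(v * remaining)  # psi_c = V_c * prod_{l < c} (1 - V_l)
        remaining *= 1.0 - v
    return weights, remaining  # remaining = total weight of components c > K


if __name__ == "__main__":
    rng = random.Random(1)
    psi, rest = stick_breaking_weights(alpha=1.0, K=50, rng=rng)
    atoms = [rng.gauss(0.0, 1.0) for _ in psi]      # Theta_c ~ N(0, 1): assumed base distribution
    draws = rng.choices(atoms, weights=psi, k=200)  # approximate draws from P (ignores `rest`)
    print(len(set(draws)), "distinct values among 200 draws; leftover mass", rest)
```

Because only finitely many atoms carry the sampled weights, the 200 draws necessarily contain repeated values, which mirrors the discreteness of P noted above.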

Typically, because of the complexity of the models based on the stick-breaking construction, inference is made in a Bayesian framework using Markov chain Monte Carlo (MCMC) methods. Until recently, a perceived difficulty in making inference about this model was the infinite number of parameters within the stick breaking construction. Historically, this obstacle has resulted in the use of algorithms that either explore marginal spaces where some parameters are integrated out or use truncated approximations to the full Dirichlet process mixture model, see for example Neal (2000) and Ishwaran and James (2001).

More recently, two alternative innovative approaches to sampling the full DPMM have been proposed. The first, introduced by Walker (2007), uses a novel slice sampling approach, resulting in full conditionals that may be explored by the use of a Gibbs sampler. The slice sampling method updates the cluster allocations jointly, as opposed to the marginal methods, which require as many Gibbs steps as there are observations to cluster. The difficulty of the proposed approach is the introduction of constraints that complicate the updates of the mixture component weights, leading to potential mixing issues. To overcome this, Kalli et al. (2011) generalize this sampler, adding further auxiliary variables, and report good convergence results, although the authors note that the algorithm is sensitive to these additional parameters. The second distinct MCMC sampling approach was proposed in parallel by Papaspiliopoulos and Roberts (2008). The proposed sampler again uses a Gibbs sampling approach, but is based upon an idea termed retrospective sampling, allowing a dynamic approach to the determination of the number of components (and their parameters) that adapts as the sampler progresses. The cost of this approach is an ingenious but complex Metropolis-within-Gibbs step to determine cluster membership.

Despite the apparent differences between the two strategies, Papaspiliopoulos (2008) noted that the two algorithms can be effectively combined to yield an algorithm that improves on both of the originals. The resulting sampler was implemented and presented by Yau, Papaspiliopoulos, Roberts, and Holmes (2011), and a similar version was presented by Dunson (2009) for DPMM. The sampler presented in this paper is our interpretation of these ideas, implemented as an R package. This package, called PReMiuM, is based upon efficient underlying C++ code for general DPMM sampling.

The aims behind the product partition model (PPMx) in Müller, Quintana, and Rosner (2011) and Quintana, Müller, and Papoila (2013) are very similar to ours. Furthermore, both sets of work adopt flexible Bayesian partition models based on the Dirichlet process (DP), although the PPMx approach is adaptable to formulations other than the DP. However, there are significant differences in how the two models are built. For example, the PPMx model is built by considering the likelihood of the partition given the covariates and variable selection parameters, using similarity functions. In contrast, we consider the likelihood of the covariates given the partition and variable selection parameters. The two modeling approaches offer different options for defining the dependence structure between the quantities of interest, and we would argue that it is a matter of personal preference which one should be adopted.

In Section 2 we formally describe the Dirichlet process mixture model implemented in PReMiuM and the blocked MCMC sampler used. In Section 3 we discuss profile regression and its link with the response and covariate models included in the package, while in Section 4 we discuss how predictions are computed. In Section 5 we give an overview of the postprocessing tools available in PReMiuM to learn from the rich output produced by our Bayesian model, and in Section 6 we discuss diagnostic tools that we propose to investigate the convergence of the MCMC. Finally, in Section 7 we give a brief overview of the structure of the code, and in Section 8 we show examples of its use, together with an indication of run times.

2. Sampling the Dirichlet process mixture model

2.1. Definition and properties

Perhaps the most common application of the Dirichlet process is in clustering data, where it can be used as the prior distribution for the parameters of an infinite mixture model. Consider again the stick breaking construction in Equation 2. For the DPMM, the (possibly multivariate) observed data D = (D1, D2, …, Dn) follow an infinite mixture distribution, where component c of the mixture is a parametric density of the form fc(·) = f (·|Θc, Λ) parametrized by some component specific parameter Θc and some global parameter Λ. Defining (latent) parameters as draws from a probability distribution P following a Dirichlet process DP (α, PΘ0) and again denoting the Dirac delta function by δ, this system can be written as

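$$\begin{aligned}
D_i \mid \tilde{\Theta}_i, \Lambda &\sim f(D_i \mid \tilde{\Theta}_i, \Lambda), \qquad i = 1, 2, \ldots, n, \\
\tilde{\Theta}_i &\sim P = \sum_{c=1}^{\infty} \psi_c\, \delta_{\Theta_c}, \\
\Theta_c &\sim P_{\Theta_0}, \\
\psi_1 &= V_1, \qquad \psi_c = V_c \prod_{l=1}^{c-1} (1 - V_l), \qquad c > 1, \\
V_c &\sim \mathrm{Beta}(1, \alpha),
\end{aligned} \tag{3}$$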

with Θc independent of Vc for c ∈ ℤ+.

When making inference using mixture models (either finite or infinite) it is common practice to introduce a vector of latent allocation variables Z. Such variables enable us to explicitly characterize the clustering and additionally facilitate the design of MCMC samplers. Adopting this approach and writing ψ = (ψ1, ψ2,…) and Θ = (Θ1, Θ2, …), we re-write Equation 3 as

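$$\begin{aligned}
D_i \mid Z, \Theta, \Lambda &\sim f(D_i \mid \Theta_{Z_i}, \Lambda), \qquad i = 1, 2, \ldots, n, \\
P(Z_i = c \mid \psi) &= \psi_c, \qquad c \in \mathbb{Z}^+, \\
\Theta_c &\sim P_{\Theta_0}, \\
\psi_1 &= V_1, \qquad \psi_c = V_c \prod_{l=1}^{c-1} (1 - V_l), \qquad c > 1, \\
V_c &\sim \mathrm{Beta}(1, \alpha),
\end{aligned} \tag{4}$$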

with Θc independent of Vc for c ∈ ℤ+.

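The likelihood of Di associated with the DPMM is simply the first line of Equation 4. Integrating out the latent variable Zi, we obtain the more recognizable mixture likelihood

$$p(D_i \mid \psi, \Theta, \Lambda) = \sum_{c=1}^{\infty} \psi_c\, f(D_i \mid \Theta_c, \Lambda).$$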
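Evaluating this mixture likelihood numerically requires care, because individual component densities can be very small. A minimal Python sketch, assuming the infinite sum has been truncated to a finite list of components (an approximation made only for this illustration; the function name is hypothetical), combines the terms with the log-sum-exp trick:

```python
import math


def log_mixture_likelihood(log_psi, log_f):
    """log sum_c psi_c f(D_i | Theta_c, Lambda) over a finite set of components.

    log_psi[c]: log psi_c;  log_f[c]: log f(D_i | Theta_c, Lambda).
    Uses the log-sum-exp trick so that very small densities do not underflow.
    """
    terms = [lp + lf for lp, lf in zip(log_psi, log_f)]
    m = max(terms)
    return m + math.log(sum(math.exp(t - m) for t in terms))


if __name__ == "__main__":
    def normal_logpdf(x, mu, sd):
        return -0.5 * math.log(2 * math.pi * sd ** 2) - 0.5 * ((x - mu) / sd) ** 2

    psi = [0.3, 0.7]
    x = 1.0
    log_f = [normal_logpdf(x, 0.0, 1.0), normal_logpdf(x, 3.0, 1.0)]
    print(log_mixture_likelihood([math.log(p) for p in psi], log_f))
```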

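The remainder of Equation 4 provides the prior specification for the DPMM, allowing us to write the joint posterior distribution as

$$p(\Theta, V, Z, \Lambda \mid D) \propto p(\Lambda) \prod_{c=1}^{\infty} p(V_c \mid \alpha)\, p(\Theta_c \mid \Theta_0) \prod_{i=1}^{n} \psi_{Z_i}\, f(D_i \mid \Theta_{Z_i}, \Lambda). \tag{5}$$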

Of course, additional layers of hierarchy could easily be introduced, for example through hyper-priors for Θ0.

2.2. The MCMC sampler

A common approach to MCMC sampling from the DPMM is to integrate out V and use a Gibbs sampler on the resulting space. Such samplers are commonly referred to as Pólya urn samplers, since they are motivated by the Pólya urn representation of a Dirichlet process introduced by Blackwell and MacQueen (1973). Ishwaran and James (2001) provide a review of this approach and demonstrate that conditionals of the type p(Zi|Z−i) can be derived, where Z−i = (Z1, …, Zi−1, Zi+1, …, Zn). Many other authors have focused on developing alternative samplers of this nature, including Neal (2000) and Green (2010). However, as noted by many authors, samplers of this nature, where the allocation of a single observation is conditional on the allocations of all other observations, can often suffer from poor mixing. This motivates the need for an alternative class of samplers that sample from the full model in Equation 4.
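For intuition about these marginal samplers, the following Python sketch computes the Pólya urn prior conditional p(Zi | Z−i) of Blackwell and MacQueen (1973) for a Dirichlet process with concentration α. It is an illustration only, not part of PReMiuM, and the function name is hypothetical. In a full sweep each weight would be multiplied by the likelihood of Di under the corresponding cluster (for a new cluster, the likelihood integrated against PΘ0) before resampling Zi, one observation at a time.

```python
from collections import Counter


def polya_urn_probs(z, i, alpha):
    """Prior conditional p(Z_i | Z_{-i}) for a Dirichlet process (Polya urn scheme).

    z: current allocation labels (a list); i: index of the observation to move.
    Returns {label: probability} for the occupied clusters of Z_{-i} plus the
    key "new" for a currently empty cluster.
    """
    others = z[:i] + z[i + 1:]          # Z_{-i}
    counts = Counter(others)            # n_{-i,c}: cluster sizes without i
    denom = len(others) + alpha
    probs = {c: n_c / denom for c, n_c in counts.items()}
    probs["new"] = alpha / denom
    return probs
```

For example, with z = [1, 1, 2, 3, 1], i = 4 and α = 1, cluster 1 receives probability 2/5 and each of clusters 2, 3 and "new" receives 1/5.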

In the full model, the posterior conditionals for the allocation variables Z depend upon an infinite number of variables V and Θ. One way to bypass this complication is to truncate the definition in Equation 4 to mixtures with C components (of which potentially only a subset will be non-empty). Ishwaran and James (2001) demonstrate that under this model the conditional distributions are standard distributions which can be easily sampled from. This is one of the three samplers implemented in our R package, and we refer to it as truncated. For the truncated sampler we have also implemented the more general Poisson-Dirichlet stick-breaking formulation (Pitman and Yor 1997), constructed by allowing Vc ~ Beta(1 − d, α + cd), where d ∈ [0, 1) and α > −d, in Equation 2. For this model α and d are fixed parameters.

Although the approach of Ishwaran and James (2001) resolves the challenges of sampling from the full model, if C is not chosen to be sufficiently large, then the posterior may be quite different on the truncated model space compared to the full model space. The authors provide some guidance for the choice of C, but more recent work by Walker (2007) and Papaspiliopoulos and Roberts (2008) demonstrates techniques that alleviate the need for such a truncation, whilst retaining many of the sampling properties of the full conditionals.
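A rough way to see why C must be chosen with care is the expected stick mass left after C breaks under the Dirichlet process prior, E[(1 − V1)⋯(1 − VC)] = (α/(1 + α))^C, since the Vc are independent Beta(1, α) variables with mean 1/(1 + α). The Python sketch below turns this into a heuristic truncation level. It is an illustration under these prior assumptions, with hypothetical function names, and is neither the guidance of Ishwaran and James (2001) nor a rule used by PReMiuM.

```python
import math


def expected_leftover_mass(alpha, C):
    """E[1 - sum_{c <= C} psi_c] = (alpha / (1 + alpha)) ** C under V_c ~ Beta(1, alpha)."""
    return (alpha / (1.0 + alpha)) ** C


def smallest_truncation(alpha, eps):
    """Smallest C whose expected leftover mass is below eps (a rough prior heuristic)."""
    C = max(1, math.ceil(math.log(eps) / math.log(alpha / (1.0 + alpha))))
    # Guard against floating-point rounding at the boundary in both directions.
    while expected_leftover_mass(alpha, C) >= eps:
        C += 1
    while C > 1 and expected_leftover_mass(alpha, C - 1) < eps:
        C -= 1
    return C
```

For example, smallest_truncation(1.0, 1e-4) returns 14, and for large α the required C grows roughly like α log(1/ε), so larger concentration parameters call for much deeper truncations.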

The first step is to borrow the idea of Walker (2007) and introduce auxiliary variables U = (U1, U2, …, Un) such that the joint posterior can be re-written as

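$$p(Z_i = c, U_i \mid \psi) = \mathbb{1}_{\{U_i < \psi_c\}}, \qquad c \in \mathbb{Z}^+,\ U_i \in (0, 1), \tag{6}$$

$$p(\Theta, V, Z, U, \Lambda \mid D) \propto p(\Lambda) \prod_{c=1}^{\infty} p(V_c \mid \alpha)\, p(\Theta_c \mid \Theta_0) \prod_{i=1}^{n} \mathbb{1}_{\{U_i < \psi_{Z_i}\}}\, f(D_i \mid \Theta_{Z_i}, \Lambda). \tag{7}$$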

Here, 1A is the indicator function, which takes the value 1 over the set A and 0 elsewhere. By combining this auxiliary variable approach with the notion of retrospective sampling (i.e., adopting a just-in-time approach to sampling empty mixture component parameters, as introduced in Papaspiliopoulos and Roberts, 2008), it is possible to construct an efficient Gibbs sampler for sampling from the joint posterior in Equation 7. Integrating U out of Equation 7 with respect to the Lebesgue measure yields the DPMM posterior distribution given in Equation 5, meaning that marginalizing samples of the joint distribution over U results in samples from the desired distribution.
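The allocation update implied by Equation 7 alternates two simple draws: Ui given Zi is uniform on (0, ψZi), and Zi given Ui is restricted to the finite set {c : ψc > Ui}, with probability proportional to f(Di | Θc, Λ). A minimal Python sketch of this step follows, assuming ψ and the log-likelihoods are already available for every component in the slice. The function name and arguments are hypothetical, and this is not the blocked sampler used in PReMiuM.

```python
import math
import random


def slice_update_allocation(z_i, psi, log_f_i, rng=random):
    """One slice-sampling update of (U_i, Z_i) targeting Equation 7.

    z_i:     current allocation, as a 0-based index into psi.
    psi:     weights psi_c of a finite list of components, assumed to contain
             every component with psi_c > U_i.
    log_f_i: log f(D_i | Theta_c, Lambda) for the same components.
    """
    u_i = rng.uniform(0.0, psi[z_i])                     # U_i | Z_i, psi ~ Uniform(0, psi_{Z_i})
    allowed = [c for c, w in enumerate(psi) if w > u_i]  # finite slice {c : psi_c > U_i}
    m = max(log_f_i[c] for c in allowed)
    # p(Z_i = c | U_i, ...) is proportional to f(D_i | Theta_c, Lambda) on the slice
    weights = [math.exp(log_f_i[c] - m) for c in allowed]
    return u_i, rng.choices(allowed, weights=weights, k=1)[0]
```

Note that the mixture weights ψ enter only through the slice itself; within the slice the allocation probabilities depend on the likelihood alone.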

Kalli et al. (2011) extend the idea of Walker (2007) to a general class of slice samplers by writing

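$$p(\Theta, V, Z, U, \Lambda \mid D) \propto p(\Lambda) \prod_{c=1}^{\infty} p(V_c \mid \alpha)\, p(\Theta_c \mid \Theta_0) \prod_{i=1}^{n} \mathbb{1}_{\{U_i < \xi_{Z_i}\}}\, \frac{\psi_{Z_i}}{\xi_{Z_i}}\, f(D_i \mid \Theta_{Z_i}, \Lambda), \tag{8}$$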

where ξ1, ξ2, … is any positive sequence.

When ξi = ψi, this corresponds to the efficient Gibbs sampler proposed by Papaspiliopoulos (2008) in the context of DPMM using parameter blocking. This is the second of the three samplers implemented in our R package, and we refer to it as slice dependent, in accordance with Kalli et al. (2011).

For the last of the three samplers implemented in our R package, which we refer to as slice independent in accordance with Kalli et al. (2011), we set ξi = (1 − κ)κ^(i − 1) with κ = 0.8, as proposed by Kalli et al. (2011).
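With the geometric sequence ξc = (1 − κ)κ^(c − 1), the slice {c : ξc > Ui} no longer depends on the random weights ψ, and its size can be computed in closed form. The Python sketch below (helper names are hypothetical) counts the components that an allocation update must consider for a given value of Ui, using κ = 0.8:

```python
import math


def xi(c, kappa=0.8):
    """xi_c = (1 - kappa) * kappa**(c - 1) for c = 1, 2, ...; positive and summing to 1."""
    return (1.0 - kappa) * kappa ** (c - 1)


def n_slice_components(u, kappa=0.8):
    """Number of components c with xi_c > u: the finite set an allocation update visits."""
    if u >= 1.0 - kappa:          # even xi_1 = 1 - kappa is not above u
        return 0
    # xi_c > u  <=>  c - 1 < log(u / (1 - kappa)) / log(kappa)
    n = math.floor(math.log(u / (1.0 - kappa)) / math.log(kappa)) + 1
    while n > 0 and xi(n, kappa) <= u:   # correct floating-point edge cases
        n -= 1
    while xi(n + 1, kappa) > u:
        n += 1
    return n
```

For instance, Ui = 0.01 gives 14 components, because ξ14 ≈ 0.0110 > 0.01 > ξ15 ≈ 0.0088. Smaller values of Ui enlarge the slice only logarithmically.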

Most importantly, these slice samplers permit the introduction of label switching moves, without which it is very difficult to obtain sufficient mixing. We discuss this in detail in Section 6.

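We continue by defining some new notation which is required to present our slice samplers. First, given the allocation variables Z, define

$$Z^\star = \max_{1 \le i \le n} Z_i.$$

Similarly, given the auxiliary variables U and the vector V, define

$$U^\star = \min_{1 \le i \le n} U_i$$

and

$$C^\star = \min\Big\{ l \in \mathbb{Z}^+ : \sum_{c=1}^{l} \psi_c > 1 - U^\star \Big\}. \tag{9}$$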

It is important to emphasize that these values potentially change at each sweep of the sampler, as the underlying variables change, although for simplicity of exposition we have omitted explicitly labeling the parameters with the sweep. The purpose of the variable C⋆ is to provide an upper limit on which mixture components need updating at each sweep. Specifically, although there are infinitely many component parameters in the model, since P(Zi = c|Ui > ψc) = 0, we need only concentrate our updating efforts on those components c for which ψc > Ui for some i = 1, 2, …, n. By defining C⋆ as in Equation 9 it can be shown (see Appendix A) that ψc < Ui for all c > C⋆ and all i = 1, 2, …, n. Assuming that the Markov chain is initialized accordingly and is updated using the correct conditionals, it is possible to show Z⋆ ≤ C⋆ < ∞ almost surely (details provided in Appendix A).
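The core of this argument fits in one line. Assuming C⋆ = min{l ∈ ℤ+ : ψ1 + ψ2 + … + ψl > 1 − U⋆} with U⋆ = min_i Ui, and using the fact that the stick-breaking weights sum to one almost surely, for every c > C⋆ and every i = 1, 2, …, n:

$$\psi_c \;\le\; \sum_{l > C^\star} \psi_l \;=\; 1 - \sum_{l=1}^{C^\star} \psi_l \;<\; U^\star \;\le\; U_i .$$

Hence every component c > C⋆ lies outside the slice {c : ψc > Ui} of every observation, and only components 1, 2, …, C⋆ need to be updated.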

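With these definitions in place we make use of the following sets and vectors (which again will change at each sweep):

$$A = \{1, 2, \ldots, Z^\star\}, \qquad P = \{Z^\star + 1, Z^\star + 2, \ldots, C^\star\}, \qquad I = \{C^\star + 1, C^\star + 2, \ldots\},$$

$$V_A = (V_c)_{c \in A},\quad V_P = (V_c)_{c \in P},\quad V_I = (V_c)_{c \in I},\qquad \Theta_A = (\Theta_c)_{c \in A},\quad \Theta_P = (\Theta_c)_{c \in P},\quad \Theta_I = (\Theta_c)_{c \in I}.$$

Here A, P and I are disjoint sets (updated at every sweep of the MCMC algorithm) that partition ℤ+, with names chosen to denote Active, Potential and Inactive components respectively. It is possible that P = ∅. By definition, all observations are allocated to a mixture component labeled by one of the indices in A. Components labeled with an index in P or I are necessarily empty (i.e., have no observations allocated to them), the difference being that at the next update of the allocation variables, components with labels in P may potentially become non-empty.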
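The bookkeeping behind these sets can be illustrated with a short Python sketch that computes Z⋆, U⋆, C⋆ and the sets A and P from current values of Z, U and ψ. It assumes C⋆ = min{l : ψ1 + … + ψl > 1 − U⋆} and that enough weights ψc have already been drawn; in the algorithm below, C⋆ and P are instead determined while the corresponding sticks are sampled. The function name is hypothetical.

```python
def active_potential_sets(z, u, psi):
    """Z*, U*, C* and the index sets A and P (I = {C* + 1, C* + 2, ...}).

    z:   allocations Z_1..Z_n as 1-based component labels.
    u:   auxiliary variables U_1..U_n.
    psi: psi_1, psi_2, ... (list index 0 holds psi_1); assumed long enough
         for the cumulative sum to exceed 1 - U*.
    """
    z_star, u_star = max(z), min(u)
    total = 0.0
    for c_star, w in enumerate(psi, start=1):
        total += w
        if total > 1.0 - u_star:    # C* = min{l : psi_1 + ... + psi_l > 1 - U*}
            break
    else:
        raise ValueError("psi too short; draw more sticks first")
    active = list(range(1, z_star + 1))               # A = {1, ..., Z*}
    potential = list(range(z_star + 1, c_star + 1))   # P = {Z* + 1, ..., C*}, possibly empty
    return z_star, u_star, c_star, active, potential
```

With ψc = 2^(−c), Z = (1, 2, 1) and U = (0.3, 0.1, 0.2), this gives Z⋆ = 2 and C⋆ = 4, so A = {1, 2} and P = {3, 4}, and every ψc with c > 4 is below U⋆ = 0.1.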

The blocked infinite DPMM algorithm can now be defined using the following blocked Gibbs updates to sample from the relevant conditionals.

DPMM algorithm

Suppose we are at sweep t of the sampler. Update as follows:

Step A. Compute Z⋆ and the set A.

Step B. Sample

Step C. Compute U⋆. Recompute Z⋆ and the set A.

Step D. Sample computing C⋆ and the set P in the process.

Step E. Sample

Step F. Sample

Step G. Sample

While this algorithm is somewhat generic, the blocking strategy is clearly highlighted. Further details explaining each of the steps are provided in Appendix B. The key idea is that by doing joint updates, we can marginalize out an infinite number of variables when necessary, to ensure that we are always sampling from conditional distributions that depend only upon a finite number of parameters. In particular, after marginalization, the parameters corresponding to the inactive set I do not contribute to the conditional distributions of the other parameters, so we do not actually need to sample their values. Since these parameters have no contribution to the likelihood, if values are subsequently required they can simply be sampled from the prior retrospectively as necessary. Although the sampler is written as a blocked Gibbs sampler, Metropolis-within-Gibbs steps are applied where it is not possible to sample directly from full conditionals (for example, in the update of Θ, depending upon the choices of f and PΘ0). Depending on the application, the Gibbs updates specified above may comprise several different Gibbs or Metropolis-within-Gibbs steps (for example, updating Θ and Λ). Typically, where Metropolis-Hastings updates are required, we advocate adopting an adaptive Metropolis-Hastings approach: see Andrieu and Thoms (2008) for a review.
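As an illustration of the adaptive approach, the following Python sketch tunes the scale of a one-dimensional random-walk Metropolis proposal with a Robbins-Monro update of its logarithm, in the spirit of the schemes reviewed by Andrieu and Thoms (2008). The target acceptance rate of 0.44 and the step-size sequence 1/√t are common choices that we assume here; they are not necessarily the settings used in PReMiuM, and the function name is hypothetical.

```python
import math
import random


def adaptive_rwm(log_target, x0, n_iter, target_accept=0.44, rng=random):
    """Random-walk Metropolis with a Robbins-Monro adapted proposal scale.

    After each iteration t the log proposal s.d. moves by
    (acceptance probability - target_accept) / sqrt(t), so the adaptation
    diminishes over time. Returns (samples, acceptance rate, final s.d.).
    """
    x, lp = x0, log_target(x0)
    log_sd, n_acc, samples = 0.0, 0, []
    for t in range(1, n_iter + 1):
        prop = x + rng.gauss(0.0, math.exp(log_sd))
        lp_prop = log_target(prop)
        accept_prob = math.exp(min(0.0, lp_prop - lp))
        if rng.random() < accept_prob:
            x, lp = prop, lp_prop
            n_acc += 1
        log_sd += (accept_prob - target_accept) / math.sqrt(t)
        samples.append(x)
    return samples, n_acc / n_iter, math.exp(log_sd)
```

For a Normal(3, 0.5²) target one would call adaptive_rwm(lambda x: -0.5 * ((x - 3.0) / 0.5) ** 2, 0.0, 20000). Because the adaptation step shrinks over time, the proposal scale settles down instead of chasing every fluctuation in the acceptance rate.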

3. Example models

The general sampler of the previous section is applicable to many specific models, depending on the choices of f and PΘ0. In this section we provide further details for some of the models that are implemented within our software. We detail the prior choices that are made within our implementation, but, of course, alternative priors could be chosen.

3.1. Gaussian mixtures

Perhaps the most common model to be implemented under the DPMM framework is the Gaussian mixture model, where D = X for some covariate data X, and X follows a mixture of Gaussian distributions. In this setting, for each cluster c, the cluster specific parameters are given by Θc = (µc, Σc), where µc is a mean vector and Σc is a covariance matrix. There are no additional global parameters Λ. For J-dimensional covariates Xi,

$$f(D_i \mid \Theta_c) = f(X_i \mid \mu_c, \Sigma_c) = (2\pi)^{-J/2}\, |\Sigma_c|^{-1/2} \exp\Big\{-\tfrac{1}{2}(X_i - \mu_c)^\top \Sigma_c^{-1} (X_i - \mu_c)\Big\}. \tag{10}$$

By choosing µc ∼ Normal(µ0, Σ0) and Σc ∼ InvWishart(R0, κ0) (for each c) for our prior model we have a conjugate model, permitting Gibbs updates for the parameters µA and ΣA associated with the active clusters, and also those (µP and ΣP) associated with the potential clusters. The choice of values for the hyperparameters Θ0 = (µ0, Σ0, R0, κ0) is discussed further in Section 7.
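For the active clusters, the conjugate update of the mean is a precision-weighted average. The Python sketch below shows the univariate case (J = 1), an assumption made for brevity: with nc points allocated to cluster c, the posterior precision is 1/σ0² + nc/σc², and the posterior mean combines µ0 and the data sum in proportion to their precisions. In the multivariate case the posterior precision becomes Σ0⁻¹ + nc Σc⁻¹. For an empty potential cluster the draw reduces to the prior. The function name is hypothetical.

```python
import math
import random


def sample_cluster_mean(x_c, sigma2_c, mu0, sigma2_0, rng=random):
    """Gibbs draw of mu_c given the points allocated to cluster c (univariate case).

    Prior mu_c ~ Normal(mu0, sigma2_0); data x ~ Normal(mu_c, sigma2_c).
    x_c may be empty (a potential cluster), in which case the prior is used.
    Returns (draw, posterior mean, posterior variance).
    """
    prec = 1.0 / sigma2_0 + len(x_c) / sigma2_c           # posterior precision
    mean = (mu0 / sigma2_0 + sum(x_c) / sigma2_c) / prec   # precision-weighted mean
    var = 1.0 / prec
    return rng.gauss(mean, math.sqrt(var)), mean, var
```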

3.2. Discrete mixtures

Clearly the DPMM model applies to mixtures other than the Gaussian one. Consider for example the case where for each individual i, Di = Xi is a vector of J locally independent discrete categorical random variables, where the number of categories for covariate j = 1, 2, …, J is Kj. Then we can write Θc = Φc = (Φc,1, Φc,2, …, Φc,J) with Φc,j = (ϕc,j,1, ϕc,j,2, …, ϕc,j,Kj), where ϕc,j,1 + ϕc,j,2 + … + ϕc,j,Kj = 1, and

$$f(D_i \mid \Theta_c) = f(X_i \mid \Phi_c) = \prod_{j=1}^{J} \phi_{c,j,X_{i,j}}. \tag{11}$$

Again, there are no global parameters Λ.

Letting Θ0 = a = (a1, a2, …, aJ), where aj = (aj,1, aj,2, …, aj,Kj) for j = 1, 2, …, J, and adopting conjugate Dirichlet priors Φc,j ∼ Dirichlet(aj), each Φc can be updated directly using Gibbs updates. For full details of the posterior conditional distribution see Molitor et al. (2010).
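The Dirichlet update only requires the category counts within the cluster: given the data, Φc,j follows a Dirichlet distribution with parameter aj plus the counts. A minimal Python sketch, assuming covariate values are coded 0, 1, …, Kj − 1 (an assumption of this illustration, as are the function names), draws it by normalizing independent Gamma variables, a standard construction of the Dirichlet distribution:

```python
import random


def sample_dirichlet(alpha, rng=random):
    """Draw from Dirichlet(alpha) by normalizing independent Gamma(alpha_k, 1) draws."""
    g = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(g)
    return [x / total for x in g]


def update_phi(x_j_in_c, a_j, rng=random):
    """Gibbs draw of Phi_{c,j} | X, Z ~ Dirichlet(a_j + counts) for covariate j in cluster c.

    x_j_in_c: values (coded 0..K_j - 1) of covariate j for members of cluster c.
    a_j:      prior Dirichlet parameters (a_{j,1}, ..., a_{j,K_j}).
    Returns (draw, posterior Dirichlet parameters).
    """
    counts = [0] * len(a_j)
    for k in x_j_in_c:
        counts[k] += 1
    posterior = [a + n for a, n in zip(a_j, counts)]
    return sample_dirichlet(posterior, rng), posterior
```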

3.3. Mixed mixtures

An alternative model is given by a mixture of some continuous and discrete random variables. Following the notation used above for Gaussian and discrete mixtures, for J1 continuous random variables and J2 discrete random variables,

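$$f(D_i \mid \Theta_c) = f\big(D_i^{(1)} \mid \mu_c, \Sigma_c\big)\, f\big(D_i^{(2)} \mid \Phi_c\big), \tag{12}$$

where Di^(1) is the subset of the J1 continuous random variables in Di, modeled as in Equation 10, and Di^(2) is the subset of the J2 categorical random variables in Di, modeled as in Equation 11. Note that we are assuming independence between continuous and categorical data conditional on the cluster allocations.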
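Because of the conditional independence in Equation 12, the log-likelihood of a mixed observation is the sum of a Gaussian term and a categorical term. The Python sketch below evaluates it for one cluster. To keep it short it assumes a diagonal Σc, which is a simplification of Equation 10, and categories coded 0, 1, …, Kj − 1; the function name is hypothetical.

```python
import math


def log_f_mixed(x_cont, mu_c, var_c, x_cat, phi_c):
    """log f(D_i | Theta_c) for a mixed observation (Equation 12), diagonal Sigma_c assumed.

    x_cont, mu_c, var_c: continuous covariates, cluster means and variances.
    x_cat: categorical covariates coded 0..K_j - 1; phi_c[j][k] = phi_{c,j,k}.
    """
    log_gauss = sum(
        -0.5 * (math.log(2.0 * math.pi * v) + (x - m) ** 2 / v)
        for x, m, v in zip(x_cont, mu_c, var_c)
    )
    log_cat = sum(math.log(phi_c[j][k]) for j, k in enumerate(x_cat))
    # The two blocks are independent given the cluster, so their logs add.
    return log_gauss + log_cat
```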

3.4. Profile regression

Recently, interest has grown in using DPMM as an alternative to regression models, non-parametrically linking a response vector Y to covariate data X through cluster membership. This idea has been pioneered by several authors including Dunson, Herring, and Siega-Riz (2008), Bigelow and Dunson (2009), Molitor et al. (2010), Papathomas, Molitor, Richardson, Riboli, and Vineis (2011), and Molitor et al. (2011). Our presentation is most similar to the latter three of these articles which refer to this idea as “profile regression”.

For the case of either Gaussian or discrete mixtures, as described above, our implementation permits the joint modeling of a response vector, for various response models which we present below. Formally, the data D = (Y, X) are now extended to contain response data Yi and covariate data Xi for each individual i, where the contribution of the covariate data to the response may be cluster dependent. There is also the possibility to include additional fixed effects Wi for each individual, which are constrained to only have a global (i.e., non-cluster specific) effect on the response Yi.

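The data Di are then jointly modeled as the product of a response model and a covariate model, to give the following likelihood:

$$f(D_i \mid \Theta_c, \Lambda, W_i) = f_Y(Y_i \mid \Theta_c, \Lambda, W_i)\, f_X(X_i \mid \Theta_c).$$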

The covariate likelihood fX is of either of the forms presented in Sections 3.1 or 3.2. The likelihood fY depends upon the choice of response model.

Binary response

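Adopting a binary response model, each parameter vector Θc is extended to include an additional parameter θc. We also introduce the global parameter vector Λ = β, of the same length L as the fixed effects vector Wi, to capture the contribution of these effects. Then fY(Yi | Θc, Λ, Wi) is given by

$$f_Y(Y_i \mid \theta_c, \beta, W_i) = \frac{\exp(Y_i \lambda_i)}{1 + \exp(\lambda_i)}, \qquad \lambda_i = \theta_c + \beta^\top W_i.$$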

For each cluster c, we adopt a t location-scale prior for θc with 7 degrees of freedom and hyperparameters µθ and σθ, as discussed by Molitor et al. (2010). Similarly, for each fixed effect l, we adopt the same prior for βl, but with hyperparameters µβ and σβ.

The components of Θc corresponding to the covariate model for X can still be updated using Gibbs steps. However, since conjugacy is not achieved with our prior choice, updating θc for each cluster (and βl for each fixed effect l) requires Metropolis-within-Gibbs steps. In our implementation we use adaptive random-walk Metropolis moves.
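The Metropolis-within-Gibbs target for θc combines the logistic likelihood of the members of cluster c with the t prior. The Python sketch below evaluates this unnormalized log conditional, assuming the logistic link P(Yi = 1) = exp(λi)/(1 + exp(λi)) with λi = θc + β⊤Wi, and performs one random-walk Metropolis step with a fixed step size (adaptive tuning is omitted). The prior location 0 and scale 2 are placeholder values, not PReMiuM's defaults, and the function names are hypothetical.

```python
import math
import random


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def log_cond_theta(theta, y_c, eta_c, mu_theta=0.0, sigma_theta=2.0, df=7.0):
    """Unnormalized log full conditional of theta_c in the binary response model.

    y_c:   0/1 responses of the members of cluster c.
    eta_c: their precomputed fixed-effect terms beta^T W_i (zeros if none).
    Prior: t location-scale with df degrees of freedom (constant dropped).
    """
    z = (theta - mu_theta) / sigma_theta
    log_prior = -0.5 * (df + 1.0) * math.log1p(z * z / df)
    # Bernoulli log-likelihood with logit link: y * lam - log(1 + exp(lam))
    log_lik = sum(y * (theta + e) - softplus(theta + e) for y, e in zip(y_c, eta_c))
    return log_prior + log_lik


def rwm_step_theta(theta, y_c, eta_c, step=0.5, rng=random):
    """One random-walk Metropolis update of theta_c with a fixed step size."""
    prop = theta + rng.gauss(0.0, step)
    log_ratio = log_cond_theta(prop, y_c, eta_c) - log_cond_theta(theta, y_c, eta_c)
    return prop if rng.random() < math.exp(min(0.0, log_ratio)) else theta
```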

Categorical response

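The categorical response model that we use is a simple extension of the binary response model of the previous section. In particular, each parameter vector Θc additionally contains an extra parameter vector θc = (θc,1, θc,2, …, θc,R−1) of length R − 1, where R is the number of possible categories represented in the response data Y. Treatment of fixed effects is also extended, so that for each response category r = 1, 2, …, R − 1, there is a vector βr = (βr,1, βr,2, …, βr,L), where βr,l is the coefficient for each fixed effect l (l = 1, 2, …, L). Labeling the categories r = 0, 1, …, R − 1, with r = 0 as the reference category, this gives fY as

$$f_Y(Y_i = r \mid \theta_c, \beta, W_i) = \frac{\exp(\lambda_{i,r})}{1 + \sum_{r'=1}^{R-1} \exp(\lambda_{i,r'})}, \qquad r = 0, 1, \ldots, R - 1,$$

where λi,0 = 0 and

$$\lambda_{i,r} = \theta_{c,r} + \beta_r^\top W_i, \qquad r = 1, 2, \ldots, R - 1.$$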

In our sampler we use the same priors for each θc,r as for θc, and for each βr,l as for βl, in the binary case, so the resulting observation about Metropolis-within-Gibbs updates remains true. Note that θc,r and βr,l are a priori independent across r.
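The category probabilities are a softmax over the linear predictors with one reference category. The Python sketch below assumes that category 0 is the reference, with λi,0 = 0 and λi,r = θc,r + βr⊤Wi for r = 1, …, R − 1; the function name and argument layout are illustrative.

```python
import math


def category_probs(theta_c, beta, w_i):
    """P(Y_i = r) for r = 0, ..., R-1, with category 0 as the reference (lambda_{i,0} = 0).

    theta_c: [theta_{c,1}, ..., theta_{c,R-1}]
    beta:    [beta_1, ..., beta_{R-1}], each a list of L coefficients
    w_i:     fixed effects W_i (length L; empty if there are none)
    """
    lam = [0.0] + [
        t + sum(b * w for b, w in zip(b_r, w_i)) for t, b_r in zip(theta_c, beta)
    ]
    m = max(lam)
    expo = [math.exp(l - m) for l in lam]  # shift by the max for numerical stability
    total = sum(expo)
    return [e / total for e in expo]
```

With all θc,r equal to zero and no fixed effects, every category receives probability 1/R.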

Count response modeled as Binomial

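By providing a number of trials Ti associated with each individual i (in this model an “individual” might correspond to an area or “experiment”) we can extend the binary response model to a Binomial response model. In particular,

$$f_Y(Y_i \mid \theta_c, \beta, W_i, T_i) = \binom{T_i}{Y_i} \left(\frac{\exp(\lambda_i)}{1 + \exp(\lambda_i)}\right)^{Y_i} \left(\frac{1}{1 + \exp(\lambda_i)}\right)^{T_i - Y_i},$$

where

$$\lambda_i = \theta_c + \beta^\top W_i.$$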

Priors used are identical to the binary case. Molitor et al. (2011) provide an example where this model is employed.

Count response modeled as Poisson

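For count-type response data, an alternative to the Binomial model is the Poisson model. Under this model, each individual i is associated with an expected offset Ei, and the response is then modeled as

$$f_Y(Y_i \mid \theta_c, \beta, W_i, E_i) = \frac{\mu_i^{Y_i} \exp(-\mu_i)}{Y_i!}, \qquad \mu_i = E_i \exp(\lambda_i),$$

where

$$\lambda_i = \theta_c + \beta^\top W_i.$$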

Prior models for θc and β are as above.

Extra variation in the response

For the binary, Binomial and Poisson models above, we may wish to allow for extra variation in the response. In the case of the Poisson model this allows for overdispersion relative to the Poisson distribution, in the spirit of a Negative Binomial model. Our sampler achieves this by alternatively modeling λi (as defined in the above response models) as

$$\lambda_i \sim \mathrm{Normal}\big(\theta_{Z_i} + \beta^\top W_i,\ \tau_\varepsilon^{-1}\big).$$

Prior distributions for θc and β are unchanged, but in this model Λ contains an additional parameter, for which prior specification is required. For simplicity we work in terms of the precision τε and adopt a Gamma prior for it, with fixed shape and rate parameters. This approach permits a simple Gibbs update of this parameter. In order to make inference about this model, it is also necessary to update the latent variables λi at every sweep of the MCMC sampler. These parameters are considered an extension of Λ (as they are not directly associated with a specific cluster) and are therefore updated in Step F of the DPMM algorithm. Updates to these parameters are done using adaptive random-walk Metropolis steps.

Gaussian response

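Our sampler is able to handle continuous response data. As for many of the discrete response models, Θc is extended to contain θc for each c. As before, Λ contains β, but also the response precision τY. These parameters allow us to write the response model as

$$Y_i \mid \theta_c, \beta, W_i, \tau_Y \sim \mathrm{Normal}\big(\lambda_i,\ \tau_Y^{-1}\big),$$

where

$$\lambda_i = \theta_c + \beta^\top W_i.$$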

We impose the same prior settings as for the discrete response models, with the additional prior τY ∼ Gamma(aY, bY), where aY and bY are the shape and rate hyperparameters that extend Θ0. Adopting this conjugate prior, updates for τY are simple Gibbs updates.
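The conjugate update of τY is a Gamma draw whose shape grows by half the number of observations and whose rate grows by half the residual sum of squares. The Python sketch below assumes the rate parametrization Gamma(shape0, rate0), where shape0 and rate0 are hypothetical names for the prior hyperparameters; Python's random.gammavariate takes a scale parameter, so the rate is inverted. The same form applies to the precision τε of the extra-variation model, with the latent λi playing the role of the observations and θZi + β⊤Wi as their means.

```python
import random


def gibbs_tau(y, means, shape0, rate0, rng=random):
    """Conjugate Gibbs draw of a Normal precision tau with a Gamma(shape0, rate0) prior.

    y: observations Y_i; means: their means lambda_i = theta_{Z_i} + beta^T W_i.
    Posterior: Gamma(shape0 + n / 2, rate0 + SS / 2), SS = residual sum of squares.
    Returns (draw, posterior shape, posterior rate).
    """
    ss = sum((yi - mi) ** 2 for yi, mi in zip(y, means))
    shape = shape0 + 0.5 * len(y)
    rate = rate0 + 0.5 * ss
    # random.gammavariate(alpha, beta) uses a *scale* beta, hence 1 / rate
    return rng.gammavariate(shape, 1.0 / rate), shape, rate
```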

3.5. Variable selection

In addition to fitting mixtures, potentially linking covariates and responses, it may additionally be of interest to determine which covariates actively drive the mixture components, and which share characteristics common to all components. This can be formulated as a question of variable selection. Below we present details of how this idea can be modeled, first in the case of discrete covariates, as considered by Papathomas, Molitor, Hoggart, Hastie, and Richardson (2012), and then in the context of Gaussian covariates, a formulation which, as far as we are aware, has not been reported elsewhere. Relevant to the variable selection formulation we have adopted is the model described in Chung and Dunson (2009). A different modeling approach is presented in Quintana et al. (2013). Quintana et al. (2013) consider the logit of the binary cluster specific selection switches and impose an additional level in the hierarchy using Normal densities and associated hyper-parameters. A normalization step is then required. In contrast, we impose an additional level in the hierarchy considering Bernoulli distributions for the binary switches, without the requirement of a normalization step.

Discrete covariates

Following the approach taken by Papathomas et al. (2012) our sampler implements two types of variable selection. We outline these approaches briefly in this section but for full details the reader is referred to this paper.

The first is a cluster specific variable selection approach, based on a modification of the model in Chung and Dunson (2009). Each mixture component c has an associated vector γc = (γc,1, γc,2, …, γc,J), where γc,j is a binary random variable that determines whether covariate j is important to mixture component c. Let ϕ0,j,k be the observed proportion of covariate j taking the value k throughout the whole covariate dataset X. Define the new composite parameters

$$\phi'_{c,j,k} = \gamma_{c,j}\,\phi_{c,j,k} + (1 - \gamma_{c,j})\,\phi_{0,j,k}.$$

The above expression is substituted into Equation 11 in place of ϕc,j,k, to provide the likelihood for the covariate model. Under this model, each parameter vector Θc is extended by γc. We assume that, given ρj, the γc,j, c = 1, …, C, are independent Bernoulli variables with γc,j ~ Bernoulli(ρj). We further consider a sparsity inducing prior for ρj with an atom at zero, so that

$$\rho_j \sim \mathbb{1}_{\{w_j = 0\}}\,\delta_0(\rho_j) + \mathbb{1}_{\{w_j = 1\}}\,\mathrm{Beta}(\alpha_\rho, \beta_\rho),$$

where wj ~ Bernoulli(pw). Therefore, additional parameters ρj and wj are introduced into Λ. Here, αρ and βρ are fixed, and can be specified by the user. The parameter pw is set equal to 0.5 by default, but it can also be specified by the user, also allowing for the atom at zero to be removed. The binary nature of γc,j means that direct Gibbs updates can be used. This is an approach that allows for local cluster specific covariate selection, considering the γc,j parameters, as well as global covariate selection, considering the overall selection probabilities ρj.
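Because γc,j is binary, its full conditional compares the likelihood of the cluster's values of covariate j under the cluster-specific probabilities ϕc,j,k (γc,j = 1) with that under the whole-sample proportions ϕ0,j,k (γc,j = 0), each weighted by its prior probability. The following is a minimal Python sketch, conditional on Φc,j and ρj and assuming categories coded 0, 1, …, Kj − 1; the function name is hypothetical, and details of the updating strategy in Papathomas et al. (2012) may differ.

```python
import math
import random


def gibbs_gamma(x_j_in_c, phi_cj, phi_0j, rho_j, rng=random):
    """Draw gamma_{c,j} given Phi_{c,j}, rho_j and the values of covariate j in cluster c.

    gamma = 1: values follow the cluster-specific probabilities phi_cj[k];
    gamma = 0: values follow the whole-sample proportions phi_0j[k].
    Returns (draw, P(gamma_{c,j} = 1 | ...)).
    """
    if rho_j <= 0.0:                 # atom at zero: covariate j switched off
        return 0, 0.0
    if rho_j >= 1.0:
        return 1, 1.0
    log1 = math.log(rho_j) + sum(math.log(phi_cj[k]) for k in x_j_in_c)
    log0 = math.log(1.0 - rho_j) + sum(math.log(phi_0j[k]) for k in x_j_in_c)
    d = log0 - log1                  # p1 = 1 / (1 + exp(d)), computed without overflow
    p1 = math.exp(-d) / (1.0 + math.exp(-d)) if d > 0 else 1.0 / (1.0 + math.exp(d))
    return (1 if rng.random() < p1 else 0), p1
```

For example, four observations all in category 0, with ϕc,j = (0.9, 0.1), ϕ0,j = (0.5, 0.5) and ρj = 0.5, give P(γc,j = 1) = 0.9⁴/(0.9⁴ + 0.5⁴) ≈ 0.913.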

An alternative approach to the variable selection presented above is a type of soft variable selection, where each covariate j is associated with a latent variable ζj, taking values in [0, 1], which informs whether variable j is important in terms of supporting a mixture distribution. We define the new composite parameters as

$$\phi'_{c,j,k} = \zeta_j\,\phi_{c,j,k} + (1 - \zeta_j)\,\phi_{0,j,k},$$

which, as in the first variable selection model, is substituted into Equation 11 in place of ϕc,j,k, to provide the likelihood for the covariate model. Similarly to the first specification, we consider a sparsity inducing prior for ζj with an atom at zero, so that

$$\zeta_j \sim \mathbb{1}_{\{v_j = 0\}}\,\delta_0(\zeta_j) + \mathbb{1}_{\{v_j = 1\}}\,\mathrm{Beta}(\alpha_\rho, \beta_\rho),$$

where vj ~ Bernoulli(pw). The parameter pw is set equal to 0.5 by default, but it can also be specified by the user, also allowing for the atom at zero to be removed. Conjugacy for ϕc,j is no longer retained, meaning that Metropolis-within-Gibbs updates are necessary. We use adaptive random-walk-Metropolis proposals. The second alternative approach only allows for global variable selection and, in principle, is less likely to encounter mixing problems, compared to the more elaborate first formulation. Nevertheless, we have not yet observed considerable mixing problems when adopting either approach using PReMiuM. For extended details of the conditional posteriors and updating strategy the interested reader is referred to Papathomas et al. (2012).

Gaussian covariates

The two variable selection methods described above can equally be applied to the Gaussian mixture case. Letting x̄j denote the average sample value of covariate j, we can define the vector µ′c = (µ′c,1, µ′c,2, …, µ′c,J), where either

$$\mu'_{c,j} = \gamma_{c,j}\,\mu_{c,j} + (1 - \gamma_{c,j})\,\bar{x}_j$$

or

$$\mu'_{c,j} = \zeta_j\,\mu_{c,j} + (1 - \zeta_j)\,\bar{x}_j,$$

depending on which variable selection approach is being adopted. We then replace µc,j with µ′c,j in Equation 10. Using identical priors for ζj or γc,j and ρj, these parameters are updated as for the discrete covariate case. The posterior conditional distributions for updating µc are shown in Appendix C, whereas those for updating other parameters are as before, with µc,j replaced with µ′c,j as appropriate.

4. Predictions

An important feature of our software is the computation of predicted responses for prediction scenarios. Suppose that we wish to understand the role of a particular covariate or group of covariates. We can specify a number of predictive scenarios (or pseudo-profiles), that capture the range of possibilities for the covariates that we are interested in. At each iteration the predictive subjects are assigned to one of the current clusters according to their covariate profiles. Seeing how these pseudo-profiles are allocated allows us to understand the risk associated with these profiles.

The predictive subjects have no impact on the likelihood, so they do not determine the clustering or parameters at each iteration, and missing values in the predictive scenarios are ignored. At each sweep r of the MCMC sampler we define an additional “allocation” variable Zn+s corresponding to each predictive scenario s. Our software produces predicted values based either on simple allocations or on a Rao-Blackwellized estimate of predictions. The predicted values based on a simple allocation to cluster c assign

$$\hat{\theta}^{(r)}_s = \theta^{(r)}_c \quad \text{when } Z^{(r)}_{n+s} = c.$$

For the Rao-Blackwellized predictions the probabilities of allocations are used instead of actually performing a random allocation. For each pseudo-profile we compute the posterior probabilities

$$p^{(r)}_{s,c} = P\big(Z^{(r)}_{n+s} = c \mid X_{n+s}, \psi^{(r)}, \Theta^{(r)}\big).$$

With these probabilities we construct a cluster-averaged estimate of θ for each particular pseudo-profile at each sweep. Specifically,

$$\tilde{\theta}^{(r)}_s = \sum_{c} p^{(r)}_{s,c}\, \theta^{(r)}_c.$$

Looking at the density of these predictions over MCMC sweeps gives us an estimate of the effect of a particular pseudo-profile, and its comparison to other pseudo profiles, allowing us to derive a better understanding of the role of specific covariates. Moreover, the impact of ignoring missing values in the pseudo-profiles essentially means that the missing value will reflect the covariate patterns present in the main sample. Because of this, the marginal effect of covariates or groups of covariates that is derived has to be interpreted as a population average effect, over a population with similar characteristics to that under study.
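The Rao-Blackwellized estimate for a single pseudo-profile at one sweep can be sketched as follows. The Python code assumes categorical covariates coded 0, 1, …, Kj − 1 and allocation probabilities proportional to ψc f(Xn+s | Θc), with missing covariates (None) simply dropped from the product; this form of the probabilities is our assumption for illustration, as is the function name.

```python
import math


def rao_blackwell_prediction(x_new, psi, phi, theta):
    """Cluster-averaged theta for one pseudo-profile at one sweep.

    x_new: categorical covariates coded 0..K_j - 1, with None for missing values.
    psi:   weights psi_c of the components considered at this sweep.
    phi:   phi[c][j][k] = phi_{c,j,k};  theta: response parameters theta_c.
    Missing covariates are skipped, i.e. they contribute a factor 1.
    Returns (theta_tilde, allocation probabilities).
    """
    log_w = []
    for c, w in enumerate(psi):
        lw = math.log(w)
        for j, k in enumerate(x_new):
            if k is not None:
                lw += math.log(phi[c][j][k])
        log_w.append(lw)
    m = max(log_w)
    p = [math.exp(l - m) for l in log_w]
    total = sum(p)
    p = [pc / total for pc in p]
    return sum(pc * t for pc, t in zip(p, theta)), p
```

A pseudo-profile with every covariate missing is allocated according to the weights alone, which is one way to see why such predictions reflect the covariate patterns of the main sample.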

If a subject is missing fixed effects, then the mean value or 0 category fixed effect is used in the predictions. In this case, effectively, the fixed effects do not contribute to the predicted response. If the offset or number of trials is missing, this value is taken to be 1 when making predictions.

5. Postprocessing of the MCMC output

The rich posterior output produced can be used to learn about the partition space and its uncertainty. It is useful to show a representative partition, as an effective way to convey the output of the clustering algorithm. Moreover, it is also of interest to assess the uncertainty associated with subgroups of this best partition.

We discuss below the necessary steps. See also Molitor et al. (2010).

1. Computation of the dissimilarity matrix. Because of the problem of “label switching”, i.e., the labels associated with each cluster change during the MCMC iterations, we cannot simply assign each observation to the cluster that maximizes the average posterior probability. Methods that deal with label switching, such as the relabeling algorithm of Stephens (2000), require the number of clusters K to be fixed, whereas in Dirichlet process mixture models the number of clusters is allowed to vary from one MCMC sample to the next. One possible solution is to choose the partition based on a posterior similarity matrix. At each iteration of the sampler, we record pairwise cluster membership and construct a score matrix, with entries equal to 1 for pairs belonging to the same cluster and 0 otherwise. Averaging these matrices over the whole MCMC run leads to a similarity matrix S, which can then be used to identify an optimal partition.

2. Identifying the optimal partition. Many methods to identify the optimal partition using the posterior similarity matrix have been proposed in the literature. The similarity matrix computed by PReMiuM can be processed using the R package mcclust (Fritsch and Ickstadt 2009). We have implemented directly in PReMiuM two deterministic clustering procedures to characterize the optimal partition.

The first finds the best partition by choosing the one that minimizes the least-squares distance to the matrix S. This approach is equivalent to minimizing Binder’s loss (Fritsch and Ickstadt 2009). It is fast, but in our experience it is susceptible to Monte Carlo error.

The second procedure implemented in the package is partitioning around medoids (PAM) on the dissimilarity matrix 1 − S. PAM is available in R in the package cluster (Maechler, Rousseeuw, Struyf, Hubert, and Hornik 2014), and it robustly assigns individuals to clusters in a way consistent with matrix S. PAM is run for each possible number of clusters up to a specified maximum, and for each fixed number of clusters the best PAM partition is selected. A final representative partition is then chosen by maximizing the average silhouette width across these best PAM partitions.

3. Computation of the average risk and profile and the corresponding credible intervals. Given an optimal partition P* obtained as above, we examine the MCMC output to assess whether or not the model consistently clusters individuals in a manner similar to P*.

For example, for a Bernoulli response, we obtain a distribution of the baseline risks for each cluster defined by P*. At each iteration r of the sampler we compute the average of the baseline risks $p_{Z_i}$, defined in Section 3.4, over all individuals i within a particular cluster k of the optimal partition. This average baseline risk for cluster k is computed as

$$\bar{p}_k^{(r)} = \frac{1}{n_k} \sum_{i:\, i \in k} p_{Z_i^{(r)}}^{(r)},$$

where $n_k$ denotes the number of individuals in cluster k. Collected over sweeps, these values provide an empirical sample from the baseline risk associated with cluster k. More consistent clustering leads to narrower credible intervals derived from this distribution. In a similar way we can compute the distribution of cluster parameters for other response and covariate types.
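The three post-processing steps can be sketched in R starting from a matrix of sampled allocations. This is an illustrative re-implementation under stated assumptions, not the code behind calcDissimilarityMatrix(), calcOptimalClustering() or calcAvgRiskAndProfile(): the inputs `alloc` (an R × n matrix of cluster labels, one row per retained sweep) and `risk` (an R × n matrix of each subject's baseline risk p_{Z_i} at each sweep), the function names and the toy values are all hypothetical. The least-squares step here searches only over the sampled partitions, which is one common way to apply that criterion; PAM is taken from the recommended package cluster.

```r
library(cluster)  # recommended package providing pam()

# Step 1: posterior similarity matrix, S[i, j] = share of sweeps in which
# subjects i and j carry the same cluster label.
similarityMatrix <- function(alloc) {
  n <- ncol(alloc)
  S <- matrix(0, n, n)
  for (r in seq_len(nrow(alloc))) {
    z <- alloc[r, ]
    S <- S + outer(z, z, "==")  # 1 for pairs in the same cluster, 0 otherwise
  }
  S / nrow(alloc)
}

# Step 2, first procedure: the sampled partition whose co-clustering matrix
# is closest to S in squared (least-squares) distance.
leastSquaresPartition <- function(alloc, S) {
  loss <- apply(alloc, 1, function(z) sum((outer(z, z, "==") - S)^2))
  alloc[which.min(loss), ]
}

# Step 2, second procedure: PAM on 1 - S for k = 2, ..., maxK (maxK < n),
# keeping the fit with the largest average silhouette width.
pamPartition <- function(S, maxK) {
  d <- as.dist(1 - S)
  fits <- lapply(2:maxK, function(k) pam(d, k = k))
  widths <- sapply(fits, function(f) f$silinfo$avg.width)
  fits[[which.max(widths)]]$clustering
}

# Step 3: for each cluster of the chosen partition, the per-sweep average of
# the subjects' baseline risks (one row per sweep, one column per cluster).
clusterAverageRisk <- function(risk, partition) {
  sapply(sort(unique(partition)), function(k) {
    rowMeans(risk[, partition == k, drop = FALSE])
  })
}

# Toy run: six subjects, five sweeps, two underlying groups.
alloc <- rbind(c(1, 1, 1, 2, 2, 2),
               c(3, 3, 3, 1, 1, 1),  # same partition, switched labels
               c(1, 1, 2, 2, 2, 2),  # subject 3 moves
               c(2, 2, 2, 5, 5, 5),
               c(1, 1, 1, 2, 2, 1))  # subject 6 moves
S <- similarityMatrix(alloc)
lsPart <- leastSquaresPartition(alloc, S)
pamPart <- pamPartition(S, maxK = 4)
risk <- matrix(rep(1:6 / 10, each = 5), nrow = 5)  # made-up baseline risks
avgRisk <- clusterAverageRisk(risk, lsPart)
ci <- apply(avgRisk, 2, quantile, probs = c(0.025, 0.975))  # 95% intervals
```

The toy sweeps contain the same two-group partition under different labels, which is exactly the label switching that makes S, rather than the raw labels, the object to summarize.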

6. Mixing of the MCMC algorithm

The likelihood of the DPMM is invariant to the order of cluster labels but the prior specification of the stick breaking construction is not. Therefore, to ensure adequate mixing across orderings, it is important to include label switching moves. In this package we have implemented the two label switching moves proposed by Papaspiliopoulos and Roberts (2008) as well as a third label switching move proposed by Hastie, Liverani, and Richardson (2014). This latter move updates the cluster weights so that for each cluster being updated, the proposed new weight is the expected value of the weight conditional upon the new allocations, adjusted by the ratio of the existing weight and its expected value conditional upon the existing allocations, with the weights appropriately normalized. See Equation 6 of Hastie et al. (2014) for details of the move, and more generally for a review of the sampler performance.

Even with these label switching moves, convergence may be problematic and the user must address this issue using diagnostic tools. One difficulty in this respect is that there are no parameters in the model that can reliably demonstrate convergence. The parameters of the fixed effects tend to converge very quickly, regardless of the underlying clustering, as they are not cluster-specific, and therefore they are not a good indication of the overall convergence. Plotting functions to assess convergence of the global parameters are included in the package and are discussed in Section 8. The cluster parameters, such as the θc’s, cannot be tracked as their number (and their labels) can change from one iteration to the next. The concentration parameter α is not a reliable indicator of convergence either, as discussed in Hastie et al. (2014).

To overcome this challenge, we have implemented the computation of the marginal model posterior p(Z|D) as an additional diagnostic tool. This represents the posterior distribution of the allocations given the data, having marginalized out all the other parameters (Hastie et al. 2014). The marginal model posterior is computed for each run of the MCMC, and it has proved very effective in our real examples for comparing runs with different initializations and identifying runs that were significantly different from the others. Our experience suggests that it is harder for the MCMC algorithm to split clusters than to merge them. This means that it is important to initialize the algorithm with a number of clusters that is greater than the number of clusters the algorithm will converge to. The marginal model posterior can help to assess what this number is for each specific example.

Finally, while optimal partitions allow visualization of the result of a clustering algorithm, such an approach must be applied with care, as we are not aware of any effective method to directly quantify or visualize clustering uncertainty. For this reason, we advise using predictions as an additional tool to assess convergence and visualize the output of the algorithm, as their posterior distributions can be compared across runs using standard methods. More details on using the package for predictions can be found in Section 8. We have observed that these posterior predictive distributions tend to be more stable than optimal partitions.

7. Software

Our implementation of the DPMM algorithm is available as an R package from the Comprehensive R Archive Network (CRAN) at http://CRAN.R-project.org/package=PReMiuM. The software is primarily written in C++ and R.

The sampler implements the algorithm exactly as detailed in the current paper, although continued work is in progress to extend the scope of the software to cover additional models.

The program is further customizable through the specification of hyperparameters, by providing name-value pairs for the various hyperparameters used within the model being run. If the value of a specific hyperparameter is not set, it takes its default value. Default values can be found in the full documentation that is available as part of the software, and an example is provided in Section 8.3.

Moreover, this package can produce predicted values based on random allocations, or a Rao-Blackwellized estimate of predictions, where the probabilities of allocations are used instead of actually performing a random allocation.

8. Examples

8.1. Simulated example

We simulated 1,000 subjects, partitioned into 5 groups in a balanced manner. Ten binary covariates were considered. To demonstrate one of the variable selection approaches within the sampler, only the first eight covariates support a clustering structure. The response is binary. This dataset can be simulated as follows.

R> library("PReMiuM")

R> inputs <- generateSampleDataFile(clusSummaryVarSelectBernoulliDiscrete())

We used the default values for all hyperparameters: Dirichlet conjugate priors with aj = 1 for the covariates and the default priors for the response parameters given in Equation 13.

We initialized all chains allocating subjects randomly to 20 groups. We ran the chain for 10,000 iterations after a burn-in sample of 20,000 iterations. While ensuring convergence is a complex problem, we have observed good stability in all our runs, with results from independent chains virtually identical.

R> runInfoObj <- profRegr(yModel = inputs$yModel, xModel = inputs$xModel,

+ nSweeps = 10000, nBurn = 20000, data = inputs$inputData,

+ output = "output", covNames = inputs$covNames, nClusInit = 20,

+ run = TRUE)

R> dissimObj <- calcDissimilarityMatrix(runInfoObj)

R> clusObj <- calcOptimalClustering(dissimObj)

R> riskProfileObj <- calcAvgRiskAndProfile(clusObj)

R> clusterOrderObj <- plotRiskProfile(riskProfileObj, "summary-sim.png")

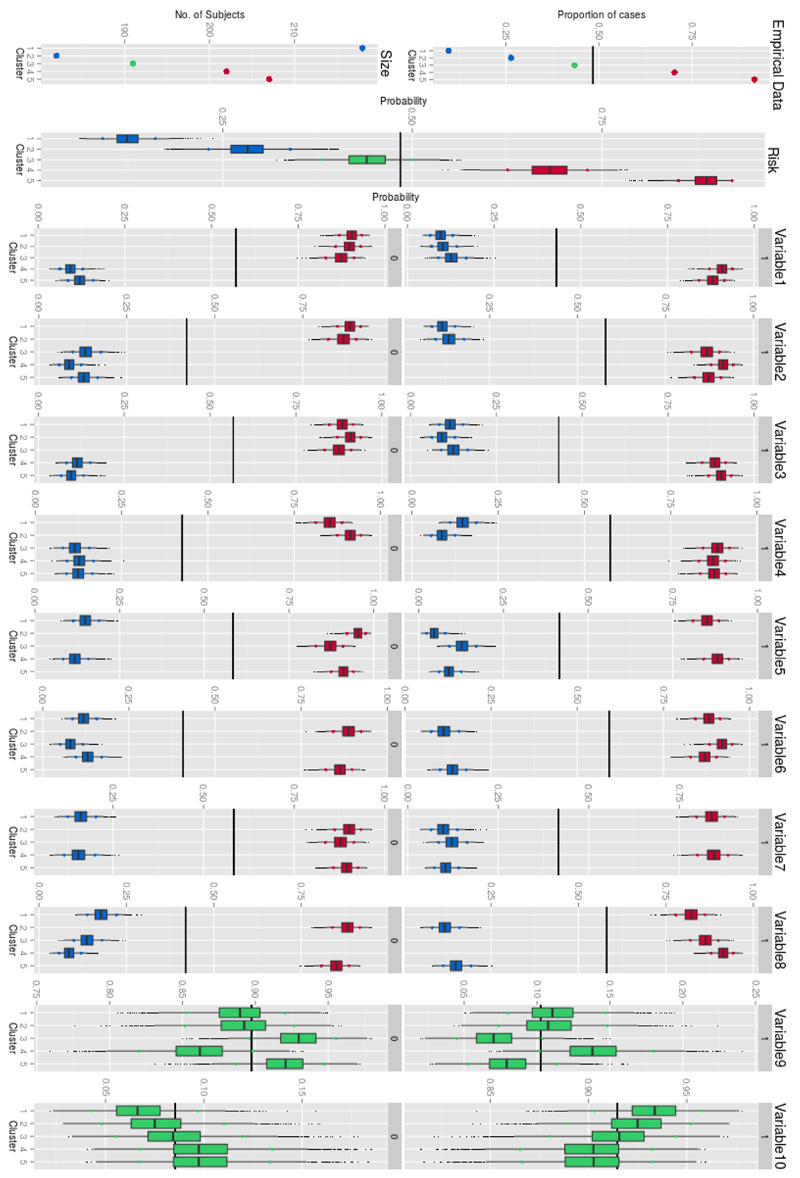

Figure 1 shows a box-plot of the posterior distribution for the probabilities of the response and the covariates for the 5 clusters that form the representative clustering. Additionally, the package includes the function heatDissMat() which produces a heatmap of the dissimilarity matrix, rearranged such that observations with high pairwise cluster membership appear consecutively.

Figure 1.

Posterior distributions of the parameters for binary response and discrete covariates for the representative clustering.

8.2. Predictions

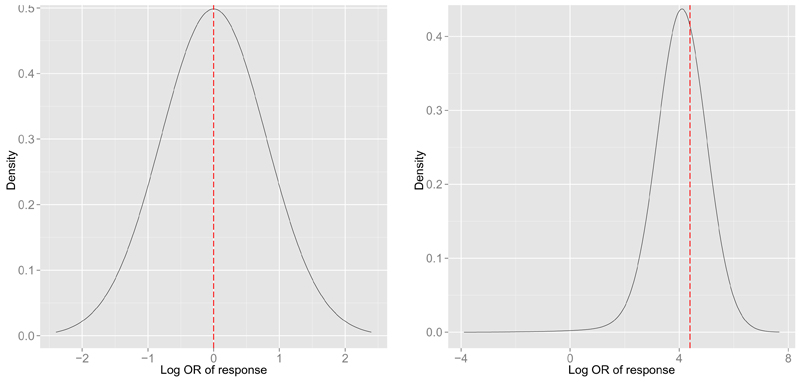

PReMiuM can produce predicted values based on simple allocations (the default), or a Rao-Blackwellized estimate of predictions, where the probabilities of allocations are used instead of actually performing a random allocation. The following code can be used to reproduce the predictive distribution plotted in Figure 2. As discussed in Section 4, the missing values, as in the second prediction scenario given below, are ignored and their marginal effect can be interpreted as a population average effect. The predictions are consistent with the simulated data.

Figure 2.

The predictive distribution of the response for two prediction scenarios. The covariate values for the predictive scenarios are [2, 2, 2, 2, 2] and [0, 0, NA, 0, 0] respectively. ‘NA’ represents a missing value. The dashed lines represent the data generating parameters corresponding to the clusters that we expect these two predictive scenarios to be assigned to.

R> inputs <- generateSampleDataFile(clusSummaryBernoulliDiscrete())

R> preds <- data.frame(matrix(c(2, 2, 2, 2, 2, 0, 0, NA, 0, 0), ncol = 5,

+ byrow = TRUE))

R> colnames(preds) <- names(inputs$inputData)[2:(inputs$nCovariates+1)]

R> runInfoObj <- profRegr(yModel = inputs$yModel, xModel = inputs$xModel,

+ nSweeps = 1000, nBurn = 1000, data = inputs$inputData,

+ output = "output", covNames = inputs$covNames, predict = preds,

+ fixedEffectsNames = inputs$fixedEffectNames)

R> dissimObj <- calcDissimilarityMatrix(runInfoObj)

R> clusObj <- calcOptimalClustering(dissimObj)

R> riskProfileObj <- calcAvgRiskAndProfile(clusObj)

R> predictions <- calcPredictions(riskProfileObj,

+ fullSweepPredictions = TRUE, fullSweepLogOR = TRUE)

R> plotPredictions(outfile = "predictiveDensity.pdf",

+ runInfoObj = runInfoObj, predictions = predictions, logOR = TRUE)

8.3. Variable selection

Note that covariates 9 and 10 in Figure 1 have similar profile probabilities for all clusters, as they have been simulated not to affect the clustering. The variable selection approach will identify the covariates that do not contain clustering support and exclude them from affecting the clustering.

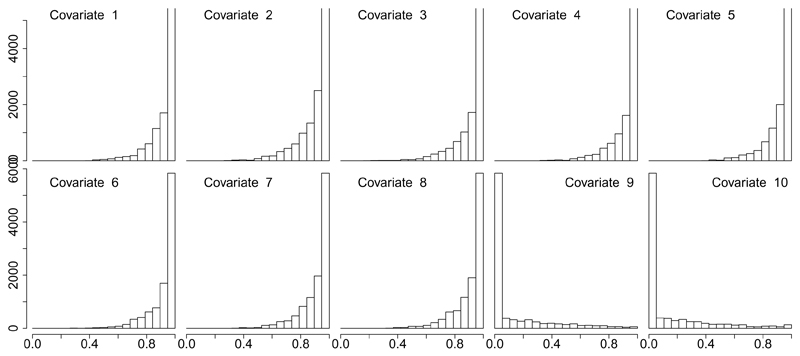

We initialized the chains as in the simulated example above, with the additional prior specification

$$\rho_j \sim \mathbb{1}_{\{w_j = 1\}}\, \mathrm{Beta}(0.5, 0.5),$$

so that ρj = 0 whenever wj = 0, where wj ~ Bernoulli(0.5). The algorithm consistently sampled values of ρj in accordance with the simulated data, as shown in Figure 3. This figure can be reproduced as follows.

Figure 3.

The sampled values of the binary variable ρj, used for variable selection, for each covariate.

R> inputs <- generateSampleDataFile(clusSummaryVarSelectBernoulliDiscrete())

R> hyp <- setHyperparams(aRho = 0.5, bRho = 0.5, atomRho = 0.5)

R> runInfoObj <- profRegr(yModel = inputs$yModel, xModel = inputs$xModel,

+ nSweeps = 10000, nBurn = 10000, data = inputs$inputData,

+ output = "output", covNames = inputs$covNames,

+ varSelectType = "BinaryCluster", hyper = hyp)

R> rho <- summariseVarSelectRho(runInfoObj)

R> par(mfrow = c(5, 2))

R> for (k in 1:runInfoObj$nCovariates)

+ hist(rho$rho[, k], xlim = c(0, 1), main = "")

8.4. Assessing convergence

There is no method that can assure us that our MCMC chains have converged to the posterior probability distribution but there are several methods that can investigate whether there is evidence against convergence.

We have implemented the function globalParsTrace(), which provides a basic diagnostic plot of the trace of some global parameters such as α, β and the number of clusters. For more convergence diagnostics we recommend using the R package coda (Plummer, Best, Cowles, and Vines 2006), which provides convergence diagnostics and statistical and graphical analysis of the output of an MCMC sampler.

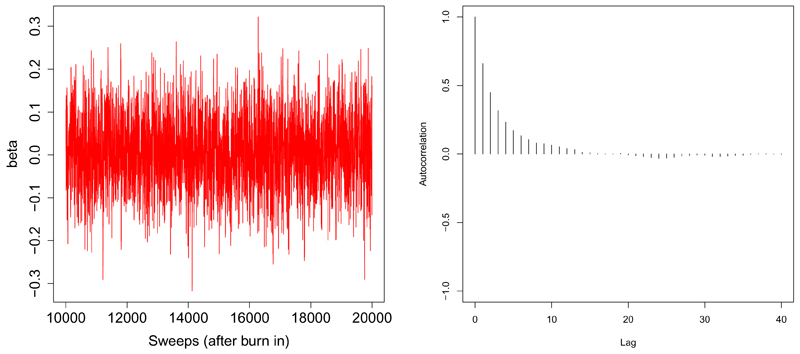

The following code can be used to reproduce the trace plot and autocorrelation plot in Figure 4 for parameter β1.

Figure 4.

Convergence diagnostics for parameter β1: trace plot and autocorrelation plot done using coda.

R> inputs <- generateSampleDataFile(clusSummaryBernoulliDiscrete())

R> runInfoObj <- profRegr(yModel = inputs$yModel, xModel = inputs$xModel,

+ nSweeps = 10000, nBurn = 10000, data = inputs$inputData,

+ output = "output", covNames = inputs$covNames,

+ fixedEffectsNames = inputs$fixedEffectNames)

R> globalParsTrace(runInfoObj, parameters = "beta", plotBurnIn = FALSE,

+ whichBeta = 1)

R> library("coda")

R> betaChain <- mcmc(read.table("output_beta.txt")[, 1])

R> autocorr.plot(betaChain)

The cluster-specific parameters cannot be plotted as easily due to label switching, and assessing their convergence is not an easy task. Hastie et al. (2014) introduce the marginal model posterior as a tool to assess convergence for Dirichlet process mixtures. We denote the marginal model posterior by p(Z|D). This quantity represents the posterior distribution of the allocations given the data, having marginalized out all the other parameters.

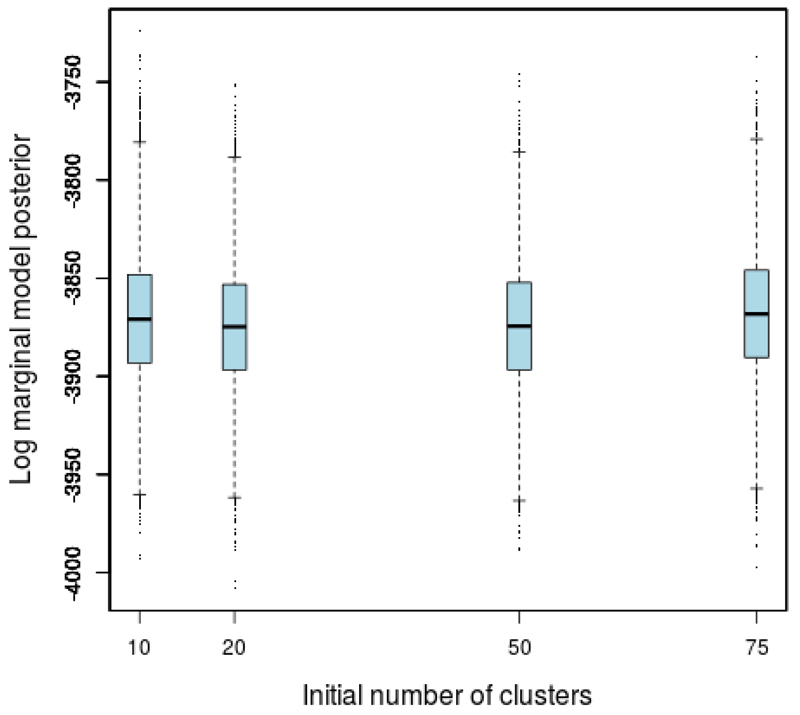

The marginal model posterior can be computed in PReMiuM. The code below computes the marginal model posterior for four different runs of profile regression on the same dataset with different initializations (different initial numbers of clusters). As seen in Figure 5, plotted using the code below, for the given simulated dataset all the MCMC runs appear to converge to subsets of the model space with equivalent marginal model posterior. This does not imply convergence, but it is a useful diagnostic tool as it can highlight a lack of convergence in certain circumstances (Hastie et al. 2014). The marginal model posterior can also be plotted using the function globalParsTrace().

Figure 5.

The log marginal model posterior for four runs of profile regression, on the same dataset but with different initializations (i.e., different initial number of clusters).

R> inputs <- generateSampleDataFile(clusSummaryBernoulliDiscrete())

R> nClusInit <- c(10, 20, 50, 75)

R> for (i in 1:length(nClusInit)) {

+ runInfoObj <- profRegr(yModel = inputs$yModel, xModel = inputs$xModel,

+ nSweeps = 10000, nBurn = 10000, data = inputs$inputData,

+ output = paste("init", nClusInit[i], sep = ""),

+ covNames = inputs$covNames, alpha = 1,

+ fixedEffectsNames = inputs$fixedEffectNames,

+ nClusInit = nClusInit[i])

+ margModelPosterior(runInfoObj)

+ }

R> mmp <- list()

R> for (i in 1:length(nClusInit)) {

+ mmp[[i]] <- read.table(paste("init", nClusInit[i], "_margModPost.txt",

+ sep = ""))[, 1]

+ }

R> plot(c(head(nClusInit, n = 1) - 0.5, tail(nClusInit, n = 1) + 0.5),

+ c(min(unlist(mmp)), max(unlist(mmp))), type = "n",

+ ylab = "Log marginal model posterior",

+ xlab = "Initial number of clusters", cex.lab = 1.3, xaxt = "n")

R> axis(1, at = nClusInit, labels = nClusInit)

R> for (i in 1:length(nClusInit)) {

+ boxplot(mmp[[i]], add = TRUE, at = nClusInit[i], pch = ".",

+ boxwex = 5, col = "lightblue")

+ }

8.5. Run times

We have run simulations to test PReMiuM’s speed and how it scales when the number of subjects or covariates increases. The code was run in serial on an Intel Core i7-2600 CPU clocked at 3.40GHz, on a system with 8GB RAM. The resulting run times are reported in Tables 1 and 2 in the Supplementary Material.

9. Conclusions

The structure of PReMiuM objects gives rise to a wider variety of uses than can be described in detail here. Our intention was to provide a tutorial for Dirichlet process clustering and to illustrate the basic features of the sampler and post-processing tools that we have implemented in PReMiuM to demonstrate its utility. Our long-term goal is to continue to develop this package for analysis of complex and high-dimensional datasets, as well as to increase the flexibility with regard to the data types that can be analyzed.

Supplementary Material

Table 1.

Time required to run 100 iterations of PReMiuM for Bernoulli response and discrete covariates.

| Number of subjects | 100 covariates | 1,000 covariates | 10,000 covariates |
|---|---|---|---|
| 1,000 | 7 sec | 43 sec | 9 min |
| 2,500 | 10 sec | 1 min | 18 min |
| 5,000 | 18 min | 2 min | 34 min |

Table 2.

Time required to run 500 iterations of PReMiuM for continuous response and continuous covariates.

| Number of subjects | 50 covariates | 100 covariates |
|---|---|---|
| 3,000 | 40 sec | 3 min |
| 5,000 | 1 min | 7 min |

Acknowledgments

The first two authors of this article have contributed equally. Silvia Liverani acknowledges support from the Leverhulme Trust (ECF-2011-576). This research was carried out while Silvia was a Leverhulme Early Career Fellow at Imperial College London, with support from the Medical Research Council Biostatistics Unit in Cambridge (UK). David I. Hastie acknowledges support from the INSERM grant (P27664). We are grateful for helpful discussions with Sara K. Wade.

A. Properties of the upper limit of the number of components

We provide the following proposition to support our assertions regarding C⋆.

Proposition 1. Suppose that we have a model with posterior as given in Equation 7. Suppose Z⋆, U⋆ and C⋆ are defined as in Section 2.2. Then:

(i) ψc < Ui for all i = 1, 2, …, n and all c > C⋆ almost surely;

(ii) C⋆ ≥ Z⋆ almost surely; and

(iii) C⋆ < ∞ almost surely.

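Proof. We rely on the fact that if V1, V2, … are independent Beta(1, α) random variables, ψ1 = V1 and ψc = Vc ∏l<c(1 − Vl) for c = 2, 3, …, then

$$\sum_{c=1}^{\infty} \psi_c = 1 \quad \text{almost surely.}$$

A proof of this result for the DPMM (under more general conditions) is provided by Ishwaran and James (2001). Recall that C⋆ is the smallest integer C such that $\sum_{c=1}^{C} \psi_c > 1 - U^\star$. Then:

(i) By definition, for all i = 1, 2, …, n and all c > C⋆,

$$\psi_c \le \sum_{l > C^\star} \psi_l = 1 - \sum_{l=1}^{C^\star} \psi_l < U^\star \le U_i$$

almost surely.

(ii) Let i⋆ be an individual i such that Zi = Z⋆. Again, by definition, $U^\star \le U_{i^\star} < \psi_{Z^\star}$. This implies

$$\sum_{c=1}^{Z^\star - 1} \psi_c \le 1 - \psi_{Z^\star} < 1 - U^\star,$$

meaning that no C < Z⋆ satisfies $\sum_{c=1}^{C} \psi_c > 1 - U^\star$. By definition of C⋆, this implies C⋆ ≥ Z⋆ almost surely.

(iii) Since $\sum_{c=1}^{\infty} \psi_c = 1$ almost surely, this is a convergent series. By definition of a convergent series, and because U⋆ > 0, there is a finite C with $\sum_{c=1}^{C} \psi_c > 1 - U^\star$, so C⋆ < ∞ almost surely.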
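Proposition 1 can also be checked numerically. The sketch below is a minimal illustration, assuming a Dirichlet process prior (d = 0) truncated at a large, arbitrary number of components, allocations drawn from the weights, slice variables Ui ~ Uniform(0, ψZi) as in Walker (2007), and C⋆ defined as the smallest C with ψ1 + … + ψC > 1 − U⋆. The function names and all numeric values are hypothetical.

```r
# psi_1 = V_1 and psi_c = V_c * prod_{l < c} (1 - V_l), truncated at maxC terms.
stickBreaking <- function(alpha, maxC) {
  v <- rbeta(maxC, 1, alpha)
  v * cumprod(c(1, 1 - v[-maxC]))
}

# C* is the smallest C with psi_1 + ... + psi_C > 1 - U* (NA if not reached).
computeCStar <- function(psi, uStar) {
  which(cumsum(psi) > 1 - uStar)[1]
}

set.seed(42)
psi <- stickBreaking(alpha = 1, maxC = 5000)
n <- 200
z <- sample.int(length(psi), n, replace = TRUE, prob = psi)  # allocations Z_i
u <- runif(n, 0, psi[z])                                    # U_i < psi_{Z_i}
uStar <- min(u)
zStar <- max(z)
cStar <- computeCStar(psi, uStar)

# (iii) C* is finite, (ii) C* >= Z*, (i) psi_c < U* <= U_i for every c > C*.
c(finite = !is.na(cStar), coversZStar = cStar >= zStar,
  tailBelowU = all(psi[-seq_len(cStar)] < uStar))
```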

B. MCMC scheme and blocking strategy

Below are additional comments to explain the blocking strategy employed in the DPMM algorithm. We use ‘·’ to denote “all other parameters and data”.

Step A. This step is a straightforward calculation of Z⋆, which (potentially) changes at each iteration (with the update of Z). The set A is defined immediately conditional on this value.

Step B. This is a joint update of U and the parameters corresponding to the active components in A, with the inclusion of label switching moves. The principle is to use the identity p(VA, ΘA, Z, U|·) = p(VA, ΘA, Z|·)p(U|VA, ΘA, Z, ·). We proceed by first updating (VA, ΘA) ~ p(VA, ΘA|Z, ·) = ∫ p(VA, ΘA, U|Z, ·)dU. Due to the conditional independence of V and Θ, this can be done in two steps: updating V (B.1) then updating Θ (B.2). The moves are presented as Gibbs updates, but in fact they are Metropolis-Hastings moves, where the variable of interest (for example VA) is sampled from its full conditional, and the other variables (for example ΘA and Z) are kept fixed. This results in an acceptance probability of 1, making the Gibbs update equivalent. The updated values are then used as interim values for V and Θ in the (Metropolis-Hastings) label switching moves (see Section 6), which are applied in B.3. These moves can change the values of V as well as their order. The resulting samples of V and Θ are the final updated values of these parameters for this sweep. The updated allocation vector Z is used as an interim value throughout the remainder of the steps of the sweep, before the final updated value of Z is sampled in Step G. The final part (B.4) of Step B is to update U conditional upon the updated values of V and Θ and the interim value of Z.

B.1 Integrating out U and taking advantage of the conjugacy of the distribution for V inherent in the DPMM, along with the conditional independence structure, each component of the vector VA is updated by sampling

$$V_c \sim \mathrm{Beta}\left(1 - d + n_c,\ \alpha + d\,c + n_{c+}\right), \quad c \in A,$$

where $n_c = \#\{i : Z_i = c\}$ and $n_{c+} = \#\{i : Z_i > c\}$. The Dirichlet process is a special case of the Pitman-Yor process for d = 0.

B.2 Integrating out U and taking advantage of the conditional independence structure, ΘA is updated from p(ΘA|Z, Θ0, D). The full details of this will depend upon the application and the choice of f and of the prior p(Θc|Θ0). Examples are given in Section 3.

B.3 This step implements the Metropolis-Hastings label switching moves detailed in Section 6. These moves update VA, ΘA and Z jointly from their conditional distribution with U integrated out. These moves are conditional upon the values of VA and ΘA sampled in steps B.1 and B.2. The third label switching move is proposed and implemented for the Dirichlet process only.

B.4 Conditioning on the updated values of VA, ΘA and Z from step B.3, this step samples each Ui, i = 1, …, n, independently according to the full conditional distribution, as detailed in Walker (2007).
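Steps B.1 and B.4 can be sketched in a few lines of R. This is a hedged illustration rather than PReMiuM's C++ implementation: it assumes the active set A consists of the labels 1, …, Z⋆, that Z holds integer labels, and that the slice variables follow Ui ~ Uniform(0, ψZi); the function names and numeric values are hypothetical.

```r
# n_c and n_{c+} (individuals allocated to labels greater than c), c = 1..Z*.
clusterCounts <- function(z) {
  nc <- tabulate(z, nbins = max(z))
  nAbove <- rev(cumsum(rev(nc))) - nc
  list(nc = nc, nAbove = nAbove)
}

# B.1: V_c ~ Beta(1 - d + n_c, alpha + d * c + n_{c+}) for c = 1..Z*.
updateActiveV <- function(z, alpha, d = 0) {
  counts <- clusterCounts(z)
  cIdx <- seq_along(counts$nc)
  rbeta(length(cIdx), 1 - d + counts$nc, alpha + d * cIdx + counts$nAbove)
}

# Stick-breaking weights: psi_1 = V_1, psi_c = V_c * prod_{l < c} (1 - V_l).
stickWeights <- function(v) v * cumprod(c(1, 1 - v[-length(v)]))

# B.4: slice variables U_i ~ Uniform(0, psi_{Z_i}).
updateU <- function(z, psi) runif(length(z), 0, psi[z])

set.seed(7)
z <- c(1, 1, 2, 3, 3, 3)           # made-up allocations, Z* = 3
vA <- updateActiveV(z, alpha = 1)  # Dirichlet process case, d = 0
psiA <- stickWeights(vA)
u <- updateU(z, psiA)
```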

Step C. Computing U⋆ is straightforward given the updated value of U from step B.4. The value of Z⋆ (and with it the set A) can only change from that computed in Step A if the mixture component corresponding to the old Z⋆ was involved in a label switching move, and then only if the component it was switched with was empty. By design of the label switching moves (see Section 6), this means that Z⋆ and A can only get smaller, with the consequence that parameters corresponding to a small number of components may be updated twice per MCMC sweep (once in Step B as part of the active components A, and once in Step D and Step E as part of the updated potential components P). This has no ill effects as long as the most recently updated parameter values are used at each subsequent step.

Step D. This is a joint update of α, VP and VI. The principle is to use the following identity: p(α, VP, VI|·) = p(α|·)p(VP, VI|α, ·). We proceed by first updating α ~ p(α|·) = ∫ p(α, VP, VI|·)dVPdVI (step D.1) and then sampling p(VP, VI|α, ·) (step D.2). To update VP, we need to alternate Gibbs samples with checks to evaluate whether the component just updated is C⋆. In this way the set P is determined on the fly. As mentioned in Section 2.1, no actual sampling is done for the inactive components in set I as these would just be samples from the prior and have no impact on the likelihood or any other conditionals in the MCMC sweep.

D.1 Since VP and VI both correspond to empty mixture components, the only contribution to the joint posterior conditional is through the prior. This allows us to easily integrate out VP and VI. Due to the conditional independence, the resulting posterior from which this step samples is p(α|VA, Z). Typically this cannot be sampled directly, so we employ a Metropolis-within-Gibbs move to update α, using an adaptive random-walk-Metropolis proposal on the log-scale.

If the prior for α is a Gamma distribution then it is alternatively possible to sample directly from the conditional with VA also marginalized (see Walker, 2007 and Escobar and West, 1995 for details). We retain our version as any prior for α can be potentially used, even though only a Gamma prior is available in the code at the moment.
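Step D.1 can be sketched as a random-walk Metropolis update on log α. Unlike the adaptive proposal described above, this minimal version uses a fixed step size, and the Gamma hyperparameters (`shape = 2`, `rate = 1`), the function names and the simulated values are hypothetical. Because the proposal is symmetric on the log scale, the acceptance ratio gains the Jacobian term log(α′/α). For d = 0 and a Gamma(shape, rate) prior, the target p(α | VA) is itself Gamma with shape + |A| and rate − Σc∈A log(1 − Vc), which gives a convenient check of the sampler.

```r
# Log of the target p(alpha | V_A), up to a constant: Gamma(shape, rate) prior
# times prod_{c in A} Beta(V_c; 1 - d, alpha + d * c).
logTargetAlpha <- function(alpha, vA, d = 0, shape = 2, rate = 1) {
  dgamma(alpha, shape = shape, rate = rate, log = TRUE) +
    sum(dbeta(vA, 1 - d, alpha + d * seq_along(vA), log = TRUE))
}

# One random-walk Metropolis step on log(alpha) with a fixed step size.
updateAlpha <- function(alpha, vA, stepSize = 0.2, ...) {
  proposal <- alpha * exp(rnorm(1, mean = 0, sd = stepSize))
  # log(proposal) - log(alpha) is the Jacobian term of the log transformation.
  logRatio <- logTargetAlpha(proposal, vA, ...) -
    logTargetAlpha(alpha, vA, ...) + log(proposal) - log(alpha)
  if (log(runif(1)) < logRatio) proposal else alpha
}

# Check against the exact Gamma conditional available when d = 0.
set.seed(3)
vA <- rbeta(200, 1, 2)  # simulated V_c for 200 active components
alpha <- 1
draws <- numeric(5000)
for (it in seq_along(draws)) {
  alpha <- updateAlpha(alpha, vA)
  draws[it] <- alpha
}
exactMean <- (2 + length(vA)) / (1 - sum(log(1 - vA)))
c(mcmc = mean(draws[-(1:500)]), exact = exactMean)
```

Keeping the Metropolis step, rather than switching to the exact Gamma draw, is what allows a non-Gamma prior to be substituted in `logTargetAlpha` without changing the update.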

D.2 We begin by setting C = Z⋆ and then repeat the following two steps until the stop condition is reached. First, check whether

$$\sum_{c=1}^{C} \psi_c > 1 - U^\star.$$

Next, if the condition is met we set C⋆ = C and stop; otherwise we set C = C + 1 and sample VC ~ Beta(1 − d, α + dC). The Dirichlet process is a special case of the Pitman-Yor process for d = 0.
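The loop in step D.2 can be sketched as follows. It is a minimal illustration with hypothetical names and made-up inputs, assuming the stick-breaking weights ψ1 = V1 and ψc = Vc ∏l<c(1 − Vl) and the stop condition ψ1 + … + ψC > 1 − U⋆; because these weights sum to one almost surely (Appendix A), the loop terminates almost surely for any U⋆ > 0.

```r
# Stick-breaking weights from the stick proportions V_1, ..., V_C.
stickWeights <- function(v) v * cumprod(c(1, 1 - v[-length(v)]))

# Starting from C = Z* (the length of v), add prior draws
# V_C ~ Beta(1 - d, alpha + d * C) until psi_1 + ... + psi_C > 1 - U*.
extendToCStar <- function(v, uStar, alpha, d = 0) {
  while (sum(stickWeights(v)) <= 1 - uStar) {
    C <- length(v) + 1
    v <- c(v, rbeta(1, 1 - d, alpha + d * C))
  }
  v  # length(v) is C*; entries beyond Z* belong to the potential set P
}

set.seed(11)
vA <- c(0.3, 0.5)  # made-up V_c for the active components, Z* = 2
v <- extendToCStar(vA, uStar = 0.01, alpha = 1)
cStar <- length(v)
```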

Step E. This step updates the parameters ΘP and ΘI from the distribution p(ΘP, ΘI|·). The set P is fully determined from step D.2. The parameters correspond to empty mixture components, so updated values of ΘP are sampled directly from the prior. As with the other inactive parameters, ΘI plays no part in this MCMC sweep and so is not updated.

E.1 Taking advantage of the conditional independence structure, in this step we update ΘP by doing a Gibbs sample from the prior, such that Θc ~ p(Θc|Θ0) for each c ∈ P. As Θc may be a vector of parameters, this may involve a number of Gibbs updates per component c. The full details depend upon the choice of the prior p(Θc|Θ0); see Section 3 for examples.

Step F. Here, the global (non-cluster specific) likelihood parameters Λ associated with f are updated. There are only a finite number of such parameters so no special updates are needed.

F.1 Sample Λ ~ p(Λ|ΘA, Z, D). Due to the conditional independence structure of the model the update only depends on the current value of the active likelihood parameters ΘA, the allocations Z, and the data D. Λ may contain multiple parameters, so this stage may contain many Gibbs and / or Metropolis-within-Gibbs steps. Full details will depend upon the choice of f and p(Λ), see Section 3 for examples.

Step G. The final step of the algorithm is to update the allocations Z, conditional on the newly updated values of the other parameters.

G.1 We sample Z ~ p(Z|VA, VP, ΘA, ΘP, U, Λ, D). Because of the independence of the individuals i, this is a series of Gibbs updates for each i = 1, 2, …, n, sampling Zi ~ p(Zi = c|VA, VP, ΘA, ΘP, Ui, Λ, Di) where Di is the data for individual i. For each update, since the conditional p(Zi = c|·) has no posterior mass for clusters c where Ui > ψc, this update depends only on the parameters associated with the finite number of clusters in the sets A and P, making the update a simple multinomial sample according to a finite vector of weights. The full details of the weights will depend upon the choice of f and
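A single update in step G.1 can be sketched as below, with hypothetical names and made-up values. It assumes that, given Ui, the conditional weight of component c is the indicator of ψc > Ui times the likelihood contribution f(Di | Θc, Λ), supplied here as a precomputed vector, so only the finitely many components in A and P can be selected.

```r
# One allocation update Z_i ~ p(Z_i = c | ...) for c = 1, ..., C*.
# psi: weights psi_1..psi_{C*}; lik: f(D_i | Theta_c, Lambda) for the same c.
sliceAllocate <- function(ui, psi, lik) {
  w <- ifelse(psi > ui, lik, 0)  # components with psi_c <= U_i get no mass
  sample.int(length(psi), 1, prob = w)
}

set.seed(5)
psi <- c(0.5, 0.3, 0.15)  # made-up weights for C* = 3 components
lik <- c(0.2, 0.9, 0.9)   # made-up likelihood values
zi <- sliceAllocate(ui = 0.2, psi = psi, lik = lik)  # component 3 excluded
```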

C. Posterior conditional distributions for variable selection

In the case of variable selection for continuous covariates, define the J × J matrix Γc as

for the first variable selection method, and

for the second method as presented in Section 3.5. Let IJ denote the J × J identity matrix, nc be the number of individuals allocated to cluster c, be as defined in Section 3.5 and such that The posterior conditional distributions for updating µc for c ∈ A are then given by

where

and

Contributor Information

Silvia Liverani, Brunel University London.

David I. Hastie, Imperial College London

Lamiae Azizi, MRC Biostatistics Unit Cambridge.

Michail Papathomas, University of St Andrews.

Sylvia Richardson, MRC Biostatistics Unit Cambridge.

References

- Andrieu C, Thoms J. A Tutorial on Adaptive MCMC. Statistics and Computing. 2008;18(4):343–373. [Google Scholar]

- Bigelow JL, Dunson DB. Bayesian Semiparametric Joint Models for Functional Predictors. Journal of the American Statistical Association. 2009;104(485):26–36. doi: 10.1198/jasa.2009.0001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Blackwell D, MacQueen JB. Ferguson Distributions via Polya Urn Schemes. The Annals of Statistics. 1973;1(2):353–355. [Google Scholar]

- Chung Y, Dunson DB. Nonparametric Bayes Conditional Distribution Modeling with Variable Selection. Journal of the American Statistical Association. 2009;104(488):1646–1660. doi: 10.1198/jasa.2009.tm08302. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dunson DB. Nonparametric Bayes Local Partition Models for Random Effects. Biometrika. 2009;96(2):249–262. doi: 10.1093/biomet/asp021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dunson DB, Herring AB, Siega-Riz AM. Bayesian Inference on Changes in Response Densities over Predictor Clusters. Journal of the American Statistical Association. 2008;103(484):1508–1517. doi: 10.1198/016214508000001039. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Escobar MD, West M. Bayesian Density Estimation and Inference Using Mixtures. Journal of the American Statistical Association. 1995;90(430):577–588. [Google Scholar]

- Ferguson TS. A Bayesian Analysis of Some Nonparametric Problems. The Annals of Statistics. 1973;1(2):209–230. [Google Scholar]

- Fritsch A, Ickstadt K. Improved Criteria for Clustering Based on the Posterior Similarity Matrix. Bayesian Analysis. 2009;4(2):367–391. [Google Scholar]

- Green PJ. Colouring and Breaking Sticks: Random Distributions and Heterogeneous Clustering. In: Bingham NH, Goldie CM, editors. Probability and Mathematical Genetics: Papers in Honour of Sir John Kingman. Cambridge University Press; Cambridge: 2010. pp. 319–344. [Google Scholar]

- Hastie DI, Liverani S, Richardson S. Sampling from Dirichlet Process Mixture Models with Unknown Concentration Parameter: Mixing Issues in Large Data Implementations. Statistics and Computing. 2014 doi: 10.1007/s11222-014-9471-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hastie DI, Liverani S, Richardson S. PReMiuM: Dirichlet Process Bayesian Clustering, Profile Regression. R package version 3.1.0. 2015 doi: 10.18637/jss.v064.i07. URL http://CRAN.R-project.org/package=PReMiuM. [DOI] [PMC free article] [PubMed]

- Ishwaran H, James LF. Gibbs Sampling Methods for Stick-Breaking Priors. Journal of the American Statistical Association. 2001;96(453):161–173. [Google Scholar]

- Kalli M, Griffin JE, Walker SG. Slice Sampling Mixture Models. Statistics and Computing. 2011;21(1):93–105. [Google Scholar]

- Maechler M, Rousseeuw P, Struyf A, Hubert M, Hornik K. cluster: Cluster Analysis Basics and Extensions. R package version 1.15.3. 2014 URL http://CRAN.R-project.org/package=cluster. [Google Scholar]

- Molitor J, Papathomas M, Jerrett M, Richardson S. Bayesian Profile Regression with an Application to the National Survey of Children’s Health. Biostatistics. 2010;11(3):484–498. doi: 10.1093/biostatistics/kxq013. [DOI] [PubMed] [Google Scholar]

- Molitor J, Su JG, Molitor NT, Gómez Rubio V, Richardson S, Hastie D, Morello-Frosch R, Jerrett M. Identifying Vulnerable Populations through an Examination of the Association Between Multipollutant Profiles and Poverty. Environmental Science & Technology. 2011;45(18):7754–7760. doi: 10.1021/es104017x. [DOI] [PubMed] [Google Scholar]

- Müller P, Quintana F, Rosner GL. A Product Partition Model with Regression on Covariates. Journal of Computational and Graphical Statistics. 2011;20(1):260–278. doi: 10.1198/jcgs.2011.09066. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Neal RM. Markov Chain Sampling Methods for Dirichlet Process Mixture Models. Journal of Computational and Graphical Statistics. 2000;9(2):249. [Google Scholar]

- Papaspiliopoulos O. A Note on Posterior Sampling from Dirichlet Mixture Models. Technical Report 8, CRISM Paper. 2008.

- Papaspiliopoulos O, Roberts GO. Retrospective Markov Chain Monte Carlo Methods for Dirichlet Process Hierarchical Models. Biometrika. 2008;95(1):169–186.

- Papathomas M, Molitor J, Hoggart C, Hastie DI, Richardson S. Exploring Data from Genetic Association Studies Using Bayesian Variable Selection and the Dirichlet Process: Application to Searching for Gene × Gene Patterns. Genetic Epidemiology. 2012;36(6):663–674. doi: 10.1002/gepi.21661.

- Papathomas M, Molitor J, Richardson S, Riboli E, Vineis P. Examining the Joint Effect of Multiple Risk Factors Using Exposure Risk Profiles: Lung Cancer in Non-Smokers. Environmental Health Perspectives. 2011;119:84–91. doi: 10.1289/ehp.1002118.

- Pitman J, Yor M. The Two-Parameter Poisson-Dirichlet Distribution Derived from a Stable Subordinator. The Annals of Probability. 1997;25(2):855–900.

- Plummer M, Best N, Cowles K, Vines K. coda: Convergence Diagnosis and Output Analysis for MCMC. R News. 2006;6(1):7–11. URL http://CRAN.R-project.org/doc/Rnews/

- Quintana FA, Müller P, Papoila AL. Cluster-Specific Variable Selection for Product Partition Models. 2013. Submitted.

- R Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing; Vienna, Austria: 2014. URL http://www.R-project.org/

- Sethuraman J. A Constructive Definition of Dirichlet Priors. Statistica Sinica. 1994;4(2):639–650.

- Stephens M. Dealing with Label Switching in Mixture Models. Journal of the Royal Statistical Society B. 2000;62(4):795–809.

- Walker SG. Sampling the Dirichlet Mixture Model with Slices. Communications in Statistics – Simulation and Computation. 2007;36(1):45–54.

- Yau C, Papaspiliopoulos O, Roberts GO, Holmes C. Bayesian Non-Parametric Hidden Markov Models with Applications in Genomics. Journal of the Royal Statistical Society B. 2011;73(1):37–57. doi: 10.1111/j.1467-9868.2010.00756.x.
