Abstract

This study examined the contributions of vocabulary and spelling to the reading comprehension of students in grades 6–10 who were and were not classified as English language learners. Results indicate that vocabulary accounted for greater between-grade differences and more unique variance in comprehension (ΔR² = .11 to .31) than spelling did (ΔR² = .01 to .09). However, spelling contributed more to comprehension in the upper grade levels included in this cross-sectional analysis, and it mediated the effect of vocabulary knowledge at all grade levels. The direct effect of vocabulary was strong but smaller at each successive grade level, from .58 in grade 6 to .41 in grade 10, whereas the indirect effect through spelling grew at each successive grade level, from .09 in grade 6 to .16 in grade 10. The language groups did not differ significantly in the magnitude of the indirect effect, suggesting that students in both groups relied more on both sources of lexical information in the higher grades than students in the lower grades did.

Keywords: spelling, vocabulary, reading comprehension, adolescents, English Learners

Perfetti and Hart (2002) have asserted that reading comprehension depends on knowledge of words and their orthographic, phonological, and semantic constituents. Thus, reading skill improves when the reader has more high-quality representations of words and can draw synchronously upon an understanding of their form and meaning (Perfetti, 2007). Previous research suggests that this integrated lexical knowledge takes time to develop because vocabulary and orthographic learning occur gradually with repeated exposures to words (Castles, Davis, Cavalot, & Forster, 2007; Nation, 2009). Therefore, facility with these skills should continue contributing to the reading comprehension of adolescents long after the impact of phonological awareness begins to asymptote (Berninger, Abbott, Nagy, & Carlisle, 2010). In fact, vocabulary and spelling abilities have been found to make unique contributions to lexical retrieval among college students with an average age of 19.1 years (Andrews & Lo, 2012). In the present study, we examined how vocabulary and spelling abilities uniquely and jointly explained the reading comprehension performance of students in grades 6–10.

Theoretical Framework

Numerous studies of the components of reading (see Florit & Cain, 2011, for a review) have supported the Simple View (SV; Gough & Tunmer, 1986), in which decoding and language comprehension undergird reading comprehension in either an additive (Chen & Vellutino, 1997) or a multiplicative (Hoover & Gough, 1990) fashion. Nevertheless, Florit and Cain (2011) pointed out that the relationships among the constructs have varied based on (a) whether the language in which an individual was reading had a shallow or deep orthography, (b) the ways in which the skills were measured, and (c) the number of years the individual had been reading. These distinctions motivated the design of the present study, in which the relationships among reading constructs were modeled, using print-based measures of the constructs, for adolescents who were and were not English language learners (ELLs).

Studies of the SV commonly use picture vocabulary tests to measure language comprehension and may also rely upon oral assessments of passage comprehension (e.g., the examiner reads a sentence or story and has the test taker provide a missing word or retell the story). The oral approach to measuring language and comprehension skills assumes that “potential reading comprehension capacity will be limited by the capacity to comprehend equivalent material in spoken form” (Braze, Tabor, Shankweiler, & Mencl, 2007, p. 227). Some researchers have identified limitations of this approach and posited that picture vocabulary tests may not present a sufficiently challenging language task to predict reading comprehension (Farnia & Geva, 2013; Tannenbaum, Torgesen, & Wagner, 2006).

As readers mature, comprehension requires understanding the meanings of words encountered in text without the benefit of pictorial or verbal cues, so measuring language comprehension orally might enable compensatory behaviors to obscure an individual’s true reading ability (Bruck, 1990; Keenan & Betjemann, 2007). This could be particularly problematic when assessing ELLs, who might have more exposure to oral than written language (Mancilla-Martinez, Kieffer, Biancarosa, Christodoulou, & Snow, 2011) and might be more dependent upon the supportive context and prosodic cues of speech (Braze et al., 2007).

Similarly, the age effect on the decoding-reading comprehension relationship (e.g., Garcia & Cain, 2014) may be a function of how word-level skills are measured in SV studies. Decoding is typically assessed with measures of pseudoword reading and sight word identification. However, older readers must be able to process polysyllabic words, sophisticated orthographic patterns, and words with inconsistent orthographic-phonological mappings. This difference in reading task demands may explain why assessments of word-level skills administered in the early elementary grades have been insufficient predictors of who will have difficulty with reading comprehension in upper elementary, middle, or high school (Catts, Adlof, & Weismer, 2006; Lipka, Lesaux, & Siegel, 2006). It also may offer insight into why decoding tasks have predicted more variance in the reading comprehension of ELLs in grades K–2 (Manis, Lindsey, & Bailey, 2004) than of those in grade 4 (Proctor, Carlo, August, & Snow, 2005).

Alternatively, using print-based measures of vocabulary and word-level skills may better reflect the quality of adolescents’ lexical knowledge that can be brought to bear in comprehending printed passages. The sections that follow review the literature base that supports considering written vocabulary knowledge and spelling as proxies for language comprehension and decoding, respectively, among older students who are and are not ELLs.

Vocabulary knowledge

Although the oral language experiences of young children are associated with the size and growth of their vocabulary knowledge (Hoff, 2006), learning to read and write requires a transfer of what was initially oral language ability to written language ability. In a longitudinal study of grades 1–6, basic oral vocabulary contributed to children’s early reading acquisition, but early reading comprehension skill was described as the fuel for advanced vocabulary development by the upper elementary grades (Verhoeven, Van Leeuwe, & Vermeer, 2011). Moreover, older students’ scores on written vocabulary measures are highly correlated with and predictive of their reading comprehension scores (Cromley & Azevedo, 2007; Leach, Scarborough, & Rescorla, 2003; Valencia & Buly, 2004). That is, students with better comprehension also tend to have better written vocabulary performance, and students with poor comprehension tend to have poor written vocabulary scores.

The relation may be even more pronounced for ELLs, who not only know fewer words than their native English-speaking counterparts but also know fewer meanings and applications of the words they do know (August, Carlo, Dressler, & Snow, 2005; Laufer & Yano, 2001). Incorporating written vocabulary instruction in English, particularly instruction that emphasizes the morphemes within words, has improved the reading performance of ELLs in upper elementary and middle school (Carlo et al., 2004; Lesaux, Kieffer, Faller, & Kelley, 2010). Furthermore, Laufer (2001) found that having ELLs work with a new English word in writing greatly improved their learning and retention of that word. The relation between vocabulary and comprehension is believed to become more pronounced and critical with age, continuing into adulthood (Nation, 2001). In particular, knowledge of morphologically complex words requires more time to develop (Berninger et al., 2010; Nippold & Sun, 2008).

In a study of 11- to 13-year-olds, greater understanding of the meaning of inflectional endings (-ed, -ing) was related to greater accuracy in spelling inflected verbs (Hauerwas & Walker, 2003). Similarly, knowledge of morphemes has made a unique contribution to spelling that is stronger for middle school than for elementary students (Nagy, Berninger, & Abbott, 2006). Although spelling is considered a lower-level reading skill and vocabulary a higher-level skill with greater impact on reading comprehension (Landi, 2010), sharper connections between orthographic and meaning processors may be critical to skilled reading (Adams, 1990). Therefore, we sought to determine not only how adolescents’ vocabulary knowledge directly contributed to their reading comprehension performance but also whether it had an indirect effect through spelling. A greater indirect effect would suggest that integrated knowledge of word form and meaning was more influential than knowledge of word meaning alone.

Spelling

Both decoding and spelling rely on knowledge of the grapho-phonemic patterns of the language (Robbins, Hosp, Hosp, & Flynn, 2010). A review of research found that integrating decoding and spelling instruction in the lower elementary grades led to significant gains in phonemic awareness, alphabetic decoding, word reading, fluency, and comprehension (Weiser & Mathes, 2011). Moreover, the authors believed the spelling instruction might have fostered closer attention to the details of words’ orthographic representations. This seems supported by the results of a longitudinal study of children from ages 8–9 to ages 12–13 in which independent contributions to reading comprehension were made by children’s ability to use larger graphophonic units and morphemes to decode words (Nunes, Bryant, & Barros, 2012).

Ehri (2000) considered accurate spelling to reflect more advanced linguistic knowledge because it requires the integration of phonological, orthographic, and morphological knowledge. This may explain why poor readers identified in the primary grades demonstrated poor spelling ability across grades 2–4, whereas students with a late-emerging reading disability demonstrated low spelling performance relative to typical readers only in fourth grade (Lipka et al., 2006). As the words became more challenging and complex, participants identified as having a late-emerging reading disability exhibited more difficulty representing the phonemes in the words. Although the most common spelling errors were phonological, the researchers concluded that the students’ phonological skills were likely sufficient to support early reading activities but not advanced enough for the more difficult reading and spelling tasks of third and fourth grades. Difficulties in reading irregular words also have been attributed to orthographic weaknesses (Harm & Seidenberg, 1999). More precise, high-quality lexical representations of words are evidenced by more accurate spelling (Perfetti, 2007), and previous research has shown that students remember vocabulary words better when spellings are shown as the words are learned orally than when the words are practiced orally without spelling (Ricketts, Bishop, & Nation, 2009; Rosenthal & Ehri, 2008).

Evidence suggests that the development of orthographic knowledge proceeds in a similar fashion for both native English-speaking students and ELLs. For example, the spelling error rates and distributions of 9- to 11-year-old native English speakers have been found to be comparable to those of adult ELLs, with only certain categories of errors (e.g., consonant doubling) more prevalent among individuals of particular language backgrounds (Bebout, 1985; Cook, 1997). Apart from these idiosyncratic error types, the acquisition of English spelling rules and patterns seems to map onto a developmental sequence (Young, 2007). Whereas phonological and orthographic processes in another language may transfer to English and facilitate English reading development, spelling development likely takes more time and accumulated experience with the English language (Sun-Alperin & Wang, 2011).

Given adolescent ELLs’ potentially delayed acquisition of complex word form and meaning constituents as compared to their native English-speaking peers, we were interested in examining the contributions of vocabulary and spelling to the reading comprehension of students disaggregated by language group. This information would be useful in designing instruction for a large and growing subgroup of students who have demonstrated increased risk for comprehension difficulties (National Center for Education Statistics, 2013).

Purpose and Research Question

Despite relative agreement in the field that vocabulary and orthography are related to skilled reading, models of adolescent reading have not specifically included a spelling construct (e.g., Berninger & Abbott, 2010; Cromley & Azevedo, 2007). Moreover, recent studies exploring whether the SV needs to be expanded have included fluency and vocabulary as factors separate from decoding and language, but these studies have neither addressed the role of spelling in the model nor measured written vocabulary knowledge (Braze et al., 2007; Farnia & Geva, 2013; Sabatini, Sawaki, Shore, & Scarborough, 2010; Tunmer & Chapman, 2012; Wagner, Herrera, Spencer, & Quinn, 2015). Even when assessing reading comprehension, rather than oral passage comprehension, the SV studies have continued to use picture vocabulary tests (Braze et al., 2007; Tunmer & Chapman, 2012; Wagner et al., 2015). In addition, only one of these studies examined differences between native English speakers and ELLs in their reading performance and growth (Farnia & Geva, 2013).

Two of the recent studies tested models of the SV in which vocabulary affected decoding and vice versa. Tunmer and Chapman’s (2012) structural equation model seemed to challenge the essential tenet of the SV (i.e., that oral language and decoding have independent effects on reading comprehension) because the authors reported that oral vocabulary affected decoding. Although Wagner et al. (2015) found a misspecification in that model, their corrected model of the SV was equivalent to a model in which oral language had a direct effect on decoding, thus supporting Tunmer and Chapman’s alternative view. The present study investigates this alternative view of the SV by analyzing the independent effects of written vocabulary knowledge and productive spelling ability on reading comprehension as well as the direct effect vocabulary might have on spelling. Given that older students acquire many new vocabulary words by encountering their spellings while reading text (Ricketts et al., 2009; Rosenthal & Ehri, 2008), exploring spelling as a mediator of the relationship between vocabulary and reading comprehension is warranted.

Hence, the purposes of this study were to inform how vocabulary supports reading at the supralexical level (Bowers, Kirby, & Deacon, 2010) and the ways in which form and meaning information separately and jointly contribute to the comprehension of adolescent readers who are and are not native English speakers (Perfetti, 2007). The following research questions guided this study: How much variance in reading comprehension is uniquely and jointly explained by measures of spelling and vocabulary for ELLs and native English speakers in grades 6–10? Does spelling mediate the relation between vocabulary and reading comprehension, and is the mediation moderated by language subgroups across grades 6–10?

Method

Participants

A total of 2,813 students (sixth n = 538, seventh n = 539, eighth n = 520, ninth n = 697, tenth n = 519) participated in formative state testing of reading skills in the winter (November–January), followed by annual accountability testing in the spring. Students were enrolled in approximately 100 classrooms within a large district in Florida. Among the participants, 50% were male and 60% were eligible for free or reduced-price lunch, meaning they were considered economically disadvantaged. Approximately 1% of the students were Asian, 21% African American, 26% Hispanic, 3% Multiracial, and 47% White; less than 1% were Native American or Pacific Islander, and 3% were not reported. Fifteen percent of students were identified with disabilities. By language background, 14% of the students were classified as limited English proficient (LEP) because of low scores on the Comprehensive English Language Learning Assessment (CELLA; Educational Testing Service, 2005). For the purposes of this study, LEP status is used as the identifier for ELL status.¹ The sample sizes by grade and language group are reported in Table 1.

Table 1.

Sample Sizes by Grade and Language Group

| Grade | Non-LEP | LEP |
|---|---|---|
| 6 | 447 | 91 |
| 7 | 466 | 73 |
| 8 | 441 | 79 |
| 9 | 596 | 101 |
| 10 | 460 | 59 |

Note. LEP = served under the federal limited English proficient status (used as the identifier for English language learner in this study)

Measures

The measures used in this study were from the Florida Comprehensive Assessment Test (FCAT; Florida Department of Education [FDOE], 2001) and the Florida Assessments for Instruction in Reading (FAIR; FDOE, 2009). All data were obtained from the archived data core, which is a statewide repository for students’ de-identified reading data. Subtests from the FAIR included the Word Analysis Task (used as a proxy for decoding) and the Vocabulary Knowledge Task (used as a proxy for language comprehension). Both subtests were computer administered via the Internet. Students listened to directions on headphones and completed practice items with feedback before beginning the scored items.

Reading comprehension

The FCAT served as the state’s summative (i.e., end-of-year) assessment of student achievement in reading, writing, mathematics, and science prior to the 2014–15 school year (FDOE, 2001). The reading portion of the FCAT was a group-administered, criterion-referenced test consisting of six to eight informational and literary reading passages provided in print (FDOE, 2005). Students in grades 3–10 responded in paper-and-pencil format to 6–11 multiple-choice items for each passage and were assessed across four content clusters. At the time of the study, these clusters were reading comprehension of words and phrases in context, main idea, comparison/cause and effect, and reference and research.

There were five proficiency levels on the FCAT, with Level 1 being the lowest; students scoring at Level 3 or above were considered to be passing. Standard scores were used in the current analyses. Reliability for the FCAT was high (.90). In addition, content and concurrent validity were established through a series of expert panel reviews and data analyses (FDOE, 2001). The construct validity of the FCAT as a comprehensive assessment of reading outcomes received strong empirical support in an analysis of its relations with a variety of other reading comprehension, language, and basic reading measures (Schatschneider et al., 2004).

Word analysis task

The FAIR Word Analysis Task was a computer-adaptive test of spelling that assessed students’ knowledge of the phonological, orthographic, and morphological information necessary for accurate representations of English orthography. In this study, we refer to the FAIR Word Analysis Task as the spelling task. The selection of words involved a multistage process developed by Authors (2005). First, words were grouped by grade level according to spelling patterns taught in commonly used spelling programs and those identified in the state standards. Next, words representative of the spelling patterns were assessed for familiarity at the designated grade level using the Living Word Vocabulary levels (Dale & O’Rourke, 1981), which indicate the percentage of students at a grade level who understand the word. Finally, the frequency of each word was evaluated with the Educator’s Word Frequency Guide (Zeno, Ivens, Millard, & Duvvuri, 1995). In general, words became longer, more complex, and less frequent with each increase in grade level.

Students listened through headphones to a recorded word that was spoken aloud and presented within the context of a sentence. They then typed the word. Students were first presented with five words at their grade level; the system then became adaptive and delivered harder or easier words, depending on student ability, up to a maximum of 30 words. On average, students spelled approximately 12 words. This test provided information about the strength of students’ knowledge of written words, which is fundamental to accurate identification of words in text. It might also be considered indicative of the quality of a student’s lexical representations, because students had to integrate knowledge of each word’s form and meaning to spell a dictated word that fit the given sentence context. A spelling dictation task has high face validity because it captures the most common notion of what it means to spell a word. That is, an individual hears or imagines a word, segments its sounds, attaches letters to produce an orthographic representation of the sounds, and then checks the spelling to verify that the written word looks and sounds right. Because spelling, so defined, requires attention to a word’s complete orthographic representation, the research team hypothesized that it could mediate the relation between vocabulary knowledge and reading comprehension.
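The adaptive phase described above can be illustrated with a generic computer-adaptive testing loop. The sketch below is not the FAIR algorithm, which is not specified here; it assumes a Rasch (one-parameter logistic) model, a hypothetical bank of 33 item difficulties, an expected a posteriori (EAP) ability estimate under a standard normal prior, and a hypothetical stopping rule (posterior SD at or below 0.5, or 30 items). After the fixed starting items, each new item is the unused one whose difficulty is closest to the current ability estimate.

```python
import math

# Hypothetical item bank: 33 Rasch difficulties from -4 to +4 logits
# (illustrative values, not the FAIR item bank).
ITEM_BANK = [d / 4 for d in range(-16, 17)]
# Grid of ability values used for numerical posterior (EAP) estimation.
GRID = [g / 10 for g in range(-50, 51)]


def p_correct(theta, b):
    """Rasch probability that a student with ability theta spells an item of difficulty b."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def eap_estimate(responses):
    """Posterior mean and SD of ability under a standard normal prior."""
    weights = []
    for theta in GRID:
        w = math.exp(-0.5 * theta * theta)  # unnormalized N(0, 1) prior
        for b, correct in responses:
            p = p_correct(theta, b)
            w *= p if correct else 1.0 - p
        weights.append(w)
    total = sum(weights)
    mean = sum(t * w for t, w in zip(GRID, weights)) / total
    var = sum((t - mean) ** 2 * w for t, w in zip(GRID, weights)) / total
    return mean, math.sqrt(var)


def run_cat(answer, start_items, max_items=30, se_stop=0.5):
    """Give fixed starting items, then adaptive items until SE <= se_stop or max_items."""
    responses = [(b, answer(b)) for b in start_items]
    remaining = [b for b in ITEM_BANK if b not in start_items]
    theta, se = eap_estimate(responses)
    while len(responses) < max_items and se > se_stop and remaining:
        # Rasch item information p(1 - p) peaks where b equals theta, so the
        # closest unused difficulty is the most informative next item.
        b = min(remaining, key=lambda d: abs(d - theta))
        remaining.remove(b)
        responses.append((b, answer(b)))
        theta, se = eap_estimate(responses)
    return theta, se, len(responses)


start = [-0.5, -0.25, 0.0, 0.25, 0.5]  # hypothetical grade-level starting items
# Simulated student who spells every item with difficulty up to 1.0 correctly.
theta, se, n_items = run_cat(lambda b: b <= 1.0, start)
```

Because items near the current estimate are the most informative, a student who answers correctly is routed to harder words and one who answers incorrectly to easier words, which is the general behavior the task description reports.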

The spelling task provided developmental ability scores, which are vertically scaled scores with a mean of 500 and a standard deviation of 100 across the grade 3–10 developmental span. Estimates of reliability ranged from .90 in grades 4–6 to .95 in grade 8. Because the spelling task was part of a reading assessment system, its validation focused on its relation to sentence-level and text-level reading comprehension rather than its relation to other spelling measures (Authors, 2010). Correlations between the spelling task and the FCAT were moderate (FDOE, 2009): r = .54 (grade 6), r = .44 (grade 7), r = .46 (grade 8), r = .44 (grade 9), and r = .45 (grade 10). The task was designed to be computer adaptive in order to increase precision at the extremes of the spelling distribution, so its correlation with group-administered, standardized spelling measures was considered less meaningful.

Vocabulary knowledge task

In this task, students selected one of three morphologically related words that best completed a sentence such as: The student [attained*, retained, detained] a high grade in the class through hard work. Knowledge of affixes, particularly derivational morphemes, was required, as can be seen in the following example: Her book was [illustrious, illustration, illustrative*] of 18th-century prose. Words included on this task were selected in a multistep process. First, words were chosen from the academic word list compiled by Coxhead (2000) and then ordered by frequency using the Educator’s Word Frequency Guide (Zeno et al., 1995) such that words became increasingly complex (particularly due to the use of derivational morphemes) and less frequent. After the sentences containing the target and distractor items were written, they were assigned to a grade level using the Flesch-Kincaid grade-level readability formula and expert judgment.
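The Flesch-Kincaid grade level used to assign sentences to grades is a fixed linear formula: 0.39 × (words per sentence) + 11.8 × (syllables per word) - 15.59. The sketch below applies it to the first example item. The regex tokenizer and the vowel-group syllable counter are simplifying assumptions, not the procedure used to build the task.

```python
import re


def count_syllables(word):
    """Crude vowel-group syllable count (a heuristic, not a dictionary lookup)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Treat a final silent "e" (as in "grade") as non-syllabic, but keep "-le"/"-ee".
    if word.endswith("e") and not word.endswith(("le", "ee")) and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(words, sentences, syllables):
    """Flesch-Kincaid grade level from word, sentence, and syllable counts."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def fk_grade_of_text(text):
    """Apply the formula to raw text using simple regex tokenization."""
    sentences = max(len(re.findall(r"[.!?]+", text)), 1)
    words = re.findall(r"[A-Za-z]+", text)
    syllables = sum(count_syllables(w) for w in words)
    return flesch_kincaid_grade(len(words), sentences, syllables)


item = "The student attained a high grade in the class through hard work."
grade_level = fk_grade_of_text(item)  # 12 words, 15 heuristic syllables -> about 3.84
```

The heuristic counts "attained" as three syllables rather than two, which illustrates why formula-based estimates benefit from the kind of expert review described above.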

Scores were based on the total number of items answered correctly. Reliability estimates ranged from .90 in grade 3 to .95 in grade 8 (Authors, 2012). Score types and their ranges were similar to those described for the FAIR spelling task, and the vocabulary developmental ability scores were used in the analyses. Correlations between the vocabulary task and reading comprehension measured by the FAIR were (FDOE, 2009): r = .60 (grade 6), r = .59 (grade 7), r = .62 (grade 8), r = .57 (grade 9), and r = .50 (grade 10). Concurrent correlations with the Peabody Picture Vocabulary Test, Fourth Edition (PPVT; Dunn & Dunn, 2007) ranged from .47 to .67 (Authors, 2014). These lower correlations were expected because the vocabulary knowledge task is intended to assess deeper lexical knowledge, whereas the PPVT is intended to assess vocabulary breadth.

Procedures

The FAIR was administered to Florida students in grades K–12 three times a year to monitor progress toward state standards and benchmarks. The FDOE, in consultation with district superintendents, established 60-day testing windows for each of the three yearly administrations. Data for this study were gathered during the November to January window. The students who took the FAIR were usually those who did not yet have FCAT scores or who had scored at the lower levels of proficiency (Levels 1–3) on the FCAT, because the assessment was primarily designed to inform instruction for students who were struggling with reading (Authors, 2009). However, districts could elect to administer the FAIR to students at all ability levels (Levels 1–5 on the FCAT).

Students typically took the FAIR in computer labs, or in their classrooms if wireless laptop carts were available. Rarely, students might have taken turns completing the FAIR on a designated classroom computer. The administration was overseen by the classroom teacher in elementary schools or by the reading intervention teacher in middle and high schools. Students usually began with a Reading Comprehension screen (not included in the present analyses), which took 10–30 minutes, and could take the other tests either immediately thereafter or in another class period, as decided by the teacher overseeing the administration. The total testing time was typically less than 40 minutes.

Analytic Approach

The first research question was addressed with a set of hierarchical regression analyses predicting comprehension. Unique variance was estimated by reversing the order of entry of the spelling and vocabulary predictors, so that each predictor’s unique variance was its increment in R² when entered after the other predictor. Common variance was computed as the total variance explained minus the sum of the two predictors’ unique variances.
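The variance partitioning described above can be sketched with ordinary least squares. Assuming each model includes an intercept, a predictor's unique variance is the R² of the two-predictor model minus the R² of the model that omits that predictor, and the common variance is whatever remains of the total. The data below are simulated with arbitrary coefficients and are not the study's data.

```python
import numpy as np


def r_squared(y, *predictors):
    """R^2 from an OLS fit of y on an intercept plus the given predictors."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return 1.0 - resid @ resid / np.sum((y - y.mean()) ** 2)


def partition_variance(y, vocab, spell):
    """Unique and common variance from reversing the order of entry."""
    total = r_squared(y, vocab, spell)
    unique_vocab = total - r_squared(y, spell)  # vocabulary entered second
    unique_spell = total - r_squared(y, vocab)  # spelling entered second
    common = total - unique_vocab - unique_spell
    return {"total": total, "unique_vocab": unique_vocab,
            "unique_spell": unique_spell, "common": common}


# Simulated scores (standardized units); coefficients are arbitrary.
rng = np.random.default_rng(0)
n = 500
vocab = rng.normal(size=n)
spell = 0.6 * vocab + rng.normal(scale=0.8, size=n)
comp = 0.5 * vocab + 0.2 * spell + rng.normal(size=n)
parts = partition_variance(comp, vocab, spell)
```

Because the two predictors are correlated, a sizable share of the explained variance is common to both and cannot be attributed to either one alone.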

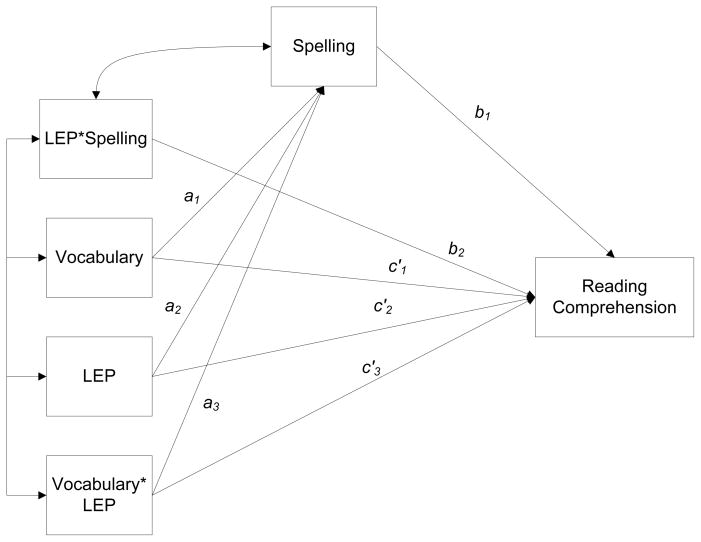

A multiple-group moderated mediation analysis using Mplus 6.1 (Muthén & Muthén, 1998–2010) was conducted to ascertain the extent to which the mediating role of spelling between vocabulary and reading comprehension was moderated by language status across the grade levels. This process required a sequence of analyses, beginning with a multiple-group simple mediation analysis, which is illustrated in Figure 1. In this representation there is an initial variable (X), an outcome (Y), and a mediator (M). With the present data, we initially hypothesized that the effect of vocabulary (X) on reading comprehension (Y) is mediated by spelling (M), with vocabulary still uniquely predicting comprehension. Baron and Kenny (1986) outlined three key steps for testing mediation. In conceptual terms, these steps are to 1) establish that vocabulary is related to reading comprehension (i.e., testing c’ in Figure 1); 2) establish that vocabulary is related to spelling (testing a1 in Figure 1); and 3) establish that spelling is related to reading comprehension when controlling for vocabulary (testing b1 in Figure 1). If, in the third step, the path c’ is shown to be 0 when a1 and b1 are estimated, then spelling completely mediates the relation between vocabulary and reading comprehension. Alternatively, if c’ is nonzero, partial mediation can be inferred. The estimate and significance of the indirect effect were assessed using bootstrapping, which has been shown to be advantageous in simple mediation models (MacKinnon, Lockwood, & Williams, 2004).
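The mediation steps above, together with a bootstrapped indirect effect, can be sketched for a single group using OLS. This is a simplified illustration, not the Mplus multiple-group model: it ignores clustering and language group, assumes a percentile bootstrap (the text does not state which bootstrap interval was used), and runs on simulated data. For OLS with a single mediator, the total effect c of X on Y decomposes exactly as c = c' + a1*b1.

```python
import numpy as np


def ols(y, *xs):
    """OLS coefficients (intercept first) for y regressed on the given predictors."""
    X = np.column_stack([np.ones(len(y)), *xs])
    return np.linalg.lstsq(X, y, rcond=None)[0]


def mediation_paths(x, m, y):
    """Return a1 (X -> M), b1 (M -> Y given X), and c' (X -> Y given M)."""
    a1 = ols(m, x)[1]
    _, c_prime, b1 = ols(y, x, m)
    return a1, b1, c_prime


def bootstrap_indirect(x, m, y, n_boot=2000, seed=0):
    """Point estimate and 95% percentile bootstrap CI for the indirect effect a1*b1."""
    rng = np.random.default_rng(seed)
    n = len(x)
    a1, b1, _ = mediation_paths(x, m, y)
    draws = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample students with replacement
        a, b, _ = mediation_paths(x[idx], m[idx], y[idx])
        draws[i] = a * b
    lo, hi = np.percentile(draws, [2.5, 97.5])
    return a1 * b1, (lo, hi)


# Simulated vocabulary (X), spelling (M), and comprehension (Y) scores.
rng = np.random.default_rng(42)
n = 500
vocab = rng.normal(size=n)
spell = 0.5 * vocab + rng.normal(size=n)
comp = 0.4 * vocab + 0.3 * spell + rng.normal(size=n)
indirect, (ci_lo, ci_hi) = bootstrap_indirect(vocab, spell, comp, n_boot=1000)
```

A confidence interval that excludes zero indicates a nonzero indirect effect; a nonzero c' alongside it corresponds to partial rather than complete mediation.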

Figure 1.

General mediation model.

Our initial approach was to take advantage of the complex structure of the data, as individual students were nested within classrooms. In modeling the hierarchical data, however, the classroom-level estimates demonstrated suppressor effects in some grades (because very high correlations among the skills were observed) and non-positive definite variance-covariance matrices. Therefore, rather than specifying a model for each level of the clustered data, we accounted for the non-independence of observations by using a sandwich estimator to provide cluster-corrected standard errors. This approach is appropriate for accounting for the nested structure of data without specifically answering questions about variance components at different levels (Asparouhov, 2006).
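A cluster-robust (sandwich) covariance matrix leaves the regression coefficients unchanged and adjusts their standard errors for classroom-level dependence. The sketch below computes the basic CR0 form, (X'X)^-1 [Σ_g X_g' e_g e_g' X_g] (X'X)^-1, for an OLS regression. The estimator used in Mplus (Asparouhov, 2006) is applied to maximum likelihood estimates and may differ in detail, including small-sample adjustments; the 100 simulated classrooms of 25 students are hypothetical.

```python
import numpy as np


def cluster_robust_se(x, y, clusters):
    """OLS coefficients with CR0 cluster-robust (sandwich) standard errors."""
    X = np.column_stack([np.ones(len(y)), x])
    bread = np.linalg.inv(X.T @ X)
    beta = bread @ X.T @ y
    resid = y - X @ beta
    meat = np.zeros((X.shape[1], X.shape[1]))
    for g in np.unique(clusters):
        rows = clusters == g
        score = X[rows].T @ resid[rows]  # summed score contribution of classroom g
        meat += np.outer(score, score)
    vcov = bread @ meat @ bread
    return beta, np.sqrt(np.diag(vcov))


def naive_se(x, y):
    """Conventional OLS standard errors that assume independent observations."""
    X = np.column_stack([np.ones(len(y)), x])
    bread = np.linalg.inv(X.T @ X)
    resid = y - X @ (bread @ X.T @ y)
    sigma2 = resid @ resid / (len(y) - X.shape[1])
    return np.sqrt(np.diag(sigma2 * bread))


# Simulated students nested in classrooms, with classroom effects on x and y.
rng = np.random.default_rng(1)
n_class, per_class = 100, 25
clusters = np.repeat(np.arange(n_class), per_class)
x = rng.normal(size=n_class)[clusters] + rng.normal(size=clusters.size)
y = 0.5 * x + rng.normal(size=n_class)[clusters] + rng.normal(size=clusters.size)
beta, robust = cluster_robust_se(x, y, clusters)
naive = naive_se(x, y)
```

Because both the predictor and the outcome share classroom-level variance in this simulation, the cluster-robust standard error for the slope is larger than the naive one, which would otherwise overstate precision.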

Once mediation was evaluated, we extended the analysis to test whether the indirect effect was moderated by language group status across the grades. Although the term moderated mediation has conveyed a host of different interpretations, both substantively and analytically, Preacher, Rucker, and Hayes (2007) defined the general approach as a test of conditional indirect effects. Under this framework, they highlighted five popular statistical approaches that differ in what is moderated: 1) the independent variable is treated as the moderator, 2) path a (Figure 1) is moderated, 3) path b (Figure 1) is moderated, 4) path a is moderated by one variable and path b by another, and 5) paths a and b are both moderated by the same variable. The present study used the last of these approaches because we expected language status to moderate both paths and, therefore, the indirect effect.
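When a single dummy-coded moderator W (here, a hypothetical language-group indicator) moderates both the a and b paths, the conditional indirect effect at W = w is (a1 + a3w)(b1 + b2w), where a3 is the coefficient of the X×W term in the mediator model and b2 is the coefficient of the M×W term in the outcome model. The sketch below estimates these point values with OLS on simulated data; the labels a3 and b2 are our own, and the sketch does not reproduce the Mplus model or its Wald tests.

```python
import numpy as np


def ols(y, *xs):
    """OLS coefficients (intercept first) for y regressed on the given predictors."""
    X = np.column_stack([np.ones(len(y)), *xs])
    return np.linalg.lstsq(X, y, rcond=None)[0]


def conditional_indirect(x, m, y, w):
    """Indirect effect of x on y through m at W = 0 and W = 1, with W moderating a and b."""
    # Mediator model: M = a0 + a1*X + a2*W + a3*X*W
    _, a1, _, a3 = ols(m, x, w, x * w)
    # Outcome model: Y = b0 + c1*X + c2*W + c3*X*W + b1*M + b2*M*W
    _, _, _, _, b1, b2 = ols(y, x, w, x * w, m, m * w)
    return {wv: (a1 + a3 * wv) * (b1 + b2 * wv) for wv in (0, 1)}


# Simulated data: W = 1 marks a hypothetical second language group whose
# a and b paths are both larger than those of the W = 0 group.
rng = np.random.default_rng(7)
n = 4000
w = rng.integers(0, 2, size=n).astype(float)
x = rng.normal(size=n)
m = (0.5 + 0.3 * w) * x + rng.normal(size=n)
y = 0.4 * x + (0.3 + 0.2 * w) * m + rng.normal(size=n)
effects = conditional_indirect(x, m, y, w)
```

At W = 0 the expression reduces to a1*b1. Because both models are fully interacted with the dummy moderator, each conditional indirect effect equals the simple a*b estimate obtained within that group alone.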

Estimating conditional indirect effects requires expanding the model presented in Figure 1 to the one provided in Figure 2. The components X, M, and Y remain; however, additional paths and observed variables appear. In this model, LEP represents the moderator, LEP*Spelling is the interaction between the moderator and the mediator, and Vocabulary*LEP is the interaction between the initial variable and the moderator. Following Preacher et al. (2007), the indirect effect from this model may be estimated from the paths as

$$\text{Indirect} = (a_1 + a_3 W_v)(b_1 + b_2 W_v),$$

where a3 denotes the path from Vocabulary*LEP to spelling, b2 denotes the path from LEP*Spelling to reading comprehension, and Wv is the value of the moderator of interest to test. In the present data, the moderator was a set of dummy-coded covariates used to test the pairwise comparisons across language-comprehension statuses; thus, when Wv = 0, the equation reduced to Indirect = a1*b1. For each moderator comparison, a Wald test was used to test the null hypothesis of no difference in the indirect effect. Because multiple comparisons were conducted on the outcome within each grade, a Benjamini-Hochberg correction (Benjamini & Hochberg, 1995) was applied within each grade to control the false discovery rate.

Figure 2.

Total effect moderation model. LEP = served under the federal limited English proficient status (used as the identifier for English language learner in this study)
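The Benjamini-Hochberg step-up procedure can be written in a few lines: sort the m p values, find the largest rank k whose p value is at most (k/m)q, and reject the hypotheses ranked 1 through k. The sketch below assumes a false discovery rate of q = .05, which the text does not state.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return a reject (True) / retain (False) flag for each p value under BH step-up."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest 1-based rank k with p_(k) <= (k / m) * q.
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * q:
            k_max = rank
    # Reject every hypothesis ranked at or below k_max.
    reject = [False] * m
    for rank, i in enumerate(order, start=1):
        reject[i] = rank <= k_max
    return reject


flags = benjamini_hochberg([0.02, 0.03, 0.04])
```

For p values of .02, .03, and .04, all three hypotheses are rejected even though .02 exceeds its own threshold of about .017, because the procedure steps up from the largest rank that passes (.04 ≤ .05).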

Results

No data were missing for this study. The battery of measures was part of a screening and diagnostic assessment system used in Florida, and it was required for all students in districts that opted to use it. Descriptive statistics for the three measures are reported in Table 2. Because the vocabulary and spelling tasks were vertically scaled across grades, higher scores at successive grade levels indicated higher ability. For both of these tasks, mean ability scores were progressively higher across the grade levels; however, the between-grade differences were larger for vocabulary than for spelling. For example, the grade 6 and grade 7 vocabulary means differed by 35 points. Because the standard deviation of the developmental scale in the norm sample was 100, a 35-point difference represents approximately a .35 standard deviation difference (i.e., 35/100). The corresponding spelling means differed by 7 points (i.e., .07 SD). This trend was observed across the grade levels, suggesting greater separation in ability for vocabulary than for spelling. When expressed as a standardized effect size (i.e., Cohen’s d), the between-grade differences ranged from small (d = 0.16) to large (d = 1.12) for vocabulary scores, compared with a more modest range of d = 0.08 to 0.59 for spelling scores (Table 3).
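The effect sizes above can be computed from the Table 2 means and standard deviations. The sketch below shows both the scale-SD shortcut used in the text (mean difference divided by 100) and Cohen's d with a pooled standard deviation; the pooled-SD formula is our assumption about how Table 3 was computed. With it, the grade 7 versus grade 8 vocabulary comparison gives d ≈ 0.16 and the grade 6 versus grade 7 spelling comparison gives d ≈ 0.08, values that agree with the low ends of the reported ranges.

```python
import math


def scale_sd_difference(mean1, mean2, scale_sd=100.0):
    """Mean difference in units of the developmental scale's norm-sample SD."""
    return (mean2 - mean1) / scale_sd


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Cohen's d using the pooled standard deviation of the two grade samples."""
    pooled = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))
    return (mean2 - mean1) / pooled


# Total-sample values from Table 2 (grade 6 n = 538, grade 7 n = 539, grade 8 n = 520).
shortcut_vocab_67 = scale_sd_difference(510.06, 545.44)                  # about 0.35
d_vocab_78 = cohens_d(545.44, 133.408, 539, 564.37, 108.887, 520)       # about 0.16
d_spell_67 = cohens_d(501.97, 94.211, 538, 509.06, 85.633, 539)         # about 0.08
```

The shortcut and the pooled-SD d diverge when a grade's observed SD differs from 100; for example, the grade 7 vocabulary SD (133.408) makes the pooled d for grades 6 and 7 smaller than the .35 shortcut.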

Table 2.

Descriptive Statistics by Grade and LEP Status

| Grade | Group | Measure | Minimum | Maximum | Mean | Std. Dev. |
|---|---|---|---|---|---|---|
| 6 | Total | Vocabulary | 250 | 779 | 510.06 | 103.886 |
| | | Spelling | 200 | 751 | 501.97 | 94.211 |
| | | Reading Comprehension | 128 | 500 | 309.69 | 51.406 |
| | Non-LEP | Vocabulary | 250 | 779 | 519.20 | 103.40 |
| | | Spelling | 200 | 751 | 504.41 | 94.44 |
| | | Reading Comprehension | 128 | 500 | 313.11 | 50.88 |
| | LEP | Vocabulary | 302 | 714 | 465.17 | 94.67 |
| | | Spelling | 252 | 702 | 489.97 | 92.66 |
| | | Reading Comprehension | 140 | 397 | 292.88 | 50.92 |
| 7 | Total | Vocabulary | 274 | 959 | 545.44 | 133.408 |
| | | Spelling | 270 | 769 | 509.06 | 85.633 |
| | | Reading Comprehension | 135 | 500 | 322.48 | 55.286 |
| | Non-LEP | Vocabulary | 274 | 959 | 552.76 | 136.90 |
| | | Spelling | 270 | 769 | 514.77 | 85.46 |
| | | Reading Comprehension | 135 | 500 | 324.88 | 56.56 |
| | LEP | Vocabulary | 358 | 785 | 498.69 | 96.84 |
| | | Spelling | 270 | 716 | 472.61 | 77.88 |
| | | Reading Comprehension | 153 | 428 | 307.19 | 43.67 |
| 8 | Total | Vocabulary | 297 | 885 | 564.37 | 108.887 |
| | | Spelling | 200 | 770 | 519.91 | 96.846 |
| | | Reading Comprehension | 100 | 500 | 308.91 | 51.710 |
| | Non-LEP | Vocabulary | 297 | 885 | 572.48 | 107.49 |
| | | Spelling | 200 | 770 | 524.66 | 93.79 |
| | | Reading Comprehension | 136 | 500 | 313.64 | 50.06 |
| | LEP | Vocabulary | 327 | 885 | 519.13 | 106.18 |
| | | Spelling | 200 | 685 | 493.38 | 109.27 |
| | | Reading Comprehension | 100 | 382 | 282.51 | 53.15 |
| 9 | Total | Vocabulary | 249 | 834 | 585.38 | 115.214 |
| | | Spelling | 200 | 777 | 532.06 | 83.836 |
| | | Reading Comprehension | 100 | 500 | 309.60 | 49.383 |
| | Non-LEP | Vocabulary | 262 | 834 | 594.57 | 110.29 |
| | | Spelling | 200 | 777 | 537.91 | 79.75 |
| | | Reading Comprehension | 100 | 500 | 313.08 | 48.58 |
| | LEP | Vocabulary | 249 | 834 | 531.15 | 128.60 |
| | | Spelling | 200 | 687 | 497.52 | 98.31 |
| | | Reading Comprehension | 135 | 404 | 289.01 | 49.32 |
| 10 | Total | Vocabulary | 340 | 881 | 626.26 | 104.411 |
| | | Spelling | 200 | 777 | 558.36 | 97.524 |
| | | Reading Comprehension | 100 | 500 | 310.37 | 56.882 |
| | Non-LEP | Vocabulary | 340 | 881 | 635.40 | 98.76 |
| | | Spelling | 200 | 777 | 564.96 | 95.10 |
| | | Reading Comprehension | 100 | 500 | 315.24 | 54.94 |
| | LEP | Vocabulary | 355 | 870 | 554.98 | 119.77 |
| | | Spelling | 267 | 777 | 506.90 | 101.66 |
| | | Reading Comprehension | 132 | 391 | 272.41 | 57.92 |

Note. LEP = served under the federal limited English proficient status (used as the identifier for English language learner in this study); Vocabulary = FAIR Vocabulary Knowledge Task; Spelling = FAIR Word Analysis Task; Reading Comprehension = FCAT Reading test.

Table 3.

Between-grade Effect Size (Cohen’s d) Differences for Vocabulary and Spelling

| Grade | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|
| 6 | - | 0.08 | 0.19 | 0.34 | 0.59 |
| 7 | 0.30 | - | 0.12 | 0.27 | 0.54 |
| 8 | 0.52 | 0.16 | - | 0.14 | 0.40 |
| 9 | 0.68 | 0.32 | 0.19 | - | 0.29 |
| 10 | 1.12 | 0.67 | 0.58 | 0.37 | - |

Note. Values above the diagonal are pairwise effect sizes for spelling; values below the diagonal are pairwise effect sizes for vocabulary.

Mean reading comprehension scores ranged from 308.91 in grade 8 to 322.48 in grade 7. As previously described, the reading comprehension score used in this study was not a developmental ability score like the vocabulary and spelling scores, but instead a grade-level standard score. The average reading comprehension scores observed in this sample approximated the mean state-level performance reported by the FDOE (2010) across the grades: grade 6 (sample = 310, state = 315), grade 7 (sample = 322, state = 322), grade 8 (sample = 309, state = 312), grade 9 (sample = 310, state = 317), grade 10 (sample = 310, state = 310).

Unique and Joint Effects on Reading Comprehension

Separate hierarchical regressions by grade for students who were and were not classified as LEP demonstrated that vocabulary was, with one exception, a stronger predictor of reading comprehension than spelling (Table 4). In grade 6, vocabulary uniquely explained 19% of the variance in comprehension for non-LEP students, compared with only 2% for spelling. Similarly, for LEP students, vocabulary uniquely explained 25% of the variance, compared with 2% for spelling. This pattern was replicated in grade 7 for both non-LEP (25% vocabulary vs. 4% spelling) and LEP students (22% vocabulary vs. 1% spelling), as well as for grade 8 non-LEP students (24% vocabulary vs. 3% spelling). The exception occurred for LEP students in grade 8, for whom spelling uniquely explained 16% of the variance and vocabulary only 4%. In grades 9 and 10, the two predictors explained roughly similar amounts of unique variance for non-LEP students (12% vocabulary vs. 8% spelling in grade 9; 11% vocabulary vs. 9% spelling in grade 10), with larger discrepancies for LEP students in grades 9 (31% vocabulary vs. 3% spelling) and 10 (19% vocabulary vs. 3% spelling).

Table 4.

Results of Hierarchical Regressions Using Reading Comprehension as the Dependent Variable

| Grade | Group | Model | Predictor | B | SE B | Beta | t | R2 | Unique | Common |
|---|---|---|---|---|---|---|---|---|---|---|
| 6 | Non-LEP | 1 | Spelling | .27 | .02 | .50 | 12.21* | .25 | | |
| | | | Vocabulary | .27 | .02 | .54 | 12.38* | .44 | .19 | |
| | | 2 | Vocabulary | .32 | .02 | .65 | 18.03* | .42 | | |
| | | | Spelling | .10 | .02 | .18 | 4.09* | .44 | .02 | .23 |
| | LEP | 1 | Spelling | .28 | .05 | .51 | 5.59* | .26 | | |
| | | | Vocabulary | .32 | .05 | .60 | 6.75* | .51 | .25 | |
| | | 2 | Vocabulary | .38 | .04 | .70 | 9.27* | .49 | | |
| | | | Spelling | .10 | .05 | .18 | 1.96 | .51 | .02 | .24 |
| 7 | Non-LEP | 1 | Spelling | .34 | .03 | .52 | 12.99* | .27 | | |
| | | | Vocabulary | .24 | .02 | .57 | 15.56* | .52 | .25 | |
| | | 2 | Vocabulary | .28 | .01 | .69 | 20.51* | .48 | | |
| | | | Spelling | .16 | .02 | .24 | 6.43* | .52 | .04 | .23 |
| | LEP | 1 | Spelling | .24 | .06 | .43 | 3.98* | .18 | | |
| | | | Vocabulary | .25 | .05 | .56 | 5.09* | .40 | .22 | |
| | | 2 | Vocabulary | .28 | .04 | .63 | 6.76* | .39 | | |
| | | | Spelling | .07 | .06 | .12 | 1.14 | .40 | .01 | .17 |
| 8 | Non-LEP | 1 | Spelling | .25 | .02 | .47 | 11.29* | .23 | | |
| | | | Vocabulary | .26 | .02 | .56 | 13.92* | .46 | .24 | |
| | | 2 | Vocabulary | .31 | .02 | .66 | 18.42* | .44 | | |
| | | | Spelling | .10 | .02 | .19 | 4.69* | .46 | .03 | .19 |
| | LEP | 1 | Spelling | .32 | .04 | .66 | 7.63* | .43 | | |
| | | | Vocabulary | .12 | .05 | .25 | 2.35* | .47 | .04 | |
| | | 2 | Vocabulary | .28 | .05 | .56 | 5.93* | .31 | | |
| | | | Spelling | .24 | .05 | .50 | 4.72* | .47 | .16 | .27 |
| 9 | Non-LEP | 1 | Spelling | .33 | .02 | .53 | 15.42* | .29 | | |
| | | | Vocabulary | .18 | .02 | .40 | 11.06* | .41 | .12 | |
| | | 2 | Vocabulary | .25 | .01 | .57 | 16.97* | .33 | | |
| | | | Spelling | .20 | .02 | .33 | 9.03* | .41 | .08 | .21 |
| | LEP | 1 | Spelling | .27 | .04 | .54 | 6.35* | .29 | | |
| | | | Vocabulary | .25 | .03 | .66 | 8.82* | .60 | .31 | |
| | | 2 | Vocabulary | .29 | .03 | .76 | 11.58* | .58 | | |
| | | | Spelling | .10 | .04 | .20 | 2.65* | .60 | .03 | .26 |
| 10 | Non-LEP | 1 | Spelling | .29 | .02 | .50 | 12.33* | .25 | | |
| | | | Vocabulary | .21 | .02 | .37 | 8.99* | .36 | .11 | |
| | | 2 | Vocabulary | .29 | .02 | .52 | 13.01* | .27 | | |
| | | | Spelling | .19 | .02 | .34 | 8.13* | .36 | .09 | .16 |
| | LEP | 1 | Spelling | .33 | .06 | .59 | 5.45* | .34 | | |
| | | | Vocabulary | .28 | .06 | .58 | 4.85* | .54 | .19 | |
| | | 2 | Vocabulary | .35 | .04 | .71 | 7.72* | .51 | | |
| | | | Spelling | .12 | .07 | .21 | 1.76 | .54 | .03 | .32 |

Note. LEP = served under the federal limited English proficient status (used as the identifier for English language learner in this study); Vocabulary = FAIR Vocabulary Knowledge Task; Spelling = FAIR Word Analysis Task; Unique = unique variance of each predictor; Common = common variance shared by the predictors.

*p < .05

Table 4 also reports the unique effects of vocabulary and spelling, as well as their common effect, for each subgroup by grade. For non-LEP students in grade 6, the unique contributions of the two predictors summed to less variance (19% + 2% = 21%) than their common contribution (23%); for LEP students, however, the unique variance (27%) exceeded the common variance (24%). In grade 7, unique variance exceeded common variance for both non-LEP (29% unique vs. 23% common) and LEP students (23% unique vs. 17% common). Although unique variance exceeded common variance for non-LEP students in grade 8 (27% unique vs. 19% common), the reverse was found for LEP students (20% unique vs. 27% common). This pattern was replicated in grade 10 (20% unique vs. 16% common, non-LEP; 22% unique vs. 32% common, LEP). In grade 9, unique and common variance explained comprehension to approximately the same degree for non-LEP students (20% unique vs. 21% common), whereas for LEP students the unique effects (34%) were larger than the common effect (26%).
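The Unique and Common columns in Table 4 follow from a two-predictor commonality decomposition of the R2 values from the two entry orders: each predictor's unique variance is the full-model R2 minus the R2 of the model containing only the other predictor, and the common variance is what remains. A minimal sketch, with a hypothetical function name, using the rounded R2 values reported in Table 4:

```python
def commonality_two_predictors(r2_full, r2_vocab_only, r2_spelling_only):
    """Split the full-model R2 into unique and common parts for two predictors."""
    unique_vocab = r2_full - r2_spelling_only  # gain from entering vocabulary last
    unique_spelling = r2_full - r2_vocab_only  # gain from entering spelling last
    common = r2_full - unique_vocab - unique_spelling  # variance shared by both
    return unique_vocab, unique_spelling, common


# Grade 6 non-LEP: full R2 = .44, vocabulary alone = .42, spelling alone = .25.
print([round(v, 2) for v in commonality_two_predictors(0.44, 0.42, 0.25)])
# Grade 8 LEP: full R2 = .47, vocabulary alone = .31, spelling alone = .43.
print([round(v, 2) for v in commonality_two_predictors(0.47, 0.31, 0.43)])
```

Because the inputs are rounded to two decimals, a few recomputed values can differ from Table 4 by .01 (e.g., grade 10 LEP).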

Multiple Group Mediation

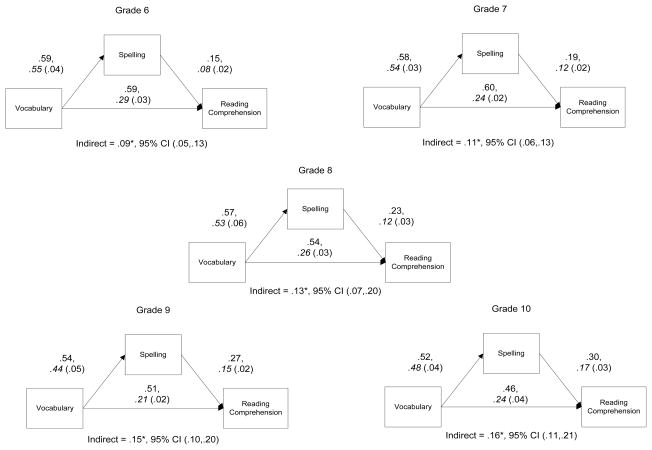

The previous analyses showed that spelling and vocabulary are both unique and joint determinants of reading comprehension ability across the grades. Beyond a direct-effects model of predicting comprehension, we were further interested in testing the extent to which spelling mediated the relation between vocabulary and comprehension, and whether such effects were invariant across grades 6–10. Typical multiple group specification involves testing a model in which the direct and indirect effects (as well as other parameters) are assumed to be the same across the groups (i.e., fully constrained). Based on the findings from the first research question, we hypothesized that the grades would vary in the magnitude of the direct effects of spelling and vocabulary on comprehension as well as in the indirect effect. The fully constrained model yielded an Akaike Information Criterion (AIC) value of 61402, a sample-size-adjusted Bayesian Information Criterion (BIC) of 61421, and a log-likelihood value of −30694 with 7 free parameters. After the direct and indirect effect constraints were freed, the preferred model was one that freely estimated the parameters at each grade level (Figure 3). This model yielded an AIC value of 61034, a sample-size-adjusted BIC of 61131, and a log-likelihood value of −30482 with 35 free parameters.

Figure 3.

Multiple group mediation model for grades 6–10. The first parameter value for each pathway is the standardized coefficient; the raw coefficient is italicized; the cluster-corrected standard error is in parentheses.

Taking the difference between these two models on each fit index yielded an absolute difference of 368 on the AIC and 290 on the sample-size-adjusted BIC. Raftery (1995) characterized BIC differences greater than 10 between two models as very strong evidence in favor of the model with the lower BIC. Furthermore, the likelihood ratio test was consistent with the BIC comparison (Δ−2LL = 424, Δdf = 28, p < .001). Accordingly, we used the unconstrained model to report the direct and indirect effects across the grades, as the findings suggest that the path coefficients differed significantly across grades.
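The model comparison can be recomputed from the reported fit values. The sketch assumes the conventional negative sign for the log-likelihoods and infers the free-parameter counts from AIC = −2LL + 2k: the reported AIC values of 61402 and 61034 imply k = 7 for the fully constrained model and k = 35 for the freely estimated model (5 grades × 7 parameters). Under these assumptions the likelihood-ratio statistic is twice the log-likelihood difference. The chi-square tail function uses the closed form for an even number of degrees of freedom, which covers Δdf = 28; the function names are illustrative.

```python
import math


def aic(log_likelihood, n_params):
    """Akaike Information Criterion: -2LL + 2k."""
    return -2.0 * log_likelihood + 2.0 * n_params


def chi2_sf_even_df(x, df):
    """Upper-tail chi-square probability; this closed form is exact only for even df."""
    if df <= 0 or df % 2:
        raise ValueError("df must be a positive even integer")
    half = x / 2.0
    term = 1.0
    total = 1.0
    # P(X > x) = exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


# Assumed signs and parameter counts (see lead-in).
ll_constrained, k_constrained = -30694.0, 7
ll_free, k_free = -30482.0, 35

lr_stat = -2.0 * (ll_constrained - ll_free)  # likelihood-ratio chi-square
delta_df = k_free - k_constrained
p = chi2_sf_even_df(lr_stat, delta_df)
print(aic(ll_constrained, k_constrained), aic(ll_free, k_free), lr_stat, delta_df, p < 0.001)
```

The two recomputed AIC values match those reported for the constrained and unconstrained models, which is what motivates the assumed parameter counts.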

Following the steps outlined by Baron and Kenny (1986), steps 1 and 2 were carried out by specifying the model and constraining the path not under test to zero (e.g., the relation between vocabulary and spelling in step 1 or the relation between vocabulary and reading comprehension in step 2). The final step freed all paths to be estimated; the standardized results are reported in Figure 3. At every grade level, the direct effect of vocabulary on reading comprehension was nonzero, indicating that mediation was not complete and that spelling partially mediated the vocabulary-comprehension relation. The path analysis shows that the partial direct effect of vocabulary on comprehension was stronger in grades 6 and 7 (.59 and .60, respectively) than in grades 8–10 (.54 in grade 8, .51 in grade 9, and .46 in grade 10). Conversely, the partial direct effect of spelling on comprehension was significantly larger at successively higher grade levels. The indirect effect was estimated at .09 in grade 6, compared with .11 in grade 7, .13 in grade 8, .15 in grade 9, and .16 in grade 10. Although the indirect effect was small at every grade, its magnitude differed significantly across grades 6 to 10.
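In a single-mediator path model, the indirect effect is the product of the vocabulary-to-spelling path (a) and the spelling-to-comprehension path controlling for vocabulary (b), and the total effect is the direct effect plus the indirect effect. The grade-specific a and b estimates appear only in Figure 3, so the sketch below uses hypothetical a and b values to show the product, then combines the standardized direct and indirect effects reported above to express the share of the total vocabulary effect carried through spelling. Treating total = direct + indirect on the standardized metric is an assumption of this simple single-mediator model; the function names are illustrative.

```python
def indirect_effect(a, b):
    """Product-of-coefficients indirect effect of X on Y through mediator M."""
    return a * b


def decompose(direct, indirect):
    """Return the total effect and the proportion of it carried by the indirect path."""
    total = direct + indirect
    return total, indirect / total


# Hypothetical standardized a and b paths, for illustration only.
print(round(indirect_effect(0.50, 0.20), 2))

# Standardized direct and indirect effects of vocabulary reported in the text.
direct = {6: 0.59, 7: 0.60, 8: 0.54, 9: 0.51, 10: 0.46}
indirect = {6: 0.09, 7: 0.11, 8: 0.13, 9: 0.15, 10: 0.16}

for grade in sorted(direct):
    total, share = decompose(direct[grade], indirect[grade])
    print(f"grade {grade}: total = {total:.2f}, proportion mediated = {share:.2f}")
```

Under these assumptions, the proportion of the vocabulary effect carried through spelling rises from about .13 in grade 6 to about .26 in grade 10.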

Addressing the latter half of our second research question involved a test of multiple group moderated mediation to study whether the indirect effect was moderated by language status. Across the grades, no significant differences were estimated in the magnitude of the indirect effect between language groups: grade 6 (Wald = 0.07, p = .791), grade 7 (Wald = 0.11, p = .741), grade 8 (Wald = 1.66, p = .200), grade 9 (Wald = 0.03, p = .874), and grade 10 (Wald = 1.68, p = .195).
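For a single 1-df contrast, the Wald statistic is referred to a chi-square distribution with one degree of freedom, whose upper tail equals erfc(sqrt(W/2)). The sketch below converts the reported Wald values to p-values; differences of about .01 or less from the reported p-values reflect rounding of the Wald statistics. It also shows a simplified Wald statistic for the difference between two indirect-effect estimates, assuming the estimates are independent; the authors' model-based test may also account for their covariance, and the estimates in the example are hypothetical.

```python
import math


def wald_p_value_df1(wald):
    """Upper-tail p-value for a 1-df Wald chi-square: P(chi2_1 > W) = erfc(sqrt(W / 2))."""
    return math.erfc(math.sqrt(wald / 2.0))


def wald_difference(est_a, se_a, est_b, se_b):
    """Wald statistic for H0: est_a == est_b, assuming the two estimates are independent."""
    return (est_a - est_b) ** 2 / (se_a ** 2 + se_b ** 2)


# Wald statistics reported for the language-group comparison in each grade.
reported = {6: 0.07, 7: 0.11, 8: 1.66, 9: 0.03, 10: 1.68}
for grade, w in reported.items():
    print(grade, round(wald_p_value_df1(w), 3))

# Hypothetical indirect effects and standard errors for two groups.
w = wald_difference(0.10, 0.02, 0.08, 0.02)
print(round(w, 2), round(wald_p_value_df1(w), 3))
```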

Discussion

This study explored the contributions of vocabulary and spelling to reading comprehension among native English-speaking students and ELLs in grades 6–10. Vocabulary was measured by a sentence completion task that tapped knowledge of morphologically complex words as well as semantic and syntactic knowledge. Spelling was measured by a dictation task that required the integration of phonological, orthographic, and morphological knowledge of English words.

Consistent with previous research conducted with younger children through grade 6 (Berninger et al., 2010), the larger sample of adolescents included here demonstrated higher vertically scaled developmental ability scores in both word meaning and productive spelling skills at each successive grade from 6 to 10. This pattern was observed even though students’ reading comprehension scores remained centered on the average performance for each grade level, which seems to support Perfetti and Hart’s (2002) assertion that the quality of lexical knowledge lies along a continuum rather than forming a binary distinction in an individual student’s ability. That is, it is not that the students in grade 6 had poor lexical knowledge and the students in grade 10 had good lexical knowledge. Rather, any improvements in overall comprehension from grade to grade were likely accompanied by a gradual refinement in vocabulary and spelling abilities, such that the older students had more high-quality word representations upon which to draw.

As has been documented in previous studies examining the contributions of spelling and vocabulary to the reading comprehension of native English-speaking students ages 8–13 (Nunes et al., 2012) and adults (Landi, 2010), vocabulary knowledge emerged as particularly important to students’ reading development. It not only accounted for greater between-grade differences than spelling did, but also made a larger unique contribution to reading comprehension for both ELLs and native English-speaking students at all grade levels (6–10), with the exception of ELLs in grade 8. The Vocabulary Knowledge Task included mostly derivational forms of words, which are prevalent in middle and high school textbooks (Nippold & Sun, 2008) and are considered key to vocabulary growth above grade 4 (Anglin, Miller, & Wakefield, 1993). Hence, the larger contribution of vocabulary relative to spelling among the adolescents in our study was likely due to their improved understanding of the kinds of words, and the morphological structure of words, necessary for comprehending upper grade-level text. The task used to measure students’ vocabulary also targeted deeper lexical knowledge than do tests of vocabulary breadth.

Derivational knowledge also supports orthographic development (Adams, 1990; Seymour, 1997). In the later stages of spelling development, students achieve derivational constancy, or the ability to spell morphologically complex words (Frith, 1985). As might be expected, then, findings from the present study revealed that morphologically complex vocabulary shared variance in comprehension with spelling (i.e., the common contribution) and had an indirect impact on reading comprehension through spelling. Although spelling scores showed less separation between successive grade levels than vocabulary scores did, spelling scores were nonetheless higher at each successive grade level. Notably, the mean difference between students in grades 9 and 10 (0.26 SD) was double the mean difference between students in grades 8 and 9 (0.13 SD). Among the high school non-ELL participants, the unique variance in comprehension accounted for by spelling approached that accounted for by vocabulary.

We note that the cross-sectional nature of the data does not permit conclusions about students’ growth from year to year. Nonetheless, the spelling and vocabulary performance of the older students in our sample suggests that greater spelling ability and vocabulary knowledge may have enabled students to retrieve words’ forms and meanings synchronously in support of comprehension. However, the tenth-grade ELLs, who likely had less accumulated experience with printed English, still showed large discrepancies between the unique variance in comprehension explained by vocabulary and by spelling (19% vs. 3%). This seems consistent with Florit and Cain’s (2011) meta-analytic finding that the strength of the relationships among the reading constructs in the SV varies by language background and overall reading experience.

Further support for the importance of integrated rather than independent word knowledge comes from the results of the mediation model. The direct effect of vocabulary on comprehension was strong but generally smaller at higher grade levels, whereas the indirect effect through spelling was larger at each successive grade level. It could be that, when students read upper grade-level text, knowledge of word meanings alone becomes less predictive of comprehension than word knowledge applied in the production of complex spellings. The latter is arguably the ultimate test of a student’s lexical knowledge because every letter must be correctly represented for a spelling to count as correct. Therefore, orthographic representations may help ensure access to word meanings and, hence, text understanding.

The lack of significant differences between language groups in the magnitude of indirect effects suggests that, with higher grade-level text, reading comprehension in a first or second language involves more synchronous retrieval of lexical information (Perfetti, 2007). Including spelling as a mediator across grades 6–10 extends previous findings that the ability of fourth and fifth grade ELLs to decompose derived words was a significant and strengthening predictor of reading comprehension (Kieffer & Lesaux, 2008).

Theoretical Implications

This study differed from other explorations of the SV (e.g., Braze et al., 2007; Farnia & Geva, 2013; Sabatini et al., 2010; Tunmer & Chapman, 2012; Wagner et al., 2015) in that it employed more complex measures of vocabulary and spelling as proxies for the language comprehension and decoding components. Similar to findings for the oral vocabularies of young adults (Braze et al., 2007), the print-based vocabulary knowledge task demonstrated the stronger relationship to reading comprehension in grades 6–10. However, spelling mediated this relationship, thus offering another perspective on the alternative view of the SV in which language comprehension has a direct effect on decoding, rather than the two components only making independent contributions to reading comprehension (Tunmer & Chapman, 2012; Wagner et al., 2015). In addition, the results presented here demonstrated that the partial direct effect of spelling on reading comprehension was successively higher across the grade levels. This contrasts with other studies of the SV that have found the decoding-reading comprehension relationship diminishes with age when decoding is measured with pseudoword and sight-word reading (e.g., Garcia & Cain, 2014). Taken together, these findings indicate that interpretations of the SV may depend, as Florit and Cain (2011) suggested, not only on who is being assessed (by age and language background) and how their reading skills are being measured (orally or in print), but also on the ways in which the constructs are modeled (with independent effects on comprehension or in a mediation model).

Practical Implications

Results of this study suggest that the middle and high school years may be important periods for continuing the growth of students’ vocabulary and orthographic knowledge. Although few would likely disagree with offering adolescents vocabulary instruction (see, for example, the meta-analysis by Elleman, Lindo, Morphy, & Compton, 2009), there has been less research on the effects of spelling instruction for older students. Spelling instruction has reportedly received little attention at any grade level (Cooke, Slee, & Young, 2008), but particularly in grades 8–12 (Authors, 2010). Hence, the extant literature does not indicate whether the amount of variance in reading comprehension accounted for by spelling might be altered by more active efforts to improve students’ orthographic knowledge. The later stage of spelling development involves morphologically complex words, so instruction could readily emphasize both the form and the meaning constituents of words. In fact, Berninger and colleagues (2010) found that continued growth in morphological awareness depended on knowledge of the word formation process and not just on vocabulary. The results of the mediation model in the present study also suggest that spelling may be necessary to capitalize on vocabulary knowledge when reading higher grade-level text.

It should be noted that ELLs in this study demonstrated associations among vocabulary, spelling, and reading comprehension similar to those of their native English-speaking peers. Therefore, the instructional implications apply to adolescents regardless of their language backgrounds. In fact, ELLs stand to benefit even more because, although they follow a developmental path in acquiring morphological and orthographic knowledge similar to that of native English speakers, they are not on pace chronologically (Bebout, 1985; Cook, 1997). This likely means ELLs will need instruction appropriate to earlier stages of spelling and vocabulary development, such as inflectional endings, before they can take advantage of the instruction in derivational suffixes afforded their non-ELL peers. Targeted and systematic instruction in these skills may be a critical element of remediating the elevated risk ELLs display for reading comprehension problems (NCES, 2009, 2010).

Limitations and Directions for Future Research

Using extant data obtained from the Florida assessment system facilitated the study of a large sample, but it also presented two distinct limitations. First, it precluded the administration of multiple tasks for each construct, which would have allowed conclusions beyond the level of correlations between specific tests. In particular, we did not have data on students’ morphological awareness or a normative measure of their vocabulary breadth. Future research might examine the extent to which vocabulary breadth mediates the relation between morphological awareness and reading comprehension. The second limitation imposed by the use of an archival database was that information on the home language and proficiency levels of the ELLs was not available. The differences between LEP and non-LEP students in the percentages of unique and joint variance accounted for by form and meaning suggest that future research might explore the dimensionality of the vocabulary and spelling constructs across and within the populations.

Studies of vocabulary intervention that examined learner characteristics have associated better outcomes with ELLs (Carlo et al., 2004; Lesaux et al., 2010). The present analysis revealed no significant differences between native English-speaking students and ELLs in the magnitude of the indirect effect of vocabulary on comprehension (as mediated by spelling ability), but it did reveal different patterns in the unique and joint effects of the lexical skills on reading comprehension. Native English speakers were more likely to have had the number and range of exposures to printed English that would enable the development of greater lexical expertise (Andrews & Bond, 2009). However, examining student performance by prior reading comprehension ability would further strengthen interpretations about differences in lexical knowledge by reader characteristics. Such subgroup analyses were not possible because dividing the ELLs into high versus low prior comprehension performance would have produced small sample sizes.

Although the results of this study indicate that integrated knowledge of word form and meaning may play a role in more advanced reading, it is still not clear how this integration can be facilitated and with what relative dosage of spelling and vocabulary instruction. Given the reported inattention to teaching middle and high school students the meaningful units of words (Kieffer & Lesaux, 2008) and their spelling (Authors, 2010), it may be that students’ development is happening somewhat intuitively, perhaps out of necessity for coping with advanced reading demands. Therefore, it remains to be seen what the contributions of vocabulary and spelling ability to comprehension would be under more purposeful guidance.

Acknowledgments

This research was supported by Grant P50HD052120 from the National Institute of Child Health and Human Development, Grant R305A100301 from the Institute of Education Sciences in the U.S. Department of Education, and Grant R305F100005 from the Institute of Education Sciences.

Footnotes

Students are identified as LEP or ELL in a two-step process. First, a caretaker must report on the school’s home language survey that a language other than English is spoken in the home. Second, the student’s listening, speaking, reading, and writing proficiency (as assessed by a validated measure such as the CELLA) must be below the average English proficiency level of English speaking students at the same age and grade. In Florida, these steps are governed by Rules 6A-6.0901 and 6A-6.0902.

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute of Child Health and Human Development, the National Institutes of Health, or the Institute of Education Sciences.

Contributor Information

Deborah K. Reed, University of Iowa, Iowa Reading Research Center

Yaacov Petscher, Florida State University, Florida Center for Reading Research

Barbara R. Foorman, Florida State University, Florida Center for Reading Research

References

- Authors. (2005).

- Authors. (2009).

- Authors. (2010).

- Authors. (2012).

- Authors. (2014).

- Adams MJ. Beginning to read: Thinking and learning about print. Cambridge, MA: MIT Press; 1990.

- Andrews S, Bond R. Lexical expertise and reading skill: Bottom-up and top-down processing of lexical ambiguity. Reading and Writing. 2009;22:687–711. doi: 10.1007/s11145-008-9137-7.

- Andrews S, Lo S. Not all skilled readers have cracked the code: Individual differences in masked form priming. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2012;38:152–163. doi: 10.1037/a0024953.

- Anglin JM, Miller GA, Wakefield PC. Vocabulary development: A morphological analysis. Monographs of the Society for Research in Child Development. 1993;58(10) i+iii+v-vi+1–186.

- Asparouhov T. General multi-level modeling with sampling weights. Communications in Statistics: Theory and Methods. 2006;35:439–460. doi: 10.1080/03610920500476598.

- August D, Carlo M, Dressler C, Snow C. The critical role of vocabulary development for English language learners. Learning Disabilities Research and Practice. 2005;20:50–57. doi: 10.1111/j.1540-5826.2005.00120.x.

- Baron RM, Kenny DA. The moderator-mediator variable distinction in social psychological research: Conceptual, strategic and statistical considerations. Journal of Personality and Social Psychology. 1986;51:1173–1182. doi: 10.1037/0022-3514.51.6.1173.

- Bebout L. An error analysis of misspellings made by learners of English as a first and as a second language. Journal of Psycholinguistic Research. 1985;14:569–593. doi: 10.1007/BF01067386.

- Benjamini Y, Hochberg Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society, Series B (Methodological). 1995;57:289–300. doi: 10.2307/2346101.

- Berninger VW, Abbott RD. Listening comprehension, oral expression, reading comprehension, and written expression: Related yet unique language systems in grades 1, 3, 5, and 7. Journal of Educational Psychology. 2010;102:635–651. doi: 10.1037/a0019319.

- Berninger VW, Abbott RD, Nagy W, Carlisle J. Growth in phonological, orthographic, and morphological awareness in grades 1 to 6. Journal of Psycholinguistic Research. 2010;39:141–163. doi: 10.1007/s10936-009-9130-6.

- Bowers PN, Kirby JR, Deacon SH. The effects of morphological instruction on literacy skills: A systematic review of the literature. Review of Educational Research. 2010;80:144–179. doi: 10.3102/0034654309359353.

- Braze D, Tabor W, Shankweiler DP, Mencl WE. Speaking up for vocabulary: Reading skill differences in young adults. Journal of Learning Disabilities. 2007;40:226–243. doi: 10.1177/00222194070400030401.

- Bruck M. Word recognition skills of adults with childhood diagnoses of dyslexia. Developmental Psychology. 1990;26:439–454. doi: 10.1037/0012-1649.26.3.439.

- Carlo MS, August D, McLaughlin B, Snow CE, Dressler C, Lippman DN, Lively TJ, White CE. Closing the gap: Addressing the vocabulary needs of English-language learners in bilingual and mainstream classrooms. Reading Research Quarterly. 2004;39:188–215. doi: 10.1598/RRQ.39.2.3.

- Castles A, Davis C, Cavalot P, Forster K. Tracking the acquisition of orthographic skills in developing readers: Masked priming effects. Journal of Experimental Child Psychology. 2007;97:165–182. doi: 10.1016/j.jecp.2007.01.006.

- Catts HW, Adlof SM, Weismer SE. Language deficits in poor comprehenders: A case for the simple view of reading. Journal of Speech, Language, and Hearing Research. 2006;49:278–293. doi: 10.1044/1092-4388(2006/023).

- Chen R, Vellutino FR. Prediction of reading ability: A cross-validation study of the simple view of reading. Journal of Literacy Research. 1997;29:1–24. doi: 10.1080/10862969709547947. [DOI] [Google Scholar]

- Cook VJ. L2 users and English spelling. Journal of Multilingual and Multicultural Development. 1997;18:474–488. doi: 10.1080/01434639708666335. [DOI] [Google Scholar]

- Cooke NL, Slee JM, Young CA. How is contextualized spelling used to support reading in first-grade core reading programs? Reading Improvement. 2008;45(1):26–45. doi: 10.2307/23014367.

- Coxhead A. A new academic word list. TESOL Quarterly. 2000;34:213–238. doi: 10.2307/3587951.

- Cromley JG, Azevedo R. Testing and refining the direct and inferential mediation model of reading comprehension. Journal of Educational Psychology. 2007;99(2):311–325. doi: 10.1037/0022-0663.99.2.311.

- Dale E, O’Rourke J. Living word vocabulary. Chicago, IL: World Book/Childcraft International; 1981.

- Dunn LM, Dunn DM. Peabody Picture Vocabulary Test. 4th ed. Bloomington, MN: NCS Pearson, Inc; 2007.

- Educational Testing Service. Comprehensive English Language Learning Assessment (CELLA): Technical report. Princeton, NJ: Author; 2005.

- Ehri LC. Learning to read and learning to spell: Two sides of a coin. Topics in Language Disorders. 2000;20(3):19–36. doi: 10.1097/00011363-200020030-00005.

- Elleman A, Lindo E, Morphy P, Compton D. The impact of vocabulary instruction on passage-level comprehension of school-age children: A meta-analysis. Journal of Research on Educational Effectiveness. 2009;2:1–44. doi: 10.1080/19345740802539200.

- Farnia F, Geva E. Growth and predictors of change in English language learners’ reading comprehension. Journal of Research in Reading. 2013;36:389–421. doi: 10.1111/jrir.12003.

- Florida Department of Education. FCAT handbook: A resource for educators. Tallahassee, FL: Author; 2001.

- Florida Department of Education. FCAT briefing book. Tallahassee, FL: Author; 2005.

- Florida Department of Education. Florida Assessments for Instruction in Reading (FAIR). Tallahassee, FL: Author; 2009.

- Florida Department of Education. Reading scores statewide comparison for 2001 to 2010. 2010 [Data file]. Retrieved from http://fcat.fldoe.org/mediapacket/2010/pdf/2010ReadingComparison.pdf.

- Florit E, Cain K. The simple view of reading: Is it valid for different types of alphabetic orthographies? Educational Psychology Review. 2011;23:553–576. doi: 10.1007/s10648-011-9175-6.

- Frith U. Beneath the surface of surface dyslexia. In: Patterson KE, Marshall JC, Coltheart M, editors. Surface dyslexia: Neuropsychological and cognitive studies of phonological reading. London: Routledge and Kegan Paul; 1985. pp. 301–330.

- Garcia JR, Cain K. Decoding and reading comprehension: A meta-analysis to identify which reader and assessment characteristics influence the strength of the relationship in English. Review of Educational Research. 2014;84(1):74–111. doi: 10.3102/0034654313499616.

- Gough PB, Tunmer WE. Decoding, reading, and reading disability. Remedial and Special Education. 1986;7:6–10. doi: 10.1177/074193258600700104.

- Harm M, Seidenberg M. Phonology, reading acquisition, and dyslexia: Insights from connectionist models. Psychological Review. 1999;106:491–528. doi: 10.1037/0033-295X.106.3.491.

- Hauerwas LB, Walker J. Spelling of inflected verb morphology in children with spelling deficits. Learning Disabilities Research & Practice. 2003;18:25–35. doi: 10.1111/1540-5826.00055.

- Hoff E. How social contexts support and shape language development. Developmental Review. 2006;26:55–88. doi: 10.1016/j.dr.2005.11.002.

- Hoover WA, Gough PB. The simple view of reading. Reading and Writing: An Interdisciplinary Journal. 1990;2:127–160. doi: 10.1007/BF00401799.

- Keenan JM, Betjemann RS. Comprehension of single words: The role of semantics in word identification and reading disability. In: Grigorenko E, Naples A, editors. Single-word reading: Behavioral and biological perspectives. Mahwah, NJ: Lawrence Erlbaum Associates, Inc; 2007.

- Kieffer MJ, Lesaux NK. The role of derivational morphology in the reading comprehension of Spanish-speaking English language learners. Reading and Writing. 2008;21:783–804. doi: 10.1007/s11145-007-9092-8.

- Landi N. An examination of the relationship between reading comprehension, higher-level and lower-level reading sub-skills in adults. Reading and Writing. 2010;23:701–717. doi: 10.1007/s11145-009-9180-z.

- Laufer B. Vocabulary acquisition in a second language: Do learners really acquire most vocabulary by reading? Some empirical evidence. Canadian Modern Language Review. 2001;59:567–587. doi: 10.3138/cmlr.59.4.567.

- Laufer B, Yano Y. Understanding unfamiliar words in a text: Do L2 learners understand how much they don’t understand? Reading in a Foreign Language. 2001;13:549–566.

- Leach J, Scarborough H, Rescorla L. Late-emerging reading disabilities. Journal of Educational Psychology. 2003;95:211–224. doi: 10.1037/0022-0663.95.2.211.

- Lesaux NK, Kieffer MJ, Faller SE, Kelley JG. The effectiveness and ease of implementation of an academic vocabulary intervention for linguistically diverse students in urban middle schools. Reading Research Quarterly. 2010;45:196–228. doi: 10.1598/RRQ.45.2.3.

- Lipka O, Lesaux NK, Siegel LS. Retrospective analysis of the reading development of grade 4 students with reading disabilities: Risk status and profile over 5 years. Journal of Learning Disabilities. 2006;39:364–378. doi: 10.1177/00222194060390040901.

- MacKinnon DP, Lockwood CM, Williams J. Confidence limits for the indirect effect: Distribution of the product and resampling methods. Multivariate Behavioral Research. 2004;39:99–128. doi: 10.1207/s15327906mbr3901_4.

- Mancilla-Martinez J, Kieffer MJ, Biancarosa G, Christodoulou JA, Snow CE. Investigating English reading comprehension growth in adolescent language minority learners: Some insights from the simple view. Reading and Writing: An Interdisciplinary Journal. 2011;24:339–354. doi: 10.1007/s11145-009-9215-5.

- Manis FR, Lindsey KA, Bailey CE. Development of reading in grades K–2 in Spanish-speaking English-language learners’ word decoding and reading comprehension. Reading and Writing. 2004;20:691–719.

- Muthén LK, Muthén BO. Mplus user’s guide. 6th ed. Los Angeles, CA: Muthén & Muthén; 1998–2010.

- Nagy W, Berninger VW, Abbott RD. Contributions of morphology beyond phonology to literacy outcomes of upper elementary and middle-school students. Journal of Educational Psychology. 2006;98:134–147. doi: 10.1037/0022-0663.98.1.134.

- Nation ISP. Learning vocabulary in another language. Cambridge, England: Cambridge University Press; 2001.

- Nation K. Form-meaning links in the development of visual word recognition. Philosophical Transactions of the Royal Society of London, Series B: Biological Sciences. 2009;364:3665–3674. doi: 10.1098/rstb.2009.0119.

- National Center for Education Statistics (NCES). The nation’s report card: A first look: 2013 mathematics and reading. Washington, DC: National Center for Education Statistics, Institute of Education Sciences, U.S. Department of Education; 2013.

- Nippold MA, Sun L. Knowledge of morphologically complex words: A developmental study of older children and young adolescents. Language, Speech, and Hearing Services in Schools. 2008;39:365–373. doi: 10.1044/0161-1461(2008/034).

- Nunes T, Bryant P, Barros R. The development of word recognition and its significance for comprehension and fluency. Journal of Educational Psychology. 2012;104:959–973. doi: 10.1037/a0027412.

- Perfetti CA. Reading ability: Lexical quality to comprehension. Scientific Studies of Reading. 2007;11:357–383. doi: 10.1080/10888430701530730.

- Perfetti CA, Hart L. The lexical quality hypothesis. In: Verhoeven L, Elbro C, Reitsma P, editors. Precursors of functional literacy. Philadelphia, PA: John Benjamins Publishing Company; 2002. pp. 189–213.

- Preacher KJ, Rucker DD, Hayes AF. Assessing moderated mediation hypotheses: Theory, methods, and prescriptions. Multivariate Behavioral Research. 2007;42:185–227. doi: 10.1080/00273170701341316.

- Proctor CP, Carlo M, August D, Snow C. Native Spanish-speaking children reading in English: Toward a model of comprehension. Journal of Educational Psychology. 2005;97:246–256. doi: 10.1037/0022-0663.97.2.246.

- Raftery AE. Bayesian model selection in social research (with discussion). Sociological Methodology. 1995;25:111–196.

- Ricketts J, Bishop DVM, Nation K. Orthographic facilitation in oral vocabulary acquisition. Quarterly Journal of Experimental Psychology. 2009;62:1948–1966. doi: 10.1080/17470210802696104.

- Robbins KP, Hosp JL, Hosp MK, Flynn LJ. Assessing specific grapho-phonemic skills in elementary students. Assessment for Effective Intervention. 2010;36:21–34. doi: 10.1177/1534508410379845.

- Rosenthal J, Ehri L. The mnemonic value of orthography for vocabulary learning. Journal of Educational Psychology. 2008;100:175–191. doi: 10.1037/0022-0663.100.1.175.

- Sabatini JP, Sawaki Y, Shore JR, Scarborough HS. Relationships among reading skills of adults with low literacy. Journal of Learning Disabilities. 2010;43:122–138. doi: 10.1177/0022219409359343.

- Schatschneider C, Buck J, Wagner R, Hassler L, Hecht S, Powell-Smith K. A multivariate study of individual differences in performance on the reading portion of the Florida Comprehensive Assessment Test: A brief report. Florida Center for Reading Research; Tallahassee, FL: 2004. Technical Report No. 5.

- Seymour PHK. Foundations of orthographic development. In: Perfetti CA, Rieben L, Fayol M, editors. Learning to spell: Research, theory, and practice across languages. Hillsdale, NJ: Erlbaum; 1997. pp. 319–337.

- Sun-Alperin MK, Wang M. Cross-language transfer of phonological and orthographic processing skills from Spanish L1 to English L2. Reading and Writing. 2011;24:591–614. doi: 10.1007/s11145-009-9221-7.

- Tunmer W, Chapman J. The simple view of reading redux: Vocabulary knowledge and the independent components hypothesis. Journal of Learning Disabilities. 2012;45:453–466. doi: 10.1177/0022219411432685.

- Valencia SW, Buly MR. Behind test scores: What struggling readers really need. Reading Teacher. 2004;57:520–531.

- Verhoeven L, van Leeuwe J, Vermeer A. Vocabulary growth and reading development across the elementary school years. Scientific Studies of Reading. 2011;15:8–25. doi: 10.1080/10888438.2011.536125.

- Wagner RK, Herrera S, Spencer M, Quinn J. Reconsidering the Simple View of Reading in an intriguing case of equivalent models: Commentary on Tunmer and Chapman (2012). Journal of Learning Disabilities. 2015;48:115–119. doi: 10.1177/0022219414544544.

- Weiser B, Mathes P. Using encoding instruction to improve the reading and spelling performance of elementary students at risk for literacy difficulties: A best-evidence synthesis. Review of Educational Research. 2011;81:170–200. doi: 10.3102/0034654310396719.

- Young K. Developmental stage theory of spelling: Analysis of consistency across four spelling-related activities. Australian Journal of Language and Literacy. 2007;30:203–220.

- Zeno SM, Ivens SH, Millard RT, Duvvuri R. The educator’s word frequency guide. NY: Touchstone Applied Science Associates, Inc; 1995.