Abstract

Background

Information on the reporting completeness of passive surveillance systems can improve the quality of surveillance data and the public health response to them, and can better inform public health planning. We therefore systematically reviewed the available literature on the reporting completeness of hepatitis A in non-endemic countries.

Methods

We searched Medline, EMBASE and grey literature sources, restricting to studies published in English between 1997 and 21 May 2015. Primary studies on hepatitis A surveillance and underreporting in non-endemic countries were included, and assessed for risk of bias. A pooled proportion of reporting completeness was estimated using a DerSimonian-Laird random-effects model.

Results

Diagnosed hepatitis A cases identified through positive laboratory tests, physician visits, and inpatient hospital discharges were underreported to public health in all eight included studies. Reporting completeness ranged from 4 to 97 % (pooled proportion 59 %, 95 % confidence interval = 32 %, 84 %). Substantial heterogeneity was observed, which may be explained by differences in the referent data sources used to identify diagnosed cases, in case reporting mechanisms, and in staffing infrastructure. Completeness was higher in settings where case reporting was automated or where dedicated staff had clear reporting responsibilities.

Conclusions

Future studies that evaluate reporting completeness should describe the context, components, and operations of the surveillance system being evaluated in order to identify modifiable characteristics that improve system sensitivity and utility. Additionally, reporting completeness should be assessed across high-risk groups to inform equitable allocation of public health resources and to evaluate the effectiveness of targeted interventions.

Electronic supplementary material

The online version of this article (doi:10.1186/s12879-016-1636-6) contains supplementary material, which is available to authorized users.

Keywords: Hepatitis A, Population surveillance, Disease notification, Underreporting

Background

Hepatitis A is a disease caused by infection with hepatitis A virus (HAV), which is most often transmitted through the faecal-oral route. In young children, HAV infection is typically asymptomatic, whereas in older children and adults it leads to jaundice in approximately 70 % of cases [1]. The disease is usually self-limiting; however, approximately one in four cases is hospitalized, and this proportion increases with age [2, 3]. In low-income countries, where the disease is considered endemic (i.e. the prevalence of anti-HAV antibodies in the population is ≥ 90 % by age 10), most infections occur before the age of 5 years, when they are asymptomatic; as a result, there are few susceptible adolescents or adults and few symptomatic infections [1]. By contrast, in high-income, non-endemic countries, the prevalence of anti-HAV antibodies is very low (<50 % are immune by age 30) [1] owing to improved sanitary conditions, along with the introduction of an effective vaccine in the mid-1990s. In Canada, reported rates declined from 10.6 cases per 100,000 population in 1991 to 0.9 cases per 100,000 population in 2008 [4]. Similar trends have been observed in the United States (US) [3, 5]. Despite a large population of susceptible adults, the risk of infection in these countries is low: high standards of living have reduced the effective reproduction number below 1, so there is little circulation of the virus and a very low risk of exposure. Nevertheless, hepatitis A remains of public health importance. In 2012 and 2013, outbreaks affecting more than 250 individuals were reported across Europe, Canada and the US as a consequence of travel to endemic regions, as well as importation of contaminated frozen fruit from endemic regions [6–8]. Some susceptible populations also remain at high risk of exposure, including travelers to endemic regions, men who have sex with men and injection drug users [1].

Hepatitis A cases are typically diagnosed by physicians on the basis of clinical and epidemiological features, as well as serological testing to detect immunoglobulin M (IgM) antibody to HAV [5]. In a 2012 survey, the majority of World Health Organization (WHO) Member States (71 %, 90/126) reported having a national surveillance system for acute hepatitis A infection; however, only 47 % of low-income countries responded to the survey (compared with 80 % of high-income countries), making it challenging to understand the true landscape of hepatitis A surveillance globally [9]. In most high-income countries, hepatitis A is a notifiable disease. While no universal case definition exists, in the US, cases reported to public health are classified as confirmed if they meet the clinical case definition (an acute illness with discrete onset of symptoms and either jaundice or elevated serum aminotransferase levels) and are laboratory-confirmed (positive for IgM antibody to HAV), or if they meet the clinical case definition and have an epidemiologic link with a person who has laboratory-confirmed hepatitis A [10]. Epidemiological surveillance is conducted passively through physician and/or laboratory reporting in order to detect and control outbreaks, and to guide and evaluate public health interventions.

A recognized limitation of these data is their limited sensitivity (i.e. they underestimate the total number of cases in the population). System sensitivity is a function of both case ascertainment and disease reporting. Under-ascertainment refers to cases that are not captured by a surveillance system because they are never diagnosed; this can occur if cases are asymptomatic, if they do not visit a care provider because of mild symptoms or other reasons, or if their care provider does not test for the disease [11, 12]. An estimated 15 % of hepatitis A cases are asymptomatic (although this proportion can be as high as 70 % in children <6 years [13]), and an estimated 12 % of symptomatic patients do not seek care [14]. Underreporting, by contrast, refers to cases that are diagnosed but not reported to the appropriate public health authorities; this can result from a lack of knowledge that the disease is notifiable, a lack of understanding of how to report it, or errors in the reporting system or mechanism [11, 12].
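To make this decomposition concrete, the following minimal sketch multiplies the two under-ascertainment figures quoted above by a reporting completeness value. It assumes the steps are independent, so their probabilities multiply. The value 0.59 is this review's pooled estimate and is used only for illustration. The default `p_tested = 1.0` (every care-seeker is tested) is an assumption, and the helper name `system_sensitivity` is hypothetical.

```python
def system_sensitivity(p_symptomatic: float, p_seek_care: float,
                       p_reported: float, p_tested: float = 1.0) -> float:
    """Probability that an infection becomes a notified case.

    Treats each step as independent, so the step probabilities multiply.
    """
    return p_symptomatic * p_seek_care * p_tested * p_reported


# 15 % asymptomatic -> 0.85 symptomatic; 12 % of symptomatic do not seek care -> 0.88.
# p_reported = 0.59 is this review's pooled completeness, used only for illustration;
# p_tested is left at its assumed default of 1.0 (every care-seeker is tested).
sens = system_sensitivity(p_symptomatic=0.85, p_seek_care=0.88, p_reported=0.59)
multiplier = 1 / sens  # estimated cases in the population per notified case
print(f"sensitivity = {sens:.3f}, multiplier = {multiplier:.2f}")
# prints: sensitivity = 0.441, multiplier = 2.27
```

The reciprocal of the sensitivity acts as a simple multiplication factor for correcting surveillance counts. Lowering `p_tested` or `p_reported` increases the multiplier accordingly.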

Not all cases of hepatitis A need to be captured to achieve maximum control, because control requires only that the average number of infections produced per case fall below 1, not that every secondary case be prevented. Nevertheless, a modelling study examining the impact of reporting delays found that hepatitis A outbreak control would not be possible with underreporting >29 % [15]. Ongoing evaluation and improvement of surveillance systems is therefore necessary to optimize control efforts. Similarly, completeness of disease ascertainment and reporting should be assessed and corrected for when estimating the burden of disease from surveillance data, as illustrated by recent infectious disease burden studies in Canada and Europe [16, 17]. Adjustment is particularly important across key epidemiological characteristics such as age, sex and risk status, as data completeness has been shown to be related to these characteristics [18, 19]. This information can, in turn, better inform public health planning, priority setting and the equitable distribution of limited resources.

While the literature on reporting completeness for notifiable diseases has been synthesized overall and for broad disease groups in the US and the United Kingdom [12, 20], no such reviews exist for hepatitis A. Disease-specific data are important as the degree of underreporting has been shown to vary substantially by disease [12, 20]. As a result, the objective of this study was to determine the proportion of diagnosed hepatitis A cases that were reported to public health bodies or agencies in non-endemic countries. A secondary objective was to synthesize and critically appraise the methods used to estimate underreporting to provide recommendations for future studies.

Methods

We followed guidelines from the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) 2009 statement [21], which is available in Additional file 1.

Search and study selection

Medline and EMBASE databases were searched on 21 May 2015. A sensitive strategy comprising two searches was designed in consultation with two public health librarians. In search one, mapped subject headings and keywords for hepatitis A were combined with terms for disease notification, surveillance and underreporting. In search two, search terms for hepatitis A were combined with terms for incidence, prevalence and epidemiology, as well as travel, non- or low-endemic countries, and country names. World regions with estimated ‘very low’ endemicity, defined as <50 % of the population immune by age 30, were classified as non-endemic [22]. The Medline search strategy is shown in Table 1. The scope was limited to non-endemic countries to minimize heterogeneity in factors known to affect disease surveillance and reporting, including disease exposure, surveillance and health care systems, socioeconomic development, and availability of treatments [11]. The search was restricted to English-language articles published after 1 January 1997, covering the period following the introduction of the hepatitis A vaccine (1996 in Canada; 1995 and 1996 in the US) [4, 23]. Commentaries, editorials, letters, news reports and case reports were excluded. Reference lists of all studies included for full-text review were manually searched for relevant studies. Additionally, a snowball search was performed for all included articles using the ‘find all related’ function in PubMed, to retrieve studies on underreporting of multiple infectious diseases that did not map ‘hepatitis A’ as a subject heading or keyword but may nonetheless have included it as a disease of interest.

Table 1.

Example Medline search strategy to systematically retrieve literature on hepatitis A underreporting

| Search 1a | Search Terms |
|---|---|
| Disease notification, surveillance and underreporting | Mandatory Reporting/ OR Disease Notification/ OR Contact Tracing/ OR exp Population Surveillance/ OR Public Health Informatics/ OR exp Data Collection/ OR Disclosure/ OR exp Informatics/ OR underreport$.mp. OR under-report$.mp. OR (under adj1 report$).mp. OR surveillance.mp. OR reporting.mp. OR ((reported OR true OR estimate$) adj2 (incidence OR prevalence)).mp. OR undetect$.mp. OR capture-recapture.mp. AND |
| Hepatitis A | Hepatitis A/ OR Hepatitis A Virus, Human/ OR Hepatitis A Antibodies/ OR “hepatitis a”.mp. |

| Search 2a | Search Terms |
|---|---|
| Incidence, prevalence and epidemiology | Incidence/ OR Prevalence/ OR Epidemiology/ OR Statistics & Numerical Data.fs. OR Epidemiology.fs. OR Epidemiologic Measurements/ OR Statistics as Topic/ OR Data Interpretation, Statistical/ OR incidence.mp. OR prevalence.mp. OR epidemiolog$.mp. AND |
| Hepatitis A | Hepatitis A/ OR Hepatitis A Virus, Human/ OR Hepatitis A Antibodies/ OR “hepatitis a”.mp. AND |
| Travel, non- or low-endemic countries | Travel/ OR Travel Medicine/ OR Developed Countries/ OR exp Australia/ OR exp North America/ OR New Zealand/ OR Andorra/ OR Austria/ OR Belgium/ OR Finland/ OR exp France/ OR exp Germany/ OR Gibraltar/ OR exp Great Britain/ OR Greece/ OR Iceland/ OR Ireland/ OR exp Italy/ OR Liechtenstein/ OR Luxembourg/ OR Monaco/ OR Netherlands/ OR Portugal/ OR San Marino/ OR exp Scandinavia/ OR Spain/ OR Switzerland/ OR Japan/ OR Singapore/ OR Hong Kong/ OR (non-endemic$ OR nonendemic$ OR (non adj1 endemic$) OR low-endemic$ OR (low adj1 endemic$) OR travel$ OR developed countr$ OR Australia OR North America OR Canada OR United States OR New Zealand OR Western Europe OR Andorra OR Austria OR Belgium OR Finland OR France OR Germany OR Gibraltar OR Great Britain OR England OR Scotland OR Ireland OR United Kingdom OR Wales OR Greece OR Iceland OR Italy OR Liechtenstein OR Luxembourg OR Monaco OR Netherlands OR Portugal OR San Marino OR Scandinavia OR Spain OR Switzerland OR Cyprus OR Denmark OR Norway OR Sweden OR Greenland OR Japan OR Singapore OR Hong Kong).mp. |

a Searches 1 and 2 were combined using the Boolean operator ‘OR’. exp, explode (includes all narrower, more specific subject headings in the search); $, allows different word endings to be searched; mp, multi-purpose (searches the title and abstract as well as the subject headings); adj, adjacent; fs, floating subheading (facilitates a broader search by floating over all indexed subject headings)

Grey literature sources, including websites of the World Health Organization (WHO), United States Centers for Disease Control and Prevention (US CDC), Public Health Agency of Canada (PHAC), European Centre for Disease Prevention and Control (ECDC) and EUROHEP.NET were searched using keywords; these searches were restricted to English and to 2010–2013. Abstract books from the European Scientific Conference on Applied Infectious Disease Epidemiology (ESCAIDE), the International Conference on Emerging Infectious Diseases (ICEID), and ID Week, held between 2009 and 2013, were also searched.

The primary reviewer (R.D.S.) performed a preliminary review of article titles and abstracts to exclude irrelevant studies on non-viral hepatitis, chronic hepatitis, liver cancer, non-A hepatitis, laboratory detection methods for hepatitis, and surveillance in non-humans (e.g. animals, food, water). The remaining article titles and abstracts were independently reviewed by two reviewers (R.D.S. and K.A.B.); primary studies on hepatitis A surveillance and underreporting in non-endemic countries were considered relevant for full-text review. Mathematical modelling papers on hepatitis A were also reviewed in full-text as a validation measure to ensure all relevant primary studies were captured in the search, as these models often account for underreporting. Discordant views on eligibility were discussed to reach consensus. Inter-rater reliability between the two reviewers was assessed using the kappa statistic, where >0.75 indicated excellent agreement, 0.40–0.75 intermediate to good agreement, and <0.40 poor agreement [24].
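The kappa thresholds above can be made concrete with the following short sketch of Cohen's kappa for two reviewers making include/exclude decisions. The 2 × 2 counts are hypothetical, and the function names are illustrative rather than taken from any particular package.

```python
def cohens_kappa(both_include: int, only_r1: int, only_r2: int, both_exclude: int) -> float:
    """Cohen's kappa for two reviewers making include/exclude decisions."""
    n = both_include + only_r1 + only_r2 + both_exclude
    p_observed = (both_include + both_exclude) / n
    r1_include = (both_include + only_r1) / n
    r2_include = (both_include + only_r2) / n
    # Agreement expected by chance if the reviewers decided independently
    p_expected = r1_include * r2_include + (1 - r1_include) * (1 - r2_include)
    if p_expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_expected) / (1 - p_expected)


def agreement_label(kappa: float) -> str:
    """Interpretation thresholds as stated in the Methods text."""
    if kappa > 0.75:
        return "excellent"
    if kappa >= 0.40:
        return "intermediate to good"
    return "poor"


# Hypothetical screening of 100 abstracts
k = cohens_kappa(both_include=40, only_r1=5, only_r2=5, both_exclude=50)
print(round(k, 2), agreement_label(k))  # prints: 0.8 excellent
```

In this example, observed agreement is 0.90, but chance alone would produce 0.505. Kappa rescales the excess agreement (0.395) by the maximum possible excess (0.495).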

Full-text review was then performed independently by the two reviewers (R.D.S. and K.A.B.); studies were eligible for inclusion if they assessed underreporting by comparing hepatitis A disease reports received by public health with one or more independently retrieved data sources of diagnosed cases as the reference standard (e.g. IgM anti-HAV positive laboratory test results). Studies that aimed exclusively to identify the source of case reports (i.e. to evaluate the proportion of cases reported to public health by physicians, laboratories, etc.) were excluded because they did not make comparisons with a reference standard. Studies also needed to provide a quantitative measure of underreporting, or data from which the degree of underreporting for hepatitis A could be calculated. Reasons for exclusion were recorded and, as before, discordant views on eligibility were discussed to reach consensus and inter-rater reliability was assessed.

Data collection

Using a structured data extraction form, the primary reviewer (R.D.S.) extracted data on the last name of the first author, year of publication, study setting, the passive surveillance system used to report hepatitis A cases, the data source of diagnosed cases used to measure underreporting, the proportion of diagnosed cases reported to public health (overall and by age, sex and/or risk status, if provided), and the study’s sample size.

Risk of bias

The methodological quality of included studies was assessed independently by two reviewers (R.D.S. and K.A.B.) using Public Health Ontario’s meta-tool for quality assessment of public health evidence (Table 2) [25]. The validated tool uses a component or checklist approach to facilitate the assessment of four domains: relevancy, reliability, validity and applicability. To assess a study’s validity, the tool integrates adapted study-design-specific critical appraisal tools and reporting guidelines, such as PRISMA [21], CONSORT [26] and CASP [27]. However, because none of these tools aligned with the study designs included in this review, we created a checklist based on two existing reviews of reporting and the US CDC’s updated guidelines for evaluating public health surveillance systems [12, 28, 29]. If the study methodology was inappropriate, biased (e.g. failed to validate the diagnosis in the data source of diagnosed cases), or unclear (e.g. failed to describe diagnostic or data linkage methods), the study was assigned a high risk of bias. If the methodology was appropriate, clearly defined, and bias-free, the study was assigned a low risk of bias. If the study presented findings objectively with justified conclusions, but the methods were unclear or potentially biased, the study was rated as moderate risk. Inter-rater reliability was assessed using the kappa statistic.

Table 2.

Adapted meta-tool for quality assessment of public health evidence (Meta QAT) from Public Health Ontario [25]

| Item | Criteria | Assessment |
|---|---|---|
| Validity | a) Are findings presented objectively? • Clear rationale and justification • Findings presented and discussed within appropriate context • Similar to existing literature and, if not, reasons explained • Conflict of interest statement | Yes No Unclear N/A |
| | b) Are the authors’ conclusions justified? • Transparency • Results consistent with those described in discussion • Conclusions based on empirical findings | Yes No Unclear N/A |
| Reliability | a) Is the research methodology clearly described? • Study population and surveillance system described • Can identify the research design • Methods carried out in a rigorous and transparent manner | Yes No Unclear N/A |
| | b) Is methodology appropriate for the scope of research?a • Comparison with an appropriate reference standard (i.e. a data source other than notification data)? ◦ Is the sensitivity of the reference standard described? Are cases verified? ◦ Is the population base for notifications and supplementary data sources drawn from the same catchment area? ◦ Is there an adequate description of case ascertainment? ◦ Are cases ascertained for the same time period in both datasets? • Are the data sources to be compared clearly identified and described/referenced? • What case definition is used? Is the same definition applied to all data sources? • Are the methods for linkage described? ◦ Were the identifiers unique and available? ◦ How complete was the link? (% linked) ◦ How accurate was the link? (i.e. were individuals linked who were not supposed to be, or vice versa?) ◦ Were duplicate cases identified and removed? • How precise are the results? (confidence interval width) • Were any statistical measures of agreement used (did the authors express the level of agreement using a statistic)? • For capture-recapture methods: ◦ If dependency between data sources is suspected, are ≥3 data sources used, along with log-linear modelling methods, to account for this dependency? | Yes No Unclear N/A |
| | c) Is the research methodology free from bias? • Are there major sources of bias? • Can the study be reproduced? | Yes No Unclear N/A |
| | d) Are ethics procedures described? • Approval from ethics review board • Individual consent | Yes No Unclear N/A |
| | e) Can I be confident about the findings? • Were sources of information quality assessed? • Any major methodological flaws? | Yes No Unclear N/A |
| Applicability | Can results be applied within the scope of public health? • Do the results of the study apply to the issue under consideration (i.e. are the surveillance systems and supplementary data sources similar to Ontario’s)? | Yes No Unclear N/A |

Statistical analysis

Reporting completeness was estimated as the number of diagnosed cases reported to public health divided by the total number of diagnosed cases ascertained through the reference standard. If the diagnosis was validated in the reference standard (e.g. ICD-9-coded hospital discharge records were reviewed to determine whether hepatitis A-coded visits met the surveillance case definition), the corrected denominator, comprising only validated cases, was used.
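The following minimal sketch shows this calculation under a data-linkage design. It assumes each case carries a shared unique identifier so that records can be matched exactly; real linkage may instead require probabilistic matching on names and dates. Under that assumption, completeness is the share of validated reference-standard cases that also appear in the notification data. The identifiers and the helper name `reporting_completeness` are hypothetical.

```python
from typing import Iterable, Optional


def reporting_completeness(notified_ids: Iterable[str], reference_ids: Iterable[str],
                           failed_validation_ids: Optional[Iterable[str]] = None) -> float:
    """Proportion of diagnosed (reference-standard) cases that were notified."""
    reference = set(reference_ids)
    if failed_validation_ids is not None:
        # Corrected denominator: drop reference cases that failed validation,
        # e.g. discharge records that did not meet the surveillance case definition.
        reference -= set(failed_validation_ids)
    if not reference:
        raise ValueError("no validated reference-standard cases")
    # Numerator: validated reference cases that also appear in the notification data
    return len(reference & set(notified_ids)) / len(reference)


reference = {"A", "B", "C", "D", "E"}   # cases in the reference standard
notified = {"A", "B", "X"}              # cases notified to public health
failed = {"E"}                          # reference case rejected on chart review

crude = reporting_completeness(notified, reference)              # 2 / 5
corrected = reporting_completeness(notified, reference, failed)  # 2 / 4
print(crude, corrected)  # prints: 0.4 0.5
```

Keeping the false-positive record in the denominator lowers the estimate from 0.5 to 0.4. The notified case "X", which is absent from the reference standard, does not enter either the numerator or the denominator.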

Study estimates were transformed using the double arcsine transformation [30], and the pooled reporting completeness was derived by averaging the transformed estimates, weighted by the inverse of their variance. The DerSimonian and Laird random-effects model was used to incorporate between-study variance when pooling the transformed proportions [31, 32]. Statistical heterogeneity was assessed using Cochran’s Q statistic, with a significance level of P < 0.10, and the I² statistic [33], which describes the percentage of total variation across studies that is due to between-study heterogeneity rather than chance; values above 75 % were taken to indicate high heterogeneity [33]. Pooled estimates and heterogeneity statistics were computed using MetaXL (http://www.epigear.com). Publication bias was assessed by visually examining Begg’s funnel plot and performing Egger’s regression asymmetry test using Stata, version 12.0 (StataCorp LP, College Station, Texas).
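The pooling steps described above can be sketched in plain Python as follows. This is not MetaXL's code. The sketch applies the Freeman-Tukey double arcsine transform to each proportion, pools on that scale with DerSimonian-Laird weights, and computes Q and I². It then back-transforms using Miller's inversion with the harmonic mean of the sample sizes. That back-transformation is one common choice, and MetaXL's exact method may differ. The function names and the (reported, diagnosed) counts are hypothetical.

```python
import math
from typing import NamedTuple, Sequence, Tuple


class PooledResult(NamedTuple):
    proportion: float
    ci_low: float
    ci_high: float
    q: float      # Cochran's Q
    i2: float     # I-squared, in percent
    tau2: float   # DerSimonian-Laird between-study variance


def double_arcsine(x: int, n: int) -> Tuple[float, float]:
    """Freeman-Tukey double arcsine transform of x/n and its approximate variance."""
    t = math.asin(math.sqrt(x / (n + 1))) + math.asin(math.sqrt((x + 1) / (n + 1)))
    return t, 1.0 / (n + 0.5)


def back_transform(t: float, n: float) -> float:
    """Miller (1978) inversion of the double arcsine for a given sample size n."""
    if t <= 0.0:
        return 0.0
    if t >= math.pi:
        return 1.0
    s = math.sin(t)
    inner = 1.0 - (s + (s - 1.0 / s) / n) ** 2
    sign = 1.0 if math.cos(t) >= 0.0 else -1.0
    return 0.5 * (1.0 - sign * math.sqrt(max(inner, 0.0)))


def pool_dersimonian_laird(studies: Sequence[Tuple[int, int]], z: float = 1.96) -> PooledResult:
    """Random-effects pooling of (reported, diagnosed) pairs on the double arcsine scale."""
    ts, vs = zip(*(double_arcsine(x, n) for x, n in studies))
    w = [1.0 / v for v in vs]                      # inverse-variance (fixed-effect) weights
    sw = sum(w)
    t_fixed = sum(wi * ti for wi, ti in zip(w, ts)) / sw
    q = sum(wi * (ti - t_fixed) ** 2 for wi, ti in zip(w, ts))
    df = len(studies) - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    w_re = [1.0 / (v + tau2) for v in vs]          # random-effects weights
    t_re = sum(wi * ti for wi, ti in zip(w_re, ts)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    n_harm = len(studies) / sum(1.0 / n for _, n in studies)  # harmonic mean sample size
    return PooledResult(
        proportion=back_transform(t_re, n_harm),
        ci_low=back_transform(t_re - z * se, n_harm),
        ci_high=back_transform(t_re + z * se, n_harm),
        q=q, i2=i2, tau2=tau2,
    )


# Hypothetical (reported, diagnosed) counts, not data from the included studies
result = pool_dersimonian_laird([(90, 100), (60, 100), (30, 100)])
print(f"pooled = {result.proportion:.2f} "
      f"(95 % CI {result.ci_low:.2f}, {result.ci_high:.2f}), I2 = {result.i2:.0f} %")
```

The double arcsine scale stabilizes the variance near 0 % and 100 %, where untransformed proportions behave poorly. When Q does not exceed its degrees of freedom, tau² is truncated at zero and the model reduces to fixed-effect pooling.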

Results

Primary studies

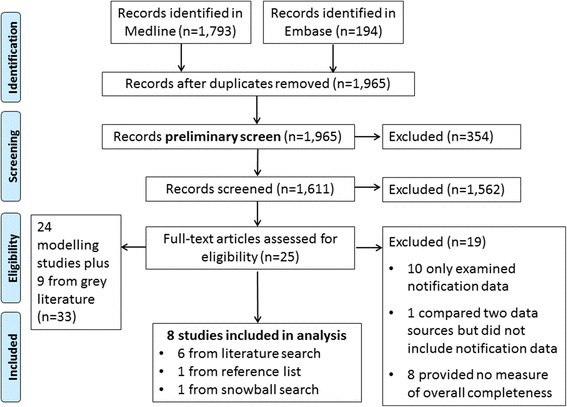

Overall, 1965 studies were retrieved from Medline and EMBASE, excluding duplicates (Fig. 1). After 354 irrelevant studies were excluded in the preliminary review, 1611 studies were screened. Twenty-five studies were considered eligible for full-text review, with good agreement between reviewers (kappa = 0.60, 95 % confidence interval (CI) = 0.45, 0.75) (see Additional file 2 for the complete reference list). In total, eight studies were included: six from the database search, one from the reference lists of reviewed full-text articles, and one from the snowball search [34–41]. There was excellent agreement between reviewers at the final screen (kappa = 0.78, 95 % CI = 0.49, 1.00).

Fig. 1.

Flow chart of search strategy results and selection of papers

One relevant ECDC project, the ‘Current and Future Burden of Communicable Diseases in the European Union and EEA/EFTA countries’ (BCoDE) study, was identified in the grey literature search. Results from an unpublished literature scan, conducted to aid in the development of multiplication factors to correct for under-ascertainment and underreporting of hepatitis A, were shared by the study lead (written communication, Dr. Mirjam Kretzschmar). Four articles on underreporting were cited in this scan, only one of which met our inclusion/exclusion criteria; it had already been retrieved and included in this review [40]. Annual viral hepatitis surveillance reports published on the US CDC website were also considered relevant, because they adjust for underreporting using a probabilistic model that incorporates the probabilities of (I) symptoms, (II) referral to care and treatment, and (III) reporting to local and state health departments [42]. The methodology has been published and was retrieved in the peer-reviewed literature search [14]. Of the two studies used as model inputs for (III), the one that met our inclusion/exclusion criteria had already been retrieved and included in this review [36].

Modelling studies

In addition to the 25 primary studies reviewed in full-text, 24 modelling studies were reviewed (Additional file 2). An additional nine studies were reviewed from the BCoDE literature scan, for a total of 33 modelling studies (Additional file 2). No new primary studies on underreporting of hepatitis A were identified.

Characteristics of included primary studies

Studies were published between 1998 and 2011 and conducted between 1995 and 2007 (Table 3). The majority (6/8) were from the US [34–37, 39, 41]; one study was from England [40] and another from New Zealand [38]. Hepatitis A was notifiable (i.e. reporting to public health was legally required) in all study settings during the study periods. In most settings, there was a mandatory dual (physician and laboratory) reporting mechanism. Aside from one study that examined the proportion of positive test results identified within a managed care organization’s reporting system and another that examined electronic medical records from a group practice [34, 36], all studies were population-based, meaning that cases could emerge from anywhere in the population and through any health care setting. Only one study measured underreporting in an outbreak context [40].

Table 3.

Characteristics of eight included studies published between January 1997 and May 2015

| Study Characteristics | | | | | Methods | | | | Results | |
|---|---|---|---|---|---|---|---|---|---|---|
| Study and Year | Location | Time Period | Study Population | Notification to Public Health | Design | Referent Data of Diagnosed Cases | Diagnostic Criteria | Consistent with Surveillance Criteria; Possible Effect | % Reported | N |
| Backer HD et al. 2001 [34] | California, US | 1997 | Kaiser Permanente Northern California members | Mandatory dual reporting | Data Linkage | Laboratory Tests | Positive IgM HAV antibody | No; underestimate completeness | 88.4 % | 402 |
| Boehmer TK et al. 2011 [35] | Colorado, US | 2003–2005 | Population-based | Mandatory dual reporting | Data Linkage | Inpatient hospital discharges and medical chart review | ICD-9-CM codes 070.0 and 070.1 with review using surveillance definition | Yes | 67 % | 6 |
| Klompas M et al. 2008 [36] | Massachusetts, US | June 2006–July 2007 | Patients of a multi-specialty group practice of 35 clinics | Not described | Data Linkage | Electronic medical records with electronic support for public health (ESP) | ALT or AST >2 times the upper limit of normal, or ICD-9 code 782.4 for jaundice, and positive IgM HAV antibody | No; overestimate | 25 % | 4 |
| Matin N et al. 2006 [40] | England (North East and East Midlands), UK | 2002 and 2003 | Population-based | Mandatory reporting by physicians; voluntary reporting by laboratories with good participation | CRC | 1. Cases identified by local public health, 2. laboratory tests and 3. genotyping results | Not described | Unclear | 81.7 % (outbreak a) and 27.8 % (outbreak b) | 236 and 1107 |
| Overhage JM et al. 2008 [39] | Indianapolis, Indiana, US | First quarter of 2001 | Population-based | Mandatory dual reporting | Data Linkage | Hospital infection-control (IC) databases and an electronic laboratory reporting (ELR) database | Not described; system scans test result labels for a match to CDC notifiable condition mapping tables | Unclear | 4.0 % (IC, study a) and 97.3 % (ELR, study b) | 150 |
| Roels TH et al. 1998 [37] | Wisconsin, US | 1995 | Population-based | Mandatory dual reporting | Data Linkage/Comparison | Laboratory Tests | Positive IgM HAV antibody | No; underestimate | 74 % | 156 |
| Sickbert-Bennett EE et al. 2011 [41] | North Carolina, US | 1995–2006 (excl. 1998, 1999) | Population-based | Mandatory reporting by physicians; dual reporting starting 1998 | Data Linkage | Inpatient hospital discharges and medical chart review | ICD-9-CM (code not specified) with review using surveillance definition | Yes | 40.02 % corrected | 67 |
| Simmons G et al. 2002 [38] | Auckland, New Zealand | 2000 | Population-based | Mandatory reporting by physicians; some laboratories | Data Linkage | Laboratory Tests | Positive IgM HAV antibody | No; underestimate | 65 % | 54 |

US United States, IgM immunoglobulin M, HAV hepatitis A virus, ICD International Classification of Diseases, ALT alanine aminotransferase, AST aspartate aminotransferase, UK United Kingdom, CRC capture-recapture methods, n/a not available

Risk of bias

There was excellent agreement between the two reviewers for the bias assessment (kappa = 0.79, 95 % CI: 0.41, 1.00). Overall, two studies were assessed as having a low risk of bias, four a moderate risk, and two a high risk (Table 4). One of the most common study limitations was a failure to verify the diagnosis in the reference standard. For example, only two studies, both of which used ICD-9-coded hospital discharge data to ascertain hepatitis A cases, verified the diagnosis through medical chart review [35, 41]. The authors found that this process identified several false positives which, had they not been detected, would have artificially lowered the estimate of reporting completeness. Among studies that linked diagnostic and public health data to estimate underreporting, most poorly described the matching methods and the accuracy of the match. Additionally, several studies did not report the case definitions or criteria used to ascertain cases, or whether the definitions were consistent across data sources [34, 40], although it should be noted that no national surveillance case definition for hepatitis A existed in England at the time of the study by Matin et al. [40]. Reportable disease surveillance data vary by system design and implementation; despite this, most studies provided only a cursory description of the data sources and their population coverage, and omitted contextual information important for interpreting reporting completeness estimates.

Table 4.

Risk of bias assessment using Public Health Ontario’s meta-tool for quality assessment [25]

| Item | Criteria | Backer 2001 [34] | Boehmer 2011 [35] | Klompas 2008 [36] | Matin 2006 [40] | Overhage 2008 [39] | Roels 1998 [37] | Sickbert-Bennett 2011 [41] | Simmons 2002 [38] |
|---|---|---|---|---|---|---|---|---|---|
| Relevancy | Topic(s) relevant | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Validity | Presented objectively | Unclear | Unclear | Unclear | Unclear | Yes | Yes | Yes | Yes |
| | Conclusions justified | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Reliability | Clear methods | Yes | Yes | Yes | Yes | Unclear | Unclear | Yes | Unclear |
| | Appropriate methods | Unclear | Yes | Yes | Yes | Unclear | Unclear | Yes | Yes |
| | Free from bias | No | Yes | Unclear | No | Unclear | Unclear | No | Unclear |
| | Ethics described | Unclear | Unclear | Unclear | Unclear | No | Unclear | Yes | Yes |
| | Confident in findings | Unclear | Yes | Unclear | Unclear | No | No | Unclear | No |
| Applicability | Applicable | Unclear | Yes | Yes | Unclear | Unclear | Yes | Yes | Yes |
| Overall Risk | | High | Low | Moderate | Moderate | High | Moderate | Low | Moderate |

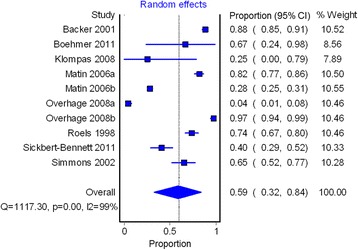

Data synthesis

In all included studies, hepatitis A reporting was found to be incomplete. The proportion of hepatitis A cases reported to public health ranged from 4 to 97 % (pooled proportion = 59 %, 95 % CI = 32 %, 84 %) (Table 3 and Fig. 2). High heterogeneity was observed (Q = 1117.30, P < 0.001; I² = 99 %). Reporting completeness estimates were not stratified by age, sex or risk status in any of the included studies (data not shown).
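As a worked check on these heterogeneity statistics, I² can be recovered from Cochran’s Q and its degrees of freedom, k − 1, where k is the number of pooled estimates. The text does not state k. Assuming k = 10 (the eight studies, with Matin et al. and Overhage et al. each contributing two estimates in Table 3), the calculation is:

$$
I^2 = \max\left(0, \frac{Q - (k - 1)}{Q}\right) \times 100\,\% = \frac{1117.30 - 9}{1117.30} \times 100\,\% \approx 99.2\,\%
$$

Treating the studies as eight estimates (k = 8) gives approximately 99.4 %, so either assumption is consistent with the reported I² of 99 %.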

Fig. 2.

Pooled proportion of hepatitis A reporting completeness to public health in eight studies. CI confidence interval

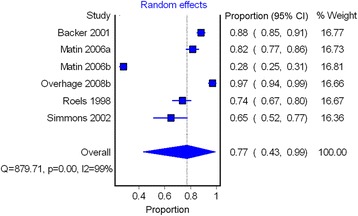

The majority of studies (7/8) linked public health notification data with an independently retrieved data source of diagnosed cases to measure underreporting (Table 3) [34–39, 41]. The most commonly used reference standard was positive IgM anti-HAV test results from laboratories [34, 37–39]; two studies used inpatient hospital discharge data validated through medical record review [35, 41], and another used electronic medical records [36]. Only one study used capture-recapture methods, applying them to two hepatitis A outbreaks to measure underreporting [40]. The heterogeneity in reporting completeness may be explained in part by these differing reference standards. In a post-hoc subgroup analysis of studies that used laboratory testing data as the reference standard, a higher proportion of cases was reported to public health (pooled proportion = 77 %, 95 % CI = 43 %, 99 %); however, significant residual heterogeneity remained (Fig. 3).
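Matin et al. used three data sources. For illustration, the sketch below shows the simpler two-source case using Chapman's bias-corrected capture-recapture estimator, which assumes the sources are independent and the population is closed. As the meta-tool checklist notes, suspected dependence between sources calls for three or more sources with log-linear models, which this sketch does not implement. The counts and the helper name are hypothetical.

```python
def chapman_estimate(n1: int, n2: int, m: int) -> float:
    """Chapman's bias-corrected two-source capture-recapture estimate.

    n1, n2: cases found by source 1 and source 2; m: cases found by both.
    Assumes independent sources and a closed population.
    """
    return (n1 + 1) * (n2 + 1) / (m + 1) - 1


# Hypothetical outbreak: 80 notified cases, 60 laboratory-identified cases, 40 in both
total = chapman_estimate(n1=80, n2=60, m=40)
completeness = 80 / total  # share of estimated cases that were notified
print(f"estimated cases = {total:.1f}, notification completeness = {completeness:.0%}")
# prints: estimated cases = 119.5, notification completeness = 67%
```

Unlike simple data linkage, this approach estimates cases missed by both sources. If the sources are positively dependent (for example, laboratories that report well also notify well), the estimated total is biased downward and completeness is overstated.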

Fig. 3.

Pooled hepatitis A reporting completeness in studies with laboratory testing data as the reference standard. CI confidence interval

With the exception of one study [37], no studies reported which anti-HAV IgM test was used, the sensitivity and specificity of the test, or whether laboratory testing procedures changed over the study period, making it difficult to assess whether differences in testing practices or methods contributed to the heterogeneity. The variation could also be explained by differences in the mechanism by which cases were reported to public health (e.g. automated versus manual reporting, electronic versus paper, staff resource levels) and by legislation across and within study settings. For example, higher estimates of reporting completeness were observed in the two studies with automated laboratory reporting (97 and 88 %) [34, 39] than in the two studies that relied on manual reporting methods (74 and 65 %) [37, 38]. The latter also cited specific reporting challenges, including poor information exchange with private laboratories [37] and lack of routine reporting by selected laboratories [38]. If data from these four studies were pooled, cases detected in automated reporting systems would have had 3.92 times the odds of being reported to public health compared with cases detected in manual reporting systems. Other studies that similarly relied on manual, passive reporting, but in different settings (by infection control practitioners [39] and primary care providers [36]), also found lower reporting completeness (4 and 25 %). In addition to between-study variation, Sickbert-Bennett et al. (2011) noted heterogeneity in reporting mechanisms within one health region, finding higher reporting completeness in hospitals with dedicated staff (i.e. public health epidemiologists or infection control practitioners) responsible for disease reporting [41].
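
The odds ratio above compares the odds of a case being reported in automated versus manual systems. A minimal sketch of a crude odds ratio with a Woolf (log-scale) 95 % confidence interval from a 2×2 table follows. The counts are hypothetical, and the method behind the 3.92 estimate is not described in this section. Summing counts across studies, as this crude calculation assumes, ignores between-study differences and is no substitute for a stratified or random-effects estimate.

```python
import math


def odds_ratio_woolf(a, b, c, d, z=1.96):
    """Crude odds ratio with a Woolf 95% confidence interval.

    a: reported, automated system     b: not reported, automated system
    c: reported, manual system        d: not reported, manual system
    """
    if min(a, b, c, d) == 0:
        raise ValueError("zero cell: apply a continuity correction first")
    or_ = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # SE of log(OR)
    return (or_,
            math.exp(math.log(or_) - z * se_log),
            math.exp(math.log(or_) + z * se_log))


# Hypothetical pooled counts, not the review's data
or_, lo, hi = odds_ratio_woolf(a=90, b=10, c=70, d=30)
print(f"OR = {or_:.2f} (95% CI {lo:.2f}, {hi:.2f})")
```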

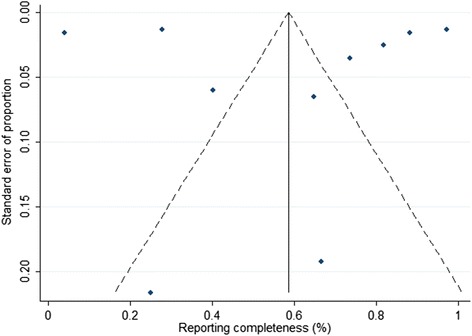

There was no evidence of publication bias: the funnel plot of reporting completeness against its standard error was symmetric (Fig. 4), and Egger’s test was not significant (P = 0.977).
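
Egger’s test regresses each study’s standardized effect on its precision and asks whether the intercept differs from zero. The standard-library sketch below computes the intercept and its t statistic. It is an illustration, not the analysis that produced P = 0.977, and it assumes that each study’s effect is its (possibly transformed) proportion with a known standard error. The function name and example values are hypothetical. Converting t to a P-value requires a t distribution with k − 2 degrees of freedom (e.g. SciPy’s `scipy.stats.t.sf`), which is not used here.

```python
import math


def egger_test(effects, std_errors):
    """Egger's regression test for funnel-plot asymmetry.

    Fits y = b0 + b1 * x by ordinary least squares, where y = effect / SE
    (standardized effect) and x = 1 / SE (precision). Returns the intercept b0,
    its standard error and its t statistic (k - 2 degrees of freedom).
    """
    k = len(effects)
    if k < 3:
        raise ValueError("Egger's test needs at least three studies")
    x = [1.0 / s for s in std_errors]
    y = [e / s for e, s in zip(effects, std_errors)]
    mx, my = sum(x) / k, sum(y) / k
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se_intercept = math.sqrt(rss / (k - 2) * (1.0 / k + mx ** 2 / sxx))
    if se_intercept == 0:
        raise ValueError("perfect fit: t statistic is undefined")
    return intercept, se_intercept, intercept / se_intercept


# Hypothetical logit-scale effects and standard errors for five studies
b0, se_b0, t = egger_test([3.48, 1.99, 0.62, -1.10, 0.20], [0.5, 0.3, 0.2, 0.25, 0.15])
print(f"intercept = {b0:.2f}, SE = {se_b0:.3f}, t = {t:.2f}")
```

With only eight studies, as in this review, Egger’s test has limited power, so a non-significant result is weak evidence against publication bias.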

Fig. 4.

Funnel plot for assessing publication bias of hepatitis A reporting completeness in non-endemic countries

Discussion

The body of literature on the sensitivity of passive surveillance systems for capturing diagnosed hepatitis A cases shows that hepatitis A is underreported in non-endemic countries. Although most studies were conducted in the US, reporting completeness varied substantially (range: 4 to 97 %). Previous systematic reviews of notification completeness for all reportable diseases in the US and UK similarly found that estimates varied by disease, ranging from 9 to 99 % and 3 to 95 %, respectively [12, 20]. These reviews, however, covered all notifiable diseases and found that reporting completeness was strongly associated with the disease itself rather than with study characteristics such as location, time period, design and size; consequently, the heterogeneity in their estimates was attributed to the wide range of diseases evaluated.

We found that the disparate reference standards (data sources of diagnosed cases) used by the studies in this review contributed in part to the observed variation in reporting completeness; however, substantial heterogeneity remained among studies that evaluated reporting completeness against laboratory testing data. Reporting completeness was higher where laboratory reporting to public health was automated rather than manual, and in settings where dedicated staff were responsible for disease reporting. These findings provide specific direction as to how surveillance systems can be improved to minimize underreporting.

Differences across US states in important contextual factors, namely disease incidence and vaccination policy, may also have influenced laboratory and physician reporting completeness. Unlike vaccine recommendations in New Zealand and England, which target high-risk groups, HAV vaccination recommendations in the US have followed a phased approach: they first targeted high-risk groups, were extended in 1999 to 17 states with reported rates above the national average [23], and in 2006 were extended to all children aged 1 year (12–23 months) [5]. California and Colorado were both included in the targeted approach in 1999; it is possible that the higher reporting completeness observed in these states [34, 35], relative to studies from other settings, reflected heightened vigilance in reporting in this specific context.

Other contributing factors to the observed heterogeneity could include differences in study time period, quality, sample size and legislative requirements for notification, although no clear trend was observed across these factors. There may also be features unique to each surveillance system that influence reporting completeness (e.g. regular auditing or other quality improvement activities). These features are difficult to elucidate, however, because many studies provided only a cursory description of their surveillance system, despite recommendations from the US CDC’s Guidelines Working Group for Evaluating Public Health Surveillance Systems to report on the context, operations, and system components, including the level of integration with other systems, when conducting an evaluation [29]. Efforts to contact study authors to obtain this information were either unsuccessful or impeded by recall issues. Because this information is vital for identifying the features that improve or impede system sensitivity, and can be used to improve the quality of surveillance systems more broadly, we recommend that future evaluation studies prioritize its inclusion.

Methodological limitations identified in the included studies provide further insight into how future evaluations of surveillance system sensitivity can be improved (recommendations summarized in Table 5). Several studies did not describe how cases were diagnosed in the reference standard, and many did not validate the diagnosis in any way. For studies using positive IgM anti-HAV laboratory test results as the reference standard, false positive results or repeat testing may have inflated the denominator and thus underestimated the proportion of cases reported to public health, although the specificity of IgM anti-HAV testing has been shown to be ≥99 % [43]. By contrast, missing clinical information in electronic medical records may have produced false negatives in the reference standard, which would have overestimated reporting completeness [36]. Applying a case definition in the reference standard that differs from the surveillance case definition may also have led to disease misclassification. For example, ascertaining cases in laboratory data from positive IgM anti-HAV results alone, without considering the presence of clinically compatible symptoms (a component of the surveillance case definition), may have underestimated reporting completeness. Similarly, studies have demonstrated that using only ICD-9 hepatitis A discharge diagnosis codes, without verification by medical chart review, overestimates the number of cases meeting the surveillance case definition [35, 41]. Lastly, the methods used to match records in the diagnostic and public health datasets (e.g. deterministic or probabilistic linkage) and the accuracy of the match were often not described; missed matches would have underestimated reporting completeness, whereas false matches would have overestimated it. Given that surveillance reports are typically brief, it is unclear whether these concerns reflect true methodological issues or limitations in reporting quality.
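
To make the linkage step concrete, the sketch below computes reporting completeness by deterministic linkage of reference-standard cases to notifications. The match key (normalized surname plus date of birth) and the record fields are hypothetical, and many evaluations use probabilistic linkage instead, which this sketch does not implement. Collapsing repeat tests for the same person to a single key guards against the denominator inflation described above, and unmatched keys are returned so they can be reviewed manually for missed matches.

```python
def link_key(record):
    """Hypothetical deterministic match key: normalized surname + date of birth."""
    return (record["surname"].strip().upper(), record["dob"])


def reporting_completeness(reference_cases, notifications):
    """Share of distinct reference-standard cases that match a notification.

    Returns (completeness, set of unmatched keys for manual review).
    """
    reference_keys = {link_key(r) for r in reference_cases}  # repeat tests collapse to one key
    notified_keys = {link_key(r) for r in notifications}
    if not reference_keys:
        raise ValueError("reference standard contains no cases")
    matched = reference_keys & notified_keys
    return len(matched) / len(reference_keys), reference_keys - notified_keys


lab_positives = [
    {"surname": "Smith ", "dob": "1970-01-01"},
    {"surname": "smith", "dob": "1970-01-01"},   # repeat IgM test, same person
    {"surname": "Lee", "dob": "1985-05-05"},
]
notified = [{"surname": "SMITH", "dob": "1970-01-01"}]
completeness, unmatched = reporting_completeness(lab_positives, notified)
print(completeness, unmatched)
```

Exact-match keys trade missed matches (e.g. misspelled surnames) for few false matches, so they tend to push completeness estimates downward; reporting the key and the manual review rate lets readers judge that bias.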

Table 5.

Summary of recommendations for future studies evaluating completeness of surveillance by linking public health surveillance data with a reference standard

| Recommendation |
|---|
| 1. Report on the context, operations, and system components of the public health surveillance system being evaluated, including the level of integration with other systems. |
| 2. Provide the surveillance case definition (if available), and describe how cases were diagnosed in the reference standard. If possible, apply the surveillance case definition to the reference standard to avoid misclassification in ascertaining cases. |
| 3. Validate the diagnosis of cases in the reference standard if possible, particularly if using inpatient hospital discharges or electronic medical records as reference standards. |
| 4. Describe methods used to match or link records in the diagnostic and public health datasets, such as deterministic or probabilistic linkage, and the accuracy of the match. |

Strengths of this review include a comprehensive search strategy which included two key scientific literature databases and relevant grey literature sources identified by experts in hepatitis A and public health surveillance. Search methods were validated using modelling studies and communication with public health professionals at two core knowledge-generating public health organizations. Additionally, adaptation of the critical appraisal tool better facilitated the identification of fundamental issues affecting each included study’s internal validity.

The scope of the study was restricted to underreporting rather than under-ascertainment, because reporting practices are amenable to quality improvement, whereas under-ascertainment is less so, particularly when infections are asymptomatic, mild or self-limiting and do not require medical care. Nonetheless, under-ascertainment remains an important contributor to the sensitivity of passive surveillance systems and can substantially affect disease control and prevention. Improving health literacy (knowledge of the severity or duration of an illness, and of when to seek care) and removing administrative, financial and cultural barriers to healthcare may improve case ascertainment [19]. From a methodological perspective, the degree of under-ascertainment for a particular disease in a community can be estimated using a wide range of active case finding methods, including serial serosurveys, as described by Gibbons et al. (2014) [19]. These estimates can in turn be used to calibrate surveillance data.
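
As a rough illustration of how such estimates might be used to calibrate surveillance counts, the sketch below applies two multipliers in sequence. Reported cases are divided by reporting completeness to estimate diagnosed cases, and diagnosed cases are divided by the proportion of infections that are ascertained to estimate community cases. This simple multiplier approach is an assumption for illustration, not the specific method of Gibbons et al. [19], and the function name and input values are hypothetical.

```python
def calibrate_counts(reported, reporting_completeness, ascertainment):
    """Scale reported case counts for underreporting, then for under-ascertainment.

    reporting_completeness: proportion of diagnosed cases reported to public health, in (0, 1]
    ascertainment:          proportion of community infections that are diagnosed, in (0, 1]
    Returns (estimated diagnosed cases, estimated community cases).
    """
    for name, value in (("reporting_completeness", reporting_completeness),
                        ("ascertainment", ascertainment)):
        if not 0 < value <= 1:
            raise ValueError(f"{name} must lie in (0, 1], got {value}")
    diagnosed = reported / reporting_completeness
    return diagnosed, diagnosed / ascertainment


# Hypothetical: 118 notified cases, 59 % reporting completeness, half of infections diagnosed
diagnosed, community = calibrate_counts(118, 0.59, 0.5)
print(f"diagnosed ≈ {diagnosed:.0f}, community ≈ {community:.0f}")
```

In practice both multipliers are uncertain (the pooled completeness in this review has a 95 % CI of 32 % to 84 %), so that uncertainty should be propagated, for example by sampling the multipliers, rather than treating them as fixed.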

Conclusions

Given that complete and timely reporting of notifiable diseases is important to prevent outbreaks and person-to-person transmission, efforts should be undertaken to improve and evaluate surveillance on an ongoing basis. Automating case reporting to public health and/or providing dedicated staffing infrastructure with clear staff reporting responsibilities can improve reporting completeness. To identify additional modifiable features of surveillance systems and provide specific direction on how to improve system sensitivity and utility, future studies should describe the context, operations, and components of their system when conducting an evaluation, as well as critically reflect on how these features contributed to low or high sensitivity. Lastly, priority should be given to improving the quality of risk factor data recorded in surveillance systems to enable estimation of reporting completeness across high risk groups; these data are essential to examine equity issues, inform allocation of public health resources including control measures, and evaluate the effectiveness of public health interventions including vaccine recommendations.

Abbreviations

HAV, hepatitis A virus; IgM, immunoglobulin M; PRISMA, Preferred Reporting Items for Systematic Reviews and Meta-Analyses; WHO, World Health Organization; US CDC, United States Centers for Disease Control and Prevention; PHAC, Public Health Agency of Canada; ECDC, European Centre for Disease Prevention and Control; ICD, International Classification of Diseases.

Acknowledgments

The authors thank Public Health Ontario library staff for their assistance in devising the study search strategy.

Funding

This project was supported by a doctoral award from the Canadian Institutes of Health Research (CIHR) (GSD-128757). CIHR was not involved in the design, collection, analysis, or interpretation of data.

Availability of data and materials

The dataset supporting the conclusions of this article is included within the article (Table 3).

Authors’ contributions

RDS conceived and designed the study, performed the search, led data collection, performed data analyses and drafted the manuscript. LCR conceived and supervised the study, contributed to the study’s design, as well as the analysis and interpretation of the data, and helped draft the manuscript. KAB performed data collection, contributed to the interpretation of the data, and helped draft the manuscript. KK contributed to the design and interpretation of the data, and helped draft the manuscript. NSC conceived and supervised the study, contributed to the design, as well as the analysis and interpretation of the data, and helped draft the manuscript. All authors read, provided feedback on and approved the final manuscript.

Competing interests

The authors declare that they have no competing interests.

Consent for publication

Not applicable.

Ethics approval and consent to participate

Not applicable.

Additional files

Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement checklist. (DOCX 29 kb)

Reference list of all articles reviewed in full-text. (DOCX 26 kb)

Contributor Information

Rachel D. Savage, Email: rachel.savage@mail.utoronto.ca

Laura C. Rosella, Email: laura.rosella@utoronto.ca

Kevin A. Brown, Email: kevin.brown@mail.utoronto.ca

Kamran Khan, Email: Khank@smh.ca

Natasha S. Crowcroft, Email: natasha.crowcroft@oahpp.ca

References

- 1. World Health Organization. WHO position paper on hepatitis A vaccines - June 2012. Wkly Epidemiol Rec. 2012;87:261–276.

- 2. Vrbova L, Johnson K, Whitfield Y, Middleton D. A descriptive study of reportable gastrointestinal illnesses in Ontario, Canada, from 2007 to 2009. BMC Public Health. 2012;12:970. doi: 10.1186/1471-2458-12-970.

- 3. Ly KN, Klevens RM. Trends in disease and complications of hepatitis A virus infection in the United States, 1999–2011: a new concern for adults. J Infect Dis. 2015. doi: 10.1093/infdis/jiu834.

- 4. Public Health Agency of Canada - National Advisory Committee on Immunization. Part 4 - Active Vaccines, Hepatitis A. In: Canadian Immunization Guide. http://www.phac-aspc.gc.ca/publicat/cig-gci/p04-hepa-eng.php. Accessed 18 Dec 2013.

- 5. Advisory Committee on Immunization Practices (ACIP), Fiore AE, Wasley A, Bell BP. Prevention of hepatitis A through active or passive immunization: recommendations of the Advisory Committee on Immunization Practices (ACIP). MMWR Recomm Rep. 2006;55(RR-7):1–23.

- 6. Sane J, MacDonald E, Vold L, Gossner C, Severi E, Outbreak Investigation Team. Multistate foodborne hepatitis A outbreak among European tourists returning from Egypt--need for reinforced vaccination recommendations, November 2012 to April 2013. Euro Surveill. 2015;20:21018. doi: 10.2807/1560-7917.ES2015.20.4.21018.

- 7. Swinkels HM, Kuo M, Embree G, Fraser Health Environmental Health Investigation Team, Andonov A, Henry B, et al. Hepatitis A outbreak in British Columbia, Canada: the roles of established surveillance, consumer loyalty cards and collaboration, February to May 2012. Euro Surveill. 2014;19:20792. doi: 10.2807/1560-7917.ES2014.19.18.20792.

- 8. Collier MG, Khudyakov YE, Selvage D, Adams-Cameron M, Epson E, Cronquist AJ, et al. Outbreak of hepatitis A in the USA associated with frozen pomegranate arils imported from Turkey: an epidemiological case study. Lancet Infect Dis. 2014;14:976–981. doi: 10.1016/S1473-3099(14)70883-7.

- 9. World Health Organization. Global policy report on the prevention and control of viral hepatitis. 2013. http://www.who.int/hepatitis/publications/global_report/en/. Accessed 21 Mar 2016.

- 10. Centers for Disease Control and Prevention. Case definitions for infectious conditions under public health surveillance. MMWR Recomm Rep. 1997;46(RR-10):1–55.

- 11. European Centre for Disease Prevention and Control. Methodology protocol for estimating the burden of communicable diseases. 2010. http://ecdc.europa.eu/en/publications/Publications/1106_TER_Burden_of_disease.pdf. Accessed 12 Jan 2016.

- 12. Doyle TJ, Glynn MK, Groseclose SL. Completeness of notifiable infectious disease reporting in the United States: an analytical literature review. Am J Epidemiol. 2002;155:866–874. doi: 10.1093/aje/155.9.866.

- 13. Hadler SC, Webster HM, Erben JJ, Swanson JE, Maynard JE. Hepatitis A in day-care centers. A community-wide assessment. N Engl J Med. 1980;302:1222–1227. doi: 10.1056/NEJM198005293022203.

- 14. Klevens RM, Liu S, Roberts H, Jiles RB, Holmberg SD. Estimating acute viral hepatitis infections from nationally reported cases. Am J Public Health. 2014;104:482–487. doi: 10.2105/AJPH.2013.301601.

- 15. Bonacic Marinovic A, Swaan C, van Steenbergen J, Kretzschmar M. Quantifying reporting timeliness to improve outbreak control. Emerg Infect Dis. 2015;21:209–216. doi: 10.3201/eid2102.130504.

- 16. Kwong JC, Ratnasingham S, Campitelli MA, Daneman N, Deeks SL, Manuel DG, et al. The impact of infection on population health: results of the Ontario burden of infectious diseases study. PLoS One. 2012;7:e44103. doi: 10.1371/journal.pone.0044103.

- 17. Plass D, Mangen MJ, Kraemer A, Pinheiro P, Gilsdorf A, Krause G, et al. The disease burden of hepatitis B, influenza, measles and salmonellosis in Germany: first results of the burden of communicable diseases in Europe study. Epidemiol Infect. 2014;142:2024–2035. doi: 10.1017/S0950268813003312.

- 18. Alter MJ, Mares A, Hadler SC, Maynard JE. The effect of underreporting on the apparent incidence and epidemiology of acute viral hepatitis. Am J Epidemiol. 1987;125:133–139. doi: 10.1093/oxfordjournals.aje.a114496.

- 19. Gibbons CL, Mangen MJ, Plass D, Havelaar AH, Brooke RJ, Kramarz P, et al. Measuring underreporting and under-ascertainment in infectious disease datasets: a comparison of methods. BMC Public Health. 2014;14:147. doi: 10.1186/1471-2458-14-147.

- 20. Keramarou M, Evans MR. Completeness of infectious disease notification in the United Kingdom: a systematic review. J Infect. 2012;64:555–564. doi: 10.1016/j.jinf.2012.03.005.

- 21. Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. BMJ. 2009;339:b2535. doi: 10.1136/bmj.b2535.

- 22. Jacobsen KH, Wiersma ST. Hepatitis A virus seroprevalence by age and world region, 1990 and 2005. Vaccine. 2010;28:6653–6657. doi: 10.1016/j.vaccine.2010.08.037.

- 23. Advisory Committee on Immunization Practices (ACIP). Prevention of hepatitis A through active or passive immunization: Recommendations of the Advisory Committee on Immunization Practices (ACIP). MMWR Recomm Rep. 1999;48(RR-12):1–37.

- 24. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159–174. doi: 10.2307/2529310.

- 25. Rosella LC, Bowman C, Pach B, Morgan S, Fitzpatrick T, Bornbaum C, et al. The development and validation of a meta-tool for quality appraisal of public health evidence: Meta Quality Appraisal Tool (MetaQAT). 2015.

- 26. Schulz KF, Altman DG, Moher D, CONSORT Group. CONSORT 2010 Statement: Updated guidelines for reporting parallel group randomised trials. J Clin Epidemiol. 2010;63:834–840. doi: 10.1016/j.jclinepi.2010.02.005.

- 27. Better Value Healthcare (Oxford). Critical Appraisal Skills Programme (CASP) - CASP Checklists. http://www.casp-uk.net/#!checklists/cb36. Accessed 21 Mar 2016.

- 28. Pillaye J, Clarke A. An evaluation of completeness of tuberculosis notification in the United Kingdom. BMC Public Health. 2003;3:31. doi: 10.1186/1471-2458-3-31.

- 29. German RR, Lee LM, Horan JM, Milstein RL, Pertowski CA, Waller MN, et al. Updated guidelines for evaluating public health surveillance systems: recommendations from the Guidelines Working Group. MMWR Recomm Rep. 2001;50(RR-13):1–35.

- 30. Barendregt JJ, Doi SA, Lee YY, Norman RE, Vos T. Meta-analysis of prevalence. J Epidemiol Community Health. 2013;67:974–978. doi: 10.1136/jech-2013-203104.

- 31. DerSimonian R, Laird N. Meta-analysis in clinical trials. Control Clin Trials. 1986;7:177–188. doi: 10.1016/0197-2456(86)90046-2.

- 32. Deeks JJ, Altman DG, Bradburn MJ. Statistical methods for examining heterogeneity and combining results from several studies in meta-analysis. In: Egger M, Smith GD, Altman DG, editors. Systematic reviews in health care: meta-analysis in context. 2nd ed. London: BMJ Books; 2008. pp. 285–312.

- 33. Higgins JP, Thompson SG, Deeks JJ, Altman DG. Measuring inconsistency in meta-analyses. BMJ. 2003;327:557–560. doi: 10.1136/bmj.327.7414.557.

- 34. Backer HD, Bissell SR, Vugia DJ. Disease reporting from an automated laboratory-based reporting system to a state health department via local county health departments. Public Health Rep. 2001;116:257–265. doi: 10.1016/S0033-3549(04)50041-9.

- 35. Boehmer TK, Patnaik JL, Burnite SJ, Ghosh TS, Gershman K, Vogt RL. Use of hospital discharge data to evaluate notifiable disease reporting to Colorado’s Electronic Disease Reporting System. Public Health Rep. 2011;126:100–106. doi: 10.1177/003335491112600114.

- 36. Centers for Disease Control and Prevention (CDC). Automated detection and reporting of notifiable diseases using electronic medical records versus passive surveillance--Massachusetts, June 2006-July 2007. MMWR Morb Mortal Wkly Rep. 2008;57:373–376.

- 37. Roels TH, Christl M, Kazmierczak JJ, MacKenzie WR, Davis JP. Hepatitis A infections in Wisconsin: trends in incidence and factors affecting surveillance, 1986–1995. WMJ. 1998;97:32–38.

- 38. Simmons G, Whittaker R, Boyle K, Morris AJ, Upton A, Calder L. Could laboratory-based notification improve the control of foodborne illness in New Zealand? N Z Med J. 2002;115:237–240.

- 39. Overhage JM, Grannis S, McDonald CJ. A comparison of the completeness and timeliness of automated electronic laboratory reporting and spontaneous reporting of notifiable conditions. Am J Public Health. 2008;98:344–350. doi: 10.2105/AJPH.2006.092700.

- 40. Matin N, Grant A, Granerod J, Crowcroft N. Hepatitis A surveillance in England--how many cases are not reported and does it really matter? Epidemiol Infect. 2006;134:1299–1302. doi: 10.1017/S0950268806006194.

- 41. Sickbert-Bennett EE, Weber DJ, Poole C, MacDonald PD, Maillard JM. Completeness of communicable disease reporting, North Carolina, USA, 1995–1997 and 2000–2006. Emerg Infect Dis. 2011;17:23–29. doi: 10.3201/eid1701.100660.

- 42. Centers for Disease Control and Prevention (CDC). Surveillance for Viral Hepatitis – United States, 2011. 2011. http://www.cdc.gov/hepatitis/statistics/2011surveillance/index.htm. Accessed 12 Jan 2016.

- 43. Abbott DD. Architect System - HAVAb-IgM, Product Monograph. 2004.
