Abstract

Human language involves combining items into meaningful, syntactically structured wholes. The evolutionary origin of syntactic abilities has been investigated by testing pattern perception capacities in nonhuman animals. New World primates can respond spontaneously to structural changes in acoustic sequences and songbirds can learn to discriminate between various patterns in operant tasks. However, there is no conclusive evidence that songbirds respond spontaneously to structural changes in patterns without reinforcement or training. In this study, we tested pattern perception capacities of common ravens, Corvus corax, in a habituation–discrimination playback experiment. To enhance stimulus salience, call recordings of male and female ravens were used as acoustic elements, combined to create artificial territorial displays as target patterns. We habituated captive territorial raven pairs to displays following a particular pattern and subsequently exposed them to several test and control playbacks. Subjects spent more time visually orienting towards the loudspeaker in the discrimination phase when they heard structurally novel call combinations, violating the pattern presented during habituation. This demonstrates that songbirds, much like primates, can be sensitive to structural changes in auditory patterns and respond to them spontaneously, without training.

Keywords: call combination, common raven, pattern perception, songbird, spontaneous response, territoriality

Highlights

- Pattern perception capacities were tested by simulating a territorial intrusion.
- Ravens are spontaneously sensitive to structural changes in an acoustic pattern.
- Male ravens displayed a stronger interest in pattern changes than females.
- Songbirds, much like primates, can perceive pattern structure without training.

The vocal communication systems of nonhuman animals possess a number of features similar to those of human language, representing either homologues or analogues of particular linguistic attributes (Fitch, 2000, Fitch, 2005, Hauser, 1996, Hurford, 2007). For example, primates and nonprimate mammals produce vocalizations that, like the elements of human speech, can inform a receiver about the production context (Arnold et al., 2011, Manser, 2001, Seyfarth et al., 1980) and about caller-specific features such as identity (Proops et al., 2009, Townsend et al., 2012) and/or body size (Fitch and Fritz, 2006, Reby and McComb, 2003). However, in human language the smallest elements possess little or no contextual denotation by themselves; it is combinations of elements that create meaning. Humans excel at forming and comprehending such combinatorial structures (Schulz, Schmalbach, Brugger, & Witt, 2012). To better understand the evolution of these capacities, numerous experiments on acoustic pattern perception by nonhuman animals have been conducted in the last decade (ten Cate & Okanoya, 2012). Many mammals naturally produce sequences containing different call types (Collier, Bickel, van Schaik, Manser, & Townsend, 2014), and certain primate species combine different calls depending on the current context (Arnold and Zuberbühler, 2006, Arnold and Zuberbühler, 2008, Candiotti et al., 2012, Ouattara et al., 2009). Sensitivity to structural changes in patterns of acoustic elements has also been studied in primates under laboratory conditions. In two habituation–discrimination studies, cottontop tamarins, Saguinus oedipus, and squirrel monkeys, Saimiri sciureus, showed spontaneous differential responses to novel arrangements of familiar acoustic elements (Fitch and Hauser, 2004, Ravignani et al., 2013). The stimuli consisted of sequences of human spoken syllables (tamarins) or pure tones (squirrel monkeys) drawn from two different categories. During the habituation phase, the monkeys were exposed to sequences of various lengths that followed a particular pattern. In the test phase, subjects looked more often at the sound source when they heard structurally novel arrangements of the same elements. Because the animals' responses in these experiments were not reinforced, they could only have been driven by the novelty of the test stimuli to untrained animals.

A successful operant conditioning study on European starlings, Sturnus vulgaris, further suggested that testing with conspecific vocalizations may increase the salience of stimuli and hence improve the animals' performance in artificial grammar learning experiments (Gentner, Fenn, Margoliash, & Nusbaum, 2006). For nonhuman primates, however, testing pattern perception with conspecific calls poses major problems. To construct a pattern from call recordings, the chosen calls would have to be acoustically diverse enough to be perceptually assigned to at least two distinct categories. At the same time, the calls would have to be of similar behavioural relevance and hence ideally linked to the same context. Nonhuman primate vocal behaviour is highly context specific, with very little acoustic variation within individual calls (Janik and Slater, 1997, Janik and Slater, 2000, Nowicki and Searcy, 2014). If more than one call type is produced in the same overall context, the call combination might convey qualitatively different information (Arnold & Zuberbühler, 2008) or address two different audiences (Fedurek, Slocombe, & Zuberbühler, 2015). In the case of primates, studies with natural vocalizations thus risk conflating reactions to individual items with pattern perception. However, many songbirds, being vocal learners, produce several discrete acoustic elements in the same context. For example, a population of wild common ravens, Corvus corax, in western Switzerland was shown to produce 79 distinct call types in a simulated territorial interaction, with individual males exhibiting a repertoire of 4–19 and females a repertoire of 5–18 long-distance calls (Enggist-Dueblin & Pfister, 2002). Hence, songbirds can readily be tested with artificially created patterns of their own vocalizations (Berwick, Okanoya, Beckers, & Bolhuis, 2011).

Several studies have shown that songbirds can learn to discriminate between patterns of different complexities in operant-conditioning paradigms, based not only on structural but also on prosodic and phonological cues (Gentner et al., 2006, van Heijningen et al., 2013, Seki et al., 2013). While songbirds thus clearly possess the cognitive capacity to learn about patterns, it has not yet been shown whether they are similarly sensitive to patterns without reinforcement. Would songbirds, like monkeys in previous studies, respond spontaneously to an unfamiliar arrangement of known elements based solely on the novelty of the pattern, without training? Habituation–discrimination experiments can reveal such spontaneous reactions (ten Cate & Okanoya, 2012). A previous study aimed to demonstrate this capacity in Bengalese finches, Lonchura striata domestica (Abe & Watanabe, 2011), but it used only a single conspecific song for the acoustic stimuli; the birds may therefore have discriminated between the playbacks based on superficial resemblance rather than on the pattern itself, a fatal confound (Beckers, Bolhuis, Okanoya, & Berwick, 2012). A recent study confirmed that songbirds are indeed very sensitive to how a sequence sounds, not necessarily to how its elements are arranged. In a go/no-go task, zebra finches, Taeniopygia guttata, were operantly conditioned to discriminate between two patterns of spoken syllables by prosodic cues, and were subsequently also able to identify the target pattern in the absence of these particular cues (Spierings & ten Cate, 2014). In that study, prosody appeared to be a more salient cue than the structure of the test sequences. Hence, the question of whether songbirds are sensitive to structural changes without reinforcement remains open.

In the present study we tested common ravens in a habituation–discrimination experiment using conspecific stimuli arranged into patterns used in a previous primate study (Fitch & Hauser, 2004). Common ravens are among the largest songbirds, have large brains and possess complex cognitive capacities which are in many respects comparable to those of primates (Heinrich, 2011). They are highly responsive to playbacks of conspecific vocalizations (Boeckle, Szipl, & Bugnyar, 2012), and discriminate between the calls of familiar and unfamiliar individuals (Szipl, Boeckle, Wascher, Spreafico, & Bugnyar, 2015). Ravens can acoustically identify former affiliation partners (Boeckle & Bugnyar, 2012), and are capable of third-party recognition of in- and out-group individuals based on playbacks of vocal interactions (Massen, Pasukonis, Schmidt, & Bugnyar, 2014).

To increase the behavioural relevance and cognitive salience of our stimuli, we used raven long-distance calls as the elements of the tested patterns. Territorial breeding pairs typically alternate long-distance calls to produce pair-specific vocal displays, possibly to strengthen and advertise the pair bond as well as their joint claim on the territory (Enggist-Dueblin & Pfister, 2002). Long-distance calls are acquired during ontogeny and facilitate individual discrimination (Boeckle & Bugnyar, 2012). Because male ravens are larger than females, they have longer vocal tracts and produce long-distance calls with lower resonance frequencies. Hence a call sequence produced by two ravens of different sizes is acoustically recognizable as the joint display of a territorial pair (Boeckle, 2012). Following a previous primate study (Fitch & Hauser, 2004), which built its patterns from syllables of two categories, one spoken by a man and the other by a woman, we used the sex of the callers to define our categories: one contained calls of male ravens and the other calls of female ravens. We hypothesized that territorial raven pairs would be highly attentive to sequences of long-distance calls of a male and a female raven, especially because such a stimulus could be interpreted as the territorial vocal display of an unfamiliar but coordinated breeding pair intruding upon the subjects' territory.

We tested whether raven subjects would respond differentially to changes in the pattern of long-distance calls. For accurate comparison with the previous primate study (Fitch & Hauser, 2004), we used the same pattern for the habituation phase and, in the first test of the discrimination phase, employed the same types of violations. This first test also included control sequences to investigate whether the subjects could generalize the tested pattern. We further implemented a second test to see whether ravens could internalize the syntactic rule used to generate the habituation stimuli. Given the behavioural relevance of the set-up and the cognitive capacities of common ravens, we hypothesized that subjects would discriminate between stimuli in the first test. As there is currently no conclusive evidence that nonhuman species perceive more complex patterns (ten Cate & Okanoya, 2012), we did not necessarily expect ravens to discriminate in the second test, particularly given the lack of reinforcement in our experiment.

Methods

Subjects

The project was conducted with 24 adult common ravens housed in pairs (thus 12 males and 12 females) in outdoor aviaries at a variety of zoological gardens and research stations, and with private keepers, in Austria, Germany and Sweden. All pairs had attempted to breed or had bred successfully, and were reported to engage frequently in joint displays, suggesting that they considered their home aviary their territory. Birds at all sites were already habituated to unfamiliar humans standing in front of their aviary, taking pictures of and video-recording them (e.g. visitors at zoos, or former experimenters and documentary film teams at research stations).

Stimuli

We used the pattern from the previous primate study (Fitch & Hauser, 2004): a very simple finite-state grammar following the rule (AB)ⁿ. A and B are categories, each containing several elements (a1, a2, a3, etc.), and n is the number of repetitions of the AB pair (e.g. n = 2 gives (AB)² = abab). Elements are never repeated within a stimulus. Calls of seven adult ravens (four males, three females) were used as the acoustic elements. These vocalizations (collected by S.A.R. and M.B.) were long-distance calls, which are commonly produced in the context of territorial defence (Pfister-Gfeller, 1995). From each raven, eight high-quality recordings of this idiosyncratic call (highly stereotyped and typical for an individual) were selected. The calls of all seven providers were of comparable length (mean duration ± SE = 0.319 ± 0.026 s). All recordings were amplitude-equalized to the same root-mean-square (RMS) amplitude using Adobe Audition (version 4.0x1815, Adobe Systems Inc., San Jose, CA, U.S.A.). The playback tracks for habituation and for the two tests of each session were all artificial vocal displays composed of calls from the same male and female raven, both unfamiliar to the subjects. The calls of the male served as category A elements and those of the female as category B elements (e.g. for abab: male–female–male–female). The primate study used eight recordings of single syllables spoken by one man and eight spoken by one woman as the elements (16 recordings in total) for all stimulus patterns. The present study kept this element variance (16 recordings: eight male + eight female calls) within individual experimental sessions while increasing the variation between sessions by assembling six different provider pairs from the seven providers.

Within a display, consecutive calls were separated by 0.3 s of silence. This intercall interval is typical of natural raven calling behaviour and resulted in display durations of approximately 2.2–4.7 s (depending on n). For all playbacks, the delay between two display onsets was 30 s. This interstimulus interval is far longer than in the previous primate study (Fitch & Hauser, 2004), but nonetheless represents a rather high repetition rate for ravens (Boeckle & Bugnyar, 2012). All display sequences were randomly assembled using custom Python scripts (programmed by Jinook Oh, Department of Cognitive Biology, University of Vienna). The software constructed each sequence for a specific pattern by randomly choosing call recordings from two predefined provider categories. Each individual call recording could occur only once in a given display sequence, but was reused in the random assembly of subsequent sequences. Additional custom Python scripts were used to combine the display sequences randomly into playback tracks.

The habituation playback was a 120 min track of display sequences with two or three element pairs (n = 2 or 3), and thus four to six elements. The two tests in the discrimination phase each involved two playbacks, each consisting of three display sequences with 10 s of silence preceding the first call (100 s in total length each; see Fig. 1 for an example). Test 1 represented the direct comparison with the primate study and was hence always conducted before Test 2, which aimed to reveal the extent of ravens' pattern recognition abilities. Both tests included a violation of the habituation phase's grammar and a novel grammatical control playback. In Test 1, the violation tracks consisted of displays following a different pattern; in Test 2, displays followed the original pattern but the last element pair lacked its B element (n = 2 or 3 in both tests). The control stimuli in both tests consisted of displays consistent with the habituation pattern, but included one or two grammatically correct elongations (n = 4).

The relatively low number of pattern elements (16 call recordings per stimulus set) and their frequent use in the habituation playback resulted in high familiarity of the subjects with the individual call recordings. Any responses in the discrimination phase could hence not be attributed to the novelty of a call recording. At the same time, the automated random assembly of patterns prevented the subjects from becoming familiar with specific display sequences (e.g. for a display sequence with n = 3, there were 8 × 8 × 7 × 7 × 6 × 6 = 112 896 options). Each pair of subjects received an individually randomized habituation playback (consisting of novel display sequences) and a novel stimulus set for the discrimination phase.
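The stimulus-assembly logic described above can be illustrated with a short Python sketch. It is not the authors' script (those were custom programs by Jinook Oh); the function names and recording IDs are hypothetical, and every call is assumed to last exactly the mean 0.319 s. The sketch draws male (A) and female (B) recordings without replacement within a display, optionally drops the final B element as in the Test 2 violation, and checks the figures given in the text: 112 896 possible n = 3 displays, display durations of about 2.2–4.7 s, and display onsets at 10, 40 and 70 s, which together with the 30 s window after the last onset give the 100 s test playback.

```python
import math
import random

# Timing values from the Stimuli description; treating every call as exactly
# 0.319 s long is a simplifying assumption (the real calls vary in length).
CALL_DURATION_S = 0.319
INTERCALL_GAP_S = 0.3
LEAD_SILENCE_S = 10.0
ONSET_INTERVAL_S = 30.0


def assemble_display(male_calls, female_calls, n, rng, drop_last_b=False):
    """Build one (AB)^n display: A = male recording, B = female recording.

    Recordings are drawn without replacement within a display, so no
    recording occurs twice. With drop_last_b=True the final B element is
    omitted, mimicking the Test 2 violation.
    """
    a_items = rng.sample(male_calls, n)
    b_items = rng.sample(female_calls, n)
    display = [call for pair in zip(a_items, b_items) for call in pair]
    return display[:-1] if drop_last_b else display


def display_duration_s(num_calls):
    """Duration of the calls plus the silent gaps between them."""
    return num_calls * CALL_DURATION_S + (num_calls - 1) * INTERCALL_GAP_S


def count_possible_displays(recordings_per_category, n):
    """Ordered draws without replacement per category (8*7*6 for n = 3), squared for A and B."""
    return math.perm(recordings_per_category, n) ** 2


def display_onsets_s(num_displays=3):
    """Onsets in a test playback: 10 s lead-in, then one display every 30 s."""
    return [LEAD_SILENCE_S + i * ONSET_INTERVAL_S for i in range(num_displays)]


if __name__ == "__main__":
    males = [f"m{i}" for i in range(1, 9)]    # hypothetical IDs for 8 male recordings
    females = [f"f{i}" for i in range(1, 9)]  # hypothetical IDs for 8 female recordings
    rng = random.Random(42)
    print(assemble_display(males, females, 3, rng))
    print(count_possible_displays(8, 3))  # 112896
    print(round(display_duration_s(4), 1), round(display_duration_s(8), 1))  # 2.2 4.7
    print(display_onsets_s())  # [10.0, 40.0, 70.0]
```

Seeding a `random.Random` instance per session keeps each pair's randomized track reproducible without affecting the global random state.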

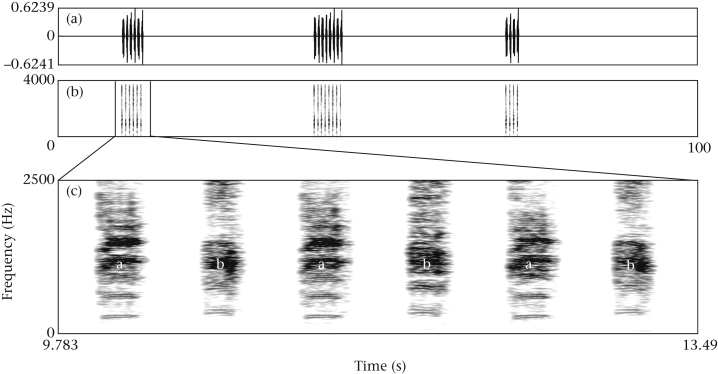

Figure 1.

Example of a control playback track with an (AB)ⁿ pattern, shown as (a) waveform and (b) spectrogram, with (c) the first artificial display enlarged. After 10 s of silence, the first display starts with n = 3; individual calls are separated by 0.3 s, display onsets by 30 s, and a single display never contains the same recording twice (spectrogram settings in Praat: window length [s]: 0.09; dynamic range [rel dB]: 50.0).

Playback Set-up

All experiments used a habituation–discrimination paradigm and were conducted by two experimenters working together (S.A.R. and G.S. or S.A.R. and J.J.). Before testing, one experimenter approached the aviary from a blind angle, out of the subjects' view, and placed a battery-powered loudspeaker (‘LD Systems Roadboy 65’ or ‘Ion Audio IPA16 Block Rocker AM/FM Portable PA System’; minimal frequency response: 80–15 000 Hz) approximately 10 m from the outer fence, concealing it with vegetation and/or camouflage nets. The loudspeaker received the sound from an iPod touch (www.apple.com, 4th generation, MC540LL/A) via a radio transmitter–receiver system (Sennheiser EW 112-p G3-A Band, 516–558 MHz). This set-up allowed one experimenter to play back the stimuli without any visible connection to the sound source. Each experimenter videotaped the behaviour of one raven throughout the experiment (Canon HD camcorders, Legria series).

Before the experiment, 10 min of behavioural baseline data were recorded. To obtain an individual-specific behavioural baseline level, each experimenter noted the rate of the most conspicuous behaviour displayed by the subject, ranked as loud calls > flying > walking > object/food manipulation > preening (e.g. calls/min for a highly vocal individual; time spent walking/min for an individual highly mobile on the ground). The habituation phase then began, during which the target pattern track was played for at least 30 min. An online, pair-specific habituation criterion was used: the strongest observable responses of the subjects during the 30 s period between two stimulus onsets were counted for the first three stimuli, and the period with the greatest number of responses was determined. The ravens were considered habituated when their response rate in at least three consecutive periods between stimulus onsets had dropped by 50% compared to this initial response (Boeckle & Bugnyar, 2012). Once at least 30 min had elapsed and the habituation criterion had been fulfilled, playbacks were stopped (on average after 39 min 16 s; minimum 30 min 15 s, maximum 60 min 13 s), and the ravens were then allowed to return to their initial behavioural baseline level. After both experimenters confirmed that their subject had reached the previously established individual baseline (rate of the most conspicuous behaviour at the initial rate or lower), the discrimination phase began (on average after 8 min 21 s; minimum 5 min 21 s, maximum 26 min 30 s; see Fig. 2). Before each playback in Test 1 and Test 2, the birds' activity was again allowed to return to baseline level (on average 8 min 23 s; minimum 3 min 17 s, maximum 21 min 13 s). After the final playback, the ravens were videotaped for another 10 min. The order of stimulus playbacks within each test was randomized automatically between experiments and was unknown to the experimenters during playback. Playback amplitude, measured at the aviary's outer fence, averaged 55 dB (SPL, measured with a Voltcraft SL-100 Digital Sound Level Meter, 5 Hz–8 kHz).
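The online habituation criterion can be expressed as a small function. This is one possible reading of the rule, with hypothetical names: the reference is the largest response count among the first three inter-onset periods, and "dropped by 50%" is taken to mean a count at or below half of that reference in at least three consecutive later periods. The 30 min minimum playback duration (at least 60 periods at 30 s onset intervals) is not enforced here and would be checked separately.

```python
def habituation_index(responses, reference_periods=3, run_length=3, drop=0.5):
    """Return the index of the period that completes the criterion, or None.

    responses: response counts per 30 s period between stimulus onsets, in order.
    The reference is the maximum over the first `reference_periods` periods;
    habituation is reached after `run_length` consecutive later periods at or
    below (1 - drop) * reference. (Assumed interpretation of the rule.)
    """
    if len(responses) < reference_periods:
        return None
    threshold = (1 - drop) * max(responses[:reference_periods])
    run = 0
    for i in range(reference_periods, len(responses)):
        # extend the run of low-response periods, or reset it
        run = run + 1 if responses[i] <= threshold else 0
        if run >= run_length:
            return i
    return None


print(habituation_index([4, 6, 5, 4, 3, 2, 3, 1]))  # 6
```

In this example the reference is 6 responses, so the threshold is 3; the periods at indices 4, 5 and 6 all fall at or below it, and the criterion is met at index 6.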

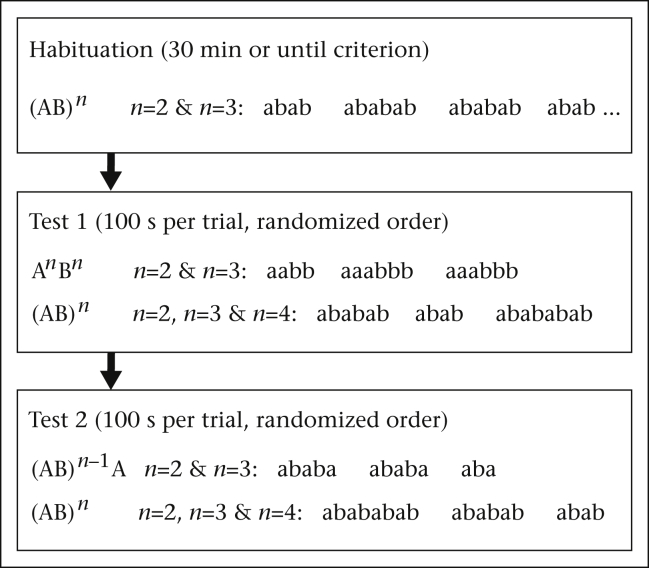

Figure 2.

Sequence of the habituation–discrimination experiment. The discrimination phase contained two separate test sessions: both tests included two playbacks with different patterns; for each pattern the possible number of element pairs (n) and an example of a playback's composition are depicted. Each control playback in either test contained at least one artificial display with n = 4.

Video Coding

Digital videos from the cameras were transferred to a MacBook Pro and converted to codable ‘.mp4’ files using HandBrake (version 0.9.9 x86_64) on Mac. Each trial was extracted with iMovie and coded using Solomon coder (http://solomoncoder.com) on a PC. Recorded behaviours included locomotion (durations of walking and flying; frequency of perch location changes, e.g. hopping from one branch to another), rate of horizontal and vertical head turns in any direction, and the duration of visual orientation (‘looking’) towards the loudspeaker. Corvids in general, and members of the genus Corvus in particular, have a wide binocular overlap in their vision (Fernandez-Juricic, O'Rourke, & Pitlik, 2010); common ravens have a measured maximum binocular overlap of 43.2° (±2.4) (Troscianko, von Bayern, Chappell, Rutz, & Martin, 2012), which is higher than in most raptors (e.g. 20° in the short-toed eagle, Circaetus gallicus) and almost as high as in owls (Martin & Katzir, 1999). Hence, ravens were considered to be visually oriented towards the loudspeaker (positioned at least 10 m away) when facing it directly, with the beak tip pointing towards it. In addition to the duration of visual orientation towards the loudspeaker, every head turn was coded as a measure of agitation. ‘Head turns’ are particularly challenging to code in birds, because ravens often move their beak in several directions while fixating the same point with both eyes. A head turn was coded when the beak tip crossed an imaginary line running parallel to the initial position of the ‘sagittal suture’ at the lateral edge of the raven's head (see Fig. S1 in the Supplementary material). Trials were 90 s long (three stimulus onsets 30 s apart, plus the 30 s after the last onset), and the 90 s before the first playback of each trial were also coded.

Because of opaque structures in the aviaries, subjects could temporarily disappear from sight. For an accurate comparison between baseline and trial (90 s each), and subsequently between trials, this missing time had to be accounted for. As a first measure, additional video material from the breaks following or preceding the coded periods was coded to make up for the missing time. For example, if the raven was visible for only 85.4 s before the stimulus played, the 4.6 s of preceding video material with the subject in view (from 94.6 s to 90 s before the onset) were additionally coded. This time compensation was employed in 55% of the 192 cases (96 trials, each with a period before and after stimulus onset; mean compensation = 8.8 s, median = 1.4 s; mean difference between the subsequent and antecedent periods per trial = 2.2 s). However, compensation was limited to at most 60 s of footage after the 90 s trial period and before the 90 s preceding the first playback of a trial. Where this buffer still could not make up for the missing time (ca. 5% of all videos), the values of the coded behaviours were multiplied by a correction factor (90 s / number of seconds with the bird in sight). Coding was performed blind to condition (by S.A.R.). To evaluate inter-rater reliability, 25% of all videos were recoded by two other authors (12.5% by G.S. and 12.5% by J.J.). Intraclass correlation coefficients (ICC; Hallgren, 2012) were calculated for all behaviours used in the subsequent statistical analysis and showed excellent agreement (Cicchetti, 1994) between the coders (ICC between 0.767 and 0.945, all F > 9.1, all P < 0.001).
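The handling of out-of-view time can be sketched as follows. The sketch assumes (this is not stated in the text) that compensation footage is added only up to the missing amount and that the correction factor is applied only when the 60 s buffer cannot fill the 90 s window; the function names are hypothetical.

```python
WINDOW_S = 90.0      # length of each coded baseline or trial window
MAX_BUFFER_S = 60.0  # maximum adjacent footage usable for compensation


def compensate_missing_time(visible_s, visible_in_buffer_s,
                            window_s=WINDOW_S, max_buffer_s=MAX_BUFFER_S):
    """Return (seconds of adjacent footage added, correction factor).

    visible_s: seconds the bird was in view within the 90 s window.
    visible_in_buffer_s: seconds it was in view within the adjacent buffer footage.
    """
    missing = max(0.0, window_s - visible_s)
    # never add more than is missing, more than was visible, or more than the buffer allows
    added = min(missing, visible_in_buffer_s, max_buffer_s)
    total = visible_s + added
    if total <= 0:
        raise ValueError("bird never in view; window cannot be corrected")
    # scale up only if the window is still incomplete after compensation
    factor = window_s / total if total < window_s else 1.0
    return added, factor


def corrected_value(raw_value, factor):
    """Scale a coded duration or count to a full 90 s window."""
    return raw_value * factor
```

With the worked example from the text, `compensate_missing_time(85.4, 20.0)` adds 4.6 s and leaves the factor at 1.0; a bird visible for 70 s with only 10 s of usable buffer footage receives a factor of 90/80 = 1.125.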

Statistical Analysis

The amount of time spent visually oriented towards the loudspeaker was used as the main response variable. The duration of this behaviour was chosen over the frequency of individual looks because the ravens were exposed to only one stimulus source (Crockford, Wittig, Seyfarth, & Cheney, 2007). In addition, a variable representing the bird's overall agitation (hereafter the ‘agitation component’) was created by conducting a principal component analysis (PCA) on the behaviours ‘walking’, ‘flying’ (both durations), ‘perch change’ and ‘head turn’ (both frequencies). The first component explained 42% of the overall variance (eigenvalue = 1.67, rotation = varimax). Following Kaiser's criterion (eigenvalue > 1), only this single component was extracted in the PCA (see Table 1 for factor loadings), which was preceded by a factor analysis and Bartlett's test of sphericity on the correlation matrix (χ²(6) = 43.7, P < 0.001).

Table 1.

Component matrix of the principal component analysis for the agitation component using the raw data and the difference scores

|  | PC1 raw data | PC1 difference scores |
|---|---|---|
| Perch changes | 0.87 | 0.8 |
| Flying | 0.38 | 0.33 |
| Walking | 0.86 | 0.76 |
| Head turns | 0.19 | 0.4 |
| Eigenvalue | 1.67 | 1.49 |
| % of variance explained | 42 | 37 |

Standardized loadings (pattern matrix) based upon correlation matrix.
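The figures in Table 1 can be cross-checked with a few lines of Python. For a principal component analysis of a correlation matrix, a component's eigenvalue equals the sum of its squared loadings, and the proportion of variance explained is that eigenvalue divided by the number of standardized variables (here four). Bartlett's test of sphericity has p(p − 1)/2 degrees of freedom, which for p = 4 matches the 6 degrees of freedom of the reported χ² statistic. Because the sketch uses only the published, rounded loadings, it reproduces the eigenvalues to about two decimals (1.68 versus 1.67 for the raw data) rather than exactly; the Bartlett function is included for completeness and is not run on the study's data, which are not available here.

```python
import math

# PC1 loadings from Table 1 (rounded to two decimals in the table).
LOADINGS = {
    "raw": {"perch changes": 0.87, "flying": 0.38, "walking": 0.86, "head turns": 0.19},
    "difference": {"perch changes": 0.80, "flying": 0.33, "walking": 0.76, "head turns": 0.40},
}


def eigenvalue_from_loadings(loadings):
    """For a PCA of a correlation matrix, sum of squared loadings = eigenvalue."""
    return sum(value ** 2 for value in loadings.values())


def percent_variance(eigenvalue, n_variables):
    """Each standardized variable has variance 1, so total variance = n_variables."""
    return 100.0 * eigenvalue / n_variables


def bartlett_sphericity(det_r, n_obs, p):
    """Bartlett's test statistic and df for H0: the p x p correlation matrix is the identity."""
    statistic = -(n_obs - 1 - (2 * p + 5) / 6) * math.log(det_r)
    df = p * (p - 1) // 2
    return statistic, df


for name, loadings in LOADINGS.items():
    ev = eigenvalue_from_loadings(loadings)
    # Kaiser's criterion: retain a component only if its eigenvalue exceeds 1
    print(name, round(ev, 2), round(percent_variance(ev, len(loadings))), ev > 1)
```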

For both variables, ‘oriented towards loudspeaker’ and ‘agitation component’, the analysis was conducted with the raw values observed during the 90 s playback period. In addition, difference scores were calculated for a more nuanced investigation of the influence of the playbacks on the subjects' behaviour that takes individual differences into account (Massen et al., 2014): the values for each variable recorded in the 90 s baseline preceding a trial were subtracted from those observed during the 90 s after the first stimulus onset (playback − baseline). Unlike raw data, difference scores can account for a subject's changing motivational state, which may be affected to different extents depending on the keeping facility (e.g. relatively standardized conditions in research institutions versus a variety of disturbances in zoos, such as visitors, keepers and wild birds). ‘Oriented towards loudspeaker’ and the ‘agitation component’ were each used as the response variable in separate generalized linear mixed models (GLMMs). The models were run once with the raw data and once with the difference scores. As the difference scores and PCA components contained negative values, the data were shifted to be non-negative by adding the absolute value of the most negative data point and were then square-root transformed. All GLMMs used a Gaussian distribution (with a log link function) and contained subject identity nested within pair identity as a random effect. The main effects were ‘condition’ (violation and control), playback window ‘order’ (1–4) and sex (female and male), together with the two-way interactions between ‘condition’ and the other two main effects. Only biologically meaningful models (13 models per response variable) were considered and ranked by their corrected Akaike information criterion (AICc) values. The difference in AICc between models (ΔAICc) was calculated by subtracting the lowest value from all the others (Burnham, Anderson, & Huyvaert, 2010). From ΔAICc, the relative likelihood (exp(−0.5 × ΔAICc)) and the Akaike weight, or probability (relative likelihood / sum of all relative likelihoods), were calculated (Burnham et al., 2010). These measures served to evaluate the different models and to identify those with the strongest support. All computed models and their AICc-based measures are listed in Table 2. In addition to the models with the strongest support, models with ΔAICc ≤ 2 were also considered (Richards, 2005, Symonds and Moussalli, 2011); details of these models are shown in Table 3. For post hoc analyses, the values of the two members of each raven pair were summed to avoid pseudoreplication, reducing the sample size from 24 to 12. Friedman tests were used to detect differences between experimental units. Pairwise comparisons were conducted using exact Wilcoxon signed-rank tests (Mundry & Fischer, 1998), and Cohen's d effect sizes (Cohen, 1988) were calculated. Analyses were again performed using both the raw data and the difference scores. Statistical analysis was carried out in R (version 3.0.2, GUI 1.62 for Mac; R packages: corpcor, GPArotation, psych, glmmADMB version 0.7.7, coin; The R Foundation for Statistical Computing, Vienna, Austria, http://www.r-project.org).
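The data transformation and the AICc-based quantities described above can be sketched in Python. The study itself used R, so this is an illustrative re-expression rather than the authors' code, and the function names are hypothetical. Applied to the ‘Orientation raw’ AICc values from Table 2, the relative likelihood exp(−0.5 × ΔAICc) and the normalized Akaike weights reproduce the tabulated values, and the models with ΔAICc ≤ 2 are the five listed for that response variable in Table 3. Because the AICc values are rounded, a recomputed ΔAICc can differ from Table 2 in the third decimal (e.g. 2.788 versus 2.789).

```python
import math


def difference_scores(playback, baseline):
    """Playback minus baseline for each trial (both 90 s windows)."""
    return [p - b for p, b in zip(playback, baseline)]


def shift_sqrt(values):
    """Add |most negative value| so the minimum becomes 0, then take square roots."""
    shift = -min(values) if min(values) < 0 else 0.0
    return [math.sqrt(v + shift) for v in values]


def akaike_table(aicc):
    """Return dicts of ΔAICc, relative likelihood exp(-0.5 * ΔAICc) and Akaike weight."""
    best = min(aicc.values())
    delta = {model: value - best for model, value in aicc.items()}
    rel = {model: math.exp(-0.5 * d) for model, d in delta.items()}
    total = sum(rel.values())
    weights = {model: r / total for model, r in rel.items()}
    return delta, rel, weights


# AICc values for 'Orientation raw', copied from Table 2.
ORIENTATION_RAW_AICC = {
    "Condition*Order+Condition*Sex": 361.893,
    "Sex+Condition*Order": 360.255,
    "Order+Condition*Sex": 360.355,
    "Condition+Order+Sex": 358.773,
    "Condition*Order": 359.873,
    "Condition*Sex": 359.173,
    "Condition+Order": 358.544,
    "Condition+Sex": 357.644,
    "Order+Sex": 363.144,
    "Order": 362.767,
    "Sex": 362.167,
    "Condition": 357.467,
    "Intercept only": 361.840,
}

delta, rel, weights = akaike_table(ORIENTATION_RAW_AICC)
supported = sorted(model for model, d in delta.items() if d <= 2)
print(round(weights["Condition"], 3))  # 0.214
print(supported)
```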

Table 2.

Model selection from the 13 generalized linear mixed models computed for the four response variables

| Response variable | Random factor | Model | AICc | ΔAICc | Relative likelihood | Akaike weight |
|---|---|---|---|---|---|---|
| Orientation raw | (1 \| pair/individual) | Condition\*Order+Condition\*Sex | 361.893 | 4.426 | 0.109 | 0.023 |
|  |  | Sex+Condition\*Order | 360.255 | 2.789 | 0.248 | 0.053 |
|  |  | Order+Condition\*Sex | 360.355 | 2.889 | 0.236 | 0.050 |
|  |  | Condition+Order+Sex | 358.773 | 1.306 | 0.520 | 0.111 |
|  |  | Condition\*Order | 359.873 | 2.406 | 0.300 | 0.064 |
|  |  | Condition\*Sex | 359.173 | 1.706 | 0.426 | 0.091 |
|  |  | Condition+Order | 358.544 | 1.077 | 0.584 | 0.125 |
|  |  | Condition+Sex | 357.644 | 0.177 | 0.915 | 0.196 |
|  |  | Order+Sex | 363.144 | 5.677 | 0.059 | 0.013 |
|  |  | Order | 362.767 | 5.300 | 0.071 | 0.015 |
|  |  | Sex | 362.167 | 4.700 | 0.095 | 0.020 |
|  |  | Condition | 357.467 | 0.000 | 1.000 | 0.214 |
|  |  | Intercept only | 361.840 | 4.373 | 0.112 | 0.024 |
| Orientation difference scores | (1 \| pair/individual) | Condition\*Order+Condition\*Sex | 276.093 | 4.026 | 0.134 | 0.038 |
|  |  | Sex+Condition\*Order | 273.655 | 1.589 | 0.452 | 0.128 |
|  |  | Order+Condition\*Sex | 276.855 | 4.789 | 0.091 | 0.026 |
|  |  | Condition+Order+Sex | 274.473 | 2.406 | 0.300 | 0.085 |
|  |  | Condition\*Order | 274.573 | 2.506 | 0.286 | 0.081 |
|  |  | Condition\*Sex | 275.573 | 3.506 | 0.173 | 0.049 |
|  |  | Condition+Order | 273.673 | 1.606 | 0.448 | 0.127 |
|  |  | Condition+Sex | 273.244 | 1.177 | 0.555 | 0.158 |
|  |  | Order+Sex | 281.244 | 9.177 | 0.010 | 0.003 |
|  |  | Order | 279.967 | 7.900 | 0.019 | 0.005 |
|  |  | Sex | 280.167 | 8.100 | 0.017 | 0.005 |
|  |  | Condition | 272.067 | 0.000 | 1.000 | 0.284 |
|  |  | Intercept only | 278.940 | 6.873 | 0.032 | 0.009 |
| Agitation raw | (1 \| pair/individual) | Condition\*Order+Condition\*Sex | 109.193 | 9.253 | 0.010 | 0.004 |
|  |  | Sex+Condition\*Order | 107.855 | 7.916 | 0.019 | 0.007 |
|  |  | Order+Condition\*Sex | 107.155 | 7.216 | 0.027 | 0.010 |
|  |  | Condition+Order+Sex | 105.873 | 5.933 | 0.051 | 0.018 |
|  |  | Condition\*Order | 105.773 | 5.833 | 0.054 | 0.019 |
|  |  | Condition\*Sex | 105.273 | 5.333 | 0.069 | 0.025 |
|  |  | Condition+Order | 103.744 | 3.804 | 0.149 | 0.053 |
|  |  | Condition+Sex | 104.044 | 4.104 | 0.128 | 0.046 |
|  |  | Order+Sex | 103.644 | 3.704 | 0.157 | 0.056 |
|  |  | Order | 101.667 | 1.727 | 0.422 | 0.151 |
|  |  | Sex | 101.967 | 2.027 | 0.363 | 0.130 |
|  |  | Condition | 102.067 | 2.127 | 0.345 | 0.123 |
|  |  | Intercept only | 99.940 | 0.000 | 1.000 | 0.358 |
| Agitation difference scores | (1 \| pair/individual) | Condition\*Order+Condition\*Sex | 65.393 | 9.353 | 0.009 | 0.003 |
|  |  | Sex+Condition\*Order | 63.255 | 7.216 | 0.027 | 0.008 |
|  |  | Order+Condition\*Sex | 63.055 | 7.016 | 0.030 | 0.009 |
|  |  | Condition+Order+Sex | 60.973 | 4.933 | 0.085 | 0.027 |
|  |  | Condition\*Order | 60.873 | 4.833 | 0.089 | 0.028 |
|  |  | Condition\*Sex | 61.873 | 5.833 | 0.054 | 0.017 |
|  |  | Condition+Order | 58.644 | 2.604 | 0.272 | 0.085 |
|  |  | Condition+Sex | 59.944 | 3.904 | 0.142 | 0.044 |
|  |  | Order+Sex | 59.544 | 3.504 | 0.173 | 0.054 |
|  |  | Order | 57.267 | 1.227 | 0.541 | 0.169 |
|  |  | Sex | 58.267 | 2.227 | 0.328 | 0.103 |
|  |  | Condition | 57.667 | 1.627 | 0.443 | 0.139 |
|  |  | Intercept only | 56.040 | 0.000 | 1.000 | 0.313 |

Bold type indicates the models with the strongest support based on relative likelihood, Akaike weights and ΔAICc (≤ 2.0).
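The relative likelihoods and Akaike weights in Table 2 are consistent with the standard information-theoretic definitions: for each model i, Δᵢ = AICcᵢ − min AICc, the relative likelihood is exp(−Δᵢ/2), and the Akaike weight is that relative likelihood divided by its sum over the whole candidate set. The minimal Python sketch below recomputes these quantities for the raw agitation scores (13 candidate models). It is an illustrative check, not the authors' analysis code; the function and variable names are hypothetical, and differences in the third decimal place come from the rounded AICc values.

```python
import math

# AICc values for the 13 candidate GLMMs fitted to the raw agitation scores,
# copied from Table 2 (keys are the model formulas).
AICC_AGITATION_RAW = {
    "Condition*Order+Condition*Sex": 109.193,
    "Sex+Condition*Order": 107.855,
    "Order+Condition*Sex": 107.155,
    "Condition+Order+Sex": 105.873,
    "Condition*Order": 105.773,
    "Condition*Sex": 105.273,
    "Condition+Order": 103.744,
    "Condition+Sex": 104.044,
    "Order+Sex": 103.644,
    "Order": 101.667,
    "Sex": 101.967,
    "Condition": 102.067,
    "Intercept only": 99.940,
}


def akaike_table(aicc):
    """Map each model to (delta AICc, relative likelihood, Akaike weight)."""
    best = min(aicc.values())
    delta = {m: a - best for m, a in aicc.items()}
    rel_lik = {m: math.exp(-d / 2) for m, d in delta.items()}
    total = sum(rel_lik.values())  # weights are normalised over the full set
    return {m: (delta[m], rel_lik[m], rel_lik[m] / total) for m in aicc}


table = akaike_table(AICC_AGITATION_RAW)
for model, (d, rl, w) in sorted(table.items(), key=lambda item: item[1][0]):
    print(f"{model:30s} dAICc = {d:6.3f}  RL = {rl:.3f}  w = {w:.3f}")

# Models within 2 AICc units of the best model (the criterion in the table note)
supported = [m for m, (d, _, _) in table.items() if d <= 2.0]
print(supported)
```

Because the weights are normalised over the candidate set, they can only be reproduced when every model in that set is included; dropping a model would inflate all remaining weights.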

Table 3.

Values of the GLMMs with the highest power to explain the variation in the model selection procedure; for each response variable the models are listed by decreasing relative likelihood

| Response variable | Model | Coefficients | Estimate | SE | CI (2.5%) | CI (97.5%) | z | P |
|---|---|---|---|---|---|---|---|---|
| Orientation raw | Condition | Intercept | 0.862 | 0.120 | 0.635 | 1.089 | 7.210 | <0.001 |
| | | Condition | 0.226 | 0.087 | 0.061 | 0.390 | 2.610 | 0.009 |
| | Condition+Sex | Intercept | 0.765 | 0.140 | 0.498 | 1.031 | 5.450 | <0.001 |
| | | Condition | 0.229 | 0.087 | 0.064 | 0.394 | 2.640 | 0.008 |
| | | Sex | 0.192 | 0.129 | −0.054 | 0.437 | 1.480 | 0.138 |
| | Condition+Order | Intercept | 0.963 | 0.150 | 0.678 | 1.248 | 6.410 | <0.001 |
| | | Condition | 0.222 | 0.086 | 0.059 | 0.385 | 2.580 | 0.010 |
| | | Order | −0.041 | 0.037 | −0.112 | 0.030 | −1.090 | 0.275 |
| | Condition+Order+Sex | Intercept | 0.866 | 0.168 | 0.548 | 1.185 | 5.170 | <0.001 |
| | | Condition | 0.225 | 0.086 | 0.062 | 0.389 | 2.620 | 0.009 |
| | | Order | −0.041 | 0.037 | −0.112 | 0.030 | −1.080 | 0.278 |
| | | Sex | 0.189 | 0.128 | −0.054 | 0.432 | 1.480 | 0.140 |
| | Condition*Sex | Intercept | 0.821 | 0.150 | 0.537 | 1.105 | 5.490 | <0.001 |
| | | Condition | 0.136 | 0.132 | −0.115 | 0.387 | 1.030 | 0.300 |
| | | Sex | 0.095 | 0.168 | −0.224 | 0.414 | 0.560 | 0.570 |
| | | Condition*Sex | 0.159 | 0.175 | −0.173 | 0.491 | 0.910 | 0.360 |
| Orientation difference scores | Condition | Intercept | 1.636 | 0.027 | 1.585 | 1.688 | 60.610 | <0.001 |
| | | Condition | 0.110 | 0.035 | 0.042 | 0.177 | 3.090 | 0.002 |
| | Condition+Sex | Intercept | 1.617 | 0.032 | 1.555 | 1.679 | 49.830 | <0.001 |
| | | Condition | 0.110 | 0.035 | 0.043 | 0.176 | 3.110 | 0.002 |
| | | Sex | 0.038 | 0.035 | −0.029 | 0.104 | 1.080 | 0.280 |
| | Sex+Condition*Order | Intercept | 1.588 | 0.066 | 1.463 | 1.712 | 24.230 | <0.001 |
| | | Condition | 0.248 | 0.085 | 0.086 | 0.410 | 2.910 | 0.004 |
| | | Order | 0.011 | 0.022 | −0.031 | 0.053 | 0.510 | 0.611 |
| | | Sex | 0.038 | 0.034 | −0.027 | 0.103 | 1.120 | 0.261 |
| | | Condition*Order | −0.057 | 0.032 | −0.117 | 0.003 | −1.800 | 0.072 |
| | Condition+Order | Intercept | 1.677 | 0.048 | 1.585 | 1.769 | 34.660 | <0.001 |
| | | Condition | 0.108 | 0.035 | 0.041 | 0.175 | 3.060 | 0.002 |
| | | Order | −0.016 | 0.016 | −0.047 | 0.014 | −1.010 | 0.314 |
| Agitation raw | Intercept only | Intercept | −0.127 | 0.079 | −0.277 | 0.023 | −1.610 | 0.110 |
| | Order | Intercept | −0.063 | 0.115 | −0.281 | 0.154 | −0.550 | 0.580 |
| | | Order | −0.026 | 0.034 | −0.090 | 0.039 | −0.750 | 0.450 |
| Agitation difference scores | Intercept only | Intercept | 0.488 | 0.024 | 0.442 | 0.534 | 20.200 | <0.001 |
| | Order | Intercept | 0.446 | 0.048 | 0.354 | 0.537 | 9.240 | <0.001 |
| | | Order | 0.017 | 0.016 | −0.014 | 0.048 | 1.030 | 0.300 |
| | Condition | Intercept | 0.473 | 0.031 | 0.414 | 0.532 | 15.290 | <0.001 |
| | | Condition | 0.031 | 0.037 | −0.040 | 0.102 | 0.820 | 0.410 |

Bold type indicates P ≤ 0.05; CI = confidence interval; z = z statistic (estimate/SE).
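Assuming that the z and P columns of Table 3 come from standard Wald tests, as is usual for GLMM coefficient tables, z = estimate/SE and the two-sided P value is 2[1 − Φ(|z|)], where Φ is the standard normal distribution function. The short Python sketch below checks a few rows under that assumption (the helper name `wald_p` is hypothetical). Recomputing z from the rounded estimates and SEs shifts it slightly (e.g. 0.226/0.087 ≈ 2.60 rather than 2.610), so the P values are computed from the reported z. The sketch does not try to reproduce the CI columns, which are not exactly estimate ± 1.96 SE.

```python
import math


def wald_p(z):
    """Two-sided P value of a Wald z statistic: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2))."""
    return math.erfc(abs(z) / math.sqrt(2))


# (estimate, SE, reported z, reported P) for a few Table 3 rows
rows = {
    "Orientation raw, Condition: Condition": (0.226, 0.087, 2.610, 0.009),
    "Orientation raw, Condition+Sex: Sex": (0.192, 0.129, 1.480, 0.138),
    "Orientation raw, Condition+Order: Order": (-0.041, 0.037, -1.090, 0.275),
    "Orientation difference, Condition: Condition": (0.110, 0.035, 3.090, 0.002),
}

for name, (est, se, z_rep, p_rep) in rows.items():
    z = est / se  # recomputed from rounded values, so it differs slightly from z_rep
    print(f"{name}: z = {z:.2f} (reported {z_rep}), "
          f"P = {wald_p(z_rep):.3f} (reported {p_rep})")
```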

Sex Differences

Pair members were always tested simultaneously and could hence influence each other's behaviour. None the less, one sex might have shown stronger responses to the stimuli. The data from males and females were compared separately for playbacks in which the main analyses identified a differential response to the stimuli.

Ethical Note

The subjects for this study were 24 captive-reared adult common ravens kept in pairs: 12 males and 12 females ranging in age between 3 and 18 years. The data collection consisted of behavioural observations only. None of the subjects were handled in the course of the experiments. All subjects were housed in outdoor aviaries in areas where common ravens naturally occur; hence exposure to vocalizations of unfamiliar conspecifics cannot be considered a novel stressor. All aviaries were equipped with natural soil (gravel, sand, earth or wood bark), branches, breeding niches and natural vegetation. Aviary size differed between facilities, in each case complying with the respective guidelines; aviaries run by the University of Vienna had a minimum size of 55 m². Water was provided ad libitum and the animals were fed daily with a variety of food items (e.g. meat, eggs, fruit) in accordance with their omnivorous diet. The experiments do not fall under the Austrian Animal Experiments Act (§ 2, Federal Law Gazette number 114/2012), and the procedures complied with all current laws of the respective countries.

Results

The most strongly supported GLMMs for the response variable ‘oriented towards loudspeaker’ using raw data always contained the main effect ‘condition’ (Table 2). As the parameter estimates for these models (Table 3) indicated, ‘condition’ strongly influenced the time the raven pairs spent visually orienting towards the loudspeaker (mean duration bird/treatment, pairs summed: exact Wilcoxon signed-rank test: N = 12, z = 2.197, P = 0.025; Cohen's d = 0.449). Alternative models to the GLMM with the highest likelihood (‘condition’ as sole effect) also contained the effects ‘sex’ and ‘order’, but neither had a significant influence on orientation time (‘sex’, mean duration bird/all playbacks: exact Wilcoxon signed-rank test: N = 12, z = 1.334, P = 0.204; d = 0.272; ‘order’, duration bird/playback, pairs summed: Friedman test: N = 12, χ²₃ = 2.11, P = 0.55).

For the same dependent variable using difference scores, all models with high support again included ‘condition’ (Table 2), and it strongly affected the duration of visual orientation (mean duration bird/treatment, pairs summed: exact Wilcoxon signed-rank test: N = 12, z = 2.589, P = 0.007; d = 0.528). The other main effects in alternative models were again weak predictors (‘sex’, mean duration bird/all playbacks: exact Wilcoxon signed-rank test: N = 12, z = 1.02, P = 0.339; d = 0.208; ‘order’, duration bird/playback, pairs summed: Friedman test: N = 12, χ²₃ = 1.7, P = 0.637).
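The effect sizes reported as Cohen's d in the Results are numerically consistent with d = z/√(2N): with N = 12 pairs, z = 2.197 gives 2.197/√24 ≈ 0.448 and z = 2.589 gives ≈ 0.528. This conversion is inferred from the reported numbers rather than stated in this section (it equals the common r = z/√n conversion with n = 24 paired observations). The Python sketch below only reproduces that arithmetic; the helper name is hypothetical.

```python
import math


def d_from_wilcoxon_z(z, n_pairs):
    """Effect size z / sqrt(2 * n_pairs), i.e. r = z / sqrt(n_obs)
    with n_obs = 2 * n_pairs paired observations (assumed conversion)."""
    return z / math.sqrt(2 * n_pairs)


# (z, reported d) pairs from the Results; N = 12 pairs throughout
reported = [(2.197, 0.449), (1.334, 0.272), (2.589, 0.528), (1.02, 0.208),
            (2.08, 0.425), (3.061, 0.625), (1.647, 0.336), (-0.235, -0.048)]

for z, d in reported:
    print(f"z = {z:6.3f}  reported d = {d:6.3f}  "
          f"z/sqrt(24) = {d_from_wilcoxon_z(z, 12):6.3f}")
```

The one visible discrepancy (0.448 versus the reported 0.449 for z = 2.197) is within rounding of z itself.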

Concerning the ‘agitation component’, the best-supported models based on the raw data contained either only the intercept (with the random factor) or the intercept and ‘order’; ‘order’ did not significantly influence the agitation of the raven pairs (‘order’, PCA component bird/playback, pairs summed: Friedman test: N = 12, χ²₃ = 0.3, P = 0.96). The strongest models using difference scores for this variable likewise included only the intercept or, additionally, one of two main effects, neither of which significantly influenced agitation (‘order’, PCA component bird/playback, pairs summed: Friedman test: N = 12, χ²₃ = 5.7, P = 0.127; ‘condition’, mean duration bird/all playbacks: exact Wilcoxon signed-rank test: N = 12, z = 1.098, P = 0.301; d = 0.224).
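The Friedman statistics for ‘order’ are referred to a χ² distribution with k − 1 = 3 degrees of freedom, which implies k = 4 discrimination playbacks per pair (assumed here to be the violation and control playbacks of the two tests). For 3 df the upper-tail probability has a closed form, P(χ²₃ > x) = erfc(√(x/2)) + √(2x/π)·e^(−x/2), and it reproduces the reported P values (e.g. x = 2.11 gives P ≈ 0.55 and x = 5.7 gives P ≈ 0.127). The Python sketch below is a generic illustration with hypothetical names; the Friedman statistic is computed without a tie correction, and the demonstration data are invented.

```python
import math


def average_ranks(values):
    """1-based ranks in ascending order; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def friedman_chi2(blocks):
    """Friedman Q = 12 / (n k (k + 1)) * sum_j R_j**2 - 3 n (k + 1), no tie
    correction. Rows are subjects (pairs), columns are treatments."""
    n, k = len(blocks), len(blocks[0])
    rank_sums = [0.0] * k
    for row in blocks:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    return 12 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3 * n * (k + 1)


def chi2_sf_df3(x):
    """Upper-tail probability P(X > x) for a chi-squared variable with 3 df."""
    if x <= 0:
        return 1.0
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2)


# Reported Friedman statistics for 'order' (N = 12 pairs, 3 df)
for q, reported_p in [(2.11, 0.55), (1.7, 0.637), (0.3, 0.96), (5.7, 0.127)]:
    print(q, reported_p, round(chi2_sf_df3(q), 3))

# Hypothetical data: 3 pairs x 4 playback positions
demo = [[1.0, 2.0, 3.0, 4.0], [2.0, 1.0, 4.0, 3.0], [1.0, 3.0, 2.0, 4.0]]
q_demo = friedman_chi2(demo)
print(q_demo, chi2_sf_df3(q_demo))
```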

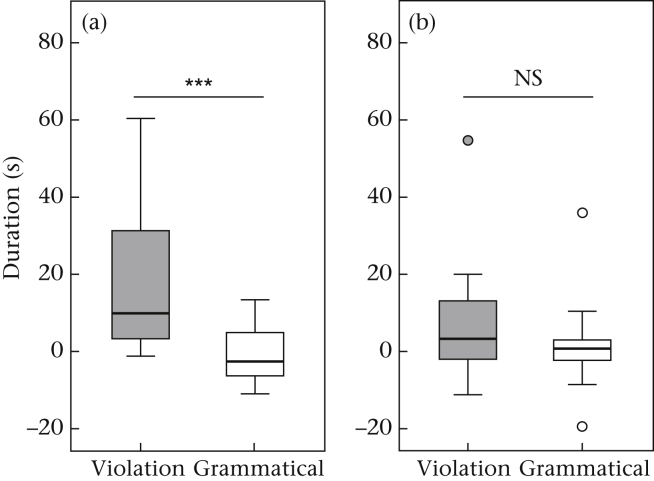

In Test 1, the ravens spent more time visually orienting towards the loudspeaker when they were exposed to violations than when they heard control playbacks. This was confirmed by testing the raw data of ‘oriented towards loudspeaker’ (exact Wilcoxon signed-rank test: N = 12, z = 2.08, P = 0.038; d = 0.425), as well as the difference scores (N = 12, z = 3.061, P < 0.001; d = 0.625; Fig. 3a). However, in Test 2 no difference was found in the amount of time spent visually oriented towards the loudspeaker in the raw data (N = 12, z = 1.647, P = 0.11; d = 0.336) or the difference scores (N = 12, z = 0.746, P = 0.482; d = 0.152; Fig. 3b). Thus ravens only exhibited a differential response to the novel pattern in Test 1.
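With N = 12 pairs, an exact Wilcoxon signed-rank test can be computed by enumerating all 2¹² = 4096 ways of assigning signs to the ranked differences, each equally likely under the null hypothesis. The Python sketch below does this for hypothetical input (zero differences dropped, tied absolute differences given average ranks); it is a generic illustration, not the software used for the analyses, and the function names are hypothetical. It also illustrates a property of the exact test: the smallest attainable two-sided P with 12 nonzero differences is 2/4096 ≈ 0.00049, reached only when all differences share the same sign, so P < 0.001 is possible only in the far tail of the null distribution.

```python
from itertools import product


def average_ranks(values):
    """1-based ranks in ascending order; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def exact_wilcoxon_signed_rank_p(diffs):
    """Two-sided exact P for paired differences (zeros are dropped).
    Under H0 every sign pattern of the ranked |differences| is equally likely."""
    d = [x for x in diffs if x != 0]
    n = len(d)
    ranks = average_ranks([abs(x) for x in d])
    centre = sum(ranks) / 2  # expected W+ under H0
    w_obs = sum(r for r, x in zip(ranks, d) if x > 0)
    dev_obs = abs(w_obs - centre)
    extreme = 0
    for signs in product((False, True), repeat=n):  # all 2**n sign patterns
        w = sum(r for r, positive in zip(ranks, signs) if positive)
        if abs(w - centre) >= dev_obs - 1e-9:  # tolerance for tied half-ranks
            extreme += 1
    return extreme / 2 ** n


# Hypothetical violation-minus-control differences for 12 pairs
example = [3.1, 1.2, 0.4, 2.2, -0.3, 1.9, 0.8, 2.5, 1.1, -0.6, 1.4, 0.9]
print(exact_wilcoxon_signed_rank_p(example))

# Smallest attainable two-sided P for 12 nonzero differences
print(exact_wilcoxon_signed_rank_p(list(range(1, 13))), 2 / 2 ** 12)
```

Full enumeration is cheap at N = 12; for much larger samples a recursive count of the W+ distribution would be preferable to listing every sign pattern.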

Figure 3.

Duration of time spent visually oriented towards the loudspeaker in (a) Test 1 and (b) Test 2. Violations were a novel pattern (AⁿBⁿ) in Test 1 and ABA or ABABA in Test 2. The control (grammatical) stimulus (habituation pattern) was (AB)ⁿ in Test 1 and Test 2. Data shown are difference scores; box plots represent the 25th and 75th percentiles, the line in the box indicates the median, whiskers represent the nonoutlier range and dots are outliers. ***P < 0.001.

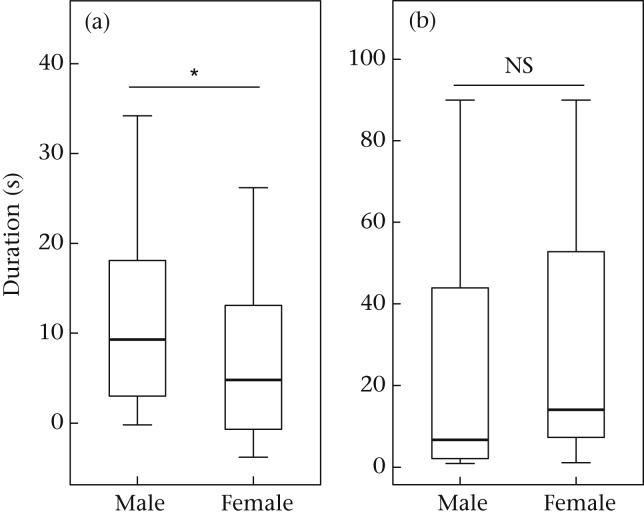

A sex comparison within the Test 1 playback phase showed that males oriented towards the loudspeaker for longer than their respective female partners (exact Wilcoxon signed-rank test with difference scores: N = 12, z = 2.197, P = 0.027; d = 0.448; Fig. 4a). However, an additional analysis of the latency to respond to the stimulus showed that males were not necessarily the first to look towards the loudspeaker: latencies to respond differed only minimally between males and females (exact Wilcoxon signed-rank test with latency until first look (s): N = 12, z = −0.235, P = 0.85; d = −0.048; Fig. 4b).

Figure 4.

Sex difference in the reaction to the novel pattern in Test 1. (a) Visual orientation towards the loudspeaker. (b) Latency to look towards the loudspeaker for the first time. Data shown are difference scores in (a) and latency until first look towards the loudspeaker in (b), box plots represent the 25th and 75th percentiles, the line in the box indicates the median, whiskers represent the nonoutlier range. *P < 0.05.

Discussion

We found that raven pairs spontaneously spent more time visually orienting towards the loudspeaker after playbacks that violated the habituation pattern. This demonstrates that they can perceive a structural change from the original pattern without training. The novel pattern caused a stronger reaction than control stimuli, although the controls also contained novel extensions with different lengths from the habituation stimuli. Ravens thus appeared to perceive the novel pattern as more behaviourally relevant than elongations of the previously habituated pattern.

Our raven pairs did not, however, discriminate between controls and sequences missing the final element. This suggests that ravens, although sensitive to structural differences, did not internalize precisely the syntactic rule that we intended. In this test, though, the controls always contained more elements than the violation playback. Although the missing B element did not cause discrimination, the absence of a stronger reaction to the longer controls further supports the conclusion that stimulus length had no significant effect. We always conducted Test 1 before Test 2 (counterbalancing violation and control playbacks within each) because of the risk of motivational decline during the discrimination phase, as our main priority was a rigorous comparison of raven performance with previous results from tamarins (achieved by Test 1 alone). Our analyses revealed a very limited effect of treatment order, making it less likely that the lack of discrimination observed in Test 2 can be explained solely by a decline in motivation. It also remains possible that the absence of a detectable difference in Test 2 was caused by the more contrasting violation always being played first (Test 1: different pattern; Test 2: missing element). Future studies could profit from conducting separate habituation phases before each test.
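The contrast between the two tests can be made concrete with a small string encoding in which each call is written as 'A' or 'B'. This encoding, the function names and the values of n are illustrative assumptions; the actual stimuli were recorded raven calls. For n ≥ 2 the Test 1 violation AⁿBⁿ departs from the alternating (AB)ⁿ structure already at its second element, whereas the Test 2 violations ABA and ABABA are prefixes of a grammatical (AB)ⁿ string that lack only the final B.

```python
import re

# Hypothetical encoding: one character per call, 'A' or 'B'.


def matches_ab_n(seq: str) -> bool:
    """True for (AB)^n with n >= 1, the habituation and control pattern."""
    return re.fullmatch(r"(?:AB)+", seq) is not None


def matches_an_bn(seq: str) -> bool:
    """True for A^n B^n with n >= 1, the Test 1 violation pattern."""
    n, remainder = divmod(len(seq), 2)
    return n >= 1 and remainder == 0 and seq == "A" * n + "B" * n


def first_break_from_ab(seq: str) -> int:
    """Index of the first call that departs from A, B, A, B, ...;
    returns len(seq) if seq is a prefix of some (AB)^n string."""
    for i, call in enumerate(seq):
        if call != "AB"[i % 2]:
            return i
    return len(seq)


for stimulus in ["ABAB", "ABABAB", "AABB", "AAABBB", "ABA", "ABABA"]:
    print(stimulus, matches_ab_n(stimulus), matches_an_bn(stimulus),
          first_break_from_ab(stimulus))
```

In this encoding, ABABA fails `matches_ab_n` only because it ends one element early, while AABB fails it at index 1; whether ravens treat these two kinds of deviation differently is exactly what the two tests probe.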

Turning to agitation scores, ravens were not more agitated during the different treatments of the discrimination phase. Although the violations in Test 1 caused them to orient longer towards the loudspeaker than did control playbacks, the stimuli in either case seemed to convey the same overall context: an ongoing territorial interaction with unknown intruders. Because this context did not change, we conclude that the difference in reaction indicates sensitivity to a change in vocal display structure. The null result (absence of discrimination in Test 2) does not show that ravens are unable to perceive these structural differences; these small differences in structure might simply be of little behavioural relevance. Although habituation–discrimination experiments can demonstrate a spontaneous sensitivity to structural changes, they cannot determine an animal's perceptual limits (ten Cate & Okanoya, 2012): boundaries of pattern perception capacities in songbirds are better probed using training (operant conditioning) paradigms.

In previous playback studies investigating communicative capacities in nonhuman animals, spontaneous discrimination between two or more broadcast vocalizations was observable when the stimuli differed to a sufficient extent both perceptually and in their behavioural, motivational and/or ecological relevance for a given subject (Cheney and Seyfarth, 1999, Manser, 2001, McComb et al., 1993). This method not only demonstrates the presence of a cognitive/perceptual capacity, but also in what situations the demonstrated ability is behaviourally relevant, and may thus provide hints concerning what drove its evolution.

Our method simulated the appearance of a pair of birds near the territory of the subject pair. The individual acoustic elements of our playbacks did not differ between the conditions, and the observed shift in behavioural relevance must thus be linked to the patterns, not the elements themselves. The high attentiveness of common ravens to conspecific vocalizations, particularly when produced by a pair, might lead to an increased reaction to a novel pattern. In addition, perceiving structural aspects of vocal displays may also have evolutionary advantages. Acoustic duets are used in territory defence by many avian species (Hall, 2004) and are a joint behaviour of the two partners in songbirds (Benedict, 2008, Hall and Peters, 2008) and nonsongbirds (Odom & Mennill, 2010). They might communicate not only the readiness to dispute the territorial claim, but also the degree to which male and female are well synchronized, and their motivation for joint action in general. Furthermore, the detailed structure of a raven pair's vocal displays may convey information concerning other relevant cognitive abilities (e.g. to cooperate in foraging, agonistic interactions and/or in raising offspring). We thus suggest that pair display structure could reflect the strength of potential rival pairs, and hence carry functionally relevant information to defenders. Our study focused on the pattern of ‘duets’ rather than their temporal synchrony. A previous study on canebrake wrens, Thryothorus modestus zeledoni, also provided evidence for learning in duet performance after pair bond formation, which was more pronounced for duet repertoire consistency than temporal coordination (Marshall-Ball, Mann, & Slater, 2006). These studies together suggest that the evolutionary importance of avian duet structure, in addition to temporal synchrony, may have been underestimated to date.

Overall, raven males reacted more strongly than their partners during the playback of the violation in Test 1. We can only speculate on the causes of such a sex difference. Sex-specific responses to playbacks of conspecific duets have also been observed in other passerine species (Mennill and Vehrencamp, 2008, van den Heuvel et al., 2014), which suggests that shared territory defence is probably the main, but not the only, factor driving the evolution of vocal duetting (Logue & Gammon, 2004). In a recent playback study on common ravens, males were found to be more attentive than females to third-party interactions in out-group individuals (Massen et al., 2014). In ravens, males are the larger sex and may take the lead in territorial defence; this might cause them to be more actively responsive to duets of potential rivals. None of our GLMMs contained ‘sex’ as a strongly supported main effect. Because gaze following is well documented in common ravens (Bugnyar, Stowe, & Heinrich, 2004), pair members are likely to be sensitive to each other's attention and orientation; thus the mutual influence between pair partners might have obscured a sex difference. Although males paid more attention to the structural changes of the territorial display, there was no difference between the sexes in the latency to respond to the playback violating the target pattern. Thus, it seems that the females were equally sensitive to the structural changes but perhaps less interested in them than the males.

In conclusion, we have found that ravens are spontaneously sensitive to structural changes in an (AB)ⁿ pattern, much like cottontop tamarins in a previous artificial grammar learning study (Fitch & Hauser, 2004). With so few species studied using nontraining paradigms, it remains unclear whether these capacities evolved convergently in songbirds and primates, and further studies in other mammal and bird species are needed to answer this question.

Acknowledgments

We are grateful to Jinook Oh for programming time-saving software, Christian Herbst for bioacoustics advice, Andrius Pasukonis, Andrea Ravignani, Nina Stobbe and Claudia Stephan for productive discussions on the experimental set-up and the manuscript, Gesche Westphal-Fitch for editing the first draft, Nadja Kavcik for drawing the ravens for the figure, and Bruno Gingras and Jorg Massen for advice on statistical analysis. Further thanks go to Kurt Kotrschal and all co-workers at the Konrad Lorenz Research Station, and to Simone Pika with her team at the MPI for Ornithology in Seewiesen. For access to ravens we thank the Alpenzoo Innsbruck, Cumberland Wildpark Grünau, Tierpark Stadt Haag, Welser Tiergarten, Wildlife Enclosure in the National Park Center Lusen, Tierpark Hellabrunn, Gymnasium Sponga, and Gerti Drack. The authors have no competing interests. S.A.R. designed the study, collected sound recordings, created the stimuli, carried out experiments, coded videos, analysed the data and wrote the manuscript; M.B. collected sound recordings and participated in the data analysis; G.S. helped carry out experiments, coded videos and participated in the data analysis; J.J. helped carry out experiments, and coded videos; T.B. helped coordinate the study and draft the manuscript; W.T.F. helped conceive and design the study and write the manuscript. All authors gave final approval for publication. This research was funded by the ERC Advanced Grant SOMACCA (230604) to W.T.F. and the Austrian Science Fund (FWF) projects W1234-G17 to T.B. and W.T.F. and Y366-B17 to T.B.

MS number 15-00765R

Footnotes

Supplementary material related to this article can be found, in the online version, at http://dx.doi.org/10.1016/j.anbehav.2016.04.005. This includes all data used for this article, including the raw data, difference scores, principal component values and details concerning the principal component analysis. Original videos are stored on the server of the Department of Cognitive Biology at the University of Vienna, Austria. For size and owner privacy reasons these videos are not publicly available; for access by interested scientists please contact W.T.F. at tecumseh.fitch@univie.ac.at.

Contributor Information

Stephan A. Reber, Email: stephan.reber@univie.ac.at.

W. Tecumseh Fitch, Email: tecumseh.fitch@univie.ac.at.


References

- Abe K., Watanabe D. Songbirds possess the spontaneous ability to discriminate syntactic rules. Nature Neuroscience. 2011;14(8):1067–1074. doi: 10.1038/nn.2869.

- Arnold K., Pohlner Y., Zuberbühler K. Not words but meanings? Alarm calling behaviour in a Forest Guenon. In: Sommer V., Ross C., editors. Primates of Gashaka. Vol. 35. Springer; New York, NY: 2011. pp. 437–468.

- Arnold K., Zuberbühler K. Semantic combinations in primate calls. Nature. 2006;441(7091):303. doi: 10.1038/441303a.

- Arnold K., Zuberbühler K. Meaningful call combinations in a non-human primate. Current Biology. 2008;18(5):R202–R203. doi: 10.1016/j.cub.2008.01.040.

- Beckers G.J.L., Bolhuis J.J., Okanoya K., Berwick R.C. Birdsong neurolinguistics: songbird context-free grammar claim is premature. Neuroreport. 2012;23(3):139–145. doi: 10.1097/WNR.0b013e32834f1765.

- Benedict L. Occurrence and life history correlates of vocal duetting in North American passerines. Journal of Avian Biology. 2008;39(1):57–65.

- Berwick R.C., Okanoya K., Beckers G.J.L., Bolhuis J.J. Songs to syntax: the linguistics of birdsong. Trends in Cognitive Sciences. 2011;15(3):113–121. doi: 10.1016/j.tics.2011.01.002.

- Boeckle M. University of Vienna; Vienna, Austria: 2012. Acoustic communication of common ravens. (Unpublished Ph.D. thesis)

- Boeckle M., Bugnyar T. Long-term memory for affiliates in ravens. Current Biology. 2012;22(9):801–806. doi: 10.1016/j.cub.2012.03.023.

- Boeckle M., Szipl G., Bugnyar T. Who wants food? Individual characteristics in raven yells. Animal Behaviour. 2012;84(5):1123–1130. doi: 10.1016/j.anbehav.2012.08.011.

- Bugnyar T., Stowe M., Heinrich B. Ravens, Corvus corax, follow gaze direction of humans around obstacles. Proceedings of the Royal Society B: Biological Sciences. 2004;271(1546):1331–1336. doi: 10.1098/rspb.2004.2738.

- Burnham K.P., Anderson D.R., Huyvaert K.P. AIC model selection and multimodel inference in behavioral ecology: some background, observations, and comparisons. Behavioral Ecology and Sociobiology. 2010;65(1):23–35.

- Candiotti A., Zuberbühler K., Lemasson A. Context-related call combinations in female Diana monkeys. Animal Cognition. 2012;15(3):327–339. doi: 10.1007/s10071-011-0456-8.

- ten Cate C., Okanoya K. Revisiting the syntactic abilities of non-human animals: natural vocalizations and artificial grammar learning. Philosophical Transactions of the Royal Society B: Biological Sciences. 2012;367(1598):1984–1994. doi: 10.1098/rstb.2012.0055.

- Cheney D.L., Seyfarth R.M. Recognition of other individuals' social relationships by female baboons. Animal Behaviour. 1999;58:67–75. doi: 10.1006/anbe.1999.1131.

- Cicchetti D.V. Guidelines, criteria, and rules of thumb for evaluating normed and standardized assessment instruments in psychology. Psychological Assessment. 1994;6(4):284–290.

- Cohen J. Statistical power analysis for the behavioral sciences. Perceptual and Motor Skills. 1988;67(3):1007.

- Collier K., Bickel B., van Schaik C.P., Manser M.B., Townsend S.W. Language evolution: syntax before phonology? Proceedings of the Royal Society B: Biological Sciences. 2014;281(1788). doi: 10.1098/rspb.2014.0263.

- Crockford C., Wittig R.M., Seyfarth R.M., Cheney D.L. Baboons eavesdrop to deduce mating opportunities. Animal Behaviour. 2007;73:885–890.

- Enggist-Dueblin P., Pfister U. Cultural transmission of vocalizations in ravens, Corvus corax. Animal Behaviour. 2002;64:831–841.

- Fedurek P., Slocombe K.E., Zuberbühler K. Chimpanzees communicate to two different audiences during aggressive interactions. Animal Behaviour. 2015;110:21–28.

- Fernandez-Juricic E., O'Rourke C., Pitlik T. Visual coverage and scanning behavior in two corvid species: American crow and Western scrub jay. Journal of Comparative Physiology A. 2010;196(12):879–888. doi: 10.1007/s00359-010-0570-0.

- Fitch W.T. The evolution of speech: a comparative review. Trends in Cognitive Sciences. 2000;4(7):258–267. doi: 10.1016/s1364-6613(00)01494-7.

- Fitch W.T. The evolution of language: a comparative review. Biology & Philosophy. 2005;20(2–3):193–230.

- Fitch W.T., Fritz J.B. Rhesus macaques spontaneously perceive formants in conspecific vocalizations. Journal of the Acoustical Society of America. 2006;120(4):2132–2141. doi: 10.1121/1.2258499.

- Fitch W.T., Hauser M.D. Computational constraints on syntactic processing in a nonhuman primate. Science. 2004;303(5656):377–380. doi: 10.1126/science.1089401.

- Gentner T.Q., Fenn K.M., Margoliash D., Nusbaum H.C. Recursive syntactic pattern learning by songbirds. Nature. 2006;440(7088):1204–1207. doi: 10.1038/nature04675.

- Hall M.L. A review of hypotheses for the functions of avian duetting. Behavioral Ecology and Sociobiology. 2004;55(5):415–430.

- Hall M.L., Peters A. Coordination between the sexes for territorial defence in a duetting fairy-wren. Animal Behaviour. 2008;76(1):65–73.

- Hallgren K.A. Computing inter-rater reliability for observational data: an overview and tutorial. Tutorials in Quantitative Methods for Psychology. 2012;8(1):23–34. doi: 10.20982/tqmp.08.1.p023.

- Hauser M.D. MIT Press; Cambridge, MA: 1996. The evolution of communication.

- van Heijningen C.A.A., Chen J., van Laatum I., van der Hulst B., ten Cate C. Rule learning by zebra finches in an artificial grammar learning task: which rule? Animal Cognition. 2013;16(2):165–175. doi: 10.1007/s10071-012-0559-x.

- Heinrich B. Conflict, cooperation, and cognition in the common raven. Advances in the Study of Behavior. 2011;43:189–237. San Diego, CA: Elsevier Academic Press Inc.

- van den Heuvel I.M., Cherry M.I., Klump G.M. Land or lover? Territorial defence and mutual mate guarding in the crimson-breasted shrike. Behavioral Ecology and Sociobiology. 2014;68(3):373–381.

- Hurford J.R. Oxford University Press; Oxford, U.K.: 2007. The origins of meaning: Language in the light of evolution.

- Janik V.M., Slater P.J.B. Vocal learning in mammals. Advances in the Study of Behavior. 1997;26:59–99.

- Janik V.M., Slater P.J.B. The different roles of social learning in vocal communication. Animal Behaviour. 2000;60(1):1–11. doi: 10.1006/anbe.2000.1410.

- Logue D.M., Gammon D.E. Duet song and sex roles during territory defence in a tropical bird, the black-bellied wren, Thryothorus fasciatoventris. Animal Behaviour. 2004;68(4):721–731.

- Manser M.B. The acoustic structure of suricates' alarm calls varies with predator type and the level of response urgency. Proceedings of the Royal Society B: Biological Sciences. 2001;268(1483):2315–2324. doi: 10.1098/rspb.2001.1773.

- Marshall-Ball L., Mann N., Slater P.J.B. Multiple functions to duet singing: hidden conflicts and apparent cooperation. Animal Behaviour. 2006;71(4):823–831.

- Martin G.R., Katzir G. Visual fields, foraging and binocularity in birds. In: Adams N.J., Slotow R.H., editors. Proceedings of the 22nd International Ornithological Congress, Durban. South Africa; Johannesburg: 1999. pp. 2711–2728.

- Massen J.J.M., Pasukonis A., Schmidt J., Bugnyar T. Ravens notice dominance reversals among conspecifics within and outside their social group. Nature Communications. 2014;5:3679. doi: 10.1038/ncomms4679.

- McComb K., Pusey A., Packer C., Grinnell J. Female lions can identify potentially infanticidal males from their roars. Proceedings of the Royal Society B: Biological Sciences. 1993;252(1333):59–64. doi: 10.1098/rspb.1993.0046.

- Mennill D.J., Vehrencamp S.L. Context-dependent functions of avian duets revealed by microphone-array recordings and multispeaker playback. Current Biology. 2008;18(17):1314–1319. doi: 10.1016/j.cub.2008.07.073.

- Mundry R., Fischer J. Use of statistical programs for nonparametric tests of small samples often leads to incorrect P values: examples from Animal Behaviour. Animal Behaviour. 1998;56:256–259. doi: 10.1006/anbe.1998.0756.

- Nowicki S., Searcy W.A. The evolution of vocal learning. Current Opinion in Neurobiology. 2014;28:48–53. doi: 10.1016/j.conb.2014.06.007.

- Odom K.J., Mennill D.J. Vocal duets in a nonpasserine: an examination of territory defence and neighbour-stranger discrimination in a neighbourhood of barred owls. Behaviour. 2010;147(5–6):619–639.

- Ouattara K., Lemasson A., Zuberbühler K. Campbell's monkeys use affixation to alter call meaning. PLoS One. 2009;4(11). doi: 10.1371/journal.pone.0007808.

- Pfister-Gfeller U. Universität Bern; Bern, Switzerland: 1995. Kolkrabenkommunikation [Common raven communication]. (Unpublished Inaugural dissertation)

- Proops L., McComb K., Reby D. Cross-modal individual recognition in domestic horses (Equus caballus). Proceedings of the National Academy of Sciences of the United States of America. 2009;106(3):947–951. doi: 10.1073/pnas.0809127105.

- Ravignani A., Sonnweber R.S., Stobbe N., Fitch W.T. Action at a distance: dependency sensitivity in a New World primate. Biology Letters. 2013;9(6). doi: 10.1098/rsbl.2013.0852.

- Reby D., McComb K. Anatomical constraints generate honesty: acoustic cues to age and weight in the roars of red deer stags. Animal Behaviour. 2003;65:519–530.

- Richards S.A. Testing ecological theory using the information-theoretic approach: examples and cautionary results. Ecology. 2005;86(10):2805–2814.

- Schulz M.A., Schmalbach B., Brugger P., Witt K. Analysing humanly generated random number sequences: a pattern-based approach. PLoS One. 2012;7(7):e41531. doi: 10.1371/journal.pone.0041531.

- Seki Y., Suzuki K., Osawa A.M., Okanoya K. Songbirds and humans apply different strategies in a sound sequence discrimination task. Frontiers in Psychology. 2013;4. doi: 10.3389/fpsyg.2013.00447.

- Seyfarth R.M., Cheney D.L., Marler P. Vervet monkey alarm calls: semantic communication in a free-ranging primate. Animal Behaviour. 1980;28:1070–1094.

- Spierings M.J., ten Cate C. Zebra finches are sensitive to prosodic features of human speech. Proceedings of the Royal Society B: Biological Sciences. 2014;281(1787). doi: 10.1098/rspb.2014.0480.

- Symonds M.R.E., Moussalli A. A brief guide to model selection, multimodel inference and model averaging in behavioural ecology using Akaike's information criterion. Behavioral Ecology and Sociobiology. 2011;65(1):13–21.

- Szipl G., Boeckle M., Wascher C.A.F., Spreafico M., Bugnyar T. With whom to dine? Ravens' responses to food-associated calls depend on individual characteristics of the caller. Animal Behaviour. 2015;99:33–42. doi: 10.1016/j.anbehav.2014.10.015.

- Townsend S.W., Allen C., Manser M.B. A simple test of vocal individual recognition in wild meerkats. Biology Letters. 2012;8(2):179–182. doi: 10.1098/rsbl.2011.0844.

- Troscianko J., von Bayern A.M.P., Chappell J., Rutz C., Martin G.R. Extreme binocular vision and a straight bill facilitate tool use in New Caledonian crows. Nature Communications. 2012;3:1110. doi: 10.1038/ncomms2111. http://www.nature.com/ncomms/journal/v3/n10/suppinfo/ncomms2111_S1.html
