Abstract

We consider a network of coupled excitatory and inhibitory theta neurons which is capable of supporting stable spatially-localized “bump” solutions. We randomly add long-range and simultaneously remove short-range connections within the network to form a small-world network and investigate the effects of this rewiring on the existence and stability of the bump solution. We consider two limits in which continuum equations can be derived; bump solutions are fixed points of these equations. We can thus use standard numerical bifurcation analysis to determine the stability of these bumps and to follow them as parameters (such as rewiring probabilities) are varied. We find that under some rewiring schemes bumps are quite robust, whereas in other schemes they can become unstable via Hopf bifurcation or even be destroyed in saddle-node bifurcations.

Keywords: Ott/Antonsen, theta neuron, bump, small-world, working memory, bifurcation

1. Introduction

Spatially-localized “bumps” of activity in neuronal networks have been studied for many years, as they are thought to play a role in short term memory (Camperi and Wang, 1998; Compte et al., 2000; Bressloff, 2012; Wimmer et al., 2014) and the head direction system (Redish et al., 1996; Zhang, 1996), among other phenomena. Some models of bump formation have used a firing rate description (Wilson and Cowan, 1973; Amari, 1977; Laing et al., 2002; Laing and Troy, 2003; Owen et al., 2007) while others have considered networks of spiking neurons (Gutkin et al., 2001; Laing and Chow, 2001; Wang, 2001). The simplest models typically have “Mexican-hat” connectivity in a single population of neurons, where nearby neurons are excitatorily coupled and more distant ones are inhibitorily coupled (Ermentrout, 1998; Bressloff, 2012). However, more realistic models consider both excitatory and inhibitory neurons with non-negative connectivity within and between populations (Pinto and Ermentrout, 2001; Blomquist et al., 2005). Almost all previous models have considered homogeneous and isotropic networks, which typically support a continuous family of reflection-symmetric bumps, parameterized by their position in the network. Some exceptions are (Brackley and Turner, 2009, 2014), in which a spatially-inhomogeneous coupling function is used, and (Thul et al., 2016), in which a spatially-varying random firing threshold is imposed.

In this paper we further investigate the effects of breaking the spatial homogeneity of neural networks which support bump solutions, by randomly adding long-range connections and simultaneously removing short-range connections in a particular formulation of small-world networks (Song and Wang, 2014). Small-world networks (Watts and Strogatz, 1998) have been much studied and there is evidence for the existence of small-worldness in several brain networks (Bullmore and Sporns, 2009). In particular, we are interested in determining how sensitive networks which support bumps are to this type of random rewiring of connections, and thus how precisely networks must be constructed in order to support bumps.

We will consider networks of heterogeneous excitatory and inhibitory theta neurons, the theta neuron being the canonical model for a Type I neuron for which the onset of firing is through a saddle-node on an invariant circle bifurcation (Ermentrout and Kopell, 1986; Ermentrout, 1996). In several limits such networks are amenable to the use of the Ott/Antonsen ansatz (Ott and Antonsen, 2008, 2009), and we will build on previous work using this ansatz in the study of networks of heterogeneous theta neurons (Luke et al., 2013; Laing, 2014a, 2015; So et al., 2014). We present the model in Section 2.2 and then consider two limiting cases: an infinite number of neurons (Section 2.3) and an infinite ensemble of finite networks with the same connectivity (Section 2.4). Results are given in Section 3 and we conclude in Section 4. The Appendix contains some mathematical manipulations relating to Section 2.4.

2. Materials and methods

2.1. Introduction

First consider an all-to-all coupled network of N heterogeneous theta neurons whose dynamics are given by

| (1) |

| (2) |

for i = 1, 2, …N where θi ∈ [0, 2π) is the phase of the ith neuron, and an is a normalization factor such that . The function Pn is meant to mimic the action potential generated when a neuron fires, i.e., its phase increases through π; n controls the “sharpness” of this function. The Ii are input currents randomly chosen from some distribution, g is the strength of connectivity within the network (positive for excitatory coupling and negative for inhibitory), and τ is a time constant governing the synaptic dynamics. The variable r is driven up by spiking activity and exponentially decays to zero in the absence of activity, on a timescale τ.

The model (1)–(2) with τ = 0 (i.e., instantaneous synapses) was studied by Luke et al. (2013), who found multistability and oscillatory behavior. The case of τ > 0 was considered in (Laing, unpublished), and similar forms of synaptic dynamics have been considered elsewhere (Börgers and Kopell, 2005; Ermentrout, 2006; Coombes and Byrne, unpublished). The model presented below results from generalizing Equations (1) and (2) in several ways. Firstly, we consider two populations of neurons, one excitatory and one inhibitory. Thus, we will have two sets of variables, one for each population. Such a pair of interacting populations was previously considered by Luke et al. (2014); Börgers and Kopell (2005); Coombes and Byrne, unpublished; and Laing, unpublished. Secondly, we consider a spatially-extended network, in which both the excitatory and inhibitory neurons lie on a ring, and are (initially) coupled to a fixed number of neurons either side of them. Networks with similar structure have been studied by many authors (Redish et al., 1996; Compte et al., 2000; Gutkin et al., 2001; Laing and Chow, 2001; Laing, 2014a, 2015).

2.2. Model

We consider a network of 2N theta neurons, N excitatory and N inhibitory. Within each population the neurons are arranged in a ring, and there are synaptic connections between and within populations, whose strength depends on the distance between neurons, as in Laing and Chow (2001) and Gutkin et al. (2001). (In the networks we will consider, connection strengths are either 1 or 0, i.e., neurons are either connected or not connected.) Inhibitory synapses act on a timescale τi, whereas the excitatory ones act on a timescale τ. θi ∈ [0, 2π) is the phase of the ith excitatory neuron and ϕi ∈ [0, 2π) is the phase of the ith inhibitory one. The equations are

| (3) |

| (4) |

| (5) |

| (6) |

| (7) |

| (8) |

for i = 1, 2…N, where

| (9) |

| (10) |

| (11) |

| (12) |

where Pn is as in Section 2.1. The positive integers MIE, MEE, MEI, and MII give the width of connectivity from excitatory to inhibitory, excitatory to excitatory, inhibitory to excitatory, and inhibitory to inhibitory populations, respectively. The non-negative quantities gEE, gEI, gIE and gII give the overall connection strengths within and between the two populations (excitatory to excitatory, inhibitory to excitatory, excitatory to inhibitory, and inhibitory to inhibitory, respectively). The variable vi (when multiplied by gEE) gives the excitatory input to the ith excitatory neuron, and its dynamics are driven by ri, which depends on the activity of the excitatory neurons with indices between i − MEE and i + MEE. Similarly, ui (when multiplied by gIE) gives the excitatory input to the ith inhibitory neuron, and is driven by qi, which depends on the activity of the excitatory neurons with indices between i − MIE and i + MIE. gEIyi is the inhibitory input to the ith excitatory neuron, driven by si, which depends on the activity of the inhibitory neurons with indices between i − MEI and i + MEI. Lastly, gIIzi is the inhibitory input to the ith inhibitory neuron, driven by wi, which depends on the activity of the inhibitory neurons with indices between i − MII and i + MII.

For simplicity, and motivated by the results in Pinto and Ermentrout (2001), we assume that the inhibitory synapses act instantaneously, i.e. τi = 0, and that there are no connections within the inhibitory population, i.e. gII = 0. Thus (8) and (12) become irrelevant and from (7) we have that yi = si in (3).

The networks are made heterogeneous by randomly choosing the currents Ii from the Lorentzian

| (13) |

and the currents Ji from the Lorentzian

| (14) |

I0 and J0 are the centers of these distributions, and for simplicity we assume that both have the same width, Δ. The heterogeneity of the neurons (i.e., the positive value of Δ) is not necessary in order for the network to support bumps, but it is necessary for the Ott/Antonsen ansatz, used extensively below, to be valid (Ott et al., 2011). Networks of identical phase oscillators are known to show non-generic behavior which can be studied using the Watanabe/Strogatz ansatz (Watanabe and Strogatz, 1993, 1994). We want to avoid non-generic behavior, and having a heterogeneous network is also more realistic. For typical parameter values we see the behavior shown in Figures 1, 2, i.e., a stable stationary bump in which the active neurons are spatially localized.
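The heterogeneous currents described above can be generated by inverting the cumulative distribution of a Lorentzian. The following is a minimal sketch rather than the code used for the paper: it assumes the density (Δ/π)/((I − I0)² + Δ²), so that Δ is the half-width at half-maximum, and the function name `sample_lorentzian` and the sample size are illustrative choices.

```python
import numpy as np

def sample_lorentzian(center, width, size, rng):
    """Draw samples from a Lorentzian (Cauchy) density by inverting its CDF.

    Assumes the density (width/pi) / ((x - center)**2 + width**2), so that
    `width` is the half-width at half-maximum.
    """
    u = rng.random(size)                      # uniform on [0, 1)
    return center + width * np.tan(np.pi * (u - 0.5))

rng = np.random.default_rng(0)                # fixed seed, for reproducibility only
n_samples = 200_000                           # far more than N = 1024, to sharpen the quantiles
I = sample_lorentzian(-0.16, 0.02, n_samples, rng)   # I0 = -0.16, Delta = 0.02
J = sample_lorentzian(-0.4, 0.02, n_samples, rng)    # J0 = -0.4,  Delta = 0.02

# The mean of a Lorentzian is undefined, so summarize with quantiles instead.
q25, q50, q75 = np.quantile(I, [0.25, 0.5, 0.75])
print(f"median = {q50:.4f}, half interquartile range = {(q75 - q25) / 2:.4f}")
```

Under the assumed density the median estimates the center and half the interquartile range estimates Δ, which gives a quick check that a given realization of the currents is reasonable.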

Figure 1.

A bump solution of Equations (3)–(6). Top: sin θi. Bottom: sin ϕi. Parameter values: N = 1024, Δ = 0.02, I0 = −0.16, J0 = −0.4, n = 2, gEE = 25, gIE = 25, gEI = 7.5, MIE = 40, MEE = 40, MEI = 60 and τ = 10.

Figure 2.

Average frequency for excitatory population (blue) and inhibitory (red) for the solution shown in Figure 1.

While these bumps may look superficially like “chimera” states in a ring of oscillators (Abrams and Strogatz, 2004, 2006; Laing, 2009; Panaggio and Abrams, 2015) they are different in one important aspect. Chimera states in the references above occur in networks for which the dynamics depend on only phase differences. Thus these systems are invariant with respect to adding the same constant to all oscillator phases, and can be studied in a rotating coordinate frame in which the synchronous oscillators have zero frequency, i.e., only relative frequencies are meaningful. In contrast, networks of theta neurons like those studied here are not invariant with respect to adding the same constant to all oscillator phases. The actual value of phase matters, and the neurons with zero frequency in Figure 2 have zero frequency simply because their input is not large enough to cause them to fire.
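The statement that a theta neuron is silent simply because its input is too small can be checked on a single uncoupled neuron. The sketch below assumes the standard theta neuron form dθ/dt = 1 − cos θ + (1 + cos θ)I (Ermentrout and Kopell, 1986) with a constant input I standing in for the total input in the network; the function name `count_spikes`, the time step and the integration time are illustrative choices.

```python
import numpy as np

def count_spikes(I, t_end=100.0, dt=0.01, theta0=0.0):
    """Integrate one uncoupled theta neuron with RK4 and count its spikes.

    A spike is counted each time the (unwrapped) phase increases through
    pi, 3*pi, 5*pi, ...
    """
    def rhs(theta):
        return 1.0 - np.cos(theta) + (1.0 + np.cos(theta)) * I

    theta = theta0
    for _ in range(int(round(t_end / dt))):
        k1 = rhs(theta)
        k2 = rhs(theta + 0.5 * dt * k1)
        k3 = rhs(theta + 0.5 * dt * k2)
        k4 = rhs(theta + dt * k3)
        theta += dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0
    # Number of odd multiples of pi that the phase has passed.
    return int(np.floor((theta + np.pi) / (2.0 * np.pi)))

for I in (-0.16, 0.25):
    print(f"I = {I}: {count_spikes(I)} spikes in 100 time units")
```

For constant I > 0 the neuron fires periodically with period π/√I, whereas for I < 0 the phase settles at a stable fixed point and no spikes occur. Shifting all phases by a constant would change this outcome, which is the sense in which the absolute phase matters here.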

We now want to introduce rewiring parameters in such a way that on average, the number of connections is preserved as the networks are rewired. This is different from other formulations of small-world networks in which additional edges are added (Newman and Watts, 1999; Medvedev, 2014; but see Puljic and Kozma, 2008 for an example in which the number of connections to a node is precisely conserved). The reason for doing this is to keep the balance of excitation and inhibition constant. If we were to add additional connections, for example, within the excitatory population, the results seen might just be a result of increasing the number of connections, rather than their spatial arrangement. We are interested in the effects of rewiring connections from short range to long range, and thus use the form suggested in Song and Wang (2014). We replace Equations (9) to (11) by

| (15) |

where

| (16) |

| (17) |

and

| (18) |

where |i − j| refers to the shortest distance between neurons i and j, measured on the ring. When p1 = p2 = p3 = 0, Equation (15) reverts to Equations (9) to (11). Note that when p1 = 1, the probability of an entry of AIE being 1 is independent of i and j, and that the expected number of nonzero entries in a row of AIE (i.e., the expected number of connections from the excitatory population to an inhibitory neuron) is independent of p1. Similar statements apply for the other two matrices and their parameters p2 and p3. Typical variation of AIE with p1 is shown in Figure 3 and it is clear that increasing p1 interpolates between purely local connections (p1 = 0) and uniform random connectivity (p1 = 1).

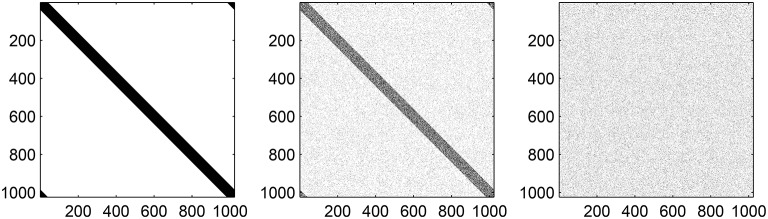

Figure 3.

Typical realizations of AIE for p1 = 0 (left) 0.5 (middle) and 1 (right). N = 1024, MIE = 40. Black corresponds to a matrix entry of 1, white to 0.
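The properties of the rewired matrices stated above (local band at p = 0, uniform probability at p = 1, expected row sum independent of p) can be illustrated numerically. The sketch below is an assumption-laden illustration: it uses a linear interpolation in p that satisfies those three properties, which may differ in detail from Equation (16), and the function names are hypothetical.

```python
import numpy as np

def ring_distance(N):
    """N x N matrix of shortest index distances |i - j| on a ring of N nodes."""
    idx = np.arange(N)
    d = np.abs(idx[:, None] - idx[None, :])
    return np.minimum(d, N - d)

def connection_probability(N, M, p):
    """Probability that entry (i, j) equals 1, for rewiring probability p.

    Assumed form: linear interpolation between a local band of half-width M
    (p = 0) and uniform connectivity with the same expected row sum (p = 1).
    """
    frac = (2 * M + 1) / N                   # fraction of nodes inside the local band
    near = ring_distance(N) <= M
    return np.where(near, 1.0 - p * (1.0 - frac), p * frac)

def sample_adjacency(N, M, p, rng):
    """Draw one 0/1 matrix with independent entries from the probabilities above."""
    return (rng.random((N, N)) < connection_probability(N, M, p)).astype(int)

rng = np.random.default_rng(1)
N, M = 1024, 40                              # N and M_IE as in Figure 3
for p in (0.0, 0.5, 1.0):
    P = connection_probability(N, M, p)
    A = sample_adjacency(N, M, p, rng)
    print(f"p = {p}: expected row sum = {P.sum(axis=1)[0]:.1f}, "
          f"mean sampled row sum = {A.sum(axis=1).mean():.2f}")
```

With this form the expected row sum is 2M + 1 for every p, so changes in network behavior as p varies reflect the spatial arrangement of connections rather than their number.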

We could simply simulate Equations (3)–(6) with Equation (15) for particular values of p1, p2, and p3 but we would like to gain a deeper understanding of the dynamics of such a network. The first approach is to take the continuum limit in which the number of neurons in each network goes to infinity, in a particular way.

2.3. Continuum limit

We take the continuum limit: N, MEI, MEE, MIE → ∞ such that MEI/N → αEI, MEE/N → αEE and MIE/N → αIE, where 0 < αEI, αEE, αIE < 1/2, and set the circumference of the ring of neurons to be 1. In this limit the sums (Equation 15) are replaced by integrals (more specifically, convolutions) with the connectivity kernels

| (19) |

| (20) |

| (21) |

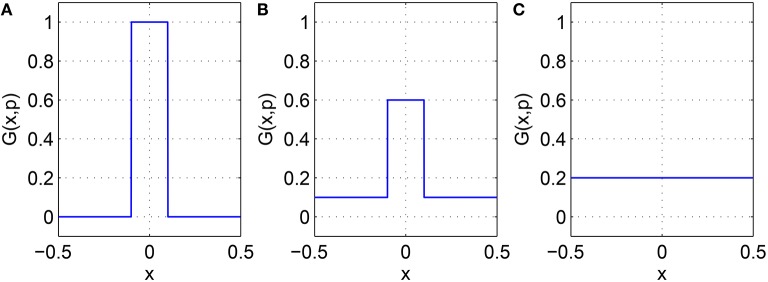

where GIE(x, p1) is the probability that a point in the excitatory population is connected to a point in the inhibitory population a distance x away, and similarly for the other two kernels. The effect of varying pj, j = 1, 2, 3, on one of the functions in Equations (19)–(21) is shown in Figure 4. Taking GIE for example, we see that its integral over the domain is independent of p1, i.e., the expected total number of connections is preserved, and similarly for the other two functions.

Figure 4.

One of the functions in Equations (19)–(21) with (A): p = 0, (B): p = 0.5, and (C): p = 1. For this example, α = 0.1. Note the similarity with the middle row of the matrices shown in Figure 3.
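The conservation of the expected number of connections in the continuum limit can be checked with a Riemann sum. The sketch below assumes a piecewise-constant kernel equal to 1 − p(1 − 2α) within distance α and 2αp elsewhere, which is the continuum analog of a linear interpolation between local and uniform connectivity; this form is an assumption and the function name `kernel` is illustrative.

```python
import numpy as np

def kernel(x, alpha, p):
    """Assumed connection probability at separation x on a ring of circumference 1."""
    d = np.minimum(np.abs(x), 1.0 - np.abs(x))     # shortest distance on the ring
    return np.where(d <= alpha, 1.0 - p * (1.0 - 2.0 * alpha), 2.0 * alpha * p)

alpha = 0.1                                  # as in Figure 4
n = 1000
x = (np.arange(n) + 0.5) / n                 # midpoints of n equal cells covering [0, 1)
integrals = {}
for p in (0.0, 0.5, 1.0):
    integrals[p] = kernel(x, alpha, p).mean()    # midpoint Riemann sum; cell width is 1/n
    print(f"p = {p}: integral = {integrals[p]:.6f}, 2*alpha = {2 * alpha}")
```

Under this assumed form the integral equals 2α for every p: the probability removed from short-range connections is exactly what is redistributed over the rest of the ring.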

Taking the continuum limit of Equations (3)–(6) we describe the dynamics of the θi and ϕi in terms of probability densities FE(θ, x, I, t) and FI(ϕ, x, J, t), respectively, where x and t are (continuous) space and time, and I and J are random variables with densities h(I) and g(J) respectively. FE satisfies the continuity equation (Luke et al., 2013)

| (22) |

and similarly FI satisfies

| (23) |

where

| (24) |

| (25) |

and

| (26) |

| (27) |

| (28) |

The forms of Equations (22) and (23) mean that they are amenable to the use of the Ott/Antonsen ansatz (Ott and Antonsen, 2008, 2009). This ansatz states that if the neurons are not identical (i.e., Δ > 0 for the networks studied here), solutions of the continuity equation [Equations (22) and (23)] decay exponentially onto a lower-dimensional manifold on which the θ and ϕ dependence of FE and FI, respectively, have a particular form. This form is a Fourier series in θ (or ϕ) in which the nth coefficient is some function to the nth power. [See Equation (A8), for example]. Thus, we can restrict Equations (22) and (23) to this manifold, thereby simplifying the dynamics.

The standard Kuramoto order parameter for an all-to-all coupled network with phases {θj} is the expected value of exp(iθj) (Strogatz, 2000). For the network studied here we can define the analogous spatially-dependent order parameters for the excitatory and inhibitory networks as

| (29) |

and

| (30) |

respectively. For fixed x and t, zE(x, t) is a complex number with a phase and a magnitude. The phase gives the most likely value of θ and the magnitude governs the “sharpness” of the probability distribution of θ (at that x and t), and similarly for zI(x, t) and ϕ (Laing, 2014a, 2015). We can also determine from zE and zI the instantaneous firing rate of each population (see Section 3.1 and Montbrió et al., 2015).
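The interpretation of the order parameter's phase and magnitude can be made concrete with a finite sample of phases. The sketch below is illustrative only: it uses the sample mean of exp(iθ) in place of the expectation, and the two synthetic phase distributions and the function name `order_parameter` are assumptions made for the example.

```python
import numpy as np

def order_parameter(theta):
    """Sample mean of exp(i*theta) over a set of phases."""
    return np.mean(np.exp(1j * np.asarray(theta)))

rng = np.random.default_rng(2)
clustered = 1.0 + 0.05 * rng.standard_normal(5000)   # phases tightly grouped near 1 rad
spread = rng.uniform(0.0, 2.0 * np.pi, 5000)         # phases spread over the whole circle

z_clustered = order_parameter(clustered)
z_spread = order_parameter(spread)
for name, z in (("clustered", z_clustered), ("spread", z_spread)):
    print(f"{name}: |z| = {abs(z):.3f}, arg z = {np.angle(z):.3f}")
```

A magnitude close to 1 indicates a sharply peaked phase distribution whose location is given by the argument of z, while a magnitude close to 0 indicates phases spread around the circle, in which case the argument carries little information.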

Performing manipulations as in Laing (2014a, 2015), Luke et al. (2013), and So et al. (2014) we obtain the continuum limit of Equations (3)–(6): evolution equations for zE and zI

| (31) |

| (32) |

together with Equations (24) and (25), where

| (33) |

| (34) |

| (35) |

and

| (36) |

where

| (37) |

and where by |x − y| in Equations (26)–(28) we mean the shortest distance between x and y given that they are both points on a circle, i.e., |x − y| = min (|x − y|, 1 − |x − y|).

The advantage of this continuum formulation is that bumps like that in Figure 1 are fixed points of Equations (31) and (32) and Equations (24) and (25). Once these equations have been spatially discretized, we can find fixed points of them using Newton's method, and determine the stability of these fixed points by finding the eigenvalues of the linearization around them. We can also follow these fixed points as parameters are varied, detecting (local) bifurcations (Laing, 2014b). The results of varying p1, p2 and p3 independently are shown in Section 3.1.
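The two steps described above, finding a fixed point by Newton's method and reading off its stability from the eigenvalues of the linearization, can be sketched on a small example. The two-variable system below is a toy chosen for illustration and is not the discretized bump equations; the finite-difference Jacobian and the function names are likewise assumptions.

```python
import numpy as np

def f(u):
    """Toy right-hand side of du/dt = f(u); NOT the bump equations."""
    return np.array([1.0 - u[0] ** 2 - u[1], u[0] - u[1]])

def jacobian(func, u, eps=1e-7):
    """Forward-difference approximation to the Jacobian of func at u."""
    f0 = func(u)
    J = np.empty((f0.size, u.size))
    for k in range(u.size):
        du = np.zeros_like(u)
        du[k] = eps
        J[:, k] = (func(u + du) - f0) / eps
    return J

def newton(func, u0, tol=1e-10, max_iter=50):
    """Newton iteration for a root of func, i.e. a fixed point of du/dt = func(u)."""
    u = np.array(u0, dtype=float)
    for _ in range(max_iter):
        residual = func(u)
        if np.linalg.norm(residual) < tol:
            return u
        u = u - np.linalg.solve(jacobian(func, u), residual)
    raise RuntimeError("Newton iteration did not converge")

u_star = newton(f, [0.5, 0.5])
eigenvalues = np.linalg.eigvals(jacobian(f, u_star))
stable = bool(np.all(eigenvalues.real < 0))   # all eigenvalues in the left half-plane
print("fixed point:", u_star, "eigenvalues:", eigenvalues, "stable:", stable)
```

For the spatially discretized continuum equations the state vector would hold the real and imaginary parts of the order parameters and the synaptic variables at each grid point, but the logic is unchanged: a bump is stable when every eigenvalue of the Jacobian has negative real part.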

2.4. Infinite ensembles

We now consider the case where N is fixed and finite, and so are the matrices AIE, AEE and AEI, but we average over an infinite ensemble of networks with these connectivities, where each member of the ensemble has a different (but consistent) realization of the random currents Ii and Ji (Barlev et al., 2011; Laing et al., 2012). This procedure results in 4N ordinary differential equations (ODEs), 2N of them for complex quantities and the other 2N for real quantities. Thus, there is no reduction of dimension from the original system (Equations 3–6), but as in Section 2.3, bump states will be fixed points of these ODEs.

Letting the number of members in the ensemble go to infinity, we describe the state of the excitatory network by the probability density function

| (38) |

and that of the inhibitory one by

| (39) |

which satisfy the continuity equations

| (40) |

and

| (41) |

where dθj/dt and dϕj/dt are given by Equations (3) and (4).

Performing the manipulations in the Appendix we obtain

| (42) |

| (43) |

for j = 1, 2, …N where

| (44) |

| (45) |

| (46) |

and

| (47) |

| (48) |

for i = 1, 2, …N. Equations (42)–(48) form a complete description of the expected behavior of a network with connectivities given by the matrices AIE, AEE and AEI. Note the similarities with Equations (31)–(35) and Equations (24) and (25). As mentioned above, the advantage of this formulation is that states like that in Figure 1 will be fixed points of Equations (42)–(48), for the specified connectivities.

Recalling that the matrices AIE, AEE and AEI depend on the parameters p1, p2 and p3 respectively we now investigate how solutions of Equations (42)–(48) depend on these parameters. One difficulty in trying to vary, say, p1, is that the entries of AIE do not depend continuously on p1. Indeed, as presented, one should recalculate AIE each time p1 is changed. In order to generate results comparable with those from Section 2.3 we introduce a consistent family of matrices, following Medvedev (2014). Consider AIE (similar procedures apply for the other two matrices) and define an N × N matrix r, each entry of which is independently and randomly chosen from a uniform distribution on the interval (0, 1). The matrix r is now considered to be fixed, and we define AIE as follows:

| (49) |

where Θ is the Heaviside step function and the indices are taken modulo N. Comparing this with Equation (16) we see that for a fixed p1, generating a new r and using Equation (49) is equivalent to generating AIE using Equation (16). The reason for using Equation (49) is that since the rij are chosen once and then fixed, an entry in AIE will switch from 0 to 1 (or vice versa) at most once as p1 is varied monotonically in the interval [0, 1].
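The claim that each entry switches at most once as the rewiring probability is varied monotonically can be checked directly. The sketch below assumes that the matrix is obtained by comparing a connection probability with the fixed matrix r through a Heaviside step, and it assumes a linear-in-p form for that probability (local band at p = 0, uniform at p = 1); the function names and the small network size are illustrative.

```python
import numpy as np

def connection_probability(N, M, p):
    """Assumed probability of a connection between nodes i and j on a ring."""
    idx = np.arange(N)
    d = np.abs(idx[:, None] - idx[None, :])
    d = np.minimum(d, N - d)                 # shortest distance on the ring
    frac = (2 * M + 1) / N
    return np.where(d <= M, 1.0 - p * (1.0 - frac), p * frac)

def adjacency(r, M, p):
    """Heaviside step of (probability - r), using the fixed random matrix r."""
    N = r.shape[0]
    return (connection_probability(N, M, p) > r).astype(np.int8)

rng = np.random.default_rng(3)
N, M = 256, 10                               # small network, for a quick check
r = rng.random((N, N))                       # drawn once and then held fixed

ps = np.linspace(0.0, 1.0, 101)
family = np.array([adjacency(r, M, p) for p in ps])
# Count, for every entry, how many times it changes as p increases from 0 to 1.
flips = np.abs(np.diff(family, axis=0)).sum(axis=0)
print("maximum number of switches of any entry:", flips.max())
print("row sums at p = 0:", family[0].sum(axis=1)[:3],
      "mean row sum at p = 1:", family[-1].sum(axis=1).mean())
```

Because the assumed probability is monotone in p for every pair (i, j), decreasing inside the local band and increasing outside it, an entry can cross its threshold only once, so the matrices change gradually during a quasistatic sweep.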

The effects of quasistatically increasing p1 and p3 for Equations (42)–(48) are shown in Section 3.2.

3. Results

3.1. Results for continuum limit

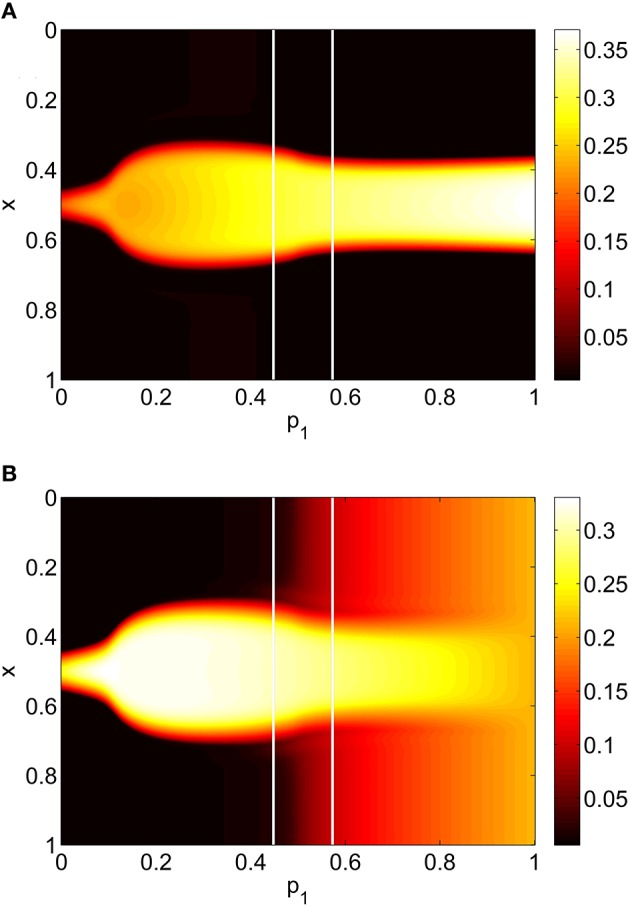

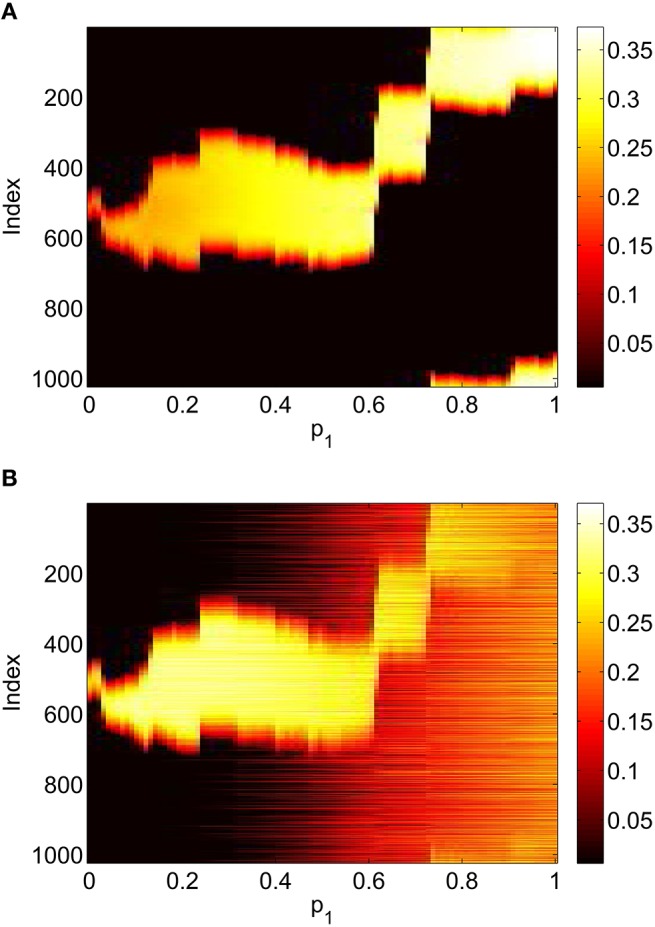

For the system Equations (31) and (32) and Equations (24) and (25) we discretize the spatial domain into 1024 evenly spaced points and approximate the integrals in Equations (33)–(35) with Riemann sums. We numerically integrate the spatially-discretized evolution equations in time, using appropriate initial conditions, until a steady state is reached. This steady state is then continued using pseudo-arclength continuation, and the stability of the solutions found determined by examining the eigenvalues of the Jacobian evaluated at them (Laing, 2014b). The increment between successive values of the pi found during continuation is not fixed and the numerical results found were interpolated to a uniform grid for plotting in Figures 5, 7, 8. We consider varying p1, p2 and p3 independently, keeping the other two parameters fixed at zero. The results of varying p1 are shown in Figure 5, where we plot the firing rate of the two populations, derived as Re(wi)/π where for i = I, E, as in Montbrió et al. (2015), where the zi are fixed points of Equations (31) and (32). We see an increase and then decrease in bump width as p1 is increased. There is also a pair of supercritical Hopf bifurcations, between which the bump is unstable (It is only weakly unstable, with the rightmost eigenvalue of the Jacobian having a maximal real part of 0.015 in this interval). At the leftmost Hopf bifurcation the Jacobian has eigenvalues ±1.8191i and at the rightmost it has eigenvalues ±1.7972i, with all other eigenvalues having negative real parts. One notable aspect is the increase in firing rate of the inhibitory population “outside” the bump as p1 is increased, such that when p1 = 1 the firing rate in this population is spatially homogeneous. This is to be expected, as there are no inhibitory-to-inhibitory connections, and when p1 = 1 all inhibitory neurons receive the same input from the excitatory population.

Figure 5.

Firing rate for (A): excitatory population and (B): inhibitory population, as a function of p1, with p2 = p3 = 0. There is a Hopf bifurcation on both white vertical lines and the bump is unstable between these. Other parameters: Δ = 0.02, I0 = −0.16, J0 = −0.4, n = 2, gEE = 25, gIE = 25, gEI = 7.5, αIE = 40/1024, αEE = 40/1024, αEI = 60/1024 and τ = 10.
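Pseudo-arclength continuation, used above to follow steady states, can be illustrated on a scalar problem with a saddle-node. The sketch below follows solutions of the toy equation f(u, p) = p − u² = 0 around its fold at p = 0; it is not the bump problem, and the step size, function names and tangent-orientation rule are illustrative assumptions.

```python
import numpy as np

def f(u, p):
    """Toy steady-state equation with a saddle-node (fold) at u = 0, p = 0."""
    return p - u ** 2

def grad_f(u, p):
    """Partial derivatives (df/du, df/dp)."""
    return np.array([-2.0 * u, 1.0])

def unit_tangent(x, previous):
    """Unit tangent to the solution curve at x = (u, p), oriented like `previous`."""
    fu, fp = grad_f(*x)
    t = np.array([fp, -fu])                  # orthogonal to the gradient of f
    t /= np.linalg.norm(t)
    return t if t @ previous >= 0 else -t

def continue_branch(u0, p0, direction, ds=0.05, steps=80):
    """Predictor-corrector pseudo-arclength continuation from (u0, p0)."""
    x = np.array([u0, p0], dtype=float)
    t = np.array(direction, dtype=float)
    branch = [x.copy()]
    for _ in range(steps):
        t = unit_tangent(x, t)
        x_pred = x + ds * t                  # predictor: step along the tangent
        y = x_pred.copy()
        for _ in range(20):                  # corrector: Newton on the augmented system
            F = np.array([f(*y), t @ (y - x_pred)])
            if np.linalg.norm(F) < 1e-12:
                break
            J = np.vstack([grad_f(*y), t])
            y = y - np.linalg.solve(J, F)
        x = y
        branch.append(x.copy())
    return np.array(branch)

branch = continue_branch(1.0, 1.0, direction=[0.0, -1.0])   # start by decreasing p
print("smallest p reached:", branch[:, 1].min(), "final point:", branch[-1])
```

Because arclength rather than p is the continuation parameter, the corrector remains well posed at the fold and the computed branch passes from the upper solution (u > 0) to the lower one (u < 0). The step along the branch is fixed in arclength, so the increments in p are not uniform.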

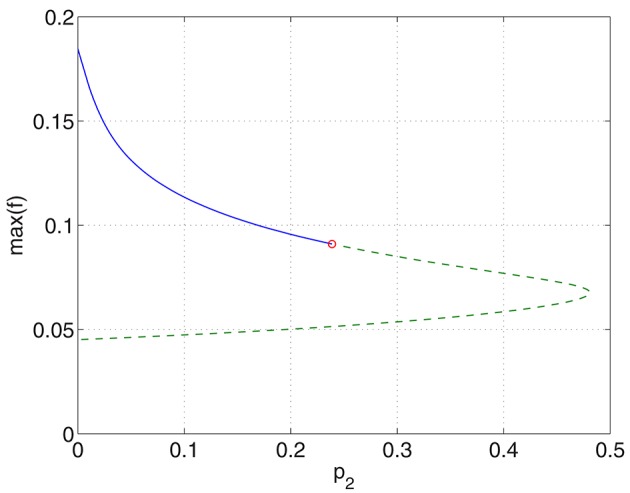

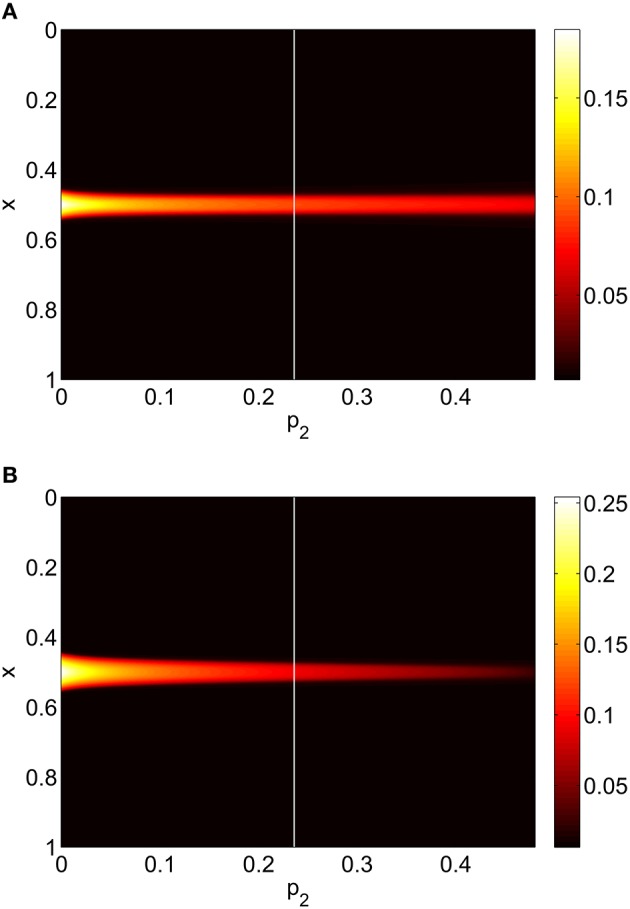

Increasing p2 while keeping p1 = p3 = 0 we find that the bump undergoes a Hopf bifurcation (Jacobian has eigenvalues ±0.3404i) and then is destroyed in a saddle-node bifurcation at p2 ≈ 0.48, as shown in Figure 6. The behavior of the bumps for 0 ≤ p2 ≤ 0.48 is shown in Figure 7.

Figure 6.

Maximum (over x) of the firing rate for the excitatory population as a function of p2 with p1 = p3 = 0. Solid: stable; dashed: unstable. The Hopf bifurcation is marked with a circle. Other parameters: Δ = 0.02, I0 = −0.16, J0 = −0.4, n = 2, gEE = 25, gIE = 25, gEI = 7.5, αIE = 40/1024, αEE = 40/1024, αEI = 60/1024 and τ = 10.

Figure 7.

Firing rate for (A): excitatory population and (B): inhibitory population, as a function of p2, with p3 = p1 = 0. There is a Hopf bifurcation at the white vertical line and the bump is destroyed in saddle-node bifurcation at p2 ≈ 0.48. Other parameters: Δ = 0.02, I0 = −0.16, J0 = −0.4, n = 2, gEE = 25, gIE = 25, gEI = 7.5, αIE = 40/1024, αEE = 40/1024, αEI = 60/1024 and τ = 10.

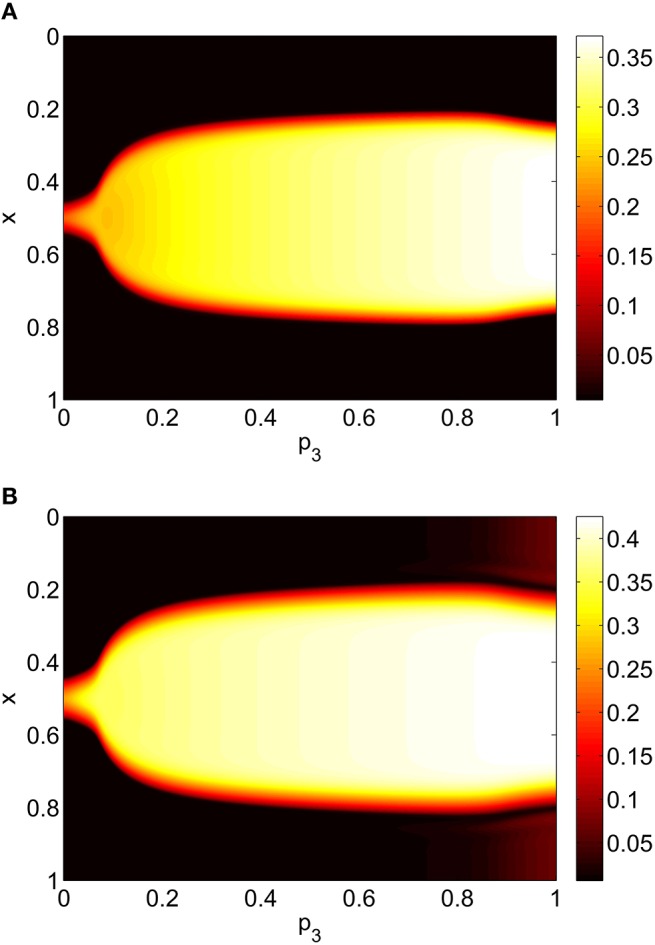

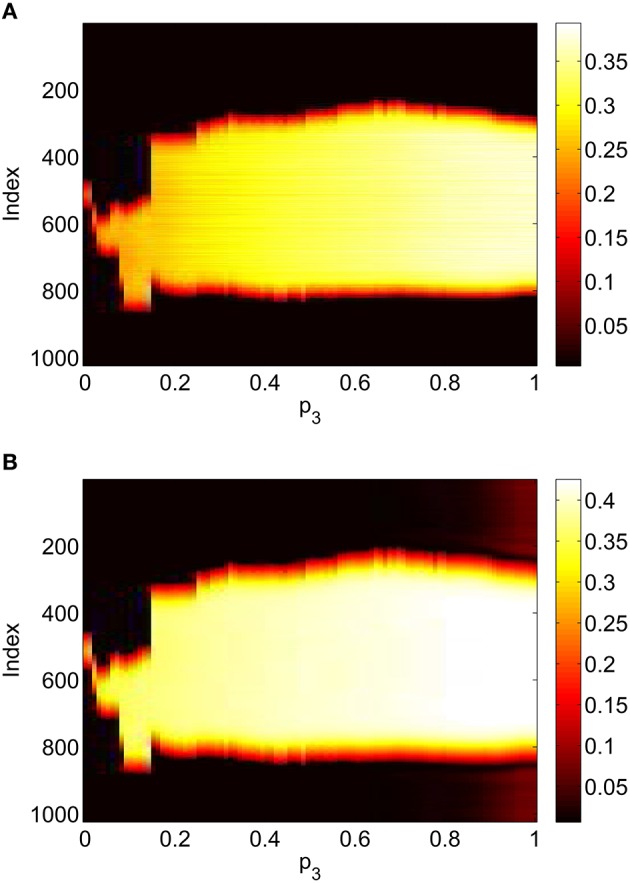

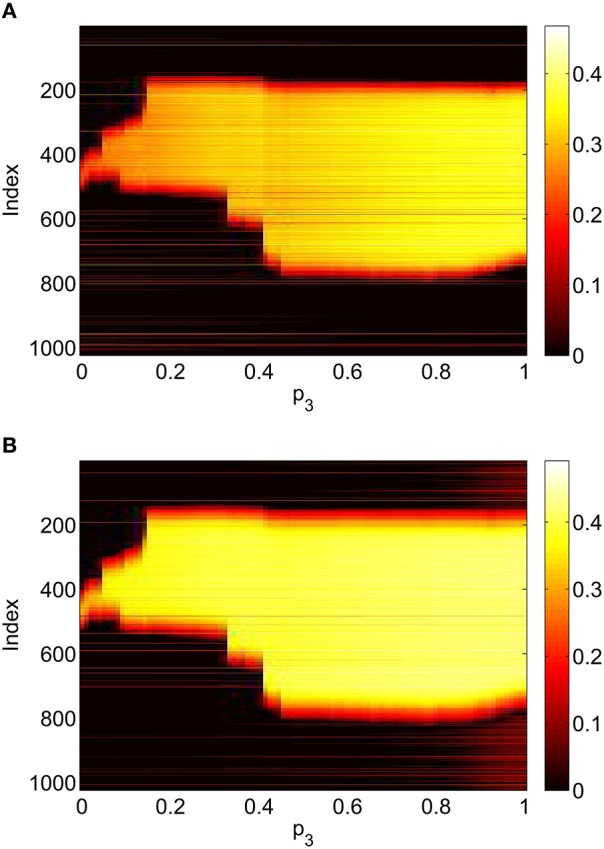

Varying p3 we obtain Figure 8, where there are no bifurcations as p3 is increased all the way to 1, corresponding to the case where all excitatory neurons feel the same inhibition, just a weighted mean of the output from the inhibitory population. We again see an increase and then slight decrease in bump width as p3 is increased.

Figure 8.

Firing rate for (A): excitatory population and (B): inhibitory population, as a function of p3, with p2 = p1 = 0. Other parameters: Δ = 0.02, I0 = −0.16, J0 = −0.4, n = 2, gEE = 25, gIE = 25, gEI = 7.5, αIE = 40/1024, αEE = 40/1024, αEI = 60/1024 and τ = 10.

While a Hopf bifurcation of a bump may seem undesirable from a neurocomputational point of view, it should be kept in mind that oscillations are an essential phenomenon in many different neural networks, and they are widely studied (Ashwin et al., 2016).

We have only varied one of p1, p2 and p3, keeping the other two probabilities at zero. A clearer picture of the system's behavior could be obtained by simultaneously varying two, or all three, of these probabilities. We leave this as future work, but mention that for the special case p1 = p2 = p3 = p, the bump persists and is stable up to p ≈ 0.49, where it undergoes a saddle-node bifurcation (not shown).

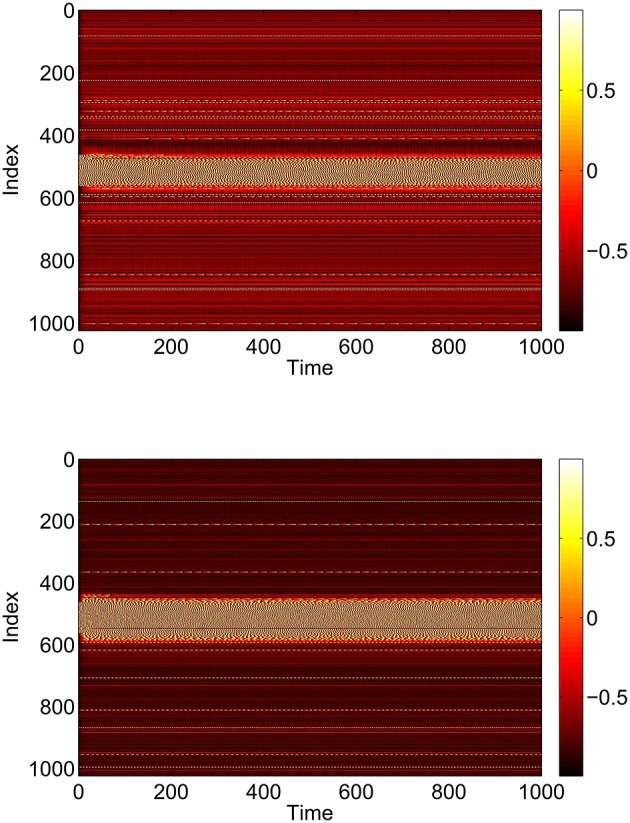

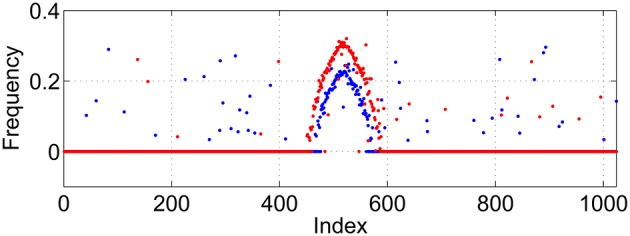

3.2. Results for infinite ensemble

This section refers to Equations (42)–(48). In Figure 9 we show the results of slowly increasing p1, while keeping p2 = p3 = 0. We initially set p1 = 0 and integrated Equations (42)–(48) to a steady state, using initial conditions that give a bump solution. We then increased p1 by 0.01 and integrated Equations (42)–(48) again for 10,000 time units, using as an initial condition the final state of the previous integration. We continued this process up to p1 = 1. The firing rate for the jth excitatory neuron is Re(wj)/π where , and similarly for an inhibitory neuron. Comparing Figure 9 with Figure 5 we see the same behavior, the main difference being that the bump now moves in an unpredictable way around the domain as p1 is increased. This is due to the system no longer being translationally invariant, and the bump moving to a position in which it is stable (Thul et al., 2016). Unlike the situation shown in Figure 5 we did not observe any Hopf bifurcations, for this realization of the AIE. Presumably this is also a result of breaking the translational invariance and the weakly unstable nature of the bump shown in Figure 5 between the Hopf bifurcations.

Figure 9.

Firing rate for (A): excitatory population and (B): inhibitory population, as a function of p1, with p2 = p3 = 0. Compare with Figure 5. Other parameters: Δ = 0.02, I0 = −0.16, J0 = −0.4, n = 2, gEE = 25, gIE = 25, gEI = 7.5, N = 1024, MIE = 40, MEE = 40, MEI = 60 and τ = 10.

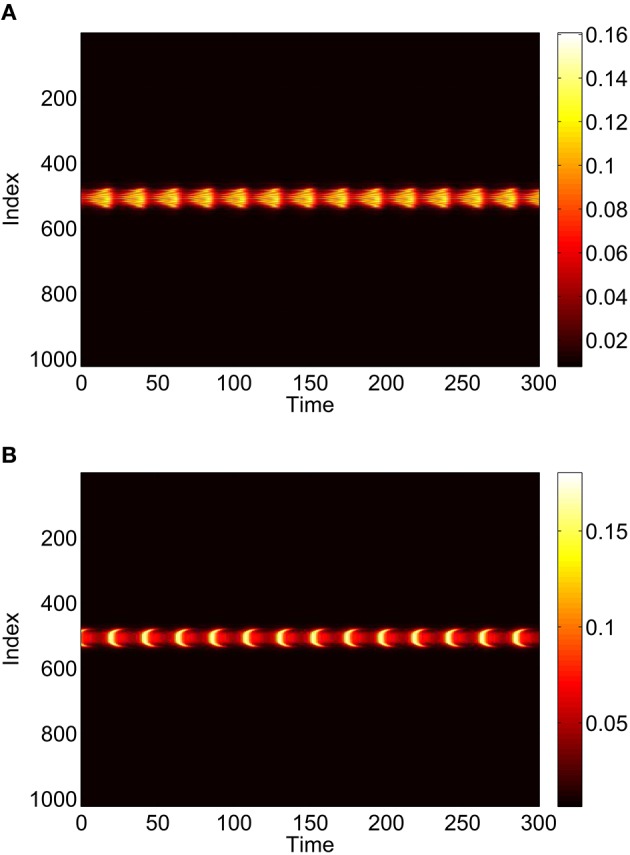

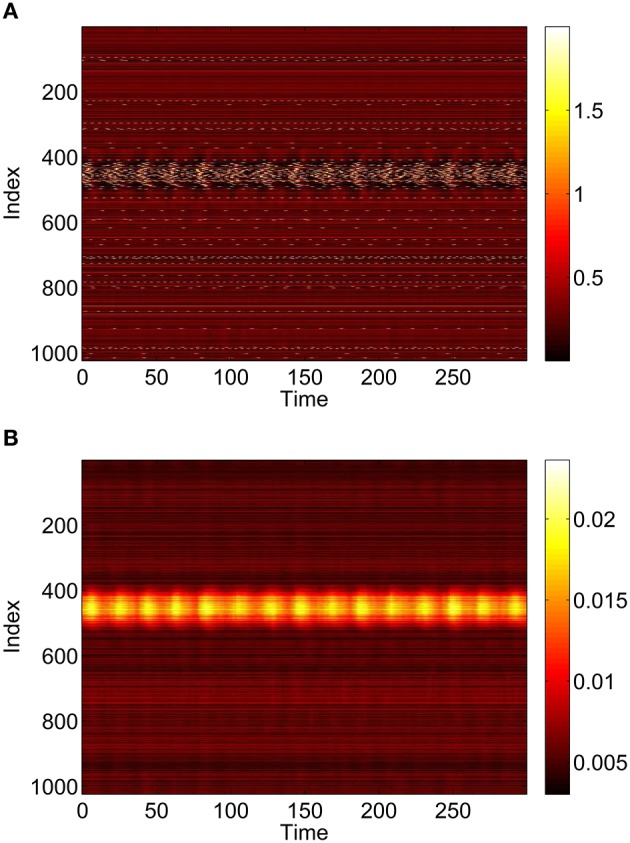

Repeating this process as p3 is varied with p1 = p2 = 0 we obtain the results in Figure 10. Comparing with Figure 8 we see very good agreement, although the bump does move considerably for small p3, as in Figure 9. Varying p2 with p1 = p3 = 0 we obtain similar results to those in Figure 7 (not shown). Typical behavior of Equations (42)–(48) with p2 = 0.3 (i.e., beyond the Hopf bifurcation shown in Figure 7) is shown in Figure 11, where the oscillations are clearly seen.

Figure 10.

Firing rate for (A): excitatory population and (B): inhibitory population, as a function of p3, with p2 = p1 = 0. Compare with Figure 8. Other parameters: Δ = 0.02, I0 = −0.16, J0 = −0.4, n = 2, gEE = 25, gIE = 25, gEI = 7.5, N = 1024, MIE = 40, MEE = 40, MEI = 60 and τ = 10.

Figure 11.

Instantaneous firing rate for (A): excitatory population and (B): inhibitory population, with p2 = 0.3 and p3 = p1 = 0. Other parameters: Δ = 0.02, I0 = −0.16, J0 = −0.4, n = 2, gEE = 25, gIE = 25, gEI = 7.5, N = 1024, MIE = 40, MEE = 40, MEI = 60 and τ = 10.

3.3. Results for original network

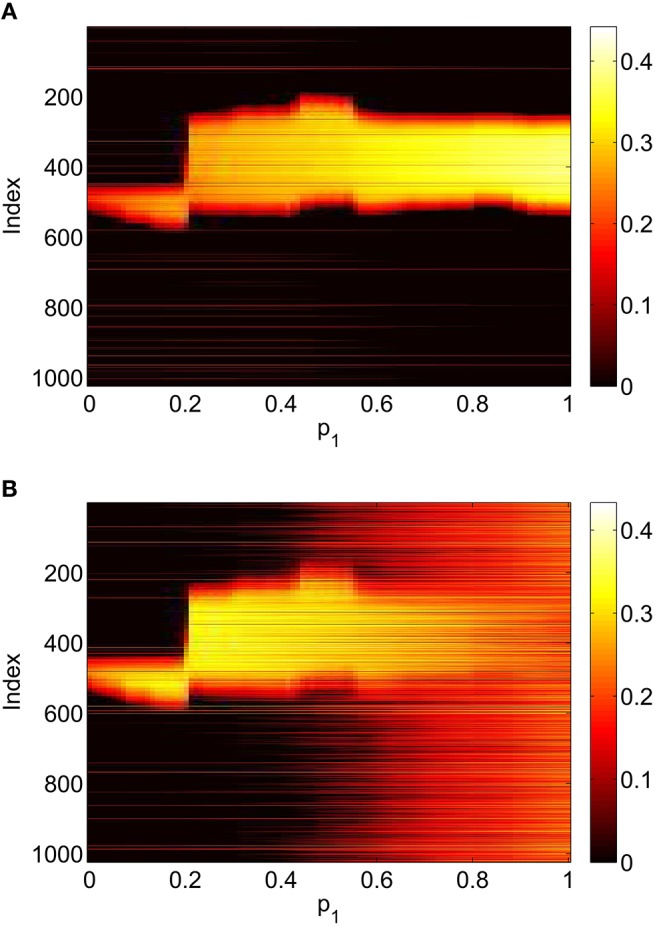

To verify the results obtained above we ran the full network Equations (3)–(6) but using the connectivity Equation (49) (and similar constructions for AEE and AEI) to calculate Equation (15). The frequency was measured directly from simulations. Varying p1 we obtain the results in Figure 12; again, no oscillatory behavior associated with a Hopf bifurcation was observed and the results are similar to those in Figure 9. Varying p3 we obtained Figure 13 (compare with Figure 10). Figure 14 shows oscillatory behavior at p2 = 0.3, p1 = p3 = 0 (the same parameter values as used in Figure 11).

Figure 12.

Firing rate for (A): excitatory population and (B): inhibitory population in the full network Equations (3)–(6), averaged over a time window of length 500, as a function of p1 with p2 = p3 = 0. Compare with Figure 9. Other parameters: Δ = 0.02, I0 = −0.16, J0 = −0.4, n = 2, gEE = 25, gIE = 25, gEI = 7.5, N = 1024, MIE = 40, MEE = 40, MEI = 60 and τ = 10.

Figure 13.

Firing rate for (A): excitatory population and (B): inhibitory population in the full network Equations (3)–(6), averaged over a time window of length 500, as a function of p3 with p2 = p1 = 0. Compare with Figure 10. Other parameters: Δ = 0.02, I0 = −0.16, J0 = −0.4, n = 2, gEE = 25, gIE = 25, gEI = 7.5, N = 1024, MIE = 40, MEE = 40, MEI = 60 and τ = 10.

Figure 14.

Behavior of the full network Equations (3)–(6) with p2 = 0.3 and p3 = p1 = 0. (A): 1 − cos θj, (B): vj. Compare with Figure 11. Other parameters: Δ = 0.02, I0 = −0.16, J0 = −0.4, n = 2, gEE = 25, gIE = 25, gEI = 7.5, N = 1024, MIE = 40, MEE = 40, MEI = 60 and τ = 10.

Note that the results in Figures 9–14 are each for a single realization of a typical (parameterized) small-world network. To gain insight into general small-world networks it would be of interest to study the statistics of the behavior of such networks.

4. Discussion

We have considered the effects of randomly adding long-range and simultaneously removing short-range connections in a network of model theta neurons which is capable of supporting spatially localized bump solutions. Such rewiring makes the networks small-world, at least for small values of the rewiring probabilities. By using theta neurons we are able to use the Ott/Antonsen ansatz to derive descriptions of the networks in two limits: an infinite number of neurons, and an infinite ensemble of finite networks, each with the same connectivity. The usefulness of this is that the bumps of interest are fixed points of the dynamical equations derived in these ways, and can thus be found, their stability determined, and followed as parameters are varied using standard dynamical systems techniques.

For the parameters chosen we found bumps to be surprisingly robust: in several cases a rewiring probability could be taken from 0 to 1 without destroying a bump. However, rewiring connections within the excitatory population (increasing p2) was found to destabilize a bump through a Hopf bifurcation and later destroy the unstable bump in a saddle-node bifurcation. Simulations of the full network were used to verify our results.

The network studied has many parameters: the spatial spread of local couplings, the timescale of excitatory synapses, the connection strengths within and between populations, and the distributions of heterogeneous input currents. These were all set so that the network without rewiring supported a stable bump solution, but we have not investigated the effects of varying any of these parameters. However, even without considering rewiring, Equations (31)–(35) and Equations (24) and (25) provide a framework for investigating the effects of varying these parameters on the existence and stability of bump solutions, since these continuum equations are derived directly from networks of spiking neurons, unlike many neural field models.

Author contributions

All authors listed have made substantial, direct and intellectual contribution to the work, and approved it for publication.

Conflict of interest statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

I thank the referees for their useful suggestions.

Appendix

Mathematical details relating to Section 2.4

In the limit of an infinite ensemble we have

| (A1) |

| (A2) |

| (A3) |

where is the marginal distribution for θj, given by

| (A4) |

and similarly

| (A5) |

Multiplying the continuity equation (40) by ∏k≠j dθk dIk and integrating we find that each satisfies

| (A6) |

Similarly each satisfies

| (A7) |

Using the Ott/Antonsen ansatz we write

| (A8) |

and

| (A9) |

for some functions and , where “c.c.” means the complex conjugate of the previous term. Substituting Equation (A8) into Equation (A6) and Equation (A9) into Equation (A7) we find that

| (A10) |

and

| (A11) |

Substituting Equations (A8) and (A9) into Equations (A1)–(A3) we obtain

| (A12) |

| (A13) |

| (A14) |

where H is given by Equation (36). Using standard properties of the Lorentzian one can perform the integrals in Equations (A12)–(A14) and defining and we have

| (A15) |

| (A16) |

| (A17) |

Evaluating Equation (A10) at Ij = I0 + iΔ and Equation (A11) at Jj = J0 + iΔ we obtain

| (A18) |

| (A19) |

for j = 1, 2, …, N. Equations (A15)–(A19) are Equations (42)–(46) in Section 2.4.
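The "standard properties of the Lorentzian" used in this appendix amount to a contour-integration identity: if g is a Lorentzian with centre I0 and half-width Δ, and f is analytic and bounded in the upper half of the complex plane, then the average of f over g equals f evaluated at I0 + iΔ. The Python sketch below checks this identity numerically. The test function f, the parameter values, and the function name are hypothetical choices for illustration and are not taken from the paper.

```python
import numpy as np


def lorentzian_average(f, centre, half_width, n=2000):
    """Average f over a Lorentzian density by numerical quadrature.

    With the substitution I = centre + half_width * tan(phi), the weighted
    measure g(I) dI becomes dphi / pi, so the average reduces to a plain
    integral over phi in (-pi/2, pi/2), done here with the midpoint rule.
    """
    phi = -np.pi / 2 + (np.arange(n) + 0.5) * np.pi / n
    return np.mean(f(centre + half_width * np.tan(phi)))


if __name__ == "__main__":
    centre, half_width = 0.5, 0.2   # assumed values of I0 and Delta
    pole = 0.3 - 0.7j               # pole in the lower half-plane

    def f(current):
        # Analytic and bounded in the upper half-plane (hypothetical test function).
        return 1.0 / (current - pole)

    numeric = lorentzian_average(f, centre, half_width)
    exact = f(centre + 1j * half_width)   # value at I0 + i*Delta
    print(abs(numeric - exact))
```

This is why evaluating the evolution equations at the complex values I0 + iΔ and J0 + iΔ replaces the integrals over the heterogeneous input currents.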

References

- Abrams D., Strogatz S. (2004). Chimera states for coupled oscillators. Phys. Rev. Lett. 93, 174102. doi: 10.1103/PhysRevLett.93.174102

- Abrams D., Strogatz S. (2006). Chimera states in a ring of nonlocally coupled oscillators. Int. J. Bifurcat. Chaos 16, 21–37. doi: 10.1142/S0218127406014551

- Amari S.-I. (1977). Dynamics of pattern formation in lateral-inhibition type neural fields. Biol. Cybern. 27, 77–87. doi: 10.1007/BF00337259

- Ashwin P., Coombes S., Nicks R. (2016). Mathematical frameworks for oscillatory network dynamics in neuroscience. J. Math. Neurosci. 6, 1–92. doi: 10.1186/s13408-015-0033-6

- Barlev G., Antonsen T. M., Ott E. (2011). The dynamics of network coupled phase oscillators: an ensemble approach. Chaos 21:025103. doi: 10.1063/1.3596711

- Blomquist P., Wyller J., Einevoll G. T. (2005). Localized activity patterns in two-population neuronal networks. Physica D 206, 180–212. doi: 10.1016/j.physd.2005.05.004

- Börgers C., Kopell N. (2005). Effects of noisy drive on rhythms in networks of excitatory and inhibitory neurons. Neural Comput. 17, 557–608. doi: 10.1162/0899766053019908

- Brackley C., Turner M. S. (2009). Persistent fluctuations of activity in undriven continuum neural field models with power-law connections. Phys. Rev. E 79:011918. doi: 10.1103/PhysRevE.79.011918

- Brackley C. A., Turner M. S. (2014). Heterogeneous connectivity in neural fields: a stochastic approach, in Neural Fields, eds Coombes S., beim Graben P., Potthast R., Wright J. J. (Berlin; Heidelberg: Springer), 213–234.

- Bressloff P. C. (2012). Spatiotemporal dynamics of continuum neural fields. J. Phys. A 45:033001. doi: 10.1088/1751-8113/45/3/033001

- Bullmore E., Sporns O. (2009). Complex brain networks: graph theoretical analysis of structural and functional systems. Nat. Rev. Neurosci. 10, 186–198. doi: 10.1038/nrn2575

- Camperi M., Wang X.-J. (1998). A model of visuospatial working memory in prefrontal cortex: recurrent network and cellular bistability. J. Comput. Neurosci. 5, 383–405. doi: 10.1023/A:1008837311948

- Compte A., Brunel N., Goldman-Rakic P. S., Wang X.-J. (2000). Synaptic mechanisms and network dynamics underlying spatial working memory in a cortical network model. Cereb. Cortex 10, 910–923. doi: 10.1093/cercor/10.9.910

- Ermentrout B. (1996). Type I membranes, phase resetting curves, and synchrony. Neural Comput. 8, 979–1001. doi: 10.1162/neco.1996.8.5.979

- Ermentrout B. (1998). Neural networks as spatio-temporal pattern-forming systems. Rep. Prog. Phys. 61, 353–430. doi: 10.1088/0034-4885/61/4/002

- Ermentrout B. (2006). Gap junctions destroy persistent states in excitatory networks. Phys. Rev. E 74:031918. doi: 10.1103/PhysRevE.74.031918

- Ermentrout G. B., Kopell N. (1986). Parabolic bursting in an excitable system coupled with a slow oscillation. SIAM J. Appl. Math. 46, 233–253. doi: 10.1137/0146017

- Gutkin B. S., Laing C. R., Colby C. L., Chow C. C., Ermentrout G. B. (2001). Turning on and off with excitation: the role of spike-timing asynchrony and synchrony in sustained neural activity. J. Comput. Neurosci. 11, 121–134. doi: 10.1023/A:1012837415096

- Laing C., Chow C. (2001). Stationary bumps in networks of spiking neurons. Neural Comput. 13, 1473–1494. doi: 10.1162/089976601750264974

- Laing C. R. (2009). The dynamics of chimera states in heterogeneous Kuramoto networks. Physica D 238, 1569–1588. doi: 10.1016/j.physd.2009.04.012

- Laing C. R. (2014a). Derivation of a neural field model from a network of theta neurons. Phys. Rev. E 90:010901. doi: 10.1103/PhysRevE.90.010901

- Laing C. R. (2014b). Numerical bifurcation theory for high-dimensional neural models. J. Math. Neurosci. 4, 13. doi: 10.1186/2190-8567-4-13

- Laing C. R. (2015). Exact neural fields incorporating gap junctions. SIAM J. Appl. Dyn. Syst. 14, 1899–1929. doi: 10.1137/15M1011287

- Laing C. R., Chow C. C. (2002). A spiking neuron model for binocular rivalry. J. Comput. Neurosci. 12, 39–53. doi: 10.1023/A:1014942129705

- Laing C. R., Rajendran K., Kevrekidis I. G. (2012). Chimeras in random non-complete networks of phase oscillators. Chaos 22:013132. doi: 10.1063/1.3694118

- Laing C. R., Troy W. (2003). PDE methods for nonlocal models. SIAM J. Appl. Dyn. Syst. 2, 487–516. doi: 10.1137/030600040

- Laing C. R., Troy W. C., Gutkin B., Ermentrout G. B. (2002). Multiple bumps in a neuronal model of working memory. SIAM J. Appl. Math. 63, 62–97. doi: 10.1137/S0036139901389495

- Luke T. B., Barreto E., So P. (2013). Complete classification of the macroscopic behavior of a heterogeneous network of theta neurons. Neural Comput. 25, 3207–3234. doi: 10.1162/NECO_a_00525

- Luke T. B., Barreto E., So P. (2014). Macroscopic complexity from an autonomous network of networks of theta neurons. Front. Comput. Neurosci. 8:145. doi: 10.3389/fncom.2014.00145

- Medvedev G. S. (2014). Small-world networks of Kuramoto oscillators. Physica D 266, 13–22. doi: 10.1016/j.physd.2013.09.008

- Montbrió E., Pazó D., Roxin A. (2015). Macroscopic description for networks of spiking neurons. Phys. Rev. X 5:021028. doi: 10.1103/PhysRevX.5.021028

- Newman M. E., Watts D. J. (1999). Renormalization group analysis of the small-world network model. Phys. Lett. A 263, 341–346. doi: 10.1016/S0375-9601(99)00757-4

- Ott E., Antonsen T. M. (2008). Low dimensional behavior of large systems of globally coupled oscillators. Chaos 18:037113. doi: 10.1063/1.2930766

- Ott E., Antonsen T. M. (2009). Long time evolution of phase oscillator systems. Chaos 19:023117. doi: 10.1063/1.3136851

- Ott E., Hunt B. R., Antonsen T. M. (2011). Comment on “Long time evolution of phase oscillator systems” [Chaos 19, 023117 (2009)]. Chaos 21:025112. doi: 10.1063/1.3574931

- Owen M., Laing C., Coombes S. (2007). Bumps and rings in a two-dimensional neural field: splitting and rotational instabilities. New J. Phys. 9, 378. doi: 10.1088/1367-2630/9/10/378

- Panaggio M. J., Abrams D. M. (2015). Chimera states: coexistence of coherence and incoherence in networks of coupled oscillators. Nonlinearity 28:R67. doi: 10.1088/0951-7715/28/3/R67

- Pinto D. J., Ermentrout G. B. (2001). Spatially structured activity in synaptically coupled neuronal networks: II. Lateral inhibition and standing pulses. SIAM J. Appl. Math. 62, 226–243. doi: 10.1137/S0036139900346465

- Puljic M., Kozma R. (2008). Narrow-band oscillations in probabilistic cellular automata. Phys. Rev. E 78:026214. doi: 10.1103/PhysRevE.78.026214

- Redish A. D., Elga A. N., Touretzky D. S. (1996). A coupled attractor model of the rodent head direction system. Network 7, 671–685. doi: 10.1088/0954-898X_7_4_004

- So P., Luke T. B., Barreto E. (2014). Networks of theta neurons with time-varying excitability: macroscopic chaos, multistability, and final-state uncertainty. Physica D 267, 16–26. doi: 10.1016/j.physd.2013.04.009

- Song H. F., Wang X.-J. (2014). Simple, distance-dependent formulation of the Watts-Strogatz model for directed and undirected small-world networks. Phys. Rev. E 90:062801. doi: 10.1103/PhysRevE.90.062801

- Strogatz S. (2000). From Kuramoto to Crawford: exploring the onset of synchronization in populations of coupled oscillators. Physica D 143, 1–20. doi: 10.1016/S0167-2789(00)00094-4

- Thul R., Coombes S., Laing C. R. (2016). Neural field models with threshold noise. J. Math. Neurosci. 6, 1–26. doi: 10.1186/s13408-016-0035-z

- Wang X.-J. (2001). Synaptic reverberation underlying mnemonic persistent activity. Trends Neurosci. 24, 455–463. doi: 10.1016/S0166-2236(00)01868-3

- Watanabe S., Strogatz S. (1993). Integrability of a globally coupled oscillator array. Phys. Rev. Lett. 70, 2391–2394. doi: 10.1103/PhysRevLett.70.2391

- Watanabe S., Strogatz S. (1994). Constants of motion for superconducting Josephson arrays. Physica D 74, 197–253. doi: 10.1016/0167-2789(94)90196-1

- Watts D. J., Strogatz S. H. (1998). Collective dynamics of “small-world” networks. Nature 393, 440–442. doi: 10.1038/30918

- Wilson H. R., Cowan J. D. (1973). A mathematical theory of the functional dynamics of cortical and thalamic nervous tissue. Kybernetik 13, 55–80. doi: 10.1007/BF00288786

- Wimmer K., Nykamp D. Q., Constantinidis C., Compte A. (2014). Bump attractor dynamics in prefrontal cortex explains behavioral precision in spatial working memory. Nat. Neurosci. 17, 431–439. doi: 10.1038/nn.3645

- Zhang K. (1996). Representation of spatial orientation by the intrinsic dynamics of the head-direction cell ensemble: a theory. J. Neurosci. 16, 2112–2126.