Abstract

Cortical auditory evoked potentials (CAEPs) are influenced by the characteristics of the stimulus, including level and hearing aid gain. Previous studies have measured aided and unaided CAEPs in individuals with normal hearing. However, providing amplification to a person with normal hearing differs fundamentally from providing it to a person with hearing loss. This study investigated this difference and the effects of stimulus signal-to-noise ratio (SNR) and audibility on CAEP amplitude in a population with hearing loss. Twelve normal-hearing participants and 12 participants with a hearing loss took part in this study. Three speech sounds (/m/, /g/, and /t/) were presented in the free field. Unaided stimuli were presented at 55, 65, and 75 dB sound pressure level (SPL), and aided stimuli at 55 dB SPL with three different gains in 10-dB steps. CAEPs were recorded and their amplitudes analyzed, and stimulus SNRs and audibility were determined. No significant effect of stimulus level or hearing aid gain was found in normal hearers, whereas a significant effect was found in hearing-impaired individuals. Audibility of the signal, which in some cases is determined by the signal level relative to threshold and in other cases by the SNR, is the dominant factor explaining changes in CAEP amplitude. CAEPs can potentially be used to assess the effects of hearing aid gain in hearing-impaired users.

Keywords: Cortical auditory evoked potential, hearing-impaired, normal-hearing, hearing aid, signal-to-noise ratio, audibility

Learning Outcomes: As a result of this activity, the participant will be able to identify the effects of hearing aid gain on the amplitude of cortical auditory evoked potentials in normal-hearing and hearing-impaired participants.

Cortical auditory evoked potentials (CAEPs) originate from the auditory cortex in response to the onset, change, or offset of a sound. In the most common recording paradigms, isolated acoustic stimuli are presented no faster than twice per second to an awake subject, with recording electrodes spanning the temporal regions of the scalp. Under these conditions, the adult CAEP consists of a positive deflection (P1) occurring around 50 milliseconds after stimulation, followed by a negative peak (N1) around 100 milliseconds and another positive wave (P2) around 180 milliseconds.1 In infants and children up to the age of 12, most studies indicate that the N1 has not yet developed because of immaturity.2 Only a positive P1 wave is visible; its latency is up to 300 milliseconds at birth and decreases to adult values by adulthood, provided the individual has had proper access to sound.3 The presence of the cortical complex indicates that the stimulus has been detected at the level of the auditory cortex.4

CAEPs have several applications.4 They can be used for hearing threshold estimation; the most established studies are summarized by Lightfoot.5 Cortical responses have been applied clinically to evaluate hearing aid and cochlear implant fittings,6 7 8 although undesirable cochlear implant artifacts remain a relevant issue.9 10 11 CAEPs can be used to track maturation of the auditory system and the effects of plasticity.12 13 14 15 Cortical waveforms elicited by changes in stimulus intensity, frequency, or phase are called acoustic change complexes (ACCs) and can potentially be used to investigate discrimination between speech sounds and localization ability.16 17 18 19 In addition, CAEPs are useful in the investigation of auditory or temporal processing,20 21 auditory training,22 23 loudness growth and comfortable levels,24 and the effects of aging.25 26 27

Concerning the effect of hearing aid processing on CAEPs, the main feature investigated so far is hearing aid gain. Earlier publications described case studies showing an increase in CAEP amplitude when a child with a hearing loss was aided.28 29 More recent studies systematically investigated the effect of increasing hearing aid gain on CAEP amplitudes and latencies in larger populations. Billings et al presented a 1-kHz tone to 13 normal-hearing young adults at seven different stimulus levels, both aided (20 dB amplification) and unaided.30 Amplification had no significant effect on CAEP amplitudes, but latencies were significantly longer in the aided condition. The authors considered that the results could not be attributed to audibility alone and listed several other possible explanations, such as the compression characteristics of the hearing aid, saturation of the neural response, stimulus rise time, alteration of the signal-to-noise ratio (SNR), and amplitude overshoot caused by activation of the compression circuitry. They cautioned that the interaction between hearing aid processing and the central auditory system needed further investigation before any results could be interpreted. The same first author continued these investigations with a similar experimental protocol, focusing on the effects of SNR on the CAEP.31 SNR had a clear effect on CAEP amplitudes, but when signal levels were defined in terms of output level, aided CAEPs were surprisingly smaller and delayed relative to unaided CAEPs. This was attributed to increased noise levels caused by the hearing aid. The authors questioned whether it would actually be possible to control all the potential hearing aid variables in a clinical setting while aiding.

Marynewich et al and Jenstad et al confirmed that aiding normally hearing individuals does not significantly increase CAEP amplitudes and that differences exist between hearing aids.32 33 Because onset CAEPs are predominantly sensitive to the rise time, presentation level, and frequency content of the first 30 milliseconds of the stimulus,34 acoustic measurements were made at 30 milliseconds after stimulus onset. For both short (60 milliseconds) and long (757 milliseconds) stimuli, hearing aid gain at 30 milliseconds after onset was lower than the real-ear insertion gain measured with standard hearing aid test signals. In addition, the digital hearing aids altered the rise time of the stimuli such that maximum gain was reached well beyond 30 milliseconds after stimulus onset, with rise times differing across hearing aids.33 The authors concluded that aided cortical results must be interpreted cautiously and that further research is required before clinical application.

In contrast with the previous studies, which used normal-hearing participants, Korczak et al tested 14 participants with a hearing loss.35 They assessed the detectability of CAEPs to /ba/ and /da/ speech sounds at two stimulus levels (a “low” 65 dB and a “high” 80 dB sound pressure level [SPL]). They noted that the use of hearing aids substantially improved CAEP morphology (i.e., increased CAEP amplitudes and decreased CAEP latencies). The effect differed between degrees of hearing loss. In the groups with moderate to severe hearing loss, aiding affected response presence at the lower stimulus intensity, whereas in the severe-to-profound group this was true only at the higher stimulus intensity. Hence, amplification effects (i.e., differences between unaided and aided conditions) were more likely to occur near threshold than at suprathreshold levels. Souza and Tremblay,36 however, raised reservations about this study, noting that the hearing aid gains were substantially off target.

This study was motivated by our strong reservations about the use of normal hearers instead of hearing-impaired users in all the studies that found that amplification did not increase the amplitude or decrease the latency of cortical responses, and that hence concluded that cortical responses may not be appropriate for assessing the effects of amplification. The investigation examines whether signal level, hearing aid gain, SNR, or audibility is responsible for the observations reported in other publications. We chose to refer to audibility throughout the article rather than sensation level, which is commonly understood as the difference between signal level and a person's hearing threshold. Audibility is defined here as the difference between signal level and the greater of the hearing threshold and the acoustic background or microphone noise; this distinction is important in this study.
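In symbols (notation introduced here for illustration, not taken from the original analysis), let $S$ be the signal level, $T$ the hearing threshold, and $N$ the acoustic background or microphone noise level, all expressed in dB SPL for the same frequency band. Sensation level and audibility are then

$$
\mathrm{SL} = S - T, \qquad
A = S - \max(T,\, N) =
\begin{cases}
S - T = \mathrm{SL}, & N \le T,\\
S - N = \mathrm{SNR}, & N > T.
\end{cases}
$$

Audibility therefore tracks sensation level when the noise lies below threshold and reduces to the SNR when the noise lies above threshold.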

The study's hypotheses are:

Amplification does not increase CAEP amplitudes in normal hearers; the observations in this study will therefore agree with earlier published work.

Amplification does increase CAEP amplitudes in hearing-impaired users, at least when the unaided stimuli are not audible or barely audible.

CAEP amplitude is inherently related to stimulus audibility, not just stimulus SNR or stimulus absolute level.

Methods

This study was conducted with the approval of the Australian Hearing Human Research Ethics Committee and conformed to National Health and Medical Research Council guidelines.

Participants

Twelve normal-hearing participants (9 women) and 12 participants with a hearing loss (6 men) took part in this study. For the normal hearers, mean age was 32 years (range 23 to 48), with a four-frequency average hearing loss (4FAHL; 0.5, 1, 2, and 4 kHz) of 6 dB hearing level (HL) for the tested ear (all tested frequencies ≤ 15 dB HL). For the hearing-impaired participants, mean age was 74 years (range 47 to 83), with a 4FAHL of 56 dB HL for the tested ear. Details of their audiometric profiles are given in Table 1. The age difference between the two populations is a possible confounding factor in this study. However, no published evidence suggests that age has a significant effect on the relationship between cortical amplitude and audibility, the two main factors investigated in this study.

Table 1. Audiometric and Hearing Aid Information of Participants with a Hearing Loss.

Hearing thresholds (dB HL per frequency)

| Subject | 0.25 kHz | 0.5 kHz | 1 kHz | 2 kHz | 4 kHz | 8 kHz |
|---|---|---|---|---|---|---|
| 1 | 45 | 50 | 50 | 50 | 55 | 65 |
| 2 | 30 | 40 | 50 | 60 | 70 | 70 |
| 3 | 30 | 35 | 45 | 55 | 70 | 75 |
| 4 | 40 | 55 | 60 | 55 | 60 | 80 |
| 5 | 50 | 45 | 60 | 70 | 70 | 80 |
| 6 | 40 | 40 | 45 | 65 | 65 | 55 |
| 7 | 40 | 55 | 60 | 60 | 65 | 65 |
| 8 | 35 | 45 | 60 | 65 | 65 | 65 |
| 9 | 35 | 50 | 65 | 60 | 60 | 65 |
| 10 | 45 | 50 | 45 | 65 | 65 | 65 |
| 11 | 30 | 35 | 35 | 55 | 70 | 65 |
| 12 | 55 | 45 | 45 | 60 | 65 | 70 |

Rounded compression knee points (dB)

| | 0–0.55 kHz | 0.55–1.12 kHz | 1.12–1.8 kHz | 1.8–2.8 kHz | 2.8–4.5 kHz | >4.5 kHz |
|---|---|---|---|---|---|---|
| Knee point | 51 | 48 | 42 | 42 | 39 | 36 |

Actual insertion gains (dB) across participants (means ± standard deviations)

| Program | 0.25 kHz | 0.5 kHz | 1 kHz | 2 kHz | 4 kHz |
|---|---|---|---|---|---|
| Program 2 (PG −10 dB) | 2.5 ± 3.0 | 3.9 ± 3.7 | 9.4 ± 2.9 | 17.2 ± 3.9 | 16.8 ± 5.3 |
| Program 1 (PG +0 dB) | 7.3 ± 4.2 | 9.0 ± 4.5 | 15.8 ± 4.2 | 22.9 ± 4.7 | 22.7 ± 4.7 |
| Program 3 (PG +10 dB) | 11.9 ± 5.1 | 14.8 ± 4.9 | 21.6 ± 3.5 | 29.2 ± 4.4 | 27.5 ± 4.5 |

Compression ratios across participants (means ± standard deviations)

| | 0–0.55 kHz | 0.55–1.12 kHz | 1.12–1.8 kHz | 1.8–2.8 kHz | 2.8–4.5 kHz |
|---|---|---|---|---|---|
| Ratio (X:1) | 2.4 ± 0.6 | 2.8 ± 0.4 | 2.5 ± 0.3 | 2.5 ± 0.3 | 2.3 ± 0.2 |

Attack and release times across participants (means ± standard deviations)

| Program | Attack (ms) | Release (ms) |
|---|---|---|
| Program 1 (PG +0 dB) | 8.9 ± 2.8 | 59.2 ± 19.5 |
| Program 2 (PG −10 dB) | 8.9 ± 2.8 | 58.9 ± 17.3 |
| Program 3 (PG +10 dB) | 8.3 ± 3.4 | 69.2 ± 14.8 |

Abbreviations: HL, hearing level; PG, prescribed gain.
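As a consistency check, the group 4FAHL quoted for the hearing-impaired participants can be recomputed from the thresholds in Table 1. The short Python sketch below averages each participant's thresholds at 0.5, 1, 2, and 4 kHz and then averages across participants; the variable and function names are ours, chosen for illustration.

```python
# Hearing thresholds (dB HL) from Table 1, keyed by subject number.
# Column order: 0.25, 0.5, 1, 2, 4, 8 kHz.
FREQS_KHZ = [0.25, 0.5, 1, 2, 4, 8]
THRESHOLDS_DB_HL = {
    1: [45, 50, 50, 50, 55, 65],
    2: [30, 40, 50, 60, 70, 70],
    3: [30, 35, 45, 55, 70, 75],
    4: [40, 55, 60, 55, 60, 80],
    5: [50, 45, 60, 70, 70, 80],
    6: [40, 40, 45, 65, 65, 55],
    7: [40, 55, 60, 60, 65, 65],
    8: [35, 45, 60, 65, 65, 65],
    9: [35, 50, 65, 60, 60, 65],
    10: [45, 50, 45, 65, 65, 65],
    11: [30, 35, 35, 55, 70, 65],
    12: [55, 45, 45, 60, 65, 70],
}
FOUR_FREQS_KHZ = [0.5, 1, 2, 4]


def four_frequency_average(thresholds):
    """Mean threshold (dB HL) at 0.5, 1, 2, and 4 kHz."""
    values = [thresholds[FREQS_KHZ.index(f)] for f in FOUR_FREQS_KHZ]
    return sum(values) / len(values)


per_subject = {s: four_frequency_average(t) for s, t in THRESHOLDS_DB_HL.items()}
group_mean = sum(per_subject.values()) / len(per_subject)
print(f"Group 4FAHL: {group_mean:.1f} dB HL")  # Group 4FAHL: 55.5 dB HL
```

The unrounded group mean of 55.5 dB HL rounds to the 56 dB HL reported in the text; individual values range from 48.75 dB HL (subject 11) to 61.25 dB HL (subject 5).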

Stimuli

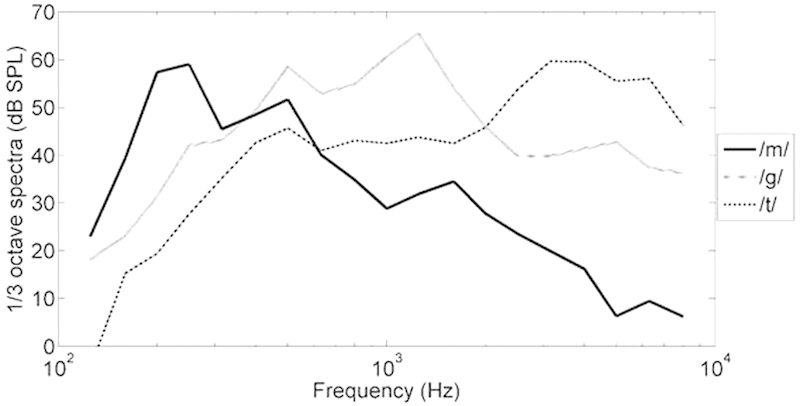

Three speech stimuli (/m/, /g/, and /t/) were used, with durations of 30, 21, and 30 milliseconds, respectively. These stimuli have been used in other studies from the authors' research group.37 38 39 40 41 The stimuli were extracted from a recording of continuous dialogue spoken by a female speaker with an average Australian accent, sampled at 44.1 kHz. The speech sounds /m/, /g/, and /t/ have spectral emphasis in the low-, mid-, and high-frequency regions (250, 1,250, and 3,250 Hz, respectively), as shown in Fig. 1. The stimuli were short enough to exclude the vowel transition. They were deemed long enough to be processed by a hearing aid, because their duration was considerably longer than the attack time of the hearing aids used, so the hearing aids would provide the prescribed gain during most of the stimulus presentation. In the unlikely event that this assumption is incorrect, its effect would be limited because it would apply to all gain conditions.

Figure 1.

The one-third-octave power spectra for speech sounds /m/, /g/, and /t/ with overall levels normalized to 65 dB sound pressure level (SPL).
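One-third-octave spectra such as those in Fig. 1 can be approximated by summing FFT power within each band. The Python sketch below is an illustration only: it assumes base-2 band centers referenced to 1 kHz with edges at the center frequency times 2^(±1/6), reports uncalibrated relative levels, and is neither a standardized filter-bank analysis nor necessarily the method used to produce Fig. 1.

```python
import numpy as np


def third_octave_levels(x, fs, k_min=-12, k_max=9):
    """Relative one-third-octave band levels (dB) estimated from an FFT.

    Band k has center 1000 * 2**(k/3) Hz and edges at center * 2**(+/-1/6).
    Levels are uncalibrated (dB re an arbitrary reference); bands that
    contain no FFT bins are returned as -inf.
    """
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    centers, levels = [], []
    for k in range(k_min, k_max + 1):
        fc = 1000.0 * 2.0 ** (k / 3.0)
        in_band = (freqs >= fc * 2.0 ** (-1 / 6)) & (freqs < fc * 2.0 ** (1 / 6))
        band_power = power[in_band].sum()
        centers.append(fc)
        levels.append(10.0 * np.log10(band_power) if band_power > 0 else -np.inf)
    return np.array(centers), np.array(levels)


# Example: a 1-kHz tone should peak in the band centered at 1 kHz.
fs = 44100
t = np.arange(fs) / fs
centers, levels = third_octave_levels(np.sin(2 * np.pi * 1000 * t), fs)
print(centers[np.argmax(levels)])  # 1000.0
```

For stimuli as short as those used here (21 to 30 milliseconds), the FFT bin spacing is about 33 to 48 Hz, so some low-frequency bands contain no bins and are returned as `-inf`; zero-padding interpolates the spectrum so that every band receives bins, although it does not add true frequency resolution.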

Stimuli were presented in the free field from a B&W (Bowers and Wilkins, Worthing, United Kingdom) loudspeaker at 0 degrees azimuth and a distance of 1.8 m. The combined room and loudspeaker response was spectrally equalized. Stimulus levels (maximum root mean square level measured with a 35-millisecond impulse time constant) were 55, 65, and 75 dB SPL for the unaided condition and 55 dB SPL for the aided condition. Hence, for unaided conditions, the low, medium, and high output levels equaled 55, 65, and 75 dB SPL. For aided conditions, the output levels were those produced by applying the low, medium, and high gains, respectively, to a 55 dB SPL input signal.
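The level metric above (maximum RMS level with a 35-millisecond time constant) can be illustrated as follows. This Python sketch assumes a calibrated pressure signal in pascals and uses a single first-order exponential average of the squared pressure; the function name is hypothetical, and the sketch ignores the much slower decay of a standardized Impulse detector, so it only approximates a sound level meter reading.

```python
import numpy as np

P_REF = 20e-6  # reference sound pressure (Pa), i.e. 0 dB SPL


def max_running_rms_level(pressure_pa, fs, tau_s=0.035):
    """Maximum of an exponentially time-weighted mean-square level, in dB SPL.

    pressure_pa: calibrated sound pressure samples (Pa); fs: sample rate (Hz);
    tau_s: time constant (s). Uses one symmetric first-order average.
    """
    alpha = 1.0 - np.exp(-1.0 / (fs * tau_s))  # per-sample smoothing factor
    mean_square = 0.0
    peak = 0.0
    for p in np.asarray(pressure_pa, dtype=float):
        mean_square += alpha * (p * p - mean_square)  # low-pass filter p**2
        peak = max(peak, mean_square)
    return 10.0 * np.log10(peak / P_REF**2)


fs = 44100
t = np.arange(fs) / fs                          # 1 s of samples
rms_pa = P_REF * 10 ** (65 / 20)                # 65 dB SPL
tone = np.sqrt(2) * rms_pa * np.sin(2 * np.pi * 1000 * t)
burst = tone[: int(0.030 * fs)]                 # first 30 ms of the same tone
steady_db = max_running_rms_level(tone, fs)
burst_db = max_running_rms_level(burst, fs)
print(f"{steady_db:.1f} dB SPL (sustained), {burst_db:.1f} dB SPL (30-ms burst)")
```

Because the 21- to 30-millisecond stimuli are shorter than the 35-millisecond time constant, the running mean square does not reach its steady-state value within the stimulus: in this sketch the sustained tone reads about 65 dB SPL, while a 30-millisecond burst of the same tone reads roughly 2.4 dB lower.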

The test ear alternated between successive normal hearers. In hearing-impaired participants, stimuli were presented to the ear whose thresholds lay closest to the center of the target audiogram range: 30 to 65 dB HL between 250 and 1,000 Hz and 50 to 80 dB HL between 2,000 and 4,000 Hz. Individuals with air conduction threshold asymmetries or air–bone gaps greater than 20 dB at two or more frequencies between 250 and 4,000 Hz were excluded from the study.
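The audiometric inclusion rules can be written as simple checks. The Python sketch below encodes the target range and the 20-dB, two-frequency exclusion rule as stated in the text; the function names are hypothetical, and the selection of the ear closest to the center of the target range is not modeled.

```python
# Target range from the text: 30-65 dB HL at 250-1,000 Hz and
# 50-80 dB HL at 2,000-4,000 Hz (frequencies in Hz, thresholds in dB HL).
LOW_FREQS_HZ = (250, 500, 1000)
HIGH_FREQS_HZ = (2000, 4000)


def within_target_range(thresholds):
    """thresholds: dict mapping audiometric frequency (Hz) to dB HL."""
    low_ok = all(30 <= thresholds[f] <= 65 for f in LOW_FREQS_HZ)
    high_ok = all(50 <= thresholds[f] <= 80 for f in HIGH_FREQS_HZ)
    return low_ok and high_ok


def exceeds_difference_rule(a, b, limit_db=20, min_count=2):
    """True if |a - b| > limit_db at min_count or more frequencies from
    250 to 4,000 Hz. Pass left/right air conduction thresholds for the
    asymmetry rule, or air/bone thresholds of one ear for the air-bone gap rule."""
    common = [f for f in a if f in b and 250 <= f <= 4000]
    count = sum(abs(a[f] - b[f]) > limit_db for f in common)
    return count >= min_count


# Subject 1 from Table 1 (8 kHz omitted because the rule does not use it).
subject_1 = {250: 45, 500: 50, 1000: 50, 2000: 50, 4000: 55}
print(within_target_range(subject_1))  # True
```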

Hearing Aid Parameters

The same Siemens Motion 101S (Erlangen, Germany) behind-the-ear (BTE) hearing aid was used for all measurements to control for potentially subtle differences in hearing aid circuitry and output. Table 3 gives an overview of the relevant hearing aid parameters for both normal-hearing participants and those with a hearing loss. Hearing aid settings were identical for all normal hearers (Table 2). For hearing-impaired participants, hearing aids were fitted according to their hearing loss, as shown in Table 1. The variations in measured attack and release times evident in Table 3 probably reflect random measurement error, as these parameters were not programmed differently for different participants. Alternatively, changes in gain may have interacted with the standard variations in input level that test boxes use to measure attack and release times, producing an apparent variation in the estimates of these parameters. Three different gains were used in the aided condition. Because feedback reduction was disengaged throughout the experiment, gain settings that caused feedback were not used for testing. Unvented replaceable foam molds were connected to the hearing aid via a tube.

Table 2. Hearing Aid Information for Normal-Hearing Participants.

Rounded compression knee points (dB)

| | 0–0.55 kHz | 0.55–1.12 kHz | 1.12–1.8 kHz | 1.8–2.8 kHz | 2.8–4.5 kHz | >4.5 kHz |
|---|---|---|---|---|---|---|
| Knee point | 51 | 48 | 42 | 42 | 39 | 36 |

Actual insertion gains (dB) across participants (means ± standard deviations)

| Program | 0.25 kHz | 0.5 kHz | 1 kHz | 2 kHz | 4 kHz |
|---|---|---|---|---|---|
| Program 1 (+0 dB) | −2.0 ± 1.1 | −3.4 ± 1.0 | −2.8 ± 1.2 | −7.0 ± 2.7 | −5.4 ± 3.7 |
| Program 2 (+10 dB) | 5.7 ± 0.7 | 6.4 ± 1.2 | 7.7 ± 1.5 | 4.0 ± 3.1 | 4.6 ± 4.8 |
| Program 3 (+20 dB) | 14.9 ± 0.9 | 15.7 ± 1.9 | 17.5 ± 1.7 | 12.8 ± 2.2 | 13.2 ± 4.0 |

Attack and release times

| Program | Attack (ms) | Release (ms) |
|---|---|---|
| Program 1 (+0 dB) | 10 | 56 |
| Program 2 (+10 dB) | 2 | 54 |
| Program 3 (+20 dB) | 22 | 54 |

Table 3. Hearing Aid Parameters for Normal-Hearing Participants and Participants with a Hearing Loss.

| Parameter | With Normal Hearing | With a Hearing Loss |
|---|---|---|
| Frequency range (Hz) | 100–6,500 | |
| Compression ratio | 2:1 | NAL-NL2 |
| Compression knee point | NAL-NL2 | NAL-NL2 |
| Attack (ms) | Fixed 2–22 | Variable 0–12 |
| Release (ms) | Fixed 54–56 | Variable 16–84 |
| Compression | Syllabic | Syllabic |
| Insertion gain | 55, 65, 75 (average-based) | 55, 65, 75 (target-matched) |
| Programs | Flat (0, +10, +20 dB) | Prescribed gain, −10, +10 dB |
| Microphone | Omnidirectional | Omnidirectional |
| Noise, wind, and feedback reduction | Disengaged | Disengaged |

Abbreviations: NAL-NL2, National Acoustic Laboratories Non-Linear 2.

Acoustic Recordings

Background Noise Recordings

For background noise recordings, the foam mold of the hearing aid was inserted into the ear canal of a G.R.A.S. KB0065 large artificial right ear for the Knowles Electronic Manikin for Acoustic Research (KEMAR; Holte, Denmark). The artificial ear was connected to a G.R.A.S. IEC 60711 ear simulator, which in turn was connected to a Brüel & Kjær (Nærum, Denmark) 2250 digital sound level meter. Background noise was averaged over 30 seconds in several conditions: an unblocked ear canal (i.e., room background noise) and hearing aid internal noise at three different gains, both for a universal normal-hearing fitting and for each subject-specific hearing-impaired fitting. All sessions were conducted in the same sound-treated booth, with octave-band room background noise levels of 16, 13, 12, 22, 16, and 20 dB SPL at 0.25, 0.5, 1, 2, 4, and 8 kHz, respectively.

Simultaneous Signal-to-Noise Recordings

SNR recordings were made in the right ear of a Brüel & Kjær 4128C Head and Torso Simulator (HATS), which was connected to a Brüel & Kjær 2636 measuring amplifier and an RME Audio (Haimhausen, Germany) digital audio card. SNR was defined as the difference between signal level and noise level (room background noise or hearing aid internal noise, whichever was greater). Recording the hearing aid internal noise only during noise-only periods was not considered sufficient, because hearing aid gain (and hence internal noise) changed during stimulus presentation due to compression or expansion. To account for this, the simultaneous SNR during stimulus presentation was estimated by presenting stimuli of alternating phase. By appropriately adding and subtracting the aided recordings of these alternating stimuli,42 more realistic estimates of both signal and noise during signal presentation were obtained, averaged over 24 stimulus presentations (12 of each phase).
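The add-and-subtract idea can be sketched as follows, assuming (as an illustration, not necessarily the exact procedure of the cited method42) that the processed signal inverts with the stimulus while hearing aid internal noise is uncorrelated across presentations. The half-difference of each pair retains the signal, and the sum cancels it, leaving only noise. Function names and the synthetic test signal are hypothetical, and the SNR here is broadband rather than per one-third-octave band.

```python
import numpy as np


def split_signal_and_noise(pos, neg):
    """Separate signal and noise from alternating-polarity recordings.

    pos, neg: arrays of shape (n_pairs, n_samples); neg[k] is the recording
    made with the polarity-inverted stimulus. Assumes the processed signal
    inverts with the stimulus and the internal noise is uncorrelated between
    presentations.
    """
    pos = np.asarray(pos, dtype=float)
    neg = np.asarray(neg, dtype=float)
    # Half-differences keep the signal; averaging over pairs reduces residual noise.
    signal = ((pos - neg) / 2.0).mean(axis=0)
    # Sums cancel the signal; scaling by 1/sqrt(2) keeps the noise power equal
    # to that of a single recording when the two noise samples are independent.
    noise = (pos + neg) / np.sqrt(2.0)
    return signal, noise


def snr_db(signal, noise):
    """Broadband SNR (dB) from mean-square power of signal and noise estimates."""
    return 10.0 * np.log10(np.mean(signal**2) / np.mean(noise**2))


# Synthetic check: 12 pairs (24 presentations), sine of power 0.5, noise power 0.01.
rng = np.random.default_rng(0)
fs, n_pairs = 16000, 12
t = np.arange(int(0.3 * fs)) / fs
clean = np.sin(2 * np.pi * 1000 * t)
pos = clean + 0.1 * rng.standard_normal((n_pairs, t.size))
neg = -clean + 0.1 * rng.standard_normal((n_pairs, t.size))
sig, noi = split_signal_and_noise(pos, neg)
print(f"{snr_db(sig, noi):.1f} dB")  # close to 10*log10(0.5/0.01), i.e. roughly 17 dB
```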

Calculation of SNR and Audibility

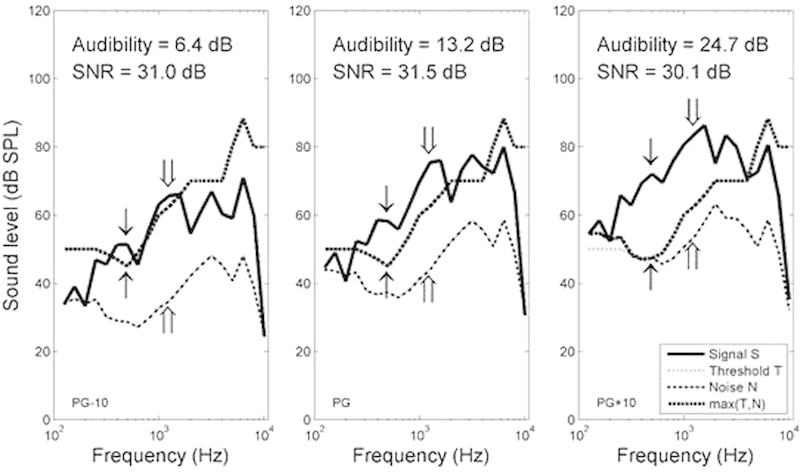

For all subjects, stimuli, and gain conditions, both SNR and audibility were derived from the acoustic recordings. The SNR was defined as described previously. Audibility was the difference between signal level and the greater of hearing threshold and noise level. SNR and audibility were each taken as the maximum value across the bands of the one-third-octave spectrum, on the basis that audibility is most strongly determined by the frequency region in which it is greatest. Fig. 2 illustrates how SNR and audibility were defined for three different gains in a subject with a hearing loss. In this example, audibility increases considerably with increasing hearing aid gain while SNR does not change. Here, audibility as a function of frequency is almost identical to sensation level, except at the lowest frequencies at the highest gain setting (right pane), where hearing aid noise becomes audible to the user with a hearing loss.
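The band-wise definitions can be expressed in a few lines of Python. This sketch assumes that signal, noise, and threshold are already available as one-third-octave band levels in dB SPL on a common band grid (thresholds converted from dB HL to dB SPL beforehand, and noise already taken as the greater of room and hearing aid noise); the function name and example values are hypothetical, chosen so that SNR is high while audibility is limited by threshold.

```python
import numpy as np


def band_snr_and_audibility(signal_db, noise_db, threshold_db):
    """Per-band SNR and audibility, plus their maxima across bands.

    All inputs are one-third-octave band levels in dB SPL on the same band
    grid; noise_db is the greater of room and hearing aid noise per band.
    """
    s = np.asarray(signal_db, dtype=float)
    n = np.asarray(noise_db, dtype=float)
    t = np.asarray(threshold_db, dtype=float)
    snr = s - n                         # signal re noise
    audibility = s - np.maximum(t, n)   # signal re the greater of threshold and noise
    return snr, audibility, snr.max(), audibility.max()


# Hypothetical four-band example: noise is low, but thresholds are high.
_, _, snr_max, aud_max = band_snr_and_audibility(
    signal_db=[50, 55, 60, 58],
    noise_db=[30, 42, 35, 30],
    threshold_db=[40, 45, 55, 65],
)
print(snr_max, aud_max)  # 28.0 10.0
```

When every threshold lies below the noise, as for the normal hearers in this study, the two arrays returned by the function are identical.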

Figure 2.

Audibility and signal-to-noise ratio for three different gains in aided hearing-impaired subject 5 for speech sound /g/. All recordings are “simultaneous” (i.e., noise is measured during stimulus presentation). Audibility is defined as the maximum difference (across all one-third octaves) between S and the greater of T and N. In the left and middle panes, T is greater than N at all frequencies. The pair of downward and upward single-lined arrows indicates the frequency at which audibility is highest. Audibility increases substantially with increasing gain. The SNR is expressed similarly, as the maximum difference (across all one-third octaves) between S and N. The pair of downward and upward double-lined arrows shows the frequency with the highest SNR. The SNR remains the same for all gains. Abbreviations: N, hearing aid noise; S, signal; SNR, signal-to-noise ratio; SPL, sound pressure level; T, hearing threshold.

Procedure

Participants gave informed consent prior to the experiment. Otoscopy was performed on both ears, and participants with cerumen that contraindicated the use of foam insert earphones in either ear were excluded from immediate participation in this study. Tympanometry was conducted in both ears using an Interacoustics Titan tympanometer (Middelfart, Denmark). Participants received a gratuity of 30 Australian dollars to reimburse travel costs.

Behavioral Assessment

All participants underwent pure tone audiometry in each ear using Etymotic Research ER-3A (Elk Grove Village, IL) foam insert earphones. Thresholds were measured at 0.25, 0.5, 1, 2, 4, and 8 kHz with an AD28 diagnostic audiometer (Interacoustics, Middelfart, Denmark). Stimuli were calibrated at 70 dB HL using an IEC 126 HA2 2-cc coupler incorporating a 1-inch 4144 microphone, a 1-to-½-inch DB0375 adaptor, and a 2231 sound level meter (all Brüel & Kjær), according to the relevant International Organization for Standardization (ISO) 389-2 norms.43

Hearing Aid Fitting

For normal hearers, programs 1, 2, and 3 provided an overall flat insertion gain of +0, +10, and +20 dB, respectively, from 250 to 6,000 Hz. The maximum gain of +20 dB matched the hearing aid gain of +20 dB used for normal hearers by Billings et al, to allow replication of their data.30 For participants with a hearing loss, the three programs were fitted to the National Acoustic Laboratories Non-Linear 2 (NAL-NL2) prescribed gain (+0 dB, program 1), the prescribed gain minus 10 dB (−10 dB, program 2), and the prescribed gain plus 10 dB (+10 dB, program 3). This gain variation was therefore similar to that provided to the normal hearers. Real-ear insertion gain was verified with a probe tube inserted in the subject's ear canal and compared with fitting targets for 55, 65, and 75 dB SPL inputs. Attack and release times were measured in a hearing aid test box. Tables 1 and 2 present the relevant hearing aid parameters for both populations.

Cortical Recordings

Participants were kept awake and attentive during testing by watching a silent, closed-captioned movie. An earplug was inserted into the nontest ear. Electrode sites were prepared using a cotton applicator and electrode gel. Single-use Ambu Blue Sensor N (Copenhagen, Denmark) self-adhesive electrodes were used. The active electrode was placed on Cz, the reference electrode on the mastoid (side randomized), and the common electrode on the high forehead.44 Electrode impedance was checked before and after each recording. If necessary, the preparation was repeated to achieve an impedance below 5 kΩ between active and common, and between reference and common. During recording with the HEARLab system (Frye Electronics, Tigard, Oregon), electroencephalogram activity was amplified by a factor of 1,210 and band-pass filtered between 0.3 and 30 Hz. The recording window spanned from 200 milliseconds before to 600 milliseconds after stimulus onset. Artifact rejection was set at ±150 μV. Baseline correction was applied to each epoch using the average over the 100 milliseconds preceding stimulus onset.
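The epoch processing described above (recording window, baseline correction, artifact rejection, and a cap on accepted epochs) can be sketched as follows. This is not the HEARLab implementation: it assumes the EEG has already been band-pass filtered, that rejection is applied after baseline correction, and that onsets are given as sample indices; the function name is hypothetical.

```python
import numpy as np


def average_epochs(eeg_uv, onsets, fs, pre_ms=200, post_ms=600,
                   baseline_ms=100, reject_uv=150.0, max_accepted=70):
    """Epoch, baseline-correct, reject, and average a filtered EEG trace.

    eeg_uv: 1-D EEG in microvolts (already band-pass filtered).
    onsets: stimulus onset sample indices. Returns (average, n_accepted).
    """
    pre = int(round(pre_ms * fs / 1000))
    post = int(round(post_ms * fs / 1000))
    base = int(round(baseline_ms * fs / 1000))
    eeg_uv = np.asarray(eeg_uv, dtype=float)
    accepted = []
    for onset in onsets:
        if onset - pre < 0 or onset + post > eeg_uv.size:
            continue  # window would run past the recording
        epoch = eeg_uv[onset - pre:onset + post]
        epoch = epoch - epoch[pre - base:pre].mean()  # 100-ms prestimulus baseline
        if np.max(np.abs(epoch)) > reject_uv:
            continue  # artifact: exceeds the +/-150 uV limit
        accepted.append(epoch)
        if len(accepted) >= max_accepted:
            break  # stop once enough epochs have been accepted
    if not accepted:
        return None, 0
    return np.mean(accepted, axis=0), len(accepted)


# Synthetic example at 1 kHz sampling: constant offset, a -5 uV dip
# 100-150 ms after two onsets, and a large artifact after a third onset.
fs = 1000
eeg = np.full(10000, 10.0)
eeg[1100:1150] -= 5.0
eeg[3100:3150] -= 5.0
eeg[5100] += 500.0
avg, n = average_epochs(eeg, [1000, 3000, 5000], fs)
print(n)  # 2
```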

A total of 12 recordings were made, comprising a test and retest of six conditions: three unaided presentations at 55, 65, and 75 dB SPL in the free field and three aided presentations at 55 dB SPL in the free field with three different gains. The presentation order was randomized. In each recording, the three speech sounds were rotated in blocks of 25 presentations with a stimulus onset asynchrony of 1,125 milliseconds. Data acquisition stopped after 70 accepted epochs had been collected for each speech sound.

For the N1 component, the mean amplitude over a latency interval of 75 to 135 milliseconds was calculated. For the P2 component, the amplitude was averaged over the latency interval from 150 to 210 milliseconds. This approach was used to minimize the effect of residual noise in the averaged waveform on cortical response amplitude. The difference between P2 and N1 mean amplitudes was analyzed as the main dependent variable.
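The windowed-mean measure can be sketched in Python. The inclusive window boundaries and the idealized test waveform are assumptions made for illustration, and the function name is hypothetical.

```python
import numpy as np


def p2_minus_n1(waveform_uv, times_ms, n1_window=(75, 135), p2_window=(150, 210)):
    """P2-N1 amplitude from mean amplitudes in fixed latency windows."""
    w = np.asarray(waveform_uv, dtype=float)
    t = np.asarray(times_ms, dtype=float)
    n1 = w[(t >= n1_window[0]) & (t <= n1_window[1])].mean()
    p2 = w[(t >= p2_window[0]) & (t <= p2_window[1])].mean()
    return p2 - n1


times = np.arange(-200, 600)  # 1-ms steps spanning the recording window
wave = np.zeros(times.size)
wave[(times >= 75) & (times <= 135)] = -5.0  # idealized N1 trough
wave[(times >= 150) & (times <= 210)] = 3.0  # idealized P2 peak
print(p2_minus_n1(wave, times))  # 8.0
```

Averaging over a window rather than picking a single peak sample makes the measure less sensitive to residual noise in the averaged waveform, which is the rationale given above.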

Results

Hearing Aid Background Noise Levels

Fig. 2 provides an example of signal and noise levels during stimulus presentation in an aided hearing-impaired user. It shows increasing hearing aid noise levels (and signal output) with increasing hearing aid gain.

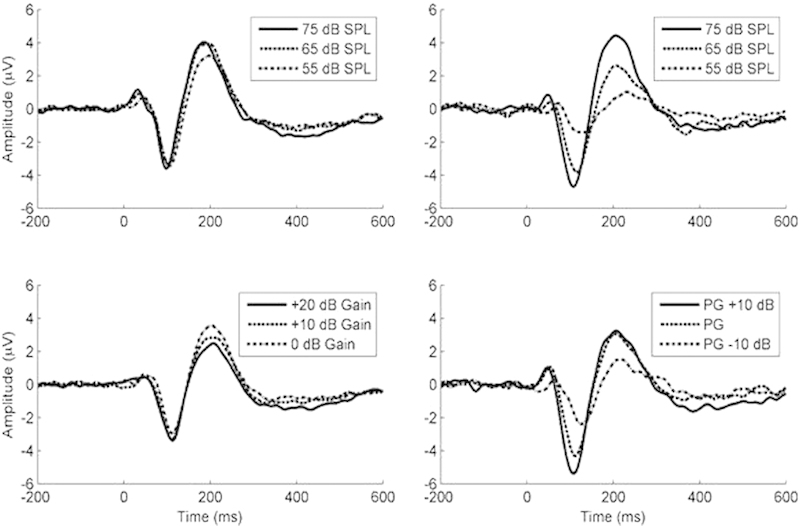

CAEPs

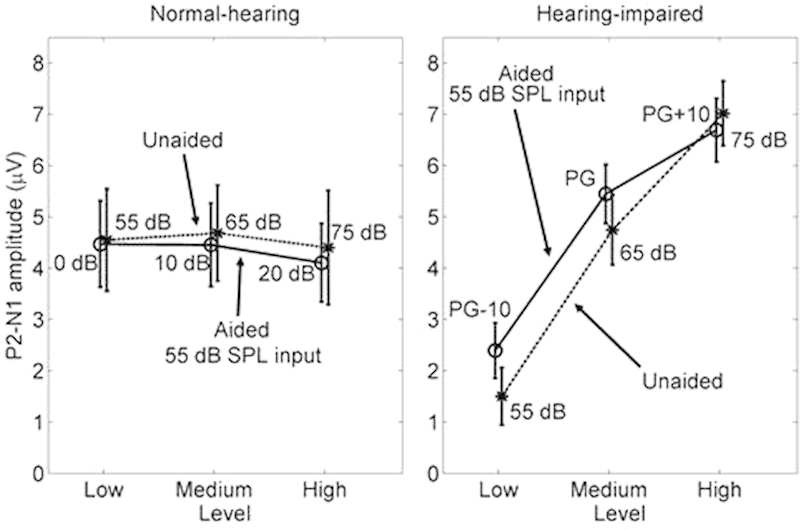

Fig. 3 shows the CAEP grand averages at three different output levels for 12 normal-hearing (left column) and 12 hearing-impaired (right column) listeners, for both unaided (top row) and aided (bottom row) conditions. Qualitatively, cortical responses grow markedly with increasing output level only in hearing-impaired listeners, whether unaided (in response to changes in input level) or aided (in response to changes in hearing aid gain). This is confirmed quantitatively by Fig. 4, which displays P2-N1 CAEP mean amplitudes for the waveforms shown in Fig. 3.

Figure 3.

Grand average cortical auditory evoked potentials for 12 normal-hearing and 12 hearing-impaired participants in aided and unaided conditions at three stimulus levels. Top left: Unaided normal hearers at 55, 65, and 75 dB SPL input level. Top right: Unaided participants with a hearing loss at 55, 65, and 75 dB SPL input level. Bottom left: Aided normal-hearing users with 0, 10, and 20 dB flat gain at 55 dB SPL input level. Bottom right: Aided hearing-impaired users with prescribed gain +0, −10, and +10 dB at 55 dB SPL input level. Abbreviations: PG, prescribed gain; SPL, sound pressure level.

Figure 4.

P2-N1 mean amplitudes at three different output levels for normal-hearing and hearing-impaired participants. Error bars denote 95% confidence intervals. Abbreviations: N1, negative peak around 100 milliseconds; P2, positive wave around 180 milliseconds; SPL, sound pressure level.

A five-way analysis of variance (ANOVA) was performed on response amplitude, with hearing loss status as a between-groups variable and aiding condition (aided versus unaided), stimulus (/m/, /g/, and /t/), output level (low, medium, high), and testing condition (test, retest) as repeated-measures variables.

Output Level

A significant interaction was found between output level and hearing loss status (F(2,22) = 90.1, p < 0.00001), indicating that the effect of output level differed significantly between the two groups. To investigate further, the effect of output level on CAEP amplitude was assessed for each population separately using two four-way repeated-measures ANOVAs (aiding × output level × stimulus × test). For the normal hearers, no significant effect of output level was found (F(2,22) = 1.59, p = 0.23). Conversely, a significant effect was found in listeners with a hearing loss (F(2,22) = 109.4, p < 0.00001). For the conversational-level speech sounds used in this experiment, the effect of stimulus level on CAEP amplitudes was therefore apparent only in hearing-impaired users.
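The degrees of freedom reported for the within-group level effects follow from a within-subject factor with k = 3 levels measured in n = 12 participants: (k − 1, (n − 1)(k − 1)) = (2, 22); likewise, the two-level aiding factor gives (1, 11). The NumPy sketch below computes a one-way repeated-measures ANOVA to show where these values come from. It is a simplification of the four-way analyses actually reported, omits p-values and sphericity corrections, and the function name is hypothetical.

```python
import numpy as np


def rm_anova_oneway(y):
    """One-way repeated-measures ANOVA.

    y: array of shape (n_subjects, k_levels), one value per subject and level.
    Returns (F, df_effect, df_error); no sphericity correction is applied.
    """
    y = np.asarray(y, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_effect = n * np.sum((y.mean(axis=0) - grand) ** 2)   # between levels
    ss_subject = k * np.sum((y.mean(axis=1) - grand) ** 2)  # between subjects
    ss_total = np.sum((y - grand) ** 2)
    ss_error = ss_total - ss_effect - ss_subject            # level x subject residual
    df_effect, df_error = k - 1, (n - 1) * (k - 1)
    f = (ss_effect / df_effect) / (ss_error / df_error)
    return f, df_effect, df_error


f, df1, df2 = rm_anova_oneway([[1, 2, 4], [2, 2, 5], [1, 3, 3]])
print(round(f, 2), df1, df2)  # 8.91 2 4
```

With 12 participants and three output levels, the same function returns the (2, 22) degrees of freedom quoted above.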

Aiding

A significant interaction was found between aiding and hearing loss status (F(1,11) = 8.1, p = 0.016), indicating that the effect of aiding differed significantly between the two groups. To investigate further, the effect of aiding on CAEP amplitude was assessed for each population separately using two four-way repeated-measures ANOVAs (aiding × output level × stimulus × test). For the normal hearers, no significant effect of aiding was found (F(1,11) = 0.95, p = 0.35). Conversely, a significant effect was found in participants with a hearing loss (F(1,11) = 9.8, p = 0.009). This suggests that the effect of aiding on CAEP amplitudes is apparent only in hearing-impaired users.

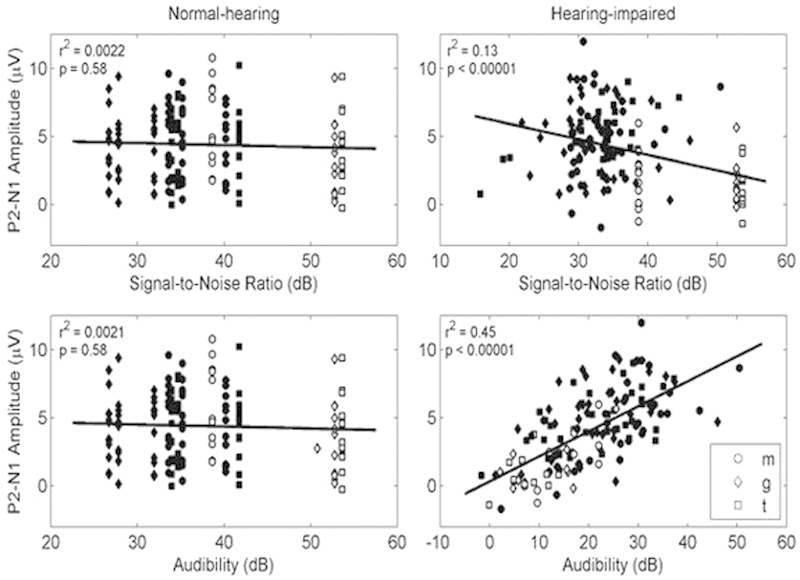

SNR versus Audibility

Fig. 5 shows four scatterplots for participants with normal hearing (left panes) and for participants with a hearing loss (right panes). The top row displays P2-N1 CAEP mean amplitudes versus (simultaneous) SNR, which is the difference between signal and background (maximum of room or hearing aid) noise level while the signal is presented. The bottom row presents P2-N1 CAEP mean amplitudes versus audibility, which is the difference between signal level and the greater of background noise level and the participant's hearing threshold.

Figure 5.

Scatterplots of P2-N1 cortical auditory evoked potential mean amplitude versus signal-to-noise ratio and audibility, for normal-hearing and hearing-impaired participants. In both aided (closed markers) and unaided (open markers) conditions, only the 55 dB sound pressure level input level is shown. Abbreviations: N1, negative peak around 100 milliseconds; P2, positive wave around 180 milliseconds.

For participants with a hearing loss, a significant negative correlation was found between CAEP amplitude and SNR (r² = 0.13, p < 0.00001). This was unexpected, as CAEP amplitudes normally do not decrease with increasing SNR.45 However, this correlation evidently exists only because CAEP amplitudes were lower in the unaided condition (for which SNRs were high) than in the aided condition (for which SNRs were lower). Although the SNR indeed worsens when any person is aided, owing to the introduction of hearing aid noise, aiding a person with a hearing loss substantially improves audibility, thus increasing CAEP amplitudes because audibility is the determining factor. This explanation is supported by the significant positive correlation between CAEP amplitude and audibility (r² = 0.45, p < 0.00001) shown in the bottom right pane. As hearing aid gain increases, both signal level and hearing aid noise increase, but the noise mostly remains inaudible to the hearing-impaired listener.

For normal hearers, no significant correlation was found between CAEP amplitude and either SNR or audibility (r² = 0.002, p = 0.58). Audibility and SNR are identical for a normal-hearing population because the hearing thresholds of normal hearers are lower than any background noise in the aided or unaided conditions. In addition, audibility is so high that further increases do not affect CAEP amplitude, because CAEP amplitude plateaus with increasing audibility.

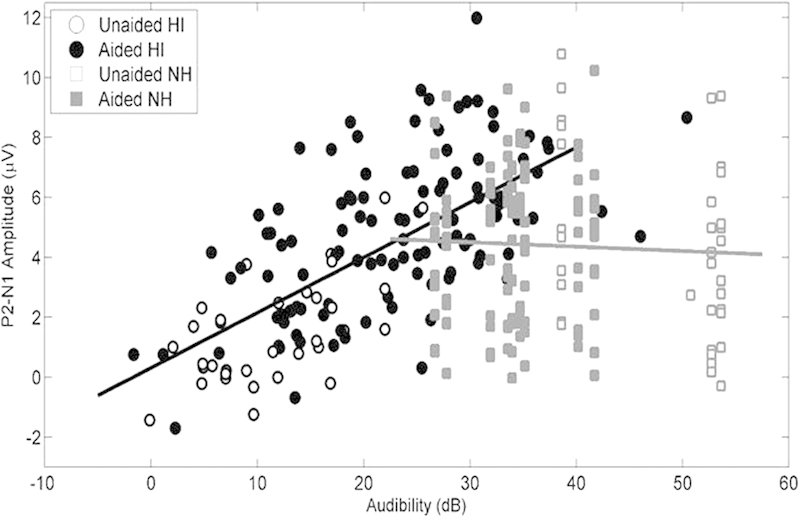

CAEP amplitudes are determined by audibility to the listener and not just by stimulus SNR, although these quantities are identical (for conversational-level speech sounds) if the evaluation is restricted to a population with normal hearing. The relationship between CAEP amplitude and audibility appears to saturate at high levels of audibility, as shown in Fig. 6. This saturation is similar to observations in other research, in which increasing signal levels produce growing CAEPs up to a certain point before saturation sets in.46 47 For speech at conversational levels, Fig. 6 indicates that, whether aided or unaided, audibility for normal hearers is high enough that variations in level have no effect on cortical response amplitude. Figs. 5 and 6 both also indicate that, for normal hearers, amplified speech has lower audibility than unamplified speech because of the internal noise of the hearing aid.

Figure 6.

Scatterplot of P2-N1 mean amplitude versus audibility for both aided (closed markers) and unaided (open markers) conditions, and regression lines fitted to the data from each group of participants (black: hearing-impaired; gray: normal-hearing). Abbreviations: HI, hearing-impaired subjects; N1, negative peak around 100 milliseconds; NH, normal-hearing subjects; P2, positive wave around 180 milliseconds.
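
The saturation in Fig. 6 can be illustrated with a simple broken-stick model. In this model, amplitude rises linearly with audibility up to a knee and stays flat above it. The Discussion notes that amplitude stops growing at audibilities of around 30 dB, and only that knee value comes from the text. This is a sketch under stated assumptions, not the model fitted in this study, since Fig. 6 shows a straight regression line for each group. The function name `caep_amplitude_uv`, the 0.1 µV/dB slope, and the 0 dB floor are hypothetical.

```python
"""Hypothetical saturating (broken-stick) model of CAEP amplitude vs audibility."""


def caep_amplitude_uv(audibility_db: float,
                      knee_db: float = 30.0,
                      slope_uv_per_db: float = 0.1,
                      floor_db: float = 0.0) -> float:
    """Predicted P2-N1 amplitude (microvolts) for a given audibility (dB).

    Below floor_db no response is predicted; between floor_db and knee_db the
    amplitude grows linearly; above knee_db it stays at a plateau.
    All parameter values are illustrative assumptions, not fitted values.
    """
    clipped = min(max(audibility_db, floor_db), knee_db)
    return slope_uv_per_db * (clipped - floor_db)


# The same 10 dB increase in audibility, starting from a low vs an already high value.
low_gain = caep_amplitude_uv(20.0) - caep_amplitude_uv(10.0)
high_gain = caep_amplitude_uv(50.0) - caep_amplitude_uv(40.0)
print(f"10 -> 20 dB: +{low_gain:.1f} uV; 40 -> 50 dB: +{high_gain:.1f} uV")
```

Under these assumptions, a 10 dB increase in audibility that starts from a low value (as for aided hearing-impaired listeners) raises the predicted amplitude. The same increase starting above the knee (as for normal hearers listening to conversational-level speech) changes nothing.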

Discussion

There has been considerable discussion about the appropriateness of using evoked cortical responses to evaluate hearing aid fittings in people who cannot respond behaviorally, such as infants.31 32 48 The concerns originally arose from the finding that providing 20 dB of amplification did not change the amplitude of the cortical response,30 31 whereas changing the input level by 20 dB often does increase the amplitude of the cortical response in unaided people. The studies that gave rise to this concern were based on hearing aids fitted to research participants with normal hearing. For participants with normal hearing, hearing aids can only ever reduce the audibility of sounds (even if they increase the SPL), because the internal noise floor of hearing aids exceeds the equivalent internal noise floor of the normal human ear.49 By contrast, the equivalent input noise of hearing aids lies beneath the unaided threshold of people with hearing loss, so the hearing aid increases both the SPL and the audibility for these listeners. The rationale for using normal hearers is understandable, as it avoids the confounding factors introduced by hearing loss (e.g., broadened auditory filters, recruitment). Nevertheless, any caution against the use of CAEPs in hearing-impaired subjects that is based on studies with normal hearers is inappropriate, because these populations are highly distinct. We should not expect hearing aids to have the same impact on the cortical responses of people with normal hearing as they do on people with hearing loss. This limitation of the previous studies has already been noted by Tremblay et al.48
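
The argument above can be checked with a single-band worked example, using two simplifying assumptions. First, audibility is the speech level minus whichever is higher: the listener's threshold or the noise reaching the ear. This matches the description of audibility as set either by threshold or by SNR. Second, the hearing aid applies the same gain to the speech, the room noise, and its own equivalent input noise (EIN). The function names and all levels are hypothetical round numbers, not measurements from this study: 55 dB SPL speech, 20 dB SPL room noise, 25 dB SPL EIN, thresholds of 10 and 60 dB SPL, and gains of 10, 20, and 30 dB.

```python
"""Worked single-band example: aided vs unaided audibility (hypothetical levels)."""
import math


def power_sum_db(*levels_db: float) -> float:
    """Level of incoherent sources combined (their intensities add)."""
    return 10.0 * math.log10(sum(10.0 ** (level / 10.0) for level in levels_db))


def audibility_db(speech_db: float, noise_db: float, threshold_db: float) -> float:
    """Speech level above the larger of the threshold and the noise."""
    return speech_db - max(threshold_db, noise_db)


def unaided_audibility(speech_db: float, room_db: float, threshold_db: float) -> float:
    return audibility_db(speech_db, room_db, threshold_db)


def aided_audibility(speech_db: float, room_db: float, threshold_db: float,
                     gain_db: float, ein_db: float) -> float:
    """Gain raises speech, room noise and the aid's internal (EIN) noise equally."""
    noise_out_db = gain_db + power_sum_db(room_db, ein_db)
    return audibility_db(speech_db + gain_db, noise_out_db, threshold_db)


SPEECH, ROOM, EIN = 55.0, 20.0, 25.0  # hypothetical dB SPL values
for label, threshold in (("normal hearing", 10.0), ("hearing loss", 60.0)):
    row = [round(unaided_audibility(SPEECH, ROOM, threshold), 1)]
    row += [round(aided_audibility(SPEECH, ROOM, threshold, g, EIN), 1)
            for g in (10.0, 20.0, 30.0)]
    print(label, row)
# normal hearing [35.0, 28.8, 28.8, 28.8]
# hearing loss [-5.0, 5.0, 15.0, 25.0]
```

In the normal-hearing case, aiding lowers audibility however much gain is used. Speech and noise rise together, and the combined noise already lies above threshold. In the hearing-loss case, each 10 dB of gain adds 10 dB of audibility while the amplified noise stays below threshold.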

The results of this study confirm and extend the theoretical expectations outlined in the previous paragraph. Fig. 4 shows that, for the hearing-impaired participants, amplification increased cortical response amplitude and greater amplification increased it further. Neither effect occurred for the normal-hearing participants. The measurements in this study show two reasons why amplification had no effect on the cortical response of the normal-hearing participants. First, Figs. 5 and 6 show that, as expected, amplification did not actually increase the audibility of the stimuli for these participants. Second, as Fig. 6 shows, speech at conversational levels was already well above threshold. Even if amplification had increased audibility, no further increase in cortical response amplitude would therefore be expected, and Fig. 4 confirms that none occurred. Fig. 6 also shows that amplification can be expected to increase cortical response amplitude if it increases the audibility of speech, but only up to audibilities of around 30 dB. Close inspection of Fig. 5 indicates that amplification did increase audibility (as expected) for the hearing-impaired participants and, as a consequence, also increased cortical response amplitude.

It therefore seems entirely reasonable to use evoked cortical responses to assess whether hearing aids have made speech audible, or increased its audibility, for hearing-impaired people. For these listeners, the unaided audibility of conversational-level speech will commonly be low, or even negative. Calculations based on the speech spectra shown in Fig. 1 indicate that, for speech at 65 dB SPL or lower, the audibility of the stimuli used in this experiment was 20 dB or less for hearing loss of moderate or greater degree. This application has appropriately been described as using cortical responses to assess the detection of speech.50 The presence of a CAEP tells us that at least something is being detected at the auditory cortex, which is important information about the first essential step toward language development. It is also relevant that approximately 25% of the hearing-impaired clients in pediatric audiology have additional disabilities.51 These disabilities often make it more difficult to obtain behavioral information, even after the children reach the age at which normally developing children can perform behavioral hearing tests such as visual reinforcement audiometry (VRA), typically 6–8 months.

These findings mean that the original concern, namely that amplification appears to have no effect on cortical responses, need not be considered further. However, discussion in the literature about the appropriateness of using cortical potentials to assess hearing aid fittings has raised several other issues that might affect the validity of this approach. Hearing aids typically employ nonlinear processing, which can certainly change the acoustics of speech, either by design or as a by-product of its intended effect. This is not inherently a problem. If the purpose of cortical response measurement is to determine whether speech is audible when the hearing aid is worn in real life, then it is desirable that the hearing aid also affect the acoustics of the speech signal during the measurement.

The potential problem arises only if the hearing aid has a different effect on the speech sounds used for cortical response measurement than it does when those same speech sounds occur in the midst of ongoing speech. Nonlinear schemes primarily comprise compression to reduce the dynamic range of the signal, low-level expansion to attenuate low-level internal hearing aid noise, adaptive noise reduction to attenuate high-level external noise, and frequency compression to reduce the bandwidth of the signal. Each of these schemes must be implemented with time constants that control how quickly the amplification characteristics change when the characteristics of the input signal (speech, noise, or both) change. Because cortical responses are typically measured with brief sounds spaced about a second apart, there is clear potential for these sounds to be amplified differently in isolation than in their original speech context. Compression can cause their onset to receive more gain than it would in ongoing speech. Low-level expansion (if the interstimulus interval is long enough to activate it) can have the opposite effect. Adaptive noise reduction is unlikely to have any effect if the cortical response is measured in a quiet place. The limited research on this topic so far indicates that changes in the shape of the speech stimuli caused by nonlinear processing do not significantly affect the resulting cortical responses.52 Similarly, although the gain applied to a speech sound can undoubtedly vary with the context in which it occurs, the variations in gain measured so far do not seem to be large. However, it has been reported that, for up to 13% of tested phonemes, the additional gain in running speech compared with isolated speech sounds exceeded 5 dB, the audiometric step size, and may therefore be clinically important. Hence, if the output levels of phonemes in running speech are taken as the reference condition of interest, CAEP measures may slightly underestimate audibility when phonemes are presented in isolation.53 Similarly, in an unaided condition, larger CAEPs have been obtained for /sh/ sounds in a word-medial position than in a word-initial position.54 These differences were attributed to the sound having a more abrupt onset in the medial position than at the start of the word. Past research has also confirmed that the increased audibility of high-frequency sounds produced by nonlinear frequency compression can be observed by measuring cortical responses.55 56
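
The onset effect of compression described above can be illustrated with a simple feed-forward compressor. Its level detector rises with an attack time constant and falls with a release time constant. The sketch has several limitations. It works on frame levels in dB rather than on a waveform, and it smooths the level in the dB domain. It also omits low-level expansion, noise reduction, and frequency lowering. The function name `compressor_gains` and every parameter are hypothetical: 30 dB gain, 45 dB SPL knee, 3:1 ratio, 5 ms attack, 100 ms release, 1 ms frames, and input levels of 20 and 65 dB SPL. They do not describe any particular hearing aid or the devices used in this study.

```python
"""Onset gain of a simple compressor: isolated segment vs running speech (sketch)."""
import math


def compressor_gains(levels_db, gain_db=30.0, knee_db=45.0, ratio=3.0,
                     attack_ms=5.0, release_ms=100.0, frame_ms=1.0):
    """Per-frame gain (dB) of a simple feed-forward compressor.

    The level detector is a one-pole smoother in the dB domain that rises with
    the attack time constant and falls with the release time constant. Above
    the knee, gain falls by (1 - 1/ratio) dB per dB of detected level.
    """
    a_att = math.exp(-frame_ms / attack_ms)
    a_rel = math.exp(-frame_ms / release_ms)
    detected = levels_db[0]
    gains = []
    for level in levels_db:
        coeff = a_att if level > detected else a_rel
        detected = coeff * detected + (1.0 - coeff) * level
        excess = max(0.0, detected - knee_db)
        gains.append(gain_db - (1.0 - 1.0 / ratio) * excess)
    return gains


QUIET, SPEECH = 20.0, 65.0                   # hypothetical frame levels, dB SPL
isolated = [QUIET] * 100 + [SPEECH] * 100    # stimulus after a quiet gap
running = [SPEECH] * 100 + [SPEECH] * 100    # same segment inside ongoing speech

g_iso, g_run = compressor_gains(isolated), compressor_gains(running)
onset = 100
print(f"onset gain: isolated {g_iso[onset]:.1f} dB, running {g_run[onset]:.1f} dB")
print(f"after 50 ms: isolated {g_iso[onset + 50]:.1f} dB, running {g_run[onset + 50]:.1f} dB")
```

Under these assumptions, the first frame of the isolated segment receives the full 30 dB of gain, because the detector is still near the quiet level. The same frame inside ongoing speech receives about 16.7 dB. The two gains converge within a few tens of milliseconds. Adding low-level expansion with a long interstimulus interval would instead reduce the onset gain, as noted above.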

Several issues raised in the literature remain relevant and should be investigated further. These are the effects of attack and release times, compression ratios, stimulus length, context (i.e., isolated versus running speech), dynamic noise reduction, and frequency compression or transposition on effective gain and stimulus waveform. A longitudinal design would also be valuable, for three purposes: to explore how the brain adjusts to these altered speech cues; to analyze whether cortical measures correlate with speech intelligibility for real words and sentences; and to identify a reliable method (e.g., the ACC16 or mismatch negativity57) for evaluating a person's (future) ability to discriminate between speech sounds and develop language. Several studies have recently been published on the effects of frequency transposition and running speech on CAEPs,54 55 56 and more specifically on the difference between onset and offset responses.58 The effect of hearing aids on consonant-vowel transitions59 (recorded using ACCs) has also been investigated. Clearly, more research is needed, but the effects of gain, SNR, and audibility on CAEPs can now be considered established.

Conclusion

This study investigated the effect of hearing aid gain on the CAEP amplitudes of participants with normal hearing and participants with hearing loss. It also examined the relationship between cortical responses, SNR, and audibility. CAEP amplitudes increased significantly with hearing aid gain for the hearing-impaired participants but not for the normal-hearing participants. The increase in CAEP amplitude for the hearing-impaired participants was associated with the increased audibility that amplification produced. For the normal-hearing participants, audibility was already high when unaided and did not increase when aided, despite the increased stimulus amplitude, because internal hearing aid noise reduced the SNR. These results have implications for testing aided hearing-impaired individuals: an effect of aiding on CAEP amplitude should be expected in this group, but not necessarily in normal-hearing individuals. Hence, any future investigation involving hearing aids should include at least one group of hearing-impaired subjects.

Acknowledgments

The authors acknowledge the financial support of the HEARing CRC, established and supported under the Cooperative Research Centres Program (an initiative of the Australian Government), the National Acoustic Laboratories, and the Australian Government Department of Health. They also thank all participants in this study and the reviewers for their valuable comments.

References

1. Martin B A, Tremblay K L, Stapells D R. Principles and applications of cortical auditory evoked potentials. Philadelphia, PA: Lippincott Williams and Wilkins; 2007:482–507.

2. Ponton C W, Eggermont J J, Kwong B, Don M. Maturation of human central auditory system activity: evidence from multi-channel evoked potentials. Clin Neurophysiol. 2000;111(2):220–236. doi: 10.1016/s1388-2457(99)00236-9.

3. Sharma A, Dorman M F, Spahr A J. A sensitive period for the development of the central auditory system in children with cochlear implants: implications for age of implantation. Ear Hear. 2002;23(6):532–539. doi: 10.1097/00003446-200212000-00004.

4. Hyde M. The N1 response and its applications. Audiol Neurootol. 1997;2(5):281–307. doi: 10.1159/000259253.

5. Lightfoot G. Summary of the N1–P2 cortical auditory evoked potential to estimate the auditory threshold in adults. Semin Hear. 2016;37(01):1–8. doi: 10.1055/s-0035-1570334.

6. Dillon H. So, baby, how does it sound? Cortical assessment of infants with hearing aids. Hear J. 2005;58(10):10–17.

7. Punch S, Van Dun B, King A, Carter L, Pearce W. Clinical experience of using cortical auditory evoked potentials in the treatment of infant hearing loss in Australia. Semin Hear. 2016;37(01):36–52. doi: 10.1055/s-0035-1570331.

8. Kosaner J, Bayguzina S, Gültekin M. Monitoring adequacy of audio processor programs and auditory maturity using aided cortical assessment (ACA). Paper presented at: World Congress of Audiology; May 3–7, 2014; Brisbane, Australia.

9. Gilley P M, Sharma A, Dorman M, Finley C C, Panch A S, Martin K. Minimization of cochlear implant stimulus artifact in cortical auditory evoked potentials. Clin Neurophysiol. 2006;117(8):1772–1782. doi: 10.1016/j.clinph.2006.04.018.

10. Mc Laughlin M, Lopez Valdes A, Reilly R B, Zeng F G. Cochlear implant artifact attenuation in late auditory evoked potentials: a single channel approach. Hear Res. 2013;302:84–95. doi: 10.1016/j.heares.2013.05.006.

11. Friesen L M, Picton T W. A method for removing cochlear implant artifact. Hear Res. 2010;259(1–2):95–106. doi: 10.1016/j.heares.2009.10.012.

12. Sharma A, Campbell J, Cardon G. Developmental and cross-modal plasticity in deafness: evidence from the P1 and N1 event related potentials in cochlear implanted children. Int J Psychophysiol. 2015;95(2):135–144. doi: 10.1016/j.ijpsycho.2014.04.007.

13. Sharma A, Martin K, Roland P, et al. P1 latency as a biomarker for central auditory development in children with hearing impairment. J Am Acad Audiol. 2005;16(8):564–573. doi: 10.3766/jaaa.16.8.5.

14. Ponton C W, Don M, Eggermont J J, Waring M D, Kwong B, Masuda A. Auditory system plasticity in children after long periods of complete deafness. Neuroreport. 1996;8(1):61–65. doi: 10.1097/00001756-199612200-00013.

15. Purdy S C, Kelly A S. Changes in speech perception and auditory evoked potentials over time after unilateral cochlear implantation in postlinguistically deaf adults. Semin Hear. 2016;37(01):62–73. doi: 10.1055/s-0035-1570329.

16. Martin B A, Boothroyd A. Cortical, auditory, evoked potentials in response to changes of spectrum and amplitude. J Acoust Soc Am. 2000;107(4):2155–2161. doi: 10.1121/1.428556.

17. Won J H, Clinard C G, Kwon S, et al. Relationship between behavioral and physiological spectral-ripple discrimination. J Assoc Res Otolaryngol. 2011;12(3):375–393. doi: 10.1007/s10162-011-0257-4.

18. Ross B, Tremblay K L, Picton T W. Physiological detection of interaural phase differences. J Acoust Soc Am. 2007;121(2):1017–1027. doi: 10.1121/1.2404915.

19. Miller S, Zhang Y. Neural coding of phonemic fricative contrast with and without hearing aid. Ear Hear. 2014;35(4):e122–e133. doi: 10.1097/AUD.0000000000000025.

20. Picton T. Hearing in time: evoked potential studies of temporal processing. Ear Hear. 2013;34(4):385–401. doi: 10.1097/AUD.0b013e31827ada02.

21. Tomlin D, Rance G. Maturation of the central auditory nervous system in children with auditory processing disorder. Semin Hear. 2016;37(01):74–83. doi: 10.1055/s-0035-1570328.

22. Tremblay K, Kraus N, McGee T, Ponton C, Otis B. Central auditory plasticity: changes in the N1-P2 complex after speech-sound training. Ear Hear. 2001;22(2):79–90. doi: 10.1097/00003446-200104000-00001.

23. Barlow N, Purdy S C, Sharma M, Giles E, Narne V. The effect of short-term auditory training on speech in noise perception and cortical auditory evoked potentials in adults with cochlear implants. Semin Hear. 2016;37(01):84–98. doi: 10.1055/s-0035-1570335.

24. Hoppe U, Rosanowski F, Iro H, Eysholdt U. Loudness perception and late auditory evoked potentials in adult cochlear implant users. Scand Audiol. 2001;30(2):119–125. doi: 10.1080/010503901300112239.

25. Bidelman G M, Villafuerte J W, Moreno S, Alain C. Age-related changes in the subcortical-cortical encoding and categorical perception of speech. Neurobiol Aging. 2014;35(11):2526–2540. doi: 10.1016/j.neurobiolaging.2014.05.006.

26. Ross B, Fujioka T, Tremblay K L, Picton T W. Aging in binaural hearing begins in mid-life: evidence from cortical auditory-evoked responses to changes in interaural phase. J Neurosci. 2007;27(42):11172–11178. doi: 10.1523/JNEUROSCI.1813-07.2007.

27. Tremblay K L, Piskosz M, Souza P. Effects of age and age-related hearing loss on the neural representation of speech cues. Clin Neurophysiol. 2003;114(7):1332–1343. doi: 10.1016/s1388-2457(03)00114-7.

28. Barnet A B. EEG audiometry in children under three years of age. Acta Otolaryngol. 1971;72(1):1–13. doi: 10.3109/00016487109122450.

29. Rapin I, Graziani L J. Auditory-evoked responses in normal, brain-damaged, and deaf infants. Neurology. 1967;17(9):881–894. doi: 10.1212/wnl.17.9.881.

30. Billings C J, Tremblay K L, Souza P E, Binns M A. Effects of hearing aid amplification and stimulus intensity on cortical auditory evoked potentials. Audiol Neurootol. 2007;12(4):234–246. doi: 10.1159/000101331.

31. Billings C J, Tremblay K L, Miller C W. Aided cortical auditory evoked potentials in response to changes in hearing aid gain. Int J Audiol. 2011;50(7):459–467. doi: 10.3109/14992027.2011.568011.

32. Marynewich S, Jenstad L M, Stapells D R. Slow cortical potentials and amplification—Part I: N1–P2 measures. Int J Otolaryngol. 2012;2012:921513. doi: 10.1155/2012/921513.

33. Jenstad L M, Marynewich S, Stapells D R. Slow cortical potentials and amplification—Part II: Acoustic measures. Int J Otolaryngol. 2012;2012:386542. doi: 10.1155/2012/386542.

34. Cody D TR, Klass D W. Cortical audiometry. Potential pitfalls in testing. Arch Otolaryngol. 1968;88(4):396–406. doi: 10.1001/archotol.1968.00770010398012.

35. Korczak P A, Kurtzberg D, Stapells D R. Effects of sensorineural hearing loss and personal hearing aids on cortical event-related potential and behavioral measures of speech-sound processing. Ear Hear. 2005;26(2):165–185. doi: 10.1097/00003446-200504000-00005.

36. Souza P E, Tremblay K L. New perspectives on assessing amplification effects. Trends Amplif. 2006;10(3):119–143. doi: 10.1177/1084713806292648.

37. Carter L, Golding M, Dillon H, Seymour J. The detection of infant cortical auditory evoked potentials (CAEPs) using statistical and visual detection techniques. J Am Acad Audiol. 2010;21(5):347–356. doi: 10.3766/jaaa.21.5.6.

38. Carter L, Dillon H, Seymour J, Seeto M, Van Dun B. Cortical auditory-evoked potentials (CAEPs) in adults in response to filtered speech stimuli. J Am Acad Audiol. 2013;24(9):807–822. doi: 10.3766/jaaa.24.9.5.

39. Chang H W, Dillon H, Carter L, van Dun B, Young S T. The relationship between cortical auditory evoked potential (CAEP) detection and estimated audibility in infants with sensorineural hearing loss. Int J Audiol. 2012;51(9):663–670. doi: 10.3109/14992027.2012.690076.

40. Golding M, Dillon H, Seymour J, Carter L. The detection of adult cortical auditory evoked potentials (CAEPs) using an automated statistic and visual detection. Int J Audiol. 2009;48(12):833–842. doi: 10.3109/14992020903140928.

41. Van Dun B, Carter L, Dillon H. Sensitivity of cortical auditory evoked potential detection for hearing-impaired infants in response to short speech sounds. Audiol Res. 2012;2e13:65–76. doi: 10.4081/audiores.2012.e13.

42. Hagerman B, Olofsson Å. A method to measure the effect of noise reduction algorithms using simultaneous speech and noise. Acta Acust United Acust. 2004;90(2):356–361.

43. International Organisation for Standardization. Acoustics—Reference zero for the calibration of audiometric equipment—Part 2: Reference equivalent threshold sound pressure levels for pure tones and insert earphones. Geneva, Switzerland; 1994.

44. American Electroencephalographic Society. American Electroencephalographic Society guidelines for standard electrode position nomenclature. J Clin Neurophysiol. 1991;8(2):200–202.

45. Billings C. Acoustic signal-to-noise ratios dominates auditory long latency responses. Paper presented at: International Evoked Response Audiometry Study Group; June 9–13, 2013; New Orleans, LA, USA.

46. Picton T W, Woods D L, Baribeau-Braun J, Healey T MG. Evoked potential audiometry. J Otolaryngol. 1976;6(2):90–119.

47. Ross B, Lütkenhöner B, Pantev C, Hoke M. Frequency-specific threshold determination with the CERAgram method: basic principle and retrospective evaluation of data. Audiol Neurootol. 1999;4(1):12–27. doi: 10.1159/000013816.

48. Tremblay K L, Billings C J, Friesen L M, Souza P E. Neural representation of amplified speech sounds. Ear Hear. 2006;27(2):93–103. doi: 10.1097/01.aud.0000202288.21315.bd.

49. Killion M C. Noise of ears and microphones. J Acoust Soc Am. 1976;59(2):424–433. doi: 10.1121/1.380886.

50. Billings C J, Papesh M A, Penman T M, Baltzell L S, Gallun F J. Clinical use of aided cortical auditory evoked potentials as a measure of physiological detection or physiological discrimination. Int J Otolaryngol. 2012;2012:365752. doi: 10.1155/2012/365752.

51. Ching T YC, Dillon H, Marnane V, et al. Outcomes of early- and late-identified children at 3 years of age: findings from a prospective population-based study. Ear Hear. 2013;34(5):535–552. doi: 10.1097/AUD.0b013e3182857718.

52. Easwar V, Glista D, Purcell D W, Scollie S D. Hearing aid processing changes tone burst onset: effect on cortical auditory evoked potentials in individuals with normal audiometric thresholds. Am J Audiol. 2012;21(1):82–90. doi: 10.1044/1059-0889(2012/11-0039).

53. Easwar V, Purcell D W, Scollie S D. Electroacoustic comparison of hearing aid output of phonemes in running speech versus isolation: implications for aided cortical auditory evoked potentials testing. Int J Otolaryngol. 2012;2012:518202. doi: 10.1155/2012/518202.

54. Easwar V, Glista D, Purcell D W, Scollie S D. The effect of stimulus choice on cortical auditory evoked potentials (CAEP): consideration of speech segment positioning within naturally produced speech. Int J Audiol. 2012;51(12):926–931. doi: 10.3109/14992027.2012.711913.

55. Ching T YC, Zhang V, Hou S, Van Buynder P. Cortical auditory evoked potentials reveal changes in audibility with nonlinear frequency compression in hearing aids for children: clinical implications. Semin Hear. 2016;37(01):25–35. doi: 10.1055/s-0035-1570332.

56. Glista D, Easwar V, Purcell D W, Scollie S D. A pilot study on cortical auditory evoked potentials in children: aided CAEPs reflect improved high-frequency audibility with frequency compression hearing aid technology. Int J Otolaryngol. 2012;2012:982294. doi: 10.1155/2012/982894.

57. Picton T W, Alain C, Otten L, Ritter W, Achim A. Mismatch negativity: different water in the same river. Audiol Neurootol. 2000;5(3–4):111–139. doi: 10.1159/000013875.

58. Billings C J. Uses and limitations of electrophysiology with hearing aids. Semin Hear. 2013;34(4):257–269. doi: 10.1055/s-0033-1356638.

59. Tremblay K L, Kalstein L, Billings C J, Souza P E. The neural representation of consonant-vowel transitions in adults who wear hearing aids. Trends Amplif. 2006;10(3):155–162. doi: 10.1177/1084713806292655.