Abstract

This study investigated whether a short intensive psychophysical auditory training program is associated with speech perception benefits and changes in cortical auditory evoked potentials (CAEPs) in adult cochlear implant (CI) users. Ten adult implant recipients trained approximately 7 hours on psychophysical tasks (Gap-in-Noise Detection, Frequency Discrimination, Spectral Rippled Noise [SRN], Iterated Rippled Noise, Temporal Modulation). Speech performance was assessed before and after training using Lexical Neighborhood Test (LNT) words in quiet and in eight-speaker babble. CAEPs evoked by a natural speech stimulus /baba/ with varying syllable stress were assessed pre- and post-training, in quiet and in noise. SRN psychophysical thresholds showed a significant improvement (78% on average) over the training period, but performance on other psychophysical tasks did not change. LNT scores in noise improved significantly post-training by 11% on average compared with three pretraining baseline measures. N1P2 amplitude changed post-training for /baba/ in quiet (p = 0.005, visit 3 pretraining versus visit 4 post-training). CAEP changes did not correlate with behavioral measures. CI recipients' clinical records indicated a plateau in speech perception performance prior to participation in the study. A short period of intensive psychophysical training produced small but significant gains in speech perception in noise and spectral discrimination ability. There remain questions about the most appropriate type of training and the duration or dosage of training that provides the most robust outcomes for adults with CIs.

Keywords: Cochlear implant, cortical auditory evoked potential, auditory rehabilitation, auditory training, auditory plasticity, speech in noise

Learning Outcomes: As a result of this activity, the participant will be able to (1) describe auditory training tasks used in the literature; (2) list outcome measures used for determining efficacy of auditory rehabilitation.

Difficulty understanding speech in noise is a major factor contributing to poor uptake and usage of hearing devices. Although hearing devices are regarded as a primary rehabilitation option for people with hearing loss (HL), their usage does not always result in successfully understanding speech in noise. A review of adult cochlear implant (CI) users in Victoria, Australia, reported median speech perception scores of 68%.1 These scores were achieved when listening to single words presented in quiet; results were poorer and more variable when listening in noise. On average, adults with HL wearing CIs performed similarly to adults with severe HL wearing bilateral hearing aids (HAs).1 HA wearers commonly report difficulties when trying to understand speech in noise, and this is one of the main reasons behind poor uptake of hearing instruments.2 As identified in a recent review, surveys from the United Kingdom, United States, Finland, Denmark, and Australia reveal that only 20 to 40% of individuals who could benefit from hearing devices actually seek and receive them, and of those who do access them, up to 40% use them rarely or not at all.3 This is of particular concern because, when HL is left untreated, it may have a significant negative impact on both the person with HL and their communication partners. People with HL experience more depression, social isolation, diminished capacity to learn, cognitive decline, and reduced overall quality of life compared with people with normal hearing.4 5 6 Many people with HL withdraw from community life and avoid interpersonal interactions.7 Researchers have investigated auditory training as a method for reducing the negative effects of HL on speech perception and other auditory abilities.

Auditory training typically focuses on improving auditory discrimination skills and almost without exception uses speech stimuli.8 9 The effect of training is variable, with either slight to moderate gains in speech perception or no benefit, depending on the training protocol used and the duration of the training.10 A recently published review of auditory training programs (13 studies) for adults with mild to severe HL using hearing devices found significant variation in the types of training offered.8 Stimuli included sentences, words, and/or nonsense syllables; the duration of training ranged from 2 weeks to 12 weeks; and the training was based either at home or in the clinic. Although most studies showed that participants improved their performance on the specific task on which they were trained, results were mixed as to whether the training led to improved functional outcomes, such as improved understanding of speech in noise. Optimal auditory training parameters are still not known.

CI recipients have variable outcomes, especially for speech perception in noise regardless of their performance in quiet, despite enormous improvements in the technology.10 Several factors contribute to variable CI outcomes, such as duration of HL and age of onset of the severe to profound HL.11 Auditory training has been studied as a means of improving speech recognition in noise for CI users, with mixed results.12 13 The effects of a brief period of speech perception training have also been investigated in adults with normal hearing listening to CI-simulated speech.9 The premise behind many training studies is that speech perception in noise engages a range of auditory processes and that auditory training can strengthen these processes and hence improve speech perception.13 Several auditory training studies have investigated the impact of short-term training on speech perception in adults with CIs.8 10 13 14 For instance, a 2005 study of 4 weeks of training showed some benefits in quiet,12 although in a later study,15 the same authors discussed the importance of variability in baseline performance. In that study, the authors went on to recommend the use of within-subject control designs, especially for this population.
In another short-term (3 weeks) computer-based training study in adults with CIs, the authors found that the training assisted in consonant discrimination but did not generalize to sentence perception.14 Another 4-week auditory training study found that digit perception in noise improved and the improvements were sustained for at least 1 month after the training ended, but that the improvements did not generalize to sentences.16 A 2008 auditory training study found that after training for 24 hours over 6 weeks using a commercial program, perception of Hearing In Noise Test (HINT) sentences in noise improved, and this change was not observed in the control group.17 18 In a more recent study, 4 days of auditory training in adults with CIs produced some changes in speech perception in noise but only at favorable 15- and 10-dB signal-to-noise ratios (SNRs).13

The authors of these training studies acknowledge that the aim of auditory training is a significant improvement in speech perception in noise, which is hard to attain.8 Overall, the results to date are encouraging; however, several factors are not well understood. These include duration of training, amount of training or dosage, and the type of material or training activity.8 Hence the current study used a range of training tasks that are known to correlate with speech perception such as frequency discrimination, envelope cues, temporal fine structure, and temporal resolution. The premise of capturing various auditory processes in the training was that engaging the central auditory system using different psychophysical tasks would improve speech perception in noise.

Cortical auditory evoked potentials (CAEPs) have been used to evaluate auditory function in adults with CIs.19 Adults with normal hearing display a P1, N1, and P2 CAEP response; these peaks are also evident in adults with CIs. However, there are some differences in morphology associated with the duration and/or onset of HL.19 CAEPs are a useful objective measure of auditory function as they correlate with speech perception in experienced CI users, are influenced by auditory experience, and can be used as an objective research measure of auditory plasticity.19 20 There is some evidence for cortical auditory plasticity and auditory training benefits for speech perception in older adults with HL using HAs and CIs.8 21 22 The correlation between CAEPs and behavioral measures of auditory function suggests that CAEPs could be used to objectively evaluate the benefits of auditory training with minimal influence of nonauditory factors such as motivation.23 Although CAEPs are not essential for observing training effects, they are useful for determining whether there are neurophysiologic changes associated with the training.23 24 The current study therefore used both behavioral tasks (temporal and spectral discrimination, word recognition in noise) and CAEPs to measure the outcomes of auditory training.

Spectrotemporal encoding of speech is essential for speech perception in poor SNRs.25 For simple temporal tasks, such as gaps in noise, CI users may be able to perform at levels similar to normal hearing controls.26 For simple spectral tasks such as frequency discrimination, CI users struggle to achieve the sensitivity achieved by people with normal hearing.27 28 The question remains as to whether this is a limitation of current CI technology or whether CI users have the capacity to perceive subtle acoustic differences, in which case training may improve their psychophysical abilities. Maarefvand et al investigated a CI recipient who had the advantage of intense preimplantation auditory training, as well as having normal hearing for many years prior to losing his hearing.29 This CI user could discriminate between sounds one semitone apart, an ability beyond that of the majority of CI users. Maarefvand et al concluded that CIs are able to code enough detail for a highly sensitive auditory system to be able to discriminate sounds at near normal hearing performance and that this CI user's high performance was related to the amount of auditory training undergone earlier in life.29 Intense auditory training postimplantation, rather than preimplantation, is the more usual model of auditory training for CI users, however.29 This case study suggests it is not necessarily CI technology that limits perception, but rather the ability of the CI user's auditory nervous system to process sounds.

Gap detection,30 temporal modulation discrimination (slow temporal processing),31 and spectral ripple thresholds correlate strongly with speech perception in experienced CI users.32 33 Because of the variable speech perception and psychophysical abilities of CI users, it is of interest to know whether these abilities can change postimplantation with intense, short-term auditory training.34 Temporal fine structure cues are needed for speech recognition in noise, and perception of these cues is compromised in people with HL.35 Processing of temporal fine structure was included in the auditory training paradigm in the Iterated Rippled Noise (IRN) task.36 Frequency discrimination has also been identified as challenging for CI users and hence was also included in the auditory training paradigm.37

There are no clear guidelines regarding the optimal duration and intensity of training. Compliance with training regimens is challenging, and hence shorter training periods may be more effective clinically.38 Little is known about whether speech perception and other aspects of auditory processing are trainable via psychophysical or music listening tasks and whether training effects can be distinguished from passive learning effects for CI users. There is evidence that short-term bouts of training can be advantageous for both auditory and cognitive processing.39 For example, 3 months of music training delivered at the rate of 3 hours of piano lessons per week improved memory and processing speed in older adults.40 Short-term music training also has been successful in improving music perception in CI users.10 CI users' speech perception may be regulated by their psychophysical abilities, and passive learning can influence these to an extent.10 This may explain research evidence for a link between duration of CI experience and performance41; the longer the duration of CI use, the more passive learning opportunities experienced. The current study investigated the effects of a short, 1-week period of spectrotemporal psychophysical training on speech recognition in noise and CAEPs to speech stimuli. We hypothesized that training over the week would result in improved performance on gap detection, spectral ripple noise, IRN, and temporal modulation discrimination tasks, and that this improvement in psychophysical abilities would be associated with improved word recognition scores and CAEPs.

Methods

Participants

Ten participants aged 39 to 78 years (mean = 55.36, standard deviation [SD] = 13.67) with 1.7 to 4.3 years of CI experience (mean = 3.01, SD = 0.96) undertook objective and behavioral measurements four times: three times prior to training (to establish a stable performance baseline) and once after training. Participants were recruited from the New Zealand Northern Cochlear Implant Program. Adult CI users who were considered by their rehabilitationist to have a clinically stable MAP, who had good clinical speech discrimination abilities (>50% score for HINT sentences in quiet) and a single-sided implant, and who had had their implant for a minimum of 15 months were considered eligible for the study. Participants' HINT sentence recognition scores in quiet ranged from 52 to 100% correct (mean = 85.0, SD = 21.9). No noise suppression programs were active during the assessments. All 10 participants had Advanced Combined Encoder strategies and were using either a Cochlear Freedom or Nucleus 5 (Cochlear, Australia) implant system. Six participants were prelingually deafened (Table 1). The research was approved by the University of Auckland Human Participants Ethics Committee.

Table 1. Details of the 10 Participants.

| Participant | Duration of Implant (y) | Age of Aiding (y) | Age of Implant (y) | Implant Ear | Probable Cause of Hearing Loss |
|---|---|---|---|---|---|
| CI 1 | 3 | Early but only regular since 18 | 57 | Left | Possibly genetic |
| CI 2 | 1 | 1 | 44.9 | Left | Rubella |
| CI 3 | 2.5 | 1 | 48 | Right | Rubella |
| CI 4 | 1.5 | 12 | 37 | Left | Progressive in last 13–14 y |
| CI 5 | 3 | 3 | 41.8 | Right | Fever |
| CI 6 | 3 | 5 | 57.6 | Left | Hereditary |
| CI 7 | 3.3 | 39 | 66.9 | Left | Otosclerosis/progressive |
| CI 8 | 5.1 | 35 | 74.9 | Left | Congenital progressive |
| CI 9 | 3.2 | 8 | 69.1 | Left | Progressive sensorineural |
| CI 10 | 2.5 | 5 | 38 | Right | Progressive, 5 y, profound |

Auditory Training

There were four visits to the laboratory, with 7 days of training between visits 3 and 4. The first trip to the laboratory is referred to as visit 1; visit 2 took place the following day to gather test-retest information; visit 3 was 7 days later; and visit 4 was 7 days after visit 3. Seven days of psychophysical auditory training were undertaken between visits 3 and 4; these are referred to as training days 1 to 7, with a control period of no training between visits 2 and 3. Participants were provided with a laptop and direct input lead at visit 3 after the initial three assessments. One week of home-based auditory training was undertaken on seven custom-designed APEX 3 programs,42 outlined in Table 2. The training protocol took ∼1 hour per day. Completion of the training protocol was confirmed for each participant at the end of the 7 days by examining the daily data files for each APEX program.

Table 2. Details of Psychophysical Tasks Trained for 7 Days.

| Task Type | Task Name | Format | Measurement |
|---|---|---|---|
| Temporal | Gap-in-Noise | 3AFC, staircase | Gap threshold (ms) |
| Temporal | TMTF | 3AFC, staircase | Amplitude modulation depth (dB) |
| Temporal | IRN | 3AFC, staircase | IRN to noise ratio |
| Spectral | Frequency Discrimination | 2AFC, yes-no task | Frequency (Hz) |
| Spectral | SRN | 3AFC, staircase | Number of ripples per octave |

Abbreviations: 2AFC, two-alternative forced choice; 3AFC, three-alternative forced choice; IRN, Iterated Ripple Noise; SRN, Spectral Ripple Noise; TMTF, Temporal Modulation Transfer Function.

Training Tasks

All participants were trained on temporal and spectral tasks. These were presented in an adaptive manner. Gap-in-Noise and frequency discrimination tasks used temporally or spectrally simple stimuli, whereas the two rippled noise tasks and temporal modulation transfer function (TMTF) used complex noise stimuli. All tasks used a three-alternative forced choice protocol; participants had to identify the odd stimulus when three stimuli were presented. The stimuli were presented through the direct input from the laptop to the implant processor. All tasks had to be completed each day, and hence the overall training took about 1 hour per day. Participants were not told a specific order for completing the tasks. Each participant had a folder created on the computer, and most participants undertook the training tasks in alphabetical order (frequency discrimination first, TMTF last). The mean of the last three reversals in a block of five was taken as the threshold. The task was terminated when five reversals were completed or the minimum stimulus level was reached.
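The stopping and threshold rules described above can be illustrated with a minimal sketch. The paper does not state the stepping rule, so a simple 1-down/1-up rule is assumed here; the function names, the `max_trials` safety cap, and the simulated listener are all hypothetical and are not taken from the APEX 3 programs.

```python
def run_staircase(respond, start, step, floor, max_reversals=5, max_trials=200):
    """Run an adaptive track and return the stimulus levels at each reversal.

    respond(level) -> True when the listener answers correctly.
    The 1-down/1-up stepping rule is an assumption for illustration.
    """
    level = start
    reversals = []
    last_direction = None
    for _ in range(max_trials):
        if len(reversals) >= max_reversals:
            break  # five reversals completed
        direction = -1 if respond(level) else +1  # harder after correct, easier after error
        if last_direction is not None and direction != last_direction:
            reversals.append(level)  # track changed direction at this level
        last_direction = direction
        next_level = level + direction * step
        if next_level < floor:
            break  # minimum stimulus level reached
        level = next_level
    return reversals


def staircase_threshold(reversals, n_last=3):
    """Threshold = mean of the last n_last reversal levels."""
    if len(reversals) < n_last:
        raise ValueError("not enough reversals to estimate a threshold")
    last = reversals[-n_last:]
    return sum(last) / n_last


# Hypothetical deterministic listener who is correct whenever level >= 10.
example_reversals = run_staircase(lambda level: level >= 10, start=20, step=2, floor=0)
example_threshold = staircase_threshold(example_reversals)
```

With the deterministic listener above, the track oscillates between 8 and 10, so the estimate sits just below the listener's true limit; real tracks would be noisier.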

Gap-in-Noise

Gap-in-Noise is a measure of temporal resolution, where listeners identify the noise containing a gap. The stimuli were white noise bursts of 500 milliseconds duration with a ramp of 20 milliseconds, presented with or without a variable gap. Stimuli were generated using a 16-bit digital-to-analog converter with a sampling frequency of 44.1 kHz. The noise was passed through a finite impulse response (FIR) band-pass filter with a 120 dB/octave slope. A variable amount of silence was introduced in the middle of the noise to generate the gap. The silence duration varied in 2-millisecond steps from 50 to 0 milliseconds.
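As a rough illustration of this stimulus, the sketch below builds a 500-millisecond noise burst at 44.1 kHz with 20-millisecond ramps and a centered silent gap. Several details are assumptions rather than the study's method: Gaussian noise, a raised-cosine ramp shape, a gap that replaces (rather than extends) part of the burst, and omission of the band-pass filter, whose cutoffs are not given in the text.

```python
import math
import random

FS = 44100  # sampling frequency in Hz, as stated in the text


def gap_in_noise(gap_ms, dur_ms=500, ramp_ms=20, fs=FS, seed=0):
    """Return a noise burst (list of floats) with a silent gap in the middle."""
    rng = random.Random(seed)
    n = round(dur_ms * fs / 1000)
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]

    # Onset/offset ramps (raised-cosine shape is an assumption).
    n_ramp = round(ramp_ms * fs / 1000)
    for i in range(n_ramp):
        w = 0.5 * (1.0 - math.cos(math.pi * i / n_ramp))
        x[i] *= w
        x[n - 1 - i] *= w

    # Silence the central gap_ms milliseconds.
    n_gap = round(gap_ms * fs / 1000)
    start = (n - n_gap) // 2
    for i in range(start, start + n_gap):
        x[i] = 0.0
    return x


# Gap durations from 50 ms down to 0 ms in 2-ms steps.
GAP_STEPS_MS = list(range(50, -1, -2))
```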

TMTF

The TMTF is a measure of temporal resolution that indicates one's ability to detect amplitude modulations in a signal across modulation frequencies. The signal was a 500-millisecond duration white noise low-pass filtered at 2,000 Hz with an onset and offset ramp of 20 milliseconds. The white noise was amplitude modulated by multiplying it with a DC-shifted sine wave. The modulation frequencies used were 4, 16, 32, and 64 Hz. The depth of modulation (m) was controlled by varying the amplitude of the sine wave from 0 (0% modulation) to 1 (100% modulation). At each modulation frequency, 15 stimuli were created with modulation depths varying from −30 dB to 0 dB (in 2-dB steps). Each TMTF exercise was terminated when five reversals were obtained, and the mean of the last three reversals was recorded.
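The modulation step can be sketched as follows. The sketch assumes the common convention that modulation depth in dB equals 20·log10(m), which the text does not state explicitly, and it omits the 2,000-Hz low-pass filter and the ramps for brevity. Function names are hypothetical.

```python
import math
import random


def depth_db_to_m(depth_db):
    """Convert modulation depth in dB to the index m (assumes dB = 20*log10(m))."""
    return 10 ** (depth_db / 20)


def am_noise(depth_db, fm_hz, dur_ms=500, fs=44100, seed=0):
    """White noise multiplied by a DC-shifted sine wave: n(t) * (1 + m*sin(2*pi*fm*t))."""
    rng = random.Random(seed)
    m = depth_db_to_m(depth_db)
    n = round(dur_ms * fs / 1000)
    return [
        rng.gauss(0.0, 1.0) * (1.0 + m * math.sin(2.0 * math.pi * fm_hz * i / fs))
        for i in range(n)
    ]


MODULATION_FREQS_HZ = [4, 16, 32, 64]  # modulation frequencies listed in the text
```

Under this convention, 0 dB corresponds to m = 1 (100% modulation) and −30 dB to m ≈ 0.03, so thresholds closer to −30 dB indicate better sensitivity.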

IRN

The IRN task required participants to discriminate IRN from bandpass noise. The IRN was created by adding a delayed (d = 10 milliseconds) copy of the noise to the original noise. Repeating this process eight times resulted in a stimulus creating a pitch sensation that was determined by the frequency 1/d, giving a perception of 100 Hz.43 The noise used in the present study had a bandwidth of 1,000 to 4,000 Hz.
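A minimal delay-and-add sketch of this process is given below. It assumes an "add-same" network (each iteration delays and adds the output of the previous iteration) with unity gain, which is one common way of building IRN but is not confirmed by the text; the 1,000 to 4,000 Hz band-limiting is omitted. The function name is hypothetical.

```python
import random


def iterated_rippled_noise(n_samples, delay_samples, n_iter=8, gain=1.0, seed=0):
    """Delay-and-add noise: repeat y <- y + gain * delayed(y) n_iter times."""
    rng = random.Random(seed)
    y = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    for _ in range(n_iter):
        # Shift the current signal by delay_samples, padding the start with zeros.
        delayed = [0.0] * delay_samples + y[: n_samples - delay_samples]
        y = [a + gain * b for a, b in zip(y, delayed)]
    return y


FS = 44100                              # assumed sampling rate in Hz
DELAY_S = 0.010                         # d = 10 ms, as in the text
delay_samples = round(DELAY_S * FS)     # 441 samples at 44.1 kHz
pitch_hz = 1.0 / DELAY_S                # pitch corresponds to 1/d
```

The resulting signal is strongly correlated with itself at a lag of d, which is the temporal regularity that gives rise to the pitch percept at 1/d.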

Frequency Discrimination

In the frequency discrimination task, the listener's task was to detect the interval containing the high-pitched tone. Each stimulus had an overall duration of 500 milliseconds, including rise/fall times of 20 milliseconds. The amount of frequency change was 8 Hz for the first two reversals and 2 Hz for the subsequent eight reversals. The frequency discrimination task targeted spectral resolution at 1,000 and 4,000 Hz.

Spectral Ripple Difference

Spectral rippled noise (SRN) was generated as described by Won et al.31 The SRN had a bandwidth of 100 to 5,000 Hz and a peak-to-valley ratio of 30 dB. Fourteen different stimuli with different ripple densities (0.125, 0.176, 0.250, 0.354, 0.500, 0.707, 1.000, 1.414, 2.000, 2.828, 4.000, 5.657, 8.000, and 11.314 ripples/octave) were synthesized. Two versions of each stimulus were generated differing in the phase of the spectral envelope. The reference ripple noise had a phase of 0 radians, whereas the target ripple noise had a phase of π/2 radians. All the stimuli were 500 milliseconds in duration with 150-millisecond rise/fall times.
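The 14 ripple densities listed above are consistent, to within rounding, with half-octave steps starting at 0.125 ripples/octave, which the sketch below reproduces. The envelope function is a simplified illustration only: it assumes a plain sinusoidal envelope in dB on a log2 frequency axis, which may differ from the envelope shape used by Won et al, and its name and parameters are hypothetical.

```python
import math

# Half-octave steps from 0.125 ripples/octave: 0.125 * 2**(k/2), k = 0..13.
RIPPLE_DENSITIES = [0.125 * 2 ** (k / 2) for k in range(14)]


def ripple_envelope_db(freq_hz, density, phase, f_low=100.0, peak_to_valley_db=30.0):
    """Spectral envelope (dB) at freq_hz for a given ripple density and phase.

    Assumes a sinusoidal envelope in dB over log2 frequency (simplification).
    """
    octaves = math.log2(freq_hz / f_low)
    return (peak_to_valley_db / 2.0) * math.sin(2.0 * math.pi * density * octaves + phase)


# Reference (phase 0) and target (phase pi/2) envelopes at the lower band edge.
reference_db = ripple_envelope_db(100.0, 1.0, 0.0)
target_db = ripple_envelope_db(100.0, 1.0, math.pi / 2)
```

Shifting the envelope phase by π/2 moves the spectral peaks and valleys, so the listener can only tell reference from target if the individual ripples are resolved.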

All tasks, except for the Gap-in-Noise task, provided immediate feedback for right or wrong answers. Thresholds for the forced-choice psychophysical discrimination tasks obtained on the first (day 1) and last training day (day 7) of the auditory training period were compared using paired t tests.
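For readers unfamiliar with the comparison, a paired t test on day 1 versus day 7 thresholds can be sketched with the standard library alone. The data in the example are invented purely for illustration and are not the study's thresholds; the function name is hypothetical. For a two-level within-subject comparison, the squared t statistic equals the F statistic from a repeated-measures ANOVA with 1 and n − 1 degrees of freedom.

```python
import math


def paired_t(before, after):
    """Return (t, df) for a paired t test on after - before differences."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)  # sample variance of differences
    t = mean / math.sqrt(var / n)
    return t, n - 1


# Hypothetical thresholds for four listeners (illustration only).
day1 = [1.0, 2.0, 3.0, 4.0]
day7 = [2.0, 4.0, 5.0, 8.0]
t_stat, df = paired_t(day1, day7)
f_equivalent = t_stat ** 2  # equals F(1, df) for the same two-level comparison
```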

Outcome Measures

Two measures of auditory performance were undertaken before (visits 1, 2, 3) and after training (visit 4): CAEPs and speech recognition in quiet and noise. CAEPs were recorded using AgCl electrodes; the active electrode was placed at Fz with the reference electrode on the mastoid contralateral to the CI, and the ground electrode on the forehead. The Compumedics Neuroscan SCAN4.5 software with a Compumedics SynAmps2 amplifier (Compumedics, Australia) system was used for CAEP recordings. CAEP stimuli consisted of a bisyllabic utterance /baba/, spoken by an Australian female in three different prosodic patterns—no-stress (/baba/), early stress (/BAba/) and late stress (/baBA/)—presented in two different listening conditions: in quiet and in eight-talker babble with adult male and female speakers at 75-dB sound pressure level (SPL). The bisyllabic stimuli were chosen to match the CVCV structure of the words in the speech perception task and to explore effects of natural speech stimulus intensity variation on CAEP outcomes. Table 3 summarizes the temporal characteristics of the CAEP stimuli.

Table 3. Temporal Properties of Cortical Auditory Evoked Potential Stimuli.

| Stimulus | Syllable1 Duration (ms) | Pause Duration (ms) | Syllable2 Onset (ms) | Syllable2 Duration (ms) | Total Duration (ms) |

|---|---|---|---|---|---|

| /baba/ | 110 | 55 | 165 | 235 | 400 |

| /BAba/ | 136 | 44 | 180 | 176 | 356 |

| /baBA/ | 131 | 94 | 225 | 217 | 442 |

CAEP stimuli were presented using Neuroscan Stim2 software through an Australian Monitor Synergy SY400 power amplifier and Sabine Graphi-Q GRQ-3102 equalizer (Sabine Inc., Alachua, FL) to a Turbosound IMPACT 50 speaker (Turbosound, England) 1.4 m from the participant, at 0-degree azimuth. The level of /baba/ with no stress on either syllable was 75-dB SPL. The intensity of the stressed syllable in /BAba/ and /baBA/ was 8 dB higher. The babble noise was presented through a second identical speaker at 45-degree azimuth at 1.4 m distance, calibrated to 70-dB SPL, resulting in a SNR ranging from +5 dB (unstressed syllable) to +13 dB (stressed syllable) for the noise condition. Speech stimuli for CAEP recordings were presented in pseudorandomized order with a variable interstimulus interval of 1,000 to 1,500 milliseconds. Each speech stimulus was presented 60 times per trial, with three trials per condition, presented in randomized order, giving a total of 180 evoked responses per stimulus.
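The SNR range and the sweep count follow directly from the presentation levels, as the short arithmetic sketch below shows. The variable names are hypothetical; the values are those stated in the text.

```python
SPEECH_UNSTRESSED_DB_SPL = 75   # level of the unstressed syllable
STRESS_BOOST_DB = 8             # stressed syllable is 8 dB higher
BABBLE_DB_SPL = 70              # eight-talker babble level

# SNR in dB is the speech level minus the noise level.
snr_unstressed_db = SPEECH_UNSTRESSED_DB_SPL - BABBLE_DB_SPL
snr_stressed_db = SPEECH_UNSTRESSED_DB_SPL + STRESS_BOOST_DB - BABBLE_DB_SPL

PRESENTATIONS_PER_TRIAL = 60
TRIALS_PER_CONDITION = 3
responses_per_stimulus = PRESENTATIONS_PER_TRIAL * TRIALS_PER_CONDITION
```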

The monosyllabic and multisyllabic subtests of the Lexical Neighborhood Test (LNT) were presented at 75 dB SPL at 0-degree azimuth using a laptop connected to a GSI 61 clinical audiometer, Laboratory-Gruppen amplifier, and TurboSound speaker (Turbosound, England).44 In the noise condition, the 70-dB SPL eight-speaker babble (National Australia Laboratory [NAL] recording) was played on the same audiometer channel (+5-dB SNR). LNT word lists were pseudorandomly assigned to the noise or quiet condition for each visit. LNT lists have easy words that have few alternatives in the lexical neighborhood and hence are highly predictable, and hard words that have many alternatives in their neighborhood (for example, the word cat has many neighbors including bat, cut, cad, and at). Consequently, hard lists require the participant to hear well to recognize the presented token, as mishearing a phoneme means a different word will be perceived. As the primary aim was to identify whether the training improved listening in noise, testing in noise included the monosyllabic LNT and multisyllabic LNT easy and hard lists, whereas testing in quiet was performed for easy monosyllabic LNT words only. If needed, the recording was paused to allow the participant additional time to process the sound, but the words were not repeated during speech perception testing.

Data Analysis

For CAEP analysis, continuous EEG recordings for each trial were epoched from −100 to 900 milliseconds using batch processing. Baseline correction (−100 to 0 milliseconds) and artifact rejection (−100 to 100 µV) were applied to each epoch. An offline 30-Hz low-pass filter with zero phase shift was then applied. The three trials per stimulus condition were combined to obtain a grand average for each stimulus condition. After post hoc processing, responses were peak picked for latencies and amplitudes of the P1-N1-P2 complex. The latency and amplitude of each peak (P1, N1, and P2) were determined for the six grand averages (quiet/noise, three speech stimuli) for each participant, for the four separate visits.
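The epoching, baseline-correction, and rejection steps can be illustrated with a simplified single-channel sketch. It is not the Neuroscan batch procedure used in the study: the function name and data layout are hypothetical, the 30-Hz low-pass filter is omitted, and baseline correction is applied before the amplitude check as an assumption about ordering.

```python
def epoch_and_average(eeg_uv, onsets, fs, tmin_ms=-100, tmax_ms=900, reject_uv=100.0):
    """Cut epochs around stimulus onsets, baseline-correct, reject artifacts, average.

    eeg_uv: continuous single-channel recording in microvolts (list of floats).
    onsets: stimulus onset positions in samples.
    Returns (average_waveform, number_of_epochs_kept).
    """
    pre = round(-tmin_ms * fs / 1000)   # samples before stimulus onset
    post = round(tmax_ms * fs / 1000)   # samples after stimulus onset
    kept = []
    for onset in onsets:
        start, stop = onset - pre, onset + post
        if start < 0 or stop > len(eeg_uv):
            continue  # epoch runs off the recording
        seg = eeg_uv[start:stop]
        baseline = sum(seg[:pre]) / pre            # mean of the -100 to 0 ms window
        seg = [v - baseline for v in seg]
        if max(abs(v) for v in seg) > reject_uv:   # outside -100 to +100 microvolts
            continue
        kept.append(seg)
    if not kept:
        return [], 0
    n = len(kept)
    average = [sum(column) / n for column in zip(*kept)]
    return average, n
```

In a typical use, the averages from the three trials of a condition would then be combined into the per-condition grand average described above.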

The bisyllabic stimuli produced two P1-N1-P2 evoked potential waveforms for some participants, but P1-N1-P2 to the first syllable was consistently evoked in all participants. The CAEP to the initial syllable was defined as the positive peak (P1) at ∼100 milliseconds after stimulus onset, N1 as the negative peak following P1, and P2 was defined as the largest peak within the complex in the 200- to 250-millisecond latency region. N1P2 amplitude was determined by subtracting N1 amplitude from P2 amplitude. Similar rules were used to identify the CAEP to the second syllable (P1′-N1′-P2′). Repeated measures analyses of variance (ANOVA) were conducted to determine stimulus (×3), noise (×2), and visit (×4) effects on the amplitudes and latency for the first three CAEP peaks. The later P1′-N1′-P2′ peaks were not analyzed because these peaks were not consistently present across participants and stimuli.
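The peak definitions above can be expressed as a small window-based peak picker. The 200- to 250-millisecond window for P2 comes from the text; the 50- to 150-millisecond search window for P1 and the choice to look for N1 between the P1 and P2 latencies are assumptions made for illustration, as are the function names.

```python
def pick_peak(times_ms, wave_uv, lo_ms, hi_ms, polarity):
    """Return (latency_ms, amplitude_uv) of the largest peak of the given polarity.

    polarity = +1 for a positive peak, -1 for a negative peak.
    """
    idx = [i for i, t in enumerate(times_ms) if lo_ms <= t <= hi_ms]
    if not idx:
        raise ValueError("no samples in the requested window")
    best = max(idx, key=lambda i: polarity * wave_uv[i])
    return times_ms[best], wave_uv[best]


def p1_n1_p2(times_ms, wave_uv):
    """Pick P1, N1, P2 and compute N1P2 amplitude (P2 amplitude minus N1 amplitude)."""
    p1 = pick_peak(times_ms, wave_uv, 50, 150, +1)      # assumed window around ~100 ms
    p2 = pick_peak(times_ms, wave_uv, 200, 250, +1)     # window stated in the text
    n1 = pick_peak(times_ms, wave_uv, p1[0], p2[0], -1) # negative peak following P1
    return {"P1": p1, "N1": n1, "P2": p2, "N1P2_amp": p2[1] - n1[1]}
```

Because N1 is negative and P2 is positive, subtracting N1 from P2 yields the peak-to-peak size of the N1P2 complex.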

LNT percent word correct scores (monosyllabic LNT and multisyllabic LNT) for easy quiet and easy noise conditions were compared using repeated-measures ANOVA to determine whether speech scores were affected by word type, noise, and visit (two types of lists; two SNRs, noise and quiet; four visits). Composite noise scores (averaged across easy and hard word lists) were also examined separately using a repeated-measures ANOVA to see if training affected speech recognition scores in noise (irrespective of the type of stimuli).

Results

Psychophysical Auditory Training

Table 4 shows psychophysical means and SDs for the CI participants for the first and last day of training compared with published means for adults with normal hearing. Normative data are provided for the iterated and spectral ripple noise and TMTF tasks and the Gap-in-Noise and frequency discrimination tasks.45 46

Table 4. Mean Discrimination Thresholds (n = 10) on Day 1 (First) and Day 7 (Last) of Auditory Training for Simple and Complex Temporal and Spectral Psychophysical Tasks.

| Task Type | Task Name | Day 1, Mean (SD) | Day 7, Mean (SD) | Normal Hearing Adults |
|---|---|---|---|---|
| Temporal | Gap-in-Noise (ms) | 8.7 (6.3) | 6.3 (4.4) | 4.68 (1.0)* |
| Temporal | Iterated Ripple Noise (Iterated Ripple Noise–to–noise ratio) | 0.153 (0.114) | 0.136 (0.108) | 0.05 (0.01)† |
| Spectral | Frequency discrimination (Hz) at 1,000 Hz | 110.2 (49.3) | 97.1 (59.8) | 20.2 (15.1)* |
| Spectral | Frequency discrimination (Hz) at 4,000 Hz | 138.8 (48.0) | 113.8 (29.1) | |
| Spectral | Spectral Ripple Noise (ripples/octave) | 2.65 (2.6) | 5.19 (2.7) | 5.52 (2.48)† |

Gap-in-Noise results were similar to values obtained for normal hearing adults, even pre-training, and did not change significantly over time (F(1, 9) = 0.477, p = 0.507). IRN and frequency discrimination scores did not change significantly over the training period (F(1, 8) = 1.842, p ≥ 0.212 and F(1, 7) = 2.14, p ≥ 0.177, respectively). For the IRN and frequency discrimination tasks, participants did not achieve the sensitivity of participants with normal hearing, even post-training. Performance on the SRN task was similar to previously reported normative data.43 Thresholds significantly improved for the SRN task between the first and final day of training (F(1, 8) = 7.58, p = 0.025).

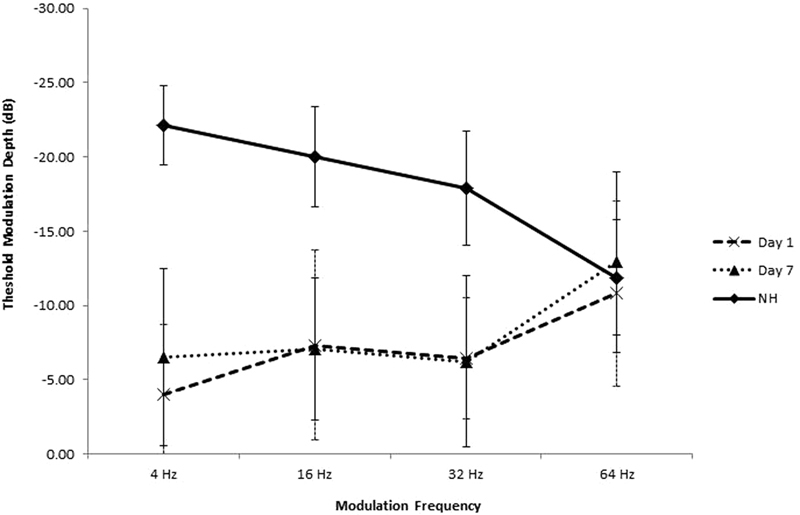

Fig. 1 shows TMTF results for the CI participants compared with normative (n = 10) results.43 Only 9 of the 10 CI participants had TMTF results, as one participant was not able to complete the task. Fig. 1 shows similar performance at 64 Hz for normal hearing and CI listeners; t tests showed no difference in TMTF thresholds from the normative data for 64 Hz on day 1 (p = 0.647) or day 7 (p = 0.644). For all other modulation frequencies (4, 16, 32 Hz), thresholds were significantly poorer (p < 0.001) for the participants with CIs than the normative thresholds. The comparison of training day 1 and day 7 results across all frequencies suggested a significant effect of training (F(1, 7) = 8.85, p = 0.046); however, planned comparisons showed only a trend for improved performance at 4 Hz (p = 0.057) when pre- and post-training results were compared for individual amplitude modulation frequencies.

Figure 1.

Temporal modulation transfer function results of participants with cochlear implants (n = 9; dashed line = training day 1, dotted line = training day 7) compared with published normal hearing (NH) values from Peter et al (2014) (solid line).43

Noise and Syllable Stress Effects on CAEPs

For visit 1, latencies in quiet were 102.7 (±17.9) milliseconds for P1, 158.2 (±22.9) milliseconds for N1, and 239.10 (±19.4) milliseconds for P2. P1, N1, and P2 latencies were all significantly longer (p < 0.001, see Table 5) and amplitudes were significantly smaller in noise than in quiet, with the exception of N1 amplitude (p ≤ 0.28, see Table 5), for all three speech stimuli. For example, the noise increased latencies by 27 to 33 milliseconds for all peaks for the no-stress stimulus. These results are consistent with other studies that have shown that masking affects stimulus audibility and hence CAEP latencies and amplitudes.47 48

Table 5. Results of Repeated-Measures ANOVAs Examining Visit, Syllable Stress, and Noise Effects on Cortical Auditory Evoked Potentials.

| Measure | Peak | Visit | Stress | Quiet/Noise | Interactions |
|---|---|---|---|---|---|
| Latencies | P1 | NS | F(2, 16) = 40.91, p < 0.001 | F(1, 8) = 68.04, p < 0.001 | Stress × noise, F(2, 16) = 10.06, p = 0.001 |
| Latencies | N1 | NS | F(2, 16) = 29.93, p < 0.001 | F(1, 8) = 36.21, p < 0.001 | Visit × stress × noise, F(6, 48) = 2.51, p = 0.034 |
| Latencies | P2 | NS | F(2, 16) = 9.16, p = 0.002 | F(1, 8) = 85.74, p < 0.001 | NS |
| Amplitudes | P1 | NS | NS | F(1, 8) = 6.84, p = 0.031 | NS |
| Amplitudes | N1 | NS | NS | NS | NS |
| Amplitudes | P2 | F(3, 24) = 4.51, p = 0.012 | NS | F(1, 8) = 16.99, p = 0.003 | NS |
| Amplitudes | N1P2 | F(3, 24) = 2.82, p = 0.060 | NS | F(1, 9) = 14.45, p = 0.005 | NS |

Abbreviations: ANOVA, analysis of variance; NS, not significant.

Participants could all perceive the differences between the speech stimuli and this was reflected in the CAEPs. P1, N1, and P2 latencies, but not amplitudes, differed significantly among the three speech stimuli (Table 5). P1, N1 and P2 latencies were significantly longer for the first syllable stress condition (BAba), and P1 and N1 latencies were significantly shorter for the second syllable stress condition (baBA).

Training Effects on CAEPs

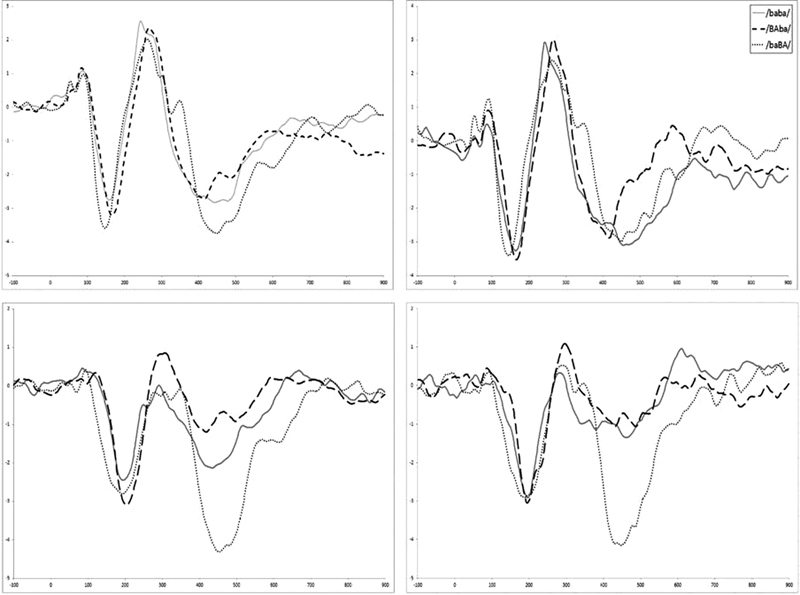

Fig. 2 shows pretraining versus post-training CAEPs recorded on visit 3 (immediately pretraining) and visit 4 (immediately post-training) for the three stimuli and in the quiet and noise conditions. The effect of varying stimulus stress is more evident for the CAEP waveforms recorded in noise. The speech stimulus with second syllable stress (baBA) produced a distinct P1′-N1′-P2′ response following the initial P1-N1-P2 in about half the participants. This is evident in the grand average waveform for /baBA/, but not in the grand averages for the other stimuli.

Figure 2.

Grand average cortical auditory evoked potentials (n = 10) recorded at Fz showing pre- (left column) and post- (right column) training prosodic stress responses in quiet (top row) and noise (bottom row). The y-axis shows amplitude (4 to −4 µV) and the x-axis shows latency (−100 to 900 ms).

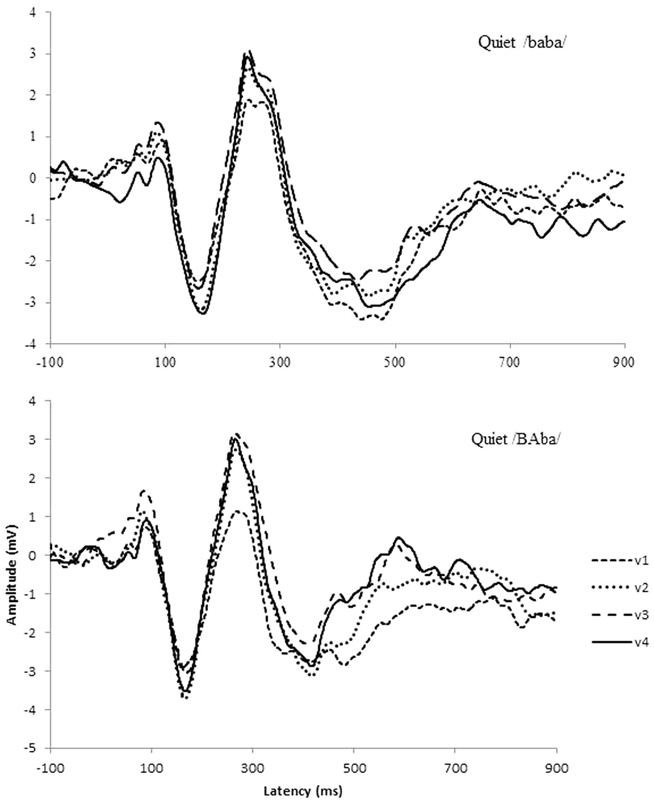

Fig. 3 shows CAEPs recorded for all the speech stimuli (/baba; BAba; baBA/) in quiet and in noise for all four visits. P2 and N1P2 amplitudes showed a trend for increased amplitudes across visits, consistent with previously reported training effects on CAEPs.49,50 Planned comparisons showed that for P2 there were no pre- and post-training differences when results were examined for individual stimuli. N1P2 amplitude increased significantly between visits 3 and 4 only for the no-stress stimulus /baba/ in quiet (p = 0.005).

Figure 3.

Cortical auditory evoked potential grand averages showing changes in the average waveform over visits, with v1, v2, and v3 being pretraining visits and v4 being the post-training visit. Top graph shows quiet /baba/ and bottom graph shows quiet /BAba/ condition.

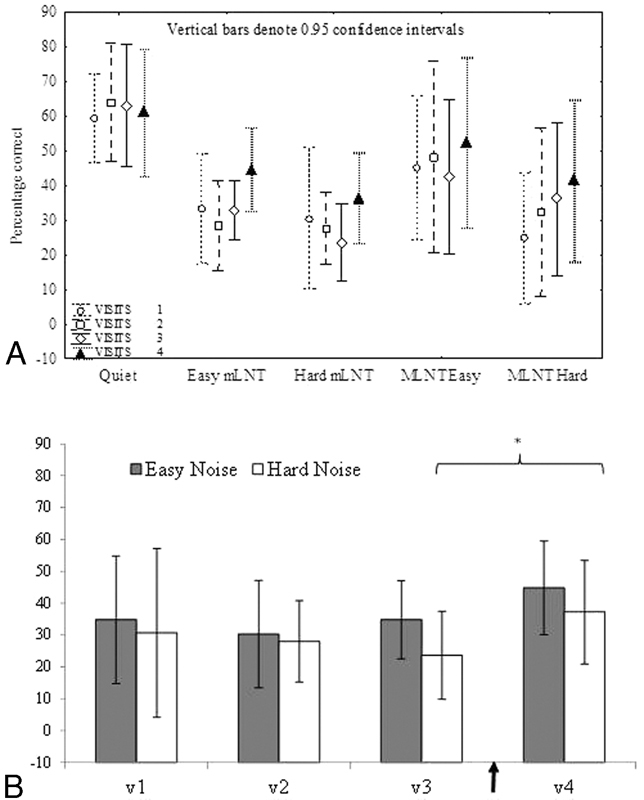

Speech Perception

LNT speech scores in quiet were very consistent over time, with a mean score of 63.7% ( ± 19.0) across the first three visits compared with post-training 63.2% ( ± 23.4). In noise, the mean score was 36.2% ( ± 20.8) in the pretraining sessions compared with 46.71% ( ± 22.2) at visit 4. As expected, speech perception in noise was significantly poorer than performance in quiet (F(1,9) = 42.57, p < 0.001). Overall LNT results (monosyllabic LNT and multisyllabic LNT) showed a significant main effect of visit for the noise condition (F(3, 24) = 6.63, p = 0.002) but not for the quiet condition. Planned comparisons also showed that monosyllabic LNT easy (p = 0.002) and monosyllabic LNT hard (p = 0.003) word scores in noise were significantly better post-training (visit 4) than pretraining at visit 3 (Fig. 4). There was more variability across baseline assessments for multisyllabic LNT lists (SD 30%) than for monosyllabic LNT (SD 16%) lists, which may have contributed to the lack of significant findings for multisyllabic LNT words.

Figure 4.

(A) Lexical Neighborhood Test (LNT) scores over four visits for quiet and noise conditions. Vertical bars denote 0.95 confidence intervals. *Two word lists that yielded a significant difference between visits 3 and 4. There was no difference between scores for visit 1, visit 2, and visit 3. (B) Easy and hard word lists for the LNT word lists across the four visits. The arrow indicates the training period (7 days). There is a significant difference for these two lists between visit 3 (pretraining) and visit 4 (post-training).

Correlations and Effect Sizes

Post-training performance on the measures that showed changes after training (N1P2 amplitude, monosyllabic LNT word scores in noise, and spectral ripple discrimination [SRD] scores) was not correlated across measures. The lack of correlation may reflect the small sample size or the differences in the size of the training benefits across behavioral and electrophysiologic measures. The effect size for the change in N1P2 amplitude for the no-stress stimulus /baba/ pre- versus post-training was medium to large (Cohen d = 0.65; r = 0.30). Moderate effect sizes were seen for easy monosyllabic LNT (d = 0.57; r = 0.27) and hard monosyllabic LNT words (d = 0.30; r = 0.14). SRD psychophysical scores showed the biggest change with a Cohen d of 0.81 (r = 0.38).
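The text does not state how the r values were obtained from Cohen d. One common conversion, assumed here purely for illustration, is r = d / sqrt(d² + 4), which holds for two conditions of equal size. The sketch below applies that conversion to the d values quoted above; the function name `cohen_d_to_r` is hypothetical. Because the quoted d values are rounded to two decimals, the converted r values agree with the reported ones only to within about 0.01.

```python
import math


def cohen_d_to_r(d: float) -> float:
    """Convert Cohen's d to a correlation-type effect size r.

    Assumes two conditions of equal size: r = d / sqrt(d^2 + 4).
    This is an assumed conversion, not one confirmed by the source.
    """
    return d / math.sqrt(d * d + 4.0)


# d values as quoted in the text (already rounded there).
reported_d = {
    "N1P2 amplitude, /baba/ in quiet": 0.65,
    "Easy monosyllabic LNT in noise": 0.57,
    "Hard monosyllabic LNT in noise": 0.30,
    "SRD": 0.81,
}

for label, d in reported_d.items():
    print(f"{label}: d = {d:.2f}, r = {cohen_d_to_r(d):.3f}")
```

The close agreement is compatible with, but does not confirm, the use of this conversion on unrounded d values.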

Discussion

The present study examined whether short-term computer-based auditory psychophysical training improved speech recognition in adults with CIs and whether any change in speech scores would be reflected in the CAEPs. All 10 CI participants completed 7 hours of training over a week, unsupervised at home and with no monetary benefit. The auditory training was associated with a small average improvement over time on all psychophysical measures; however, only SRN thresholds improved significantly between training day 1 and day 7. The goal of training was to enhance speech perception in noise. Won and colleagues found that SRN discrimination correlated with speech perception in noise for CI users.31 Consistent with the observed improvement in SRN, speech recognition in noise significantly improved after training in the current study but only for two conditions (easy and hard monosyllabic LNT words).

Although N1P2 CAEP amplitudes showed some changes post-training, these did not correlate with behavioral post-training measures, and the pre-post CAEP difference was only significant for the unstressed speech stimulus /baba/. Syllable stress had a significant effect on CAEP latencies, indicating that these intensity differences were processed at the level of the auditory cortex. The training affected CAEPs for the unstressed stimulus but not the stressed syllables, which could be a training-specific effect as intensity/prosody variation was not included in the training materials, or the effects of training may have been more difficult to demonstrate due to the greater variability in CAEPs observed for the stressed stimuli. Furthermore, changes in CAEPs can occur with repeated exposure to stimuli (e.g., Tremblay et al).51 Future studies in this area should therefore use a matched control group that does not receive auditory training to determine whether the psychophysical training accounts for the observed changes in CAEPs and speech perception.

The improvement in speech scores is consistent with other investigations of short-term auditory training using commercial computerized auditory training software (Seeing and Hearing Speech) with CI recipients and postlingually deaf individuals (n = 5).13 The Seeing and Hearing Speech training took place over 4 days, with 1 hour of training each day, and hence is comparable to the current study. The researchers also showed modest improvements in speech scores, 8.44% (p = 0.01) for HINT scores at +15-dB SNR and 7.89% (p = 0.02) for QuickSIN (speech in noise) scores.

Psychophysical performance was not examined at all four visits, only at the start and end of the training period (visits 3 and 4). Consistent with previous training studies, the largest effect of the training was on scores for one of the training tasks, SRN. This task involves spectrally complex stimuli, and performance on this task has been linked with speech perception in previous research with CI users.31 It is not clear whether training on this task alone would have resulted in the changes observed in CAEPs and speech scores; further research is needed to clarify this and to determine whether psychophysical training alone, or for a longer period of time, has similar or greater benefits compared with speech-based auditory training.

Although performance differed after training for the SRN task, for speech scores, and for CAEPs, these results did not correlate with each other. As noted by Tremblay et al,52 it is possible for CAEP changes to precede post-training changes in behavior, and hence it would be useful to compare the time course of behavioral and electrophysiologic changes in CI participants undergoing training. The use of more closely matched stimuli for behavioral and CAEP measures may improve the agreement between these measures in future studies.

The sample size was relatively small, and there was large variation in speech recognition skills across the 10 participants. Variation in baseline abilities has been cited previously as a factor contributing to the limited success of auditory training.14 Participants in the current study had used their CI for at least 15 months. Training studies report greater success when participants have had a year or less of CI experience.12 Clinically, the use of auditory training seems particularly important for people with more CI experience whose performance reaches a plateau below an optimal level for effective communication in challenging environments in everyday life.

The majority of participants in the current study had prelingual onset of deafness. Earlier studies have shown better outcomes for CI users who are postlingually deafened. For example, 4 of the 10 CI participants in the study by Fu et al were postlingually deafened, and the significant improvements on HINT sentences were observed for these postlingual participants.12 Most of the participants in the current study were not successful HA users and had not used their HAs on a regular basis prior to implantation; therefore, the evidence for some auditory plasticity in this population provided by this study is encouraging.53 The auditory training materials and tasks in the current study focused on bottom-up auditory processing skills including spectral and temporal processing of simple and complex stimuli that may be more beneficial for this group.

How bottom-up auditory processing interacts with top-down cognitive and language-processing skills, and what this means for optimal auditory training, has not been established. It is possible that training needs to tap into broader language, attention, and memory skills (top-down) for some people. It is recognized that training ought to be adaptive to accommodate variations in performance and that learning is optimal when training is kept at a difficult but possible level, sometimes referred to as the edge of competence.54 The idea that the training approach should consider baseline cognitive abilities is new, however. There is increasing interest in the interaction between auditory perception and cognition, but this has not been explored with respect to auditory training. Lunner et al reviewed the evidence for links between cognition and HA performance and noted that working memory impacts on the benefits of different signal-processing strategies in HA users.55 HA users with better working memory benefit more from fast-acting compression. Another study by the same group reported that the impact of working memory differed depending on the duration of hearing device usage.56 Closer to the fitting time, working memory played a bigger role than 6 months later. Currently, there are only a few studies of auditory training benefits in CI users, and these have small numbers, high variability in baseline speech perception abilities, and no assessment of cognitive skills that might impact on training effectiveness. Hence, more research is needed in this area.

The optimal duration and intensity/dosage of auditory training are also not well established. The current study had 7 hours of training but other studies have used longer training periods ranging up to 3 weeks with similar outcomes.8 Molloy et al found that shorter, more intensive training was more effective for auditory perceptual learning.57 Research that includes frequent probes to assess learning during the course of training is needed to better clarify optimal training duration and dosage for people with CIs.

Another aspect that could be better evaluated in auditory training studies is the participants' reports of benefits or impact on listening effort. In the current study, three of the CI participants spontaneously volunteered that the training helped in increasing their awareness of practicing their listening skills. Participants' opinions and experiences of training benefits are often not reported but are an important consideration given the acknowledged poor compliance and lack of engagement in training,38 despite published evidence for training effectiveness. Given these challenges, it is important that training is acceptable, has demonstrated effectiveness for important functional outcomes such as speech perception in noise, and optimizes outcomes in a short period of time. The current study of short-term auditory training showed improved perception of spectrally complex auditory stimuli, cortical evoked potentials, and speech perception in noise on a challenging speech perception task, suggesting that the psychophysical training approach warrants further investigation.

References

1. Dowell R, Hollow R, Winton L. Changing selection criteria for cochlear implants–the Melbourne experience. Sydney: Cochlear Ltd white paper; 2003. Available at: oldwebsite.cochlearacademy.com. Accessed January 7, 2016.
2. Hickson L, Meyer C. Improving uptake and outcomes of hearing aid fitting for older adults: what are the barriers and facilitators? Int J Audiol. 2014;53(Suppl 1):S1–S2. doi: 10.3109/14992027.2013.875265.
3. Knudsen L V, Öberg M, Nielsen C, Naylor G, Kramer S E. Factors influencing help seeking, hearing aid uptake, hearing aid use and satisfaction with hearing aids: a review of the literature. Trends Amplif. 2010;14(3):127–154. doi: 10.1177/1084713810385712.
4. Arlinger S. Negative consequences of uncorrected hearing loss–a review. Int J Audiol. 2003;42(Suppl 2):S17–S20.
5. Hogan A, O'Loughlin K, Miller P, Kendig H. The health impact of a hearing disability on older people in Australia. J Aging Health. 2009;21(8):1098–1111. doi: 10.1177/0898264309347821.
6. Lin F R, Metter E J, O'Brien R J, Resnick S M, Zonderman A B, Ferrucci L. Hearing loss and incident dementia. Arch Neurol. 2011;68(2):214–220. doi: 10.1001/archneurol.2010.362.
7. Scarinci N, Worrall L, Hickson L. The effect of hearing impairment in older people on the spouse. Int J Audiol. 2008;47(3):141–151. doi: 10.1080/14992020701689696.
8. Henshaw H, Ferguson M A. Efficacy of individual computer-based auditory training for people with hearing loss: a systematic review of the evidence. PLoS ONE. 2013;8(5):e62836. doi: 10.1371/journal.pone.0062836.
9. Burkholder R, Pisoni D, Svirsky M. Perceptual learning and nonword repetition using a cochlear implant simulation. International Congress Series. 2004;1273:208–211. doi: 10.1016/j.ics.2004.08.023.
10. Fu Q J, Galvin J J III. Perceptual learning and auditory training in cochlear implant recipients. Trends Amplif. 2007;11(3):193–205. doi: 10.1177/1084713807301379.
11. Blamey P, Artieres F, Başkent D. et al. Factors affecting auditory performance of postlinguistically deaf adults using cochlear implants: an update with 2251 patients. Audiol Neurootol. 2013;18(1):36–47. doi: 10.1159/000343189.
12. Fu Q J, Galvin J J III, Wang X, Nogaki G. Moderate auditory training can improve speech performance of adult cochlear implant patients. Acoust Res Lett Online. 2005;6(3):106–111.
13. Ingvalson E M, Lee B, Fiebig P, Wong P C. The effects of short-term computerized speech-in-noise training on postlingually deafened adult cochlear implant recipients. J Speech Lang Hear Res. 2013;56(1):81–88. doi: 10.1044/1092-4388(2012/11-0291).
14. Stacey P C, Raine C H, O'Donoghue G M, Tapper L, Twomey T, Summerfield A Q. Effectiveness of computer-based auditory training for adult users of cochlear implants. Int J Audiol. 2010;49(5):347–356. doi: 10.3109/14992020903397838.
15. Fu Q J, Galvin J J III. Maximizing cochlear implant patients' performance with advanced speech training procedures. Hear Res. 2008;242(1-2):198–208. doi: 10.1016/j.heares.2007.11.010.
16. Oba S I, Fu Q J, Galvin J J III. Digit training in noise can improve cochlear implant users' speech understanding in noise. Ear Hear. 2011;32(5):573–581. doi: 10.1097/AUD.0b013e31820fc821.
17. Miller J D, Watson C S, Kistler D J, Wightman F L, Preminger J E. Preliminary evaluation of the speech perception assessment and training system (SPATS) with hearing-aid and cochlear-implant users. Acoustical Society of America. 2007;2(1):50004. doi: 10.1121/1.2988004.
18. Miller J D, Watson C S, Kistler D J, Preminger J E, Wark D J. Training listeners to identify the sounds of speech: II. Using SPATS software. Hear J. 2008;61(10):29–33. doi: 10.1097/01.HJ.0000341756.80813.e1.
19. Kelly A S, Purdy S C, Thorne P R. Electrophysiological and speech perception measures of auditory processing in experienced adult cochlear implant users. Clin Neurophysiol. 2005;116(6):1235–1246. doi: 10.1016/j.clinph.2005.02.011.
20. Purdy S C, Kelly A S, Thorne P R. Auditory evoked potentials as measures of plasticity in humans. Audiol Neurootol. 2001;6(4):211–215. doi: 10.1159/000046835.
21. Anderson S, Kraus N. Auditory training: evidence for neural plasticity in older adults. Perspect Hear Hear Disord Res Diagn. 2013;17(1):37–57. doi: 10.1044/hhd17.1.37.
22. Fallon J B, Irvine D R, Shepherd R K. Cochlear implants and brain plasticity. Hear Res. 2008;238(1-2):110–117. doi: 10.1016/j.heares.2007.08.004.
23. Sharma M, Purdy S C, Kelly A S. The contribution of speech-evoked cortical auditory evoked potentials to the diagnosis and measurement of intervention outcomes in children with auditory processing disorder. Semin Hear. 2014;35(1):51–64.
24. Bishop D V. Research Review: Emanuel Miller Memorial Lecture 2012–neuroscientific studies of intervention for language impairment in children: interpretive and methodological problems. J Child Psychol Psychiatry. 2013;54(3):247–259. doi: 10.1111/jcpp.12034.
25. Anderson S, Skoe E, Chandrasekaran B, Kraus N. Neural timing is linked to speech perception in noise. J Neurosci. 2010;30(14):4922–4926. doi: 10.1523/JNEUROSCI.0107-10.2010.
26. Shannon R V. Detection of gaps in sinusoids and pulse trains by patients with cochlear implants. J Acoust Soc Am. 1989;85(6):2587–2592. doi: 10.1121/1.397753.
27. Moller A R. Cambridge, MA: Academic Press; 2000. Hearing: Its Physiology and Pathophysiology.
28. McKay C M. Spectral processing in cochlear implants. Int Rev Neurobiol. 2005;70:473–509. doi: 10.1016/S0074-7742(05)70014-3.
29. Maarefvand M, Marozeau J, Blamey P J. A cochlear implant user with exceptional musical hearing ability. Int J Audiol. 2013;52(6):424–432. doi: 10.3109/14992027.2012.762606.
30. Busby P A, Clark G M. Gap detection by early-deafened cochlear-implant subjects. J Acoust Soc Am. 1999;105(3):1841–1852. doi: 10.1121/1.426721.
31. Won J H, Drennan W R, Rubinstein J T. Spectral-ripple resolution correlates with speech reception in noise in cochlear implant users. J Assoc Res Otolaryngol. 2007;8(3):384–392. doi: 10.1007/s10162-007-0085-8.
32. Anderson E S, Oxenham A J, Nelson P B, Nelson D A. Assessing the role of spectral and intensity cues in spectral ripple detection and discrimination in cochlear-implant users. J Acoust Soc Am. 2012;132(6):3925–3934. doi: 10.1121/1.4763999.
33. Luo X, Fu Q J, Wei C G, Cao K L. Speech recognition and temporal amplitude modulation processing by Mandarin-speaking cochlear implant users. Ear Hear. 2008;29(6):957–970. doi: 10.1097/AUD.0b013e3181888f61.
34. Fu Q J, Galvin J III, Wang X, Nogaki G. Effects of auditory training on adult cochlear implant patients: a preliminary report. Cochlear Implants Int. 2004;5(Suppl 1):84–90. doi: 10.1179/cim.2004.5.Supplement-1.84.
35. Moore B C. The role of temporal fine structure processing in pitch perception, masking, and speech perception for normal-hearing and hearing-impaired people. J Assoc Res Otolaryngol. 2008;9(4):399–406. doi: 10.1007/s10162-008-0143-x.
36. Patterson R D, Handel S, Yost W A, Datta A J. The relative strength of the tone and noise components in iterated rippled noise. J Acoust Soc Am. 1996;100(5):3286–3294.
37. Gfeller K, Turner C, Oleson J. et al. Accuracy of cochlear implant recipients on pitch perception, melody recognition, and speech reception in noise. Ear Hear. 2007;28(3):412–423. doi: 10.1097/AUD.0b013e3180479318.
38. Sweetow R W, Sabes J H. Auditory training and challenges associated with participation and compliance. J Am Acad Audiol. 2010;21(9):586–593. doi: 10.3766/jaaa.21.9.4.
39. Strait D L, Kraus N. Can you hear me now? Musical training shapes functional brain networks for selective auditory attention and hearing speech in noise. Front Psychol. 2011;2:113. doi: 10.3389/fpsyg.2011.00113.
40. Bugos J A, Perlstein W M, McCrae C S, Brophy T S, Bedenbaugh P H. Individualized piano instruction enhances executive functioning and working memory in older adults. Aging Ment Health. 2007;11(4):464–471. doi: 10.1080/13607860601086504.
41. Lazard D S, Vincent C, Venail F. et al. Pre-, per- and postoperative factors affecting performance of postlinguistically deaf adults using cochlear implants: a new conceptual model over time. PLoS ONE. 2012;7(11):e48739. doi: 10.1371/journal.pone.0048739.
42. Francart T, van Wieringen A, Wouters J. APEX 3: a multi-purpose test platform for auditory psychophysical experiments. J Neurosci Methods. 2008;172(2):283–293. doi: 10.1016/j.jneumeth.2008.04.020.
43. Peter V, Wong K, Narne V K, Sharma M, Purdy S C, McMahon C. Assessing spectral and temporal processing in children and adults using temporal modulation transfer function (TMTF), Iterated Ripple Noise (IRN) perception, and spectral ripple discrimination (SRD). J Am Acad Audiol. 2014;25(2):210–218. doi: 10.3766/jaaa.25.2.9.
44. Kirk K I. Assessing Speech Perception in Listeners with Cochlear Implants: The Development of the Lexical Neighborhood Tests. Volta Review. 1998;100(2):63–85.
45. Meha-Bettison K. The Musical Advantage: Professional Musicians have Enhanced Auditory Processing Abilities [Thesis]. Macquarie University, Sydney, Australia; 2013.
46. Musiek F E, Shinn J B, Jirsa R, Bamiou D E, Baran J A, Zaida E. GIN (Gaps-In-Noise) test performance in subjects with confirmed central auditory nervous system involvement. Ear Hear. 2005;26(6):608–618. doi: 10.1097/01.aud.0000188069.80699.41.
47. Sharma M, Purdy S C, Munro K J, Sawaya K, Peter V. Effects of broadband noise on cortical evoked auditory responses at different loudness levels in young adults. Neuroreport. 2014;25(5):312–319. doi: 10.1097/WNR.0000000000000089.
48. Billings C J, Tremblay K L, Stecker G C, Tolin W M. Human evoked cortical activity to signal-to-noise ratio and absolute signal level. Hear Res. 2009;254(1–2):15–24. doi: 10.1016/j.heares.2009.04.002.
49. Shahin A, Bosnyak D J, Trainor L J, Roberts L E. Enhancement of neuroplastic P2 and N1c auditory evoked potentials in musicians. J Neurosci. 2003;23(13):5545–5552. doi: 10.1523/JNEUROSCI.23-13-05545.2003.
50. Tremblay K, Kraus N, McGee T, Ponton C, Otis B. Central auditory plasticity: changes in the N1-P2 complex after speech-sound training. Ear Hear. 2001;22(2):79–90. doi: 10.1097/00003446-200104000-00001.
51. Tremblay K L, Inoue K, McClannahan K, Ross B. Repeated stimulus exposure alters the way sound is encoded in the human brain. PLoS ONE. 2010;5(4):e10283. doi: 10.1371/journal.pone.0010283.
52. Tremblay K, Kraus N, McGee T. The time course of auditory perceptual learning: neurophysiological changes during speech-sound training. Neuroreport. 1998;9(16):3557–3560. doi: 10.1097/00001756-199811160-00003.
53. Isaiah A, Hartley D E. Can training extend current guidelines for cochlear implant candidacy? Neural Regen Res. 2015;10(5):718–720. doi: 10.4103/1673-5374.156964.
54. Moore D R, Halliday L F, Amitay S. Use of auditory learning to manage listening problems in children. Philos Trans R Soc Lond B Biol Sci. 2009;364(1515):409–420. doi: 10.1098/rstb.2008.0187.
55. Lunner T, Rudner M, Rönnberg J. Cognition and hearing aids. Scand J Psychol. 2009;50(5):395–403. doi: 10.1111/j.1467-9450.2009.00742.x.
56. Ng E H, Classon E, Larsby B. et al. Dynamic relation between working memory capacity and speech recognition in noise during the first 6 months of hearing aid use. Trends Hear. 2014;18:2331216514558688. doi: 10.1177/2331216514558688.
57. Molloy K, Moore D R, Sohoglu E, Amitay S. Less is more: latent learning is maximized by shorter training sessions in auditory perceptual learning. PLoS ONE. 2012;7(5):e36929. doi: 10.1371/journal.pone.0036929.