Abstract

Background

People experiencing complex chronic disease and disability (CCDD) face some of the greatest challenges of any patient population. Primary care providers find it difficult to manage the multiple discordant conditions and symptoms, and the often complex social challenges, experienced by these patients. The electronic Patient Reported Outcome (ePRO) tool is designed to overcome some of these challenges by supporting goal-oriented primary care delivery. Using the tool, patients and providers collaboratively develop health care goals on a portal linked to a mobile device, which helps patients and providers track progress between visits.

Objectives

This study tested the usability and feasibility of adopting the ePRO tool in a single interdisciplinary primary health care practice in Toronto, Canada. The Fit between Individuals, Task, and Technology (FITT) framework was used to guide our assessment and explore whether the ePRO tool is (1) feasible for adoption in interdisciplinary primary health care practices and (2) usable from both the patient and provider perspectives. This usability pilot is part of a broader user-centered design development strategy.

Methods

A 4-week pilot study was conducted in which patients and providers used the ePRO tool to develop health-related goals, which patients then monitored using a mobile device. Patients and providers collaboratively set goals using the system during an initial visit and had at least 1 follow-up visit at the end of the pilot to discuss progress. Focus groups and interviews were conducted with patients and providers to capture usability and feasibility measures. Data from the ePRO system were extracted to provide information regarding tool usage.

Results

Six providers and 11 patients participated in the study; 3 patients dropped out, mainly owing to health issues. The remaining 8 patients completed 210 monitoring protocols, equal to over 1300 questions, often answering questions daily. Providers and patients accessed the portal an average of 10 and 1.5 times, respectively. Users found the system easy to use, with some patients reporting that the tool helped their ability to self-manage, catalyzed a sense of responsibility over their care, and improved patient-centered care delivery. Some providers found that the tool helped focus conversations on goal setting. However, the tool did not fit well with provider workflows, monitoring questions were not adequately tailored to individual patient needs, and daily reporting became tedious and time-consuming for patients.

Conclusions

Although our study suggests relatively low usability and feasibility of the ePRO tool, we are encouraged by the early impact on patient outcomes and the generally positive responses from both user groups regarding the tool's potential to improve care for patients with CCDD. Consistent with our user-centered design development approach, we have modified the tool based on user feedback and are now testing the redeveloped tool through an exploratory trial.

Keywords: eHealth, mHealth, multimorbidity, primary care, usability, feasibility, pilot

Introduction

Background

People experiencing complex chronic disease and disability (CCDD) face some of the greatest challenges of any patient population. Patients with CCDD can be characterized as having multiple chronic conditions that affect their daily lives [1]; they are at higher risk of poor health outcomes [2,3] and tend to use health services more than patients with single conditions [4]. These patients are considered to be among the highest cost patient populations in the health care system [2]. Health systems worldwide are examining how care can best be structured to meet the needs of this growing, complex, and high-cost population.

Beyond the challenges that patients face in managing their own illnesses, primary health care providers struggle to manage the multiple conditions with discordant competing symptoms faced by these patients [5] and lack appropriate clinical practice guidelines to guide decision-making [6]. Patient-centered care, which allows for an individualized and holistic approach to patient care, is viewed as crucial to address the highly variable needs of patients with CCDD [7-10]. Patient-centered care can be supported through the adoption of goal-oriented care approaches as a means to help patients prioritize competing issues [11]. However, goals are often not agreed upon between patients with complex care needs and their clinicians [12], and clinicians may consider the process of ascertaining goals to be “too complex and too time consuming” [13].

The electronic Patient Reported Outcome (ePRO) mobile app and portal system were developed to support patients with CCDD and their primary health care providers to collaboratively set and monitor health-related goals. Mobile health (or mHealth) and other eHealth technologies have been previously used to help track health status and monitor symptoms via telemedicine and wearable technologies [14-17] and encourage improved engagement and changes in health behaviors by patients [18,19]. Although there are tools available that help support goal setting and monitoring, most of these are disease-specific (eg, supporting patients with diabetes [20]), and there are few available tools that can address the needs of patients with complex care needs who tend to be high users of the health care system [21].

The ePRO tool was developed in collaboration with QoC Health Inc., a Canada-based technology company focused on developing patient-centered technology that enables care for patients to shift to the community [22]. The tool was developed and tested through an iterative user-centered design approach [23]. As part of this development strategy, usability and feasibility testing is vital to ensure that tools are understandable and can be adopted by target users in typical settings before larger, more costly evaluations are run [24,25]. This study describes findings from a pilot study to test the feasibility and usability of the ePRO tool from the perspective of patients with CCDD and health care provider users from a primary health care practice in Toronto, Ontario, Canada.

Feasibility and Usability Framework Guiding Study and Analysis

The aims of this study were to: (1) determine whether the ePRO tool was feasible to be used by patients with CCDD and their primary health care providers as part of the delivery of primary health care services and (2) assess the usability of the ePRO tool from the perspective of both patient and provider. Our emphasis was on exploring these questions in a real-world setting, and as such, we adopted a pilot study approach in which patients and providers used the tool over a 4-week period.

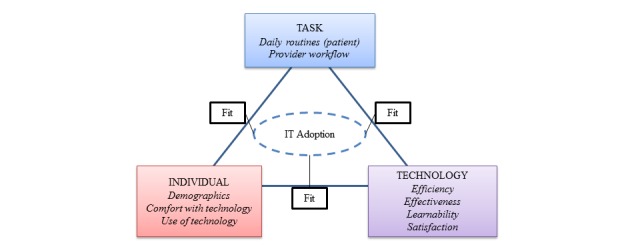

We used the “Fit between Individuals, Task and Technology” (FITT) framework to guide our feasibility and usability assessment [26,27]. The FITT framework suggests that adopting new eHealth systems requires a fit between the user, the technology, and the task or process that is undertaken. Feasibility refers to the ability of users to adopt a technology or intervention in their daily routines (often assessed through use of the tool [28]) and is strongly related to the FITT model's aim of assessing whether a technology can be adopted. Usability refers specifically to how well the technology meets user needs and tasks. As such, the FITT framework was adopted to assess feasibility overall, with an embedded usability analysis to assess the technology specifically (see Table 1). Tools are typically assessed in terms of efficiency (the resources a user requires to complete tasks [27]), effectiveness (the ability to complete tasks completely and accurately), learnability (how easily users can learn the system), and user satisfaction with the product [27,29,30]. Using this framework, we sought to answer the following research questions: (1) Is the ePRO tool feasible to be adopted into interdisciplinary primary health care practices? and (2) Is the ePRO tool usable from both the patient and provider perspectives?

Table 1.

Feasibility and usability measures.

| Conceptual framework | Component | Measure | Data source |
|---|---|---|---|
| Feasibility | User | Demographics | Patient information form |
| | User | Comfort with technology | Patient and provider self-report (in training or in focus groups or interviews) |
| | User | Use of technology | ePRO system data |
| | Task | Fit into daily routines | Patient focus groups and interviews |
| | Task | Fit into provider workflows | Provider focus groups |
| | Technology | Usability assessment (see Usability rows below) | |
| Usability | Efficiency | Time to complete monitoring (ie, time on task) | System data |
| | Efficiency | Reported efficiency | Patient focus groups and interviews; provider focus group |
| | Effectiveness | Reported errors | Online issue tracker system to communicate errors and problems with the system to QoC Health |
| | Learnability | Reported learnability | Patient focus groups and interviews; provider focus group |
| | Satisfaction | Reported satisfaction | Patient focus groups and interviews; provider focus group |

Table 1 summarizes the indicators used to assess feasibility and usability factors. These measures are aligned with similar studies of usability and feasibility [27,29-31]. As is typical in many feasibility and usability pilots, we relied on a relatively small sample of patients and providers to test the ePRO tool. Although some usability studies have used surveys such as the System Usability Scale [32] or the Post-Study System Usability Questionnaire [24], given our small sample, we opted to capture most data through qualitative focus groups, interviews, and observational notes. Quantitative data regarding system use were pulled directly from the ePRO system data (ie, information generated by the technology system itself) to capture compliance and adherence information. Qualitative data collection and analysis allowed us to capture the breadth and depth of user experience.

Figure 1 presents a diagram of the FITT framework, based on the original FITT model of Sheehan and colleagues [27], that includes the measures used to capture the components of the framework in our study.

Figure 1.

A diagram of the FITT Framework to assess usability and feasibility. Adapted from Sheehan et al [27] p. 364.

In a usability and feasibility assessment, we could also have explored privacy and security issues related to the system [33]. Because our systems are fully compliant with all privacy and security legislation related to transmission of patient data in Canada and the United States, and we had captured patient and provider perspectives on privacy and security in earlier stages of development [34], we did not believe it was necessary to assess privacy and security again at this stage of testing.

Methods

Setting

The ePRO tool has been developed within a Toronto-based Family Health Team (FHT), an interprofessional team of providers delivering primary health care services [35]. Patients have a lead primary care provider, either a physician or a nurse practitioner; however, patients may receive care from any provider on the team (registered nurses, a social worker, a dietitian, and a diabetes nurse educator). Six providers from the team participated in the study as active ePRO providers, including 1 physician, 1 social worker, 1 registered nurse, 1 dietitian, and 1 diabetes educator. Active ePRO providers were involved in goal setting and monitoring of patients at the start of the pilot study and conducted at least 1 follow-up visit with each patient to review progress using the portal system. Other providers, although not actively participating in the study, could view their patients’ data on the portal at any time if they chose.

Recruitment

Active ePRO providers were asked to individually identify 3-5 eligible participants from their practice panels. Eligible patients met all of the following inclusion criteria: (1) an FHT patient on the participating provider's practice panel; (2) physical capability to use a tablet, or a caregiver with that capability who could enter data on the patient’s behalf; and (3) complex care needs (2 or more chronic conditions, identified difficulty managing conditions, and social complexity and/or mental health issues) as identified by providers. FHT administrative staff contacted potential participants directly, over the phone or when they checked in for their appointment, to ask whether they were interested in being contacted by a member of the research team. The research team then informed interested patients about the study, what participation would require, the consent process, and the privacy of their information. Recruitment ran from October 6, 2014 to November 7, 2014; during this period, 12 potential participants were identified. Eleven of them could be reached and agreed to participate.

Participants provided informed consent by signing consent forms at the time of training and orientation on the device. Participants also filled out patient information forms at this time to provide data on patient demographics. Participants were assured that their participation was voluntary, that their personal data would be accessible only by the research team, and that they could return the device at any time if they became ill or were having difficulties monitoring their goals. All participants were assigned a unique identifier to ensure their anonymity before data analysis. Data submitted online from the device were stored on a secure server hosted by QoC Health Inc. The server is compliant with all health information data and security laws applicable in Canada and the United States. The technology partner, which had access to the patient data needed to provide technical support, signed a confidentiality agreement before the study began.

Full ethics approval for this study was obtained from the Joint Bridgepoint Hospital-West Park Healthcare Centre-Toronto Central Community Care Access Centre-Toronto Grace Health Centre Research Ethics Board before the initiation of the study.

Training

Providers were trained in 2 separate 1-hour group training sessions before the pilot study began: one session for active ePRO providers and another for other providers, mainly physicians from the practice, in case they wanted to monitor their patients involved in the study. Patients were trained one-on-one by a member of the research team in a 30-minute session at the time they consented to participate in the study. Although providing training to users limits our ability to test learnability, patient and provider users required a baseline understanding of the tool’s functionality and capabilities to engage with the tool appropriately, which allowed us to test other key aspects of feasibility and usability. We anticipate that for this tool to be used as part of regular practice, both providers and patients would require some baseline training; as such, including training in our study offers a closer approximation to real-world use.

The Intervention: Overview of the ePRO Tool

The ePRO tool includes 2 key features: (1) My Goal Tracker and (2) Hospital CheckOut.

Feature #1: My Goal Tracker

The My Goal Tracker feature allows patients and providers to collaboratively identify patient goals and help patients to track outcomes related specifically to those goals. In the development of the ePRO tool, providers indicated that they engaged in goal setting activities with their patients with CCDD as a means to support improved self-management [36]. Activities such as motivational interviewing, counseling, and health coaching are used by providers to help patients manage at home between visits. The tool allows patients and providers to set goals related to 5 different areas identified as most important by patients with CCDD, their caregivers, and their primary care providers in the earlier phases of development. These areas include: (1) maintaining or improving general physical and social well-being (physical health goal); (2) maintaining or improving general mental well-being (mood and memory goal); (3) maintaining or improving mobility (mobility goal); (4) pain management (pain goal); and (5) weight management (diet goal).

Monitoring protocols are linked to each of the 5 goal areas and are based on 3 valid and reliable generic outcome measures developed by the Patient-Reported Outcomes Measurement Information System (PROMIS). The 3 PROMIS tools included are (1) the General Health Scale, (2) the Pain Interference Scale, and (3) the Health Assessment Questionnaire. The PROMIS tools used in the monitoring protocols are generic rather than disease-specific patient-reported outcome measures, to better reflect our patient population of interest. Furthermore, these tools have demonstrated validity and reliability in chronic disease populations [37-39]. Note that the diet goal was not based on a PROMIS tool; instead, it allowed patients to take pictures of their food and track their weight.

Feature #2: Hospital CheckOut

The Hospital CheckOut feature allows patients to inform their primary care provider when they have visited and been discharged from a hospital. This feature addresses significant communication challenges identified by patients and providers through a user-needs assessment conducted in the first phase of our tool development [34]. The patient simply enters the date of discharge, reason for visit, and name of hospital, and an alert is sent to the provider so that they can contact the hospital and retrieve the discharge report.

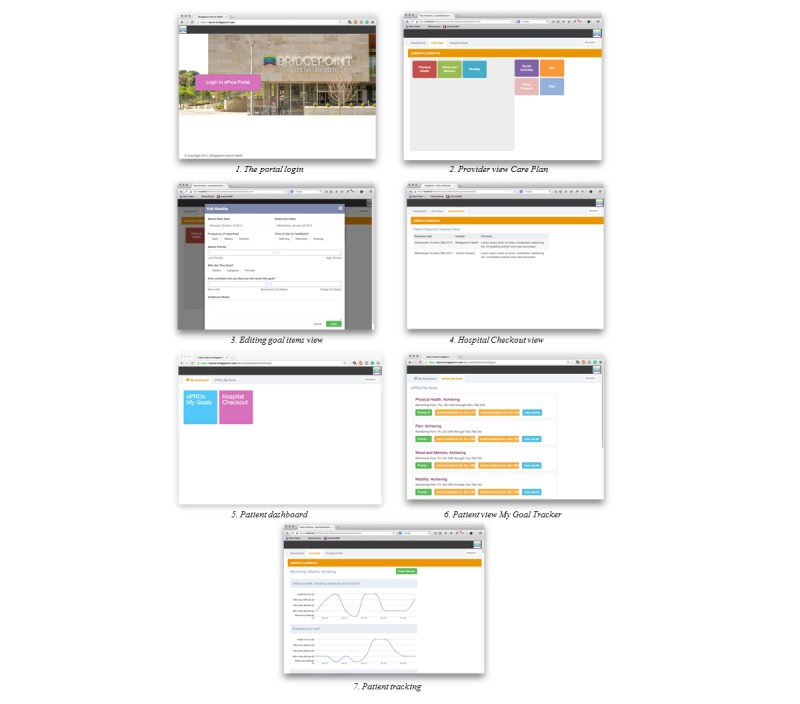

The Portal System

The provider portal allows providers to set up care plans with patients, which identify the goals patients will work toward. Once a goal is added to a patient’s Care Plan, it shows up in the patient’s “My Goal Tracker,” and the patient can then track progress toward the goal over time on their mobile device or on the patient portal. The provider is also able to view Hospital CheckOut alerts on the portal. The patient portal allows patients to view their progress over time; patients can also enter their monitoring data on the portal rather than on the mobile device if they choose. In general, the portal system was intended to be used more heavily by providers, to set up goal tracking and view patient tracking, with patients needing to access the portal only to view their own results; all data tracking activities for patients can be conducted on the mobile app. See Figure 2.

Figure 2.

Depiction of the ePRO portal.

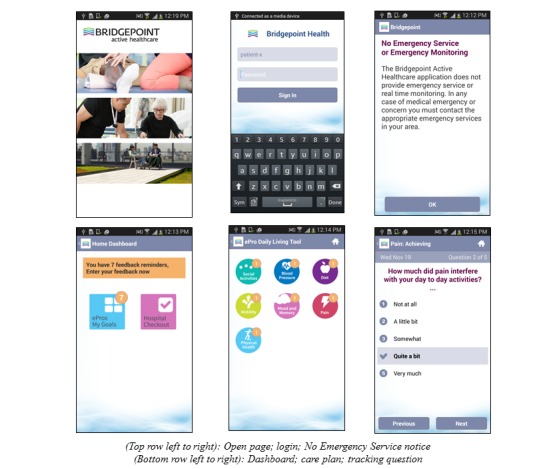

The Mobile App

The mobile app allows patients to track their goals and report hospital visits using the Hospital CheckOut feature. For the pilot study, patients were provided with locked Samsung Galaxy II Android phones with the ePRO app installed. Because the devices were locked, patients could use only the ePRO app on the phone. All devices used a fourth generation/long-term evolution (4G/LTE) connection, which allowed patients to update their data at any time and allowed QoC Health Inc. to push bug fixes as required. See Figure 3.

Figure 3.

Depiction of the ePRO mobile app.

Pilot

After training, patients worked with providers to collaboratively identify goals and then add them to their care plans. This discussion occurred during a 30-minute appointment, which is typical for allied health professionals working with patients with CCDD to identify goals and improve self-management. Patients then tracked their goals for a 4-week period, after which they returned to their provider to view and discuss their results and tracking. Some patients visited their providers more frequently during the study as part of their usual care. For instance, patients seeing the social worker typically visited once a week, and at each visit they would discuss goal tracking and modify treatment plans and goals as needed.

At the end of the 4-week pilot study, patients and providers were asked to participate in separate focus groups where they provided feedback on the feasibility and usability of the tool. The patient focus group was held on December 17, 2014 (n=5), and the provider focus group was held on January 22, 2015 (n=6). Three patients who completed the study but were unable to attend the December focus group were interviewed separately to gather their feedback on usage of the tool. QoC Health Inc. extracted system data on all patient-reported data, types of goals tracked, response counts, time on the system (for both patients and providers), and the number of times the system was accessed, and gave these to the research team in Excel format for analysis; the company also provided an overview report of system use.

Analysis Method

Basic descriptive statistics were calculated using the system data. Content analysis of data from the issue tracker system was conducted to identify errors reported by users. Focus groups and interviews were analyzed using thematic content analysis [40]. Common themes were identified by 2 different groups of authors: one for the patient data (AG, AIK, and CSG) and one for the provider focus group data (AG, PH, and CSG). The authors reviewed the transcripts independently and developed their own preliminary coding schemes. Multiple iterations of coding occurred until reviewers reached consensus on themes and developed a final coding scheme [40]. To organize themes according to the usability and feasibility framework summarized in Table 1, authors (AG and AK) identified coded themes that captured components of the feasibility framework for analysis. The qualitative data management software NVivo 9 [41] was used to manage the data after the final coding scheme was created. In NVivo 9, researchers code text by highlighting it and selecting the appropriate code from the coding scheme. Coding was conducted by authors AG, PH, and AIK, and NVivo coding reports, which list all data excerpts attached to a particular code, were generated and reviewed by the lead author along with AG, PH, and AIK to ensure content fit within the definition of the code.
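As a minimal sketch of one such descriptive statistic, the time-on-task summary reported for each monitoring protocol (Table 3) can be computed from exported completion times by setting aside sessions that were left open and finished much later. The function name `summarize_time_on_task`, the 120-minute cutoff for treating a session as abandoned, and the sample durations below are hypothetical assumptions for illustration; they are not the study's actual procedure or data.

```python
from statistics import mean

# Hypothetical cutoff (minutes): longer sessions are treated as surveys that
# were left open and finished later, so they are reported separately.
ABANDONED_CUTOFF_MIN = 120


def summarize_time_on_task(durations_min, cutoff=ABANDONED_CUTOFF_MIN):
    """Return (average, min, max, outliers) for one protocol's completion times."""
    kept = [d for d in durations_min if d <= cutoff]
    outliers = [d for d in durations_min if d > cutoff]
    if not kept:
        # Every session exceeded the cutoff; no typical time can be reported.
        return None, None, None, outliers
    return round(mean(kept), 1), min(kept), max(kept), outliers


# Illustrative, made-up durations in minutes (not study data).
print(summarize_time_on_task([1, 2, 3, 12, 1428]))  # (4.5, 1, 12, [1428])
```

Reporting outliers alongside, rather than folded into, the average keeps a single abandoned session from inflating a protocol's typical completion time.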

Four patient participants and all providers were willing to provide feedback on the findings (member checking). These individuals were sent packages that included an overview of the analysis and a form to provide feedback. No feedback was returned from patients (1 package was returned undelivered because the address provided by the participant was incorrect); 2 forms were returned by providers, both agreeing with the findings.

Results

Assessing Feasibility: Understanding Users and Tasks

Patient User and Tasks

Twelve individuals were identified by providers as potential participants, of whom 11 were successfully contacted by the research team. Participants were both medically and socially complex: they had 2 or more chronic conditions (reported conditions included diabetes, mental illness, joint conditions, heart conditions, kidney conditions, and chronic pain) and social vulnerabilities (eg, at least 2 of them lived in low-income housing; however, socioeconomic status was not explicitly captured). Two participants dropped out within the first few days due to health issues, and a third dropped out after 2 weeks due to increased anxiety associated with reporting on her health and using the mobile device. This patient already had high levels of anxiety and depression (including anxiety related to using her personal iPhone) before the study began. Her provider anticipated that this could occur but wanted to give the patient a chance to engage in something new as part of her ongoing treatment. Other studies have found that health monitoring may have adverse effects on mental health and well-being [42]. Five of the patients who completed the study participated in the focus group, and the other 3 provided feedback through interviews (2 by phone and 1 in person with CSG). See Table 2 for an overview of patient users and their use of the ePRO tool during the pilot.

Table 2.

Patient demographics, goal monitoring activities, and system usage.

| Characteristic | Category | Value |
|---|---|---|
| Patient participants | | N=11 |
| Age | Average | 58 years |
| | Min | 35 years |
| | Max | 72 years |
| Gender | Male | n=5 |
| | Female | n=6 |
| | Other (ie, transgender) | n=0 |
| Country of origin | Canada | n=5 |
| | United Kingdom | n=2 |
| | United States | n=1 |
| | Jamaica | n=1 |
| | Not reported | n=2 |
| Reported comfort with technology | Previous experience | n=6 |
| | Little experience | n=2 |
| | Not reported | n=3 |
| Attrition | | n=3 |
| Goals tracked | Physical health | n=6 |
| | Mood and memory | n=3 |
| | Pain | n=2 |
| | Diet | n=2 |
| | Mobility | n=1 |
| | Patients tracking 1 goal | n=4 |
| | Patients tracking 2 goals | n=4 |
| | Patients tracking 3 goals | n=1 |
| | Patients tracking 4 goals | n=1 |
| | One dropout patient did not set a goal | |
| System usage | Unique survey completions | 210 |
| | Questions responded | 1311 |
| | Portal access (only accessed by 4 patients) | Average 1.74; min 0; max 3 |

Patients reported that they manage their multiple chronic illnesses, acute issues that arise, and personal issues as part of their daily lives. Patients also reported using other apps to help manage their health with activity apps and tools such as FitBit, JawBone, and blood sugar monitoring apps.

Provider User and Tasks

Active ePRO providers differed in profession and in the role they played in the primary care practice; however, all engaged in goal-setting activities with patients before the pilot study began. Training and piloting made clear that most provider participants had strong competency with technology; only 1 participant identified as having more limited capability. In general, providers work in a busy practice where they typically get 30 minutes with each patient, whereas the physician gets closer to 10 minutes. Providers accessed the portal much more often than patients, on average 10.4 times over 4 weeks (min: 4, max: 15). This difference is understandable, as patients later reported forgetting that there was a portal or experiencing errors with it.

Providers reported that they were generally able to view patient data only just before a patient’s follow-up visit, to see a snapshot of the patient’s progress between appointments, as that fit more readily into their existing workflow. Some providers reported seeing patients weekly (as was the case for the social worker), and others saw patients on a more as-needed basis. Providers noted that they already engaged in goal-setting activities with their patients, often using the SMART goal framework (used initially in rehabilitative settings; see [43]). The practice also provides chronic disease management programs (eg, a smoking cessation program). All providers are required to chart all patient encounters in their electronic medical record (EMR).

Assessing Usability: Efficiency, Effectiveness, Learnability, and Satisfaction With the ePRO Tool

Patient Feedback

Efficiency

Although each protocol took little time to complete (on average 2-6 minutes; see Table 3), patient participants found that data entry became tedious, and at least 2 participants noted that they began completing questions for multiple days at one time, potentially compromising the validity of the self-report data. The ePRO system data revealed that 5 patients in fact completed questions for multiple days at one time, which accounted for between 8% and 46% of these participants’ answers. Participants also found that they forgot to do their monitoring when managing acute health issues or other life stresses. For example, one participant who was managing chronic pain and memory lapses had difficulty incorporating the device into her daily life.

Table 3.

Time to complete monitoring tasks for patients.

| Goals | No. of questions per survey/protocol | No. of protocol completions | Time to complete protocol (minutes) |
|---|---|---|---|
| Physical health | 6 required, 3 optional | 75 | Average: 3; min: 1; max: 12; 1 outlier: 1428ᵃ |
| Mood and memory | 5 required, 3 optional | 68 | Average: 5.3; min: 1; max: 57; 2 outliers: 1425 and 1180ᵃ |
| Pain | 5 required, 3 optional | 52 | Average: 5.6; min: 1; max: 31; 1 outlier: 1427ᵃ |
| Mobility | 23 required, 2 optional | 3 | Average: 6.3; min: 2; max: 16 |
| Diet | 2 required, 1 optional | 9 | Average: 1.7; min: 0; max: 3; 1 outlier: 683ᵃ |

ᵃIf patients left a survey partway through and completed it later, the system recorded the full elapsed time, resulting in outliers as high as 1428 minutes.

And I don’t think that the pain was the total cause of memory lapse but it had a great deal to do with it. I mean I was going through a great deal of stress. So when I started to add them all together, you know, the stress, the workload, the impending developments that were happening in my life in completely different areas, you know, it was just like I'm standing here trying to juggle 8 balls without having any of them touch the floor it has a tendency to make you forget about whatever else you're doing

P009
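The retrospective, multi-day entry found in the system data (5 patients, 8% to 46% of their answers) can be flagged by comparing the day each question was scheduled for with the day it was actually answered. The sketch below assumes a hypothetical export with one record per answer containing a patient identifier, the scheduled day, and the submission timestamp; the record layout, the function name `backfill_share`, and the sample values are illustrative assumptions, not the ePRO system's actual data format or the study's data.

```python
from collections import defaultdict
from datetime import date, datetime


def backfill_share(answers):
    """Percentage of each patient's answers submitted on a later calendar day
    than the day the question was scheduled for (ie, entered retrospectively)."""
    totals = defaultdict(int)
    late = defaultdict(int)
    for patient_id, scheduled_day, submitted_at in answers:
        totals[patient_id] += 1
        if submitted_at.date() > scheduled_day:
            late[patient_id] += 1
    return {pid: round(100 * late[pid] / totals[pid]) for pid in totals}


# Illustrative, made-up records: (patient ID, scheduled day, submission time).
answers = [
    ("P-A", date(2014, 11, 10), datetime(2014, 11, 10, 9, 0)),
    ("P-A", date(2014, 11, 11), datetime(2014, 11, 13, 20, 5)),
    ("P-A", date(2014, 11, 12), datetime(2014, 11, 13, 20, 6)),
    ("P-A", date(2014, 11, 13), datetime(2014, 11, 13, 20, 7)),
    ("P-B", date(2014, 11, 10), datetime(2014, 11, 10, 18, 30)),
]
print(backfill_share(answers))  # {'P-A': 50, 'P-B': 0}
```

Comparing calendar days rather than exact timestamps avoids flagging answers that were simply completed later on the same day they were scheduled.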

Patients made a number of suggestions for creating a more responsive tool that would complement their daily routines: reminders to input their data, feedback acknowledging completion of questions, flagging of results (as appropriate), and connections with other health monitoring apps (eg, FitBit, JawBone, and blood sugar monitoring apps). The most common suggestion was to connect their health goals in the ePRO tool with other apps they were using, to improve the efficiency of their self-monitoring efforts.

Like linking with FitBit, for example... Yes, I think that would be very useful. Because your…well, it depends on how you use FitBit of course but, you know, you are tracking a lot of indicators around activity, weight, etc…. if you're diabetic, is it to link your blood sugar to your performance? You know, all those kinds of things. I think it would have to have a specific reason for using it.

P002

Effectiveness

Some patients felt that using the tool had an early impact on their ability to self-manage and track their health goals. The tool helped to catalyze a sense of responsibility over their care and helped to improve patient-centered care delivery.

I knew why I felt better one week and why I did not feel better the next week.

P011

[my provider and I] were able to see …that [my goal] was not moving really, and to try to change it better…

P005

Patients were able to reflect on their progress of achieving their goals with providers and valued this interaction.

When we were talking about my goal, we were going through the charts, her giving her opinion. She was actually changing my opinion of how I should have answered that question. Well, that should be more interactive. I’d like to get her to do that sooner.

P012

However, patients experienced technical errors and had difficulty reviewing previously entered open-text data. In addition, questions often did not appear at the correct times or on the correct days, and patients were unable to locate some features of the tool. One patient and their provider were so frustrated by the errors that they nearly withdrew from the study. This patient frequently called both the provider and the research team to troubleshoot connectivity issues and to discuss aspects of the tool they did not like.

Learnability

Learning to use the tool was also an important challenge, as one-time training was not considered sufficient. Participants suggested that instructional videos highlighting the key steps for using the device might benefit visual learners and be easier to follow than written instructions.

Satisfaction

Once technical errors experienced in the first 2 weeks of the study were resolved, patients appreciated having a device to help them monitor their health goals and health behaviors.

I found it very easy to use. I found that once I determined when I was going to do it daily, that I did it. I always got it done

P002

However, some patients reported feeling isolated when using the mobile device and worried that the tool could become a replacement for in-person consultation.

I think the only concern that I had big time was that it might be used to replace people. And that would really sadden me. I think especially in this time and, you know, people do really need people-to-people care and contact. And especially when they’re sick

P003

To mitigate feelings of detachment from the health care provider, participants suggested that questions and goals more specific to each participant’s conditions, as well as personalized feedback from the provider or from peers at the clinic, would be helpful. Tool-enabled feedback, particularly from peers, was also viewed as a way to encourage progress toward goal attainment through shared experience.

Provider Feedback

Efficiency

The research team was unable to track the time it took for patients and providers to set up the goals and the monitoring regime. Providers would schedule a 30-minute session for this task. They reported that using the tool itself did not take the full 30 minutes, but the goal-setting conversation with the patient often required the entire visit.

After the initial visit, providers reported difficulty incorporating patient data into their workflow. Two problems stood out: (1) the additional charting time required to enter data into the provider’s EMR and (2) the difficulty of viewing data in manageable chunks.

It would be very helpful if I can see that reporting for a week. Because what I did was I chose people that I see weekly anyway. But realistically in terms of workload even one patient, I bet I would not look at it, you know, every day.

Provider 06

Providers also identified a desire to have the content of the tool better aligned with current programs and practices. As one provider noted:

You know, in terms of using that in the template, that kind of way to set up and frame a goal with them... Because we're working with SMART goals, they need to be that specific...

Provider 04

Finally, some providers felt that they might be liable for monitoring patients outside office hours, which would require additional time and resources. One provider described an experience during the pilot in which one of her two enrolled patients started “sinking fast,” a change she caught by reviewing monitoring data on the portal. However, she noted that this approach would not scale:

Oh my god, this guy is sinking fast, I better make the phone call. Which I did. But I can’t do that for all my patients all the time.

Provider 05

Providers and patients were told in training and on the app that continuous monitoring was not available (see the “No Emergency Monitoring” message in Figure 3). Even so, some providers reported a conflict between keeping the tool within their existing workflow and their desire to provide high-quality care to their patients. Over the course of the pilot, most providers reviewed data before the patient’s appointment rather than monitoring between visits. They saw greater potential for the data to serve as a synopsis of the patient’s progress.

Effectiveness

Some providers noted that having the tool in front of them helped to focus the conversation on patient goals.

To be able to talk about this with her, it was like, oh, thank God I can help set a goal.

Provider 06

However, providers had difficulty setting goals for some patients, because the goals available in the tool were not matched to specific health monitoring options. Providers were also concerned that the tool’s content was not comprehensive, since setting a specific goal and self-management are distinct elements of chronic disease management:

Well, because we’re diabetes educators, R2 and I, we set goals all the time. But the goals that we’re setting were not reflecting in the options that were available. So there was no blood sugar. You know, there's no goal for blood sugar. There was no goal for pain management. There was no… Like there weren’t specific… They weren’t specific enough. And because we’re working with SMART goals, they need to be that specific.

Provider 05

To improve effectiveness (and efficiency), providers wanted the tool to fit better with their existing workflows and programs. Examples included better support for creating SMART goals for patients, or monitoring protocols aligned with the goals of existing chronic disease management programs.

Providers experienced technical issues with the device and Web portal, including data not appearing on the portal after being entered on the mobile device, goals not showing up on patients' devices after being set up on the portal, and some challenges with login.

Learnability

Providers were unsure of where in the tool to enter free text and how to locate specific goals within the tool. In the focus group, providers noted forgetting about certain elements of the tool, suggesting the need for a manual or additional training sessions.

Satisfaction

Providers recognized the value of the tool in helping patients self-monitor their chronic conditions. Nevertheless, they felt the tool fell short in supporting both self-monitoring and goal attainment. For example, one provider described working toward improving a patient’s mood while using the tool to monitor the patient’s eating habits. The provider explained that the tool offered limited utility in supporting that goal.

The mood one might be a good pre and… Like I don’t know, it depends on what the goal is. But like maybe a pre and post, doing the study to get like a sense of where they are before and where they are after. But like again with like eating the piece of fruit, her answering those questions every day, I don’t know, maybe she gave you feedback saying that was really helpful, I don’t know. I didn't think it was all that helpful. At least it wasn't helpful from my perspective.

Provider 04

With regard to goal attainment in particular, providers were concerned that questions were not specific enough to match goals.

FITT Assessment of the ePRO Tool

The technology assessment (efficiency, effectiveness, learnability, and satisfaction with the tool) was compared with identified user needs and tasks. This analysis is summarized in Table 4 to provide an assessment of the feasibility and usability of the ePRO tool.

Table 4. Feasibility and usability assessment overview.

| | Users | Task | Technology |
|---|---|---|---|
| Patients | Multiple chronic conditions with moderate comfort with technology. Interested in monitoring goals related to physical health, mood and memory, pain, diet, and mobility. Primarily used the mobile device. | Daily routines: | The ePRO tool met user needs to monitor and track goals they wished to work on. However, it did not fit well with daily tasks, because questions were repetitive and not appropriately tailored to goal activities, and the tool was unable to connect with other monitoring activities in which patients were already engaged. |
| Providers | Multidisciplinary providers from a primary health care practice, with a moderate to high level of comfort with technology. Busy practice with limited time to monitor patients between visits. General interest in helping patients better manage | Workflows: | The ePRO tool was helpful in getting patients to discuss goals as a strategy to improve management. However, it did not fit well with provider workflows in terms of supporting SMART goal development and integration with the EMR. |

Discussion

Despite the many challenges, patients and providers used the tool regularly over a short period of time. The more detailed focus group feedback suggests why patients used the tool daily and providers weekly despite these challenges. Patients found the tool easy to use and noted its impact on their ability to self-manage and on patient-centered care delivery. The tool also had the potential to strengthen patients’ sense of responsibility over their care. Providers were equally positive about the potential of the tool to improve efficiency and patient-centeredness at the point of care, particularly if the suggested changes were implemented.

However, based on our assessment criteria, we judged the overall feasibility and usability of the tool to be low from both the patients’ and providers’ perspectives. Several issues stood out:

- concerns about the impact on patient-provider relationships;
- the repetitive nature of the questions, which led individuals to fill out multiple questions at one time;
- the system’s inability to connect with monitoring activities patients were already doing (eg, physical activity monitoring using other apps and devices).

Furthermore, the system was plagued by connectivity errors, which caused ongoing concern and frustration for both patient and provider users. Errors during usability testing are not uncommon and have been noted to affect usability [17]. Daily data entry may also be too frequent for most users. Collecting data unobtrusively (eg, by connecting to a FitBit or JawBone device) may not only meet identified user needs but could also reduce respondent fatigue.

Providers had a difficult time integrating the monitoring into their daily workflows. These providers were already setting goals with patients. However, the lack of integration with their EMR system, and content that did not follow their usual model of care, made the ePRO tool less usable and feasible for them. A notable concern was that the standardized monitoring protocols did not allow effective monitoring of patient goals, which points to a relatively poor task-technology fit. The PROMIS tools are valid and reliable measures, but generic outcome measures of this kind are less helpful for the day-to-day management of health-related goals for patients with CCDD. Valid and reliable patient-reported outcome measures may therefore be less useful for the ongoing management of patients with CCDD and for patient-centered care delivery [44]. They may be more useful for assessing whether goal attainment affects outcomes over the medium to long term (6-12 months).

Workflow integration is an important consideration, because introducing technologies does not merely augment work processes but reorganizes them [45]. Successfully coupling eHealth tools to health care provider workflows is challenging. Care practices are heterogeneous, and interdisciplinary teams such as our FHT divide roles and responsibilities among members. Together, these factors make it difficult to predict provider care needs accurately and consistently, especially when caring for patients with complex care needs [46-48]. However, there is demand for mobile health solutions that match provider workflows [49], and meaningful adoption is more likely when end users are involved in the design process [50].

Unfortunately, the tool did not support interdisciplinary practice as we had hoped. We had anticipated that the entire FHT (not just the active providers) could use the data to help manage patients. However, the tool was not used in this way during the pilot study. Instead, providers used the data in managing their own patients as part of their solo practice. Four weeks may have been too short, and longer-term use may provide more opportunities for the interdisciplinary team to use the data. The impact on interdisciplinary practice will be examined in our exploratory trial (4-month trial) and pragmatic trial (12-month trial) of the ePRO tool.

The low usability of the tool was a somewhat surprising finding given the extensive user-centered design approach used in the earlier phases of this study. Providers from the FHT, as well as patients and caregivers, provided detailed and ongoing feedback on the development of the tool (outlined in [36]) before the usability pilot. Even so, many of the usability and feasibility challenges experienced in this pilot were not identified in earlier stages of development, particularly those related to content. Typical user-centered design approaches may face challenges with particularly complex patient populations, such as children and those with cognitive impairment. User feedback may also not be useful in all phases of the design process, because users may lack the necessary design skills [51]. More likely, however, these challenges were simply not foreseen. This reinforces the importance of conducting small pilots in real-world settings, such as the one presented here, as part of a user-centered development approach.

Limitations

Quantitative usage data pulled from the ePRO system did not provide the level of detail we would have liked with regard to tool efficiency. We made a deliberate decision to pilot the tool in a real-world setting rather than test usability in a laboratory, as has been done in other usability tests [52]. Although this choice limited the efficiency data available, we believe that real-world usability testing is more valuable when developing tools for a diverse user group such as patients with CCDD and multidisciplinary providers. Future testing will seek to refine the data collected from the ePRO system so that time data can be extracted more effectively. Another limitation of our study was the attrition rate among patient participants. One challenge of working with patients with CCDD is that health issues may impede ongoing participation in research. Future piloting and evaluation work will seek to oversample to a greater extent to offset likely dropouts.

Although the number of test users in our study is low, Nielsen and Landauer’s [53] model of detecting usability problems suggests that most usability problems (75%) can be detected by 10 users, and 50% by as few as 5. They also offer a cost-benefit analysis of including additional users in testing. That analysis suggests that the optimal number of test subjects in medium-large projects is 6.7 when test users conduct a usability assessment. Our numbers are therefore in line with other similar usability studies [30,31,54-57] and should still allow us to capture most of the usability problems we are likely to see.
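Nielsen and Landauer’s model treats problem discovery as a series of independent trials. If each test user uncovers any given problem with probability L, then n users are expected to find a fraction 1 - (1 - L)^n of all problems.

The short Python sketch below is illustrative only. This study does not report a per-user detection rate L. Here L is an assumption, back-solved from the "50% of problems with 5 users" figure quoted above. Under the model, that same value of L also yields the "75% with 10 users" figure, which is why the two numbers are consistent.

```python
# Sketch of the Nielsen-Landauer problem-discovery model:
#   found(n) = N * (1 - (1 - L) ** n)
# N is the total number of usability problems, and L is the (assumed)
# probability that a single test user uncovers any given problem.


def proportion_found(n_users: int, rate: float) -> float:
    """Expected fraction of all usability problems found by n_users."""
    return 1.0 - (1.0 - rate) ** n_users


def rate_for(n_users: int, target: float) -> float:
    """Per-user detection rate L at which n_users find `target` of problems."""
    return 1.0 - (1.0 - target) ** (1.0 / n_users)


if __name__ == "__main__":
    # Assumption: back-solve L from the quoted figure (5 users find 50%);
    # this gives L of roughly 0.13, not a value measured in this study.
    L = rate_for(5, 0.50)
    for n in (5, 6, 10):
        print(f"{n:>2} users: {proportion_found(n, L):.0%} of problems")
```

The model assumes that every problem is equally detectable and that users find problems independently. These assumptions rarely hold exactly in a heterogeneous group such as patients with CCDD, so the percentages are estimates rather than guarantees.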

Finally, we were only able to conduct focus groups and interviews after the pilot study rather than during it. In a similar pilot, Verwey et al [17] interviewed each user directly after each consultation to capture usability information, an approach that may capture more useful data.

Conclusions and Future Works

Although our study suggests relatively low usability and feasibility of the ePRO tool, we are encouraged by its early impact on patient self-management and patient-centered care delivery. We are also encouraged by the generally positive response from patients and providers regarding the importance and potential of a tool that supports goal-oriented care delivery for patients with CCDD in primary health care settings. Findings from the pilot will be used to modify the ePRO tool to improve its feasibility and usability. Key considerations moving forward include:

- modifying goal monitoring protocols to allow tailoring to specific goals;
- targeting patients with CCDD who could benefit most from goal-oriented care, and prescreening for those who may be likely to experience anxiety related to participation;
- integrating the system into the EMR or with provider workflows;
- enabling integration with external apps used by patients (eg, JawBone or FitBit);
- providing ongoing training opportunities, potentially embedded within the tool (eg, through videos and walk-throughs).

Acknowledgments

The authors would like to acknowledge the Bridgepoint Family Health Team staff, health care providers, Clinical Lead, and Executive Director for their support in this work, and the patient participants who dedicated their time to this study. They would also like to acknowledge the tremendous work and support of QoC Health Inc. In particular, they thank Sarah Sharpe, Sue Bhella, and Chancellor Crawford for facilitating working groups and acting as the information technology leads supporting this work. Funding for this study was provided by the Ontario Ministry of Health and Long-Term Care (Health Services Research Fund #06034) through the Health System Performance Research Network at the University of Toronto. The views reflected in this study are those of the research team and not the funder.

Abbreviations

- CCDD

complex chronic disease and disability

- EMR

electronic medical record

- ePRO

electronic Patient Reported Outcome

- FITT

Fit between Individuals, Task, and Technology

- IT

information technology

- PROMIS

Patient-Reported Outcomes Measurement Information System

- SMART goals

Specific, Measurable, Achievable, Realistic, Timed goals

Footnotes

Conflicts of Interest: None declared.

References

- 1. Noël PH, Frueh BC, Larme AC, Pugh JA. Collaborative care needs and preferences of primary care patients with multimorbidity. Health Expect. 2005 Mar;8(1):54–63. doi: 10.1111/j.1369-7625.2004.00312.x.

- 2. Marengoni A, Angleman S, Melis R, Mangialasche F, Karp A, Garmen A, Meinow B, Fratiglioni L. Aging with multimorbidity: a systematic review of the literature. Ageing Res Rev. 2011 Sep;10(4):430–9. doi: 10.1016/j.arr.2011.03.003.

- 3. Bayliss EA, Bosworth HB, Noel PH, Wolff JL, Damush TM, Mciver L. Supporting self-management for patients with complex medical needs: recommendations of a working group. Chronic Illn. 2007 Jun;3(2):167–75. doi: 10.1177/1742395307081501.

- 4. Canadian Institute for Health Information, Primary Health Care. [2015-11-06]. Seniors and the Health Care System: What Is the Impact of Multiple Chronic Conditions? https://www.cihi.ca/en/info_phc_chronic_seniors_en.pdf

- 5. Boyd CM, Darer J, Boult C, Fried LP, Boult L, Wu AW. Clinical practice guidelines and quality of care for older patients with multiple comorbid diseases: implications for pay for performance. JAMA. 2005 Aug 10;294(6):716–24. doi: 10.1001/jama.294.6.716.

- 6. Upshur REG. Do clinical guidelines still make sense? No. Ann Fam Med. 2014;12(3):202–3. doi: 10.1370/afm.1654. http://www.annfammed.org/cgi/pmidlookup?view=long&pmid=24821890

- 7. Institute of Medicine (US) Committee on Quality of Health Care in America. Crossing the Quality Chasm: A New Health System for the 21st Century. National Academy of Sciences. 2001 Mar:1–8.

- 8. Boyd CM, Fortin M. Future of Multimorbidity Research: How Should Understanding of Multimorbidity Inform Health System Design? Public Health Reviews. 2010;32(2):451–474. http://www.publichealthreviews.eu/upload/pdf_files/8/PHR_32_2_Boyd.pdf

- 9. Upshur C, Weinreb L. A survey of primary care provider attitudes and behaviors regarding treatment of adult depression: what changes after a collaborative care intervention? Prim Care Companion J Clin Psychiatry. 2008;10(3):182–6. doi: 10.4088/pcc.v10n0301. http://europepmc.org/abstract/MED/18615167

- 10. Sinnott C, McHugh S, Browne J, Bradley C. GPs' perspectives on the management of patients with multimorbidity: systematic review and synthesis of qualitative research. BMJ Open. 2013;3(9):e003610. doi: 10.1136/bmjopen-2013-003610. http://bmjopen.bmj.com/cgi/pmidlookup?view=long&pmid=24038011

- 11. Reuben DB, Tinetti ME. Goal-oriented patient care--an alternative health outcomes paradigm. N Engl J Med. 2012 Mar 1;366(9):777–9. doi: 10.1056/NEJMp1113631.

- 12. Kuluski K, Gill A, Naganathan G, Upshur R, Jaakkimainen RL, Wodchis WP. A qualitative descriptive study on the alignment of care goals between older persons with multi-morbidities, their family physicians and informal caregivers. BMC Fam Pract. 2013;14:133. doi: 10.1186/1471-2296-14-133. http://www.biomedcentral.com/1471-2296/14/133

- 13. Ekdahl AW, Hellström I, Andersson L, Friedrichsen M. Too complex and time-consuming to fit in! Physicians' experiences of elderly patients and their participation in medical decision making: a grounded theory study. BMJ Open. 2012;2(3):e001063. doi: 10.1136/bmjopen-2012-001063. http://bmjopen.bmj.com/cgi/pmidlookup?view=long&pmid=22654092

- 14. McLean S, Sheikh A. Does telehealthcare offer a patient-centred way forward for the community-based management of long-term respiratory disease? Prim Care Respir J. 2009 Sep;18(3):125–6. doi: 10.3132/pcrj.2009.00006.

- 15. Appelboom G, Yang AH, Christophe BR, Bruce EM, Slomian J, Bruyère O, Bruce SS, Zacharia BE, Reginster J, Connolly ES. The promise of wearable activity sensors to define patient recovery. J Clin Neurosci. 2014 Jul;21(7):1089–93. doi: 10.1016/j.jocn.2013.12.003.

- 16. Zheng H, Nugent C, McCullagh P, Huang Y, Zhang S, Burns W, Davies R, Black N, Wright P, Mawson S, Eccleston C, Hawley M, Mountain G. Smart self management: assistive technology to support people with chronic disease. J Telemed Telecare. 2010;16(4):224–7. doi: 10.1258/jtt.2010.004017.

- 17. Verwey R, van der Weegen S, Spreeuwenberg M, Tange H, van der Weijden T, de Witte L. Technology combined with a counseling protocol to stimulate physical activity of chronically ill patients in primary care. Stud Health Technol Inform. 2014;201:264–70.

- 18. Ricciardi L, Mostashari F, Murphy J, Daniel JG, Siminerio EP. A national action plan to support consumer engagement via e-health. Health Aff (Millwood). 2013 Feb;32(2):376–84. doi: 10.1377/hlthaff.2012.1216.

- 19. Strecher V. Internet methods for delivering behavioral and health-related interventions (eHealth). Annu Rev Clin Psychol. 2007;3:53–76. doi: 10.1146/annurev.clinpsy.3.022806.091428.

- 20. van der Weegen S, Verwey R, Spreeuwenberg M, Tange H, van der Weijden T, de Witte L. The development of a mobile monitoring and feedback tool to stimulate physical activity of people with a chronic disease in primary care: a user-centered design. JMIR Mhealth Uhealth. 2013;1(2):e8. doi: 10.2196/mhealth.2526. http://mhealth.jmir.org/2013/2/e8/

- 21. Becker S, Miron-Shatz T, Schumacher N, Krocza J, Diamantidis C, Albrecht U. mHealth 2.0: Experiences, Possibilities, and Perspectives. JMIR Mhealth Uhealth. 2014;2(2):e24. doi: 10.2196/mhealth.3328. http://mhealth.jmir.org/2014/2/e24/

- 22. QoC Health. [2016-01-19]. Who we are. http://qochealth.com/who-we-are/

- 23. Devi K, Sen A, Hemachandran K. A working Framework for the User-Centered Design Approach and a Survey of the available Methods. International Journal of Scientific and Research Publications. 2012 Apr;2(4):1–8.

- 24. Vermeulen J, Neyens JC, Spreeuwenberg MD, van Rossum E, Sipers W, Habets H, Hewson DJ, de Witte LP. User-centered development and testing of a monitoring system that provides feedback regarding physical functioning to elderly people. Patient Prefer Adherence. 2013 Aug;7:843–54. doi: 10.2147/PPA.S45897.

- 25. Sánchez-Morillo D, Crespo M, León A, Crespo Foix LF. A novel multimodal tool for telemonitoring patients with COPD. Inform Health Soc Care. 2015 Jan;40(1):1–22. doi: 10.3109/17538157.2013.872114.

- 26. Tsiknakis M, Kouroubali A. Organizational factors affecting successful adoption of innovative eHealth services: a case study employing the FITT framework. Int J Med Inform. 2009 Jan;78(1):39–52. doi: 10.1016/j.ijmedinf.2008.07.001.

- 27. Sheehan B, Lee Y, Rodriguez M, Tiase V, Schnall R. A comparison of usability factors of four mobile devices for accessing healthcare information by adolescents. Appl Clin Inform. 2012;3(4):356–66. doi: 10.4338/ACI-2012-06-RA-0021. http://europepmc.org/abstract/MED/23227134

- 28. Yang C, Linas B, Kirk G, Bollinger R, Chang L, Chander G, Siconolfi D, Braxton S, Rudolph A, Latkin C. Feasibility and Acceptability of Smartphone-Based Ecological Momentary Assessment of Alcohol Use Among African American Men Who Have Sex With Men in Baltimore. JMIR Mhealth Uhealth. 2015;3(2):e67. doi: 10.2196/mhealth.4344. http://mhealth.jmir.org/2015/2/e67/

- 29. Brown W, Yen P, Rojas M, Schnall R. Assessment of the Health IT Usability Evaluation Model (Health-ITUEM) for evaluating mobile health (mHealth) technology. J Biomed Inform. 2013 Dec;46(6):1080–7. doi: 10.1016/j.jbi.2013.08.001. http://linkinghub.elsevier.com/retrieve/pii/S1532-0464(13)00116-0

- 30. Al Ayubi SU, Parmanto B, Branch R, Ding D. A Persuasive and Social mHealth Application for Physical Activity: A Usability and Feasibility Study. JMIR Mhealth Uhealth. 2014;2(2):e25. doi: 10.2196/mhealth.2902. http://mhealth.jmir.org/2014/2/e25/

- 31. Bakhshi-Raiez F, de Keizer NF, Cornet R, Dorrepaal M, Dongelmans D, Jaspers MWM. A usability evaluation of a SNOMED CT based compositional interface terminology for intensive care. Int J Med Inform. 2012 May;81(5):351–62. doi: 10.1016/j.ijmedinf.2011.09.010.

- 32. Jia G, Zhou J, Yang P, Lin C, Cao X, Hu H, Ning G. Integration of user centered design in the development of health monitoring system for elderly. Conf Proc IEEE Eng Med Biol Soc. 2013;2013:1748–51. doi: 10.1109/EMBC.2013.6609858.

- 33. Tjora A, Tran T, Faxvaag A. Privacy vs usability: a qualitative exploration of patients' experiences with secure Internet communication with their general practitioner. J Med Internet Res. 2005;7(2):e15. doi: 10.2196/jmir.7.2.e15. http://www.jmir.org/2005/2/e15/

- 34. Steele GC, Miller D, Kuluski K, Cott C. Tying eHealth Tools to Patient Needs: Exploring the Use of eHealth for Community-Dwelling Patients With Complex Chronic Disease and Disability. JMIR Res Protoc. 2014 Nov;3(4):e67. doi: 10.2196/resprot.3500. http://www.researchprotocols.org/2014/4/e67/

- 35. Glazier R, Zagorski B, Rayner J. Institute for Clinical Evaluative Sciences. 2012. [2015-11-06]. Comparison of Primary Care Models in Ontario by Demographics, Case Mix and Emergency Department Use, 2008/09 to 2009/10. http://www.ices.on.ca/Publications/Atlases-and-Reports/2012/Comparison-of-Primary-Care-Models

- 36. Steele GC, Khan A, Kuluski K, McKillop I, Sharpe S, Bierman A, Lyons R, Cott C. Improving Patient Experience and Primary Care Quality for Patients With Complex Chronic Disease Using the Electronic Patient-Reported Outcomes Tool: Adopting Qualitative Methods Into a User-Centered Design Approach. JMIR Res Protoc. 2016;5(1):e28. doi: 10.2196/resprot.5204. http://www.researchprotocols.org/2016/1/e28/

- 37. Cella D, Riley W, Stone A, Rothrock N, Reeve B, Yount S, Amtmann D, Bode R, Buysse D, Choi S, Cook K, Devellis R, DeWalt D, Fries JF, Gershon R, Hahn EA, Lai J, Pilkonis P, Revicki D, Rose M, Weinfurt K, Hays R. The Patient-Reported Outcomes Measurement Information System (PROMIS) developed and tested its first wave of adult self-reported health outcome item banks: 2005-2008. J Clin Epidemiol. 2010 Nov;63(11):1179–94. doi: 10.1016/j.jclinepi.2010.04.011. http://europepmc.org/abstract/MED/20685078

- 38. Frei A, Svarin A, Steurer-Stey C, Puhan MA. Self-efficacy instruments for patients with chronic diseases suffer from methodological limitations--a systematic review. Health Qual Life Outcomes. 2009;7:86. doi: 10.1186/1477-7525-7-86. http://hqlo.biomedcentral.com/articles/10.1186/1477-7525-7-86

- 39. Broderick JE, Schneider S, Junghaenel DU, Schwartz JE, Stone AA. Validity and reliability of patient-reported outcomes measurement information system instruments in osteoarthritis. Arthritis Care Res (Hoboken). 2013 Oct;65(10):1625–33. doi: 10.1002/acr.22025.

- 40. Green J, Thorogood N. Qualitative Methods for Health Research (Introducing Qualitative Methods series). 2nd edition. London, UK: SAGE Publications Ltd; 2009.

- 41. QSR International Pty Ltd. 2012. [2016-04-25]. NVivo qualitative data analysis software, Version 10. http://www.qsrinternational.com/product

- 42. O'Kane MJ, Bunting B, Copeland M, Coates VE. Efficacy of self monitoring of blood glucose in patients with newly diagnosed type 2 diabetes (ESMON study): randomised controlled trial. BMJ. 2008 May 24;336(7654):1174–7. doi: 10.1136/bmj.39534.571644.BE. http://www.bmj.com/cgi/pmidlookup?view=long&pmid=18420662

- 43. Bovend'Eerdt TJH, Botell RE, Wade DT. Writing SMART rehabilitation goals and achieving goal attainment scaling: a practical guide. Clin Rehabil. 2009 Apr;23(4):352–61. doi: 10.1177/0269215508101741.

- 44. Miller D, Steele Gray C, Kuluski K, Cott C. Patient-Centered Care and Patient-Reported Measures: Let's Look Before We Leap. Patient. 2015 Oct;8(4):293–9. doi: 10.1007/s40271-014-0095-7. http://europepmc.org/abstract/MED/25354873

- 45. Medina-Mora R, Winograd T, Flores R, Flores F. The action workflow approach to workflow management technology. Proceedings of the 1992 ACM conference on Computer-supported cooperative work; November 01-04, 1992; Toronto, Ontario, Canada. 1992. pp. 281–88. https://www.lri.fr/~mbl/ENS/CSCW/material/papers/MedinaMora-CSCW92.pdf

- 46. Schaink A, Kuluski K, Lyons R, Fortin M, Jadad A, Upshur R, Wodchis WP. A scoping review and thematic classification of patient complexity: Offering a unifying framework. Journal of Comorbidity. 2012;2(1):1–9. doi: 10.15256/joc.2012.2.15.

- 47. Pappas C, Coscia E, Dodero G, Gianuzzi V, Earney M. A mobile e-health system based on workflow automation tools. Proceedings of the 15th IEEE Symposium on Computer-Based Medical Systems; 2002; Maribor, Slovenia. 2002. Jun 07, pp. 271–276. http://doi.ieeecomputersociety.org/10.1109/CBMS.2002.1011388

- 48. Tsasis P, Bains J. Management of complex chronic disease: facing the challenges in the Canadian health-care system. Health Serv Manage Res. 2008 Nov;21(4):228–35. doi: 10.1258/hsmr.2008.008001.

- 49. Yu P, Yu H. Lessons learned from the practice of mobile health application development. Proceedings of the 28th IEEE Annual International Computer Software and Applications Conference; vol 2; 2004; Hong Kong. 2004. Sep 28, pp. 58–59. http://www.computer.org/csdl/proceedings/compsac/2004/2209/02/220920058.pdf

- 50. Abras C, Maloney-Krichmar D, Preece J. User-centered design. In: Bainbridge W, editor. Encyclopedia of Human-Computer Interaction. Thousand Oaks, California: Sage Publications; 2004.

- 51. Marti P, Bannon L. Exploring user-centred design in practice: some caveats. Know Techn Pol. 2009;22:7–15. doi: 10.1007/s12130-009-9062-3.

- 52. Kaufman D, Starren J, Patel V, Morin P, Hilliman C, Pevzner J, Weinstock RS, Goland R, Shea S. A cognitive framework for understanding barriers to the productive use of a diabetes home telemedicine system. AMIA Annu Symp Proc. 2003:356–60. http://europepmc.org/abstract/MED/14728194

- 53. Nielsen J, Landauer T. A mathematical model of the finding of usability problems. Proceedings of the INTERACT'93 and CHI'93 Conference on Human Factors in Computing Systems; 1993; Amsterdam, Netherlands. ACM; 1993. Apr 24-29, pp. 206–213. http://people.cs.uct.ac.za/~dnunez/reading/papers/p206-nielsen.pdf

- 54. Connelly K, Siek KA, Chaudry B, Jones J, Astroth K, Welch JL. An offline mobile nutrition monitoring intervention for varying-literacy patients receiving hemodialysis: a pilot study examining usage and usability. J Am Med Inform Assoc. 2012;19(5):705–12. doi: 10.1136/amiajnl-2011-000732. http://jamia.oxfordjournals.org/cgi/pmidlookup?view=long&pmid=22582206

- 55. Knight-Agarwal C, Davis DL, Williams L, Davey R, Cox R, Clarke A. Development and Pilot Testing of the Eating4two Mobile Phone App to Monitor Gestational Weight Gain. JMIR Mhealth Uhealth. 2015;3(2):e44. doi: 10.2196/mhealth.4071. http://mhealth.jmir.org/2015/2/e44/

- 56. Zmily A, Mowafi Y, Mashal E. Study of the usability of spaced retrieval exercise using mobile devices for Alzheimer's disease rehabilitation. JMIR Mhealth Uhealth. 2014;2(3):e31. doi: 10.2196/mhealth.3136. http://mhealth.jmir.org/2014/3/e31

- 57. Mirkovic J, Kaufman DR, Ruland CM. Supporting cancer patients in illness management: usability evaluation of a mobile app. JMIR Mhealth Uhealth. 2014;2(3):e33. doi: 10.2196/mhealth.3359. http://mhealth.jmir.org/2014/3/e33/