Abstract

Cortical neurons integrate thousands of synaptic inputs in their dendrites in highly nonlinear ways. It is unknown how these dendritic nonlinearities in individual cells contribute to computations at the level of neural circuits. Here, we show that dendritic nonlinearities are critical for the efficient integration of synaptic inputs in circuits performing analog computations with spiking neurons. We developed a theory that formalizes how a neuron's dendritic nonlinearity that is optimal for integrating synaptic inputs depends on the statistics of its presynaptic activity patterns. Based on their in vivo presynaptic population statistics (firing rates, membrane potential fluctuations, and correlations due to ensemble dynamics), our theory accurately predicted the responses of two different types of cortical pyramidal cells to patterned stimulation by two-photon glutamate uncaging. These results reveal a new computational principle underlying dendritic integration in cortical neurons by suggesting a functional link between cellular and systems-level properties of cortical circuits.

DOI: http://dx.doi.org/10.7554/eLife.10056.001

Research Organism: Human, Mouse, Rat

eLife digest

Imagine that you are in the habit of checking three different weather forecasts each day, and then one day in early September the first forecast suddenly predicts snow. If you live in an area where it doesn’t normally snow in September, your initial reaction is likely to be surprise. However, you will not be quite so surprised to see a prediction of snow in the second forecast, and by the third forecast you will hardly be surprised at all.

In these three cases, you have responded to the same piece of information in a different way. In mathematics, this type of response is referred to as “nonlinear” because the output (varying degrees of surprise) is not directly proportional to the input (identical predictions of snow). In the case of the weather forecasts, the source of the nonlinearity was the fact that the three predictions were not truly independent. Instead, they corresponded with one another, or “correlated”, because all three depended on the weather itself.

In the brain, a single neuron can receive thousands of inputs from other cells. These are received via junctions called synapses that form between the cells. In many cases, the synapses form on the receiving neuron’s dendrites – the short branches that protrude from its cell body. Each dendrite can receive signals from hundreds of other neurons, and must combine these inputs to produce a single neuronal response. How dendrites do this is not clear.

Ujfalussy et al. have now developed a computational model that predicts the optimal response of dendrites to complex and realistic inputs from other neurons. The model shows that when dendrites receive inputs from neurons that independently respond to different stimuli, the optimal response is for the dendrites to average the inputs. This is a form of linear processing. By contrast, when the inputs are correlated – for example, because they come from neurons responding to the same stimulus – the optimal response is nonlinear processing. In this and other cases, the optimal response predicted by the model is similar to the response observed in real dendrites.

The model also makes a number of testable predictions; for example, that neurons with correlated activities will tend to form clusters of synapses close together on the dendrites of a target neuron, whereas neurons with unrelated activities will tend to form synapses that are further apart. Somewhat unexpectedly, Ujfalussy et al. show that compensating for input correlations accounts for almost all the nonlinearities that can be found in real neurons' dendrites – at least in response to relatively simple input patterns. Thus, it remains to be shown whether nonlinear dendritic responses to more complex input patterns can also be explained by this single principle. Further studies are also required to understand how different plasticity mechanisms enable neurons to achieve this close match between input correlations and dendritic processing.

Introduction

The dendritic tree of a cortical neuron performs a highly nonlinear transformation on the thousands of inputs it receives from other neurons, sometimes resulting in a markedly sublinear (Longordo et al., 2013) and often in strongly superlinear integration of synaptic inputs (Losonczy and Magee, 2006; Nevian et al., 2007; Branco and Häusser, 2011; Makara and Magee, 2013). These nonlinearities have been traditionally studied from the perspective of single-neuron computations, using a few well-controlled synaptic stimuli, revealing a remarkable repertoire of arithmetic operations that the dendrites of cortical neurons carry out (Poirazi and Mel, 2001; London and Häusser, 2005; Branco et al., 2010) including additive, multiplicative and divisive ways of combining individual synaptic inputs in the cell’s response (Silver, 2010). More recently, the role of nonlinear dendritic integration in actively shaping responses of single neurons under in vivo conditions has been demonstrated in several cortical areas including the hippocampus (Grienberger et al., 2014), as well as visual (Smith et al., 2013) and somatosensory cortices (Xu et al., 2012; Lavzin et al., 2012; Palmer et al., 2014).

However, while many of the basic biophysical mechanisms underlying these nonlinearities are well understood (Stuart et al., 2007), it has proven a daunting task to include all these mechanisms in larger scale network models to understand their interplay at the level of the circuit (Herz et al., 2006). Conversely, studies of cortical computation and dynamics have largely ignored the complex and highly nonlinear information processing capabilities of the dendritic tree and concentrated on circuit-level computations emerging from interactions between point-like neurons with single, somatic nonlinearities (Hopfield, 1984; Seung and Sompolinsky, 1993; Gerstner and Kistler, 2002; Vogels et al., 2011). Therefore, it is unknown how dendritic nonlinearities in individual cells contribute to computations at the level of a neural circuit.

A limitation of most theories of nonlinear dendritic integration is that they focus on highly simplified input regimes (Mel et al., 1998; Poirazi et al., 2003; Archie and Mel, 2000; Poirazi and Mel, 2001; Ujfalussy et al., 2009), essentially requiring both the inputs and the output of a cell to have stationary firing rates. This approach thus ignores the effects and consequences of temporal variations in neural activities at the time scale of inter-spike intervals characteristic of in vivo states in cortical populations (Crochet et al., 2011; Haider et al., 2013). In contrast, we propose an approach which is specifically centered on these naturally occurring statistical patterns – in analogy to the principle of ‘adaptation to natural input statistics’ which has been highly successful in accounting for the input-output relationships of cells in a number of sensory areas at the systems level (Simoncelli and Olshausen, 2001). We pursued this principle in understanding the integrative properties of individual cortical neurons, for which the relevant statistical input patterns are those characterising the spatio-temporal dynamics of their presynaptic spike trains. Thus, rather than modelling specific biophysical properties of a neuron directly, our goal was to predict the phenomenological input integration properties that result from those biophysical properties and are matched to the statistics of the presynaptic activities.

Our theory is based on the observation that cortical neurons mainly communicate by action potentials, which are temporally punctate all-or-none events. In contrast, the computations cortical circuits perform are commonly assumed to involve the transformations of analog activities varying continuously in time, such as firing rates or membrane potentials (Rumelhart et al., 1986; Hopfield, 1984; Dayan and Abbott, 2001; Archie and Mel, 2000; London et al., 2010). This implies a fundamental bottleneck in cortical computations: the discrete and stochastic firing of spikes by neurons conveys only a limited amount of information about their rapidly fluctuating activities (Pfister et al., 2010; Sengupta et al., 2014). Formalising the implications of this bottleneck mathematically reveals that the robust operation of a circuit requires its neurons to integrate their inputs in highly nonlinear ways that specifically depend on two complementary factors: the computation performed by the neuron and the long-term statistics of the inputs it receives from its presynaptic partners.

To critically evaluate our theory, we first illustrate qualitatively the nonlinearities that most efficiently overcome the spiking bottleneck for different classes of presynaptic correlation structures. Next, to provide biophysical insight, we demonstrate that the form of optimal input integration for these presynaptic correlations can be efficiently approximated by a canonical, biophysically-motivated model of dendritic integration. Finally, we test the prediction that cortical dendrites are optimally tuned to their input statistics in in vitro experiments. For this, we use available in vivo data to characterize the presynaptic population activity of two different types of cortical pyramidal cells. Based on these input statistics, our theory accurately predicts the integrative properties of the postsynaptic dendrites measured in two-photon glutamate uncaging experiments. We also show that NMDA receptor activation is necessary for dendritic integration to approximate the optimal response. These results suggest a novel functional role for dendritic nonlinearities in allowing postsynaptic neurons to integrate their richly structured synaptic inputs near-optimally, thus making a key contribution to dynamically unfolding cortical computations.

Results

Suppose that every day you check your three favorite websites for the weather forecast. On a September day, the first website forecasts snow which you find hard to believe as it is highly unusual in your area – so you dismiss it as the forecaster’s mistake. However, when you read a similar forecast on the second site, you become convinced that snow is coming, and by the time the third site brings you the same news you are hardly surprised at all. Thus, even though all three sources conveyed the same information (snow), they had different impact on you – in other words, their cumulative effect was nonlinear. This nonlinearity was due to the fact that the information you get from these sites tends to be correlated as they are all related to a common cause, the actual weather. Below we argue that the same fundamental statistical principle, that correlated information sources require nonlinear integration, accounts for the dendritic nonlinearities of cortical pyramidal neurons.

Overcoming the spiking bottleneck in circuit computations

To introduce our theory, we consider a postsynaptic neuron computing some function, $\tilde{f}$, of the activity of its presynaptic partners, $\mathbf{u}$ (Figure 1A, top):

$$\frac{\mathrm{d}v}{\mathrm{d}t} = \tilde{f}(\mathbf{u}) \tag{1}$$

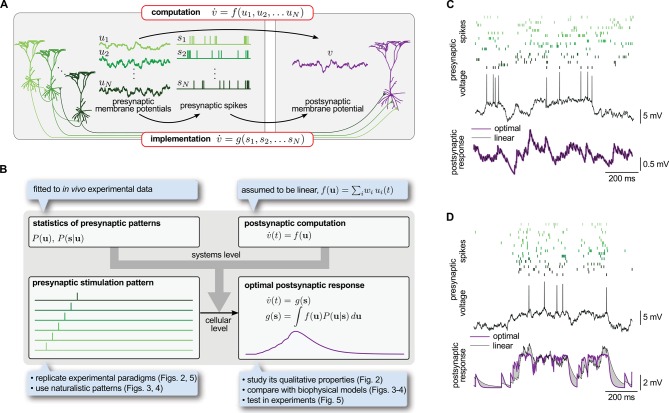

Figure 1. Spike-based implementation of analogue computations in neural circuits.

(A) Computation (top) is formalized as a mapping, $\tilde{f}$, from presynaptic activities, $\mathbf{u}$ (left), to the postsynaptic activity, $v$ (right). As neurons communicate with spikes, the implementation (bottom) of any computation must be based on the spikes the presynaptic neurons emit, $\mathbf{s}$ (middle). Optimal input integration in the postsynaptic cells requires that the output of the implemented function, $f$, is close to that of $\tilde{f}$. (B) The logic and plan of the paper. Grey box in the center shows theoretical framework, blue boxes around it show steps necessary to apply the framework to neural data. To compute the transformation from stimulation patterns (bottom left) to the optimal response (bottom right) we assumed linear computation (top right) and specified the presynaptic statistics based on cortical population activity patterns observed in vivo (top left). To demonstrate the validity of the approach, we studied the fundamental qualitative properties of the optimal response (Figure 2), compared it to biophysical models (Figures 3–4) and tested it in in vitro experiments (Figure 5). (C) The optimal postsynaptic response (purple line, bottom) linearly integrates spikes from different presynaptic neurons (top: rasters in shades of green; middle: membrane potential of one presynaptic cell) if their activities are statistically independent. (D) Optimal input integration becomes nonlinear (purple line, bottom) if the activities of the presynaptic neurons are correlated (rasters in shades of green, top), even though the long-term statistics and spiking nonlinearity of individual neurons remain the same as in (C). In this case, the best linear response (black line, bottom) is unable to follow the fluctuations in the signal.

Figure 1—figure supplement 1. An example of supralinear input integration with firing rate-based rather than membrane potential-based computations.

Figure 1—figure supplement 2. The range of total dendritic inputs in vitro and in vivo.

Figure 1—figure supplement 3. Nonlinear computation.

where $\mathrm{d}v/\mathrm{d}t$ is the resultant temporal change of the activity of the postsynaptic neuron. We chose the postsynaptic and presynaptic activities, $v$ and $\mathbf{u}$, to be analog variables, rather than for example digital spike trains, in line with the vast bulk of theories of network computations (Hopfield, 1984; Dayan and Abbott, 2001; Pouget et al., 2003) and experimental results suggesting analog coding in the cortex (London et al., 2010; Shadlen and Newsome, 1998). In particular, we considered these variables to correspond to the coarse-grained (low-pass filtered) somatic membrane potentials of neurons (in particular, excluding the action potentials themselves, as often reported in experimental data; Carandini and Ferster, 2000), although the theory can equally be formalized in terms of instantaneous firing rates (Materials and methods, Figure 1—figure supplement 1).

The standard description of neural circuit dynamics in Equation 1 hides an important informational bottleneck intrinsic to the operation of cortical circuits. While according to Equation 1, the postsynaptic neuron’s analog activity, $v$, is required to depend directly on the analog activities of its presynaptic partners, $\mathbf{u}$, in reality it only accesses these presynaptic activities through the spikes the presynaptic population transmits, $\mathbf{s}$, incurring a substantial loss of information (Alenda et al., 2010; Sengupta et al., 2014). Therefore, the function a neuron actually implements on its inputs, $f$, can only depend directly on the presynaptic spikes, not the underlying activities (Figure 1A, bottom):

$$\frac{\mathrm{d}v}{\mathrm{d}t} = f(\mathbf{s}) \tag{2}$$

Importantly, while $\tilde{f}$ is dictated by the computational function of the circuit, the actual transformation of the synaptic input to the postsynaptic response, expressed by $f$, is determined by the morphological and biophysical properties of the cell. (For these purposes, we regard the presynaptic side of synapses, transforming presynaptic spike trains to synaptic transmission events, as conceptually being part of the postsynaptic cell’s function.) How, then, can the neuron integrate the incoming presynaptic spikes, as formalized by $f$, such that the resulting postsynaptic response best matches the required computational function, $\tilde{f}$, thereby alleviating the fundamental informational bottleneck of spiking-based communication?

Determining the best $f$ is nontrivial because the same presynaptic spike train may be the result of many different underlying presynaptic activities (Paninski, 2006), each potentially implying a different output of the computational function. This ambiguity is formalized mathematically as a posterior probability distribution, $P(\mathbf{u} \mid \mathbf{s})$, expressing the probability that the analog activities of the presynaptic cells might currently be $\mathbf{u}$ given their spike trains, $\mathbf{s}$ (Pfister et al., 2010; Ujfalussy et al., 2011). The optimal response, i.e. the $f$ that minimizes the average squared error relative to $\tilde{f}$, is the expectation of $\tilde{f}$ under the posterior:

$$f(\mathbf{s}) = \int \tilde{f}(\mathbf{u}) \, P(\mathbf{u} \mid \mathbf{s}) \, \mathrm{d}\mathbf{u} \tag{3}$$

Crucially, the expression for the posterior, given by Bayes’ rule, is:

$$P(\mathbf{u} \mid \mathbf{s}) \propto P(\mathbf{u}) \, P(\mathbf{s} \mid \mathbf{u}) \tag{4}$$
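As a rough numerical illustration of the posterior expectation combined with Bayes' rule, the sketch below evaluates the posterior mean of a single scalar presynaptic activity on a grid. Everything specific in it is an assumption made for illustration and is not taken from the paper: the standard-normal prior, the exponential rate function `rate_fn`, the Poisson spike count in a fixed window, and the identity computation (the quantity estimated is the activity itself).

```python
import math

def posterior_mean(n_spikes, grid, prior, rate_fn, window):
    """Posterior mean of a scalar activity u, given n_spikes counted in `window` seconds."""
    weights = []
    for u, p in zip(grid, prior):
        expected = rate_fn(u) * window                           # expected spike count at this u
        likelihood = math.exp(-expected) * expected ** n_spikes  # Poisson, up to the 1/n! constant
        weights.append(p * likelihood)                           # Bayes' rule, unnormalized posterior
    norm = sum(weights)
    # Expectation of u under the normalized posterior
    return sum(u * w for u, w in zip(grid, weights)) / norm

# Hypothetical setup (assumed, not from the paper)
grid = [k * 0.01 for k in range(-600, 601)]      # candidate values of u
prior = [math.exp(-0.5 * u * u) for u in grid]   # unnormalized standard-normal prior
rate_fn = lambda u: 5.0 * math.exp(u)            # firing rate in Hz, assumed exponential

estimates = [posterior_mean(n, grid, prior, rate_fn, window=0.1) for n in range(4)]
print(estimates)
```

In this toy setting the estimate falls below the prior mean when no spike is observed and rises with every additional spike, which is the sense in which the spike train only partially constrains the underlying activity.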

Note that while Equations 3–4 do not reveal directly the specific biophysical properties the postsynaptic cell should have, they tell us phenomenologically what signal integration properties should result from its biophysical properties. In particular, they make it explicit that the optimal $f$ depends fundamentally on two factors (Figure 1B, top):

1. the function that needs to be computed, $\tilde{f}$, and

2. the statistics of presynaptic activities: $P(\mathbf{u})$, the prior probability distribution characterizing the long-run statistics of multi-neural activity patterns in the presynaptic ensemble, and the likelihood $P(\mathbf{s} \mid \mathbf{u})$, expressing the potentially probabilistic relationship between analog activities (e.g. somatic membrane potential trajectories) and emitted spike trains.

In the following, we show that the outcome of the integration of presynaptic spike trains in cortical neurons approximates very closely the optimal response, and that dendritic nonlinearities are crucial for achieving this near-optimality. For this, 1) we make an assumption about the computational function of the postsynaptic cell, $\tilde{f}$ (Figure 1B, top right); 2) we constrain presynaptic statistics, the prior $P(\mathbf{u})$ and the likelihood $P(\mathbf{s} \mid \mathbf{u})$, by in vivo data about cortical population activity patterns (Figure 1B, top left); and with these 3) we compute the optimal response they jointly determine for various stimulation patterns (Figure 1B, bottom left and right).

Optimal input integration is nonlinear

To specify our model, we considered the case when the computational function, $\tilde{f}$, is itself linear. Although networks with purely linear dynamics can perform non-trivial computations already (Dayan and Abbott, 2001; Hennequin et al., 2014), in the general case, we do expect $\tilde{f}$ to be nonlinear, e.g. sigmoidal (Hopfield, 1984). Nevertheless, in typical electrophysiological experiments only a small fraction of the full dynamic range of a neuron’s total input is stimulated (Figure 1—figure supplement 2), and so we approximate the computational function, $\tilde{f}$, as being linear on this limited input range without loss of generality. (See Figure 1—figure supplement 3 for the application of the theory to the case of nonlinear $\tilde{f}$.) Yet, as we show below, for physiologically realistic statistics of presynaptic activity patterns, the optimal response combines input spike trains in highly nonlinear ways even in the case of linear computation, predicting experimentally characterized nonlinearities in dendritic input integration. In particular, second- and higher-order prior presynaptic correlations, represented by the prior $P(\mathbf{u})$, will have a major role in determining the form of the corresponding optimal response. The likelihood, $P(\mathbf{s} \mid \mathbf{u})$, also influences the optimal response, but only in its quantitative details, as it does not involve correlations across neurons: each neuron’s firing is independent from the others’, given its own somatic membrane potential (Materials and methods).

Previous suggestions for how postsynaptic neurons achieve reliable computation despite the substantial ambiguity about the individual presynaptic activities relied on the linear averaging of inputs arriving from a sufficiently large pool of presynaptic neurons (Dayan and Abbott, 2001; Pfister et al., 2010). However, linear averaging is only guaranteed to produce the correct output, as dictated by Equations 3–4, if the activities of presynaptic neurons are statistically independent under the prior distribution, i.e. $P(\mathbf{u}) = \prod_i P(u_i)$, where $u_i$ is the activity of presynaptic neuron $i$ (Materials and methods). In contrast, the membrane potential (Crochet et al., 2011) and spiking (Cohen and Kohn, 2011) of cortical neural populations often show complex patterns of correlations, which include both ‘spatial’ (cross-correlations between different neurons) and temporal components (auto-correlations, i.e. the correlation of the activity of the same cell with itself at different moments in time). Thus, in this more general case, we expect the optimal response to involve a nonlinear integration of spike trains. Temporal correlations alone do not require nonlinear dendritic integration across synapses, only local nonlinearities within each synapse, as brought about e.g. by short-term synaptic plasticity (Pfister et al., 2010); spatial correlations, in contrast, require the nonlinear integration of spikes emitted by different presynaptic neurons.
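The link between independence and linear pooling can be made explicit with a short derivation. It is a sketch under stated assumptions rather than the derivation given in Materials and methods: the computation is taken to be linear, $\tilde{f}(\mathbf{u}) = \sum_i w_i u_i$, with weights $w_i$ introduced here only for illustration; the prior is assumed to factorize across neurons; and each neuron's spiking is assumed to depend only on its own activity, $P(\mathbf{s} \mid \mathbf{u}) = \prod_i P(s_i \mid u_i)$, where $s_i$ denotes the spike train of presynaptic neuron $i$.

$$
\begin{aligned}
P(\mathbf{u} \mid \mathbf{s}) &\propto \prod_i P(u_i)\, P(s_i \mid u_i)
\;\;\Rightarrow\;\; P(\mathbf{u} \mid \mathbf{s}) = \prod_i P(u_i \mid s_i), \\
f(\mathbf{s}) &= \int \Big( \sum_i w_i u_i \Big) \prod_j P(u_j \mid s_j)\, \mathrm{d}\mathbf{u}
= \sum_i w_i \int u_i \, P(u_i \mid s_i)\, \mathrm{d}u_i .
\end{aligned}
$$

Each term in the final sum depends on the spike train of a single presynaptic neuron, so the optimal response adds contributions across synapses, although each contribution may still be a nonlinear function of that neuron's own spiking history. When the prior does not factorize, the posterior does not factorize either, and this decomposition is no longer available.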

To illustrate that presynaptic spatial correlations require nonlinear integration across synapses, we compared the best linear response to a given presynaptic spike pattern with the optimal response (Equation 3, as approximated by Equation 23) for two different input statistics that differed only in the correlations between the presynaptic cells but not in the activity dynamics or spiking of individual neurons (temporal correlations). To compute the postsynaptic response, we assumed that dendritic integration in the postsynaptic cell was linear but, in order to dissect the role of dendritic integration across synapses from the effects of nonlinearities in individual synapses, we allowed spikes from the same presynaptic neuron still to be integrated nonlinearly (Pfister et al., 2010). In the first case (Figure 1C), when the presynaptic neurons were independent, the best linear response was identical to the optimal response. However, if presynaptic neurons became correlated, the optimal response became nonlinear and the best linear response was unable to accurately follow the fluctuations in the input (Figure 1D).

Thus, inputs from presynaptic neurons whose activity tends to be correlated need to be nonlinearly integrated, while inputs from uncorrelated sources need to be integrated linearly. This could be naturally achieved in the same dendritic tree by clustering synapses of correlated inputs to efficiently engage dendritic nonlinearities, while distributing the synapses of uncorrelated inputs on different dendritic branches (Larkum and Nevian, 2008). Crucially, for correlated inputs it is also necessary that the dendritic nonlinearities have just the appropriate characteristics for the particular pattern of correlations in presynaptic activities.

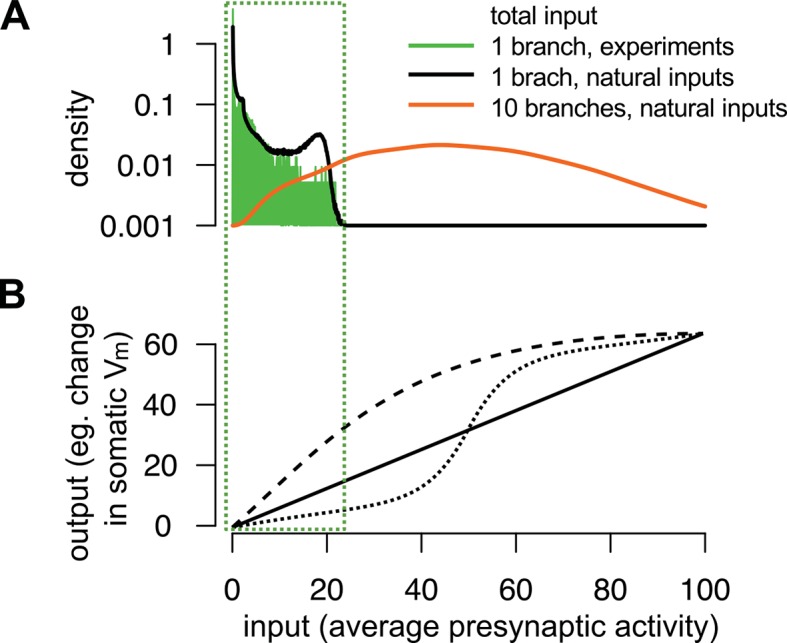

The form of the optimal nonlinearity depends on the statistics of presynaptic inputs

In order to systematically study the nonlinearities in the optimal response in the face of naturalistic input patterns, we derived and analyzed its behavior for a flexible class of richly structured, correlated inputs. Our statistical model for presynaptic activities, specifying the parametric forms of the prior, $P(\mathbf{u})$, and the likelihood, $P(\mathbf{s} \mid \mathbf{u})$ (Materials and methods and Figure 2—figure supplement 1), was able to generate a variety of multi-neural activity patterns resembling the statistical properties described in in vitro and in vivo multielectrode recordings of neuronal population activities (Figure 2A and D show two representative examples). Once we had specified the statistical model of presynaptic activities, it uniquely determined the optimal response to any given input pattern (Equations 3–4). Thus, we used the same statistical model in two fundamentally different ways: first, to generate “naturalistic” in vivo-like patterns of presynaptic membrane potential traces and spike trains; and second, to compute the optimal response pattern to any stimulation pattern, be it “naturalistic” or parametrically varying “artificial” as used in typical in vitro experiments.

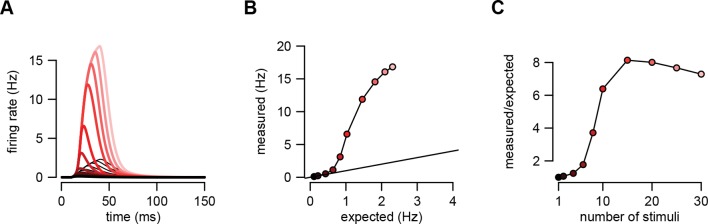

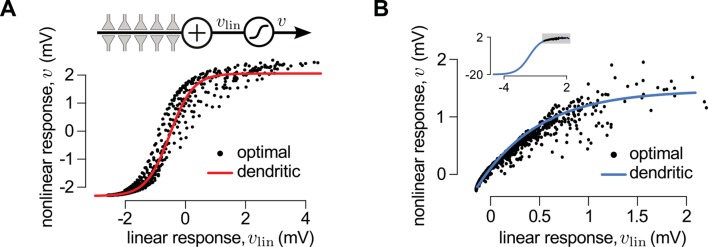

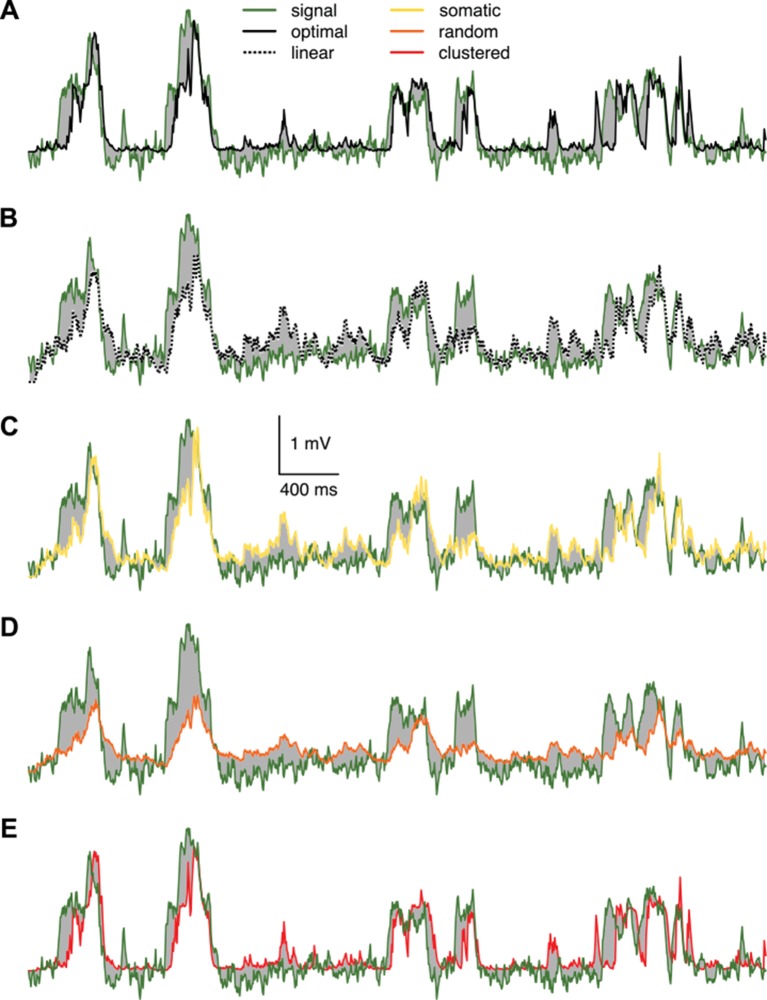

Figure 2. Nonlinearities in the optimal response.

(A–C) Second order correlations between presynaptic neurons (A) imply sublinear integration (B–C). (A) Membrane potentials and spikes of two presynaptic neurons with correlated membrane potential fluctuations. (B) The optimal response (solid line) to a single spike (left) and to a train of six presynaptic spikes (right, green colors correspond to different presynaptic cells, two of which are shown in A) when the long-run statistics of presynaptic neurons are like those shown in (A). Shaded areas highlight how response magnitudes to a single spike from the same presynaptic neuron differ in the two cases: the response to the sixth spike in the train (right, light blue shading) is smaller than the response to a solitary spike (left, gray shading) implying sublinear integration. Dashed line shows linear response. (C) Response amplitudes for 1–12 input spikes versus linear expectations. (D–F) Same as (A–C) but for presynaptic neurons exhibiting synchronized switches between a quiescent and an active state, introducing higher order correlations between the neurons (D, bottom). In this case, the optimal response shows supralinear integration (E–F).

Figure 2—figure supplement 1. Definition of the statistical model describing presynaptic activities and illustration of the inference process in the model.

The optimal response determined by this statistical model, for essentially any setting of parameters, was inherently nonlinear because the additional effect of a presynaptic spike depended on the pattern of spikes that had been previously received from the presynaptic population. Temporal correlations in the presynaptic population caused the optimal response to depend on the spiking history of the same cell (Pfister et al., 2010), while crucially, the additional presence of spatial correlations introduced a dependency on the past spikes of other cells. Thus, the integrated effect of multiple spikes could not be computed as a simple linear sum of their individual effects in isolation. Specifically, a spike that was consistent with the information already gained from recent presynaptic spikes had only a small effect on the response (Figure 2B). Conversely, a spike that was unexpected based on the recent spiking history caused a larger change (Figure 2E).

As could be anticipated based on Equations 3–4, whether a spike counted as expected or unexpected relative to recently received spikes, and hence whether it had a small or large postsynaptic effect, depended on the long-run prior distribution of presynaptic activities, $P(\mathbf{u})$. As a result, the same pattern of presynaptic spikes led to qualitatively different responses under different prior distributions. In particular, sublinear integration was optimal when presynaptic activities exhibited Gaussian random walks and thus they did not contain statistical dependencies beyond second order correlations (Figure 2A–C), as seen in the retina and cortical cultures (Schneidman et al., 2006). This was because with the activities of presynaptic neurons being positively correlated, successive spikes conveyed progressively less information about the presynaptic signal resulting in sublinear integration (Figure 2C) and the strength of the sublinearity depended on the magnitude of correlations (Ujfalussy and Lengyel, 2011). In contrast, supralinear integration was optimal when the presynaptic population exhibited coordinated switches between distinct states associated with large differences in the activity levels compared to activity-fluctuations within each state (Figure 2D–F). These switches led to higher order statistical dependencies as seen in the cortex in vivo, either due to population-wide modulation by cortical state (Gentet et al., 2010; Crochet et al., 2011), or due to stimulus-driven activation of particular cell ensembles (Harris et al., 2003; Ohiorhenuan et al., 2010; Miller et al., 2014). In this case, while observing a few spikes was consistent with random membrane potential fluctuations within the quiescent state, thus only warranting a small response, further spikes suggested that the presynaptic population was in the active state now and thus the response should be larger, leading to supralinear integration (Figure 2F).
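The contrast between the two priors can be reproduced in a deliberately simplified, static toy setting. The sketch below is not the dynamical model of the paper: it assumes a single time window in which the pooled presynaptic spike count is Poisson, and it compares (i) a shared Gaussian activity driving an exponential rate with (ii) a population that is either quiescent or active. All function names and parameter values are hypothetical.

```python
import math

def gaussian_case(n, pooled_count=1.0, beta=1.0, sigma=1.0):
    """Posterior mean of a shared Gaussian activity u after n pooled spikes (grid integration)."""
    num = den = 0.0
    for k in range(-800, 801):
        u = k * 0.01
        # log prior + log Poisson likelihood with expected count pooled_count * exp(beta * u)
        log_w = -0.5 * (u / sigma) ** 2 - pooled_count * math.exp(beta * u) + beta * n * u
        w = math.exp(log_w)
        num += u * w
        den += w
    return num / den

def switching_case(n, p_up=0.1, count_down=0.5, count_up=5.0, u_down=0.0, u_up=1.0):
    """Posterior mean activity when the population is either quiescent or active."""
    # Prior log-odds of the active state plus the Poisson log-likelihood ratio for n spikes
    log_odds = (math.log(p_up / (1.0 - p_up))
                - (count_up - count_down)
                + n * math.log(count_up / count_down))
    p_active = 1.0 / (1.0 + math.exp(-log_odds))
    return u_down + (u_up - u_down) * p_active

def responses(estimator, max_spikes):
    """Response amplitudes relative to the no-spike baseline, for 1..max_spikes spikes."""
    baseline = estimator(0)
    return [estimator(n) - baseline for n in range(1, max_spikes + 1)]

sub = responses(gaussian_case, 6)
supra = responses(switching_case, 3)

# Ratio of each response to its linear expectation (n times the single-spike response):
# values below 1 indicate sublinear, values above 1 supralinear integration.
print([r / ((i + 1) * sub[0]) for i, r in enumerate(sub)])
print([r / ((i + 1) * supra[0]) for i, r in enumerate(supra)])
```

In this toy version the Gaussian case falls progressively below the linear expectation, while in the switching case the first few spikes exceed it because they shift the belief from the quiescent towards the active state.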

Note that nonlinearities in the optimal postsynaptic response did not simply compensate for the nonlinearities in the presynaptic spike generation process, as captured by the likelihood $P(\mathbf{s} \mid \mathbf{u})$, but critically depended on the presynaptic correlations, as captured by the prior $P(\mathbf{u})$. Indeed, in Figures 1C, D and 2A–F, the same spiking nonlinearity was used and yet very different input integration was required depending on the form of the presynaptic statistics: linear integration for uncorrelated inputs (Figure 1C) and nonlinear integration for correlated inputs (Figure 1D), with sub- or supralinear integration being optimal depending on whether only second order (Figure 2A–C) or also higher order correlations were present in the presynaptic population (Figure 2D–F). Moreover, optimal input integration remained nonlinear even if the postsynaptic neuron computed a function of the presynaptic firing rates (rather than membrane potentials) which were linearly related to spikes (Figure 1—figure supplement 1).

Nonlinear dendrites can approximate the optimal response

The nonlinear input integration seen in the optimal response strongly resembled dendritic nonlinearities. Indeed, the basic biophysical mechanisms present in dendrites naturally yield nonlinearities that are qualitatively similar to those of the optimal response: purely passive properties lead to sublinear integration (Koch, 1999), whereas locally generated dendritic spikes endow dendrites with strong supralinearities (Nevian et al., 2007; Branco and Häusser, 2011). However, the full mathematical implementation of the optimal response is excessively complex (Materials and methods) and thus, there is unlikely to be a one-to-one mapping between the variables necessary for implementing it and the biophysical quantities available in dendrites. Therefore, we sought to establish a formal proof that dendritic-like dynamics can implement, even if approximately, the optimal response. For this, we considered two limiting cases of our statistical model of presynaptic activities, the prior $P(\mathbf{u})$ and the likelihood $P(\mathbf{s} \mid \mathbf{u})$, and compared the properties of the corresponding optimal response to a well-established simplified model of nonlinear dendritic integration, using a combination of analytical and numerical techniques.

First, we considered a limiting case in which the statistics of a large presynaptic population were strongly dominated by the simultaneous switching of presynaptic neurons between a quiescent and an active state (as shown in Figure 2D). In this limiting case we were able to show mathematically (see Materials and methods) that a simple, biophysically-motivated, canonical model of nonlinear dendritic integration (Poirazi and Mel, 2001) is able to produce responses that are near-identical to the optimal response for any sequence of presynaptic spikes (Figure 3A, see also Figure 4C). In this simple dendritic model (Figure 3A, inset; Equations 24–25), inputs within a branch are integrated linearly and the local dendritic response is then obtained by transforming this linear combination through a sigmoidal nonlinearity, which is a hallmark of supralinear behavior in dendrites (Poirazi et al., 2003b).
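The two-stage structure of such a branch model (linear summation followed by a sigmoid) can be sketched as follows. Equations 24–25 are not reproduced in this section, so the exponential synaptic kernel, the logistic form of the sigmoid, and all parameter names and values below are assumptions chosen for illustration only.

```python
import math

def linear_input(t, spike_times, weights, tau=0.02):
    """Linearly integrated input at time t: weighted sum of exponentially decaying kernels."""
    total = 0.0
    for t_spike, w in zip(spike_times, weights):
        if t_spike <= t:                              # only past spikes contribute
            total += w * math.exp(-(t - t_spike) / tau)
    return total

def branch_response(x, threshold=2.0, slope=0.5, amplitude=1.0):
    """Local dendritic response: logistic sigmoid of the linearly integrated input x."""
    return amplitude / (1.0 + math.exp(-(x - threshold) / slope))

# Two synchronous unit-weight inputs versus a single one (hypothetical values)
one = branch_response(linear_input(0.0, [0.0], [1.0]))
two = branch_response(linear_input(0.0, [0.0, 0.0], [1.0, 1.0]))
rest = branch_response(0.0)

# Below the threshold the sigmoid is convex, so two inputs evoke more than twice
# the response to one input, measured relative to rest.
print((two - rest) / (one - rest))
```

With the threshold placed above the typical input, the branch operates on the accelerating part of the sigmoid and behaves supralinearly; shifting the threshold down moves the operating range onto the saturating part of the same curve.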

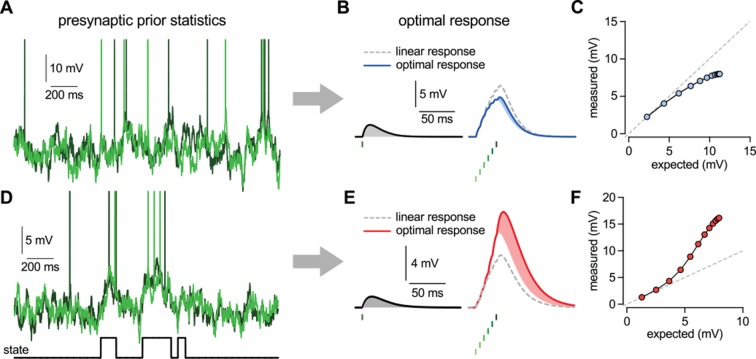

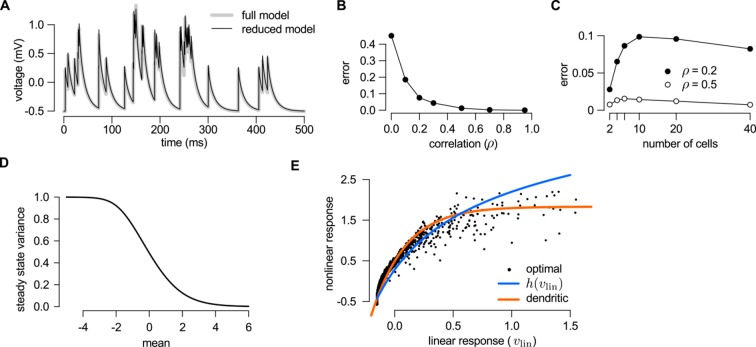

Figure 3. A canonical model of dendritic integration approximates the optimal response.

(A) The optimal response (black) and the response of a canonical model of a dendritic branch (inset) with a sigmoidal nonlinearity (red, Equation 25) as functions of the linearly integrated input (inset, Equation 24), when the presynaptic population exhibits synchronized switches between a quiescent and an active state, as in Figure 2D. Black dots show optimal vs. linear postsynaptic response sampled at regular ms intervals during a s-long simulation of the presynaptic spike trains. (B) Optimal response (black) approximated by the saturating part of the sigmoidal nonlinearity (blue) when the presynaptic population is fully characterized by second-order correlations, as in Figure 2A. Inset shows the same data on a larger scale to reveal the sigmoidal nature of the underlying nonlinearity (gray box indicates area shown in the main plot).

Figure 3—figure supplement 1. Reducing the optimal response with second order correlations to a canonical model of dendritic integration.

Figure 3—figure supplement 2. Adaptation without parameter change.

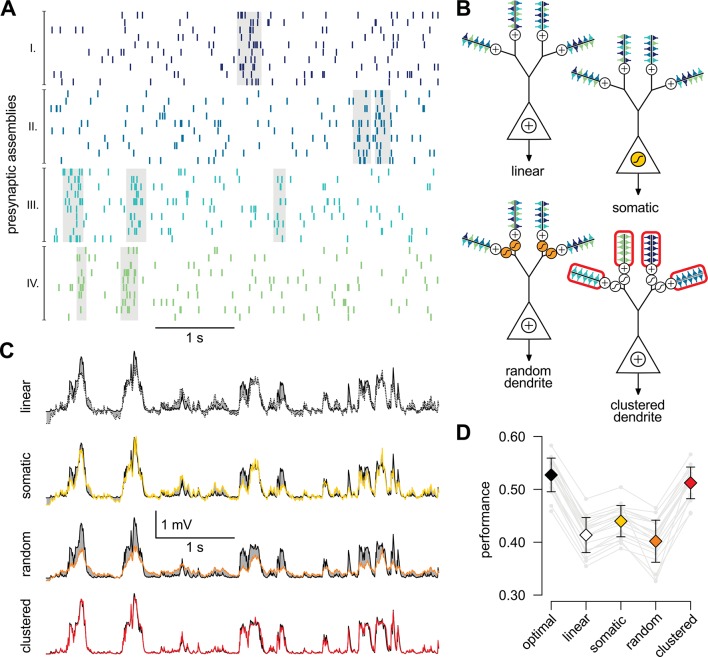

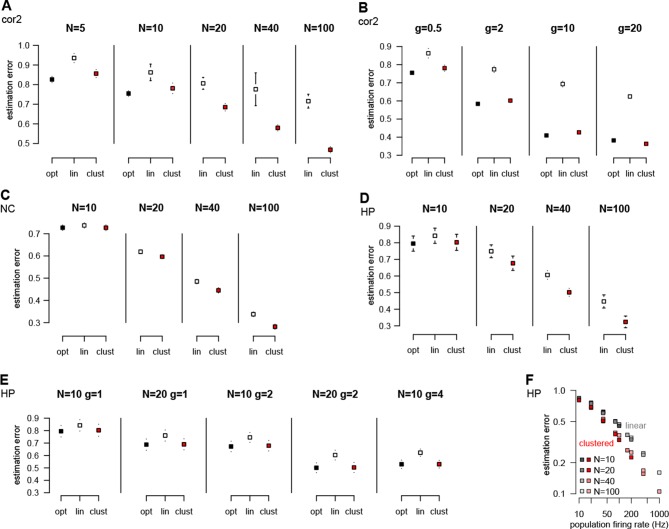

Figure 4. A simple nonlinear dendritic model closely approximates the optimal response for realistic input patterns.

(A) Presynaptic spiking activity matching the statistics observed during hippocampal sharp waves. Spike trains (rows) belonging to four different assemblies are shown (colors); gray shading indicates assembly activations. (B) Different variants of the dendritic model; parts colored in yellow, orange, and red highlight the differences between successive variants (see text for details). (C) Estimating the mean of the presynaptic membrane potentials based on the observed spiking pattern (shown in A) by the optimal response (black) compared to the linear (dotted), somatic (yellow), random (orange) and clustered (red) models. (D) Performance of the four model variants compared to that of the optimal response. Gray lines show individual runs, squares show mean ± s.d. Performance is normalized such that is obtained by predicting only the time-average of the signal, and means perfect prediction attainable only with infinitely high presynaptic rates (Materials and methods).

Figure 4—figure supplement 1. Responses of different variants of the dendritic model compared to the true signal.

Figure 4—figure supplement 2. Performance of different neuron models over a wide range of input statistics.

Second, we considered another limiting case in which the statistics of the presynaptic population were fully characterized by second-order correlations (as shown in Figure 2A). In this case, the same type of dendritic model, but with a sublinear input-output mapping, was able to approximate the optimal response very closely. Although a closed-form solution for the optimal nonlinear mapping could not be obtained in this case, it could be shown to be sublinear (Appendix), and was well approximated by a sigmoidal nonlinearity parametrized to be dominantly saturating (Figure 3B and Figure 3—figure supplement 1).

We also noted that it was the same type of sigmoidal nonlinearity which could implement supralinear and sublinear integration depending on the input regime (low background, synchronous spikes: supralinear; high background, asynchronous spikes: sublinear integration, compare Figure 3A and B, inset). This suggests that dendritic integration may adapt to systematic changes in presynaptic statistics, such as those brought about by transitioning between the desynchronized and synchronized states of the neocortex, or sharp waves and theta activity in the hippocampus, without having to change the parameters of its nonlinearity (Borst et al., 2005) (Figure 3—figure supplement 2). Indeed, Gasparini and Magee (2006) demonstrated that dendritic integration in hippocampal pyramidal cells was supralinear when inputs were highly synchronized (as they are during sharp waves), while integration was linear if the input was asynchronous (such as during theta activity).
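A small numerical sketch shows how one fixed sigmoid can yield both regimes. It assumes a plain logistic function and treats the background level as the operating point on that curve; the function names, the unit input weight `w`, and the two background values are illustrative choices, not parameters from the paper.

```python
import math

def sigmoid(u):
    """Logistic sigmoid with its midpoint at u = 0 (an assumed form)."""
    return 1.0 / (1.0 + math.exp(-u))

def summation_ratio(background, w=1.0):
    """Response to two coincident inputs divided by the sum of the two
    individual responses, all measured relative to the background level.
    A ratio above 1 means supralinear, below 1 sublinear summation."""
    base = sigmoid(background)
    single = sigmoid(background + w) - base
    pair = sigmoid(background + 2.0 * w) - base
    return pair / (2.0 * single)

low = summation_ratio(background=-4.0)   # operating point far below the midpoint
high = summation_ratio(background=1.0)   # operating point above the midpoint
```

With these values `low` exceeds 1 and `high` falls below 1, although the sigmoid itself is unchanged: only the operating point set by the background input differs.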

Nonlinear dendrites are computationally advantageous

While the foregoing analyses proved that dendritic-like nonlinearities can closely approximate the optimal response in certain limiting cases, they do not directly address whether such nonlinearities in input integration are crucial for attaining near-optimal computational performance with more realistic input statistics, or whether simpler forms of input integration could achieve similar computational power. To study this, we considered a scenario in which the presynaptic population consisted of four ensembles. Neurons belonging to each ensemble underwent synchronized switches in their activity levels, which were independent across the four ensembles. In addition, there were independent fluctuations in the activity of individual presynaptic neurons that were comparable in magnitude to those caused by the synchronized activity switches (Figure 4A). We then assessed the performance of four different variants of a simple dendritic model relative to that of the optimal response (Figure 4B): a model with linear dendrites and soma; a model in which only the soma was nonlinear; and two models in which nonlinearities resided in the dendrites, with either random or clustered connectivity between the presynaptic assemblies and the dendritic branches.
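The structural difference between these variants can be illustrated with a toy example. The sketch below is not the model used in the paper: it uses static input values instead of spike trains, a logistic branch nonlinearity with an arbitrary threshold, eight synapses per assembly, and a deterministic interleaved wiring as a stand-in for random connectivity so that the result is reproducible. All names are hypothetical.

```python
import math

def sigmoid(u, threshold=5.0):
    """Logistic nonlinearity; the threshold value is an arbitrary choice."""
    return 1.0 / (1.0 + math.exp(-(u - threshold)))

def linear_model(x):
    # Linear dendrites and soma: a plain sum of all inputs.
    return sum(x)

def somatic_model(x):
    # All inputs are summed first; a single nonlinearity acts at the soma.
    return sigmoid(sum(x))

def dendritic_model(x, branch_of, n_branches=4):
    # Inputs are summed within each branch, passed through the branch
    # nonlinearity, and the branch outputs are then summed at the soma.
    branch_sums = [0.0] * n_branches
    for xi, b in zip(x, branch_of):
        branch_sums[b] += xi
    return sum(sigmoid(u) for u in branch_sums)

n_assemblies, per_assembly = 4, 8
assembly_of = [i // per_assembly for i in range(n_assemblies * per_assembly)]
clustered = assembly_of                                  # one assembly per branch
interleaved = [i % n_assemblies for i in range(len(assembly_of))]  # spread over branches

# Input pattern: only assembly 0 is active.
x = [1.0 if a == 0 else 0.0 for a in assembly_of]
r_clustered = dendritic_model(x, clustered)
r_interleaved = dendritic_model(x, interleaved)
```

In this toy setting the clustered wiring lets the active assembly drive a single branch past its threshold, whereas the interleaved wiring spreads the same input over four branches that each stay below threshold, so `r_clustered` is larger than `r_interleaved` for an identical input pattern.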

We quantified the performance of each of the models based on how closely their output approximated the linear average of the analog presynaptic activities giving rise to the spike trains they were integrating (Figure 4—figure supplement 1, Materials and methods). For a fair comparison, we tuned the parameters of each variant of the dendritic model to obtain the best possible performance with it (Figure 4C). The model with nonlinear dendrites and clustered connectivity had near-optimal cross-validated performance (Figure 4D) while all other models performed significantly worse (n = 20 runs, t = 51, t = 35, t = 20, and P10, P10, P10; respectively from left to right as shown in Figure 4D). This remained true when we varied the number and firing rate of presynaptic neurons over a wide range, and under a diverse set of qualitatively different population-level statistics, determining the dynamics of assembly switchings and within-assembly membrane potential correlations (Figure 4—figure supplement 2).

Taken together, these results demonstrate that the clustering of correlated inputs together with nonlinearities akin to those found in dendrites is necessary to achieve optimal estimation performance in the face of presynaptic correlations. However, in order to be tractable, our dendritic model was mathematically simplified and, as a result, only qualitatively reproduced the nonlinearities of real dendrites. Thus, we directly compared experimentally recorded responses in dendrites to the optimal response.

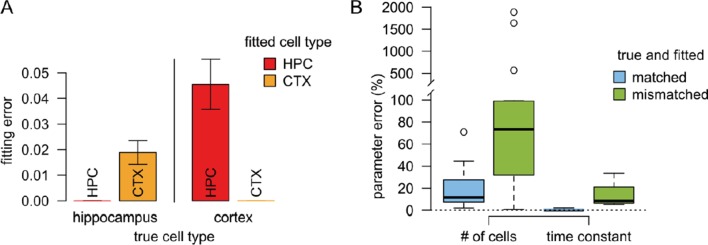

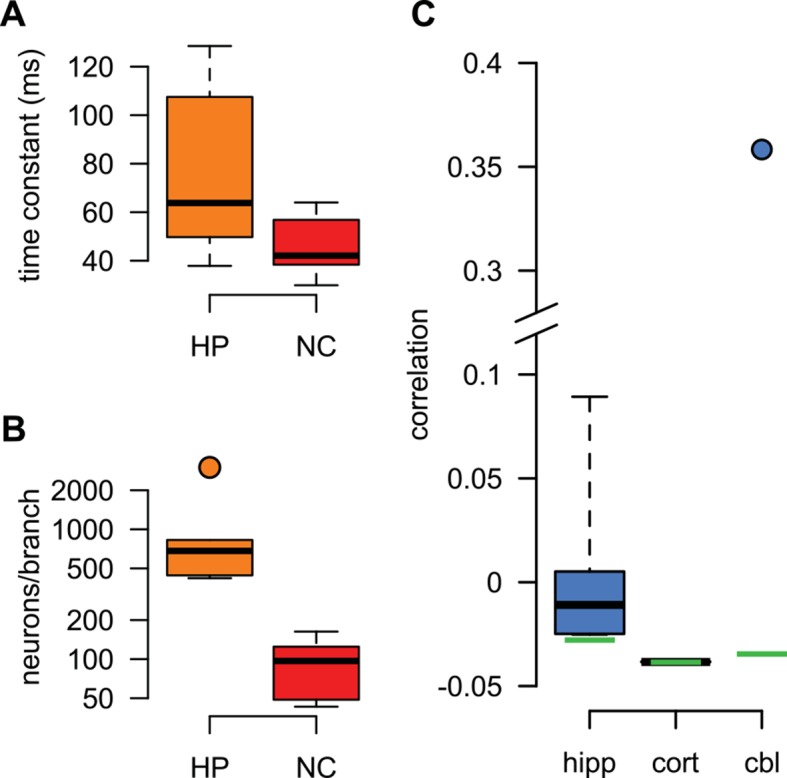

Nonlinear integration in cortical neurons is matched to their input statistics

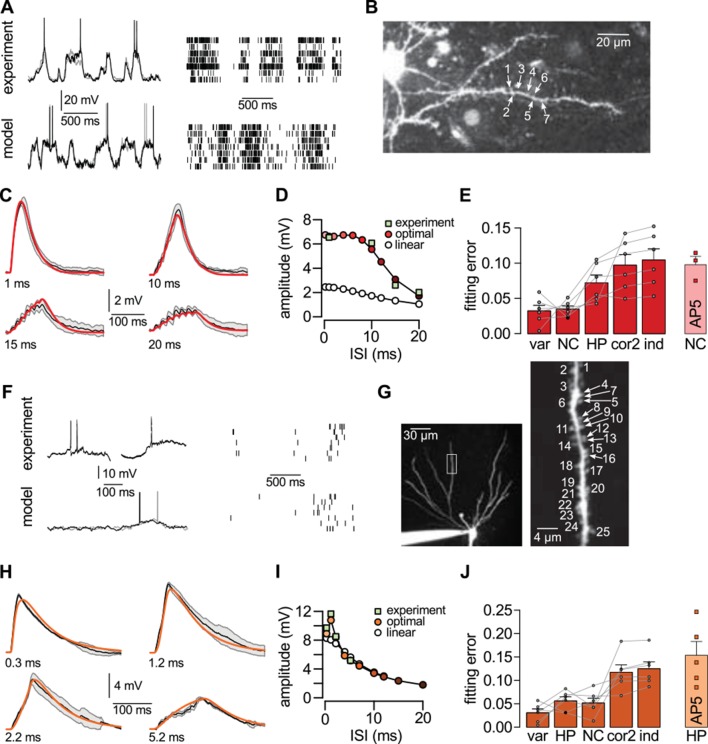

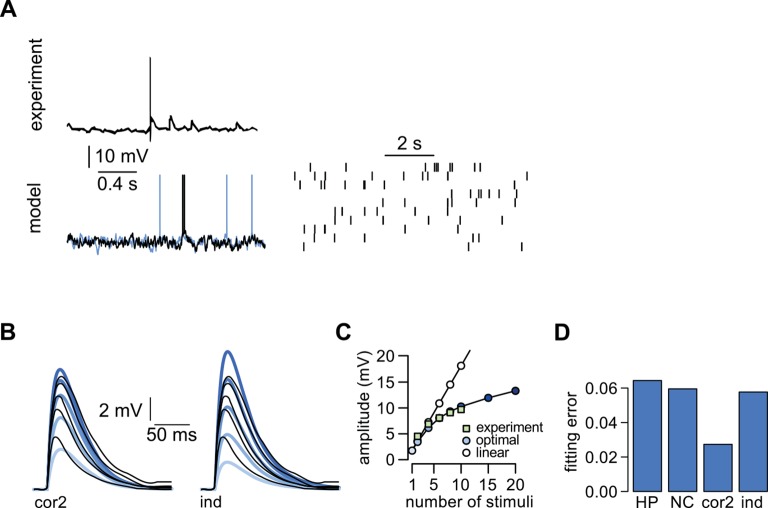

A crucial prediction of our theory is that dendritic nonlinearities act to achieve near-optimal responses in a way that the form of the nonlinearity is specifically matched to the long-run statistics of the presynaptic population. We tested this prediction in experiments in which two different types of cortical pyramidal neurons, from layer 2/3 of the neocortex (Figure 5A–E) and from area CA3 of the hippocampus (Figure 5F–J), received patterned dendritic stimulation using two-photon glutamate uncaging, and compared their subthreshold somatic responses with the optimal responses predicted by the theory.

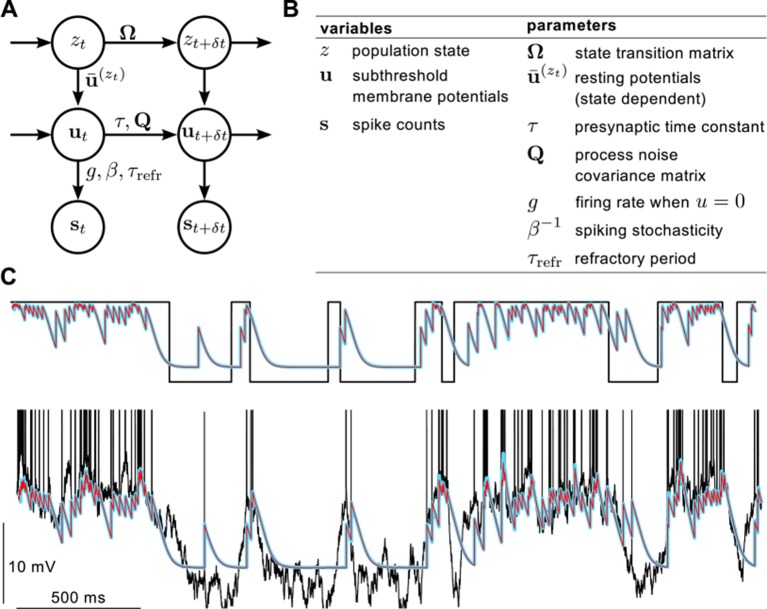

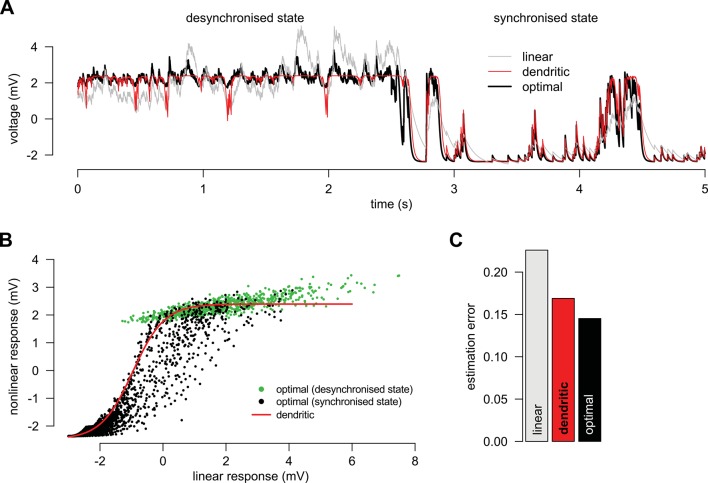

Figure 5. Nonlinear dendritic integration is matched to presynaptic input statistics.

(A) Sample membrane potential fluctuations (left, adapted from Gentet et al., 2010) and multineuron spiking patterns (right, adapted from Ji and Wilson, 2007) recorded from the neocortex (top), and matched in the model (bottom, see also Tables 1–3). (B) Two-photon image of a neocortical layer 2/3 pyramidal cell, numbers indicate individual dendritic spines stimulated in the experiment. (C) Responses to trains of seven stimuli using different inter-stimulus intervals (ISI, shown below traces) recorded in the cell shown in (B) (black; mean ± s.d.) and predicted by the optimal response tuned to the presynaptic statistics shown in A (red). Parameters related to postsynaptic dendritic filtering were tuned for the specific dendrite (Figure 5—figure supplement 1B–C). (D) Dependence of response amplitudes on ISI in the same dendrite shown in B-C (squares), and as predicted by the optimal response (filled circles) or linear integration (empty circles). (E) Average error of fitting dendritic recordings across all dendrites and conditions using the optimal response tuned to different presynaptic statistics (NC, HP, cor2, ind; see text for details) compared to within-data variability (var). Gray lines show individual dendrites. Rightmost bar (NC-AP5) shows fit using NC presynaptic statistics to responses obtained after pharmacological blockade of NMDA receptor activation. (F–J) Same as (A–E) for presynaptic patterns characterized by hippocampal sharp waves (F) and recordings from hippocampal CA3 pyramidal cells (H–J) when stimulating synapses on its basal dendrites (G). In vivo data in (F) was adapted from (Ylinen et al., 1995) (left, membrane potential traces, not simultaneously recorded) and (O'Neill et al., 2006) (right, multineuron spike trains). Error bars show s.e.m.

Figure 5—figure supplement 1. Best fit parameters for fitting dendritic responses.

Figure 5—figure supplement 2. Dendritic integration in cerebellar stellate cells is not predicted by cortical presynaptic statistics.

For generating our predictions of the optimal response in these two cell types, we fitted the parameters describing presynaptic statistics in our model, and , to the statistical patterns in the activity of their respective presynaptic populations. For neocortical pyramidal cells, we fitted in vivo data available on the membrane potential fluctuations of layer 2/3 pyramidal cell-pairs in the barrel cortex during quiet wakefulness (Gentet et al., 2010; Crochet et al., 2011) (NC, Figure 5A, Table 2). For hippocampal pyramidal cells, we fitted presynaptic statistics to membrane potential fluctuations (Ylinen et al., 1995; English et al., 2014) and to multineuron spiking patterns of hippocampal pyramidal cells during sharp wave activity (Csicsvari et al., 1999; 2000) (HP, Figure 5F, Table 3). Due to the limitations of available hippocampal data sets, extracellular rather than intracellular data was used for fitting correlations. The motivation for our choice of the particular neocortical and hippocampal states used for fitting presynaptic statistics was two-fold. First, the general network state of the slice preparations in which we tested dendritic integration was likely most analogous to these states (A Gulyás, personal communication; see also Karlocai et al., 2014; Schlingloff et al., 2014), characterized by relatively suppressed neural excitability due to low levels of cholinergic modulation (Harris and Thiele, 2011; Eggermann et al., 2014). Second, the stimulation protocol used in our study (short bursts of synaptic stimuli following longer silent periods) was also most consistent with population activity during hippocampal sharp waves and quiet wakefulness in the cortex. 
In order to capture variability across the cells we recorded from, the parameters related to postsynaptic dendritic filtering (amplitude and decay of the response to a single stimulation, and the size of the dendritic subunit, Figure 5—figure supplement 1B-C) were tuned for the individual dendrites. Importantly, the parameters describing presynaptic statistics were fitted without regard to our dendritic experimental data, thus allowing a strong test of our predictions about dendritic integration (see Materials and methods).

Table 2.

Features of neocortical population activity during quiet wakefulness. Parameters of the model are given in column NC of Table 1.

| Feature | Data | Model (NC) | Reference |
|---|---|---|---|
| duration of active states | 130 ms | 100 ms | Gentet et al. (2010) |
| duration of quiescent states | 200 ms | 250 ms | Gentet et al. (2010) |
| firing rate during active states | 2.5 Hz | 2.86 Hz | Gentet et al. (2010) |
| firing rate during quiescent states | 1/3 Hz | 0.39 Hz | Gentet et al. (2010) |
| depolarisation during active states | 20 mV | 20 mV | Gentet et al. (2010) |
| time constant | 20 ms | 20 ms | Poulet and Petersen (2008) |

Table 3.

Features of hippocampal population activity during sharp wave-ripple states. Parameters of the model are given in column HP of Table 1. A recent intracellular study (English et al., 2014) recording from CA1 neurons in awake mice found parameters similar to our previous estimates. Using the parameters found in that study – Hz, Hz (Table 1 of English et al., 2014), mV and mV (Figure 3A of English et al., 2014) yielding Hz and mV – did not influence our results (not shown).

| Feature | Data | Model (HP) | Reference |
|---|---|---|---|
| activation rate of an ensemble | 0.25 Hz | 0.027 Hz | Grosmark et al. (2012); Pfeiffer and Foster (2013) |
| duration of SPWs | 105 ms | 100 ms | Csicsvari et al. (2000) |
| firing rate during SPW | 10 Hz | 9.5 Hz | Csicsvari et al. (2000) |
| firing rate between SPWs | 0.5 Hz | 0.6 Hz | Grosmark et al. (2012); Csicsvari et al. (2000) |
| depolarisation during SPWs | 0–10 mV | 4.6 mV | Ylinen et al. (1995) |
| time constant | 8–22 ms | 20 ms | Epsztein et al. (2011) |

We found that the nonlinear integration of individual spike patterns in cortical neurons was remarkably well fit by the optimal response when it was tuned to the correct presynaptic statistics (Figure 5C,H). The systematic dependence of response amplitudes on the inter-stimulus interval (ISI) in individual cells (Figure 5D,I) was also well predicted by the optimal response. We quantified the quality of match between the predicted and experimentally recorded time course of responses across a population of n = 6 (neocortex) and n = 6 (hippocampus) dendrites under a range of conditions varying ISI or the number of stimuli, and found that the precision of our predictions was not statistically different from that expected from the inherent variability of responses in individual dendrites (Figure 5E,J; neocortex: t = 0.2, P = 0.85; hippocampus: t = 1.85, P = 0.12). In contrast, when the optimal response was tuned to unrealistic presynaptic statistics characterized purely by second-order correlations (cor2), or by a lack of any correlations implying statistically independent presynaptic firing (ind), the quality of fits became significantly worse (Figure 5E,J; neocortex: t = −4.6, P = 0.006 for cor2, and t = −4.9, P = 0.004 for ind; hippocampus: t = −4, P = 0.01 for cor2, and t = −4.9, P = 0.004 for ind).

Moreover, using realistic presynaptic statistics, but matching hippocampal rather than neocortical activities, also resulted in significantly worse fits for neocortical responses (Figure 5E; t = −3.6, P = 0.02). The converse was not observed in the case of hippocampal neurons (Figure 5J; t = 0.43, P = 0.68). This might be because hippocampal neurons also receive neocortical inputs (albeit on their apical not basal dendrites) that show similar population activity patterns to the ones we matched here for the neocortical cells (Isomura et al., 2006), while the primary sensory cortical pyramidal cells we recorded from do not receive direct input from the hippocampus. Nevertheless, when we analyzed the quality of fit between our predictions and recorded responses in hippocampal and neocortical data together, we found a small, but significant interaction between the source of the input statistics (neocortex or hippocampus) and the location of the postsynaptic neurons (ANOVA F = 5, P < 0.05). This suggests that dendritic nonlinearities in cortical pyramidal neurons are specifically tuned to the dynamics of their presynaptic cortical ensembles. Furthermore, the blockade of NMDA receptor activation by AP5 resulted in dendritic responses that afforded substantially poorer fits by the model, even after refitting the postsynaptic parameters (Figure 5E,J, AP5). This indicated that the fine tuning of dendritic nonlinearities to input statistics relied on the action of NMDA receptors.

As dendrites in both of our cortical cell types integrated inputs supralinearly, as a further control, we analyzed similar data available from cerebellar stellate cell dendrites, which are known to integrate their inputs sublinearly (Abrahamsson et al., 2012) (Figure 5—figure supplement 2). In this case, we fitted the statistics of individual presynaptic cells to those of cerebellar granule cells. The correlations between these cells are less well characterized, but we found that assuming simple second-order correlations made the optimal response a close match to dendritic responses. In contrast, the hippocampal- or neocortical-like statistics that were crucial for matching responses in cortical dendrites (Figure 5D,H) resulted in a substantially poorer fit in this cerebellar cell type. This demonstrates a double dissociation in the matching of cortical and subcortical neuron types to cortical and non-cortical input statistics.

Discussion

We established a functional link between the statistics of the synaptic inputs impinging on the dendritic tree of a neuron and the way those inputs are integrated within the dendritic tree. We first demonstrated that efficient computation in spiking circuits requires nonlinear input integration if the activities of the neurons are correlated and that the structure of the presynaptic correlations determines the form of the optimal input integration. Second, we showed that the optimal response can be efficiently approximated by a canonical biophysically-motivated model of dendritic signal processing both for linearly correlated inputs and for cell-assembly dynamics. Third, we found that nonlinear dendrites with synaptic clustering carry significant benefits for decoding richly structured presynaptic spike trains. Finally, in vitro measurements of dendritic integration in two different types of cortical pyramidal neurons yielded postsynaptic responses that closely matched those predicted to be optimal given the in vivo input statistics of those particular cell types. These results suggest that nonlinear dendrites are essential to decode complex spatio-temporal spike patterns and thus play an important role in network-level computations in neural circuits.

Biophysical substrate

The central insight of our theory is the relationship between presynaptic statistics and postsynaptic response, formalized as the optimal response. The optimal response can be expressed as a set of nonlinear differential equations that requires storing and continuously updating variables within the dendritic tree, where is the number of synapses (Materials and methods). Thus, it is unlikely to be implemented by the postsynaptic neuron as such. Consequently, to demonstrate the biophysical feasibility of our theory, we derived a simple approximation to the optimal response that performs about equally well using just a few postsynaptic variables and that corresponds to a canonical descriptive model of dendritic integration (Poirazi et al., 2003; Poirazi et al., 2003b).

We found that simple second order correlations between presynaptic neurons imply sublinear integration which can be implemented by the saturating nonlinearity characteristic of passive dendrites. Conversely, the biophysical substrate for the type of supralinear integration that was optimal for state-switching dynamics likely involves NMDA receptors because the particular dendritic nonlinearities observed in the cortical cells in which we tested our theory are known to be mediated primarily through NMDA receptor activation (Branco et al., 2010; Makara and Magee, 2013; Major et al., 2013). Indeed, we found that pharmacological inactivation of NMDA receptors abolished the precise match between dendritic integration and presynaptic statistics in these neurons (Figure 5). Moreover, the local plateau potentials generated by NMDA currents have been shown to have graded response durations (Major et al., 2008), and the resulting nonlinearities could be continuously tuned between weaker and stronger forms (boosting and bistability, Major et al., 2013). These properties make NMDA receptor mediated dendritic nonlinearities ideally suited for being matched to presynaptic statistics, as the optimal response involves sustained dendritic depolarisations of varying duration (Figure 4) that depend parametrically on those statistics.

Input statistics and clustering

A central prediction of our theory that awaits confirmation is the existence of a tight relationship between the structure of correlations in the activity of presynaptic cells and the morphological clustering of their synapses on the postsynaptic dendrite. This is because our theory requires nonlinear integration of inputs from neurons with statistically dependent activity, while spikes from independent neurons need to be integrated linearly. Biophysical considerations suggest (Koch, 1999) and experimental data supports (Polsky et al., 2004; Losonczy and Magee, 2006) that, when synchronous, nearby inputs on the same dendritic branch are summed nonlinearly, whereas widely separated inputs are combined linearly. Consequently, our theory predicts that the correlation structure of the inputs will be mapped to the dendritic tree in a way that presynaptic neurons with strongly correlated activities target nearby locations while independent neurons innervate distinct dendritic subunits.

According to our theory, the kind of correlation relevant for determining synaptic clustering is the ‘marginal’ correlations between the membrane potentials of presynaptic neurons. Marginal correlations include both signal and noise correlations (Averbeck et al., 2006) and thus can reach substantial magnitudes even when noise correlations alone are small, as e.g. during desynchronized cortical states (Renart et al., 2010), especially for neurons with overlapping receptive fields (Froudarakis et al., 2014), and when measured between the membrane potentials of neurons rather than their spike counts (Dorn and Ringach, 2003; de la Rocha et al., 2007; Poulet and Petersen, 2008).

At the level of different dendritic regions, the segregation of different input pathways along the dendritic tree of hippocampal neurons supports this prediction (Witter et al., 1989; Druckmann et al., 2014). At the level of individual synapses, the degree and the existence of clustering among inputs showing correlated activity is currently debated. High resolution imaging revealed subcellular topography of sensory inputs in the tadpole visual system (Bollmann and Engert, 2009), clustered patterns of axonal activity in the parallel fibres that provide input to cerebellar Purkinje cells (Wilms and Häusser, 2015), and experience-driven synaptic clustering in the barn owl auditory localization pathway (McBride et al., 2008). Furthermore, it has been demonstrated that neighboring synapses are more likely to be coactive than synapses that are further away from each other in developing hippocampal pyramidal cells (Kleindienst et al., 2011) as well as in hippocampal cultures and in vivo in the barrel cortex during spontaneous activity (Takahashi et al., 2012). These results thus suggest clustering of correlated inputs.

In contrast, an interspersion of differently tuned orientation-, frequency- or whisker-specific synaptic inputs on the same dendritic segments was found in the mouse visual, auditory or somatosensory cortex, respectively, thus challenging the prevalence of synaptic clustering (Jia et al., 2010; Chen et al., 2011; Varga et al., 2011). However, in all these studies the stimuli used were non-naturalistic and varied along a single stimulus dimension only (direction of drifting gratings, pitch of pure tones, or the identity of the single whisker being stimulated), which may account for the apparent lack of clustering. In particular, our theory predicts clustering based on the long-term statistical dependencies between the responses of the presynaptic neurons for naturalistic inputs, which can be quite poorly predicted from their tuning properties for single stimulus dimensions (Harris et al., 2003; Fiser et al., 2004). In contrast, the statistical dependencies relevant for our theory are well represented by those found during spontaneous activity (Berkes et al., 2011). Indeed, studies finding evidence in favor of synaptic clustering analyzed the structure of synaptic input to dendritic branches during spontaneous network activity (McBride et al., 2008; Kleindienst et al., 2011; Makino and Malinow, 2011; Takahashi et al., 2012). Thus, presynaptic correlations for naturalistic stimulus sets may be predictive of synaptic clustering and providing more direct evidence for or against such clustering will offer a crucial test of our theory.

Linear vs. nonlinear postsynaptic computations

Although, in general, we expect single-neuron computations to be nonlinear (Zador, 2000), and our theory indeed applies to nonlinear computations (Figure 1—figure supplement 3), we assumed the postsynaptic computation to be linear for matching experimental data. This choice was justified by two reasons. First, it is difficult to determine, without making strong prior assumptions, what kind of nonlinear function the neuron actually computes; and so the choice of any particular such function would have been arbitrary. Note that even in relatively well-characterized cortical areas (such as the visual cortex) it is unknown how much of the computationally relevant output of individual neurons (such as orientation or direction selectivity) is brought about by specific nonlinearities in the input-output transformations of these neurons, or by multiple steps of feed-forward and recurrent processing carried out at various stages of the visual pathway between the retina and those neurons. Moreover, in some cases, even networks with linear dynamics can provide a remarkably close fit to experimentally observed cortical population dynamics (Hennequin et al., 2014). This issue may be best addressed in systems that are more specialized than the cortex so that there are well-supported hypotheses about the particular nonlinear computations individual neurons need to perform, such as the fly visual system (Single and Borst, 1998) or the mammalian and avian auditory brain stem (Agmon-Snir et al., 1998). In order to test our theory in these systems, in vivo multineural data will need to be collected from the afferent brain areas, preferably in the unanesthetized animal, for characterising the relevant statistical properties of the presynaptic population to which dendritic nonlinearities are adapted according to our prediction.

Second, any smooth nonlinear function can be approximated to high precision by a linear function over a sufficiently limited input range. Currently available experimental techniques for systematically probing dendritic nonlinearities, including those used in our study, only provide data over such a very limited range (0.1% of the number of excitatory inputs impinging a neuron, Megías et al., 2001). Inputs in this small range do not sufficiently engage global nonlinearities brought about by active somatic conductances or global events such as Ca2+ spikes. Thus, we could assume linear computation over this range without loss of generality (Figure 1—figure supplement 2). In fact, from this perspective, it is a non-trivial phenomenon to account for in its own right that stimulating such a small fraction of inputs already leads to observable nonlinearities in the postsynaptic dendrite. By defining the computation to be linear, we could demonstrate that such strong dendritic nonlinearities arise naturally in our theory, entirely due to the correlations in the prior input statistics, thus providing a functional account for this remarkable phenomenon.
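The local-linearity argument can be made explicit with the standard first-order Taylor bound. It is stated here for a generic twice-differentiable function; the symbols $f$, $x_0$, $\delta$ and $M$ are introduced only for this illustration and do not correspond to quantities defined in the paper.

```latex
f(x) = f(x_0) + f'(x_0)\,(x - x_0) + R(x), \qquad
|R(x)| \;\le\; \frac{M}{2}\,(x - x_0)^2 \;\le\; \frac{M}{2}\,\delta^2
\quad \text{for } |x - x_0| \le \delta,
\qquad M = \max_{|\xi - x_0| \le \delta} \bigl| f''(\xi) \bigr| .
```

Halving the probed range $\delta$ therefore reduces this bound on the deviation from linearity by at least a factor of four, which is why a narrow input range cannot by itself distinguish a linear computation from a smooth nonlinear one.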

Once patterned dendritic stimulation over a broader and more realistic range of inputs becomes feasible, our theory will provide a principled method for dissecting the roles of presynaptic correlations vs. genuine nonlinear computations in shaping dendritic nonlinearities. A sufficiently rich set of such data will allow the fitting of presynaptic parameters, as we did here, followed by fitting postsynaptic transfer functions to dendritic responses without having to make strong prior assumptions about their (linear) nature.

Analog communication, stochastic synaptic transmission and short-term synaptic plasticity

Our formalism was based on the assumption that cortical neurons only influence each other’s membrane potentials via the action potentials they emit. While there exist other, more analog forms of communication, such as the modulation of the effects of action potentials by subthreshold potentials (Clark and Häusser, 2006), the propagation of voltage signals through gap junctions (Vervaeke et al., 2012), and ephaptic interactions between nearby cells (Anastassiou et al., 2011), these either require slow membrane potential dynamics, small distances between interacting cells, or large degrees of population synchrony, and are thus generally believed to have a supplementary role beside spike-based communication (Sengupta et al., 2014). Note that our theory is self-consistent even though it considers spiking only in the presynaptic population and not in the postsynaptic neuron. This is because we assumed that the computationally relevant mapping is that between the membrane potentials of the presynaptic neurons and the postsynaptic cell (Figure 1A, Equation 1), and so, by induction, the spikes of the postsynaptic neuron will effect the mapping from its membrane potential to those of its postsynaptic partners.

We also assumed that presynaptic spikes deterministically and uniformly impact the postsynaptic response, and thus apparently neglected the stochasticity in synaptic transmission, and in particular systematic variations in synaptic efficacy brought about by short-term synaptic plasticity. Nevertheless, these presynaptic features are compatible with our theory. The stochasticity of synaptic transmission, due to a baseline level of synaptic failures, is straightforward to incorporate in the model by reducing the effective presynaptic firing rate, which can thus be interpreted as a ‘transmission rate’ instead. In fact, we have already done this while matching hippocampal and neocortical presynaptic statistics (Table 1).
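The idea of folding synaptic failures into an effective rate can be sketched as follows, under the simplifying assumptions that presynaptic spiking is a homogeneous Poisson process and that each spike is transmitted independently with a fixed probability. The paper's presynaptic model is richer than this (it includes a refractory period, for instance), and the function names and numerical values below are illustrative rather than fitted parameters.

```python
import random

def effective_rate(firing_rate_hz, p_transmit):
    """Rate of transmitted spikes when each spike succeeds independently
    with probability p_transmit (thinning of a Poisson process)."""
    return firing_rate_hz * p_transmit

def simulate_transmitted(firing_rate_hz, p_transmit, duration_s, rng):
    """Draw a Poisson spike train and count the spikes that are transmitted."""
    t, kept = 0.0, 0
    while True:
        t += rng.expovariate(firing_rate_hz)   # exponential inter-spike interval
        if t > duration_s:
            return kept
        if rng.random() < p_transmit:
            kept += 1

rng = random.Random(0)                 # fixed seed for reproducibility
rate_hz, p = 10.0, 0.5                 # illustrative values only
duration_s = 1000.0
count = simulate_transmitted(rate_hz, p, duration_s, rng)
empirical_rate = count / duration_s
```

Over a long simulated interval, `empirical_rate` stays close to `effective_rate(rate_hz, p)`, so under these assumptions a downstream estimator only needs the product of firing rate and transmission probability.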

Table 1.

Parameters used in Figures 1–5 of the main paper (see also Tables 2–3). () is the rate of switching from the active to the quiescent (from the quiescent to the active) state. The resting potential corresponding to the active and quiescent states is and , respectively. () is the posterior variance (covariance) of the presynaptic membrane potential fluctuations in a given state where . τrefr is the length of the refractory period and is the baseline transmission probability in these synapses (13, 49).

| parameter | unit | Figure 1 B,C | Figure 2 A,B | Figure 2 C,D | Figure 3 A | Figure 3 B | Figure 4 A-D | Figure 5 ind | Figure 5 cor2 | Figure 5 NC | Figure 5 HP |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | Hz | 10 | – | 10 | 10 | – | 10 | – | – | 10 | 10 |
| | Hz | 10 | – | 0.27 | 0.67 | – | 0.67 | – | – | 4 | 0.027 |
| | mV | 2.4 | 0 | 2.3 | 2.3 | 0 | 2.3 | 0 | 0 | 10 | 2.3 |
| | ms | 20 | 20 | 20 | 20 | 20 | 20 | 20 | 20 | 20 | 20 |
| | mV | 1 | 16 | 4 | 1 | 1 | 1 | 1 | 1 | 10 | 1 |
| | mV | 0 | 0.5 | 0.5 | 0 | 0.5 | 0 | 0 | * | 5 | 0.5 |
| | Hz | 1 | 1 | 1 | 5.3 | 0.5 | 5 | 0.5 | 0.5 | 1 | 2 |
| | mV | 1 | 0.4 | 0.4 | 0.5 | 0.4 | 0.4 | 1 | 2 | 0.1 | 0.6 |
| | ms | 3 | 3 | 3 | 1 | 3 | 1 | 3 | 3 | 3 | 3 |
| | – | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0.5 | 0.2 |
| | – | 20 | 70 | 20 | 10 | 10 | 10 | +0† | +20† | * | * |
| | ms | 0 | 10 | 10 | 0 | 0 | 0 | * | * | * | * |
| | – | | | | | | | * | * | * | * |

*These parameters were fitted to experimentally recorded dendritic responses, see Figure 5—figure supplement 1. †The numbers 0 and 20 indicated here are in addition to the number of stimulated synaptic sites in the experiment. For the ind model, this number does not affect the predictions, for the cor2 model its effects could phenomenologically be incorporated into which we chose to fit instead.
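As a quick consistency check between Table 1 and Tables 2–3, switching rates can be converted to mean state durations. The sketch assumes that the first two rows of Table 1 are the two switching rates in the order given in its caption (active to quiescent first), and that state durations are exponentially distributed so that the mean duration is the reciprocal of the rate. Both assumptions, and the helper name `mean_duration_ms`, are made only for this illustration.

```python
def mean_duration_ms(switch_rate_hz):
    """Mean dwell time of a state that is left at a constant rate.
    Exponentially distributed dwell times are an assumption of this sketch."""
    return 1000.0 / switch_rate_hz

# Values read from the NC and HP columns of Table 1 (first two rows).
nc_active_ms = mean_duration_ms(10.0)     # compare: 100 ms, Model (NC) in Table 2
nc_quiescent_ms = mean_duration_ms(4.0)   # compare: 250 ms, Model (NC) in Table 2
hp_active_ms = mean_duration_ms(10.0)     # compare: 100 ms SPW duration, Model (HP) in Table 3
hp_between_s = mean_duration_ms(0.027) / 1000.0   # mean wait before an ensemble activates, in seconds
```

Under these assumptions the NC rates of 10 Hz and 4 Hz correspond to the 100 ms and 250 ms model durations listed in Table 2, and the HP activation rate of 0.027 Hz corresponds to a mean interval of roughly 37 s between activations of a given ensemble.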

Short-term synaptic plasticity, resulting in dynamical changes in synaptic efficacy as a function of the recent spiking history of the presynaptic neuron, is not only a constraint in our framework, but as we have shown in related work, it can act itself as an optimal estimator of the membrane potentials of individual presynaptic neurons (Pfister et al., 2010). Thus, the effects of short-term plasticity can be regarded as a special case of what can be expected from our optimal response: when presynaptic neurons are statistically independent, spikes arriving at different synapses are integrated linearly, and local nonlinearities acting at individual synapses suffice (Figure 1C, see also Materials and methods). However, the importance of nonlinear interactions between inputs from different presynaptic neurons, brought about by dendritic nonlinearities, rapidly increases with the magnitude of presynaptic correlations, especially in large populations (Figure 1D, see also Ujfalussy et al., 2011).

These considerations suggest that short-term synaptic plasticity and dendritic nonlinearities have complementary roles in tuning the postsynaptic response to the statistics of the presynaptic population along the orthogonal dimensions of time and space. The former is useful in the face of temporal correlations private to individual presynaptic neurons (auto-correlations, e.g., brought about by spike frequency adaptation, Pfister and Surace, 2014), while the latter is matched to spatio-temporal correlation patterns present across the presynaptic population.

Inhibitory neurons

We focused on the nonlinear integration of excitatory inputs in the dendritic tree of cortical neurons, which has been extensively studied and described over the past decades, giving rise to a strong body of converging evidence as to its characteristics and mechanisms (Spruston, 2008). Recent work studying the nonlinear interaction between inhibitory and excitatory inputs in active dendrites (Gidon and Segev, 2012; Jadi et al., 2012; Müller et al., 2012; Wilson et al., 2012; Lovett-Barron et al., 2012) demonstrated that local inhibition exerts powerful control over the excitability of the dendritic tree.

However, it is not yet clear whether these inhibitory inputs are directly involved in the computation performed by the circuit, just like those of excitatory neurons but with negative signs (Koch et al., 1982), or whether they instead have a more ancillary role in supporting computations carried out primarily by excitatory neurons (Vogels et al., 2011).

Our theory can be extended to include both possibilities, by allowing inhibitory inputs to contribute to the computational function with negative weights, or by considering them as providing auxiliary information about the common state of the excitatory presynaptic ensemble, especially when this state is in the more suppressed regime. Indeed, our preliminary results suggest that such an extension of our theory (Ujfalussy and Lengyel, 2013) successfully accounts for the interaction of (excitatory) Schaffer collateral inputs with the feedforward inhibitory effects of the temporo-ammonic pathway (Remondes and Schuman, 2002), likely mediated by interneurons delivering dendritic inhibition (Dvorak-Carbone and Schuman, 1999).

In the present paper we focused on dendritic integration in pyramidal neurons because dendritic nonlinearities have traditionally been more extensively characterized in this cell type, but our theory equally applies to synaptic integration in other types of neurons, including inhibitory interneurons. Therefore, our theory predicts a qualitative similarity of dendritic integration in different neuron types (i.e. interneurons versus principal cells) when they receive inputs from overlapping presynaptic populations. Indeed, it has been recently found that inhibitory interneurons can exhibit dendritic NMDA spikes under certain experimental circumstances (Katona et al., 2011; Chiovini et al., 2014) in addition to standard sublinear integration. The differences between dendritic integration in excitatory and inhibitory neurons could be attributed to their different computational functions or to differences in the specific presynaptic populations innervating them.

Adaptation of dendritic nonlinearities to presynaptic statistics

According to our theory, the optimal response depends on prior information about the input statistics. Consequently, for dendritic processing to approximate the optimal response, this prior information needs to be implicitly encoded in the form of the particular nonlinearity a dendrite expresses. Therefore, our theory predicts an ongoing adaptation of dendritic nonlinearities to presynaptic firing statistics across several time-scales.

First, there is a simple yet potent mechanism implicit in our theory that can ensure that a match of dendritic integration to presynaptic statistics is maintained as those statistics are changing over time. This is based on the observation that essentially instantaneous, albeit probably incomplete, adaptation can occur even without specific changes in the integrative properties of dendrites per se, simply due to the fact that a critical level of input synchrony is required to elicit dendritic spikes, and so the same sigmoid-looking dendritic transfer function can be used as superlinear, linear, or sublinear, depending on which part of its input range is being used (Figure 3—figure supplement 2).
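The claim that one sigmoid can act superlinearly, linearly, or sublinearly can be checked numerically. The following is a minimal sketch, not the model fitted in the paper: the logistic form, its threshold and slope, the background drive, and the helper names are all illustrative assumptions. It compares the response to several inputs delivered together with the sum of the responses to each input delivered alone, at three operating points.

```python
import math


def sigmoid(x, threshold=10.0, slope=2.0):
    """Hypothetical logistic dendritic transfer function (arbitrary units)."""
    return 1.0 / (1.0 + math.exp(-(x - threshold) / slope))


def integration_ratio(background, n_inputs=4, input_size=1.0):
    """Measured / expected response for n_inputs delivered together.

    measured: response to all inputs at once, relative to the background level
    expected: linear sum of the responses to each input delivered alone
    A ratio above 1 indicates superlinear integration, a ratio near 1 linear
    integration, and a ratio below 1 sublinear integration.
    """
    base = sigmoid(background)
    measured = sigmoid(background + n_inputs * input_size) - base
    expected = n_inputs * (sigmoid(background + input_size) - base)
    return measured / expected


if __name__ == "__main__":
    # Same transfer function, three operating points (assumed values).
    for background in (0.0, 8.0, 16.0):
        print(background, round(integration_ratio(background), 3))
```

With these assumed parameters, the low operating point falls on the convex part of the curve, the middle one straddles the inflection point, and the high one falls on the saturating part, so the same function covers all three integration regimes.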

Second, to match the more specific modulation of the statistics of presynaptic activities by global cortical states (Crochet et al., 2011; Mizuseki and Buzsaki, 2014), dendritic integration may also be modulated by these states. As different cortical states are typically accompanied by changes in the neuromodulatory milieu (Hasselmo, 1995; Harris and Thiele, 2011), neuromodulators may be the ideal substrates to ensure that dendritic integration also changes according to the current cortical activity mode. This may provide a functional account of changes in the excitability of the dendritic tree as dynamically regulated by acetylcholine and monoamines (Sjöström et al., 2008).

Third, experience-dependent synaptic plasticity can gradually change the statistics of the presynaptic population activity, implying that the optimal form of input integration should also change as a function of experience. We propose that branch-specific forms of plasticity of dendritic excitability (Losonczy et al., 2008; Makara et al., 2009; Müller et al., 2012) may have a functional role in enabling dendrites to adjust the form of input integration to such slowly developing and long-lasting changes in the statistics of their inputs.

Finally, whether inputs from two presynaptic cells are integrated linearly or nonlinearly in a dendrite depends critically on the distance between their synapses within the dendritic tree (Polsky et al., 2004; Losonczy and Magee, 2006). Our theory requires nonlinear integration of inputs from neurons with statistically dependent activity, predicting a mapping of presynaptic correlations on the postsynaptic dendritic tree. Local electrical and biochemical signals can drive synaptic plasticity (Larkum and Nevian, 2008; Govindarajan et al., 2011; Winnubst et al., 2015) and rewiring (DeBello, 2008) leading to synaptic clustering of correlated inputs along the dendritic tree (Kleindienst et al., 2011; Takahashi et al., 2012).

A combination of all these mechanisms may be crucial for achieving and dynamically maintaining, at the level of individual neurons, a detailed matching of dendritic nonlinearities to presynaptic statistics. Thus, our theory provides a novel framework for studying a range of phenomena regarding the dynamical regulation of dendritic nonlinearities from the perspective of circuit-level computations.

Materials and methods

Source code for reproducing the analyses and simulations presented in the paper as well as the experimental data we used for testing our predictions are available online (https://bitbucket.org/bbu20/optimdendr).

Computing the optimal response

In order to study the optimal form of input integration with realistic input statistics, we need to make two important assumptions. First, we need to assume a particular algebraic form for the computation that a neuron performs. Second, we need to define what the relevant presynaptic statistics are, that is, the membrane potential and spiking dynamics of the presynaptic population under naturalistic in vivo circumstances. Given these two assumptions, the theory uniquely defines the optimal response of a neuron to any input pattern. The optimal response has qualitatively similar properties whether computations are defined as mappings between pre- or postsynaptic membrane potentials or firing rates (Figure 1—figure supplement 1).

Throughout the paper the term input refers to the spatio-temporal spiking pattern impinging on the neuron, while the response of a neuron refers to its (subthreshold) somatic membrane potential (or firing rate, see below). All parameters used in the paper are given in Table 1 or in the caption of the corresponding figure.

Postsynaptic computation

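We assumed the postsynaptic computation to be linear, i.e. the postsynaptic membrane potential, $v(t)$, evolves according to a weighted sum of the presynaptic membrane potential values, $u_i(t)$ (cf. Equation 1):

| $\tau_{\mathrm{post}}\, \dfrac{\mathrm{d}v(t)}{\mathrm{d}t} = -v(t) + \sum_{i=1}^{N} w_i\, u_i(t)$ | (5) |

where $\tau_{\mathrm{post}}$ is the time constant of the postsynaptic neuron, $N$ is the number of presynaptic neurons, and $w_i$ is the computational weight assigned to presynaptic neuron $i$. As the postsynaptic neuron cannot access presynaptic membrane potentials, $u_i(t)$, directly, only the spikes the presynaptic cells emit, $s_i(t)$ (Figure 1, Equation 2), the optimal response, $\tilde{v}(t)$ (that minimizes mean squared error), is the expectation of Equation 5 under the posterior distribution of the presynaptic membrane potentials at time $t$, given the history of presynaptic spiking up to that time, $\mathrm{P}(\mathbf{u}(t) \mid \mathbf{s}(0{:}t))$ (cf. Equation 3):

| $\tau_{\mathrm{post}}\, \dfrac{\mathrm{d}\tilde{v}(t)}{\mathrm{d}t} = -\tilde{v}(t) + \sum_{i=1}^{N} w_i \int u_i(t)\, \mathrm{P}\!\left(u_i(t) \mid \mathbf{s}(0{:}t)\right) \mathrm{d}u_i(t)$ | (6) |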

Throughout the paper we call the output of Equation 6 the optimal response and compare its behavior to input integration in the dendrites of cortical pyramidal cells.

Table 1 shows the values of the parameters in Equation 5 (the postsynaptic time constant $\tau_{\mathrm{post}}$, the number of presynaptic neurons $N$, and the computational weights $w_i$) used in the simulations. In short, to illustrate the contributions of inference to Equation 6 (the term including the integral), we used $\tau_{\mathrm{post}} = 0$ in Figures 1, 3 and 4 as well as in all Supplemental Figures, unless otherwise stated. We used $\tau_{\mathrm{post}} = 10$ ms in Figure 2 to aid comparison with experimental data and fitted $\tau_{\mathrm{post}}$ to data for Figure 5. Throughout the paper we used , except in Figure 5 where we fit and jointly to the data.
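The role of the postsynaptic time constant can be illustrated with a short numerical sketch. It assumes that the linear computation takes the leaky form "time constant × dv/dt = −v + weighted sum of presynaptic potentials" and integrates it with a forward Euler step. The function name, the Euler scheme, and the example values are illustrative assumptions, not the authors' implementation. With a time constant of zero, the response reduces to the instantaneous weighted sum.

```python
def postsynaptic_response(u_traces, weights, tau_post, dt, v0=0.0):
    """Integrate tau_post * dv/dt = -v + sum_i w_i * u_i(t) with forward Euler.

    u_traces: list over time steps, each a list of presynaptic potentials (mV)
    weights:  list of computational weights, one per presynaptic neuron
    tau_post: postsynaptic time constant (same unit as dt); 0 means no filtering
    """
    v = v0
    response = []
    for u_t in u_traces:
        drive = sum(w * u for w, u in zip(weights, u_t))
        if tau_post == 0:
            v = drive  # instantaneous weighted sum
        else:
            v += (dt / tau_post) * (drive - v)  # leaky integration towards the drive
        response.append(v)
    return response


if __name__ == "__main__":
    u = [[1.0, 2.0]] * 5  # two presynaptic neurons held at constant potentials
    print(postsynaptic_response(u, [0.5, 0.5], tau_post=0.0, dt=1.0))
    print(postsynaptic_response(u, [0.5, 0.5], tau_post=10.0, dt=1.0))
```

The optimal response would be obtained by replacing each presynaptic potential with its posterior mean given the observed spikes; this sketch only covers the linear computation itself.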

Presynaptic statistics

Computing the posterior, $\mathrm{P}(\mathbf{u}(t) \mid \mathbf{s}(0{:}t))$, in Equation 6 requires a model for the joint membrane potential and spiking statistics of the presynaptic population, $\mathrm{P}(\mathbf{u}, \mathbf{s})$ (see also Equation 4). For mathematical convenience, we present some of our results below in discrete time with time step size $\delta t$, which we will eventually take to zero to derive time-continuous equations. We distinguish discrete and continuous time results by using time as an index versus as an argument of the corresponding time-dependent quantities, e.g. $u_t$ vs. $u(t)$.

We describe the joint statistics of presynaptic membrane potentials and spikes by a hierarchical generative model that has three layers of variables (Figure 2—figure supplement 1A,B). The global state of the system is described by a single binary variable, $z_t$, that switches between a quiescent ($z_t = 0$) and an active ($z_t = 1$) state following first-order Markovian dynamics (see Appendix for the extension to an arbitrary number of states). The transition rates to the active and quiescent states are given by $\Omega_{\mathrm{on}}$ and $\Omega_{\mathrm{off}}$, respectively.
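The top layer of this hierarchy, the two-state switching process, can be sketched in a few lines. This is a minimal illustration under stated assumptions: the function names, the rate values, and the first-order discretization (switching probability equal to rate times step size) are ours and are not taken from the published code.

```python
import random


def simulate_global_state(n_steps, dt, rate_on, rate_off, rng, z0=0):
    """Simulate a binary global state (0 = quiescent, 1 = active).

    In each step of length dt the state switches with probability rate * dt,
    a first-order approximation that is valid when rate * dt << 1.
    rate_on:  transition rate into the active state (1 / time unit of dt)
    rate_off: transition rate into the quiescent state
    """
    z = z0
    states = []
    for _ in range(n_steps):
        rate = rate_on if z == 0 else rate_off
        if rng.random() < rate * dt:
            z = 1 - z
        states.append(z)
    return states


def stationary_active_fraction(rate_on, rate_off):
    """Long-run fraction of time spent in the active state."""
    return rate_on / (rate_on + rate_off)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed for reproducibility
    states = simulate_global_state(200_000, 0.001, 5.0, 10.0, rng)
    print(sum(states) / len(states), stationary_active_fraction(5.0, 10.0))
```

Because every presynaptic neuron shares this single state variable, its transitions are a source of correlations across the whole population.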

The dynamics of (subthreshold) membrane potentials are modeled as a multivariate Ornstein-Uhlenbeck (mOU) process, which yields random walk-like behavior that (unlike simple Brownian motion) decays exponentially towards a baseline defined by the resting potential $\bar{\mathbf{u}}(z_t)$, which in turn depends on the momentary global state of the system, $z_t$:

| $\mathbf{u}_t = \mathbf{u}_{t-\delta t} + \dfrac{\delta t}{\tau}\left(\bar{\mathbf{u}}(z_t) - \mathbf{u}_{t-\delta t}\right) + \sqrt{\delta t}\;\boldsymbol{\xi}_t, \qquad \boldsymbol{\xi}_t \sim \mathcal{N}(\mathbf{0}, \mathbf{Q})$ | (7) |

where $\tau$ is the presynaptic time constant of the exponential decay, and $\mathbf{Q}$ is the ‘process noise’ covariance matrix determining the variance of individual membrane potentials (together with $\tau$) and, importantly, also the correlations between presynaptic neurons. It is straightforward to extend the model by also making these parameters state- (or in fact, neuron-) dependent.
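A minimal simulation sketch of these membrane potential dynamics is given below. It assumes an Euler-Maruyama discretization and a simple, hypothetical parametrization of the process noise covariance, with one private and one shared noise source per time step (covariance = `q_private` on the diagonal plus `q_shared` everywhere). The function and argument names are illustrative and do not come from the published code.

```python
import math
import random


def simulate_mou(states, dt, tau, u_rest, q_private, q_shared, n_neurons, rng):
    """Simulate state-dependent Ornstein-Uhlenbeck membrane potentials.

    states:    sequence of global states (0 = quiescent, 1 = active), one per step
    dt, tau:   step size and presynaptic time constant (same time unit)
    u_rest:    pair (baseline in quiescent state, baseline in active state), in mV
    q_private: process noise variance private to each neuron (mV^2 per time unit)
    q_shared:  process noise variance shared by all neurons (induces correlations)
    Returns a list over time steps of lists of membrane potentials.
    """
    u = [u_rest[states[0]]] * n_neurons  # start at the initial baseline
    trace = []
    for z in states:
        shared = rng.gauss(0.0, 1.0)  # one draw common to all neurons
        new_u = []
        for u_i in u:
            private = rng.gauss(0.0, 1.0)
            noise = math.sqrt(dt) * (
                math.sqrt(q_shared) * shared + math.sqrt(q_private) * private
            )
            # exponential decay towards the state-dependent baseline, plus noise
            new_u.append(u_i + (dt / tau) * (u_rest[z] - u_i) + noise)
        u = new_u
        trace.append(u)
    return trace


if __name__ == "__main__":
    rng = random.Random(0)
    states = [0] * 100 + [1] * 100  # a single quiescent-to-active transition
    trace = simulate_mou(states, 1.0, 20.0, (0.0, 2.3), 0.05, 0.05, 3, rng)
    print([round(x, 2) for x in trace[-1]])
```

Setting `q_shared` to zero gives independent neurons within a state, while a positive value corresponds to non-zero off-diagonal elements of the noise covariance.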

Note that both the state switching and mOU components of this model introduce both spatial and temporal statistical dependencies in the membrane potentials and spike trains of presynaptic cells. In the rest of this paper, we informally refer to any statistical dependency (second or higher order) as ‘correlation’, and we write ‘auto-correlation’ when we refer to the correlations between the membrane potential (firing rate) values of the same neuron at different times, and ‘cross-correlation’ when referring to the correlation between the activities of two different cells (at the same time, or at different times). Also note that temporal and spatial correlations cannot be studied in complete isolation in the case of smoothly varying signals, such as membrane potentials, as the cross-correlation between the activity of two presynaptic neurons always has a characteristic temporal profile. While it is possible to consider a presynaptic neuronal population completely lacking spatial correlations (i.e. independent presynaptic neurons, as in Figure 1C), having a population with only spatial but not temporal correlations would require the membrane potentials of the individual neurons to be temporally white noise, which is so far removed from reality that we did not consider this case worth pursuing.

More specifically, the timescale of temporal correlations (auto-correlations) in the model depends on the transition rates of the switching component, $\Omega_{\mathrm{on}}$ and $\Omega_{\mathrm{off}}$, and the presynaptic time constant of the mOU component, $\tau$, such that cells are auto-correlated as long as , , and . Spatial correlations (cross-correlations between different presynaptic neurons) also emerge from both components. First, the pairs of presynaptic neurons corresponding to the non-zero off-diagonal elements of the matrix $\mathbf{Q}$ of the mOU component become correlated. Second, synchronous state transitions during state switching in the presynaptic ensemble introduce positive correlations. Importantly, in both the temporal and spatial domains, while the mOU process can only introduce second-order correlations (i.e. it makes membrane potentials be distributed according to a multivariate normal), the switching process introduces higher order correlations (such that membrane potentials are no longer normally distributed). These higher order correlations are stronger when the effect of state-switching is large relative to the membrane potential fluctuations within a single state.
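One simple way to see how state switching takes membrane potentials away from normality is to look at the stationary distribution of a single neuron. As a sketch under simplifying assumptions (ours, not the paper's analysis), treat it as a mixture of two Gaussians with equal within-state variance whose means differ by the state-dependent shift in resting potential. The excess kurtosis of this mixture has a closed form; it is zero for a normal distribution and moves away from zero as the shift grows relative to the within-state fluctuations.

```python
def mixture_excess_kurtosis(state_shift, sigma, p_active):
    """Excess kurtosis of u = y + state_shift * z.

    y ~ Normal(0, sigma^2) models within-state fluctuations (mV),
    z ~ Bernoulli(p_active) models the global state, independent of y.
    All names are illustrative; this is a marginal, single-neuron statistic.
    """
    pq = p_active * (1.0 - p_active)  # variance of the Bernoulli state variable
    variance = sigma**2 + pq * state_shift**2
    fourth_moment = (
        3.0 * sigma**4
        + 6.0 * sigma**2 * pq * state_shift**2
        + pq * (1.0 - 3.0 * pq) * state_shift**4
    )
    return fourth_moment / variance**2 - 3.0


if __name__ == "__main__":
    for shift in (0.0, 1.0, 3.0, 10.0):
        print(shift, round(mixture_excess_kurtosis(shift, 1.0, 0.5), 3))
```

This captures only a marginal signature of non-normality. The higher order dependencies between neurons discussed above arise because all neurons share the same state variable.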

Finally, instead of modeling the detailed dynamics of action potential generation, we model spiking phenomenologically by introducing a single discrete variable, $s_{i,t}$, for each presynaptic neuron that represents the number of spikes neuron $i$ fires in time step $t$. (Note that in the limit $\delta t \to 0$ this variable becomes binary, i.e. there can never be more than one spike fired in a time window.) Spiking in each cell depends only on the membrane potential of that cell, and follows an inhomogeneous Poisson process with the firing rate, $g(u_{i,t})$, being an exponential function of the membrane potential (Gerstner and Kistler, 2002):

| $s_{i,t} \sim \mathrm{Poisson}\!\left(\delta t\; g(u_{i,t})\right), \qquad g(u) = g_0\, e^{\beta u}$ | (8) |