Abstract

Background

Real-time automated continuous sampling of electronic medical record data may expeditiously identify patients at risk for death and enable prompt life-saving interventions. We hypothesized that a real-time electronic medical record-based alert could identify hospitalized patients at risk for mortality.

Methods

An automated alert was developed and implemented to continuously sample electronic medical record data and trigger when at least two of four systemic inflammatory response syndrome (SIRS) criteria plus at least one of 14 acute organ dysfunction (OD) parameters were detected. The SIRS/OD alert was applied in real time to 312,214 patients in 24 hospitals and analyzed in two phases: training and validation datasets.

Results

In the training phase, 29,317 (18.8%) triggered the alert and 5.2% of such patients died whereas only 0.2% without the alert died (unadjusted odds ratio 30.1; 95% confidence interval [95%CI] 26.1, 34.5; P<0.0001). In the validation phase, the sensitivity, specificity, area under curve (AUC), positive and negative likelihood ratios for predicting mortality were 0.86, 0.82, 0.84, 4.9, and 0.16, respectively. Multivariate Cox-proportional hazard regression model revealed greater hospital mortality when the alert was triggered (adjusted Hazards Ratio 4.0; 95%CI 3.3, 4.9; P<0.0001). Triggering the alert was associated with additional hospitalization days (+3.0 days) and ventilator days (+1.6 days; P<0.0001).

Conclusion

An automated alert system that continuously samples electronic medical record data can be implemented, has excellent test characteristics, and can assist in the real-time identification of hospitalized patients at risk for death.

Keywords: sepsis, critical illness, electronic health records, forecasting, mortality

INTRODUCTION

Sepsis is a major cause of mortality in hospitalized patients and requires prompt identification and treatment1. Prompt intervention is crucial considering that studies have shown that mortality from septic shock is increased by 7.6% for every hour of delayed treatment initiation following the onset of hypotension 2. Conventionally, providers perform risk evaluations at the bedside and make interventions based on their subjective understanding which then informs multiple subsequent aspects of clinical decision-making1,3. A variety of risk assessment tools are currently in use to detect mortality in hospitalized patients 4-9. Continuous monitoring for early warning scores (EWS) and other acuity scores such as modified-EWS and Rothman index are utilized to identify adverse trends and physiological deterioration10. Health systems also utilize risk-adjustment models, but mostly retrospectively for quality of care assessments11. Acute Physiology and Chronic Health Evaluation (APACHE) scores are widely used to identify individual risk after the first 24 hours of admission to the intensive care unit (ICU) but are limited in their application to critical care patients and dependent on information from the first 24 hours only12,13. Alternatively, diagnosis-specific triage has been adopted for early identification and treatment for high-risk conditions such as sepsis or delirium14,15.

Despite such available tools, there have not been any reports of tools applied in real time that continuously sample physiological and laboratory information from electronic medical records and synthesize a composite signal that alerts the clinician at the bedside to possible clinical deterioration. In the era of big data and predictive analytics, however, real-time automated continuous sampling and analysis of electronic medical record data may allow early identification of patients at risk for sepsis and death and provide an opportunity for expeditious interventions aimed at reducing sepsis-related mortality. A recent retrospective analysis that involved development of a new prediction score (TREWscore) analyzed historical physiological and laboratory data collected in the ICU and demonstrated the ability to better predict severe sepsis than EWS16. Nevertheless, to our knowledge, an implementation gap remains: there are no automated tools that continuously sample and screen data derived from the electronic medical record systems of hospitalized patients and warn providers of impending mortality.

We report the successful implementation of real-time automated continuous sampling and analysis of electronic medical record data across 24 hospital facilities that allowed early identification of patients at high risk for hospital mortality. We developed this real-time alert to detect the presence of both systemic inflammatory response syndrome and acute organ dysfunction with the rationale that the need for ≥ 2 systemic inflammatory response syndrome criteria alone excludes one in eight otherwise similar patients with substantial mortality17. We hypothesized that a real-time electronic medical record-based alert that automatically and continuously samples electronic medical record data and utilizes criteria derived from systemic inflammatory response syndrome and acute organ dysfunction could enhance the identification of hospitalized patients at high risk for mortality. Such an alert could facilitate real-time risk stratification, appropriate resource allocation strategies, and aggressive management aimed at reducing mortality.

METHODS

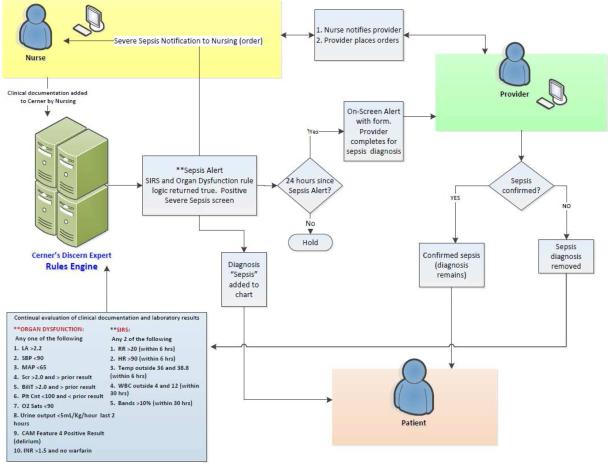

The SIRS/OD alert logic was developed at Banner Health using Cerner Discern Expert® (Cerner Corporation, North Kansas City, MO). The SIRS/OD alert logic would trigger an alert in the electronic medical record whenever the nurse or providing physician accessed the patient's chart (figures 1 and 2). This study is a retrospective assessment of the data that were collected and was approved by the Banner Health Institutional Review Board, including a waiver for informed consent (IRB # 05-14-0014). The data from 312,214 consecutive hospitalized patients from 24 hospitals that were subjected to the SIRS/OD alert logic from April 29, 2011 until June 30, 2013 were analyzed. We divided the data into two equal halves (a training and a validation dataset) of 156,107 patients each.

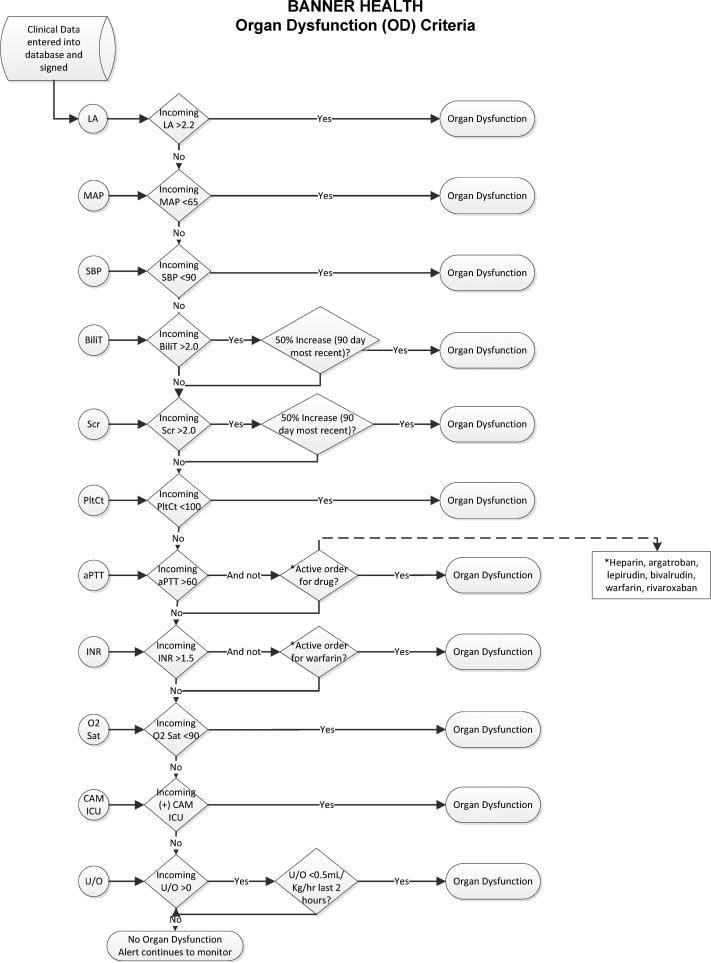

Figure 1.

Organ dysfunction and systemic inflammatory response syndrome decision flowcharts (figure 1; panel A) and systemic inflammatory response syndrome criteria (figure 1; panel B) that were the basis of the Cerner-based SIRS/OD logic. This logic ran in real time in the Cerner data-warehouse and alerted the providers as shown in figure 2. LA = serum lactic acid levels; MAP = mean arterial pressure; SBP = systolic blood pressure; Bili = serum bilirubin levels; Scr = serum creatinine level; PltCt = blood platelet count; aPTT = activated partial thromboplastin time; INR = International Normalized Ratio; O2 sat = oxygen saturation by pulse oximetry; CAM-ICU = Confusion Assessment Method in Intensive Care Unit patients; U/O = urine output charted in the electronic medical record.

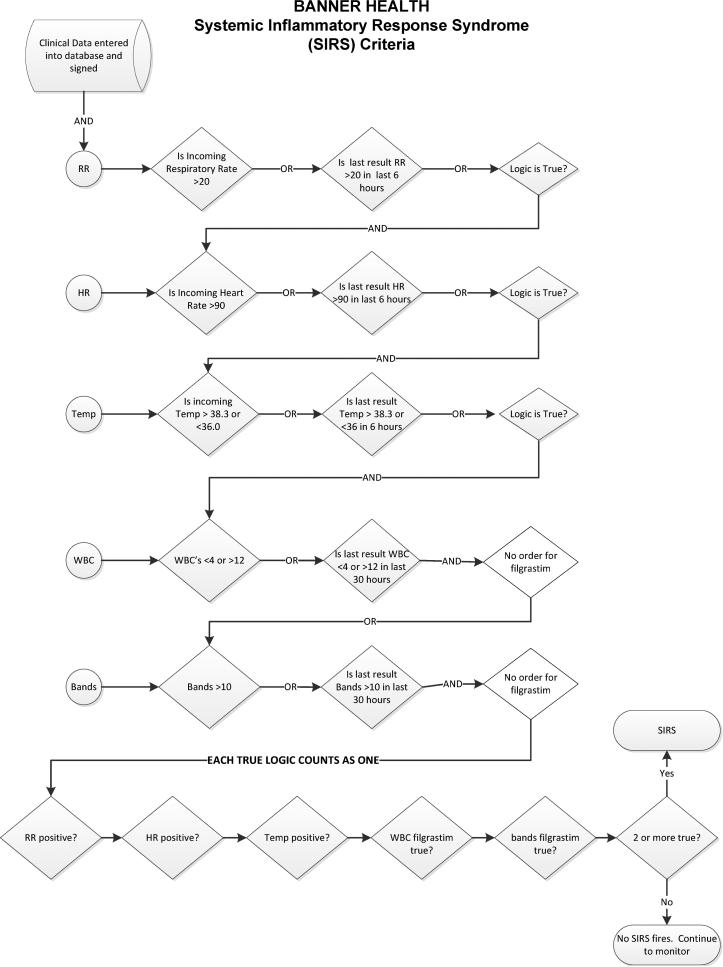

Figure 2.

Schematic diagram of how the real-time electronic medical record-based alert would activate and inform the providers of severe sepsis and increased risk for death in their patients.

The SIRS/OD alert logic and system are outlined in figures 1 and 2, respectively. More detailed information on the SIRS/OD logic is provided in the online supplement. This screening system was based upon the identification of three events, two independent and one correlating, from data entered into the electronic medical record. The two independent elements are the “systemic inflammatory response syndrome event”, defined as detection of two traditional systemic inflammatory response syndrome criteria occurring within 6 hours of each other (with the exception of WBC-related values, for which a 30-hour timeframe was permitted), and the “acute organ dysfunction event”, defined as detection of any acute organ dysfunction as defined by strict criteria (figure 1; panel B). The final (“correlating”) event is an evaluation of the temporal association of the two prior elements, requiring that the systemic inflammatory response syndrome and acute organ dysfunction events occur within 8 hours of each other. If all of these conditions were met, then the SIRS/OD alert was triggered (figure 1A and 1B). The steps we undertook to mitigate false alert firings are described in the supplement.
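The three-event logic described above can be illustrated with a minimal Python sketch. It is not the Cerner Discern Expert rule used in the study. The function names, the criterion labels (such as `"wbc"`), the choice to date a SIRS event at the later of its two findings, and the example timestamps are all assumptions made for illustration. Only the 6-hour, 30-hour, and 8-hour windows come from the text.

```python
from datetime import datetime, timedelta
from itertools import combinations

SIRS_WINDOW = timedelta(hours=6)         # two SIRS criteria within 6 hours (from the text)
WBC_WINDOW = timedelta(hours=30)         # wider window when a WBC value is involved
CORRELATION_WINDOW = timedelta(hours=8)  # SIRS event vs organ dysfunction event


def sirs_event_times(observations):
    """Return the times at which a hypothetical SIRS event is satisfied.

    observations: list of (timestamp, criterion) tuples, one per abnormal
    SIRS finding. Criterion labels are illustrative, not system identifiers.
    """
    events = []
    for (t1, c1), (t2, c2) in combinations(observations, 2):
        if c1 == c2:
            continue  # two distinct criteria are required
        window = WBC_WINDOW if "wbc" in (c1, c2) else SIRS_WINDOW
        if abs(t1 - t2) <= window:
            # Assumption: the event is dated at the later of the two findings.
            events.append(max(t1, t2))
    return sorted(events)


def alert_triggers(sirs_observations, organ_dysfunction_times):
    """True if any SIRS event and any organ dysfunction event lie within 8 hours."""
    return any(
        abs(s - od) <= CORRELATION_WINDOW
        for s in sirs_event_times(sirs_observations)
        for od in organ_dysfunction_times
    )


# Hypothetical patient: two SIRS findings 4 hours apart, organ dysfunction 6 hours later.
t0 = datetime(2012, 1, 1, 8, 0)
sirs = [(t0, "heart_rate"), (t0 + timedelta(hours=4), "temperature")]
print(alert_triggers(sirs, [t0 + timedelta(hours=10)]))
```

In this sketch the correlation step is evaluated over every pair of events, so the order in which the SIRS and organ dysfunction events occur does not matter, which matches the "within 8 hours of each other" wording.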

The alert could fire in patients while in the emergency department or in those admitted to the hospital inpatient or ICU setting. Once the alert was triggered, providers were expected to respond to confirm or refute the presence of severe sepsis. If the providers confirmed the alert, or failed to respond to it, the alert would not trigger again during that hospital stay. If the providers refuted the presence of severe sepsis, the alert could trigger again after a 48-hour latency period if the trigger criteria were met again. We evaluated in-hospital mortality, length of stay (LOS), and ventilator days from the Cerner data-warehouse and hospital discharge summary. We used the billing International Classification of Diseases, Ninth Revision (ICD-9) diagnoses to identify patients who met the “Angus implementation” sepsis criteria, which require both ICD-9 codes for severe sepsis or septic shock and the presence of organ dysfunction. Organ dysfunction was determined by combining various comorbid conditions, extracting up to 13 ICD-9 codes (including the principal and secondary diagnoses) from the Cerner data-warehouse using Clinical Classification Software (CCS2014; AHRQ 2014) compatible with STATA18,19. Severe sepsis was derived from the Cerner data-warehouse and registered as present if there was sepsis-induced tissue hypoperfusion or organ dysfunction, as described in the online supplement.
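The re-firing rule described above (no repeat after a confirmed or unanswered alert, and a 48-hour latency after a refuted alert) can be sketched as a small decision function. This is an illustration only. The function name, the response labels, and the assumption that the 48 hours are counted from the time of refutation are not taken from the study's implementation.

```python
from datetime import datetime, timedelta
from typing import Optional

REFUTE_LATENCY = timedelta(hours=48)  # latency after a refuted alert (from the text)


def may_fire_again(now: datetime,
                   last_response: Optional[str],
                   refuted_at: Optional[datetime] = None) -> bool:
    """Decide whether the alert may fire again during the same hospital stay.

    Applies only after a first alert has already fired.
    last_response: "confirmed", "refuted", or None when no provider responded.
    These labels are illustrative, not values from the study's system.
    """
    if last_response == "refuted" and refuted_at is not None:
        # Assumption: the 48-hour latency is counted from the refutation time.
        return now - refuted_at >= REFUTE_LATENCY
    # Confirmed or unanswered alerts suppress re-firing for the rest of the stay.
    return False


# Hypothetical timeline: alert refuted at 08:00, criteria met again later.
refuted = datetime(2012, 1, 1, 8, 0)
print(may_fire_again(refuted + timedelta(hours=47), "refuted", refuted))
print(may_fire_again(refuted + timedelta(hours=48), "refuted", refuted))
```

The function only gates re-firing; the trigger criteria themselves would still need to be met again before a second alert is raised.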

Statistical analysis

Continuous variables were reported as means and standard deviations and categorical variables as percentages. Categorical variables were compared using Chi-square testing and continuous variables using t-tests or nonparametric equivalents as appropriate. Standard formulas were used to calculate test characteristics such as sensitivity, specificity, and positive and negative likelihood ratios. To describe the frequency of outcomes for the patients who triggered the SIRS/OD alert and those who did not, we constructed Kaplan-Meier cumulative-event curves for all-cause hospital mortality. Data were censored at the time of hospital discharge. The log-rank test was used to compare differences between the two groups. A multivariate Cox proportional hazards regression model was used to determine whether the SIRS/OD alert was associated with an increased risk of all-cause hospital mortality. Hazard ratios (HR) and 95% confidence intervals (CI) were calculated for the association between end points and baseline characteristics. Additionally, from the list of predictors and potential confounders, simple logistic regression analysis was performed to identify significant determinants of in-hospital mortality. Subsequently, the significant determining variables were entered into a multivariate forward stepwise logistic regression model with in-hospital mortality as the dependent variable. Area under the curve for the models and the Hosmer-Lemeshow statistic for goodness of fit were calculated and reported. For outcomes that were continuous variables, such as length of hospital stay or ventilator days, generalized linear models were built with the time variable as the dependent variable and with determining factors and covariates as independent variables. A two-tailed p < 0·05 was considered significant. Statistical analyses were conducted using SPSS v23 (IBM Corporation; Armonk, NY) and STATA 14·0 (College Station, TX).
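As a worked illustration of the standard formulas for test characteristics, the sketch below applies them to the validation-phase mortality counts reported in table 1 (1,684 deaths among 29,029 patients with the alert; 264 deaths among 127,078 patients without it). The function name and the dictionary keys are illustrative choices, not part of the study's analysis code.

```python
def diagnostic_characteristics(tp, fn, fp, tn):
    """Sensitivity, specificity, and likelihood ratios from a 2x2 table."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "lr_pos": sensitivity / (1 - specificity),
        "lr_neg": (1 - sensitivity) / specificity,
    }


# Validation-phase counts taken from table 1 (alert status vs in-hospital death).
tp = 1684            # alert triggered, died
fn = 264             # no alert, died
fp = 29029 - 1684    # alert triggered, survived
tn = 127078 - 264    # no alert, survived

result = diagnostic_characteristics(tp, fn, fp, tn)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

Rounded to the precision used in the paper, these counts give a sensitivity of 0·86, a specificity of 0·82, a positive likelihood ratio of 4·9, and a negative likelihood ratio of 0·16, in line with the values reported for the validation phase.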

RESULTS

Patient characteristics for those who triggered or did not trigger a SIRS/OD alert are provided in table 1. In general, patients who triggered the SIRS/OD alert were older and more likely to be male, to have cancer, to have undergone coronary artery bypass grafting, to have suffered trauma, or to be labelled with sepsis by Angus criteria. Patients who triggered the alert during their hospital stay were also more likely to suffer from chronic medical conditions (table 1). Crude hospital mortality, length of hospital stay, and number of ventilator days were greater in the group who triggered the SIRS/OD alert during their hospital stay than in those who did not trigger the alert (table 1).

Table 1.

Patient characteristics and outcomes in the training and validation datasets

| | Training Phase No ALERT (n=126,790) | Training Phase ALERT (n=29,317) | Validation Phase No ALERT (n=127,078) | Validation Phase ALERT (n=29,029) |
|---|---|---|---|---|
| CHARACTERISTICS | | | | |
| Age | 55·2 ± 21·9 | 63·7 ± 18·1* | 55·3 ± 22·0 | 64·0 ± 18·1* |
| Female sex | 81,153 (64%) | 15,086 (51·5%)* | 81,062 (63·8%) | 14,868 (51·2%)* |
| Cancer diagnosis | 10,835 (8·5%) | 4,886 (16·7%)* | 10,681 (8·4%) | 4,508 (15·5%)* |
| CABG | 438 (0·8%) | 500 (1·7%)* | 461 (0·4%) | 418 (1·4%)* |
| APACHE score | 46·4 ± 20·6 | 63·8 ± 27·5* | 46·1 ± 20·1 | 65·0 ± 27·9*† |
| Trauma | 5,520 (4·4%) | 1,476 (5·0%)* | 5,990 (4·7%) | 1,384 (4·8%) |
| Cardiac disease | 28,819 (22·7%) | 8,901 (30·4%)* | 27,983 (22·0%) | 8,469 (29·2%)* |
| Cerebrovascular disease | 5,833 (4·6%) | 1,147 (3·9%) | 5,649 (4·5%) | 1,183 (4·1%) |
| COPD | 7,402 (5·8%) | 3,136 (10·7%)* | 8,032 (6·3%) | 3,579 (12·3%)* |
| CKD | 5,577 (4·4%) | 2,167 (7·4%)* | 5,963 (4·7%) | 2,035 (7·0%)* |
| OUTCOMES | | | | |
| ICU length of stay | 1·4 ± 1·4 | 3·4 ± 4·2* | 1·3 ± 1·3 | 3·4 ± 4·2* |
| Hospital length of stay | 3·5 ± 4·0 | 6·7 ± 6·9* | 3·5 ± 3·6 | 6·4 ± 6·3*† |
| Number of Ventilator days | 1·9 ± 1·4 | 3·8 ± 3·9* | 1·7 ± 1·2 | 3·7 ± 3·7 |
| Angus criteria | 6,242 (4·9%) | 7,856 (26·8%)* | 6,384 (5·0%) | 7,888 (27·2%)* |
| Severe Sepsis | 0 (0%) | 21,829 (74·5%)* | 8838 (9·8%)† | 20,191 (69·6%)*† |
| Mortality | 233 (0·2%) | 1,539 (5·2%)* | 264 (0·2%) | 1,684 (5·8%)* |

\* different within phases

† different across phases when comparison is made by ALERT status

CABG=coronary artery bypass grafting; COPD= chronic obstructive pulmonary disease; CKD=chronic kidney disease

In the training phase, 9,361 alerts (31·9% of all alerts) triggered when the patient was in an emergency department. Similarly, in the validation phase, 10,357 alerts (35·7%) triggered when the patient was in the emergency department. Of the total alerts, 80·2% were first noted within 48 hours of admission. In the training phase, the time interval between admission and alert was a median of 21·5 hours (interquartile range [IQR] 5·2, 51·3 hours). Similarly, in the validation phase, the time interval between admission and alert was a median of 19·5 hours (IQR 4·5, 50·3 hours).

Mortality

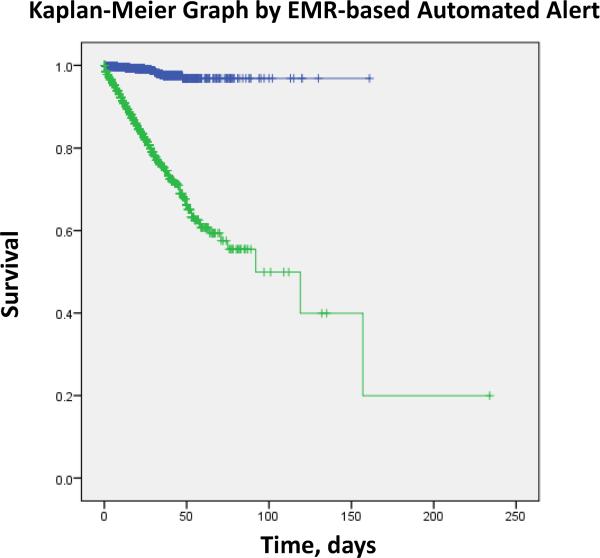

In both the training and validation phases, in-hospital mortality was much greater in the patients with the SIRS/OD alert than in those without the alert (table 1). The Kaplan-Meier analysis shows that patients with a SIRS/OD alert during their hospital stay had increased mortality when compared with the group without the alert (Figure 3).

Figure 3.

Kaplan-Meier curves across the electronic medical records-based real-time alert during the hospital stay. The systemic inflammatory response syndrome and organ dysfunction based electronic medical record alert (SIRS/OD alert) triggered in hospitalized patients (green) or did not trigger (blue). The probability of survival was lower for patients with SIRS/OD alert trigger during their hospital stay than for patients without such an alert (P<0·0001 by the log-rank test).

Table 2 shows univariate and multivariate stepwise hierarchical logistic regression of in-hospital mortality for both the training and validation datasets. Compared to the group that did not trigger the SIRS/OD alert, the group that triggered the alert manifested higher mortality after adjusting for the significant confounders (table 2). The strength of association between SIRS/OD alert and in-hospital mortality was similar in both the training and validation datasets (table 2). These results were similar to the Cox-adjusted multivariate model, which showed a higher in-hospital mortality in patients who triggered the SIRS/OD alert versus those who did not trigger such an alert (table 3). The probability of survival was lower for patients with SIRS/OD alert trigger during their hospital stay than for patients without such an alert (P<0·0001 by the log-rank test; figure 3).

Table 2.

Univariate and multivariate regression of Mortality predicted by the SIRS/OD alert

| | DERIVATION SET Odds ratio (95% CI) | VALIDATION SET Odds ratio (95% CI) |
|---|---|---|
| UNIVARIATE REGRESSIONS | | |
| Age | 1·03 (1·03, 1·04)*** | 1·04 (1·03, 1·04)*** |
| Male sex | 1·9 (1·7, 2·1)*** | 1·9 (1·7, 2·1)*** |
| SIRS/OD Alert | 30·1 (26·1, 34·5)*** | 29·6 (25·9, 33·7)*** |
| Cancer diagnosis | 2·4 (2·2, 2·7)*** | 2·4 (2·1, 2·7)*** |
| Coronary artery bypass graft surgery | 1·2 (0·7, 2·1) | 2·6 (1·8, 3·8)*** |
| APACHE score | 1·05 (1·05, 1·05)*** | 1·05 (1·04, 1·05)*** |
| Trauma | 1·3 (1·1, 1·6)* | 1·3 (1·1, 1·6)** |
| Cerebrovascular disease | 2·0 (1·7, 2·4)*** | 2·3 (2·0, 2·7)*** |
| Chronic kidney disease | 1·3 (1·0, 1·5)* | 0·8 (0·6, 1·0) |
| Cardiac disease | 1·8 (1·6, 1·9)*** | 2·0 (1·8, 2·1)*** |
| Chronic obstructive pulmonary disease | 0·6 (0·5, 0·8)*** | 0·6 (0·5, 0·8)*** |
| MODEL 1§ | (n=156,100) | (n=156,106) |
| SIRS/OD Alert | 26·1 (22·7, 30·0)*** | 25·8 (22·6, 29·5)*** |
| MODEL 1 AUC | 0·90 | 0·90 |
| MODEL 1 Hosmer-Lemeshow statistic | 0·028* | 0·005** |
| MODEL 2‡ | (n=18,643) | (n=18,658) |
| SIRS/OD Alert | 5·1 (3·9, 6·8)*** | 4·5 (3·5, 5·8)*** |
| MODEL 2 AUC | 0·88 | 0·87 |
| MODEL 2 Hosmer-Lemeshow statistic | 0·02* | 0·0002** |

§ Adjusted for age, sex, cancer diagnosis, CABG, trauma, cardiac disease, chronic kidney disease, cerebrovascular disease, and chronic obstructive pulmonary disease.

‡ Adjusted for age, sex, cancer diagnosis, CABG, trauma, cardiac disease, chronic kidney disease, cerebrovascular disease, chronic obstructive pulmonary disease, and APACHE score.

SIRS/OD alert = alert logic using systemic inflammatory response syndrome and organ dysfunction EMR-based real-time alert logic

95%CI= 95% confidence interval

\* P<0·05

\*\* P<0·01

\*\*\* P<0·0001
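The unadjusted odds ratio for the SIRS/OD alert in table 2 can be approximately reproduced from the training-phase mortality counts in table 1. The sketch below uses the textbook Wald interval on the log odds ratio. That choice is an assumption, because the authors' exact interval method is not stated, so the resulting bounds may differ slightly from the published ones. The function name is illustrative.

```python
import math


def odds_ratio_wald(a, b, c, d, z=1.96):
    """Odds ratio with a Wald confidence interval from a 2x2 table.

    a: alert and died      b: alert and survived
    c: no alert and died   d: no alert and survived
    """
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Training-phase counts from table 1.
a = 1539             # alert, died
b = 29317 - 1539     # alert, survived
c = 233              # no alert, died
d = 126790 - 233     # no alert, survived

or_, lo, hi = odds_ratio_wald(a, b, c, d)
print(f"OR {or_:.1f} (95% CI {lo:.1f}, {hi:.1f})")
```

The point estimate rounds to 30·1, matching the univariate value in table 2, and the Wald bounds fall close to the reported interval of 26·1 to 34·5.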

Table 3.

Cox proportional hazards model of mortality predictors

| | Total cohort n=36,895 HR (95%CI) | P value | Training n=18,452 HR (95%CI) | P value | Validation n=18,443 HR (95%CI) | P value |
|---|---|---|---|---|---|---|
| Age | 1·01 (1·01, 1·01) | <0·0001 | 1·01 (1·00, 1·02) | <0·0001 | 1·01 (1·00, 1·01) | <0·0001 |
| Male sex | 0·99 (0·9, 1·1) | 0·77 | 0·99 (0·9, 1·1) | 0·96 | 0·97 (0·9, 1·1) | 0·60 |
| SIRS/OD Alert | 4·0 (3·3, 4·9) | <0·0001 | 4·4 (3·3, 6·0) | <0·0001 | 3·7 (2·8, 4·9) | <0·0001 |
| Cancer | 1·4 (1·3, 1·5) | <0·0001 | 1·3 (1·2, 1·8) | <0·0001 | 1·4 (1·2, 1·6) | <0·0001 |
| CVD | 1·1 (1·0, 1·2) | 0·005 | 1·2 (1·0, 1·3) | 0·015 | 1·1 (0·9, 1·2) | 0·13 |
| APACHE score | 1·03 (1·03, 1·03) | <0·0001 | 1·03 (1·03, 1·03) | <0·0001 | 1·03 (1·03, 1·03) | <0·0001 |
| Trauma | 1·04 (0·88, 1·23) | 0·69 | 1·1 (0·8, 1·4) | 0·62 | 1·02 (0·80, 1·27) | 0·89 |
| Chronic kidney disease | 0·94 (0·78, 1·14) | 0·55 | 1·2 (0·9, 1·5) | 0·24 | 0·7 (0·5, 0·9) | 0·04 |
| COPD | 0·71 (0·57, 0·90) | 0·004 | 0·68 (0·47, 0·97) | 0·03 | 0·75 (0·56, 1·01) | 0·057 |
| CVA | 1·6 (1·4, 1·8) | <0·0001 | 1·5 (1·2, 1·8) | <0·0001 | 1·7 (1·4, 2·0) | <0·0001 |

HR=hazard ratio; 95%CI=95% confidence interval; SIRS/OD alert = alert logic using systemic inflammatory response syndrome and organ dysfunction EMR-based real-time alert logic; COPD= Chronic obstructive pulmonary disease; CVD=Cardiovascular disease; CVA=Cerebrovascular disease

Predicting mortality and severe sepsis

In both the training and validation phases, the test characteristics for predicting mortality and severe sepsis were excellent (table 4). Considering a pre-test odds for mortality of 0·012 for the validation cohort, and a negative likelihood ratio of 0·16 for the SIRS/OD alert in predicting death, the post-test probability was 0·002. This could be interpreted to indicate that only 0·2% of hospitalized patients who do not trigger the SIRS/OD logic will die during that hospitalization. Similarly, the post-test probability for predicting severe sepsis when the SIRS/OD alert was not triggered was near 0.
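The post-test probability quoted above follows from the usual conversion: post-test odds equal pre-test odds multiplied by the likelihood ratio, and probability equals odds divided by one plus odds. A minimal sketch using the two figures from the text (pre-test odds 0·012 and negative likelihood ratio 0·16) is shown below; the function name is illustrative.

```python
def post_test_probability(pre_test_odds, likelihood_ratio):
    """Convert pre-test odds and a likelihood ratio into a post-test probability."""
    post_test_odds = pre_test_odds * likelihood_ratio
    return post_test_odds / (1 + post_test_odds)


# Values quoted in the text for mortality in the validation cohort.
probability = post_test_probability(pre_test_odds=0.012, likelihood_ratio=0.16)
print(f"{probability:.3f}")
```

With these inputs the post-test odds are 0·00192, which corresponds to a probability of about 0·002, or roughly 0·2% of patients who do not trigger the alert.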

Table 4.

Test characteristics of SIRS/OD alert to predict severe sepsis and mortality

| | DERIVATION PHASE B (95%CI) | VALIDATION PHASE B (95%CI) |
|---|---|---|
| Severe Sepsis | | |
| Sensitivity | 1·0 | 0·99 |
| Specificity | 0·94 | 0·94 |
| Area under the curve | 1·0 | 0·96 |
| LR+ | 17·9 | 15·2 |
| LR− | 0 | 0·01 |
| Mortality | | |
| Sensitivity | 0·87 | 0·86 |
| Specificity | 0·82 | 0·82 |
| Area under the curve | 0·84 | 0·84 |
| LR+ | 4·8 | 4·9 |
| LR− | 0·16 | 0·16 |

LR+ = positive likelihood ratio of the SIRS/OD alert as a test; LR− = negative likelihood ratio of the SIRS/OD alert as a test.

Sensitivity analysis

Sensitivity analysis was undertaken to determine the presence of a time effect by considering the time elapsed from the start date of implementation of the SIRS/OD alert as a variable. Such analysis did not materially change the strength of the association between the SIRS/OD alert and in-hospital mortality (adjusted HR 4·0, 95%CI 3·3, 4·9; P<0·0001). Interestingly, we noticed a reduction in mortality with time in years (adjusted HR 0·88, 95%CI 0·81, 0·97; P=0·008). To additionally account for such time-based effects, we selected patients randomly over the study period rather than using the sequential initial training phase followed by the validation phase. Such sensitivity analysis did not materially change the strength of the association between the SIRS/OD alert and mortality. Moreover, when analysis was restricted to the 37,301 patients (n=18,658 in validation phase) in whom the APACHE variable was available, there was again no material change in the results.

Duration of hospital stay and mechanical ventilation

In generalized linear models, in the training phase, after adjusting for various confounders, the triggering of the SIRS/OD alert was associated with a longer hospital stay and more ventilator days when compared to those who did not trigger the alert (table 5). Similarly, in the validation phase, the triggering of the SIRS/OD alert was associated with a longer hospital stay and more ventilator days than in patients who did not trigger the alert (table 5).

Table 5.

Generalized Linear Models for assessing association between alert and hospital length of stay and ventilator days §

| | DERIVATION SET B (95%CI) n=18,643 | VALIDATION SET B (95%CI) n=18,658 |
|---|---|---|
| Hospital length of stay | | |
| SIRS/OD alert | + 2·8 (2·6, 3·0)*** | + 3·0 (2·7, 3·2)*** |
| Ventilator days | | |
| SIRS/OD alert | + 1·5 (1·3, 1·7)*** | + 1·6 (1·4, 1·8)*** |

§ Adjusted for age, sex, cancer diagnosis, CABG, APACHE score, trauma, cardiac disease, chronic kidney disease, cerebrovascular disease, and chronic obstructive pulmonary disease.

B= coefficient; 95%CI= 95% confidence interval

\* P<0·05;

\*\* P<0·01;

\*\*\* P<0·0001

SIRS/OD alert = alert logic using systemic inflammatory response syndrome and organ dysfunction EMR-based real-time alert logic

DISCUSSION

We have reported the successful implementation of predictive analytics involving a real-time SIRS/OD alert across a large 24-hospital healthcare system. The alert automatically and continuously sampled and analyzed electronic medical record data and alerted providers to hospitalized patients identified to be at increased risk for death. When triggered, this SIRS/OD alert predicted in-hospital mortality and severe sepsis with excellent test characteristics. Moreover, the SIRS/OD alert identified, in real time, a sub-population of patients at high risk for greater length of hospital stay and duration of mechanical ventilation.

Although the SIRS/OD alert uses many variables similar to those of other acuity measures such as the APACHE score, it is meant to alert the provider in real time and is not meant to replace these indicators. Also, the SIRS/OD alert can be reliably applied across all hospital settings, including outside the ICU, and therefore differs from critical care-specific outcome and predictive algorithms such as APACHE13. Previously, Lagu and colleagues demonstrated that administrative claims data have discriminant characteristics similar to other conventional models (such as APACHE-II) to predict mortality, with an AUC of 0·69, and can be used in patients outside the ICU20. Others have shown 21 that the Simplified Acute Physiology Score II (SAPS II) and the 24-hour Mortality Probability Model II (MPM II) are able to predict mortality with an AUC of 0·79. Our work builds upon such work by making such data available to clinicians in real time rather than retrospectively, and we believe that our iterative continuous sampling by the SIRS/OD logic in the electronic medical record led to a higher AUC for predicting in-hospital mortality and severe sepsis. To our knowledge, our study is the first to report real-world implementation of a real-time alert with such test characteristics. Although this is a retrospective report of a quality improvement initiative that was implemented in 2011, we believe that our report is unique and adds to the growing literature on universal risk prediction in hospitalized patients22.

A significant majority of the hospital mortality (87%) was observed in the population on whom the alert triggered. Moreover, among the patients who subsequently died, the majority of alerts were triggered early (< 48 hours) in the hospital course, with an average of 5·3 days from the time the alert triggered to death. It follows that the SIRS/OD alert could possibly provide a time window for therapeutic intervention. Moreover, the post-test probability for death was 0·002, which could be interpreted to indicate that only 0·2% of hospitalized patients who do not trigger the SIRS/OD logic will die during that hospitalization. The test characteristics of the SIRS/OD alert for predicting severe sepsis in the validation dataset were excellent and better than those in previous reports 16,20. Such prediction capabilities and automation make this an effective early warning tool.

There are limitations to our study. We recognize that the Hosmer-Lemeshow goodness of fit (GOF) test was significant, implying that the model may not fit the data well, but this test is known to fail with datasets of 50,000 patients or greater 23. Also, the AUC for the receiver operating characteristics was excellent (> 0·85), the R² was reasonable, and both the training and validation datasets yielded similar results, suggesting that the SIRS/OD alert is a good predictor23.

Risk prediction and stratification tools have demonstrated utility in improving clinical, quality, and financial metrics among various populations, including outcomes related to disease progression, treatment response, ICU transfer, LOS, and survival24-28. We recognize that, for lack of a parallel control group, this study does not demonstrate that the implementation of the electronic medical record-based safety alert led to a reduction in mortality. Moreover, only 1 in 4 patients on whom the SIRS/OD alert triggered were reportedly septic by Angus implementation criteria. This could mean that the SIRS/OD criteria were much more sensitive and/or that the clinical diagnoses of sepsis and severe sepsis were under-reported. Such greater sensitivity would, however, be preferable considering that conventional definitions of sepsis, such as the need for ≥ 2 systemic inflammatory response syndrome criteria alone, were recently shown to miss one in eight otherwise similar patients with substantial mortality17. It follows that such a sensitive real-time SIRS/OD alert identified the patients at greater risk for hospital mortality, which is the primary intent of our predictive analytics. Nevertheless, another possible explanation for the discrepancy between the SIRS/OD alert and the Angus implementation criteria could be that we did not factor in the effect of therapeutic interventions at each sampling data point in the electronic medical records. Conceivably, such interventions could have aborted the development of sepsis that met the “Angus implementation” definition and led to a systematic over-estimation of sepsis by the SIRS/OD alert. Moreover, adjustment for covariates reduced the strength of association between the SIRS/OD trigger and mortality. Our study was not designed to compare our alert to the Angus criteria for sepsis but rather to serve as an early warning for death.

Many hospitals are currently using systemic inflammatory response syndrome plus acute organ dysfunction plus clinical judgment for infections in their attempts at early sepsis identification. We therefore believe that our criteria and algorithm provide evidence for effective implementation of automated and continuous surveillance that goes beyond disease-specific diagnosis to a broader risk category for all inpatients at risk for death. Another limitation of our study was that this was a retrospective study of a quality improvement initiative meant to assist clinicians in detecting at-risk populations. However, although the validity of the SIRS/OD alert was analyzed retrospectively, it should be noted that the alert was processed in real time in an automated manner, was implemented in a “real-world” setting in consecutive patients, and was actionable with regard to response by clinicians. Conceivably, there may be greater value in the crude unadjusted test characteristics of the SIRS/OD alert because the ICU providers respond to the alerts as fired rather than to adjusted alerts29.

CONCLUSIONS

Our findings support the feasibility of successful implementation of a real-time automated electronic medical record-based alert system that uses systemic inflammatory response syndrome and organ dysfunction criteria to identify patients at high risk for hospital mortality, greater ventilator days, and longer duration of hospitalization. Outcomes from this alert using our algorithms were stable and replicable over time, generalizable across populations, and potentially actionable in terms of clearly identifying a majority of the high-risk patients within 48 hours of admission. Our findings underscore the feasibility and predictive potential for leveraging large, standardized electronic medical record-based data to provide real-time monitoring of adverse trends in hospitalized patients.

Supplementary Material

Clinical significance.

An alert based upon “real-time” electronic medical record data can identify hospitalized patients at risk for death.

Patients who triggered the alert had four times the chance of dying on the next hospital day when compared to patients who did not trigger the alert.

Such predictive analytics was implemented in a “real-world” setting involving 24 hospitals and enabled early and targeted medical intervention.

Triggering the alert was associated with additional hospitalization days and ventilator days.

Acknowledgements

The authors would like to express their gratitude to the following individuals and groups who assisted in the completion of this work: Brett Efaw (former Systems analyst, Banner Health; Phoenix, AZ) for his work in performing the data queries; Didi Hartling (Medical Informaticist, Banner Health; Phoenix, AZ) for serving as a resource for design and implementation of the sepsis alert; Emily A. Kuhl, Ph.D. (Senior Science Writer, GYMR; Washington, D.C.) for her invaluable assistance in the preparation of this manuscript; Mikaela Mackey and Ethel Utter (formerly of the Critical Care TRIAD, Banner Health) for their logistical support and historical data; Stephen Frankel, M.D., FCCM, FCCP (National Jewish Health; Denver, CO) for editorial guidance; Banner Health's Critical Care Clinical Consensus Group and Clinical Informatics for Process Improvement Sepsis Team for their review and feedback on the initial data; and Banner Health's Care Management Council for their support in authorizing internal resources to permit the completion of this work. Special acknowledgement is made for the many tireless hours spent by each facility sepsis leader and their respective facility teams in implementing and maintaining a consistent response to the alerts. Finally, the authors offer their sincere thanks to every individual nurse and physician at the bedside who intervened on a patient's behalf when an alert triggered.

Dr. Parthasarathy reports grants from NIH/NHLBI (HL095799), grants from Patient Centered Outcomes Research Institute (IHS-1306-2505), grants from US Department of Defense, grants from NIH (National Cancer Institute; R21CA184920), grants from Johrei Institute, personal fees from American Academy of Sleep Medicine, personal fees from American College of Chest Physicians, non-financial support from National Center for Sleep Disorders Research of the NIH (NHLBI), personal fees from UpToDate Inc., Philips-Respironics, Inc., and Vapotherm, Inc.; grants from Younes Sleep Technologies, Ltd., Niveus Medical Inc., and Philips-Respironics, Inc. outside the submitted work. In addition, Dr. Parthasarathy has a patent UA 14-018 U.S.S.N. 61/884,654; PTAS 502570970 (Home breathing device). The above-mentioned conflicts including the patent are unrelated to the topic of this paper.

Funding support: NIH/NHLBI. SP was supported by National Institutes of Health Grants (HL095799, HL095748, and CA184920). Research reported in this manuscript was partially funded through a Patient-Centered Outcomes Research Institute (PCORI) Award (IHS-1306-02505). The statements in this manuscript are solely the responsibility of the authors and do not necessarily represent the views of PCORI, its Board of Governors or Methodology Committee. The funding institutions did not have any role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; or decision to submit the manuscript for publication.

Footnotes

Authorship credit: conceived and designed the experiments (HSK, RG, and ER); analyzed the data (SK, HSK, RG, RHG, and SP); interpreted the data (SK, HK, RG, RHG, and SP); contributed reagents/materials/analysis tools (SK, HSK, RG, RHG, and SP); drafted the article or revised it critically for important intellectual content (HSK, RHG, MPS, MM, BS, SK, RG, ER, and SP); gave final approval of the version to be published (HSK, RHG, MPS, MM, BS, SK, RG, ER, and SP).

Conflicts of Interest:

The authors have no conflicts of interest to disclose.

REFERENCES

- 1. Dellinger RP, Levy MM, Rhodes A, et al. Surviving Sepsis Campaign: international guidelines for management of severe sepsis and septic shock, 2012. Intensive Care Med. 2013;39:165–228. doi: 10.1007/s00134-012-2769-8.

- 2. Castellanos-Ortega A, Suberviola B, Garcia-Astudillo LA, et al. Impact of the Surviving Sepsis Campaign protocols on hospital length of stay and mortality in septic shock patients: results of a three-year follow-up quasi-experimental study. Crit Care Med. 2010;38:1036–1043. doi: 10.1097/CCM.0b013e3181d455b6.

- 3. Weiss CH, Moazed F, McEvoy CA, et al. Prompting physicians to address a daily checklist and process of care and clinical outcomes: a single-site study. Am J Respir Crit Care Med. 2011;184:680–686. doi: 10.1164/rccm.201101-0037OC.

- 4. Rothman MJ, Rothman SI, Beals Jt. Development and validation of a continuous measure of patient condition using the Electronic Medical Record. J Biomed Inform. 2013;46:837–848. doi: 10.1016/j.jbi.2013.06.011.

- 5. Churpek MM, Yuen TC, Park SY, et al. Using electronic health record data to develop and validate a prediction model for adverse outcomes in the wards*. Crit Care Med. 2014;42:841–848. doi: 10.1097/CCM.0000000000000038.

- 6. Escobar GJ, LaGuardia JC, Turk BJ, et al. Early detection of impending physiologic deterioration among patients who are not in intensive care: development of predictive models using data from an automated electronic medical record. J Hosp Med. 2012;7:388–395. doi: 10.1002/jhm.1929.

- 7. Brown H, Terrence J, Vasquez P, et al. Continuous monitoring in an inpatient medical-surgical unit: a controlled clinical trial. Am J Med. 2014;127:226–232. doi: 10.1016/j.amjmed.2013.12.004.

- 8. Moonesinghe SR, Mythen MG, Das P, et al. Risk stratification tools for predicting morbidity and mortality in adult patients undergoing major surgery: qualitative systematic review. Anesthesiology. 2013;119:959–981. doi: 10.1097/ALN.0b013e3182a4e94d.

- 9. Sacco Casamassima MG, Salazar JH, Papandria D, et al. Use of risk stratification indices to predict mortality in critically ill children. Eur J Pediatr. 2014;173:1–13. doi: 10.1007/s00431-013-1987-6.

- 10. Smith MEB, Chiovaro JC, O'Neil M, et al. Early Warning System Scores: A Systematic Review: VA Evidence-based Synthesis Program Reports. Department of Veterans Affairs; Washington D.C.: 2014.

- 11. Nyweide DJ, Lee W, Cuerdon TT, et al. Association of Pioneer accountable care organizations vs traditional Medicare fee for service with spending, utilization, and patient experience. JAMA. 2015;313:2152–2161. doi: 10.1001/jama.2015.4930.

- 12. Knaus WA, Wagner DP, Draper EA, et al. The APACHE III prognostic system. Risk prediction of hospital mortality for critically ill hospitalized adults. Chest. 1991;100:1619–1636. doi: 10.1378/chest.100.6.1619.

- 13. Wenner JB, Norena M, Khan N, et al. Reliability of intensive care unit admitting and comorbid diagnoses, race, elements of Acute Physiology and Chronic Health Evaluation II score, and predicted probability of mortality in an electronic intensive care unit database. J Crit Care. 2009;24:401–407. doi: 10.1016/j.jcrc.2009.03.008.

- 14. Umscheid CA, Betesh J, VanZandbergen C, et al. Development, implementation, and impact of an automated early warning and response system for sepsis. J Hosp Med. 2015;10:26–31. doi: 10.1002/jhm.2259.

- 15. van Meenen LC, van Meenen DM, de Rooij SE, et al. Risk prediction models for postoperative delirium: a systematic review and meta-analysis. J Am Geriatr Soc. 2014;62:2383–2390. doi: 10.1111/jgs.13138.

- 16. Henry KE, Hager DN, Pronovost PJ, et al. A targeted real-time early warning score (TREWScore) for septic shock. Sci Transl Med. 2015;7:299ra122. doi: 10.1126/scitranslmed.aab3719.

- 17. Kaukonen KM, Bailey M, Pilcher D, et al. Systemic inflammatory response syndrome criteria in defining severe sepsis. N Engl J Med. 2015;372:1629–1638. doi: 10.1056/NEJMoa1415236.

- 18. Agency for Healthcare Research and Quality. Clinical Classifications Software (CCS). 2014 Healthcare Cost and Utilization Project – HCUP A Federal-State-Industry Partnership in Health Data. Agency for Healthcare Research and Quality; Rockville, MD: 2014.

- 19. Iwashyna TJ, Odden A, Rohde J, et al. Identifying patients with severe sepsis using administrative claims: patient-level validation of the Angus implementation of the international consensus conference definition of severe sepsis. Med Care. 2014;52:e39–43. doi: 10.1097/MLR.0b013e318268ac86.

- 20. Lagu T, Lindenauer PK, Rothberg MB, et al. Development and validation of a model that uses enhanced administrative data to predict mortality in patients with sepsis. Crit Care Med. 2011;39:2425–2430. doi: 10.1097/CCM.0b013e31822572e3.

- 21. Le Gall JR, Lemeshow S, Leleu G, et al. Customized probability models for early severe sepsis in adult intensive care patients. Intensive Care Unit Scoring Group. JAMA. 1995;273:644–650.

- 22. Higgins TL. Severe sepsis outcomes: how are we doing?*. Crit Care Med. 2014;42:2126–2127. doi: 10.1097/CCM.0000000000000443.

- 23. Kramer AA, Zimmerman JE. Assessing the calibration of mortality benchmarks in critical care: The Hosmer-Lemeshow test revisited. Crit Care Med. 2007;35:2052–2056. doi: 10.1097/01.CCM.0000275267.64078.B0.

- 24. Kluth LA, Black PC, Bochner BH, et al. Prognostic and Prediction Tools in Bladder Cancer: A Comprehensive Review of the Literature. Eur Urol. 2015;68:238–253. doi: 10.1016/j.eururo.2015.01.032.

- 25. Scruth EA, Page K, Cheng E, et al. Risk determination after an acute myocardial infarction: review of 3 clinical risk prediction tools. Clin Nurse Spec. 2012;26:35–41. doi: 10.1097/NUR.0b013e31823bfafc.

- 26. Vincent JL, Moreno R. Clinical review: scoring systems in the critically ill. Crit Care. 2010;14:207. doi: 10.1186/cc8204.

- 27. Rapsang AG, Shyam DC. Scoring systems in the intensive care unit: A compendium. Indian J Crit Care Med. 2014;18:220–228. doi: 10.4103/0972-5229.130573.

- 28. Mapp ID, Davis LL, Krowchuk H. Prevention of unplanned intensive care unit admissions and hospital mortality by early warning systems. Dimens Crit Care Nurs. 2013;32:300–309. doi: 10.1097/DCC.0000000000000004.

- 29. Rubenfeld GD. Customized probability models for severe sepsis: correction and clarification. JAMA. 1995;274:872. doi: 10.1001/jama.274.11.872b. author reply 872-873.
