Abstract

This article describes implementation experiences “scaling up” the Cognitive Behavioral Intervention for Trauma in Schools (CBITS), an intervention developed using a community-partnered research framework. Case studies from two sites that have successfully implemented CBITS are used to examine macro- and school-level implementation processes and strategies used to address implementation issues and create a successful implementation support system. Key elements of the implementation support system include pre-implementation work, ongoing clinical and logistical implementation supports, promotion of fidelity to the intervention’s core components, tailored implementation to fit the service context, and a value on monitoring child outcomes.

The vast majority of children with mental health needs do not receive services (Kataoka, Zhang, & Wells, 2002), and of those who do, about 75% receive mental health services through school (Farmer, Burns, Phillips, Angold, & Costello, 2003). This has resulted in increased attention to the provision of mental health services in schools, which can address financial and structural barriers to care (Garrison, Roy, & Azar, 1999). A growing number of school-based programs are demonstrating positive effects on children’s emotional and behavioral outcomes. A critical issue in school psychology is how to best support these programs in moving from initial development and effectiveness studies to full-scale implementation in schools across the country. This article explores implementation processes and lessons learned from “scaling up” the Cognitive Behavioral Intervention for Trauma in Schools (CBITS). Of primary interest were strategies sites used to address common implementation issues for school mental health programs. We use case examples from two sites that have successfully adopted and implemented CBITS to explore macro- and school-level strategies important in developing an implementation support system.

Overview of CBITS

CBITS was developed using a community-partnered research model involving several of this article’s coauthors (e.g., Jaycox, 2003; Kataoka et al., 2009; Stein et al., 2002). Participatory research partnerships are useful as researchers strive to develop, evaluate, and disseminate mental health programs in educational settings (Stoiber & Kratochwill, 2000), and CBITS development followed key elements of the community-partnered participatory research model that Wells and colleagues (2006) articulated for the mental health field. This model begins with joint negotiation of health improvement priorities among community stakeholders and research partners and then matches community needs, resources, and values with evidence-based interventions. Programs are developed and implemented through a participatory process. Program effects on individuals and communities are then assessed, followed by dissemination of programs and findings. Throughout, the model emphasizes sustainability planning and involvement of partners in all phases of research.

The development of CBITS stemmed from a collaborative partnership among Los Angeles Unified School District, University of California, Los Angeles, and RAND, and was motivated by school officials’ concern about exposure to violence affecting students’ school success (Jaycox, Kataoka, Stein, Wong, & Langley, 2005; Stein et al., 2002; Wong, 2006; Wong et al., 2007). Los Angeles Unified School District forged a partnership with clinical researchers to create a program to improve the well-being of students exhibiting symptoms of post-traumatic stress disorder (PTSD). Specifically, school partners wanted a program that could be (a) delivered by existing school staff using the limited resources available in this public school system; (b) conducted during a class period, typically 45 minutes in duration; (c) provided to a large number of students; and (d) disseminated with user-friendly materials that could be tailored for a socioeconomically, ethnically/racially, and linguistically diverse student body.

One of the research partners, a clinical psychologist and expert in cognitive behavioral treatment (CBT) and trauma, developed a group intervention based on CBT for PTSD, which has been supported in controlled outcome studies for sexually abused children (e.g., Cohen, Deblinger, Mannarino, & Steer, 2004), children with single-incident trauma (March, Amaya-Jackson, Murray, & Schulte, 1998), and adult survivors of interpersonal violence (Foa et al., 1999). CBT interventions share features with other models used in schools (i.e., they are time-limited, skills-based, and behaviorally oriented, and they incorporate didactics and homework) and are amenable to group delivery. The psychologist drafted the manual, continually eliciting feedback from partners (e.g., school clinicians and staff, community members, academic partners); revised the manual (most often for clarity and ease of use); piloted the intervention; and further refined it based on iterative feedback, including focus groups with parents to assess program acceptability.

Thus, the CBITS program was designed to fit within the school environment. As a result, CBITS has many characteristics found to predict adoption of new innovations (Rogers, 1995), such as relative advantage over usual care, compatibility with school practices, and minimized complexity. The resulting intervention is a 10-session group intervention, with 1–3 individual sessions dedicated to creating a trauma narrative (structured telling and processing of the memory of a traumatic event) and additional parent and teacher education sessions (Jaycox, 2003). The key elements of CBITS include core CBT techniques: psychoeducation regarding the effects of trauma on students, relaxation, cognitive restructuring, graduated in vivo exposure, and social problem solving. The program also takes a public health approach, using a brief screening tool to identify students who might not otherwise come to the attention of school staff.

CBITS was evaluated in two consecutive research studies in which CBITS was delivered by school district-employed clinicians. The first was a quasi-experimental pilot study in which a preliminary version of CBITS was tested as part of a program for recently immigrated students. Results showed significant reductions in symptoms of PTSD and depression in the intervention group compared with the waitlist control group (Kataoka et al., 2003). The intervention was further refined (adding two sessions, per clinician request) and evaluated with a general school population in a randomized, controlled effectiveness trial. Results indicated that students who received CBITS earlier in the school year had significantly lower symptoms of PTSD and depression, and lower parent-reported psychosocial dysfunction (effect sizes of 1.08, 0.45, and 0.77 SD, respectively), compared to those in a waitlist control group (Stein, Jaycox et al., 2003; Stein, Kataoka et al., 2003). An important facet of these studies was the use of the partnered research model and an emphasis on maximizing the real-world relevance of the study. Each study was designed to balance the priorities of the school community with the demands of rigorous research design, and to capitalize on the combined expertise of the partners. One example of this joint decision making was the study design. The district indicated that its priority was to treat all identified children within the same school year, leading researchers to choose a randomized waitlist control design in which students received the intervention either in the fall or spring semester.

In addition to the CBITS manual (Jaycox, 2003), products from this partnership include dissemination tools such as a Web-based training (www.cbitsprogram.org), a CBITS implementation “toolkit,” and videos for school staff and parents that feature principals and teachers talking about the effects of trauma on academics. The team has also developed adaptations to facilitate implementation in foster care settings (Schultz et al., 2010), in faith-based settings (Kataoka et al., 2006), for students in special education or with low literacy, and for nonclinical school staff (Jaycox et al., 2009).

Scaling Up: Implementation Examples From the Field

Over time, interest in CBITS has grown across the country and internationally, with the demand for the program outpacing capacity to engage in the same level of community–research partnership at each site. Thus, dissemination and implementation activities have changed over time. Most sites adopting CBITS do not have research partners, and service models have varied considerably (e.g., district-employed social workers and school psychologists, colocated community mental health clinicians, individual contracted providers). Understanding the process of adoption and implementation as it occurs in these real-world contexts is a crucial next step in being able to promote the factors that lead to successful community implementation.

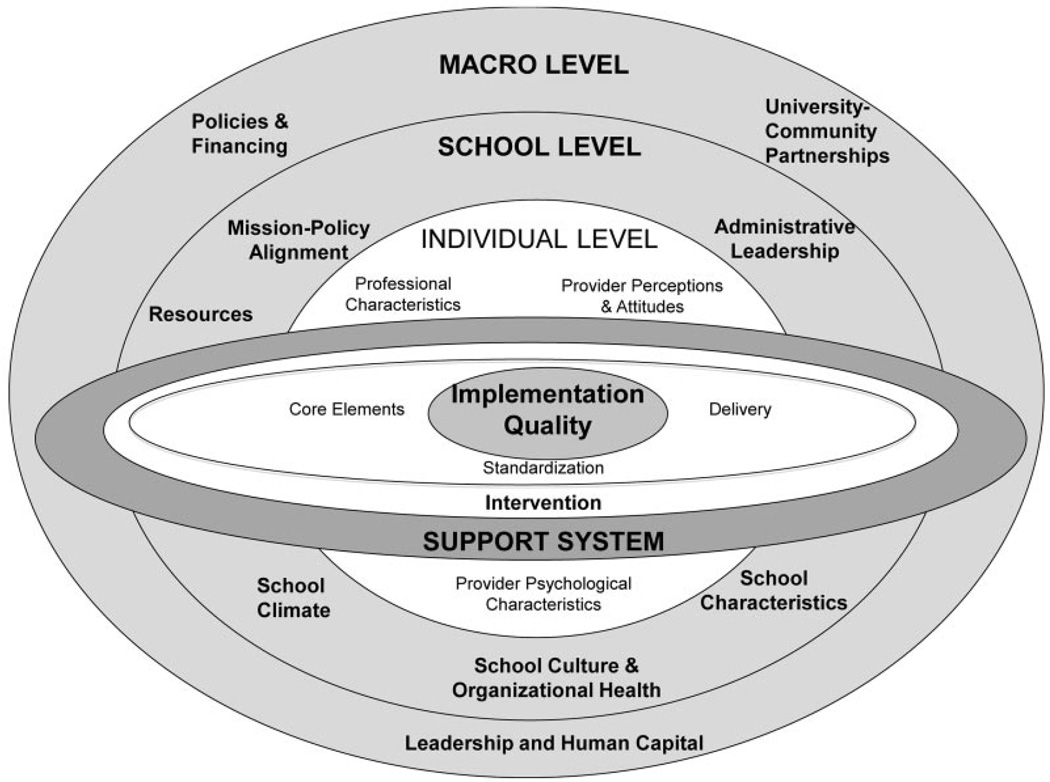

Several systematic reviews and conceptual models highlighting critical factors in implementation of innovations can help frame this understanding (e.g., Feldstein & Glasgow, 2008; Fixsen, Naoom, Blase, Friedman, & Wallace, 2005; Greenhalgh, Robert, Macfarlane, Bate, & Kyriakidou, 2004). These models highlight factors such as characteristics of the intervention (e.g., ease of use, relevance, compatibility with the setting), the support system (e.g., time, money, tangible supports, leadership), provider characteristics, and community characteristics. To guide our discussion of CBITS, we turn to the model of Domitrovich et al. (2008), which identifies independent but interrelated factors at multiple levels that affect school program implementation. These include macro-level factors (policies and financing, university/community partnerships, leadership); school factors (resources, expertise, administrative leadership, decision structure, school climate, organizational health, school culture, mission/policy alignment); the support system for the intervention (training, ongoing coaching, implementation tools); and individual provider-level issues (professional characteristics, perceptions of the intervention; Domitrovich et al., 2008). Figure 1 depicts an adapted version of this model that includes factors most relevant to CBITS implementation, along with an expanded representation of the support system to include macro-level factors. Although each of these factors is important in its own right, it is difficult for a single study to examine the entire model. In this article, we concentrate on macro- and school-level processes and their implications for the structure of local implementation support systems.

Figure 1.

Factors affecting implementation quality for the Cognitive Behavioral Intervention for Trauma in Schools (adapted from Domitrovich et al., 2008).

As an initial exploration of these issues, we conducted interviews with clinicians and site directors from sites previously trained in CBITS. The study highlighted key implementation barriers: lack of parent engagement (for active consents for screening and treatment, attendance at parent sessions), competing priorities for clinicians and schools, logistical barriers (time, space), and lack of buy-in from school staff (Langley, Nadeem, Kataoka, Stein, & Jaycox, 2010). Interestingly, sites that successfully implemented CBITS and those that did not endorsed the same types of barriers. Both groups held positive perceptions of CBITS, rating it highly on ease of use. The primary difference was that “successful” sites reported having a network of professionals for support and funding (and by extension, leadership support and organizational infrastructure; Langley et al., 2010). Findings from this study raise questions about the specific processes that allowed successful sites to overcome barriers.

In the current study, we were interested in understanding macro- and school-level processes that allow successful implementation of CBITS. Implementation of an intervention with fidelity to positively affect student outcomes requires a quality implementation support system with ongoing support (e.g., training, coaching, and practical tools; e.g., Fixsen et al., 2005; Joyce & Showers, 2002; Kelly et al., 2000). To date, we have little understanding of the on-the-ground macro- and school-level approaches needed to garner that support.

At the macro level, we focus on three key factors: leadership and human capital, policies and financing, and university/community partnerships (Domitrovich et al., 2008). Leadership and human capital refers to the presence of district-level champions and staff with the requisite skills, as well as community coalitions that can leverage resources and support. Policies and financing refers to the presence of funds and of federal, state, and local policies that facilitate implementation. University/community partnerships refers to partnerships and support from researchers or treatment developers. At the school level, we focus on seven primary factors: mission–policy alignment (aligning social emotional goals with educational goals), administrative leadership (from local principals and leaders), resources (time, space, funding), personnel expertise (presence of skilled staff), school culture (attitudes toward new practices), school climate and organizational health (functioning of the organization), and school characteristics (population/community needs, demographics, geographical context; Domitrovich et al., 2008).

Using two case studies, we explore the interactions between the factors at the macro and school levels as they contribute to the establishment of a CBITS implementation support system. Specifically, we address the following questions: (a) How do sites use macro-level and school-level approaches to create the conditions for successful implementation of CBITS? (b) What key components of local implementation support systems emerge from these approaches?

Method

To guide our approach, we draw on the key principles of developmental evaluation, an approach suited to examination of dynamic processes in real-world contexts (Gamble, 2008; Patton, 2011). Unlike traditional evaluation focused on assessing program success and failure, developmental evaluation is specifically designed to support, guide, and understand innovations as they emerge and develop. It recognizes that within adaptive systems, innovative practices and processes may unfold in nonlinear, complex, and dynamic ways, with some degree of uncertainty and ambiguity (Patton, 2011). Although developmental evaluations can use common methods and data-collection approaches seen in school psychology (e.g., collecting individual-level outcome data), they increasingly require nontraditional methods (e.g., experiential data gathering, interviews, observations of naturally occurring decisions and processes; Gamble, 2008; Patton, 2011). Using this approach, we examine two case studies of CBITS implementation. Case studies are well suited to developmental evaluation because they are designed for understanding complex implementation processes and examining variables that are not readily measured with existing quantitative instruments (Yin, 2009; see Figure 2 for an overview of the methodological process).

Figure 2.

Developmental evaluation methods used to examine Cognitive Behavioral Intervention for Trauma in Schools implementation process.

Selection of Sites

Sites were selected based on their success implementing CBITS groups across many schools, indication of improved child outcomes (i.e., pre- and post-treatment outcomes showing significant declines in trauma symptoms), and representativeness of the most common service delivery models for CBITS (i.e., district-employed clinicians or community agency-employed clinicians colocated in schools). Although a number of sites met these criteria, we chose two sites in which sufficient information on implementation processes was available to answer research questions regarding macro- and school-level factors.

The two sites selected, described in more detail later, were Jersey City Public Schools (JCPS) in Jersey City, New Jersey, where CBITS was delivered by district-employed clinicians, and Mercy Family Center in New Orleans, Louisiana, where CBITS was delivered by a community mental health agency. We gathered information for the case studies from the following key stakeholders: site leadership (clinic director from Mercy Family Center, district-level administrators from JCPS); clinicians implementing CBITS (i.e., community agency clinicians, school district-employed clinicians); and members of our research team.

Procedures

Case study data were derived from multiple sources. We gathered data via informal communication and direct observation, as well as the sites’ local data and discussions with key informants. Members of our research team have communicated with JCPS and Mercy Family Center during implementation planning, training of clinicians, and active implementation of CBITS. Information gathering occurred within the context of ongoing work on collaborative projects, informal discussions, formal meetings, consultation phone calls, and e-mail exchanges with site leadership and clinicians (Gamble, 2008; Patton, 2011). To ensure that our understanding of site-specific implementation activities was accurate and that we had sufficient information to address all the domains in our conceptual model, we also conducted interviews with the two site leaders and had supplementary verbal and written exchanges with site leadership and lead supervisors and clinicians, who confirmed our interpretation of the available information.

Data Analysis

Case study information from the two sites was analyzed according to our chosen theoretical framework, as recommended by Yin (2009). The lead author synthesized the information about the two case study sites by analyzing how their implementation processes conformed to, or diverged from, our conceptual model, noting specific actions the sites took that were representative of macro- and school-level implementation processes. A written summary of the analysis was distributed to coauthors for feedback, which focused on facilitating consensus and refining the analysis. In the event of diverging perspectives, consensus was achieved through group discussion and written communication. As discussed in the results section, the JCPS case study illustrates how macro-level factors were primary in implementing CBITS across a school district. In the New Orleans case study, school-level factors played a more significant role. Both sites experienced interactions across levels, illustrating the interdependence of these factors. We end by describing emerging core components of the support system.

Results

Macro-Level Factors in Dissemination: JCPS

Background

This case study describes the process used to achieve district-wide CBITS implementation in the 2008–2009 academic year. The JCPS serve approximately 30,000 students from diverse backgrounds (38% Latino, 36% African American, 10% White, 14% Asian American, 1% American Indian/Alaskan Native, and 0.6% Pacific Islander) in Jersey City, New Jersey. There are high proportions of recent immigrant students (35% foreign born; 25% no English in the home; over 50 languages) and socioeconomically disadvantaged students (66% of students eligible for free lunch). A unique aspect of the JCPS’s CBITS implementation was the role of senior-level district leadership, whose involvement shaped how the district handled policy issues, partnerships, school-level factors, and the implementation support system. We organize our discussion of JCPS’s implementation strategies within the three macro-level categories highlighted by Domitrovich et al. (2008).

Leadership and human capital

The associate superintendent of Special Education’s (AS) leadership was critical to CBITS implementation in JCPS. The process began with a partnership between the AS, her staff, and a local community agency to address the educational needs of the district’s recent immigrant and refugee students. In the years before district-wide CBITS implementation, the agency partners provided teachers with education about the experiences of recent immigrant and resettled communities, and worked with parents to orient them to the U.S. educational system. As dialogues evolved, the AS recognized the high levels of trauma exposure across the district and became interested in implementing CBITS.

Before district-wide implementation, the community agency partners piloted CBITS in a single school, enlisting the school’s guidance department for logistical support. Groups were expanded to a second school, at the request of that school’s principal, and cofacilitated with a district-employed counselor. Based on promising student outcomes and a positive response from the school community, the AS decided to use district-employed clinicians to implement CBITS district-wide in the fifth through ninth grades (30 schools). The AS built on the district’s existing human capital, in the form of clinical social workers, district staff, and existing partnerships with community agencies. She and her staff also obtained support and engaged key stakeholders by presenting on CBITS to district officials, the JCPS Board of Education, and school principals through a Principals’ Symposium. Using evaluation data from the community agency’s smaller scale implementation, the AS provided a clear delineation of benefits for the district, schools, and students.

Policies and financing

A critical strategy for district-wide rollout was alignment of CBITS with educational policies. At the federal level, the Individuals with Disabilities Education Act mandates that all children, including those with disabilities, receive a free appropriate public education. Particularly relevant for CBITS were provisions in the 2004 reauthorization of the Individuals with Disabilities Education Act regarding early intervening services (EIS) for children who are not currently identified as needing special education or related services but who need additional academic and behavioral support to succeed in general education settings. In addition, JCPS has a mandate to address disproportionate representation of racial/ethnic groups in special education.

As one approach to address these mandates, JCPS was implementing Response to Intervention, a model that promotes early identification of students at risk for learning difficulties and may be used as one approach to determine whether a student has a learning disability. The model seeks to match instruction with students’ identified needs, using evidence-based approaches and a tiered system of services that increase in intensity as needed. Although the primary focus of Response to Intervention is on instruction and learning difficulties, there is recognition that behavioral supports are needed to promote learning (Reschly & Bergstrom, 2009). Using the Response to Intervention framework, the AS and her team developed a behavioral support program to provide counseling to general and special education students. CBITS qualified as a second-tier targeted intervention for students affected by traumatic stress.

University–community partnerships

Partnerships with community agencies and researchers were another critical macro-level factor. As noted earlier, the district partnered closely with a community agency that provided grant-funded services for traumatized students. The agency partners had prior training from certified CBITS trainers and actively facilitated relationships that allowed the district to pursue this training for its own staff. JCPS then enlisted the agency partners to provide ongoing clinical consultation to district clinicians. The agency also provided targeted direct implementation support (e.g., help administering screening instruments to students) if needed.

After the clinicians were trained, the district took part in a yearlong CBITS Learning Collaborative led by CBITS faculty, including treatment developers and CBITS trainers. The Learning Collaborative was based on the Institute for Healthcare Improvement’s Breakthrough Series Collaborative model, which is designed to help health care organizations improve quality of care in response to scientific evidence. The model provides a structure in which organizations can easily learn from each other and from recognized experts (Institute for Healthcare Improvement, 2003). Several sites across the country, including JCPS, were trained in CBITS through the standard in-person two-day training. They also attended two additional in-person Learning Sessions (midway through and at the end of the school year) designed to enhance skills, introduce quality improvement methods, and share lessons learned across sites. Between these meetings, key staff (i.e., supervisors, site leaders, lead clinicians) participated in monthly phone calls with CBITS faculty focused on clinical skills and implementation issues. Sites also completed monthly implementation metrics (i.e., group sessions held, attendance, clinician self-ratings) to inform quality improvement efforts. For example, if a site’s metrics indicated poor attendance in parent sessions compared to other sites, the site might try a new parent engagement strategy. After the initial two-day training by CBITS faculty, the 30 JCPS clinicians implementing CBITS typically did not participate in the ongoing Learning Collaborative phone calls or meetings. However, the involvement of district leadership (i.e., the AS, supervisors, and program directors in the Department of Special Education) in the CBITS Learning Collaborative helped JCPS create an organized implementation support structure and learn with colleagues from other sites.
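To make the cross-site comparison of monthly metrics more concrete, a minimal Python sketch follows. It assumes a hypothetical rule in which a site is flagged when its parent-session attendance rate falls more than a chosen margin below the median rate across sites; the function name, site labels, rates, and margin are all illustrative and are not drawn from the Learning Collaborative's actual metrics or procedures.

```python
from statistics import median


def flag_low_attendance(attendance_by_site: dict[str, float], margin: float = 0.0) -> list[str]:
    """Return sites whose parent-session attendance rate is more than `margin`
    below the cross-site median (a hypothetical flagging rule)."""
    if not attendance_by_site:
        return []
    # Median across sites serves as the comparison benchmark.
    benchmark = median(attendance_by_site.values())
    return sorted(
        site for site, rate in attendance_by_site.items()
        if rate < benchmark - margin
    )


# Illustrative monthly rates (share of invited parents who attended); not study data.
monthly = {"Site A": 0.62, "Site B": 0.35, "Site C": 0.58, "Site D": 0.70}
print(flag_low_attendance(monthly, margin=0.10))  # prints ['Site B']
```

A median benchmark is used here rather than a mean so that one unusually high- or low-attendance site does not shift the comparison point for the others; an actual collaborative may well have used a different rule, and a flagged site would then choose its own engagement strategy.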

Implementation support system

With supportive leadership and strong connections with university and community partners, JCPS developed a centralized, structured support plan that fit their setting and helped to address school-level implementation variables. At the district level, they added clinical and logistical support for CBITS to existing monthly team meetings for district-employed clinicians, provided on-call assistance available from district staff and agency partners, and developed an internal implementation “toolkit” that included timelines for specific tasks (e.g., principal meetings, teacher in-services, consents, screening), outreach materials, handouts, consents/letters, and outcome measures all tailored for JCPS.

Although JCPS did not monitor fidelity formally (aside from clinician-reported session checklists), an agency partner with CBITS experience reviewed core clinical skills during the monthly team meetings. The meetings also included discussion of cultural or clinical adaptations (e.g., expanding sessions, translation issues, cultural adaptations of examples to illustrate concepts more effectively), engagement strategies for recent immigrant families, and implementation-related adaptations (e.g., running groups after school or during lunch).

Finally, the district conducted an evaluation to ensure quality, provide feedback to stakeholders, and support sustainability. In the 2008–2009 school year, the program served 214 students across 30 schools. Among the students who participated in CBITS, there was a significant pre- to post-CBITS decline in PTSD symptoms, t(212) = 10.43, p < .001. The district gathered preliminary data on school performance by contrasting fall and spring report cards. About a quarter of the students participating in CBITS improved their grades in math, reading, and writing, and about 62% maintained their grades (approximately 13% had declines in grades). In addition, students and teachers provided written and verbal feedback on their experiences with CBITS. Together, these data made a convincing case to district officials and local school administrators that the CBITS program was helpful.
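The section does not name the test behind t(212) = 10.43. Assuming it was a paired-samples t-test on each student's pre- and post-CBITS PTSD symptom scores, the statistic takes the standard form below, where x_i^pre and x_i^post are student i's scores, d_i their difference, and n the number of students with both scores. Under this assumption, df = n − 1 = 212 would correspond to 213 students with complete pre/post data.

```latex
d_i = x_i^{\text{pre}} - x_i^{\text{post}}, \qquad
\bar{d} = \frac{1}{n}\sum_{i=1}^{n} d_i, \qquad
s_d = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(d_i - \bar{d}\right)^2}

t = \frac{\bar{d}}{s_d / \sqrt{n}}, \qquad \mathrm{df} = n - 1
```

A positive t here indicates that scores were, on average, lower after CBITS than before.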

Interactions with school-level implementation factors

Because macro-level variables do not operate in isolation, we examined the interaction between macro- and school-level variables. Here, we discuss the general approach used by JCPS to optimize school factors (e.g., administrator support, resources, mission/policy alignment, school culture). First, at the Principals’ Symposium mentioned earlier, the AS discussed the link between trauma exposure and learning, made the case for CBITS, and elicited principals’ active involvement in planning for implementation (e.g., the AS routinely involved principals in making strategic plans). Second, the monthly team meetings and internal implementation toolkit (which included timelines and concrete outreach materials geared towards local principals and teachers) provided structure and tools for clinicians as they garnered the support of local administrators. This support was critical to addressing mission/policy alignment and resources. Resources in this case included protected time, group space, and venues for parent and teacher outreach (e.g., presence at PTA meetings, back-to-school night, teacher meetings).

These strategies were extended to local school staff. Using the JCPS implementation toolkit and ideas shared at the monthly team meetings, clinicians educated teachers and school staff about the effect of trauma on learning and worked with them to find ways to minimize the program’s effect on academic time. A subset of clinicians involved teachers more directly. In some schools, teachers helped motivate students to return consents for CBITS screening by offering homework credit (parents had the option to agree or decline). Some teachers also assisted during CBITS screenings in classrooms. In parallel, progress and student outcomes were shared with school administrators and district leadership. Such activities helped to create a trauma-informed school culture open to programs addressing student mental health.

School-Level Factors in Implementation: Mercy Family Center, New Orleans

Background

In 2005, Hurricane Katrina was one of the most devastating natural disasters in recent U.S. history; over 1.7 million people suffered flooding or moderate to catastrophic damage (McCarthy, Peterson, Sastry, & Pollard, 2006). In New Orleans, the public school system was shut down, and many other schools and districts were affected. Because schools were among the early responders to mental health issues upon their reopening, there was interest in CBITS from a variety of districts and agencies. In conjunction with some of these early trainings, Kataoka and colleagues (2009) conducted a focus group study with practitioners working in schools soon after they reopened. Key themes included the pervasiveness of both hurricane- and nonhurricane-related traumas, the effect of the disaster on providers, challenges related to fragmented service structures (e.g., isolated clinicians, lack of communication, lack of centralized resources), and limited resources and support of mental health in schools.

Against this backdrop, Mercy Family Center, which housed a newly created program called Project Fleur-de-lis (PFDL), requested CBITS training. PFDL’s leader (the clinical director of Mercy Family Center and PFDL) recognized that the influx of supports to New Orleans would be short-lived and that schools would continue to be a primary service setting for a host of needs. As such, he designed PFDL to address intermediate- and long-term mental health needs (Walker, 2008). PFDL offers three tiers of services: the Classroom-Camp-Community-Culture Based Intervention to formally or informally screen students for services and address general school needs (Macy, Macy, Gross, & Brighton, 2006); CBITS as a school-based targeted intervention program; and clinic-based Trauma-Focused Cognitive-Behavioral Treatment (TF-CBT; Cohen, Mannarino, Berliner, & Deblinger, 2000) for children with higher-level needs related to trauma and grief (Walker, 2008). To set up the service system, the clinical director secured financing from foundation, private, and nonprofit sources and forged partnerships with treatment developers for training of PFDL staff (Cohen et al., 2009; Walker, 2008). After initial attention to macro-level factors (leadership, financing, partnerships), CBITS implementation success was achieved primarily by focusing on intertwined school-level factors.

School characteristics

Along with the inherent challenges common to schools in urban communities, the hurricane had a tremendous effect on the basic needs and mental health of schools and communities, affecting both students and staff (Kataoka et al., 2009). To assess these issues before offering services, PFDL conducted focus groups with staff from 42 area schools in the months after the hurricane. It learned that many schools were overwhelmed by overcrowded classrooms, teacher shortages, and damaged buildings and student records (Walker, 2008).

School climate and organizational health

These school characteristics were tied to school climate and organizational health. Climate refers to student and staff perceptions of the school. Organizational health is the organization’s ability to adapt to challenges over time, foster an open and supportive environment, and maintain staff morale (Domitrovich et al., 2008). As PFDL began its work, it became clear that many schools had immense challenges around student and staff safety and basic infrastructure for teaching and reestablishing routines. PFDL staff (the clinical director and clinicians) felt that such schools were poor environments in which to address mental health issues because they did not engender basic feelings of trust and safety. As such, PFDL felt it had to work in schools that were “ready” to receive services in terms of both climate and organizational health. In practice, this meant working with schools that had reestablished routines, basic feelings of safety, and a stable infrastructure within which to work. The Catholic schools were among the first to reopen and establish these conditions after the storm. PFDL started CBITS in three Catholic schools and then expanded over time to additional Catholic schools and to schools in the public and charter systems.

School culture

School culture as it relates to implementation of mental health programs refers to routine activities and shared values, norms, and beliefs around the uptake of innovative programs and practices (Domitrovich et al., 2008). There was general support for new programs in New Orleans that addressed the mental health aftereffects of the hurricanes (Walker, 2008). However, the PFDL team felt that schools were inundated with new programs, many of which did not have a strong evidence base and did not address long-term mental health needs. Ultimately, schools began to perceive new programs negatively. This dynamic was particularly pervasive in the public school system, making those schools reticent to take on CBITS.

Interestingly, the Catholic school system was less affected by disaster politics and was also embracing its role in the broader community to provide for students’ needs beyond education. Although they were reticent to allow individual therapy on campus, Catholic schools were increasingly open to skills-based group programs like CBITS. Over time, CBITS success led to a greater openness to intensive individual treatment in school (i.e., TF-CBT).

Mission–policy alignment

PFDL partnered closely with each school to address the social and emotional student needs most pressing to the school, including behavior and attention problems. This focus on daily needs, rather than a rigid emphasis on hurricane exposure, gave schools the support they needed instead of forcing all problems to fit trauma-related interventions. PFDL did this by working with schools to assess students’ needs and triage cases into an array of PFDL and community services (Walker, 2008). In parallel, PFDL staff educated school staff about the effect of trauma exposure on learning.

Administrative support and resources

Building on this work, the notion that schools were an appropriate place to address emotional issues was gaining traction among local school administrators. Although few administrators became “champions” for CBITS, their support was critical to secure staff time, space, and on-site logistical support for running CBITS groups.

Personnel expertise

PFDL had a core clinical social work staff that provided initial services in three Catholic schools with intensive weekly clinical supervision. As CBITS expanded to additional Catholic, public, and charter schools, PFDL required that schools provide their own counselor whom PFDL would train and support. This was done in order to develop local expertise, ensure administrator and school support, and build longer-term capacity.

Implementation support system

Like JCPS, the core PFDL team was trained by the CBITS faculty and took part in a CBITS Learning Collaborative. In the initial implementation with the Catholic schools, clinicians met weekly in their regular PFDL consultation meetings to problem-solve around school-level implementation factors (e.g., school buy-in) and logistics, share strategies and tools, and address clinical skills. Clinician-reported session checklists helped to ensure that the clinicians regularly reviewed core components during their supervision; however, there was no formal fidelity monitoring. This support system was further enhanced by PFDL’s involvement in a community-partnered research project with members of our team. The study did not provide clinical support, but it allowed PFDL to gather clinical outcome data (Jaycox et al., 2010). These data helped ensure quality implementation and expansion to new schools.

After this initial phase, the clinical director completed additional training to become a certified CBITS trainer. As PFDL adds schools to its program, the clinical director has been able to directly train and support providers in the field, drawing on direct knowledge of local experiences, culture, and values. A key component of the dissemination model is that school counselors run their first CBITS group with a seasoned PFDL clinician. PFDL also offers weekly clinical consultation to these providers. Using this approach, CBITS is now being implemented in 18 public, charter, and Catholic schools. PFDL has also added an evaluation component and partners with school counselors to gather outcomes data.

Interactions with macro-level implementation factors

Throughout our description of PFDL’s approach to school-level implementation factors and the implementation support system, there are clear links to macro-level implementation factors. First, PFDL’s implementation success can be attributed in part to the leadership and vision of its director, who secured funding for PFDL through foundation, private, and nonprofit sources and forged critical partnerships with treatment developers. This led to staff training, participation in a national Learning Collaborative, and certification of the director as a CBITS trainer.

Because PFDL was interested in evaluating its model, it formed the new research–community partnership referenced earlier, adding partners from Allegheny General Hospital to the researchers at RAND and UCLA and the clinicians at the Mercy Family Center. The partnership received support from the National Institute of Mental Health and planned a project to evaluate PFDL’s service model and inform future post-disaster recovery efforts. The grant coincided with PFDL’s first year of CBITS groups in the 2006–2007 academic year. Through this partnered research project, students were screened for trauma symptoms in schools and randomized to receive CBITS in schools or TF-CBT in clinics from PFDL (Cohen et al., 2004). Notably, although 91% of students completed CBITS, only 15% completed TF-CBT at the clinic sites (Jaycox et al., 2010). Results highlighted the much higher accessibility of school-based services and demonstrated reductions in PTSD symptoms for students in each trauma treatment (Jaycox et al., 2010).

Key Components of Implementation Support Systems

After analyzing our case studies with respect to macro- and school-level processes, our second research question was: What key components of local implementation support systems emerge from these approaches? In both case studies, macro- and school-level factors created the conditions for CBITS success. These factors were also explicitly targeted in support system activities. Below we discuss emerging core elements of the implementation support system derived from our analysis. Table 1 provides a list of these components and possible activities.

Table 1.

Description of Implementation Support System Components and Activities

| Implementation Support System Component | Sample Activities |
|---|---|
| Preimplementation activities | |
| Ongoing clinical and logistical support | |
| Promotion of fidelity | |
| Monitoring outcomes | |
| Fitting to local setting | |

Preimplementation activities

Before any groups were run, both sites undertook significant effort to lay the groundwork for CBITS. This included establishment of local partnerships, developing relations with CBITS faculty for training and consultation, engaging stakeholders (e.g., parents, teachers, administrators, other school staff), and strategic targeting of school-level implementation variables to optimize the implementation context. These “pre-implementation” activities appear critical for supporting mental health services in educational settings. At the macro level, contact with CBITS faculty was important. Although we had limited direct contact with frontline clinicians in the field, the Learning Collaborative provided a support platform upon which the sites were able to build implementation support systems tailored to their settings. JCPS’s support system was more centralized, with all clinicians working under the same umbrella. PFDL initially used this model with their core team implementation. The next step, however, required PFDL to work from school to school to create a city-wide network of CBITS providers in order to meet the needs of their growing group of schools.

Pre-implementation activities at the school level focused largely on optimizing school-level implementation factors through extensive local outreach and partnership. For example, JCPS held a Principals’ Symposium to garner support and address issues around resources and openness to CBITS. In New Orleans, PFDL did significant groundwork to understand the context in which it was working, partnered with schools to meet their most pressing needs, and educated school staff about trauma and related issues. Each site stressed the importance of these pre-implementation activities at each individual school.

Ongoing logistical and clinical supports

Both sites sustained a focus on systemic issues throughout implementation and supported clinicians on both clinical and systems-level issues. Clinical supervision had key elements identified in the literature as important to implementing interventions with quality and fidelity (Fixsen et al., 2005). Beyond these elements, supervision also included logistical support focused on school-level implementation issues, practical provider tools, and sharing of strategies. Each site provided its clinical and logistical support on a regular schedule, supplemented it with on-call assistance from site leadership and local experts, and sought out targeted consultation from CBITS faculty as needed. In addition, PFDL had newly trained providers co-lead groups with experienced CBITS clinicians.

At the same time, each site crafted its support model to capitalize on local resources, expertise, and structures. In each setting, certified CBITS trainers conducted initial training, but follow-up took different forms. In Jersey City, the AS set up a support structure that used a local expert (the agency partner) and district-employed program staff to jointly provide consultation to clinicians. In New Orleans, PFDL conducted its own weekly supervision. Once PFDL staff members were experienced, they mentored new trainees by co-leading groups with them. PFDL further enhanced local support when its director trained to become a certified CBITS trainer.

Emphasis on fidelity to the core components of the intervention

Neither site conducted formal fidelity monitoring. However, leaders at both sites explicitly promoted fidelity to the model. For example, clinicians used self-rated adherence for self-reflective and supervisory purposes. Although self-rating is not typically a reliable indicator of fidelity (Schoenwald et al., 2011), the exercise underscores the importance of fidelity. It also facilitates discussion of core intervention components and of adaptations clinicians are considering. This article is not focused on treatment adaptation, but our experience suggests that support systems should ideally promote skilled delivery of the core components while incorporating adaptations to the manual’s language, examples, and teaching techniques (e.g., greater use of visuals). Such adaptations help ensure that students understand the concepts and that intervention delivery fits the cultural and contextual needs of students, parents, and communities (Ngo et al., 2008).

Monitoring outcomes

Both sites monitored student outcomes, which were used to demonstrate success to stakeholders, support sustainability, and justify program expansion. JCPS did this for internal evaluation purposes; in New Orleans, PFDL assessed outcomes as part of a research study. Sites may collect outcomes in different ways according to their resources and capacity. It is important to note, however, that these sites were successful implementers in part because they used data to ensure quality and to demonstrate that students benefited from CBITS.

Fitting to local setting

There is little doubt that JCPS and PFDL’s success hinged on their ability to shape CBITS implementation to their local context. This involved addressing the overall context (e.g., post-disaster recovery), policy context (e.g., aligning mental health language with federal policies and district priorities), community needs (e.g., offering trauma services in the context of a service model that addressed broader student mental health needs in New Orleans), local expertise of partners (e.g., agency- and school-based partners), resources (e.g., working with indigenous staff and resources), and capacity (e.g., matching program growth to local capacity, building and sustaining partnerships). They also aligned supervision time with existing meeting structures, used local experts, and augmented the local support system with targeted consultation from CBITS trainers.

Notably, JCPS and PFDL took incremental steps before embarking on large-scale projects. As noted in each case description, CBITS began with a single school or a small set of schools before progressing to a larger rollout. By implementing CBITS on a smaller scale, sites were able to demonstrate feasibility, share initial outcomes, set up support structures and processes, and develop strategies to address implementation challenges. This theme is echoed in approaches taken in developing clinician capacity and skills (e.g., training core staff before expansion, local staff becoming certified as CBITS trainers) and in pre-implementation strategies (e.g., assessing needs, garnering key stakeholder support). Although their emphases were different, both JCPS and PFDL used “top down” and “bottom up” approaches, reaching out to district leadership as well as to frontline school staff. This process allowed them to gain allies at all levels, which ultimately helped overcome common barriers (e.g., logistics, dedicated time, and parent and school staff buy-in).

Discussion

The goal of this study was to examine the ways in which macro- and school-level factors influence the process of implementation for a school-based trauma intervention. We were also interested in identifying core components of implementation support systems that emerged from these processes. Our developmental evaluation of two case studies according to relevant factors in Domitrovich and colleagues’ (2008) conceptual model of implementation of school-based prevention and intervention programs was not designed as an empirical test of the model. Rather, it was designed as an elucidation of implementation processes in real-world contexts. The specific strategies used by these sites can inform the work of community and school stakeholders, including social workers, school psychologists, counselors, teachers, administrators, district leadership, and parents as they strive to implement similar programs. Our analysis also helps deepen our conceptual models of school-based implementation and identifies areas for future research.

Both macro- and school-level processes were critical and very much interdependent in our case studies. In JCPS, macro-level district leadership helped create school-level conditions conducive to implementation. Interestingly, Jersey City’s implementation was centralized, with broad-scale implementation emerging from within the district’s administrative structure, closely aligned to district policies and programs related to special education. In New Orleans, CBITS implementation was undertaken by an outside entity, and emphasized a more decentralized school-to-school approach. Yet, macro-level leadership and partnership remained important. These case examples suggest that both centralized and decentralized approaches can be successful, ultimately resulting in positive outcomes for students.

Our study also helped us develop an expanded and more specified conceptualization of the implementation support system that complements research from others showing the importance of standardized materials, trainings, structured coaching, and supervision (Domitrovich et al., 2008). In our analysis, we identified key activities under the categories of preimplementation work, ongoing clinical and logistical support, promotion of fidelity, monitoring outcomes, and fitting CBITS to the local setting. Of particular importance was the preimplementation or readiness phase, with its establishment of partnerships, engagement of stakeholders, and development of clinical and logistical support systems built on the existing resources and expertise. To support such processes over time, both sites integrated CBITS supervision into monthly or weekly team meetings, used clinician-reported checklists to promote fidelity to the model, reviewed clinical competencies, required data reporting, and consulted with national CBITS trainers. Notably, the collaborative processes used to achieve this echo the same approaches we took in the development of the intervention (e.g., allowing schools to identify their needs, matching needs with evidence-based interventions, building on existing resources and strengths, empowering partners, tailoring to fit the context, building sustainability).

Our role as treatment developers and expert trainers is an important component of the support system. Although both sites examined in this article participated in a Learning Collaborative, our involvement varies according to the specific circumstances of the site. For EBPs disseminated similarly to CBITS, our experience highlights different ways that treatment developers can support implementation, some being more hands-on (e.g., Learning Collaborative, partnered research studies) than others (e.g., periodic consultation). Of course, successful implementation can take place without such supports, and can even improve outcomes for the children served, but the process may be more difficult without the support of experts to troubleshoot and enhance the work (Goodkind, Lanoue, & Milford, 2010). Which approach is indicated depends on the experience, expertise, and partnerships in the implementing sites, as well as resources (grants, funds for ongoing consultation). Such elements of support systems are not emphasized in intervention manuals or research papers and should be better integrated into training of practitioners in new practices.

There are several implications for further research. Although this article focused on macro- and school-level processes, fidelity to the core components of an EBP has been shown to result in positive outcomes. However, the sites described here did not implement stringent monitoring of fidelity (e.g., observation by supervisors, audiotaped sessions). This could be viewed as a shortcoming on the part of the implementing sites. However, it is important to bear in mind that most sites implementing EBPs are not involved in research. This raises the need for efficient and effective fidelity monitoring methods that can be used for program evaluation in routine practice settings (Essock, Covell, Shear, Donahue, & Felton, 2006; Schoenwald et al., 2011). Such methods should cover both adherence to the model and indicators of demonstrated competence (i.e., level of skill and judgment used; Schoenwald et al., 2011).

The implementation experiences described in this article highlight the importance of understanding an evidence-based intervention within its context, with a focus on the specific strategies and processes undertaken by implementing sites. However, there is limited capacity for data collection in service settings. Rigorous research, whether qualitative, quantitative, or mixed method, requires funding, expertise, and resources. As such, it is important to continue to foster research–community partnerships that can seek grant funding for implementation research.

This focus has methodological implications for school psychology research. To learn from real-world implementation efforts, it would be advantageous to use alternative forms of knowledge generation, which can then be used to shape research questions. The developmental evaluation approach we used to examine the case studies in this article represents one such approach. A complementary approach, which we have used for the past two years, is an annual CBITS Summit. The summit extends the approach described in this article by creating a condensed, intensive implementation support experience. It brings together diverse sites that have implemented CBITS in a variety of contexts (e.g., urban, rural) and with diverse populations (e.g., different ethnic communities). This allows for shared learning among stakeholders from multiple schools, clinicians, and researchers. The in-person experience promotes sharing of implementation support models, engagement strategies, approaches for tailoring to different cultural contexts, program enhancements, evaluation methods, and clinician tools. Such meetings also generate common research agendas, such as identifying ways to assess how CBITS affects academic performance.

For practitioners in the field of school psychology, our study highlights practical, real-world strategies for implementing mental health programs. One particularly relevant implementation innovation is JCPS’s alignment of CBITS, a mental health-focused intervention, with academic priorities. The district was able to align behavioral supports and CBITS explicitly with federal policies and district special education programs in order to serve a broad spectrum of students from special education and general education. They collected preliminary data on the academic effect of CBITS, which showed that one-quarter of students who received CBITS had academic gains and almost two-thirds maintained their grades. Similarly, we have found that students who received CBITS early in the school year were more likely than students who received CBITS later in the school year to have a passing grade of “C” or higher in both language arts and math (Kataoka et al., in press). These findings are consistent with data from others supporting the association between social emotional and academic success (Durlak, Weissberg, Dymnicki, Taylor, & Schellinger, 2011; Zins, Bloodworth, Weissberg, & Walberg, 2004). By understanding how programs like CBITS can address the mission of learning, whether through special education services or improving classroom performance, school psychologists and other school-based clinicians can collaborate with educators on shared goals for students.

In this article, we examined sites that had implemented CBITS on a relatively large scale. However, the processes and the components of the support system apply to individual providers at the local school level as well. School psychologists are uniquely poised to balance mental health and academic priorities within schools in the planning, implementation, and sustainability of programs such as CBITS. Given their training and expertise, school psychologists can provide valuable assessment to determine who is appropriate for services like CBITS. They can also implement CBITS groups, provide logistical support to other providers, and educate school staff about the effect that trauma can have on learning. In addition, school psychologists can provide leadership and consultation at the school and district level, making connections between programs like CBITS and primary, secondary, and tertiary prevention.

There are limitations to the current study. Although developmental evaluation is an important methodology for elucidating complex processes in dynamic settings (Patton, 2011), findings may not generalize to other contexts. For example, both our case studies were set in large, urban areas with many students affected by poverty. Moreover, one site was examined in a postdisaster context. In addition, both sites participated in a national CBITS Learning Collaborative, which may make them different from others. Examination of smaller-scale program rollouts, rural settings, and sites that did not have the same kind of contact with experts or researchers would help to provide further understanding of implementation theories applied to schools. In addition, our primary informants did not include parents and students. Parents have important perspectives on family–school collaboration, a key aspect of school climate, school culture, and organizational health, which should be included in future research.

Despite these limitations, this article provides specific examples of real-world implementation processes that help to confirm and expand conceptual models of implementation and highlight specific factors important in taking a school-based mental health intervention to scale. Our examination of successful implementation sites demonstrates how macro- and school-level implementation factors can interact to create the conditions conducive to quality program delivery. Identification of support system elements, such as pre-implementation work, ongoing clinical and logistical supports, promotion of fidelity to the core components of the intervention, tailored implementation to fit the service context, and a value on monitoring child outcomes can aid activities of practitioners in the field and inform future research.

Acknowledgments

The authors are grateful to their collaborators and partners whose work helped to shape this article: Ruth Campbell, Laura Danna, Priscilla Petrosky, Martha Santiago, Pamela Vona, Douglas Walker, and Marleen Wong. The authors also acknowledge the editorial guidance of Elise Cappella. The writing of this article was supported by National Institute of Mental Health (NIMH) 5K01MH83694 (EN) and MH082712 (SK) and Substance Abuse and Mental Health Services Administration (SAMHSA; SM59285).

Biographies

Erum Nadeem, PhD, is an assistant professor and research scientist at New York State Psychiatric Institute, Columbia University, where she has a Career Development Award from the NIMH to use community-based participatory research methods to enhance the alignment between the goals of Cognitive Behavioral Intervention for Trauma in Schools (CBITS) and the mission and structure of the school setting. She also works on projects evaluating child mental health service initiatives through the New York State Office of Mental Health (NYSOMH), including the Evidence Based Treatment Dissemination Center, which provides training and consultation to community clinicians delivering EBTs for common childhood disorders (e.g., disruptive behavior disorders, depression, PTSD). She received her PhD in clinical psychology at the University of California, Los Angeles (UCLA) and completed her postdoctoral training in the UCLA Department of Psychiatry and Biobehavioral Sciences as part of the UCLA/RAND Health Services Research Training Program.

Lisa H. Jaycox, PhD, a clinical psychologist and senior behavioral scientist at RAND, has both clinical and research expertise in trauma and school mental health interventions, with a focus on traumatized children and adolescents. She is the developer of CBITS and the adapted form of CBITS for nonclinicians called SSET. Her work focuses on disseminating evidence-based interventions into community settings, and evaluating existing community programs to determine their effect.

Sheryl H. Kataoka, MD, MSHS, is an associate professor in the UCLA Division of Child and Adolescent Psychiatry and training director of the UCLA Child Psychiatry Fellowship. Her clinical and research career has focused on the access to and provision of culturally appropriate mental health services, especially for poor and ethnic minority children. She is studying ways to improve the quality of trauma-related mental health services for special education students, how a community participatory partnership facilitated the implementation of CBITS in an immigrant Latino faith-based community, and implementation factors and organizational characteristics of service delivery in schools in post-Katrina New Orleans. She was recently awarded an NIMH grant to study an adaptation of the Learning Collaborative as an implementation strategy for schools. She received the 2009 Sidney Berman Award for School-Based Study and Intervention for Learning Disorders and Mental Health from the American Academy of Child and Adolescent Psychiatry.

Audra K. Langley, PhD, is an assistant professor in the UCLA Division of Child and Adolescent Psychiatry and the director of training for the SAMHSA-funded Trauma Service Adaptation Center for Schools. She also serves as chair of the National Child Traumatic Stress Network (NCTSN) School Committee. She is a researcher and clinician specializing in cognitive behavioral treatment for children and adolescents with PTSD, anxiety, and related disorders. She has directed CBITS training and consultation efforts for the past nine years, working closely with clinicians and administrators serving high-risk youth in schools and NCTSN-funded sites across the country. Via her current National Institutes of Health Career Development Award, she is partnering with schools to develop and pilot an intervention for elementary school students exposed to traumatic events.

Bradley D. Stein, MD, PhD, is a senior natural scientist at the RAND Corporation and associate professor of child and adolescent psychiatry at the University of Pittsburgh. He has extensive experience in examining the implementation of mental health interventions in schools and other child serving settings, with a focus on school responses to trauma and violence. He was part of the original team to develop, implement, and evaluate CBITS in the Los Angeles Unified School District, and also has evaluated the implementation of school suicide prevention programs. He has been involved in the mental health response to multiple disasters, including the Oklahoma City bombing and the crash of TWA 800. He also worked as a humanitarian aid worker in the former Yugoslavia for the majority of 1994. His trauma-related research includes analyses of national survey data regarding Americans’ response to terrorism and an examination of health-related behaviors during the anthrax attacks in Washington, DC, in the fall of 2001. He was the recipient of the 2006 Norbert and Charlotte Rieger Service Program Award for Excellence from the American Academy of Child and Adolescent Psychiatry, which recognizes innovative programs that address prevention, diagnosis, or treatment of mental illness in children and serve as model programs to the community.

Contributor Information

Erum Nadeem, Columbia University & New York State Psychiatric Institute

Lisa H. Jaycox, RAND Corporation

Sheryl H. Kataoka, University of California, Los Angeles

Audra K. Langley, University of California, Los Angeles

Bradley D. Stein, RAND Corporation

References

- Cohen JA, Deblinger E, Mannarino A, Steer R. A multisite, randomized controlled trial for children with sexual abuse-related PTSD symptoms. Journal of the American Academy of Child & Adolescent Psychiatry. 2004;43:393–402. doi: 10.1097/00004583-200404000-00005.

- Cohen JA, Jaycox LH, Walker DW, Mannarino AP, Langley AK, DuClos JL. Treating traumatized children after Hurricane Katrina: Project Fleur-de-lis. Clinical Child and Family Psychology Review. 2009;12:55–64. doi: 10.1007/s10567-009-0039-2.

- Cohen JA, Mannarino AP, Berliner L, Deblinger E. Trauma-focused cognitive behavioral therapy for children and adolescents: An empirical update. Journal of Interpersonal Violence. 2000;15:1202–1223.

- Domitrovich CE, Bradshaw CP, Poduska JM, Hoagwood K, Buckley JA, Olin S, et al. Maximizing the implementation quality of evidence-based preventive interventions in schools: A conceptual framework. Advances in School Mental Health Promotion. 2008;1:6–27. doi: 10.1080/1754730x.2008.9715730.

- Durlak J, Weissberg R, Dymnicki A, Taylor R, Schellinger K. The impact of enhancing students’ social and emotional learning: A meta-analysis of school-based universal interventions. Child Development. 2011;82:405–432. doi: 10.1111/j.1467-8624.2010.01564.x.

- Essock SM, Covell NH, Shear KM, Donahue SA, Felton CJ. Use of clients’ self-reports to monitor Project Liberty clinicians’ fidelity to a cognitive-behavioral intervention. Psychiatric Services. 2006;57:1320–1323. doi: 10.1176/ps.2006.57.9.1320.

- Farmer EM, Burns BJ, Phillips SD, Angold A, Costello EJ. Pathways into and through mental health services for children and adolescents. Psychiatric Services. 2003;54:60–66. doi: 10.1176/appi.ps.54.1.60.

- Feldstein AC, Glasgow RE. A practical, robust implementation and sustainability model (PRISM) for integrating research findings into practice. The Joint Commission Journal on Quality and Patient Safety. 2008;34:228–243. doi: 10.1016/s1553-7250(08)34030-6.

- Fixsen DL, Naoom SF, Blasé KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network; 2005.

- Foa EB, Dancu CV, Hembree EA, Jaycox LH, Meadows EA, Street GP. A comparison of exposure therapy, stress inoculation training, and their combination for reducing posttraumatic stress disorder in female assault victims. Journal of Consulting and Clinical Psychology. 1999;67:194–200. doi: 10.1037//0022-006x.67.2.194.

- Gamble J. A developmental evaluation primer. Montreal, Québec, Canada; 2008.

- Garrison EG, Roy IS, Azar V. Responding to the mental health needs of Latino children and families through school-based services. Clinical Psychology Review. 1999;19:199–219. doi: 10.1016/s0272-7358(98)00070-1.

- Goodkind JR, Lanoue MD, Milford J. Adaptation and implementation of cognitive behavioral intervention for trauma in schools with American Indian youth. Journal of Clinical Child and Adolescent Psychology. 2010;39:858–872. doi: 10.1080/15374416.2010.517166.

- Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O. Diffusion of innovations in service organizations: Systematic review and recommendations. Milbank Quarterly. 2004;82:581–629. doi: 10.1111/j.0887-378X.2004.00325.x.

- Institute for Healthcare Improvement. The breakthrough series. Boston, MA: Author; 2003.

- Jaycox LH. Cognitive-Behavioral Intervention for Trauma in Schools. Longmont, CO: Sopris West Educational Services; 2003.

- Jaycox LH, Cohen JA, Mannarino AP, Walker DW, Langley AK, Gegenheimer KL, et al. Children’s mental health care following Hurricane Katrina: A field trial of trauma-focused psychotherapies. Journal of Traumatic Stress. 2010;23:223–231. doi: 10.1002/jts.20518.

- Jaycox LH, Kataoka SH, Stein BD, Wong M, Langley A. Responding to the needs of the community: A stepped care approach to implementing trauma-focused interventions in schools. Report on Emotional and Behavioral Disorders in Youth. 2005;5:85–88, 100–103.

- Jaycox LH, Langley AK, Stein BD, Wong M, Sharma P, Scott M, et al. Support for students exposed to trauma: A pilot study. School Mental Health. 2009;1:49–60. doi: 10.1007/s12310-009-9007-8.

- Joyce B, Showers B. Student achievement through staff development. 3rd ed. Alexandria, VA: Association for Supervision and Curriculum Development; 2002.

- Kataoka SH, Fuentes S, O’Donoghue VP, Castillo-Campos P, Bonilla A, Halsey K, et al. A community participatory research partnership: The development of a faith-based intervention for children exposed to violence. Ethnicity and Disease. 2006;16:S89–S97.

- Kataoka SH, Jaycox LH, Wong M, Nadeem E, Langley AK, Tang L, et al. Effects on school outcomes in low-income minority youth: Preliminary findings from a community-partnered study of a school trauma intervention. Ethnicity and Disease. In press.

- Kataoka SH, Nadeem E, Wong M, Langley AK, Jaycox LH, Stein BD, et al. Improving disaster mental health care in schools: A community-partnered approach. American Journal of Preventive Medicine. 2009;37:S225–S229. doi: 10.1016/j.amepre.2009.08.002.

- Kataoka SH, Stein BD, Jaycox LH, Wong M, Escudero P, Tu W, et al. A school-based mental health program for traumatized Latino immigrant children. Journal of the American Academy of Child & Adolescent Psychiatry. 2003;42:311–318. doi: 10.1097/00004583-200303000-00011.

- Kataoka SH, Zhang L, Wells KB. Unmet need for mental health care among U.S. children: Variation by ethnicity and insurance status. American Journal of Psychiatry. 2002;159:1548–1555. doi: 10.1176/appi.ajp.159.9.1548.

- Kelly JA, Somlai AM, DiFranceisco WJ, Otto-Salaj LL, McAuliffe TL, Hackl KL, et al. Bridging the gap between the science and service of HIV prevention: Transferring effective research-based HIV prevention interventions to community AIDS service providers. American Journal of Public Health. 2000;90:1082–1088. doi: 10.2105/ajph.90.7.1082.

- Langley AK, Nadeem E, Kataoka SH, Stein BD, Jaycox LH. Evidence-based mental health programs in schools: Barriers and facilitators of successful implementation. School Mental Health. 2010;2:105–113. doi: 10.1007/s12310-010-9038-1.

- Macy RD, Macy DJ, Gross S, Brighton P. Classroom-camp-community-culture based intervention: Basic training manual for the 9-session CBI. Boston: The Center for Trauma Psychology; 2006.

- March JS, Amaya-Jackson L, Murray MC, Schulte A. Cognitive-behavioral psychotherapy for children and adolescents with posttraumatic stress disorder after a single-incident stressor. Journal of the American Academy of Child & Adolescent Psychiatry. 1998;37:585–593. doi: 10.1097/00004583-199806000-00008.

- McCarthy KF, Peterson DJ, Sastry N, Pollard M. The repopulation of New Orleans after Hurricane Katrina. Santa Monica, CA: RAND; 2006.

- Ngo V, Langley A, Kataoka SH, Nadeem E, Escudero P, Stein BD. Providing evidence-based practice to ethnically diverse youths: Examples from the Cognitive Behavioral Intervention for Trauma in Schools (CBITS) program. Journal of the American Academy of Child & Adolescent Psychiatry. 2008;47:858–862. doi: 10.1097/CHI.0b013e3181799f19.

- Patton MQ. Developmental evaluation: Applying complexity concepts to enhance innovation and use. New York: Guilford Press; 2011.

- Reschly DJ, Bergstrom MK. Response to intervention. In: Gutkin TB, Reynolds CR, editors. The handbook of school psychology. New York: Wiley; 2009.

- Rogers EM. Diffusion of innovations. New York: Free Press; 1995.

- Schoenwald SK, Garland AF, Chapman JE, Frazier SL, Sheidow AJ, Southam-Gerow MA. Toward the effective and efficient measurement of implementation fidelity. Administration and Policy in Mental Health. 2011;38:32–43. doi: 10.1007/s10488-010-0321-0.

- Schultz D, Barnes-Proby D, Chandra A, Jaycox LH, Maher E, Pecora P. Toolkit for adapting Cognitive Behavioral Intervention for Trauma in Schools (CBITS) or Supporting Students Exposed to Trauma (SSET) for implementation with youth in foster care. Santa Monica, CA: RAND; 2010.

- Stein BD, Jaycox LH, Kataoka SH, Wong M, Tu W, Elliott MN, et al. A mental health intervention for schoolchildren exposed to violence: A randomized controlled trial. Journal of the American Medical Association. 2003;290:603–611. doi: 10.1001/jama.290.5.603.

- Stein BD, Kataoka SH, Jaycox LH, Steiger EM, Wong M, Fink A, et al. The mental health for immigrants program: Program design and participatory research in the real world. In: Weist MD, editor. Handbook of school mental health: Advancing practice and research. Issues in clinical child psychology. New York: Kluwer Academic/Plenum Publishers; 2003. pp. 179–190.

- Stein BD, Kataoka SH, Jaycox LH, Wong M, Fink A, Escudero P, et al. Theoretical basis and program design of a school-based mental health intervention for traumatized immigrant children: A collaborative research partnership. Journal of Behavioral Health Services and Research. 2002;29:318–326. doi: 10.1007/BF02287371.

- Stoiber KC, Kratochwill TR. Empirically supported interventions and school psychology: Rationale and methodological issues—Part I. School Psychology Quarterly. 2000;15:75–105.

- Walker DW. A school-based mental health service model for youth exposed to disasters: Project Fleur-de-lis. The Prevention Researcher. 2008;15:11–13.

- Wells KB, Staunton A, Norris KC, Bluthenthal R, Chung B, Gelberg L, et al. Building an academic-community partnered network for clinical services research: The Community Health Improvement Collaborative (CHIC). Ethnicity and Disease. 2006;16:S3–S17.

- Wong M. Commentary: Building partnerships between schools and academic partners to achieve a health-related research agenda. Ethnicity and Disease. 2006;16:149–153.

- Wong M, Rosemond M, Stein BD, Langley AK, Kataoka SH, Nadeem E. School-based intervention for adolescents exposed to violence. The Prevention Researcher. 2007;14:17–20.

- Yin RK. Case study research: Design and methods. 4th ed. Thousand Oaks, CA: Sage Publications; 2009.

- Zins JE, Bloodworth MR, Weissberg RP, Walberg HJ. The scientific base linking social and emotional learning to school success. In: Zins JE, Weissberg RP, Wang MC, Walberg HJ, editors. Building academic success on social and emotional learning: What does the research say? New York: Teachers College Press; 2004. pp. 3–22.