Abstract

This paper studies the intrinsic connection between the generalized LASSO and the basic LASSO formulation. The former extends the latter by applying a regularization matrix to the coefficients. We show that when the regularization matrix is even- or under-determined and full rank conditions hold, the generalized LASSO can be transformed into the LASSO form via the Lagrangian framework. In addition, we show that some published results on the LASSO can be extended to the generalized LASSO, and that some variants of the LASSO, e.g., the robust LASSO, can be rewritten in the generalized LASSO form and hence transformed into the basic LASSO. Based on this connection, many existing results concerning the LASSO, e.g., efficient LASSO solvers, can be used for the generalized LASSO.

Keywords: LASSO, generalized LASSO, robust LASSO, deconvolution, solution path, diagonally dominant, total variation

1. Introduction

The least absolute shrinkage and selection operator (LASSO) [1] has been one of the most popular approaches to sparse linear regression over the last decade. It is usually formulated as

$$\min_{x} \; \tfrac{1}{2}\|y - Ax\|^2 + \lambda\|x\|_1,$$

where $y \in \mathbb{R}^n$ gathers n observed measurements; $A \in \mathbb{R}^{n \times p}$ contains p predictors of dimension n; $x \in \mathbb{R}^p$ contains p coefficients; $\|\cdot\|$ and $\|\cdot\|_1$ stand for the ℓ2- and ℓ1-norm, respectively; and λ > 0 is the regularization parameter, controlling the tradeoff between data fidelity and model complexity. The most attractive feature of the LASSO is its ℓ1-norm regularization, which yields sparse coefficients. The ℓ1-norm regularization also results in the piecewise linearity [2] of the solution path {x*(λ) | λ ∈ (0, +∞)} (i.e., the set of solutions as the regularization parameter λ varies continuously), allowing for efficient reconstruction of the whole solution path. Based on this property, well-known path tracking algorithms such as least angle regression (LARS) [3] and homotopy [2, 4] have been developed. LARS is a greedy algorithm working with decreasing λ values. At each iteration, a dictionary atom is selected and appended to the previously selected set, and the next critical λ value is computed. Homotopy is an extension of LARS that performs both forward selection of new atoms and backward removal of atoms already selected.

The generalized LASSO [5] (or analysis [6, 7], least mixed norm [8]) extends the basic LASSO (or synthesis) by imposing a regularization matrix $D \in \mathbb{R}^{m \times p}$ on the coefficient vector x:

$$\min_{x} \; \tfrac{1}{2}\|y - Ax\|^2 + \lambda\|Dx\|_1,$$

where D typically contains the prior knowledge (e.g., structure information) [9] about x. For example, if x is expected to be a piecewise constant signal (i.e., its first order derivative is sparse), then D is taken to be the first order derivative operator [10]. Some variants of the LASSO can be regarded as a generalized LASSO by forming a structured matrix D. For example, the fused LASSO proposed by Tibshirani et al. [10] imposes the ℓ1 regularization on both the coefficients and their first order derivatives to encourage the solution to be locally constant. When one of the two regularization parameters is fixed, a fused LASSO problem can be rewritten as a generalized LASSO by cascading a scaled identity matrix with a first order derivative matrix to form the D matrix. The graph-guided fused LASSO [11, 12] incorporates the network prior information into D for correlation structure. Applications of the generalized LASSO can be found in image restoration [8], visual recognition [13], electroencephalography (EEG) [14], bioinformatics [15], ultrasonics [16], etc.

Since the LASSO was proposed, results concerning the recovery condition [3, 17], solution path properties [18], degrees of freedom [19], model selection consistency [20], and efficient algorithms and software [2, 4] have been widely studied. One may want to know whether these results are applicable to a generalized LASSO problem, e.g., whether a generalized LASSO problem can be solved with a LASSO solver. An immediate example is when D is a full rank square (hence invertible) matrix. By a simple change of variables u = Dx, the original generalized LASSO problem can be transformed into the basic LASSO form with predictor matrix $AD^{-1}$ and coefficient vector u. Therefore it can be solved by calling a LASSO subroutine.

Although the generalized LASSO and LASSO have been studied from various aspects [5, 7, 21], their connections are not fully explored, which is the main focus of this paper. Elad et al. [6] showed that they are equivalent, but confined the discussion to the denoising case, where A is an identity matrix. Tibshirani and Taylor [5] showed that the former can be transformed to the latter; however, their method needs to introduce a matrix D0 (see Appendices), which brings other potential questions as discussed in the conclusion.

The paper is organized as follows. In Section 2, we show that when the regularization matrix is even- or under-determined with full rank conditions, the generalized LASSO can be transformed into the LASSO form via the Lagrangian framework. Based on this formula, in Section 3 we show that some published results on the LASSO can be extended to the generalized LASSO. In Section 4, two variants of the LASSO, namely the regularized deconvolution and the robust LASSO, are analyzed under the generalized LASSO framework. We conclude the paper in Section 5.

2. Condition and formula of transformation

The simplification of the generalized LASSO depends on the setting of D [6]. For the even-determined case (m = p)¹, if D has full rank, by a simple change of variables u = Dx, the original generalized LASSO problem can be transformed into the basic LASSO with predictor matrix $AD^{-1}$ and coefficient vector u. Once the LASSO solution û(λ) is known, the original generalized LASSO solution is immediate: $\hat{x}(\lambda) = D^{-1}\hat{u}(\lambda)$.
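The change of variables for an invertible D can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the helper names (`genlasso_objective`, `lasso_objective`, `to_lasso_invertible_D`) and the test matrices are illustrative choices, not taken from the paper. It verifies that the generalized LASSO criterion at x and the LASSO criterion at u = Dx take the same value, and that x is recovered from u.

```python
import numpy as np

def genlasso_objective(A, D, y, x, lam):
    """Generalized LASSO criterion 0.5*||y - A x||^2 + lam*||D x||_1."""
    r = y - A @ x
    return 0.5 * r @ r + lam * np.abs(D @ x).sum()

def lasso_objective(H, z, u, lam):
    """Basic LASSO criterion 0.5*||z - H u||^2 + lam*||u||_1."""
    r = z - H @ u
    return 0.5 * r @ r + lam * np.abs(u).sum()

def to_lasso_invertible_D(A, D):
    """Predictor matrix H = A D^{-1} for a square invertible D."""
    # Solve D^T H^T = A^T instead of forming D^{-1} explicitly.
    return np.linalg.solve(D.T, A.T).T

# Illustrative data (assumed, not from the paper).
rng = np.random.default_rng(0)
n, p = 8, 5
A = rng.standard_normal((n, p))
D = np.eye(p) - np.diag(np.ones(p - 1), k=-1)  # lower bidiagonal, invertible
y = rng.standard_normal(n)
x = rng.standard_normal(p)
lam = 0.7

H = to_lasso_invertible_D(A, D)
u = D @ x                         # change of variables
gap = abs(genlasso_objective(A, D, y, x, lam) - lasso_objective(H, y, u, lam))
x_back = np.linalg.solve(D, u)    # x = D^{-1} u
```

Any LASSO solver can then be run on (H, y), and its solution mapped back with `np.linalg.solve(D, u_hat)`.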

For the over-determined case (m > p), if we change the variable x to u, then u has a higher dimension than x, i.e., more degrees of freedom. The original solution is guaranteed to be found only if the solution û satisfies the constraint DD†û = û, where D† is the Moore-Penrose pseudoinverse of D [6]. Therefore, in this case the generalized LASSO cannot be transformed into a basic LASSO, but rather into a LASSO with an equality constraint [22].

We consider the under-determined case (m < p). The following Theorem 1 states that a generalized LASSO problem can be transformed into a basic LASSO form under some conditions.

Theorem 1

If the matrix $\Phi = \begin{bmatrix} A \\ D \end{bmatrix} \in \mathbb{R}^{(n+m) \times p}$ has full column rank (implying m + n ≥ p), and D has full row rank (implying m ≤ p), then the generalized LASSO problem can be transformed into the following LASSO form:

$$\min_{u} \; \tfrac{1}{2}\|z - Hu\|^2 + \lambda\|u\|_1, \tag{1}$$

where

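$$H = Q_1 Q_2^{\dagger}, \qquad z = \left[I_n - Q_1\left(I_p - Q_2^{\dagger}Q_2\right)Q_1^T\right]y, \qquad Q_2^{\dagger} = Q_2^T\left(Q_2Q_2^T\right)^{-1}, \tag{2}$$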

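and $Q_1 \in \mathbb{R}^{n \times p}$ and $Q_2 \in \mathbb{R}^{m \times p}$ are defined from the (unique) QR decomposition

$$\Phi = \begin{bmatrix} A \\ D \end{bmatrix} = QR = \begin{bmatrix} Q_1 \\ Q_2 \end{bmatrix} R. \tag{3}$$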

Proof

Since Φ has full column rank, its QR decomposition (3) is unique and the square matrix $R \in \mathbb{R}^{p \times p}$ is invertible. Moreover, $Q_2 \in \mathbb{R}^{m \times p}$ has full row rank because D has full row rank. (3) rereads:

$$A = Q_1 R, \tag{4}$$

$$D = Q_2 R. \tag{5}$$

From $Q^TQ = I_p$, we have

$$Q_1^TQ_1 + Q_2^TQ_2 = I_p. \tag{6}$$

The generalized LASSO problem is equivalent to the following constrained optimization problem

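$$\min_{x,\,u} \; \tfrac{1}{2}\|y - Ax\|^2 + \lambda\|u\|_1 \quad \text{subject to} \quad u = Dx. \tag{7}$$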

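The Lagrange function associated with (7) reads

$$\mathcal{L}(x, u, \mu) = \tfrac{1}{2}\|y - Ax\|^2 + \lambda\|u\|_1 + \mu^T(Dx - u),$$

where $\mu \in \mathbb{R}^m$ gathers m Lagrange multipliers. The optimality conditions with respect to x and μ read

$$A^T(Ax - y) + D^T\mu = 0, \qquad Dx - u = 0.$$

From these two equations, we have the following system

$$\begin{bmatrix} A^TA & D^T \\ D & 0 \end{bmatrix} \begin{bmatrix} x \\ \mu \end{bmatrix} = \begin{bmatrix} A^Ty \\ u \end{bmatrix}. \tag{8}$$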

If the matrix

$$M = \begin{bmatrix} A^TA & D^T \\ D & 0 \end{bmatrix} \tag{9}$$

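is invertible, we can find the equivalent form of the generalized LASSO problem. By substituting A and D with (4) and (5) respectively, we have

$$M = \begin{bmatrix} R^T & 0 \\ 0 & I_m \end{bmatrix} \begin{bmatrix} Q_1^TQ_1 & Q_2^T \\ Q_2 & 0 \end{bmatrix} \begin{bmatrix} R & 0 \\ 0 & I_m \end{bmatrix},$$

where $I_m \in \mathbb{R}^{m \times m}$ is the identity matrix. Since R and $I_m$ are full rank, the invertibility of M is equivalent to that of

$$N = \begin{bmatrix} Q_1^TQ_1 & Q_2^T \\ Q_2 & 0 \end{bmatrix}.$$

From (6),

$$N = \begin{bmatrix} I_p - Q_2^TQ_2 & Q_2^T \\ Q_2 & 0 \end{bmatrix}.$$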
From Woodbury’s matrix identity [23, p.141], N is invertible if and only if

is invertible. Since

and (Q2 is full rank), the invertibility of W is guaranteed, and therefore M is invertible.

From the block matrix inversion lemma [23, p.108], we have

Therefore,

and

where . Finally, from (8) we have

$$x = R^{-1}\left[\left(I_p - Q_2^{\dagger}Q_2\right)Q_1^Ty + Q_2^{\dagger}u\right] \tag{10}$$

and

By substituting (10) into (7), we obtain the results (1) and (2).

Once the solution path {û(λ)|λ ∈ (0, +∞)} is known, the corresponding solution path {x̂(λ)|λ ∈ (0, +∞)} can be calculated according to (10).

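By substituting $Q_1 = AR^{-1}$ and $Q_2 = DR^{-1}$ into (2), and utilizing

$$\Psi \triangleq A^TA + D^TD = R^TR, \tag{11}$$

up to a few manipulations, a useful equivalent form of (2) without QR decomposition is obtained as

$$H = A\Psi^{-1}D^T\left(D\Psi^{-1}D^T\right)^{-1}, \qquad z = \left[I_n - A\Psi^{-1}A^T + H D\Psi^{-1}A^T\right]y. \tag{12}$$

And from (10), x can be recovered as

$$x = \Psi^{-1}A^Ty + \Psi^{-1}D^T\left(D\Psi^{-1}D^T\right)^{-1}\left(u - D\Psi^{-1}A^Ty\right). \tag{13}$$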

Note that H has the same dimensionality as AD†, but normally they are not equal unless Ψ is a diagonal matrix.
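The QR-free form of the transformation lends itself to a short numerical check. The sketch below assumes NumPy and assumes that Ψ = AᵀA + DᵀD, with H, z and the recovery map obtained by eliminating x from the constrained problem (7); the function name `genlasso_to_lasso` and the random test data are hypothetical. It confirms that the recovered x satisfies Dx = u and that the residuals of the two formulations coincide.

```python
import numpy as np

def genlasso_to_lasso(A, D, y):
    """Return (H, z, recover) for the under-determined case.

    Assumes [A; D] has full column rank and D has full row rank,
    and uses Psi = A^T A + D^T D (an assumption of this sketch).
    """
    Psi = A.T @ A + D.T @ D
    Pinv_At_y = np.linalg.solve(Psi, A.T @ y)   # Psi^{-1} A^T y
    Pinv_Dt = np.linalg.solve(Psi, D.T)         # Psi^{-1} D^T
    G = D @ Pinv_Dt                             # D Psi^{-1} D^T (m x m)
    B = Pinv_Dt @ np.linalg.inv(G)              # satisfies D B = I_m
    H = A @ B
    x0 = Pinv_At_y - B @ (D @ Pinv_At_y)        # minimizer for u = 0
    z = y - A @ x0

    def recover(u):
        # x(u) minimizes ||y - A x|| subject to D x = u.
        return x0 + B @ u

    return H, z, recover

# Illustrative data (assumed): n = 6, m = 3, p = 5, so m < p <= m + n.
rng = np.random.default_rng(1)
A = rng.standard_normal((6, 5))
D = rng.standard_normal((3, 5))
y = rng.standard_normal(6)
u = rng.standard_normal(3)

H, z, recover = genlasso_to_lasso(A, D, y)
x = recover(u)
```

Given a LASSO solution `u_hat` for the pair (H, z) from any solver, `recover(u_hat)` would return the corresponding generalized LASSO solution under these assumptions.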

Remark 1

Many real-world problems satisfy this condition, e.g., the total variation denoising problem, where A and D are an identity and a first order derivative matrix, respectively. By substituting A and D into M in (9), one can check that M is invertible, and hence the condition in Theorem 1 is satisfied. Two other examples are the regularized deconvolution problem and the robust LASSO, which are presented in detail in Sec. 4.

Remark 2

Under the case m + n = p, Φ is a full rank (square) matrix. Therefore, the row vectors of Q are orthonormal, and $Q_1Q_2^T = 0$. From (1)–(2), we have H = 0 and the solution is obviously û = 0. According to (10), the original solution is $\hat{x} = R^{-1}Q_1^Ty$. This solution does not depend on λ, and the solution path {x̂(λ) | λ ∈ (0, +∞)} is a singleton.

Another viewpoint is obtained by a change of variables. Defining υ = Rx, the generalized LASSO problem rereads

$$\min_{\upsilon} \; \tfrac{1}{2}\|y - Q_1\upsilon\|^2 + \lambda\|Q_2\upsilon\|_1. \tag{14}$$

Since the rows of $Q_1$ and $Q_2$ form an orthonormal basis, the minimum ℓ2-norm least squares solution satisfies $Q_2\hat{\upsilon} = 0$. This means that υ̂ minimizes both the ℓ2- and ℓ1-term simultaneously. Therefore, the cost function in (14) is equal to 0 for any λ > 0.

Remark 3

Under the case m + n < p, Null(Φ) ≠ {0}; therefore one can check that the generalized LASSO does not yield a unique solution, and there are infinitely many solutions with Dx* = 0 and Ax* = y for any λ > 0.

Note that this case does not satisfy the condition in Theorem 1 since Φ does not have full column rank. This case serves to complete the discussion.

3. Extension of existing LASSO results

Since the LASSO has been intensively studied during the last decade, many results concerning the computational issue have been published. In this section, we extend some of them into the generalized LASSO problem.

3.1. Monotonic solution path property

Our earlier result [18, Theorem 2] shows that if $(H^TH)^{-1}$ is diagonally dominant, then the solution path of the corresponding LASSO problem changes monotonically with respect to λ. In other words, the solution path has at most m segments. Therefore, the ‘forward’ algorithm, LARS, yields the same solution path as the ‘forward-backward’ algorithm, homotopy, and both of them can recover the complete solution path within m iterations. Based on this fact, the computational complexity can be reduced. The following corollaries extend this result to the generalized LASSO.

Corollary 1

If a generalized LASSO problem satisfying the conditions of Theorem 1 also satisfies that $\left((D\Psi^{-1}D^T)^{-1} - I\right)^{-1}$ is diagonally dominant, then the complete solution path can be recovered within m iterations of the LARS and homotopy algorithms.

Proof

When a generalized LASSO problem satisfies the conditions of Theorem 1, it can be transformed into a LASSO problem with H and z defined in (2). By utilizing equations (4), (5), (6) and (11), we have

$$H^TH = \left(D\Psi^{-1}D^T\right)^{-1} - I,$$

therefore, $(H^TH)^{-1}$ is diagonally dominant. From [18], this corollary is straightforward.

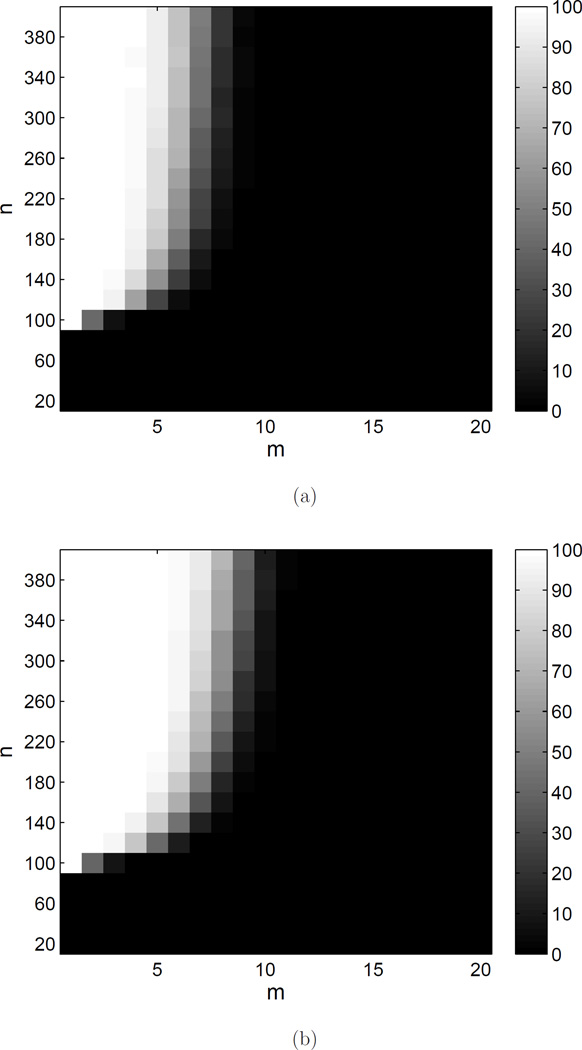

Many matrices satisfy the above condition. Obvious examples are orthogonal dictionaries such as the Dirac basis or the Hadamard basis. By Monte Carlo simulation, we study the probability that random matrices satisfy the condition. p is fixed to 100, and for each given configuration (n, m), 1000 trial pairs A and D are generated, whose entries are i.i.d. and obey either a normal distribution or a Bernoulli distribution with parameter b = 0.1 (the probability of 1 is b, and of 0 is 1 − b). The frequency with which A and D satisfy the condition in Corollary 1 is shown in Fig. B.1. The simulation results indicate that random matrices tend to satisfy the condition when n ≫ m.

Figure B.1.

The frequency (in percentage) of random matrices A and D satisfying the condition in Corollary 1 with p = 100. (a) Entries in A and D obey a normal distribution and a Bernoulli distribution with parameter b = 0.1, respectively. (b) The distributions of A and D are exchanged.
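A Monte Carlo experiment of this kind can be sketched as follows, assuming NumPy. The sketch assumes that "diagonally dominant" means weak row diagonal dominance and that Ψ = AᵀA + DᵀD; the function names, the tolerance, and the handling of singular draws are choices made for illustration and are not taken from the paper.

```python
import numpy as np

def is_diagonally_dominant(M, tol=1e-10):
    """Weak row diagonal dominance: |M_ii| >= sum_{j != i} |M_ij| for all i."""
    absM = np.abs(M)
    diag = np.diag(absM)
    off = absM.sum(axis=1) - diag
    return bool(np.all(diag + tol >= off))

def corollary1_condition(A, D):
    """Check whether ((D Psi^{-1} D^T)^{-1} - I)^{-1} is diagonally dominant."""
    Psi = A.T @ A + D.T @ D                 # assumed definition of Psi
    G = D @ np.linalg.solve(Psi, D.T)       # D Psi^{-1} D^T
    C = np.linalg.inv(np.linalg.inv(G) - np.eye(D.shape[0]))
    return is_diagonally_dominant(C)

def frequency(n, m, p=100, trials=1000, b=0.1, seed=0):
    """Fraction of random (A, D) pairs satisfying the condition.

    A has i.i.d. standard normal entries, D has i.i.d. Bernoulli(b) entries.
    """
    rng = np.random.default_rng(seed)
    hits = 0
    for _ in range(trials):
        A = rng.standard_normal((n, p))
        D = (rng.random((m, p)) < b).astype(float)
        try:
            hits += corollary1_condition(A, D)
        except np.linalg.LinAlgError:
            pass  # exactly singular draw: counted as not satisfying the condition
    return hits / trials

# Deterministic sanity check: A = I_4 and D = first two rows of I_4.
simple_case = corollary1_condition(np.eye(4), np.eye(4)[:2])
```

Swapping the two sampling lines in `frequency` gives the configuration in which the distributions of A and D are exchanged.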

When A forms an orthogonal design, i.e., $A^TA = I$, the above condition can be further simplified, as stated in the following corollary.

Corollary 2

If a generalized LASSO problem satisfying the conditions of Theorem 1 also satisfies that A forms an orthogonal design and $DD^T$ is diagonally dominant, then the complete solution path can be recovered within m iterations of the LARS and homotopy algorithms.

Proof

Since D has full row rank, DDT is invertible. By utilizing Searle’s set of matrix identities [24, page 151], we can verify that

When $A^TA = I$, we have

$$\left(\left(D\Psi^{-1}D^T\right)^{-1} - I\right)^{-1} = DD^T.$$

Therefore, the condition in Corollary 1 reduces to checking the diagonal dominance of $DD^T$.

Corollaries 1 and 2 show that in the best case, the homotopy algorithm needs as few as m iterations to compute the complete regularization path on λ ∈ (0, +∞). It is shown in [25] that in the worst case, the number of iterations can reach as high as $(3^m - 1)/2$. Therefore, the computational complexity varies greatly depending on the specific problem. Instead of using a generic LASSO solver such as LARS, fast and efficient algorithms can be developed for some specific problems. For example, the algorithm in [26] permits fast computation and low storage for the regularized deconvolution problem introduced later in Sec. 4.1 with α = 0 and β = −1. The computational advantage is significant when the dimension is extremely large.

3.2. Decoupling into 1-dimensional subproblems

Efron et al. [3] and Friedman et al. [27] have shown that if the dictionary A forms an orthogonal design (or uncorrelated design), i.e., $A^TA = I$, a LASSO problem can be decoupled into a set of subproblems, which are then solved separately.

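To be more specific, when $A^TA = I$ the LASSO criterion can be rewritten as

$$\tfrac{1}{2}\|y - Ax\|^2 + \lambda\|x\|_1 = \sum_{i=1}^{p}\left[\tfrac{1}{2}\left(a_i^Ty - x_i\right)^2 + \lambda|x_i|\right] + c,$$

where $a_i$ denotes the i-th column of A and $c = \tfrac{1}{2}\left(\|y\|^2 - \|A^Ty\|^2\right)$ does not depend on x. This shows that the p-dimensional LASSO problem can be decoupled into p 1-dimensional subproblems, and each subproblem can be solved separately. The closed-form solution reads

$$\hat{x} = \eta_\lambda\left(A^Ty\right), \tag{15}$$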

where $\eta_\lambda(\cdot)$ is the componentwise soft thresholding function [28]:

$$\eta_\lambda(t) = \mathrm{sign}(t)\,\max\left(|t| - \lambda,\, 0\right).$$
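The closed-form solution under an orthogonal design is short enough to write out. The following is a minimal sketch assuming NumPy; the function names and the random test data are illustrative. The orthogonal design is generated here from a reduced QR factorization, which is only one convenient way to obtain a matrix with AᵀA = I.

```python
import numpy as np

def soft_threshold(t, lam):
    """Componentwise soft thresholding: sign(t) * max(|t| - lam, 0)."""
    return np.sign(t) * np.maximum(np.abs(t) - lam, 0.0)

def lasso_orthogonal_design(A, y, lam):
    """Closed-form LASSO solution, valid only when A^T A = I."""
    return soft_threshold(A.T @ y, lam)

# Illustrative data (assumed): a 10 x 4 matrix with orthonormal columns.
rng = np.random.default_rng(2)
A, _ = np.linalg.qr(rng.standard_normal((10, 4)))
y = rng.standard_normal(10)
lam = 0.5

x_hat = lasso_orthogonal_design(A, y, lam)
```

Each coefficient is computed independently of the others, which is exactly the decoupling into 1-dimensional subproblems.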

Similarly, for the generalized LASSO problem, the following corollary provides a sufficient condition that guarantees decoupling.

Corollary 3

If a generalized LASSO problem satisfying the conditions of Theorem 1 also satisfies

$$D\Psi^{-1}D^T = \tfrac{1}{2}I_m, \tag{16}$$

then it can be decoupled into m 1-dimensional LASSO problems, with the following closed-form solution

$$\hat{u} = \eta_\lambda\left(2D\Psi^{-1}A^Ty\right). \tag{17}$$

Proof

Since A and D satisfy the conditions of Theorem 1, this generalized LASSO problem can be transformed into a LASSO problem, with H and z defined in (2), and matrices $Q_1$, $Q_2$ and R defined in the proof of Theorem 1.

By substituting equations (4), (5) and (11) into (16), an equivalent condition reads

$$Q_2Q_2^T = \tfrac{1}{2}I_m. \tag{18}$$

Therefore, $Q_2^{\dagger} = Q_2^T\left(Q_2Q_2^T\right)^{-1} = 2Q_2^T$.

From (2) and by utilizing (6), it is easy to prove that the Gramian matrix of H is an identity matrix, i.e., $H^TH = I$, so H forms an orthogonal design, indicating that the transformed LASSO problem can be decoupled.

Furthermore, with the help of (18), we can also prove

$$H^Tz = 2Q_2Q_1^Ty = 2D\Psi^{-1}A^Ty. \tag{19}$$

Finally, by replacing A and y in (15) with H and z respectively, and substituting (19), the closed-form solution reads as in (17).

Remark 4

If $A^TA$ is invertible, by utilizing Searle’s set of matrix identities [24, page 151], an alternative condition to (16) reads

$$D\left(A^TA\right)^{-1}D^T = I_m.$$

Note that this condition is stronger than (16) since for under-determined A, $A^TA$ is not invertible.

One can check that the condition in Corollary 3 is fulfilled when D is a square matrix with full rank and $A^TA = D^TD$.

4. Two examples on the analysis of LASSO variants

Several variants of LASSO can be unified under the generalized LASSO framework, such as the total variation regularized deconvolution for signal and image recovery [29], the robust LASSO for face recognition and sensor network [30], the adaptive LASSO for variable selection [31], the fused LASSO for gene expression data analysis [10], the ℓ1 trend filtering for time series analysis [32], and the adaptive generalized fused LASSO for road-safety data analysis [33]. In this section, the first two will be discussed.

4.1. Regularized deconvolution

Deconvolution is a challenging problem, which can be formulated as a LASSO problem with a Toeplitz predictor matrix. Total variation [34] is a regularization tool for restoring piecewise constant signals. This leads to the generalized LASSO form in which A is a Toeplitz matrix containing the convolution kernel and D is also a Toeplitz matrix containing the total variation kernel. For high dimensional problems, the computational burden is a major factor. The following corollary sheds some light on this issue.

Corollary 4

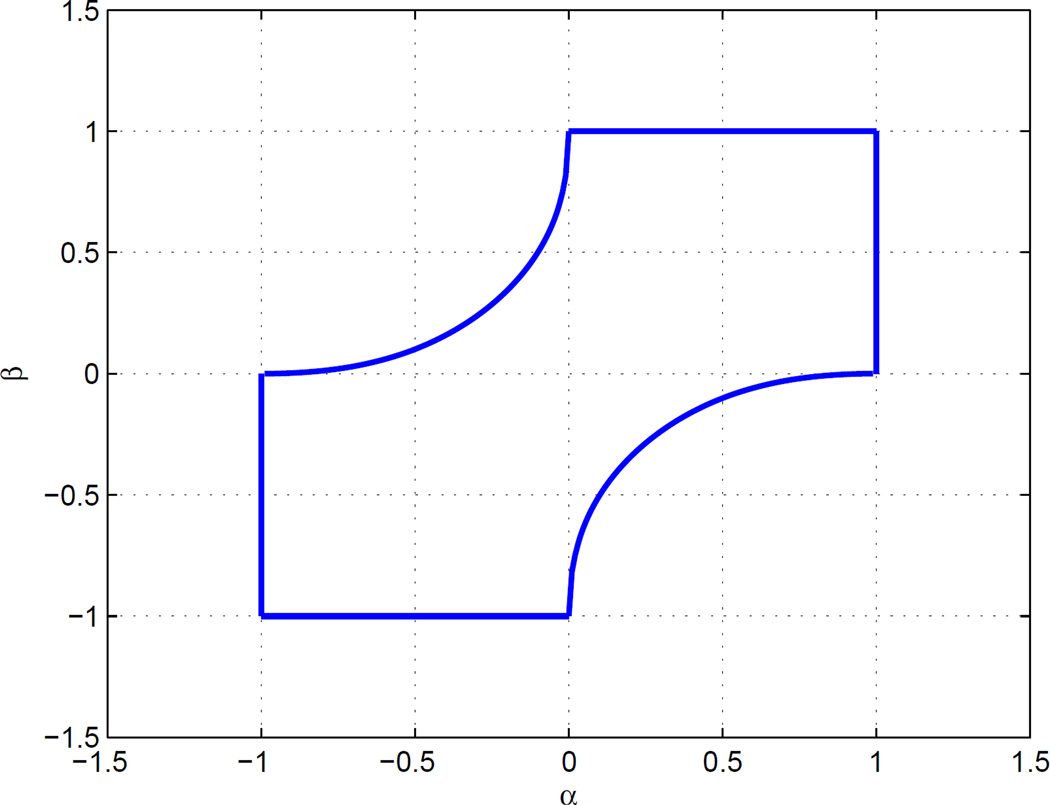

Let A and D be square lower triangular Toeplitz matrices with first columns $[1, \alpha, \alpha^2, \alpha^3, \ldots, \alpha^{n-1}]^T$ and $[1, \beta, 0, 0, \ldots, 0]^T$, respectively. If α and β fall within the region displayed in Fig. B.2, then the generalized LASSO problem can be solved for all λ by the LARS and homotopy algorithms in at most n iterations.

Figure B.2.

The feasible region that admits matrix (12) to be diagonal dominant. The region is mirror symmetric with respect to line α = β, and the curve in the south-east quadrant has expression .

The total variation regularization corresponds to the special case when β = − 1.

Proof

According to Theorem 1, since D is invertible, this generalized LASSO problem is equivalent to the basic LASSO problem (1) with $H = AD^{-1}$. Since A is square and invertible, the inverse of the Gramian matrix reads $(H^TH)^{-1} = DA^{-1}A^{-T}D^T$. In the following we show that this matrix is diagonally dominant.

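One can check that $A^{-1}$ is also a lower triangular Toeplitz matrix, with first column $[1, -\alpha, 0, 0, \ldots, 0]^T$. Then, with the shorthand $a = \beta - \alpha$ and $b = -\alpha\beta$, the matrix $DA^{-1}A^{-T}D^T$ reads

$$DA^{-1}A^{-T}D^T = \begin{bmatrix} 1 & a & b & & \\ a & 1 + a^2 & a(1+b) & b & \\ b & a(1+b) & 1 + a^2 + b^2 & a(1+b) & \ddots \\ & b & a(1+b) & 1 + a^2 + b^2 & \ddots \\ & & \ddots & \ddots & \ddots \end{bmatrix}. \tag{20}$$

In order to have the diagonal dominance property, the following three inequalities are required, which correspond to the first three rows of matrix (20) (the conditions related to the other rows are implied by the third inequality):

$$1 \ge |\beta - \alpha| + |\alpha\beta|,$$

$$1 + (\beta - \alpha)^2 \ge |\beta - \alpha|\left(1 + |1 - \alpha\beta|\right) + |\alpha\beta|,$$

$$1 + (\beta - \alpha)^2 + \alpha^2\beta^2 \ge 2|\beta - \alpha|\,|1 - \alpha\beta| + 2|\alpha\beta|.$$

Those inequalities yield three regions in the 2D plane (α, β), whose intersection is displayed in Fig. B.2.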
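Whether a given pair (α, β) lies in the feasible region can also be tested numerically. The sketch below assumes NumPy and assumes weak row diagonal dominance (with a small tolerance, since some pairs of interest sit on the boundary); the helper names are hypothetical. It builds A and D as lower triangular Toeplitz matrices from their first columns and forms the matrix D A⁻¹ A⁻ᵀ Dᵀ directly.

```python
import numpy as np

def toeplitz_lower(first_col):
    """Square lower triangular Toeplitz matrix with the given first column."""
    c = np.asarray(first_col, dtype=float)
    n = c.size
    T = np.zeros((n, n))
    for k in range(n):
        T += np.diag(np.full(n - k, c[k]), k=-k)
    return T

def gram_inverse(alpha, beta, n):
    """Return D A^{-1} A^{-T} D^T for the kernels [1, a, a^2, ...] and [1, b, 0, ...]."""
    A = toeplitz_lower(alpha ** np.arange(n))
    D = toeplitz_lower([1.0, beta] + [0.0] * (n - 2))
    T = D @ np.linalg.inv(A)
    return T @ T.T

def is_diagonally_dominant(M, tol=1e-9):
    """Weak row diagonal dominance, up to a numerical tolerance."""
    absM = np.abs(M)
    diag = np.diag(absM)
    return bool(np.all(diag + tol >= absM.sum(axis=1) - diag))

G = gram_inverse(0.3, 0.2, 8)
inside = is_diagonally_dominant(G)                              # both kernels mild
low_pass_tv = is_diagonally_dominant(gram_inverse(0.5, -1.0, 8))   # alpha > 0, beta = -1
high_pass_tv = is_diagonally_dominant(gram_inverse(-0.5, -1.0, 8)) # alpha < 0, beta = -1
```

Sweeping `alpha` and `beta` over a grid and plotting the result of `is_diagonally_dominant` would trace out a region of the (α, β) plane under these assumptions.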

Corollary 4 in this paper, Theorem 2 in [18], and Theorem 1 and Corollary 2 in [17] jointly show that for the deconvolution problem with total variation regularization (β = −1), if the convolution kernel is high pass (−1 ≤ α < 0) or all pass (α = 0), but not low pass (0 < α ≤ 1), the true solution can be recovered via the generalized LASSO. If there is no total variation constraint (β = 0), then any convolution kernel with exponential attenuation ($[1, \alpha, \alpha^2, \alpha^3, \ldots, \alpha^{n-1}]^T$, −1 ≤ α ≤ 1) admits perfect recovery.

4.2. Robust LASSO

The LASSO with first order total variation regularization can recover piecewise constant signals. However, if there are outliers in the signal y, this approach tends to introduce false detections. A second component signal $s \in \mathbb{R}^n$ is introduced to cope with the outliers in the observation [30]. Since outliers are usually due to burst errors, which are assumed to obey the Laplace distribution, a second ℓ1 regression term is incorporated into the objective function, yielding the robust generalized LASSO as follows,

$$\min_{x,\,s} \; \tfrac{1}{2}\|y - Ax - s\|^2 + \lambda_1\|Dx\|_1 + \lambda_2\|s\|_1.$$

This problem can be rewritten into a generalized LASSO form with $x_{robust} = \begin{bmatrix} x \\ s \end{bmatrix}$, $A_{robust} = [A, \; I_n]$, and $D_{robust} = \begin{bmatrix} D & 0 \\ 0 & \tau I_n \end{bmatrix}$, where τ = λ2/λ1 and the regularization parameter is λ1. When $\begin{bmatrix} A \\ D \end{bmatrix}$ has full column rank, D has full row rank, and τ > 0, one can check that $\begin{bmatrix} A_{robust} \\ D_{robust} \end{bmatrix}$ has full column rank, $D_{robust}$ has full row rank, and M is invertible. Therefore, from Theorem 1 the robust LASSO problem can be transformed into a basic LASSO form.
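The stacking that turns the robust problem into a generalized LASSO can be illustrated in a few lines. This is a sketch assuming NumPy and assuming the robust criterion 0.5‖y − Ax − s‖² + λ1‖Dx‖₁ + λ2‖s‖₁ with the stacked variable [x; s]; the function name `robust_to_genlasso` and the test data are hypothetical. It checks that the two criteria agree and that the rank conditions of Theorem 1 hold for the stacked matrices.

```python
import numpy as np

def robust_to_genlasso(A, D, tau):
    """Stack the robust LASSO into generalized LASSO matrices.

    A_rob = [A, I_n] acts on [x; s]; D_rob = blockdiag(D, tau * I_n).
    """
    n, p = A.shape
    m = D.shape[0]
    A_rob = np.hstack([A, np.eye(n)])
    D_rob = np.block([[D, np.zeros((m, n))],
                      [np.zeros((n, p)), tau * np.eye(n)]])
    return A_rob, D_rob

# Illustrative data (assumed): D is the first order difference matrix.
rng = np.random.default_rng(3)
n, p = 6, 5
A = rng.standard_normal((n, p))
D = np.diff(np.eye(p), axis=0)      # (p-1) x p, full row rank
m = D.shape[0]
y = rng.standard_normal(n)
x = rng.standard_normal(p)
s = rng.standard_normal(n)
lam1, lam2 = 0.8, 0.2

A_rob, D_rob = robust_to_genlasso(A, D, lam2 / lam1)
x_t = np.concatenate([x, s])
robust_value = (0.5 * np.sum((y - A @ x - s) ** 2)
                + lam1 * np.abs(D @ x).sum() + lam2 * np.abs(s).sum())
genlasso_value = (0.5 * np.sum((y - A_rob @ x_t) ** 2)
                  + lam1 * np.abs(D_rob @ x_t).sum())
```

The stacked pair can then be passed to any routine that handles a generalized LASSO with regularization parameter λ1.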

5. Conclusion

This paper discusses the simplification of a generalized LASSO problem into the basic LASSO form. When the regularization matrix D is even- or under-determined (m ≤ p), we showed that this simplification is possible. Otherwise, there is no guarantee that this simplification can be done. In the former case, optimization tools dedicated to LASSO can be straightforwardly applied to the generalized LASSO.

Tibshirani and Taylor [5] gave a simple way to transform a generalized LASSO into the basic LASSO form when D is not a square matrix (m < p). As shown in Appendix A, they introduced a matrix D0 and stacked it under D to form a square matrix D̃, which is invertible. This raises the question of whether their results depend on D0 or not. Appendix B shows that their method yields results equivalent to ours, which indicates that H and z in their formula do not depend on D0. In addition, an improperly chosen D0 may incur numerical errors.

The proposed formula relies directly on A and D, and therefore gives insight into their interaction in the generalized LASSO problem. Based on the proposed formula, it is shown that existing results related to the LASSO can be extended to the generalized LASSO. Since some variants of the LASSO can be unified under the generalized LASSO framework, they can be transformed into the basic LASSO, and hence efficient LASSO solvers can be applied to solve them. Furthermore, under this framework, many different types of regression formulations, such as the trend filtering in a recent study [35], can be unified.

Acknowledgments

This study was partially supported by National Science Foundation of China (No. 61401352), China Postdoctoral Science Foundation (No. 2014M560786), Shaanxi Postdoctoral Science Foundation, Fundamental Research Funds for the Central Universities (No. xjj2014060), National Science Foundation (No. 1539067), and National Institutes of Health (No. R01MH104680, R01MH107354, and R01GM109068).

Appendix A

Tibshirani and Taylor’s transformation method

Tibshirani and Taylor [5] proposed the following method to transform a generalized LASSO when rank(D) = m < p.

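First, a matrix $D_0 \in \mathbb{R}^{(p-m) \times p}$ is found, whose rows are orthogonal to those of D. Then the matrix $\tilde{D} = \begin{bmatrix} D \\ D_0 \end{bmatrix}$ is a square matrix with full rank, and thus invertible.

For further use, let us define $\theta = \tilde{D}x = \begin{bmatrix} u \\ \upsilon \end{bmatrix}$, where u and υ are column vectors of length m and p − m respectively. Therefore $x = \tilde{D}^{-1}\theta$, and the generalized LASSO criterion rereads

$$\tfrac{1}{2}\|y - A\tilde{D}^{-1}\theta\|^2 + \lambda\|u\|_1.$$

Let us also define

$$A\tilde{D}^{-1} = [A_1, \; A_2],$$

where $A_1$ and $A_2$ are matrices of size n × m and n × (p − m) respectively. The above criterion rereads

$$\tfrac{1}{2}\|y - A_1u - A_2\upsilon\|^2 + \lambda\|u\|_1, \tag{A.1}$$

which is a least squares problem with respect to υ, and the solution is

$$\upsilon^* = A_2^{\dagger}\left(y - A_1u\right),$$

where $A_2^{\dagger} = \left(A_2^TA_2\right)^{-1}A_2^T$.

Finally, by substituting υ* into (A.1), the generalized LASSO can be transformed into form (1) with

$$H = \left(I_n - A_2A_2^{\dagger}\right)A_1, \qquad z = \left(I_n - A_2A_2^{\dagger}\right)y. \tag{A.2}$$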
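The steps of this appendix can be sketched in code and compared against a direct, D0-free computation. The sketch assumes NumPy; it picks D0 as an orthonormal basis of the null space of D obtained from an SVD, which is one admissible choice among many, and it assumes Ψ = AᵀA + DᵀD for the direct route. All function names are hypothetical.

```python
import numpy as np

def tt_transform(A, D, y):
    """Transformation via an auxiliary D0 whose rows are orthogonal to those of D."""
    m, p = D.shape
    _, _, Vt = np.linalg.svd(D)
    D0 = Vt[m:]                                   # (p-m) x p, spans Null(D)
    D_tilde = np.vstack([D, D0])                  # square and invertible
    A_tilde = np.linalg.solve(D_tilde.T, A.T).T   # A D_tilde^{-1}
    A1, A2 = A_tilde[:, :m], A_tilde[:, m:]
    P = A2 @ np.linalg.pinv(A2)                   # projector onto range(A2)
    I = np.eye(A.shape[0])
    return (I - P) @ A1, (I - P) @ y

def direct_transform(A, D, y):
    """Transformation written with A and D only (no D0)."""
    Psi = A.T @ A + D.T @ D                       # assumed definition of Psi
    Pinv_Dt = np.linalg.solve(Psi, D.T)
    B = Pinv_Dt @ np.linalg.inv(D @ Pinv_Dt)
    x0 = np.linalg.solve(Psi, A.T @ y)
    x0 = x0 - B @ (D @ x0)                        # minimizer subject to D x = 0
    return A @ B, y - A @ x0

# Illustrative data (assumed): n = 6, m = 3, p = 5.
rng = np.random.default_rng(4)
A = rng.standard_normal((6, 5))
D = rng.standard_normal((3, 5))
y = rng.standard_normal(6)

H_tt, z_tt = tt_transform(A, D, y)
H_dir, z_dir = direct_transform(A, D, y)
```

On such random inputs the two routes are expected to agree up to rounding, in line with the equivalence discussed in Appendix B.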

Appendix B

The equivalence between Tibshirani and Taylor’s method and the proposed method in the current paper

In this appendix, we prove that H’s and z’s in form (2) and (A.2) are equal.

Appendix B.1. Equivalence of H’s

First, for further use let’s define Q0 ≜ D0R−1 and

| (B.1) |

where P2 and P0 are of size p × m and p × (p − m), respectively.

It is easy to verify that

| (B.2) |

| (B.3) |

| (B.4) |

From (B.4), (6) and (B.2), we have

| (B.5) |

Left multiply both sides of (B.3) with Q1, and substitute resultant equation into (B.5), we have

Move the second part to the right side of equals sign, and left multiply both sides with , right multiply with , we have

Left multiply both sides of above equation with −A2, and add with A1, we have

The left side of above equation is H in (A.2), and the right side is equal to H in (2), since left multiply both sides of (B.3) with Q1, and right multiply with yields

Appendix B.2. Equivalence of z’s

To prove that z’s in (2) and (A.2) are equal, we have to prove

From (B.1), one can verify that

Left multiply both sides of above equation with Q1, and right multiply with , we have

| using Eq. (B.4) |

| using Eq. (B.2) |

| using Eq. (6) |

| using Eq. (B.4) |

Footnotes


1. In general, the terms even-, over- and under-determined are used to describe the predictor matrix A. In this paper, we borrow these terms to characterize the regularization matrix D.

References

- 1. Tibshirani R. Regression shrinkage and selection via the Lasso. J. R. Statist. Soc. B. 1996;58(1):267–288.

- 2. Osborne MR, Presnell B, Turlach BA. A new approach to variable selection in least squares problems. IMA Journal of Numerical Analysis. 2000;20(3):389–403.

- 3. Efron B, Hastie T, Johnstone I, Tibshirani R. Least angle regression. Annals Statist. 2004;32(2):407–499.

- 4. Malioutov DM, Cetin M, Willsky AS. Homotopy continuation for sparse signal representation. Proc. IEEE ICASSP, vol. V. Philadelphia, PA: Mar. 2005; pp. 733–736.

- 5. Tibshirani RJ, Taylor J. The solution path of the generalized lasso. Annals Statist. 2011;39(3):1335–1371.

- 6. Elad M, Milanfar P, Rubinstein R. Analysis versus synthesis in signal priors. Inverse Problems. 2007;23(3):947.

- 7. Vaiter S, Peyré G, Dossal C, Fadili J. Robust sparse analysis regularization. IEEE Trans. Inf. Theory. 2013;59(4):2001–2016.

- 8. Fu H, Ng MK, Nikolova M, Barlow JL. Efficient minimization methods of mixed ℓ2-ℓ1 and ℓ1-ℓ1 norms for image restoration. SIAM J. Sci. Comput. 2006;27(6):1881–1902.

- 9. Eldar YC, Kutyniok G, editors. Compressed Sensing: Theory and Applications. Cambridge University Press; May 2012.

- 10. Tibshirani R, Saunders M, Rosset S, Zhu J, Knight K. Sparsity and smoothness via the fused lasso. Journal of the Royal Statistical Society Series B. 2005;67(1):91–108.

- 11. Kim S, Sohn K-A, Xing EP. A multivariate regression approach to association analysis of a quantitative trait network. Bioinformatics. 2009;25:i204–i212. doi: 10.1093/bioinformatics/btp218. ISMB 2009.

- 12. Chen X, Lin Q, Kim S, Carbonell JG, Xing EP. Smoothing proximal gradient method for general structured sparse regression. The Annals of Applied Statistics. 2012;6(2):719–752.

- 13. Morioka N, Satoh S. Generalized lasso based approximation of sparse coding for visual recognition. Advances in Neural Information Processing Systems. 2011:181–189.

- 14. Vega-Hernández M, Martínez-Montes E, Sánchez-Bornot JM, Lage-Castellanos A, Valdés-Sosa PA. Penalized least squares methods for solving the EEG inverse problem. Statistica Sinica. 2008;18:1535–1551.

- 15. Tibshirani R, Wang P. Spatial smoothing and hot spot detection for CGH data using the fused lasso. Biostatistics. 2008;9(1):18–29. doi: 10.1093/biostatistics/kxm013.

- 16. Yu C, Zhang C, Xie L. A blind deconvolution approach to ultrasound imaging. IEEE Trans. Ultrasonics Ferroelectrics Frequency Control. 2012;59(2):271–280. doi: 10.1109/TUFFC.2012.2187.

- 17. Donoho DL, Tsaig Y. Fast solution of l1-norm minimization problems when the solution may be sparse. IEEE Trans. Inf. Theory. 2008 Nov;54(11):4789–4812.

- 18. Duan J, Soussen C, Brie D, Idier J, Wang Y-P. On LARS/homotopy equivalence conditions for over-determined LASSO. IEEE Signal Processing Letters. 2012;19(12):894–897.

- 19. Zou H, Hastie T, Tibshirani R. On the "degrees of freedom" of the lasso. Annals Statist. 2007;35(5):2173–2192.

- 20. Zhao P, Yu B. On model selection consistency of lasso. Journal of Machine Learning Research. 2006;7:2541–2563.

- 21. She Y. Sparse regression with exact clustering. Electronic Journal of Statistics. 2010;4:1055–1096.

- 22. James GM, Paulson C, Rusmevichientong P. The constrained lasso. Tech. Rep. University of Southern California; 2013. http://www-bcf.usc.edu/~gareth/research/CLassoFinal.pdf.

- 23. Bernstein DS. Matrix Mathematics. Princeton University Press; 2009.

- 24. Searle SR, editor. Matrix Algebra Useful for Statistics. John Wiley & Sons, Inc.; 1982.

- 25. Mairal J, Yu B. Complexity analysis of the lasso regularization path. International Conference on Machine Learning. Edinburgh, Scotland, UK: 2012; pp. 353–360.

- 26. Duan J, Zhang J-G, Lefante J, Deng H-W, Wang Y-P. Detection of copy number variation from next generation sequencing data with total variation penalized least square optimization. IEEE International Conference on Bioinformatics and Biomedicine Workshops. Atlanta, GA, USA: 2011; pp. 3–12.

- 27. Friedman J, Hastie T, Höfling H, Tibshirani R. Pathwise co-ordinate optimization. The Annals of Applied Statistics. 2007;1(2):302–332.

- 28. Donoho DL, Johnstone IM. Adapting to unknown smoothness via wavelet shrinkage. J. Amer. Statist. Assoc. 1995;90(432):1200–1224.

- 29. Chambolle A, Lions P-L. Image recovery via total variation minimization and related problems. Numer. Math. 1997;76:167–188.

- 30. Nguyen NH, Tran TD. Robust lasso with missing and grossly corrupted observations. IEEE Trans. Inf. Theory. 2013;59(4):2036–2058.

- 31. Zou H. The adaptive lasso and its oracle properties. J. Amer. Statist. Assoc. 2006;101(476):1418–1429.

- 32. Kim S-J, Koh K, Boyd S, Gorinevsky D. ℓ1 trend filtering. SIAM Review. 2009;51(2):339–360.

- 33. Viallon V, Lambert-Lacroix S, Höfling H, Picard F. Adaptive generalized fused-lasso: Asymptotic properties and applications. Tech. Rep. Université de Lyon; 2013. http://www.statistics.gov.hk/wsc/IPS012-P1-S.pdf.

- 34. Rudin L, Osher S, Fatemi E. Nonlinear total variation based noise removal algorithms. Physica D. 1992;60:259–268.

- 35. Tibshirani RJ. Adaptive piecewise polynomial estimation via trend filtering. Annals Statist. 2014;42(1):285–323.