Highlights

- In joint action tasks, co-actors have a choice between different coordination mechanisms.
- How joint actions are coordinated depends on shared perceptual information.
- In the absence of shared visual information, co-actors rely on a general heuristic strategy.
- When shared visual information is available, co-actors switch to a communicative strategy.

Keywords: Joint action, Interpersonal coordination, Nonverbal communication, Signaling, Predictability

Abstract

Previous research has identified a number of coordination processes that enable people to perform joint actions. But what determines which coordination processes joint action partners rely on in a given situation? The present study tested whether varying the shared visual information available to co-actors can trigger a shift in coordination processes. Pairs of participants performed a movement task that required them to arrive synchronously at a target from separate starting locations. When participants in a pair received only auditory feedback about the time their partner reached the target, they held their movement duration constant to facilitate coordination. When they received additional visual information about each other’s movements, they switched to a fundamentally different coordination process, exaggerating the curvature of their movements to communicate their arrival time. These findings indicate that the availability of shared perceptual information is a major factor in determining how individuals coordinate their actions to obtain joint outcomes.

1. Introduction

In order to perform joint actions such as throwing and catching a ball, walking hand-in-hand or moving a table together, two or more individuals need to coordinate their actions in space and time while overcoming challenges that arise from not having direct access to each other’s sensorimotor processes (Knoblich et al., 2011, Knoblich and Jordan, 2003, Wolpert et al., 2003). Previous research has identified several different coordination processes that enable joint action partners to overcome these challenges. In some instances, the key to coordination is monitoring each other’s actions and predicting their effects on joint outcomes (Keller, 2012, Loehr et al., 2013, Radke et al., 2011). In other instances, co-actors minimize the time spent in a shared workspace and move away from potential areas of collision, thereby reducing the need for fine-grained coordination (Richardson et al., 2011, Vesper et al., 2009). Further coordination processes include distributing tasks effectively (Brennan et al., 2008, Konvalinka et al., 2010), providing communicative signals (Pezzulo et al., 2013, Sacheli et al., 2013, Vesper and Richardson, 2014) and keeping one’s performance stable across consecutive instances of the same action (Vesper, van der Wel, Knoblich, & Sebanz, 2011).

This multitude of interpersonal coordination processes raises the question of what determines which processes co-actors rely on when faced with a particular joint task. One obvious factor is the perceptual information that co-actors share. Accordingly, the current study investigated whether augmenting the amount of visual information shared between co-actors could cause a fundamental switch in the way they coordinate their actions. We targeted two coordination processes that have recently been reported in the joint action literature.

The first coordination process relates to the use of general heuristic strategies (“coordination smoothers”; Vesper, Butterfill, Knoblich, & Sebanz, 2010). One such strategy is to reduce the temporal variability of one’s own behavior. This was shown in a reaction time (RT) study in which two people were instructed to respond to visual stimuli as fast and as synchronously as possible (Vesper et al., 2011). RTs were less variable when participants performed the task together than when they performed it alone. This reduction in intra-individual RT variability proved effective for interpersonal coordination because it reduced the asynchronies between co-actors’ responses. The underlying strategy of generating consistent timing across multiple action repetitions can be employed in many different joint action contexts, such as synchronous and sequential action performance (Vesper et al., 2011), even in the complete absence of information about a co-actor’s performance; it has also been observed in joint actions of non-human primates (Visco-Comandini et al., 2015).
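The link between lower variability and smaller asynchronies can be illustrated with a simplified worked equation. Assuming, purely for illustration, that two co-actors’ response times T₁ and T₂ are independent and normally distributed with the same mean and the same standard deviation σ, the expected absolute asynchrony is proportional to σ:

```latex
D = T_1 - T_2 \sim \mathcal{N}\left(0,\; 2\sigma^2\right),
\qquad
\mathbb{E}\,|D| = \sqrt{2}\,\sigma\,\sqrt{\frac{2}{\pi}} = \frac{2\sigma}{\sqrt{\pi}} \approx 1.13\,\sigma
```

Under these assumptions, halving each partner’s timing variability halves the expected asynchrony. Real co-actors’ times need not be independent, normal, or equal in mean, so this is only a rough guide to why the strategy helps.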

The second coordination process, sensorimotor communication (Pezzulo et al., 2013), assumes that people modulate their actions to transmit task-relevant information. This is particularly useful if one person has privileged access to information relevant for achieving a joint action goal. This person can modulate movement parameters such as direction, velocity, or grip size with the aim of providing specific information to a partner. The partner can identify these modulations as communicative because they deviate from the most efficient way of performing the action, as predicted by the partner’s own forward models (Wolpert et al., 2003). Such exaggerated kinematic information can help the partner to choose between action alternatives (Candidi, Curioni, Donnarumma, Sacheli, & Pezzulo, 2015). It could, however, also support coordination in situations where there is no ambiguity about the joint action target but there is uncertainty about another’s action timing.

Empirical support for sensorimotor communication has been provided by Sacheli et al. (2013) who instructed two co-actors to simultaneously grasp a bottle-shaped object either at the narrow upper part or at the wide lower part. ‘Leaders’, who knew where to grasp the object, exaggerated grip size, velocity, and the amplitude of their movements to inform naïve ‘followers’ about the intended grasp location. Similarly, in a study of synchronous tapping towards a sequence of target locations, leaders informed a naïve task partner about the location of an upcoming target by specifically exaggerating the amplitude of their trajectories relative to the distance between current hand position and subsequent target (Vesper & Richardson, 2014).

In order to test whether people spontaneously select different coordination processes depending on the availability of information about a joint action partner, we developed a task that enabled participants to coordinate either by reducing action variability or by modulating movement parameters in a communicative manner. In contrast to earlier studies on the modulation of movement parameters for communication, relevant task knowledge was not distributed asymmetrically. Communication was therefore not necessary for successful joint action performance, and both coordination processes might be equally effective in supporting joint task performance.

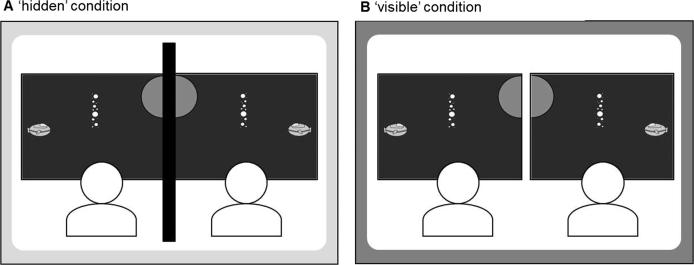

Pairs of participants performed speeded mouse movements to a target presented at the center of two adjacent computer screens (see Fig. 1). They were instructed to arrive at the target as synchronously as possible. The only aspect that differed between the two joint action conditions was whether co-actors could see each other’s screens and mouse movements (‘visible’ condition) or not (‘hidden’ condition). In line with previous research, we expected that in the ‘hidden’ condition co-actors would reduce the variability of their movement times in order to make their actions more stable (Vesper et al., 2011) and thus to improve coordination.

Fig. 1.

Schematic depiction of the task setup in the two joint conditions. In the ‘hidden’ condition, an opaque partition prevented co-actors from seeing each other’s screens and movements.

Of central interest was which coordination process co-actors would rely on in the ‘visible’ condition. If the availability of shared visual information leads to a preference for communicative modulation of movement, co-actors should exaggerate those aspects of their movements that provide information supporting coordination (Sacheli et al., 2013, Vesper and Richardson, 2014). Alternatively, co-actors may rely on the strategy of generating consistent timing across multiple action repetitions even when shared perceptual information is available.

2. Method

2.1. Participants

Thirty-two adults (mean age 21.0 years, SD = 1.61 years; all right-handed) participated in pairs (six female pairs, two male pairs, eight mixed pairs). They gave prior informed consent and received monetary compensation for their participation. The experiment was conducted in agreement with the Declaration of Helsinki.

2.2. Apparatus and stimuli

Participants were seated next to each other in front of two computer screens. Each screen showed part of a “space scene” (Fig. 1) with three elements presented on a dark-blue background: (1) a yellow “spaceship” close to the outer margin of each screen (2.5 cm × 1.9 cm; positioned centrally on the vertical axis), indicating the starting position for each trial; (2) a small or large (radius of 2.0 cm or 3.8 cm) “planet” as the target, shown as a light-blue half circle on the inner margin of each screen at one of three possible vertical locations (20%, 50% or 80% from the upper screen margin); and (3) an array of small, differently sized white dots, centered between “spaceship” and “planet”, representing an “asteroid belt” (1.9 cm × 9.3 cm; positions at 20%, 35%, 50%, 65% or 80% from the upper screen margin), which in half of the trials created an obstacle between the start and the target location.

The visual stimuli were presented on two 17″ screens (resolution 1280 × 1024 pixels, refresh rate 60 Hz). In the individual blocks and in the ‘hidden’ condition, an opaque black cardboard partition (70 cm × 100 cm) between participants prevented co-actors from seeing each other’s screens, mouse cursors and hand movements. The experiment was run on two Dell OptiPlex computers connected through a null-modem cable to enable online data exchange. Two gaming mice (Logitech G500; automatic acceleration turned off) sampled participants’ movements at 100 Hz. Matlab 2012a was used for controlling the experiment and for data preparation.

2.3. Procedure

Participants performed the experiment in pairs. In the first block of trials, an individual condition (‘individual (pre)’) was recorded from each participant while the other participant waited outside the experimental room. In the next two blocks, participants performed the task together in the ‘hidden’ condition (where an opaque partition prevented co-actors from seeing each other’s screens and movements; Fig. 1A) and in the ‘visible’ condition (Fig. 1B). The order of the joint conditions was counterbalanced across participant pairs. Finally, each participant completed another individual condition (‘individual (post)’) alone. Each block had 96 trials, with a short break after every 16th trial. The overall duration of the experiment was 1.5 h.
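The session structure described above can be sketched as a small schedule generator. This is a hypothetical illustration, not the authors’ Matlab code: the helper names `block_sequence` and `break_points`, and the choice to counterbalance by alternating the joint-condition order between even- and odd-numbered pairs, are assumptions.

```python
from typing import List

TRIALS_PER_BLOCK = 96
BREAK_EVERY = 16  # a short break follows every 16th trial


def block_sequence(pair_index: int) -> List[str]:
    """Return the block order for one pair (hypothetical helper).

    Individual blocks come first and last. The order of the two joint
    conditions alternates across pairs, which is one simple (assumed)
    way to counterbalance it.
    """
    joint = ["hidden", "visible"] if pair_index % 2 == 0 else ["visible", "hidden"]
    return ["individual (pre)", *joint, "individual (post)"]


def break_points(n_trials: int = TRIALS_PER_BLOCK, every: int = BREAK_EVERY) -> List[int]:
    """Trial numbers (1-based) after which a short break is given.

    The final trial is excluded because it ends the block instead.
    """
    return list(range(every, n_trials, every))
```

With 16 pairs, alternating the order in this way gives eight pairs per order.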

At the beginning of each trial, the “spaceship” was presented for 600 ms at the starting position. Next, the target (“planet”) and obstacle (“asteroid belt”) appeared, and the spaceship briefly flashed for 200 ms to redirect participants’ attention to the start location. The relative positions of target and obstacle determined whether or not the direct path between start and target location was blocked. When the obstacle blocked the direct path, it was positioned such that the distance of moving above it or below it was identical. Participants were instructed to move a yellow circle (the mouse cursor) as fast as possible to the target without touching the obstacle and, in the joint conditions, to arrive at the target at the same time as the partner. In all conditions, a feedback tone (100 ms; frequency of 1100 Hz for the left-seated person and 1320 Hz for the right-seated person) was played as soon as the mouse cursor entered the target area. At the end of each trial, the target turned green to indicate successful task performance. It turned red if at least one participant did not reach the target within 1600 ms or touched the obstacle area, or if, in the joint conditions, the absolute asynchrony between co-actors’ target arrival times exceeded 400 ms¹. Participants moved back to the starting position at their own pace. The instructions made participants aware that both of them always received the same configuration of target position, target size and obstacle presence in each trial.
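The trial outcome rule can be expressed as a single check. The sketch below assumes arrival times are measured in ms from the start signal and that a missing arrival means the target was never reached; `ActorTrial` and `trial_successful` are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Optional

TIME_LIMIT_MS = 1600.0     # each actor must reach the target within this time
MAX_ASYNCHRONY_MS = 400.0  # joint trials only: allowed absolute arrival difference


@dataclass
class ActorTrial:
    arrival_ms: Optional[float]  # target entry time; None if never reached
    touched_obstacle: bool


def trial_successful(a: ActorTrial, b: Optional[ActorTrial] = None) -> bool:
    """Return True (target turns green) or False (target turns red).

    Pass only `a` for individual blocks; pass both actors for joint blocks.
    """
    actors = [a] if b is None else [a, b]
    for actor in actors:
        if actor.arrival_ms is None or actor.arrival_ms > TIME_LIMIT_MS:
            return False
        if actor.touched_obstacle:
            return False
    if b is not None:
        # Both arrival times are known to be present here (checked above).
        if abs(a.arrival_ms - b.arrival_ms) > MAX_ASYNCHRONY_MS:
            return False
    return True
```

For example, `trial_successful(ActorTrial(600.0, False), ActorTrial(1100.0, False))` fails because the 500 ms asynchrony exceeds the 400 ms limit.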

2.4. Data analysis

Each trajectory was normalized by resampling the mouse coordinates at 101 equally time-spaced values using linear interpolation (Spivey, Grosjean, & Knoblich, 2005). For each participant and condition, mean time to target (TTmean) was computed as the time interval between the external start signal (the flashing of the spaceship) and arrival at the target. To determine spatial deviation from the direct path between start and target location, we flipped the right participant’s trajectories so that the direction from starting position to target matched for both participants. The trajectories were projected onto the horizontal axis to compute the mean area under the curve (AUCmean) (Spivey et al., 2005). Standard deviations of both measures were also computed (TTvariability and AUCvariability). Coordination accuracy was computed as the mean absolute asynchrony of target arrival times. Trials in which a participant’s TT exceeded 1600 ms or in which they touched an obstacle were excluded (1.2% of all trials). To avoid inflating statistical power, dependent variables from the remaining trials were first aggregated at the individual participant level and then averaged over the two members of a pair². All dependent variables (TT, AUC, asynchrony; reported in Table 1) were normalized by dividing data from each joint condition by the ‘individual (pre)’ condition acquired at the beginning of the experiment. Normalized performance in the ‘hidden’ and ‘visible’ conditions was compared with paired t-tests and was also tested with one-sample t-tests against 1 to detect differences from baseline performance (‘individual (pre)’). Where appropriate, Bonferroni correction was applied. The influence of TT and AUC on asynchrony was tested with separate multiple regression analyses (‘enter’ method, IBM SPSS 22).
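The two trajectory measures can be sketched in NumPy. Time normalization follows the description above (101 equally time-spaced samples, linear interpolation via `numpy.interp`). The exact area computation is not fully specified in the text, so the AUC function is an assumption: it rotates each trajectory so that the straight line from its first to its last sample lies on the horizontal axis and integrates the absolute perpendicular deviation with the trapezoidal rule. Because the absolute deviation is used, mirroring the right participant’s trajectory does not change the result. Coordinates are assumed to be in cm so that the area comes out in cm².

```python
import numpy as np


def time_normalize(x: np.ndarray, y: np.ndarray, n_points: int = 101):
    """Resample a trajectory at n_points equally time-spaced samples (linear interpolation)."""
    t = np.linspace(0.0, 1.0, len(x))        # original samples, rescaled to [0, 1]
    t_new = np.linspace(0.0, 1.0, n_points)  # e.g. 101 equally spaced time points
    return np.interp(t_new, t, x), np.interp(t_new, t, y)


def area_under_curve(x: np.ndarray, y: np.ndarray) -> float:
    """Area between a trajectory and the straight line from its first to last sample.

    Assumed procedure: rotate so that line lies on the horizontal axis, then
    integrate |deviation| over the horizontal coordinate (trapezoidal rule).
    """
    angle = np.arctan2(y[-1] - y[0], x[-1] - x[0])
    xs, ys = x - x[0], y - y[0]                   # translate start to the origin
    u = xs * np.cos(angle) + ys * np.sin(angle)   # position along the direct path
    v = -xs * np.sin(angle) + ys * np.cos(angle)  # perpendicular deviation
    dev = np.abs(v)
    return float(np.sum(0.5 * (dev[1:] + dev[:-1]) * np.diff(u)))
```

A trajectory that moves back along the path would contribute negative increments to this sum; for the forward-moving mouse paths in this task that case is assumed to be rare.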

Table 1.

Non-normalized mean values (SD in parentheses) for all dependent variables.

| | ‘hidden’ | ‘visible’ | ‘individual (pre)’ | ‘individual (post)’ |
|---|---|---|---|---|
| TTmean (ms) | 603.1 (137.4) | 659.4 (169.6) | 704.7 (143.2) | 576.1 (98.2) |
| TTvariability (ms) | 108.4 (18.7) | 124.8 (24.8) | 152.3 (23.8) | 110.5 (17.5) |
| AUCmean (cm²) | 115 (10.6) | 125.4 (16) | 114.7 (11.7) | 119.6 (9.6) |
| AUCvariability (cm²) | 32 (5.9) | 34.9 (9.6) | 32.7 (6.1) | 33.3 (5.5) |
| Asynchrony (ms) | 119.1 (7.2) | 109 (7.1) | – | – |

3. Results

3.1. Effects of visibility

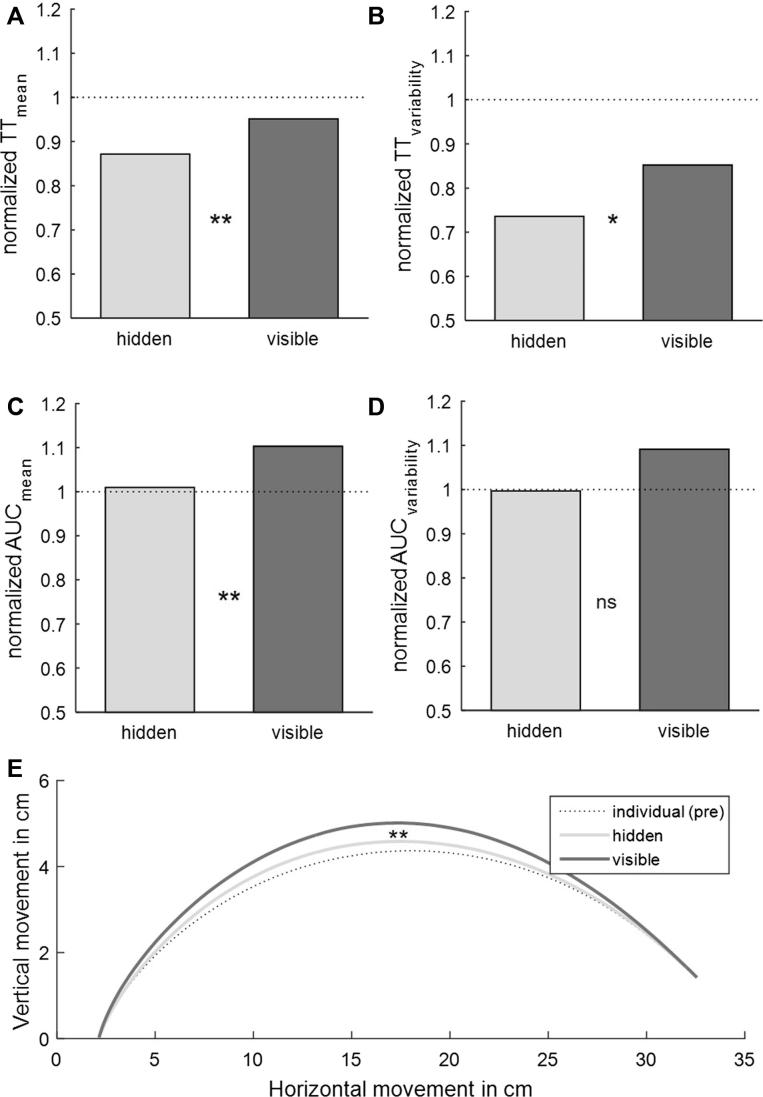

We compared co-actors’ normalized performance between the ‘hidden’ and ‘visible’ conditions (Fig. 2; for the non-normalized data see Table 1). In line with our hypothesis that co-actors would try to reduce the temporal variability of their actions when they could not see each other, performance in the ‘hidden’ condition was not only faster (smaller normalized TTmean), t(15) = −3.29, p < 0.01, Cohen’s d = −0.82, but also less variable (smaller normalized TTvariability), t(15) = −2.52, p < 0.05, Cohen’s d = −0.63. In line with our hypothesis that co-actors would modulate movement parameters in a communicative manner when they could see each other, the analysis of normalized AUCmean showed a larger area under the curve in the ‘visible’ condition, t(15) = −3.82, p < 0.01, Cohen’s d = −0.96. The difference in variability of curvature (normalized AUCvariability) was not significant, t(15) = −1.4, p > 0.1, Cohen’s d = −0.35.
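For paired comparisons of this kind, the t statistic and a standardized effect size can be computed from the within-pair differences, as in the sketch below (standard library only; p-values would additionally need the t distribution, e.g. from SciPy). Which variant of Cohen’s d was used is not stated; the values reported in this section appear consistent with d_z = t/√n for n = 16 pairs (for instance, −3.29/√16 ≈ −0.82), so that variant is assumed here. The function name is hypothetical.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Paired t statistic (df = n - 1) and Cohen's d_z for two matched samples."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long samples with n >= 2")
    diffs = [x - y for x, y in zip(a, b)]
    d_z = mean(diffs) / stdev(diffs)   # mean difference in units of its sample SD
    t = d_z * math.sqrt(len(diffs))    # same as mean / (sd / sqrt(n))
    return t, d_z
```

Here `a` and `b` would hold the 16 pair-level normalized values for the ‘hidden’ and ‘visible’ conditions, in the same pair order.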

Fig. 2.

Results for (A and B) normalized time to target and (C and D) area under the curve in the ‘hidden’ and ‘visible’ conditions. The dotted lines show where performance would exactly match the ‘individual (pre)’ baseline acquired before the joint conditions. (E) Grand-averages of participants’ time-standardized movement trajectories. [∗ p<0.05; ∗∗ p<0.01; ∗∗∗ p<0.001].

3.2. Joint versus baseline performance

To further investigate how co-actors modulated their performance to achieve coordination in the ‘hidden’ and ‘visible’ conditions, we compared normalized TT and normalized AUC to the test value of 1 (dotted lines in Fig. 2). Values different from 1 indicate that co-actors modulated their performance in the joint conditions relative to the ‘individual (pre)’ condition. In the ‘hidden’ condition, normalized TTmean, t(15) = −4.1, p < 0.01, Cohen’s d = −1.03, and normalized TTvariability, t(15) = −8.01, p < 0.001, Cohen’s d = −2, were significantly reduced compared to baseline. In the ‘visible’ condition, normalized AUCmean was significantly above baseline, t(15) = 3.06, p < 0.05, Cohen’s d = 0.76, indicating that curvature was larger in the ‘visible’ condition than in the ‘individual (pre)’ condition. Normalized AUCvariability did not differ from baseline, t(15) = 1.04, p > 0.6, Cohen’s d = 0.26, but normalized TTvariability was also reduced in the ‘visible’ condition, t(15) = −3.07, p < 0.05, Cohen’s d = −0.7. There were no other significant results, all t < 1.3, all p > 0.4, all Cohen’s d < 0.3.
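The baseline normalization and the comparison against 1 can be sketched as follows. This is an illustrative reconstruction, not the SPSS analysis used in the study; the helper names are hypothetical, and the number of tests entering the Bonferroni correction is left as a parameter because the text does not specify it.

```python
import math
from statistics import mean, stdev
from typing import List, Sequence


def normalize_to_baseline(joint: Sequence[float], pre: Sequence[float]) -> List[float]:
    """Divide each pair's joint-condition value by its 'individual (pre)' value."""
    return [j / p for j, p in zip(joint, pre)]


def one_sample_t(values: Sequence[float], mu: float = 1.0) -> float:
    """t statistic (df = n - 1) testing whether the mean differs from mu (default: 1)."""
    n = len(values)
    return (mean(values) - mu) / (stdev(values) / math.sqrt(n))


def bonferroni_alpha(alpha: float, n_tests: int) -> float:
    """Per-test significance threshold under Bonferroni correction."""
    return alpha / n_tests
```

A normalized value of 1 means performance identical to the ‘individual (pre)’ baseline, so a negative t indicates a reduction relative to baseline and a positive t an increase.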

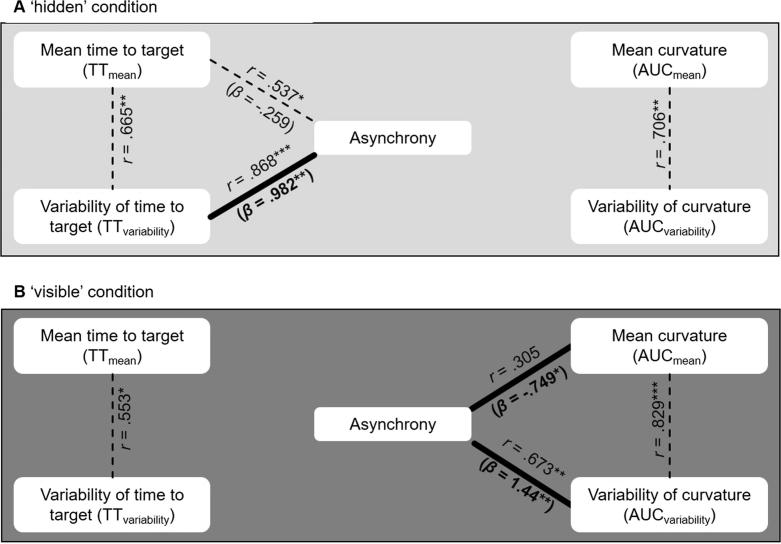

3.3. Effects on coordination

Mean absolute asynchrony (Table 1) in the ‘visible’ condition and in the ‘hidden’ condition did not differ significantly, indicating that whether or not participants could see each other did not affect their coordination success, t(15) = 1.24, p > 0.2, Cohen’s d = 0.31. To examine in more detail how performance variables influenced coordination, we performed two regression analyses entering TTmean, TTvariability, AUCmean, and AUCvariability as predictors for asynchrony.
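A simultaneous-entry (‘enter’) multiple regression places all predictors in the model at once. The NumPy sketch below is an assumed equivalent of that analysis, not SPSS output: it z-scores predictors and outcome so that the slopes are standardized coefficients (β), and it reports the overall F statistic. With n = 16 pairs and k = 4 predictors the degrees of freedom are (4, 11), matching those reported for both conditions. The function name is hypothetical.

```python
import numpy as np


def enter_regression(X: np.ndarray, y: np.ndarray):
    """OLS with all predictors entered simultaneously.

    X: (n, k) predictors per pair (e.g. TTmean, TTvariability, AUCmean,
    AUCvariability); y: (n,) asynchrony.
    Returns standardized coefficients (betas), R^2, and the overall F
    statistic with (k, n - k - 1) degrees of freedom.
    """
    n, k = X.shape
    # z-score predictors and outcome so that slopes are standardized betas
    Xz = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    yz = (y - y.mean()) / y.std(ddof=1)
    design = np.column_stack([np.ones(n), Xz])  # intercept + predictors
    coef, *_ = np.linalg.lstsq(design, yz, rcond=None)
    residuals = yz - design @ coef
    ss_res = float(residuals @ residuals)
    ss_tot = float(((yz - yz.mean()) ** 2).sum())
    r2 = 1.0 - ss_res / ss_tot
    f_stat = (r2 / k) / ((1.0 - r2) / (n - k - 1))
    return coef[1:], r2, f_stat
```

Standardizing the variables changes only the scale of the coefficients; R² and F are the same as for the unstandardized model.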

The analysis of the ‘hidden’ condition (Fig. 3A) showed a significant overall effect, F(4, 11) = 10.41, p < 0.01. Importantly, TTvariability significantly predicted asynchrony when controlling for all other variables, such that lower temporal variability led to better coordination. Additional zero-order correlations suggested that TTmean may have acted as a mediator in this relationship, as it was correlated with both asynchrony and TTvariability.

Fig. 3.

Results of modeling the impact of the four performance variables on asynchrony. Significant multiple regression outcomes (thick lines; standardized coefficients in parentheses) and significant zero-order correlations (dotted lines) are shown. [∗ p<0.05; ∗∗ p<0.01; ∗∗∗ p<0.001].

For the ‘visible’ condition (Fig. 3B), there was also a significant overall effect, F(4, 11) = 8.57, p < 0.01. Here, however, AUCmean predicted asynchrony when controlling for all other variables, such that larger movement curvature resulted in better coordination. AUCvariability also significantly predicted asynchrony, such that asynchrony was lower the less spatially variable participants’ movements were. Additional zero-order correlations indicated that AUCmean and AUCvariability were correlated (higher curvature implied more variability). This points to a trade-off between generating salient perceptual input for the partner (high AUCmean) and minimizing its noise (small AUCvariability; Pezzulo et al., 2013). To test this interpretation further, we calculated a signal-to-noise ratio as SNR = AUCmean/AUCvariability. As predicted, SNR was correlated with asynchrony such that pairs with a better signal-to-noise ratio arrived more synchronously at the target, r = 0.619, p < 0.05. Keeping curvature high and variability low was most effective in reducing asynchrony.
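The signal-to-noise ratio and its correlation with asynchrony can be computed per pair as sketched below. The helper names are hypothetical, and the Pearson correlation is written out explicitly rather than relying on `statistics.correlation` (available only from Python 3.10). SNR is assumed to be computed for each pair before any averaging; the ratio of the group means in Table 1 is not the same quantity.

```python
import math
from statistics import mean
from typing import List, Sequence


def snr(auc_mean: Sequence[float], auc_variability: Sequence[float]) -> List[float]:
    """Signal-to-noise ratio per pair: SNR = AUCmean / AUCvariability."""
    return [m / s for m, s in zip(auc_mean, auc_variability)]


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equally long samples."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)
```

Because lower asynchrony means better coordination, the sign of `pearson_r(snr_values, asynchrony)` should be read with that direction in mind.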

There were no other significant effects in the regression models, all β < 0.3, all t < 1.5, all p > 0.2, and no further significant zero-order correlations between the temporal and spatial variables in either condition, all r < 0.4, all p > 0.1. Moreover, the variance inflation factors (VIFs) of the regression analyses were small (all VIF < 4.9), indicating that our effects were not due to multicollinearity (Hair, Black, Babin, & Anderson, 2009).
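A variance inflation factor for each predictor can be obtained by regressing that predictor on all the others, VIF_j = 1/(1 − R_j²). The sketch below is an illustration in NumPy rather than the SPSS routine used in the study; commonly cited rules of thumb treat values above roughly 5 or 10 as a sign of problematic multicollinearity. The function name is hypothetical.

```python
import numpy as np


def variance_inflation_factors(X: np.ndarray) -> np.ndarray:
    """VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing predictor j
    on all other predictors (with an intercept)."""
    n, k = X.shape
    vifs = np.empty(k)
    for j in range(k):
        target = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1.0 - (resid @ resid) / ((target - target.mean()) ** 2).sum()
        vifs[j] = 1.0 / (1.0 - r2)
    return vifs
```

Uncorrelated predictors give VIFs near 1, and nearly collinear predictors give very large values.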

4. Discussion

We investigated whether augmenting the amount of visual information available to co-actors makes them use different coordination processes: relying on a reduction of temporal variability when perceptual information is scarce, but using deviations from the most efficient movement path to inform the partner about action timing when visual information is shared. In support of these two processes, the mean and variability of time to target (TT) were lower in the ‘hidden’ condition than in the ‘visible’ condition, while the mean area under the curve (AUC) was higher in the ‘visible’ condition. Crucially, the results of multiple regression analyses confirmed that in the ‘hidden’ condition, lower temporal variability led to better coordination, whereas in the ‘visible’ condition, larger deviations from the most efficient path resulted in better coordination.

The results for the ‘hidden’ condition confirmed previous findings that participants reduce timing variability across repeated actions when no other means for coordination are available (Vesper et al., 2011). Speeding up helped make their performance more stable, in line with the principle that moving one’s own temporal performance closer to its limits reduces variability (Wagenmakers & Brown, 2007). Because individual performance generally sped up from ‘individual (pre)’ to ‘individual (post)’, general learning effects might account for the speedup and the reduction of variability in the ‘hidden’ condition. However, the strong influence of variability reduction on coordination, with greater reduction leading to lower asynchronies in the ‘hidden’ condition, makes such an explanation unlikely. Our earlier results clearly show that such an influence exists only if participants intend to synchronize their actions with one another, but not when they perform exactly the same task without a synchronization instruction (Vesper et al., 2011). Thus, the reduction of variability in the present task likely reflects the workings of a general heuristic strategy that shares similarities with heuristics that use common knowledge for decision making (Schelling games, in which, for example, the most salient landmark is chosen as a meeting point; Clark, 1996, Schelling, 1980).

The results for the ‘visible’ condition showed that participants chose a different coordination process and used spatial deviations from the most efficient trajectory to inform their partner, although reducing timing variability would have led to a similar level of coordination success (overall, asynchronies were not smaller than in the ‘hidden’ condition). This form of coordination is often referred to as sensorimotor communication (Pezzulo et al., 2013) and depends on the partner’s ability to predict efficient trajectories (Wolpert et al., 2003) and to compute deviations from them. Larger deviations normally imply increased variability of the trajectory, so participants needed to find a trade-off between producing large deviations and minimizing noise (Candidi et al., 2015, Pezzulo et al., 2013). Accordingly, our results showed that coordination performance was better in pairs with a signal-to-noise ratio that allowed them to keep curvature high and variability low. The extra effort invested to inform the partner suggests that sensorimotor communication is a deliberate attempt to coordinate. The present results show that sensorimotor communication can be used not only by a leader to communicate to a follower which action to select, but also to exchange information reciprocally in the service of coordination.

One might argue that the differences between the two joint conditions can be explained by visuomotor interference (Brass et al., 2001, Kilner et al., 2003, Sacheli et al., 2013) or by dynamic entrainment processes (Richardson, Marsh, Isenhower, Goodman, & Schmidt, 2007). Both accounts would also predict that the availability of visual information could influence two partners’ action performance. However, it is unlikely that these processes can account for our findings. First, neither process can explain why co-actors did not choose the most efficient trajectories, given that the required movements were always symmetrical for the two partners (same obstacles, same target). Second, the observed deviation from the most efficient trajectory was also present in trials where participants performed different movements, i.e., where one participant moved above the obstacle to the target while the other moved below it (36% of trials in ‘visible’ with an AUCmean of 120.5 cm², 39% in ‘hidden’ with an AUCmean of 108 cm², t(15) = −2.38, p < 0.05). Third, an analysis of the similarity of AUCmean between the two co-actors within single trials showed no difference in correlation between ‘hidden’ and ‘visible’ (both r = 0.46), suggesting that there was no immediate online influence of the partner’s performance.

Although the present study found no indication of the involvement of visuomotor or entrainment processes, it is likely that participants would come to rely on these processes in situations where they are effective in supporting coordination (Candidi et al., 2015, Sacheli et al., 2013, Vesper and Richardson, 2014). Along these lines, further work could investigate whether visual information about the co-actor’s hand movements can be used to improve interpersonal action coordination. Another direction for future studies is to examine in more detail whether and how the properties of obstacles that are either the same or different for the two partners influence joint action coordination.

Taken together, the present findings suggest three main conclusions. First, reducing temporal variability (Vesper et al., 2011) constitutes a default strategy that can be used in situations where no other means of coordination are available (Vesper et al., 2010). Second, sensorimotor communication is not restricted to situations where a knowledge asymmetry between co-actors enforces information exchange (Sacheli et al., 2013, Vesper and Richardson, 2014). Third, participants may generally prefer sensorimotor communication over reducing temporal variability when visual channels for communication are available.

Acknowledgement

The research reported in this article was supported by the European Research Council under the European Union’s Seventh Framework Program (FP7/2007–2013)/ERC (European Research Council) grant agreement 609819, SOMICS (Constructing Social Minds: Coordination, Communication, and Cultural Transmission), and by ERC grant agreement 616072, JAXPERTISE. We thank Veronika Herendy and Annamaria Lisincki for their help with data collection.

Footnotes

¹ The data of four pilot participants were all within the range of these limits (TT = 972 ms, SD = 267 ms, and asynchrony = 95.2 ms, SD = 84.4 ms).

² For AUC and TT, we performed the same analyses based on individual participants’ data without averaging across pairs. These analyses revealed no qualitative differences from the results for averaged pairs.

Supplementary data associated with this article can be found, in the online version, at http://dx.doi.org/10.1016/j.cognition.2016.05.002.

Appendix A. Supplementary material

This is open data under the CC BY license (http://creativecommons.org/licenses/by/4.0/).

References

- Brass M., Bekkering H., Prinz W. Movement observation affects movement execution in a simple response task. Acta Psychologica. 2001;106:3–22. doi: 10.1016/s0001-6918(00)00024-x.

- Brennan S.E., Chen X., Dickinson C.A., Neider M.B., Zelinsky G.J. Coordinating cognition: The costs and benefits of shared gaze during collaborative search. Cognition. 2008;106:1465–1477. doi: 10.1016/j.cognition.2007.05.012.

- Candidi M., Curioni A., Donnarumma F., Sacheli L.M., Pezzulo G. Interactional leader–follower sensorimotor communication strategies during repetitive joint actions. Journal of the Royal Society Interface. 2015;12:20150644. doi: 10.1098/rsif.2015.0644.

- Clark H.H. Cambridge University Press; Cambridge, England: 1996. Using language.

- Hair J.F., Black W.C., Babin B.J., Anderson R.E. 7th ed. Prentice Hall; Upper Saddle River: 2009. Multivariate data analysis.

- Keller P.E. Mental imagery in music performance: Underlying mechanisms and potential benefits. Annals of the New York Academy of Sciences. 2012;1252:206–213. doi: 10.1111/j.1749-6632.2011.06439.x.

- Kilner J., Paulignan Y., Blakemore S. An interference effect of observed biological movement on action. Current Biology. 2003;13:522–525. doi: 10.1016/s0960-9822(03)00165-9.

- Knoblich G., Butterfill S., Sebanz N. Psychological research on joint action: theory and data. In: Ross B., editor. Vol. 54. Academic Press; Burlington: 2011. pp. 59–101. (The psychology of learning and motivation).

- Knoblich G., Jordan J.S. Action coordination in groups and individuals: Learning anticipatory control. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2003;29(5):1006–1016. doi: 10.1037/0278-7393.29.5.1006.

- Konvalinka I., Vuust P., Roepstorff A., Frith C.D. Follow you, follow me: Continuous mutual prediction and adaptation in joint tapping. Quarterly Journal of Experimental Psychology. 2010;63:2220–2230. doi: 10.1080/17470218.2010.497843.

- Loehr J., Kourtis D., Vesper C., Sebanz N., Knoblich G. Monitoring individual and joint action outcomes in duet music performance. Journal of Cognitive Neuroscience. 2013;25:1049–1061. doi: 10.1162/jocn_a_00388.

- Pezzulo G., Donnarumma F., Dindo H. Human sensorimotor communication: A theory of signaling in online social interactions. PLoS ONE. 2013;8(11):e79876. doi: 10.1371/journal.pone.0079876.

- Radke S., de Lange F., Ullsperger M., de Bruijn E. Mistakes that affect others: An fMRI study on processing of own errors in a social context. Experimental Brain Research. 2011;211:405–413. doi: 10.1007/s00221-011-2677-0.

- Richardson M.J., Harrison S.J., May R., Kallen R.W., Schmidt R.C. Self-organized complementary coordination: Dynamics of an interpersonal collision-avoidance task. BIO Web of Conferences. 2011;1:00075. doi: 10.1037/xhp0000041.

- Richardson M.J., Marsh K.L., Isenhower R.W., Goodman J.R., Schmidt R. Rocking together: Dynamics of intentional and unintentional interpersonal coordination. Human Movement Science. 2007;26:867–891. doi: 10.1016/j.humov.2007.07.002.

- Sacheli L., Tidoni E., Pavone E., Aglioti S., Candidi M. Kinematics fingerprints of leader and follower role-taking during cooperative joint actions. Experimental Brain Research. 2013;226(4):473–486. doi: 10.1007/s00221-013-3459-7.

- Schelling T.C. Harvard University Press; 1980. The strategy of conflict.

- Spivey M., Grosjean M., Knoblich G. Continuous attraction toward phonological competitors. Proceedings of the National Academy of Sciences. 2005;102:10393–10398. doi: 10.1073/pnas.0503903102.

- Vesper C., Butterfill S., Knoblich G., Sebanz N. A minimal architecture for joint action. Neural Networks. 2010;23(8–9):998–1003. doi: 10.1016/j.neunet.2010.06.002.

- Vesper C., Richardson M.J. Strategic communication and behavioral coupling in asymmetric joint action. Experimental Brain Research. 2014;232:2945–2956. doi: 10.1007/s00221-014-3982-1.

- Vesper C., Soutschek A., Schubö A. Motion coordination affects movement parameters in a joint pick-and-place task. Quarterly Journal of Experimental Psychology. 2009;62(12):2418–2432. doi: 10.1080/17470210902919067.

- Vesper C., van der Wel R., Knoblich G., Sebanz N. Making oneself predictable: Reduced temporal variability facilitates joint action coordination. Experimental Brain Research. 2011;211:517–530. doi: 10.1007/s00221-011-2706-z.

- Visco-Comandini F., Ferrari-Toniolo S., Satta E., Papazachariadis O., Gupta R., Nalbant L.E., Battaglia-Mayer A. Do non-human primates cooperate? Evidences of motor coordination during a joint action task in macaque monkeys. Cortex. 2015;70:115–127. doi: 10.1016/j.cortex.2015.02.006.

- Wagenmakers E.-J., Brown S. On the linear relation between the mean and the standard deviation of a response time distribution. Psychological Review. 2007;114:830–841. doi: 10.1037/0033-295X.114.3.830.

- Wolpert D.M., Doya K., Kawato M. A unifying computational framework for motor control and interaction. Philosophical Transactions of the Royal Society of London B. 2003;358:593–602. doi: 10.1098/rstb.2002.1238.
