Abstract

Background: The application of evidence-based medicine (EBM) to the practice of hand surgery has been limited. Production of high-quality research is an integral component of EBM. With considerable improvements in the quality of evidence in both orthopedic and plastic and reconstructive surgery, it is imperative that hand surgery research emulates this trend. Methods: A systematic review was performed on all hand surgery articles published in 6 journals over a 20-year period. The journals included Plastic and Reconstructive Surgery, Journal of Plastic, Reconstructive and Aesthetic Surgery, Journal of Hand Surgery–European Volume, Journal of Hand Surgery–American Volume, Journal of Bone & Joint Surgery, and the Bone & Joint Journal. The level of evidence of each article was determined using the Oxford levels of evidence. The methodological quality of randomized controlled trials (RCTs) was assessed using the Jadad scale. Statistical analysis involved chi-square and Student t tests (P < .05). Results: A total of 972 original hand surgery research articles were reviewed. There was a significant increase in the average level of evidence of articles published between 1993 and 2013. High-quality evidence accounted for only 11.5% of evidence published, with a significant increase over the study period (P = .001). Quantitative evaluation of the 26 published RCTs, using the Jadad scale, revealed a progressive improvement in study design from 0.3 in 1998 to 3.33 in 2013. Conclusions: Hand surgery research has mirrored trends seen in other surgical specialties, with a significant increase in quality of evidence over time. Yet, high-quality evidence still remains infrequent.

Keywords: hand surgery, levels of evidence, randomized controlled trials

Introduction

Research provides the scientific evidence required for the practice of clinical medicine. Out of necessity to comprehend this evidence, a conceptual methodological tool, known as evidence-based medicine (EBM), was established. Described as a major milestone in the advancement of medicine,17 EBM, when used in conjunction with clinical expertise, ensures a safe, reliable, and cost-effective delivery of health care to patients.14

The practice of EBM requires critical appraisal of the best available research. Hierarchical classification “pyramids,” an appraisal tool, arrange the evidence based on susceptibility to bias and threats to internal validity, with the evidence most prone to these ranked at the bottom. The Oxford Centre for Evidence-Based Medicine (CEBM) Levels of Evidence11 is a commonly utilized ranking classification system. The highest quality evidence is allocated level I (eg, randomized controlled trial [RCT]), and evidence from poorly designed research or with limited clinical application is allocated level V (eg, expert opinion).

Hand surgery has historically been at the forefront of surgical technique and innovation.15 Yet slow application of the principles of EBM into hand surgery practice has limited recent progression.13 To continually deliver optimal care to hand surgery patients, it is imperative that the highest quality research is available. Therefore, the aim of this study is to evaluate the quality of evidence in hand surgery research over a 20-year period.

Material and Methods

A systematic review was performed on all hand surgery articles published in 6 journals over a 20-year period, involving the years 1993, 1998, 2003, 2008, and 2013. The highest impact factor journals frequently publishing hand surgery research were selected. These included Plastic and Reconstructive Surgery (PRS); Journal of Plastic, Reconstructive and Aesthetic Surgery (JPRAS), formerly the British Journal of Plastic Surgery; Journal of Hand Surgery–European Volume (JHS-Eur); Journal of Hand Surgery–American Volume (JHS-Am); Journal of Bone & Joint Surgery (JBJS); and the Bone & Joint Journal (BJJ), formerly known as Journal of Bone and Joint Surgery (British Volume). These journals were accessed online.

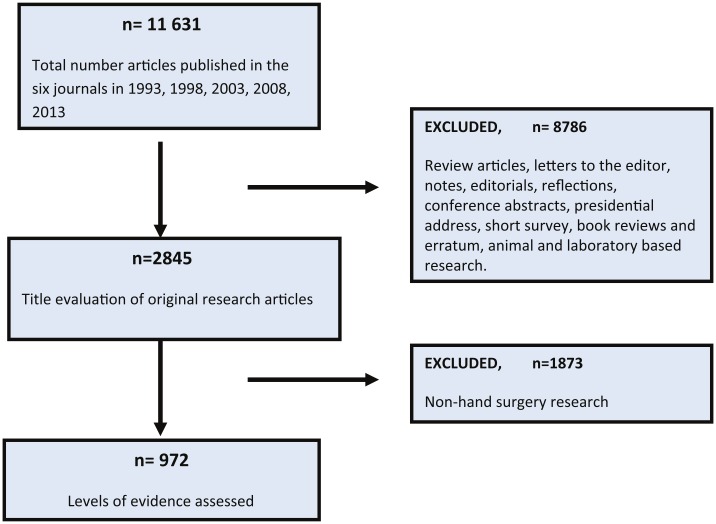

All original research articles (experimental studies, observational studies, case reports, and technical tips) were included in this systematic review (Figure 1). Review articles, letters to the editor, notes, editorials, reflections, invited articles, conference abstracts, book reviews, and errata were excluded. This study focused on clinical research, so all animal- and laboratory-based research was excluded. An initial title review of articles excluded all non–hand surgery research published within the selected journals. The authors agreed by consensus that hand surgery would include all anatomy distal to and including the proximal carpal row.

Figure 1.

A systematic flow diagram of study methodology.

Articles were reviewed by 2 authors who were blinded to each other’s results. The reviewers were not blinded to journal or year of publication. Any discrepancy between reviewers was subsequently discussed with the senior author and a consensus was reached. If inconsistencies arose between the level of evidence assigned by the publishing journal and the assessment made by the reviewers, the latter was chosen.

Each clinical article was allocated a level of evidence using the guidelines published by the Oxford Centre for Evidence-Based Medicine.11 The clinical aim of the research was initially subcategorized as per guidelines into therapy, prevention, aetiology, or harm; prognosis; diagnosis; symptom prevalence or differential diagnosis; or economic and decision analysis. The degree of validity and the bias arising from the study design of each article were evaluated and ranked from level I (highest) to level V (lowest). Further assessment of the quality of all the randomized clinical trials was performed using the Oxford quality scoring system, the Jadad scale.6 Each RCT is given a score from 0 to 5 based on methods relevant to random assignment, double blinding, and description of the flow of patients. Statistical analysis involved chi-square and Student t tests (P < .05).
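The Jadad scoring described above can be sketched in a few lines of code. This is a minimal illustration of the published scale as it is commonly stated (1 point each for a report described as randomized, described as double blind, and describing withdrawals and dropouts; 1 further point each for an appropriate randomization or blinding method; 1 point deducted for an inappropriate one). It is not the scoring sheet used in this study, and the class, field, and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TrialReport:
    """Hypothetical record of what an RCT report states (illustrative names)."""
    described_as_randomized: bool
    randomization_appropriate: Optional[bool]  # None = method not described
    described_as_double_blind: bool
    blinding_appropriate: Optional[bool]       # None = method not described
    withdrawals_described: bool


def _method_points(mentioned: bool, appropriate: Optional[bool]) -> int:
    """Points for one domain (randomization or blinding): 0, 1, or 2."""
    if not mentioned:
        return 0
    points = 1                      # the feature is mentioned in the report
    if appropriate is True:
        points += 1                 # method described and appropriate
    elif appropriate is False:
        points -= 1                 # method described but inappropriate
    return points


def jadad_score(report: TrialReport) -> int:
    """Total Jadad score, ranging from 0 (lowest) to 5 (highest)."""
    return (
        _method_points(report.described_as_randomized,
                       report.randomization_appropriate)
        + _method_points(report.described_as_double_blind,
                         report.blinding_appropriate)
        + (1 if report.withdrawals_described else 0)
    )


# Example: randomized with an appropriate method, not blinded,
# withdrawals described.
example = TrialReport(True, True, False, None, True)
example_score = jadad_score(example)
```

Under these rules, an open-label surgical trial with sound randomization and a complete account of withdrawals scores 3, which illustrates why the difficulty of blinding in surgery tends to cap the attainable score.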

Results

A total of 11 631 articles were published in the 6 journals in the 5 designated years across the 20-year period. Of these, 972 were original clinical hand surgery research articles and were included for further analysis in the study (Figure 1). The most published hand surgery topics were the carpus (21.6%), tendons (12.1%), and carpal tunnel (10.2%).

The number of hand surgery articles published per year increased from 167 in 1993 to 217 in 2013 (P = .012). The 2 dedicated hand surgery journals, JHS-Eur and JHS-Am, published 83.7% (807/972) of all hand surgery articles. Within these journals, the proportion of articles specifically related to hand surgery increased over the 20-year period from 70.4% to 88.3% for JHS-Eur and from 33.8% to 51.5% for JHS-Am. A similar trend was evident in the general journals publishing hand surgery research (PRS, JPRAS, JBJS, BJJ), with a collective proportional increase of published hand surgery articles from 16.7% in 1998 to 27.9% in 2013.

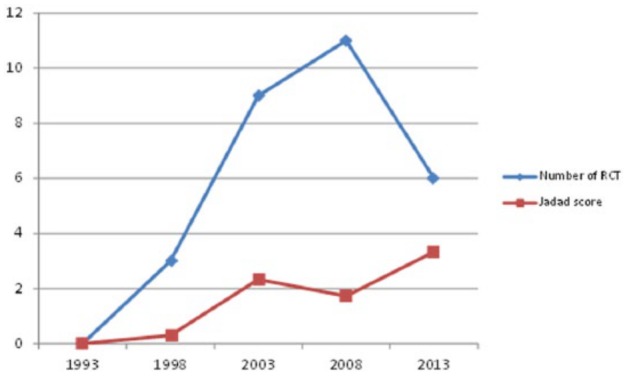

The most prevalent study methodology was the case series, accounting for 47.6% (463/972) of published research. The proportion of case series decreased over time, from 53.9% in 1993 to 41.9% in 2013, although this trend was not statistically significant (P = .551). In comparison, cohort studies (retrospective and prospective) increased as a proportion of publications, from 16.1% in 1993 to 24.8% in 2013. RCTs accounted for 2.9% (29/972) of research, with a correspondingly low number (1.3%) of systematic reviews/meta-analyses (Table 1). A further review of 26 published RCTs demonstrated a considerable improvement in study methodology, with the Jadad score increasing gradually from 0.3 in 1998 to 3.33 in 2013 (Figure 2).

Table 1.

Trends in the Prevalence of Research Study Design Over the 20-Year Period.

| Study type | 1993 (%) n = 167 | 1998 (%) n = 216 | 2003 (%) n = 181 | 2008 (%) n = 191 | 2013 (%) n = 217 | Total (%) N = 972 |
|---|---|---|---|---|---|---|
| Randomized controlled trials | 0 (0) | 3 (1.4) | 9 (4.9) | 11 (5.7) | 6 (2.7) | 29 (2.9) |
| Systematic review/meta-analysis | 1 (0.6) | 0 (0) | 1 (0.5) | 2 (1.0) | 9 (4.1) | 13 (1.3) |
| Prospective cohort | 10 (5.9) | 16 (7.4) | 19 (10.5) | 25 (13.0) | 25 (11.5) | 96 (9.8) |
| Retrospective cohort | 17 (10.2) | 12 (5.5) | 15 (8.3) | 26 (13.6) | 29 (13.3) | 99 (10.1) |
| Case-control | 5 (2.9) | 4 (2.4) | 5 (2.7) | 2 (1.0) | 6 (2.7) | 22 (2.2) |
| Case series | 90 (53.9) | 105 (48.6) | 95 (52.4) | 82 (42.9) | 91 (41.9) | 463 (47.6) |
| Case reports | 44 (26.3) | 76 (35.2) | 37 (20.4) | 42 (21.9) | 51 (23.5) | 250 (25.7) |

Figure 2.

Comparison between the number of published RCTs and quality of their methodology (1993-2013).

Note. RCT, randomized controlled trial.

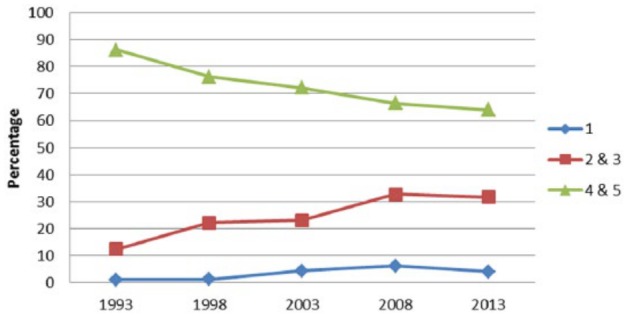

High-quality evidence (levels I and II) accounted for 112 of 972 (11.5%) published articles, with a statistically significant increasing trend over the study period (P = .001). Level IV evidence was the most prevalent, accounting for 440 of 972 (45.2%) published articles (Table 2, Figure 3).

Table 2.

Levels of Evidence of Clinical Hand Surgery Research Over the 20-Year Period.

| Level of evidence | 1993 | 1998 | 2003 | 2008 | 2013 | Total (%) |
|---|---|---|---|---|---|---|
| 1a, 1b, 1c | 2 | 3 | 8 | 12 | 9 | 34 (3.5) |
| 2a, 2b | 7 | 13 | 16 | 18 | 24 | 78 (8.0) |
| 3a, 3b | 14 | 35 | 26 | 34 | 45 | 154 (15.8) |
| 4 | 86 | 96 | 93 | 79 | 86 | 440 (45.2) |
| 5 | 58 | 69 | 38 | 48 | 53 | 266 (27.3) |

Figure 3.

Trends in level of evidence in hand surgery articles over 20 years.
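The proportion of high-quality evidence can be recomputed directly from the counts in Table 2. The sketch below tallies level I and II articles per year and, as an illustration only, applies a Pearson chi-square test of homogeneity across the 5 sampled years. The exact form of chi-square test used in this study is not specified, so this calculation is an assumption and is not expected to reproduce the reported P value. The variable and function names are hypothetical; the closed-form tail probability used here is valid only for an even number of degrees of freedom (here 4).

```python
import math

# Counts per sampled year, copied from Table 2.
years = [1993, 1998, 2003, 2008, 2013]
counts = {
    "I":   [2, 3, 8, 12, 9],
    "II":  [7, 13, 16, 18, 24],
    "III": [14, 35, 26, 34, 45],
    "IV":  [86, 96, 93, 79, 86],
    "V":   [58, 69, 38, 48, 53],
}

# Column totals (articles per year) and high-quality (level I + II) counts.
totals = [sum(column) for column in zip(*counts.values())]
high = [a + b for a, b in zip(counts["I"], counts["II"])]
share_by_year = {y: 100 * h / t for y, h, t in zip(years, high, totals)}
overall_share = 100 * sum(high) / sum(totals)


def chi_square_2xk(successes, group_totals):
    """Pearson chi-square statistic for a 2 x k table (success vs. not)."""
    pooled = sum(successes) / sum(group_totals)
    stat = 0.0
    for s, t in zip(successes, group_totals):
        for observed, expected in ((s, t * pooled), (t - s, t * (1 - pooled))):
            stat += (observed - expected) ** 2 / expected
    return stat


def chi2_sf_even_df(x, df):
    """Upper-tail probability of a chi-square variable; even df only."""
    half = x / 2
    return math.exp(-half) * sum(half ** k / math.factorial(k)
                                 for k in range(df // 2))


stat = chi_square_2xk(high, totals)
p_value = chi2_sf_even_df(stat, df=len(years) - 1)

for y in years:
    print(f"{y}: {share_by_year[y]:.1f}% high-quality")
print(f"overall: {overall_share:.1f}%")
```

A test for linear trend (for example, Cochran-Armitage) would be a natural alternative for ordered years; the homogeneity test is shown only because it needs no external library.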

The proportion of studies specifying the use of statistical analysis (either P values or confidence intervals) increased from 19.4% to 58.3%. A comparison between levels of evidence in hand surgery research and other surgical specialties is displayed in Table 3.

Table 3.

Comparison of Level of Evidence Between Surgical Specialties.

| Specialty | Year | Level I, n (%) | Level II, n (%) | Level III, n (%) | Level IV, n (%) |
|---|---|---|---|---|---|
| Hand surgery | 2013 | 9 (5.5) | 24 (14.6) | 45 (27.4) | 86 (52.4) |
| Spinal surgery | 2013 | 33 (4.7) | 163 (23.2) | 88 (12.5) | 419 (59.6) |
| Facial cosmetic | 2008 | 4 (1.7) | 24 (10.2) | 35 (14.8) | 173 (73.3) |
| Foot and ankle | 2010 | 7 (6) | 14 (12) | 18 (15) | 78 (67.8) |

Discussion

Evaluating the quality of published research, an integral component of EBM, facilitates informed clinical decisions. The number of hand surgery research articles, as demonstrated, has increased over the last 20 years, making critical appraisal more arduous. Hierarchical evidence appraisal is a reliable, reproducible, and rapid tool for evaluating the quality of evidence.12 Acknowledgment of the importance of these “levels of evidence” has increased in hand surgery, with multiple journals requesting an allocation on submission of a manuscript. This study demonstrates a progressive increase in the quality of evidence published in hand surgery research, confirming that hand surgery is emulating the trends of orthopedic10 and plastic and reconstructive surgery8 by adapting to the demands of EBM.

Low-quality evidence (levels IV and V) accounted for a substantial proportion (72.6%; 706/972) of published hand surgery research, with case series the most prevalent study type. Describing a cohort of patients who all undergo the same intervention over a period of time, case series mirror realistic clinical situations.5 For example, Brunelli et al assessed the functional and aesthetic outcomes following exteriorization of the pedicle in partial second toe transfer for reconstructing the distal phalanx in 10 patients.4 Case series are frequently the only appropriate study type for evaluating novel surgical techniques in a rare cohort of patients, as demonstrated by Brunelli et al. However, the absence of a control group and overwhelming selection and observation biases justify the low position of case series in the hierarchical evidence pyramid. The inclusion of a control group within the research design, as seen in cohort studies, provides a temporal framework for the assessment of causality, consequently improving the quality of research. Improved understanding and application of EBM principles can account for the 12-percentage-point decrease in the prevalence of case series, with an accompanying 8.7-percentage-point increase in cohort studies, over the last 20 years.

RCTs are widely accepted as the most definitive research design for assessing the effectiveness of an intervention.2 Although 72.2% of all hand surgery research centered on therapeutic interventions, the number of published RCTs was exceptionally low (2.9%). Other surgical specialties report comparably low numbers: otolaryngology, 3.7%18; ophthalmology, 4.9%7; and foot and ankle surgery, 4.1%.1 These similar trends highlight well-documented ethical and practical problems in performing RCTs in surgery.9 Solomon and McLeod postulate that fewer than 40% of surgical questions are amenable to RCTs.16 In hand surgery, difficulties arise in blinding, randomization, and standardization of both procedures and surgical skill. The desire for higher quality evidence will unavoidably result in poorly conducted RCTs with misleading results. The significant clinical influence of RCT outcomes therefore necessitates evaluation of their methodology. Using the Jadad scale, a critical appraisal scale of RCT methods, this trend analysis illustrated a substantial improvement in RCT design over a 20-year period. This progression has corresponded with the journals publishing hand surgery research adopting the Consolidated Standards of Reporting Trials3 statement for the reporting of RCTs. This 25-point checklist ensures that all critical information regarding RCTs is accurately recorded, providing transparent information on both methodology and results.

There are some limitations to this study. First, restricting the review to journals that frequently publish hand-related articles may have omitted evidence published in other journals with a higher impact factor. Second, selecting 5-year intervals for review provided the framework for a trend analysis, but research published between these years was not analyzed. Third, blinding the reviewers to both the year and the name of the journal was not feasible, potentially introducing a selection bias. Multiple hierarchical evidence appraisal systems exist, yet the Oxford CEBM Levels of Evidence 2011 guidelines were chosen as they are commonly utilized in comparable research. The strength of the study is its large sample of articles reviewed in a systematic manner, with the reviewers blinded to each other’s results. Selecting the journals on the basis of highest impact factor means that the research evaluated is the same research that influences clinical decision making.

Conclusion

Hand surgery research is slowly adopting the principles of EBM into clinical practice. Growing recognition and understanding of EBM has improved study designs across all levels of evidence. The reliance on case series and nonclinical research will inevitably hamper the future progression of hand surgery. The multiple barriers to performing higher quality studies must be assessed, as this high-level research is a requirement for the delivery of optimal care to patients.

Footnotes

Ethical Approval: This study was approved by our institutional review board.

Statement of Human and Animal Rights: This article does not contain any studies with human or animal subjects.

Statement of Informed Consent: Informed consent was not needed as this was a review article.

Declaration of Conflicting Interests: The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The authors received no financial support for the research, authorship, and/or publication of this article.

References

- 1. Barske HL, Baumhauer J. Quality of research and level of evidence in foot and ankle publications. Foot Ankle Int. 2012;33:1-6.

- 2. Becker A, Blümle A, Momeni A. Evidence-based Plastic and Reconstructive Surgery: developments over two decades. Plast Reconstr Surg. 2013;132:657-663.

- 3. Begg C, Cho M, Eastwood S, et al. Improving the quality of reporting of randomized controlled trials. The CONSORT statement. JAMA. 1996;276:637-639.

- 4. Brunelli F, Spalvieri C, Rocchi L, Pivato G, Pajardi G. Reconstruction of the distal finger with partial second toe transfers by means of an exteriorised pedicle. J Hand Surg Eur Vol. 2008;33:457-461.

- 5. Coroneos CJ, Ignacy TA, Thoma A. Designing and reporting case series in plastic surgery. Plast Reconstr Surg. 2011;128:361-368.

- 6. Jadad AR, Moore RA, Carroll D. Assessing the quality of reports of randomized clinical trials: is blinding necessary? Control Clin Trials. 1996;17:1-12.

- 7. Lai TY, Leung GM, Wong VW, Lam RF, Cheng AC, Lam DS. How evidence-based are publications in clinical ophthalmic journals? Invest Ophthalmol Vis Sci. 2006;47:1831-1838.

- 8. Loiselle F, Mahabir RC, Harrop AR. Levels of evidence in plastic surgery research over 20 years. Plast Reconstr Surg. 2008;121:207-211.

- 9. McCulloch P, Taylor I, Sasako M, Lovett B, Griffin D. Randomised trials in surgery: problems and possible solutions. BMJ. 2002;324:1448-1451.

- 10. Obremskey W, Pappas N, Attallah-Wasif E, Tornetta P, Bhandari M. Level of evidence in orthopaedic journals. J Bone Joint Surg Am. 2005;87:2632-2638.

- 11. OCEBM Levels of Evidence Working Group. The Oxford 2011 Levels of Evidence. Oxford, UK: Centre for Evidence-Based Medicine.

- 12. Poolman RW, Kerkhoffs GM, Struijs PA, Bhandari M. Don’t be misled by the orthopaedic literature: tips for critical appraisal. Acta Orthop. 2007;78:162-171.

- 13. Rosales R, Reboso-Moralesm L, Yolanda MH, Diez I. Level of evidence in hand surgery. BMC Research Notes. 2012;5:665-667.

- 14. Rosenberg W, Donald A. Evidence based medicine: an approach to clinical problem-solving. BMJ. 1995;310:1122-1126.

- 15. Schmitt T, Talley J, Chang J. New concepts and technologies in reconstructive hand surgery. Clin Plast Surg. 2012;39:445-451.

- 16. Solomon MJ, McLeod RS. Should we be performing more randomized controlled trials evaluating surgical operations? Surgery. 1995;118:459-467.

- 17. Watts G. Let’s pension off the “major breakthrough.” BMJ. 2007;334(suppl 1):s4.

- 18. Yao F, Singer M, Rosenfeld RM. Randomized controlled trials in otolaryngology journals. Otolaryngol Head Neck Surg. 2007;137:539-544.