“The Dress” is a peculiar photograph: by themselves the dress’ pixels are brown and blue, colors associated with natural illuminants [1], but popular accounts suggest the dress appears white/gold or blue/black [2]. Could the purported categorical perception arise because the original social-media question was an alternative-forced-choice? In a free-response survey (N=1401), we found that most people, including those naïve to the image, reported white/gold or blue/black, but some said blue/brown. Reports of white/gold over blue/black were higher among older people and women. On re-test, some subjects reported a switch in perception, showing the image can be multistable. In a language-independent measure of perception, we asked subjects to identify the dress’ colors from a complete color gamut. The results showed three peaks corresponding to the main descriptive categories, providing additional evidence that the brain resolves the image into one of three stable percepts. We hypothesize these reflect different internal priors: some people favor a cool illuminant (blue sky), discount shorter wavelengths, and perceive white/gold; others favor a warm illuminant (incandescent light), discount longer wavelengths, and see blue/black. The remaining subjects may assume a neutral illuminant, and see blue/brown. By introducing overt cues to the illumination, we can flip the dress color.

Popular accounts suggest The Dress (Figure 1A/B) elicits large individual differences in color perception [2]. We confirmed this in a survey of 1401 subjects (313 naïve; 53 tested in laboratory; 28/53 re-tested). Subjects were asked to complete: “this is a _______ and ______ dress” (Supplemental Experimental Procedures).

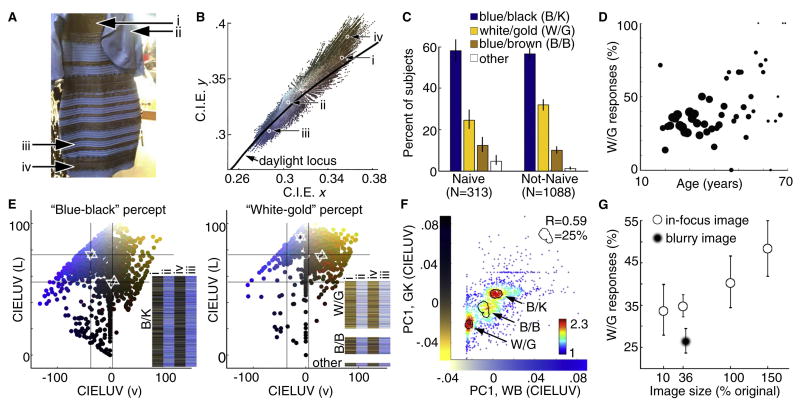

Figure 1.

Striking differences in color perception of The Dress. (A) Original photograph, reproduced with permission from Cecilia Bleasdale. (B) Pixel chromaticities for the dress. (C) Histogram of color descriptions, for naïve (N=313) and non-naïve (N=1088) subjects. Error bars are 95% C.I. (D) Of subjects who reported W/G or B/K (N=1221), the odds of reporting W/G increased by a factor of 1.02 per unit age, p=0.0035, 95% C.I. [1.01-1.03] (Table S1). Symbol size denotes number of subjects (largest dot=76; smallest dot=1). (E) Color matches for regions i-iv (panel A), sorted by color description (B/K, left; W/G, right). Symbols show averages (upward triangles, regions i and ii; downward triangles, regions iii and iv), and contain 95% C.I.s of the mean. Grid provides a reference across the B/K and W/G panels. Insets depict color matches for individual subjects in each row, sorted by description. (F) Color matches for region (i) plotted against matches for region (ii) for all subjects (R=0.59, p<0.0001). Contours contain the highest density (25%) of respondents obtained in separate plots (not shown) generated by sorting the data by description (B/K, W/G, B/B). The first principal component of the population matches to (i, iv) defined the y axis (gold/black, “GK”); the first PC of the population matches to (ii, iii) defined the x axis (white/blue, “WB”). Each subject’s (x,y) values are the PC weights for their matches (Supplemental Experimental Procedures). Color scale is number of subjects. (G) Among W/G or B/K respondents, percent of W/G responses increased with image size (N=235, 10%; N=1223, 36%; N=245, 100%; N=215, 150%; p < 0.0001, OR=1.004 [1.002-1.007]). The horizontal dimension of the image was about 2°, 7.2°, 20°, and 30° of visual angle. Blurring the image biased responses towards B/K (N=1048, image was 41% of original size; image was 260×401 pixels with a 0.11° pixel radius Gaussian blur; Chi-square, p<0.0001).

Overall, 57% of subjects described the dress as blue/black (B/K); 30%, white/gold (W/G); 11%, blue/brown (B/B); and 2%, other. Redundant descriptions (e.g. “white-golden”, “white-goldish”) were binned. Naïve and non-naïve populations showed similar distributions (Figure 1C), although non-naïve subjects used a smaller number of unique descriptions (Figure S1A). When country (Figure S1B) was removed from the logistic regression (Table S1), experience became a predictor: non-naïve subjects were more likely to choose B/K or W/G, over B/B or other (p = 0.021, Wald chi-square; Odds Ratio (OR) = 1.53, 95% C.I. [1.06-2.17]). These results show that experience shaped the language used to describe the dress, and possibly the perception of it. Males were less likely than females to report W/G over B/K (p = 0.019, OR=0.75, [0.58-0.95]). Moreover, odds of reporting W/G increased with age (Figure 1D). Of non-naïve subjects, 45% reported a switch since first exposure. Three of 28 subjects retested in laboratory reported a switch between sessions. Subjects whose perception switched were more likely to report B/K (p = 0.0003, OR = 0.60 [0.46-0.79], where W/G=success).
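To make the reported odds ratios concrete, the following minimal sketch shows how a per-unit odds ratio compounds and how it shifts a probability. The ORs (1.02 per unit age, 0.75 for males versus females) are taken from the text; the 30-unit age gap and the 30% baseline probability are hypothetical values chosen only for illustration, and the function names are not from the original analysis.

```python
def compound_odds_ratio(or_per_unit, units):
    """Odds ratio implied by applying a per-unit OR over `units` units."""
    return or_per_unit ** units


def apply_odds_ratio(baseline_prob, odds_ratio):
    """Convert a probability to odds, scale by the OR, convert back."""
    odds = baseline_prob / (1.0 - baseline_prob)
    new_odds = odds * odds_ratio
    return new_odds / (1.0 + new_odds)


# OR = 1.02 per unit age (reported); a 30-unit age gap is a hypothetical example.
or_30_units = compound_odds_ratio(1.02, 30)

# Hypothetical baseline: a subject with a 30% chance of reporting W/G over B/K.
baseline = 0.30
p_older = apply_odds_ratio(baseline, or_30_units)

# OR = 0.75 for males relative to females (reported), same hypothetical baseline.
p_male = apply_odds_ratio(baseline, 0.75)

print(f"OR over 30 units of age: {or_30_units:.2f}")
print(f"P(W/G) after applying it to a 30% baseline: {p_older:.2f}")
print(f"P(W/G) for males given a 30% female baseline: {p_male:.2f}")
```

Under these assumptions, a small per-unit OR accumulates to a sizeable difference across a wide age range, which is why an OR of 1.02 can still correspond to a visible trend in Figure 1D.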

Subjects were asked to match the dress’ colors. Blue pixels (ii, iii, Figure 1A) were consistently matched bluer by subjects reporting B/K and whiter by subjects reporting W/G, whereas brown pixels (i, iv) were matched blacker by subjects reporting B/K and golden by subjects reporting W/G (Figure 1E; Figure S1C). For a given region, average color matches made by W/G perceivers differed in both lightness and hue from matches made by B/K perceivers (p values < 0.0001). Intra-subject reliability was significant (Figure S1D,E). Across all subjects, matches for (i) were predictive of matches for (ii); moreover, the density plot showed three peaks (Figure 1F; Figure S1F,G). The regions of highest density for W/G, B/K, and B/B responders (contours, Figure 1F) coincide with these peaks, suggesting that the brain resolves the image into one of three stable percepts.
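The axes of Figure 1F are described in the caption as first principal components of the population matches, with each subject placed by their PC weights. The sketch below illustrates that general idea only: it finds the first principal component of a small table of matches by power iteration on the covariance matrix and projects each row onto it. The data values are invented, the function name is hypothetical, and power iteration is a stand-in; the procedure actually used is given in the Supplemental Experimental Procedures.

```python
import math


def first_principal_component(rows, iterations=200):
    """Return (unit direction, per-row weights) for the first PC of `rows`."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d).
    cov = [[sum(c[a] * c[b] for c in centered) / (n - 1) for b in range(d)]
           for a in range(d)]
    # Power iteration; assumes the start vector is not orthogonal to the PC.
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    # Each subject's weight is the projection of their centered match onto v.
    weights = [sum(c[j] * v[j] for j in range(d)) for c in centered]
    return v, weights


# Invented two-column "matches" (one row per subject), for illustration only.
matches = [(0.0, 0.0), (1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
direction, subject_weights = first_principal_component(matches)
print(direction)
print(subject_weights)
```

Repeating this for the matches to regions (i, iv) and to regions (ii, iii) would give one weight per subject on each of two axes, which is the kind of (x, y) pair that a density plot such as Figure 1F summarizes.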

We suspect that priors on both material properties [3, 4] and illumination [5] are implicated in resolving the dress’ color. In the main experiment, the image was 36% of the original size. In a follow-up experiment (N=853 additional subjects), the fraction of W/G respondents rose with increasing image size (Figure 1G). This suggests that high-spatial-frequency information (a cue to dress material), more evident at larger sizes, biases interpretation toward W/G. To further test this, we determined responses to a blurry image: the fraction of W/G respondents dropped. Subjects also rated the illumination for The Dress and two test images showing the dress under cool or warm illumination (Figure S2A). Judgment variance was higher for the original than for either test (cool, p=10⁻⁵; warm, p=10⁻⁷, F-test), but similar for the tests (p=0.08), suggesting that illumination in The Dress is ambiguous. When the dress was embedded in a scene with unambiguous illumination cues, the majority of subjects conformed to a description predicted by the illumination (Figure S2B).
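The image sizes in the follow-up experiment are reported in Figure 1G both as percentages of the original size and as approximate visual angles. As a minimal sketch of how the two relate, the standard visual-angle formula θ = 2·atan(w / 2d) can be applied to a scaled image width. The viewing distance of 57 cm is a hypothetical value, and the full-size width is derived so that the 100% image subtends 20°; neither the distance nor the physical width is stated in the text.

```python
import math


def visual_angle_deg(width, distance):
    """Visual angle (degrees) of an object of `width` viewed from `distance`."""
    return math.degrees(2.0 * math.atan(width / (2.0 * distance)))


# Hypothetical viewing distance (cm); not reported in the text.
distance_cm = 57.0
# Width chosen so the full-size image subtends the reported 20 degrees.
full_width_cm = 2.0 * distance_cm * math.tan(math.radians(10.0))

# Scale factors from Figure 1G: 10%, 36%, 100%, and 150% of original size.
scales = [0.10, 0.36, 1.00, 1.50]
angles = [visual_angle_deg(full_width_cm * s, distance_cm) for s in scales]

for s, a in zip(scales, angles):
    print(f"{s:>5.0%} of original size: {a:.1f} deg")
```

Under these assumptions the computed angles stay within about half a degree of the reported 2°, 7.2°, 20°, and 30°, consistent with the caption's "about"; the angle grows slightly less than linearly with image width at the largest size.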

A color percept is the visual system’s best guess given available sense data and an internal model of the world [6]. Visual cortex shows a bias for colors associated with daylight [7, 8]; this bias may represent the brain’s internal model. We hypothesize that some brains interpret the surprising chromatic distribution (Figure 1B) as evidence that a portion of the spectral radiance is caused by a color bias of the illuminant [1] (Supplementary Discussion). Some people may expect a cool illuminant, discount short wavelengths, and perceive white/gold; others may favor a warm illuminant, discount longer wavelengths, and see blue/black. The remaining people may assume a neutral illuminant and see blue/brown. But what causes the individual differences? People experience different illuminants and adapt [9]. If exposure informs one’s prior, we might predict that older subjects and women are more likely to assume sky-blue illumination because they are more likely than younger subjects and men to have a daytime chronotype [10]. Consistent with this prediction, women and older people were more likely to see white/gold. Conversely, night owls may be more likely to assume a warmer illuminant [2] common for artificial light, and see blue/black. Alternatively, all people may have the same prior on the illuminant, but different priors on other aspects of the scene that interact to produce different percepts of the dress.
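The hypothesis that different assumed illuminants yield different percepts can be illustrated with a toy calculation. The sketch below uses von Kries-style diagonal scaling (dividing each color channel by the assumed illuminant) as a stand-in for "discounting" the illuminant; it is not the authors' model. All RGB values, for both the pixels and the illuminants, are invented for illustration, and the function names are hypothetical.

```python
def discount_illuminant(pixel_rgb, illuminant_rgb):
    """Von Kries-style scaling: divide each channel by the assumed illuminant."""
    return tuple(p / i for p, i in zip(pixel_rgb, illuminant_rgb))


def channel_spread(rgb):
    """Crude measure of how far a color is from neutral gray."""
    return max(rgb) - min(rgb)


# Invented pixel colors standing in for the dress' blue and brown regions.
bluish_pixel = (0.55, 0.60, 0.80)
brownish_pixel = (0.45, 0.38, 0.25)

# Invented illuminant assumptions: cool (bluish) and warm (yellowish).
cool = (0.80, 0.90, 1.10)
warm = (1.10, 0.95, 0.70)

# Assuming a cool illuminant: the bluish region moves toward neutral (whiter),
# while the brownish region becomes relatively more red/yellow (golden).
blue_under_cool = discount_illuminant(bluish_pixel, cool)
brown_under_cool = discount_illuminant(brownish_pixel, cool)

# Assuming a warm illuminant: the bluish region becomes relatively bluer,
# while the brownish region moves toward neutral (darker gray or black).
blue_under_warm = discount_illuminant(bluish_pixel, warm)
brown_under_warm = discount_illuminant(brownish_pixel, warm)

print("cool assumed:", blue_under_cool, brown_under_cool)
print("warm assumed:", blue_under_warm, brown_under_warm)
```

With these made-up numbers, the same two pixel colors drift toward a white/gold pairing under the cool assumption and toward a blue/black pairing under the warm one, which is the qualitative pattern the hypothesis describes.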

Supplementary Material

Document S1. Provides additional information on experimental procedures, results, analysis, and discussion.

Figure S1. Provides the analysis of the subset of subjects who performed the task in the laboratory under controlled viewing conditions (N=53; 28 of whom were tested on two sessions separated by 3-5 days), and naïve subjects who reported having not seen the image before (N=313). Figure also shows the analysis of subpopulations from India and US.

Figure S2. Shows how subjects responded when viewing the dress in a scene with unambiguous illumination cues.

Table S1. Results of the logistic regression models.

Acknowledgments

NIH R01 EY023322, NSF 1353571. Dave Markun and Kris Ehinger consulted on color correction and modeling. Steven Worthington helped with statistics. Jeremy Wilmer and Sam Norman-Haignere helped quantify individual differences. Alexander Rehding and Kaitlin Bohon gave comments.

Footnotes

Author contributions

BRC, RLS, and KLH conceived the experiment and analyzed the data. RLS generated the stimuli and KLH implemented the Mechanical Turk experiment. BRC wrote the manuscript.

Supplemental Information, including two figures, one table, supplemental experimental procedures, supplemental discussion, and supplemental references, can be found online at *.bxs.


Contributor Information

Rosa Lafer-Sousa, Email: rlaferso@mit.edu, Department of Brain and Cognitive Sciences, MIT, Cambridge MA 02139.

Katherine L. Hermann, Email: khermann@mit.edu, Neuroscience Program, Wellesley College, Wellesley MA, 02481; Department of Brain and Cognitive Sciences, MIT, Cambridge MA 02139.

Bevil R. Conway, Email: bevil@mit.edu, Neuroscience Program, Wellesley College, Wellesley MA, 02481; Department of Brain and Cognitive Sciences, MIT, Cambridge MA 02139.

References

- 1. Conway BR. Why do we care about the colour of the dress? The Guardian. 2015. http://www.theguardian.com/commentisfree/2015/feb/27/colour-dress-optical-illusion-social-media

- 2. Rogers A. The Science of Why No One Agrees on the Color of This Dress. Wired. 2015. http://www.wired.com/2015/02/science-one-agrees-color-dress/

- 3. Zaidi Q. Visual inferences of material changes: color as clue and distraction. WIREs Cognitive Science. 2011;2:686–700.

- 4. Witzel C, Valkova H, Hansen T, Gegenfurtner KR. Object knowledge modulates colour appearance. iperception. 2011;2:13–49. doi: 10.1068/i0396.

- 5. Bloj MG, Kersten D, Hurlbert AC. Perception of three-dimensional shape influences colour perception through mutual illumination. Nature. 1999;402:877–879. doi: 10.1038/47245.

- 6. Brainard DH, Longere P, Delahunt PB, Freeman WT, Kraft JM, Xiao B. Bayesian model of human color constancy. J Vis. 2006;6:1267–1281. doi: 10.1167/6.11.10.

- 7. Lafer-Sousa R, Liu YO, Lafer-Sousa L, Wiest MC, Conway BR. Color tuning in alert macaque V1 assessed with fMRI and single-unit recording shows a bias toward daylight colors. J Opt Soc Am A Opt Image Sci Vis. 2012;29:657–670. doi: 10.1364/JOSAA.29.000657.

- 8. Conway BR. Color signals through dorsal and ventral visual pathways. Vis Neurosci. 2014;31:197–209. doi: 10.1017/S0952523813000382.

- 9. McDermott KC, Malkoc G, Mulligan JB, Webster MA. Adaptation and visual salience. J Vis. 2010;10:1–32. doi: 10.1167/10.13.17.

- 10. Adan A, Archer SN, Hidalgo MP, Di Milia L, Natale V, Randler C. Circadian typology: a comprehensive review. Chronobiol Int. 2012;29:1153–1175. doi: 10.3109/07420528.2012.719971.
