Abstract

A popular and successful class of decision-making models (the “evidence accumulator” models) has recently been challenged by a new hypothesis called the urgency-gating model. Hawkins et al. (J Neurophysiol 114: 40–47, 2015) used a sophisticated curve-fitting procedure to show that these models are discriminable, and thus testable, in constant-evidence tasks. In this Neuro Forum article, I raise possible limitations of such an approach, discuss some of its implications, and propose alternative solutions.

Keywords: urgency gating, evidence accumulation, goodness of fit, predictions, model parameters

The evidence accumulator models (EAMs, in all their variations) posit that when an observer decides between two possible interpretations of a noisy stimulus, sensory evidence is temporally integrated during the deliberation period until a commitment threshold is reached (Ratcliff 1978). This simple mechanism matches human decision data remarkably well in a variety of tasks (Palmer et al. 2005; Ratcliff 2002; Ratcliff and McKoon 2008) and receives strong additional support from neurophysiological studies in rodents and monkeys (see Gold and Shadlen 2007 for a review). Among these studies, a striking result was the observation that many neurons in the lateral intraparietal (LIP) area, a region involved in visual attention and eye movement preparation, increase their firing rate at a speed that depends on the strength of sensory evidence (Roitman and Shadlen 2002). These buildup patterns have been interpreted as the neural correlate of the temporal integration proposed by accumulator models during decision making.
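To make the accumulation mechanism concrete, here is a minimal sketch of a two-choice drift-diffusion process, the simplest member of the EAM family. It is not taken from any of the cited papers: the function name `simulate_eam`, the Euler discretization, and all parameter values (drift, threshold, noise, time step) are illustrative assumptions. Noisy evidence samples are summed without leak until the total reaches +threshold or −threshold.

```python
import numpy as np

def simulate_eam(drift, threshold=1.0, noise_sd=1.0, dt=0.001,
                 max_time=5.0, n_trials=2000, seed=0):
    """Hypothetical sketch of a drift-diffusion (perfect-integration) model.

    Evidence with mean `drift` plus white noise is integrated with no leak
    until it crosses +threshold (choice +1) or -threshold (choice -1).
    Returns (choices, rts); trials that never reach a bound get choice 0, rt NaN.
    """
    rng = np.random.default_rng(seed)
    n_steps = int(round(max_time / dt))
    x = np.zeros(n_trials)                      # accumulated evidence per trial
    choices = np.zeros(n_trials, dtype=int)
    rts = np.full(n_trials, np.nan)
    active = np.ones(n_trials, dtype=bool)      # trials still deliberating
    for step in range(1, n_steps + 1):
        # Euler-Maruyama increment: mean drift*dt, SD noise_sd*sqrt(dt)
        n_active = int(active.sum())
        x[active] += drift * dt + noise_sd * np.sqrt(dt) * rng.standard_normal(n_active)
        crossed = active & (np.abs(x) >= threshold)
        choices[crossed] = np.sign(x[crossed]).astype(int)
        rts[crossed] = step * dt
        active &= ~crossed                      # finished trials stop integrating
        if not active.any():
            break
    return choices, rts
```

Calling `simulate_eam(0.5)` and `simulate_eam(2.5)` and comparing `np.mean(choices == 1)` and `np.nanmean(rts)` shows the qualitative EAM signature: stronger evidence yields both more accurate and faster decisions.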

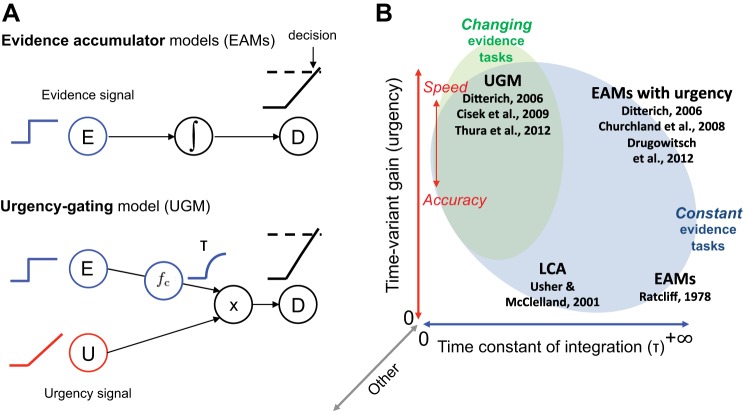

This influential theory has recently been challenged by an alternative proposal from our group. The motivation for this new model, called the urgency-gating model (UGM), was that perfect temporal integration of sensory evidence (always assumed by EAMs) cannot cope with the rapid changes that often occur in real-life situations. In the UGM, sensory evidence is quickly estimated (via a low-pass filter, to manage noise) and then combined multiplicatively with a growing urgency signal that pushes the system until the commitment threshold is crossed (Cisek et al. 2009; Thura et al. 2012). From a mechanistic point of view, EAMs and the UGM thus differ in how the neural decision signal gradually builds up to the threshold: in EAMs, the buildup relies solely on slow integration of sensory evidence; in the UGM, temporal integration of sensory evidence is brief (determined by the time constant of the low-pass filter), and it is the growing urgency that brings the signal to the threshold (Fig. 1A).
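The UGM mechanism can be sketched in the same spirit. This is a hypothetical illustration, not the published implementation of Cisek et al. (2009) or Thura et al. (2012): the function name `simulate_ugm`, the first-order filter, the linear urgency u(t) = slope·t starting at zero, and every parameter value are assumptions chosen for readability. Evidence is low-pass filtered with time constant `tau`, multiplied by urgency, and compared with a fixed threshold.

```python
import numpy as np

def simulate_ugm(evidence, tau=0.1, urgency_slope=2.0, threshold=1.0,
                 noise_sd=0.2, dt=0.001, seed=0):
    """Hypothetical single-trial sketch of an urgency-gating-style process.

    evidence: array of mean evidence per time step (positive favours +1).
    Returns (choice, decision_time), or (0, None) if no threshold crossing.
    Requires dt / tau < 1 for the discrete filter to be stable.
    """
    rng = np.random.default_rng(seed)
    filtered = 0.0
    for step, mean_e in enumerate(evidence, start=1):
        t = step * dt
        # Discretized white noise: per-sample SD scales with 1/sqrt(dt)
        sample = mean_e + noise_sd / np.sqrt(dt) * rng.standard_normal()
        # First-order low-pass filter (leaky average) with time constant tau
        filtered += (dt / tau) * (sample - filtered)
        # Urgency grows linearly from zero and multiplies (gates) the evidence
        decision_variable = urgency_slope * t * filtered
        if abs(decision_variable) >= threshold:
            return int(np.sign(decision_variable)), t
    return 0, None
```

With noise switched off (`noise_sd=0.0`) and constant evidence `np.ones(3000)`, the decision time is set almost entirely by the urgency slope once the filter has settled; doubling `urgency_slope` shortens it, which is the lever an urgency-based account uses for speed-accuracy adjustments.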

Fig. 1.

A: schema of the evidence accumulator models (EAMs; top) and the urgency-gating model (UGM; bottom). B: models of speeded decisions described in a space defined by 2 parameters: the time constant of evidence integration (abscissa) and the time-variant gain implementing urgency (ordinate). Other parameters can possibly define extra dimensions (gray arrows). UGM refers to the urgency-gating model of Cisek and colleagues (Cisek et al. 2009; Thura et al. 2012). Ditterich (2006) at top left refers to the time-varying model without accumulation; Ditterich (2006) at top right refers to the time-varying model with accumulation. Drugowitsch et al. (2012) and Churchland et al. (2008) refer to a diffusion model incorporating an urgency signal. LCA refers to the leaky competing accumulator model of Usher and McClelland (2001). EAM refers to the class of accumulator models that assume perfect integration and no urgency (e.g., Ratcliff 1978). All of these models provide good fits to data collected in constant-evidence tasks (blue ellipse). Changing-evidence tasks (green ellipse) and speed-accuracy manipulation (red arrow) help to constrain model parameters further. In Cisek et al. (2009), Thura et al. (2012), and Thura and Cisek (2014), we showed that the time constant of integration was brief. In Thura et al. (2014), we showed that monkeys adjust the urgency parameter depending on the context of the decision task, emphasizing either the speed or the accuracy of their decisions to optimize their reward rate.

In a recent paper published in the Journal of Neurophysiology, Hawkins et al. (2015b) test the predictions of EAMs and the UGM against two data sets often used to support evidence accumulation during decision making (Ratcliff and McKoon 2008; Roitman and Shadlen 2002). They report that EAMs provide a better fit to the Ratcliff and McKoon data, but they also show that the behavioral data (performance and response times) from the Roitman and Shadlen study are in fact better described by the UGM. This last result is surprising, because Roitman and Shadlen's study is considered perhaps the strongest neurophysiological support for evidence accumulation during simple perceptual decision making. Almost all work addressing the neural mechanisms of such processes cites this study and follows the authors' interpretation: that an accumulation process predicts the animals' behavior and explains the neural data in parietal cortex.

Consequently, two questions arise from these results. First, what can one conclude from this apparent discrepancy, given that neither model conclusively fits both data sets? Second, why would the UGM successfully predict Roitman and Shadlen's results if these data have always been considered the paragon of support for evidence accumulator models?

We recently proposed that in any task in which sensory evidence is constant over the time course of a trial, accumulator and urgency-gating models are mathematically equivalent and make the same predictions about behavioral and neuronal decision data (Thura et al. 2012). Because almost all decision tasks have used such stable input scenarios, we proposed that the buildup that is always interpreted as accumulation of sensory evidence might instead reflect growing motor urgency (Churchland et al. 2008; Ditterich 2006).
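A noise-free sketch shows where this equivalence comes from. The assumptions here are ours, not the original derivation: constant mean evidence $E$, evidence low-pass filtered as $\tau\,\dot{\bar E} = -\bar E + E$ with $\bar E(0) = 0$, and a linear urgency signal $u(t) = t$.

$$
\begin{aligned}
x_{\mathrm{EAM}}(t) &= \int_0^{t} E \, ds = E\,t, \\
x_{\mathrm{UGM}}(t) &= u(t)\,\bar{E}(t) = t\,E\left(1 - e^{-t/\tau}\right) \approx E\,t \qquad (t \gg \tau).
\end{aligned}
$$

Once the filter has settled, both decision variables rise linearly with a slope proportional to $E$ and reach a threshold $\theta$ at approximately $t^{*} = \theta / E$, so in this simplified setting they predict the same dependence of decision time on evidence strength.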

In their report, Hawkins et al. (2015b) demonstrate that our mathematical proof of equivalence was incomplete, because our equations did not consider how intratrial noise variance evolves during the decision process. To show that the models are distinguishable not only theoretically but also in practice, the authors first used a model recovery method: they simulated data sets from one model and fit those simulated data with both EAMs and the UGM to assess which model provides the best fit. The rationale is that if the models are discriminable, then data generated by EAMs will be better fit by EAMs than by the UGM, and vice versa. In all cases tested, synthetic data generated from EAMs were best fit by EAMs, and synthetic data generated from the UGM were best fit by the UGM. Next, they compared the performance of the two models in fitting real data from human and monkey subjects performing stable-input decision tasks. Here again, the authors showed that the two models are discriminable when fitting these real data sets. On the basis of these three lines of evidence (theoretical, simulated, and empirical), Hawkins et al. concluded that EAMs are not equivalent to the UGM and that these models can be discriminated even in constant-evidence paradigms.
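The variance difference can be checked numerically. The following Monte Carlo sketch is ours and is not a reproduction of Hawkins et al.'s analysis; it assumes zero-mean white noise, a first-order low-pass filter, and linear urgency u(t) = t, and the function name and parameter values are hypothetical. Under these assumptions, perfectly integrated noise has variance σ²t, whereas filtered noise settles near σ²/(2τ) and is then scaled by u(t)², so the UGM variance grows roughly as t².

```python
import numpy as np

def noise_variance_over_time(tau=0.1, noise_sd=1.0, dt=0.001, duration=1.0,
                             n_trials=20000, seed=0):
    """Hypothetical Monte Carlo comparison of within-trial noise variance.

    Zero-mean white noise is (a) perfectly integrated, as in an EAM, and
    (b) low-pass filtered and multiplied by urgency u(t) = t, as in a
    UGM-style model. Returns times and the across-trial variance of each.
    """
    rng = np.random.default_rng(seed)
    n_steps = int(round(duration / dt))
    times = np.arange(1, n_steps + 1) * dt
    integrated = np.zeros(n_trials)
    filtered = np.zeros(n_trials)
    var_eam = np.empty(n_steps)
    var_ugm = np.empty(n_steps)
    for i, t in enumerate(times):
        sample = noise_sd / np.sqrt(dt) * rng.standard_normal(n_trials)
        integrated += sample * dt                     # perfect integration
        filtered += (dt / tau) * (sample - filtered)  # leaky low-pass filter
        var_eam[i] = integrated.var()
        var_ugm[i] = (t * filtered).var()             # urgency-gated noise
    return times, var_eam, var_ugm
```

Comparing the variances at t = 0.5 s and t = 1 s, the EAM variance roughly doubles while the UGM variance roughly quadruples. Mean trajectories can coincide while the growth of noise, and hence the shape of response-time distributions, does not.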

Hawkins et al. (2015b) are correct that our derivations did not consider the evolution of intratrial noise variance. This implies that, strictly speaking, EAMs and the UGM are indeed not mathematically identical in constant environments. However, as described later in more detail, this omission affects neither the motivation for using an urgency mechanism to make decisions nor the experimental validation of the UGM in our study; our data cannot be fit by any version of an EAM.

Nevertheless, let us focus on how well the models predict real data in constant evidence tasks. Hawkins et al. (2015b) analyzed two data sets from human (Ratcliff and McKoon 2008) and monkey (Roitman and Shadlen 2002) experiments. In each study, subjects performed the classic random dot motion discrimination task in which a decision is based on a cloud of dots, a stable percentage of which move coherently toward the left or right of the screen while the remaining dots move randomly. The task for the subjects is to indicate the direction of coherent motion by pressing buttons or through eye movements.

As mentioned earlier, the outcome of the fitting exercise is that neither model unequivocally predicts both data sets (their Fig. 5). The goodness-of-fit comparisons show that EAMs predict the data of Ratcliff and McKoon better than the UGM does, whereas the UGM better predicts the data collected in Roitman and Shadlen's study. But how strong are the conclusions one can draw from these comparisons? The differences in goodness of fit are in fact very modest (only 1.5% in favor of EAMs for Ratcliff and McKoon's data and about 6% in favor of the UGM for Roitman and Shadlen's data).

When differences are that small, one must wonder whether the choices made in configuring the models could have determined which model ultimately provides the better fit. For instance, when Hawkins et al. (2015b) add across-trial noise variability to both models in their synthetic data fitting analysis, EAMs and the UGM become less discriminable (compare the fits in their Fig. 2, A and C, and the scales of the x-axes in Fig. 2, B and D). One can thus suspect that any remaining discriminability between the models depends on the assumptions made about the degree to which variability is dominated by within-trial vs. across-trial noise.
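A simplified calculation suggests why across-trial variability erodes discriminability. Suppose (our assumption, for illustration only) that the drift $v$ varies across trials with variance $\eta^{2}$, within-trial white noise has intensity $\sigma^{2}$, the two are independent, and the UGM uses linear urgency $u(t) = t$ with filter time constant $\tau$:

$$
\operatorname{Var}\!\left[x_{\mathrm{EAM}}(t)\right] = \eta^{2} t^{2} + \sigma^{2} t,
\qquad
\operatorname{Var}\!\left[x_{\mathrm{UGM}}(t)\right] \approx t^{2}\left(\eta^{2} + \frac{\sigma^{2}}{2\tau}\right) \quad (t \gg \tau).
$$

Without across-trial variability ($\eta = 0$), the EAM variance grows linearly and the UGM variance quadratically. As $\eta^{2} t$ becomes large relative to $\sigma^{2}$, the EAM variance also grows approximately as $t^{2}$, so the variance signature that separates the two models shrinks.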

Another example comes from a recent paper by the same group, in which the authors claim that fixed-bound models (in which the decision threshold is fixed within trials) provide a better fit to more data sets than models with collapsing bounds or urgency signals (Hawkins et al. 2015a). However, a closer inspection of what was actually shown reveals that the results are remarkably inconclusive. For example, Figs. 5 and 6 of that article show two analyses (made with slightly different assumptions about variability in model parameters) that lead to different conclusions for most of the data sets under consideration. So which set of conclusions is correct?

These cases highlight an issue often associated with goodness-of-fit comparisons on data sets for which the predictions of different models are in fact very similar (see, for instance, Teodorescu and Usher 2013). Many assumptions are made about parameter distributions, and the choice of shape for these distributions influences the outcome of the tests. In some circumstances, it is these assumptions about parameter settings, and not the structure of the model itself, that determine its performance in fitting data (Jones and Dzhafarov 2014b). The model under consideration then becomes universal (or unfalsifiable), because it can fit literally any data set as long as its parameters are properly chosen. Although these considerations are still under lively debate (Heathcote et al. 2014; Jones and Dzhafarov 2014a; Smith et al. 2014), they certainly stress the importance of understanding which assumptions are responsible for a model's predictive performance.

To come back to the Hawkins et al. (2015b) study, if one still assumes that the model fits are conclusive, then one important conclusion is that previous accounts of existing data, interpreted by default within the accumulator framework, may need to be reexamined more carefully in light of a potential alternative interpretation. The authors show that accumulator models provide a better description of the human data, whereas the UGM is more accurate in predicting the monkeys' behavior (for the 2 selected data sets). From a modeling perspective, this could be taken to suggest (as noted by the authors) that human and monkey subjects used different mechanisms to make their decisions: human subjects accumulated evidence and decided without a strong influence of urgency, whereas monkeys processed sensory evidence more quickly and urgency eventually stopped the process to prevent uncertain decisions from consuming too much time.

One speculative interpretation of this interesting result is that naive human subjects may have instinctively prioritized accuracy over all other objectives (perhaps out of pride) and thus set a low level of urgency to guarantee a high percentage of correct responses, even at a high cost in time. “Expert” monkeys, however, may have adopted a different strategy (perhaps through months of training), favoring their overall success (and reward) rate rather than accuracy per se. Consistent with this proposal, we and others recently showed that urgency signals allow monkeys to optimize their reward rate by adjusting the speed-accuracy trade-off of their decisions (Ditterich 2006; Drugowitsch et al. 2012; Thura et al. 2014).

Going further with this interpretation, such results are consistent with the idea that decision mechanisms are adjustable depending on factors such as task properties, instructions and constraints, and the subjects' expertise, allowing one to apply the most appropriate strategy for a given task or situation. For EAMs and the UGM, this “switch” is conceivable, because the models in fact comprise the same two key parameters: a time constant of evidence integration (τ) and a time-variant gain implementing urgency. In the UGM, the time constant of integration is brief and urgency is high. In EAMs, however, the time constant is infinite (i.e., integration is perfect) and urgency is absent. These models can thus be described in a two-dimensional parameter space whose axes are the time constant and the time-variant gain (Fig. 1B). In this space, EAMs and the UGM lie at opposite corners. Other decision models that differ with regard to these two parameters can also be placed in this space: the leaky competing accumulator model (LCA; Usher and McClelland 2001) would lie at the bottom left corner, whereas accumulator models incorporating urgency (Churchland et al. 2008; Ditterich 2006; Drugowitsch et al. 2012) would lie at the top right corner.
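The two-parameter space of Fig. 1B can be sketched as a single family of models. This parameterization is hypothetical and is not how any of the cited models is formally defined: evidence is integrated with leak time constant `tau` (np.inf for perfect integration), and the result is multiplied by a gain g(t) = 1 + urgency_slope·t. The LCA-like corner, for instance, keeps only the leak and omits the competition that defines the actual LCA. The corner parameter values are arbitrary examples.

```python
import numpy as np

def decision_variable(evidence, tau, urgency_slope, dt=0.001):
    """Noise-free decision variable in a hypothetical 2-parameter model space.

    tau: leak time constant in seconds (np.inf = perfect integration).
    urgency_slope: slope of the time-varying gain g(t) = 1 + urgency_slope * t.
    Returns g(t) * integrated_evidence(t) at every time step.
    """
    integrated = 0.0
    out = np.empty(len(evidence))
    for i, e in enumerate(evidence):
        t = (i + 1) * dt
        leak = 0.0 if np.isinf(tau) else integrated / tau
        integrated += dt * (e - leak)            # dI/dt = e - I/tau
        out[i] = (1.0 + urgency_slope * t) * integrated
    return out

# Illustrative corners of the space (arbitrary example values)
MODEL_CORNERS = {
    "EAM": dict(tau=np.inf, urgency_slope=0.0),               # bottom right
    "LCA-like": dict(tau=0.1, urgency_slope=0.0),             # bottom left
    "UGM-like": dict(tau=0.1, urgency_slope=20.0),            # top left
    "accumulator+urgency": dict(tau=np.inf, urgency_slope=20.0),  # top right
}
```

With constant evidence, the EAM corner reduces to a running integral, the leaky corner saturates at roughly τ times the evidence, and the UGM-like corner is that saturated value scaled by a growing gain, so its rise is driven by urgency rather than by accumulation.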

How, then, can we estimate these parameters and dissociate evidence accumulation from urgency unambiguously? One approach, taken by Hawkins et al. (2015b), is to compare model fits on existing data from constant-evidence tasks. A complementary approach is to design new paradigms that force the models to make very different predictions, which could then be easily teased apart empirically at both the behavioral and neuronal levels.

Recently, we designed tasks in which sensory evidence for the two response choices continuously evolves and can even reverse within individual trials. Behavioral data strongly suggest that in these tasks, subjects do not accumulate the sensory evidence used to make their decisions, whether the stimulus is noisy or not (Cisek et al. 2009; Thura et al. 2012). This contradicts the predictions of EAMs. By combining behavioral and neurophysiological approaches, we also showed that neurons involved in the deliberation process track the evolution of sensory evidence with a time constant that does not exceed 200 ms (Thura and Cisek 2014). Next, we showed that the same neurons involved in tracking the evidence are also modulated by a time-varying signal, suggesting the presence of urgency (Thura and Cisek 2014). Finally, we tested this urgency signal hypothesis by encouraging monkeys to modify the speed-accuracy trade-off of their decisions by varying the timing parameters of the changing evidence task. We demonstrated that animals adjust their level of urgency depending on the context of the task, to increase their rate of reward (Thura et al. 2014). Thus only the UGM successfully predicts subjects' behavior and describes the neural patterns in these scenarios, which more closely resemble the changing world in which the brain evolved.
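A toy evidence reversal illustrates why changing-evidence tasks separate the models so clearly. The scenario is hypothetical: noise-free evidence of ±1 (arbitrary units), a 600/400 ms split, and τ = 200 ms taken as the upper bound reported above. The helper `low_pass` is our own illustration; only the signs of the two outputs are meant to be compared, since the unit-gain filter and the integral have different units.

```python
import numpy as np

def low_pass(signal, tau, dt=0.001):
    """First-order unit-gain low-pass filter; tau=np.inf gives the running integral."""
    out = np.empty(len(signal))
    state = 0.0
    for i, s in enumerate(signal):
        if np.isinf(tau):
            state += s * dt                      # perfect integration
        else:
            state += (dt / tau) * (s - state)    # leaky average of recent input
        out[i] = state
    return out

dt = 0.001
# Hypothetical trial: evidence favours option A for 600 ms, then reverses
evidence = np.concatenate([np.full(600, 1.0), np.full(400, -1.0)])
integrated = low_pass(evidence, np.inf, dt)      # EAM-style total evidence
tracked = low_pass(evidence, 0.2, dt)            # 200 ms evidence tracking
```

At the end of the trial, the perfect integrator still favours the initial option (net +0.2), whereas the 200 ms filter already favours the new one. Behavior in such trials therefore discriminates between the models directly, without relying on small differences in goodness of fit.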

Crucially, EAMs and the UGM also make very different predictions about the brain structures and circuits involved in the decision process. Assuming that an urgency signal influences decisions leads to several important questions: What is the origin of this signal? Does it affect other functions (e.g., movement execution)? What is its role in pathological behavior? We are currently investigating these questions in ongoing studies (see, for instance, Thura et al. 2014 for an example of how urgency might affect movement execution).

In their discussion, Hawkins et al. (2015b) suggest challenging the UGM with the same multiple constant-evidence paradigms that have been used to support accumulator models. Because it can be argued that our results are task dependent and do not generalize to the kinds of constant-evidence perceptual discrimination tasks usually studied, this is an understandable proposition. But their own attempt already reveals how small the differences between model predictions can be. Should we draw conclusions and commit to a model on the basis of a 1.5% or 6% difference in fit when neither model is perfect? Instead, as the authors acknowledge, I believe that a fruitful alternative would be to challenge both models with paradigms in which sensory information varies over the time course of trials (Huk and Shadlen 2005; Kiani et al. 2008). These tasks have features that allow one to distinguish the models very clearly, they are more representative of real-world decisions, and when combined with neural recordings, they can help constrain the space of models by putting bounds on their parameters (Fig. 1B).

GRANTS

This work was supported by fellowships from the FYSSEN Foundation and the Groupe de Recherche sur le Système Nerveux Central.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author.

AUTHOR CONTRIBUTIONS

D.T. prepared figures; D.T. drafted manuscript; D.T. edited and revised manuscript; D.T. approved final version of manuscript.

ACKNOWLEDGMENTS

I am grateful to John Kalaska and Paul Cisek for discussions and comments on the manuscript.

REFERENCES

- Churchland AK, Kiani R, Shadlen MN. Decision-making with multiple alternatives. Nat Neurosci 11: 693–702, 2008.

- Cisek P, Puskas GA, El-Murr S. Decisions in changing conditions: the urgency-gating model. J Neurosci 29: 11560–11571, 2009.

- Ditterich J. Evidence for time-variant decision making. Eur J Neurosci 24: 3628–3641, 2006.

- Drugowitsch J, Moreno-Bote R, Churchland AK, Shadlen MN, Pouget A. The cost of accumulating evidence in perceptual decision making. J Neurosci 32: 3612–3628, 2012.

- Gold JI, Shadlen MN. The neural basis of decision making. Annu Rev Neurosci 30: 535–574, 2007.

- Hawkins GE, Forstmann BU, Wagenmakers EJ, Ratcliff R, Brown SD. Revisiting the evidence for collapsing boundaries and urgency signals in perceptual decision-making. J Neurosci 35: 2476–2484, 2015a.

- Hawkins GE, Wagenmakers EJ, Ratcliff R, Brown SD. Discriminating evidence accumulation from urgency signals in speeded decision making. J Neurophysiol 114: 40–47, 2015b.

- Heathcote A, Wagenmakers EJ, Brown SD. The falsifiability of actual decision-making models. Psychol Rev 121: 676–678, 2014.

- Huk AC, Shadlen MN. Neural activity in macaque parietal cortex reflects temporal integration of visual motion signals during perceptual decision making. J Neurosci 25: 10420–10436, 2005.

- Jones M, Dzhafarov EN. Analyzability, ad hoc restrictions, and excessive flexibility of evidence-accumulation models: reply to two critical commentaries. Psychol Rev 121: 689–695, 2014a.

- Jones M, Dzhafarov EN. Unfalsifiability and mutual translatability of major modeling schemes for choice reaction time. Psychol Rev 121: 1–32, 2014b.

- Kiani R, Hanks TD, Shadlen MN. Bounded integration in parietal cortex underlies decisions even when viewing duration is dictated by the environment. J Neurosci 28: 3017–3029, 2008.

- Palmer J, Huk AC, Shadlen MN. The effect of stimulus strength on the speed and accuracy of a perceptual decision. J Vis 5: 376–404, 2005.

- Ratcliff R. A diffusion model account of response time and accuracy in a brightness discrimination task: fitting real data and failing to fit fake but plausible data. Psychon Bull Rev 9: 278–291, 2002.

- Ratcliff R. A theory of memory retrieval. Psychol Rev 85: 59–108, 1978.

- Ratcliff R, McKoon G. The diffusion decision model: theory and data for two-choice decision tasks. Neural Comput 20: 873–922, 2008.

- Roitman JD, Shadlen MN. Response of neurons in the lateral intraparietal area during a combined visual discrimination reaction time task. J Neurosci 22: 9475–9489, 2002.

- Smith PL, Ratcliff R, McKoon G. The diffusion model is not a deterministic growth model: comment on Jones and Dzhafarov (2014). Psychol Rev 121: 679–688, 2014.

- Teodorescu AR, Usher M. Disentangling decision models: from independence to competition. Psychol Rev 120: 1–38, 2013.

- Thura D, Beauregard-Racine J, Fradet CW, Cisek P. Decision making by urgency gating: theory and experimental support. J Neurophysiol 108: 2912–2930, 2012.

- Thura D, Cisek P. Deliberation and commitment in the premotor and primary motor cortex during dynamic decision making. Neuron 81: 1401–1416, 2014.

- Thura D, Cos I, Trung J, Cisek P. Context-dependent urgency influences speed-accuracy trade-offs in decision-making and movement execution. J Neurosci 34: 16442–16454, 2014.

- Usher M, McClelland JL. The time course of perceptual choice: the leaky, competing accumulator model. Psychol Rev 108: 550–592, 2001.