Abstract

Navigability, an ability to find a logarithmically short path between elements using only local information, is one of the most fascinating properties of real-life networks. However, the exact mechanism responsible for the formation of navigation properties remained unknown. We show that navigability can be achieved by using only two ingredients present in the majority of networks: network growth and local homophily, giving a persuasive answer how the navigation appears in real-life networks. A very simple algorithm produces hierarchical self-similar optimally wired navigable small world networks with exponential degree distribution by using only local information. Adding preferential attachment produces a scale-free network which has shorter greedy paths, but worse (power law) scaling of the information extraction locality (algorithmic complexity of a search). Introducing saturation of the preferential attachment leads to truncated scale-free degree distribution that offers a good tradeoff between these parameters and can be useful for practical applications. Several features of the model are observed in real-life networks, in particular in the brain neural networks, supporting the earlier suggestions that they are navigable.

Introduction

Large scale networks are ubiquitous in many domains of science and technology. They influence numerous aspects of daily human life, and their importance is rising with the advances in the information technology. Even human’s ability to think is governed by a large-scale brain network containing more than 100 billion neurons[1]. One of the most fascinating features found in the real-life networks is the navigability, an ability to find a logarithmically short path between two arbitrary nodes using only local information, without global knowledge of the network.

In the late 1960’s Stanley Milgram and his collaborators conducted a series of experiments in which individuals from the USA were asked to get letters delivered to an unknown recipient in Boston[2]. Participants forwarded the letter to an acquaintance that was more likely to know the target. As a result about 20% of the letters arrived to the target on the average in less than six hops. In addition to revealing the existence of short paths in real-world acquaintance networks, the small-world experiments showed that these networks are navigable: a short path was discovered through using only local information. Later, the navigation feature was discovered in other types of networks[3]. The first algorithmic navigation model with a local greedy routing was proposed by J. Kleinberg[4, 5], inspiring many other studies and applications of the effect (see the recent review in [3]). However, the exact mechanism that is responsible for formation of navigation properties in real-life networks remained unknown. It was recently suggested that the navigation properties can rise due to various optimization schemes, such as optimization of network’s entropy[6], optimization of network transport[7–12], game theory models[13, 14] or due to internal hyperbolicity of a hidden metric space[15]. In ref. [16] a realistic model based on random heterogeneous networks was proposed to describe navigation processes in real scale-free networks. Later, hyperbolicity of hidden metric space was proposed as a possible reason of forming such navigable structures in real-life networks[14, 15, 17]. However it is unclear whether hyperbolicity and other aforementioned complex schemes are related to processes in real-life networks. In this work we show that the navigation property can be directly achieved by using just two ingredients that are present in the majority of real-life networks: network growth and local homophily[18], giving a simple and persuasive answer to the question of the nature of navigability in real-life systems.

Advances in network studies often find their use in a highly connected field of applied graph based algorithms. And one of natural products of navigation studies is emergence of new efficient algorithms for distributed data similarity search (namely, the K-Nearest Neighbor Search, K-NN) which is a keen problem for many applications[19]. K-NN algorithms based on proximity graph routing have been known for decades[20–22], they, however, suffer from the power law scalability of the routing hops number. Several graph-only structures with polylogarithmic routing complexity inspired by the Kleinberg’s idea were proposed in refs. [23–25] to solve this problem; their realization, nonetheless, was far from practical applications. In refs. [26–28] an efficient general metric approximate K-NN algorithm was introduced based on a different idea. The algorithm utilized incremental insertion and connection of newcoming elements to their closest neighbors in order to construct a navigable small world graph. By simulations the authors showed that the algorithm can produce networks with short greedy paths and achieves a polylogarithmic complexity for both search and insertion, firmly outperforming rival algorithms for a wide selection of datasets[28–30]. However, the scope of the works[26–28] was limited to the approximate nearest neighbors problem.

Based on these ideas we propose Growing Homophilic (GH) networks as the origin of small world navigation in real-life systems. We analyze the network properties using simulations and theoretical consideration, confirming navigation properties and demonstrating that the scale-free navigation models[16, 31, 32] considered earlier are not truly local in terms of information extraction locality (algorithmic complexity of a search), while the proposed model is. We also show that the GH network features can be found in real-life networks, with an emphasis on functional brain networks.

Functional brain networks are studied in vivo using MRI techniques[33] and are usually modeled by generalizations of random models[34–36] requiring global network knowledge. It was suggested that the brain networks are navigable through utilizing the rich club (a densely interconnected high degree subgraph[37]) and that the navigation plays a major role in brain’s function[38]. In the recent work[14] it was demonstrated that the functional brain networks have a navigation skeleton that allows greedy searching with low errors. Both growth and homophily[35, 39] are usually considered to be important factors influencing the brain network structure. Local connection to nearby neurons together with network growth are considered as a plausible mechanism for formation of long range connections in small nervous networks[40, 41], similarly to the proposed model. Our study shows that the GH networks have high level features that are found in the functional brain networks, indicating that the GH mechanism is not suppressed and plays a significant role in brain network formation, thus supporting the earlier suggestions that the brain networks are naturally navigable.

GH networks can also be viewed as a substantial generalization of a complex growing spatial 1D OHO model introduced in [42], which included incremental connection of new elements to near 1D circle interval neighbors, followed by interval normalization. The OHO model demonstrated a way to deterministically produce networks with high clustering and short average path. Recently, another generalization of this model for the multidimensional case was proposed as a possible mechanism for formation of neural networks[43]. However, formation of navigation properties was not a subject of the OHO model studies. GH network in the case of 1D circle data can be also considered as a degenerated version of growth models studied in [17] with an exclusion of popularity term (which also makes it similar to the OHO model). It was demonstrated that the hyperbolic model from ref. [17], which is a growing model in a hyperbolic space, adequately describes evolution of many scale-free real networks. However, the properties for the case without hyperbolicity (popularity) which leads to an exponential degree distribution were studied poorly. As follows from the navigation models in refs. [15, 16, 32], a scale-free degree distribution with γ<2.5 is required for the such networks to be navigable in the large network limit, thus according to [15, 16, 32] without hyperbolicity the mentioned growing network should not to be navigable. In contrast we show that the proposed GH networks with exponential degree distribution are in fact navigable even when using the definition of navigability from ref. [16].

Results

Construction and navigation properties

To construct a GH network we use a set of elements S from a metric space σ and a single construction parameter M. We start building network by inserting a random element from S. Then we iteratively insert randomly selected remaining elements e by connecting to M the previously inserted elements that have minimal distance Δ to e, until all elements from S are inserted. Unlike the models from refs. [4, 5, 7, 8, 16, 17, 42–45] and the Watts-Strogatz model [46] (which all require global network knowledge at construction), the GH algorithm insertions can be done approximately using only local information by selecting the approximate nearest neighbors through a help of network navigation feature (see Methods section for details). This has a clear interpretation: new nodes in many real networks do not have global knowledge, so they have to navigate the network in order to find their place and adapt. The tests showed that under appropriate parameters there is no measurable difference in network metrics whether the construction had exact or inexact neighbors selection, while the network assembly process was drastically faster in the approximated neighbors case.

Because the elements from S are not placed on a regular lattice, the greedy search algorithm can be trapped in a local minimum before reaching the target. The generalization of the regular lattice for this case is the Delaunay graph, which is dual to the Voronoi partition. If we have a Delaunay graph subset in the network, the greedy search always ends at an element from S which is the closest to any target element t ∈ σ [47], thus exactly solving the Nearest Neighbor problem. In a less general case when t ∈ S (i.e. the target is an element from the network), it is enough to have a Delaunay subgraph–the Relative Neighborhood Graph[20]. It is easy to construct a Delaunay graph in low dimensional Euclidian spaces, especially in 1D case where Delaunay graph is a simple liked list, however it was shown that constructing the graph using only distances between the set elements is impossible for general metric spaces[47]. Still, connecting to M nearest neighbors acts as a good enough approximation of the Delaunay graph, so that by increasing M or using a slightly modified versions of the greedy algorithm these effects can be made negligible[27, 28]. The average greedy algorithm hop count of a GH network for different input data is presented in Fig 1(A). The graph shows a clear logarithmic scaling for all data used, including a non-trivial case of edit distance for English words. At the parameters used, the probability of a successfull navigation was higher than 0.92 for all the data and higher than 0.999 for vector data with d<5.

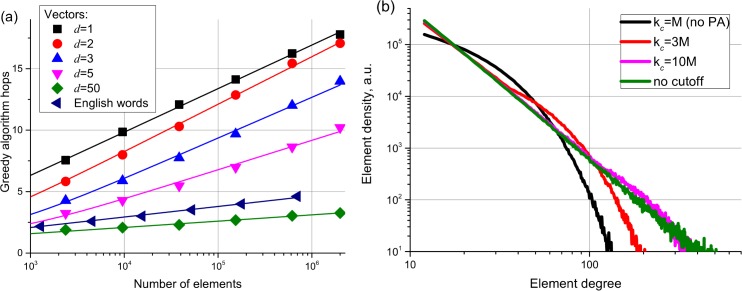

Fig 1.

(a) Average hop count during a greedy search for different dimensionality Euclidian data and English words database with edit distance, demonstrating the logarithmic scaling. (b) Degree distribution for the GH algorithm networks with PA for different degree cutoffs (kc).

The definition of navigability in [16] requires also that the greedy search success probability does not tend to zero in the limit of infinite network size. This restriction has led to a conclusion that the navigability should be expected only for the power law degree distribution networks with γ<2.5. Networks produced by a GH model are also navigable by the aforementioned definition at least at small dimensionality (d≤6), as tests indicate that the recall converges to a constant value (see Figure A in S1 File), thus demonstrating existence of a new class of navigable models.

Degree distribution and information extraction locality

A GH network has an exponential degree distribution (see in Fig 1(B)). The scale in the exponent is determined by the M parameter, similar analysis can be done as in [42, 43]. The exponential degree distribution is present in the real-life networks such as power grids, air traffic networks, and collaboration networks of company directors[48]. Studies of the functional brain network degree distribution yielded ambiguous results: some investigations have shown exponential degree distribution, while others exhibited scale-free or truncated scale-free distribution[49].

Most of the studied real-life networks, however, have a power law degree distribution. The GH algorithm can be slightly modified by adding a preferential attachment (PA)[50] to produce a scale-free (power law) degree distribution (which makes it somewhat similar to the growing models in a hyperbolic plane[17]). To achieve that, the distances to the elements are normalized by k1/d during the network construction for uniform data in Euclidean space (see the Methods section for the details), leading to a power law degree distribution with γ close to 3. With the addition of a cutoff kc, the degree distribution transforms into a power law with an exponential cutoff (see Fig 1(B)). As expected for scale-free networks[16], adding the preferential attachment does not suppress the network navigability (see Fig 2(B)).

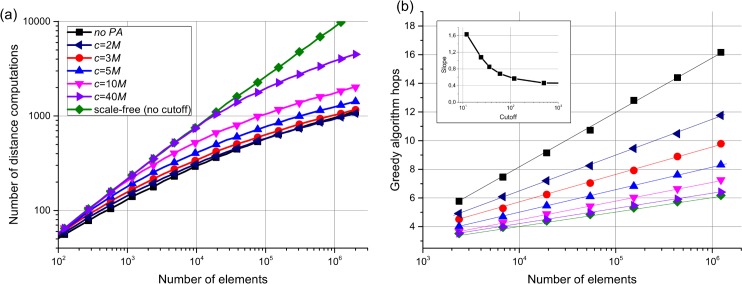

Fig 2. Comparison of the GH model to the GH model with PA.

(a) Number of distance computations per a greedy search for GH network with PA for different degree cutoffs (kc). (b) Average hop count during a greedy search for GH network with PA for different degree cutoffs. The inset shows the decay of greedy hop slope with an increase of the degree cutoff. Both plots are presented for Euclid data with d = 2, M = 12.

Exponential degree distribution is usually attributed to limited capacity of a node or to absence of PA mechanisms. However, there is another critical distinction between scale-free and exponential degree distributions in terms of locality of information extraction which arises in virtual computer networks having practically no limit on node capacity. We define the locality as the number of distance computations during a greedy search, which also corresponds to algorithmic complexity of a search algorithm. Our simulations show that, for the scale-free networks (both in GH networks with PA and scale-free networks studied in [16]), the number of distance computations has a power law scaling with the number of network elements, in contrast to GH networks without PA and Kleinberg’s networks which have a polylogarithmic scaling[28] (see Fig 2; comparison with scale-free networks from [16] is presented in S1 File). This happens because the greedy algorithm prefers nodes with the highest degrees (which have monopoly on long range links)[16] while the maximum degree in scale-free network has power law scaling N1/(γ-1) with the number of elements[51] leading to N1/(γ-1) search complexity. The authors in [15] argued that the best choice for optimal navigation is when γ is close to 2; this, however, leads to almost linear scaling of greedy search distance computations number. Such scaling makes using scale-free networks impractical for greedy routing in large-scale networks where high locality of information extraction matters, which is the case of K-NN algorithms and is likely to be the case for the brain networks.

The importance of employing the locality of information extraction as a measure for network navigation studies can be underpinned by considering a star graph (a graph where every node is connected to a single central hub) with a modified greedy search algorithm that uses effective distance Δ⋅eak (where k is degree of a candidate node, a is a parameter, Δ is initial metric distance between the candidate and the target). The parameter a can be always set large enough, so that every greedy path will go through the hub reaching the target in two steps regardless of the network size. This network is ideal in all navigation measures defined in [15, 16], having short path, ideal success ratio and low average degree. However, to find these paths the greedy search algorithm has to utilize the hub’s global view of the network and to compare distances to every network node thus having a bad linear algorithmic complexity scaling.

At the same time, the scale-free networks offer less greedy algorithm hops compared to the base GH algorithm which is beneficial. A power law degree distribution with an exponential cutoff seems to be a good tradeoff between low number of hops and low complexity of a search. Slightly increasing the cutoff kc above M in GH algorithm with PA sharply decreases the number of greedy algorithm hops (see Fig 2(B)), while having almost no impact on the number of distance computations (Fig 2(A)). This finding can be used for constructing artificial networks optimized for best navigability both in terms of complexity and number of hops.

Link length distribution and optimal wiring

For uniformly distributed bounded d-dimensional Euclidian data, the average distance between the nearest neighbors scales as r(N) ∼ N−1/d (1) with the total number of elements N in the network. It means that every characteristic scale of link length in a final network can be put in correspondence to some specific time of construction. That allows deducing the link length distribution through differencing the equation (1). By doing this we get a power law link length density dN ∼ r−αdr with α = d + 1 exponent (confirmed by the simulations). It was recently shown[7, 8] that α = d + 1 is the optimal value for the shortest path length and greedy navigation path in case of constraint on total length of all connections in the network. Thus, GH networks are naturally close to optimal in terms of the wiring cost.

Power law link length distributions with α = d + 1 are encountered in real-life networks like airport connections networks[52] and functional brain networks[53, 54]. It was speculated in refs. [7, 8] that such behavior arises due to global optimization schemes, while GH networks provide a much more simple and natural explanation for the exponent value.

Self-similarity and hierarchical modular structure

Construction of a GH network is an iterative process: at each step we have as an input a navigable small world network and we insert new elements and links preserving its properties. A part of a uniform data GH network covered by a ball is also a navigable small world with few outer connections. Thus, the GH networks have self-similar structure. Analysis of self-similarity identical to [31] is presented in in Figure B in S1 File, demonstrating a self-similar structure of network’s clustering coefficient.

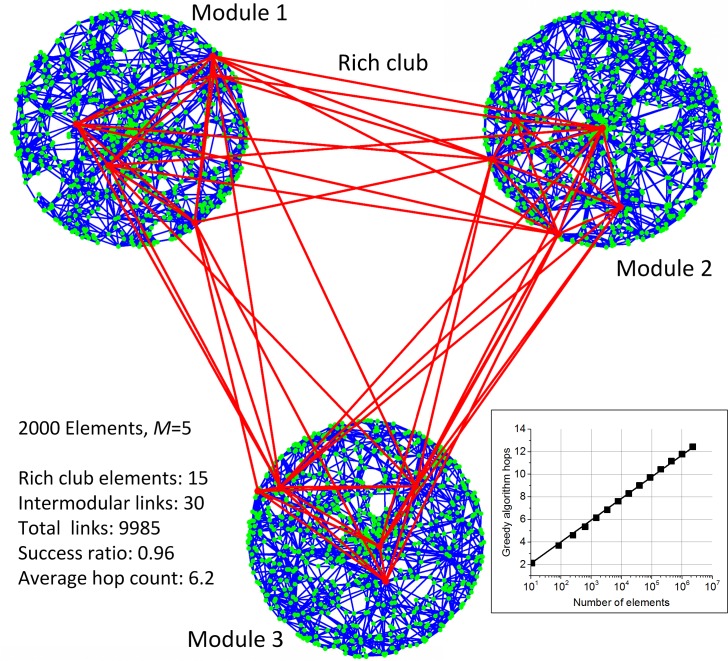

The hierarchical self-similarity property is found in many real-life networks[31, 55, 56]. Studies have shown that the functional brain networks form a hierarchically modular community structure[53, 57] consisting of highly interconnected specialized modules, only loosely connected to each other. This may seem to contradict the small world feature which is usually modeled by random networks[53]. GH networks can easily model both small world navigation and modular structure simultaneously by introducing clusters. In this case, coordinates of the cluster centers may correspond to different neuron specialties in a generalized underlying metric space. A 2D GH network for clustered data is presented in Fig 3 demonstrating highly modular and at the same time navigable network structure containing only 30 intermodular links (0.03% of the total number) between the first elements in the network (which form a rich club). By using a simple modification of the greedy algorithm with preference of high degree nodes (see the Methods section) short paths between different module elements can be efficiently found using only local information.

Fig 3. 2D network constructed by the GH algorithm with M = 5 for clustered d = 2 Euclidian data.

The inset shows scaling of the modified greedy algorithm average hop count.

Rich club and greedy hops upper bounds

Simulations show that probability of a connection between the GH network elements grows exponentially with the element degree, thus demonstrating the presence of a rich club (see Figure D in S1 File). Due to incremental construction and self-similarity of the GH networks every preceding instance of a GH network acts as a rich club to any subsequent instance. To achieve a well-defined rich club, the self-similarity symmetry has to be broken by introducing non-fractality in data, such as a fixed number of clusters in Fig 3.

Universally, the rich club is composed of the first elements inserted by the GH algorithm, which is also the case for the brain networks. Studies have shown that the rich club in human brain is formed before the 30th week of gestation with almost no changes of its inner connections until birth[58]. Moreover, the investigations of C. elegans worms neural network have shown that the rich club neurons are among the first neurons to be born[59, 60]. Thus, together with [40], the GH model offers a plausible explanation of how the rich clubs are formed in brain networks.

Due to presence of rich clubs in GH networks a general navigation analysis similar to the scale-free networks from [16] can be done. At the beginning of a greedy search the algorithm “zooms-out” preferring high degree nodes with a higher characteristic link radius until it reaches a node for which the characteristic radius of the connections is comparable with the distance to the target node. Next, a reverse “zoom-in” procedure takes place until the target node is reached, see [16] for details.

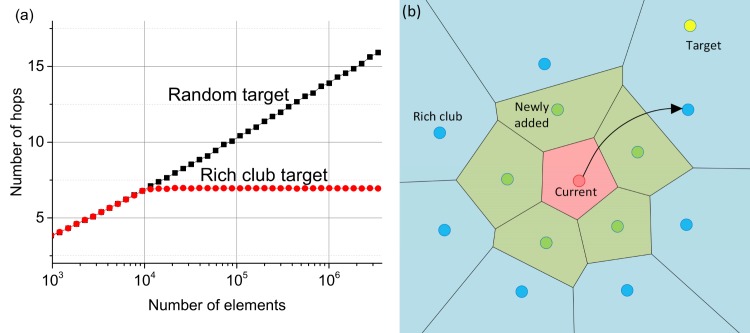

We offer a slightly different perspective. It can be shown that in GH networks the rich club is also navigable, meaning that a greedy search between two rich hub nodes is very likely to select only the rich club nodes at each step. This is illustrated by the simulations results in Fig 4(A) showing that the average hop count for the first 104 elements selected as start and targets nodes does not depend on the dataset size, i.e. the greedy search algorithm ignores newly added links. Fig 4(B) shows a schematic Voronoi partition of rich club element connections for a greedy algorithm step with another rich club element as a target. In the case of a good Delaunay graph approximation (high enough M) addition of new elements alters the Voronoi partitioning only locally as is shown in Fig 4(B), thus having no impact on the greedy search between the rich club elements. The latter can be proved for 1D vector spaces, since in 1D the Voronoi partitions of the new elements are completely bounded by a single rich club element further from the base element in the same direction. It is not, however, straightforward how to make a rigid proof for higher dimensionality/more general spaces.

Fig 4.

(a) Average number of greedy algorithm hops scaling for the first 104 elements given as start and target nodes (red) and all elements used for search (black). The first 104 elements form a rich club that ignores more newly added elements. The results are presented for Euclidian data with d = 2, M = 20. (b) Cartoon of Voronoi partition for connections of a single greedy search step. Newly added elements (green) cause only local changes in Voronoi partitioning, so if the target element lies outside the current element connections, it falls into Voronoi partition of rich club’s elements (blue), thus ignoring local connections at greedy search.

Self-similarity and navigability of the rich club in GH networks play a crucial role in the navigation process. Suppose we have a GH network which has a perfect Delaunay graph as subset at every step, rich club navigation feature and has an average greedy algorithm hop count H. We can show that by doubling the number of the elements the average greedy path increases no more than by adding a constant, thus having a 2log2(N) upper bound of the greedy hop number.

If the start and target nodes are from the rich club (first half of the network), the average hop count does not increase as it has been shown previously. If the greedy algorithm starts a search for a distant target from a newly added element it has at least 1/2 probability that the next selected element is from the rich club (since a half of the elements is from the rich club, assuming no correlation with the connection distances), thus reaching the rich club on average in two steps. Next, the greedy algorithm needs on average no more than H steps to get to a rich club element for which the Voronoi region has the query. For a case when the destination node is from the rich club the average number of hops is thus H+2. In the opposite case the search ends on average in two additional steps, because the probability that next node is from the rich club is at least 1/2 and since we have already visited the rich club node that is closest to the query, this cannot happen. Thus the average number of hops in this case is H+4. By concerning the last case (the target–newly added element and start element is from the rich club) we get an average H+2 hops. Thus the upper hop bound scales as 2log2(N) proving GH networks have a logarithmic scaling of the greedy search hops. This consideration also predicts the scaling log2(N) of the shortest path length, and thus the stretch (the ratio between the greedy path length and the short path length) should be equal to 2.

Greedy paths inferred from the simulations (Fig 1(A)) are significantly shorter than the presented theoretical upper bound (predicted upper bound is about 40 for one million elements). The predicted stretch, however, is in agreement with the evaluated one for the case of large networks (see Figure E(a) in S1 File). The discrepancy in the number of greedy hops can be explained in this setting by significantly less shortest path length due to exclusion from the consideration of the links between non-consecutive generations of elements. The case when the link overlap between the non-consecutive generations is small can be modelled in 1D by connecting to the exact Delaunay neighbors, this leads to producing the shortest paths very close to the predicted upper bound (see Figure E(b) in S1 File). However, even in this case the number of greedy algorithm hops is still significantly smaller than the upper bound. The latter can be explained due to the fact that in this setting the probability of selecting a correct link on each step of the greedy search is significantly higher than 1/2 due to the strong correlation between the length of the links and the element generation (i.e. the most distant link is very likely to get you straight to the rich club, this is violated for large M values). A more detailed consideration has to be made to get the exact bound of the greedy algorithm paths which takes into account the number of neighbors M as well as higher dimensionality and preferential attachment effects.

Discussion

Using simulations and theoretical studies we have demonstrated that two ingredients that are present in the majority of networks, namely network growth and local homophily, are sufficient to produce a navigable small world network, giving a simple and persuasive answer how the navigation feature appears in real-life networks. In contrast to the generally used models, a simple local GH model without central regulation by using only local information produces hierarchical self-similar optimally wired navigable networks, which offers a simple explanation why these features are found in real-life networks, without a need of employing hyperbolicity or other complex schemes. Self-similarity and rich clubs navigation of the GH networks lead to emergence of logarithmic scaling of the greedy algorithm hops in GH networks.

By adding PA with saturation the degree distribution can be tuned from exponential to scale-free with or without exponential cutoff. We have shown that in case of pure scale-free degree distribution (as well as for the scale-free networks studied in [16] and hyperbolic networks), the true logarithmic local routing cannot be achieved due to a power law scaling of information extraction locality (algorithmic complexity of a search). Truncated scale-free degree distribution offers a reasonable tradeoff between the path length and the algorithmic complexity and can be used for practical applications.

Thus, every network that has both growth and homophily is a potential navigable small-world network. This is an important finding for real-life networks, as real life is full of examples of growth and homophily shaping the network. Scientific papers form a growing navigable citation network (navigability of which is actively utilized by researchers) just by citing existing related works. Big city passenger airports were among the first to open and later became big network hubs which play an important role in spatial navigation in airport networks[16]. Neural brain networks are formed utilizing both growth and homophily, producing hierarchical structures with rich clubs consisting of early born neurons. The proposed GH model offers a conclusive explanation of navigability in these networks. Still, the evolution of some networks including social structures incorporate other factors, such as node moving and departure. For these networks, a sudden departure of a major node (say, a key manager in a company or rich club neurons[61]) can seriously hurt the performance. However, some of these networks can be resilient to the above-mentioned processes, thus preserving navigation. For example, there are quite a few top-level deputies in big companies, and when a manager quits, he/she has to pass his/her contacts to a newcomer.

There is evidence that in real-life networks such as an airport and brain networks, the GH model is not suppressed by other mechanisms. In addition to the aforementioned growth and homophily, several other high level features of the GH model are observed in brain networks, such as low diameter, high clustering, presence of navigation skeleton, hierarchical self-similar modular structure, power law link length distribution with exact d+1 exponent, and emergence of a rich club from the first elements in the network. This indicates that the model plays a significant role in the formation of brain networks and that they are likely to be navigable, supporting the earlier suggestions[14, 38].

The proposed GH model can be used as a guide for building artificial optimally wired navigable structures using only local information.

Methods

Construction

The GH networks were constructed through iteratively inserting the elements into the network in random order by adding bidirectional links to the M closest elements. To find the connections we applied approximate K-NN graph algorithms[28] (C++ implementations of the K-NN algorithms are available in the Non-Metric Space Library[62], https://github.com/searchivarius/nmslib/) which utilized the navigation in the constructed graph. To obtain approximate M nearest neighbors, a dynamic list of M closest of the found elements (initially filled with a random enter point node) was kept during the search. The list was updated at each step by evaluating the neighborhood of the closest previously non-evaluated element in the list until the neighborhood of every element from the list was evaluated. For M = 1, this method is equivalent to a basic greedy search. The best M results from several trials were used as the approximate closest elements. The number of trials was adjusted so that the recall (the ratio between the found and the true M nearest neighbors) was higher than 0.95, producing results almost indistinguishable from what one get from the exact search. Pseudocode of the insertion procedure is presented in the Supporting Information. Changing the seed of the algorithm random data generators, connecting to the M exact neighbors and/or construction in many parallel threads had a very slight effect on the evaluated network metrics.

The degree normalized distance is computed by dividing the standard L2 distance by a power function of a network element degree, thus making high degree nodes effectively “closer” to the target than they are in the plain Euclid space. The power in the degree function is set to 1/d, where d is the dimensionality of the vector space. The preference to high degrees is saturated by a constant kc: if the element degree is higher than kc, than the L2 distance is just divided by the power function of the constant kc. Note that setting kc less than M leads to complete absence of the preference. Pseudocode of the degree normalized distance function is presented in the Supporting Information.

The mentioned degree normalized distance was used to find the approximate connections for the case of GH with PA. Instead of just connecting a new element to approximate L2 distance closest elements in GH networks, in case of GH with PA a new element is rather connected to the elements which minimize the degree normalized distance function.

Datasets

Random Euclidian data with coordinates distributed uniformly in [0,1] range with L2 distance was used to model the vectors. For testing the Damerau–Levenshtein distance, about 700k English words from the Scowl Debain database were used as the dataset. In order to unambiguously select the next node during a greedy search, a small random value was added to the Damerau–Levenshtein distance. For Fig 1(A) parameter M was set to be 9, 12, 20, 25, 150 and 40 for Euclidian vectors with d = 1, 2, 3, 5, 50 and English word dataset, respectively. The success ratio for the vectors was higher than 0.999 for d≤5, higher than 0.92 for d = 50 and English words data. On the average, only 760 distance computations were needed to find the path between two arbitrary words in 700k database and only about 1280 distance computations were required to find the path for 20 million d = 5 Euclidian vectors.

Network metrics

To evaluate the average number of hops, we used up to 104 randomly selected nodes as start and target elements. The greedy algorithm selects at each step a neighbor that is closest to the target as an input for the next step, until it reaches the element which is closer to the target than its neighbors. The success ratio is the ratio of the number of successful searches to the total number of searches. The search is considered failed if the result is not the target element.

The information extraction locality metric was evaluated by counting the average number of distance calculations during a single greedy search.

To get a high recall (>0.95) for the tests with clustered 2D data (Fig 3) we used a modification of the greedy search algorithm[44] that minimized the degree normalized distance, which was also used for construction of GH networks with PA.

Supporting Information

Supplementary part of the paper that includes: comparison of the basic GH model to the scale-free networks from [16] (Figure A); plots of self-similarity in clustering coefficient distribution (Figure B); plots of average nearest neighbor degree (Figure C); plots of rich club coefficient (Figure D); pseudocode of the construction algorithm; pseudocode of the degree normalized distance algorithm; plots of stretch and upper limits of the greedy path in 1D (Figure E).

(PDF)

Acknowledgments

We are grateful to I. Malkova, L. Boytsov, D. Yashunin, N. Krivatkina and V. Nekorkin for the discussions.

The reported study was funded by RFBR, according to the research project No. 16-31-60104 mol_а_dk and supported by Government of Russian Federation (agreement #14.Z50.31.0033 with the Institute of Applied Physics of RAS). Alexander Ponomarenko is grateful for the support from the Laboratory of Algorithms and Technologies for Network Analysis, National Research University Higher School of Economics.

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

The reported study was funded by Russian Foundation for Basic Research, according to research project No. 16-31-60104 mol_а_dk (http://www.rfbr.ru/rffi/eng), and supported by the Government of Russian Federation, agreement #14.Z50.31.0033, with the Institute of Applied Physics of RAS (http://минобрнауки.рф/) (receiver: YAM). AP is grateful for the support from the Laboratory of Algorithms and Technologies for Network Analysis, National Research University Higher School of Economics (https://nnov.hse.ru/en/latna/). The grants have sponsored the study. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Arbib MA. The handbook of brain theory and neural networks: MIT press; 2003. [Google Scholar]

- 2.Travers J, Milgram S. An experimental study of the small world problem. Sociometry. 1969:425–43. [Google Scholar]

- 3.Huang W, Chen S, Wang W. Navigation in spatial networks: A survey. Physica A: Statistical Mechanics and its Applications. 2014;393:132–54. [Google Scholar]

- 4.Kleinberg JM. Navigation in a small world. Nature. 2000;406(6798):845–. [DOI] [PubMed] [Google Scholar]

- 5.Kleinberg J, editor The small-world phenomenon: An algorithmic perspective. Proceedings of the thirty-second annual ACM symposium on Theory of computing; 2000 2000: ACM.

- 6.Hu Y, Wang Y, Li D, Havlin S, Di Z. Possible origin of efficient navigation in small worlds. Physical Review Letters. 2011;106(10):108701 [DOI] [PubMed] [Google Scholar]

- 7.Li G, Reis S, Moreira A, Havlin S, Stanley H, Andrade J Jr. Optimal transport exponent in spatially embedded networks. Physical Review E. 2013;87(4):042810. [DOI] [PubMed] [Google Scholar]

- 8.Li G, Reis S, Moreira A, Havlin S, Stanley H, Andrade J Jr. Towards design principles for optimal transport networks. Physical Review Letters. 2010;104(1):018701 [DOI] [PubMed] [Google Scholar]

- 9.Chaintreau A, Fraigniaud P, Lebhar E. Networks become navigable as nodes move and forget Automata, Languages and Programming: Springer; 2008. p. 133–44. [Google Scholar]

- 10.Clarke I, Sandberg O, Wiley B, Hong TW, editors. Freenet: A distributed anonymous information storage and retrieval system Designing Privacy Enhancing Technologies; 2001: Springer. [Google Scholar]

- 11.Sandberg O, Clarke I. The evolution of navigable small-world networks. arXiv preprint cs/0607025. 2006.

- 12.Zhuo Z, Cai S-M, Fu Z-Q, Wang W-X. Self-organized emergence of navigability on small-world networks. New Journal of Physics. 2011;13(5):053030. [Google Scholar]

- 13.Yang Z, Chen W, editors. A Game Theoretic Model for the Formation of Navigable Small-World Networks. Proceedings of the 24th International Conference on World Wide Web; 2015: International World Wide Web Conferences Steering Committee.

- 14.Gulyás A, Bíró JJ, Kőrösi A, Rétvári G, Krioukov D. Navigable networks as Nash equilibria of navigation games. Nature Communications. 2015;6:7651 10.1038/ncomms8651 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Krioukov D, Papadopoulos F, Kitsak M, Vahdat A, Boguná M. Hyperbolic geometry of complex networks. Physical Review E. 2010;82(3):036106. [DOI] [PubMed] [Google Scholar]

- 16.Boguna M, Krioukov D, Claffy KC. Navigability of complex networks. Nature Physics. 2009;5(1):74–80. [Google Scholar]

- 17.Papadopoulos F, Kitsak M, Serrano MÁ, Boguñá M, Krioukov D. Popularity versus similarity in growing networks. Nature. 2012;489(7417):537–40. 10.1038/nature11459 [DOI] [PubMed] [Google Scholar]

- 18.McPherson M, Smith-Lovin L, Cook JM. Birds of a feather: Homophily in social networks. Annual Review of Sociology. 2001:415–44. [Google Scholar]

- 19.Chávez E, Navarro G, Baeza-Yates R, Marroquín JL. Searching in metric spaces. ACM computing surveys (CSUR). 2001;33(3):273–321. [Google Scholar]

- 20.Arya S, Mount DM, editors. Approximate Nearest Neighbor Queries in Fixed Dimensions. SODA; 1993. [Google Scholar]

- 21.Sebastian TB, Kimia BB, editors. Metric-based shape retrieval in large databases. Pattern Recognition, 2002 Proceedings 16th International Conference on; 2002: IEEE.

- 22.Hajebi K, Abbasi-Yadkori Y, Shahbazi H, Zhang H, editors. Fast approximate nearest-neighbor search with k-nearest neighbor graph. IJCAI Proceedings-International Joint Conference on Artificial Intelligence; 2011.

- 23.Beaumont O, Kermarrec A-M, Marchal L, Rivière É, editors. VoroNet: A scalable object network based on Voronoi tessellations. Parallel and Distributed Processing Symposium, 2007 IPDPS 2007 IEEE International; 2007: IEEE.

- 24.Beaumont O, Kermarrec A-M, Rivière É. Peer to peer multidimensional overlays: Approximating complex structures Principles of Distributed Systems: Springer; 2007. p. 315–28. [Google Scholar]

- 25.Lifshits Y, Zhang S, editors. Combinatorial algorithms for nearest neighbors, near-duplicates and small-world design. Proceedings of the Twentieth Annual ACM-SIAM Symposium on Discrete Algorithms; 2009: Society for Industrial and Applied Mathematics.

- 26.Ponomarenko A, Malkov Y, Logvinov A, Krylov V, editors. Approximate Nearest Neighbor Search Small World Approach. International Conference on Information and Communication Technologies & Applications; 2011; Orlando, Florida, USA.

- 27.Malkov Y, Ponomarenko A, Logvinov A, Krylov V. Scalable distributed algorithm for approximate nearest neighbor search problem in high dimensional general metric spaces Similarity Search and Applications: Springer; Berlin Heidelberg; 2012. p. 132–47. [Google Scholar]

- 28.Malkov Y, Ponomarenko A, Logvinov A, Krylov V. Approximate nearest neighbor algorithm based on navigable small world graphs. Information Systems. 2014;45:61–8. [Google Scholar]

- 29.Ponomarenko A, Avrelin N, Naidan B, Boytsov L. Comparative Analysis of Data Structures for Approximate Nearest Neighbor Search. In Proceedings of The Third International Conference on Data Analytics. 2014.

- 30.Naidan B, Boytsov L, Nyberg E. Permutation search methods are efficient, yet faster search is possible. VLDB Procedings. 2015;8(12):1618–29. [Google Scholar]

- 31.Serrano MA, Krioukov D, Boguná M. Self-similarity of complex networks and hidden metric spaces. Physical Review Letters. 2008;100(7):078701 [DOI] [PubMed] [Google Scholar]

- 32.Wang B, Zhuo Z, Cai S-M, Fu Z-Q. Effects of community structure on navigation. Physica A: Statistical Mechanics and its Applications. 2013;392(8):1902–8. [Google Scholar]

- 33.Bullmore E, Sporns O. Complex brain networks: graph theoretical analysis of structural and functional systems. Nature Reviews Neuroscience. 2009;10(3):186–98. 10.1038/nrn2575 [DOI] [PubMed] [Google Scholar]

- 34.Vértes PE, Alexander-Bloch A, Bullmore ET. Generative models of rich clubs in Hebbian neuronal networks and large-scale human brain networks. Philosophical Transactions of the Royal Society of London B: Biological Sciences. 2014;369(1653):20130531 10.1098/rstb.2013.0531 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Betzel RF, Avena-Koenigsberger A, Goñi J, He Y, de Reus MA, Griffa A, et al. Generative models of the human connectome. NeuroImage. 2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Vértes PE, Alexander-Bloch AF, Gogtay N, Giedd JN, Rapoport JL, Bullmore ET. Simple models of human brain functional networks. Proceedings of the National Academy of Sciences. 2012;109(15):5868–73. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Colizza V, Flammini A, Serrano MA, Vespignani A. Detecting rich-club ordering in complex networks. Nature Physics. 2006;2(2):110–5. [Google Scholar]

- 38.van den Heuvel MP, Kahn RS, Goñi J, Sporns O. High-cost, high-capacity backbone for global brain communication. Proceedings of the National Academy of Sciences. 2012;109(28):11372–7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Bullmore E, Sporns O. The economy of brain network organization. Nature Reviews Neuroscience. 2012;13(5):336–49. 10.1038/nrn3214 [DOI] [PubMed] [Google Scholar]

- 40.Lim S, Kaiser M. Developmental time windows for axon growth influence neuronal network topology. Biological cybernetics. 2015;109(2):275–86. 10.1007/s00422-014-0641-3 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Nicosia V, Vértes PE, Schafer WR, Latora V, Bullmore ET. Phase transition in the economically modeled growth of a cellular nervous system. Proceedings of the National Academy of Sciences. 2013;110(19):7880–5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Ozik J, Hunt BR, Ott E. Growing networks with geographical attachment preference: Emergence of small worlds. Physical Review E. 2004;69(2):026108. [DOI] [PubMed] [Google Scholar]

- 43.Zitin A, Gorowara A, Squires S, Herrera M, Antonsen TM, Girvan M, et al. Spatially embedded growing small-world networks. Scientific Reports. 2014;4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Thadakamalla H, Albert R, Kumara S. Search in spatial scale-free networks. New Journal of Physics. 2007;9(6):190. [Google Scholar]

- 45.Zuev K, Boguñá M, Bianconi G, Krioukov D. Emergence of Soft Communities from Geometric Preferential Attachment. Scientific Reports. 2015;5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Watts DJ, Strogatz SH. Collective dynamics of ‘small-world’networks. Nature. 1998;393(6684):440–2. [DOI] [PubMed] [Google Scholar]

- 47.Navarro G. Searching in metric spaces by spatial approximation. The VLDB Journal. 2002;11(1):28–46. [Google Scholar]

- 48.Newman M, Barabasi A-L, Watts DJ. The structure and dynamics of networks: Princeton University Press; 2006. [Google Scholar]

- 49.Qi S, Meesters S, Nicolay K, Romeny BMtH, Ossenblok P. The influence of construction methodology on structural brain network measures: A review. Journal of Neuroscience Methods. (0). 10.1016/j.jneumeth.2015.06.016 [DOI] [PubMed] [Google Scholar]

- 50.Barabási A-L, Albert R. Emergence of scaling in random networks. Science. 1999;286(5439):509–12. [DOI] [PubMed] [Google Scholar]

- 51.Boguná M, Pastor-Satorras R, Vespignani A. Cut-offs and finite size effects in scale-free networks. The European Physical Journal B-Condensed Matter and Complex Systems. 2004;38(2):205–9. [Google Scholar]

- 52.Bianconi G, Pin P, Marsili M. Assessing the relevance of node features for network structure. Proceedings of the National Academy of Sciences. 2009;106(28):11433–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Gallos LK, Makse HA, Sigman M. A small world of weak ties provides optimal global integration of self-similar modules in functional brain networks. Proceedings of the National Academy of Sciences. 2012;109(8):2825–30. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Eguiluz VM, Chialvo DR, Cecchi GA, Baliki M, Apkarian AV. Scale-free brain functional networks. Physical Review Letters. 2005;94(1):018102 [DOI] [PubMed] [Google Scholar]

- 55.Song C, Havlin S, Makse HA. Self-similarity of complex networks. Nature. 2005;433(7024):392–5. [DOI] [PubMed] [Google Scholar]

- 56.Colomer-de-Simón P, Serrano MÁ, Beiró MG, Alvarez-Hamelin JI, Boguñá M. Deciphering the global organization of clustering in real complex networks. Scientific Reports. 2013;3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Meunier D, Lambiotte R, Bullmore ET. Modular and hierarchically modular organization of brain networks. Frontiers in neuroscience. 2010;4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Ball G, Aljabar P, Zebari S, Tusor N, Arichi T, Merchant N, et al. Rich-club organization of the newborn human brain. Proceedings of the National Academy of Sciences. 2014;111(20):7456–61. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Towlson EK, Vértes PE, Ahnert SE, Schafer WR, Bullmore ET. The rich club of the C. elegans neuronal connectome. The Journal of Neuroscience. 2013;33(15):6380–7. 10.1523/JNEUROSCI.3784-12.2013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Varier S, Kaiser M. Neural development features: Spatio-temporal development of the Caenorhabditis elegans neuronal network. PLOS Computational Biology. 2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Rubinov M. Schizophrenia and abnormal brain network hubs. Dialogues in Clinical Neuroscience. 2013;15(3):339 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Boytsov L, Naidan B. Engineering Efficient and Effective Non-metric Space Library Similarity Search and Applications: Springer; 2013. p. 280–93. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Supplementary part of the paper that includes: comparison of the basic GH model to the scale-free networks from [16] (Figure A); plots of self-similarity in clustering coefficient distribution (Figure B); plots of average nearest neighbor degree (Figure C); plots of rich club coefficient (Figure D); pseudocode of the construction algorithm; pseudocode of the degree normalized distance algorithm; plots of stretch and upper limits of the greedy path in 1D (Figure E).

(PDF)

Data Availability Statement

All relevant data are within the paper and its Supporting Information files.