Abstract

Vocabulary learning in a second language is enhanced if learners enrich the learning experience with self-performed iconic gestures. This learning strategy is called enactment. Here we explore how enacted words are functionally represented in the brain and which brain regions contribute to enhanced retention. After an enactment training lasting 4 days, participants performed a word recognition task in the functional Magnetic Resonance Imaging (fMRI) scanner. Data analysis suggests the participation of different and partially intertwined networks that are engaged in higher cognitive processes, i.e., enhanced attention and word recognition. In addition, an experience-related network seems to map word representation. Besides core language regions, this latter network includes sensory and motor cortices, the basal ganglia, and the cerebellum. On the basis of its complexity and the involvement of the motor system, this sensorimotor network might explain superior retention for enactment.

Keywords: second language, word learning, enactment, embodiment, brain

Introduction

In foreign language instruction, novel vocabulary is mainly taught by means of audio-visual strategies such as listening and comprehension activities (Graham et al., 2014). At home, learners are confronted with bilingual word lists, whose content decays fast in memory (Yamamoto, 2014). Memory research has demonstrated that self-performed gestures accompanying words and phrases during learning enhance vocabulary retention compared to reading and/or listening (Zimmer, 2001). The effect of gestures on verbal memory, known as the enactment effect (Engelkamp, 1980; Engelkamp and Krumnacker, 1980; Engelkamp and Zimmer, 1984) or the subject-performed task effect (Cohen, 1981), has proven to be robust. In the 1980s and 1990s, enactment of words and phrases was successfully tested in various populations, including children, young, and elderly people (Bäckman and Nilsson, 1985), subjects with cognitive and mental impairments (Mimura et al., 1998), and Alzheimer patients (Karlsson et al., 1989) by means of recognition and free and cued recall tests. In recent years, an increasing number of behavioral studies have documented the positive effect of enactment also in second language word learning in both the short and the long term (for a review, see Macedonia, 2014). More recently, the combined value of enactment and physical exercise has been investigated in elementary bilingual instruction (Mavilidi et al., 2015; Toumpaniari et al., 2015).

Over the decades, different theories have accounted for the enactment effect. Nearly 40 years ago, these theories based their evidence on behavioral experiments and were nourished by observation and a large portion of intuition. With the advent of neuroscience, these theories have gained additional empirical ground. They have been partially validated through combined experiments employing tools from both disciplines, i.e., behavioral psychology and brain imaging as we describe in the next sections.

The first theory asserts that a gesture performed by learners during the acquisition of a novel word leaves a motor trace in memory (Engelkamp and Krumnacker, 1980; Engelkamp and Zimmer, 1984, 1985; Nyberg et al., 2001). In neuroscience, a trace is an experience-related component in the functional network representing the word (Pulvermüller, 2002); neuroimaging studies have proven the existence of the trace. During acoustic and audio-visual recognition of words learned through enactment, motor cortices become active (Nyberg et al., 2001; Masumoto et al., 2006; Eschen et al., 2007; Macedonia et al., 2011; Mayer et al., 2015). More generally, this theory can be embedded in the framework of embodied cognition (Barsalou, 2008), where a concept entails sensory, motor and/or emotive components pertaining to the corresponding sensory, motor and/or affective systems engaged during experience (Jirak et al., 2010). The trace can be detected by means of brain imaging that locates brain activity during word recognition/retrieval. This activity is due to simulation processes that reactivate neural ensembles originally involved and interconnected during the experience (Dijkstra and Post, 2015).

Another theory attributes the enactment effect to mental imagery. Saltz and Donnenwerthnolan (1981) suggested that performing a gesture to a word triggers the mental image associated with the word. Thereafter the double representation (verbal and visual) enhances the word's retention. This view is connected to Paivio's dual-coding theory (Paivio, 1969, 1971), which maintains that an image paired to a word has an impact on the word's retention because the processing of both word and image engages different channels and exploits both potentials. Imagery related to L2 word learning has also been tested in a brain imaging experiment: Macedonia et al. (2011) had subjects learn L2 words with two sets of gestures, iconic and semantically unrelated. Audiovisual presentation of words learned with semantically unrelated gestures elicited activity in a network engaged in cognitive control. These results were interpreted as detection of the mismatch between the mental image a person has of a word and the gesture used during training. In other studies, mismatches between L1 words and gestures were seen as activating a network denoting incongruence (Holle and Gunter, 2007; Holle et al., 2008). Altogether, in recent decades a growing body of evidence has demonstrated that language and gesture represent two aspects of the same communicative system (Goldin-Meadow, 1999; Bernardis and Gentilucci, 2006; Kelly et al., 2010) and that they share neural substrates (for a review, see Andric and Small, 2012).

In a few behavioral studies, the enactment effect has also been explained in terms of complexity of word representation (Knopf, 1992; Kormi-Nouri, 1995; Macedonia, 2003; Macedonia and Knösche, 2011). In these studies, the term complexity was used in a descriptive way: The authors asserted that a written word enriched by a gesture is represented in memory in a more complex way because additional perceptive modalities are engaged. Neuroscience has demonstrated that the brain codes, represents and stores information connected to a word on the basis of the sensory input provided (Pulvermüller, 2001, 2005; Pulvermüller and Fadiga, 2010). Hence gestures make the word's representation richer and more complex. In traditional L2 instruction, learning a written word or listening to it leads to a representation that lacks the multiple sensorimotor experiences associated with words in L1. Gestures as a learning strategy add sensorimotor and proprioceptive information to the word in L2. Thereby the word's representation becomes more complex and elaborate.

A further explanation for the enactment effect focuses on enhanced attention. Supporters of this theory assert that learners performing a gesture connected to a word's semantics are more attentive than those who only read verbal information or hear the word (Backman et al., 1993; Knudsen, 2007; Muzzio et al., 2009a,b; Pereira et al., 2012). Attention is a basic component of retention as it induces representational stability in the hippocampus (Muzzio et al., 2009a; Aly and Turk-Browne, 2016).

The superior retrievability of L2 words learned through enactment has also been accounted for in terms of depth of encoding (Quinn-Allen, 1995; Macedonia, 2003; Tellier, 2008; Kelly et al., 2009; Macedonia et al., 2011; Krönke et al., 2013). The concept of deep and shallow encoding goes back to the “Level of Processing Framework” (LOP) by Craik and Tulving (1975). In LOP, sensory processing (hearing a word) is shallow information encoding that leads to poor memory performance (Craik and Lockhart, 1972). By contrast, semantic processing by selecting semantic features of a word in a task is deep and leads to durable memorization (Hunt and Worthen, 2006). Furthermore, deep processing is achieved by integrating novel information with pre-existing knowledge and creating distinctiveness during encoding. Thus, deep processing could be accomplished through gestures. Performing a gesture means selecting arbitrary features related to a word that represent its semantics. Furthermore, gestures can integrate novel information, i.e., the word in L2, with pre-existing semantic knowledge about the word in L1. Although depth of processing has been taken as an explanation for successful memorization in many studies on enactment, the brain mechanisms associated with depth are not fully understood. In an early review article, Nyberg (2002) connected deep processing with brain activity in frontal and medial temporal brain regions. More recently, Galli (2014) reviewed functional magnetic resonance imaging (fMRI) studies on tasks with shallow vs. deep encoding and concluded that brain regions engaged in shallow encoding represent a subset of those involved in deep encoding. Galli further suggested that shallow and deep encoding might have varying network topographies depending on the kind of stimulus processed and the specificity of the encoding tasks.

While early enactment research attributed the effect to only one of the above reasons, recent research indicates that the different accounts mirror different aspects of enacting verbal information. A gesture accompanying a novel word creates a motor trace, triggers a mental image, encodes more deeply, and obviously engages more attention than only reading the word. All these processes are performed in networks interacting in brain topography and time.

Another theory on enactment, the system-oriented approach pursued by Engelkamp (2001), has not received much attention in the scientific discourse. Following the line of research on memory subsystems (Engelkamp and Zimmer, 1994), Engelkamp advances the hypothesis that enactment, because of the “physical properties of the ongoing stimulus,” might engage “more than one memory system.” More explicitly, encoding a written word as such (a character string) involves explicit, i.e., declarative memory. However, encoding a gesture, an action, stores the information related to the word in procedural memory. Accordingly, because enactment of verbal information engages “systems that are obligatorily activated dependently on the stimulus modality,” it combines both declarative and procedural memory during learning. Engelkamp observes that “there are systems that are not automatically activated given a specific stimulus, but that can be strategically activated, for instance, when a specific task is given.” In other words, the procedural system is not automatically engaged when we learn words by reading them or listening to them. However, the procedural system can be strategically involved by accompanying the words with gestures. Gestures therefore lead to memory enhancement because, by the nature of the stimulus, they engage procedural memory in word storage. Daprati et al. (2005) also address the possible involvement of procedural memory for words encoded by enactment. Their study was conducted on healthy individuals and on schizophrenic patients. The latter show deficits in awareness states, but their procedural memory is intact (Danion et al., 2001). Healthy subjects take advantage of the enactment effect but patients suffering from schizophrenia do not. This result does not confirm the engagement of procedural memory in learning through enactment; however, schizophrenia has been reported to be a motor awareness disturbance (Frith et al., 2000) with deficits in monitoring of self-generated actions (Frith, 1987). This might explain the failure of enactment in this specific population.

Despite the progress in enactment research, we still lack a complete picture of how novel words learned with iconic gestures are functionally mapped in the brain. The present study aims to show the different experience-related components of the word network. To this end, we use a section of an fMRI dataset originally acquired to investigate differences in retention between words learned with iconic gestures and words learned with semantically unrelated gestures (Macedonia et al., 2011). At that time, it was not clear which component was crucial to retention: the motor component or iconicity. We found that words accompanied by iconic gestures were better retained than words learned with semantically unrelated gestures and that this correlated with stronger activity in the motor cortices. Furthermore, words learned with semantically unrelated gestures elicited a network for cognitive control, possibly denoting a mismatch between an internal image of the word and the gesture presented while learning.

In the present study, we extract from the dataset those events that are related to the recognition of words learned with iconic gestures. By analyzing the BOLD response elicited during recognition of the L2 words, we seek to localize networks involved in learning. We hypothesize that these networks include the sensorimotor modalities engaged during the process. Further, we follow Engelkamp's system-oriented approach and pose the question of whether other brain structures related to procedural memory—besides motor cortices, as demonstrated in previous studies—may be involved in a word's representation. If so, this could provide evidence for the engagement of procedural memory in word learning through enactment.

Methods

Participants, behavioral training procedure, and results

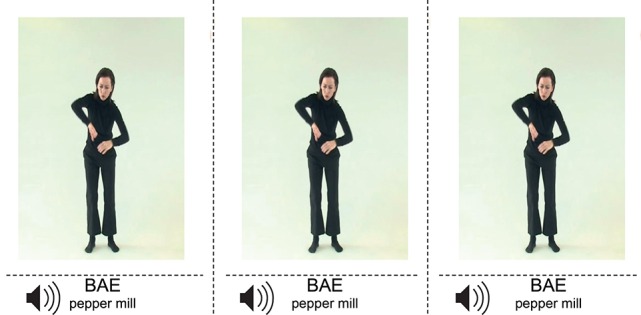

Eighteen participants (mean age 23.44, M = 25, SD = 1.38, 10 females, 8 males) memorized 92 words of Vimmi, an artificial corpus created in order to avoid associations to languages known to the subjects and conforming to Italian phonotactic rules (Macedonia et al., 2011). During the training, which lasted 4 days with 2 h daily, the novel words were accompanied either by 46 iconic gestures (McNeill, 1992) or by 46 semantically unrelated gestures such as stretching one's arms. Participants watched videos of an actress performing the gestures and enunciating the novel words (Figure 1). Simultaneously, the written word in Vimmi and in German appeared on a screen. Thereafter, participants were cued to repeat the word in Vimmi and to perform the corresponding gesture. Participants were randomly divided into two subgroups that trained both sets of words and gestures in a counterbalanced way. The 92 words were subdivided into blocks of 6 + 6 + 6 + 5 items. Within each block, the order of the vocabulary items was randomized, and every item was presented 13 times daily. From the second until the fifth experiment day, memory performance was assessed through cued translation tests from German into Vimmi and vice versa. Starting from day 2, before the training, participants were given a randomized list of the 92 trained words to be translated into the other language (duration 7.5 min for every list). Additionally, the same test was administered after ~60 days.
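The block structure and the counterbalancing described above can be illustrated with a minimal Python sketch. It is not the original training software. The cycling of the 6 + 6 + 6 + 5 pattern over all 92 words, the subgroup labels `A` and `B`, the placeholder word labels, and the rule that maps word sets to gesture types are all assumptions made for illustration only.

```python
import random

N_WORDS = 92                   # stated in the text
N_PARTICIPANTS = 18            # stated in the text
BLOCK_PATTERN = (6, 6, 6, 5)   # block sizes stated in the text (sum = 23)


def split_into_blocks(items, pattern=BLOCK_PATTERN):
    """Cut items into consecutive blocks, cycling through the size pattern.

    Cycling the pattern over all 92 words is an assumption.
    """
    blocks, start, i = [], 0, 0
    while start < len(items):
        size = pattern[i % len(pattern)]
        blocks.append(items[start:start + size])
        start += size
        i += 1
    return blocks


def counterbalance(participants, rng):
    """Randomly split participants into two equally sized subgroups."""
    shuffled = participants[:]
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def gesture_type(word_index, subgroup):
    """Hypothetical counterbalancing rule.

    Word set 1 (first 46 words) is trained with iconic gestures in
    subgroup "A" and with unrelated gestures in subgroup "B";
    word set 2 is treated the other way round.
    """
    in_set_1 = word_index < N_WORDS // 2
    return "iconic" if in_set_1 == (subgroup == "A") else "unrelated"


words = [f"word_{i:02d}" for i in range(N_WORDS)]  # placeholder labels
blocks = split_into_blocks(words)
group_a, group_b = counterbalance(list(range(N_PARTICIPANTS)), random.Random(0))
```

Under these assumptions, every word is trained with an iconic gesture by one subgroup and with a semantically unrelated gesture by the other, so that item effects do not confound the comparison between gesture types.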

Figure 1.

Screen shot of an iconic gesture from the video used during training. It represents the Vimmi word bae (Engl. pepper mill). During the training, participants were cued to perform the gesture as they said the word after reading and hearing it.

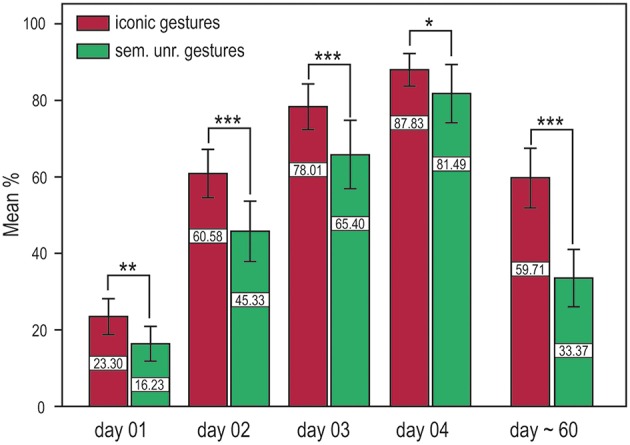

We performed a repeated measures ANOVA with the factors training (iconic and semantically unrelated gestures) and time (DAY 01, DAY 02, DAY 03, DAY 04). Significantly better results in word retention were achieved with iconic gestures in both the short and long term. For both translation directions, memory performance was significantly better for words learned with iconic gestures: for German into Vimmi, the effect of training was F(1, 32) = 22.86, p < 0.001, and for Vimmi into German, F(1, 32) = 15.20, p < 0.001. Additionally, around 60 days after training, memory performance was tested again by means of a paired free recall test. Participants were first given an empty sheet and were asked to write down as many words as they could remember in either one or the other language with the corresponding translation. This test mirrors the capacity of a second language learner to retrieve the word in both languages. The free recall test showed superior memory results for words learned with iconic gestures, F(1, 28) = 122.18, p < 0.001 (Figure 2).

Figure 2.

Training results for the cued translation test from German into Vimmi and vice versa (merged data) and results for the paired free recall test (day 60). Words encoded through iconic gestures are significantly better retrieved at all time points. *p < 0.05, **p < 0.01, ***p < 0.001.

fMRI experiment

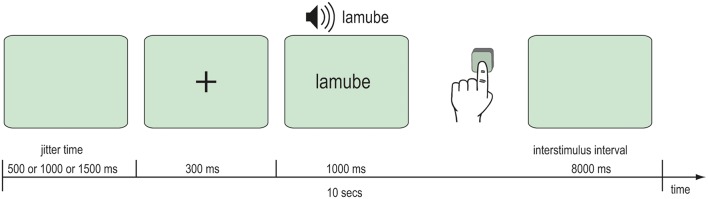

After the behavioral learning phase, fMRI data were acquired during an audio-visual word recognition task. In the scanner, participants were presented audio-visually with the 92 words that they had previously trained and with 23 novel words. A further 23 silent events represented the baseline. A single item was presented at each trial, beginning with a fixation cross for 300 ms followed by the Vimmi word for 1 s. The interstimulus interval was 8 s. Participants read the words on a back-projection screen mounted behind their heads in the bore of the magnet. Audio files with an approximate duration of 1000 ms were played when the word was shown. Participants were instructed to press a key with their left hand if the word was unknown. Altogether the scanning comprised 138 trials with the trained and the novel words and the silent events. All items were balanced across the presentation. The whole experimental session lasted 23 min (Figure 3).
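The trial counts and timing given above can be checked with a short sketch. It is an illustration under stated assumptions, not the presentation script used in the experiment: plain shuffling stands in for the balancing procedure, which the text does not specify, and trials are assumed to follow one another without additional gaps. The function names are hypothetical.

```python
import random

# Values stated in the text (durations in milliseconds).
FIXATION_MS = 300
WORD_MS = 1000
ISI_MS = 8000
N_TRAINED, N_NOVEL, N_SILENT = 92, 23, 23


def build_trial_list(rng):
    """Return a shuffled list of condition labels, one per trial.

    Plain shuffling is an assumption; the text only states that
    items were balanced across the presentation.
    """
    trials = (["trained"] * N_TRAINED
              + ["novel"] * N_NOVEL
              + ["silent"] * N_SILENT)
    rng.shuffle(trials)
    return trials


def trial_onsets_ms(n_trials):
    """Onset of each trial, assuming back-to-back trials."""
    trial_ms = FIXATION_MS + WORD_MS + ISI_MS
    return [i * trial_ms for i in range(n_trials)]


trials = build_trial_list(random.Random(0))
onsets = trial_onsets_ms(len(trials))
total_min = (onsets[-1] + FIXATION_MS + WORD_MS + ISI_MS) / 60000
print(len(trials), round(total_min, 1))  # 138 21.4
```

Under these assumptions the 138 trials take about 21.4 min, which is compatible with the reported session length of 23 min if some additional time at the start or end of the run is allowed for.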

Figure 3.

Scanning procedure.

Neuroimaging parameters

Functional scanning was performed with the following imaging parameters: BOLD sensitive gradient EPI sequence, TR = 2000 ms, TE = 30 ms, flip angle = 90°, acquisition bandwidth = 100 Hz. We acquired 20 axial slices (4 mm thick, 1 mm interslice distance, FOV 19.2 cm, data matrix of 64 × 64 voxels, inplane resolution of 3 × 3 mm) every 2000 ms on a 3-T Bruker (Ettlingen, Germany) Medspec 30/100 system. Prior to functional data acquisition, we obtained a T1-weighted modified driven equilibrium Fourier transform (MDEFT) image (data matrix 256 × 256, TR = 130 ms, TE = 10 ms) with a non-slice-selective inversion pulse that was followed by a single excitation of each slice (Norris, 2000). This anatomical image (which was acquired in the same orientation as the functional images) was co-registered with a previously obtained high-resolution whole-head 3-D brain image: 128 sagittal slices, 1.5-mm thickness, FOV 25.0 × 25.0 × 19.2 cm, data matrix of 256 × 156 voxels. Thereafter, the same registration parameters were applied to the functional images. The fMRI experiment was approved by the Ethics Committee of the University of Leipzig (Germany).
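The geometric parameters above are mutually consistent, as the following sketch shows. It only reproduces arithmetic on the stated values. The estimate of the number of volumes assumes that the whole 23 min session was scanned continuously at the stated TR, which the text does not state explicitly.

```python
# Acquisition parameters stated in the text.
FOV_MM = 192.0            # 19.2 cm field of view
MATRIX_SIZE = 64          # 64 x 64 data matrix
N_SLICES = 20
SLICE_THICKNESS_MM = 4.0
SLICE_GAP_MM = 1.0
TR_S = 2.0
SESSION_MIN = 23


def in_plane_resolution_mm(fov_mm, matrix_size):
    """Voxel edge length within a slice."""
    return fov_mm / matrix_size


def slab_coverage_mm(n_slices, thickness_mm, gap_mm):
    """Extent covered along the slice axis, including interslice gaps."""
    return n_slices * thickness_mm + (n_slices - 1) * gap_mm


def n_volumes(session_min, tr_s):
    """Number of volumes, assuming continuous scanning for the session."""
    return int(session_min * 60 // tr_s)


print(in_plane_resolution_mm(FOV_MM, MATRIX_SIZE))                    # 3.0
print(slab_coverage_mm(N_SLICES, SLICE_THICKNESS_MM, SLICE_GAP_MM))   # 99.0
print(n_volumes(SESSION_MIN, TR_S))                                   # 690
```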

Data analysis

For the present paper, we considered only the data related to the words learned through iconic gestures and contrasted them with the baseline silence. We intended to explore the topography of experience-related word networks. We analyzed the functional data with the Lipsia software package (Lohmann et al., 2001). Functional data were corrected for motion and for the temporal offset between the slices. Thereafter, we aligned the functional slices with a 3D stereotactic coordinate reference system. We acquired the registration parameters on the basis of the MDEFT slices, thereby achieving an optimal match between the slices and the individual 3D reference dataset, standardized to the Talairach stereotactic space (Talairach and Tournoux, 1988). We transformed the functional slices using trilinear interpolation, so that the resulting functional slices were aligned with the stereotactic coordinate system according to the registration parameters. During pre-processing, we further smoothed the data with a Gaussian filter of 10 mm FWHM and applied a temporal high-pass filter with a cut-off frequency of 1/100 Hz. Statistical evaluation used general linear regression with pre-whitening (Worsley et al., 2002). We estimated autocorrelation parameters from the least squares residuals using the Yule–Walker equations and used them to whiten both the data and the design matrices. Thereafter, we re-estimated the linear model using least squares on the whitened data to produce estimates of effects and their standard errors. We generated the design matrix using the canonical hemodynamic response function (Friston et al., 1998). Subsequently, we generated contrast images by computing the difference between the parameter estimates of the iconic gestures condition and the baseline, i.e., silence. We entered all contrast images into a second-level Bayesian analysis. Compared with null hypothesis significance testing, this analysis has a high reliability in small-group statistics with high within-subject variability (Friston et al., 2008). For display reasons, Bayesian probabilities (1-p) were finally transformed to z-values.
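The pre-whitening procedure described above (least squares fit, Yule–Walker estimate from the residuals, whitening of data and design, second least squares fit) can be sketched in a few lines of Python. This is a simplified illustration and not the Lipsia implementation: it assumes a first-order autoregressive model, whose order the text does not state, and a design with a single regressor plus an intercept. All function names are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least squares for y = b0 + b1 * x.

    Returns the intercept, the slope, and the residuals.
    """
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = mean_y - b1 * mean_x
    residuals = [yi - b0 - b1 * xi for xi, yi in zip(x, y)]
    return b0, b1, residuals


def ar1_coefficient(residuals):
    """Yule-Walker estimate for an AR(1) model: rho = r(1) / r(0)."""
    mean_r = sum(residuals) / len(residuals)
    centered = [r - mean_r for r in residuals]
    r0 = sum(v * v for v in centered)
    if r0 == 0:
        return 0.0  # no residual variance, nothing to whiten
    r1 = sum(a * b for a, b in zip(centered[:-1], centered[1:]))
    return r1 / r0


def whiten(series, rho):
    """Apply the AR(1) whitening filter s[t] - rho * s[t-1]."""
    return [b - rho * a for a, b in zip(series[:-1], series[1:])]


def prewhitened_fit(x, y):
    """Fit, estimate rho from residuals, whiten, and fit again."""
    _, _, residuals = fit_line(x, y)
    rho = ar1_coefficient(residuals)
    b0_white, b1, _ = fit_line(whiten(x, rho), whiten(y, rho))
    # Whitening scales the constant column by (1 - rho); undo that here.
    return b0_white / (1 - rho), b1, rho
```

Whitening the regressor and the data with the same filter leaves the effect estimate (the slope) interpretable in the original units, while the residuals of the second fit are closer to uncorrelated, which is what makes the resulting standard errors more trustworthy.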

fMRI results

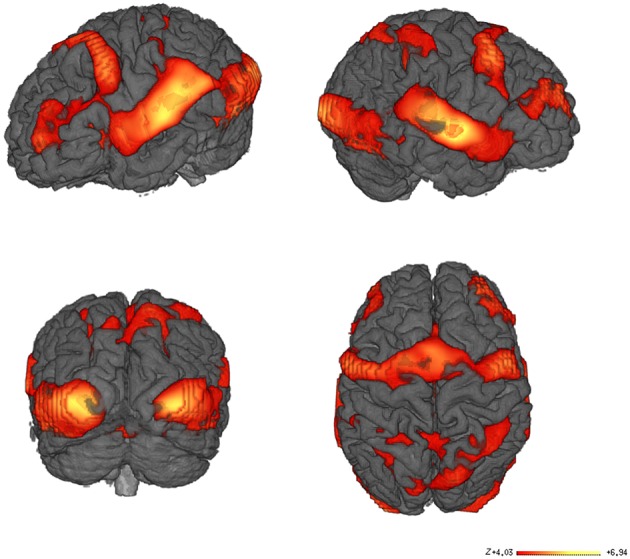

Our data were acquired during a word recognition task when participants lying in the scanner were visually and acoustically presented words that they had previously learned with iconic gestures, and unknown words, in random order. The whole brain analysis of the contrast between all words learned with iconic gestures vs. the baseline silence revealed haemodynamic responses in a number of regions as listed in Table 1. As fMRI lacks temporal resolution for neural processes, our visualization of regional oxygenated blood flow seems to reflect processes of perception, attention and word selection that occurred during scanning, as well as the functional neural representation of the words (Figure 4).

Table 1.

Results of the fMRI main contrast (words learned with iconic gestures vs. silence).

| CEREBRUM Talairach | ||||

|---|---|---|---|---|

| x | y | z | z-val | |

| BA 3 | ||||

| 27 | −33 | 60 | 3.965 | Right Cerebrum, Parietal Lobe, Postcentral Gyrus, Gray Matter, Brodmann area 3, Range = 1 |

| 21 | −36 | 60 | 4.031 | Right Cerebrum, Parietal Lobe, Postcentral Gyrus, Gray Matter, Brodmann area 3, Range = 1 |

| BA 4 | ||||

| −63 | −21 | 42 | 3.068 | Left Cerebrum, Frontal Lobe, Precentral Gyrus, Gray Matter, Brodmann area 4, Range = 3 |

| BA 5 | ||||

| −33 | −39 | 60 | 4.205 | Left Cerebrum, Parietal Lobe, Postcentral Gyrus, Gray Matter, Brodmann area 5, Range = 0 |

| BA 6 | ||||

| −21 | 3 | 66 | 5.123 | Left Cerebrum, Frontal Lobe, Superior Frontal Gyrus, Gray Matter, Brodmann area 6, Range = 0 |

| −54 | 0 | 39 | 5.526 | Left Cerebrum, Frontal Lobe, Precentral Gyrus, Gray Matter, Brodmann area 6, Range = 0 |

| −18 | 3 | 48 | 5.583 | Left Cerebrum, Frontal Lobe, Medial Frontal Gyrus, Gray Matter, Brodmann area 6, Range = 1 |

| −45 | 0 | 57 | 5.140 | Left Cerebrum, Frontal Lobe, Middle Frontal Gyrus, Gray Matter, Brodmann area 6, Range = 2 |

| −36 | −9 | 36 | 5.131 | Left Cerebrum, Frontal Lobe, Precentral Gyrus, Gray Matter, Brodmann area 6, Range = 2 |

| −42 | −3 | 30 | 5.532 | Left Cerebrum, Frontal Lobe, Precentral Gyrus, Gray Matter, Brodmann area 6, Range = 2 |

| −39 | −3 | 60 | 5.192 | Left Cerebrum, Frontal Lobe, Middle Frontal Gyrus, Gray Matter, Brodmann area 6, Range = 3 |

| −30 | 0 | 39 | 5.213 | Left Cerebrum, Frontal Lobe, Middle Frontal Gyrus, Gray Matter, Brodmann area 6, Range = 4 |

| −21 | −9 | 39 | 5.349 | Left Cerebrum, Frontal Lobe, Middle Frontal Gyrus, Gray Matter, Brodmann area 6, Range = 4 |

| −30 | −12 | 39 | 5.110 | Left Cerebrum, Frontal Lobe, Middle Frontal Gyrus, Gray Matter, Brodmann area 6, Range = 4 |

| 36 | −3 | 54 | 5.751 | Right Cerebrum, Frontal Lobe, Middle Frontal Gyrus, Gray Matter, Brodmann area 6, Range = 0 |

| BA 7 | ||||

| −30 | −54 | 42 | 6.615 | Left Cerebrum, Parietal Lobe, Superior Parietal Lobule, Gray Matter, Brodmann area 7, Range = 3 |

| −12 | −45 | 48 | 4.749 | Left Cerebrum, Parietal Lobe, Precuneus, Gray Matter, Brodmann area 7, Range = 1 |

| −18 | −69 | 39 | 5.020 | Left Cerebrum, Parietal Lobe, Precuneus, Gray Matter, Brodmann area 7, Range = 0 |

| 27 | −48 | 57 | 4.834 | Right Cerebrum, Parietal Lobe, Superior Parietal Lobule, Gray Matter, Brodmann area 7, Range = 1 |

| 12 | −66 | 51 | 5.507 | Right Cerebrum, Parietal Lobe, Precuneus, Gray Matter, Brodmann area 7, Range = 1 |

| 18 | −45 | 45 | 5.296 | Right Cerebrum, Parietal Lobe, Precuneus, Gray Matter, Brodmann area 7, Range = 2 |

| 24 | −54 | 39 | 6.106 | Right Cerebrum, Parietal Lobe, Precuneus, Gray Matter, Brodmann area 7, Range = 5 |

| BA 8 | ||||

| 39 | 21 | 48 | 2.415 | Right Cerebrum, Frontal Lobe, Superior Frontal Gyrus, Gray Matter, Brodmann area 8, Range = 0 |

| BA 9 | ||||

| −42 | 39 | 33 | 4.744 | Left Cerebrum, Frontal Lobe, Superior Frontal Gyrus, Gray Matter, Brodmann area 9, Range = 0 |

| −54 | 27 | 33 | 4.281 | Left Cerebrum, Frontal Lobe, Middle Frontal Gyrus, Gray Matter, Brodmann area 9, Range = 0 |

| 0 | 15 | 33 | 6.229 | Left Cerebrum, Frontal Lobe, Cingulate Gyrus, Gray Matter, Brodmann area 32, Range = 2 |

| −12 | −18 | 33 | 5.317 | Left Cerebrum, Limbic Lobe, Cingulate Gyrus, Gray Matter, Brodmann area 24, Range = 5 |

| −48 | 12 | 24 | 4.619 | Left Cerebrum, Frontal Lobe, Inferior Frontal Gyrus, Gray Matter, Brodmann area 9, Range = 2 |

| 27 | 48 | 33 | 5.256 | Right Cerebrum, Frontal Lobe, Superior Frontal Gyrus, Gray Matter, Brodmann area 9, Range = 1 |

| 30 | 33 | 24 | 5.629 | Right Cerebrum, Frontal Lobe, Middle Frontal Gyrus, Gray Matter, Brodmann area 9, Range = 2 |

| BA 10 | ||||

| 30 | 48 | 27 | 5.246 | Right Cerebrum, Frontal Lobe, Superior Frontal Gyrus, Gray Matter, Brodmann area 10, Range = 2 |

| 30 | 57 | 18 | 4.980 | Right Cerebrum, Frontal Lobe, Middle Frontal Gyrus, Gray Matter, Brodmann area 10, Range = 1 |

| 27 | 42 | 12 | 5.109 | Right Cerebrum, Frontal Lobe, Middle Frontal Gyrus, Gray Matter, Brodmann area 10, Range = 5 |

| BA 11 | ||||

| −30 | 39 | −12 | 2.876 | Left Cerebrum, Frontal Lobe, Middle Frontal Gyrus, Gray Matter, Brodmann area 11, Range = 0 |

| BA 13 | ||||

| −30 | 6 | 21 | 5.532 | Left Cerebrum, Sub-lobar, Insula, Gray Matter, Brodmann area 13, Range = 4 |

| −30 | 21 | 6 | 6.619 | Left Cerebrum, Sub-lobar, Insula, Gray Matter, Brodmann area 13, Range = 1 |

| 30 | 12 | 18 | 5.846 | Right Cerebrum, Sub-lobar, Insula, Gray Matter, Brodmann area 13, Range = 5 |

| 54 | −33 | 18 | 5.989 | Right Cerebrum, Sub-lobar, Insula, Gray Matter, Brodmann area 13, Range = 1 |

| BA 18 | ||||

| −24 | −96 | 0 | 6.494 | Left Cerebrum, Occipital Lobe, Cuneus, Gray Matter, Brodmann area 18, Range = 1 |

| 15 | −75 | 18 | 3.324 | Right Cerebrum, Occipital Lobe, Cuneus, Gray Matter, Brodmann area 18, Range = 1 |

| 24 | −93 | 0 | 6.618 | Right Cerebrum, Occipital Lobe, Cuneus, Gray Matter, Brodmann area 18, Range = 2 |

| BA 20 | ||||

| −39 | −12 | −18 | 4.996 | Left Cerebrum, Temporal Lobe, Sub-Gyral, Gray Matter, Brodmann area 20, Range = 1 |

| BA 22 | ||||

| −66 | −48 | 12 | 5.722 | Left Cerebrum, Temporal Lobe, Superior Temporal Gyrus, Gray Matter, Brodmann area 22, Range = 0 |

| BA 23 | ||||

| −3 | −15 | 27 | 5.883 | Left Cerebrum, Limbic Lobe, Cingulate Gyrus, Gray Matter, Brodmann area 23, Range = 0 |

| 0 | −33 | 24 | 5.374 | Left Cerebrum, Limbic Lobe, Cingulate Gyrus, Gray Matter, Brodmann area 23, Range = 2 |

| BA 24 | ||||

| 18 | −9 | 42 | 5.158 | Right Cerebrum, Limbic Lobe, Cingulate Gyrus, Gray Matter, Brodmann area 24, Range = 3 |

| 15 | −3 | 39 | 5.046 | Right Cerebrum, Limbic Lobe, Cingulate Gyrus, Gray Matter, Brodmann area 24, Range = 4 |

| 3 | 3 | 30 | 5.225 | Right Cerebrum, Limbic Lobe, Cingulate Gyrus, Gray Matter, Brodmann area 24, Range = 0 |

| BA 25 | ||||

| −9 | 15 | −15 | 4.249 | Left Cerebrum, Frontal Lobe, Medial Frontal Gyrus, Gray Matter, Brodmann area 25, Range = 1 |

| BA 27 | ||||

| 9 | −36 | 3 | 4.960 | Right Cerebrum, Limbic Lobe, Parahippocampal Gyrus, Gray Matter, Brodmann area 27, Range = 2 |

| BA 29 | ||||

| −9 | −39 | 12 | 4.868 | Left Cerebrum, Limbic Lobe, Posterior Cingulate, Gray Matter, Brodmann area 29, Range = 4 |

| BA 31 | ||||

| 12 | −33 | 42 | 4.746 | Right Cerebrum, Limbic Lobe, Cingulate Gyrus, Gray Matter, Brodmann area 31, Range = 3 |

| BA 32 | ||||

| 18 | 33 | 18 | 5.318 | Right Cerebrum, Limbic Lobe, Anterior Cingulate, Gray Matter, Brodmann area 32, Range = 0 |

| BA 36 | ||||

| −24 | −33 | −15 | 4.128 | Left Cerebrum, Limbic Lobe, Parahippocampal Gyrus, Gray Matter, Brodmann area 36, Range = 1 |

| −36 | −30 | −21 | 4.687 | Left Cerebrum, Limbic Lobe, Parahippocampal Gyrus, Gray Matter, Brodmann area 36, Range = 0 |

| BA 37 | ||||

| −51 | −72 | 0 | 5.590 | Left Cerebrum, Occipital Lobe, Inferior Temporal Gyrus, Gray Matter, Brodmann area 37, Range = 0 |

| −42 | −63 | −12 | 6.291 | Left Cerebrum, Temporal Lobe, Fusiform Gyrus, Gray Matter, Brodmann area 37, Range = 0 |

| 42 | −57 | −18 | 5.604 | Right Cerebrum, Temporal Lobe, Fusiform Gyrus, Gray Matter, Brodmann area 37, Range = 0 |

| 42 | −42 | −6 | 5.503 | Right Cerebrum, Temporal Lobe, Fusiform Gyrus, Gray Matter, Brodmann area 37, Range = 5 |

| BA 38 | ||||

| −30 | 9 | −21 | 3.815 | Left Cerebrum, Temporal Lobe, Superior Temporal Gyrus, Gray Matter, Brodmann area 38, Range = 2 |

| −54 | 21 | −21 | 3.566 | Left Cerebrum, Temporal Lobe, Superior Temporal Gyrus, Gray Matter, Brodmann area 38, Range = 2 |

| BA 39 | ||||

| −54 | −69 | 36 | −3.767 | Left Cerebrum, Parietal Lobe, Angular Gyrus, Gray Matter, Brodmann area 39, Range = 2 |

| BA 40 | ||||

| −57 | −33 | 30 | 4.984 | Left Cerebrum, Parietal Lobe, Inferior Parietal Lobule, Gray Matter, Brodmann area 40, Range = 1 |

| BA 41 | ||||

| −54 | −18 | 6 | 6.565 | Left Cerebrum, Temporal Lobe, Superior Temporal Gyrus, Gray Matter, Brodmann area 41, Range = 0 |

| 36 | −27 | 12 | 6.112 | Right Cerebrum, Temporal Lobe, Transverse Temporal Gyrus, Gray Matter, Brodmann area 41, Range = 0 |

| 60 | −18 | 6 | 7.005 | Right Cerebrum, Temporal Lobe, Superior Temporal Gyrus, Gray Matter, Brodmann area 41, Range = 3 |

| BA 42 | ||||

| −63 | −30 | 9 | 6.330 | Left Cerebrum, Temporal Lobe, Superior Temporal Gyrus, Gray Matter, Brodmann area 42, Range = 0 |

| BA 44 | ||||

| −57 | 6 | 6 | 5.390 | Left Cerebrum, Frontal Lobe, Precentral Gyrus, Gray Matter, Brodmann area 44, Range = 1 |

| BA 46 | ||||

| −39 | 48 | 15 | 5.116 | Left Cerebrum, Frontal Lobe, Middle Frontal Gyrus, Gray Matter, Brodmann area 46, Range = 1 |

| −60 | 30 | 9 | 2.669 | Left Cerebrum, Frontal Lobe, Inferior Frontal Gyrus, Gray Matter, Brodmann area 46, Range = 2 |

| BA 47 | ||||

| −33 | 27 | −18 | 2.982 | Left Cerebrum, Frontal Lobe, Inferior Frontal Gyrus, Gray Matter, Brodmann area 47, Range = 1 |

| 33 | 18 | 0 | 6.942 | Right Cerebrum, Sub-lobar, Extra-Nuclear, Gray Matter, Brodmann area 47, Range = 1 |

| 18 | 30 | −15 | 3.595 | Right Cerebrum, Frontal Lobe, Inferior Frontal Gyrus, Gray Matter, Brodmann area 47, Range = 2 |

| SUBCORTICAL STRUCTURES | ||||

| Thalamus | ||||

| −21 | −33 | 3 | 5.011 | Left Cerebrum, Sub-lobar, Thalamus, Gray Matter, Pulvinar, Range = 0 |

| −18 | −12 | 9 | 5.434 | Left Cerebrum, Sub-lobar, Thalamus, Gray Matter, Ventral Lateral Nucleus, Range = 1 |

| −12 | −18 | 9 | 5.298 | Left Cerebrum, Sub-lobar, Thalamus, Gray Matter, Range = 0 |

| 9 | −24 | 21 | 4.967 | Right Cerebrum, Sub-lobar, Thalamus, Gray Matter, Range = 4 |

| 6 | −12 | 9 | 5.594 | Right Cerebrum, Sub-lobar, Thalamus, Gray Matter, Medial Dorsal Nucleus, Range = 0 |

| Caudate | ||||

| −18 | 27 | 9 | 4.207 | Left Cerebrum, Sub-lobar, Caudate, Gray Matter, Caudate Head, Range = 5 |

| 21 | 9 | 18 | 6.066 | Right Cerebrum, Sub-lobar, Caudate, Gray Matter, Caudate Body, Range = 5 |

| 9 | 0 | 9 | 6.425 | Right Cerebrum, Sub-lobar, Caudate, Gray Matter, Caudate Body, Range = 1 |

| Hippocampus | ||||

| 33 | −12 | −15 | 5.527 | Right Cerebrum, Limbic Lobe, Parahippocampal Gyrus, Gray Matter, Hippocampus, Range = 2 |

| Substantia nigra | ||||

| −12 | −21 | −6 | 5.259 | Left Brainstem, Midbrain, Gray Matter, Substantia Nigra, Range = 1 |

| Uncus | ||||

| 21 | 6 | −18 | 4.642 | Right Cerebrum, Limbic Lobe, Uncus, Gray Matter, Brodmann area 28, Range = 0 |

| 21 | −6 | −21 | 5.159 | Right Cerebrum, Limbic Lobe, Uncus, Gray Matter, Amygdala, Range = 0 |

| Putamen | ||||

| −30 | −9 | −6 | 4.770 | Left Cerebrum, Sub-lobar, Lentiform Nucleus, Gray Matter, Putamen, Range = 1 |

| Claustrum | ||||

| 36 | 6 | −6 | 5.304 | Gray Matter nearest to (36, 6, −6): Right Cerebrum, Sub-lobar, Claustrum, Gray Matter, Range = 3 |

| CEREBELLUM | ||||

| 3 | −30 | −18 | 5.456 | Right Cerebellum, Anterior Lobe, Culmen, Gray Matter, Range = 5 |

| 24 | −63 | −18 | 5.778 | Right Cerebellum, Posterior Lobe, Declive, Gray Matter, Range = 0 |

| 18 | −90 | −18 | 4.137 | Right Cerebellum, Posterior Lobe, Declive, Gray Matter, Range = 2 |

| 6 | −39 | −21 | 5.193 | Right Cerebellum, Anterior Lobe, Culmen, Gray Matter, Range = 0 |

| 9 | −45 | −21 | 5.004 | Right Cerebellum, Anterior Lobe, Culmen, Gray Matter, Range = 0 |

| 12 | −63 | −21 | 5.759 | Right Cerebellum, Posterior Lobe, Declive, Gray Matter, Range = 0 |

| 12 | −84 | −21 | 3.768 | Right Cerebellum, Posterior Lobe, Declive, Gray Matter, Range = 0 |

| −9 | −48 | −3 | 4.514 | Left Cerebellum, Anterior Lobe, Culmen, Gray Matter, Range = 0 |

| −12 | −54 | −6 | 4.736 | Left Cerebellum, Anterior Lobe, Culmen, Gray Matter, Range = 0 |

| −33 | −72 | −15 | 6.062 | Left Cerebellum, Posterior Lobe, Declive, Gray Matter, Range = 0 |

| −9 | −36 | −18 | 4.405 | Left Cerebellum, Anterior Lobe, Culmen, Gray Matter, Range = 0 |

| −3 | −78 | −18 | 5.505 | Left Cerebellum, Gray Matter, Range = 0 |

| BRAINSTEM | ||||

| 9 | −21 | −3 | 4.800 | Right Brainstem, Midbrain, Gray Matter, Red Nucleus, Range = 0 |

Figure 4.

fMRI Results. Main contrast for words learned with iconic gestures vs. silence. Learning through iconic gestures creates extended sensorimotor networks that resonate upon audio-visual word presentation. The networks map the modalities engaged during learning. The color-coded areas show clusters with high Bayesian posterior probability of condition. The bar represents the z-values.

Audio-visual perception and attention

During task execution in the scanner, sensory perception and word recognition occur. We found related bilateral neural activity in the thalamus, which is known to process incoming information and to relay it to related specific areas in the cortex (Mitchell et al., 2014). Further, auditory cortices BA 38 along with BA 41 and BA 42 (the transverse temporal areas) responded upon acoustic word perception. In addition to processing auditory information, these areas also access stored representations of words (Scott and Wise, 2004). Other regions were engaged in the task: BA 22 maps sound and word meaning (Zhuang et al., 2014), the left fusiform gyrus BA 37 is implicated in reading (McCandliss et al., 2003), and the supramarginal gyrus BA 40 mediates word recognition (Stoeckel et al., 2009; Wilson et al., 2011). The frontal eye field BA 8 was also active and possibly engaged in attention processes (Esterman et al., 2015).

Lexical recognition

Zhuang et al. (2014) describe lexical recognition as consisting of two related processes: competition and selection. In competition, cohort candidates, i.e., words sharing some phonological feature(s) with the word presented, compete with each other for a match. These cohort words are stored in memory areas that become active upon search. We found activity in an extended memory network engaged in word competition, including the right hippocampus (Smith et al., 2011; Huijgen and Samson, 2015), the para-hippocampal gyrus, BA 27, BA 36 bilaterally (Squire and Dede, 2015), and the left temporal lobe BA 38 (St. Jacques et al., 2011). In our data, the process of selection involved the left inferior frontal gyrus and also its right counterpart, BA 44, 46, the left BA 47, cortical regions found previously during the completion of this task (Heim et al., 2005; Rodd et al., 2012; Zhuang et al., 2014), and the insula, which is also engaged in word processing (Zaccarella and Friederici, 2015). Contrary to this last study, we did not find involvement of the pars triangularis of the inferior frontal gyrus BA 45. Instead, we detected activity in BA 9, a region known to be active in working memory mediating selection tasks, in interplay with BA 10, and in the anterior cingulate cortex BA 32 and BA 34 (Zhang et al., 2003), areas also present in our network.
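
The two processes of competition and selection can be illustrated with a minimal Python sketch. It is a toy illustration under stated assumptions, not the analysis performed in this study: letters stand in for phonemes, a shared word onset stands in for "some phonological feature(s)," and the function names and the mini-lexicon are hypothetical.

```python
# Toy sketch of cohort competition and selection (illustrative only).
# Assumption: letters approximate phonemes; the cohort is defined by shared onset.

LEXICON = ["candle", "candy", "canal", "cattle", "dog"]  # hypothetical mini-lexicon


def cohort_candidates(heard_so_far, lexicon):
    """Competition: all stored words that still match the input heard so far."""
    return [word for word in lexicon if word.startswith(heard_so_far)]


def recognize(word, lexicon):
    """Selection: extend the input segment by segment until at most one
    candidate is left.

    Returns the remaining candidates and the number of segments consumed.
    """
    cohort = list(lexicon)
    consumed = 0
    for consumed in range(1, len(word) + 1):
        cohort = cohort_candidates(word[:consumed], lexicon)
        if len(cohort) <= 1:
            break
    return cohort, consumed
```

In this sketch, `recognize("candy", LEXICON)` narrows the cohort from four candidates to one, whereas a word that is absent from the lexicon leaves an empty cohort after the first segment, which loosely corresponds to rejecting a novel word in a recognition task.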

Cognitive control

Our results suggest that mechanisms of cognitive control might also contribute to the network engaged in word recognition. We found increased activity in the anterior cingulate cortex BA 32 and 34 as well as in its posterior portion BA 31, in the middle and superior frontal gyri BA 9, and in the frontopolar prefrontal cortex BA 10. Previous studies bundled these regions into a network that detects input diverging from a stored template and suppresses it (Botvinick et al., 2001; Cole and Schneider, 2007). Hence cognitive control could account for adequate item selection and for the suppression of cohort candidates and novel words presented during the task. However, our analysis does not allow us to discern whether the cingulate cortices and BA 10 contribute to selection within the network for cognitive control or whether selection processes and dedicated networks include the network of cognitive control.

Experience-related sensorimotor word networks

Our investigation aimed to detect how words learned with iconic gestures are functionally mapped into neural tissue. In addition to the core language network (Friederici, 2011) described in the preceding section, we found a number of premotor, motor, and sensorimotor areas that were activated during word recognition. Most remarkably, upon audio-visual presentation of the words (note that no videos of the actress performing gestures were shown during the scanning procedure), the brain images unveiled activity in large portions of the left premotor cortex BA 6, which is engaged in movement preparation and simulation. The right counterpart was minimally involved. We attribute this imbalance to the fact that all subjects were right-handed (Tettamanti et al., 2005). Premotor activity in response to words referring to motor acts is well documented in numerous neuroimaging studies in which words were presented visually, acoustically, or both (Hauk et al., 2004; Pulvermüller, 2005; D'Ausilio et al., 2009; Cappa and Pulvermüller, 2012; Berent et al., 2015). In our study, the pattern of response to audio-visual word presentation also involved the left primary motor cortex, where BA 4 and BA 7 play a role in motor sequence coordination, visuo-motor coordination, planning of complex movements, and proprioception (Baker et al., 2012). Additionally, our data revealed involvement of the basal ganglia, i.e., left putamen, left and right caudate, and left substantia nigra, and of the cerebellum, which bilaterally contribute to motor emulation processes (Lotze and Halsband, 2006; Ridderinkhof and Brass, 2015). We ascribe the engagement of the motor regions during word recognition to the experience collected by our subjects while learning. In retrieval, motor acts are unconsciously simulated in association with the phoneme and letter sequence(s).
Similarly, primary somatosensory cortices BA 3 and BA 5, also present in our analysis, converge to create a proprioceptive trace within the network related to gesture execution (Pleger and Villringer, 2013). Perception of space and body location, mediated by the supramarginal gyrus BA 40 and the angular gyrus BA 39, is also part of a word's representation (Blanke, 2012). Besides involvement of sensorimotor areas, activity in BA 18, a visual association area, reflects the complex image processing that occurs during training and the reactivation of a mental image (Lambert et al., 2004). Subjects were cued to read the words and watch the videos of an actress performing the iconic gestures. Involvement of the right fusiform gyrus might thus mirror the input, i.e., the actress's face (Morris et al., 2007) and body (Soria Bauser and Suchan, 2015), which might also be mapped into the word's representation. Altogether, these data reflect the sensorimotor input processed during learning. The contrast images provide evidence of word learning as a cognitive process grounded in embodied experiences (Pulvermüller, 1999).

Discussion

The present study is explorative. Also, this fMRI-data analysis has a limitation: it cannot disentangle the different processes that occurred in the scanner, i.e., word perception, recognition, and the functional neural mapping of the words created through sensorimotor experiences. Brain areas engaged in multiple functions partially overlap in the different processes, i.e., sensorial processing, the recognition task and sensorimotor emulation of the words.

However, the data provide insight into the functional representation of words in a foreign language learned through iconic gestures. The results support Engelkamp and Krumnacker's seminal theory on enactment (1980), which proposed that performing a gesture when memorizing a word leaves a motor trace in the word's representation. Furthermore, our results can be embedded in theories of embodied language that have emerged in the past decade. Based on neuroscientific evidence, these studies maintain that language (along with other processes in cognition) is grounded in bodily experiences created with our sensorimotor systems (Barsalou, 1999, 2008; Pulvermüller, 1999; Gallese and Lakoff, 2005; Fischer and Zwaan, 2008; Jirak et al., 2010; Glenberg and Gallese, 2012). When seeing a fruit basket, children reach out and grasp a fruit. Holding the fruit, children smell it, put it in their mouth, taste it, drop it, pick it up, squeeze it, and feel the pulp and dripping juice. As children point to the fruit, caregivers produce a sequence of sounds [ˈɒr.ɪndʒ], orange, the “concept's name” (Oldfield and Wingfield, 1965). Connecting all sensorimotor experiences with the sound, children try to reproduce it. By doing so, children combine multiple bodily experiences related to the fruit and create an embodied concept connected to a sound string that becomes a part of this representation. One day children will learn to write the sound sequence. At that point, children acquire another system, graphemes, in order to label the concept and to communicate about these experiences in a written way. Spoken and written words thus become a component of the concept, and as such they become connected with the embodied concept itself. Considering this, words are not abstract units of the mind (Fodor, 1976, 1987). Instead, words are grounded to a great extent in bodily experiences (Gallese and Lakoff, 2005).
A number of studies conducted over the past decade have demonstrated the involvement of the body in conceptual representations. Most of these studies have used written words that participants read silently in the fMRI scanner. Reading action words activated motor cortices (Kemmerer et al., 2008; Cappa and Pulvermüller, 2012). This process occurred selectively depending on the effector of the body involved in the action (Carota et al., 2012). Action words (such as kick, pick, and lick) that refer to actions performed with leg, arm or mouth elicited activity in regions of the motor cortex controlling their movement (Hauk et al., 2004). González et al. (2006) had participants read odor words and found brain activity not only in task-related regions, i.e., canonical language areas involved in reading, but, more interestingly, also in olfactory brain regions. Note that participants did not perceive any odor. Similarly, mere reading of gustatory words such as salt engaged gustatory regions in the brain (Barrós-Loscertales et al., 2012).

In recent years, embodiment research has shown that a good portion of abstract words are also grounded in bodily experiences. In the fMRI scanner, Moseley et al. (2012) had participants read abstract emotion words such as fear, dread, and spite. In the subjects' brains, the researchers detected activity in emotional networks and, more interestingly, in those portions of the premotor cortex engaged in movement preparation for arm- and face-related gestures. Such gestures ground the social expression of feelings. Anecdotally speaking, if asked to demonstrate the concept of grief, in Western culture we might produce a certain facial expression or mime wiping our eyes to indicate crying. Hence even if, linguistically, the word is abstract, our interpretation of it is embodied. We have learned the spoken or written word for a concept that is not abstract but related to emotional and bodily experiences.

Additionally, metaphors involving body parts are also reported to evoke embodied reactions in the brain. Boulenger et al. (2009) asked whether somatotopic responses in the motor cortex occur during reading of metaphoric sentences such as “John grasped the idea” compared to literal sentences such as “John grasped the object.” The results showed activity in motor cortices for both metaphoric and literal sentences. Another study (with magnetoencephalography) produced similar results (Boulenger et al., 2012). Lacey et al. (2012) had participants read sentences such as “She has steel nerves.” and “Life is a bumpy road.” that contained texture metaphors, along with control sentences such as “She is very calm.” and “Life is a challenging road.” Texture metaphors induced activity in the somatosensory cortex and more specifically in texture-selective areas. Altogether, the reviewed studies suggest that interaction with the world creates brain topographies of concepts and reflects a word's semantics (Pulvermüller, 2002; Moseley and Pulvermüller, 2014).

Our data do not overtly support the hypothesis on enactment that attributes memory enhancement to visual imagery (Backman et al., 1993; Knudsen, 2007; Muzzio et al., 2009a,b). However, it stands to reason that the word network also comprises a mental image of the gesture's execution from a first-person perspective; hence the regions that prepare the performance of the gesture, i.e., the premotor cortex, supplementary motor area (Park et al., 2015), motor cortices, basal ganglia, and cerebellum, are involved. In addition, the word network might comprise a kinetic mental image from a second-person perspective, i.e., the subject's unconscious rehearsal of the actress during gesture performance. This perspective could be mapped in the higher visual association areas, the fusiform gyrus, the supramarginal, and the angular gyrus as described in the results section of this paper. Other regions identified in imagery in other studies, e.g., hippocampus, posterior cingulate cortex, medial prefrontal cortex, and angular gyrus, are also contained in the activated network (Huijbers et al., 2011; Ridderinkhof and Brass, 2015).

Network complexity and memory

Our results support one of the theories on enactment asserting that complexity of word representation determines memory enhancement (Knopf, 1992; Kormi-Nouri, 1995; Macedonia, 2003). Theoretically, this position is embedded in connectionist models of memory. In connectionism, a concept can be described by means of networks representing and storing information (McClelland, 1985; McClelland and Rumelhart, 1985; McClelland and Rogers, 2003). Concept networks consist of nodes and edges. Nodes can be seen not only on an abstract level but also in a more biological understanding as neural assemblies and cortical areas wiring together on different scales upon synchronous stimulus processing (Douglas and Martin, 2004; Singer, 2013). During learning, synchronous firing of neurons (coincident activation) leads to changes in the weight of connections (Hebb, 1949) among neurons. Through these changes in connections, neurons (re)organize in functional units in different dimensions that process and store information. According to connectionist models, concepts are not stored locally in the brain. Instead, concepts are represented in a distributed way (Lashley, 1950). Thus, a concept is not considered as a single unit but as a pattern clustering different components wired together during learning. A growing body of evidence from neuroscientific studies supports this position (for a review, see Pulvermüller, 2013). In connectionist networks, word retrieval is driven by spreading activation within the network. Activation starts at one or more nodes, depending on the input, and triggers activity in the whole network that ends after the search has been completed by inhibition (McClelland, 1985). The classic example described by McClelland et al. (1986) is the rose. The smell and the appearance of the flower are experienced synchronously. After a certain number of experiences, the two components become interconnected. Visual processing of a rose will activate the network.
Hence the node storing the smell will be reached by activity and the smell sensation will be triggered even in absence of a real rose. Twenty years later, the fMRI study by González et al. (2006) connected neuroscientific evidence to McClelland's rose example.
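
The rose example can be made concrete with a small Python sketch of Hebbian weight change followed by one step of spreading activation. This is a deliberately simplified illustration, not a model fitted to our data: the three units (sight of the rose, smell of the rose, and an unrelated unit), the learning rate, the threshold, and the function names are all assumptions chosen for the example.

```python
# Toy Hebbian network for the rose example (illustrative assumptions throughout).
# Unit 0: sight of the rose, unit 1: smell of the rose, unit 2: unrelated unit.


def hebbian_train(experiences, n_units, rate=1.0):
    """Strengthen the connection between every pair of units that are active
    together in an experience (coincident activation)."""
    weights = [[0.0] * n_units for _ in range(n_units)]
    for pattern in experiences:
        for i in range(n_units):
            for j in range(n_units):
                if i != j:
                    weights[i][j] += rate * pattern[i] * pattern[j]
    return weights


def spread(weights, cue, threshold=0.5):
    """One step of spreading activation: a unit becomes active if it is part of
    the cue or if its weighted input from the cue exceeds the threshold."""
    n_units = len(cue)
    result = []
    for i in range(n_units):
        net_input = sum(weights[i][j] * cue[j] for j in range(n_units))
        result.append(1 if cue[i] == 1 or net_input > threshold else 0)
    return result


# Five synchronous experiences of seeing and smelling a rose.
rose_weights = hebbian_train([[1, 1, 0]] * 5, n_units=3)
```

With these weights, presenting only the visual cue, `spread(rose_weights, [1, 0, 0])`, also activates the smell unit, while the unrelated unit stays silent because it was never co-activated with the others.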

One basic assumption in neural network theories is that complexity in representation makes memories stable and longer lasting (Klimesch, 1994). The more nodes a concept has, the more stable the concept's representation is. In fact, if a node within the network decays, activity in the network can be started from other nodes and information can be restored. For example, a word in L2 that has been learned with a picture will be more complex in its representation than a word that has been learned only in its written form. This will enhance the word's memorability, as shown in a recent study by Takashima et al. (2014). Conversely, if a word network in L2 consists of only one node, for example a string of sounds in a foreign language, decay could affect it fatally. A gesture accompanying a word engages many brain regions and therefore provides a complex representation of the word. It enriches the sound or character string with sensorimotor information, makes its representation complex, and enhances retrievability compared to audio-visual learning only. This is the case in both the short (Macedonia and Knösche, 2011) and the long term (Macedonia and Klimesch, 2014), when the network is impacted by decay.
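
The stabilizing effect of additional nodes can be sketched numerically. The following Python fragment rests on strong simplifying assumptions that are not derived from our data: each node is lost to decay independently with the same probability, and retrieval succeeds as long as at least one node survives. The function name and the example values are hypothetical.

```python
# Toy calculation: chance that a word remains retrievable after decay.
# Assumptions (illustrative only): nodes decay independently with equal
# probability, and a single surviving node is enough to restart activity.


def retrieval_probability(n_nodes, p_node_lost):
    """Probability that at least one of n_nodes survives decay."""
    if n_nodes < 1:
        raise ValueError("a representation needs at least one node")
    if not 0.0 <= p_node_lost <= 1.0:
        raise ValueError("p_node_lost must be a probability")
    return 1.0 - p_node_lost ** n_nodes
```

Under these assumptions, with a loss probability of 0.5 per node, a one-node representation (e.g., a sound string only) remains retrievable with probability 0.5, whereas a four-node representation (e.g., sound, written form, image, and gesture) remains retrievable with probability 0.9375.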

Procedural memory for words learned with iconic gestures

Our data also allow a further interpretation. Considering the high involvement of the motor system in the word recognition task, as described in the Results section, it is plausible that procedural memory is engaged in word learning. Procedural memory is implicit, long-term, and grounded in the motor system; it is engaged when a person acquires a skill. In cognitive science, vocabulary is theoretically situated in the domain of declarative memory (Tulving and Madigan, 1970; Ullman, 2004; Cabeza and Moscovitch, 2013; Squire and Dede, 2015). However, because of the procedure used to acquire the words (iconic gestures accompanying the words, i.e., well defined motor acts/programs), it stands to reason that both declarative and procedural memory systems might interact and jointly accomplish word storage and retrieval. In the activation table (Table 1), we find brain regions typically mediating declarative memory (Nikolin et al., 2015), i.e., hippocampus, para-hippocampal (Nadel and Hardt, 2011), and the fusiform gyri (Ofen et al., 2007), as well as regions within the prefrontal cortex, the dorsolateral prefrontal cortex (Blumenfeld and Ranganath, 2007), and the medial temporal lobe (Mayes et al., 2007). This provides evidence that words learned during our experiment are stored in declarative memory. At the same time, our activation list reports brain regions mediating procedural memory: in addition to (pre)motor regions, the basal ganglia (Barnes et al., 2005; Yin and Knowlton, 2006; Wilkinson and Jahanshahi, 2007) and the cerebellum (D'Angelo, 2014). Thus, word learning, if accompanied by gestures, seems to recruit both memory systems. This might be responsible for superior memory performance in storage and retrieval of verbal information.
Our results are in line with literature considering declarative and procedural memory as interacting as opposed to being distinct (Davis and Gaskell, 2009), and with a more recent review of patient and animal studies indicating that the medial temporal lobe and basal ganglia mediate declarative and procedural learning, respectively, depending on task demands (Wilkinson and Jahanshahi, 2015).

Conclusion

In this paper, we were interested in the functional neural representation of novel words learned with iconic gestures. Our study is explorative and one of our aims was to detect brain regions that play a special role in memory enhancement. Besides networks engaged in attention and word recognition, we found a word network that maps the words according to the modalities engaged in the learning process. We attribute the superior memory results induced by gestures to the complexity of the network. Complex sensorimotor networks for words store verbal information in an extended way, conferring stability on the word's representation. Our brain data also show that learning words with gestures possibly engages both declarative and procedural memory. The involvement of both memory systems might thus explain why learning is enhanced and information decay is delayed, as shown in behavioral long-term studies. The implications for education are clear: gestures should be used in second language lessons in order to enhance vocabulary learning.

Author contributions

MM Experimental design, stimuli creation and recording, data acquisition and analysis, paper writing. KM Supervision in data acquisition and analysis.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This study was supported by the Cogito Foundation, Wollerau, Switzerland, by a grant to MM.

References

- Aly M., Turk-Browne N. B. (2016). Attention stabilizes representations in the human hippocampus. Cereb. Cortex 26, 783–796. 10.1093/cercor/bhv041

- Andric M., Small S. L. (2012). Gesture's neural language. Front. Psychol. 3:99. 10.3389/fpsyg.2012.00099

- Bäckman L., Nilsson L. G. (1985). Prerequisites for lack of age differences in memory performance. Exp. Aging Res. 11, 67–73. 10.1080/03610738508259282

- Backman L., Nilsson L. G., Nouri R. K. (1993). Attentional demands and recall of verbal and color information in action events. Scand. J. Psychol. 34, 246–254. 10.1111/j.1467-9450.1993.tb01119.x

- Baker K. S., Piriyapunyaporn T., Cunnington R. (2012). Neural activity in readiness for incidental and explicitly timed actions. Neuropsychologia 50, 715–722. 10.1016/j.neuropsychologia.2011.12.026

- Barnes T. D., Kubota Y., Hu D., Jin D. Z., Graybiel A. M. (2005). Activity of striatal neurons reflects dynamic encoding and recoding of procedural memories. Nature 437, 1158–1161. 10.1038/nature04053

- Barrós-Loscertales A., González J., Pulvermüller F., Ventura-Campos N., Bustamante J. C., Costumero V., et al. (2012). Reading salt activates gustatory brain regions: fMRI evidence for semantic grounding in a novel sensory modality. Cereb. Cortex 22, 2554–2563. 10.1093/cercor/bhr324

- Barsalou L. W. (1999). Perceptual symbol systems. Behav. Brain Sci. 22, 577–609. discussion: 610–560. 10.1017/s0140525x99002149

- Barsalou L. W. (2008). Grounded cognition. Annu. Rev. Psychol. 59, 617–645. 10.1146/annurev.psych.59.103006.093639

- Berent I., Brem A. K., Zhao X., Seligson E., Pan H., Epstein J., et al. (2015). Role of the motor system in language knowledge. Proc. Natl. Acad. Sci. U.S.A. 112, 1983–1988. 10.1073/pnas.1416851112

- Bernardis P., Gentilucci M. (2006). Speech and gesture share the same communication system. Neuropsychologia 44, 178–190. 10.1016/j.neuropsychologia.2005.05.007

- Blanke O. (2012). Multisensory brain mechanisms of bodily self-consciousness. Nat. Rev. Neurosci. 13, 556–571. 10.1038/nrn3292

- Blumenfeld R. S., Ranganath C. (2007). Prefrontal Cortex and long-term memory encoding: an integrative review of findings from neuropsychology and neuroimaging. Neuroscientist 13, 280–291. 10.1177/1073858407299290

- Botvinick M. M., Braver T. S., Barch D. M., Carter C. S., Cohen J. D. (2001). Conflict monitoring and cognitive control. Psychol. Rev. 108, 624–652. 10.1037/0033-295X.108.3.624

- Boulenger V., Hauk O., Pulvermüller F. (2009). Grasping ideas with the motor system: semantic somatotopy in idiom comprehension. Cereb. Cortex 19, 1905–1914. 10.1093/cercor/bhn217

- Boulenger V., Shtyrov Y., Pulvermüller F. (2012). When do you grasp the idea? MEG evidence for instantaneous idiom understanding. Neuroimage 59, 3502–3513. 10.1016/j.neuroimage.2011.11.011

- Cabeza R., Moscovitch M. (2013). Memory systems, processing modes, and components: functional neuroimaging evidence. Perspect. Psychol. Sci. 8, 49–55. 10.1177/1745691612469033

- Cappa S. F., Pulvermüller F. (2012). Cortex special issue: language and the motor system. Cortex 48, 785–787. 10.1016/j.cortex.2012.04.010

- Carota F., Moseley R., Pulvermüller F. (2012). Body-part-specific representations of semantic noun categories. J. Cogn. Neurosci. 24, 1492–1509. 10.1162/jocn_a_00219

- Cohen R. L. (1981). On the generality of some memory laws. Scand. J. Psychol. 22, 267–281. 10.1111/j.1467-9450.1981.tb00402.x

- Cole M. W., Schneider W. (2007). The cognitive control network: integrated cortical regions with dissociable functions. Neuroimage 37, 343–360. 10.1016/j.neuroimage.2007.03.071

- Craik F. I., Tulving E. (1975). Depth of processing and the retention of words in episodic memory. J. Exp. Psychol. 104, 268–294. 10.1037/0096-3445.104.3.268

- Craik F. I. M., Lockhart R. S. (1972). Levels of processing - framework for memory research. J. Verbal Learning Verbal Behav. 11, 671–684. 10.1016/S0022-5371(72)80001-X

- D'Angelo E. (2014). The organization of plasticity in the cerebellar cortex: from synapses to control. Prog. Brain Res. 210, 31–58. 10.1016/B978-0-444-63356-9.00002-9. Available online at: http://www.sciencedirect.com/science/bookseries/00796123

- Danion J. M., Meulemans T., Kauffmann-Muller F., Vermaat H. (2001). Intact implicit learning in schizophrenia. Am. J. Psychiatry 158, 944–948. 10.1176/appi.ajp.158.6.944

- Daprati E., Nico D., Saimpont A., Franck N., Sirigu A. (2005). Memory and action: an experimental study on normal subjects and schizophrenic patients. Neuropsychologia 43, 281–293. 10.1016/j.neuropsychologia.2004.11.014

- D'Ausilio A., Pulvermüller F., Salmas P., Bufalari I., Begliomini C., Fadiga L. (2009). The motor somatotopy of speech perception. Curr. Biol. 19, 381–385. 10.1016/j.cub.2009.01.017

- Davis M. H., Gaskell M. G. (2009). A complementary systems account of word learning: neural and behavioural evidence. Philos. Trans. R. Soc. B Biol. Sci. 364, 3773–3800. 10.1098/rstb.2009.0111

- Dijkstra K., Post L. (2015). Mechanisms of embodiment. Front. Psychol. 6:1525. 10.3389/fpsyg.2015.01525

- Douglas R. J., Martin K. A. C. (2004). Neuronal circuits of the neocortex. Annu. Rev. Neurosci. 27, 419–451. 10.1146/annurev.neuro.27.070203.144152

- Engelkamp J. (1980). Some studies on the internal structure of propositions. Psychol. Res. 41, 355–371. 10.1007/BF00308880

- Engelkamp J. (2001). Action memory - a system-oriented approach, in Memory for Action - A Distinct Form of Episodic Memory? eds Zimmer H., Cohen R. L., Guynn M. J., Engelkamp J., Kormi-Nouri R., Foley M. A. (Oxford: Oxford University Press), 49–96.

- Engelkamp J., Krumnacker H. (1980). Imaginale und motorische Prozesse beim Behalten verbalen Materials. Z. Exp. Angewandte Psychol. 27, 511–533.

- Engelkamp J., Zimmer H. D. (1984). Motor program information as a separable memory unit. Psychol. Res. 46, 283–299. 10.1007/BF00308889

- Engelkamp J., Zimmer H. D. (1985). Motor programs and their relation to semantic memory. German J. Psychol. 9, 239–254.

- Engelkamp J., Zimmer H. D. (1994). Human Memory: A Multimodal Approach. Seattle, WA: Hogrefe & Huber.

- Eschen A., Freeman J., Dietrich T., Martin M., Ellis J., Martin E., et al. (2007). Motor brain regions are involved in the encoding of delayed intentions: a fMRI study. Int. J. Psychophysiol. 64, 259–268. 10.1016/j.ijpsycho.2006.09.005

- Esterman M., Liu G., Okabe H., Reagan A., Thai M., Degutis J. (2015). Frontal eye field involvement in sustaining visual attention: evidence from transcranial magnetic stimulation. Neuroimage 111, 542–548. 10.1016/j.neuroimage.2015.01.044

- Fischer M. H., Zwaan R. A. (2008). Embodied language: a review of the role of the motor system in language comprehension. Q. J. Exp. Psychol. 61, 825–850. 10.1080/17470210701623605

- Fodor J. A. (1976). The Language of Thought. Hassocks: Harvester Press.

- Fodor J. A. (1987). Psychosemantics: The Problem of Meaning in the Philosophy of Mind. Cambridge, MA: MIT Press.

- Friederici A. D. (2011). The brain basis of language processing: from structure to function. Physiol. Rev. 91, 1357–1392. 10.1152/physrev.00006.2011

- Friston K., Chu C., Mourão-Miranda J., Hulme O., Rees G., Penny W., et al. (2008). Bayesian decoding of brain images. Neuroimage 39, 181–205. 10.1016/j.neuroimage.2007.08.013

- Friston K. J., Fletcher P., Josephs O., Holmes A., Rugg M. D., Turner R. (1998). Event-related fMRI: characterizing differential responses. Neuroimage 7, 30–40. 10.1006/nimg.1997.0306

- Frith C. D. (1987). The positive and negative symptoms of schizophrenia reflect impairments in the perception and initiation of action. Psychol. Med. 17, 631–648. 10.1017/S0033291700025873

- Frith C. D., Blakemore S. J., Wolpert D. M. (2000). Explaining the symptoms of schizophrenia: abnormalities in the awareness of action. Brain Res. Rev. 31, 357–363. 10.1016/S0165-0173(99)00052-1

- Gallese V., Lakoff G. (2005). The Brain's concepts: the role of the Sensory-motor system in conceptual knowledge. Cogn. Neuropsychol. 22, 455–479. 10.1080/02643290442000310 [DOI] [PubMed] [Google Scholar]

- Galli G. (2014). What makes deeply encoded items memorable? Insights into the levels of processing framework from neuroimaging and neuromodulation. Front. Psychiatry 5:61. 10.3389/fpsyt.2014.00061 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Glenberg A. M., Gallese V. (2012). Action-based language: a theory of language acquisition, comprehension, and production. Cortex 48, 905–922. 10.1016/j.cortex.2011.04.010

- Goldin-Meadow S. (1999). The role of gesture in communication and thinking. Trends Cogn. Sci. 3, 419–429. 10.1016/S1364-6613(99)01397-2

- González J., Barros-Loscertales A., Pulvermüller F., Meseguer V., Sanjuán A., Belloch V., et al. (2006). Reading cinnamon activates olfactory brain regions. Neuroimage 32, 906–912. 10.1016/j.neuroimage.2006.03.037

- Graham S., Santos D., Francis-Brophy E. (2014). Teacher beliefs about listening in a foreign language. Teach. Teach. Educ. 40, 44–60. 10.1016/j.tate.2014.01.007

- Hauk O., Johnsrude I., Pulvermüller F. (2004). Somatotopic representation of action words in human motor and premotor cortex. Neuron 41, 301–307. 10.1016/S0896-6273(03)00838-9

- Hebb D. O. (1949). The Organization of Behavior. A Neuropsychological Theory. New York, NY; London: John Wiley & Sons; Chapman & Hall.

- Heim S., Alter K., Ischebeck A. K., Amunts K., Eickhoff S. B., Mohlberg H., et al. (2005). The role of the left Brodmann's areas 44 and 45 in reading words and pseudowords. Cogn. Brain Res. 25, 982–993. 10.1016/j.cogbrainres.2005.09.022

- Holle H., Gunter T. C. (2007). The role of iconic gestures in speech disambiguation: ERP evidence. J. Cogn. Neurosci. 19, 1175–1192. 10.1162/jocn.2007.19.7.1175

- Holle H., Gunter T. C., Rüschemeyer S.-A., Hennenlotter A., Iacoboni M. (2008). Neural correlates of the processing of co-speech gestures. Neuroimage 39, 2010–2024. 10.1016/j.neuroimage.2007.10.055

- Huijbers W., Pennartz C. M. A., Rubin D. C., Daselaar S. M. (2011). Imagery and retrieval of auditory and visual information: neural correlates of successful and unsuccessful performance. Neuropsychologia 49, 1730–1740. 10.1016/j.neuropsychologia.2011.02.051

- Huijgen J., Samson S. (2015). The hippocampus: a central node in a large-scale brain network for memory. Rev. Neurol. (Paris). 171, 204–216. 10.1016/j.neurol.2015.01.557

- Hunt R. R., Worthen J. B. (2006). Distinctiveness and Memory. New York, NY; Oxford: Oxford University Press.

- Jirak D., Menz M. M., Buccino G., Borghi A. M., Binkofski F. (2010). Grasping language – a short story on embodiment. Conscious. Cogn. 19, 711–720. 10.1016/j.concog.2010.06.020

- Karlsson T., Bäckman L., Herlitz A., Nilsson L. G., Winblad B., Österlind P. O. (1989). Memory improvement at different stages of Alzheimer's disease. Neuropsychologia 27, 737–742. 10.1016/0028-3932(89)90119-X

- Kelly S. D., McDevitt T., Esch M. (2009). Brief training with co-speech gesture lends a hand to word learning in a foreign language. Lang. Cogn. Process. 24, 313–334. 10.1080/01690960802365567

- Kelly S. D., Özyürek A., Maris E. (2010). Two sides of the same coin: speech and gesture mutually interact to enhance comprehension. Psychol. Sci. 21, 260–267. 10.1177/0956797609357327

- Kemmerer D., Castillo J. G., Talavage T., Patterson S., Wiley C. (2008). Neuroanatomical distribution of five semantic components of verbs: evidence from fMRI. Brain Lang. 107, 16–43. 10.1016/j.bandl.2007.09.003

- Klimesch W. (1994). The Structure of Long-Term Memory: A Connectivity Model of Semantic Processing. Hillsdale, NJ: Erlbaum.

- Knopf M. (1992). Gedächtnis für Handlungen. Funktionsweise und Entwicklung. Ph.D. thesis, University of Heidelberg, Heidelberg.

- Knudsen E. I. (2007). Fundamental components of attention. Annu. Rev. Neurosci. 30, 57–78. 10.1146/annurev.neuro.30.051606.094256

- Kormi-Nouri R. (1995). The nature of memory for action events: an episodic integration view. Eur. J. Cogn. Psychol. 7, 337–363. 10.1080/09541449508403103

- Krönke K.-M., Mueller K., Friederici A. D., Obrig H. (2013). Learning by doing? The effect of gestures on implicit retrieval of newly acquired words. Cortex 49, 2553–2568. 10.1016/j.cortex.2012.11.016

- Lacey S., Stilla R., Sathian K. (2012). Metaphorically feeling: comprehending textural metaphors activates somatosensory cortex. Brain Lang. 120, 416–421. 10.1016/j.bandl.2011.12.016

- Lambert S., Sampaio E., Mauss Y., Scheiber C. (2004). Blindness and brain plasticity: contribution of mental imagery? An fMRI study. Cogn. Brain Res. 20, 1–11. 10.1016/j.cogbrainres.2003.12.012

- Lashley K. S. (1950). In search of the engram, in Society of Experimental Biology Symposium No. 4; Physiological Mechanisms in Animal Behaviour, ed Pumphrey R. J. (Cambridge: Cambridge University Press), 454–482.

- Lohmann G., Muller K., Bosch V., Mentzel H., Hessler S., Chen L., et al. (2001). LIPSIA–a new software system for the evaluation of functional magnetic resonance images of the human brain. Comput. Med. Imaging Graph. 25, 449–457. 10.1016/S0895-6111(01)00008-8

- Lotze M., Halsband U. (2006). Motor imagery. J. Physiol. Paris 99, 386–395. 10.1016/j.jphysparis.2006.03.012

- Macedonia M. (2003). Sensorimotor Enhancing of Verbal Memory Through “Voice Movement Icons” During Encoding of Foreign Language (German: Voice Movement Icons. Sensomotorische Encodierungsstrategie zur Steigerung der Quantitativen und Qualitativen Lerneffizienz bei Fremdsprachen). Ph.D. thesis, University of Salzburg.

- Macedonia M. (2014). Bringing back the body into the mind: gestures enhance word learning in foreign language. Front. Psychol. 5:1467. 10.3389/fpsyg.2014.01467

- Macedonia M., Klimesch W. (2014). Long-term effects of gestures on memory for foreign language words trained in the classroom. Mind Brain Educ. 8, 74–88. 10.1111/mbe.12047

- Macedonia M., Knösche T. R. (2011). Body in mind: how gestures empower foreign language learning. Mind Brain Educ. 5, 196–211. 10.1111/j.1751-228X.2011.01129.x

- Macedonia M., Müller K., Friederici A. D. (2011). The impact of iconic gestures on foreign language word learning and its neural substrate. Hum. Brain Mapp. 32, 982–998. 10.1002/hbm.21084

- Masumoto K., Yamaguchi M., Sutani K., Tsuneto S., Fujita A., Tonoike M. (2006). Reactivation of physical motor information in the memory of action events. Brain Res. 1101, 102–109. 10.1016/j.brainres.2006.05.033

- Mavilidi M.-F., Okely A. D., Chandler P., Cliff D. P., Paas F. (2015). Effects of integrated physical exercises and gestures on preschool children's foreign language vocabulary learning. Educ. Psychol. Rev. 27, 413–426. 10.1007/s10648-015-9337-z

- Mayer K. M., Yildiz I. B., Macedonia M., Von Kriegstein K. (2015). Visual and motor cortices differentially support the translation of foreign language words. Curr. Biol. 25, 530–535. 10.1016/j.cub.2014.11.068

- Mayes A., Montaldi D., Migo E. (2007). Associative memory and the medial temporal lobes. Trends Cogn. Sci. 11, 126–135. 10.1016/j.tics.2006.12.003

- McCandliss B. D., Cohen L., Dehaene S. (2003). The visual word form area: expertise for reading in the fusiform gyrus. Trends Cogn. Sci. 7, 293–299. 10.1016/S1364-6613(03)00134-7

- McClelland J. L. (1985). Distributed models of cognitive processes. Applications to learning and memory. Ann. N.Y. Acad. Sci. 444, 1–9. 10.1111/j.1749-6632.1985.tb37576.x

- McClelland J. L., Rogers T. T. (2003). The parallel distributed processing approach to semantic cognition. Nat. Rev. Neurosci. 4, 310–322. 10.1038/nrn1076

- McClelland J. L., Rumelhart D. E. (1985). Distributed memory and the representation of general and specific information. J. Exp. Psychol. Gen. 114, 159–197. 10.1037/0096-3445.114.2.159

- McClelland J. L., Rumelhart D. E., Hinton G. E. (1986). The appeal of parallel distributed processing, in Parallel Distributed Processing: Explorations in the Microstructure of Cognition, eds Rumelhart D. E., McClelland J. L., the PDP Research Group (Cambridge, MA: MIT Press), 3–44.

- McNeill D. (1992). Hand and Mind: What Gestures Reveal About Thought. Chicago, IL; London: University of Chicago Press.

- Mimura M., Komatsu S., Kato M., Yashimasu H., Wakamatsu N., Kashima H. (1998). Memory for subject performed tasks in patients with Korsakoff syndrome. Cortex 34, 297–303. 10.1016/S0010-9452(08)70757-3

- Mitchell A. S., Sherman S. M., Sommer M. A., Mair R. G., Vertes R. P., Chudasama Y. (2014). Advances in understanding mechanisms of thalamic relays in cognition and behavior. J. Neurosci. 34, 15340–15346. 10.1523/JNEUROSCI.3289-14.2014

- Morris J. P., Pelphrey K. A., McCarthy G. (2007). Face processing without awareness in the right fusiform gyrus. Neuropsychologia 45, 3087–3091. 10.1016/j.neuropsychologia.2007.05.020

- Moseley R., Carota F., Hauk O., Mohr B., Pulvermüller F. (2012). A role for the motor system in binding abstract emotional meaning. Cereb. Cortex 22, 1634–1647. 10.1093/cercor/bhr238

- Moseley R. L., Pulvermüller F. (2014). Nouns, verbs, objects, actions, and abstractions: local fMRI activity indexes semantics, not lexical categories. Brain Lang. 132, 28–42. 10.1016/j.bandl.2014.03.001

- Muzzio I. A., Kentros C., Kandel E. (2009a). What is remembered? Role of attention on the encoding and retrieval of hippocampal representations. J. Physiol. 587, 2837–2854. 10.1113/jphysiol.2009.172445

- Muzzio I. A., Levita L., Kulkarni J., Monaco J., Kentros C., Stead M., et al. (2009b). Attention enhances the retrieval and stability of visuospatial and olfactory representations in the dorsal hippocampus. PLoS Biol. 7:e1000140. 10.1371/journal.pbio.1000140

- Nadel L., Hardt O. (2011). Update on memory systems and processes. Neuropsychopharmacology 36, 251–273. 10.1038/npp.2010.169

- Nikolin S., Loo C. K., Bai S., Dokos S., Martin D. M. (2015). Focalised stimulation using high definition transcranial direct current stimulation (HD-tDCS) to investigate declarative verbal learning and memory functioning. Neuroimage 117, 11–19. 10.1016/j.neuroimage.2015.05.019

- Norris D. G. (2000). Reduced power multislice MDEFT imaging. J. Magn. Reson. Imaging 11, 445–451.

- Nyberg L. (2002). Levels of processing: a view from functional brain imaging. Memory 10, 345–348. 10.1080/09658210244000171

- Nyberg L., Petersson K. M., Nilsson L. G., Sandblom J., Aberg C., Ingvar M. (2001). Reactivation of motor brain areas during explicit memory for actions. Neuroimage 14, 521–528. 10.1006/nimg.2001.0801

- Ofen N., Kao Y. C., Sokol-Hessner P., Kim H., Whitfield-Gabrieli S., Gabrieli J. D. (2007). Development of the declarative memory system in the human brain. Nat. Neurosci. 10, 1198–1205. 10.1038/nn1950

- Oldfield R. C., Wingfield A. (1965). Response latencies in naming objects. Q. J. Exp. Psychol. 17, 273–281. 10.1080/17470216508416445

- Paivio A. (1969). Mental imagery in associative learning and memory. Psychol. Rev. 76, 241–263. 10.1037/h0027272

- Paivio A. (1971). Imagery and Verbal Processes. New York, NY: Holt.

- Park C.-H., Chang W. H., Lee M., Kwon G. H., Kim L., Kim S. T., et al. (2015). Which motor cortical region best predicts imagined movement? Neuroimage 113, 101–110. 10.1016/j.neuroimage.2015.03.033

- Pereira A., Ellis J., Freeman J. (2012). Is prospective memory enhanced by cue-action semantic relatedness and enactment at encoding? Conscious. Cogn. 21, 1257–1266. 10.1016/j.concog.2012.04.012

- Pleger B., Villringer A. (2013). The human somatosensory system: from perception to decision making. Progr. Neurobiol. 103, 76–97. 10.1016/j.pneurobio.2012.10.002

- Pulvermüller F. (1999). Words in the brain's language. Behav. Brain Sci. 22, 253–279; discussion 280–336. 10.1017/S0140525X9900182X

- Pulvermüller F. (2001). Brain reflections of words and their meaning. Trends Cogn. Sci. 5, 517–524. 10.1016/S1364-6613(00)01803-9

- Pulvermüller F. (2002). The Neuroscience of Language: On Brain Circuits of Words and Serial Order. Cambridge; New York, NY: Cambridge University Press.

- Pulvermüller F. (2005). Brain mechanisms linking language and action. Nat. Rev. Neurosci. 6, 576–582. 10.1038/nrn1706

- Pulvermüller F. (2013). Semantic embodiment, disembodiment or misembodiment? In search of meaning in modules and neuron circuits. Brain Lang. 127, 86–103. 10.1016/j.bandl.2013.05.015

- Pulvermüller F., Fadiga L. (2010). Active perception: sensorimotor circuits as a cortical basis for language. Nat. Rev. Neurosci. 11, 351–360. 10.1038/nrn2811