Abstract

Affective prosody is the aspect of speech that conveys a speaker’s emotional state through modulations in various vocal parameters, most prominently pitch. While a large body of research implicates the cingulate vocalization area in controlling affective vocalizations in monkeys, no systematic test of functional homology for this area has yet been reported in humans. In this study, we used functional magnetic resonance imaging to compare brain activation when participants produced affective vocalizations in the form of exclamations with activation when they produced non-affective vocalizations with similar pitch contours. We also examined the perception of affective vocalizations by having participants judge either the emotions conveyed by recorded affective vocalizations or the pitch contours of the same vocalizations. Production of affective vocalizations and of matched pitch contours activated a highly overlapping set of brain areas, including the larynx-phonation area of the primary motor cortex and a region of the anterior cingulate cortex that is consistent with the macro-anatomical position of the cingulate vocalization area. This overlap contradicts the dominant view that these areas form two distinct vocal pathways with dissociable functions. Instead, we propose that these brain areas are nodes in a single vocal network, with an emphasis on pitch modulation as a vehicle for affective expression.

Keywords: affective prosody, fMRI, larynx-phonation area, cingulate cortex, voice, pitch

Introduction

Affective prosody, or tone of voice, conveys a speaker’s emotional state through modulations in the acoustics of the voice, particularly vocal pitch (Banse and Scherer, 1996). Listeners can reliably recognize a broad range of vocally expressed emotions, even when the spoken words are unrelated to the emotion (Fairbanks and Pronovost, 1938; Belin et al., 2008; Simon-Thomas et al., 2009) or when recordings are filtered to remove segmental content (Lieberman and Michaels, 1962). Unlike the words that make up the segmental aspect of speech, affective vocalizations can be recognized across languages (Laukka et al., 2013), between cultures that have had only minimal historical contact (Sauter et al., 2010), albeit with some cultural variation (Scherer and Wallbott, 1994), and even across species (Faragó et al., 2014). Indeed, hearing-impaired infants produce affective vocalizations that are acoustically similar to those of normal-hearing infants (Scheiner et al., 2004, 2006).

While affective prosody usually unfolds across the course of an utterance, it can also be expressed in short bursts. Schröder (2003) proposed that such bursts lie along a continuum from ‘raw affect bursts’ to ‘verbal interjections’. Raw affect bursts, such as bouts of laughter or crying, are most similar to the innate affective calls of non-human animals. Verbal interjections, such as exclamations, can instead consist of affectively intoned single syllables (Belyk and Brown, 2014c). Exclamations are particularly well suited to neuroimaging studies of affective prosody because (i) they are brief enough to accommodate event-related designs in functional magnetic resonance imaging (fMRI) experiments, (ii) they do not require syntactic processing and (iii) many of them are non-words, which reduces the need for semantic processing.

Compared with the vocal systems of animals studied in neural models of vocalization, such as the squirrel monkey (Saimiri sciureus) and rhesus monkey (Macaca mulatta), relatively little is known about how affective vocalizations are produced and controlled in humans. The lower motor neurons that innervate the musculature of the larynx—which is the organ of vocalization—are contained in the nucleus ambiguus of the medulla. Two motor pathways project to the nucleus ambiguus to drive vocalization. The first is a pathway from the cingulate vocalization area (Jürgens and Pratt, 1979b) in the anterior cingulate cortex (ACC) that projects to the periaqueductal gray (PAG) of the midbrain, which itself projects to the nucleus ambiguus (Jürgens and Pratt, 1979b). In monkeys, stimulation of the PAG elicits species-specific affective vocalizations (Jürgens and Ploog, 1970), and lesioning of the PAG abolishes affective vocal responses both to environmental stimuli and to stimulation of cortical areas that project to the PAG (Jürgens and Pratt, 1979a,b). One such area is the ACC, stimulation of which also elicits species-specific affective vocalizations (Jürgens and Pratt, 1979b). Lesions to the ACC prevent the initiation of operantly conditioned vocalizations (Aitken, 1981; Sutton et al., 1974, 1981), but have no effect on spontaneous vocalizations in affective contexts that would normally elicit these responses (Jürgens and Pratt, 1979). Hence, the ACC in monkeys is believed to initiate volitional, but not reflexive, species-specific affective vocalizations via projections to the PAG (Jürgens, 2002, 2009).

A second pathway to the nucleus ambiguus originates in the cortical larynx area of the primary motor cortex of the precentral gyrus. In monkeys, electrical stimulation of the cortical larynx area elicits contraction of the intrinsic and extrinsic laryngeal muscles (Hast et al., 1974) but does not elicit vocalization (Jürgens, 1974). Furthermore, bilateral lesions to this region have little effect on vocal behavior (Jürgens et al., 1982; Kirzinger and Jürgens, 1982). Hence, the monkey larynx area, while clearly a source of innervation of the laryngeal muscles, does not appear to drive vocal behavior. This is a striking species difference in the cortical control of the larynx, and one that is supported by neuroanatomy. In monkeys, the cortical larynx area is restricted to the premotor cortex (Hast et al., 1974), whereas in the human brain it extends into primary motor cortex as well (Loucks et al., 2007; Brown et al., 2008; Simonyan et al., 2009; Belyk and Brown, 2014b). The human larynx area is activated by volitional movement of the laryngeal muscles, phonation and forced expiration, leading us to refer to it as the ‘larynx phonation area’ (LPA; Brown et al., 2008). Unlike the monkey cortical larynx area, the human LPA produces vocalization when electrically stimulated (Penfield and Boldrey, 1937), and lesions to it may cause mutism (Jürgens et al., 1982) and other speech disorders. Furthermore, the human LPA projects monosynaptically to the laryngeal lower motor neurons in the nucleus ambiguus (Kuypers, 1958; Iwatsubo et al., 1990), whereas the monkey larynx area projects to the nucleus ambiguus only indirectly, via synapses in the reticular formation (Simonyan and Jürgens, 2003; Jürgens and Ehrenreich, 2007).

One prominent model of vocal-motor control (Myers, 1976; Owren et al., 2011; Ackermann et al., 2014) dichotomizes innate-affective and learned non-affective vocal behaviors, attributing the production of affective vocalizations to the cingulate pathway and non-affective vocalizations to the primary motor cortex. Evidence from the monkey vocal system provides strong support for the involvement of the cingulate vocal pathway in driving species-specific affective vocalizations (Jürgens and Pratt, 1979; Sutton et al., 1981; Kirzinger and Jürgens, 1982). However, monkeys are not vocal learners (Petkov and Jarvis, 2012); innate, species-specific affective vocalizations constitute their entire vocal repertoire. In addition, the cortical larynx area of the monkey appears to lack vocal functionality altogether. This makes the monkey a poor model of the vocal-motor control of learned vocalizations. Humans clearly possess both of these vocal-motor pathways, but it is unclear whether these pathways operate reciprocally, as implied in this model, or if they operate in concert to produce the complex vocal repertoire of humans, which includes not only affective vocalizations but speech and song as well.

Given the anatomical and functional reorganization of laryngeal motor control in humans, it is unclear whether the LPA plays as limited a role in human affective vocalization as the cortical larynx area does in monkeys. Indeed, much of human affective vocal expression occurs in parallel with speech. Barrett et al. (2004) collected fMRI data while participants performed a speech task before and after inducing sad affect. Both self-reported sad affect and reduced fundamental-frequency range—a vocal cue of sadness—were correlated with the degree of activation of the ACC, as predicted from knowledge of the monkey vocal system. Similarly, Wattendorf et al. (2013), in an fMRI study of laughter, showed that both the ACC and PAG were active when laughter was voluntary, whereas inhibition of laughter activated the ACC without the PAG, and induced laughter activated the PAG without the ACC. These data are consistent with the roles of the PAG in producing affective vocalizations and the ACC in exerting volitional control over the PAG. However, activation of the LPA also correlated with reduced fundamental-frequency range during induced sad affect (Barrett et al., 2004), and the LPA was active during both volitional and induced laughter (Wattendorf et al., 2013). These findings suggest that, in humans, control of the larynx during affective vocalization may result from an integration of the cingulate and primary-motor vocalization pathways.

We previously hypothesized (Belyk and Brown, 2014a) that a portion of the inferior frontal gyrus, the IFG pars orbitalis (IFGorb), which is reliably activated when perceiving affective vocalizations, may also be involved in planning affective vocalizations. This region has anatomical connections with limbic, auditory and premotor areas (Price, 1999; Anwander et al., 2007; Turken and Dronkers, 2011; Yeterian et al., 2012), similar to adjacent Broca’s area, which sits at the interface of speech perception and production (Watkins and Paus, 2004). None of the handful of studies that have examined the production of affective vocalizations in humans has reported activation in the IFGorb (Barrett et al., 2004; Aziz-Zadeh et al., 2010; Wattendorf et al., 2013). However, given the relatively low power of whole-brain analyses in human brain imaging, we sought to perform a sensitive test of IFGorb activation during affective vocal production using a region-of-interest (ROI) analysis.

Given the paucity of research on affective vocalizing in humans, we conducted a highly controlled study that compared the production of exclamations with acoustically matched nonsense syllables that were similar in vocal dynamics, particularly pitch profiles, but that differed in affective content. In doing so, we aimed to test two hypotheses. First, to test for a potential dissociation between the cingulate and LPA vocal pathways, we examined whether these cortical regions were differentially activated during affective and non-affective vocal production. The putative dissociation between vocal motor pathways for affective and non-affective vocalizations predicts that the ACC and PAG are more active when producing affective vocalizations and that the LPA is more active when producing non-affective vocalizations. Such a dissociation further predicts functional connectivity between the ACC and PAG, but not between the LPA and either of these structures. Second, given the acknowledged role of the IFGorb in perceiving affective vocalizations, we tested whether this area is also active when producing them. To localize this area, we examined patterns of brain activation when participants made judgments about the emotions expressed in vocal recordings, compared with judgments about the pitch contours of the same recordings. We then performed ROI analyses to test the hypothesis that the IFGorb is also activated above baseline when producing affective vocalizations. Finally, because most of the literature on affective prosody focuses on perception alone, we compared the brain network for affective vocalizing with that for affective perception. Considering the general propensity of sensorimotor systems to activate during both action execution and observation (Cross et al., 2006; Aziz-Zadeh et al., 2010; Menenti et al., 2011), we performed exploratory analyses to search for exclusivity and overlap between our vocal production and perception experiments.

Methods

Stimulus recording procedure

A male professional actor was instructed to vocalize the monosyllables eep, ep, oap and oop (/ip/, /ɛp/, /ɔp/ and /up/ in the International Phonetic Alphabet) in each of four affective prosodies: happiness, sadness, pleasure and disgust. He was instructed to produce happy and sad vocalizations with a descending pitch contour, and pleasure and disgust vocalizations with an arched pitch contour. Four renditions of each syllable/emotion combination were recorded, resulting in a total of 64 recordings. The amplitudes of the recordings were equalized in Praat (Praat: doing phonetics by computer; www.fon.hum.uva.nl/praat/) to ensure that all recordings were equally audible in the MRI environment.
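The recording set is a fully crossed design of four syllables × four emotions × four renditions. A minimal Python enumeration, using only the labels given above (the names `SYLLABLES`, `EMOTION_CONTOUR` and `build_recording_list` are illustrative, not from the study), makes the count of 64 and the emotion-to-contour assignment explicit.

```python
from itertools import product

# Labels taken from the text; each emotion's contour follows the actor's
# instructions (happy/sad: descending, pleasure/disgust: arched).
SYLLABLES = ["eep", "ep", "oap", "oop"]
EMOTION_CONTOUR = {
    "happiness": "descending",
    "sadness": "descending",
    "pleasure": "arched",
    "disgust": "arched",
}
N_RENDITIONS = 4


def build_recording_list():
    """One (syllable, emotion, contour, rendition) tuple per recording."""
    return [
        (syllable, emotion, EMOTION_CONTOUR[emotion], rendition)
        for syllable, emotion, rendition in product(
            SYLLABLES, EMOTION_CONTOUR, range(1, N_RENDITIONS + 1)
        )
    ]


recordings = build_recording_list()
print(len(recordings))  # 64
```

Half of the recordings (32) carry each contour, which is what later allows the same stimuli to be used for a binary contour judgment.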

Stimulus validation procedure

Twelve participants were presented with each of the 64 recordings and performed a four-alternative forced-choice discrimination task, identifying the emotion being expressed from among ‘happiness’, ‘sadness’, ‘pleasure’ and ‘disgust’. The recordings were then ranked by discrimination accuracy. For each emotion, recordings in the top two quartiles were included in the perceptual tasks of the fMRI experiment, while recordings in the third quartile were reserved for training before the scanning session. The remaining recordings were discarded. All subjects provided written informed consent before participation in the study, which was approved by the McMaster Research Ethics Board.
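The per-emotion quartile split can be sketched as follows. This is a minimal illustration that assumes accuracy is the proportion of the 12 raters who chose the intended emotion and that ties keep their input order; the text specifies neither, the function name `split_by_quartile` is hypothetical, and the accuracies in the example are random placeholders, not the study's data.

```python
import random
from collections import defaultdict


def split_by_quartile(accuracy):
    """Split recordings into fMRI, training and discarded sets, per emotion.

    accuracy maps (syllable, emotion, rendition) -> proportion correct.
    Ties keep their input order because sorted() is stable (an assumption).
    """
    by_emotion = defaultdict(list)
    for key, acc in accuracy.items():
        by_emotion[key[1]].append((key, acc))

    selected, training, discarded = [], [], []
    for items in by_emotion.values():
        ranked = sorted(items, key=lambda item: item[1], reverse=True)
        q = len(ranked) // 4  # recordings per quartile (4 of 16 here)
        selected += [key for key, _ in ranked[: 2 * q]]        # quartiles 1-2
        training += [key for key, _ in ranked[2 * q : 3 * q]]  # quartile 3
        discarded += [key for key, _ in ranked[3 * q :]]       # quartile 4
    return selected, training, discarded


# Placeholder accuracies (k of 12 raters correct), NOT the study's data.
random.seed(0)
accuracy = {
    (s, e, r): random.randint(0, 12) / 12
    for s in ["eep", "ep", "oap", "oop"]
    for e in ["happiness", "sadness", "pleasure", "disgust"]
    for r in range(1, 5)
}
selected, training, discarded = split_by_quartile(accuracy)
print(len(selected), len(training), len(discarded))  # 32 16 16
```

With 16 recordings per emotion, the top two quartiles yield 8 per emotion, which accounts for the 32 recordings used in the perception tasks.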

Imaging experiment procedure

Sixteen participants with no history of neurological or psychiatric illness were recruited for the fMRI experiment. Data from two participants were excluded from the group analysis because of excessive head motion, resulting in a final sample of 14 (10 female, 13 right-handed). All subjects provided written informed consent before participation in the study, which was approved by the Hamilton Integrated Research Ethics Board.

Participants performed four tasks in four separate functional scans (one task per scan) in counterbalanced order, each scan lasting 7 min 40.8 s. Each scan consisted of 32 trials of 14.4 s (nine functional volumes) each. For the two vocal production tasks (see below), each trial consisted of a 1.6-s visual cue that prompted participants to vocalize, followed by 12.8 s of fixation on a crosshair. For the two perception tasks (see below), each trial consisted of a 1.6-s recorded vocalization followed by 12.8 s of silence, with visual fixation on a crosshair throughout. All tasks used a slow event-related design.
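To make the trial timing concrete, the following sketch builds the event onsets for one scan and a predicted BOLD regressor. It assumes an SPM-style double-gamma hemodynamic response function (peak shape 6, undershoot shape 16, undershoot ratio 1/6); Brain Voyager's default HRF may differ, and all function names are hypothetical.

```python
import math

TR = 1.6          # s, repetition time
TRIAL_LEN = 14.4  # s, nine volumes per trial
N_TRIALS = 32
EVENT_LEN = 1.6   # s, visual cue or recorded vocalization


def trial_onsets():
    """Onset (s) of the 1.6-s event at the start of each trial."""
    return [i * TRIAL_LEN for i in range(N_TRIALS)]


def double_gamma_hrf(t):
    """SPM-style canonical HRF (assumed; not necessarily Brain Voyager's)."""
    if t <= 0:
        return 0.0
    peak = t ** 5 * math.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * math.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0


def predicted_response(n_vols, dt=0.1):
    """Boxcar of events convolved with the HRF, sampled once per TR."""
    n_fine = int(round(n_vols * TR / dt))
    event_steps = int(round(EVENT_LEN / dt))
    box = [0.0] * n_fine
    for onset in trial_onsets():
        start = int(round(onset / dt))
        for k in range(start, min(start + event_steps, n_fine)):
            box[k] = 1.0
    kernel = [double_gamma_hrf(j * dt) for j in range(int(round(32 / dt)))]
    conv = [0.0] * n_fine
    for i, b in enumerate(box):
        if b:  # add a scaled copy of the kernel at each "on" sample
            for j, h in enumerate(kernel):
                if i + j < n_fine:
                    conv[i + j] += h * dt
    return conv[:: int(round(TR / dt))]


scan_len = N_TRIALS * TRIAL_LEN                                   # 460.8 s
regressor = predicted_response(n_vols=int(round(scan_len / TR)))  # 288 samples
```

Within each 14.4-s trial the predicted response peaks a few volumes after the event, which is the rationale for a slow design: each trial's response can be estimated with little overlap from the next.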

Affective vocal production

Participants were visually cued with a monosyllabic exclamation word, and were instructed to produce the exclamation with the appropriate prosody as expressively as possible. The exclamation words were drawn from a previous study in which participants expressively produced these tokens on command (Belyk and Brown, 2014c). The exclamation words were ‘Oooh!’, ‘Mmm!’, ‘Eww!’, ‘Yuck!’, ‘Yay!’, ‘Good!’, ‘No!’ and ‘Damn!’. Participants were trained on a day prior to the scanning session to perform the task while lying still and without making facial expressions or head movements.

Pitch-contour production

Participants produced monosyllables with either descending or arched pitch contours. The goal was to create a vocal task that controlled for the acoustic properties of the exclamations but lacked their affective character. On each trial, participants were visually cued with one of the monosyllables ‘eep’, ‘ep’, ‘oap’ or ‘oop’, together with a pitch-contour representation in the form of either a downward-sloping arrow or an arch-shaped arrow. Participants thus produced eight distinct vocalizations (four syllables × two contours), matching the number of exclamation words in the affective vocal-production condition as well as the number of vocal recordings in the perceptual tasks. These two contours were selected to match those of the vocal recordings in the perception tasks, which were themselves either descending or arched. Participants were trained on a day prior to the scanning session to perform this task while lying still and without making facial expressions or head movements.

Affect perception

Participants listened to the 32 recordings selected from the stimulus-validation experiment (see above) using MRI-compatible, noise-cancelling headphones. Unlike the stimulus-validation study—where participants had to perform an emotion-identification task—the participants in the fMRI version of the task performed a binary valence-discrimination task. Participants were instructed to discriminate between recordings that expressed an emotion of either positive valence (i.e. happiness or pleasure) or negative valence (i.e. sadness or disgust). The assignment of valence to response buttons (index finger vs middle finger) was counterbalanced across participants. In addition, the order of stimuli within each condition was randomized.

Pitch-contour perception

Participants listened to the same 32 recordings as in the affect perception task but this time performed a binary contour-discrimination task. Participants were instructed to discriminate between recordings that contained either a descending contour (i.e. happiness and sadness) or an arched contour (i.e. pleasure and disgust).
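Because the four emotions were assigned to the two contours in a crossed fashion, each recording carries a valence label and a contour label that vary independently. A small lookup, with hypothetical names, shows how the correct answer in each perception task follows from the intended emotion.

```python
# Each emotion's (valence, contour) labels, as defined in the two
# perception tasks.
LABELS = {
    "happiness": ("positive", "descending"),
    "sadness": ("negative", "descending"),
    "pleasure": ("positive", "arched"),
    "disgust": ("negative", "arched"),
}


def correct_response(emotion, task):
    """Correct binary answer for a recording of `emotion` in `task`."""
    valence, contour = LABELS[emotion]
    if task == "affect":
        return valence
    if task == "contour":
        return contour
    raise ValueError(f"unknown task: {task!r}")


# All four valence/contour combinations occur; with equal numbers of
# recordings per emotion, one label gives no information about the other.
assert len(set(LABELS.values())) == 4
print(correct_response("disgust", "affect"), correct_response("disgust", "contour"))
# negative arched
```

This crossing is what lets the affect and contour judgments be compared on identical acoustic input.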

Magnetic resonance imaging

Magnetic resonance images were acquired on a 3-Tesla GE Signa Excite scanner. Functional images sensitive to the blood oxygen level-dependent (BOLD) signal were collected with a gradient-echo sequence (repetition time = 1600 ms, echo time = 33 ms, flip angle = 90°, 28 slices, slice thickness = 4 mm, gap = 0 mm, in-plane resolution = 3.75 mm × 3.75 mm, matrix = 64 × 64, field of view = 240 mm). A total of 293 volumes was collected per scan. The first five (dummy) volumes of each scan were discarded, leaving 288 volumes per scan.
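The acquisition parameters are internally consistent, as a few lines of arithmetic show: in-plane resolution is the field of view divided by the matrix size, and the retained volumes match both the trial structure (32 trials × 9 volumes) and the stated scan duration. The variable names are illustrative.

```python
TR_S = 1.6
FOV_MM, MATRIX = 240, 64
N_SLICES, SLICE_MM, GAP_MM = 28, 4, 0
N_ACQUIRED, N_DUMMY = 293, 5
N_TRIALS, VOLS_PER_TRIAL = 32, 9

in_plane_mm = FOV_MM / MATRIX                 # 3.75 mm
coverage_mm = N_SLICES * (SLICE_MM + GAP_MM)  # 112 mm along the slice axis
n_kept = N_ACQUIRED - N_DUMMY                 # volumes entering the analysis
kept_s = n_kept * TR_S                        # analysed duration in seconds

assert n_kept == N_TRIALS * VOLS_PER_TRIAL    # 288 = 32 trials x 9 volumes
print(in_plane_mm, coverage_mm, n_kept, round(kept_s, 1))  # 3.75 112 288 460.8
```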

Image analysis

Functional scans were analyzed with Brain Voyager 2.4, supplemented with NeuroElf (neuroelf.net). Each functional scan was spatially smoothed with a Gaussian kernel of 4 mm full width at half maximum and high-pass filtered with a cut-off frequency of 0.0078125 Hz (1/128 Hz, i.e. a period of 128 s). Each volume was realigned to the first volume of the scan to correct for head motion. Motion correction yielded, for each volume, six parameters describing translation along and rotation around the three cardinal axes; these parameters were included as nuisance regressors in all subsequent analyses. Low-level contrasts were thresholded at a false discovery rate (FDR) of P < 0.005 with a cluster threshold of k > 24 voxels. The cluster threshold was relaxed to k > 5 in the midbrain to permit the detection of small nuclei in that region. High-level contrasts were thresholded at P < 0.01 uncorrected; to control the rate of false positives, a Monte Carlo simulation using the AlphaSim algorithm selected a cluster-size threshold for each high-level contrast that maintained a family-wise error rate of P < 0.05.
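For readers unfamiliar with FDR thresholding, a minimal sketch of the Benjamini-Hochberg step-up procedure follows. It is a generic illustration, not Brain Voyager's code: the text does not state which FDR variant the software applies, the cluster-size criterion (k > 24) would be applied separately to the surviving voxels, and the p-values are hypothetical.

```python
def bh_threshold(p_values, q=0.005):
    """Benjamini-Hochberg step-up procedure.

    Returns the largest p-value p_(k) satisfying p_(k) <= q * k / m, or
    None if no test survives. Tests with p <= the returned value are
    declared significant.
    """
    m = len(p_values)
    threshold = None
    for k, p in enumerate(sorted(p_values), start=1):
        if p <= q * k / m:
            threshold = p  # keep updating: BH uses the LARGEST qualifying rank
    return threshold


p = [0.0001, 0.0004, 0.002, 0.01, 0.3, 0.8]  # hypothetical voxel p-values
cut = bh_threshold(p, q=0.005)
print(cut, sum(pi <= cut for pi in p))        # 0.002 3
```

Because the criterion scales with rank, the effective uncorrected cut-off adapts to how many voxels carry signal, unlike a fixed uncorrected threshold.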

ROI analyses were conducted to determine (i) whether the cingulate and primary-motor vocal pathways are functionally dissociated, and (ii) whether activation of the IFG pars orbitalis is specific to making judgments about affective prosody or whether this region also participates in encoding affective prosody during vocal production. Five-millimeter cubic ROIs were placed around functionally defined maxima in the left ACC and PAG, bilateral LPA and right IFGorb. Only unilateral ROIs were analyzed for the ACC, PAG and IFGorb because whole-brain analyses failed to localize equivalent contralateral regions. Regression coefficients from each ROI were entered into linear mixed models in R (R Core Development Team, 2014) with the crossed factors Task (production vs perception) and Content (affect vs pitch contour).
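The logic of the Task × Content analysis can be illustrated with a simpler, swapped-in technique: per-participant contrast scores tested with one-sample t tests, which for a 2 × 2 within-subject design give the same tests as a repeated-measures ANOVA (F = t²). This is not the linear mixed model the authors fitted in R; the dictionary layout and function names are assumptions, and the example data are simulated.

```python
import random
from math import sqrt
from statistics import mean, stdev

CELLS = [("production", "affect"), ("production", "contour"),
         ("perception", "affect"), ("perception", "contour")]


def one_sample_t(values):
    """t statistic (df = n - 1) for H0: mean(values) == 0."""
    return mean(values) / (stdev(values) / sqrt(len(values)))


def task_by_content(betas):
    """Main effects and interaction from per-participant contrast scores.

    betas: one dict per participant mapping each (task, content) cell to
    that participant's ROI regression coefficient.
    """
    task, content, inter = [], [], []
    for b in betas:
        pa, pc = b[("production", "affect")], b[("production", "contour")]
        ra, rc = b[("perception", "affect")], b[("perception", "contour")]
        task.append((pa + pc) / 2 - (ra + rc) / 2)     # production - perception
        content.append((pa + ra) / 2 - (pc + rc) / 2)  # affect - contour
        inter.append((pa - pc) - (ra - rc))            # difference of differences
    return {"task": one_sample_t(task),
            "content": one_sample_t(content),
            "interaction": one_sample_t(inter)}


# Simulated data for 14 participants: production > perception, no content
# effect; the per-participant offset cancels in every contrast.
random.seed(1)
betas = []
for _ in range(14):
    offset = random.gauss(0, 1)
    betas.append({cell: offset + (1.0 if cell[0] == "production" else 0.3)
                  + random.gauss(0, 0.2) for cell in CELLS})
result = task_by_content(betas)
```

A mixed model with a random intercept per participant handles the same offset explicitly; the contrast approach removes it by differencing.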

Functional connectivity analyses were conducted to test (i) whether activity in the ACC and PAG is differentially correlated when producing affective vocalizations vs matched pitch contours, and (ii) whether activity in the ACC and LPA is correlated, anti-correlated or independent. Time courses were extracted from each ROI for all participants. First-level regression models were computed in R in which the time course of the PAG or LPA was predicted by the time course of the ACC, with the experimental design convolved with the hemodynamic response function as a covariate. Because of this covariate, the analysis removes the shared influence of the experimental design on the time course of each region, precluding the interpretation that these regions are merely co-activated by the task. Regression coefficients from the first-level analysis were then tested in a second-level analysis using Welch’s t tests for samples of unequal variance, to determine whether they differed significantly from zero and/or between the two production tasks.
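The connectivity logic, regressing one ROI's time course on another's while partialling out the task regressor, can be sketched in Python with NumPy. This is an illustration under stated assumptions rather than the authors' R code: the sinusoidal `design` is a stand-in for an HRF-convolved task regressor, the simulated coupling of 0.5 is arbitrary, and only the between-task Welch comparison is shown.

```python
from math import sqrt
from statistics import mean, variance

import numpy as np


def first_level_coupling(target, seed, design):
    """Seed coefficient from target ~ 1 + seed + design (ordinary least squares).

    Including the task regressor removes variance that both ROIs share only
    because they respond to the same events.
    """
    X = np.column_stack([np.ones_like(seed), seed, design])
    coef, *_ = np.linalg.lstsq(X, target, rcond=None)
    return float(coef[1])


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Simulated data: 14 participants x 2 production tasks, 288 volumes each.
rng = np.random.default_rng(0)
n_vol = 288
design = np.sin(np.linspace(0, 64 * np.pi, n_vol))  # stand-in task regressor


def simulate(coupling):
    seed = design + rng.normal(0, 1, n_vol)  # e.g. ACC
    target = coupling * seed + 0.8 * design + rng.normal(0, 0.5, n_vol)  # e.g. PAG
    return first_level_coupling(target, seed, design)


affect = [simulate(0.5) for _ in range(14)]
contour = [simulate(0.5) for _ in range(14)]
t, df = welch_t(affect, contour)
```

Without the `design` column, the seed coefficient would also absorb the shared task-evoked response and overstate coupling.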

Results

Behavioral performance

During debriefing, all participants reported successfully producing the target affective vocalizations and pitch contours on cue. All participants performed above chance on both the valence-discrimination task (mean 93%, SD 6%) and the pitch-contour discrimination task (mean 87%, SD 7%). Discrimination accuracy was nonetheless higher for the valence task than for the contour task, t(10) = 3.84, P < 0.05.
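The text does not state how above-chance performance was assessed. One common option, shown here purely as an assumption, is an exact one-sided binomial test per participant over the 32 trials of a binary task (chance = 0.5); the function name is hypothetical.

```python
from math import comb


def binomial_p_above_chance(n_correct, n_trials, chance=0.5):
    """One-sided exact binomial p-value, P(X >= n_correct) under chance."""
    return sum(comb(n_trials, k) * chance ** k * (1 - chance) ** (n_trials - k)
               for k in range(n_correct, n_trials + 1))


# Smallest score out of 32 binary trials that beats chance at alpha = 0.05.
min_score = next(k for k in range(33) if binomial_p_above_chance(k, 32) < 0.05)
print(min_score)  # 22
```

Under this criterion, a participant needs at least 22 of 32 correct (about 69%), well below the group means reported above.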

Production

Overlapping vocal production activations

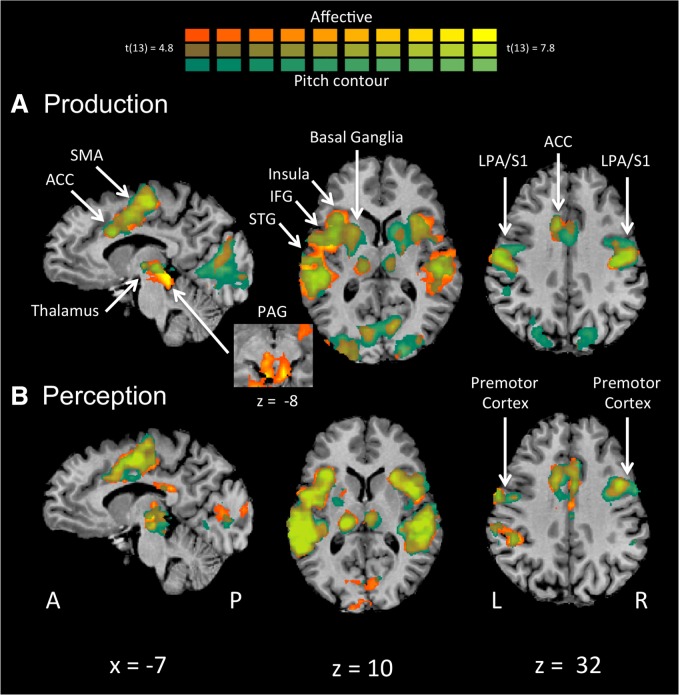

The affective production task and the acoustically matched pitch-contour production task activated a highly overlapping set of brain regions when compared with rest. Together, these regions constitute a basic vocal network (see Figure 1A, Table 1). Both tasks activated the bilateral larynx phonation area and adjacent orofacial motor cortex (BA 4), extending into the premotor cortex (lateral BA 6) and primary somatosensory cortex (BA 3/1/2). Bilateral activation was also observed in the SMA (medial BA 6) extending into the ACC (BA 24), the anterior insula, thalamus, primary auditory cortex (BA 41) and visual cortex (spanning BA 17, 18 and 19, reflecting the presence of the visual stimulus), together with the left STG (BA 22) and the right superior parietal lobule (SPL) and putamen.

Fig. 1.

Whole-brain analyses. (A) Affective vocal production (orange) and pitch-contour vocal production (green) vs rest reveal a remarkable degree of overlap across most of the vocal network, demonstrating little specificity for affective vocalization. (B) Affect perception (orange) and pitch-contour perception (green) vs rest again demonstrate strong overlap between conditions. ACC, anterior cingulate cortex; IFG, inferior frontal gyrus; LPA, larynx-phonation area; PAG, periaqueductal gray; SMA, supplementary motor area; STG, superior temporal gyrus; S1, primary somatosensory cortex.

Table 1.

Production

| Brain region | Affective production | | | | | Contour production | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | x | y | z | Voxels | t value | x | y | z | Voxels | t value |
| Frontal lobe | | | | | | | | | | |
| LPA/S1 (BA 4/6/3) | 39 | −16 | 34 | 570 | 11.5 | 54 | −13 | 43 | 49 | 8.7 |
| | −57 | −10 | 37 | 366 | 9.7 | −48 | −7 | 34 | 31 | 7.1 |
| SMA (BA 6) | 3 | −4 | 52 | 815 | 9.0 | | | | | |
| | −6 | −16 | 55 | 401 | 9.9 | −9 | −10 | 61 | 40 | 8.7 |
| Precentral gyrus (BA 6) | 24 | −16 | 52 | 48 | 7.0 | | | | | |
| | 54 | −7 | 28 | 855 | 11.6 | | | | | |
| ACC (BA 24) | −9 | 5 | 37 | 186 | 8.8 | | | | | |
| IFG/insula | 36 | 14 | 13 | 179 | 7.3 | 30 | 17 | 13 | 380 | 8.4 |
| | −30 | 14 | 19 | 472 | 9.5 | −33 | 20 | 13 | 29 | 6.9 |
| Parietal lobe | | | | | | | | | | |
| SPL (BA 7) | −18 | −34 | 61 | 32 | 7.5 | −60 | −16 | 28 | 659 | 9.2 |
| | 33 | −58 | 46 | | | 15 | −73 | 46 | 83 | 8.8 |
| | 24 | −58 | 46 | 64 | 6.6 | | | | | |
| | 18 | −70 | 55 | 30 | 6.2 | | | | | |
| | −36 | −61 | 46 | 143 | 7.3 | | | | | |
| Posterior cingulate (BA 23) | | | | | | −9 | −25 | 28 | 27 | 6.7 |
| Temporal lobe | | | | | | | | | | |
| Heschl's gyrus (BA 41) | 51 | −22 | 10 | 298 | 9.7 | 45 | −19 | 10 | 41 | 7.1 |
| Heschl's gyrus (BA 41) | −48 | −31 | 10 | 451 | 10.2 | −54 | −28 | 10 | 205 | 8.8 |
| PrhG (BA 19) | −18 | −49 | −2 | 298 | 9.2 | | | | | |
| STG (BA 22) | −51 | −13 | 10 | 25 | 8.0 | −54 | 8 | 4 | 402 | 7.9 |
| Occipital lobe | | | | | | | | | | |
| Lingual gyrus (BA 17) | −18 | −94 | −11 | 737 | 10.7 | −27 | −97 | −2 | 52 | 7.5 |
| Inferior occipital gyrus (BA 18) | −42 | −82 | −14 | 71 | 9.9 | −39 | −85 | −5 | 1681 | 10.4 |
| Inferior occipital gyrus (BA 19) | 42 | −79 | −5 | 128 | 8.0 | | | | | |
| Fusiform gyrus (BA 19) | 15 | −64 | 1 | 332 | 8.5 | 21 | −61 | −5 | 1710 | 9.9 |
| | −30 | −88 | 1 | 36 | 9.9 | −42 | −76 | −11 | 75 | 9.1 |
| Middle occipital gyrus (BA 18) | 18 | −91 | 19 | 99 | 6.4 | 21 | −91 | 13 | 124 | 8.4 |
| Lingual gyrus (BA 18) | −15 | −73 | 7 | 59 | 7.6 | −12 | −73 | 4 | 219 | 9.5 |
| Lingual gyrus (BA 19) | −12 | −85 | 25 | 86 | 7.6 | −24 | −79 | 28 | 203 | 8.3 |
| Cuneus (BA 18) | 24 | −94 | 1 | 113 | 8.3 | 24 | −79 | 22 | 101 | 6.9 |
| Subcortical | | | | | | | | | | |
| Thalamus | 9 | −25 | 1 | 318 | 10.1 | 6 | −16 | 4 | 129 | 6.6 |
| | −21 | −25 | 1 | 354 | 12.5 | −15 | −22 | −2 | 200 | 7.4 |
| Subthalamic nucleus | 15 | −13 | −2 | 23 | 9.0 | | | | | |
| Caudate nucleus | | | | | | 12 | 8 | 13 | 77 | 7.7 |
| Cerebellum | 9 | −55 | −14 | 291 | 8.5 | | | | | |
| Putamen | 18 | 8 | 4 | 95 | 7.8 | 21 | 8 | 13 | 226 | 7.5 |
| Globus pallidus | −24 | 5 | 4 | 99 | 7.7 | | | | | |
| Periaqueductal gray | 6 | −31 | −8 | 8 | 5.2 | | | | | |

Notes: Locations of peak voxels for producing affective and contour-based vocalizations. After each anatomical name in the brain region column, the Brodmann number for that region is listed. The columns labeled x, y and z contain the Talairach coordinates for the peak of each cluster reaching significance at FDR P < 0.005 with cluster threshold k > 24 (k > 5 in the midbrain). ACC, anterior cingulate cortex; IFG, inferior frontal gyrus; LPA, larynx-phonation area; PAG, periaqueductal gray; PrhG, parahippocampal gyrus; SMA, supplementary motor area; STG, superior temporal gyrus; SPL, superior parietal lobule; S1, primary somatosensory cortex.

Non-overlapping vocal production activations

Producing affective vocalizations additionally activated the left parahippocampal gyrus (BA 19) and globus pallidus, and the right subthalamic nucleus, cerebellum and PAG. Producing meaningless pitch contours additionally activated the right caudate nucleus and left posterior cingulate gyrus (BA 23).

Producing affective vs pitch contour vocalizations

Contrary to the predicted dissociation, there were no significant differences in brain activation between the production of affective vocalizations and matched pitch contours anywhere in the brain.

Perception

Overlapping perceptual activations

The two perceptual judgment tasks activated highly overlapping brain regions when each task was compared with rest. These regions included auditory, language and motor areas related to the perceptual judgment, as well as visual areas related to the response cue (see Figure 1B, Table 2). Both tasks activated the bilateral primary auditory cortex (BA 41), visual cortex (BAs 17 and 18), thalamus, anterior insula (BA 13) and inferior parietal lobule (IPL; BA 40); the right orofacial premotor cortex (BA 6), SMA (BA 6), cerebellum, claustrum and middle frontal gyrus (BA 9); and the left posterior cingulate (BA 23) and precentral and postcentral gyri (BA 4/3), corresponding to the hand area involved in the button-press responses. Both conditions also activated the same region of the ACC observed during the vocal production tasks, although with a slightly reduced magnitude.

Table 2.

Perception

| Brain regions | Affective perception | | | | | Contour perception | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | x | y | z | Voxels | t value | x | y | z | Voxels | t value |
| Frontal lobe | | | | | | | | | | |
| SMA (BA 6) | 6 | 2 | 46 | 1223 | 14.7 | 6 | 2 | 49 | 57 | 12.2 |
| IFG pars opercularis (BA 44) | 45 | 8 | 10 | 758 | 13.3 | | | | | |
| | | | | | | −45 | 5 | 16 | 511 | 12.6 |
| IFG pars triangularis (BA 45) | −48 | 5 | 4 | 449 | 10.1 | | | | | |
| Hand motor cortex (BA 4) | 51 | −10 | 46 | 81 | 8.8 | | | | | |
| | −51 | −13 | 52 | 61 | 10.8 | −54 | −13 | 52 | 61 | 10.5 |
| Premotor cortex (BA 6) | 39 | −10 | 49 | 94 | 7.6 | | | | | |
| | −57 | 2 | 28 | 63 | 9.7 | −9 | −7 | 49 | 899 | 12.3 |
| Anterior insula (BA 13) | −30 | 20 | 13 | 60 | 9.8 | −33 | 11 | 13 | 76 | 10.7 |
| Middle frontal gyrus (BA 9) | 45 | 8 | 34 | 147 | 9.1 | 45 | 5 | 31 | 222 | 12.0 |
| | 48 | 23 | 28 | 31 | 6.5 | | | | | |
| | −33 | 44 | 34 | 28 | 5.3 | | | | | |
| IFG pars orbitalis (BA 47) | 42 | 17 | −8 | 34 | 8.4 | | | | | |
| Temporal lobe | | | | | | | | | | |
| Heschl's gyrus (BA 41) | 48 | −28 | 10 | 805 | 11.6 | 57 | −22 | 13 | 821 | 11.5 |
| | −48 | −34 | 10 | 1205 | 13.4 | −54 | −28 | 13 | 1148 | 13.1 |
| pSTG (BA 22) | | | | | | 51 | −40 | 13 | 36 | 9.4 |
| | | | | | | −57 | −46 | 13 | 37 | 11.7 |
| ITG (BA 47) | −57 | −58 | −5 | 33 | 6.3 | | | | | |
| Parietal lobe | | | | | | | | | | |
| IPL (BA 40) | 48 | −31 | 40 | 44 | 6.5 | | | | | |
| | −33 | −37 | 49 | 959 | 14.6 | −60 | −34 | 31 | 26 | 9.3 |
| Postcentral gyrus (BA 3) | −30 | −28 | 64 | 1145 | 15.0 | | | | | |
| PCG (BA 23) | 6 | −43 | 25 | 72 | 7.5 | 6 | −31 | 28 | 97 | 9.3 |
| | −9 | −28 | 25 | 28 | 7.5 | | | | | |
| SPL (BA 7) | | | | | | −24 | −70 | 43 | 61 | 8.3 |
| Precuneus (BA 7) | −18 | −82 | 46 | 28 | 6.4 | | | | | |
| Occipital lobe | | | | | | | | | | |
| Cuneus (BA 18) | 6 | −94 | 7 | 107 | 7.0 | | | | | |
| | 0 | −91 | 16 | 29 | 6.4 | | | | | |
| Lingual gyrus (BA 17) | 6 | −85 | 1 | 97 | 6.9 | 6 | −73 | 4 | 155 | 7.4 |
| | −15 | −70 | 4 | 222 | 7.6 | −15 | −70 | 1 | 224 | 8.4 |
| Subcortical | | | | | | | | | | |
| Thalamus | 3 | −16 | 1 | 78 | 8.1 | 6 | −16 | 10 | 65 | 8.2 |
| | 6 | −4 | 1 | 26 | 7.7 | | | | | |
| | −18 | −22 | 10 | 396 | 10.6 | −15 | −22 | 13 | 546 | 11.9 |
| Cerebellum | 15 | −46 | −17 | 358 | 8.8 | 15 | −55 | −17 | 350 | 10.3 |
| | −18 | −64 | −26 | 81 | 8.2 | | | | | |
| | −27 | −61 | −26 | 49 | 7.7 | | | | | |
| Claustrum | 33 | −1 | −2 | 34 | 7.4 | 30 | 11 | 13 | 823 | 14.9 |
| Putamen | −21 | 5 | 7 | 41 | 5.5 | | | | | |

Notes: Locations of peak voxels for the affect perception and pitch-contour perception tasks. After each anatomical name in the brain region column, the Brodmann number for that region is listed. The columns labeled x, y and z contain the Talairach coordinates for the peak of each cluster reaching significance at FDR P < 0.005 with cluster threshold k > 24. IFG, inferior frontal gyrus; IPL, inferior parietal lobule; ITG, inferior temporal gyrus; SMA, supplementary motor area; pSTG, posterior superior temporal gyrus; PCG, posterior cingulate gyrus; SPL, superior parietal lobule.

Non-overlapping perception activations

Making judgments about the emotions expressed in the vocal stimuli further activated the temporal pole, right IFG pars opercularis (BA 44) and IPL (BA 40), as well as the left IFG pars triangularis (BA 45), posterior cingulate gyrus (BA 23), putamen and cerebellum. Making judgments about the pitch contour of the vocal stimuli further activated the bilateral pSTG (BA 22), left IFG pars opercularis (BA 44), superior parietal lobule (SPL; BA 7) and SMA, as well as the right orofacial premotor cortex (BA 6).

Perceiving affective vs pitch contour vocalizations

Replicating previous studies, the contrast between discriminating the emotions expressed in the stimuli and discriminating their pitch contours revealed greater activation in the right anterior STG (temporal pole; BA 38) and in the left IFG pars triangularis (BA 45) and IPL (BA 40) (see Table 3).

Table 3.

Affective vs pitch-contour tasks

| Brain region (affect > contour perception) | x | y | z | Voxels | t value |
|---|---|---|---|---|---|
| Frontal lobe | | | | | |
| IFG pars triangularis (BA 45) | −46 | 25 | 2 | 43 | 4.2 |
| Temporal lobe | | | | | |
| Temporal pole (BA 38) | 42 | 17 | −14 | 30 | 5.2 |
| Parietal lobe | | | | | |
| Inferior parietal lobule (BA 40) | −42 | −37 | 55 | 23 | 5.0 |

Notes: Locations of peak voxels for the high-level contrast [affective perception > contour perception]. Family-wise error was maintained at P < 0.05 by combining an uncorrected threshold of P < 0.01 with a cluster threshold of k > 12, as selected by AlphaSim. No significant differences were observed for the contrast affect production > contour production. IFG, inferior frontal gyrus.
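AlphaSim (an AFNI program) chooses a cluster-size threshold by Monte Carlo simulation: smoothed Gaussian noise is thresholded at the voxelwise P value, and the cluster size that chance alone rarely reaches is taken as the minimum extent. The sketch below illustrates that idea only; it is not AFNI's implementation. The volume shape, smoothness (FWHM in voxels), one-sided z cut-off of 2.326 for P < 0.01 and face (6-neighbour) connectivity are all assumptions, and every function name is hypothetical.

```python
import numpy as np
from collections import deque

# Face-sharing neighbours (6-connectivity); an assumed clustering rule
OFFSETS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def gaussian_kernel(fwhm_vox):
    """Normalized 1-D Gaussian kernel for a smoothness given as FWHM in voxels."""
    sigma = fwhm_vox / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def smooth(vol, fwhm_vox):
    """Separable Gaussian smoothing along each axis with zero-padded edges.
    Each axis must be at least as long as the kernel for mode='same' to keep the shape."""
    k = gaussian_kernel(fwhm_vox)
    for axis in range(vol.ndim):
        vol = np.apply_along_axis(lambda v: np.convolve(v, k, mode="same"), axis, vol)
    return vol


def max_cluster_size(mask):
    """Size of the largest 6-connected cluster of True voxels in a 3-D boolean mask."""
    seen = np.zeros(mask.shape, dtype=bool)
    best = 0
    for start in zip(*np.nonzero(mask)):
        if seen[start]:
            continue
        seen[start] = True
        queue, size = deque([start]), 0
        while queue:  # breadth-first flood fill of one cluster
            x, y, z = queue.popleft()
            size += 1
            for dx, dy, dz in OFFSETS:
                n = (x + dx, y + dy, z + dz)
                inside = all(0 <= n[i] < mask.shape[i] for i in range(3))
                if inside and mask[n] and not seen[n]:
                    seen[n] = True
                    queue.append(n)
        best = max(best, size)
    return best


def simulate_max_clusters(shape=(16, 16, 16), fwhm_vox=2.0, z_thresh=2.326,
                          n_iter=100, seed=0):
    """Null distribution of the largest suprathreshold cluster in smoothed noise."""
    rng = np.random.default_rng(seed)
    maxima = np.empty(n_iter, dtype=int)
    for i in range(n_iter):
        noise = smooth(rng.standard_normal(shape), fwhm_vox)
        # Global standardization approximately restores unit variance after smoothing
        noise = (noise - noise.mean()) / noise.std()
        maxima[i] = max_cluster_size(noise > z_thresh)
    return maxima


def cluster_threshold(maxima, alpha=0.05):
    """Smallest cluster size k with null probability P(max cluster >= k) <= alpha."""
    k = 1
    while np.mean(maxima >= k) > alpha:
        k += 1
    return k
```

In practice the simulated volume, mask and smoothness would be matched to the real data, and many more iterations (thousands) would be run than the defaults here.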

Production vs perception

To compare the brain areas involved in production vs perception irrespective of affective content, we computed the conjunction of contrasts [affect production > affect perception] ∩ [contour production > contour perception]. Examined individually, these two contrasts revealed nearly identical activation profiles. At an FDR threshold of P < 0.05, only the bilateral LPA (extending into adjacent orofacial motor and somatosensory cortex), the basal ganglia and the visual cortex (reflecting the visual stimulus) were significantly more active during vocal production than during the perceptual tasks. That so few areas differed indicates that the rest of the vocal network was engaged by both the production and the perception tasks.
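Requiring a voxel to pass both contrasts is equivalent to thresholding the voxelwise minimum of the two statistic maps. The minimal sketch below assumes a hypothetical first-level column order for the four conditions and a single threshold applied to both maps; it is not the software pipeline used in the study.

```python
import numpy as np

# Hypothetical column order of the four condition regressors in a first-level GLM
CONDITIONS = ["affect_prod", "contour_prod", "affect_perc", "contour_perc"]


def contrast_vector(plus, minus):
    """Weights for the contrast [plus > minus] over the assumed CONDITIONS order."""
    c = np.zeros(len(CONDITIONS))
    c[CONDITIONS.index(plus)] = 1.0
    c[CONDITIONS.index(minus)] = -1.0
    return c


def conjunction_mask(stat_a, stat_b, threshold):
    """True where both statistic maps exceed threshold (minimum-statistic conjunction)."""
    return np.minimum(np.asarray(stat_a, float), np.asarray(stat_b, float)) > threshold


c_affect = contrast_vector("affect_prod", "affect_perc")     # [affect production > affect perception]
c_contour = contrast_vector("contour_prod", "contour_perc")  # [contour production > contour perception]
```

Each contrast vector would be applied to the GLM betas to produce a statistic map, and `conjunction_mask` would then keep only voxels significant in both.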

Region-of-interest analyses

Five regions of interest were defined around the functionally localized left ACC (Talairach coordinates −9, 5, 37), left PAG (−6, −31, −8), left (−52, −17, 33) and right (50, −11, 30) LPA/orofacial motor cortex, and right IFG pars orbitalis (42, 17, −8). A 2 × 2 Task (production vs perception) × Content (affect vs contour) analysis of variance was conducted in each ROI. A significant main effect of task was observed for each ROI, with no effect of content and no task-by-content interaction (see Figure 2).
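When both factors have two levels and every participant contributes to all four cells, each effect of a repeated-measures ANOVA has one numerator degree of freedom, and its F statistic equals the squared one-sample t of a per-subject contrast score. The sketch below assumes such a fully within-subject design and a hypothetical array layout; the exact model used in the study may differ.

```python
import numpy as np


def rm_anova_2x2(betas):
    """Fully within-subject 2 x 2 ANOVA on ROI betas.

    betas: array of shape (n_subjects, 2, 2) indexed [subject, task, content],
    with task 0 = production, 1 = perception and content 0 = affect, 1 = contour.
    Returns {effect: (F, df_effect, df_error)}.
    """
    b = np.asarray(betas, dtype=float)
    n = b.shape[0]
    # One contrast score per subject for each 1-df effect
    scores = {
        "task": b[:, 0, :].mean(axis=1) - b[:, 1, :].mean(axis=1),
        "content": b[:, :, 0].mean(axis=1) - b[:, :, 1].mean(axis=1),
        "task_x_content": (b[:, 0, 0] - b[:, 0, 1]) - (b[:, 1, 0] - b[:, 1, 1]),
    }
    results = {}
    for effect, d in scores.items():
        # For a 1-df within-subject effect, F equals the squared one-sample t of the scores
        f_value = n * d.mean() ** 2 / d.var(ddof=1)
        results[effect] = (float(f_value), 1, n - 1)
    return results
```

A P value for each effect could then be obtained from the F(1, n − 1) distribution, for example with `scipy.stats.f.sf(F, 1, n - 1)`.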

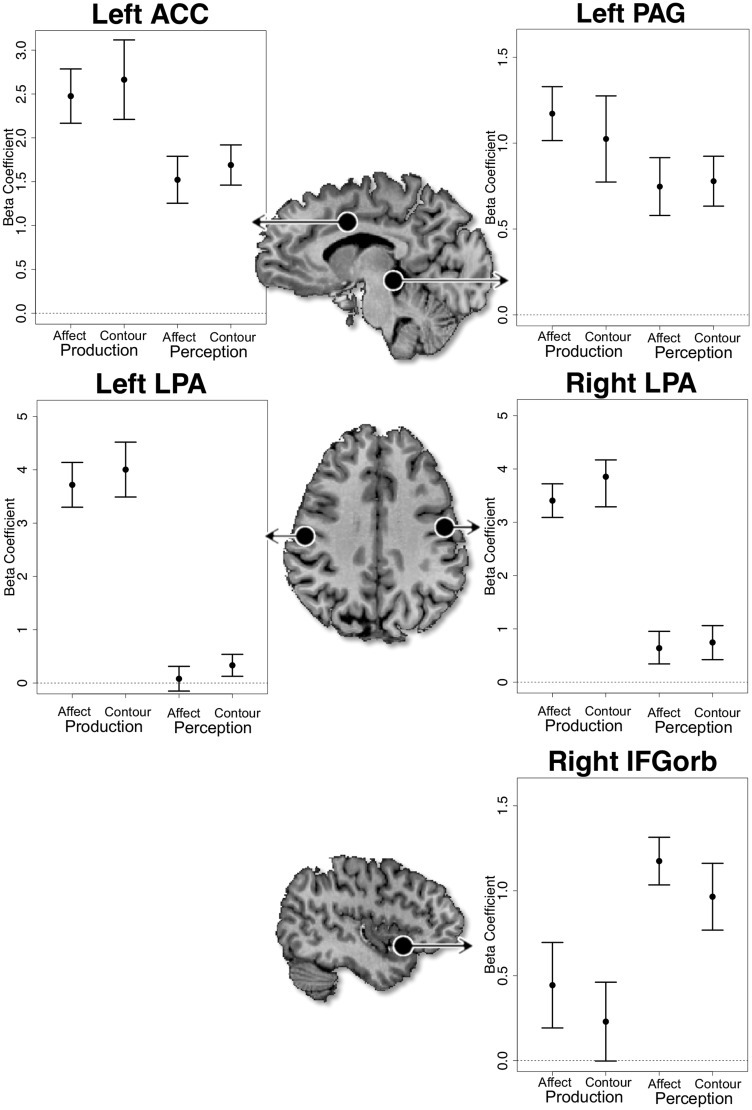

Fig. 2.

Region-of-interest (ROI) analyses. ROIs in the ACC, PAG, LPA and IFG pars orbitalis are indicated by black circles on the axial and midsagittal slices. Mean beta values are plotted for each task and each ROI. Error bars mark one standard error above and below the mean. In each area, there are strong differences between the level of activation for the production and perception tasks, but no difference between the affective and pitch-contour conditions, nor are there any statistical interactions. ACC, anterior cingulate cortex; IFG, inferior frontal gyrus; LPA, larynx-phonation area; PAG, periaqueductal gray.

In the left ACC, activation was greater for the production tasks than for the perception tasks, F(1,39) = 14.4, P < 0.05. Neither the main effect of content, F(1,39) = 0.5, P = 0.48, nor the task-by-content interaction, F(1,39) = 0.014, P = 0.97, was significant. Similarly, activation in the PAG was greater for the production tasks than for the perception tasks, F(1,39) = 6.5, P < 0.05. Neither the main effect of content, F(1,39) = 0.2, P = 0.66, nor the task-by-content interaction, F(1,39) = 0.46, P = 0.5, was significant. Because the PAG is relatively small and a 5-mm cubic ROI may extend beyond it to include other midbrain nuclei, we repeated this analysis with 3-mm and 1-mm cubic ROIs. The results were similar to those reported above, indicating that this analysis is robust to ROI size (see Supplementary Table 2).
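The ROI-size check amounts to averaging each beta map within cubes of decreasing edge length around the same centre. The sketch below is illustrative only: it assumes a hypothetical 4 × 4 voxel-to-millimetre affine for converting stereotaxic coordinates to voxel indices and isotropic voxels (1 mm by default), neither of which is specified in the text.

```python
import numpy as np


def mm_to_voxel(coord_mm, affine):
    """Nearest voxel index (i, j, k) for a stereotaxic coordinate, given a 4 x 4 voxel-to-mm affine."""
    ijk = np.linalg.inv(affine) @ np.append(np.asarray(coord_mm, dtype=float), 1.0)
    return tuple(int(round(v)) for v in ijk[:3])


def cube_roi_mean(volume, center_vox, edge_mm, voxel_mm=1.0):
    """Mean value inside a cube centred on center_vox.

    The cube spans 2 * half + 1 voxels per side, where half = floor(edge_mm / voxel_mm / 2);
    on a 1-mm grid, edges of 5, 3 and 1 mm give 5, 3 and 1 voxels.
    """
    half = int((edge_mm / voxel_mm) // 2)  # voxels on each side of the centre
    region = tuple(slice(max(c - half, 0), c + half + 1) for c in center_vox)
    return float(np.asarray(volume, dtype=float)[region].mean())
```

For the PAG, one would convert (−6, −31, −8) with `mm_to_voxel` and call `cube_roi_mean` with `edge_mm` of 5, 3 and 1 on each subject's beta maps before rerunning the ANOVA.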

The left LPA was also more active for the production tasks, F(1,39) = 145, P < 0.05, with no effect of content, F(1,39) = 0.8, P = 0.38, and no interaction, F(1,39) ≈ 0, P = 0.96. The right LPA showed the same pattern: a significant main effect of task, F(1,39) = 113, P < 0.05, no effect of content, F(1,39) = 1, P = 0.32, and no interaction, F(1,39) = 0.4, P = 0.54.

The opposite pattern was observed in the right IFG pars orbitalis (IFGorb): activation was greater for the perception tasks than for the production tasks, F(1,39) = 18.4, P < 0.05. Neither the main effect of content, F(1,39) = 1.5, P = 0.22, nor the task-by-content interaction, F(1,39) = 0.0002, P = 0.99, was significant.

Functional connectivity

Activation of the ACC significantly predicted activation of the PAG, even after controlling for co-activation due to the experimental design, in both the affective, t(13) = 3.7, P < 0.05, and contour, t(13) = 2.4, P < 0.05, production conditions. This functional connectivity did not differ significantly between conditions, t(15.8) = 1.12, P = 0.27. Functional connectivity was also observed between the ACC and the left LPA in both tasks (affective: t(13) = 11.0, P < 0.05; contour: t(13) = 11.6, P < 0.05), with no difference between tasks, t(25.8) = −1.2, P = 0.26. The same pattern held for the ACC and the right LPA (affective: t(13) = 17.1, P < 0.05; contour: t(13) = 12.0, P < 0.05; affect vs contour: t(21.9) = −1, P = 0.31).
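One way to estimate connectivity "after controlling for co-activation due to the experimental design" is to regress the target time series on the seed time series together with the task regressors, keep each subject's seed slope, and test the slopes at the group level. The fractional degrees of freedom reported for the between-condition comparisons appear consistent with Welch's unequal-variance t-test. The sketch below assumes both of these; the inputs and function names are hypothetical, and the study's actual connectivity model may differ.

```python
import numpy as np


def seed_slope(target_ts, seed_ts, design):
    """OLS slope of the target on the seed time series, controlling for design regressors.

    design: array of shape (n_timepoints,) or (n_timepoints, k) holding task regressors.
    """
    X = np.column_stack([np.ones(len(seed_ts)), seed_ts, design])
    coef, *_ = np.linalg.lstsq(X, target_ts, rcond=None)
    return float(coef[1])  # coefficient on the seed column


def one_sample_t(x):
    """Group-level t (and df) testing whether per-subject slopes differ from zero."""
    x = np.asarray(x, dtype=float)
    t = x.mean() / (x.std(ddof=1) / np.sqrt(x.size))
    return float(t), x.size - 1


def welch_t(x, y):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    vx, vy = x.var(ddof=1) / x.size, y.var(ddof=1) / y.size
    t = (x.mean() - y.mean()) / np.sqrt(vx + vy)
    df = (vx + vy) ** 2 / (vx ** 2 / (x.size - 1) + vy ** 2 / (y.size - 1))
    return float(t), float(df)
```

With 14 slopes per condition, `one_sample_t` yields t(13), and `welch_t` yields degrees of freedom between 13 and 26 depending on how similar the two conditions' variances are.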

Discussion

The primary objective of this study was to test the presumed dissociation of affective and non-affective vocalizations as the domains of the cingulate and primary-motor vocal pathways, respectively. Participants vocalized either affectively expressive exclamations or monosyllables carrying meaningless, though acoustically matched, pitch contours. To localize brain regions involved in perceiving affective vocalizations (Witteman et al., 2012; Belyk and Brown, 2014a), we had participants listen to actor-produced affective vocalizations and discriminate either the valence of the emotion being expressed or the pitch contour.

Several analyses failed to support a functional dissociation between the two vocal-production pathways and additionally supported a model in which the primary process in producing affective vocalizations is the modulation of vocal parameters such as pitch: (i) vocal production activated a network of brain areas commonly reported in studies of vocalization (Brown et al., 2009), regardless of whether the vocalizations were affective or based solely on non-affective pitch contours; (ii) direct contrasts between the vocalization tasks failed to detect differences in activation anywhere in the brain; (iii) ROI analyses revealed that activity in both the cingulate vocalization pathway and the LPA did not differ between affective and non-affective vocalizing; (iv) we observed functional connectivity between the ACC and PAG, the two major components of the cingulate vocalization pathway, during both vocal conditions; and (v) the ACC was functionally connected with the LPA during both vocal conditions. These data suggest that the primary-motor and cingulate vocalization pathways may not be functionally dissociated, as previously suggested on the basis of non-human animal models. We further found that the IFG pars orbitalis does not participate in vocal production, contrary to our previous hypothesis (Belyk and Brown, 2014a).

Cingulate involvement in vocalization, affective or otherwise

While there is a paucity of research examining the production of affective vocalizations in humans, the studies that have been conducted, including this one, are unanimous in reporting coactivation of the cingulate and LPA pathways (Barrett et al., 2004; Aziz-Zadeh et al., 2010; Wattendorf et al., 2013). Barrett et al. (2004) observed that ACC activation correlated with vocal acoustics during expressive speech, but the same was true of the LPA. Wattendorf et al. (2013) observed that the LPA was activated during laughter, even when the laughter was spontaneous. We further observed that the LPA and ACC are functionally connected during both affective and non-affective vocalization. While one previous study reported negative functional connectivity between these regions during simple vocal tasks (Simonyan et al., 2009), the ACC was not itself activated in those tasks, which makes that result difficult to interpret.

While the cingulate vocalization pathway is undoubtedly essential for affective vocalization (Jürgens, 2009), evidence from this study suggests that it is not specific to that purpose. Region-of-interest analyses revealed that the ACC and PAG were both activated, and functionally connected, when participants vocalized, regardless of whether the vocalizations were affective. These findings are inconsistent with a specialization of these areas for affective vocalization and instead suggest that they are part of a broader vocal-motor system. Interestingly, the ACC was also active during both perceptual judgment tasks, much like the premotor cortex, suggesting a role in planning rather than executing vocalizations. Previous brain imaging studies of affective vocalization in humans strongly support the involvement of the ACC in producing affective vocalizations, but do not exclude its involvement in producing non-affective vocalizations (Barrett et al., 2004; Wattendorf et al., 2013). Indeed, the ACC is frequently activated during non-affective speech (Paus et al., 1993; Crosson et al., 1999) and singing (Brown et al., 2009). Hence, the ACC appears to participate in vocalization, affective or otherwise.

Pitch modulation as a common denominator

A common feature of our two vocal tasks is the modulation of vocal pitch, and pitch varies systematically across emotions. For example, happiness and disgust are both expressed with high-pitched vocalizations, while sadness and pleasure are expressed with low-pitched vocalizations (Belyk and Brown, 2014c). Pitch level as well as pitch variability, among other vocal acoustic parameters, can further distinguish these emotions. Pitch is among the most informative acoustic cues to affective vocal expression (Banse and Scherer, 1996; Goudbeek and Scherer, 2010). The emotions expressed by vocalizations that have been manipulated to remove all but the pitch information can be discriminated above chance (Lieberman and Michaels, 1962). Sensitivity to vocal pitch cues emerges early in development. Mothers use infant-directed speech, characterized by a raised vocal pitch (Fernald and Simon, 1984), to soothe infants, and children use pitch (among other cues) differentially to vary the form and intensity of their tantrums (Green et al., 2011).
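Pitch level and pitch variability are often summarized as the mean and standard deviation of a fundamental-frequency (F0) contour; expressing F0 in semitones relative to a reference makes variability comparable across speakers with different baseline pitch. The helper below is a hypothetical illustration: the 100 Hz reference and the coding of unvoiced frames as 0 or NaN are assumptions, and the cited studies may have measured these parameters differently.

```python
import numpy as np


def pitch_summary(f0_hz, ref_hz=100.0):
    """Pitch level (mean) and variability (SD) of an F0 contour, in semitones re ref_hz.

    Unvoiced frames are assumed to be coded as 0 or NaN and are dropped.
    """
    f0 = np.asarray(f0_hz, dtype=float)
    voiced = f0[np.isfinite(f0)]
    voiced = voiced[voiced > 0]
    semitones = 12.0 * np.log2(voiced / ref_hz)  # 12 semitones per doubling of F0
    return float(semitones.mean()), float(semitones.std(ddof=1))
```

On this scale an octave rise (for example 100 Hz to 200 Hz) is always 12 semitones, whatever the speaker's baseline.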

Our results indicate that the neural system for affective vocal expression is highly similar to that for pitch production. Aziz-Zadeh et al. (2010) observed that many brain areas, including the ACC, were active both when speakers vocally expressed an emotion and when they intoned a question; both forms of intonation involved dynamic pitch modulation, but only one conveyed emotion. A meta-analysis of brain imaging studies of singing and simple syllable production found activation of both the LPA and cingulate vocalization area (Brown et al., 2009). Schulz et al. (2005) observed activation of the ACC and PAG, and functional connectivity between them, during non-affective speech when it was voiced, but not when it was whispered.

Taken together, the findings of this study and the existing literature suggest that, in humans, the brain network activated by affective vocalizations may not be specific to expressing affective states, but instead may be part of the neural system for pitch modulation. The same applies to the perception of affective vocalizations, which overlapped the perception of pitch contours; both in turn overlapped the system for vocal production, with the exception of a few areas involved in initiating movements, such as the primary motor cortex and basal ganglia. This is consistent with the general property of sensorimotor systems that observing an action that one can perform activates many of the brain areas involved in executing the same action, whether it be body movements (Cross et al., 2006) or speech (Menenti et al., 2011). To our knowledge, only one other study (Aziz-Zadeh et al., 2010) has examined such a production-perception linkage in the context of prosody. Consistent with its findings, we observed overlapping activation throughout much of the vocal motor system during the production and perception of affective prosody.

The IFG pars orbitalis is specific to perception

We previously hypothesized that the IFGorb may be involved in planning the prosodic component of speech (Belyk and Brown, 2014a), in much the same way that Broca’s area participates in speech planning (Watkins and Paus, 2004). This study tested that hypothesis by localizing the IFGorb with a prosodic discrimination task and then testing whether it was also activated when producing affective vocalizations. Contrary to our hypothesis, ROI analyses revealed that the IFGorb was not activated by either production task and thus appears to be specific to perception.

Conclusion

Both the cingulate vocalization pathway and the LPA were activated during vocalization, regardless of whether the vocalizations expressed an emotion. This result contradicts the dominant view of these regions as separate pathways for affective and non-affective vocalization, respectively. Instead, it supports a view in which the neural system for affective vocalization is very similar, if not identical, to the neural system for pitch production, with pitch serving as a principal carrier of affective signals in the voice. We further tested the hypothesis that a key brain region involved in perceiving affective prosody in human speech, the IFG pars orbitalis, also participates in producing affective prosody. The results suggest that this region does not participate in vocal production, but instead is specific to perception.

Supplementary Material

Acknowledgements

We are grateful to Dr. Chris Aruffo for generating the vocal recordings.

Funding

This work was funded by a grant from the Natural Sciences and Engineering Research Council (NSERC) of Canada to S.B. (371336).

Supplementary data

Supplementary data are available at SCAN online.

Conflict of interest. None declared.

References

- Ackermann H., Hage S.R., Ziegler W. (2014). Brain mechanisms of acoustic communication in humans and nonhuman primates: An evolutionary perspective. Behavioral and Brain Sciences, 37, 529–604.

- Aitken P.G. (1981). Cortical control of conditioned and spontaneous vocal behavior in rhesus monkeys. Brain and Language, 13(1), 171–84.

- Anwander A., Tittgemeyer M., von Cramon D.Y., Friederici A.D., Knösche T.R. (2007). Connectivity-based parcellation of Broca’s area. Cerebral Cortex, 17(4), 816–25.

- Aziz-Zadeh L., Sheng T., Gheytanchi A. (2010). Common premotor regions for the perception and production of prosody and correlations with empathy and prosodic ability. PLoS One, 5(1), e8759.

- Banse R., Scherer K.R. (1996). Acoustic profiles in vocal emotion expression. Journal of Personality and Social Psychology, 70(3), 614–36.

- Barrett J., Pike G.B., Paus T. (2004). The role of the anterior cingulate cortex in pitch variation during sad affect. European Journal of Neuroscience, 19(2), 458–64.

- Belin P., Fillion-Bilodeau S., Gosselin F. (2008). The Montreal Affective Voices: A validated set of nonverbal affect bursts for research on auditory affective processing. Behavior Research Methods, 40(2), 531–9.

- Belyk M., Brown S. (2014a). Perception of affective and linguistic prosody: An ALE meta-analysis of neuroimaging studies. Social Cognitive and Affective Neuroscience, 9, 1395–403.

- Belyk M., Brown S. (2014b). Somatotopy of the extrinsic laryngeal muscles in the human sensorimotor cortex. Behavioural Brain Research, 290, 364–71.

- Belyk M., Brown S. (2014c). The acoustic correlates of valence depend on emotion family. Journal of Voice, 28(4), 523.e9–e18.

- Brown S., Laird A.R., Pfordresher P.Q., Thelen S.M., Turkeltaub P., Liotti M. (2009). The somatotopy of speech: Phonation and articulation in the human motor cortex. Brain and Cognition, 70(1), 31–41.

- Brown S., Ngan E., Liotti M. (2008). A larynx area in the human motor cortex. Cerebral Cortex, 18(4), 837–45.

- Cross E.S., Hamilton A.F.D.C., Grafton S.T. (2006). Building a motor simulation de novo: Observation of dance by dancers. Neuroimage, 31(3), 1257–67.

- Crosson B., Sadek J.R., Bobholz J.A., et al. (1999). Activity in the paracingulate and cingulate sulci during word generation: An fMRI study of functional anatomy. Cerebral Cortex, 9(4), 307–16.

- Fairbanks G., Pronovost W. (1938). Vocal pitch during simulated emotion. Science, 88(2286), 382–3.

- Faragó T., Andics A., Devecseri V., Kis A., Gácsi M., Miklósi Á. (2014). Humans rely on the same rules to assess emotional valence and intensity in conspecifics and dog vocalizations. Biology Letters, 10, 1–5.

- Fernald A., Simon T. (1984). Expanded intonation contours in mothers’ speech to newborns. Developmental Psychology, 20(1), 104–13.

- Goudbeek M., Scherer K. (2010). Beyond arousal: Valence and potency control cues in the vocal expression of emotion. Journal of the Acoustical Society of America, 128(3), 1322–36.

- Green J.A., Whitney P.G., Potegal M. (2011). Screaming, yelling, whining, and crying: Categorical and intensity differences in vocal expressions of anger and sadness in children’s tantrums. Emotion, 11(5), 1124–33.

- Hast M.H., Fischer J.M., Wetzel A.B. (1974). Cortical motor representation of the laryngeal muscles in Macaca mulatta. Brain, 73, 229–40.

- Iwatsubo T., Kuzuhara S., Kanemitsu A. (1990). Corticofugal projections to the motor nuclei of the brainstem and spinal cord in humans. Neurology, 40, 309–12.

- Jürgens U. (1974). On the elicitability of vocalization from the cortical larynx area. Brain Research, 81(3), 564–6.

- Jürgens U. (2002). Neural pathways underlying vocal control. Neuroscience & Biobehavioral Reviews, 26(2), 235–58.

- Jürgens U. (2009). The neural control of vocalization in mammals: A review. Journal of Voice, 23(1), 1–10.

- Jürgens U., Ehrenreich L. (2007). The descending motorcortical pathway to the laryngeal motoneurons in the squirrel monkey. Brain Research, 1148, 90–5.

- Jürgens U., Kirzinger A., von Cramon D. (1982). The effects of deep-reaching lesions in the cortical face area on phonation: A combined case report and experimental monkey study. Cortex, 18, 125–39.

- Jürgens U., Ploog D. (1970). Cerebral representation of vocalization in the squirrel monkey. Experimental Brain Research, 554, 532–54.

- Jürgens U., Pratt R. (1979a). Role of the periaqueductal grey in vocal expression of emotion. Brain Research, 167, 367–78.

- Jürgens U., Pratt R. (1979b). The cingular vocalization pathway in the squirrel monkey. Experimental Brain Research, 510, 499–510.

- Kirzinger A., Jürgens U. (1982). Cortical lesion effects and vocalization in the squirrel monkey. Brain Research, 233, 299–315.

- Kuypers H.G.J.M. (1958). Corticobulbar connexions to the pons and lower brain-stem in man. Brain, 81(3), 364–88.

- Laukka P., Elfenbein H.A., Söder N., et al. (2013). Cross-cultural decoding of positive and negative non-linguistic emotion vocalizations. Frontiers in Psychology, 4, 1–8.

- Lieberman P., Michaels S.B. (1962). Some aspects of fundamental frequency and envelope amplitude as related to the emotional content of speech. Journal of the Acoustical Society of America, 34(7), 922–7.

- Loucks T.M.J., Poletto C.J., Simonyan K., Reynolds C.L., Ludlow C.L. (2007). Human brain activation during phonation and exhalation: Common volitional control for two upper airway functions. Neuroimage, 36(1), 131–43.

- Menenti L., Gierhan S.M.E., Segaert K., Hagoort P. (2011). Shared language: Overlap and segregation of the neuronal infrastructure for speaking and listening revealed by functional MRI. Psychological Science, 22(9), 1173–82.

- Myers R.E. (1976). Comparative neurology of vocalization and speech: Proof of a dichotomy. Annals of the New York Academy of Sciences, 280(1), 745–57.

- Owren M.J., Amoss R.T., Rendall D. (2011). Two organizing principles of vocal production: Implications for nonhuman and human primates. American Journal of Primatology, 73(6), 530–44.

- Paus T., Petrides M., Evans A.C., Meyer E. (1993). Role of the human anterior cingulate cortex in the control of oculomotor, manual, and speech responses: A positron emission tomography study. Journal of Neurophysiology, 70(2), 453–69.

- Penfield W., Boldrey E. (1937). Somatic motor and sensory representations in the cerebral cortex of man as studied by electrical stimulation. Brain, 60, 389–443.

- Petkov C.I., Jarvis E.D. (2012). Birds, primates, and spoken language origins: Behavioral phenotypes and neurobiological substrates. Frontiers in Evolutionary Neuroscience, 4, 1–24.

- Price J.L. (1999). Prefrontal cortical networks related to visceral function and mood. Annals of the New York Academy of Sciences, 877, 383–96.

- R Core Development Team (2014). R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing.

- Sauter D.A., Eisner F., Ekman P., Scott S.K. (2010). Cross-cultural recognition of basic emotions through nonverbal emotional vocalizations. Proceedings of the National Academy of Sciences, 107(6), 2408–12.

- Scheiner E., Hammerschmidt K., Jürgens U., Zwirner P. (2004). The influence of hearing impairment on preverbal emotional vocalizations of infants. Folia Phoniatrica et Logopaedica, 56(1), 27–40.

- Scheiner E., Hammerschmidt K., Jürgens U., Zwirner P. (2006). Vocal expression of emotions in normally hearing and hearing-impaired infants. Journal of Voice, 20(4), 585–604.

- Scherer K.R., Wallbott H.G. (1994). Evidence for universality and cultural variation of differential emotion response patterning. Journal of Personality and Social Psychology, 66(2), 310–28.

- Schröder M. (2003). Experimental study of affect bursts. Speech Communication, 40, 99–116.

- Schulz G.M., Varga M., Jeffires K., Ludlow C.L., Braun A.R. (2005). Functional neuroanatomy of human vocalization: An H₂¹⁵O PET study. Cerebral Cortex, 15(12), 1835–47.

- Simon-Thomas E.R., Keltner D.J., Sauter D., Sinicropi-Yao L., Abramson A. (2009). The voice conveys specific emotions: Evidence from vocal burst displays. Emotion, 9(6), 838–46.

- Simonyan K., Jürgens U. (2003). Efferent subcortical projections of the laryngeal motorcortex in the rhesus monkey. Brain Research, 974(1–2), 43–59.

- Simonyan K., Ostuni J., Ludlow C.L., Horwitz B. (2009). Functional but not structural networks of the human laryngeal motor cortex show left hemispheric lateralization during syllable but not breathing production. Journal of Neuroscience, 29(47), 14912–23.

- Sutton D., Larson C., Lindeman R.C. (1974). Neocortical and limbic lesion effects on primate phonation. Brain Research, 71(1), 61–75.

- Sutton D., Trachy R.E., Lindeman R.C. (1981). Primate phonation: Unilateral and bilateral cingulate lesion effects. Behavioural Brain Research, 3(1), 99–114.

- Turken A.U., Dronkers N.F. (2011). The neural architecture of the language comprehension network: Converging evidence from lesion and connectivity analyses. Frontiers in Systems Neuroscience, 5, 1–20.

- Watkins K., Paus T. (2004). Modulation of motor excitability during speech perception: The role of Broca’s area. Journal of Cognitive Neuroscience, 16(6), 978–87.

- Wattendorf E., Westermann B., Fiedler K., Kaza E., Lotze M., Celio M.R. (2013). Exploration of the neural correlates of ticklish laughter by functional magnetic resonance imaging. Cerebral Cortex, 23, 1280–9.

- Witteman J., Van Heuven V.J.P., Schiller N.O. (2012). Hearing feelings: A quantitative meta-analysis on the neuroimaging literature of emotional prosody perception. Neuropsychologia, 50(12), 2752–63.

- Yeterian E.H., Pandya D.N., Tomaiuolo F., Petrides M. (2012). The cortical connectivity of the prefrontal cortex in the monkey brain. Cortex, 48(1), 58–81.
