Abstract

We present novel Bayesian methods for the analysis of exponential decay data that exploit the evidence carried by every detected decay event and enables robust extension to advanced processing. Our algorithms are presented in the context of fluorescence lifetime imaging microscopy (FLIM) and particular attention has been paid to model the time-domain system (based on time-correlated single photon counting) with unprecedented accuracy. We present estimates of decay parameters for mono- and bi-exponential systems, offering up to a factor of two improvement in accuracy compared to previous popular techniques. Results of the analysis of synthetic and experimental data are presented, and areas where the superior precision of our techniques can be exploited in Förster Resonance Energy Transfer (FRET) experiments are described. Furthermore, we demonstrate two advanced processing methods: decay model selection to choose between differing models such as mono- and bi-exponential, and the simultaneous estimation of instrument and decay parameters.

Introduction

Optical microscopy methods are extensively and increasingly used in biomedicine. In particular, quantification of the acquired images has become essential, both in terms of morphology and in terms of intensity. More advanced fluorescence imaging techniques (e.g. confocal [1], two-photon [2], super-resolution methods [3, 4], and many others) are often used in preference to more standard methods (e.g. widefield [5]) in recent decades. Similarly, many fluorescent proteins [6] and small molecules [7, 8] are being exploited for their fluorescent properties. Advances such as these have allowed unprecedented investigations of biological cells and tissues with sub-micrometre resolution, and indeed with single molecule resolution. Many of these techniques utilise not just the intensity of fluorescence light from the sample but may also gain information from its spectrum and polarisation, as well as the probability of light emission following the excitation of the molecule (as opposed to other energy loss mechanisms). All of which can reveal details of the molecular environment, such as pH or oxygenation state [9, 10], and of molecular interactions such as those that occur between the individual proteins that enable life [11].

The finite probability of fluorescence light emission following fluorophore excitation results in a decaying profile of fluorescence intensity from a given ensemble of molecules. Since the probability per unit time is usually constant over the time period of the decay (typically nanoseconds for organic fluorescent molecules), the profile is a decaying exponential function that can be characterised by the fluorescence lifetime (the time to decay by 1/e). There are many techniques for measuring the decay profile directly in the time-domain, or indirectly, by measuring the phase shift (and loss of amplitude) of the delayed emission using modulated excitation, and subsequently determining the lifetime. A brief review of those aspects follows in this text, but it is the extraction of the fluorescence lifetime from the evidence provided by single fluorescence emission detected events, utilising Bayesian statistics in a novel way, that is the subject of this paper. Furthermore, the Bayesian framework provides a robust method for extension to more complex models of emission profile, as well the possibility of extracting additional measurements and the inclusion of prior knowledge. Because more complex models are automatically penalised by the framework if there is not sufficient data to support them, the chance of mis-interpretation is greatly reduced compared to standard techniques.

When linked with single or two-photon laser scanning techniques to form Fluorescence Lifetime Imaging Microscopy (FLIM), these techniques provide powerful tools for biological investigation. For example, molecular interactions between specific proteins in a cell can be robustly detected in living and fixed cells and tissues using FLIM by exploiting Förster Resonance Energy Transfer (FRET) [12–14]. Proteins of interest may be fluorescently labeled with specific primary antibodies and when fluorophores are chosen appropriately, FRET can be detected through a reduction in the fluorescence lifetime of one of the fluorophores. Alternatively proteins can be used directly in living cells e.g. [15]. The detection of FRET gives a clear indication that the two molecules are co-located within a distance of about 10 nm and this is the scale at which protein interactions occur [10, 16, 17].

Our previous analysis [18] is extended to offer multi-exponential decay analysis, decay model selection, and improved instrument response function (IRF) determination. The developed Bayesian analysis is based on a fully analytic system model which rigorously incorporates repetitive excitation, a multi- exponential decay model, and an improved IRF approximation in order to more accurately determine any significant limitations that may be present in a real instrument. The analysis methods presented here have advantages when photon counts are low. Under these conditions, all fitting processes have limitations, but we sought to develop a ‘gold standard’ method that can be shown to yield the best possible information from very sparse data. Our previous work [18], aimed at fitting mono-exponential decays, was shown to require about half as many detected photons to achieve decay estimates with a similar accuracy as could be obtained using other analysis methods.

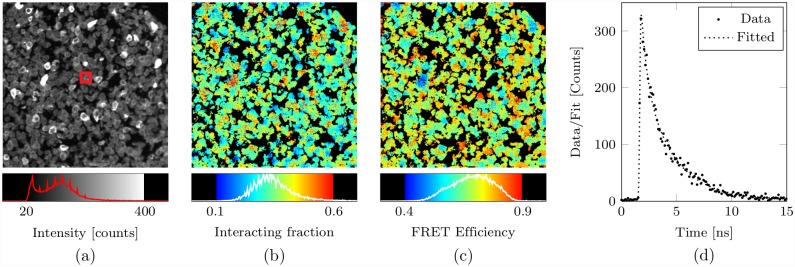

As a preliminary example of what can be achieved with bi-exponential analysis in the context of a FRET experiment, Fig 1 shows the distribution of FRET efficiency and interacting fraction e.g. [19] in a cell pellet sample. A cell pellet sample is used here to demonstrate the HER2-HER3 interaction; being membrane proteins this interaction should occur fairly evenly across the sample. The separation of the two parameters, FRET efficiency and interacting fraction, is possible if bi-exponential analysis is used together with certain assumptions (see below). The simpler mono-exponential model can only reveal a single parameter, usually the effective FRET efficiency [19]. Many more complex FRET conditions, that are commonly ecountered, cannot be well modelled with a bi-exponential but the Bayesian framework we present lends itself well to more complex situations given sufficient data, and will provide robust and non-misleading answers. Indeed, model selection will help us to determine appropriate models in complex situations given the data acquired in conjuction with prior knowledge. The resolution of more complex models demands far greater numbers of photons; in this paper we target performance in the low intensity regime.

Fig 1. Bayesian bi-exponential analysis of cell pellet data.

Lifetime analysis of FLIM data from a cell pellet sample. In (a) an intensity image having pixels with a total photon count of between about 20 and 400 were analysed (having invoked 9 × 9 spatial binning to provide sufficient photon counts for a bi-exponential analysis) using the bi-exponential Bayesian algorithm. In (b) and (c) the interacting fraction and the FRET efficiency respectively (as computed using the Bayesian estimates of the decay parameters). The size of a 9 × 9 spatial bin is indicated in (a) by a red square at the centre of the image and in (d) the decay data from from the spatial bin is shown. All of the images correspond to a 334 × 334 μm field of view, and are 256 × 256 pixels. See Appendix A4 Cell Pellet Preparation for details about the sample.

The data for this kind of time-domain FLIM is usually acquired using Time Correlated Single Photon Counting (TCSPC): this involves the collection of fluorescence decay photon emission times to yield a histogram of photon count (fluorescence intensity) against time, and Poisson statistics dictates that the more photons that are counted the more accurately the histogram represents the fluorescence decay. The most commonly applied analysis methods for this type of data involve the ‘direct fitting’ of a fluorescence decay model to the measured histogram. In the direct fitting approach a decay model, typically of the form

| (1) |

is fitted directly to the histogram, where I(t) represents the fluorescence intensity at time t, Z represents a uniform background level, and each of the L decay components is described by an initial intensity Aℓ and a decay lifetime τℓ. The optimal fit is determined by iteratively minimising a distance metric between the fit and the measured photon counting histogram. In performing such an analysis, the necessary convolution of I(t) with a (usually) measured instrument response function (IRF) is typically performed numerically.

The effectiveness of the ‘direct fitting’ approach depends crucially on the choice of the distance metric; different distance metrics introduce into the analysis different assumptions regarding the statistical noise in the counted photon data.

In the least-squares (LS) fitting approach, the distance metric is based on the squared difference between the measurements and the proposed fit; a choice which implicitly imposes a Gaussian ‘noise model’ that does not correspond to counting discrete events and approximates experimental reality only when the number of photons counted is large (where Gaussian statistics approximate Poisson statistics). Different adaptive and re-binning schemes have been investigated as a means of counteracting the problem of histogram bins having either zero or very low photon counts and have been found to be effective in improving the accuracy of LS estimates [20–22]. The influence of various binning ‘schemes’ was investigated, from the perspective of acquisition, by [23].

The maximum-likelihood (ML) approach to direct fitting [22, 24, 25] is based on the assumption that the noise in photon count data obeys Poisson statistics. Despite there being overwhelming consensus in the literature that ML-based direct fitting should be preferred over LS-based direct fitting, the use of LS-based fitting remains widespread in time-domain FLIM, largely down to its ease of implementation and to the fact that it is packaged with popular commercial FLIM analysis implementations. The realisation of a ML-based analysis by the simple adaption of a standard LS-based analysis using the Levenberg-Marquardt algorithm was presented by Laurence and Chromy [26].

Global analysis algorithms have been applied to time-domain FLIM [19, 27, 28] and have been demonstrated to be a powerful means by which reliable fluorescence decay parameter estimates can be obtained even in poor signal-to-noise conditions by exploiting the expected spatial invariance of some of the properties of fluorescence decays across an image (e.g. Barber et al. [27] assume the bi-exponential fluorescence lifetimes to be invariant across an image).

Of course, in order to obtain accurate decay parameter estimates it is necessary that the decay underlying the data be faithfully represented by the analysis model; the analysis of bi-exponential decay data with a mono-exponential model may not offer sufficient insight into the underlying fluorescence or interaction process. Similarly any significant peculiarities of the data acquisition process should also be modelled and although the influence of repetitive excitation is often acknowledged, it has only been included formally in the analysis in a small number of publications [18, 27, 29].

A number of time domain FLIM analysis methods which are not based on direct histogram fitting have also been used. A phasor analysis has been applied to time-domain FLIM [30] with an intuitive representation of the experimental data which may have a particular appeal to the non-expert. An analysis based on the Laguerre expansion has also been described e.g. [31, 32]. Although these techniques have merit in specific instances they do not offer a significant advantage when photon counts are low, are not easily extended robustly to complex situations and cannot readily include prior knowledge.

Methods

Modelling the photon-counting time-domain FLIM system

In time-domain FLIM, a pulsed laser is often used to periodically excite the sample, causing fluorescence emission. Fluorescence decay photons are subsequently detected by a photon-counting detector; their arrival times (relative to the pulsed excitation) being recorded electronically with a high accuracy. Over time, a set of photon arrival times that represent the fluorescence emission accumulates; it is this set that form the acquired data in our analysis. In parameterizing the system for use with this analysis, a model that captures the relevant characteristics of the system as accurately as possible, and relates a particular photon arrival time to a set of fluorescence decay and other model parameters, is developed. In the interest of developing a model that remains generally applicable, those elements of the system that exert little influence on the behaviour of the typical system (and are easily controlled experimentally through appropriate configuration of the hardware) can be safely left out. Nevertheless, it should be remembered that emitting species located at different physical sites within the microscope’s point spread function (PSF) emit with exponential decay times characteristic of each type of site, and the resulting overall decay profile is a superposition of all these emissions. When the number of sites is sufficiently large, the system can be viewed as a continuum of environments, characterized by a multi-exponential profile. In practice the photon count is far too low to be able to separate these individual components, with very similar lifetimes and the best that can be used, in the context of FLIM performed on biological material, is an approximated single exponential decay. Super resolution methods e.g. [33] may ultimately be able to improve on this by reducing the number of sites imaged in the PSF. Similarly instrumental limitations are usually determined by the type of detector. For example, photomultiplier tubes, particularly end on tubes commonly used in microscopes, exhibit wavelength-dependent transit times; detector afterpulsing can also be limiting [34] and all practical imaging systems do not respond equally to all polarisations. All these, and other effects, corrupt the acquired histogram.

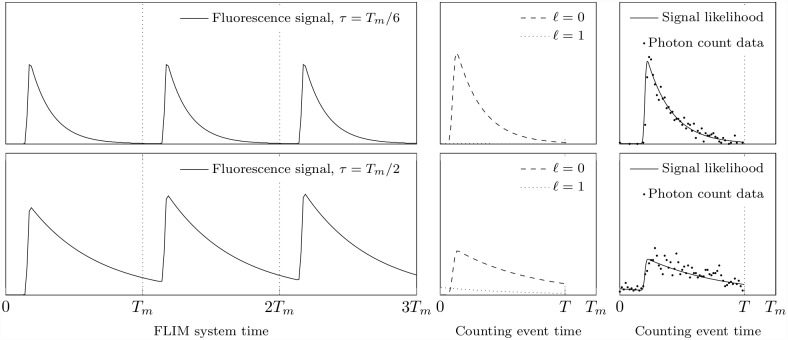

Considering time-resolved FLIM in fairly simple terms, as illustrated in Fig 2, a sample undergoes repetitive short-pulse excitation (repetition period Tm) and emits fluorescence photons, some of which traverse a path through the experimental apparatus before they are detected. It is neither desirable nor likely to be advantageous to attempt to model independently the influence of all of the individual optical components of the time-domain FLIM system; instead, these effects are modelled by an overall delay u that the instrument imposes onto these photons. An excitation pulse of finite width and electronic jitter cause u to be a random variable sampled from a distribution that is described by Γ(u).

Fig 2. Repetitive excitation and photon counting.

An illustration of the key components of the FLIM system model having repetition period Tm and a measurement window of duration T. In the upper left panel, a mono-exponential decay of lifetime τ = Tm/6 as a consequence of a sample being subjected to repetitive excitation at discrete times nTm (n = 1, 2, 3,…), the fluorescence signal being retarded by a typical IRF on progressing through the system apparatus. In the upper right panel, the normalised fluorescence signal likelihood within the measurement interval [0, T] (i.e. there is no likelihood of detecting a photon outside of the measurement interval (T, Tm]), and typical data for such a decay having about 1,000 total photons counted into 64 bins of equal width subdividing the measurement interval. The bottom half of the figure repeats the top for a mono-exponential decay of lifetime τ = Tm/2, and again shows data having about 1000 photon counts.

TCSPC involves determining the arrival time Δt of a detected photon with reference to the most recent excitation pulse, and recording it as having being detected within a time interval (i.e. a bin). (In practice, reverse-start-stop TCSPC is used and it is actually the time between photon detection and the next excitation that is measured, it then being trivial to represent such times with reference to the preceding excitation pulse as the repetition period is known.) The following sections add details to the above description, beginning with what goes in to the system (i.e. repetitive excitation), followed by what comes out of the system (i.e. photon arrival times recorded in discrete time), and what happens inbetween (i.e. sample signal responds to the excitation and instrument delays the signal).

In the interest of readability, wherever possible only the key assumptions that guide the model development and significant results along the way are presented here; intermediate steps, technical and mathematical details can be found in Appendix A1 Mathematical Details.

In this analysis, the events that are analysed are photon arrival times that have been detected in the measurement interval [0, T], consequently the likelihood expressions are normalised over this interval.

Repetitive excitation Formalising the description above, we can write the recorded photon arrival time Δt determined with respect to the most recent excitation as

| (2) |

where t is the actual emission time of a photon due to the fluorescence decay process and u captures the IRF (as described above). The final term allows for the fact that the excitation time may have been more than one excitation period before photon detection. The floor function is denoted by ⌊x⌋, such that n = ⌊x⌋ is the largest integer n ≤ x. Although detection events are correlated to the excitation ‘clock’, information about whether an event wraps around to subsequent detection periods is lost and defining Δt in this way allows us to account for this phenomenon. A recorded arrival time Δt could result from a detected photon that was due to an excitation event that occurred a period or more beforehand (t > Tm); or could result from a photon emitted a number of repetition periods earlier than the most recent excitation pulse but delayed sufficiently as to be seen in the latest window (u > Tm)—the likelihood of such situations depends on the decay distribution p(t) and the IRF signal distribution Γ(u) respectively. In reality, p(t) is much more likely to extend beyond Tm than is Γ(u).

Arrival times are recorded only during a measurement interval of duration T ≤ Tm, and therefore Δt ∈ [0, T]. The probability of arrival time Δt for a photon detected in the measurement interval [0, T] on the periodic window, is given by

| (3) |

where S(Δt) is the likelihood of a photon from an arbitrary signal p(t) being detected at time Δt, and w0 ∈ [0, 1] represents the contribution of a uniform background. The step function is denoted by θ(x), such that θ(x > 0) = 1 and θ(x ≤ 0) = 0. The probability of a signal photon having arrival time Δt, while considering all possible emission times t and all possible delays u, is given by

| (4) |

where the emission time t is distributed according to an arbitrary signal p(t) and the instrument introduces a delay u that is distributed according to Γ(u). The Dirac delta function is represented by δ(x) and exists only when x is equal to zero, such that ∫ dx δ(x) f(x) = f(0) for any sufficiently smooth function f(x). In transforming Eq (4) (as detailed in Appendix A1 Mathematical Details), the influence that the repetitive nature of the system has on a measured arrival time Δt is instead captured in a summation, such that

| (5) |

where the normalisation constant Λ(T, Tm) is given by

| (6) |

Observe that Eq (5) describes the convolution of the IRF, Γ(u), and the pure signal p(t), as is familiar from conventional data-fitting FLIM analysis techniques. The summation has the role of accounting for the history of the signal that may be apparent in the measurement interval, such that when ℓ = 0 any recorded arrival time is due to a photon emitted and detected in the same measurement interval, when ℓ = 1 any detected photon had been emitted in the repetition period immediately preceding the measurement interval, when ℓ = 2 any detected photon emanated from the repetition period before that, and so on (Fig 2). Note that Eq (5) remains completely general, incorporating a uniform background proportion w0, the effects of repetitive excitation, an arbitrary instrument response Γ(u) and an arbitrary decay signal p(t).

Discrete time data Accounting for the discrete time nature of these data, the likelihood of photon arrival time Δt being within a time interval (i.e. a bin) is required. Defining a bin as being an interval b = [bL, bH] ⊆ [0, T] that lies within the measurement window, and adopting the shorthand p(b) = p(Δt ∈ b), the likelihood of a photon arrival time Δt falling in the bin b is given by

| (7) |

where |b| = bH − bL denotes the width of the interval b = [bL, bH], and S(b) is the likelihood of a photon from the signal p(t) being detected within the bin, and is given by

| (8) |

Notice that Eqs (7) and (8) remain completely general for a system that generates discretised time-domain data due to the repetitive excitation (subject to the distributions p(t) and Γ(u) being normalised).

Multi-exponential decays The model developed so far, as given by Eqs (7) and (8), describes the photon-counting time-domain FLIM system, incorporates those effects imposed on the analysis by the design of the experimental system, that is, repetitive excitation and the collection of photon arrival times in discrete time.

A multi-exponential decay signal p(t) of the form

| (9) |

and the set ΩK of permitted weight and lifetime values

| (10) |

are defined; the collection of weight and lifetime parameters of the K decay components being denoted by w = (w1,…,wK) and τ = (τ1,…,τK) respectively. The introduction of Eq (9) into the model, as described by Eqs (7) and (8), subject to the requirement that , yields

| (11) |

where wk weights the contribution of an exponential decay of lifetime τk to the overall signal, the fluorescence bin-likelihood describing the likelihood of a photon arrival time being counted in the interval b = [bL, bH] due to a mono-exponential decay with lifetime τ, and being given by

| (12) |

Analytic instrument response approximation An approximation to the IRF comprising a weighted sum of truncated Gaussian distributions is proposed, with the aim that the asymmetry and other artifacts of a real IRF can be adequately captured. The IRF approximation is given by

| (13) |

where γi ∈ [0, 1] weights the contribution of a truncated Gaussian distribution of width σi ≥ 0, centered about a delay parameter ui ≥ 0 and having a lower cut-off δi ≥ 0. The set summarizes the parameters of the I instrument response components, and the weightings are subject to the constraint . The error integral is denoted by erf(x) and is defined by . In defining this approximation the requirement for a sufficiently good configurable approximation is balanced with the desire that the necessary convolution integrals remain analytic. Approximation of the IRF by a mixture of Gaussian distributions is appropriate as any actual IRF is the convolution of many physical effects introduced by many different components of the system, including the finite width of the laser pulse, the response of the photomultiplier tube (PMT), and various effects arising from the system electronics. Repeated convolutions of any function will tend towards a Gaussian.

The introduction of Eq (13) into the fluorescence bin-likelihood model Eq (12) yields

| (14) |

where the quantity is given by

| (15) |

the quantity χ(τ, t, σ, δ, u) is given by

| (16) |

and the quantity

| (17) |

is defined for compactness. The fluorescence bin-likelihood Eq (12) can be represented as the sum of contributions originating from ℓ ≥ 0, such that

| (18) |

where the contribution from the ℓth previous excitation is given by

| (19) |

It is worthy of note that, although the model incorporates rigorously any history of the signal that may be present in the recorded arrival time data, in practice, for decay lifetimes considerably shorter than the repetition period, the summation over ℓ need only include the first two terms. In the case that a decay lifetime is not significantly smaller than the repetition period, the summation should include more terms as appropriate.

Bayesian analysis of the system model

The Bayesian framework is now applied to the system model developed above with the purpose of quantifying probabilistically the model parameters of some fluorescence decay data and also to provide answers to the often unasked questions regarding the nature of both the fluorescence decay and of the FLIM instrument. In particular, the following are explored:

-

Parameter estimation

What is the probability of a set of fluorescence decay model parameter values given the detected data, the decay model, and the parameterized instrument response approximation?

-

Model selection

How many exponential decay components, K, are likely to have yielded the decay data?

-

Instrument response determination

What is the most likely form of the IRF given the fluorescence decay data?

The following notation is introduced and shall be used throughout the remainder of this document:

-

The data

The data D = {(bj, cj)|j = 1,…,M} comprise a set of M bin-count pairs (bj, cj) where bj represents the jth bin (i.e. interval ) and cj is the number of photons recorded as having been counted into that bin. It is required that none of the bins overlap (i.e. bj ∩ bk = ∅, ∀j ≠ k) and that their union forms the measurement interval (i.e. ).

-

The decay model

The fluorescence decay model is denoted by , where K exponential decays of lifetime τk contribute to the overall decay according to weight wk as defined by Eq (9). The notation wK = (w1,…,wK) and τK = (τ1,…,τK) shall also be used.

-

The parameterized IRF approximation

The characteristics of the FLIM equipment are denoted by , which defines an instrument response approximation composed of I weighted, truncated Gaussian distributions, as defined by Eq (13).

The Bayesian analysis developed in this section intentionally does not explicitly incorporate any particular form for the required prior distributions, in order that the expressions developed remain general and can be used as a starting point and be suitably updated on making specific the choice of prior distribution.

Decay parameter estimation Most commonly, it is the fluorescence decay parameter estimates that are of greatest interest to the experimentalist, with both the IRF approximation, , and the decay model, , having been either determined or assumed. The posterior distribution gives the likelihood of fluorescence decay parameter values, (wK, τK), given the data, D, the IRF approximation, , and the decay model, , and its hyperparameter(s), αK, and is given by

| (20) |

where the normalisation constant, , is given by

| (21) |

and the shorthand has been introduced for compactness.

Of course, as any parameter estimates, (wK, τK), derived from Eq (20) are conditioned not only on the data, D, but also on the decay model, , (and its hyperparameter(s), αK), and instrument response characterization, , it should be expected that they be most reasonable when the appropriate and have been employed throughout the analysis.

Decay model selection Here, in order to address such questions as “Should mono-exponential or bi-exponential analysis be used?”, the Bayesian framework is employed to determine the most applicable fluorescence decay model given the data, where it is assumed in this analysis that the IRF parametrization has been appropriately determined. In this work, the most probable decay model and the most probable hyperparameter(s) are determined together, their posterior distribution offering a quantitative measure of the likelihood of the recorded data being due to the model and its hyperparameter(s) αK, as given by

| (22) |

where is the prior probability of decay model and its hyperparameter(s) αK, and in the denominator the summation is over all candidate decay models. The relative likelihood of candidate decay models, for example models and , can be obtained by the pairwise comparison of the evidence for those models, that is

| (23) |

Alternatively, the most probable decay model and its hyperparameters conditioned on the data can be sought by finding the maximum of Eq (22). On writing , the most probable decay model and its optimal hyperparameters are given by

| (24) |

where the quantity is as defined by Eq (21), the optimal hyperparameter(s) are given by

| (25) |

and is the prior distribution (the so-called hyperprior) of the hyperparameter(s) αK for the decay model .

Instrument response determination The measurement of an instrument response can sometimes be a difficult practical problem or the IRF may vary over the image in some unpredictable way. These situations are more likely to occur in fields other than microscopy, where, for example, images of natural scenes may be obtained with a fluorescence lifetime camera. This section develops the Bayesian determination of the instrument response approximation parameters from the fluorescence decay data itself. Denoting by the parameter values of the instrument response approximation Γ(u) (Eq (13)), the posterior distribution of the instrument response parameter values is given by

| (26) |

Of course, should the data be acquired using a fluorophore known to exhibit a purely K-exponential decay, the above simplifies to yield

| (27) |

Additionally, it is also possible to estimate the instrument response parameters at the same time as estimating the fluorescence decay parameters. Again, under the assumption of a purely K-exponential decay, the posterior to be calculated simplifies yet further to give

| (28) |

Software Implementation

The Bayesian analysis algorithms were implemented in the C programming language and incorporated into the TRI2 (Time Resolved Imaging 2) image processing software package [19, 27].

The optimal mono- and bi-exponential parameter estimates are those which maximise the posterior distribution as given by Eq (20), or equivalently, those which minimise the quantity . In our implementation these values are determined by locating the global minimum using two different approaches; one in which the global minimum is located in the “continuous” parameter space by means of the downhill simplex or simulated annealing algorithms [35], and another in which an exhaustive search of the discretised parameter space is performed, thereby enabling faster determination of the optimal values and easier viewing of the posterior and marginal distributions.

A Gaussian approximation, as developed in e.g. [36], to the mono- and bi-exponential parameters posterior distributions Eq (20) was employed for the implementation of the Bayesian model selection algorithm. In adopting such an approach the “model evidence”, as given by Eq (21), may be approximated using only the optimal parameter values and the (first and second) derivatives of the quantity S(wK, τK) = ‒ln[p(wK, τK)p(D|wK, τK)], which in this work were determined analytically. The primary advantage of using this approach is that the requirement for numerical integration to determine the “model evidence” is avoided.

Determination of the optimal IRF approximation has been implemented using a simulated annealing algorithm [35] and under the assumption that the fluorescence decay is known to be either mono- or bi-exponential. The inclusion of too many Gaussian components in the IRF approximation Eq (13) and the consequences of over-fitting could be avoided by the development of a Bayesian IRF approximation model selection algorithm to determine the optimal number of Gaussian components and their parameters; this has not been investigated to date though would be an achievable extension to the current implementation should it be deemed to offer sufficient advantage to the analysis.

Results

The performance of the Bayesian algorithms was compared to that of the direct-fitting approach using ML and LS for the analysis of synthetic data simulating various mono- and bi-exponential decay conditions and a typical TCSPC system (see Appendix A2 Synthetic Data). The performance of the different methods was also compared for the analysis of human carcinoma cell and human breast cancer tissue experimental data (see Appendices A3 Human Epithelial Carcinoma Cell Preparation and A5 Human Breast Cancer Tissue Preparation).

The Bayesian mono- and bi-exponential decay analysis algorithms were tested against ML and LS, all approaches operating directly on the accumulated histogram. The ML estimation routines were implemented as described in [26], and are based on the modified Levenberg-Marquardt (MLM) algorithm. The LS implementation is also based upon the MLM algorithm and is described in [19, 27]. As the repetitive nature of TCSPC excitation is not accounted for in any of the established analysis techniques used for comparison, in order to avoid their potential effects the ML and LS analysis routines consider only a window of the collected data that excludes time points before the rise of the transient has occurred. Automated selection of the valid data window is discussed in [27]. The Bayesian analysis routines operate on all of the available data, except for small portions at the start and end of the transient, potentially corrupted by consequences of dithering applied to time measurements of the time-amplitude converter in the TCSPC electronics [34].

The Bayesian-determined optimal single Gaussian IRF approximation, having a FWHM width of 0.129 ns (i.e. a standard deviation of 0.055 ns) centered about a delay of 2.067 ns, was determined from a single high-count data IRF measurement (about 5 million photon counts) and was used for the analysis of synthetic data presented below.

Mono-exponential parameter estimation

The mono-exponential parameter estimates obtained with this model are in close agreement with those reported in [18] using a simpler model. In particular, when applied to low count synthetic data (having a background component of about 10%), our Bayesian algorithms enable a decrease of about a factor of two in the number of photon counts required, and therefore in the imaging duration, for mono-exponential lifetime estimation with an accuracy of about 10%. Similarly, when applied to experimental data, Bayesian mono-exponential analysis of the data from imaging human epithelial carcinoma cells stably expressing cdc42-GFP again yielded a tighter lifetime distribution than those obtained using ML and LS methods. This indicates a reduction in the spread of the random error of the analysis and results that are more representative of the biological distribution of lifetime values due to local variations in the cellular environment.

The enhanced model described in this paper offers improvements over the model of [18] in the case of decays having a very slow decay component, and in situations where the IRF is not well approximated by a single Gaussian.

Bi-exponential parameter estimation

In bi-exponential decay analysis, the lifetimes, τ1 and τ2, and initial amplitudes, A1 and A2, of the two decay components are estimated from the fluorescence decay data.

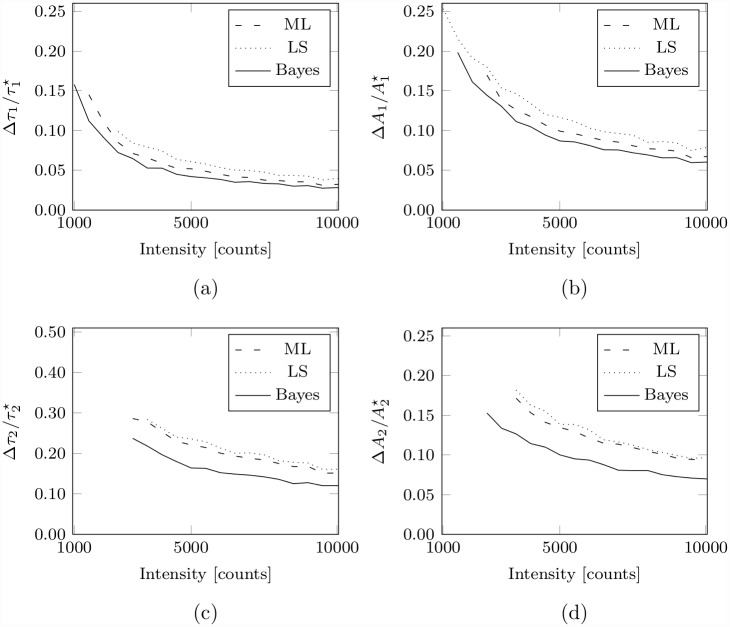

A comparison of the performance of the Bayesian bi-exponential algorithm with LS and ML is shown in Fig 3 for the analysis of synthetic data simulating bi-exponential decays at low photon counts. The fractional errors in the estimated lifetimes and initial amplitudes versus photon count are shown in (Fig 3a and 3c) and (Fig 3b and 3d) respectively for simulated bi-exponential decays having lifetimes and , and having equal initial amplitudes i.e. .

Fig 3. Bi-exponential parameter estimation at low photon counts.

The uncertainty, as measured by the standard deviation of the estimated parameter distribution, in the bi-exponential decay parameter estimates obtained using ML, LS, and Bayesian analysis for the analysis of synthetic data simulating a bi-exponential decay (, , ) for a range of intensities between 103 and 104 photon counts. In (a) and (c) the fractional errors in the estimated decay lifetimes versus photon count; in (b) and (d) the fractional errors in the initial amplitudes of the two decay components. In all cases, the normalised width is displayed only when the respective estimates are not biased by more than 5% of the true value.

The Bayesian bi-exponential algorithm provides parameter estimates to a greater precision, as measured by the standard deviation of the estimated parameter distributions, than is achieved using ML or LS analysis, as is evident on visual inspection of Fig 3; the Bayesian algorithm offers superior estimates for all of the bi-exponential decay parameters for all intensities between about 103 and 104 photon counts. At an intensity of about 5×103 photon counts, the lifetime of the slower decay component, τ1, is estimated to a precision of about 4.2% by our Bayesian algorithm; at the same intensity ML and LS achieve a precision of about 5.2% and 6.1% respectively. Indeed, to achieve the precision that is offered by the Bayesian algorithm at 5×103 photon counts, ML requires an intensity of almost 6.5×103 photon counts and LS requires about 9×103 photon counts. A similar improvement in precision is achieved for the estimates of the corresponding initial amplitude, A1, at 5×103 photon counts; the Bayesian algorithm offers estimates within a precision of 8.9%, whereas ML and LS achieve a precision of 10.3% and 11.7% respectively. The precision of the estimated lifetime and initial amplitude of the faster decay component is expected to be inferior to that of the slower component, as for such a decay the slower component contributes roughly four times as many of the counted photons to the intensity than the slower decay component. However, it is for the characterisation of the faster decay component that the Bayesian algorithm provides greatest advantage over ML and LS. The faster decay lifetime, τ2, is estimated to a precision of 16.4% by the Bayesian algorithm at 5×103 photon counts; ML and LS do not offer such precision below intensities of about 8.5×103 and 9.5×103 photon counts respectively. The initial amplitude of the faster decay component, A2, is also estimated with greater precision by the Bayesian algorithm; at 5×103 photon counts the Bayesian algorithm achieves a precision of 10.0%, as compared to a precision of 13.5% offered by ML and 13.8% offered by LS analysis.

In a biological example where levels of protein-protein interactions can be determined by FRET (via FLIM), we can demonstrate the use of the bi-exponential model. We are thus making the assumptions that the donor has a single dominant decay path, resulting in mono-exponential kinetics, and that the target proteins (for example, HER2 and HER3 for the cell pellet data (Appendix A4 Cell Pellet Preparation) illustrated in Fig 1) readily form a stable dimer where the donor to acceptor distance is relatively stable and consistent. Thus bi-exponential analysis is appropriate to resolve the interacting (dimerised) and non-interacting populations. In such situations, the FRET efficiency and interacting fraction are biologically relevant quantities that can then be determined from the estimated decay lifetimes τ1 and τ2 and the initial amplitudes A1 and A2 respectively, as follows,

FRET efficiency: E = 1 − τ2/τ1, τ1 > τ2,

Interacting fraction: F2 = A2/(A1 + A2).

The FRET efficiency E can be used to quantify the separation of fluorophore pairs and the interacting fraction F2 provides a measure of the proportion of molecules undergoing FRET.

Of course, in such experiments, superior bi-exponential parameter estimates lead to more precise estimates of derived quantities such as the FRET efficiency and interacting fraction. Indeed, the FRET efficiency for the bi-exponential decay data analysed for Fig 3 was estimated to a precision of 4.5% using the Bayesian bi-exponential lifetime estimates at an intensity of 5×103 photon counts; the ML and LS estimates resulted in a precision of 6.2% and 6.3% respectively. Similarly, a precision of 8.2% was achieved for the interacting fraction estimated from the Bayesian bi-exponential initial amplitudes; ML and LS offered a precision of 9.5% and 10.3% respectively.

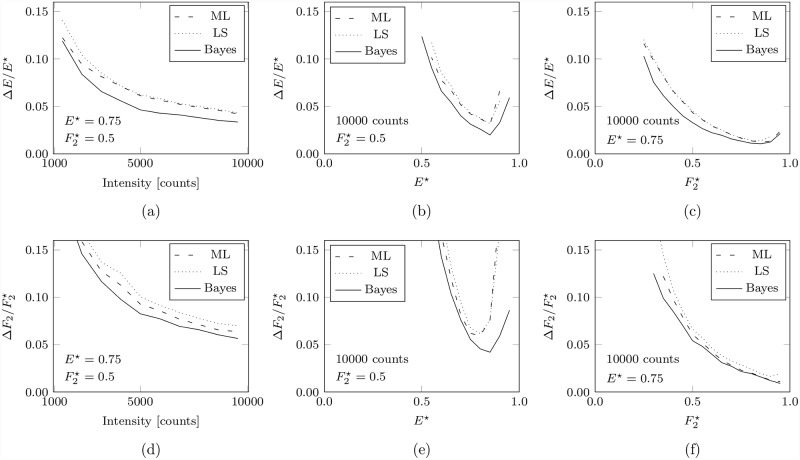

A comparison of the performance of the Bayesian bi-exponential algorithm with LS and ML is shown in Fig 4 for the analysis of synthetic data simulating three FRET efficiency and interacting fraction conditions at low photon counts. The fractional errors in FRET efficiency and interacting fraction versus photon count are shown in (Fig 4a and 4d), the same errors are shown in (Fig 4b and 4e) and (Fig 4c and 4f) for different FRET efficiencies and for different interacting fractions respectively.

Fig 4. FRET efficiency and interacting fraction estimation at low photon counts.

The uncertainty, as measured by the standard deviation of the estimated parameter distribution, in the bi-exponential decay parameter estimates obtained using ML, LS, and Bayesian analysis for the analysis of synthetic data simulating a bi-exponential decay. In (a) and (d) are the fractional errors in FRET efficiency and interacting fraction respectively versus photon count, in (b) and (e) the same for different FRET efficiencies E⋆, and in (c) and (f) for different interacting fractions . In all cases, the normalised width is displayed only when the respective estimates are not biased by more than 5% of the true value. See main text for details.

The fractional errors in FRET efficiency and interacting fraction versus photon count (Fig 4a and 4d) are plotted for synthetic data simulating a bi-exponential decay having a constant FRET efficiency of E⋆ = 0.75 (i.e. lifetimes of and ) and an interacting fraction of (i.e. ), for intensities between about 103 and 104 photon counts. The improvement in the estimated FRET efficiency offered by Bayesian analysis is a consequence of superior lifetime estimates compared to those from ML and LS analysis. At an intensity of about 5×103 photon counts the FRET efficiency derived from Bayesian lifetime estimates has an uncertainty of about 4.7%, whereas the estimates due to ML and LS analysis have an uncertainty of about 6.2% and 6.4% respectively. Bayesian analysis also offers improved estimates of the interacting fraction; at an intensity of about 5×103 photon counts the interacting fraction determined from the Bayesian bi-exponential parameter estimates has an uncertainty of about 8.3% as compared to about 9.6% and 10.3% for ML and LS respectively.

The performance for different FRET efficiencies is shown in (Fig 4b and 4e) for synthetic data having an interacting fraction of (i.e. ) and an intensity of about 104 photon counts. Bayesian analysis provides a slight improvement in the estimation of the FRET efficiency and the interacting fraction and could therefore offer more precise estimates at a given FRET efficiency or estimates within a given precision for an increased range of FRET efficiencies. However, it is evident that at such an intensity the uncertainty in the estimated FRET efficiency exceeds 10% and increases rapidly for all of the analysis techniques for actual FRET efficiencies of less than about 0.55 and that an equally rapid deterioration in the estimated interacting fraction occurs at FRET efficiencies lower than about 0.65. The estimated interacting fraction demonstrates a bias of greater than 5% for FRET efficiencies greater than about 0.8 for ML and LS analysis, and at about 0.9 for Bayesian analysis (data where the bias is greater than 5% has not been plotted).

The sensitivity to different interacting fractions is shown in (Fig 4c and 4f) for synthetic data having a FRET efficiency of E⋆ = 0.75 (i.e. ns, ns) and an intensity of about 104 total counts. Bayesian analysis offers a modest improvement in precision over ML and LS analysis in this instance.

Of course, some bi-exponential decays are more amenable to accurate analysis than others; it would be reasonable to expect it to be more difficult to resolve the two decay components if they have similar lifetimes (i.e. a low FRET efficiency) and impossible at the point where they are equal (FRET efficiency equal to zero) or if one of the components dominates the decay (i.e. a very high or very low interacting fraction). It is these competing effects that give rise to the minima in (Fig 4b and 4e), the position of the minimum depending on the level of interacting fraction. The reader may note that in this example values of E < 0.5 are not well estimated. This situation becomes better with different interacting fractions and more photon counts, but it should be remembered that this attempts to extract the actual FRET efficiency and not the effective FRET efficiency often reported.

Bayesian analysis offers superior estimation of biologically relevant quantities in many situations such as the FRET efficiency and interacting fraction in comparison to ML and LS analysis. Although the improvement in precision using Bayesian analysis is modest compared to ML and LS analysis, in some cases this improvement does mean that fewer total photon counts are required for analysis at a given precision or that a wider range of FRET efficiency or interacting fractions can be studied. It may be that a modest improvement in the precision of the estimated FRET efficiency could be the difference between observing a statistically significant difference in an experiment in which only a small change in the FRET efficiency is expected. It is also worth noting that Bayesian analysis in this form is very rarely worse than the other methods, and this is true at higher photon counts, so the only possible penalty from exclusively employing the Bayesian analysis is increased analysis time.

Bayesian decay model selection

Typically, a combination of factors influence which decay model is chosen to analyse the experimental data. In choosing the decay model the expectation of what form the decay is believed to be is always likely to be significant, as is whether the intensity of the acquired experimental data is sufficient to support a statistically significant analysis using higher-order models. The application of the selected model is often justified by inspecting the parameter estimates, and the closeness of the fits to the acquired data at a representative sample of image pixels. Closeness can be assessed by examining the residual distance (difference) between the fit and the data, and even the auto-correlation of the point-wise residuals [37].

The Bayesian decay model selection analysis algorithm (Eq (24)) can be applied to determine probabilistically the decay model that most-likely underlies the time-resolved data from any number of different models. In this section the results of a Bayesian decay model selection algorithm, chosen to distinguish between mono- and bi-exponential decay data, are shown; the developed algorithm determines the most-probable decay model from an ensemble containing only the mono-exponential decay model and bi-exponential decay model (the mono- and bi-exponential decay models being formalised according to Eq (9) with K = 1 and K = 2 respectively).

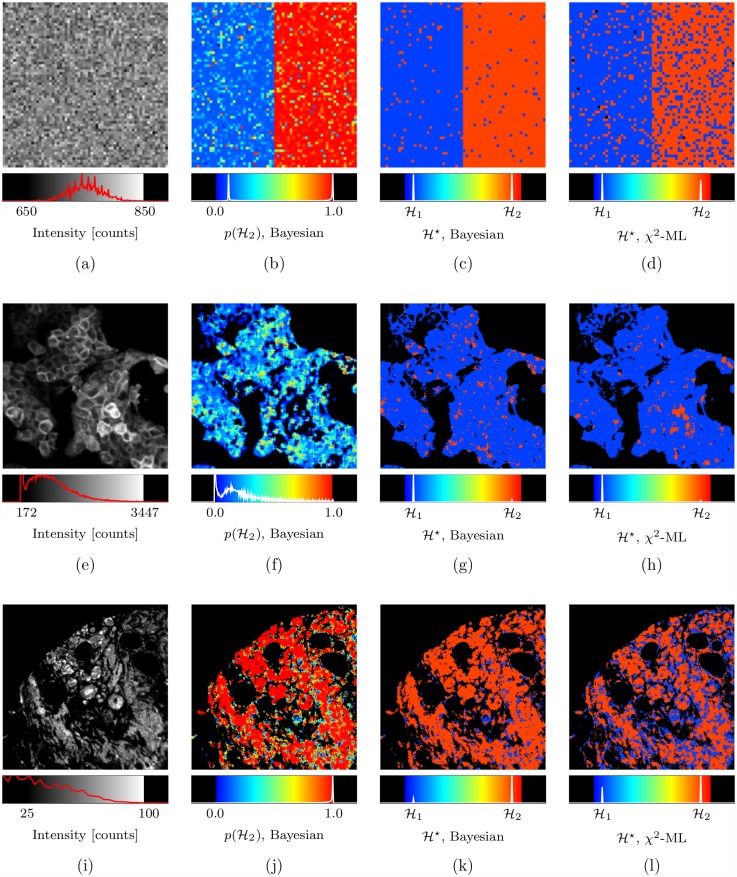

The results of model selection are shown in Fig 5 alongside those from the χ2 model selection of [38] acting on the ML analysis, for the analysis of an image comprising both mono- and bi-exponential synthetic data, an image of the GFP-expressing cells expected to follow a mono-exponential decay (see Appendix A3 Human Epithelial Carcinoma Cell Preparation), and the human breast cancer tissue image (see Appendix A5 Human Breast Cancer Tissue Preparation).

Fig 5. Bayesian decay model selection.

In (a) an intensity image having pixels with intensity of about 750 photon counts, those on the left half of the image simulating a mono-exponential decay and those on the right half of the image simulating a bi-exponential decay; in (b) and (c) the Bayesian determined probability of the decay model being bi-exponential, , and the optimal decay model, , respectively, and in (d) the optimal model as determined by the χ2 model selection algorithm of [38] using the ML parameter estimates. Similar images are shown for human cancer cells expressing GFP (e-h), showing largely mono-exponential characteristics, and for human breast cancer tissue (i-l) which has many ‘contaminants’ from heterogeneous tissue types giving rise to bi-exponential, or higher order, responses. See main text for details.

The Bayesian model selection algorithm is able to successfully distinguish between mono-exponential and bi-exponential decay data at very low intensities of about 750 photon counts, as shown in (Fig 5b and 5c). The pixels in the left half of the image contain mono-exponential decay data, each generated to have a decay lifetime of , and the pixels in the right half contain bi-exponential decay data, each generated to have one decay component with lifetime and another with lifetime , their initial amplitudes being equal. The analysed transients were generated to simulate our TCSPC system (repetition rate: 40 MHz, measurement interval: 20.0 ns partitioned into 256 equal bins) and incorporated the effects of a Gaussian instrument response, no background (i.e. uniform background of zero counts per bin), and the effects of Poisson noise. Of the 2048 mono-exponential decays that were analysed 84 of them were incorrectly classified as being bi-exponential (i.e. 4.1% false positive) and of the 2048 bi-exponential decays analysed 53 were classified as being mono-exponential (i.e. 2.6% false negative). Overall, the occurrence of FRET was predicted with about 97% accuracy by Bayesian decay model selection. The χ2 based model selection algorithm predicted only 89% of the mono-exponential decays correctly, and the performance on bi-exponential data is visibly poorer.

The results of application of Bayesian and χ2-ML decay model selection to the GFP-expressing human carcinoma cell data (see Appendix A3 Human Epithelial Carcinoma Cell Preparation) are shown in (Fig 5f, 5g and 5h); both methods demonstrate that the image largely consists of mono-exponential decay data as should be expected. There is correlation between a region of high intensity pixels and a bi-exponential decay being determined as optimal by the χ2-ML decay model selection algorithm, as evident on inspection of (Fig 5h); it is suspected that the χ2-ML decay model selection may be biased towards selection of the bi-exponential decay model as a consequence of poor decay estimates compensating for an underestimation of the background level by ML analysis at these pixels.

In (Fig 5j, 5k and 5l) the data from a human breast cancer tissue (see Appendix A5 Human Breast Cancer Tissue Preparation) image is predicted to follow a bi-exponential decay as would be consistent with expectation. Tissue of this type has numerous ‘contaminants’ from heterogeneous tissue types, endogenous fluorophores and preparation chemicals that will give rise to bi-exponential, or higher order, responses. This binary ‘mono’ or ‘bi’ selection algorithm will be more likely to assign higher order exponential responses into the bi-exponential category as a the better explanation of the data.

It is important to appreciate that in interpreting the results of Bayesian model selection, inferences can only be made concerning those models that are present in the ensemble. The algorithms as applied in this work operate over an ensemble consisting only of mono- and bi-exponential decays; and it is therefore not possible to make any inference as to whether the data may actually be more likely to be due to, for example, a tri-exponential or even some non-exponential decay process. However, the Bayesian algorithm could be extended to offer the relative likelihoods of mono-, bi-, and tri-exponential decays. Similarly, a background noise only model could be incorporated to permit determination of the presence or absence of a decay signal in some data, along the lines of the method realised in [39].

Bayesian IRF determination

The Bayesian IRF determination algorithm has been developed according to Eq (28) such that the parameters describing the optimal IRF approximation and the decay parameters are estimated simultaneously from the TCSPC data: Simultaneous IRF and Decay (SID) analysis. As both sets of parameters are estimated simultaneously the algorithm may be applied for either purpose under different experimental conditions.

Typically, the Bayesian IRF determination algorithm will be run over a data set having very high photon counts in order to estimate the optimal IRF approximation to be used in subsequent decay analysis; in this instance, the decay parameter estimates would be of little interest. An alternative application of the Bayesian IRF determination algorithm, however, could be to provide decay parameter estimates from a system for which the IRF changes greatly between pixels; in this instance, it would be the IRF approximation parameters that may be of little interest.

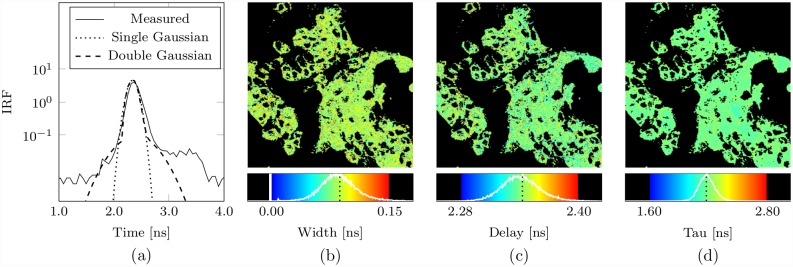

The effectiveness of the Bayesian IRF determination algorithm to provide accurate decay and IRF approximation parameter estimates simultaneously is demonstrated in Fig 6 for human carcinoma cell data (see Appendix A3 Human Epithelial Carcinoma Cell Preparation).

Fig 6. Bayesian simultaneous decay and IRF analysis.

In (a) the measured and optimal single- and double-Gaussian IRF approximations as determined on application of the Bayesian SID algorithm to a single data set having over 107 counts obtained by binning the data from all image pixels from a single image of the human carcinoma cell data (see Appendix A3 Human Epithelial Carcinoma Cell Preparation). In (b) and (c), the width and delay parameter estimates obtained on independent analysis of each pixel of the same human carcinoma cell data image using the Bayesian SID algorithm, assuming a mono-exponential decay and a single-Gaussian approximation, for pixels having intensities between about 350 counts and 3500 counts; in (d) the corresponding lifetime estimates. In (b), (c), and (d), the corresponding optimal single-Gaussian IRF approximation estimates are indicated in the histograms by a dotted black line.

The optimal single- and double-Gaussian IRF approximations for these data are shown along with the measured IRF in (Fig 6a); these estimates were obtained on analysis of a single data set having over 107 counts, obtained by binning the data from all image pixels from an image having intensities between about 350 and 3500 counts. The optimal single-Gaussian IRF approximation is defined by the estimates ; the optimal double-Gaussian IRF approximation is defined by . It is evident that the double-Gaussian approximation follows the measured IRF more closely at either side of the main peak than does the single-Gaussian approximation.

The single-Gaussian IRF approximation width and delay estimates obtained on independent application of the Bayesian SID algorithm, under the assumption of a mono-exponential decay, to each pixel are shown in (Fig 6b, 6c and 6d), for pixels with intensities between about 350 and 3500 counts, with most pixels having an intensity of less than 1000 counts.

The distribution of the delay estimates has an average of 2.342 ns and a standard deviation of 0.019 ns, and the width parameter estimates are distributed around an average value of 0.086 ns with a standard deviation of 0.022 ns; both are in close agreement with the optimal values determined using the high count data set. The mono-exponential lifetime estimates obtained on application of the Bayesian SID are inferior to those obtained on applying Bayesian decay analysis with the optimal IRF approximation, as might be expected given that the IRF parameters are additionally estimated from the same data. However, for such decay data and for such an instrument for which a single Gaussian serves as a reasonable approximation of the IRF, it is noteworthy that the lifetime estimates are only slightly degraded in comparison to those obtained from Bayesian decay analysis alone using the optimal approximated IRF, so there remains a significant improvement over the estimates offered by ML (which uses the experimentally measured IRF). The Bayesian SID lifetime distribution is centered about an average of 2.18 ns with standard deviation 0.17 ns. The estimates derived from Bayesian decay analysis using the optimal IRF approximation have an average of 2.16 ns and a standard deviation of 0.15 ns. The ML estimates (lifetime image not shown) were centered about an average lifetime of 2.17 ns with standard deviation 0.25 ns.

Discussion

In this paper we have presented the novel analysis of exponential fluorescence decay data using Baysian inference that is based on the concept that each single photon carries some evidence about the photo-physical system from which it was emitted. In particular, algorithms that relate to the analysis of time-domain FLIM data from microscopes have been presented which may be used in the microscopical studies of cellular protein interactions employing FRET. We have extended our previous work on mono-exponential analysis [18], that indicated the possible doubling of the acquisition speed is possible by using the Bayesian approach, to include the following important advances:

complex decay modelling, demonstrated here by bi-exponential analysis

simultaneous estimation of additional system parameters such as the IRF

decay model selection

rigorous treatment of repetitive pulse excitation

complex IRF modelling with multiple Gaussians

We have shown that this novel analysis performs better than the two most popular methods of ‘direct fitting’, LS and ML, in terms of accuracy and bias, and offers a distinct advantage when photon counts are low and the TCSPC-accumulated histogram is not a good representation of the underlying fluorescence decays. The greatest improvement over previous techniques was seen when performing mono-exponential analysis, where a factor of two improvement in accuracy was observed. Since time-domain FLIM using TCSPC is a relatively slow method of acquisition, this factor of two improvement can be exploited to image significantly faster for the same accuracy as previously. This may allow time-lapse experiments where imaging speed is important. The improvement due to the new algorithms when performing bi-exponential analysis was less impressive, but still offers a significantly better accuracy in determined parameters, extending the range of FRET parameters that can be accommodated and therefore the biological protein interactions that can be studied.

The analysis has also been extended to include model selection to infer robustly whether underlying photo-physical system(s) have a single fluorescence lifetime or would be better represented by a bi-exponential model. This type of analysis is not common in the FLIM field and as well as indicating which type of model to use, it can also be used to indicate to the user whether there data is of sufficient quality (e.g. contains sufficient photon counts) to provide robust parameter estimates of a high-order model; a common pitfall for the novice FLIM user.

We also presented the first published simultaneous estimate of the IRF and decay parameters based only on the assumption of the IRF being approximated by a mixture of Gaussian distributions. This represents a step forward in determining dynamically the response of the instrument as well as the sample. Although it is not impossible to obtain a reasonable estimate of the IRF for many FLIM systems experimentally, obtaining parameter estimates algorithmically has advantages. One is that it enables us to use analytical expressions that aid implementation and execution speed. A second and more generally applicable advantage is relevant to the use of other types of fluorescence lifetime equipment (or other ‘time-of-flight’ systems [40, 41]) where it may be impossible to determine the IRF, or where the IRF may change from pixel to pixel of the image (e.g. when imaging natural scenes the distance to the sample introduces a variable delay onto the signal).

All the algorithms are available for use in the TRI2 image processing program. This is currently available as compiled ‘freeware’ for Windows from the Oxford Group’s web site (http://users.ox.ac.uk/~atdgroup/software/).

Appendix

A1 Mathematical Details

Development of the time domain FLIM model Intermediate steps required for the development of our time-domain FLIM system model that were not presented in the main paper are detailed below along with a brief commentary as to the mathematical properties employed at each stage.

The development of the signal photon-likelihood, from the integral form defined by Eq (4) to the more generally useful form of Eq (5) where the influence of recording arrival times with respect to a periodic window is captured by a summation, is now presented. The integral in Eq (4) can be rewritten to give

| (29) |

| (30) |

| (31) |

| (32) |

| (33) |

where δ(x) represents the Dirac delta function which exists only when x is equal to zero (i.e. ∫dx δ(x)f(x) = f(0) for any function f(x)), δi,j is the Kronecker delta function which exists only when i = j, the step function is denoted by θ(x) with θ(x > 0) = 1 and θ(x ≤ 0) = 0, and the floor function is denoted by ⌊x⌋, such that n = ⌊x⌋ is the largest integer n ≤ x. By definition, any observed photon arrival time Δt ∈ [0, T] must fall within the measurement interval and therefore cannot exceed the modulation period Tm (i.e. Δt ≤ T ≤ Tm), consequently δℓ,⌊ℓ+Δt/Tm⌋ = δℓ,ℓ = 1 and the integral simplifies further to give

| (34) |

Since the instrument response approximation Γ(u) is non-zero only for non-negative delay times u (i.e. Γ(u) = 0 if u < 0), the above expression simplifies yet further to be of the form in Eq (5), that is

| (35) |

Incorporating the discrete-time nature of these time-domain FLIM data into our model to give the photon and signal photon likelihood in discrete time, p(b) and S(b), as given by Eqs (7) and (8) respectively, is trivial, requiring that the photon and signal photon likelihoods in continuous time, p(Δt) and S(Δt), as given by Eqs (3) and (5) respectively, be integrated over the interval defining the bin b = [bL, bH]. The introduction of a multi-exponential fluorescence decay signal of the form defined by Eq (9), and subject to the constraints of Eq (10), leads to the photon bin-likelihood, p(b), as given by Eq (11), it now being convenient to define the fluorescence bin-likelihood, , which describes the likelihood of a fluorescence decay photon arrival time being counted in the interval b = [bL, bH] due to a mono-exponential decay with lifetime τ, as given by Eq (12).

The introduction of an approximation to the IRF, , comprising a weighted sum of truncated Gaussian distributions and of the form described by Eq (13), leads to the fluorescence decay bin-likelihood as given by Eq (19) where the necessary integrals are performed analytically, as described below. The IRF approximation can be written as , where the contribution of the ith IRF component, denoted by , is given by

| (36) |

where the quantity is introduced for compactness. The fluorescence decay bin-likelihood, as given in Eq (12), can then be decomposed into the sum of contributions of each of the instrument response components, such that , where represents the contribution of the ith instrument response component to the overall fluorescence decay bin-likelihood, and is given by

| (37) |

Determining now the convolution of a component of the multi-exponential decay signal, having a decay lifetime τ, and the ith component of the instrument response approximation as follows:

| (38) |

where the quantity is given by

Notice that the term θ[ℓTm + Δt − δi] ensures that the integral is positive. The developed expression describes (without normalisation) the likelihood of a fluorescence decay photon at time Δt in the measurement interval. The fluorescence decay bin-likelihood due to the ith instrument response component can now be written as

| (39) |

Observe that the term θ[ℓTm + Δt − δi] ensures that the fluorescence photon likelihood is zero until the decay time ℓTm + Δt exceeds the cutoff parameter δi. In determining the remaining integral, which accounts for the discrete time nature of TCSPC data, it is convenient to incorporate the bin boundaries directly into the developed expression, to give

| (40) |

It is evident that if the time bin lies entirely before the cutoff there is no likelihood of a fluorescence decay photon being counted into it, the likelihood of photon being counted into a time bin which straddles the cutoff is determined by integrating between the cutoff and the upper bin boundary, and if the time bin is entirely beyond the cutoff then the likelihood of a photon arrival time in the bin is determined by integrating between the bin boundary values. These conditions are encapsulated by the following expression,

| (41) |

The remaining integral is determined analytically, by parts, giving

| (42) |

Defining the quantity,

| (43) |

the integral can be written (using Eq (41)), whilst also absorbing the factor from Eq (39), to give

| (44) |

Consequently, the fluorescence decay bin-likelihood due to the ith instrument response component can be written as follows:

| (45) |

where the quantity Ψi (τ, bL, bH, T, Tm, σi, δi, ui) is given by

| (46) |

Incorporating this into the model yields the following expression for the bin-likelihood (which includes the contribution of both background and a fluorescence decay signal):

| (47) |

where summarizes the parameters required for the approximation of the instrument response.

A2 Synthetic Data

Synthetic data were generated to simulate a variety of mono- and bi-exponential decay conditions; all generated data reflected a modelled TCSPC system having a repetition rate of 40 MHz, a measurement interval of 20.0 ns partitioned into 256 bins of equal width, the effects of a Gaussian IRF having a FWHM width of 0.15 ns, and incorporated Poisson noise at each bin.

A3 Human Epithelial Carcinoma Cell Preparation

Human epithelial carcinoma cells (A431) (ATTC, UK) stably expressing cdc42-GFP [42] were grown in Dulbecco’s modified Eagle’s medium containing 10% fetal calf serum. Cells were seeded on a glass coverslip (50000 cells/ml) and 48 hours later were fixed with 4% paraformaldehyde, treated with 1mg/ml NaBH4, then mounted with Mowiol (VWR, UK) containing antifade agent and kept at -20 degC.

A4 Cell Pellet Preparation

Cell pellet preparations offer the opportunity to have the controllability of a cell experiment but in a medium that is similar to a tissue section. MCF7 cells (ATTC, UK) were cultured in DMEM supplemented with 10% FCS. Cells were transfected with HER2-GFP and HER3-GFP plasmids at ratio 3:1 using FuGene6 (Promega) according to the manufacturer’s protocol, and cultured for 24 hours. Cells were fixed with 10% formalin for 5 hours and then processed overnight on Leica ASP300S and embedded into paraffin on Leica EG11508C. For staining, cell pellets or tissue were cut (3 μm) and underwent the same antigen retrieval procedure (BenchMark Ventana) and antibody-based staining with Alexa546 and Cy5.

A5 Human Breast Cancer Tissue Preparation

FLIM data was obtained from an ongoing study of protein interactions in human tissue by our collaborators. Tissue microarrays were created from 218 primary breast cancers from patients included in the METABRIC (Molecular Taxonomy of Breast Cancer International Consortium) study [43], and whose material was stored by King’s Health Partners Cancer Biobank. These underwent standard de-waxing and antibody retrieval protocols, and were stained for HER3 and HER2 proteins. Anti-HER3 (B9A11) was purchased from Monogram Biosciences Inc., anti-HER2 (e2-4001+3B5) was purchased from ThermoScientific Ltd. and directly labeled according to the manufacturer’s protocol with Alexa546 (X546) and Cy5, respectively. Human tissue samples were provided by King’s Health Partners Cancer Biobank, a Human Tissue Authority licenced facility (reference 12121) with NHS Research Ethics Committee approval to provide samples for research (12/EE/0493).

A6 FLIM Data Acquisition

Time-domain FLIM was performed with an in-house Open Microscope system [44], excitation being provided by a supercontinuum 40MHz source (SC450-4, Fianium, UK), pulse width ∼4 ps, and photon counting performed using a SPC830 TCSPC board (Becker & Hickl, Berlin, Germany). De-scanned detection was afforded by the use of a fast single-photon response, photomultiplier tube (PMH-100-0, Becker & Hickl, Berlin, Germany) placed behind a pinhole, parfocal with the image plane. The following filters were used in data acquisition from cell samples: 470 nm excitation filter (Semrock FF01-470/22-25, Laser 2000, UK) and an IR cut filter, 495 nm dichromatic reflector (Semrock FF 495-Di02-25x36, Laser 2000, UK), and 510 nm emission filter (Semrock FF01-510/20-25, Laser 2000, UK). The following filters were used in data acquisition from tissue samples: 540 nm excitation filter (Semrock FF01-540/15-25, Laser 2000, UK) and an IR cut filter, 30/70 neutral beam splitter (48NT-392 30R/70T, Edmund Optics, UK), and 593 nm emission filter (Semrock FF01-593/40-25, Laser 2000, UK)

To avoid pulse pile-up, peak photon counting rates were adjusted to be well below the maximum counting rate offered by the TCSPC electronics [34], with average photon counting rates of the order 104 − 105 photons/second. The photon arrival times, with respect to the 40 MHz repetitive laser pulses, were binned into 256 time windows over a total measurement period of 15 ns. Images were captured with a 0.75 NA objective lens (S Fluor 20x/0.75 air, Nikon, UK) at 256 × 256 pixels corresponding to 334 × 334 μm at the sample. Data acquisition was performed at room temperature.

IRF measurement was performed by replacing the sample with an Aluminium-coated reflective slide and removing the emission filter such that reflected excitation light reaches the detector. In order to replicate experimental conditions in terms of laser power and photon detection rate, neutral density filters (ND10A and ND40A, Thor Labs, UK) were placed in the excitation light path.

Acknowledgments

We thank Prof T. Ng and Dr G. Weitsman for cell and tissue samples and other supporting staff at the Randall Division of Cell and Molecular Biophysics, King’s College London and at the Cancer Research UK and Medical Research Council Oxford Institute for Radiation Oncology, University of Oxford.

Data Availability

All image data and analysis results files are available from the Open Science Framework database (DOI 10.17605/OSF.IO/G8FT2).

Funding Statement

This work was supported by Cancer Research UK (www.cancerresearchuk.org), Programme grant C133/A/1812: BV, and Engineering and Physical Sciences Research Council (www.epsrc.ac.uk), EP/C546105/1 and EP/C546113/1: BV ACCC.

References

- 1. Pawley JB. Handbook of Biological Confocal Microscopy. Springer-Verlag New York Inc; 2006. [Google Scholar]

- 2. Denk W, Strickler J, Webb W. Two-photon laser scanning fluorescence microscopy. Science. 1990;248(4951):73–76. 10.1126/science.2321027 [DOI] [PubMed] [Google Scholar]

- 3. Hell SW, Wichmann J. Breaking the diffraction resolution limit by stimulated emission: stimulated-emission-depletion fluorescence microscopy. Optics Letters. 1991;19(11):780–782. 10.1364/OL.19.000780 [DOI] [PubMed] [Google Scholar]

- 4. Rust M, Bates M, Zhuang X. Sub-diffraction-limit imaging by stochastic optical reconstruction microscopy (STORM). Nature Methods. 2006;3(10):793–796. 10.1038/nmeth929 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Ploem JS. Laser scanning fluorescence microscopy. Applied Optics. 1987. August;26(16):3226–3231. 10.1364/AO.26.003226 [DOI] [PubMed] [Google Scholar]

- 6. Shaner NC, Steinbach PA, Tsien RY. A guide to choosing fluorescent proteins. Nature Methods. 2005;2(12):905–909. 10.1038/nmeth819 [DOI] [PubMed] [Google Scholar]

- 7. Ormö M, Cubitt AB, Kallio K, Gross LA, Tsien RY, Remington SJ. Crystal Structure of the Aequorea victoria Green Fluorescent Protein. Science. 1996. September;273(5280):1392–1395. 10.1126/science.273.5280.1392 [DOI] [PubMed] [Google Scholar]

- 8. Crivat G, Taraska JW. Imaging proteins inside cells with fluorescent tags. Trends in biotechnology. 2012. January;30(1):8–16. 10.1016/j.tibtech.2011.08.002 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Gerritsen HC, Sanders R, Draaijer A, Ince C, Levine YK. Fluorescence lifetime imaging of oxygen in living cells. Journal of Fluorescence. 1997. March;7(1):11–15. 10.1007/BF02764572 [DOI] [PubMed] [Google Scholar]

- 10. Lakowicz JR. Principles of Fluorescence Spectroscopy. Lakowicz JR, editor. Plenum Press, New York; 1983. [Google Scholar]

- 11. Cheng PC. The Contrast Formation in Optical Microscopy In: JBPE, editor. The Handbook of Biological Confocal Microscopy. Plenum Press, New York; 2006. p. 162–206. [Google Scholar]

- 12. Förster T. Zwischenmolekulare Energiewanderung und Fluoreszenz [Intermolecular energy migration and fluorescence] (in German). Annalen der Physik. 1948;437:55–75. [Google Scholar]

- 13. Wallrabe H, Periasamy A. Imaging protein molecules using FRET and FLIM microscopy. Current Opinion in Biotechnology. 2005;16:19–27. 10.1016/j.copbio.2004.12.002 [DOI] [PubMed] [Google Scholar]

- 14. Peter M, Ameer-Beg SM, Hughes MKY, Keppler MD, Prag S, Marsh M, et al. Multiphoton-FLIM Quantification of the EGFP-mRFP1 FRET Pair for Localization of Membrane Receptor-Kinase Interactions. Biophysical Journal. 2005;88:1224–1237. 10.1529/biophysj.104.050153 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Ameer-Beg SM, Peter M, Keppler MD, Prag S, Barber PR, Ng TC, et al. Dynamic imaging of protein-protein interactions by MP-FLIM. Proc SPIE. 2005; (5700):152–161. 10.1117/12.589202 [DOI] [Google Scholar]

- 16. Ng T, Squire A, Hansra G, Bornancin F, Prevostel C, Hanby A, et al. Imaging Protein Kinase Cα Activation in Cells. Science. 1999;283:2085–2089. 10.1126/science.283.5410.2085 [DOI] [PubMed] [Google Scholar]

- 17. Wouters FS, Verveer PJ, Bastiaens PI. Imaging biochemistry inside cells. Trends Cell Biol. 2001;11(5):203–11. 10.1016/S0962-8924(01)01982-1 [DOI] [PubMed] [Google Scholar]

- 18. Rowley MI, Barber PR, Coolen ACC, Vojnovic B. Bayesian analysis of fluorescence lifetime imaging data. Proc SPIE. 2011;790325:1–12. [Google Scholar]

- 19. Barber PR, Ammer-Beg SM, Gilbey J, Carlin LM, Keppler M, Ng TC, et al. Multiphoton time-domain fluorescence lifetime imaging microscopy: practical application to protein-protein interactions using global analysis. Journal of The Royal Society Interface. 2009;6:S93–S105. 10.1098/rsif.2008.0451.focus [DOI] [Google Scholar]

- 20. Tellinghuisen J. Bias and Precision in the Estimation of Exponential Decay Parameters from Sparse Data. Analytical Chemistry. 1993;65 (9):1240–1246. 10.1021/ac00057a022 [DOI] [Google Scholar]

- 21. Turton DA, Reid GD, Beddard GS. Accurate Analysis of Fluorescence Decays from Single Molecules in Photon Counting Experiments. Analytical Chemistry. 2003;75 (16):4182–4187. 10.1021/ac034325k [DOI] [PubMed] [Google Scholar]

- 22. Nishimura G, Tamura M. Artefacts in the analysis of temporal response functions measured by photon counting. Physics in Medicine and Biology. 2005;50:1327–1342. 10.1088/0031-9155/50/6/019 [DOI] [PubMed] [Google Scholar]

- 23. Gerritsen HC, Asselbergs MAH, Agronskaia AV, Sark WGJHMV. Fluorescence lifetime imaging in scanning microscopes: acquisition speed, photon economy and lifetime resolution. Journal of Microscopy. 2002;206:218–224. 10.1046/j.1365-2818.2002.01031.x [DOI] [PubMed] [Google Scholar]

- 24. Hall P, Sellinger B. Better Estimates of Exponential Decay Parameters. Journal of Physical Chemistry. 1981;85 (20):2941–2946. 10.1021/j150620a019 [DOI] [Google Scholar]

- 25. Bajzer Z, Therneau TM, Sharp JC, Prendergast FG. Maximum likelihood method for the analysis of time-resolved fluorescence decay curves. European Biophysics Journal. 1991;20 (5):247–262. 10.1007/BF00450560 [DOI] [PubMed] [Google Scholar]

- 26. Laurence TA, Chromy BA. Efficient maximum likelihood estimator fitting of histograms. Nature Methods. 2010;7(5):338–339. 10.1038/nmeth0510-338 [DOI] [PubMed] [Google Scholar]

- 27. Barber PR, Ammer-Beg SM, Gilbey J, Edens RJ, Ezike I, Vojnovic B. Global and pixel kinetic data analysis for FRET detection by multi-photon time-domain FLIM. Proc SPIE. 2005;5700:171–181. 10.1117/12.590510 [DOI] [Google Scholar]

- 28. Grecco HE, Roda-Navarro P, Verveer PJ. Global analysis of time correlated single photon counting FRET-FLIM data. Optics Express. 2009;17:6493–6508. 10.1364/OE.17.006493 [DOI] [PubMed] [Google Scholar]

- 29. Leung RWK, Yeh SCA, Fang Q. Effects of incomplete decay in fluorescence lifetime estimation. Biomedical Optics Express. 2011;2:2517–2531. 10.1364/BOE.2.002517 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30. Digman MA, Caiolfa VR, Zamai M, Gratton E. The Phasor Approach to Fluorescence Lifetime Imaging Analysis. Biophysical Journal. 2007;94 (2):L14–L16. 10.1529/biophysj.107.120154 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31. Jo JA, Fang Q, Marcu L. Ultrafast Method for the Analysis of Fluorescence Lifetime Imaging Microscopy Data Based on the Laguerre Expansion Technique. IEEE Journal of Selected Topics in Quantum Electronics. 2005;11 (4):835–845. 10.1109/JSTQE.2005.857685 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32. Dabir AS, Trivedi CA, Ryu Y, Pande P, Jo JA. Fully automated deconvolution method for on-line analysis of time-resolved fluorescence spectroscopy data based on an iterative Laguerre expansion technique. Journal of Biomedical Optics. 2009;14 (2). 10.1117/1.3103342 [DOI] [PubMed] [Google Scholar]