Abstract

Investigations into the neural basis of memory in human and non-human primates have focused on the hippocampus and associated medial temporal lobe (MTL) structures. However, how memory signals from the hippocampus affect motor actions is unknown. We propose that approaching this question through eye movements, especially by assessing the changes in looking behavior that occur with experience, is a promising method for exposing neural computations within the hippocampus. Here, we review how looking behavior is guided by memory in several ways, some of which have been shown to depend on the hippocampus, and how hippocampal neural signals are modulated by eye movements. Taken together, these findings highlight the need for future research on how MTL structures interact with the oculomotor system. Probing how the hippocampus reflects and influences motor output during looking behavior offers a practical path toward a better understanding of the hippocampal memory system.

Keywords: Memory, Medial temporal lobe, Hippocampus, Eye movements, Saccades, Oculomotor system, Spatial memory, Neurons, Free-viewing, Primate

1. Introduction

For decades, looking behavior has been used to assess memory (Hannula, Althoff, Warren, Riggs, Cohen, and Ryan, 2010), and recent efforts have identified subtle changes in viewing behavior that indicate memory. However, we currently know very little about the relationship between medial temporal lobe (MTL) structures that are necessary for memory and the oculomotor system that controls eye movements. In an attempt to motivate future research that investigates the neural mechanisms by which memory interacts with eye movement, here we review studies demonstrating the influence of memory on looking behavior, describe related neural signals in MTL structures, and discuss potential points of interaction between the MTL and oculomotor systems.

The study of biological systems in more natural settings, where experimental stimuli are less artificial and required behavior is less controlled, has been growing. This approach has been explicitly called for in certain fields, such as vision (Churchland, Ramachandran, and Sejnowski, 1994; Findlay and Gilchrist, 2003; Geisler and Ringach, 2009), as a way to better expose the operations of the nervous system. Because vision naturally relies heavily upon eye movements, it has been argued that vision should be studied in the context of looking behavior, rather than with the more common technique of requiring subjects to fixate for long periods while visual stimuli are presented peripherally (Findlay and Gilchrist, 2003). A similar argument for the importance of natural behavior has been made for the study of eye movements themselves (Tatler, Hayhoe, Land, and Ballard, 2011), where this approach has yielded great insight into what constitutes normal behavior. We wish to extend this idea by advocating a more natural approach to the study of memory. As we describe below, behavioral paradigms that allow both humans and non-human primates to freely view images have uncovered a range of effects of experience on eye movements. Importantly, these experience-dependent changes in viewing behavior have often been shown to depend upon the integrity of MTL structures, and eye movements have been shown to modulate MTL neural activity. We will review these findings and explore how future research on hippocampal function can benefit from discovering how the MTL reflects and affects eye movements.

2. Why study the neurophysiology of memory through eye movement?

Primacy of looking for primates

Vision is a primate’s primary sensory modality. Unlike rodents, for example, which boast an impressive olfactory ability, we boast an impressive visual ability and chiefly use vision to extract information from the world around us. It is interesting to note that in the English language, we use terms associated with vision as synonyms for “understand”: “I see your point”, “show me what you mean”, “we don’t have the same views”, “her innovative vision for the future”, “it opened my eyes.” Primates’ natural inclination towards visual sensing is also illustrated by the fact that monkeys do not have to be trained to look at pictures, and readily initiate image viewing even without a reward offered by the experimenter (Wilson and Goldman-Rakic, 1994). Monkeys show a preference for a picture over a blank screen (Humphrey, 1972) and look longer at pictures than at a homogeneous color field (Wilson and Goldman-Rakic, 1994). Additionally, memory for what we view is impressive: a large literature demonstrates that humans can achieve almost perfect recognition of previously viewed images even when tested with sets of hundreds to thousands of images (Shepard, 1967; Standing, 1973; Standing, Conezio, and Haber, 1970).

The mechanics of looking heavily influence our memory because the visual input that feeds memory is highly discretized by eye movements. Specifically, primate looking behavior is constituted by fixations and saccades that break up visual information. “Saccades” are rapid, ballistic eye movements that direct the central, high-resolution areas of our retinae around the environment. By contrast, “fixations” are the still periods of time between eye movements, where the retinal image is relatively stable and we can extract detailed information from visual stimuli. Despite the uniform perception we have of looking at a stable visual scene, we are in fact making saccades about three to five times a second, and we actually only see visual detail within about two degrees of visual angle (about the width of your thumbs held next to each other at arm’s length) of the world at any one moment (Findlay and Gilchrist, 2003). Recognition memory for objects more than two degrees away from fixation is impoverished, suggesting that direct fixation is necessary for an object within a visual scene to be reliably encoded during natural viewing (Nelson and Loftus, 1980). Fixation count is arguably a currency of memory, as the strength of picture recognition depends on the number of fixations made during encoding (Kafkas and Montaldi, 2011; Molitor, Ko, Hussey, and Ally, 2014) rather than how long the picture was viewed (Loftus, 1972). As spatially specific indicators of attention and perception, fixations also determine what we remember within pictures, with stronger memory associated with image regions that contained more fixations during encoding (Irwin and Zelinsky, 2002; Pertzov, Avidan, and Zohary, 2009; van der Linde, Rajashekar, Bovik, and Cormack, 2009).

Looking behavior guided by memory

Measuring the novelty preference in looking behavior is one way memory can be assessed in the laboratory in a relatively natural context (Buffalo, Ramus, Clark, Teng, Squire, and Zola, 1999; Manns, Stark, and Squire, 2000; Zola, Squire, Teng, Stefanacci, Buffalo, and Clark, 2000). Most often used by developmental psychologists to observe memory in human infants (Reynolds, 2015), preferential looking at novel objects is a memory metric that capitalizes on primates’ innate preference for novelty and their ability to form a robust memory for an image viewed for only a few seconds.

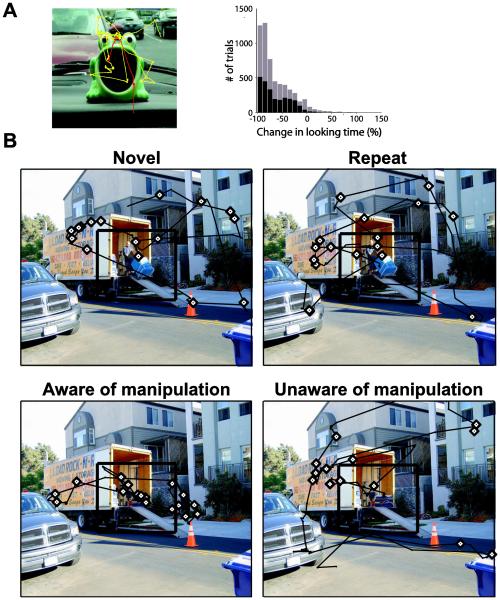

The simplest method for quantifying novelty preference in looking behavior is to compare the overall time spent looking at novel and repeated stimuli. In the “Visual Paired Comparison Task,” novelty preference is quantified as the proportion of time spent looking at a novel image when it is presented alongside a previously viewed image. Using this measure, healthy human adults, infants, and monkeys exhibit a preference for looking at novel stimuli (Buffalo et al., 1999; Crutcher, Calhoun-Haney, Manzanares, Lah, Levey, and Zola, 2009; Fagan, 1970; Manns et al., 2000; McKee and Squire, 1993; Nemanic, Alvarado, and Bachevalier, 2004; Zola, Manzanares, Clopton, Lah, and Levey, 2012; Zola et al., 2000). In the “Visual Preferential Looking Task” (Figure 1A), developed for performing neurophysiology experiments of novelty preference in monkeys (Wilson and Goldman-Rakic, 1994), only one image is presented at a time, and if the monkey looks away, the image vanishes and the trial ends. Comparing overall looking time for novel and repeated images also reveals novelty preference in this task (Jutras, Fries, and Buffalo, 2009; Killian, Jutras, and Buffalo, 2012; Wilson and Goldman-Rakic, 1994).
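The two looking-time measures described above can be written out explicitly. The Python sketch below is illustrative only: it assumes looking times have already been extracted from eye-tracking data (in seconds), the function names are hypothetical, and the percent-change convention follows the description in the Figure 1A caption rather than any published analysis code.

```python
def novelty_preference(t_novel: float, t_repeat: float) -> float:
    """Visual Paired Comparison: proportion of total looking time spent on the
    novel image when it is shown alongside a previously viewed image.
    0.5 indicates no preference; values above 0.5 indicate novelty preference."""
    total = t_novel + t_repeat
    if total <= 0:
        raise ValueError("no looking time recorded")
    return t_novel / total


def looking_time_change(t_first: float, t_second: float) -> float:
    """Visual Preferential Looking: change in looking time on the second
    presentation, as a percentage of looking time on the first presentation.
    Negative values mean the image was looked at longer the first time."""
    if t_first <= 0:
        raise ValueError("first presentation must have positive looking time")
    return 100.0 * (t_second - t_first) / t_first


print(novelty_preference(t_novel=3.0, t_repeat=1.0))   # 0.75
print(looking_time_change(t_first=4.0, t_second=1.0))  # -75.0
```

A proportion is used for the paired task because both images compete for the same viewing period, whereas the single-image task has no competitor, so each image is compared with its own first presentation.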

Figure 1. A. Monkeys look less at a repeated image.

Monkeys performing the Visual Preferential Looking Task control the total time images are presented to them by looking away from the image, which causes it to vanish and the next trial to begin. LEFT: Scan paths of the monkey for the first (yellow) and second (red) presentations of the image are shown. The monkey spent much less time viewing the image in the second presentation. RIGHT: Histogram depicts the change in looking time as a percentage of the amount of time the monkey spent looking at the first presentation of each stimulus over 45 sessions for two monkeys (black: Monkey A; gray: Monkey B). A negative value represents images for which looking times were longer during the first presentation. Figure from Jutras, Fries and Buffalo (2009). B. People fixate more on a manipulated region of a previously seen image if they are aware of what has been manipulated. Eye movement traces (black lines) and fixations (diamonds) for four participants are shown when the image was novel, repeated, manipulated when the participant was aware the scene had been changed, or manipulated when the participant was unaware the scene had been changed. Eye movement data are for the 5 seconds that the image was presented. The participant who was aware the scene had been changed (bottom right) exhibited a greater proportion of her fixations within the manipulated region than the participant who was unaware of the manipulation or the participants who had never seen a different version of the image (top row). The participants who viewed this image as a manipulated scene (bottom row) had previously seen a version of the image in which a man with a dolly was in the region identified by the black square. The black square on each image identifies the critical region, but the square did not appear during testing. Figure from Smith et al. (2006).

Another measure of viewing behavior that reflects stimulus novelty is the number of fixations made while freely viewing visual scenes. Several studies have reported that more fixations are made within novel scenes than within repeated or familiar scenes (Althoff and Cohen, 1999; Hannula et al., 2010; Ryan, Althoff, Whitlow, and Cohen, 2000; Smith, Hopkins, and Squire, 2006; Smith and Squire, 2008). A related effect has been observed in a virtual 3D environment that approximates a real-life setting more closely (Kit, Katz, Sullivan, Snyder, Ballard, and Hayhoe, 2014): subjects became familiar with the environment by performing virtual household tasks over several days of sessions, and novel features later introduced into the environment were fixated with higher probability than control objects.

Both the timing and distribution of eye movements have also been shown to indicate whether a stimulus is encoded in memory. In general, people make shorter fixations when they view novel images than when they view repeated images (Smith et al., 2006). The duration of fixations has also been linked to the strength of memory encoding, as fixations are shorter when subjects view novel images that are later reported as recollected compared to those that are subsequently forgotten (Kafkas and Montaldi, 2011). People also sample (fixate) fewer image regions when viewing a repeated image (Althoff and Cohen, 1999; Smith et al., 2006; Smith and Squire, 2008), and this change in behavior has been linked to awareness that an image is repeated (Smith and Squire, 2008). Fixations have also been reported to be more clustered across the image space when subjects initially view a later-recollected image compared to an image that was subsequently only judged as familiar (Kafkas and Montaldi, 2011).
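To make these measures concrete, the sketch below summarizes a single viewing of an image as the number of fixations, the mean fixation duration, and the number of regions sampled. It is a minimal illustration under stated assumptions: fixations are already detected, and a "region" is simply a cell of a fixed grid over the image (the hypothetical `n_cols` x `n_rows` grid); the cited studies may define regions differently.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    x: float         # horizontal position on the image, pixels
    y: float         # vertical position on the image, pixels
    duration: float  # seconds


def viewing_summary(fixations, image_w, image_h, n_cols=4, n_rows=4):
    """Summarize one viewing of an image: number of fixations, mean fixation
    duration, and number of regions sampled. A 'region' here is a cell of an
    n_cols x n_rows grid (a simplifying assumption)."""
    cells = set()
    for f in fixations:
        # Map each fixation to a grid cell; clamp points on the right/bottom edge.
        col = min(int(f.x / image_w * n_cols), n_cols - 1)
        row = min(int(f.y / image_h * n_rows), n_rows - 1)
        cells.add((row, col))
    n = len(fixations)
    mean_duration = sum(f.duration for f in fixations) / n if n else 0.0
    return {"n_fixations": n, "mean_duration": mean_duration,
            "regions_sampled": len(cells)}


# Hypothetical data for an 800 x 600 pixel image.
novel = [Fixation(50, 50, 0.25), Fixation(300, 50, 0.25),
         Fixation(600, 400, 0.25), Fixation(700, 500, 0.25)]
repeated = [Fixation(60, 60, 0.5), Fixation(120, 100, 0.5)]
print(viewing_summary(novel, 800, 600))
print(viewing_summary(repeated, 800, 600))
```

With these made-up numbers, the novel viewing yields more, shorter fixations spread over four regions, while the repeated viewing yields fewer, longer fixations confined to one region, mirroring the direction of the effects described above.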

In another paradigm that exploits novelty preference to measure memory, one portion of a scene is altered between the first and the repeated viewing: an object in the scene is removed, replaced, or moved to a new location. Subjects then look preferentially at the altered region of the previously seen image: they view it longer, make more fixations within it (Figure 1B), and make more eye movement transitions into and out of it (Ryan et al., 2000; Smith et al., 2006; Smith and Squire, 2008). Interestingly, this behavior is apparent even when subjects are instructed simply to view the images, and are under no explicit experimental instruction to remember the stimuli or identify changes (Ryan et al., 2000; Smith and Squire, 2008).
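Two of the critical-region measures above, the proportion of fixations inside the altered region and the number of transitions into and out of it, can be sketched as follows. The function name is hypothetical, and the region is assumed to be an axis-aligned rectangle, in line with the black square marking the critical region in Figure 1B.

```python
def critical_region_measures(fixations, region):
    """fixations: sequence of (x, y) positions in viewing order.
    region: (x_min, y_min, x_max, y_max) bounding the altered region.
    Returns (proportion of fixations inside the region,
             number of transitions into or out of the region)."""
    x_min, y_min, x_max, y_max = region
    inside = [x_min <= x <= x_max and y_min <= y <= y_max for x, y in fixations]
    if not inside:
        return 0.0, 0
    proportion = sum(inside) / len(inside)
    # A transition is any consecutive pair of fixations that crosses the boundary.
    transitions = sum(a != b for a, b in zip(inside, inside[1:]))
    return proportion, transitions


scan = [(10, 10), (105, 105), (110, 120), (300, 300), (150, 150)]
print(critical_region_measures(scan, region=(100, 100, 200, 200)))  # (0.6, 3)
```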

Although there is general agreement that hippocampal-dependent memory is typically accompanied by conscious awareness, there is some disagreement about whether awareness is required for memory-guided viewing. For example, on some trials human subjects preferentially viewed a manipulated region of an image without correctly reporting awareness of the manipulation (Ryan et al., 2000). Hannula and colleagues (2010) posit that this phenomenon supports the idea that awareness is not necessary for memory-guided viewing behavior. However, later studies did not replicate this result, and found that awareness was required for preferential viewing of a manipulated region (Smith et al., 2006; Smith and Squire, 2008). Methodological differences could explain this discrepancy. One difference concerned how the scenes were manipulated: only by adding and removing stimuli (Smith et al., 2006; Smith and Squire, 2008), or also by shifting the left-right location of an object (Ryan et al., 2000). Another important difference was the instructions given to the participants. Ryan et al. (2000) asked the subjects questions that oriented their attention to the critical region of the scene before the manipulation, whereas Smith and Squire (2008) simply instructed the subjects to pay attention to each picture, and subjects did not expect that their memory would be tested. Future studies are needed to determine how these methodological differences affect the contributions of hippocampal processing and awareness to memory-guided viewing.

A change in pupil diameter has also been described as a memory response, and modulation of pupil dilation by memory persists even when people are instructed to feign forgetting (Heaver and Hutton, 2011). When humans freely view images, the pupil diameter is larger for repeated images ~850 ms after image onset, and this size difference between novel and repeated images is maintained for at least several seconds (Bradley and Lang, 2015). The magnitude and rate of pupil constriction in response to a novel image have also been shown to predict declarative memory for that image (Bradley and Lang, 2015; Kafkas and Montaldi, 2011; Naber, Frässle, Rutishauser, and Einhäuser, 2013).

Another modification of looking behavior by experience is the tendency of eye movements and visual attention to be directed away from previously attended locations (Posner and Cohen, 1984; Wang and Klein, 2010). Also known as “inhibition of return” (IOR), this phenomenon has been proposed to facilitate foraging (Klein, 1988), so that we advantageously avoid returning to previously examined locations that no longer provide new information. IOR has been observed when humans and monkeys view pictures, particularly during tasks in which the goal is to find a visual target within a display (Gilchrist and Harvey, 2000; Motter and Belky, 1998; Shariat Torbaghan, Yazdi, Mirpour, and Bisley, 2012; Wang and Klein, 2010).
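The foraging account of IOR can be illustrated with a toy model. The sketch below is not taken from the cited studies: it is a hypothetical greedy scan path, loosely in the spirit of saliency-map models of visual search, in which each fixation goes to the most salient location and, with IOR enabled, a fixated location is suppressed so that gaze moves on rather than returning to it.

```python
def scan_path(salience, n_fixations, inhibition_of_return=True):
    """Toy greedy scan path: each fixation goes to the most salient location.
    With inhibition of return, a fixated location is suppressed so gaze moves
    on to the next most salient location instead of returning to it."""
    s = list(salience)
    path = []
    for _ in range(n_fixations):
        target = max(range(len(s)), key=lambda i: s[i])
        path.append(target)
        if inhibition_of_return:
            s[target] = float("-inf")  # suppress the just-examined location
    return path


salience = [0.2, 0.9, 0.5, 0.7]  # hypothetical values for four locations
print(scan_path(salience, 3))                              # [1, 3, 2]
print(scan_path(salience, 3, inhibition_of_return=False))  # [1, 1, 1]
```

Without IOR, the model re-fixates the single most salient location indefinitely; with it, each fixation samples new information. In this toy version the suppression never decays, so once every location has been visited the model has nothing new to examine; a fuller model would let inhibition fade over time.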

Other properties of natural looking behavior in a real-world environment have suggested the existence of a non-retinal neural map of remembered visual space that informs looking (Hayhoe, Shrivastava, Mruczek, and Pelz, 2003; Pertzov, Zohary, and Avidan, 2010). Behavioral evidence (described below) suggests the existence of a neural spatial map that, unlike the spatial maps in the visual cortex, reflects spatial memory instead of current sensory information, and importantly, is referenced to features of the environment instead of the body. For example, neurons in the visual cortex reflect visual stimuli with fidelity to where those stimuli fall on the two retinae, so that when the eyes move, and a new image falls on the retinae, the activity of visual cortical neurons reflects this new arrangement. Consequently, this retinotopic map of visual space changes with every eye movement. This retinal reference frame is more generally referred to as an “egocentric frame of reference” because the map reflects stimuli relative to a part of the body (which in this case is the retina). However, despite the fact that many primate brain areas are retinotopic, behavior indicates that a non-retinal, or “allocentric” neural map (a map locked to environmental features instead of the body) of remembered visual space may exist.
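The difference between the two reference frames can be shown with simple arithmetic. In the sketch below, which assumes a fixed head, a flat display, and small angles so that retinal position is approximately object position minus gaze position (a simplification, not a model from the cited work), the door's allocentric position never changes, while its retinotopic position changes with every gaze shift.

```python
def retinal_position(object_pos, gaze_pos):
    """Approximate eye-centered (retinotopic) position of an object, in degrees
    of visual angle: its environment-fixed position minus the current gaze
    position. Assumes head fixed and small angles on a flat display."""
    return (object_pos[0] - gaze_pos[0], object_pos[1] - gaze_pos[1])


door = (10.0, 0.0)  # allocentric position; does not change when the eyes move
for gaze in [(0.0, 0.0), (10.0, 0.0), (15.0, 5.0)]:
    print(gaze, "->", retinal_position(door, gaze))
# (0.0, 0.0) -> (10.0, 0.0)
# (10.0, 0.0) -> (0.0, 0.0)
# (15.0, 5.0) -> (-5.0, -5.0)
```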

Just as a personal “sense” of spatial awareness or a memory for a location outside the field of view suggests that such a non-retinal map of remembered visual space exists in the human brain, so does laboratory evidence of human eye movement. During natural tasks, people can make large gaze shifts to targets outside the field of view (Land, Mennie, and Rusted, 1999), suggesting the existence of a spatial memory that can guide orienting to a target not currently in sight. Additionally, tracking eye movements during natural tasks, like making a sandwich (Hayhoe et al., 2003; Land et al., 1999) or tea (Land et al., 1999), reveals that people often make sequences of short saccades that follow one another too quickly (< 100 ms) to be individually planned (saccade planning typically takes ~200 ms). The existence of these rapid saccadic sequences indicates that the eye movements are sometimes pre-planned in a spatial frame of reference independent of current eye position (Hayhoe et al., 2003), because each change in eye position changes the retinal image and, with it, the retinal location of the saccadic target. Congruent with the notion that saccadic sequences can be pre-programmed, Zingale and Kowler (1987) demonstrated that the latency to initiate a sequence of saccades increased with the number of saccades in the sequence.
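The timing argument above can be expressed as a simple check on saccade timestamps. In this minimal sketch, the function name is hypothetical, the interval is assumed to run from the end of one saccade to the start of the next (other definitions are possible), and the 100 ms threshold is taken from the text.

```python
FAST_INTERVAL_MS = 100  # threshold from the text: "< 100 ms" between saccades


def fast_saccade_pairs(saccades):
    """saccades: list of (onset_ms, offset_ms) in temporal order.
    Returns indices i for which saccade i+1 begins less than FAST_INTERVAL_MS
    after saccade i ends, i.e. intervals much shorter than the ~200 ms
    typically needed to plan a saccade."""
    pairs = []
    for i, (prev, nxt) in enumerate(zip(saccades, saccades[1:])):
        interval = nxt[0] - prev[1]  # end of saccade i to start of saccade i+1
        if interval < FAST_INTERVAL_MS:
            pairs.append(i)
    return pairs


# Hypothetical timestamps: saccades 1 -> 2 and 2 -> 3 are separated by only 40 ms.
saccades = [(0, 40), (250, 290), (330, 360), (400, 440), (700, 730)]
print(fast_saccade_pairs(saccades))  # [1, 2]
```

A pair flagged this way is consistent with the second saccade having been programmed before the first was executed, which is what motivates the pre-planning interpretation.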

Another looking behavior associated with memory is re-enactment, in which spontaneous eye movements during mental imagery (e.g., recalling visual content as if it were being currently seen while looking at a blank screen) closely reflect the content and spatial relations of the original picture (Brandt and Stark, 1997; Johansson and Johansson, 2013; Laeng and Teodorescu, 2002; Spivey and Geng, 2001). This behavior has been linked to improved memory: Allowing subjects to freely move their eyes on a blank screen during recall increases performance on a recall task compared to requiring subjects to stare at one location (Johansson and Johansson, 2013). Even controlled eye movements to instructed screen locations on a blank screen can improve recall performance if the fixation location during recall matches the stimulus location (Johansson and Johansson, 2013). Further work has shown that when subjects are asked questions about a part of a previously viewed image while viewing a blank screen, they spontaneously look at the location where the object had been presented. Interestingly, this behavior correlates with better memory for the object’s features, whereas blocking this behavior by asking subjects to fixate causes a decrease in the quality of memory (Laeng, Bloem, D'Ascenzo, and Tommasi, 2014).

As described in the following sections, discovering the neural bases of these mnemonic looking behaviors is an area of active research. A number of natural looking behaviors in a real-world environment require eye movements to be planned using visuospatial memory in a non-retinal, environmental frame of reference, although a neural map of space using such a reference frame has yet to be found in the traditional oculomotor structures of the primate brain. In addition, the neural mechanisms by which recognition memory guides viewing are currently unknown. To lay the foundation for future research that might address these questions, we first describe experimental findings showing that MTL structures are necessary for experience to influence looking behavior.

Changes in viewing behavior with experience depend on the MTL

The role of the hippocampus and other MTL structures in viewing behavior has been demonstrated in experiments that compare healthy subjects to subjects with MTL damage. Unlike healthy individuals, who show a preference for novelty in the Visual Paired Comparison task, individuals with damage to MTL structures spend an equal amount of time looking at novel and previously viewed images (McKee and Squire, 1993). This is also true for patients diagnosed with amnestic Mild Cognitive Impairment, who likely have altered MTL function (Crutcher et al., 2009). This picture-viewing task may even serve as a diagnostic tool for the MTL deterioration associated with Alzheimer’s disease, as impaired novelty preference on this task predicts the cognitive decline associated with Alzheimer’s disease up to 3 years prior to clinical diagnosis (Zola et al., 2012). Additionally, unlike healthy controls, patients with large lesions of MTL structures, as well as patients with damage limited to the hippocampus, fail to exhibit a decreased number of fixations or regions sampled for repeated images (Smith and Squire, 2008; but cf. Ryan et al., 2000). Amnesic patients also exhibit impaired memory for spatial properties of image content, as evidenced by a lack of preferential viewing of a manipulated region of a repeated scene (Ryan et al., 2000). Amnesic patients with damage limited to the hippocampus are likewise impaired at deciding whether scenes are novel, repeated, or manipulated (Smith et al., 2006). A recent report suggests that memory effects on pupil size also depend on the MTL, because Alzheimer’s patients (with presumed damage to MTL structures), unlike healthy control subjects, fail to demonstrate a pupil constriction memory effect (Dragan, Leonard, Lozano, McAndrews, Ng, Ryan, Tang-Wai, Wynn, and Hoffman, 2014).

Importantly, the effects of MTL damage on novelty preference in viewing behavior have also been demonstrated in monkeys with restricted lesions of MTL structures. Impaired novelty preference has been reported following lesions of the hippocampus alone (Bachevalier, Nemanic, and Alvarado, 2014; Nemanic et al., 2004; Zola et al., 2000), the hippocampus together with parahippocampal cortex (Pascalis and Bachevalier, 1999) or the amygdaloid complex (Bachevalier, Brickson, and Hagger, 1993), the parahippocampal cortex alone (Nemanic et al., 2004), and the perirhinal cortex alone (Bachevalier et al., 2014; Buffalo et al., 1999). Taken together, these findings suggest that the hippocampus and associated MTL structures are critical for several changes in viewing behavior that accompany experience.

Neural activity associated with memory-guided changes in viewing behavior

Neural recordings in MTL structures have revealed responses, locked to eye movements and to the onset of visual stimuli, that reflect memory. In both humans and monkeys, the onset of a visual stimulus often has a dramatic effect upon hippocampal neural responses (Hoffman, Dragan, Leonard, Micheli, Montefusco-Siegmund, Taufik, and Valiante, 2013; Jutras and Buffalo, 2010a; Kreiman, Koch, and Fried, 2000), and modulations in these visual responses have been shown to reflect stimulus novelty. For example, hippocampal (Jutras and Buffalo, 2010a; Rutishauser, Ye, Koroma, Tudusciuc, Ross, Chung, and Mamelak, 2015) and entorhinal neurons (Killian et al., 2012) demonstrate attenuated (match suppression) or enhanced (match enhancement) visual responses when a repeated image is viewed. Importantly, the magnitude of this neural modulation correlates with the strength of memory for the repeated image (Jutras et al., 2010).

Eye movement is also reflected in MTL neural activity. Saccades move the eyes at extreme speeds, preventing us from resolving the identity of visual objects that sweep across our retinae in a blur. These sudden, ballistic eye movements therefore break up the stream of meaningful visual input to the brain. Given these physical properties of looking behavior, it is unsurprising that saccade-related information is reflected in many areas of the primate brain, as in the well-known phenomenon of saccadic suppression within visual brain areas. It follows that brain structures downstream of this visual input, such as the hippocampus, would likewise be modulated by eye movements, perhaps simply reflecting the flow of information passively. However, we believe it is more likely that evolution has equipped the nervous system to synchronize advantageously with the active processes of sensation. This idea is consistent with recent theories of “active sensing,” which propose an optimized relationship between motor behaviors involved in gathering information (including eye movements) and neural activity in sensory areas (Schroeder, Wilson, Radman, Scharfman, and Lakatos, 2010). Additional support for this idea comes from the seminal discovery of an outgoing saccadic motor signal that the brain uses for other internal processing (Sommer and Wurtz, 2002; 2004; 2006; 2008). An elegant series of experiments from Sommer and Wurtz demonstrated that before a saccade is made, the superior colliculus (one of the last stops for an oculomotor signal before it reaches the eye muscles) sends information about the impending eye movement *back* to the brain by way of the medial dorsal nucleus of the thalamus.

If this pathway is temporarily inactivated, so that the superior colliculus cannot inform the rest of the brain about the final eye movement decision, then the response fields of frontal eye field (FEF) neurons fail to shift to their future locations (“anticipatory shifting”), and the monkey shows a specific, circumscribed motor deficit: it cannot account for the first eye movement when attempting to make an accurate second eye movement in a task where both saccades must be planned before either is executed (Sommer and Wurtz, 2006). Specialized neural circuitry that processes saccades beyond the outgoing motor command therefore exists in the primate brain, and it is likely not limited to this one anatomical pathway (Wurtz, Joiner, and Berman, 2011).

Although no experiments to date have targeted the hippocampus to similarly identify the arrival and function of an internal copy of an efferent motor decision, it has been shown that non-visual, eye-movement signals do indeed exist in the MTL. Saccades made in darkness generate event-related potentials in the MTL (Sobotka and Ringo, 1997) and modulate the activity of individual MTL neurons (Ringo and Sobotka, 1994; Sobotka, Nowicka, and Ringo, 1997). By recording and stimulating in two brain structures at different time points relative to an eye movement, Sobotka, Zuo, and Ringo (2002) showed that connectivity between the MTL and the inferotemporal cortex, a region known for visual object processing, peaks 100 ms after fixation, even for saccades made in darkness. Additionally, neurons coding for the direction of a saccade have recently been reported in the entorhinal cortex (Killian, Potter, and Buffalo, 2015). Taken together, these findings demonstrate the relevance of eye movement itself to MTL processing.

Several neurophysiological studies have provided evidence that the relationship between hippocampal processing and eye movements predicts memory formation.

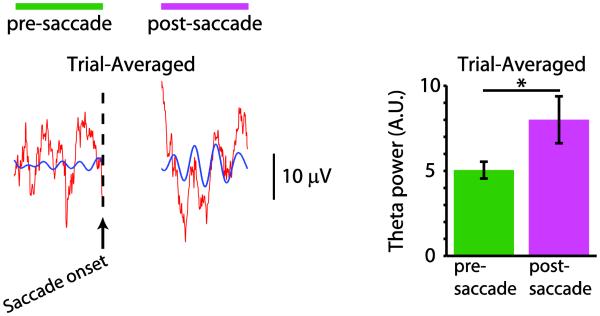

Specifically, modulation of oscillatory hippocampal activity by saccades has been shown to distinguish memory strength. Oscillations in the local field potential reflect the periodic, coherent activity of a population of neurons, and are thought to indicate the rhythm of communication between different brain areas. The theta rhythm (~6-12 Hz) in the hippocampus and other MTL structures has been heavily investigated in rodents because of its importance in defining spatial activity (e.g., where a rodent is within a place field; O'Keefe and Recce, 1993). The phase of an ongoing theta-frequency oscillation in the hippocampal local field potential is reset (returned to a single phase value) at the end of an eye movement in both humans and monkeys performing free-viewing tasks (Hoffman et al., 2013; Jutras, Fries, and Buffalo, 2013). This fixation-locked reset of the hippocampal theta oscillation was shown to be a marker of subsequent memory, in that the reset was more reliable when a monkey viewed images that were later well remembered (Jutras et al., 2013). A possible functional benefit of this fixation-locked reset is that it could place the hippocampus in an optimal encoding state for the input of meaningful visual information, congruent with the idea that long-term potentiation can be optimally induced at particular phases of the theta-band oscillation in hippocampal neurons (Huerta and Lisman, 1995; Hyman, Wyble, Goyal, Rossi, and Hasselmo, 2003; Jutras and Buffalo, 2010b; 2014; McCartney, Johnson, Weil, and Givens, 2004). The finding that the phase reset does not occur when fixations are made in darkness (Hoffman et al., 2013) suggests that this fixation-locked reset requires visual input and perhaps arises from the timing of visual input into the hippocampus rather than from an outgoing saccadic motor signal.
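One common way to quantify how reliable a phase reset is, offered here as an illustration rather than as the exact statistic used in the cited studies, is the mean resultant length of the theta phases sampled at each fixation onset: values near 1 mean the phases cluster tightly, values near 0 mean they are spread around the cycle. The sketch below assumes the phases have already been extracted (for example, by band-pass filtering the LFP and taking the phase of its analytic signal) and uses simulated phases in place of real data.

```python
import numpy as np


def phase_concentration(phases_rad):
    """Mean resultant length of a set of phases: 1 when all phases are
    identical, near 0 when they are spread uniformly around the circle."""
    phases = np.asarray(phases_rad, dtype=float)
    return float(np.abs(np.mean(np.exp(1j * phases))))


# Simulated theta phases at fixation onset (hypothetical data).
rng = np.random.default_rng(0)
with_reset = rng.vonmises(mu=0.0, kappa=4.0, size=500)  # clustered phases
without_reset = rng.uniform(-np.pi, np.pi, size=500)     # random phases
print(round(phase_concentration(with_reset), 2))     # high (clustered phases)
print(round(phase_concentration(without_reset), 2))  # close to 0
```

Under this measure, the subsequent-memory effect described above would appear as higher phase concentration across fixations on later well-remembered images than across fixations on poorly remembered ones.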

MTL activity has also been shown to reflect current eye position within a visual environment. MTL cells reflecting where a monkey looks have been documented in the hippocampus and entorhinal cortex (Feigenbaum and Rolls, 1991; Killian et al., 2012; Meister, Killian, and Buffalo; Nowicka and Ringo, 2000). In some experiments, the monkey’s body position in the room or relative to a visual stimulus was varied, allowing experimenters to test whether neurons represented eye position relative to the monkey’s body (egocentric space, e.g., a neuron fires when the monkey moves his eyes 5 degrees of visual angle to the left, regardless of where the monkey is within the room or what features of the room the monkey is viewing) or gaze position (head and eye position together) relative to visual objects (allocentric space, e.g., a neuron fires when the monkey looks at the door regardless of whether it is to his left or right). Results from these studies have suggested that about 50% of spatially modulated hippocampal neurons code gaze position egocentrically, while the other 50% code gaze position allocentrically (Feigenbaum and Rolls, 1991; Georges-François, Rolls, and Robertson, 1999; Meister et al.). Visual input also appears to contribute to the observed gaze-selective and place-selective activity, because these responses were attenuated in the dark (Nishijo, Ono, Eifuku, and Tamura, 1997; Robertson, Rolls, Georges-François, and Panzeri, 1999).
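The logic of these reference-frame experiments can be sketched in a few lines. The example below is a deliberately simplified, hypothetical classifier: it asks in which frame a neuron's preferred gaze location stays the same across body positions. Actual studies rely on statistical comparisons over many positions and trials; the function names and firing rates here are made up for illustration.

```python
def preferred_location(rate_map):
    """Key of the location with the highest firing rate (spikes/s)."""
    return max(rate_map, key=rate_map.get)


def classify_frame(ego_maps, allo_maps):
    """ego_maps / allo_maps: one firing-rate map per body position, keyed by
    gaze location in egocentric (relative to the body) or allocentric
    (relative to room features) terms. The frame in which the preferred
    location stays the same across body positions is taken as the neuron's
    reference frame."""
    ego_stable = len({preferred_location(m) for m in ego_maps}) == 1
    allo_stable = len({preferred_location(m) for m in allo_maps}) == 1
    if ego_stable and not allo_stable:
        return "egocentric"
    if allo_stable and not ego_stable:
        return "allocentric"
    return "unclassified"


# Hypothetical "door" neuron recorded at two body positions: the door lies to
# the monkey's left in the first position and to its right in the second.
ego = [{"left": 12.0, "right": 2.0}, {"left": 3.0, "right": 11.0}]
allo = [{"door": 12.0, "window": 2.0}, {"door": 11.0, "window": 3.0}]
print(classify_frame(ego, allo))  # allocentric
```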

Although neural maps of the environment in the rodent hippocampus and medial entorhinal cortex (e.g., place cells, grid cells) are well known to respond relative to visual cues in a room, evidence regarding spatial activity in the primate MTL is still emerging. Some evidence exists for a strict primate parallel to rodent place and grid cells. Similar to rodent findings, spatial representations in MTL regions have been observed in humans navigating a virtual environment (Ekstrom, Kahana, Caplan, Fields, Isham, Newman, and Fried, 2003; Jacobs, Weidemann, Miller, Solway, Burke, Wei, Suthana, Sperling, Sharan, Fried, and Kahana, 2013) and in monkeys trained to control a cart to move around a room (Ono, Nakamura, Nishijo, and Eifuku, 1993). However, evidence also exists for a less strict parallel between rodent and primate spatial representations. Hippocampal neurons of a monkey self-locomoting in a cart were shown to be selective not for where the monkey was located, but rather for where the monkey was looking (“spatial view cells”; Feigenbaum and Rolls, 1991; Georges-François et al., 1999). Congruent with a neural spatial map of where a primate is looking, entorhinal cells have been identified that provide a grid-like representation of fixation locations on a computer monitor as monkeys freely viewed images (Killian et al., 2012; Meister et al.). In addition, similar to head direction cells in rodents (Taube, Muller, and Ranck, 1990), entorhinal neurons were recently found to be selective for the direction of the upcoming or previously completed saccade regardless of where the monkey was currently looking (Killian et al., 2015). Although the details regarding these different reference frames across species and brain structures remain to be resolved, a more central open question is how MTL neurons with spatial representations might influence orienting behavior guided by memory.

3. Future work: How does the MTL coordinate with the oculomotor system?

How do eye movements dictate visual input to the hippocampus?

Major progress in understanding memory signals in the primate has been made using monkeys trained to maintain central fixation while visual stimuli are presented (passive viewing). Here, we advocate extending this effective approach by measuring responses during natural, active looking behavior. As reviewed above, experiments that allow free-viewing exploration of images have revealed novel response properties in the hippocampus and entorhinal cortex. Future experiments of this type would be useful for revealing the input format of visual information, such as visual responses locked to the beginning of every fixation, and how these responses are modulated by experience and memory. If visual perception and memory processes are synchronized with the beat of fixations, it may be possible to observe a neural memory signal developing gradually in the hippocampus across fixations, spanning the time from initial visual input to the behavioral output of memory-guided looking. Observing such a gradual build-up would enable the investigation of several additional questions: Would this memory signal appear in hippocampal responses before changes in looking behavior are observed? Would visual responses be especially modulated during fixations on regions of an image that had been manipulated since the previous viewing? Would these modulations depend on whether the manipulation is consciously noticed or preferentially fixated? In a task in which the subject searches for a specific target in a visual display, would MTL neurons signal the successful, target-focusing eye movement before the movement is made, and even before such signals appear in parietal cortex (Mirpour, Arcizet, Ong, and Bisley, 2009) or in the ventral prearcuate region of the prefrontal cortex (Bichot, Heard, Degennaro, and Desimone, 2015)? Tracking recognition through statistics of viewing behavior could enable a form of “recognition psychophysics” that would sharpen our understanding of the MTL’s functional position within a sensory-motor loop.
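
One concrete way to look for visual responses locked to the beginning of every fixation is a fixation-locked peri-event time histogram: spike times are re-expressed relative to each fixation onset, binned, and averaged across fixations, separately for fixations on novel and repeated images. The sketch below is a minimal, hypothetical version that assumes spike times and fixation onsets have already been extracted (in seconds). The function name, window, and bin width are illustrative choices, and real analyses would add smoothing, baseline correction, and statistics.

```python
def fixation_locked_psth(spike_times, fixation_onsets, window=(-0.1, 0.4), bin_width=0.05):
    """Mean firing rate (spikes/s) in time bins aligned to fixation onset.

    spike_times, fixation_onsets: sequences of times in seconds.
    window: (start, stop) relative to fixation onset, in seconds.
    """
    start, stop = window
    n_bins = int(round((stop - start) / bin_width))
    if not fixation_onsets:
        return [0.0] * n_bins
    counts = [0] * n_bins
    for onset in fixation_onsets:
        for t in spike_times:
            rel = t - onset                      # spike time relative to this fixation
            if start <= rel < stop:
                idx = int((rel - start) // bin_width)
                if idx < n_bins:                 # guard against float rounding at the edge
                    counts[idx] += 1
    scale = len(fixation_onsets) * bin_width     # converts summed counts to spikes/s
    return [c / scale for c in counts]

# Hypothetical data: one spike 100-150 ms after each fixation on a novel image.
spikes = [1.12, 2.13, 3.11]
novel_onsets = [1.0, 2.0, 3.0]
repeated_onsets = [1.5, 2.5, 3.5]
novel = fixation_locked_psth(spikes, novel_onsets)
repeated = fixation_locked_psth(spikes, repeated_onsets)
# novel[4] (100-150 ms after onset) is about 20 spikes/s; every bin of `repeated` is 0.
```

Comparing such histograms fixation by fixation, rather than only image by image, is what would allow a gradual build-up of a memory signal to be tracked.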

Findings of neural memory signals locked to eye movements could also motivate a parallel analysis of rodent exploratory behavior. Similar to primates in the Visual Paired Comparison Task, rodents performing the “Novel Object Recognition Task” preferentially explore a novel object when it is presented simultaneously alongside a previously presented object (Ennaceur and Delacour, 1988). Lesion studies in rodents have shown that the hippocampus is necessary for this preferential exploration (Clark, Zola, and Squire, 2000), and neurons in the rodent hippocampus have also been shown to respond selectively to novel objects (O'Keefe, 1976). Although time-locking neural signals to exploratory behavior is more challenging in rodents than in primates (where eye movements during image exploration provide precisely timed behavioral events), it is plausible that rodent hippocampal responses are similarly locked to exploratory behaviors such as sniffing and head-scanning, and systematically modulated by stimulus novelty.
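
Preferential looking in the Visual Paired Comparison Task and preferential exploration in the Novel Object Recognition Task are usually summarized as a single score per trial or session. The sketch below shows two common forms, a novelty preference (proportion of time on the novel item) and a discrimination index. The function names are hypothetical and exact definitions vary across laboratories, so this is an illustration rather than the scoring used in the studies cited above.

```python
def novelty_preference(time_novel, time_familiar):
    """Proportion of looking/exploration time spent on the novel item.
    0.5 means no preference; values above 0.5 indicate a preference for novelty."""
    total = time_novel + time_familiar
    if total <= 0:
        raise ValueError("no looking or exploration time recorded")
    return time_novel / total

def discrimination_index(time_novel, time_familiar):
    """(novel - familiar) / total, ranging from -1 to 1; 0 means no preference."""
    total = time_novel + time_familiar
    if total <= 0:
        raise ValueError("no looking or exploration time recorded")
    return (time_novel - time_familiar) / total

# Hypothetical trial: 3.0 s on the novel image, 2.0 s on the familiar one.
print(novelty_preference(3.0, 2.0))     # 0.6
print(discrimination_index(3.0, 2.0))   # 0.2
```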

Does viewing behavior depend on an MTL representation of space?

To date, the search for an allocentric (i.e., world-based) representation of visual space has not focused on MTL structures, because the primate MTL has not generally been investigated for its role in producing actions in real time, from fixation to fixation. However, behavioral phenomena have motivated the search for such an allocentric spatial map in the primate brain. The behavioral phenomenon of IOR, for example, is robust to intervening saccades: IOR can be observed at an attended screen location even after an eye movement has displaced that location on the retina (Hilchey, Klein, Satel, and Wang, 2012; Pertzov et al., 2010; Posner and Cohen, 1984). This finding has led researchers to hypothesize that the brain contains a map of visual space with a non-retinal frame of reference. Evidence suggests that this neural spatial representation could use a head-centered frame of reference or, alternatively, an “environmental” (Hilchey et al., 2012; Posner and Cohen, 1984) or allocentric frame of reference, enabling a person to fixate multiple times across a scene, and even turn her head, without altering the neurally coded location of the attended stimulus. As described above, there is evidence that the MTL may code visual space in such a non-retinotopic reference frame. By providing a non-retinal, allocentric neural representation of visual space, built up by association between distinct elements of the visual environment, the MTL could support memory of a particular scene or context. This representation could be used to direct eye movements to salient parts of an absent image (re-enactment), or, as postulated earlier in this review, to inform orienting behaviors that must rely on non-retinal maps of remembered visual space, such as directing eye movements to the remembered locations of relevant stimuli in the environment. However, the transformation of visuospatial information from a retinal to an allocentric frame of reference is not well understood, even in rodents, where non-retinal maps locked to visual cues in the environment have been studied in the MTL for decades.
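
The dissociation that makes saccade-robust IOR informative can be shown with simple coordinate bookkeeping. The sketch below assumes a fixed head and treats degrees of visual angle as a flat, linear coordinate system (ignoring eye torsion and projection geometry). Under those assumptions, the retinal (eye-centered) location of an attended screen position changes with every saccade, while the screen-based location can be recovered only if the current gaze position is known. The `Point` type and function names are illustrative, not drawn from the cited studies.

```python
from typing import NamedTuple

class Point(NamedTuple):
    x: float  # horizontal position, degrees of visual angle
    y: float  # vertical position, degrees of visual angle

def screen_to_retinal(target: Point, gaze: Point) -> Point:
    """Eye-centered (retinotopic) location: target position relative to the fovea."""
    return Point(target.x - gaze.x, target.y - gaze.y)

def retinal_to_screen(retinal: Point, gaze: Point) -> Point:
    """Recover the screen (spatiotopic) location from a retinal location,
    given the current gaze position (e.g., from an eye-position signal)."""
    return Point(retinal.x + gaze.x, retinal.y + gaze.y)

attended = Point(5.0, 0.0)                                   # attended location on the screen
gaze_before, gaze_after = Point(0.0, 0.0), Point(10.0, 0.0)  # a 10-deg rightward saccade

print(screen_to_retinal(attended, gaze_before))   # Point(x=5.0, y=0.0)
print(screen_to_retinal(attended, gaze_after))    # Point(x=-5.0, y=0.0)
print(retinal_to_screen(screen_to_retinal(attended, gaze_after), gaze_after))  # Point(x=5.0, y=0.0)
```

A map coded like the output of `retinal_to_screen` keeps tagging the same screen location across saccades, which is the property the IOR results call for; a purely retinotopic map would have to be updated after every eye movement.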

One candidate anatomical path by which the MTL may receive allocentric spatial information and impact eye movements is through its reciprocal connections with the posterior cingulate cortex (PCC) and the retrosplenial cortex (RSC). In monkeys, neurons in the PCC and the RSC exhibited allocentric place-selective responses during virtual navigation (Sato, Sakata, Tanaka, and Taira, 2006; 2010), similar to rodent place cells. In addition, there is some evidence that neurons in the PCC represent visuospatial events in an allocentric reference frame, as measured in a task in which monkeys made saccades to a set of targets (Dean and Platt, 2006). Kravitz and colleagues (Kravitz, Saleem, Baker, and Mishkin, 2011) suggested that a parieto-medial temporal pathway, linking the caudal inferior parietal lobule with the MTL via the PCC and the RSC, plays a particular role in spatial navigation. Both the RSC and the PCC send substantial projections to the entorhinal cortex, presubiculum, and parasubiculum of the hippocampal formation, as well as to the parahippocampal cortex (Kobayashi and Amaral, 2007). These connections are largely reciprocal, with the heaviest projections from the MTL targeting the RSC (Kobayashi and Amaral, 2003). An important topic for future research is whether place-selective responses in the MTL are similar during virtual navigation and visual exploration, and how mnemonic signals from the MTL may interact with attentional signals in the parietal and frontal cortices in support of oculomotor decisions.

Another potential target for neurophysiological investigation of MTL spatial circuitry is the nucleus reuniens of the thalamus. In rodents, the nucleus reuniens connects the prefrontal cortex (PFC) to the hippocampus, and the integrity of this connection is required for goal-specific spatial representations, such as representations of spatial trajectories, in the rat hippocampus (Ito, Zhang, Witter, Moser, and Moser, 2015). It is currently unknown whether this is also true in the primate. Would hippocampal representations of where a monkey is looking vanish with deactivation of the nucleus reuniens, or would deactivation affect only the spatial representations that are specific to certain goals? In terms of human behavior, if a person looked at the silverware drawer while making tea or while making a sandwich, would a hippocampal spatial view cell selective for the silverware drawer be equally active in both cases? The rodent data suggest that we would observe goal-dependent modulations of hippocampal spatial activity, and that these modulations would require input from the PFC through the nucleus reuniens, but the work to demonstrate this remains to be done.

What structures within the oculomotor system are targets of MTL output?

Although the neural mechanisms by which hippocampal-dependent memory affects the oculomotor system are unknown, the primate oculomotor system itself has been well studied. Oculomotor decisions of where and when to look emerge in a number of brain areas, including the prefrontal cortex (Funahashi, Bruce, and Goldman-Rakic, 1989; Fuster and Alexander, 1971; Kim and Shadlen, 1999), posterior parietal cortex (Gnadt and Andersen, 1988; Mountcastle, Lynch, Georgopoulos, Sakata, and Acuna, 1975), frontal eye fields (Gold and Shadlen, 2000), superior colliculus (Horwitz and Newsome, 2001), and caudate (Ding and Gold, 2010), to name a few. Additionally, electrical stimulation of several of these areas has been shown to induce reliable saccadic eye movements (parietal cortex: Shibutani, Sakata, and Hyvärinen, 1984; frontal cortex: Robinson and Fuchs, 1969; midbrain: Robinson, 1972). Accordingly, all of these areas are candidate direct or indirect targets of MTL output projections that influence eye movements. The hypothetical output from the MTL to oculomotor structures can, in principle, be parsed into two categories of eye movement modulation: 1) where to look (e.g., looking at the manipulated region of a previously viewed image) and 2) when to look (e.g., fixating remembered images for longer durations). Oculomotor decision-making about where to look next has been investigated for decades, largely by characterizing neural correlates of spatial attention in many brain areas with a retinal frame of reference. In these oculomotor areas, neurons frequently represent the location of the upcoming eye movement as well as the location of visual spatial attention. These neurons are therefore not pure motor neurons, and they may constitute a “salience map” of the visual world (Colby and Goldberg, 1999; Falkner, Krishna, and Goldberg, 2010), in which the magnitude of a cell’s firing rate can be interpreted as reflecting the salience of the part of the world located in the cell’s response field. If the cell’s firing rate is high, signaling that spatial attention is directed to that location, then a saccade to the cell’s response field is likely imminent. Parietal cortex neurons that exhibit persistent activity bridging the delay between the presentation of a sensory stimulus and a movement toward it were once dubbed “command neurons” (Mountcastle et al., 1975). These neurons, along with neurons with similar properties in other oculomotor areas, are logical targets for MTL output that influences the saccadic decision of where to look.
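
The salience-map idea, and one hypothetical way MTL output could enter it, can be sketched as a winner-take-all choice over response-field locations. In the sketch below, each location's firing rate stands for its salience, and an optional, purely hypothetical additive `memory_bias` term represents a memory-derived signal (positive for a location worth revisiting, negative for one already inspected, in the spirit of IOR). Winner-take-all is a deliberate simplification; saccade selection is more often modeled as a noisy accumulation of activity to a threshold.

```python
def choose_saccade_target(priority, memory_bias=None):
    """Return the location whose (optionally biased) activity is highest.

    priority: dict mapping a response-field location (x, y in degrees) to a firing rate.
    memory_bias: hypothetical dict of additive adjustments from a memory signal.
    """
    memory_bias = memory_bias or {}
    combined = {loc: rate + memory_bias.get(loc, 0.0) for loc, rate in priority.items()}
    return max(combined, key=combined.get)

# Hypothetical firing rates (spikes/s) for three response fields.
priority = {(-5, 0): 30.0, (5, 0): 25.0, (0, 5): 10.0}

print(choose_saccade_target(priority))                     # (-5, 0)
print(choose_saccade_target(priority, {(5, 0): 10.0}))     # (5, 0): e.g., a remembered change boosts it
print(choose_saccade_target(priority, {(-5, 0): -20.0}))   # (5, 0): an already-inspected site is suppressed
```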

The impact of memory on oculomotor decisions of when to look may be mediated by a pathway separate from the one by which the MTL influences where to look. It is possible that memory affects when to look via an MTL projection to lower arousal centers, such as the locus coeruleus (LC), perhaps causing a general slowing in the rate of saccades for remembered stimuli. MTL output to the LC could also manifest as a change in pupil size: the LC can control pupil size and is thought to be the site of cognitive modulation of pupil diameter (Samuels and Szabadi, 2008). Consistent with the idea that a single output from the MTL to the LC could produce both memory-dependent behaviors, the two behaviors appear to share an onset time: pupil constriction with repeated images and the lengthening of fixation durations with repeated images both emerge approximately 850 ms after image onset (Bradley and Lang, 2015; Miriam Meister and Seth Koenig, personal communication). Future research investigating MTL output to oculomotor structures could constitute a major step forward for understanding the generation of eye movements and the general neural mechanisms by which memory influences behavior.

4. Conclusions

We propose that analyzing eye movements during natural viewing behavior is a promising way to identify the MTL’s contribution to a working nervous system. Looking behavior is guided by memory, is sensitive to MTL damage, and is a natural behavior that can be assessed in both human and non-human primates. Spatial and mnemonic neural representations within the MTL are also modulated by viewing behavior. Importantly, the oculomotor areas of the primate brain are well-studied, and a large literature documenting their anatomy and the signals they carry provides a scaffold for increasing our understanding of MTL function.

Converging evidence suggests that MTL neurons can represent visual space in a non-retinal frame of reference, in which neural activity is spatially selective and aligned to the environment instead of to the monkey’s body. This line of research in the primate has the potential to connect to a vast body of literature documenting such activity in rodents. While the memory and spatial traditions of MTL research have long been separate (Eichenbaum and Cohen, 2014), some points of convergence are emerging (Schiller, Eichenbaum, Buffalo, Davachi, Foster, Leutgeb, and Ranganath, 2015). We are optimistic that additional advances can be made through a full characterization of spatial and mnemonic representations in the MTL as revealed through looking behavior. This avenue of research promises to bridge the gap between primate and rodent literatures, between memory and spatial traditions, and, perhaps most importantly, between sensation and memory-informed movement.

Figure 2. Neural activity in monkey hippocampus is locked to eye movements.

LEFT: Trial-averaged LFP is aligned to saccade onset. Theta oscillations show phase alignment across trials after the saccade, which translates to visible theta oscillations in the trial-averaged LFP (scale bar = 600 ms). Red trace shows raw, trial-averaged LFP, and blue trace shows theta-filtered LFP. RIGHT: Theta (6.7-11.6 Hz) power for the trial-averaged LFP for pre-saccade (green) and post-saccade (pink) periods. Theta power was significantly higher for the post-saccade period than for the pre-saccade period (*P < 0.05). Figure from Jutras, Fries and Buffalo (2013).
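
The comparison in the right panel, theta-band power before versus after the saccade, can be approximated with a discrete Fourier transform over each window. The sketch below is a simplified stand-in for the published analysis, not a reproduction of it: it assumes a single trial-averaged LFP trace already split into pre- and post-saccade windows, uses a direct (slow) DFT from the standard library, averages power over the frequency bins that fall inside 6.7 to 11.6 Hz, and runs on synthetic signals rather than recorded data. The function name `band_power` is hypothetical.

```python
import math

def band_power(signal, fs, f_lo, f_hi):
    """Mean DFT power (|X[k]|^2 / N) over frequency bins in [f_lo, f_hi] Hz.

    signal: list of samples; fs: sampling rate in Hz. Uses a direct O(N*K) DFT,
    which is adequate for short windows.
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]               # remove the DC offset
    powers = []
    for k in range(1, n // 2 + 1):
        freq = k * fs / n                        # frequency of bin k
        if f_lo <= freq <= f_hi:
            re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(x))
            im = -sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(x))
            powers.append((re * re + im * im) / n)
    return sum(powers) / len(powers) if powers else 0.0

# Synthetic 500-ms windows sampled at 1 kHz (2-Hz bin spacing): the pre-saccade
# window holds only a weak 40-Hz component, the post-saccade window an 8-Hz rhythm.
fs, n = 1000, 500
pre = [0.1 * math.sin(2 * math.pi * 40 * i / fs) for i in range(n)]
post = [math.sin(2 * math.pi * 8 * i / fs) for i in range(n)]

theta_pre = band_power(pre, fs, 6.7, 11.6)
theta_post = band_power(post, fs, 6.7, 11.6)
print(theta_post > theta_pre)   # True
```

Window length sets the frequency resolution (fs / N), so a short window may contain only one or two bins inside a narrow theta band; that trade-off is one reason published analyses often use filtering or multitaper methods instead.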

Acknowledgments

This work was supported by National Institute of Mental Health Grants MH080007 and MH093807 (to E.A.B.) and the National Institutes of Health, ORIP-OD010425.

References

- Althoff RR, Cohen NJ. Eye-movement-based memory effect: a reprocessing effect in face perception. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1999;25:997–1010. doi: 10.1037//0278-7393.25.4.997.

- Bichot NP, Heard MT, Degennaro EM, Desimone R. A Source for Feature-Based Attention in the Prefrontal Cortex. Neuron. 2015;88:1–13. doi: 10.1016/j.neuron.2015.10.001.

- Bradley MM, Lang PJ. Memory, emotion, and pupil diameter: Repetition of natural scenes. Psychophysiology. 2015;52:1186–93. doi: 10.1111/psyp.12442.

- Buffalo EA, Ramus SJ, Clark RE, Teng E, Squire LR, Zola SM. Dissociation Between the Effects of Damage to Perirhinal Cortex and Area TE. Learning & Memory. 1999;6:572–599. doi: 10.1101/lm.6.6.572.

- Churchland PS, Ramachandran VS, Sejnowski TJ. A Critique of Pure Vision. In: Koch C, Davis J, editors. Large-Scale Neuronal Theories of the Brain. 1994:24–60.

- Clark RE, Zola SM, Squire LR. Impaired recognition memory in rats after damage to the hippocampus. The Journal of Neuroscience. 2000;20:8853–8860. doi: 10.1523/JNEUROSCI.20-23-08853.2000.

- Colby CL, Goldberg ME. Space and attention in parietal cortex. Annual Review of Neuroscience. 1999;22:319–349. doi: 10.1146/annurev.neuro.22.1.319.

- Crutcher MD, Calhoun-Haney R, Manzanares CM, Lah JJ, Levey AI, Zola SM. Eye tracking during a visual paired comparison task as a predictor of early dementia. American Journal of Alzheimer's Disease and Other Dementias. 2009;24:258–266. doi: 10.1177/1533317509332093.

- Ding L, Gold JI. Caudate encodes multiple computations for perceptual decisions. The Journal of Neuroscience. 2010;30:15747–15759. doi: 10.1523/JNEUROSCI.2894-10.2010.

- Eichenbaum H, Cohen NJ. Can We Reconcile the Declarative Memory and Spatial Navigation Views on Hippocampal Function? Neuron. 2014;83:764–770. doi: 10.1016/j.neuron.2014.07.032.

- Ekstrom AD, Kahana MJ, Caplan JB, Fields TA, Isham EA, Newman EL, Fried I. Cellular networks underlying human spatial navigation. Nature. 2003;425:184–188. doi: 10.1038/nature01964.

- Ennaceur A, Delacour J. A new one-trial test for neurobiological studies of memory in rats. 1: Behavioral data. Behavioural Brain Research. 1988;31:47–59. doi: 10.1016/0166-4328(88)90157-x.

- Fagan JF. Memory in the infant. Journal of Experimental Child Psychology. 1970;9:217–226. doi: 10.1016/0022-0965(70)90087-1.

- Falkner AL, Krishna BS, Goldberg ME. Surround suppression sharpens the priority map in the lateral intraparietal area. The Journal of Neuroscience. 2010;30:12787–12797. doi: 10.1523/JNEUROSCI.2327-10.2010.

- Feigenbaum JD, Rolls ET. Allocentric and egocentric spatial information processing in the hippocampal formation of the behaving primate. Psychobiology. 1991;19:21–40.

- Findlay JM, Gilchrist ID. Active Vision: The Psychology of Looking and Seeing. Oxford University Press; Oxford, New York: 2003.

- Funahashi S, Bruce CJ, Goldman-Rakic PS. Mnemonic Coding of Visual Space in the Monkey's Dorsolateral Prefrontal Cortex. Journal of Neurophysiology. 1989;61:331–349. doi: 10.1152/jn.1989.61.2.331.

- Fuster JM, Alexander GE. Neuron Activity Related to Short-Term Memory. Science. 1971;173:652–654. doi: 10.1126/science.173.3997.652.

- Geisler WS, Ringach D. Natural Systems Analysis. Visual Neuroscience. 2009;26:1–1. doi: 10.1017/s0952523808081005.

- Georges-François P, Rolls ET, Robertson RG. Spatial view cells in the primate hippocampus: allocentric view not head direction or eye position or place. Cerebral Cortex. 1999;9:197–212. doi: 10.1093/cercor/9.3.197.

- Gilchrist ID, Harvey M. Refixation frequency and memory mechanisms in visual search. Current Biology. 2000;10:1209–1212. doi: 10.1016/s0960-9822(00)00729-6.

- Gnadt JW, Andersen RA. Memory related motor planning activity in posterior parietal cortex of macaque. Experimental Brain Research. 1988;70:216–220. doi: 10.1007/BF00271862.

- Gold JI, Shadlen MN. Representation of a perceptual decision in developing oculomotor commands. Nature. 2000;404:390–394. doi: 10.1038/35006062.

- Hannula DE, Althoff RR, Warren DE, Riggs L, Cohen NJ, Ryan JD. Worth a glance: using eye movements to investigate the cognitive neuroscience of memory. Frontiers in Human Neuroscience. 2010;4:166. doi: 10.3389/fnhum.2010.00166.

- Hayhoe MM, Shrivastava A, Mruczek R, Pelz JB. Visual memory and motor planning in a natural task. Journal of Vision. 2003;3:49–63. doi: 10.1167/3.1.6.

- Heaver B, Hutton SB. Keeping an eye on the truth? Pupil size changes associated with recognition memory. Memory. 2011;19:398–405. doi: 10.1080/09658211.2011.575788.

- Hilchey MD, Klein RM, Satel J, Wang Z. Oculomotor inhibition of return: How soon is it “recoded” into spatiotopic coordinates? Attention, Perception, & Psychophysics. 2012;74:1145–1153. doi: 10.3758/s13414-012-0312-1.

- Hoffman KL, Dragan MC, Leonard TK, Micheli C, Montefusco-Siegmund R, Taufik A, Valiante TA. Saccades during visual exploration align hippocampal 3-8 Hz rhythms in human and non-human primates. Frontiers in Systems Neuroscience. 2013;7. doi: 10.3389/fnsys.2013.00043.

- Horwitz GD, Newsome WT. Target selection for saccadic eye movements: prelude activity in the superior colliculus during a direction-discrimination task. Journal of Neurophysiology. 2001;86:2543–2558. doi: 10.1152/jn.2001.86.5.2543.

- Huerta PT, Lisman JE. Bidirectional synaptic plasticity induced by a single burst during cholinergic theta oscillation in CA1 in vitro. Neuron. 1995;15:1053–1063. doi: 10.1016/0896-6273(95)90094-2.

- Humphrey NK. 'Interest' and 'pleasure': two determinants of a monkey's visual preferences. Perception. 1972;1:395–416. doi: 10.1068/p010395.

- Hyman JM, Wyble BP, Goyal V, Rossi CA, Hasselmo ME. Stimulation in hippocampal region CA1 in behaving rats yields long-term potentiation when delivered to the peak of theta and long-term depression when delivered to the trough. The Journal of Neuroscience. 2003;23:11725–11731. doi: 10.1523/JNEUROSCI.23-37-11725.2003.

- Irwin DE, Zelinsky GJ. Eye movements and scene perception: memory for things observed. Perception & Psychophysics. 2002;64:882–895. doi: 10.3758/bf03196793.

- Ito HT, Zhang SJ, Witter MP, Moser EI, Moser MB. A prefrontal–thalamo–hippocampal circuit for goal-directed spatial navigation. Nature. 2015;522:50–55. doi: 10.1038/nature14396.

- Jacobs J, Weidemann CT, Miller JF, Solway A, Burke JF, Wei X-X, Suthana N, Sperling MR, Sharan AD, Fried I, Kahana MJ. Direct recordings of grid-like neuronal activity in human spatial navigation. Nature Neuroscience. 2013:8–11. doi: 10.1038/nn.3466.

- Johansson R, Johansson M. Look Here, Eye Movements Play a Functional Role in Memory Retrieval. Psychological Science. 2013;25:236–42. doi: 10.1177/0956797613498260.

- Jutras MJ, Buffalo EA. Recognition memory signals in the macaque hippocampus. Proceedings of the National Academy of Sciences of the United States of America. 2010a;107:401–406. doi: 10.1073/pnas.0908378107.

- Jutras MJ, Buffalo EA. Synchronous neural activity and memory formation. Current Opinion in Neurobiology. 2010b;20:150–155. doi: 10.1016/j.conb.2010.02.006.

- Jutras MJ, Buffalo EA. Oscillatory correlates of memory in non-human primates. NeuroImage. 2014;85(Pt 2):694–701. doi: 10.1016/j.neuroimage.2013.07.011.

- Jutras MJ, Fries P, Buffalo EA. Gamma-band synchronization in the macaque hippocampus and memory formation. The Journal of Neuroscience. 2009;29:12521–12531. doi: 10.1523/JNEUROSCI.0640-09.2009.

- Jutras MJ, Fries P, Buffalo EA. Oscillatory activity in the monkey hippocampus during visual exploration and memory formation. Proceedings of the National Academy of Sciences of the United States of America. 2013;117:13144–13149. doi: 10.1073/pnas.1302351110.

- Kafkas A, Montaldi D. Recognition memory strength is predicted by pupillary responses at encoding while fixation patterns distinguish recollection from familiarity. Quarterly Journal of Experimental Psychology. 2011;64:1971–1989. doi: 10.1080/17470218.2011.588335.

- Killian NJ, Jutras MJ, Buffalo EA. A map of visual space in the primate entorhinal cortex. Nature. 2012;5:3–6. doi: 10.1038/nature11587.

- Killian NJ, Potter SM, Buffalo EA. Saccade direction encoding in the primate entorhinal cortex during visual exploration. Proceedings of the National Academy of Sciences. 2015;20:1417059. doi: 10.1073/pnas.1417059112.

- Kim JN, Shadlen MN. Neural correlates of a decision in the dorsolateral prefrontal cortex of the macaque. Nature Neuroscience. 1999;2:176–185. doi: 10.1038/5739.

- Kit D, Katz L, Sullivan B, Snyder K, Ballard D, Hayhoe M. Eye movements, visual search and scene memory, in an immersive virtual environment. PLoS ONE. 2014;9:1–11. doi: 10.1371/journal.pone.0094362.

- Klein R. Nature. 1988;334:430–431. doi: 10.1038/334430a0.

- Kobayashi Y, Amaral DG. Macaque monkey retrosplenial cortex: II. Cortical afferents. Journal of Comparative Neurology. 2003;466:48–79. doi: 10.1002/cne.10883.

- Kobayashi Y, Amaral DG. Macaque monkey retrosplenial cortex: III. Cortical efferents. Journal of Comparative Neurology. 2007;502:810–833. doi: 10.1002/cne.21346.

- Kravitz DJ, Saleem KS, Baker CI, Mishkin M. A new neural framework for visuospatial processing. Nature Reviews Neuroscience. 2011;12:217–230. doi: 10.1038/nrn3008.

- Kreiman G, Koch C, Fried I. Category-specific visual responses of single neurons in the human medial temporal lobe. Nature Neuroscience. 2000;3:946–953. doi: 10.1038/78868.

- Laeng B, Bloem IM, D'Ascenzo S, Tommasi L. Scrutinizing visual images: The role of gaze in mental imagery and memory. Cognition. 2014;131:263–283. doi: 10.1016/j.cognition.2014.01.003.

- Laeng B, Teodorescu D-S. Eye scanpaths during visual imagery reenact those of perception of the same visual scene. Cognitive Science. 2002;26:207–231.

- Land M, Mennie N, Rusted J. The roles of vision and eye movements in the control of activities of daily living. Perception. 1999;28:1311–1328. doi: 10.1068/p2935.

- Loftus GR. Eye fixations and recognition memory for pictures. Cognitive Psychology. 1972;3:525–551.

- Manns JR, Stark CE, Squire LR. The visual paired-comparison task as a measure of declarative memory. Proceedings of the National Academy of Sciences of the United States of America. 2000;97:12375–12379. doi: 10.1073/pnas.220398097.

- McCartney H, Johnson AD, Weil ZM, Givens B. Theta reset produces optimal conditions for long-term potentiation. Hippocampus. 2004;14:684–687. doi: 10.1002/hipo.20019.

- McKee RD, Squire LR. On the development of declarative memory. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1993;19:397–404. doi: 10.1037//0278-7393.19.2.397.

- Meister MLR, Killian NJ, Buffalo EA. Allocentric representation in primate entorhinal neurons (Program No. 769.723). Society for Neuroscience; San Diego, CA: 2013.

- Mirpour K, Arcizet F, Ong WS, Bisley JW. Been there, seen that: a neural mechanism for performing efficient visual search. Journal of Neurophysiology. 2009;102:3481–3491. doi: 10.1152/jn.00688.2009.

- Molitor RJ, Ko PC, Hussey EP, Ally BA. Memory-related eye movements challenge behavioral measures of pattern completion and pattern separation. Hippocampus. 2014;24:666–672. doi: 10.1002/hipo.22256.

- Motter BC, Belky EJ. The guidance of eye movements during active visual search. Vision Research. 1998;38:1805–1815. doi: 10.1016/s0042-6989(97)00349-0.

- Mountcastle VB, Lynch JC, Georgopoulos A, Sakata H, Acuna C. Posterior parietal association cortex of the monkey: command functions for operations within extrapersonal space. Journal of Neurophysiology. 1975;38:871–908. doi: 10.1152/jn.1975.38.4.871.

- Naber M, Frässle S, Rutishauser U, Einhäuser W. Pupil size signals novelty and predicts later retrieval success for declarative memories of natural scenes. Journal of Vision. 2013;13:1–20. doi: 10.1167/13.2.11.

- Nelson WW, Loftus GR. The functional visual field during picture viewing. Journal of Experimental Psychology: Human Learning and Memory. 1980;6:391–399.

- Nemanic S, Alvarado MC, Bachevalier J. The hippocampal/parahippocampal regions and recognition memory: insights from visual paired comparison versus object-delayed nonmatching in monkeys. The Journal of Neuroscience. 2004;24:2013–2026. doi: 10.1523/JNEUROSCI.3763-03.2004.

- Nishijo H, Ono T, Eifuku S, Tamura R. The relationship between monkey hippocampus place-related neural activity and action in space. Neuroscience Letters. 1997;226:57–60. doi: 10.1016/s0304-3940(97)00255-3.

- Nowicka A, Ringo JL. Eye position-sensitive units in hippocampal formation and in inferotemporal cortex of the macaque monkey. European Journal of Neuroscience. 2000;12:751–759. doi: 10.1046/j.1460-9568.2000.00943.x.

- O'Keefe J. Place units in the hippocampus of the freely moving rat. Experimental Neurology. 1976;51:78–109. doi: 10.1016/0014-4886(76)90055-8. [DOI] [PubMed] [Google Scholar]

- O'Keefe J, Recce ML. Phase relationship between hippocampal place units and the EEG theta rhythm. Hippocampus. 1993;3:317–330. doi: 10.1002/hipo.450030307. [DOI] [PubMed] [Google Scholar]

- Ono T, Nakamura K, Nishijo H, Eifuku S. Monkey hippocampal neurons related to spatial and nonspatial functions. Journal of neurophysiology. 1993;70:1516–1529. doi: 10.1152/jn.1993.70.4.1516. [DOI] [PubMed] [Google Scholar]

- Pertzov Y, Avidan G, Zohary E. Accumulation of visual information across multiple fixations Yoni Pertzov. Journal of Vision. 2009;9:1–12. doi: 10.1167/9.10.2. [DOI] [PubMed] [Google Scholar]

- Pertzov Y, Zohary E, Avidan G. Rapid formation of spatiotopic representations as revealed by inhibition of return. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience. 2010;30:8882–8887. doi: 10.1523/JNEUROSCI.3986-09.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Posner MI, Cohen Y. Components of visual orienting. 1984:531–556. [Google Scholar]

- Reynolds GD. Infant visual attention and object recognition. Behavioural Brain Research. 2015;285:34–43. doi: 10.1016/j.bbr.2015.01.015.

- Ringo JL, Sobotka S. Eye movements modulate activity in hippocampal, parahippocampal, and inferotemporal neurons. Journal of Neurophysiology. 1994;71:1285–1288. doi: 10.1152/jn.1994.71.3.1285.

- Robertson RG, Rolls ET, Georges-François P, Panzeri S. Head direction cells in the primate pre-subiculum. Hippocampus. 1999;9:206–219. doi: 10.1002/(SICI)1098-1063(1999)9:3<206::AID-HIPO2>3.0.CO;2-H.

- Robinson DA. Eye movements evoked by collicular stimulation in the alert monkey. Vision Research. 1972;12:1795–1808. doi: 10.1016/0042-6989(72)90070-3.

- Robinson DA, Fuchs AF. Eye movements evoked by stimulation of frontal eye fields. Journal of Neurophysiology. 1969;32:637–648. doi: 10.1152/jn.1969.32.5.637.

- Rutishauser U, Ye S, Koroma M, Tudusciuc O, Ross IB, Chung JM, Mamelak AN. Representation of retrieval confidence by single neurons in the human medial temporal lobe. Nature Neuroscience. 2015;18. doi: 10.1038/nn.4041.

- Ryan JD, Althoff RR, Whitlow S, Cohen NJ. Amnesia is a deficit in relational memory. Psychological Science. 2000;11:454–461. doi: 10.1111/1467-9280.00288.

- Samuels ER, Szabadi E. Functional neuroanatomy of the noradrenergic locus coeruleus: its roles in the regulation of arousal and autonomic function part II: physiological and pharmacological manipulations and pathological alterations of locus coeruleus activity in humans. Current Neuropharmacology. 2008;6:254–285. doi: 10.2174/157015908785777193.

- Sato N, Sakata H, Tanaka YL, Taira M. Navigation-associated medial parietal neurons in monkeys. Proceedings of the National Academy of Sciences of the United States of America. 2006;103:17001–17006. doi: 10.1073/pnas.0604277103.

- Sato N, Sakata H, Tanaka YL, Taira M. Context-dependent place-selective responses of the neurons in the medial parietal region of macaque monkeys. Cerebral Cortex. 2010;20:846–858. doi: 10.1093/cercor/bhp147.

- Schiller D, Eichenbaum H, Buffalo EA, Davachi L, Foster DJ, Leutgeb S, Ranganath C. Memory and space: towards an understanding of the cognitive map. The Journal of Neuroscience. 2015;35:13904–13911. doi: 10.1523/JNEUROSCI.2618-15.2015.

- Schroeder CE, Wilson DA, Radman T, Scharfman H, Lakatos P. Dynamics of Active Sensing and perceptual selection. Current Opinion in Neurobiology. 2010;20:172–176. doi: 10.1016/j.conb.2010.02.010.

- Shariat Torbaghan S, Yazdi D, Mirpour K, Bisley JW. Inhibition of return in a visual foraging task in non-human subjects. Vision Research. 2012;74:2–9. doi: 10.1016/j.visres.2012.03.022.

- Shepard RN. Recognition memory for words, sentences, and pictures. Journal of Verbal Learning and Verbal Behavior. 1967;6:156–162.

- Shibutani H, Sakata H, Hyvärinen J. Saccade and blinking evoked by microstimulation of the posterior parietal association cortex of the monkey. Experimental Brain Research. 1984;55:1–8. doi: 10.1007/BF00240493.

- Smith CN, Hopkins RO, Squire LR. Experience-dependent eye movements, awareness, and hippocampus-dependent memory. The Journal of Neuroscience. 2006;26:11304–11312. doi: 10.1523/JNEUROSCI.3071-06.2006.

- Smith CN, Squire LR. Experience-dependent eye movements reflect hippocampus-dependent (aware) memory. The Journal of Neuroscience. 2008;28:12825–12833. doi: 10.1523/JNEUROSCI.4542-08.2008.

- Sobotka S, Nowicka A, Ringo JL. Activity linked to externally cued saccades in single units recorded from hippocampal, parahippocampal, and inferotemporal areas of macaques. Journal of Neurophysiology. 1997;78:2156–2163. doi: 10.1152/jn.1997.78.4.2156.

- Sobotka S, Ringo JL. Saccadic eye movements, even in darkness, generate event-related potentials recorded in medial septum and medial temporal cortex. Brain Research. 1997;756:168–173. doi: 10.1016/s0006-8993(97)00145-5.

- Sobotka S, Zuo W, Ringo JL. Is the functional connectivity within temporal lobe influenced by saccadic eye movements? Journal of Neurophysiology. 2002;88:1675–1684. doi: 10.1152/jn.2002.88.4.1675.

- Sommer MA, Wurtz RH. A pathway in primate brain for internal monitoring of movements. Science. 2002;296:1480–1482. doi: 10.1126/science.1069590.

- Sommer MA, Wurtz RH. What the brain stem tells the frontal cortex. I. Oculomotor signals sent from superior colliculus to frontal eye field via mediodorsal thalamus. Journal of Neurophysiology. 2004;91:1381–1402. doi: 10.1152/jn.00738.2003.

- Sommer MA, Wurtz RH. Influence of the thalamus on spatial visual processing in frontal cortex. Nature. 2006;444:374–377. doi: 10.1038/nature05279.

- Sommer MA, Wurtz RH. Brain circuits for the internal monitoring of movements. Annual Review of Neuroscience. 2008;31:317–338. doi: 10.1146/annurev.neuro.31.060407.125627.

- Spivey MJ, Geng JJ. Oculomotor mechanisms activated by imagery and memory: Eye movements to absent objects. Psychological Research. 2001;65:235–241. doi: 10.1007/s004260100059.

- Standing L. Learning 10,000 pictures. The Quarterly Journal of Experimental Psychology. 1973;25:207–222. doi: 10.1080/14640747308400340.

- Standing L, Conezio J, Haber RN. Perception and memory for pictures: Single-trial learning of 2500 visual stimuli. Psychonomic Science. 1970;19:73–74.

- Tatler BW, Hayhoe MM, Land MF, Ballard DH. Eye guidance in natural vision: reinterpreting salience. Journal of Vision. 2011;11:5–5. doi: 10.1167/11.5.5.

- Taube JS, Muller RU, Ranck JB. Head-direction cells recorded from the postsubiculum in freely moving rats. I. Description and quantitative analysis. The Journal of Neuroscience. 1990;10:420–435. doi: 10.1523/JNEUROSCI.10-02-00420.1990.

- van der Linde I, Rajashekar U, Bovik AC, Cormack LK. Visual memory for fixated regions of natural images dissociates attraction and recognition. Perception. 2009;38:1152–1171. doi: 10.1068/p6142.

- Wang Z, Klein RM. Searching for inhibition of return in visual search: A review. Vision Research. 2010;50:220–228. doi: 10.1016/j.visres.2009.11.013.

- Wilson FAW, Goldman-Rakic PS. Viewing preferences of rhesus monkeys related to memory for complex pictures, colours and faces. Behavioural Brain Research. 1994;60:79–89. doi: 10.1016/0166-4328(94)90066-3.

- Wurtz RH, Joiner WM, Berman RA. Neuronal mechanisms for visual stability: progress and problems. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 2011;366:492–503. doi: 10.1098/rstb.2010.0186.

- Zingale CM, Kowler E. Planning sequences of saccades. Vision Research. 1987;27:1327–1341. doi: 10.1016/0042-6989(87)90210-0.

- Zola SM, Manzanares CM, Clopton P, Lah JJ, Levey AI. A Behavioral Task Predicts Conversion to Mild Cognitive Impairment and Alzheimer's Disease. American Journal of Alzheimer's Disease and Other Dementias. 2012;28:179–184. doi: 10.1177/1533317512470484.

- Zola SM, Squire LR, Teng E, Stefanacci L, Buffalo EA, Clark RE. Impaired Recognition Memory in Monkeys after Damage Limited to the Hippocampal Region. Journal of Neuroscience. 2000;20:451–463. doi: 10.1523/JNEUROSCI.20-01-00451.2000.