Abstract

Purpose

In recent years, the field of improving health care has achieved increasingly significant outcomes at scale. This has raised legitimate questions about the rigor, attribution, generalizability and replicability of the results. This paper describes the issue and outlines the questions that must be addressed in order to develop an epistemological paradigm that responds to them.

Questions

We need to consider the following questions: (i) Did the improvements work? (ii) Why did they work? (iii) How do we know that the results can be attributed to the changes made? (iv) How can we replicate them? (Note that the goal is not to copy what was done, but to influence the factors that can yield similar results in a different context.)

Next steps

Answers to these questions will help improvers find ways to increase the rigor of their improvements, attribute the results to the changes made and better understand what is context-specific and what is generalizable about the improvement.

Keywords: improvement, learning, complex adaptive systems, implementation, delivery

Introduction

This article raises an important issue in the field of improving healthcare today: ‘How do we learn about improving healthcare, so that we can make our improvement efforts more rigorous, attributable, generalizable and replicable?’ (See Fig. 1). Intended to outline what we know and what remains to be answered, the article represents a synthesis and analysis of the knowledge and experiences of the authors and reviewers. Although we acknowledge that the improvement community's opinions differ regarding the definition of certain terms, this article is framed by the working definitions found in Table 1. The paper concludes with key questions that need to be addressed in order to advance the field of healthcare improvement.

Figure 1.

Codifying improvement.

Table 1.

Key terms

| Key term | Definition |
|---|---|
| Process | The sequence of steps that converts inputs from suppliers into outputs for recipients. All work can be represented as processes, for example clinical algorithms, patient materials or information flow. More often than not, processes of care delivery combine flows of several of these types. |
| System | The sum total of all processes and other elements aimed at producing a common output. For example, the system of care in an HIV clinic can be viewed as all the care processes involved in caring for patients with HIV. |
| Improving healthcare | The actions taken to ensure that interventions established to be efficacious are implemented effectively every time they are needed. |
| Complex adaptive system (CAS) | A CAS is composed of individuals who learn, self-organize, evolve in response to changes in their internal and external environment, and inter-relate in a non-linear fashion to accomplish their work and tasks [3, 4]. |
| Time-series charts | Time-series charts are a graphical presentation of an indicator over time and are a common tool for tracking continuous-quality-improvement data. Statistical process control was developed by Shewhart [28] and popularized by Deming [29] as a way to apply statistical methods to distinguish between natural or ‘common cause variation’ in a process (i.e. random variation that could be computed from a statistical model based on the Gaussian, Poisson or binomial distribution) and ‘special cause variation’, which is variation that follows certain defined patterns (e.g. 2 out of 3 successive points more than 2 SD from the mean on the same side) [30]. This adaptation of traditional statistical hypothesis testing into easily visualized tests is a practical way to add rigor to the interpretation of time-series charts. |
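The special-cause rule in the last row of Table 1 can be sketched in a few lines of Python. This is a minimal sketch, not a validated SPC tool: it assumes a p-chart (binomial model) for a weekly proportion such as the share of eligible patients measured, and the function names and weekly counts are hypothetical. For brevity the centre line is pooled over all points, whereas in practice it is often fixed from a baseline period. The sketch flags points beyond the conventional 3-sigma limits and points that form a "2 of 3 successive points beyond 2 SD on the same side" signal.

```python
import math
from typing import List, Tuple

def p_chart(events: List[int], totals: List[int]) -> Tuple[float, List[float]]:
    """Centre line (pooled proportion) and each point's z-score under a binomial model."""
    p_bar = sum(events) / sum(totals)
    z_scores = []
    for e, n in zip(events, totals):
        sigma = math.sqrt(p_bar * (1 - p_bar) / n)  # binomial SD for a subgroup of size n
        z_scores.append((e / n - p_bar) / sigma)
    return p_bar, z_scores

def beyond_3sd(z_scores: List[float]) -> List[int]:
    """Indices of points outside the conventional 3-sigma control limits."""
    return [i for i, z in enumerate(z_scores) if abs(z) > 3]

def two_of_three_beyond_2sd(z_scores: List[float]) -> List[int]:
    """Indices of points in any 3-point window where >= 2 points lie beyond 2 SD on the same side."""
    flagged = set()
    for start in range(len(z_scores) - 2):
        window = range(start, start + 3)
        for side in (1, -1):  # +1 checks above the centre line, -1 below it
            hits = [j for j in window if side * z_scores[j] > 2]
            if len(hits) >= 2:
                flagged.update(hits)
    return sorted(flagged)

# Hypothetical weekly data: patients measured out of 100 eligible each week.
measured = [50, 52, 48, 50, 51, 49, 66, 50, 67]
eligible = [100] * len(measured)
p_bar, z = p_chart(measured, eligible)
print(round(p_bar, 3), beyond_3sd(z), two_of_three_beyond_2sd(z))  # 0.537 [] [6, 8]
```

In this hypothetical series no week crosses the 3-sigma limits, yet weeks 6 and 8 (0-based) trigger the 2-of-3 rule, which illustrates how supplementary rules add sensitivity to smaller shifts. A full analysis would apply a complete, pre-specified rule set such as those discussed by Benneyan et al. [30].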

Background

The World Health Organization (WHO) describes quality care as care that is effective, efficient, accessible, acceptable, patient-centered, equitable and safe [1]. Yet much of the care received in high-, middle- and low-income settings does not meet the WHO criteria, often because of the complexity of healthcare; this gap is why we need to improve healthcare [2]. For the purposes of this paper, we will use the following working definition for improving healthcare: ‘The actions taken to ensure that interventions established to be efficacious are implemented effectively every time they are needed.’

The multi-level structure of healthcare settings consists of interconnected sets of autonomous healthcare providers, teams and units within healthcare organizations, nested within health systems. The dynamic human interactions among healthcare workers, patients, managers, payers and other actors, together with the variety of social, cultural, economic, historical, political and other factors within this multi-level structure, put improving health care squarely in the arena of complex adaptive systems [3]. Complex adaptive systems are composed of individuals who learn, self-organize and evolve in response to changes in their internal and external environment, and inter-relate in a non-linear fashion to accomplish their work and tasks [3, 4]. The complex adaptive systems in which implementers operate present unique challenges. They cannot be reduced to their component parts without risking negating the original intention of the intervention; therefore, interventions must be implemented in a contextually adaptive manner in order to work effectively in any given system.

The issue

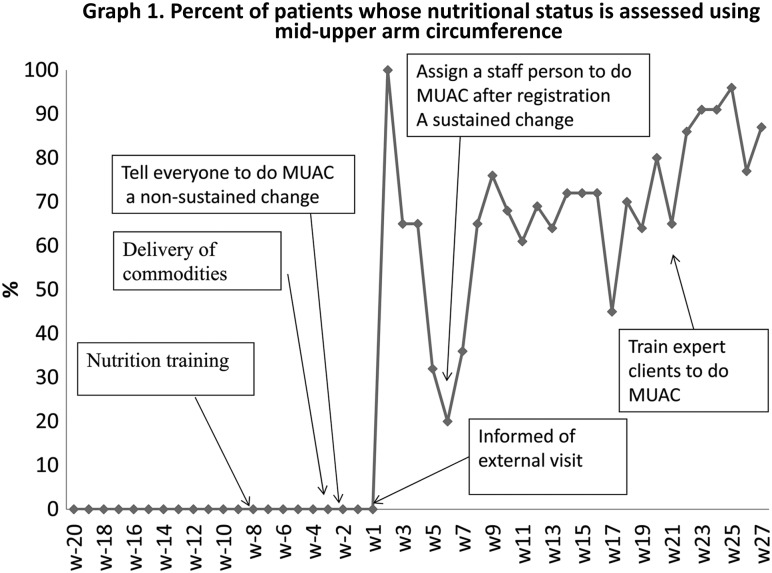

The field of improving health care has evolved to address the complex, interdependent, systemic nature of healthcare challenges, particularly through the adaptive, iterative testing and implementation of changes, and by empowering teams to use data in real time to guide this work. Teams and individuals who learn in these complex adaptive systems use real-time data to assess whether the changes they introduce lead to improvement in the outcome of interest; they then institute, adapt or discard these changes as needed, in a continuous cycle of testing and learning. The example in Fig. 2 illustrates this process.

Figure 2.

The aim of the improvement was to increase the proportion of HIV patients who received a mid-upper arm circumference (MUAC) measurement, in order to identify malnourished patients and improve their nutritional status. The initial changes were to have nurses and physicians complete a nutrition-assessment training, provide them with MUAC tapes, and ask them to measure and record MUAC. However, these changes did not result in any improvement for the first few weeks (Graph 1). Performance then reached nearly 100% during the week of an external visit from the Ministry of Health, but this was not sustained. At this point, the health center engaged an improvement advisor, who set up a team composed of the individuals who played roles in the process of care for HIV patients: receptionist, nurse, physician, pharmacist and patient representative. The team decided to assess its progress weekly using a time-series chart, and implemented another change: appointing one nurse to be responsible for performing MUAC right after registration. This raised performance to approximately 70%. The team then discovered that patients skipped the MUAC station to be seen by the physician, or missed the nurse while she was on a break. The team therefore tested another change: training expert patients in MUAC measurement and involving them in taking MUAC at the registration desk. This raised performance to approximately 90%. [Example from USAID Health Care Improvement Project (2007–2014)].

Improvements like these have generally relied on time-series charts, which plot indicators of the improvement sought at frequent (daily or weekly) intervals before, during and for a period after the introduction of changes. Teams have sometimes also used control limits, but as a rule, improvement has relied on analytic rather than enumerative statistics to establish significance [5]. In Deming's terms, an enumerative study draws conclusions about a fixed population that has been sampled, whereas an analytic study aims to act on the underlying process (the cause system) in order to improve its future performance [5].
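A run chart is the simplest form of the time-series chart described above. As a hedged sketch, the Python below applies one commonly used run-chart rule for detecting a shift: a run of at least `min_run` consecutive points all above or all below the median, with points falling exactly on the median skipped. The six-point default follows widely used run-chart guidance, although rule sets differ (some SPC rule sets use eight points). The function name, the `baseline` parameter and the data are assumptions for illustration only. Here the median is taken from the baseline weeks, so post-change points are compared against pre-change performance.

```python
from statistics import median
from typing import List, Optional, Tuple

def find_shifts(values: List[float], min_run: int = 6,
                baseline: Optional[int] = None) -> List[Tuple[int, int]]:
    """Return (first, last) indices of runs of >= min_run points on one side of the median.

    The median is computed from the first `baseline` points if given, otherwise from all
    points. Points exactly on the median are skipped: they neither extend nor break a run.
    """
    centre = median(values[:baseline] if baseline else values)
    shifts = []
    side, first, last, length = 0, -1, -1, 0
    for i, v in enumerate(values):
        if v == centre:
            continue  # on the median: ignored by this rule
        s = 1 if v > centre else -1
        if s == side:
            length, last = length + 1, i  # extend the current run
        else:
            if length >= min_run:
                shifts.append((first, last))  # close a qualifying run
            side, first, last, length = s, i, i, 1  # start a new run
    if length >= min_run:
        shifts.append((first, last))
    return shifts

# Hypothetical weekly percentages: six baseline weeks, then six weeks after a change.
weekly_pct = [20, 25, 18, 30, 24, 22, 65, 70, 68, 72, 75, 71]
print(find_shifts(weekly_pct, baseline=6))  # [(6, 11)]
```

Under this convention, the six post-change weeks form a shift relative to the baseline median, a pattern unlikely under common-cause variation alone. Raising `min_run` to 8 would not flag the same data, which is one reason to choose the rule set before examining the data.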

Improving healthcare engages all key stakeholders involved in the outcome of interest, including healthcare providers directly involved in delivering care, together with their supervisory and support structures, as required. This process can also include patients, families, communities and other stakeholders. By meaningfully engaging all key stakeholders, health teams are able to address systems and micro- and macro-political human-factors issues [6] and build ownership through restructuring processes. In addition, the adaptive, iterative nature of testing and learning allows the teams to implement changes appropriate to the local context and responsive to emergent and shifting dynamics among different actors and at various levels of the system.

Over the past two decades, the application of modern improvement methods has expanded beyond the administrative processes in facilities where it was first applied (for example, to reduce waiting times), to include clinical improvements (such as reducing the incidence of hospital-acquired infections) and more significant improvements in health outcomes (such as reducing secondary complications and mortality); moreover, these outcomes are increasingly being achieved at scale [7, 8]. This evolution has generated greater interest in improvement and simultaneously raised more questions regarding the validity, rigor, attribution, generalizability and replicability of the results.

In response to these legitimate questions, two schools of thought have emerged. One calls for continuing to accept analytic statistics as sufficient evidence of improvement [5]. The other calls for subjecting improvement to the same enumerative statistical methods used in clinical research, such as randomized controlled trials.

Discussion

The use of randomized controlled trials, the gold standard for clinical research, has been limited in the field of improving health care. This is partly because randomized controlled trials often presume a linear, mechanistic system, whereas improvement takes place within complex adaptive systems, which do not lend themselves neatly to this type of study. Furthermore, as illustrated in Fig. 1, our interventions must adapt to the context, which is often at odds with conceptualizing improving health care as a fixed set of activities that can be studied through controlled trials [9]. Other constraints on the use of randomized controlled trials include constraints imposed by donors and host-country governments, and ethical issues regarding the randomization of interventions with proven efficacy, particularly in low- and middle-income country settings [10, 11].

Improving health care is the act of taking an efficacious intervention from one setting and implementing it effectively in different contexts. It is this key element of adapting what works to new settings that sets improvement apart from clinical research [12, 13]. The study of these complex systems will therefore require different methods of inquiry. Such methods may include, but are not limited to, stepped-wedge designs, comparison groups with difference-in-differences calculations, qualitative evaluations to understand why and how the interventions worked, and mixed-methods approaches, including randomization and observation. Toward this end, there is a need not only for evidence-based practice but also for ‘practice-based evidence’, which is relevant to the stakeholders responsible for implementation, and for systems that allow rapid learning in order to build this knowledge. This knowledge may be generalizable or may need further adaptation for a different context [14].
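The difference-in-differences calculation mentioned above can be illustrated with a minimal Python sketch. It assumes one mean outcome per group per period; the class name, function name and numbers are hypothetical. The estimate subtracts the comparison group's change from the intervention group's change, so a secular trend shared by both groups cancels out, but only under the assumption that the two groups would have followed parallel trends without the change. A real evaluation would also need uncertainty estimates, for example from a regression model with appropriate clustering, which this sketch omits.

```python
from dataclasses import dataclass

@dataclass
class GroupMeans:
    pre: float   # mean outcome before the change was introduced
    post: float  # mean outcome after the change was introduced

def difference_in_differences(intervention: GroupMeans, comparison: GroupMeans) -> float:
    """Change in the intervention group minus change in the comparison group."""
    intervention_change = intervention.post - intervention.pre
    comparison_change = comparison.post - comparison.pre  # proxy for the secular trend
    return intervention_change - comparison_change

# Hypothetical percentages of patients screened in intervention and comparison facilities.
effect = difference_in_differences(GroupMeans(pre=40.0, post=85.0),
                                   GroupMeans(pre=42.0, post=55.0))
print(effect)  # 32.0
```

In this hypothetical case, the naive before-after change in the intervention group (45 percentage points) would overstate the attributable effect, because the comparison group also improved by 13 points over the same period.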

A 2015 literature review by Portela et al. [15] provides a useful overview of methods that can be used to learn about improving health care, describing the strengths and weaknesses of approaches that range from traditional experimental designs to quasi-experimental designs, as well as systematic reviews, program and process evaluations, qualitative methods and economic evaluations. The authors note that the dichotomy between designs classified as practical (‘aimed at producing change’) and those classified as scientific (‘aimed at producing new knowledge’) may be a false one, and that the field should find ways to optimize the rigor and generalizability of studies without compromising the importance of adaptability and context.

On this note, Davidoff et al. and other authors call for demystifying the use of theory in improving health care [16], including greater use of theory a priori to better understand how and why an improvement occurs and to provide insight into the so-called ‘black box’ of improvement. Parry et al. provide a guide for a formative, theory-driven approach to evaluating improvement initiatives; they define three phases for initiatives (innovation, testing, and scale-up and spread), each characterized by the degree of belief in the intervention and each requiring a different evaluation approach [17].

Campbell et al. [18] also emphasize the importance of phasing, for example in the use of randomized controlled trials for complex interventions. The authors describe a more flexible application of the 2000 Medical Research Council framework [19] on this topic, taking an iterative, stepwise approach to building understanding of the context of the problem, the intervention and the evaluation, in order to obtain meaningful information from randomized controlled trials of complex interventions.

Through their concept of Safety-II, Braithwaite et al. urge improvement teams to learn from the way clinicians adjust their behavior: ‘[w]e must understand how frontline staff facilitate and manage their work flexibly and safely, instead of insisting on blind compliance or the standardization of their work’ [20].

Others have called attention to ways of integrating adaptability with the fixed interventions of randomized controlled trials (standardizing the function, but not the form, of the intervention) [21], and to the need for earlier pilot testing with iterative learning and non-linear evaluation processes in order to understand complex adaptive systems more fully [22].

Furthermore, as a social intervention, improving health care is complex and therefore difficult to understand, design, implement, reproduce, describe and report. Frameworks such as the Consolidated Framework for Implementation Research, developed by Damschroder et al. [23], provide guidance on evaluating complex adaptive systems by organizing the intervention and contextual factors relevant to an evaluation into domains. The Template for Intervention Description and Replication (TIDieR) guidelines developed by Hoffmann et al. [24] and the Standards for Quality Improvement Reporting Excellence (SQUIRE) guidelines [25] guide the reporting of interventions, improving the completeness of reports and aiding replication [26].
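To make the reporting point concrete, the Python sketch below checks a structured intervention description against the 12 items of the TIDieR checklist [24]. The item labels are our shorthand paraphrase of the published checklist, which should be consulted for the full wording; the dictionary layout and the function name are assumptions for illustration. The partial report is drawn from the MUAC example in Fig. 2.

```python
from typing import Dict, List

# Shorthand labels for the 12 TIDieR items (paraphrased; see Hoffmann et al. for full wording).
TIDIER_ITEMS = [
    "brief name", "why", "what: materials", "what: procedures",
    "who provided", "how", "where", "when and how much",
    "tailoring", "modifications", "how well: planned", "how well: actual",
]

def missing_items(report: Dict[str, str]) -> List[str]:
    """Return the checklist items that the report leaves absent or blank."""
    return [item for item in TIDIER_ITEMS if not report.get(item, "").strip()]

# Partial description of the MUAC example (Fig. 2); other items are left unreported.
muac_report = {
    "brief name": "MUAC screening for patients with HIV",
    "why": "identify malnourished patients and improve their nutritional status",
    "what: materials": "MUAC tapes; nutrition-assessment training",
    "who provided": "a designated nurse, later trained expert patients",
    "where": "registration desk of the health center",
    "modifications": "MUAC moved to registration; expert patients added when patients skipped the station",
}
print(missing_items(muac_report))
```

A gap list like this shows only which items are unreported; whether the reported content is adequate for replication still requires judgment.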

Advancing the field: issues that need to be addressed

The work presented by the above-mentioned authors is rooted in the ideas of showing that improvement has occurred, showing why it has occurred, and learning about improvement so as to make it happen more effectively and in other contexts. There is evidence supporting these objectives, and we must build on it to move improvement forward. These themes also lead us to the next level of questions for improvement.

To learn about improving health care, thought leaders in improving health care and in related fields that also deal with complex adaptive systems, researchers and others need to come together to co-develop a robust framework that has widespread support and reflects the diverse, nuanced ways in which we learn about improving health care. Many questions remain to be answered. These include, but are not limited to:

Can we attribute the improvements we are measuring to the changes we are testing and implementing?

How do we know that no other factors are influencing the results, for example other changes of which we are not aware, or secular trends?

If other factors are also affecting the results, how do we know what part is attributable to the changes we are making?

Why did the changes which yielded improvements work, and how?

If we obtain good results, and have documented the changes which yielded them, to what extent can we implement them elsewhere with the same fidelity and expect to get similar results?

What elements of an improvement are transferable, and what adaptations are needed?

How can we incorporate the effects of local context into the improvements?

How should we design improvement efforts to answer different learning objectives?

How do we optimize data collection that simultaneously serves to drive quality improvement, inform evaluation efforts, and fulfill performance reporting requirements?

Answers to these questions will necessarily lead to multiple study types, depending on what we want to learn, in what context, and for what purpose.

A phenomenon that is relevant to learning in general, and that applies equally to improving health care, is that work which produces successful results tends to get published, while work that fails often does not. Learning comes not only from what has worked and why, but also from what has not worked and why not. To enhance learning, we need to be deliberate about studying not only successes but also failures: which changes failed, why they did not work and under what circumstances [10, 27].

Why/so what

A new framework for how we learn about improvement will help in the design, implementation and evaluation of improving health care to strengthen attribution and better understand variations in effectiveness through reproducible findings in different contexts. This will in turn allow us to understand which activities, under which conditions, are most effective at achieving sustained results in health outcomes.

Conclusion

The complexity of health care requires a more rigorous approach to advancing our understanding of methods for learning about improving health care. Greater use of robust qualitative, quantitative and mixed methods is also needed to assess effectiveness, not merely to demonstrate whether an intervention works but to show why and how it works, and to explore the factors underlying success or failure.

Key questions to examine further include how to strengthen the rigor of improvement; increase the attribution of results to the changes tested; better balance the often opposing needs for fidelity to the intervention and for adaptation; draw conclusions that are generalizable but that also respond to the local context; and account for political considerations in improvement activities. Answering these questions can lead to an improved epistemological paradigm for improvement.

Acknowledgements

The authors would like to acknowledge the contributions of a number of reviewers who guided this manuscript: Bruce Agins, Brian Austin, Don Goldmann, Frank Davidoff, Jim Heiby, Lani Marquez, Michael Marx, John Øvretveit, Alex Rowe and Alexia Zurkuhlen.

References

1. World Health Organization. Quality of Care: A Process for Making Strategic Choices in Health Systems. World Health Organization, 2006.

2. Holloway KA, Ivanovska V, Wagner AK et al. Have we improved use of medicines in developing and transitional countries and do we know how to? Two decades of evidence. Trop Med Int Health 2013;18:656–64.

3. McDaniel RR, Driebe DJ. Complexity science and health care management. Adv Health Care Manage 2001;2:11–36.

4. Capra F. The Web of Life. New York, NY: Anchor Books, 1996.

5. Deming WE. On the distinction between enumerative and analytic surveys. J Am Stat Assoc 1953;48:244–55.

6. Langley A, Denis JL. Beyond evidence: the micropolitics of improvement. BMJ Qual Saf 2011;20(Suppl 1):i43–6.

7. Massoud MR, Mensah-Abrampah N. A promising approach to scale up health care improvements in low- and middle-income countries: the Wave-Sequence Spread Approach and the concept of the Slice of a System. F1000Res 2014;3:100.

8. Franco LM, Marquez L. Effectiveness of collaborative improvement: evidence from 27 applications in 12 less-developed and middle-income countries. BMJ Qual Saf 2011;20:658–65.

9. Ovretveit J. Understanding the conditions for improvement: research to discover which context influences affect improvement success. BMJ Qual Saf 2011;20(Suppl 1):i18–23.

10. Heiby J. The use of modern quality improvement approaches to strengthen African health systems: a 5-year agenda. Int J Qual Health Care 2014;26:117–23.

11. Auerbach AD, Landefeld CS, Shojania KG. The tension between needing to improve care and knowing how to do it. N Engl J Med 2007;357:608–13.

12. Dixon-Woods M. The problem of context in quality improvement. In: Bamber JR (ed). Perspectives in Context: A Selection of Essays Considering Context in Successful Quality Improvement. London, UK: The Health Foundation, 2014, 87–101.

13. Walshe K. Pseudoinnovation: the development and spread of healthcare quality improvement methodologies. Int J Qual Health Care 2009;21:153–9.

14. Green LW, Glasgow RE. Evaluating the relevance, generalization, and applicability of research: issues in external validation and translation methodology. Eval Health Prof 2006;29:126–53.

15. Portela MC, Pronovost PJ, Woodcock T et al. How to study improvement interventions: a brief overview of possible study types. Postgrad Med J 2015;91:343–54.

16. Davidoff F, Dixon-Woods M, Leviton L et al. Demystifying theory and its use in improvement. BMJ Qual Saf 2015;24:228–38.

17. Parry GJ, Carson-Stevens A, Luff DF et al. Recommendations for evaluation of health care improvement initiatives. Acad Pediatr 2013;13:S23–30.

18. Campbell NC, Murray E, Darbyshire J et al. Designing and evaluating complex interventions to improve health care. BMJ 2007;334:455–9.

19. Medical Research Council. A Framework for Development and Evaluation of RCTs for Complex Interventions to Improve Health. London: MRC, 2000.

20. Braithwaite J, Wears RL, Hollnagel E. Resilient health care: turning patient safety on its head. Int J Qual Health Care 2015;27:418–20.

21. Hawe P, Shiell A, Riley T. Complex interventions: how “out of control” can a randomised controlled trial be? BMJ 2004;328:1561–3.

22. Craig P, Dieppe P, Macintyre S et al. Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ 2008;337:a1655.

23. Damschroder LJ, Aron DC, Keith RE et al. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci 2009;4:50.

24. Hoffmann TC, Glasziou PP, Boutron I et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ 2014;348:g1687.

25. Davidoff F, Batalden PB, Stevens DP et al. Development of the SQUIRE Publication Guidelines: evolution of the SQUIRE project. Jt Comm J Qual Patient Saf 2008;34:681–7.

26. Davies L, Batalden P, Davidoff F et al. The SQUIRE Guidelines: an evaluation from the field, 5 years post release. BMJ Qual Saf 2015;24:769–75.

27. Matosin N, Frank E, Engel M et al. Negativity towards negative results: a discussion of the disconnect between scientific worth and scientific culture. Dis Model Mech 2014;7:171–3.

28. Shewhart WA. The Economic Control of Quality of Manufactured Product. New York: D Van Nostrand, 1931.

29. Deming WE. Out of the Crisis. Cambridge, MA: Massachusetts Institute of Technology Center for Advanced Engineering Studies, 1986.

30. Benneyan JC, Lloyd RC, Plsek PE. Statistical process control as a tool for research and healthcare improvement. Qual Saf Health Care 2003;12:458–64.