Abstract

This paper is concerned with the distributed and centralized fusion filtering problems in sensor networked systems with random one-step delays in transmissions. The delays are described by Bernoulli variables correlated at consecutive sampling times, with different characteristics at each sensor. The measured outputs are subject to uncertainties modeled by random parameter matrices, thus providing a unified framework to describe a wide variety of network-induced phenomena; moreover, the additive noises are assumed to be one-step autocorrelated and cross-correlated. Under these conditions, without requiring knowledge of the signal evolution model, and using only the first and second-order moments of the processes involved in the observation model, recursive algorithms for the optimal linear distributed and centralized filters under the least-squares criterion are derived by an innovation approach. First, local estimators based on the measurements received from each sensor are obtained; then, the distributed fusion filter is generated as the least-squares matrix-weighted linear combination of the local estimators. A recursive algorithm for the optimal linear centralized filter is also proposed. To compare the performance of the estimators, recursive formulas for the error covariance matrices are derived in all the algorithms. The effects of the delays on the accuracy of the filters are analyzed in a numerical example, which also illustrates how some usual network-induced uncertainties can be dealt with using the current observation model described by random matrices.

Keywords: least-squares estimation, distributed and centralized fusion methods, random parameter matrices, correlated noises, random delays

1. Introduction

Estimation problems in networked stochastic systems have been widely studied, especially in the past decade, due to the wide range of potential applications in many areas, such as target tracking, air traffic control, fault diagnosis, computer vision, and so on, and important advances in the design of multisensor fusion techniques have been achieved [1]. The basic question in the fusion estimation problems is how to merge the measurement data of different sensors; usually, the centralized and distributed fusion methods are used. Centralized fusion estimators are obtained by processing in the fusion center the measurements received from all sensors; in the distributed fusion method, first, local estimators, based on the measurements received from each sensor, are obtained and, then, these local estimators are combined according to a certain information fusion criterion. Several centralized and/or distributed fusion estimation algorithms have been proposed for conventional systems (see, e.g., [2,3,4,5], and the references therein), where the sensor measured outputs are affected only by additive noises, and assuming that the data are sent directly to the fusion center without any kind of transmission error; that is, assuming perfect connections.

However, although the use of sensor networks offers several advantages, restrictions of the physical equipment and uncertainties in the external environment inevitably give rise to new problems associated with network-induced phenomena, in both the output and the transmission of the sensor measurements [6]. Multiplicative noise uncertainties, sensor gain degradations and missing measurements are some of the random phenomena that usually arise in the sensor measured outputs and that cannot be described by the usual additive disturbances alone. These uncertainties can severely degrade the performance of conventional estimators, which has motivated the design of new fusion estimation algorithms for such systems (see, e.g., [7,8,9,10,11], and references therein). Such uncertainties in the measurements of the network sensors can be modeled by systems with random parameter measurement matrices, which are of considerable practical significance because systems with stochastic parameters arise in many realistic situations and application areas, such as digital control of chemical processes, radar control, navigation systems, or economic systems (see, e.g., [12,13], among others). Therefore, it is not surprising that, in the past few years, much attention has been focused on the design of new fusion estimation algorithms for systems with random parameter matrices (see, e.g., [14,15,16,17], and references therein).

In addition to the aforementioned uncertainties, when the data packets are sent from the sensors to the processing center via a communication network, further network-induced phenomena, such as random delays or measurement losses, inevitably arise during transmission, due to unreliable network characteristics, imperfect communication channels and transmission failures. These additional transmission uncertainties can degrade the performance of fusion estimators and motivate the design of fusion estimation algorithms that take their effects into consideration. In recent years, in response to the popularity of networked stochastic systems, the fusion estimation problem from observations with random delays and packet dropouts, which may happen during data transmission, has been one of the mainstream research topics (see, e.g., [18,19,20,21,22,23,24,25,26,27,28,29,30], and references therein). All the above papers on signal estimation with random transmission delays assume independent random delays in each sensor and mutually independent delays between the different network sensors; in [31] this restriction was weakened and random delays featuring correlation at consecutive sampling times were considered, thus making it possible to deal with common practical situations (e.g., those in which two consecutive observations cannot be delayed).

It should also be noted that in many real-world problems the measurement noises are correlated; for example, when all the sensors operate in the same noisy environment or when the sensor noises are state dependent. For this reason, the fairly conservative assumption that the measurement noises are uncorrelated is weakened in most of the aforementioned research on signal estimation, including systems with both deterministic and random parameter matrices. For example, the optimal Kalman filtering fusion problem in systems with noises cross-correlated at consecutive sampling times is addressed in [25]; also, under different types of noise correlation, centralized and distributed fusion algorithms are obtained in [11,26] for systems with multiplicative noise, and in [7] for systems where the measurements might contain only partial information about the signal. Autocorrelated and cross-correlated noises have also been considered in systems with random parameter matrices and transmission uncertainties; some results on the fusion estimation problems in these systems can be found in [22,24,27].

In this paper, covariance information is used to address the distributed and centralized fusion estimation problems for a class of linear networked stochastic systems with measured outputs perturbed by random parameter matrices and subject to one-step random transmission delays. It is assumed that the sensor measurement noises are one-step autocorrelated and cross-correlated, and that the Bernoulli variables describing the measurement delays in the different sensors are correlated at the same and consecutive sampling times. The proposed observation model can describe the cases of one-step delay, packet loss, or re-received measurements. To the best of the authors' knowledge, fusion estimation problems in this framework, with random measurement matrices and cross-correlated sensor noises in the measured outputs, together with correlated random delays in transmission, have not been investigated; encouraged by the above considerations, we believe that they constitute an interesting research challenge.

The main contributions of the current research are highlighted as follows: (i) The treatment used to address the estimation problem, based on covariance information, does not require the evolution model generating the signal process; nonetheless, the proposed fusion algorithms are also applicable to the conventional state-space model formulation; (ii) Random parameter matrices are considered in the measured outputs, which provide a fairly comprehensive and unified framework to describe some network-induced phenomena, such as multiplicative noise uncertainties or missing measurements, and correlation between the different sensor measurement noises is simultaneously considered; (iii) As in [31], random correlated delays in the transmission, with different delay characteristics at each sensor, are considered; however, the observation model in this paper is more general than that in [31], as the latter does not take random measurement matrices and correlated noises into account; (iv) Unlike [22,24,31], where only centralized fusion estimators are obtained, in this paper, both centralized and distributed estimation problems are addressed; the estimators are obtained under the innovation approach and recursive algorithms, very simple computationally and suitable for online applications, are proposed; (v) Finally, it must be noted that we do not use the augmented approach to deal with the delayed measurements, thus reducing the computational cost compared with the augmentation method.

The rest of the paper is organized as follows. The measured output model with random parameter matrices and the one-step random delay observation model are presented in Section 2, together with the model hypotheses under which the distributed and centralized estimation problems are addressed. The distributed fusion method is applied in Section 3; specifically, the local least-squares linear filtering algorithms are derived in Section 3.1 using an innovation approach and, in Section 3.2, the proposed distributed fusion filter is obtained as a matrix-weighted linear combination of the local filtering estimators, using the mean squared error as the optimality criterion. In Section 4, a recursive algorithm for the centralized least-squares linear filtering estimator is proposed. Section 5 is devoted to analyzing the effectiveness of the proposed estimation algorithms through a simulation example, in which the effects of the sensor random delays on the estimators are compared. Some conclusions are drawn in Section 6.

Notation:

The notations throughout the paper are standard. The notation $a \wedge b$ indicates the minimum value of two real numbers $a, b$. $\mathbb{R}^n$ and $\mathbb{R}^{m \times n}$ denote the n-dimensional Euclidean space and the set of all $m \times n$ real matrices, respectively. For a matrix A, the symbols $A^T$ and $A^{-1}$ denote its transpose and inverse, respectively; the notation $A \otimes B$ represents the Kronecker product of the matrices $A, B$. If the dimensions of vectors or matrices are not explicitly stated, they are assumed to be compatible with algebraic operations. In particular, I and 0 denote the identity matrix and the zero matrix of appropriate dimensions, respectively. For any function $G_{k,s}$, depending on the time instants k and s, we will write $G_k = G_{k,k}$ for simplicity; analogously, $F^{(i)} = F^{(ii)}$ will be written for any function $F^{(ij)}$, depending on the sensors i and j. Finally, $\delta_{k,s}$ denotes the Kronecker delta function.

2. Problem Formulation and Model Description

This paper deals with the distributed and centralized fusion filtering problems from randomly delayed observations coming from networked sensors; the signal measurements at the different sensors are noisy linear functions with random parameter matrices, and the sensor noises are assumed to be correlated and cross-correlated at the same and consecutive sampling times. The estimation is performed in a processing center, which is connected to all sensors, where the measurements are transmitted through unreliable communication channels which may lead to one-step random delays, due to network congestion or other causes. In the centralized filtering problem, the estimators are obtained by fusion of all the network observations at each sampling time, whereas in the distributed filtering, local estimators, based only on the observations of each individual sensor, are first obtained and then, a fusion estimator based on the local ones is calculated.

Our aim is to design recursive algorithms for the optimal linear distributed and centralized filters under the least-squares (LS) criterion, requiring only the first and second-order moments of the processes involved in the model describing the observations from the different sensors. Next, we present the model and formulate the hypotheses necessary to address the estimation problem.

Description of the observation model. Let us consider a discrete-time second-order $n_x$-dimensional signal process, $\{x_k;\ k \ge 1\}$, which is measured in $m$ sensor nodes of the network, $i = 1, \dots, m$, that generate the outputs $z_k^{(i)}$, described by

$$ z_k^{(i)} = H_k^{(i)} x_k + v_k^{(i)}, \quad k \ge 1, \quad i = 1, \dots, m, \tag{1} $$

where $\{H_k^{(i)};\ k \ge 1\}$ are independent random parameter matrices of appropriate dimensions and $\{v_k^{(i)};\ k \ge 1\}$ is the process describing the measurement noise in sensor $i$, which is assumed to be one-step correlated. We also assume that all the sensors operate in the same noisy environment and that there exists correlation between different sensor noises at the same and consecutive sampling times.

In order to estimate the signal process, $\{x_k;\ k \ge 1\}$, the measurement outputs are transmitted to a processing center via unreliable channels, causing one-step random delays in such transmissions. When a measurement, $z_k^{(i)}$, suffers delay and, hence, is unavailable at time $k$, the processor is assumed to use the previous one, $z_{k-1}^{(i)}$; this way of dealing with delays requires that the first measurement always be available and so, considering zero-one random variables $\gamma_k^{(i)}$, $k \ge 2$, the observations used in the estimation are described by

$$ y_1^{(i)} = z_1^{(i)}; \qquad y_k^{(i)} = \big(1 - \gamma_k^{(i)}\big) z_k^{(i)} + \gamma_k^{(i)} z_{k-1}^{(i)}, \quad k \ge 2, \quad i = 1, \dots, m. \tag{2} $$

In this paper, the variables modelling the delays are assumed to be one-step correlated, thus covering many practical situations; for example those in which two consecutive observations through the same channel cannot be delayed, and situations where there is some sort of link between the different communications channels.
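The delay mechanism in Equation (2) can be illustrated with a few lines of code. The following is a minimal sketch for one sensor with scalar outputs, assuming the form $y_1 = z_1$ and $y_k = (1 - \gamma_k) z_k + \gamma_k z_{k-1}$ for $k \ge 2$ described above; the function name and the input values are hypothetical and serve only to show how a delayed packet is replaced by the previous output.

```python
from typing import List, Sequence


def delayed_observations(z: Sequence[float], gamma: Sequence[int]) -> List[float]:
    """One-step random delay model for a single sensor (hypothetical helper).

    z[t] holds the measured output z_{t+1} (0-based storage of z_1, z_2, ...).
    gamma[t] == 1 means the packet due at time t+1 is delayed, so the
    processing center reuses the previous output z_t instead.
    """
    if len(z) != len(gamma):
        raise ValueError("z and gamma must have the same length")
    if any(g not in (0, 1) for g in gamma):
        raise ValueError("delay indicators must be 0 or 1")
    if gamma and gamma[0] != 0:
        raise ValueError("the first measurement must always be available")
    y: List[float] = []
    for t, (z_t, g_t) in enumerate(zip(z, gamma)):
        # y_k = (1 - gamma_k) z_k + gamma_k z_{k-1}; at t == 0 gamma is 0.
        z_prev = z[t - 1] if t > 0 else z_t
        y.append((1 - g_t) * z_t + g_t * z_prev)
    return y


print(delayed_observations([1.0, 2.0, 3.0, 4.0, 5.0], [0, 0, 1, 0, 1]))
# [1.0, 2.0, 2.0, 4.0, 4.0]
```

Rejecting a delay at the first time instant mirrors the requirement, stated above, that the first measurement is always available.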

Model hypotheses. The LS estimation problem will be addressed under the following hypotheses about the processes involved in Equations (1) and (2), which formally specify the above assumptions:

-

(H1)

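The signal process, $\{x_k;\ k \ge 1\}$, has zero mean and its covariance function can be expressed in a separable form; namely, $E[x_k x_s^T] = A_k B_s^T$, $s \le k$, where $A_k$ and $B_s$ are known $n_x \times M$ matrices, $n_x$ being the signal dimension.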

-

(H2)

$\{H_k^{(i)};\ k \ge 1\}$, $i = 1, \dots, m$, are independent sequences of independent random parameter matrices whose entries have known means and known second-order moments; we will denote $\bar{H}_k^{(i)} = E[H_k^{(i)}]$.

-

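(H3) The sensor measurement noises $\{v_k^{(i)};\ k \ge 1\}$, $i = 1, \dots, m$, are zero-mean sequences with known second-order moments, defined by:

$$ E\big[v_k^{(i)} v_s^{(j)T}\big] = R_k^{(ij)}\,\delta_{k,s} + R_{k,k-1}^{(ij)}\,\delta_{k-1,s}, \quad s \le k, \quad i, j = 1, \dots, m. $$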

-

(H4)

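$\{\gamma_k^{(i)};\ k \ge 2\}$, $i = 1, \dots, m$, are sequences of Bernoulli random variables with known means, $\bar{\gamma}_k^{(i)} = E[\gamma_k^{(i)}]$. It is assumed that $\gamma_k^{(i)}$ and $\gamma_s^{(j)}$ are independent for $|k - s| \ge 2$, and the second-order moments, $E[\gamma_k^{(i)} \gamma_k^{(j)}]$ and $E[\gamma_k^{(i)} \gamma_{k-1}^{(j)}]$, are also known.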

-

(H5)

For $i = 1, \dots, m$, the processes $\{x_k\}$, $\{H_k^{(i)}\}$, $\{v_k^{(i)}\}$ and $\{\gamma_k^{(i)}\}$ are mutually independent.
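Hypothesis (H1) only asks for the signal covariance in separable form, $E[x_k x_s^T] = A_k B_s^T$ for $s \le k$. As an illustrative example not taken from the paper, a stationary scalar AR(1) signal $x_k = \phi x_{k-1} + w_k$, with $\mathrm{Var}(w_k) = q$ and $0 < |\phi| < 1$, has $E[x_k x_s] = \sigma^2 \phi^{k-s}$ for $s \le k$, where $\sigma^2 = q/(1-\phi^2)$; this factorizes with $A_k = \sigma^2 \phi^k$ and $B_s = \phi^{-s}$. The sketch below (hypothetical function name) builds these factors and checks the factorization numerically.

```python
from typing import Callable, Tuple


def ar1_covariance_factors(phi: float, q: float) -> Tuple[Callable[[int], float], Callable[[int], float], float]:
    """Separable factors of a stationary scalar AR(1) covariance (illustrative).

    For x_k = phi * x_{k-1} + w_k with Var(w_k) = q and 0 < |phi| < 1,
    E[x_k x_s] = sigma2 * phi**(k - s) for s <= k, which equals A(k) * B(s)
    with A(k) = sigma2 * phi**k and B(s) = phi**(-s).
    """
    if not 0.0 < abs(phi) < 1.0:
        raise ValueError("need 0 < |phi| < 1")
    sigma2 = q / (1.0 - phi ** 2)  # stationary variance

    def A(k: int) -> float:
        return sigma2 * phi ** k

    def B(s: int) -> float:
        return phi ** (-s)

    return A, B, sigma2


A, B, sigma2 = ar1_covariance_factors(phi=0.5, q=0.75)
print(sigma2)       # 1.0
print(A(5) * B(3))  # sigma2 * 0.5**2 = 0.25
```

The factorization is not unique ($c A_k$ and $B_s / c$ work for any $c \neq 0$), and $B_s = \phi^{-s}$ grows geometrically, so over long horizons a rescaled factorization may be preferable numerically.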

Hypotheses (H1)–(H5) guarantee that the observation processes in the different sensors have zero mean, and that the matrices , can be obtained from , and their transposes, by the following expressions:

| (3) |

Remark 1.

The term in becomes from which, from the independence between and , is equal to . From (H2), for or , and is computed from its entries, according to the following formula:

where is any random matrix and denote its entries.
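Remark 1 computes expectations involving random parameter matrices from their entries; the formula itself did not survive extraction. The sketch below assumes it is the standard entrywise identity $(E[H M H^T])_{pq} = \sum_{a,b} E[h_{pa} h_{qb}] M_{ab}$ for a random matrix $H$ and a deterministic matrix $M$, which follows from linearity of expectation. For illustration it assumes mutually independent entries, so that $E[h_{pa} h_{qb}] = \bar h_{pa} \bar h_{qb}$ plus the variance of $h_{pa}$ when $(p,a) = (q,b)$; the matrices and helper names are hypothetical.

```python
from typing import Callable, List

Matrix = List[List[float]]
SecondMoment = Callable[[int, int, int, int], float]


def expected_h_m_ht(m2: SecondMoment, M: Matrix, rows: int) -> Matrix:
    """E[H M H^T] for a random rows x n matrix H and a deterministic n x n matrix M,
    using (E[H M H^T])_{pq} = sum_{a,b} E[h_{pa} h_{qb}] M_{ab}."""
    n = len(M)
    return [[sum(m2(p, a, q, b) * M[a][b] for a in range(n) for b in range(n))
             for q in range(rows)]
            for p in range(rows)]


def independent_entries(mean: Matrix, var: Matrix) -> SecondMoment:
    """E[h_{pa} h_{qb}] when all entries of H are mutually independent."""
    def m2(p: int, a: int, q: int, b: int) -> float:
        value = mean[p][a] * mean[q][b]
        if p == q and a == b:  # same entry: add its variance
            value += var[p][a]
        return value
    return m2


mean = [[1.0, 0.5], [0.0, 2.0]]
var = [[0.1, 0.2], [0.3, 0.0]]
M = [[2.0, 0.5], [0.5, 1.0]]
R = expected_h_m_ht(independent_entries(mean, var), M, rows=2)
print(R)  # approximately [[3.15, 2.0], [2.0, 4.6]]
```

For independent entries the result reduces to $\bar H M \bar H^T$ plus a diagonal correction $\sum_a \mathrm{Var}(h_{pa}) M_{aa}$ in position $(p,p)$, which here equals $0.4$ and $0.6$.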

3. Distributed Fusion Linear Filter

The aim of this section is to address the distributed fusion linear filtering problem of the signal from the randomly delayed observations defined by Equations (1) and (2), under the LS criterion. The proposed distributed filter is designed as the LS matrix-weighted linear combination of the local LS linear filters; therefore, these local filters must be derived first.

3.1. Derivation of the Local LS Linear Filters

With the purpose of obtaining the signal LS linear filters based on the available observations from each sensor, we will use an innovation approach, which provides recursive algorithms for the local estimators, that will be denoted by .

For each sensor , the innovation at time k, which represents the new information provided by the k-th observation, is defined by , where is the LS linear estimator of based on , with . As is well known [32], the innovations, , constitute a zero-mean white process, and the LS linear estimator of any random vector based on the observations , denoted by , can be calculated as a linear combination of the corresponding innovations, ; namely,

| (4) |

where denotes the covariance matrix of . This general expression is derived from the Orthogonal Projection Lemma (OPL), which establishes that the estimation error is uncorrelated with all the observations or, equivalently, uncorrelated with all the innovations.
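The innovation form of the LS linear estimator can be checked against the batch solution on a small case. The following is a minimal sketch, assuming zero-mean scalar observations $y_1, \dots, y_L$ with known covariance matrix `C` and known cross-covariances `c[t]` $= \mathrm{Cov}(\alpha, y_t)$. It builds the innovations by Gram-Schmidt (each one-stage predictor is itself a combination of earlier innovations), forms $\hat\alpha = \sum_h \mathrm{Cov}(\alpha, \mu_h)\Pi_h^{-1}\mu_h$, and cross-checks the weights with the normal equations. The helper names are hypothetical, and the paper's algorithms replace this batch construction by recursions, which this sketch does not reproduce.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def innovations(C: Matrix) -> Tuple[Matrix, Vector]:
    """Row h of `coefs` expresses mu_h = y_h - yhat_{h/h-1} in terms of y_1..y_L;
    Pi[h] = Var(mu_h). Each predictor is built from the previous innovations."""
    L = len(C)
    coefs: Matrix = []
    Pi: Vector = []
    for h in range(L):
        row = [1.0 if t == h else 0.0 for t in range(L)]
        for j in range(h):
            cov_y_mu = sum(coefs[j][t] * C[h][t] for t in range(L))  # Cov(y_h, mu_j)
            gain = cov_y_mu / Pi[j]
            row = [row[t] - gain * coefs[j][t] for t in range(L)]
        coefs.append(row)
        Pi.append(sum(row[s] * C[s][t] * row[t] for s in range(L) for t in range(L)))
    return coefs, Pi


def innovation_estimator(C: Matrix, c: Vector, var_alpha: float) -> Tuple[Vector, float]:
    """Weights on y of alpha_hat = sum_h Cov(alpha, mu_h) / Pi_h * mu_h, and the
    error variance var_alpha - sum_h Cov(alpha, mu_h)^2 / Pi_h (from the OPL)."""
    coefs, Pi = innovations(C)
    L = len(C)
    weights = [0.0] * L
    err = var_alpha
    for h in range(L):
        cov_alpha_mu = sum(coefs[h][t] * c[t] for t in range(L))
        for t in range(L):
            weights[t] += cov_alpha_mu / Pi[h] * coefs[h][t]
        err -= cov_alpha_mu ** 2 / Pi[h]
    return weights, err


def solve(A: Matrix, b: Vector) -> Vector:
    """Gaussian elimination with partial pivoting, used only as a cross-check."""
    n = len(A)
    M = [list(A[i]) + [b[i]] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][k] * x[k] for k in range(i + 1, n))) / M[i][i]
    return x


C = [[2.0, 1.0, 0.5], [1.0, 2.0, 1.0], [0.5, 1.0, 2.0]]
c = [1.0, 0.5, 0.25]
w_innov, err = innovation_estimator(C, c, var_alpha=1.0)
w_batch = solve(C, c)  # normal equations C w = c
print(w_innov, w_batch, err)
```

Both weight vectors should agree up to rounding, which illustrates why the innovations, being uncorrelated, let each new observation contribute through a single additive term.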

Using the following alternative expression for the observations given by Equation (2),

| (5) |

and taking into account the independence hypotheses stated on the model, it is easy to see, from Equation (4), that

| (6) |

where

The general Expression (4) for the LS linear estimators as a linear combination of the innovations, together with Expression (6) for the one-stage observation predictor, are the starting point to derive the local recursive filtering algorithms presented below in Theorem 1; these algorithms also provide the filtering error covariance matrices, , which measure the accuracy of the estimators when the LS optimality criterion is used.

Hereafter, for the matrices and involved in the signal covariance factorization (H1), the following operator will be used:

| (7) |

Theorem 1.

For each , the local LS linear filters, and the corresponding error covariance matrices, , are given by

(8) and

(9) where the vectors and the matrices are recursively obtained from

(10)

(11) and the matrices satisfy

(12)

The innovations , and their covariance matrices, , are given by

| (13) |

and

| (14) |

The coefficients , , are calculated as

| (15) |

| (16) |

Finally, the matrices and , are given in Equations (3) and (7), respectively.

Proof of Theorem 1.

The local filter will be obtained from the general Expression (4), starting from the computation of the coefficients .

The independence hypotheses and the separable structure of the signal covariance (H1) lead to , with given by Equation (7). From Expression (6) for we have:

Hence, using now Equation (4) for and , the filter coefficients are expressed as

which guarantees that , with given by

| (17) |

Therefore, by defining and , Expression (8) for the filter follows immediately from Equation (4), and Equation (9) is obtained by using the OPL to express , and applying (H1) and Equation (8).

The recursive Expressions (10) and (11) are directly obtained from the corresponding definitions, taking into account that which, in turn, from Equation (17), leads to Equation (12) for .

From now on, using that and Equation (7), Expression (6) for the observation predictor will be equivalently written as follows:

| (18) |

From Equation (18), Expression (13) for the innovation is directly obtained and, applying the OPL to express its covariance matrix as , the following identity holds:

Now, using again Equation (18), it is deduced from Expression (12) that and, since and , Expression (14) for is obtained.

To complete the proof, the expressions for , with given in Equation (5), are derived taking into account that is uncorrelated with . Consequently, , and Expression (16) is directly obtained from Equations (1), (2) and (5), using the hypotheses stated on the model. Next, using Equation (4) for in , we have:

| (19) |

To compute the first expectation involved in this formula, we express and we apply the OPL to rewrite , thus obtaining that ; then, by expressing and using Equations (12) and (18), it follows that The second expectation in Equation (19) is easily computed taking into account that, from the OPL, it is equal to and using Equation (18). So the proof is completed. ☐

3.2. Derivation of the Distributed LS Fusion Linear Filter

As mentioned previously, a linear matrix-weighted fusion filter is now generated from the local filters by applying the LS optimality criterion. The distributed fusion filter at any time k is hence designed as a product, , where is the vector constituted by the local filters, and is a matrix such that the mean squared error, , is minimized.

As is well known, the solution of this problem is given by and, consequently, the proposed distributed filter is expressed as:

| (20) |

with .

In view of Equation (20), and since the OPL guarantees that , the derivation of only requires the knowledge of the matrices .
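The fusion rule can be checked on a toy case. For a scalar signal, the standard LS solution (which we assume is the expression elided above) gives the weight row $c^T K^{-1}$, where $K = E[\hat X \hat X^T]$ and, by the OPL, $c_i = E[x \hat x^{(i)}] = E[(\hat x^{(i)})^2]$; the fused error variance is $E[x^2] - c^T K^{-1} c$. The setup below is hypothetical: $x$ has unit variance, each of two sensors observes $y_i = x + n_i$ with independent unit-variance noise, and each local filter is $\hat x^{(i)} = y_i/2$, so $K_{ii} = 0.5$ and $K_{12} = 0.25$. In the paper, these cross-covariances would come from the recursions of Theorem 2; here they are supplied by hand.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def solve(A: Matrix, b: Vector) -> Vector:
    """Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(A[i]) + [b[i]] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][k] * x[k] for k in range(i + 1, n))) / M[i][i]
    return x


def distributed_fusion(var_x: float, K: Matrix) -> Tuple[Vector, float]:
    """Weights and error variance of the LS matrix-weighted fusion (scalar signal).

    K[i][j] = E[xhat_i xhat_j]. By the OPL, E[x xhat_i] = K[i][i], so the
    optimal weight row is c^T K^{-1} with c = diag(K), and the error
    variance is var_x - c^T K^{-1} c.
    """
    c = [K[i][i] for i in range(len(K))]
    w = solve(K, c)  # K is symmetric, so K^{-1} c is the weight row transposed
    err = var_x - sum(ci * wi for ci, wi in zip(c, w))
    return w, err


w, err = distributed_fusion(1.0, [[0.5, 0.25], [0.25, 0.5]])
print(w, err)  # weights ~ [0.667, 0.667], fused error variance ~ 0.333
```

Each local filter has error variance $1 - 0.5 = 0.5$, while the fused one is $1/3$; in this static toy case the fused estimate $(y_1 + y_2)/3$ coincides with the LS estimator based on both observations jointly.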

The following theorem provides a recursive algorithm to compute the matrices which not only determine the proposed distributed fusion filter, but also the filtering error covariance matrix, .

Theorem 2.

Let denote the vector constituted by the local LS filters given in Theorem 1, and , with . Then, the distributed filtering estimator, , and the error covariance matrix, , are given by

(21) and

(22)

The matrices , , are computed by

| (23) |

with satisfying

| (24) |

where are given by

| (25) |

and for , satisfy

| (26) |

The innovation cross-covariance matrices are obtained from

| (27) |

where , are given by

| (28) |

The coefficients , involved in the above expressions, are computed by

| (29) |

| (30) |

Finally, the matrices , and , are given in Equations (3) and (7), respectively.

Proof.

As discussed previously, Expression (21) is immediately derived from Equation (20), while Equation (22) is obtained from , using (H1) and (21). Moreover, Equation (23) for is directly obtained using Equation (8) for the local filters and defining .

Next, we derive the recursive formulas to obtain the matrices , which clearly satisfy Equation (24) by simply using Equation (10) and defining .

For subsequent derivations, the following expression of the one-stage predictor of based on the observations of sensor i will be used; this expression is obtained from Equation (5), taking into account, as proven in Theorem 1, that , and defining :

| (31) |

As Expression (31) is a generalization of Equation (18), hereafter we will also refer to it for the local predictors .

By applying the OPL, it is clear that and, consequently, we can rewrite ; then, using Equation (31) for both predictors, Expression (25) is easily obtained. Also, Equation (26) for , is immediate from Equation (10), by simply defining .

To obtain Equation (27), firstly we apply the OPL to express . Then, using Equation (31) for and , and definitions of and , we have

and Equation (27) is obtained taking into account that and , as it has been derived in the proof of Theorem 1.

Next, Expression (28) for with , is obtained from , and using Equation (31) in .

Finally, the reasoning for obtaining the coefficients is similar to that used for in Theorem 1, so it is omitted. This completes the proof of Theorem 2. ☐

4. Centralized LS Fusion Linear Filter

In the centralized fusion filtering, the observations of the different sensors are jointly processed at each sampling time to yield the optimal filter of the signal , which will be denoted by . To carry out this process, at each time we will work with the vector constituted by the observations of all sensors, , which, from Equation (2), can be expressed by

| (32) |

where is the vector constituted by the sensor measured outputs given in Equation (1), and . Let us note that, analogously to the sensor measured outputs , the stacked vector is a noisy linear function of the signal, with random parameter matrices defined by , and noise :

| (33) |
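Equation (32) stacks the sensor observations into a single vector. The following is a sketch of that stacking, assuming that the delay matrix is the block-diagonal $\Gamma_k = \mathrm{Diag}(\gamma_k^{(1)} I, \dots, \gamma_k^{(m)} I)$ (its definition did not survive extraction) and that each sensor follows the one-step delay model of Equation (2). It checks that $y_k = (I - \Gamma_k) z_k + \Gamma_k z_{k-1}$ reproduces the per-sensor behavior; the helper names and the two-sensor data are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def block_delay_matrix(gammas: List[int], dims: List[int]) -> Matrix:
    """Block-diagonal Diag(gamma^(1) I_{n_1}, ..., gamma^(m) I_{n_m})."""
    n = sum(dims)
    G = [[0.0] * n for _ in range(n)]
    offset = 0
    for g, d in zip(gammas, dims):
        for r in range(offset, offset + d):
            G[r][r] = float(g)
        offset += d
    return G


def stacked_observation(z_k: List[float], z_prev: List[float],
                        gammas: List[int], dims: List[int]) -> List[float]:
    """y_k = (I - Gamma_k) z_k + Gamma_k z_{k-1} for the stacked sensor outputs."""
    G = block_delay_matrix(gammas, dims)
    n = len(z_k)
    return [z_k[r]
            - sum(G[r][c] * z_k[c] for c in range(n))
            + sum(G[r][c] * z_prev[c] for c in range(n))
            for r in range(n)]


# Two hypothetical sensors: sensor 1 has a 2-dimensional output and is delayed,
# sensor 2 has a scalar output and is received on time.
y = stacked_observation([1.0, 2.0, 3.0], [10.0, 20.0, 30.0], gammas=[1, 0], dims=[2, 1])
print(y)  # [10.0, 20.0, 3.0]
```

Because $\Gamma_k$ is block diagonal, the stacked equation never mixes data from different sensors; the cross-sensor coupling enters only through the correlations in (H3) and (H4).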

The processes involved in Equations (32) and (33) satisfy the following properties, which are immediately derived from the model hypotheses (H1)–(H5):

-

(P1)

is a sequence of independent random parameter matrices whose entries have known means and second-order moments.

-

(P2)

The noise is a zero-mean sequence with known second-order moments defined by the matrices .

-

(P3)

The matrices have known means, , and and are independent for .

-

(P4)

The processes and are mutually independent.

In view of Equations (32) and (33) and the above properties, the LS linear filtering problem based on the stacked observations, , is entirely analogous to the local filtering problem addressed in Section 3. Therefore, the centralized filtering algorithm described in the following theorem is derived by reasoning analogous to that used in Theorem 1 and, hence, its proof is omitted.

Theorem 3.

The centralized LS linear filter, and the corresponding error covariance matrix, , are given by

and

where the vectors and the matrices are recursively obtained from

The matrices satisfy

The innovations, , and their covariance matrices, , are given by

and

and the coefficients , verify

In the above formulas, the matrices and are computed by , with given in Equation (3), and , with defined in Equation (7).

5. Numerical Simulation Example

This section is devoted to analyzing the effectiveness of the proposed distributed and centralized filtering algorithms through a simulation example. Let us consider a zero-mean scalar signal process, , with autocovariance function which is factorizable according to (H1) just taking, for example, and

The measured outputs of this signal, which are provided by four different sensors, are described by Equation (1):

where the processes and , are defined as follows:

-

, , and , where is a zero-mean Gaussian white process with unit variance, and , , are white processes with the following time-invariant probability distributions:

-

–For , are Bernoulli random variables with .

-

–is uniformly distributed over .

-

–

It is also assumed that the sequences and are mutually independent.

-

–

The additive noises are defined as , where , , , and is a zero-mean Gaussian white process with unit variance.
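Hypothesis (H2) only requires the means and second-order moments of the random parameters, and for scalar multipliers of the kinds listed above (Bernoulli and uniform variables) these have closed forms. The sketch below computes them, together with a finite discrete law of the sort one might use for discrete gain degradation. The coefficients, probabilities and interval endpoints are hypothetical, since the paper's specific values are not recoverable from this text.

```python
from typing import Dict, Tuple

Moments = Tuple[float, float]  # (E[theta], E[theta^2])


def bernoulli_moments(p: float) -> Moments:
    """theta in {0, 1} with P(theta = 1) = p; theta^2 = theta, so both moments are p."""
    return p, p


def uniform_moments(a: float, b: float) -> Moments:
    """theta uniformly distributed over [a, b]."""
    return (a + b) / 2.0, (a * a + a * b + b * b) / 3.0


def discrete_moments(pmf: Dict[float, float]) -> Moments:
    """theta taking finitely many values with the given probabilities."""
    if abs(sum(pmf.values()) - 1.0) > 1e-12:
        raise ValueError("probabilities must sum to 1")
    return (sum(v * p for v, p in pmf.items()),
            sum(v * v * p for v, p in pmf.items()))


def scaled(c: float, m: Moments) -> Moments:
    """Moments of H = c * theta for a deterministic coefficient c."""
    return c * m[0], c * c * m[1]


print(scaled(0.8, bernoulli_moments(0.9)))               # missing measurements
print(uniform_moments(0.2, 0.7))                         # continuous gain degradation
print(discrete_moments({0.0: 0.1, 0.5: 0.5, 1.0: 0.4}))  # discrete gain degradation
```

Since the parameters are white, moments at different times factor as products of means, so these two numbers per sensor are all the algorithms need from each multiplier.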

Note that the sequences of random variables , model different types of uncertainty in the measured outputs: missing measurements in sensor 1; both missing measurements and multiplicative noise in sensor 2; and continuous and discrete gain degradation in sensors 3 and 4, respectively. Moreover, it is clear that the additive noises , are only correlated at the same and consecutive time instants, with

Next, according to our theoretical observation model, it is supposed that, at any sampling time , the data transmissions are subject to random one-step delays with different rates and such delays are correlated at consecutive sampling times. More precisely, let us assume that the available measurements are given by Equation (2):

where the variables modeling this type of correlated random delays are defined using three independent sequences of independent Bernoulli random variables, , , with constant probabilities, , for all ; specifically, for and

It is clear that the sensor delay probabilities are time-invariant: , for , and . Moreover, the independence of the sequences , , together with the independence of the variables in each sequence, guarantees that the random variables and are independent if , for any . Also, it is clear that, at each sensor, the variables are correlated at consecutive sampling times and , for and . Finally, we have that is independent of , , but correlated with at consecutive sampling times, with and .
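The defining equations of the delay variables did not survive extraction, so the following uses a hypothetical single-sequence construction, not necessarily the paper's: $\gamma_k = \xi_k (1 - \xi_{k+1})$ with $\xi_k$ i.i.d. Bernoulli($p$), and $\gamma_1 = 0$ because the first measurement must be available. It reproduces properties stated in the text: a time-invariant delay probability $p(1-p)$ that is unchanged when $p$ is replaced by $1-p$ and reaches its largest value, $0.25$, at $p = 0.5$; correlation at consecutive times, since $E[\gamma_k \gamma_{k+1}] = 0 \neq p^2(1-p)^2$; and no two consecutive delays. The function names are hypothetical.

```python
import random
from typing import List


def delay_probability(p: float) -> float:
    """P(gamma_k = 1) = P(xi_k = 1, xi_{k+1} = 0) = p (1 - p) under this construction."""
    return p * (1.0 - p)


def simulate_delays(p: float, n: int, seed: int = 0) -> List[int]:
    """Hypothetical construction gamma_k = xi_k (1 - xi_{k+1}), xi_k i.i.d. Bernoulli(p).

    gamma_1 is forced to 0 because the first measurement must be available.
    """
    rng = random.Random(seed)
    xi = [1 if rng.random() < p else 0 for _ in range(n + 1)]
    gamma = [xi[k] * (1 - xi[k + 1]) for k in range(n)]
    if gamma:
        gamma[0] = 0
    return gamma


gamma = simulate_delays(p=0.3, n=200_000, seed=1)
print(sum(gamma) / len(gamma), delay_probability(0.3))  # close to each other (about 0.21)
print(any(a == 1 and b == 1 for a, b in zip(gamma, gamma[1:])))  # False: no consecutive delays
```

If $\gamma_k = 1$ then $\xi_{k+1} = 0$, which forces $\gamma_{k+1} = 0$; this is the mechanism that rules out two consecutive delayed observations at the same sensor.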

Let us observe that, for each sensor , if , then ; this fact guarantees that, when the measurement at time k is delayed, the available measurement at time is well-timed. Therefore, this correlation model avoids the possibility of two consecutive delayed observations at the same sensor. Table 1 shows an example of data transmission in sensor 1 when .

Table 1.

Sensor 1 data transmission.

| Time k | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 1 | ||

| 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | |||

Note that if , there is no delay at time k; i.e., the output is received on time by the fusion center and . If , the packet received at time k is the output at ; i.e., there is a one-step delay and the processed observation is . From Table 1, we see that and are lost, and and are re-received.
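The lost/re-received bookkeeping described above can be automated. The sketch below assumes one-step delays as in Equation (2), so the processed observation at time $k$ is $z_{k-1}$ when $\gamma_k = 1$ and $z_k$ otherwise; a measurement that is never processed is counted as lost, and one processed at two different times as re-received. The indicator sequence and the function name are hypothetical.

```python
from collections import Counter
from typing import List, Tuple


def transmission_report(gamma: List[int]) -> Tuple[List[int], List[int]]:
    """Lost and re-received measurement times (1-based) for delay indicators gamma_1..gamma_n."""
    if gamma and gamma[0] != 0:
        raise ValueError("the first measurement must always be available")
    # Index of the measurement processed at each time k.
    used = Counter(k - 1 if g else k for k, g in enumerate(gamma, start=1))
    n = len(gamma)
    lost = [j for j in range(1, n + 1) if used[j] == 0]
    re_received = [j for j in range(1, n + 1) if used[j] >= 2]
    return lost, re_received


# Hypothetical delay indicators for k = 1, ..., 10
print(transmission_report([0, 0, 1, 0, 0, 1, 0, 0, 0, 1]))  # ([3, 6, 10], [2, 5, 9])
```

Each delay at time $k$ produces one lost measurement ($z_k$) and one re-received measurement ($z_{k-1}$), which is why the observation model covers packet loss and re-received measurements without extra variables.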

To illustrate the feasibility and analyze the effectiveness of the proposed estimators, the algorithms were implemented in MATLAB, and a hundred iterations were run. In order to measure the estimation accuracy, the error variances of both distributed and centralized fusion estimators were calculated for several values of the delay probabilities at the different sensors, obtained from several values of . Let us observe that the delay probabilities, , for , are the same if is used instead of ; for this reason, only the case will be considered here.

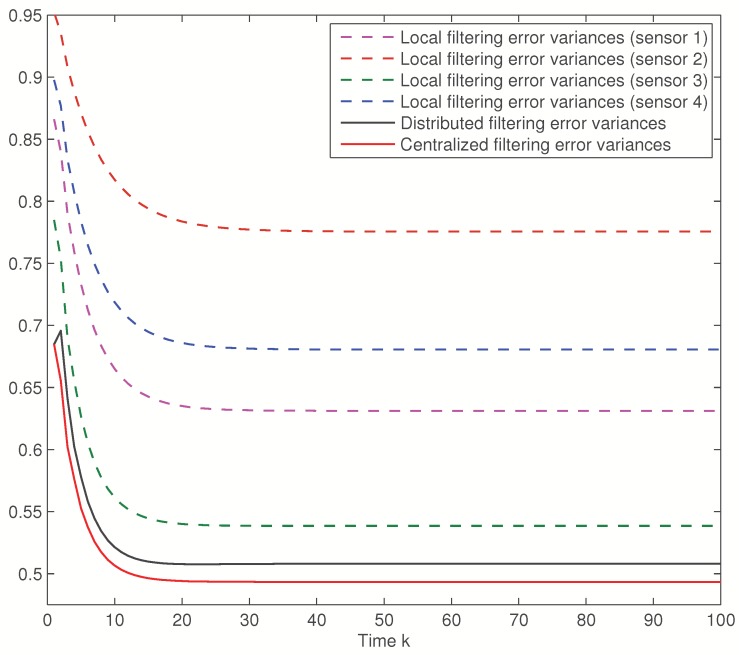

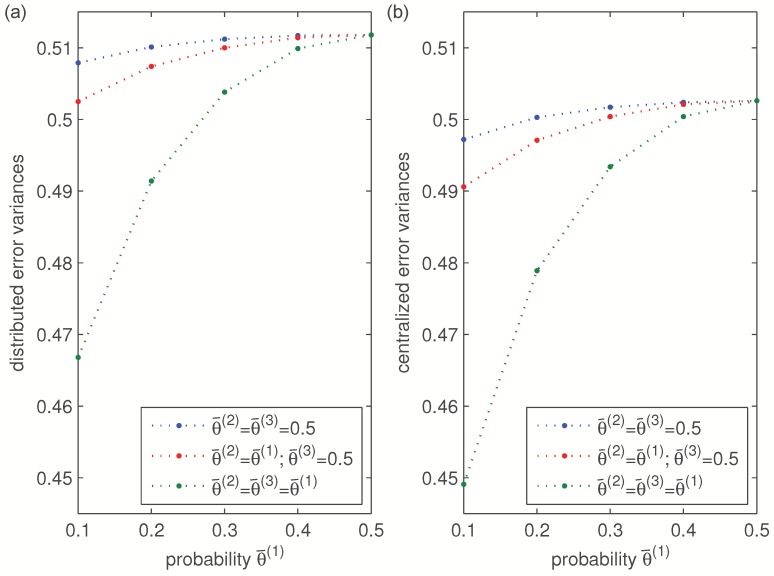

First, the error variances of the local, distributed and centralized filters will be compared considering the same delay probabilities for the four sensors. Figure 1 displays these error variances when , , which means that the delay probability at every sensor is . This figure shows that the error variances of the distributed fusion filtering estimator are lower than those of every local estimator, but slightly greater than those of the centralized one. However, this slight difference is compensated by the fact that the distributed fusion structure reduces the computational cost and has better robustness and fault tolerance. Analogous results are obtained for other values of . In fact, Figure 2 displays the distributed and centralized filtering error variances for , which lead to the delay probabilities , respectively. In this figure, both graphs (corresponding to the distributed and centralized fusion filters, respectively) show that the performance of the filters deteriorates as increases. This was expected, since an increase in yields a rise in the delay probabilities. This figure also confirms that both methods, distributed and centralized, have approximately the same accuracy, thus corroborating the previous results.

Figure 1.

Local, distributed and centralized filtering error variances for , .

Figure 2.

(a) Distributed filtering error variances and (b) centralized filtering error variances for different values of .

In order to carry out a further discussion on the effects of the sensor random delays, Figure 3 shows a comparison of the filtering error variances in the following cases:

Case I: error variances versus , when . In this case, as mentioned above, the values , lead to the values , respectively, for the delay probabilities of sensors 1 and 4, whereas the delay probabilities of sensors 2 and 3 are constant and equal to 0.25.

Case II: error variances versus , when and . Varying as in Case I, the delay probabilities of sensors 1, 2 and 4 are equal and take the aforementioned values, whereas the delay probability of sensor 3 is constant and equal to 0.25.

Case III: error variances versus , when . Now, as in Figure 2, the delay probabilities of the four sensors are equal, and they all take the aforementioned values.

Figure 3.

(a) Distributed filtering error variances and (b) centralized filtering error variances at , versus .

Since the behavior of the error variances is analogous in all the iterations, only the results of a specific iteration () are displayed in Figure 3, which shows that the performance of the distributed and centralized estimators is indeed influenced by the probability and, as expected, better estimations are obtained as becomes smaller, due to the fact that the delay probabilities, , decrease with . Moreover, this figure shows that the error variances in Case III are less than those of Case II which, in turn, are lower than those of Case I. This is due to the fact that, while the delay probabilities of the four sensors are varied in Case III, only two and three sensors vary their delay probabilities in Cases I and II, respectively. Since the constant delay probabilities of the other sensors are assumed to take their greatest possible value, this figure confirms that the estimation accuracy improves as the delay probabilities decrease.

6. Conclusions

In this paper, distributed and centralized fusion filtering algorithms have been designed for multi-sensor systems whose measured outputs involve random parameter matrices and correlated noises, assuming correlated random delays in transmission. The main outcomes and results can be summarized as follows:

Information on the signal process: our approach, based on covariance information, does not require the evolution model generating the signal process to design the proposed distributed and centralized filtering algorithms; nonetheless, they are also applicable to the conventional formulation using the state-space model.

Uncertain measured outputs of the signal: random measurement matrices and cross-correlation between the noises of the different sensors are considered in the measured outputs, thus providing a unified framework to address different network-induced phenomena, such as missing measurements or sensor gain degradation, along with correlated measurement noises.

Random one-step transmission delays: the fusion estimation problems are addressed assuming random one-step delays in the transmission of the outputs to the fusion center through the network communication channels; the delays have different characteristics at each sensor and are assumed to be correlated and cross-correlated at consecutive sampling times. This correlation assumption covers many situations in which the common assumption of independent delays is not realistic; for example, networked systems with stand-by sensors for the immediate replacement of a failed unit, which rules out two successive delayed observations.

Fusion filtering algorithms: first, recursive algorithms for the local LS linear signal filters, based on the measured output data coming from each sensor, have been designed by an innovation approach; the computational procedure of the local algorithms is very simple and suitable for online applications. Then, the matrix-weighted sum of the local filters that minimizes the mean-squared estimation error is proposed as the distributed fusion estimator. Also, using covariance information, a recursive centralized LS linear filtering algorithm, with a structure analogous to that of the local algorithms, is proposed. The accuracy of the proposed fusion estimators, obtained under the LS optimality criterion, is measured by the error covariance matrices, which can be calculated offline since they do not depend on the current set of observed data.

Simulations: a numerical simulation example has illustrated the usefulness of the proposed algorithms for the estimation of a scalar signal. Error variance comparisons have shown that both the distributed and centralized fusion filters outperform the local ones, and that the centralized fusion estimators are slightly superior to the distributed ones. The effects of the delays on the estimators' performance have also been analyzed through the error variances. This example has also highlighted the applicability of the proposed algorithms to different multi-sensor systems with stochastic uncertainties, which can be handled using the observation model with random measurement matrices considered in this paper.
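As an illustration of how random measurement matrices cover the phenomena listed in the item on uncertain measured outputs, consider a scalar output z_k = H_k x_k + v_k with H_k = λ_k h for a random multiplier λ_k. The sketch below assumes two common choices, with hypothetical helper names: a Bernoulli multiplier, which models missing measurements (λ_k = 0 means the output contains noise only), and a uniform multiplier on [a, b], which models sensor gain degradation. Because covariance-based algorithms of this kind use only first- and second-order moments of the observation model, the helpers return E[λ_k] and E[λ_k²] rather than requiring a model of the signal evolution.

```python
import random


def bernoulli_moments(p):
    """E[lam] and E[lam^2] for a Bernoulli(p) multiplier (missing measurements)."""
    return p, p


def uniform_moments(a, b):
    """E[lam] and E[lam^2] for a Uniform(a, b) multiplier (gain degradation)."""
    return (a + b) / 2, (a * a + a * b + b * b) / 3


def measured_output(x, h, lam, v):
    """Scalar output z = (lam * h) * x + v; lam * h plays the role of the random measurement matrix."""
    return lam * h * x + v


rng = random.Random(0)
a, b = 0.5, 0.9
draws = [rng.uniform(a, b) for _ in range(100_000)]
# Empirical first and second moments versus their closed forms (mean is 0.7).
print(sum(draws) / len(draws), uniform_moments(a, b)[0])
print(sum(d * d for d in draws) / len(draws), uniform_moments(a, b)[1])
# A missing measurement (lam = 0): only the additive noise reaches the output.
print(measured_output(x=2.0, h=1.5, lam=0, v=0.3))  # 0.3
```

Other network-induced phenomena would only change the distribution of λ_k, and hence the two moments passed to the filter.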
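The distributed fusion step described in the item on fusion filtering algorithms can be sketched for a scalar signal, as in the simulation example. The sketch assumes that the weights are those of the least-squares projection of the signal onto the span of the local estimators: with Ξ_i = E[x x̂_i] and Σ_ij = E[x̂_i x̂_j], the fused estimator is Σ_i w_i x̂_i with w = Σ⁻¹Ξᵀ, and its error variance is E[x²] − ΞΣ⁻¹Ξᵀ. Only moments enter, which is why the error variance can be computed offline. The function names and the toy moment values are hypothetical, not the paper's simulation data; a small Gaussian-elimination solver keeps the example dependency-free.

```python
def solve(a, b):
    """Solve the small dense system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ls_fusion_weights(xi, sigma):
    """Weights w = Sigma^{-1} Xi^T of the LS linear combination sum_i w_i * xhat_i.

    xi[i]       = E[x * xhat_i]       (signal / local-filter cross-covariance)
    sigma[i][j] = E[xhat_i * xhat_j]  (covariance of the stacked local filters)
    """
    return solve(sigma, xi)


def fused_error_variance(signal_var, xi, weights):
    """E[x^2] - Xi Sigma^{-1} Xi^T; depends only on moments, so it can be computed offline."""
    return signal_var - sum(x_i * w_i for x_i, w_i in zip(xi, weights))


# Hypothetical toy moments: unit-variance signal, two sensors with unit-variance
# independent noise, local LS filters xhat_i = y_i / 2 (local error variance 1/2).
xi = [0.5, 0.5]
sigma = [[0.5, 0.25], [0.25, 0.5]]
w = ls_fusion_weights(xi, sigma)
print(w)                                 # both weights equal 2/3, up to rounding
print(fused_error_variance(1.0, xi, w))  # 1/3, up to rounding
```

In this toy case the fused estimate is (y_1 + y_2)/3 and its error variance, 1/3, is below the local error variance of 1/2, which is consistent with the fusion filters outperforming the local ones.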

Acknowledgments

This research is supported by Ministerio de Economía y Competitividad and Fondo Europeo de Desarrollo Regional FEDER (grant no. MTM2014-52291-P).

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| LS | Least-Squares |
| OPL | Orthogonal Projection Lemma |

Author Contributions

All the authors contributed equally to this work. Raquel Caballero-Águila, Aurora Hermoso-Carazo and Josefa Linares-Pérez provided original ideas for the proposed model and collaborated in the derivation of the estimation algorithms; they participated equally in the design and analysis of the simulation results; and the paper was also written and reviewed cooperatively.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Li W., Wang Z., Wei G., Ma L., Hu J., Ding D. A Survey on multisensor fusion and consensus filtering for sensor networks. Discret. Dyn. Nat. Soc. 2015;2015:683701.

- 2. Ran C., Deng Z. Self-tuning weighted measurement fusion Kalman filtering algorithm. Comput. Stat. Data Anal. 2012;56:2112–2128. doi: 10.1016/j.csda.2012.01.001.

- 3. Feng J., Zeng M. Optimal distributed Kalman filtering fusion for a linear dynamic system with cross-correlated noises. Int. J. Syst. Sci. 2012;43:385–398. doi: 10.1080/00207721.2010.502601.

- 4. Yan L., Li X.R., Xia Y., Fu M. Optimal sequential and distributed fusion for state estimation in cross-correlated noise. Automatica. 2013;49:3607–3612. doi: 10.1016/j.automatica.2013.09.013.

- 5. Feng J., Wang Z., Zeng M. Distributed weighted robust Kalman filter fusion for uncertain systems with autocorrelated and cross-correlated noises. Inform. Fusion. 2013;14:78–86. doi: 10.1016/j.inffus.2011.09.004.

- 6. Hu J., Wang Z., Chen D., Alsaadi F.E. Estimation, filtering and fusion for networked systems with network-induced phenomena: New progress and prospects. Inform. Fusion. 2016;31:65–75. doi: 10.1016/j.inffus.2016.01.001.

- 7. Liu Y., He X., Wang Z., Zhou D. Optimal filtering for networked systems with stochastic sensor gain degradation. Automatica. 2014;50:1521–1525. doi: 10.1016/j.automatica.2014.03.002.

- 8. Caballero-Águila R., García-Garrido I., Linares-Pérez J. Information fusion algorithms for state estimation in multi-sensor systems with correlated missing measurements. Appl. Math. Comput. 2014;226:548–563. doi: 10.1016/j.amc.2013.10.068.

- 9. Fangfang P., Sun S. Distributed fusion estimation for multisensor multirate systems with stochastic observation multiplicative noises. Math. Probl. Eng. 2014;2014:373270. doi: 10.1155/2014/373270.

- 10. Pang C., Sun S. Fusion predictors for multi-sensor stochastic uncertain systems with missing measurements and unknown measurement disturbances. IEEE Sens. J. 2015;15:4346–4354. doi: 10.1109/JSEN.2015.2416511.

- 11. Tian T., Sun S., Li N. Multi-sensor information fusion estimators for stochastic uncertain systems with correlated noises. Inform. Fusion. 2016;27:126–137. doi: 10.1016/j.inffus.2015.06.001.

- 12. De Koning W.L. Optimal estimation of linear discrete-time systems with stochastic parameters. Automatica. 1984;20:113–115. doi: 10.1016/0005-1098(84)90071-2.

- 13. Luo Y., Zhu Y., Luo D., Zhou J., Song E., Wang D. Globally optimal multisensor distributed random parameter matrices Kalman filtering fusion with applications. Sensors. 2008;8:8086–8103. doi: 10.3390/s8128086.

- 14. Shen X.J., Luo Y.T., Zhu Y.M., Song E.B. Globally optimal distributed Kalman filtering fusion. Sci. China Inf. Sci. 2012;55:512–529. doi: 10.1007/s11432-011-4538-7.

- 15. Hu J., Wang Z., Gao H. Recursive filtering with random parameter matrices, multiple fading measurements and correlated noises. Automatica. 2013;49:3440–3448. doi: 10.1016/j.automatica.2013.08.021.

- 16. Linares-Pérez J., Caballero-Águila R., García-Garrido I. Optimal linear filter design for systems with correlation in the measurement matrices and noises: Recursive algorithm and applications. Int. J. Syst. Sci. 2014;45:1548–1562. doi: 10.1080/00207721.2014.909093.

- 17. Caballero-Águila R., García-Garrido I., Linares-Pérez J. Distributed fusion filtering in networked systems with random measurement matrices and correlated noises. Discret. Dyn. Nat. Soc. 2015;2015:398605. doi: 10.1155/2015/398605.

- 18. Shi Y., Fang H. Kalman filter based identification for systems with randomly missing measurements in a network environment. Int. J. Control. 2010;83:538–551. doi: 10.1080/00207170903273987.

- 19. Li H., Shi Y. Robust H∞ filtering for nonlinear stochastic systems with uncertainties and random delays modeled by Markov chains. Automatica. 2012;48:159–166. doi: 10.1016/j.automatica.2011.09.045.

- 20. Li N., Sun S., Ma J. Multi-sensor distributed fusion filtering for networked systems with different delay and loss rates. Digit. Signal Process. 2014;34:29–38. doi: 10.1016/j.dsp.2014.07.016.

- 21. Sun S., Ma J. Linear estimation for networked control systems with random transmission delays and packet dropouts. Inf. Sci. 2014;269:349–365. doi: 10.1016/j.ins.2013.12.055.

- 22. Caballero-Águila R., Hermoso-Carazo A., Linares-Pérez J. Covariance-based estimation from multisensor delayed measurements with random parameter matrices and correlated noises. Math. Probl. Eng. 2014;2014:958474. doi: 10.1155/2014/958474.

- 23. Chen B., Zhang W., Yu L. Networked fusion Kalman filtering with multiple uncertainties. IEEE Trans. Aerosp. Electron. Syst. 2015;51:2332–2349. doi: 10.1109/TAES.2015.130803.

- 24. Caballero-Águila R., Hermoso-Carazo A., Linares-Pérez J. Optimal state estimation for networked systems with random parameter matrices, correlated noises and delayed measurements. Int. J. Gen. Syst. 2015;44:142–154. doi: 10.1080/03081079.2014.973728.

- 25. Wang S., Fang H., Tian X. Recursive estimation for nonlinear stochastic systems with multi-step transmission delays, multiple packet dropouts and correlated noises. Signal Process. 2015;115:164–175. doi: 10.1016/j.sigpro.2015.03.022.

- 26. Chen D., Yu Y., Xu L., Liu X. Kalman filtering for discrete stochastic systems with multiplicative noises and random two-step sensor delays. Discret. Dyn. Nat. Soc. 2015;2015:809734. doi: 10.1155/2015/809734.

- 27. Caballero-Águila R., Hermoso-Carazo A., Linares-Pérez J. Fusion estimation using measured outputs with random parameter matrices subject to random delays and packet dropouts. Signal Process. 2016;127:12–23. doi: 10.1016/j.sigpro.2016.02.014.

- 28. Chen D., Xu L., Du J. Optimal filtering for systems with finite-step autocorrelated process noises, random one-step sensor delay and missing measurements. Commun. Nonlinear Sci. Numer. Simul. 2016;32:211–224. doi: 10.1016/j.cnsns.2015.08.015.

- 29. Wang S., Fang H., Tian X. Minimum variance estimation for linear uncertain systems with one-step correlated noises and incomplete measurements. Digit. Signal Process. 2016;49:126–136. doi: 10.1016/j.dsp.2015.10.007.

- 30. Gao S., Chen P., Huang D., Niu Q. Stability analysis of multi-sensor Kalman filtering over lossy networks. Sensors. 2016;16:566. doi: 10.3390/s16040566.

- 31. Caballero-Águila R., Hermoso-Carazo A., Linares-Pérez J. Linear estimation based on covariances for networked systems featuring sensor correlated random delays. Int. J. Syst. Sci. 2013;44:1233–1244. doi: 10.1080/00207721.2012.659709.

- 32. Kailath T., Sayed A.H., Hassibi B. Linear Estimation. Prentice Hall; Upper Saddle River, NJ, USA: 2000.