Abstract

Little is known about validity of self-reported mammography surveillance among breast cancer survivors. Most studies have focused on accuracy among healthy, average-risk populations and none have assessed validity by electronic medical record (EMR) extraction method. To assess validity of survivor-reported mammography post-active treatment care, we surveyed all survivors diagnosed 2004–2009 in an academic hospital cancer registry (n = 1441). We used electronic query and manual review to extract EMR data. Concordance, sensitivity, specificity, positive predictive value, and report-to-records ratio were calculated by comparing survivors' self-reports to data from each extraction method. We also assessed average difference in months between mammography dates by source and correlates of concordance. Agreement between the two EMR extraction methods was high (concordance 0.90; kappa 0.70), with electronic query identifying more mammograms. Sensitivity was excellent (0.99) regardless of extraction method; concordance and positive predictive value were good; however, specificity was poor (manual review 0.20, electronic query 0.31). Report-to-records ratios were both over 1 suggesting over-reporting. We observed slight forward telescoping for survivors reporting mammograms 7–12 months prior to survey date. Higher educational attainment and less time since mammogram receipt were associated with greater concordance. Accuracy of survivors' self-reported mammograms was generally high with slight forward telescoping among those recalling their mammograms between 7 and 12 months prior to the survey date. Results are encouraging for clinicians and practitioners relying on survivor reports for surveillance care delivery and as a screening tool for inclusion in interventions promoting adherence to surveillance guidelines.

Keywords: Breast cancer survivors, Mammography surveillance, Validity

Introduction

The number of US breast cancer survivors is rapidly increasing (>2.85 million in 2010 [1]) with significant numbers diagnosed at early stages. Post-active treatment surveillance with mammography is recommended to detect recurrent and new primary cancers early and improve survival [2–5]. Despite its demonstrated benefits, adherence is suboptimal (ranging from 53 to 92 % [6–13]), although estimates are wide ranging and depend in part on data source (e.g., self-report, claims data, medical record). From a cancer control research perspective, a valid self-report measure of mammography surveillance is important for identification of correlates/predictors, appropriate triage of survivors who would benefit from interventions promoting post-treatment surveillance, evaluation of intervention trials, and assessment of trends in adherence to guidelines [14, 15]. Providers caring for breast cancer survivors also need information on the accuracy of self-reported behavior in order to make timely recommendations. While there is a large literature documenting the accuracy of self-reported mammography among healthy women [16, 17], little is known about the accuracy of breast cancer survivors' reports [18].

To evaluate validity and reliability of self-reported behavior, studies have used a variety of data sources as the gold standard including administrative claims, computerized radiology databases, and paper/electronic medical records; some studies have even combined administrative and medical record data [16, 19]. Several have argued that administrative claims data are not as precise as clinical data gleaned from manual abstraction of paper medical records [20]. It is less clear if this caveat is also true with electronic medical records (EMR), which is important given increasing adoption in the US. Approximately 34 % of physician offices had EMR systems in 2011 [21], and the number is likely to grow with federal initiatives encouraging EMR adoption [22]. An advantage of EMRs is the ability to use computer programmers to extract data, which significantly reduces time and cost. With federal initiatives encouraging the meaningful use of EMRs [22, 23], researchers should examine performance of different EMR extraction methods.

This study was designed to accomplish three aims: (1) examine concordance and kappa between two methods of EMR data extraction: electronic query and manual review; (2) evaluate the concordance, sensitivity, specificity, positive predictive value, and report-to-records ratio of breast cancer survivors' self-reported most recent mammogram with EMR data extracted via electronic query and manual review; and (3) identify correlates of concordance between survivors' report and the EMR.

Methods

Data were collected as part of a larger study on breast and colorectal cancer survivors. Recruitment methods, participants, and data collection for the patient survey have been previously described [24]. Breast cancer survivors with stage 0–III disease diagnosed between 2004 and 2009 (N = 1441) and listed in an academic hospital cancer registry were invited to complete a survey between July and December 2011. The response rate was 31.4 % (n = 452). Based on survey responses, we excluded patients who (1) did not report at least the year of the most recent mammogram (n = 3); (2) reported a mammography date in the future relative to the survey date (n = 7); or (3) reported bilateral mastectomies (n = 70) because post-treatment mammography screenings are not recommended for this subgroup. The final analytic sample was 372.

The hospital and outpatient clinics (primary care, radiology, oncology) in the academic medical center all use the same EMR (EpicCare; Verona, WI). Mammography data from the EMR were obtained through two methods: manual review by three trained non-physician research assistants (RAs) and an electronic query of the hospital data warehouse.

Outcome measures

Patient self-report

Breast cancer survivors answered the following survey question: “What was the month and year of your most recent mammogram?”

Electronic query

We requested a report from the Clinical Information Systems (CIS) team at the academic medical center that electronically queried the hospital data warehouse. The hospital data warehouse contains claims- and procedure-based EMR data directly downloaded from the EpicCare EMR system. The date of all mammograms between January 1, 2010 and December 1, 2011 for each survivor was extracted.

Manual review

RAs used an abstraction form to manually extract from the survivor's EMR all mammography dates between January 1, 2010 and December 1, 2011 as well as the location where data were stored. RAs systematically looked in three locations: imaging, progress notes of encounters, and media reports. The latter was where clinical staff uploaded digital copies of mammograms received outside the healthcare system. We also used manual review to identify and exclude survivors receiving bilateral or two separate mastectomy procedures (i.e., in effect a bilateral mastectomy but not at the same time). RAs were blinded to survivors' self-reported mammogram status. A 10 % random sample of survivors was reviewed as a quality control check and had 100 % agreement on mammography month and year.

Receipt of a recent mammogram

For each data source (survivor self-report, electronic query, manual review), we used the date survivors reported completing the survey (hereafter survey date) to calculate whether or not the survivor had a mammogram within the past 12 months. We chose a 12-month interval because survivors varied in the time since completing active treatment; thus, at a minimum, all were recommended to get a mammogram at least every year [2–5]. For each data source, we coded the patient as having had a recent mammogram (1 = yes) if the mammogram month and year were within 13 months of the survey date; otherwise, the survivor was coded as 0 (no recent mammogram).
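The coding rule above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, dates are reduced to month and year (the day is ignored), and the 13-month boundary is treated as inclusive, which the text does not specify.

```python
from datetime import date
from typing import Optional


def months_between(earlier: date, later: date) -> int:
    """Whole calendar months from `earlier` to `later`, ignoring the day of month."""
    return (later.year - earlier.year) * 12 + (later.month - earlier.month)


def recent_mammogram(mammogram: Optional[date], survey: date, window: int = 13) -> int:
    """Return 1 if the mammogram month falls within `window` months before the
    survey month (inclusive boundary is an assumption), otherwise 0."""
    if mammogram is None:
        return 0  # no mammogram found in this data source
    gap = months_between(mammogram, survey)
    return 1 if 0 <= gap <= window else 0


# Hypothetical survivor surveyed in October 2011
survey_date = date(2011, 10, 1)
print(recent_mammogram(date(2010, 9, 1), survey_date))  # 13 months before the survey
print(recent_mammogram(date(2010, 8, 1), survey_date))  # 14 months before the survey
```

The same function would be applied once per data source (self-report, electronic query, manual review) so that all three indicators share one definition of "recent".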

Other variables of interest

Sociodemographic variables included survivor-reported age, sex, race/ethnicity, marital status, and education. If date of birth was missing or unclear, we calculated age based on the birth date documented in the cancer registry (the data source from which the survey sample was selected). We also queried the cancer registry for the number of years since cancer diagnosis. Healthcare access and utilization variables including insurance, receipt of follow-up care in 2010 and 2011, and type of provider seen for follow-up care were obtained through the survey.

Data analysis

To examine agreement between the two EMR data extraction methods for ascertaining recent post-treatment mammography (Aim 1), we compared electronic query to manual review and calculated concordance (proportion of survivors with agreement between two sources of data) and kappa as there is no gold standard for how to collect EMR data.
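As an illustration of the two agreement statistics, the sketch below computes concordance and Cohen's kappa from a 2 × 2 cross-classification of two extraction methods. The function name is hypothetical, and the counts in the example are made up for illustration; they are not the study's cross-tabulation, which is not reported cell by cell.

```python
def concordance_and_kappa(both_yes: int, first_only: int, second_only: int, neither: int):
    """Observed agreement and Cohen's kappa for two binary ratings.

    both_yes    : recent mammogram found by both methods
    first_only  : found by the first method only
    second_only : found by the second method only
    neither     : found by neither method
    """
    total = both_yes + first_only + second_only + neither
    observed = (both_yes + neither) / total
    first_yes = (both_yes + first_only) / total
    second_yes = (both_yes + second_only) / total
    # Agreement expected by chance if the two methods were independent
    expected = first_yes * second_yes + (1 - first_yes) * (1 - second_yes)
    kappa = (observed - expected) / (1 - expected)  # undefined if expected == 1
    return observed, kappa


# Hypothetical counts, for illustration only
observed, kappa = concordance_and_kappa(both_yes=40, first_only=10, second_only=5, neither=45)
print(f"concordance = {observed:.2f}, kappa = {kappa:.2f}")
```

Kappa discounts the agreement expected by chance, which is why it can be noticeably lower than concordance when most survivors have a mammogram in both sources.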

To evaluate the validity of survivors' self-report by EMR extraction method (Aim 2), we compared self-report versus manual review and self-report versus electronic query. We calculated point estimates and 95 % confidence intervals (CIs) for the following validity measures: concordance, sensitivity (proportion of survivors who self-reported a mammogram within the past 13 months among those with one documented in the EMR), specificity (proportion of patients who reported not having a mammogram in the past 13 months among those without a mammogram in the EMR), positive predictive value (proportion of survivors who had one documented in the EMR among those who self-reported a recent mammogram), and report-to-records ratio (percentage of self-reported mammograms divided by the percentage of mammograms documented in the EMR). If the report-to-records ratio is greater than 1, then the estimate suggests a pattern of over-reporting recent mammograms by survivors. We estimated sensitivity to assess under-reporting and specificity, positive predictive value, and report-to-records ratio to examine over-reporting.
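The text does not state which method was used for the 95 % CIs. As one possible approach, the sketch below computes a Wilson score interval for a proportion such as sensitivity or specificity. The choice of the Wilson method and the function name are assumptions for illustration, and the resulting bounds may differ slightly from intervals obtained with an exact or other method.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.959964):
    """Wilson score interval for a binomial proportion (95 % by default)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Example: a specificity estimate based on 18 true negatives among 88 survivors
# without an EMR-documented mammogram
low, high = wilson_interval(18, 88)
print(f"specificity = {18 / 88:.2f}, 95 % CI {low:.2f} to {high:.2f}")
```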

We used Tisnado et al.'s criteria for evaluating concordance, sensitivity, and specificity of ambulatory care services: ≥0.9 indicates excellent agreement, ≥0.8 to <0.9 indicates good agreement, ≥0.7 to <0.8 indicates fair agreement, and <0.7 indicates poor agreement [25].
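These cut points translate directly into a small lookup, sketched here with a hypothetical function name:

```python
def agreement_category(value: float) -> str:
    """Map a validity estimate (0 to 1) to the agreement label from the cut points above."""
    if value >= 0.9:
        return "excellent"
    if value >= 0.8:
        return "good"
    if value >= 0.7:
        return "fair"
    return "poor"


for estimate in (0.99, 0.88, 0.75, 0.31):
    print(estimate, agreement_category(estimate))
```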

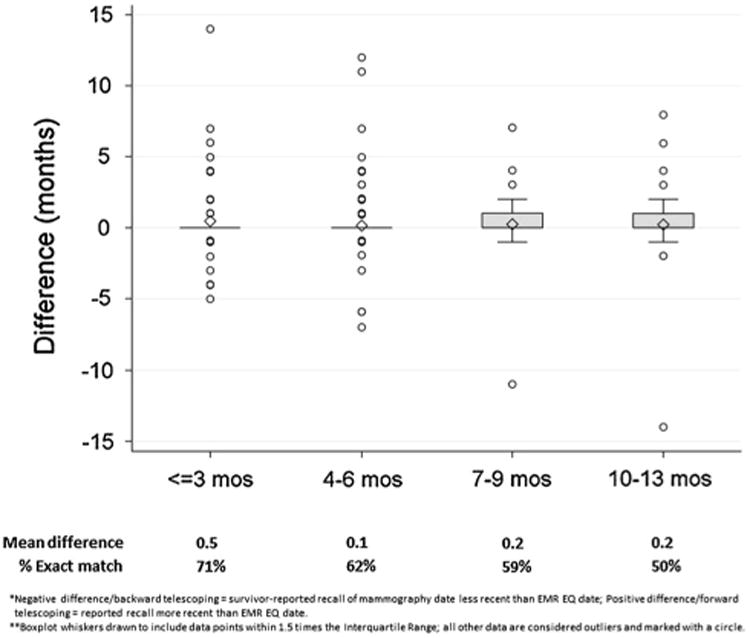

For the subset of women who reported a mammogram month and year in the 13 months prior to the survey date and had mammogram dates available through electronic query, we also computed the average difference in months between survivors' self-report and the EMR data to examine telescoping (survivors perceiving the mammography event to be more distant or more recent than it actually was) and stratified estimates by recency of the mammogram relative to the survey date [26].
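A minimal sketch of the month-difference calculation follows. The sign convention (self-report minus EMR, so that a positive value means the survivor recalled the mammogram as more recent than documented) is an assumption, as are the function names and the example dates, which are hypothetical.

```python
from datetime import date
from statistics import mean


def month_index(d: date) -> int:
    """Count months on a continuous scale so that differences cross year boundaries."""
    return d.year * 12 + d.month


def telescoping_months(self_report: date, emr: date) -> int:
    """Self-reported month minus EMR month.

    Positive: recalled as more recent than documented (forward telescoping).
    Negative: recalled as more distant than documented (backward telescoping).
    """
    return month_index(self_report) - month_index(emr)


# Hypothetical (self-report, EMR) date pairs, for illustration only
pairs = [
    (date(2011, 5, 1), date(2011, 5, 1)),   # exact match
    (date(2011, 2, 1), date(2010, 12, 1)),  # recalled 2 months too recent
    (date(2010, 11, 1), date(2010, 12, 1)), # recalled 1 month too distant
]
differences = [telescoping_months(sr, emr) for sr, emr in pairs]
print(differences, round(mean(differences), 2))
```

Stratifying by recency then amounts to grouping the pairs by the self-reported interval before the survey date and averaging the differences within each group.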

To evaluate correlates of concordance (Aim 3), we ran univariate logistic regression models with the concordance outcome variable comparing self-report to the EMR extraction method that yielded the most comprehensive mammography data.

Results

In our final analytic sample of 372 breast cancer survivors, most were over age 50, non-Hispanic White, married or living with a partner, and had private insurance or Medicare (Table 1). Based on survey data, 94.1 % of breast cancer survivors reported having a mammogram within 13 months of their survey date. The electronic query EMR extraction method identified a mammogram within 13 months of the survey date for 84.4 % of the survivors. Manual review identified a mammogram for 76.3 % within the same time frame; 5.6 % (n = 10) of the mammograms identified by manual review were only documented in the provider's progress note because the mammogram was conducted at a radiology facility external to the academic medical center. Concordance between the two extraction methods was 0.90 and kappa was 0.70. Among the subset who had mammography dates available through both methods (n = 287), the average difference between electronic query and manual review was 0.8 months (range −4.0 to 14.0 months); dates exactly matched 85 % of the time.

Table 1. Sociodemographic and healthcare utilization characteristics of breast cancer survivors who were recruited through an academic medical center's cancer registry and responded to a mailed survey, 2011 (N = 372).

| Characteristics | N (%) |
|---|---|
| Age (years) | |
| ≤49 | 35 (9.4) |
| 50–64 | 188 (50.5) |
| 65+ | 149 (40.1) |
| Race/ethnicity | |
| Hispanic | 33 (8.9) |
| NH White | 270 (72.6) |
| NH Black | 57 (15.3) |
| NH Other | 12 (3.2) |
| Married/live with partner (yes) | 243 (65.4) |
| Education* | |
| <HS/HS diploma/GED | 74 (19.8) |
| Some college/technical degree | 121 (32.5) |
| Bachelor's degree | 104 (27.9) |
| Graduate degree | 74 (19.8) |
| Insurance status* | |
| Private/VA | 244 (65.5) |
| Medicare/medigap | 86 (23.1) |
| Medicaid/state | 31 (8.4) |
| None | 11 (3.0) |
| Follow-up care in 2010/11 (yes) | 331 (89.0) |
| Provider type, 2010/2011 follow-up care | |
| No provider | 41 (11.0) |
| PCP only | 11 (3.0) |
| Oncologist only | 254 (68.3) |
| PCP and oncologist | 66 (17.7) |
| Years since diagnosis | |
| <5 years | 221 (59.4) |
| ≥5 years | 151 (40.6) |

NH Non-Hispanic, HS high school, GED general educational development, VA Veterans Affairs, PCP primary care physician

*Reduced sample size for married/live with partner (n = 370), education (n = 369), and insurance status (n = 368) due to missing data

Table 2 shows the point estimates and 95 % CIs for the five validity measures (concordance, sensitivity, specificity, positive predictive value, and report-to-records ratio) when comparing survivors' self-report to each of the EMR data extraction methods. Based on Tisnado et al.'s criteria and regardless of data extraction method, survivors' self-reports showed excellent sensitivity (both extraction methods: 0.99) and fair/good agreement for concordance and positive predictive value. However, specificity was poor (manual review: 0.20; electronic query: 0.31), and both report-to-records ratios were over 1. Collectively, the estimates for specificity, positive predictive value, and report-to-records ratio suggest a consistent pattern of over-reporting, with some survivors reporting a recent mammogram when there was none documented in the EMR.

Table 2. Summary validity measures of recent mammography surveillance among breast cancer survivors surveyed in 2011 by electronic medical record extraction method (N = 372).

| EMR extraction method | Self and EMR (A), n | Self only (B), n | EMR only (C), n | Neither (D), n | Concordance (95 % CI) | Sensitivity (95 % CI) | Specificity (95 % CI) | PPV (95 % CI) | Report-to-records ratio (95 % CI) |
|---|---|---|---|---|---|---|---|---|---|
| Manual review | 280 | 70 | 4 | 18 | 0.80 (0.76–0.84) | 0.99 (0.96–1.00) | 0.20 (0.13–0.30) | 0.80 (0.75–0.84) | 1.23 (1.10, 1.36) |
| Electronic query | 310 | 40 | 4 | 18 | 0.88 (0.84–0.91) | 0.99 (0.97–1.00) | 0.31 (0.20–0.45) | 0.89 (0.85–0.92) | 1.11 (1.00, 1.23) |

Validity measures were assessed using the following formulas: concordance = [(A + D)/(A + B + C + D)]; sensitivity = [A/(A + C)]; specificity = [D/(B + D)]; false positive probability = [B/(A + B)]; PPV = [A/(A + B)]; report-to-records ratio = [(A + B)/(A + C)]
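The formulas in the table footnote can be checked against the cell counts in Table 2 with a short Python sketch. The function name and dictionary keys are hypothetical; the counts A through D are those reported in the table.

```python
def validity_measures(a: int, b: int, c: int, d: int) -> dict:
    """Validity measures from the 2 x 2 cell counts.

    a: self-report and EMR both indicate a recent mammogram
    b: self-report only
    c: EMR only
    d: neither
    """
    total = a + b + c + d
    return {
        "concordance": (a + d) / total,
        "sensitivity": a / (a + c),
        "specificity": d / (b + d),
        "ppv": a / (a + b),
        "report_to_records": (a + b) / (a + c),
    }


manual_review = validity_measures(a=280, b=70, c=4, d=18)
electronic_query = validity_measures(a=310, b=40, c=4, d=18)

for label, measures in (("Manual review", manual_review), ("Electronic query", electronic_query)):
    print(label, {name: round(value, 2) for name, value in measures.items()})
```

Because only 22 survivors (C + D) did not self-report a recent mammogram, specificity rests on a small denominator relative to sensitivity, which is reflected in its wider confidence intervals.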

Figure 1 shows the difference in months between self-reported and EMR electronic query mammogram dates by recency of survivors' self-reported mammogram date relative to their survey date. Most survivors who reported a mammogram within 3 months or 4–6 months of the survey date had no difference with the EMR (71 and 62 %, respectively, had an exact month and year match). Survivors who reported a mammogram 7–13 months prior to the survey date had slight forward telescoping (i.e., they recalled the mammography date to be more recent than documented in the EMR).

Fig. 1. Difference in months between self-reported and EMR electronic query mammogram dates by recency of self-report relative to survey date among breast cancer survivors who reported a recent mammogram (N = 287).

Table 3 shows univariate analyses examining correlates of concordance between self-reported and EMR data. Education and recency of self-reported mammogram were associated with concordance. Survivors with a bachelor's degree were more likely to be concordant than survivors with a high school degree or less. Survivors who reported a mammogram 7–13 months prior or who did not provide the month of the mammogram were less likely to be concordant than those who reported a mammogram less than 3 months prior to the survey date.

Table 3. Univariate analyses examining correlates of concordance between self-report and EMR data extracted via electronic query (N = 372).

| Characteristics | Total, N | Self-report (SR) and EMR concordant, n (%) | Crude odds ratio (95 % CI) |
|---|---|---|---|
| Age (years) | | | |
| ≤49 | 35 | 33 (94.3) | 1.00 |
| 50–64 | 188 | 164 (87.2) | 0.41 (0.09, 1.84) |
| 65+ | 149 | 131 (87.9) | 0.44 (0.10, 2.00) |
| Race/ethnicity | | | |
| NH White | 270 | 243 (90.0) | 1.00 |
| NH Black | 57 | 46 (80.7) | 0.46 (0.22, 1.00) |
| Hispanic | 33 | 29 (87.9) | 0.81 (0.26, 2.47) |
| NH other | 12 | 10 (83.3) | 0.56 (0.12, 2.67) |
| Marital status* | | | |
| No | 128 | 115 (89.8) | 1.00 |
| Yes | 242 | 212 (87.6) | 0.80 (0.40, 1.59) |
| Education* | | | |
| <HS/high school/GED | 73 | 61 (83.6) | 1.00 |
| Some college/Technical degree | 120 | 104 (86.7) | 1.28 (0.57, 2.88) |
| Bachelor's degree | 103 | 97 (94.2) | **3.18 (1.13, 8.92)** |
| Graduate degree | 73 | 64 (87.7) | 1.40 (0.55, 3.56) |
| Insurance status* | | | |
| Private/VA | 241 | 215 (89.2) | 1.00 |
| Medicare/medigap | 85 | 73 (85.9) | 0.74 (0.35, 1.53) |
| Medicaid/state | 31 | 28 (90.3) | 1.13 (0.32, 3.97) |
| None | 11 | 9 (81.8) | 0.54 (0.11, 2.66) |
| Follow-up care in 2010–2011 | | | |
| No | 41 | 34 (82.9) | 1.00 |
| Yes | 331 | 294 (88.8) | 1.64 (0.68, 3.95) |
| Type of provider seen | | | |
| No provider | 41 | 34 (82.9) | 1.00 |
| PCP only | 11 | 7 (63.6) | 0.36 (0.08, 1.57) |
| Oncologist only | 254 | 231 (90.9) | 2.07 (0.82, 5.19) |
| PCP and oncologist | 66 | 56 (84.8) | 1.15 (0.40, 3.31) |
| Years since diagnosis | | | |
| <5 years | 221 | 196 (88.7) | 1.00 |
| ≥5 years | 151 | 132 (87.4) | 0.89 (0.47, 1.67) |
| Recency of self-reported mammogram | | | |
| ≤3 months | 155 | 143 (92.3) | 1.00 |
| 4–6 months | 114 | 103 (90.4) | 0.79 (0.33, 1.85) |
| 7–9 months | 42 | 33 (78.6) | **0.31 (0.12, 0.79)** |
| 10–13 months | 27 | 21 (77.8) | **0.29 (0.10, 0.87)** |
| >13 months | 17 | 15 (88.2) | 0.63 (0.13, 3.08) |
| No mammogram month reported | 17 | 13 (76.5) | **0.27 (0.08, 0.97)** |

Bolded odds ratio and estimates indicate significant associations (P < 0.05)

*Missing data for n = 2 (marital status), n = 3 (education), and n = 4 (insurance) survivors, respectively
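For a single categorical predictor, the crude odds ratio from a univariate logistic regression equals the cross-product ratio of the corresponding 2 × 2 counts, and a Wald interval can be formed on the log scale. The sketch below illustrates this with the education counts from Table 3; the function name is hypothetical, and the Wald interval is an assumption about how the reported CIs were obtained.

```python
import math


def crude_odds_ratio(conc_group: int, disc_group: int, conc_ref: int, disc_ref: int,
                     z: float = 1.959964):
    """Odds ratio of concordance (group vs reference) with a Wald 95 % CI."""
    odds_ratio = (conc_group / disc_group) / (conc_ref / disc_ref)
    # Standard error of the log odds ratio from the four cell counts
    se_log_or = math.sqrt(1 / conc_group + 1 / disc_group + 1 / conc_ref + 1 / disc_ref)
    log_or = math.log(odds_ratio)
    return odds_ratio, math.exp(log_or - z * se_log_or), math.exp(log_or + z * se_log_or)


# Bachelor's degree: 97 of 103 concordant; reference (<HS/high school/GED): 61 of 73 concordant
estimate, lower, upper = crude_odds_ratio(97, 103 - 97, 61, 73 - 61)
print(f"OR = {estimate:.2f} ({lower:.2f}, {upper:.2f})")
```

A function like this requires non-zero counts in all four cells; categories with an empty cell would need a different approach.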

Discussion

Many studies have considered the accuracy of mammography self-report among the general population [16, 17], but few have looked specifically at breast cancer survivors [18]. Surveillance guidelines for breast cancer survivors recommend more frequent mammograms than for women at average risk for breast cancer; thus, data on the accuracy of survivors' self-reports are needed to estimate bias in survey-based screening adherence estimates [16]. Similar to studies among average-risk women [16, 17], we found excellent agreement between survivors' reports and the EMR for sensitivity and fair/good agreement for concordance and positive predictive value; but poor agreement for specificity. Concordance and positive predictive value estimates were slightly higher using the electronic query versus manual review EMR extraction method, suggesting more complete extraction of mammograms using the electronic query method.

Concordance between the two extraction methods (manual review and electronic query) was excellent. Manual review involved using the EpicCare user interface to abstract dates from three locations in the survivor's EMR (imaging, progress notes of encounters, and media reports); we found documentation of mammograms received at an outside facility for only ten survivors. The electronic query, in contrast, involved extracting the same clinical tables as from the manual review in addition to extracting tables used to bill for services. Therefore, the electronic query cast a wider net, and was able to search for data that even trained reviewers cannot view while logged into the EpicCare user interface. When done correctly and thoroughly, electronic query of the EMR database could yield more complete results than the historical gold standard of manual review. Given the speed and cost savings from the electronic query method, our findings suggest that electronic query is the best extraction method for EMR databases that access both care delivery and billing data tables.

For clinicians treating breast cancer survivors, accurate self-reports of mammography are especially important. Currently, guidelines do not specify whether oncologists or primary care physicians should have primary responsibility for promoting mammography surveillance [2–4, 27], and there is evidence that many survivors seek care from both types of physicians [27, 28]. As such, oncologists and primary care physicians, who have unlinked EMRs and need to coordinate care, may find it challenging to verify mammography receipt and may rely on survivor self-report. Our findings of good agreement between survivor self-reported mammography and EMR (regardless of extraction method) should help clinicians feel comfortable trusting reports from breast cancer survivors, particularly those who report having a mammogram in the past 6 months. For these patients, the concordance is excellent. Concordance rates between survivors' reports and the EMR are lowest among those reporting a mammogram 7–12 months prior to the survey date and those who did not report a date. Thus, clinicians seeing new patients who report a mammography date more than 6 months ago may want to verify mammography dates by contacting radiology facilities.

Our findings of poor specificity are similar to, but more pronounced than, those of studies among average-risk women [16, 17]. This indicates that some survivors are either falsely reporting a recent mammogram or may have had a mammogram that was not reflected in the EMR (e.g., at another radiology facility). Figure 1 suggests forward telescoping among survivors reporting mammogram dates 7–12 months prior to the survey date (i.e., some women are reporting that their mammogram was more recent in time than it actually was). Forward telescoping and over-reporting may occur because survivors perceive mammograms to be socially desirable [29]. Our validity analyses assumed that the EMR, regardless of the extraction method, is the gold standard; yet it is possible that the EMR may not be up-to-date, particularly for mammograms received outside of the treating facility.

Breast cancer survivors, in general, and those represented in this study, use mammography at higher rates than women in the general population. In our sample, the EMR indicated that more than 80 % of the women were adherent with mammography surveillance guidelines. This high adherence rate may be a product of treatment in an academic medical center, or the fact that over two-thirds of the women report being followed by their oncologist. Although practice guidelines recommend annual surveillance mammography [2–5], many survivors are not adherent; this is particularly true of older survivors [6, 8] and those seen by primary care physicians [27, 30].

The following study limitations should be considered. Breast cancer survivors who participated in this study are all associated with an academic medical center; thus, they may not be representative of survivors treated in community practice settings. Also, the hospital and outpatient clinics (oncology, radiology, internal medicine, and family practice) share the same EMR system; thus, survivors may be more likely to have a mammogram at an internal facility and documentation of mammography in the EMR may be more thorough in this type of vertically integrated healthcare system. While EpicCare is the most widely used EMR system across the US, there are several other competitors and they may store mammography data differently. Future studies should examine the accuracy of survivors' reports and adherence rates in community practice settings using different EMR systems and with more ethnically diverse survivor populations.

In conclusion, the accuracy of breast cancer survivors' self-reported mammograms was generally high with suggestions of some slight forward telescoping among those recalling their mammograms between 7 and 12 months prior to the survey date. Results are encouraging for clinicians and practitioners who rely on survivor reports for surveillance care delivery and as a screening tool for inclusion in interventions promoting adherence to surveillance guidelines.

Acknowledgments

This study was funded by the Moncrief Cancer Institute. The content is solely the responsibility of the authors and does not necessarily represent the official view of the Moncrief Cancer Institute.

Abbreviations

- EMR: Electronic medical record

- RA: Research assistant

- CI: Confidence interval

Footnotes

Conflict of interest: The authors declare that they have no conflicts of interest.

References

- 1. Surveillance, Epidemiology, and End Results Program, National Cancer Institute. SEER Stat Fact Sheets: Breast Cancer. National Cancer Institute; 2014. [Accessed 29, 2014]. http://seer.cancer.gov/statfacts/html/breast.html.

- 2. Khatcheressian JL, Hurley P, Bantug E, Esserman LJ, Grunfeld E, Halberg F, Hantel A, Henry NL, Muss HB, Smith TJ, Vogel VG, Wolff AC, Somerfield MR, Davidson NE. Breast cancer follow-up and management after primary treatment: American Society of Clinical Oncology clinical practice guideline update. J Clin Oncol. 2013;31:961–965. doi: 10.1200/JCO.2012.45.9859.

- 3. Khatcheressian JL, Wolff AC, Smith TJ, Grunfeld E, Muss HB, Vogel VG, Halberg F, Somerfield MR, Davidson NE. American Society of Clinical Oncology 2006 update of the breast cancer follow-up and management guidelines in the adjuvant setting. J Clin Oncol. 2006;24:5091–5097. doi: 10.1200/JCO.2006.08.8575.

- 4. Bevers TB, Anderson BO, Bonaccio E, Buys S, Daly MB, Dempsey PJ, Farrar WB, Fleming I, Garber JE, Harris RE, Heerdt AS, Helvie M, Huff JG, Khakpour N, Khan SA, Krontiras H, Lyman G, Rafferty E, Shaw S, Smith ML, Tsangaris TN, Williams C, Yankeelov T. NCCN clinical practice guidelines in oncology: breast cancer screening and diagnosis. J Natl Compr Cancer Netw. 2009;7:1060–1096. doi: 10.6004/jnccn.2009.0070.

- 5. Smith TJ, Davidson NE, Schapira DV, Grunfeld E, Muss HB, Vogel VG, III, Somerfield MR. American Society of Clinical Oncology 1998 update of recommended breast cancer surveillance guidelines. J Clin Oncol. 1999;17:1080–1082. doi: 10.1200/JCO.1999.17.3.1080.

- 6. Carcaise-Edinboro P, Bradley CJ, Dahman B. Surveillance mammography for Medicaid/Medicare breast cancer patients. J Cancer Surviv. 2010;4:59–66. doi: 10.1007/s11764-009-0107-0.

- 7. Doubeni CA, Field TS, Ulcickas YM, Rolnick SJ, Quessenberry CP, Fouayzi H, Gurwitz JH, Wei F. Patterns and predictors of mammography utilization among breast cancer survivors. Cancer. 2006;106:2482–2488. doi: 10.1002/cncr.21893.

- 8. Field TS, Doubeni C, Fox MP, Buist DS, Wei F, Geiger AM, Quinn VP, Lash TL, Prout MN, Yood MU, Frost FJ, Silliman RA. Under utilization of surveillance mammography among older breast cancer survivors. J Gen Intern Med. 2008;23:158–163. doi: 10.1007/s11606-007-0471-2.

- 9. Elston Lafata J, Simpkins J, Schultz L, Chase GA, Johnson CC, Yood MU, Lamerato L, Nathanson D, Cooper G. Routine surveillance care after cancer treatment with curative intent. Med Care. 2005;43:592–599. doi: 10.1097/01.mlr.0000163656.62562.c4.

- 10. Geller BM, Kerlikowske K, Carney PA, Abraham LA, Yankaskas BC, Taplin SH, Ballard-Barbash R, Dignan MB, Rosenberg R, Urban N, Barlow WE. Mammography surveillance following breast cancer. Breast Cancer Res Treat. 2003;81:107–115. doi: 10.1023/A:1025794629878.

- 11. Breslau ES, Jeffery DD, Davis WW, Moser RP, McNeel TS, Hawley S. Cancer screening practices among racially and ethnically diverse breast cancer survivors: results from the 2001 and 2003 California health interview survey. J Cancer Surviv. 2010;4:1–14. doi: 10.1007/s11764-009-0102-5.

- 12. Duffy CM, Clark MA, Allsworth JE. Health maintenance and screening in breast cancer survivors in the United States. Cancer Detect Prev. 2006;30:52–57. doi: 10.1016/j.cdp.2005.06.012.

- 13. Katz ML, Donohue KA, Alfano CM, Day JM, Herndon JE, Paskett ED. Cancer surveillance behaviors and psychosocial factors among long-term survivors of breast cancer. Cancer and Leukemia Group B 79804. Cancer. 2009;115:480–488. doi: 10.1002/cncr.24063.

- 14. Vernon SW, Tiro JA, Meissner HI. Behavioral research in cancer screening. In: Miller SM, Bowen DJ, Croyle RT, Rowland JH, editors. Handbook of cancer control and behavioral science: a resource for researchers, practitioners, and policymakers. American Psychological Association; Washington, DC: 2008. pp. 255–278.

- 15. Vernon SW, Briss PA, Tiro JA, Warnecke RB. Some methodologic lessons learned from cancer screening research. Cancer. 2004;101:1131–1145. doi: 10.1002/cncr.20513.

- 16. Rauscher GH, Johnson TP, Cho YI, Walk JA. Accuracy of self-reported cancer-screening histories: a meta-analysis. Cancer Epidemiol Biomark Prev. 2008;17:748–757. doi: 10.1158/1055-9965.EPI-07-2629.

- 17. Howard M, Agarwal G, Lytwyn A. Accuracy of self-reports of Pap and mammography screening compared to medical record: a meta-analysis. Cancer Causes Control. 2009;20:1–13. doi: 10.1007/s10552-008-9228-4.

- 18. Norman SA, Localio AR, Zhou L, Bernstein L, Coates RJ, Flagg EW, Marchbanks PA, Malone KE, Weiss LK, Lee NC, Nadel MR. Validation of self-reported screening mammography histories among women with and without breast cancer. Am J Epidemiol. 2003;158:264–271. doi: 10.1093/aje/kwg136.

- 19. Armstrong K, Long JA, Shea JA. Measuring adherence to mammography screening recommendations among low-income women. Prev Med. 2004;38:754–760. doi: 10.1016/j.ypmed.2003.12.023.

- 20. Tang PC, Ralston M, Arrigotti MF, Qureshi L, Graham J. Comparison of methodologies for calculating quality measures based on administrative data versus clinical data from an electronic health record system: implications for performance measures. J Am Med Inform Assoc. 2007;14:10–15. doi: 10.1197/jamia.M2198.

- 21. Hsiao CJ, Burt CW, Rechtsteiner E, Hing E, Woodwell DA, Sisk JE. Preliminary estimates of electronic medical record use by office-based physicians: United States, 2008. 2008 http://www.cdc.gov/nchs/products/pubs/pubd/hestats/hestats.htm.

- 22. Blumenthal D. Stimulating the adoption of health information technology. N Engl J Med. 2009;360:1477–1479. doi: 10.1056/NEJMp0901592.

- 23. Blumenthal D. Implementation of the federal health information technology initiative. N Engl J Med. 2011;365:2426–2431. doi: 10.1056/NEJMsr1112158.

- 24. Carpentier MY, Tiro JA, Savas LS, Bartholomew LK, Melhado TV, Coan SP, Argenbright KE, Vernon SW. Are cancer registries a viable tool for cancer survivor outreach? A feasibility study. J Cancer Surviv. 2013;7:155–163. doi: 10.1007/s11764-012-0259-1.

- 25. Tisnado DM, Adams JL, Liu H, Damberg CL, Chen WP, Hu FA, Carlisle DM, Mangione CM, Kahn KL. What is the concordance between the medical record and patient self-report as data sources for ambulatory care? Med Care. 2006;44:132–140. doi: 10.1097/01.mlr.0000196952.15921.bf.

- 26. Zapka JG, Bigelow C, Hurley T, Ford LD, Egelhofer J, Cloud WM, Sachsse E. Mammography use among sociodemographically diverse women: the accuracy of self-report. Am J Public Health. 1996;86:1016–1021. doi: 10.2105/ajph.86.7.1016.

- 27. Hollowell K, Olmsted CL, Richardson AS, Pittman HK, Bellin L, Tafra L, Verbanac KM. American Society of Clinical Oncology-recommended surveillance and physician specialty among long-term breast cancer survivors. Cancer. 2010;116:2090–2098. doi: 10.1002/cncr.25038.

- 28. Cheung WY, Neville BA, Cameron DB, Cook EF, Earle CC. Comparisons of patient and physician expectations for cancer survivorship care. J Clin Oncol. 2009;27:2489–2495. doi: 10.1200/JCO.2008.20.3232.

- 29. Johnson TP, O'Rourke DP, Burris JE, Warnecke RB. An investigation of the effects of social desirability on the validity of self-reports of cancer screening behaviors. Med Care. 2005;43:565–573. doi: 10.1097/01.mlr.0000163648.26493.70.

- 30. Snyder CF, Frick KD, Kantsiper ME, Peairs KS, Herbert RJ, Blackford AL, Wolff AC, Earle CC. Prevention, screening, and surveillance care for breast cancer survivors compared with controls: changes from 1998 to 2002. J Clin Oncol. 2009;27:1054–1061. doi: 10.1200/JCO.2008.18.0950.