Abstract

Background

One of the major debates in implementation research revolves around fidelity and adaptation. Fidelity is the degree to which an intervention is implemented as intended by its developers; it is meant to ensure that the intervention maintains its intended effects. Adaptation is the process by which implementers or users make changes to the original design of an intervention. Depending on the nature of the modifications, adaptation can be beneficial or can threaten the theoretical basis of the intervention, with negative effects on expected outcomes. Adaptive interventions are those for which adaptation is allowed or even encouraged. Classical fidelity dimensions and conceptual frameworks do not address how to adapt an intervention while maintaining its effectiveness.

Discussion

We support the idea that fidelity and adaptation co-exist and that adaptations can have either positive or negative effects on the intervention’s effectiveness. For adaptive interventions, research should answer the question of how an adequate fidelity-adaptation balance can be reached. One way to address this issue is by looking systematically at the aspects of an intervention that are being adapted. We conducted fidelity research on the implementation of an empowerment strategy for dengue prevention in Cuba. In view of the adaptive nature of the strategy, we anticipated that the classical fidelity dimensions would be of limited use for assessing adaptations. The typology we used in the assessment (implemented, not-implemented, modified, or added components of the strategy) also had limitations: it did not allow us to determine which of the modifications introduced in the strategy contributed to, or detracted from, outcomes. We compared our empirical research with the existing literature on fidelity and, as a result, concluded that the framework for implementation fidelity proposed by Carroll et al. in 2007 could potentially address our concerns. We propose modifications to the framework to assess both fidelity and adaptation.

Summary

The modified version of Carroll et al.’s framework that we propose may permit a comprehensive assessment of the implementation fidelity-adaptation balance required when implementing adaptive interventions, but more empirical research is needed to validate it.

Keywords: Implementation, Fidelity, Adaptation, Reinvention, Adaptive interventions, Conceptual framework, Translating research, Cuba

Background

Some authors argue that intervention research is a set of sequential studies (i.e., efficacy, effectiveness, and dissemination) that provide different kinds of evidence about an intervention [1, 2]. Rychetnik et al. [3], building on Nutbeam and Bauman [4], described how to proceed when building evidence for innovative interventions: assessing the process and outcomes/impact of the intervention (intervention testing); determining whether similar outcomes can be reproduced when the intervention is adapted to other settings or populations (intervention replication); and examining real population outcomes and public health benefits (intervention dissemination). Replication and dissemination studies are rooted in the assumption that evidence-based interventions can be translated to new settings, and they hinge on implementation issues.

Implementation is a specific set of purposeful processes and activities designed to put into practice an intervention or program of known dimensions [5]. It needs to be measured with outcomes that are conceptually and empirically distinct from those used to assess intervention effectiveness [5–7]. Distinguishing between “implementation” and “intervention” outcomes is critical: when translation efforts fail, it helps to determine whether the failure occurred because the intervention was ineffective (intervention failure) or because it was deployed incorrectly (implementation failure) [7].

Translating evidence-based health interventions has given rise to one of the major dilemmas in implementation research: fidelity versus adaptation [8, 9]. Fidelity, the degree to which an intervention is implemented as intended by its developers [8–14], is an implementation outcome [7] that is meant, in particular, to ensure that the intervention maintains its intended effects [8–14]. Adaptation, by contrast, is the process by which implementers or users make changes to the original design of an intervention [9, 11, 12]. Fidelity and adaptation are closely linked but remain opposing concepts. The higher the level of fidelity achieved, the fewer the changes made to the original design of an intervention. Conversely, the more an intervention is adapted, the more likely it is that fidelity will be threatened.

Several studies have demonstrated that the fidelity with which an intervention is implemented affects its effectiveness [8–15]. Hence, achieving high fidelity has been the overriding concern of many researchers who struggle to move from efficacy studies to real-world implementation, particularly in the field of pharmacological and psychosocial interventions designed to treat specific health problems [16–18]. In practice, however, the adaptation of interventions has been the rule rather than the exception [9, 10]. Moreover, some authors have argued that certain interventions might need to be adapted in the course of their implementation [9, 11, 12]. This is the case for adaptive interventions, which we define as interventions for which stakeholders are allowed, or even encouraged, to make changes to the original design. This definition includes adaptive interventions as defined by Collins et al. [19] and Nahum-Shani et al. [20], in which pre-defined changes are allowed by the intervention developers. For this type of adaptive intervention, fidelity is important to ensure that the pre-defined adaptations occurred as intended. However, adaptive interventions also include changes that are not pre-defined and that originate from implementers. Such changes occur more often in complex public health interventions that involve different organizational levels and target collective behaviors, and in which control of the implementation process by the intervention developers is neither possible nor even desirable, than in interventions targeting individuals with different needs.

Examples of such adaptive interventions are empowerment strategies for disease prevention and control. Empowerment is a process through which individuals, groups, and communities are provided with the capabilities to take power over decisions that affect their lives [21, 22]. Because promoting participation in decision-making is central to these strategies, there is a high degree of uncertainty as to what will be planned and/or achieved. In all cases, depending on the nature of the modifications made to the original design of an intervention, adaptation can be beneficial or can threaten the theoretical basis of the intervention, with negative effects on expected outcomes [8, 12].

While five dimensions (adherence, dose, quality of delivery, participant responsiveness, and program differentiation) have been put forward and are commonly used to measure fidelity [8–15], there has been little research or practical advice on how to adapt an intervention while maintaining its effective ingredients and mechanisms [12]. On the basis of a critical systematic review of existing conceptualizations of implementation fidelity, Carroll et al. [15] proposed a conceptual framework for understanding and measuring this concept. They acknowledged that adaptations are likely to occur in real-world implementation, but the question of how to address this issue when measuring fidelity remained unanswered.

In this debate paper, we argue that for adaptive interventions, the issue of fidelity cannot be addressed independently of the issue of adaptation, as both concepts are intrinsically linked. We then propose a modified version of Carroll et al.’s framework for implementation fidelity, bringing together the literature on fidelity and results from our empirical research in this field, to provide a better fit for adaptive interventions.

Carroll et al.’s conceptual framework for implementation fidelity

As previously stated, over the last decade and a half, implementation fidelity has been described and defined in terms of five dimensions that need to be measured: adherence (program implementation as described); dose (frequency and duration of exposure to the program); quality of delivery (manner in which the program is delivered); participant responsiveness (degree to which participants are engaged); and program differentiation (critical features that distinguish the program) [8–14]. While some authors argue that each of these dimensions is an alternative way to measure fidelity, it has also been argued that a comprehensive picture of fidelity requires measuring all five dimensions [15].

Carroll et al. [15] proposed their conceptual framework in an attempt to “attribute meaning” to the concept of fidelity, but also to clarify and explain the function of each of the five classical fidelity dimensions and their relationship to one another. They also included two additional elements suggested by a broader literature review on diffusion of innovations and on implementation fidelity: intervention complexity and facilitation strategies, the latter being strategies put in place to optimize the level of fidelity achieved.

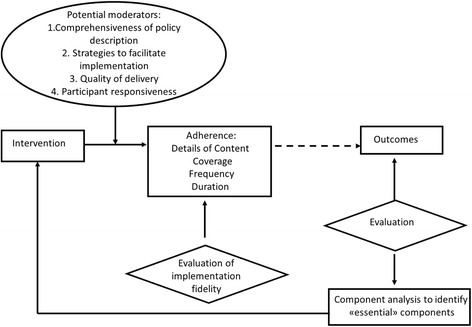

For Carroll et al. [15], “the measurement of implementation fidelity is the measurement of adherence, i.e., how far those responsible for delivering an intervention actually adhere to the intervention as it is outlined by its designers. Adherence includes the subcategories of content, frequency, duration and coverage (i.e., dose). The degree to which the intended content or frequency of an intervention is implemented is the degree of implementation fidelity achieved for that intervention. The level achieved may be influenced or affected, (i.e., moderated) by certain other variables: intervention complexity, facilitation strategies, quality of delivery, and participant responsiveness” (Fig. 1). The broken line in Fig. 1 indicates that the relationship between an intervention and its outcomes is external to implementation fidelity, but that the degree of implementation fidelity achieved can affect this relationship.

Fig. 1.

Conceptual framework for implementation fidelity proposed by Carroll et al. in 2007

According to Carroll et al. [15], in the real world, an intervention cannot always be fully implemented as planned. An intervention may nevertheless be implemented successfully and meaningfully if only its essential components are implemented. An analysis of outcomes may help to identify the components that are essential if the intervention is to maintain its intended effects. Outcome evaluation may also inform the content of the intervention by determining the minimum requirements for high implementation fidelity, i.e., the essential components.

Carroll et al. [15] stated that identifying essential components provides scope for adaptation but that the question of how to identify what is essential remains unanswered. They suggested that one possible way to identify what is essential would be to conduct sensitivity or component analyses using implementation fidelity data and performance outcomes from different studies of the same intervention.

The implementation process and the need for adaptation

Dusenbury et al. [8] reviewed research on fidelity of implementation published over a 25-year period in different fields (e.g., mental health, prevention of psychopathology, personal and social competence promotion, education, drug abuse treatment and prevention). In this review, the authors discussed the tensions between fidelity of implementation and the need for adaptation [8]. They concluded that research had not yet indicated whether, and under what conditions, adaptation might enhance program outcomes, and under which conditions it results in a loss of effectiveness.

Although there is general agreement that adaptation entails making changes to the original design of an intervention [9–15, 23–26], there is no common definition of adaptation [9]. Restrictive definitions co-exist with broader understandings. In restrictive definitions, adaptations are limited to tailoring interventions to local contexts and circumstances [5, 19, 20]. In a broader sense, adaptation is the result of the cognitive processes that occur while implementers or potential users struggle to give meaning to an intervention during its implementation [23]. In this understanding of the term, which originates with diffusion of innovations scholars, this specific type of adaptation, which goes beyond simple tailoring, is often called reinvention. We situate ourselves in this line of thinking.

Rogers [23], in his well-articulated diffusion of innovations theory, brings together central concepts and issues regarding widespread implementation (e.g., dissemination, replication, sustainability, institutionalization, routinization, and fidelity) that have been discussed in the wider literature [27–31]. His theory is built on observations of regularities and patterns in the diffusion of a wide range of innovations (i.e., ideas, practices, or objects perceived as new by their potential individual and organizational users) in different cultural contexts and with different users, as well as on a theoretical reflection on the issue that extends over decades.

Diffusion of innovations theory challenges two assumptions of early diffusion studies: that an innovation is invariant and does not change throughout the diffusion process, and that potential users are passive subjects who implement an innovation with fidelity, i.e., just as intended by its developers. In Rogers’ view [23], reinvention (i.e., a specific type of adaptation), the degree to which an innovation is changed or modified by a user, occurs at the implementation stage for many potential users and leads to faster and more sustainable adoption of the innovation.

Bauman, Stein, and Ireys [25] introduced the idea of program “uniqueness,” referring to the specific and unusual conditions under which programs are created, which are not present in actual implementation. This idea brings us back to an understanding of “translating” evidence-based interventions as the art of achieving an equivalent rather than a literal copy [2, 32]. Backer [11] argued that adapting some features of innovative interventions is inevitable, and even desirable, to maintain the theory-based outcomes. First, the inability to modify programs may produce resistance among users. Second, a rigid position regarding fidelity can lead to innovations that are irrelevant or even inappropriate for certain users. Backer [11] also states that interventions that are flexible and can be adapted have a better chance of fitting a wider range of users. Certain users may have an aversion to simply “copying” interventions and press for recognition of their adaptations [11].

Furthermore, according to some authors [11, 33], program fidelity is underpinned by a professionally driven or “top-down” approach to implementation, while adaptation seems closer to a user-based or “bottom-up” approach, which is more politically appealing to promoters of social development. Consequently, some authors state that a certain amount of adaptation is needed to achieve the user involvement and ownership required for the successful implementation of some innovations [34, 35]. This is particularly relevant for adaptive interventions, which require emphasizing the processes that allow them to be modified and revised as they interact with the institutional setting and context [36]. This connects with the idea of mutual adaptation, i.e., adaptation of both the intervention and the host organization [11, 36–38], since organizational changes will need to occur within the institutional setting to accommodate the intervention [5].

Beyond the lack of agreement in the literature on how to define adaptation, there is also no consensus on how to operationalize these concepts [9]. Still, some typologies have been proposed [11, 24, 39].

Adaptations can be deliberate or accidental and include (1) additions of new components; (2) deletions of an intervention component, or modifications so radical that it no longer resembles the original one; and (3) minor or major modifications to an existing intervention component [11, 24] (e.g., changes in the nature of program components, in the manner or intensity of administration, or cultural modifications required by local circumstances [11]). According to Rebchook et al. [24], these three kinds of adaptation have, in theory, different implications for fidelity. When something new is added, fidelity can easily be maintained. When a component is removed or radically modified, fidelity is threatened. In the third case, fidelity may or may not be affected, depending on what the modifications entail. More recently, Stirman et al. [39] proposed a detailed framework for classifying modifications made to evidence-based programs or interventions. It encompasses what is modified, by whom, at which level of delivery, contextual modifications, and the nature of the modifications introduced in the content of the intervention.
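The three adaptation types and the theoretical implications Rebchook et al. attach to them can be written down as a small lookup table, for instance when coding adaptations recorded in process documentation. This is a minimal Python sketch; the names `AdaptationType`, `FIDELITY_IMPLICATION`, and `implication` are our own, and the mapping encodes Rebchook et al.’s theoretical claim rather than an empirical rule.

```python
from enum import Enum

class AdaptationType(Enum):
    """Adaptation types as summarized from Backer [11] and Rebchook et al. [24]."""
    ADDITION = "addition of a new component"
    DELETION_OR_RADICAL = "deletion or radical modification of a component"
    MODIFICATION = "minor or major modification of an existing component"

# Theoretical implication for fidelity according to Rebchook et al. [24].
# This records their claim; it is not a tested rule.
FIDELITY_IMPLICATION = {
    AdaptationType.ADDITION: "fidelity can easily be maintained",
    AdaptationType.DELETION_OR_RADICAL: "fidelity is threatened",
    AdaptationType.MODIFICATION: "may or may not affect fidelity, depending on what it entails",
}

def implication(adaptation: AdaptationType) -> str:
    """Look up the theoretical implication of an adaptation type for fidelity."""
    return FIDELITY_IMPLICATION[adaptation]

print(implication(AdaptationType.DELETION_OR_RADICAL))
```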

Authors in the literature deal with adaptation in very different ways. Bellg and colleagues do not consider adaptation and are exclusively concerned with documenting, monitoring, and enhancing fidelity [16–18]. For some authors, such as Collins, Murphy, and Bierman [19], adaptations of intervention options (e.g., dosage) can only be decided upon by intervention developers and follow a sequence of decision rules that recommend when and how the intervention should be modified, with the aim of optimizing long-term effectiveness. Other authors [5, 40, 41] support the idea that interventions should first be implemented with fidelity before adaptation is allowed, in order to discriminate between desirable and undesirable changes. Finally, Carroll et al. [15] consider adaptation likely to occur but argue that, as long as the essential components are not known, fidelity to the whole intervention is required.

We support the idea that fidelity and adaptation co-exist [23]. From our point of view, adaptations can either strengthen or threaten the intervention’s underlying theory of change [42] and thus have positive or negative effects on effectiveness. For adaptive interventions, research should answer the question of how an adequate fidelity-adaptation balance can be reached. One way to address this issue is by looking systematically at the aspects of an intervention that are being adapted [12].

Our empirical research: fidelity-adaptation balance in the implementation of an empowerment strategy

Dengue is a vector-borne disease transmitted by Aedes mosquitoes infected with one of the four dengue viruses [43, 44]. A mild episode of the disease can evolve into a severe and potentially fatal hemorrhagic illness [45]. The disease is of growing public health importance in tropical and subtropical areas [46]. All currently available control methods target the Aedes mosquito, and it is nearly impossible to implement them without community acceptance or active involvement [47–50].

Empowerment strategies have been reported as effective for community-based dengue prevention and control in Cuba [51–55] and elsewhere [56–58]. Still, empowerment strategies remain controversial due to transferability and scalability issues [59], and evidence available in the literature on participatory implementation processes is scarce [60].

In the Cuban context, we assessed the implementation fidelity of an evidence-based empowerment strategy aimed at community involvement in decision-making on dengue vector control activities when it was replicated at an intermediate scale [61]. The empowerment strategy was developed by researchers from the Pedro Kourí Institute of Tropical Medicine (IPK) in Havana City and was defined in terms of components and subcomponents. The components were capacity building, organization and management, community work, and surveillance.

The strategy was implemented between October 2004 and December 2007 in 16 communities (circumscriptions) randomly selected within three People’s Councils (PCs) in La Lisa municipality of Havana City [55]. The circumscription is the lowest level of local government and covers about 1000 inhabitants. The PCs are intermediate government structures between the municipalities and the circumscriptions. Circumscriptions and PCs were heterogeneous in terms of, among other things, the socio-demographic composition of the population, previous experiences with participation, characteristics of the leadership, and the resources and dynamics of the local government.

In view of the adaptive nature of the strategy, we anticipated that the classical fidelity dimensions would be of limited use for assessing adaptations. We therefore opted to assess fidelity and adaptation in the implementation of the strategy using Rebchook et al.’s [24] typology, classifying components and subcomponents at circumscription level as implemented, not implemented, modified, or added.

A three-step assessment was conducted [61]: (1) an individual evaluation by three strategy developers who had been involved as facilitators in the implementation of the strategy, based on an analysis of the proceedings and minutes of capacity-building workshops and of process documentation forms filled in by implementers (i.e., implementation descriptors of the components and subcomponents of the strategy for each circumscription); (2) a discussion of these evaluations by a broader group of six strategy developers (the three from the first step and three others). If all six agreed that a component or subcomponent had been implemented as intended in a given circumscription, it was classified as implemented; if all six agreed that it had not been implemented, it was classified as not implemented; and if any of them judged that it had been modified, it was classified as modified. Added activities were also identified; and (3) a consensual refinement of the assessment following its discussion with implementers in a participatory evaluation workshop.
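The step-2 decision rule can be expressed as a small function. This is a sketch under assumptions: the function name `consensus_status` and the status labels are hypothetical, and the text does not say how mixed judgments without any “modified” vote (e.g., three “implemented” and three “not implemented”) were handled, so the sketch returns a placeholder label, `"unresolved"`, on the assumption that such cases were settled in the step-3 workshop.

```python
from typing import Iterable

IMPLEMENTED = "implemented"
NOT_IMPLEMENTED = "not implemented"
MODIFIED = "modified"
UNRESOLVED = "unresolved"  # hypothetical label for patterns the text does not cover

def consensus_status(judgments: Iterable[str]) -> str:
    """Classify one (sub)component in one circumscription from the panel's judgments.

    Rules from step 2: a single 'modified' judgment is enough for 'modified';
    'implemented' and 'not implemented' require unanimity.
    """
    votes = list(judgments)
    if not votes:
        raise ValueError("at least one judgment is required")
    if MODIFIED in votes:
        return MODIFIED
    if all(v == IMPLEMENTED for v in votes):
        return IMPLEMENTED
    if all(v == NOT_IMPLEMENTED for v in votes):
        return NOT_IMPLEMENTED
    return UNRESOLVED  # assumed to be settled in the step-3 participatory workshop

# Six hypothetical judgments: five "implemented" and one "modified".
print(consensus_status([IMPLEMENTED] * 5 + [MODIFIED]))  # modified
```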

Qualitative data obtained from the three-step assessment were transformed into quantitative data [62]. Frequencies of not-implemented, modified, and implemented subcomponents were tabulated across all circumscriptions, and averages were calculated for each of the four components. To explore the relationships among the components, not-implemented, modified, and implemented components of the strategy were scored 0, 1, and 2, respectively, and the scores were summed for each circumscription. In addition, semi-structured interviews were conducted with 13 implementers and analyzed deductively to identify possible explanations for the observed variation in the implementation of the strategy. The assessment was conducted retrospectively, as part of the final evaluation of the replication of the strategy. Table 1 describes the empowerment strategy for dengue vector control carried out in La Lisa municipality by component and subcomponent, together with the number of circumscriptions that implemented each subcomponent as intended, modified it, or did not implement it.
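The 0/1/2 scoring can be sketched as follows. The text does not state whether scores were summed over the subcomponents of each component or over all components; this sketch assumes per-component sums of subcomponent scores for one circumscription and also reports the overall total. The circumscription data and the function name `component_scores` are hypothetical.

```python
# Scores used in the text: not implemented = 0, modified = 1, implemented = 2.
SCORES = {"not implemented": 0, "modified": 1, "implemented": 2}

def component_scores(statuses: dict[str, list[str]]) -> dict[str, int]:
    """Sum the subcomponent scores of each component for one circumscription."""
    return {
        component: sum(SCORES[status] for status in subcomponents)
        for component, subcomponents in statuses.items()
    }

# Hypothetical circumscription: the statuses below are invented for illustration.
example = {
    "capacity building": ["implemented", "implemented", "modified", "not implemented"],
    "surveillance": ["implemented", "implemented", "not implemented"],
}
scores = component_scores(example)
print(scores)                # {'capacity building': 5, 'surveillance': 4}
print(sum(scores.values()))  # 9, an overall score for the circumscription
```

Per-component sums computed this way for every circumscription could then be compared across components, which is one possible reading of “exploring the relationships among the components.”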

Table 1.

The empowerment strategy for dengue vector control by components and subcomponents and the number of circumscriptions (n = 16) that implemented as intended, modified, or did not implement subcomponents. La Lisa municipality, Havana City, 2004–2007

| Components | Operational definition of the components | Subcomponents | Implemented as intended | Modified | Did not implement |
|---|---|---|---|---|---|
| 1. Capacity-building | Development of knowledge, capabilities and associated values, and practices required by community members to lead community empowerment for dengue vector control | 1.1 Diagnosis, group work, and participation | 10 | 1 | 5 |
| | | 1.2 Surveillance of risks and behaviors | 11 | 5 | 0 |
| | | 1.3 Action plans and communication strategy | 6 | 5 | 5 |
| | | 1.4 Participatory evaluation | 8 | 0 | 8 |
| 2. Organization and management | The way the stakeholders involved in dengue vector control establish themselves, set commitments and roles, identify resources, and make decisions | 2.1 Presence of community working groups (CWGs) leading the strategy | 7 | 4 | 5 |
| | | 2.2 Vector control program staff within CWGs | 4 | 7 | 5 |
| | | 2.3 Community resources identified | 3 | 1 | 12 |
| | | 2.4 External resources mobilized | 3 | 0 | 13 |
| 3. Community work | Repetitive cycle of actions developed by a group of community members to change the conditions that increase the probability of dengue transmission | 3.1 Risk mapping | 11 | 1 | 4 |
| | | 3.2 Problem assessment | 10 | 2 | 4 |
| | | 3.3 Action plan | 7 | 1 | 8 |
| | | 3.4 Actions executed | 7 | 0 | 9 |
| | | 3.5 Communication strategy | 5 | 5 | 6 |
| | | 3.6 Elaboration of communication materials | 3 | 3 | 10 |
| | | 3.7 Monitoring and evaluation | 2 | 0 | 14 |
| 4. Surveillance | Timely and systematically organized data collection and analysis on dengue transmission risks and associated behaviors in order to take actions | 4.1 Identification of environmental risks | 16 | 0 | 0 |
| | | 4.2 Identification of domiciliary risks | 13 | 0 | 3 |
| | | 4.3 Identification of associated behaviors | 6 | 0 | 10 |
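As a check on the summary of results, the averages per component can be recomputed from the counts in Table 1. We assume here that “the average for the four components” means the mean number of circumscriptions per subcomponent in each implementation category; the function name `component_averages` is hypothetical. The sketch also verifies that every row accounts for all 16 circumscriptions.

```python
from statistics import mean

# Counts of circumscriptions (n = 16) per subcomponent, copied from Table 1 as
# (implemented as intended, modified, did not implement).
TABLE_1 = {
    "1. Capacity-building": [(10, 1, 5), (11, 5, 0), (6, 5, 5), (8, 0, 8)],
    "2. Organization and management": [(7, 4, 5), (4, 7, 5), (3, 1, 12), (3, 0, 13)],
    "3. Community work": [(11, 1, 4), (10, 2, 4), (7, 1, 8), (7, 0, 9),
                          (5, 5, 6), (3, 3, 10), (2, 0, 14)],
    "4. Surveillance": [(16, 0, 0), (13, 0, 3), (6, 0, 10)],
}

def component_averages(table: dict, n: int = 16) -> dict:
    """Mean number of circumscriptions per subcomponent, for each component and
    each implementation category (implemented, modified, not implemented)."""
    averages = {}
    for component, rows in table.items():
        if any(sum(row) != n for row in rows):
            raise ValueError(f"a row of {component} does not sum to {n}")
        implemented, modified, not_implemented = zip(*rows)
        averages[component] = (mean(implemented), mean(modified), mean(not_implemented))
    return averages

for component, (imp, mod, not_imp) in component_averages(TABLE_1).items():
    print(f"{component}: implemented {imp:.2f}, modified {mod:.2f}, "
          f"not implemented {not_imp:.2f}")
```

On the Table 1 counts, the average number of circumscriptions implementing a subcomponent as intended is 11.67 for surveillance, 8.75 for capacity building, 6.43 for community work, and 4.25 for organization and management, which matches the ordering reported in the text.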

Surveillance was the most implemented component, followed by capacity building. Community work and organization and management were less frequently implemented as intended, being more often modified or not implemented [61]. Even among the more implemented components, some subcomponents were not implemented. The more innovative a component or subcomponent was (i.e., the more it involved activities that departed from, or disrupted, routine vector control practices and could not easily be accommodated by implementers’ expertise, previous experiences, or know-how), the less it was implemented.

Scarcely implemented subcomponents included the mobilization of internal and external resources by the circumscriptions, the design of communication strategies, and the development of local communication materials. The main modifications concerned the composition of the CWGs, a shift in the approach to capacity building from participatory to individualized, and changes to the design of training activities, such as the use of participatory techniques better adapted to the characteristics of the participants. Across the strategy, some activities were added, such as linking the strategy with activities conducted at primary school level, involving stakeholders not initially foreseen, self-organizing additional community training workshops, and conducting strength assessments as part of the participatory community diagnosis.

The difficulties encountered during implementation related to training and skills, available time, lack of support for and commitment to the strategy from the local government and health authorities, lack of motivation among local leaders, and the integration of actors and resources. The study showed wide variability in implementation fidelity across circumscriptions. This variability was largely explained by the complexity of the strategy and by implementers’ limited knowledge of its basic principles. The variation in implementation fidelity did not result in a substantial loss of effectiveness [55]. More detailed information on the methods and results of our fidelity study is provided in Pérez et al. 2010 [61].

Rebchook et al.’s [24] typology proved suitable for assessing the fidelity-adaptation balance of the empowerment strategy, but it had limitations. It did not allow us to answer the question “which of the modifications introduced in the strategy contributed to, or detracted from, outcomes?” Moreover, Rebchook et al.’s assertion that adding new components or subcomponents to an original design does not, a priori, threaten fidelity is questionable, since such components could contradict the basic principles of the intervention. Finally, using Rebchook et al.’s [24] typology, we could not provide a detailed view of fidelity in terms of content, dose, and coverage.

A modified Carroll et al.’s framework for implementation fidelity

We therefore compared our empirical research with the existing literature on fidelity and, as a result, identified Carroll et al.’s [15] framework for implementation fidelity as the one that could potentially address our concern of assessing adaptation in the context of fidelity [33].

This framework, slightly modified by the addition of two moderating factors (context and participant recruitment), was empirically tested by Hasson et al. [63, 64] in an evaluation of the implementation fidelity of a complex intervention in health and social care. More recently, Gagliardi et al. [65] and von Thiele Schwarz et al. [12] applied the framework to surgical safety checklists and occupational health interventions, respectively. In all of these studies, the authors acknowledged that the framework was a useful tool for evaluating the implementation fidelity of complex interventions.

We propose a modified version of Carroll et al.’s [15] framework for implementation fidelity that retains these authors’ ideas of conducting outcome evaluation and component analysis to identify the elements that are essential for an intervention. Indeed, evaluating implementation efforts and measuring the fidelity-adaptation balance are meaningful only in the context of outcomes [11]. We did, however, improve the graphical representation of the role of fidelity and outcome evaluations in identifying the essential components of an intervention (Fig. 2).

Fig. 2.

Modified Carroll et al.’s conceptual framework for implementation fidelity

We propose some major modifications to the framework. Carroll et al. [15] established adherence as the sole bottom-line measure of implementation fidelity. As we learned from our empirical fidelity research [61], the nature of adaptations needs to be explicitly captured in relation to their effects on effectiveness. Along the same lines, von Thiele Schwarz et al. [12] recently suggested applying Carroll et al.’s subcategories of adherence (i.e., prescribed content, frequency, duration, and coverage) to describe and categorize adaptations. However, the subcategories of adherence might not be sufficient for every intervention; other aspects might also need to be described.

Thus, in our adapted framework, we propose assessing intervention-specific descriptors of both fidelity and adaptation, instead of the fixed subcategories of adherence alone. To move in this direction, intervention developers need to do four things: first, have a clear idea of the expected outcome(s); second, make the functioning principles or theory of change [42] of the intervention explicit; third, state these principles in the form of specific descriptors of fidelity; and fourth, formulate questions, based on the description of the intervention, to identify adaptations. Intervention-specific descriptors of adaptation are developed by answering these questions. A further step is to determine to what extent the adaptations identified affect the functioning principles of a particular component and/or of the intervention as a whole.

The capacity-building component of our empowerment strategy illustrates this reasoning. The expected result was that participants would acquire the knowledge and skills to change the existing power relationships among them. The functioning principles were rooted in the pedagogical model of popular education [66]: the training participants need to acquire specific knowledge and skills; these are provided through a participatory learning process (learning group); and the learning group has to involve stakeholders who need to change their power relationships.

Table 2 provides specific descriptors of fidelity for capacity building. They give a comprehensive description of the intervention as intended, with details on content and processes (i.e., “what,” “how,” “how frequently,” “to whom,” and “by whom”) and specifications related to the implementation context. Table 3 provides questions to identify whether adaptations were introduced in the capacity-building component. Given the functioning principles of the component, only the first adaptation identified, the removal of the topic “diagnosis, group work, and participation,” detracts from the expected result.

Table 2.

Example of specific descriptors of fidelity for the capacity-building component of the empowerment strategy for dengue vector control. La Lisa municipality, Havana City, 2004–2007

| Dimension | Specific descriptors of fidelity for capacity building |
|---|---|
| What | Development of knowledge and skills on four topics: (1) diagnosis, group work, and participation; (2) surveillance of risks and behaviors; (3) action plans and communication strategy; and (4) participatory evaluation. |
| How | Through a workshop based on the principles of the pedagogical model of popular education: e.g., the objective is that the participants think about and, consequently, transform their reality, using a dialectical logic between theory and practice and participatory, experience-based learning methods. |
| How frequently | One 4-h workshop per topic over a 3-month period. |
| To whom | A learning group composed of three to five stakeholders with different power relationships in relation to dengue vector control activities, belonging to at least three communities. |
| By whom | Facilitators previously trained in the principles of the pedagogical model of popular education. |
| Specifications related to the context | Three PCs are involved in the project, with five to six randomly selected circumscriptions per PC. Methodological support for the training is provided, e.g., written guidelines on how to conduct a popular education workshop and methodological counseling of the facilitators by at least one of IPK’s strategy developers. |

Table 3.

Example of specific descriptors of adaptation for the capacity-building component of the empowerment strategy for dengue vector control. La Lisa municipality, Havana City, 2004–2007

| Questions to identify adaptations | Specific descriptors of adaptation for a PC |
|---|---|
| What: Was the content of the training changed in any way? How? Was any topic removed? Which one? Why? Was any topic replaced? By which one? Why? Was any topic added? Which one? Why? | The topic “diagnosis, group work, and participation” was removed from the training content because the facilitators deemed it irrelevant. Monothematic workshops on communication strategies were added to help the participants assimilate this topic. |
| How: Was any principle of the pedagogical model adapted (e.g., objectives, logic, learning methods)? Which one? How? Why? Was the pedagogical model replaced by another? By which one? Why? | Some of the learning methods were adapted to the characteristics of the participants. Reading sessions were replaced by interactive lectures to facilitate understanding of the topics. |
| How frequently: Was any adaptation introduced in the frequency of the training (e.g., number of sessions, number of hours per session)? How? Why? Were the workshop sessions split over time? How? Why? Was any adaptation introduced in the length of the period intended for the training? How? Why? | No adaptations (i.e., additions, modifications, deletions) identified |
| To whom: Was the learning group adapted in any way (e.g., number of participants, role of the stakeholders in relation to dengue vector control activities)? How? Why? Was the learning group replaced by another teaching strategy? By which one? Why? | No adaptations identified |
| By whom: Was any facilitator not trained? Why? Was any principle of the pedagogical model adapted while training the facilitators? Which one? How? Why? Was the pedagogical model replaced by another? By which one? Why? | No adaptations identified |
| Specifications related to the context: Was there any change in the number of PCs? Why? Was there any change in the number of circumscriptions involved? Was any circumscription replaced? How? By which one? Why? Were modifications made to the methodological support (e.g., provision of guidelines, content of the guidelines, methodological counseling)? How? Why? | No adaptations identified |

By analyzing the adaptations made to an intervention in this way, avenues for prospectively improving implementation can be identified. The added value would be to help intervention developers identify non-predefined adaptations that could improve the design of the intervention and, thus, its effectiveness. Once a positive adaptation is identified, the intervention could go through a new cycle of design, implementation, and testing. This would require feedback mechanisms that carry information on adaptations from implementers back to intervention developers.

The need to further identify potential sources of variability in implementation has been highlighted by several authors [67, 68], and Carroll et al. [15] themselves acknowledged that the level of fidelity achieved is influenced by potential moderating factors, which are not necessarily independent of one another. While testing Carroll et al.’s framework, Hasson et al. [63, 64] further emphasized the importance of other mechanisms and factors that influence implementation fidelity. Our modified framework retains comprehensiveness of policy description, quality of delivery, and participant responsiveness as basic potential moderators, but also allows other setting- or situation-specific moderators to be included.

In addition, we agree with Carroll et al. [15] that facilitation strategies could influence potential moderators of the level of fidelity achieved. For instance, providing manuals and training to implementers could improve the quality of delivery of an intervention. However, in our modified framework, these strategies are put in place not to increase “strict adherence,” but to help achieve an adequate fidelity-adaptation balance. Once adaptations and their positive or negative effects have been identified, facilitation strategies will address only those adaptations deemed inadequate.

For adaptive interventions, this approach also leaves scope for adaptation. The need for an adequate fidelity-adaptation balance, emphasized by Backer [11] and von Thiele Schwarz et al. [12], is strongly supported by the results of our empirical fidelity research [61] and by further research on the diffusion of the empowerment strategy for dengue prevention [69]. In practice, aiming at a fidelity-adaptation balance implies that fidelity and adaptation are interdependent.

We also propose a further, minor modification to Carroll et al.’s framework regarding the use of the term intervention. Bearing in mind the debate between top-down and bottom-up approaches to implementation [11, 33], the term intervention on its own may suit a top-down approach, in which the design is expected to be delivered as intended. We therefore consider the term adaptive intervention more appropriate in our modified theoretical framework of implementation fidelity.

More empirical research is needed to test and validate the modified framework, but we do believe that it may permit a comprehensive assessment of the implementation fidelity-adaptation balance for adaptive interventions.

Conclusions

Translating evidence-based interventions into practice involves an unresolved tension between the need for high implementation fidelity, to ensure the interventions’ intended effects, and the need to change the original design to fit potential users’ needs. We argue that fidelity cannot be apprehended independently of adaptation and that the two concepts are intrinsically linked. This paper proposes a conceptual framework of implementation fidelity, modified from that of Carroll et al. [15], that is suitable for assessing the fidelity-adaptation balance of adaptive interventions.

We retain Carroll et al.’s idea of identifying those elements of an intervention that are essential to maintain its intended effects. However, we propose assessing intervention-specific descriptors of both fidelity and adaptation instead of fixed subcategories of adherence alone. We argue that the assessment should capture the nature of the adaptations that occur while implementing adaptive interventions, together with their effect on effectiveness. Moreover, measuring adherence stricto sensu may not always be applicable to a particular intervention. We also suggest that facilitation strategies, which can influence the moderators of fidelity, need not aim to optimize implementation fidelity but rather to achieve an adequate fidelity-adaptation balance.


Acknowledgements

The research was funded by the Cuban Ministry of Health and through a framework agreement between the Institute of Tropical Medicine “Pedro Kourí,” the Institute of Tropical Medicine (Antwerp), and the Belgian Directorate-General for Development Cooperation.

Authors’ contributions

DP, PVDS, MCZ, MC, and PL have made substantive intellectual contributions to this debate paper. All contributed to drafting the manuscript. All authors read and approved the final manuscript.

Competing interests

The authors declare that they have no competing interests.

Contributor Information

Dennis Pérez, Email: dennis@ipk.sld.cu.

Patrick Van der Stuyft, Email: PVDStuyft@itg.be.

María del Carmen Zabala, Email: mzabala@flacso.uh.cu.

Marta Castro, Email: martac@ipk.sld.cu.

Pierre Lefèvre, Email: PLefevre@itg.be.

References

- 1. Sorensen G, Emmons K, Hunt MK, Johnston D. The implications of the results of community intervention trials. Annu Rev Public Health. 1998;19:379–416. doi: 10.1146/annurev.publhealth.19.1.379.

- 2. Glasgow R, Lichtenstein E, Marcus A. Why don’t we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. Am J Public Health. 2003;93:1261–1267. doi: 10.2105/AJPH.93.8.1261.

- 3. Rychetnik L, Bauman A, Laws R, King L, Rissel C, Nutbeam D, Colagiuri S, Caterson I. Translating research for evidence-based public health: key concepts and future directions. J Epidemiol Community Health. 2012;66:1187–1192. doi: 10.1136/jech-2011-200038.

- 4. Nutbeam D, Bauman A. Evaluation in a nutshell: a practical guide to the evaluation of health promotion programs. Sydney: McGraw Hill; 2006.

- 5. Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation research: a synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network (FMHI Publication 231); 2005.

- 6. Glasgow RE. eHealth evaluation and dissemination research. Am J Prev Med. 2007;32:119–126. doi: 10.1016/j.amepre.2007.01.023.

- 7. Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Griffey R, Hensley M. Outcomes for implementation research: conceptual distinctions, measurement, challenges and research agenda. Adm Policy Ment Health. 2011;38:65–76. doi: 10.1007/s10488-010-0319-7.

- 8. Dusenbury L, Brannigan R, Falco M, Hansen WB. A review of research on fidelity of implementation: implications for drug abuse prevention in school settings. Health Educ Res. 2003;18:237–256. doi: 10.1093/her/18.2.237.

- 9. Sundell K, Beelmann A, Hasson H, von Thiele Schwarz U. Novel programs, international adoptions, or contextual adaptations? Meta-analytical results from German and Swedish intervention research. J Clin Child Adolesc Psychol. 2015. doi: 10.1080/15374416.2015.1020540.

- 10. Hall GE, Hord SM. Implementing change: patterns, principles, potholes. New York: Allyn & Bacon; 2001.

- 11. Backer TE. Finding the balance: program fidelity and adaptation in substance abuse prevention: a state-of-the-art review. Rockville, MD: Center for Substance Abuse Prevention; 2002. Available at: http://www.csun.edu/sites/default/files/FindingBalance1.pdf. Accessed 11 Jan 2010.

- 12. von Thiele Schwarz U, Hasson H, Lindfors P. Applying a fidelity framework to understand adaptations in an occupational health intervention. Work. 2014;51. doi: 10.3233/WOR-141840.

- 13. Dane A, Schneider B. Program integrity in primary and early secondary prevention: are implementation effects out of control? Clin Psychol Rev. 1998;18:23–45. doi: 10.1016/S0272-7358(97)00043-3.

- 14. Dusenbury L, Brannigan R, Hansen WB, Walsh J, Falco M. Quality of implementation: developing measures crucial to understanding the diffusion of preventive interventions. Health Educ Res. 2005;20:308–313. doi: 10.1093/her/cyg134.

- 15. Carroll C, Patterson M, Wood S, Booth A, Rick J, Balain S. A conceptual framework for implementation fidelity. Implement Sci. 2007;2:40. doi: 10.1186/1748-5908-2-40.

- 16. Bellg A, Borrelli B, Resnick B, Hecht J, Minicucci D, Ory M, Ogedegbe G, Orwig D, Ernst D, Czajkowski S. Enhancing treatment fidelity in health behaviour change studies: best practices and recommendations from the NIH Behavior Change Consortium. Health Psychol. 2004;23:443–451. doi: 10.1037/0278-6133.23.5.443.

- 17. Borrelli B, Sepinwall D, Ernst D, Bellg AJ, Czajkowski S, Breger R, DeFrancesco C, Levesque C, Sharp DL, Ogedegbe G, Resnick B, Orwig D. A new tool to assess treatment fidelity and evaluation of treatment fidelity across 10 years of health behavior research. J Consult Clin Psychol. 2005;73:852–860. doi: 10.1037/0022-006X.73.5.852.

- 18. Borrelli B. The assessment, monitoring, and enhancement of treatment fidelity in public health clinical trials. J Public Health Dent. 2011;71:52–63. doi: 10.1111/j.1752-7325.2011.00233.x.

- 19. Collins LM, Murphy SA, Bierman KL. A conceptual framework for adaptive preventive interventions. Prev Sci. 2004;5:185–196. doi: 10.1023/B:PREV.0000037641.26017.00.

- 20. Nahum-Shani I, Qian M, Almirall D, Pelham WE, Gnagy B, Fabiano G, Waxmonsky J, Yu J, Murphy S. Experimental design and primary data analysis methods for comparing adaptive interventions. Psychol Methods. 2012;17. doi: 10.1037/a0029372.

- 21. Rifkin SB. Paradigms lost: toward a new understanding of community participation in health programmes. Acta Trop. 1996;61:79–92. doi: 10.1016/0001-706X(95)00105-N.

- 22. Pérez D, Lefèvre P, Romero MI, Sánchez L, de Vos P, Van der Stuyft P. Augmenting frameworks for appraising practices of community-based health interventions. Health Policy Plan. 2009;24:335–341. doi: 10.1093/heapol/czp028.

- 23. Rogers EM. Diffusion of innovations. 5th ed. New York: Free Press; 2003.

- 24. Rebchook MG, Kegeles SM, Huebner D, the TRIP Research Team. Translating research into practice: the dissemination and initial implementation of an evidence-based HIV prevention program. AIDS Educ Prev. 2006;18:119–136. doi: 10.1521/aeap.2006.18.supp.119.

- 25. Bauman LJ, Stein RE, Ireys HT. Reinventing fidelity: the transfer of social technology among settings. Am J Community Psychol. 1991;19:619–639. doi: 10.1007/BF00937995.

- 26. Rice RE, Rogers EM. Reinvention in the innovation process. Sci Commun. 1980;1:449–514. doi: 10.1177/107554708000100402.

- 27. Goodman RM, Steckler AB. A framework for assessing program institutionalization. Knowledge Soc Int J Knowledge Transfer. 1989;2:52–66.

- 28. Goodman RM, Steckler AB. A model for institutionalization of health promotion programs. Fam Community Health. 1989;11:63–78. doi: 10.1097/00003727-198902000-00009.

- 29. Shediac-Rizkallah MC, Bone LR. Planning for the sustainability of community-based health programs: conceptual frameworks and future directions for research, practice and policy. Health Educ Res. 1998;13:87–108. doi: 10.1093/her/13.1.87.

- 30. Pluye P, Potvin L, Denis JL. Making public health programs last: conceptualizing sustainability. Eval Program Plann. 2004;27:121–133. doi: 10.1016/j.evalprogplan.2004.01.001.

- 31. Pluye P, Potvin L, Denis JL, Pelletier J, Mannoni C. Program sustainability begins with the first events. Eval Program Plann. 2005;28:123–137. doi: 10.1016/j.evalprogplan.2004.10.003.

- 32. Lara M, Bryant-Stephens T, Damitz M, Findley S, González Gavillán J, Mitchell H, Ohadike YU, Persky VW, Ramos Valencia G, Rojas Smith L, Rosenthal M, Thyne S, Uyeda K, Viswanathan M, Woodell C. Balancing “fidelity” and community context in the adaptation of asthma evidence-based interventions in the “real world”. Health Promot Pract. 2011. doi: 10.1177/1524839911414888.

- 33. Pérez D. Procesos de implementación de estrategias participativas en el control de Aedes aegypti. Propuestas para su evaluación y transformación. Thesis dissertation. Cuba: Havana University; 2011.

- 34. Arthur MS, Blitz C. Bridging the gap between research and practice in drug abuse prevention through needs assessment and strategic community planning. J Community Psychol. 2000;28:241–256. doi: 10.1002/(SICI)1520-6629(200005)28:3<241::AID-JCOP2>3.0.CO;2-X.

- 35. Backer TE. The failure of success: challenges of disseminating effective substance abuse prevention programs. J Community Psychol. 2000;28:363–373. doi: 10.1002/(SICI)1520-6629(200005)28:3<363::AID-JCOP10>3.0.CO;2-T.

- 36. Berman P. Thinking about programmed and adaptive implementation: matching strategies to situations. In: Ingram H, Mann D, editors. Why policies succeed or fail. Beverly Hills: Sage Publications; 1980.

- 37. Berman P, McLaughlin MW. Implementation of educational innovation. Educ Forum. 1976;40:345–370. doi: 10.1080/00131727609336469.

- 38. Leonard-Barton D. Implementation as mutual adaptation of technology and organization. Res Policy. 1988;17:251–267. doi: 10.1016/0048-7333(88)90006-6.

- 39. Stirman SW, Miller CJ, Toder K, Calloway A. Development of a framework and coding system for modifications and adaptations of evidence-based interventions. Implement Sci. 2013;8:65. doi: 10.1186/1748-5908-8-65.

- 40. Dissemination Working Group. Common elements of developing and implementing program models. Calgary: FYI Consulting Ltd; 1999.

- 41. Winter SG, Szulanski G. Replication as strategy. Organ Sci. 2001;12:730–743. doi: 10.1287/orsc.12.6.730.10084.

- 42. Hernandez M, Hodges S. Building upon the theory of change for systems of care. J Emot Behav Disord. 2003;11:19–26. doi: 10.1177/106342660301100104.

- 43. Gubler DJ. Dengue, urbanization and globalization: the unholy trinity of the 21st century. Trop Med Health. 2011;39(Suppl 4):3–11. doi: 10.2149/tmh.2011-S05.

- 44. Stoddard ST, Forshey BM, Morrison AC, Paz-Soldan VA, Vazquez-Prokopec GM, Astete H, Reiner RC Jr, Vilcarromero S, Elder JP, Halsey ES, Kochel TJ, Kitron U, Scott TW. House-to-house human movement drives dengue virus transmission. Proc Natl Acad Sci U S A. 2013;15:994–999. doi: 10.1073/pnas.1213349110.

- 45. Guzmán MG, Rodríguez-Roche R, Kourí G. Dengue and dengue hemorrhagic fever. Giornale Italiano Di Medicina Tropicale. 2006;11:1–2.

- 46. WHO. Dengue and severe dengue [fact sheet no. 117]. Geneva: World Health Organization; 2012. Available at: http://www.who.int/mediacentre/factsheets/fs117/en/. Accessed 26 Jan 2015.

- 47. Parks W, Lloyd L. Planning social mobilization and communication for dengue fever prevention and control: a step-by-step guide. WHO/CDS/WMC/2004.2; 2004.

- 48. Parks W, Lloyd L, Nathan M, Hosein E, Odugleh A, Clark G, Gubler DJ. International experiences in social mobilization and communication for dengue prevention and control. Dengue Bulletin. 2004;28(Suppl):1–7.

- 49. San Martín JL, Prado M. Percepción de riesgo y estrategias de comunicación social sobre el dengue en las Américas. Rev Panam Salud Pública. 2004;15:135–139. doi: 10.1590/S1020-49892004000200014.

- 50. Toledo ME, Baly A, Vanlerberghe V, Rodriguez M, Benitez JR, Duvergel J, Van der Stuyft P. The unbearable lightness of technocratic efforts at dengue control. Trop Med Int Health. 2008;13:728–736. doi: 10.1111/j.1365-3156.2008.02046.x.

- 51. Toledo ME, Vanlerberghe V, Baly A, Ceballos E, Valdes L, Searret M, Boelaert M, Van der Stuyft P. Towards active community participation in dengue vector control: results from action research in Santiago de Cuba, Cuba. Trans R Soc Trop Med Hyg. 2007;101:56–63. doi: 10.1016/j.trstmh.2006.03.006.

- 52. Sánchez L, Pérez D, Alfonso L, Castro M, Sánchez LM, Van der Stuyft P, Kourí G. Estrategia de educación popular para promover la participación comunitaria en la prevención del dengue. Rev Panam Salud Pública. 2008;24:61–69. doi: 10.1590/S1020-49892008000700008.

- 53. Sánchez L, Pérez D, Cruz G, Castro M, Kouri G, Shkedy Z, Vanlerberghe V, Van der Stuyft P. Intersectoral coordination, community empowerment and dengue prevention: six years of controlled interventions in Playa Municipality, Havana, Cuba. Trop Med Int Health. 2009;14:1356–1364. doi: 10.1111/j.1365-3156.2009.02379.x.

- 54. Vanlerberghe V, Toledo ME, Rodriguez M, Gomez D, Baly A, Benitez JR, Van der Stuyft P. Community involvement in dengue vector control: cluster randomized trial. BMJ. 2009;338:b1959. doi: 10.1136/bmj.b1959.

- 55. Castro M, Sánchez L, Pérez D, Carbonell N, Lefèvre P, Vanlerberghe V, Van der Stuyft P. A community empowerment strategy embedded in a routine dengue vector control programme: a cluster randomised controlled trial. Trans R Soc Trop Med Hyg. 2012;106:315–321. doi: 10.1016/j.trstmh.2012.01.013.

- 56. Nam VS, Nguyen TY, Tran VP, Truong UN, Le QM, Le VL, Le TN, Bektas A, Briscombe A, Aaskov JG, Ryan PA, Kay BH. Elimination of dengue by community programs using Mesocyclops (Copepoda) against Aedes aegypti in central Vietnam. Am J Trop Med Hyg. 2005;72:67–73.

- 57. Kay B, Nam VS. New strategy against Aedes aegypti in Vietnam. Lancet. 2005;365:613–617. doi: 10.1016/S0140-6736(05)17913-6.

- 58. Kay BH, Tuyet Hanh TT, Le NH, Quy TM, Nam VS, Hang PV, Yen NT, Hill PS, Vos T, Ryan PA. Sustainability and cost of a community-based strategy against Aedes aegypti in northern and central Vietnam. Am J Trop Med Hyg. 2010;82:822–830. doi: 10.4269/ajtmh.2010.09-0503.

- 59. Rifkin SB. Examining the links between community participation and health outcomes: a review of the literature. Health Policy Plan. 2014;29:98–106. doi: 10.1093/heapol/czu076.

- 60. Pérez D. Disclosing implementation, replication and diffusion processes of an empowerment strategy for dengue prevention and control. Thesis dissertation. Ghent: Ghent University; 2015.

- 61. Pérez D, Lefèvre P, Castro M, Sánchez L, Toledo ME, Vanlerberghe V, Van der Stuyft P. Process-oriented fidelity research assists in evaluation, adjustment and scaling up of community-based interventions. Health Policy Plan. 2010;26:413–422. doi: 10.1093/heapol/czq077.

- 62. Creswell JW, Plano Clark VL. Designing and conducting mixed methods research. Washington DC: Sage Publications; 2011.

- 63. Hasson H. Study protocol: systematic evaluation of implementation fidelity of complex interventions in health and social care. Implement Sci. 2010;5:67. doi: 10.1186/1748-5908-5-67.

- 64. Hasson H, et al. Fidelity and moderating factors in complex interventions: a case study of a continuum of care program for frail elderly people in health and social care. Implement Sci. 2012;7:23. doi: 10.1186/1748-5908-7-23.

- 65. Gagliardi AR, Straus SE, Shojania KG, Urbach DR. Multiple interacting factors influence adherence, and outcomes associated with surgical safety checklists: a qualitative study. PLoS One. 2014;26:9. doi: 10.1371/journal.pone.0108585.

- 66. Freire P. La educación liberadora. Madrid: Akal; 1981.

- 67. Arai L, Roen K, Roberts H, Popay J. It might work in Oklahoma but will it work in Southampton? Context and implementation in the effectiveness literature on domestic smoke detectors. Inj Prev. 2005;11:148–151. doi: 10.1136/ip.2004.007336.

- 68. Roen K, Arai L, Roberts H, Popay J. Extending systematic reviews to include evidence on implementation: methodological work on a review of community-based initiatives to prevent injuries. Soc Sci Med. 2006;63:1060–1071. doi: 10.1016/j.socscimed.2006.02.013.

- 69. Pérez D, Lefèvre P, Castro M, Toledo ME, Zamora G, Bonet M, Van der Stuyft P. Diffusion of community empowerment strategies for Aedes aegypti control in Cuba: a muddling through experience. Soc Sci Med. 2013;84:44–52. doi: 10.1016/j.socscimed.2013.02.003.