Abstract

The purpose of this study was to compare several different measures of physician-patient communication. We compared data derived from different measures of three communication behaviors (patient participation, physician information-giving, and physician participatory decision making (PDM) style) from 83 outpatient visits to oncology or thoracic surgery clinics for pulmonary nodules or lung cancer. Communication was measured with rating scales completed by patients and physicians after the consultation and by two different groups of external observers, one using rating scales and the other coding the frequency of communication behaviors, after listening to an audio-recording of the consultation. Measures were compared using Pearson correlations. Correlations of patients’ and physicians’ ratings of patient participation (r=0.04) and physician PDM style (r=0.03) were low and not significant (P>0.0083, Bonferroni-adjusted). Correlations of observers’ ratings with patients’ or physicians’ ratings for patient participation and physician PDM style were moderate or low (r=0.15, 0.27, 0.07, and 0.01, respectively) and were not statistically significant (P>0.0083, Bonferroni-adjusted). Correlations between observers’ ratings and frequency measures were 0.31, 0.52, and 0.63 for PDM style, information-giving, and patient participation, respectively, and were statistically significant (P=0.005, P<0.0001, and P<0.0001). Our findings highlight the potential for using observers’ ratings as an alternative to more labor-intensive frequency measures of communication.

Keywords: Communication, Physician Patient Relations, Patient Participation, Decision-making style, Information-giving, Observer Variation, Reproducibility of Results

Introduction

Certain elements of physician-patient communication are important to patient-centered medical care, particularly in visits focused on diagnosis and treatment of cancer. Patient-centered medical care can achieve several functions including: fostering healing relationships, exchanging information, responding to emotions, making decisions, managing uncertainty, and enabling patient self-management (Epstein et al., 2005).

Patient-centered communication

Patient-centered care can be facilitated by specific patient-centered communication behaviors: patients communicating actively (e.g., asking questions and giving opinions) and clinicians providing information and encouraging and facilitating patient involvement (Street, Gordon, Ward, Krupat, & Kravitz, 2005). Physicians should be sufficiently and clearly informative, be supportive, and encourage patient involvement in the consultation and their care (Arora, 2003; Epstein et al., 2005). Patients should actively participate, at least with respect to expressing their concerns, preferences, and symptoms, and asking questions (Epstein et al., 2005; Street, 2001). However, while most can agree on the key elements of communication that need to be done well, researchers vary greatly in the measures they use to assess communication (Ong, de Haes, Hoos, & Lammes, 1995; Rimal, 2001).

Variability in methods to measure communication

Variability in the assessment of patient-centered communication can be a problem in that different measures of the same construct (e.g., informativeness) may produce different results and conclusions, based on the measures chosen and the assumptions underlying those measures (Ong et al., 1995; Rimal, 2001). Some methods for measuring communication use audio- or video-recorded patient-clinician interactions and external observers to code these audiotapes to generate a quantitative frequency measure or count of patients’ (e.g., information seeking) and physicians’ (e.g., information-giving) behaviors (Cegala, 1997; Gordon, Street, Kelly, Souchek, & Wray, 2005; Roter, 1977; Street & Millay, 2001). Other methods use questionnaires with rating scales to get the post-visit perspective of patients and physicians about specific communication behaviors that occurred during a medical encounter (Brody, Miller, Lerman, Smith, & Caputo, 1989; Galassi, Schanberg, & Ware, 1992; Street, 1992). Questionnaires with rating scales for patients may also be completed by external observers tasked to respond from a patient's perspective (Blanch-Hartigan, Hall, Krupat, & Irish, 2013).

Variability in ratings of communication

Not only is there variability in measures of communication, but there may be variability in the evaluations and perspectives of individuals completing the measures. For example, though a physician gives detailed information describing a surgical procedure (i.e., rationale, alternatives, risks, and benefits), a patient who already knows the information or was looking for other information (e.g., recovery time) might rate that physician as less informative. In this case, evaluations diverge if the physician's self-evaluation or an external observer's evaluation rates the physician highly on informativeness. Physicians and patients completing evaluations may also disagree in other assessments of their interaction in medical encounters. In a study of patients’ and physicians’ perceptions of communication and shared decision making in primary care medical encounters, Saba et al. used video-triggered stimulated recall and found that observed communication behavior in a medical interaction may not correlate with the patients’ or physicians’ ratings of their subjective experience of collaboration (Saba et al., 2006).

Variability may also occur when comparing assessments of communication of the patient or physician with those of external observers. Assessments by external observers who were not participants in the medical encounter may differ from assessments of those who were participants (i.e., self-assessments vs. assessments of partners). External observers evaluate a recorded medical interaction as a trained coder or as an analogue patient (Blanch-Hartigan et al., 2013) and observers can provide a valid method for gathering data about medical interactions (van Vliet et al., 2012). Nonetheless, external observers may not have access to features of non-verbal communication (when evaluating audio-recordings) or to prior relational history, and their evaluations of an encounter may differ from physicians’ or patients’ evaluations (Kasper, Hoffmann, Heesen, Kopke, & Geiger, 2012).

Few studies have compared different techniques for assessment of doctor-patient communication using the same dataset. Street found poor correlation of ratings from questionnaires with frequency measures coded from audio-recorded medical encounters (Street, 1992). Cegala et al. found that although patients and physicians agree in general about what constitutes competent communication, there is little agreement, if any, at the dyadic level between physician and patient (Cegala, Gade, Lenzmeier Broz, & McClure, 2004). Compared with real patients’ satisfaction, Blanch-Hartigan et al. (2013) found that analogue patients’ (i.e., external observers’) satisfaction was a better predictor of physicians’ patient-centered communication. In a systematic review and meta-analysis, van Vliet et al. (2012) reported that analogue patients’ ratings overlap with real patients’ ratings and that analogue patients’ ratings were not subject to ceiling effects. In an issue of Health Communication, several investigators analyzed the same sample of physician-patient audio-recordings using different coding systems that assessed elements of patient-centered communication. Collectively, the results indicated that whether or not consultations were considered “patient-centered” depended on which coding scheme was used (Rimal, 2001).

Objectives and significance of this study

In this investigation, we examine and compare several different measures of physician-patient communication. We include the perspective of two different groups of external observers, one completing questionnaire-based rating scales of communication and one completing frequency measures of communication. Using the perspective of external observers, patients, and physicians, we compare one interactant's assessment of the other interactant's communication (e.g., a physician's rating of patient's involvement), assessment of one's own communication (e.g., a patient's self-rating of his/her involvement in the interaction), an external observer's rating (e.g. observer's rating of patient's involvement), and an external observer's count or frequency measure of communication (e.g., a quantitative count of a patient's involvement). The three elements of communication examined include physician information-giving, physician participatory decision making style, and patient involvement in the consultation.

These comparisons are important because there is no gold standard for the measurement of communication, and few studies have compared across more than two different methods of measuring similar behaviors. Such comparisons also take an initial step toward developing consensus among possible communication assessments by identifying common elements of concordance among measures.

Methods

Subjects

Eligible patients with pulmonary nodules or lung cancer who presented for initial visits for treatment decision making to thoracic surgery or oncology clinics were recruited at a large southern VA hospital (Gordon, Street, Sharf, Kelly, & Souchek, 2006; Gordon, Street, Sharf, & Souchek, 2006). All patients provided informed consent and the study was approved by the institutional review board.

Data Collection

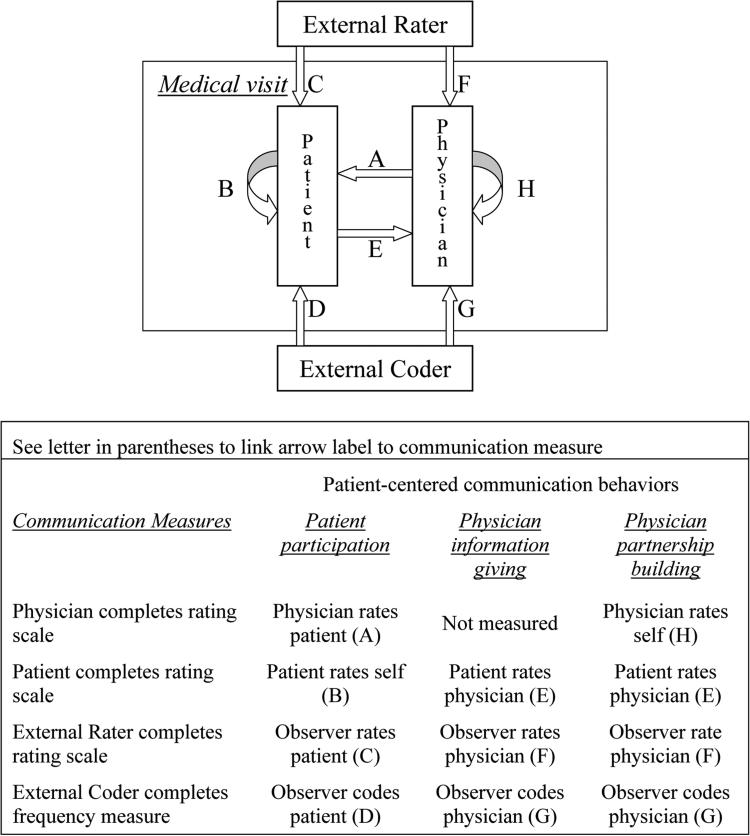

The Figure illustrates several different perspectives to assess communication in a medical visit. Each arrow shows one perspective for the assessment of communication (e.g. the external observer assesses the patient indicated by arrow labeled “C”, or the patient conducts a self-assessment indicated by the arrow labeled “B”). The Figure also includes a Key that links the arrows in the graphic with the three communication behaviors and the communication measures used to conduct the assessment.

Figure.

Graphic and table of communication behaviors and the corresponding measures.

Frequency measures of communication

Physician–patient interactions were audio-taped and transcribed. A mixed quantitative–qualitative method was used to code the transcripts (Gordon et al., 2005; Street & Millay, 2001). For this analysis we focused on three patient-centered communication behaviors: active patient participation; physician information giving; and physician participatory decision-making style (partnership building). Active patient participation is a summary measure of patients’ active involvement in the interaction and is composed of patients’ communicative behaviors coded as questions, expressions of concern, and requests or assertions. Physician information giving is coded using an informed decision making model and includes physicians’ statements coded as diagnoses, prognoses, definitions, rationales, instructions, recommendations, risks, and alternatives (Gordon et al., 2005). Physician participatory decision-making style (partnership building) represents an effort to encourage and legitimize active patient participation in the consultation, and includes facilitative and accommodative communication behaviors such as discussing patient involvement in decision making, assessing patient understanding, exploring patient preferences, and explicitly agreeing with or affirming patient opinions, beliefs or requests. Transcripts were coded by two undergraduate students, who were paid for their time. Coders did not interact with study participants and were not informed of the purpose of the study or about patient or physician characteristics. Coders listened to audio recordings to generate frequency measures or counts of physicians’ and patients’ communication behaviors. Coders had 90% agreement on unitizing utterances and achieved interrater reliabilities of 0.83 and 0.78 for information giving and patient participation, respectively.

Rating scales of communication

Rating scales were prospectively chosen to measure patient-centered content similar to the content coded from audio recordings as frequency measures. Three rating scales were chosen to collect these data from patients. Patient participation was measured with a 6-item scale that included Lerman's 4-item patient information scale: (i) I asked for recommendations about my medical condition; (ii) I asked a lot of questions about my medical condition; (iii) I went into great detail about my medical symptoms; (iv) I asked the doctor to explain the treatment or procedure in greater detail (Lerman et al., 1990) and two additional items that asked whether patients (i) offered opinions or (ii) expressed concerns to the doctor. Physician information giving (informativeness) was measured with a 5-item scale: (i) I understand the treatment plan for me; (ii) this doctor told me what the treatment would do; (iii) I understand the treatment side effects; (iv) I have a good idea about the changes to expect in my health; and (v) this doctor clearly explained the treatment or procedure (Galassi et al., 1992). The third measure, physician participatory decision making style was measured with a 3-item scale modified from Kaplan (Kaplan, Greenfield, Gandek, Rogers, & Ware, 1996) as follows: (i) This doctor strongly encouraged me to help make the treatment decision; (ii) This doctor made certain I had some control over the treatment decision; and (iii) This doctor did not ask me to help make the treatment decision, but instead just told me what my treatment would be. Responses for all scale items were collected on a 10-point scale (strongly disagree to strongly agree). Patients returned questionnaires the same day of the visit after a post-visit meeting with a research assistant.
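The text does not state how item responses were combined into a scale score, so the following is only a minimal sketch under two assumptions: that each scale score is the mean of its item responses, and that the negatively worded PDM item (iii) is reverse-scored before averaging. The function names and the example responses are hypothetical and are not study data.

```python
from statistics import mean

# Assumed anchors of the 10-point response scale (strongly disagree = 1,
# strongly agree = 10); the source gives only "10-point scale".
SCALE_MIN, SCALE_MAX = 1, 10


def reverse_item(response):
    """Flip a negatively worded item so that higher always means more of the behavior."""
    return SCALE_MIN + SCALE_MAX - response


def score_scale(responses, reversed_items=()):
    """Average item responses into one scale score, reversing the flagged item indexes."""
    adjusted = [
        reverse_item(r) if i in reversed_items else r
        for i, r in enumerate(responses)
    ]
    return mean(adjusted)


# Hypothetical responses to the 3-item PDM scale; item (iii) is index 2.
pdm_score = score_scale([9, 8, 2], reversed_items={2})
```

Under these assumptions a patient who strongly agrees with items (i) and (ii) and strongly disagrees with item (iii) receives a high PDM score, which matches the intended direction of the scale.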

Rating scales were also completed by physicians and by two external observers (undergraduate students who were different individuals than the coders for the frequency measure). Physicians rated themselves on participatory decision making style and rated patient participation using a post-visit questionnaire. The observers completed the patient questionnaires after listening to the audio recording of the interaction. Raters were trained on the general features of patient-centered communication, specifically with respect to the importance of physicians providing clear and sufficient information, encouraging patient involvement, and the importance of active patient participation in the consultation. In the context of applying the rating scale to a physician-patient encounter, they were instructed to listen to the encounter and then make objective judgments of the degree to which the doctor was informative and encouraged patient involvement and the degree of patient participation. Observers had good inter-rater reliability with kappa coefficients of 0.77, 0.78, and 0.74 for Informativeness, PDM style, and patient participation, respectively.
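As an illustration of the inter-rater reliability statistic named above, the sketch below computes an unweighted Cohen's kappa for two raters. The text does not say whether the reported kappa coefficients were weighted or how the 10-point ratings were categorized, so the unweighted form and the binned example ratings are assumptions, and the data are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters scoring the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(rater_a)
    # Proportion of items on which the two raters gave the same category.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance from each rater's marginal category use.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical ratings of eight encounters, binned into three categories.
observer_1 = ["high", "high", "low", "medium", "low", "high", "medium", "low"]
observer_2 = ["high", "medium", "low", "medium", "low", "high", "medium", "high"]
kappa = cohens_kappa(observer_1, observer_2)
```

Kappa discounts the agreement that two raters would reach by chance, which is why it is lower than the raw proportion of matching ratings in this example.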

Data analysis

In our analysis, data derived from different measures of the three communication behaviors were compared using Pearson correlations. First, we examined the correlation of four measures of patient participation: patients’ self-ratings of participation, physicians’ ratings, observers’ ratings, and frequency measures of patient participation (Figure). Second, we examined correlations among three measures of physician information giving: patients’ ratings, observers’ ratings, and frequency measures of physician information giving. Third, we examined correlations among four measures of physician PDM style: patients’ ratings, physicians’ self-ratings, observers’ ratings, and frequency measures of PDM style (Figure). A Bonferroni correction was applied to account for multiple comparisons. We considered P-values less than 0.0167 (0.05/3) or less than 0.0083 (0.05/6) to be significant for analyses with 3 or 6 comparisons, respectively (Bland & Altman, 1995). Analyses were conducted with SAS version 9.2 (Cary, NC).
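The analysis was run in SAS; the following is a minimal Python sketch of the same two ingredients, a Pearson correlation and a Bonferroni-adjusted significance threshold. The function names and the paired example scores are hypothetical and are not study data.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two sequences of equal length with at least 3 pairs")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t statistic (n - 2 degrees of freedom) for testing r against zero."""
    return r * math.sqrt((n - 2) / (1 - r * r))


def bonferroni_threshold(alpha, n_comparisons):
    """Per-comparison significance threshold after Bonferroni adjustment."""
    return alpha / n_comparisons


# Hypothetical paired scores for five visits (not study data).
observer_ratings = [6.5, 4.0, 8.0, 5.5, 7.0]
utterance_counts = [30, 12, 55, 20, 41]
r = pearson_r(observer_ratings, utterance_counts)

# Four measures of one behavior give 6 pairwise comparisons; three give 3.
threshold_six = bonferroni_threshold(0.05, 6)
threshold_three = bonferroni_threshold(0.05, 3)
```

With six comparisons the threshold is 0.05/6, about 0.0083, so a P-value of 0.005 counts as significant while a P-value of 0.02 does not.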

Results

The sample consisted of 83 patients, who visited with one of 4 oncologists or 11 surgeons. Patients’ mean age was 65.1 years, 25% were African–American, 94% were male, 44% had more than a high school education, 60% brought a companion to the visit, 87% had biopsy-proven lung cancer, and mean scores for physical and mental quality of life were 39.8 and 64.3 points, respectively, on a 1 to 100 scale. Mean scores for patients’, physicians’, and external observers’ ratings of communication, and frequency measures of communication behaviors, are presented in Table 1.

Table 1.

Patients’, physicians’ and external observers’ ratings and coding (frequency measure) of communication (N=83)

| Measure | Mean ± SD | Range |
|---|---|---|
| Patients’ Active Participation | | |
| Patients’ Ratings | 8.0 ± 1.9 | 2.3 – 10.0 |
| Physicians’ Ratings of patients (N=78) | 7.2 ± 1.7 | 2.5 – 10.0 |
| Observers’ Ratings | 6.4 ± 2.3 | 1.0 – 9.8 |
| Frequency Measure (utterances) | 25.8 ± 23.1 | 0 – 99 |
| Physicians’ Information Giving | | |
| Patients’ Ratings | 8.3 ± 2.4 | 1.0 – 10.0 |
| Observers’ Ratings | 6.9 ± 2.1 | 2.6 – 10.0 |
| Frequency Measure (utterances) | 78.3 ± 50.9 | 6 – 269 |
| Physicians’ Participatory Decision-Making Style | | |
| Patients’ Ratings | 8.1 ± 2.4 | 1.0 – 10.0 |
| Physicians’ Ratings of self (N=75) | 8.0 ± 1.6 | 3.5 – 10.0 |
| Observers’ Ratings | 5.7 ± 3.1 | 1.0 – 10.0 |
| Frequency Measure (utterances) | 5.3 ± 5.5 | 0 – 36 |

Patient participation

In our analysis comparing correlations among different measures of active patient participation (see Figure labels A, B, C and D), we found that physicians’ ratings had low and non-significant correlations with patients’ and observers’ ratings, and with frequency measures of active patient participation (Table 2). Patients’ ratings of their own active communication behaviors were not significantly correlated with observers’ ratings (r=0.15; P=0.17) or with frequency measures (r=0.22; P=0.05) at the Bonferroni-adjusted threshold. External observers’ ratings were statistically significantly correlated with the frequency measure of patient participation (r=0.63; P<0.0001, Table 2).

Table 2.

Correlations of 4 measures of patients’ participation

| | Patients’ Rating | P-value | Observers’ Rating | P-value | Frequency Measure | P-value |
|---|---|---|---|---|---|---|
| Physicians’ Rating (N=78) | r = 0.04 | 0.71 | r = 0.27 | 0.02 | r = 0.18 | 0.11 |
| Patients’ Rating (N=83) | | | r = 0.15 | 0.17 | r = 0.22 | 0.05 |
| Observers’ Rating (N=83) | | | | | r = 0.63 | <0.0001* |

\* Significant P-values are less than 0.0083 using an adjustment for multiple comparisons

Information giving

We examined correlations among three measures of physician information giving (see Figure labels E, F, and G). Patients’ ratings of physician information giving had low but statistically significant correlations with observers’ ratings and the frequency measure of physician information giving (r=0.32; P=0.003 and r=0.34; P=0.002, respectively). Observers’ ratings were modestly and statistically significantly correlated with the frequency measure of physician information giving (r=0.51; P<0.0001).

Participatory decision-making style

Patients’ ratings of physician participatory decision making style were not correlated with physicians’ ratings, observers’ ratings or frequency measures (Figure labels E, F, and H; and Table 3). Neither were physicians’ ratings significantly correlated (P>0.0083 Bonferroni-adjusted) with observers’ ratings or frequency measures. However, the frequency measure of physician participatory decision making style was modestly and statistically significantly (r=0.31; P=0.005) correlated with the external observers’ ratings.

Table 3.

Correlations of 4 measures of physicians’ participatory decision-making style

| | Patients’ Rating | P-value | Observers’ Rating | P-value | Frequency Measure | P-value |
|---|---|---|---|---|---|---|
| Physicians’ Rating (N=75) | r = 0.03 | 0.79 | r = 0.01 | 0.92 | r = 0.26 | 0.03 |
| Patients’ Rating (N=83) | | | r = 0.07 | 0.55 | r = 0.15 | 0.18 |
| Observers’ Rating (N=83) | | | | | r = 0.31 | 0.005* |

\* Significant P-values are less than 0.0083 using an adjustment for multiple comparisons

Discussion

We compared correlations of different measures of critical elements of physicians’ and patients’ communication. We found poor correlation among patients’ and physicians’ ratings of communication and poor correlation of patients’ and physicians’ ratings of communication with frequency measures of communication. However, observers’ ratings were moderately and statistically significantly correlated with frequency measures of communication. The differences in correlations among measures may be explained by the different perspectives of the patient, physician, and external raters and by differences inherent to qualitative evaluation of communication content compared with quantitative frequency measures that count communication behaviors.

Patients’ and physicians’ ratings of communication

The low correlation of patients’ and physicians’ ratings in our study may relate to physicians and patients having different goals for their communication. For example, though active patient communication would usually be considered desirable, physicians’ evaluations of active patient communication behaviors may not always be positive – especially if the physician was in a rush and thought that these patient behaviors (e.g., questions) prolonged the encounter. Also, when physicians are focused on medical tasks (e.g., diagnosis, treatment) they may use fewer patient-centered communication behaviors (e.g. exploring patients’ concerns and preferences, providing empathy and support) that are likely to be rated highly by patients. Another source of different ratings may occur if physicians and patients make assumptions when interpreting the behavior of their counterpart and these assumptions turn out to be inaccurate. Such misunderstanding could represent less effective communication. Thus, poor correlation of physicians’ and patients’ ratings may reflect their differing goals and perspectives.

Variations in ratings by participants compared with external observers

External observers bring a different perspective to assessment of communication because they were not part of the medical interaction. Patients and physicians evaluate the encounter from a participant's perspective, whereas the observer has a different viewpoint. Low correlations such as those between patients’ or physicians’ and observers’ ratings of patient participation or physician decision-making style may reflect observers’ perspectives and preferences for a different style of communication (Mazzi et al., 2013).

In addition, evaluations could differ if features of communication are not available to the external observer because they would not be found on the audio recording (e.g., eye contact, touching), because the observer would not have access to the relational history of the patient and physician or because the observer's subjective ratings were based on inaccurate assumptions. Thus, poor correlation of physicians’ and patients’ ratings with observers’ assessments may reflect the different perspectives of patients and physicians with observers and potential misunderstanding by observers of the extent of physician-patient collaboration (Kasper et al., 2012; Saba et al., 2006).

Participants’ evaluations of themselves and their partner may be subject to social desirability (e.g., halo effect) and recall biases, but observers’ ratings may be more objective. In our study, the mean values for observers’ ratings were lower than participants’ self ratings and were not subject to ceiling effects, a finding supported by previous research (van Vliet et al., 2012). It is also possible that participants were not able to accurately provide self-assessments and thus gave overestimates of their performance, which may be more common in self-assessment of interpersonal skills (Lipsett, Harris, & Downing, 2011).

Frequency measures compared to rating scales

In addition to rating communication, we used external observers to assess communication with a quantitative methodology that counted communication behaviors (Gordon et al., 2005; Street & Millay, 2001). We found that these frequency measures had statistically significant and modest correlations with observers’ ratings for each of the three measures: patient participation, physician information giving, and physician decision making style. This consistent correlation of observers’ ratings with frequency measures provides potential support for the use of observers’ ratings as an alternate measure to more labor intensive frequency measures.

Nonetheless, frequency measures of communication may differ from communication rating scales because of differences inherent to quantitative compared with qualitative measures of communication as well as differences between measures that are descriptive (e.g., what kind of information the doctor gave the patient) and those that are evaluative (e.g., how informative was the doctor). When assessing communication, the quantitative count of a behavior may not correlate with a qualitative rating of that same behavior. For example, frequency measures may overestimate the degree of informativeness of information-giving behaviors when information was repeated unnecessarily, was already known, was riddled with jargon, and was not tailored to patient literacy. As these examples have illustrated, qualitative and quantitative assessments may differ. Thus, multiple measures of communication provide more complete assessments of physician-patient communication.

Limitations

There are numerous measures of communication that we did not evaluate, but that may hold promise for evaluating communication. For example, Ambady et al. found that tone of voice was a predictor of malpractice claims (Ambady et al., 2002). Other methods include sequence analysis, which can lead to similar results when compared with cross-sectional analysis but can also show a direct relationship between physicians’ and patients’ communicative behaviors (Bensing, Verheul, Jansen, & Langewitz, 2010). Sequence analysis may be particularly valuable for examining infrequent communication behaviors (Eide, Quera, Graugaard, & Finset, 2004). Another limitation is that our sample was small and drawn from patients undergoing evaluation for lung cancer or a pulmonary nodule in one hospital. Evaluations of communication in these specialty care outpatient settings may not generalize to patients with different conditions or to those undergoing evaluation in other clinical settings. More importantly, we did not examine the relationships of these measures to actual health outcomes, which may be another approach to deciding which communication measures to use.

Conclusions

Our findings have implications for the assessment of physician-patient communication. One implication is that if observers’ ratings are highly correlated with measures from labor intensive coding systems, researchers may have a more efficient, cost-effective means to make objective assessments of communication behavior and process. On the other hand when correlations are low or modest, multiple methods of assessment may provide substantially more information.

Our findings are consistent with results in medical and other settings that perceptions of the quality of physician-patient communication often vary. Prior research has consistently reported that patients’ and physicians’ ratings of their own or the other's communication may not match measures of what was said based on an audio recording of the conversation (Cegala et al., 2004; Street, 1992). Furthermore, the perspectives of patients or physicians and third-party observers may vary (Saba et al., 2006). Our findings suggest that more research is needed to find better ways to integrate approaches to measurement of communication because there is no gold standard measure of communication. Meanwhile, researchers and evaluators would be best served to garner perspectives from multiple measures and to follow up with the respondents to understand and appreciate their perspectives.

Acknowledgments

Funding: This work was supported in part by grants # IIR-12-050 and # PPO-08-402 and by Career Development Award #RCD 97-319 to Dr. Gordon, from Department of Veterans Affairs, Office of Research and Development, Health Services Research and Development Service, and by grant # P01 HS10876 from AHRQ.

Footnotes

The views expressed in the article are those of the authors and do not necessarily represent the views of the Department of Veterans Affairs.

References

- Ambady N, Laplante D, Nguyen T, Rosenthal R, Chaumeton N, Levinson W. Surgeons' tone of voice: a clue to malpractice history. Surgery. 2002;132(1):5–9. doi: 10.1067/msy.2002.124733. [DOI] [PubMed] [Google Scholar]

- Arora NK. Interacting with cancer patients: the significance of physicians' communication behavior. Social Science and Medicine. 2003;57(5):791–806. doi: 10.1016/s0277-9536(02)00449-5. [DOI] [PubMed] [Google Scholar]

- Bensing JM, Verheul W, Jansen J, Langewitz WA. Looking for trouble: the added value of sequence analysis in finding evidence for the role of physicians in patients' disclosure of cues and concerns. Medical Care. 2010;48(7):583–588. doi: 10.1097/MLR.0b013e3181d567a5. [DOI] [PubMed] [Google Scholar]

- Blanch-Hartigan D, Hall JA, Krupat E, Irish JT. Can naive viewers put themselves in the patients' shoes?: reliability and validity of the analogue patient methodology. Medical Care. 2013;51(3):e16–21. doi: 10.1097/MLR.0b013e31822945cc. [DOI] [PubMed] [Google Scholar]

- Bland JM, Altman DG. Multiple significance tests: the Bonferroni method. BMJ. 1995;310(6973):170. doi: 10.1136/bmj.310.6973.170. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brody DS, Miller SM, Lerman CE, Smith DG, Caputo GC. Patient perception of involvement in medical care: relationship to illness attitudes and outcomes. Journal of General Internal Medicine. 1989;4(6):506–511. doi: 10.1007/BF02599549. [DOI] [PubMed] [Google Scholar]

- Cegala DJ. A study of doctors' and patients' communication during a primary care consultation: implications for communication training. Journal of Health Communication. 1997;2(3):169–194. doi: 10.1080/108107397127743. [DOI] [PubMed] [Google Scholar]

- Cegala DJ, Gade C, Lenzmeier Broz S, McClure L. Physicians' and patients' perceptions of patients' communication competence in a primary care medical interview. Health Communication. 2004;16(3):289–304. doi: 10.1207/S15327027HC1603_2. [DOI] [PubMed] [Google Scholar]

- Eide H, Quera V, Graugaard P, Finset A. Physician-patient dialogue surrounding patients' expression of concern: applying sequence analysis to RIAS. Soc.Sci.Med. 2004;59(1):145–155. doi: 10.1016/j.socscimed.2003.10.011. [DOI] [PubMed] [Google Scholar]

- Epstein RM, Franks P, Fiscella K, Shields CG, Meldrum SC, Kravitz RL, Duberstein PR. Measuring patient-centered communication in patient-physician consultations: theoretical and practical issues. Social Science and Medicine. 2005;61(7):1516–1528. doi: 10.1016/j.socscimed.2005.02.001. [DOI] [PubMed] [Google Scholar]

- Galassi JP, Schanberg R, Ware WB. The patient reactions assessment: A brief measure of the quality of the patient-provider medical relationship. Psychological Assessment. 1992;4(3):346–351. [Google Scholar]

- Gordon HS, Street RL, Jr., Kelly PA, Souchek J, Wray NP. Physician-patient communication following invasive procedures: an analysis of post-angiogram consultations. Social Science and Medicine. 2005;61(5):1015–1025. doi: 10.1016/j.socscimed.2004.12.021. [DOI] [PubMed] [Google Scholar]

- Gordon HS, Street RL, Jr., Sharf BF, Kelly PA, Souchek J. Racial differences in trust and lung cancer patients' perceptions of physician communication. Journal of Clinical Oncology. 2006;24(6):904–909. doi: 10.1200/JCO.2005.03.1955. [DOI] [PubMed] [Google Scholar]

- Gordon HS, Street RL, Jr., Sharf BF, Souchek J. Racial differences in doctors' information-giving and patients' participation. Cancer. 2006;107(6):1313–1320. doi: 10.1002/cncr.22122.

- Kaplan SH, Greenfield S, Gandek B, Rogers WH, Ware JE, Jr. Characteristics of physicians with participatory decision-making styles. Annals of Internal Medicine. 1996;124(5):497–504. doi: 10.7326/0003-4819-124-5-199603010-00007.

- Kasper J, Hoffmann F, Heesen C, Kopke S, Geiger F. MAPPIN'SDM--the multifocal approach to sharing in shared decision making. PLoS One. 2012;7(4):e34849. doi: 10.1371/journal.pone.0034849.

- Lerman CE, Brody DS, Caputo GC, Smith DG, Lazaro CG, Wolfson HG. Patients' Perceived Involvement in Care Scale: relationship to attitudes about illness and medical care. Journal of General Internal Medicine. 1990;5(1):29–33. doi: 10.1007/BF02602306.

- Lipsett PA, Harris I, Downing S. Resident self-other assessor agreement: influence of assessor, competency, and performance level. Archives of Surgery. 2011;146(8):901–906. doi: 10.1001/archsurg.2011.172.

- Mazzi MA, Bensing J, Rimondini M, Fletcher I, van Vliet L, Zimmermann C, Deveugele M. How do lay people assess the quality of physicians' communicative responses to patients' emotional cues and concerns? An international multicentre study based on videotaped medical consultations. Patient Education and Counseling. 2013;90(3):347–353. doi: 10.1016/j.pec.2011.06.010.

- Ong LM, de Haes JC, Hoos AM, Lammes FB. Doctor-patient communication: a review of the literature. Social Science and Medicine. 1995;40(7):903–918. doi: 10.1016/0277-9536(94)00155-m.

- Rimal RN. Analyzing the physician-patient interaction: an overview of six methods and future research directions. Health Communication. 2001;13(1):89–99. doi: 10.1207/S15327027HC1301_08.

- Roter DL. Patient participation in the patient-provider interaction: the effects of patient question asking on the quality of interaction, satisfaction and compliance. Health Education Monographs. 1977;5(4):281–315. doi: 10.1177/109019817700500402.

- Saba GW, Wong ST, Schillinger D, Fernandez A, Somkin CP, Wilson CC, Grumbach K. Shared decision making and the experience of partnership in primary care. Annals of Family Medicine. 2006;4(1):54–62. doi: 10.1370/afm.393.

- Street RL, Jr. Analyzing communication in medical consultations. Do behavioral measures correspond to patients' perceptions? Medical Care. 1992;30(11):976–988. doi: 10.1097/00005650-199211000-00002.

- Street RL, Jr. Active patients as powerful communicators. In: Robinson WP, Giles H, editors. The New Handbook of Language and Social Psychology. John Wiley & Sons Ltd.; West Sussex, England: 2001. pp. 541–560.

- Street RL, Jr., Gordon HS, Ward MM, Krupat E, Kravitz RL. Patient participation in medical consultations: why some patients are more involved than others. Medical Care. 2005;43(10):960–969. doi: 10.1097/01.mlr.0000178172.40344.70.

- Street RL, Jr., Millay B. Analyzing patient participation in medical encounters. Health Communication. 2001;13(1):61–73. doi: 10.1207/S15327027HC1301_06.

- van Vliet LM, van der Wall E, Albada A, Spreeuwenberg PM, Verheul W, Bensing JM. The validity of using analogue patients in practitioner-patient communication research: systematic review and meta-analysis. Journal of General Internal Medicine. 2012;27(11):1528–1543. doi: 10.1007/s11606-012-2111-8.