Abstract

Hearing loss and auditory prostheses can alter auditory processing by inducing large pitch mismatches and broad pitch fusion between the two ears. Similar to integration of incongruent inputs in other sensory modalities, the mismatched, fused pitches are often averaged across ears for simple stimuli. Here, we measured parallel effects on complex stimulus integration using a new technique based on vowel classification in five bilateral hearing aid users and seven bimodal cochlear implant users. Continua between five pairs of synthetic vowels were created by varying the first formant spectral peak while keeping the second formant constant. Comparison of binaural and monaural vowel classification functions for each vowel pair continuum enabled visualization of the following frequency-dependent integration trends: (1) similar monaural and binaural functions, (2) ear dominance, (3) binaural averaging, and (4) binaural interference. Hearing aid users showed all trends, while bimodal cochlear implant users showed mostly ear dominance or interference. Interaural pitch mismatches, frequency ranges of binaural pitch fusion, and the relative weightings of pitch averaging across ears were also measured using tone and/or electrode stimulation. The presence of both large interaural pitch mismatches and broad pitch fusion was not sufficient to predict vowel integration trends such as binaural averaging or interference. The way that pitch averaging was weighted between ears also appears to be important for determining binaural vowel integration trends. Abnormally broad spectral fusion and the associated phoneme fusion across mismatched ears may underlie binaural speech perception interference observed in hearing aid and cochlear implant users.

Electronic supplementary material

The online version of this article (doi:10.1007/s10162-016-0570-z) contains supplementary material, which is available to authorized users.

Keywords: vowel, binaural integration, fusion, pitch, hearing loss, hearing aid, cochlear implant, speech, bimodal cochlear implant

Introduction

Much progress has been made in the treatment of sensorineural hearing loss with hearing devices such as hearing aids (HAs) and cochlear implants (CIs). In particular, the new concept of bimodal CI stimulation, or combining acoustic and electric hearing from a HA and CI in opposite ears, has led to improved speech perception in noise over a CI alone for many individuals, especially for those with less severe losses (Kong et al. 2005; Dorman and Gifford 2010; Blamey et al. 2015). However, there remains significant variability in the benefit of combining hearing devices bilaterally, with some patients showing little benefit or worse performance for speech recognition compared to either ear alone (Carter et al. 2001; Walden and Walden 2005; McArdle et al. 2012; Ching et al. 2007).

Differences in hearing status and device programming can contribute to this variability in binaural benefit. Another potential factor, which has been relatively unexplored in relation to patient outcomes, is a change in central auditory processing due to experience with hearing loss and hearing devices. Hearing loss can lead to diplacusis, the perception of different pitches in the two ears for a tone of the same frequency (Albers and Wilson 1968), and has been shown to lead to cortical remapping of sound frequencies and intensities (Eggermont 2008; Cheung et al. 2009; Popescu and Polley 2010). Cochlear implants also alter the normal mapping of speech to the auditory nerve, because the sound frequencies assigned to CI electrodes during programming are mismatched with the cochlear place of stimulation of those electrodes. In the case of combined CI and HA use, spectral mismatches are also introduced between the CI stimulation in the implanted ear and acoustic hearing in the non-implanted ear.
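The size of such an electrode-to-place mismatch can be illustrated with the widely used Greenwood place-frequency function for the human cochlea. This is a minimal sketch, assuming the commonly cited human constants (A = 165.4, a = 2.1, k = 0.88) and a hypothetical electrode position; electrode positions were not measured in this study, and the function names are illustrative only.

```python
import math

# Commonly cited human constants for the Greenwood function (assumed here):
# F(x) = A * (10 ** (a * x) - k), x = fractional distance from the apex (0 to 1).
A_HZ, A_SLOPE, K = 165.4, 2.1, 0.88


def greenwood_hz(x: float) -> float:
    """Characteristic frequency (Hz) at fractional cochlear position x from the apex."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must be between 0 (apex) and 1 (base)")
    return A_HZ * (10 ** (A_SLOPE * x) - K)


def mismatch_octaves(place_hz: float, band_lo_hz: float, band_hi_hz: float) -> float:
    """Octaves between the place frequency and the geometric center of the
    electrode's allocated band (positive = place is higher than the band)."""
    return math.log2(place_hz / math.sqrt(band_lo_hz * band_hi_hz))


# Hypothetical electrode: allocated 188-313 Hz, located 40 % of the way from the apex.
place = greenwood_hz(0.40)
print(f"place ~{place:.0f} Hz, mismatch ~{mismatch_octaves(place, 188, 313):.1f} octaves")
```

Under these assumptions, an electrode assigned 188–313 Hz but located 40 % of the way from the apex would stimulate a place tuned near 1 kHz, roughly two octaves above its allocated band.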

While experience with such CI-induced mismatches can induce plastic pitch changes that reduce the mismatches over time (Reiss et al. 2007, 2008, 2011), not all individuals adapt their pitch perception, and some CI users even “mal-adapt” pitch so that mismatches increase over time (Reiss et al. 2014a). Interestingly, many bimodal CI users also have abnormally broad binaural pitch fusion: sounds that elicit different pitches in the two ears fuse over frequency differences of up to 3–4 octaves, which suggests an alternative mechanism for reducing the perception of interaural pitch mismatches (Reiss et al. 2014b). In contrast, normal-hearing listeners fuse dichotically presented sounds only when their pitches differ by small amounts, on the order of 0.1 octaves, and segregate sounds farther apart in pitch (Odenthal 1963; Van den Brink et al. 1976). Hearing-impaired listeners without CIs can also have broad fusion ranges, spanning 1 to 3 octaves (Reiss et al. 2015; submitted).

Further, this fusion can lead to averaging of the different pitches perceived in the two ears (Reiss et al. 2014b), similar to what has been reported for normal-hearing listeners on a much smaller scale (Van den Brink et al. 1976), for the visual system with incongruent inputs to the two eyes (Hillis et al. 2002; Anstis and Rogers 2012), and for auditory-visual integration of spatially incongruent auditory and visual stimuli (e.g., Binda et al. 2007). It has been proposed that cues from multiple inputs within or across modalities are averaged to reduce overall signal uncertainty due to measurement noise and gaps in information from individual inputs (Hillis et al. 2002); this benefit generally assumes that the information across inputs is matched. In the hearing-impaired auditory system, however, the two ears are often mismatched in pitch, leading to incongruent spectral information between ears. If incongruent spectral information is fused and averaged between ears, different percepts may arise under binaural compared to monaural stimulation. What are the effects of such abnormal binaural integration on perception of complex stimuli, such as speech sounds? Can abnormal binaural integration account for reduced speech perception benefit, or even interference, with two ears compared to one?
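The averaging account borrowed from other modalities is usually formalized as reliability-weighted (inverse-variance) cue combination. The sketch below illustrates that general model with hypothetical pitch values and noise levels, not data from this study: each ear's estimate is weighted by the inverse of its variance, so the fused estimate is less variable than either input, but when the ears are mismatched it lands at a pitch that neither ear reports on its own.

```python
import math


def combine_estimates(mu1: float, sd1: float, mu2: float, sd2: float) -> tuple[float, float]:
    """Reliability-weighted (inverse-variance) average of two noisy estimates.

    Returns the combined mean and its standard deviation. Each input is weighted
    by 1/variance, so the more reliable input pulls the estimate toward itself.
    """
    w1 = 1.0 / sd1 ** 2
    w2 = 1.0 / sd2 ** 2
    mu = (w1 * mu1 + w2 * mu2) / (w1 + w2)
    sd = math.sqrt(1.0 / (w1 + w2))  # never larger than min(sd1, sd2)
    return mu, sd


# Hypothetical pitch estimates, in octaves re 250 Hz, from the two ears.
left = (0.0, 0.10)   # left ear hears 250 Hz, low noise
right = (0.5, 0.20)  # right ear hears ~354 Hz, noisier
mu, sd = combine_estimates(*left, *right)
print(f"fused pitch ~{250 * 2 ** mu:.0f} Hz, sd {sd:.3f} octaves")
```

With these hypothetical numbers, the fused estimate sits one-fifth of the way from the more reliable left ear toward the right ear.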

The spectral structure of vowels, and the way vowels are classified, provide an opportunity to quantitatively measure binaural integration effects on complex spectral shape perception. Vowels are composed of harmonically spaced components at multiples of the voice fundamental frequency (F0), with local peaks in the spectrum, called formants, determined by the shape of the vocal tract; vowels are classified mainly by the peak frequencies of the first formant (F1) and second formant (F2) (Peterson and Barney 1952). Thus, if the same formants are presented to both ears but an interaural pitch mismatch is present, different formants will be perceived in each ear (essentially dichotic perception). If these incongruent formants are fused and integrated binaurally, a third set of formants, distinct from the monaurally perceived formants, may be perceived.
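A minimal sketch of this reasoning, assuming that an interaural mismatch acts as a uniform shift of perceived frequency in one ear (expressed in octaves) and that fused formants are averaged on a log-frequency scale. Both are simplifications chosen for illustration, not a model proposed in the study, and the function names are hypothetical.

```python
import math


def perceived_formant(f_hz: float, mismatch_oct: float) -> float:
    """Formant frequency as perceived by an ear whose pitch is shifted by
    mismatch_oct octaves (positive = perceived higher)."""
    return f_hz * 2 ** mismatch_oct


def fused_formant(f_left: float, f_right: float, w_left: float = 0.5) -> float:
    """Weighted average of the two monaural formant percepts on a log-frequency
    (octave) scale; w_left is the weight given to the left ear."""
    log_f = w_left * math.log2(f_left) + (1 - w_left) * math.log2(f_right)
    return 2 ** log_f


# Hypothetical case: F1 = 500 Hz reaches both ears, but the right ear's pitch
# is shifted up by half an octave relative to the left ear.
f1 = 500.0
left = perceived_formant(f1, 0.0)    # 500 Hz
right = perceived_formant(f1, 0.5)   # ~707 Hz
print(f"left {left:.0f} Hz, right {right:.0f} Hz, fused {fused_formant(left, right):.0f} Hz")
```

With equal weights, a half-octave mismatch places the fused F1 a quarter octave above the left ear's percept, at about 595 Hz, a formant that neither ear perceives alone.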

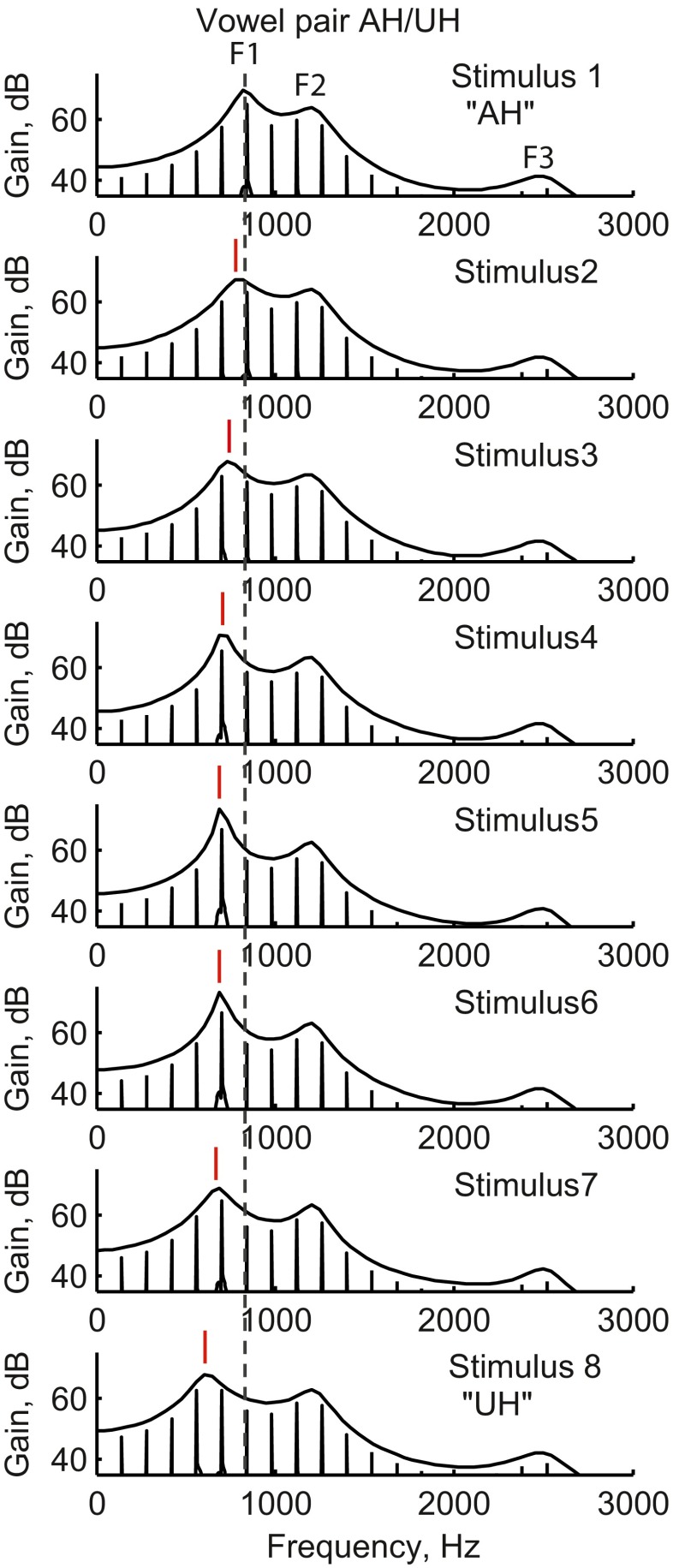

Here, we present a new technique that uses a continuum of stimuli between two exemplar synthetic vowels to probe changes in complex spectral shape perception under binaural compared to monaural stimulation. The continuum was created by varying F1 in small steps while keeping F2 and other parameters constant, as shown for an example vowel pair in Figure 1, and subjects were asked to indicate which of the two exemplars they heard. Using this technique, differences between binaural and monaural vowel classification were measured for five vowel pairs (listed in Table 1) in both bilateral HA users and bimodal CI users and compared with binaural pitch integration measured using simple stimuli.

FIG. 1.

Spectra of eight continuum vowel stimuli spanning the transition from /α/ to /Λ/ (“AH to UH”). Vertical lines in the spectra are the harmonics spaced at the 100-Hz fundamental frequency. Stimulus number increases down the rows. The vertical dashed line indicates the position of F1 for the first stimulus as a reference. Note the systematic transition of F1, the first peak in the spectrum (indicated by short vertical lines above peaks), away from this dashed line from top to bottom, i.e., from stimulus 1 to stimulus 8. In contrast, F2, the second peak in the spectrum, is constant for all stimuli.

TABLE 1.

Synthetic vowel pair parameters

| Vowel pair | F1 start (Hz) | F1 end (Hz) | Average F1 step (Hz) | F2 start (Hz) | F2 end (Hz) | Average F2 step (Hz) | Example word 1 | Example word 2 |
|---|---|---|---|---|---|---|---|---|
| 1. /α/-/Λ/ (AH-UH) | 800 | 625 | −25 | 1200 | 1200 | 0 | Hot | Hut |
| 2. /ae/-/ε/ (AE-EH) | 662 | 521 | −20 | 1786 | 1786 | 0 | Had | Head |
| 3. /ε/-/I/ (EH-IH) | 521 | 388 | −19 | 1921 | 1921 | 0 | Head | Hid |
| 4. /I/-/i/ (IH-IY) | 388 | 265 | −17.5 | 2145 | 2145 | 0 | Hid | Heed |
| 5. /Λ/-/υ/ (UH-OO) | 625 | 438 | −26.5 | 1200 | 1029 | −24.5 | Hut | Hook |

The first seven columns list the five vowel pairs and the start, end, and step size values in hertz for F1 and F2 in the continuum of synthetic vowels between the first and second vowel in the pair. The last two columns show example words for the vowels at the beginning and end of each continuum. F0 = 100 Hz and duration = 250 ms for all stimuli
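Assuming the eight stimuli in each continuum are equally spaced in F1 (the table reports average step sizes, so the actual Praat stimuli may not be exactly uniform), the continuum values follow from the endpoints as start + i × (end − start)/7. The sketch below applies this to the F1 endpoints in Table 1; the resulting step sizes approximately match the tabulated averages. The F2 co-variation of the /Λ/-/υ/ pair is omitted.

```python
# F1 start/end values (Hz) for each vowel pair, copied from Table 1.
F1_ENDPOINTS = {
    "/α/-/Λ/": (800, 625),
    "/ae/-/ε/": (662, 521),
    "/ε/-/I/": (521, 388),
    "/I/-/i/": (388, 265),
    "/Λ/-/υ/": (625, 438),
}
N_STIMULI = 8  # eight stimuli per continuum, i.e., seven steps


def continuum(start: float, end: float, n: int = N_STIMULI) -> list[float]:
    """Equally spaced values from start to end inclusive (assumed spacing)."""
    step = (end - start) / (n - 1)
    return [start + i * step for i in range(n)]


for pair, (start, end) in F1_ENDPOINTS.items():
    values = continuum(start, end)
    avg_step = (end - start) / (N_STIMULI - 1)
    print(pair, [round(v) for v in values], f"average step {avg_step:.1f} Hz")
```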

This study demonstrates the utility of a vowel continuum for measuring binaural interference and illustrates that binaural interference can occur at the phoneme level, especially in bimodal CI users with broad pitch fusion and large pitch mismatches across ears. The findings suggest that abnormal binaural spectral integration may explain some cases of binaural interference in hearing-impaired individuals.

Methods

Subjects

These studies were conducted according to the guidelines for the protection of human subjects as set forth by the Institutional Review Board (IRB) of Oregon Health & Science University (OHSU), and the methods employed were approved by that IRB. Five bilateral HA users and seven bimodal CI users were tested. For natural and synthetic vowel tests, subjects were tested with their personal HAs and/or CIs unless otherwise specified below. For pitch matching and fusion tests, subjects were tested using headphones and/or direct electric stimulation.

Bilateral HA users ranged in age from 68 to 80 (mean 70.8 ± 7.2 years); three were male, and two were female. Audiometric thresholds were generally symmetric between ears for HA users, with mean low-frequency thresholds of 72/82, 65/68, 62/63, 57/57, and 58/68 for left/right ears of HI04, HI05, HI06, HI07, and HI08, respectively (mean 65.2 ± 7.8 dB HL; averaged over 250, 500, and 1000 Hz). All HA users were tested with their own HAs. HI04, HI05, HI06, HI07, and HI08 used the Resound RITE, Oticon Alta, Phonak Naida, Phonak Certena, and Siemens Pure RITE HAs, respectively. All HAs were verified to meet target using real-ear measurements.
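As an arithmetic check, pooling the ten left- and right-ear values listed above reproduces the reported group mean and standard deviation, assuming the reported SD is the sample SD across all ten ears (the text does not state how it was computed).

```python
import statistics

# Left/right low-frequency thresholds (dB HL, mean of 250, 500, and 1000 Hz)
# for HI04-HI08, as listed in the text.
thresholds = {
    "HI04": (72, 82),
    "HI05": (65, 68),
    "HI06": (62, 63),
    "HI07": (57, 57),
    "HI08": (58, 68),
}

# Pool both ears of every subject (10 values).
pooled = [t for pair in thresholds.values() for t in pair]
mean = statistics.mean(pooled)
sd = statistics.stdev(pooled)  # sample standard deviation (n - 1 denominator)
print(f"mean {mean:.1f} ± {sd:.1f} dB HL")
```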

Bimodal CI users ranged in age from 31 to 82 (mean 62.0 ± 16.5 years); five were male, and two were female. Mean low-frequency thresholds in the non-implanted ear were 65, 75, 58, 48, 70, 85, and 82 dB HL for CI20, CI25, CI30, CI32, CI33, CI56, and CI85, respectively (mean 69.0 ± 13.0 dB HL; averaged over 250, 500, and 1000 Hz). All CI subjects had Cochlear CI24RE internal devices and used an N5 or N6 speech processor with the ACE processing strategy, essentially an envelope-based, high-pulse-rate, peak- or channel-picking strategy. Subjects CI20, CI25, and CI30 were tested with a lab Freedom processor; subjects CI32 and CI33 were tested with their personal N5 processors, and subjects CI56 and CI85 were tested with their personal N6 processors.
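In a peak-picking strategy such as ACE, only the subset of channels with the largest envelope amplitudes is stimulated in each frame. The sketch below shows that selection step only, as a simplified "n-of-m" illustration: the filterbank, compression, and pulse timing are omitted, the channel values are hypothetical, and the 0-based low-to-high channel indexing is an assumption (in Table 3, electrode 22 carries the lowest-frequency band). It is not Cochlear's implementation.

```python
def select_n_of_m(envelopes: list[float], n: int) -> list[int]:
    """Indices of the n channels with the largest envelope amplitudes in one
    analysis frame, returned in channel order (simplified n-of-m selection)."""
    if n <= 0:
        return []
    ranked = sorted(range(len(envelopes)), key=lambda ch: envelopes[ch], reverse=True)
    return sorted(ranked[:n])


# Hypothetical 22-channel frame (arbitrary units), indexed 0 = lowest-frequency
# channel; energy peaks on channels 2-4 and 10-11.
frame = [0.1] * 22
for ch, amp in {2: 0.6, 3: 0.9, 4: 0.7, 10: 0.5, 11: 0.8}.items():
    frame[ch] = amp

print(select_n_of_m(frame, 4))
```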

One of the CI subjects, CI20, did not regularly wear a HA in his non-implanted ear and was tested with a lab Oticon 380P. Another CI subject, CI30, did not regularly wear his Phonak Naida HA but brought it in for testing. The remaining subjects were tested with their own HAs; CI32 and CI33 used Phonak Naida HAs, while CI25, CI56, and CI85 used Siemens DME ITE, Rexton, and Resound Enzo HAs, respectively. Note that both CI32 and CI33 had frequency shifting enabled above 1.5 kHz on their Phonak Naida HAs. The effects of frequency shifting on the synthetic vowel classification experiment are minimal, because the first formant, the primary cue for classifying each pair, is below 1 kHz, and the one pair with a varying F2 (/Λ/-/υ/) stays below 1.5 kHz (Table 1). Small effects may occur in the natural vowel identification experiment, where the second formant also varies and can sometimes be above 1.5 kHz. All of these HAs were verified to meet target up to 1500–2000 Hz using real-ear measurements.

All subjects were screened for cognitive deficits using the Mini-Mental State Examination (MMSE; Folstein et al. 1975; Souza et al. 2007), with a minimum required score of 25 out of 30. All subjects had at least 1 year of experience with their current hearing devices, except CI85, an experienced bimodal CI user who had recently switched to a Resound Enzo HA and had 3 weeks of experience with it. All subjects were native speakers of American English.

Experiment 1: Vowel Classification Under Binaural Versus Monaural Stimulation

General Stimulus Presentation

Stimuli were presented in the free field in a double-walled sound-treated booth (IAC). A computer sound card (ESI Juli), programmable attenuators (TDT), a mixer, and an amplifier (Crown D75A) were used to present the stimuli via a loudspeaker (Infinity). The listener was positioned 1 m from and facing the loudspeaker, and stimulus levels were measured to be between 60 and 65 dB SPL.

Subjects were tested on each vowel pair in the binaural condition and in both monaural conditions. For binaural testing, subjects wore their hearing devices in both ears. For monaural testing of each ear, subjects wore their hearing device in the test ear, while the contralateral ear was plugged and muffed. In each run, subjects were tested on a single vowel pair and ear condition, with the order of vowel pairs and ear conditions randomized.

Generation of Synthetic Vowel Stimuli

Five synthetic vowel sets were created corresponding to vowel pairs with similar F2 in the F1–F2 vowel space for American English vowels, such as /ae/ (“had”) versus /ε/ (“head”). Each set consisted of eight stimuli sampled from a continuum of F1 values between the exemplars of the two vowels, while generally holding F2 constant, as shown for one example vowel pair in Figure 1. The F1 and F2 start/end values and step sizes are shown in Table 1. The exception was /Λ/ (“hut”) versus /υ/ (“hook”), where F2 was co-varied with F1 to accommodate the larger F2 difference between exemplars.

Praat Vowel Editor (Praat version 5.2.10, www.praat.org) was used to create these synthetic vowel sets. The higher formants (F3–F4) were kept constant. The fundamental frequency (F0) and stimulus duration were fixed at 100 Hz and 250 ms, respectively. A low F0 was used to reduce harmonic spacing and more densely sample the formants in the frequency domain, reducing fluctuations in bandwidth and harmonic number along the continuum.
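The Praat vowel editor is not reproduced here. As a rough, self-contained sketch of the same source-filter idea, the code below builds a 250-ms vowel by additive synthesis: harmonics of F0 = 100 Hz weighted by a cascade of formant resonances. The resonance model, the bandwidths, and the F3/F4 values are assumptions chosen for illustration. The sketch also makes the point about low F0 concrete: because the harmonics sit at fixed multiples of F0, shifting F1 along the continuum changes the relative amplitudes of the harmonics near the peak rather than their frequencies, and a 100-Hz spacing places several harmonics under each formant.

```python
import math

# Assumed F3/F4 values and formant bandwidths (Hz); the study's Praat settings are not given.
F3_HZ, F4_HZ = 2500.0, 3500.0
BANDWIDTHS_HZ = (80.0, 100.0, 150.0, 200.0)


def formant_gain(f: float, formants: list[tuple[float, float]]) -> float:
    """Magnitude response at f of a cascade of two-pole resonances. Each formant
    is (center Hz, bandwidth Hz); each stage has unit gain at 0 Hz and a peak of
    roughly center/bandwidth near its center frequency."""
    gain = 1.0
    for fc, bw in formants:
        gain *= fc ** 2 / math.sqrt((fc ** 2 - f ** 2) ** 2 + (bw * f) ** 2)
    return gain


def harmonic_amplitudes(f1: float, f2: float, f0: float = 100.0, f_max: float = 5000.0):
    """Frequencies and amplitudes of the harmonics of f0 up to f_max."""
    formants = list(zip((f1, f2, F3_HZ, F4_HZ), BANDWIDTHS_HZ))
    harmonics = [k * f0 for k in range(1, int(f_max // f0) + 1)]
    return harmonics, [formant_gain(h, formants) for h in harmonics]


def synth_vowel(f1: float, f2: float, f0: float = 100.0, dur_s: float = 0.25,
                fs: int = 16000) -> list[float]:
    """Sum of harmonics weighted by the formant envelope, normalized to a peak of 1."""
    harmonics, amps = harmonic_amplitudes(f1, f2, f0)
    n = int(round(dur_s * fs))
    wave = [sum(a * math.sin(2 * math.pi * h * t / fs) for h, a in zip(harmonics, amps))
            for t in range(n)]
    peak = max(abs(v) for v in wave)
    return [v / peak for v in wave]


# First stimulus of the /α/-/Λ/ continuum (F1 = 800 Hz, F2 = 1200 Hz; Table 1).
wave = synth_vowel(800, 1200)
```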

Synthetic Vowel Response Measurement Procedure

Before testing, subjects were given a familiarization program so that they could play and listen to each vowel pair. Subjects were also provided with a printed reference sheet with example words for each exemplar vowel in the vowel pair (such as “hid” and “heed” for the /I/-/i/ pair). One subject, CI56, had poor visual acuity due to Usher’s syndrome and was instead given verbal examples with the example words in a sentence.

Subjects were tested on vowel classification of these continuum stimuli in a two-alternative forced-choice task. During each run, all 8 continuum stimuli from one vowel pair were presented in random sequence, 10 times each, for a total of 80 trials. On each trial, the subject was given only two choices on a touchscreen, representing the two exemplar vowels of the pair, and was asked to indicate which vowel was heard. No feedback was provided during testing.
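The run structure (8 stimuli × 10 repeats = 80 trials in random order) can be sketched as follows. Using a full shuffle of all 80 trials, rather than blocked randomization, is an assumption, since the text says only that stimuli were presented in random sequence; the function name is hypothetical.

```python
import random
from typing import Optional


def make_run(n_stimuli: int = 8, n_repeats: int = 10, seed: Optional[int] = None) -> list[int]:
    """Randomized list of stimulus numbers (1..n_stimuli), each appearing
    n_repeats times, for one run of the two-alternative task."""
    trials = [s for s in range(1, n_stimuli + 1) for _ in range(n_repeats)]
    random.Random(seed).shuffle(trials)
    return trials


run = make_run(seed=1)
print(len(run), run[:10])
```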

Synthetic Vowel Response Analysis

Vowel classification functions were constructed from the averaged responses to each vowel pair and condition, with 0 assigned to responses of the higher-F1 exemplar and 1 assigned to responses of the lower-F1 exemplar, as shown for two example subjects in Figure 2. Statistical significance of differences in synthetic vowel perception among the three ear conditions was evaluated using non-parametric bootstrap estimation of 95 % confidence intervals at the 25 %, 50 %, and 75 % points on the function (Efron and Tibshirani 1993; Wichmann and Hill 2001). Binaural vowel trend categories were defined from these bootstrap estimates as follows (individual examples of each category are shown in Fig. 3): No effect = similar monaural and binaural functions, i.e., no significant differences between any of the functions; Dominance = similarity of the binaural function to one ear’s monaural function, i.e., no significant difference between the binaural function and one monaural function (interpreted as the dominant ear) combined with a significant difference between the binaural function and the other monaural function (interpreted as the non-dominant ear); Averaging = significant differences between the binaural function and both monaural functions, with the binaural function intermediate between the two monaural functions on the stimulus axis; Interference = significant differences between the binaural function and both monaural functions, with the binaural function outside the range of the two monaural functions and showing a shallower slope and/or larger bias than either monaural condition, indicative of worsened discrimination performance. Two functions were considered similar if their bootstrap confidence intervals (the horizontal lines in Fig. 2) overlapped for at least two of the three points, and different if they did not. Generally, the classifications were unambiguous, especially for the HA users. Ambiguous classifications, such as transitions from dominance by one ear to dominance by the other, are indicated in Tables 2 and 3.
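The bootstrap and the overlap rule can be sketched as below. This is a simplified illustration, not the study's analysis code: it bootstraps the empirical (unfitted) classification function rather than a fitted psychometric function, locates the 25 %, 50 %, and 75 % points by linear interpolation, uses 2.5th/97.5th percentile intervals, and checks "interference" only by whether the binaural 50 % point falls outside the monaural range (slope and bias are not tested). All function names, and the noise-free example listener, are hypothetical.

```python
import random

LEVELS = (0.25, 0.50, 0.75)


def crossing(props, level):
    """Stimulus position (1-based) at which the proportion of lower-F1 responses
    first reaches `level`, by linear interpolation; NaN if it never does."""
    if props[0] >= level:
        return 1.0
    for i in range(1, len(props)):
        if props[i] >= level:
            p0, p1 = props[i - 1], props[i]
            return i + (level - p0) / (p1 - p0)  # between stimulus i and i + 1
    return float("nan")


def percentile_interval(values, lo=2.5, hi=97.5):
    """Nearest-rank percentile interval of the sorted bootstrap values."""
    v = sorted(values)
    if not v:
        return (float("nan"), float("nan"))
    return v[round(lo / 100 * (len(v) - 1))], v[round(hi / 100 * (len(v) - 1))]


def bootstrap_ci(responses, n_boot=2000, seed=0):
    """responses[s] holds the 0/1 responses to stimulus s + 1 (1 = lower-F1 vowel).
    Trials are resampled with replacement within each stimulus."""
    rng = random.Random(seed)
    samples = {lv: [] for lv in LEVELS}
    for _ in range(n_boot):
        props = [sum(rng.choice(r) for _ in r) / len(r) for r in responses]
        for lv in LEVELS:
            x = crossing(props, lv)
            if x == x:  # NaN != NaN, so resamples that never reach lv are skipped
                samples[lv].append(x)
    return {lv: percentile_interval(samples[lv]) for lv in LEVELS}


def similar(ci_a, ci_b):
    """'Similar' if the intervals overlap at two or more of the three levels."""
    return sum(ci_a[lv][0] <= ci_b[lv][1] and ci_b[lv][0] <= ci_a[lv][1]
               for lv in LEVELS) >= 2


def classify(binaural, left, right):
    """Assign a binaural trend category from three sets of bootstrap intervals."""
    sim_l, sim_r = similar(binaural, left), similar(binaural, right)
    if sim_l and sim_r and similar(left, right):
        return "no effect"
    if sim_l != sim_r:
        return "left dominance" if sim_l else "right dominance"
    if not sim_l and not sim_r:
        def mid(ci):
            return sum(ci[0.5]) / 2  # center of the 50 % interval
        lo, hi = sorted((mid(left), mid(right)))
        return "averaging" if lo < mid(binaural) < hi else "interference"
    return "ambiguous"


def step_responses(first_lower, n_stim=8, n_rep=10):
    """Hypothetical noise-free listener: 'lower-F1' from stimulus first_lower + 1 on."""
    return [[0] * n_rep if s < first_lower else [1] * n_rep for s in range(n_stim)]


left = bootstrap_ci(step_responses(3), n_boot=200)
right = bootstrap_ci(step_responses(6), n_boot=200)
both = bootstrap_ci(step_responses(4), n_boot=200)
print(classify(both, left, right))
```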

FIG. 2.

Responses for all five vowel pairs as a function of continuum stimulus position in two representative subjects: a bilateral hearing aid subject and a bimodal cochlear implant subject. Each row represents a different vowel pair, successively /α/-/Λ/ (“AH to UH”), /ae/-/ε/ (“AE to EH”), /ε/-/I/ (“EH to IH”), /I/-/i/ (“IH to IY”), and /Λ/-/υ/ (“UH to OO”). A1–A5 Bilateral hearing aid subject. B1–B5 Bimodal cochlear implant subject. Dash-dot and dashed lines indicate the monaural right and left ear conditions, respectively, and solid lines indicate the binaural conditions. Symbols indicate the fitted 25 %, 50 %, and 75 % points on the vowel response function; these values are also listed as text at the side of the figure, from top to bottom, for the binaural, right/HA, and left/CI conditions. The horizontal lines for each point indicate the 5 %–95 % confidence intervals obtained by bootstrap estimation.

FIG. 3.

Examples of the four types of binaural spectral integration observed in this study. A Normal-hearing (NH) subject with normal classification of the /α/-/Λ/ pair, with no significant differences between monaural and binaural conditions. B Better-ear dominance (by the CI ear) in a bimodal CI + HA subject with the /ε/-/I/ pair. C Binaural averaging in a bilateral HA subject with the /Λ/-/υ/ pair. D Binaural interference in a CI + HA subject with the /I/-/i/ pair, with two repeats shown for each condition. Dash-dot and dashed lines indicate the monaural right and left ear conditions, respectively, and solid lines indicate the binaural conditions. Symbols indicate the fitted 25 %, 50 %, and 75 % points on the vowel response function. The horizontal lines for each point indicate the 5 %–95 % confidence intervals obtained by bootstrap estimation.

TABLE 2.

Binaural response types by subject and vowel

| Subject ID | /α/-/Λ/ | /ae/-/ε/ | /ε/-/I/ | /I/-/i/ | /Λ/-/υ/ |
|---|---|---|---|---|---|
| HI04 | Avg | L Dom | * | Avg | 0 |
| HI05 | 0 | 0 | 0 | 0 | 0 |
| HI06 | R Dom | 0 | Intf | 0 | 0 |
| HI07 | 0 | L Dom | L Dom | 0 | 0 |
| HI08 | Intf | Avg | R Dom | R Dom | Avg |
| CI20 | CNT | CNT | CNT | Intf | HA Dom |
| CI25 | CI Dom | 0 | CI Dom | Intf | – |
| CI30 | Intf | Intf | Intf | Intf | Intf |
| CI32 | HA Dom | 0 | 0 | 0 | HA Dom |
| CI33 | Dom** | Avg | 0 | 0 | 0 |
| CI56 | 0 | CI Dom | 0 | *Fac | CI Dom |
| CI85 | CI Dom | 0 | HA Dom | Intf | CI Dom |

The first column lists the subject, and columns 2–6 list the five vowel pairs. Notations are as follows: “0” = all curves were similar, i.e., showed no effect of listening condition; “Dom” = dominance by one ear (indicated as R or L for bilateral HA users, or HA or CI ear for bimodal CI users); “Avg” = averaging between the ears; “Intf” = interference between the ears; “–” = no data obtained; and “CNT” = could not test because the subject could not discriminate the vowel pair. “*” indicates a shift in dominance from one ear to the other, rather than true averaging or dominance by a single ear; this data point is not included in the summary data in Figure 4. “Dom**” indicates dominance switched between HA and CI ear across multiple trials. “*Fac” indicates transition from dominance by HA to dominance by CI, which results in a net facilitation in slope (improved performance)

TABLE 3.

Pitch and fusion range results by subject and frequency region/vowel

| Subject ID | Reference tone (Hz) or electrode (allocated band, Hz) | Pitch match (Hz) | Fusion range, Hz (octaves) | Vowel pair | Vowel integration pattern |
|---|---|---|---|---|---|
| HI04 | 420 | 388 | 125–460 (1.9) | /ε/-/I/ | Transition |
| HI04 | 707 | 717 | 350–1142 (1.7) | /α/-/Λ/ | Averaging |
| HI07 | 420 | 420 | 391–454 (0.2) | /ε/-/I/ | L dominance |
| HI07 | 707 | 777 | 622–3363 (2.4) | /α/-/Λ/ | No effect |
| HI08 | 420 | 594 | 223–550 (1.3) | /ε/-/I/ | R dominance |
| HI08 | 707 | 872 | 227–1540 (2.8) | /α/-/Λ/ | Interference |
| CI20 | 22 (188–313) | 420 | 250–1000 (2.0) | /I/-/i/ | Interference |
| CI20 | 20 (438–563) | – | 420–500 (0.25) | /Λ/-/υ/ | HA dominance |
| CI25 | 22 (188–313) | 167 | 125–669 (2.4) | /I/-/i/ | Interference |
| CI25 | 21 (313–438) | 176 | 125–752 (2.6) | /ε/-/I/ | CI dominance |
| CI25 | 20 (438–563) | 189 | 125–895 (2.8) | /ε/-/I/ | CI dominance |
| CI25 | 19 (563–688) | 210 | 125–1195 (3.3) | /ae/-/ε/ | No effect |
| CI25 | 18 (688–813) | 193 | 125–2300 (4.2) | /α/-/Λ/ | CI dominance |
| CI30 | 22 (188–313) | 274 | 250–575 (1.2) | /I/-/i/ | Interference |
| CI30 | 21 (313–438) | 433 | 387–840 (1.1) | /ε/-/I/ | Interference |
| CI30 | 20 (438–563) | 547 | 398–821 (1.0) | /Λ/-/υ/ | Interference |
| CI30 | 19 (563–688) | 849 | 594–1000 (0.8) | /ae/-/ε/ | Interference |
| CI32 | 22 (188–313) | >2000 | 176–325 (0.9) | /I/-/i/ | No effect |
| CI32 | 21 (313–438) | 313 | 387–1592 (2.0) | /ε/-/I/ | No effect |
| CI32 | 20 (438–563) | – | 250–2000 (3.0) | /Λ/-/υ/ | HA dominance |
| CI32 | 19 (563–688) | 460 | 169–2000 (3.6) | /ae/-/ε/ | No effect |
| CI32 | 18 (688–813) | 440 | 607–2000 (1.7) | /α/-/Λ/ | HA dominance |
| CI33 | 22 (188–313) | 452 | 125–1414 (3.5) | /I/-/i/ | No effect |
| CI33 | 21 (313–438) | 512 | 125–1414 (3.5) | /ε/-/I/ | No effect |
| CI33 | 20 (438–563) | 622 | 125–1414 (3.5) | /Λ/-/υ/ | No effect |
| CI33 | 19 (563–688) | 942 | 125–1414 (3.5) | /ae/-/ε/ | Averaging |
| CI33 | 18 (688–813) | 1227 | 125–1414 (3.5) | /α/-/Λ/ | HA/CI dominance |
| CI56 | 22 (188–313) | 187 | 125–473 (1.9) | /I/-/i/ | Transition/facilitation |
| CI56 | 21 (313–438) | 210 | 125–420 (1.7) | /ε/-/I/ | No effect |
| CI56 | 20 (438–563) | 204 | 125–420 (1.7) | /Λ/-/υ/ | CI dominance |
| CI56 | 19 (563–688) | 190 | 125–500 (2.0) | /ae/-/ε/ | CI dominance |
| CI56 | 18 (688–813) | 196 | 133–707 (2.4) | /α/-/Λ/ | No effect |
| CI85 | 22 (188–313) | 398 | 140–420 (1.6) | /I/-/i/ | Transition/interference |
| CI85 | 21 (313–438) | 359 | 148–325 (1.1) | /ε/-/I/ | HA dominance |
| CI85 | 20 (438–563) | 447 | 148–353 (1.3) | /Λ/-/υ/ | CI dominance |
| CI85 | 19 (563–688) | 390 | 148–353 (1.3) | /ae/-/ε/ | No effect |
| CI85 | 18 (688–813) | 461 | 148–500 (1.8) | /α/-/Λ/ | CI dominance |

Columns 1 and 2 list the subject and the reference tone frequency or electrode tested. For electrodes, the frequencies (in Hz) allocated to that electrode by the CI speech processor are shown in parentheses. Columns 3 and 4 show the pitch match and fusion range for the given subject and reference stimulus; fusion ranges are given in Hz, with fusion range bandwidths in octaves in parentheses. Columns 5 and 6 show the vowel pair whose F1 continuum fell within the frequency range of the reference tone or electrode frequency allocation, and the vowel integration pattern observed for that subject and vowel pair.
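To see which electrodes carry each F1 continuum, the allocated bands in Table 3 can be intersected with the F1 ranges in Table 1. In every row of Table 3, the listed electrode is one of the electrodes this sketch returns for the paired vowel. The text does not state how a single vowel pair was chosen when a continuum spans two electrodes, and the sketch does not attempt to reproduce that choice; the function names are hypothetical.

```python
# Frequency allocations (Hz) of electrodes 22-18, as listed in Table 3.
ELECTRODE_BANDS = {22: (188, 313), 21: (313, 438), 20: (438, 563),
                   19: (563, 688), 18: (688, 813)}
# F1 continuum ranges (Hz) for each vowel pair, from Table 1, as (low, high).
F1_RANGES = {"/α/-/Λ/": (625, 800), "/ae/-/ε/": (521, 662), "/ε/-/I/": (388, 521),
             "/I/-/i/": (265, 388), "/Λ/-/υ/": (438, 625)}


def overlap_hz(a, b):
    """Width in Hz of the intersection of two (low, high) ranges; 0 if disjoint."""
    return max(0, min(a[1], b[1]) - max(a[0], b[0]))


def electrodes_for(pair):
    """(electrode, overlap in Hz) for every electrode whose band overlaps the
    pair's F1 continuum, largest overlap first."""
    f1 = F1_RANGES[pair]
    hits = [(e, overlap_hz(f1, band)) for e, band in ELECTRODE_BANDS.items()]
    return sorted((h for h in hits if h[1] > 0), key=lambda h: -h[1])


for pair in F1_RANGES:
    print(pair, electrodes_for(pair))
```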

Natural Vowel Response Measurement Procedure and Analysis

The CI subjects were also tested on identification of nine natural vowels, recorded in an /h/-vowel-/d/ context and spoken by 10 male and 10 female talkers (Hillenbrand et al. 1995), for a total of 180 vowel tokens.

Before testing, subjects were given a familiarization program so that they could play and listen to five different male speaker exemplars of each vowel. Once familiarized, subjects were tested on vowel classification in a nine-alternative, forced-choice task. During each run, all 180 stimuli were presented in random sequence. At each trial, subjects were asked to indicate on a touchscreen which vowel was heard. No feedback was provided during testing.

Confusion matrices were generated for each condition by adding up the number of times a specific vowel was perceived for each vowel presented, with responses on the diagonal indicating correct responses where the vowel perceived matched the vowel presented and responses off the diagonal indicating incorrect responses.
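The tallying described above can be sketched as follows. The vowel labels and responses are hypothetical (the study used nine vowels and 180 tokens per run), and percent correct is taken as the diagonal total over all responses.

```python
def confusion_matrix(trials, labels):
    """Rows = vowel presented, columns = vowel perceived; entries are counts.
    trials is an iterable of (presented, perceived) label pairs."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for presented, perceived in trials:
        matrix[index[presented]][index[perceived]] += 1
    return matrix


def percent_correct(matrix):
    """Diagonal (correct) responses as a percentage of all responses."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return 100.0 * correct / total


# Hypothetical responses for three vowels only.
labels = ["had", "head", "hid"]
trials = [("had", "had"), ("had", "head"), ("head", "head"),
          ("hid", "hid"), ("hid", "head")]
m = confusion_matrix(trials, labels)
for label, row in zip(labels, m):
    print(f"{label:>5}", row)
print(f"{percent_correct(m):.0f}% correct")
```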

Experiment 2: Interaural Pitch, Fusion Range, and Fusion Pitch Measurements

Stimuli

All stimuli were presented via computer to control electric and acoustic stimulus presentation. Electric stimuli were delivered directly to the CI instead of through the CI processor microphone, and acoustic stimuli were delivered via headphones instead of through HAs. For bimodal CI users, electric stimuli were delivered to the CI using NIC2 cochlear implant research software and hardware (Cochlear). Stimulation of each electrode followed the same protocol used in previous studies in the laboratory (Reiss et al. 2014a, b), such that each stimulus consisted of a pulse train of 25 μs biphasic pulses presented at 1200 pps with a total duration of 500 ms, with electrode ground set to monopolar stimulation. For both bilateral HA and bimodal CI users, acoustic stimuli were delivered using an ESI Juli sound card, TDT PA5 digital attenuator and HB7 headphone buffer, and Sennheiser HD-25 headphones.

Electric stimuli and acoustic tones were set to “medium loud and comfortable” levels corresponding to 6 or “most comfortable” on a visual loudness scale from 0 (no sound) to 10 (too loud). Loudness was balanced sequentially across all tone frequencies and/or electrodes both within and across ears. Tone frequencies that could not be presented loud enough to be considered a medium loud and comfortable level, due to the limited frequency range of residual hearing in hearing-impaired ears, were excluded. In addition, for bilateral HA users, tone frequencies were screened to be above the subject’s binaural beat range, which will vary with hearing loss and interaural phase difference (IPD) sensitivity; the lower limit of the binaural beat frequency range was determined using a rapid, adaptive IPD test (Grose and Mamo 2010).

Interaural Pitch Matching

A two-interval, two-alternative forced-choice constant-stimulus procedure was used to obtain pitch matches. One interval contained the reference stimulus (an electric pulse train to a single electrode for a CI user or an acoustic tone for a HA user), and the other interval contained a comparison acoustic tone delivered to the contralateral ear. All stimuli were 500 ms in duration and separated by a 500-ms interstimulus interval, with interval order randomized. The reference stimulus was held constant, while the comparison tone frequency was varied in quarter-octave steps within the residual hearing range between 125 and 4000 Hz and presented in pseudorandom sequence across trials to reduce context effects (Reiss et al. 2012). On each trial, the subject was asked to indicate which interval had the higher pitch. Each frequency was presented at least six times. Pitch matches were computed as the 50 % point on the psychometric function generated from the average of the responses at each acoustic tone frequency.
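The text does not specify whether the 50 % point was obtained by curve fitting or by interpolation. A minimal sketch, assuming linear interpolation on a log2-frequency axis between the two comparison tones that bracket 50 %, is shown below; the response proportions are hypothetical, and the function name is illustrative.

```python
import math
from typing import Optional

# Comparison tones in quarter-octave steps from 125 to 4000 Hz (21 frequencies);
# in practice only tones within the listener's residual hearing range were used.
COMPARISON_HZ = [125 * 2 ** (k / 4) for k in range(21)]


def pitch_match(freqs_hz: list[float], p_higher: list[float]) -> Optional[float]:
    """50 % point of the psychometric function.

    freqs_hz: comparison tone frequencies (ascending).
    p_higher: proportion of trials on which the comparison tone was judged higher.
    Returns None if the function never crosses 0.5 from below.
    """
    pts = list(zip(freqs_hz, p_higher))
    for (f0, p0), (f1, p1) in zip(pts, pts[1:]):
        if p0 < 0.5 <= p1:
            frac = (0.5 - p0) / (p1 - p0)
            return 2 ** (math.log2(f0) + frac * (math.log2(f1) - math.log2(f0)))
    return None


# Hypothetical responses for comparison tones from 250 to 1000 Hz.
freqs = COMPARISON_HZ[4:13]
p = [0.0, 0.0, 0.1, 0.2, 0.4, 0.6, 0.9, 1.0, 1.0]
match = pitch_match(freqs, p)
print(f"pitch match ~{match:.0f} Hz")
```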

Fusion Range Measurement

A five-alternative forced-choice task was used to measure the frequency ranges over which dichotic pitches were fused (Reiss et al. 2014b). In each trial, the comparison stimulus was presented simultaneously with the reference stimulus for 1500 ms. Subjects were instructed to first determine whether they heard one or two sounds. If they heard only one and the same sound in both ears, they were instructed to choose “Same.” If the subject could hear a different sound in each ear, then the subject was instructed to choose which ear had the higher pitch. Generally, the subject was only able to determine which ear was higher in pitch if the stimuli to the two ears were not fused, and one ear could be identified as having the higher pitch and the other ear as having the lower pitch. As in the pitch matching procedure, a reference stimulus was held constant, while comparison stimuli were varied across trials in pseudorandom sequence. Fusion ranges were calculated as the comparison tone frequency range over which one sound was heard more than 50 % of the time.
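The fusion-range definition, and the octave bandwidths reported in Table 3, can be sketched as follows. As simplifications, the sketch takes the lowest and highest tested frequencies at which "Same" exceeded 50 %, without interpolating between frequencies and without requiring the fused frequencies to be contiguous; some endpoints in Table 3 (e.g., 460 Hz) are not quarter-octave values, so the study's procedure evidently differed in detail. The response proportions are hypothetical.

```python
import math


def octaves(lo_hz: float, hi_hz: float) -> float:
    """Width of a frequency range in octaves."""
    return math.log2(hi_hz / lo_hz)


def fusion_range(freqs_hz, p_same, criterion=0.5):
    """Lowest and highest comparison frequencies at which 'Same' (one fused
    sound) was reported on more than `criterion` of trials, plus the width in
    octaves. Returns None if no frequency meets the criterion."""
    fused = [f for f, p in zip(freqs_hz, p_same) if p > criterion]
    if not fused:
        return None
    lo, hi = min(fused), max(fused)
    return lo, hi, octaves(lo, hi)


freqs = [250 * 2 ** (k / 4) for k in range(9)]         # 250-1000 Hz, quarter-octave steps
p_same = [0.1, 0.6, 0.8, 1.0, 0.9, 0.7, 0.3, 0.0, 0.0]  # hypothetical proportions
print(fusion_range(freqs, p_same))
print(round(octaves(125, 460), 1))  # HI04, 420-Hz reference in Table 3
```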

Fusion Pitch Matching

A two-interval, two-alternative task was used to measure the pitch of a fused electrode-tone pair for bimodal CI users only (Reiss et al. 2014b). This procedure was similar to that for interaural pitch matches, except that the reference electrode was presented simultaneously with a tone within the fusion range in the contralateral, non-implanted ear, and this fused electrode-tone pair was compared with a sequentially presented tone in the non-implanted ear. All stimuli were 500 ms in duration and separated by a 500-ms interstimulus interval, with interval order randomized. Subjects were asked to indicate whether the fused electrode-tone pair or the comparison tone was higher in pitch. The comparison tone frequencies were varied in a pseudorandom sequence, and the fusion pitch match was calculated as in the pitch matching procedure.

Results

Experiment 1: Vowel Classification Under Binaural Versus Monaural Stimulation

Frequency-Specific Trends in Vowel Classification Functions

Example vowel classification functions generated from the responses to the five synthetic vowel pairs are shown for two subjects in Figure 2. The first column shows results for a bilateral HA user, and the second column shows results for a bimodal CI user. Each row represents a different vowel pair. Each vowel classification function shows the percent of the time the subject chose the second vowel in the pair as a function of stimulus position in the F1 continuum from the first vowel (stimulus 1) to the second vowel (stimulus 8). Vowel classification functions were generally sigmoidal. For example, for the vowel pair /α/ (“AH” or “hot”) transitioning to /Λ/ (“UH” or “hut”) in Figure 2A1, the percent of responses as /Λ/ (UH) increased from stimulus 1 at left to stimulus 8 at right as the stimulus became gradually less like /α/ (AH) and more like /Λ/ (UH). All vowel pairs were ordered from high to low F1, so that the functions indicate the percent of the time the second, lower F1 vowel was heard. Note that a deviation of this curve from the middle indicates a classification bias, whereas a decreased slope indicates poorer vowel spectral shape discrimination.

For the bilateral HA user shown in Figure 2A, each panel shows vowel classification functions collected with the left ear (blue dashed lines, squares), right ear (red dash-dot lines, triangles), and both ears (black solid lines, circles). For the /α/-/Λ/ (AH to UH) vowel pair, the left ear function was approximately centered on the continuum, with a 50 % point at stimulus 4.2 (Fig. 2A1, blue dashed line/squares). In contrast, the right ear function was shifted rightward to a 50 % point of 6.1 on the stimulus axis, indicating a bias toward the first vowel, i.e., toward higher F1 (Fig. 2A1, red dash-dot line/triangles). The binaural function lay between the two monaural functions and resembled their average, with an intermediate bias and a 50 % point of 5.4 (Fig. 2A1, black solid line/circles).

Other vowel pairs showed different trends within the same subject, indicating that the type of binaural integration depends on the frequency region of the F1 transition. The binaural function for the vowel pair /ae/-/ε/ (“AE” to “EH”) showed dominance by the left ear (Fig. 2A2), while the function for the vowel pair /ε/-/I/ (EH to “IH”) showed a transition in dominance from the left to the right ear (Fig. 2A3). The binaural function for the vowel pair /I/-/i/ (IH to “IY”) also showed averaging (Fig. 2A4). Finally, the classification functions for the last vowel pair, /Λ/-/υ/ (UH to “OO”), showed no effect of listening condition: binaural and monaural functions were all similar (Fig. 2A5).

For the bimodal CI user in Figure 2B, a different type of binaural integration was observed. Here, each panel shows vowel classification functions collected with the CI (blue dashed lines, squares), HA (red dash-dot lines, triangles), and CI and HA together (black solid lines, circles). None of the binaural functions for this subject showed simple dominance or averaging; instead, they reflected a more complex integration of the monaural responses. For example, for the /α/-/Λ/ (AH to UH) pair, the binaural function was severely flattened compared to either monaural function, indicating greater difficulty in discriminating the vowel pair based on F1 information, i.e., interference with the CI and HA together compared to either device alone (Fig. 2B1). For the remaining four vowel pairs, the binaural function was shifted rightward relative to those for the CI alone or the HA alone (Fig. 2B2–B5).

Four Main Patterns of Binaural Vowel Integration

Figure 3 shows exemplars of the four types of binaural integration observed in bilateral HA and bimodal CI users. As a reference, a normal-hearing listener’s vowel classification functions for the /α/-/Λ/ (AH to UH) pair were S-shaped and centered on the stimulus F1 range, and showed no effect of listening condition (Fig. 3A).

Several instances of single-ear dominance were observed, as seen for subject CI25’s vowel classification function for the /ε/-/I/ (EH to IH) pair in Figure 3B. In this case, the binaural function was dominated by the CI ear, which had a steeper, unbiased function compared to the HA ear. Dominance by the HA ear also occurred in other bimodal CI subjects (Table 2).

Binaural averaging was observed more often in HA users, as for HA subject HI08 in Figure 3C. Note that in this example, discrimination of the /Λ/-/υ/ (UH to OO) pair was very poor with the left HA ear, while discrimination was near normal with the right HA ear, such that averaging of the two led to performance intermediate between the two monaural conditions.

Finally, binaural interference was sometimes observed as for CI subject CI25 shown in Figure 3D, where the function slope for the /I/-/i/ (IH to IY) pair decreased severely under the binaural condition compared to either monaural condition, similar to the effect shown in Figure 2B1 for CI30. In this example, two repeat runs were collected for each condition, showing the repeatability of these trends.

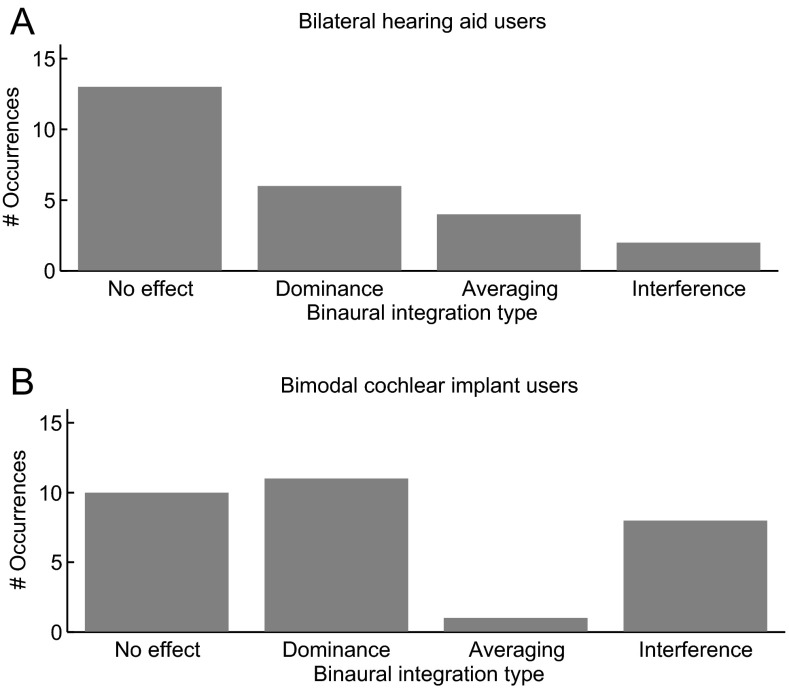

Trends for all subjects and vowel pairs tested are summarized in Table 2 and Figure 4. In bilateral HA users, the majority of cases showed no effect of binaural stimulation, while the remaining cases showed dominance, averaging, or interference, in descending order of frequency of occurrence (Fig. 4A). In contrast, bimodal CI users most often showed no effect, dominance, or interference, with very few instances of averaging (Fig. 4B). Interference occurred for the /I/-/i/ (IH to IY) pair in all four bimodal CI users who experienced interference; notably, these included the two subjects who did not regularly wear their HA with the CI.

FIG. 4.

Distributions of the four different binaural spectral integration types observed for the five vowel pairs. A Bilateral hearing aid users. B Bimodal cochlear implant users. “No effect” indicates that differences between the two monaural conditions and the binaural condition were all small, as in Figure 3A. “Dominance” indicates that the binaural condition was dominated by one ear, typically the better ear, as in Figure 3B. “Averaging” indicates that the binaural condition was significantly different from both monaural conditions and that responses occurred between those for the two monaural conditions, as in Figure 3C. “Interference” indicates that the binaural condition was significantly different from both monaural conditions and in addition elicited worse discrimination ability with a shallower slope and/or larger bias than either monaural condition, as in Figure 3D.
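The category definitions in this caption amount to a small decision rule over the fitted 50 % points and slopes. The sketch below encodes that rule under stated assumptions: the `Fit` record, the continuum midpoint of 4.5 for an 8-step continuum, and especially the hypothetical `differs` helper, whose fixed tolerances merely stand in for whatever significance test (for example, bootstrap confidence intervals) was actually used to decide that two functions differ.

```python
from typing import NamedTuple

class Fit(NamedTuple):
    x50: float    # 50 % point on the stimulus axis (1..8)
    slope: float  # slope at the 50 % point (proportion per stimulus step)

CENTER = 4.5  # assumed midpoint of an 8-step continuum

def differs(f: Fit, g: Fit, tol_x50: float = 0.5, tol_slope: float = 0.1) -> bool:
    """Hypothetical placeholder for a statistical comparison of two fitted functions."""
    return abs(f.x50 - g.x50) > tol_x50 or abs(f.slope - g.slope) > tol_slope

def bias(f: Fit) -> float:
    """Size of the classification bias: distance of the 50 % point from the midpoint."""
    return abs(f.x50 - CENTER)

def classify(ear1: Fit, ear2: Fit, both: Fit) -> str:
    d1, d2 = differs(both, ear1), differs(both, ear2)
    if not (d1 or d2 or differs(ear1, ear2)):
        return "no effect"            # all three functions similar
    if d1 and d2:                     # binaural differs from both monaural functions
        worse_slope = both.slope < min(ear1.slope, ear2.slope)
        worse_bias = bias(both) > max(bias(ear1), bias(ear2))
        if worse_slope or worse_bias:
            return "interference"
        if min(ear1.x50, ear2.x50) <= both.x50 <= max(ear1.x50, ear2.x50):
            return "averaging"
        return "unclassified"
    if d1 != d2:
        return "dominance"            # binaural matches exactly one ear
    return "unclassified"
```

Checking interference before averaging mirrors the caption: a binaural function that is both intermediate in position and shallower than either ear counts as interference, not averaging.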

Comparisons to Trends Observed with Natural Vowels

When scores obtained with the CI and HA together were compared with scores with either device alone, every vowel pair that showed binaural interference with synthetic vowels elicited a similar interference pattern with the natural vowel stimuli. For example, CI20 and CI25 had more natural vowel confusions for /I/-/i/ (IH to IY; Supplementary Figs. S1 and S2), and CI30 had more natural vowel confusions for both /α/-/Λ/ (AH to UH) and /Λ/-/υ/ (UH to OO) than with the better ear alone (Supplementary Fig. S3). Another subject, CI33, exhibited detrimental averaging between the ears for the synthetic vowel pair /ae/-/ε/ (AE to EH) and also showed increased confusions between these two vowels (a bias toward EH) with natural vowels under bimodal stimulation (Supplementary Figs. S4 and S5A). CI33 also had additional confusions for a vowel pair not tested with a synthetic continuum, /Λ/ and /ε/ (UH and EH), which differ mainly in F2 rather than F1, suggesting that interference also occurred for discrimination of the second formant, possibly due to the frequency shifting of that subject’s hearing aid above 1.5 kHz.

Ear dominance was also well predicted by the synthetic vowel classification functions. Subjects with ear dominance typically performed best under bimodal stimulation, with natural vowel performance following the better ear for each vowel.

Experiment 2: Relationship of Fusion Range and Fusion Pitch Averaging Weights to Vowel Trend

Bilateral HA Users

Only a subset of bilateral HA users could be tested on binaural tone integration at frequencies low enough to be compared with vowel F1 variations, due to binaural beats often occurring below 1000 Hz. To assess binaural tone integration, pitch matches were conducted to measure the degree of pitch mismatch between ears, and fusion ranges were measured by finding the frequency range of tones in one ear that would fuse with a reference tone in the other ear. HI04, HI07, and HI08 were tested with reference tones at 420 and 707 Hz, which fall in the F1 frequency ranges of vowel pairs /ε/-/I/ (388–521 Hz) and /α/-/Λ/ (625–800 Hz), respectively. The reference ears were left, left, and right, respectively. The results are shown in Table 3.

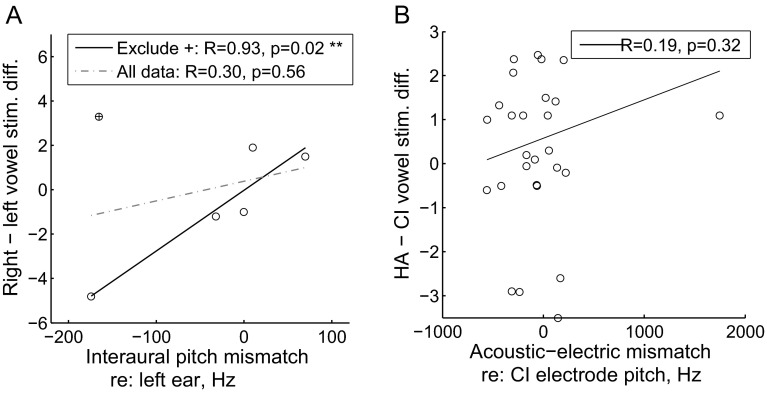

Four of the five HA users showed significant differences, or asymmetry, between their left and right vowel classification functions. For the three subjects tested on tone integration, differences in the 50 % points fitted to the right and left vowel classification functions are plotted versus interaural pitch mismatches, both relative to the left ear, in Figure 5A. The direction of the mismatch often predicted the direction and degree of the asymmetry in monaural vowel classification functions (circles in Fig. 5A), with the exception of one data point associated with binaural interference (+ symbol in Fig. 5A). With that data point included, there was no significant correlation (dashed line in Fig. 5A); when the data point from the one subject with binaural interference was excluded, the correlation was strong and significant (solid line in Fig. 5A; R = 0.93, p = 0.02, two-tailed Pearson correlation test). In other words, in most cases, if one ear had a higher perceived pitch than the other ear for the same tone frequency, the vowel F1 was also likely to be perceived as higher, which was reflected as a rightward shift in the vowel classification function for the higher pitch ear relative to that for the lower pitch ear.
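The outlier analysis above amounts to computing a Pearson correlation with and without one point. The sketch below is an illustration only: the helper names are hypothetical, the data in the usage check are invented (an exact linear trend plus one discordant point), and n = 5 in the t-statistic example is an assumed sample size. For a p-value, the t statistic would be referred to a t distribution with n - 2 degrees of freedom (for example via `scipy.stats.pearsonr`, which returns r and a two-sided p directly).

```python
import math

def pearson_r(xs, ys):
    """Sample Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def t_statistic(r, n):
    """t statistic (n - 2 degrees of freedom) for testing whether r differs from 0."""
    return r * math.sqrt((n - 2) / (1.0 - r * r))

def r_excluding(xs, ys, drop):
    """Correlation recomputed with the point at index `drop` left out."""
    keep = [i for i in range(len(xs)) if i != drop]
    return pearson_r([xs[i] for i in keep], [ys[i] for i in keep])
```

With only a handful of points, a single discordant value can erase an otherwise tight relationship, which is why the exclusion is reported explicitly rather than silently.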

FIG. 5.

Interaural pitch mismatches versus monaural vowel classification asymmetries for HA and CI users. A For HA users, differences in the 50 % points fitted to the right and left vowel classification functions are plotted versus interaural pitch mismatches, both relative to the left ear. When all data points were included, there was no significant correlation; if the one data point indicated by a “plus sign” was excluded, the correlation was significant. In other words, if one ear had a higher perceived pitch than the other ear for the same frequency tone, the vowel F1 frequency was also likely to be perceived as higher, which was reflected as a rightward shift in the vowel classification function for the higher pitch ear relative to the lower pitch ear. B For bimodal CI users, differences in the 50 % points fitted to the HA-only and CI-only vowel classification functions are plotted versus differences in electrode pitch and the frequencies allocated to that electrode (frequencies heard acoustically in the HA ear at the same time as the electrode is stimulated), for the acoustic/HA ear relative to the electric/CI ear. No significant correlation was observed for this group.

Fusion ranges depended on subject and tone frequency and spanned 0.2 to 2.8 octaves. For HA users, ear dominance occurred in cases with narrower fusion ranges, while averaging and interference occurred with broader fusion ranges (Table 3). In this small sample, smaller pitch mismatches were more often associated with averaging than with interference.
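Fusion ranges and pitch mismatches are expressed in octaves, i.e., the base-2 logarithm of a frequency ratio. The sketch below shows that arithmetic; the helper names are hypothetical, and treating the fusion range as the span between the lowest and highest comparison tones that fused is a simplifying assumption (the measured range may instead be defined from a fusion-probability criterion).

```python
import math

def octaves(f_low_hz, f_high_hz):
    """Distance in octaves between two frequencies (log2 of their ratio)."""
    return math.log2(f_high_hz / f_low_hz)

def fusion_range_octaves(fused_freqs_hz):
    """Width of the fusion range, assumed here to be the octave span between the
    lowest and highest comparison tones that fused with the reference."""
    return octaves(min(fused_freqs_hz), max(fused_freqs_hz))

def pitch_mismatch_octaves(reference_hz, matched_hz):
    """Signed interaural mismatch: positive if the tone matched in the other ear
    is higher in frequency than the reference tone."""
    return octaves(reference_hz, matched_hz)
```

On this scale, the reported 2.8-octave maximum corresponds to a frequency ratio of about 2^2.8 ≈ 7, so a reference tone could fuse with contralateral tones spread over roughly a sevenfold frequency range.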

Bimodal CI Users

Binaural tone-electrode integration was also measured for the seven bimodal CI users. CI20 had a broad fusion range for the lowest frequency electrode where interference was observed for the corresponding /I/-/i/ vowel pair, while conversely, a small fusion range was observed for the higher frequency electrode where dominance was observed for the corresponding /Λ/-/υ/ vowel pair (Table 3). In this case, broad fusion would seem to correspond to cases of interference, whereas narrow fusion would seem to correspond to dominance.

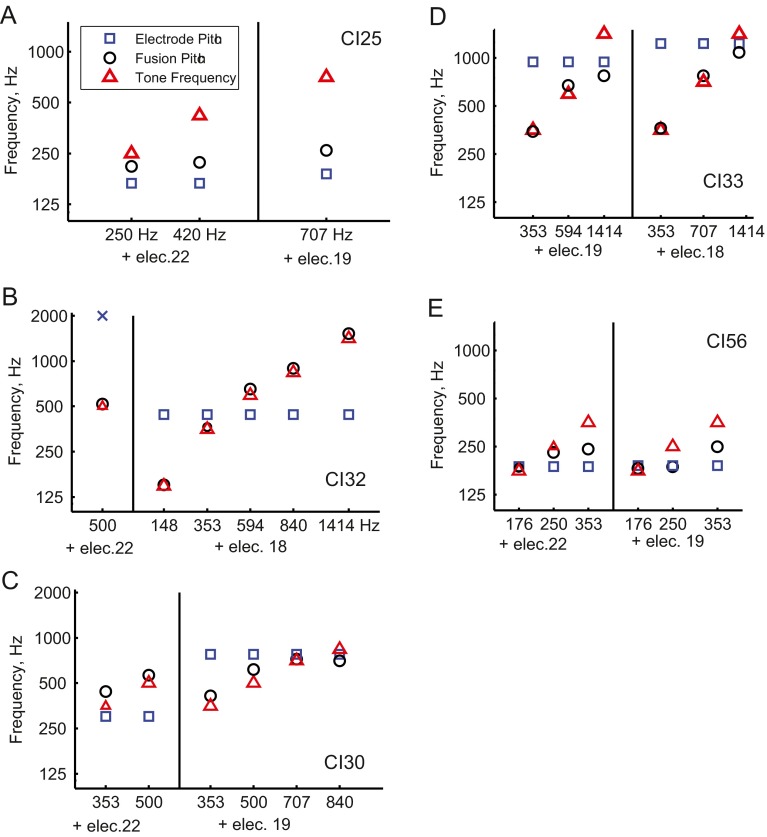

However, the width of fusion alone was not sufficient to explain all vowel integration trends in CI users. CI25, CI32, CI33, and CI56 exhibited broad fusion for all electrodes but still showed a range of trends depending on the specific electrode and vowel pair (Table 3). Instead, direct measures of the electrode-tone fusion pitch, i.e., how the pitches are averaged between the ears, as shown for a few examples in Figure 6, were better predictors of vowel integration trends for these individuals. For instance, CI25 experienced averaging of fused electrode-tone pitches, but the averaging was weighted toward the CI ear (compare black circles to blue squares in Fig. 6A), consistent with that subject’s CI ear dominance for two vowel pairs (Fig. 3B; Table 2). However, this weighting does not explain the striking binaural interference with the lowest F1 /I/-/i/ vowel pair (Fig. 3D), which may be better explained by integration of the weighted averaging across frequency. CI32, on the other hand, showed complete dominance of the fused electrode-tone pitch by the acoustic tones (compare black circles to red triangles in Fig. 6B), consistent with the HA dominance observed in that subject’s vowel classification functions for the /α/-/Λ/ and /Λ/-/υ/ vowel pairs (Table 2).

FIG. 6.

Fusion pitch averaging trends for electrode-tone pairs in bimodal CI users. A Fusion pitch averaging for two electrodes paired with various contralateral acoustic tones in subject CI25. Different electrodes are separated by a vertical black line. Fusion electrode-tone pitches (circles) lay between the electrode pitches (squares) and tone frequencies (triangles) but were weighted more toward the electrode pitch. B Fusion pitch dominance by the acoustic ear for two electrodes for subject CI32. Fusion electrode-tone pitches (circles) were dominated by and overlapped with the tone frequencies (triangles). C For CI30, non-linear fusion pitch integration was observed for electrode 22 paired with two frequencies, in which the fused pitch was higher than either monaural pitch. Electrode 19 exhibited either pitch averaging when paired with low-frequency acoustic tones or small differences when paired with higher frequency tones close to the electrode pitch match. D For CI33, a transition was observed from fusion pitch dominance by the acoustic ear to non-linear fusion pitch integration with increasing tone pair frequency for both electrodes 18 and 19. E For CI56, a transition was observed from fusion pitch dominance by either the acoustic ear or the electrode pitch to averaging of the two with increasing tone pair frequency for both electrodes 22 and 19. Results from A and B are replotted from data previously shown in Figure 6 of Reiss et al. 2014b.

CI30 had small interaural pitch mismatches and a moderate fusion range, with an upward offset in the fusion range for electrodes 22 and 21 (Table 3). Fusion pitch measures indicated a non-linear averaging effect in which the electrode-tone fusion pitch was shifted above the higher frequency monaural pitch for electrode 22 (black circles above both blue squares and red triangles, left part of Fig. 6C), consistent with an increased bias toward the higher F1 exemplar in that subject’s binaural vowel classification function for the /I/-/i/ vowel pair (Fig. 2B4). In contrast, electrode 19 for this subject showed no such non-linear averaging effect (right part of Fig. 6C), consistent with the lack of increased bias observed binaurally for the corresponding /ae/-/ε/ vowel pair (Fig. 2B2).

Generally, dominance by one modality across all tone pair frequencies showed a straightforward correspondence to ear dominance by that modality, whereas averaging or mixtures of weighting trends were less easily linked to vowel perception trends. For instance, CI33 and CI56 both had transitions in weighting as the acoustic tone frequency increased. For CI33, the weighting trend shifted from dominance by the acoustic tone toward non-linear weighting below the electrode pitch for electrode 19 (left part of Fig. 6D); as for CI25, integration of these weightings over frequency may better explain why averaging was observed instead of a transition for the corresponding /ae/-/ε/ vowel pair (Supplementary Fig. S5A). For CI56, the weighting trend shifted from dominance by the acoustic tone toward averaging of the acoustic and electric pitches for electrode 22 (Fig. 6E), which does not directly correspond to the transition from HA dominance to CI dominance (instead of averaging) in the corresponding /I/-/i/ vowel pair (Supplementary Fig. S5B).
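The weighting trends in Figure 6 (acoustic dominance, electric dominance, averaging, and non-linear integration outside both monaural pitches) can be summarized by a single weight per electrode-tone pair. The sketch below assumes the fused pitch is described by its position between the tone frequency and the electrode pitch on a log-frequency axis; the function names and the 0.15 tolerance are hypothetical choices, not values from the study.

```python
import math

def electrode_weight(tone_hz, electrode_pitch_hz, fused_pitch_hz):
    """Weight of the electric (CI) pitch in the fused percept on a log-frequency axis:
    0 -> fused pitch equals the tone; 1 -> fused pitch equals the electrode pitch."""
    span = math.log2(electrode_pitch_hz / tone_hz)
    if abs(span) < 1e-9:
        return None  # monaural pitches coincide, so the weighting is undefined
    return math.log2(fused_pitch_hz / tone_hz) / span

def weighting_trend(w, tol=0.15):
    """Label a weight using a hypothetical tolerance around 0 and 1."""
    if w is None:
        return "undefined"
    if w < -tol or w > 1 + tol:
        return "non-linear"          # fused pitch outside both monaural pitches
    if w <= tol:
        return "acoustic dominance"
    if w >= 1 - tol:
        return "electric dominance"
    return "averaging"
```

Under this convention, CI25's CI-weighted averaging would appear as weights between 0.5 and 1, and the CI30 electrode 22 result, a fused pitch above both monaural pitches, as a weight greater than 1. Pairs with nearly identical monaural pitches, such as the CI30 electrode 19 tones near the pitch match, yield an undefined or unstable weight, which is one reason such pairs are hard to interpret.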

No significant correlation was seen between acoustic-electric pitch mismatches and the direction and degree of the asymmetry in monaural vowel classification functions (Fig. 5B; R = 0.19, p = 0.32, Pearson correlation test). No significant differences in pitch mismatches or pitch fusion ranges were associated with the four different vowel integration trends (p > 0.1 for all comparisons, two-tailed Wilcoxon rank-sum test).
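The group comparisons above use the two-tailed Wilcoxon rank-sum test. The text does not say whether an exact or a normal-approximation p-value was computed, so the sketch below shows the exact version, which enumerates every way of assigning the pooled midranks to the first group; it is practical only for small groups like those here. SciPy's `scipy.stats.ranksums` (normal approximation) or `scipy.stats.mannwhitneyu` would be the usual library route; the helper names below are hypothetical.

```python
from itertools import combinations

def midranks(values):
    """Ranks 1..N, with tied values given the average of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # 1-based average rank of the tie block
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def rank_sum_exact(a, b):
    """Exact two-sided Wilcoxon rank-sum test.

    Returns (W, p), where W is the rank sum of group `a` and p doubles the
    smaller tail probability of W under all equally likely rank assignments.
    """
    pooled = list(a) + list(b)
    ranks = midranks(pooled)
    w_obs = sum(ranks[: len(a)])
    lower = upper = total = 0
    for idx in combinations(range(len(pooled)), len(a)):
        w = sum(ranks[i] for i in idx)
        total += 1
        lower += w <= w_obs + 1e-9
        upper += w >= w_obs - 1e-9
    return w_obs, min(1.0, 2 * min(lower, upper) / total)
```

With three values per group, even complete separation gives p = 0.1, which illustrates how little power these comparisons have with the small numbers of cases per integration type.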

Discussion

This study provides the first quantitative evidence of abnormal spectral shape integration across ears in individuals with hearing loss, through the use of a new technique based on synthetic vowel classification along a continuum. The results add to the growing body of evidence that binaural spectral integration differs in individuals with hearing loss and/or hearing devices such as HAs or CIs (Reiss et al. 2014b). In addition, although binaural speech perception interference in HA users was first reported more than half a century ago (Jerger et al. 1961), its cause has remained unclear. This is the first study to investigate a potential underlying cause of interference, i.e., abnormally broad binaural spectral integration.

New Speech-Based Measure of Binaural Spectral Integration

The systematic variation of F1 within a single vowel pair provides a frequency-specific measure of how binaural integration affects complex spectral shape perception. The vowel classification functions constructed from responses to the synthetic vowel continua indicate the degree of both classification bias (the horizontal shift of the function along the stimulus or F1 frequency axis) and uncertainty (the overall slope of the function). The findings here demonstrate that binaural spectral integration can increase uncertainty as well as induce bias in vowel classification in hearing-impaired listeners.

Bias and uncertainty have been quantified monaurally in CI users with other methods based on natural speech, such as the multidimensional phoneme identification (MPI) model, and are negatively correlated with vowel recognition performance (Sagi et al. 2010; Kong and Braida 2011). The new approach has an advantage over previous approaches in that frequency-specific modeling can be used to predict confusion patterns without requiring large amounts of data. Further, dominance and interference effects observed with synthetic vowels were often replicated with natural vowels, despite the addition of multiple talkers and other vowel identity cues, including higher formants, formant transitions, and duration, indicating generalizability to real-world speech perception.

Abnormal Binaural Integration of Vowels in Hearing-Impaired Listeners

The binaural and monaural vowel classification functions obtained from this technique uncovered several interesting trends in hearing-impaired listeners. Significant differences in left and right vowel classification functions were often seen in HA users and were often, but not always, associated with the interaural pitch mismatch as measured by pure tones.

Bilateral HA listeners showed a variety of binaural vowel integration patterns, including no effect (likely due to small interaural pitch mismatches), ear dominance, averaging between ears, and interference between ears, depending on the subject and vowel pair. In this small sample, HA users with broader tone fusion ranges showed binaural vowel averaging or interference, while those with narrower tone fusion ranges showed no effect or dominance. This suggests that vowel averaging and interference may be associated with abnormally broad binaural pitch fusion in HA users, especially when pitch averaging between mismatched ears is also present.

Like HA users, bimodal CI listeners showed significant differences between CI-only and HA-only vowel classification functions, but these differences were not correlated with the acoustic-electric pitch mismatch. The lack of correlation may be related to the fact that many bimodal CI users experience changes in pitch perception over time (Reiss et al. 2014a); it is possible that these changes do not always translate into changes in vowel classification.

Bimodal CI users also showed various binaural vowel integration patterns, including no effect, ear dominance, and interference, with a higher prevalence of interference than HA users. Very little averaging was observed in bimodal CI users in this sample. The higher prevalence of interference in bimodal CI users may be due to the large interaural pitch mismatches introduced by CI programming (Table 3; Reiss et al. 2014a, b). While previous studies have shown that CI users with Hybrid or EAS devices are able to adapt and reduce this pitch mismatch over time (Reiss et al. 2007, 2008), recent studies suggest that pitch adaptation is less common in bimodal CI users with less residual hearing (Reiss et al. 2014a). Many bimodal CI users also have broad dichotic pitch fusion, such that pitches differing by as much as 3–4 octaves between ears are fused, which may be an alternative mechanism to pitch adaptation for reducing the perception of this mismatch (Reiss et al. 2014b). However, no correlations were observed between these measures of interaural pitch mismatch and the incidence of binaural averaging or interference. Abnormal vowel integration between ears has also been observed in bimodal CI users when the two formants are presented separately to each ear, and this could not be explained by interaural pitch mismatches alone (Guerit et al. 2014).

It is likely that the details of how these mismatches are fused across ears are more important in determining vowel integration than simply the presence of large interaural pitch mismatches and broad fusion. Many bimodal CI users with broad dichotic pitch fusion also experience pitch averaging between the ears, such that the fused tone pitch is a weighted average of the two original monaural pitches (Reiss et al. 2014b). For bimodal CI users, these findings suggest that the relative weighting of the two ears measured using single electrode-tone pairs better predicts vowel integration trends such as dominance than either the interaural pitch mismatch or the width of the binaural pitch fusion range alone. Interference arising from complex integration effects, on the other hand, is not as easily accounted for by the relative weighting of a few electrode-tone pairs. In such cases, denser sampling of the relative tone-electrode weighting over a wider range of electrodes and tone frequencies is needed to fully characterize and model binaural spectral integration; such integration may be a linear summation of the weighted binaural spectrum or alternatively a non-linear integration in which second-order (and possibly higher order) weights with multiple tone-electrode complexes also need to be computed.
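The linear alternative mentioned above, a weighted sum of the two ears' spectral representations, can be illustrated with a toy model. In the sketch below, which is not the authors' model, each ear contributes one Gaussian excitation peak on a log-frequency axis (in octaves), the peaks are offset by an interaural mismatch, and the hypothetical per-ear weights `w1` and `w2` scale them before summation. The centroid of a linear sum always equals the weighted average of the peak positions, but whether the summed pattern has a single intermediate maximum (resembling averaging) or a maximum at the more heavily weighted ear (resembling dominance) depends on peak width relative to the mismatch. The second-order cross terms of a non-linear model are not represented.

```python
import math

def gaussian(x, center, width):
    return math.exp(-0.5 * ((x - center) / width) ** 2)

def summed_pattern(peak1, peak2, w1, w2, width, lo=-12.0, hi=13.0, step=0.001):
    """Linearly weighted sum of two Gaussian excitation peaks on an octave axis."""
    n = int(round((hi - lo) / step))
    xs = [lo + i * step for i in range(n + 1)]
    es = [w1 * gaussian(x, peak1, width) + w2 * gaussian(x, peak2, width) for x in xs]
    return xs, es

def centroid_and_peak(peak1, peak2, w1, w2, width):
    """Centroid and location of the maximum of the summed pattern (octaves)."""
    xs, es = summed_pattern(peak1, peak2, w1, w2, width)
    centroid = sum(x * e for x, e in zip(xs, es)) / sum(es)
    peak = xs[max(range(len(es)), key=es.__getitem__)]
    return centroid, peak
```

For example, with a 1-octave mismatch and weights of 0.75 and 0.25, the centroid is 0.25 octave for any width, but the maximum stays near the heavier ear's peak when the width is 0.3 octave and moves close to 0.25 octave when the width is 1.5 octaves.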

Increased phoneme confusions have also been shown to arise under other conditions and with integration across other modalities. Consonant confusions can arise from the averaging of artificially mismatched formant transitions across ears in normal-hearing listeners (Cutting 1976; Halwes 1970). In auditory-visual integration, discrepant auditory and visual speech cues (e.g., “ba” and “ga”) are fused and averaged into a completely new percept (“da”) in a phenomenon known as the McGurk effect (McGurk and MacDonald 1976). However, these cases all involve artificially induced mismatches or illusions, whereas the confusions demonstrated in this study occur naturally with hearing loss and/or CI use. It is possible that as other neural prostheses such as retinal implants become more widespread, similar averaging and interference effects may also occur naturally across mismatched inputs in other sensory systems.

Implications for Speech Perception with Hearing Aids and Cochlear Implants

The findings here may explain the binaural interference previously reported in the literature. For HA users, binaural interference has been reported for speech perception in quiet or noise, with incidence ranging from 20 % (e.g., McArdle et al. 2012) to as high as 82 % (e.g., Walden and Walden 2005); note that the latter study did not plug the non-test ear but simply removed the HA in the “monaural” control conditions, essentially providing a lower level of stimulation to one ear than in the binaural condition. A larger, survey-based study indicated that 46 % of 94 participants preferred to use one hearing aid over two, also suggestive of interference; interestingly, this preference could be predicted in part by differences in binaural summation and dichotic listening, but not by pure tone thresholds (Cox et al. 2011). Such interference may be explained by either the binaural averaging or the complex interference trends seen in this study. Note that binaural averaging can also be a form of interference in that bias and discrimination ability are averaged to be intermediate between the poorer and better ear and thus worse than the better ear alone. Binaural averaging and complex interference may also conceivably occur for some consonants that are classified based on spectral cues, such as place of articulation or formant transitions.

For bimodal CI users, while benefit has been reported for combined CI and HA use in laboratory studies (Ching et al. 2007), such studies are biased in subject selection for those who regularly wear their HA with the CI. A recent survey-based study showed that 51 % out of 96 unilateral CI patients had discontinued hearing aid use in the non-implanted ear, often due to little perceived benefit (25 %) or interference (21 %; Fitzpatrick and LeBlanc 2010). One laboratory study in children also found interference in 67 % of nine bimodal CI users (Litovsky et al. 2006). In the current study, six out of seven cases of interference were observed in the two bimodal subjects who did not regularly wear their HA. The interference may explain why the subjects had stopped using their HA, as seen in the previously reported survey. Alternatively, regular HA use may be required for the brain to adapt and adjust fusion pitch weighting to reduce interference.

More importantly, these findings show that binaural interference for one phoneme/frequency region may be masked by concomitant benefits of binaural stimulation for other phonemes/frequency regions. Even when interference was observed for some vowels, similar or higher overall percent correct scores on vowel recognition were seen in the bimodal CI mode compared to the best monaural mode (e.g., CI25 and CI30; compare scores in Supplementary Figs. S2 and S3). More broadly, bimodal CI stimulation adds high-frequency information via the CI, which often improves consonant perception. Such interference could lead to a net zero bimodal benefit on standard clinical speech tests, which only measure the total number of correctly heard words and phonemes; this scenario is sub-optimal because a larger bimodal benefit would be observed if the interference were removed. In fact, a recent study showed that reducing low-frequency overlap of the CI with the HA improved word recognition scores in individuals with more aidable residual hearing (Fowler et al. 2016).

Similarly, a bilateral HA user may have a local “dead” (deafferented) frequency region in one ear that reduces discrimination ability for a specific phoneme, such that binaural averaging of the poor ear with the better ear worsens perception of that phoneme compared to the better ear alone. At the same time, perception of phonemes in frequency regions outside of the dead region may be improved with binaural stimulation through redundancy or other binaural benefits experienced by normal-hearing listeners. Again, in such cases, interference will not decrease overall speech perception score but simply limit the maximum performance that can be achieved binaurally in quiet or in noise.

This is the first study to investigate a potential underlying cause of binaural interference in hearing-impaired listeners at the phoneme level. Further research is needed to fully understand how these binaural interference effects arise, especially complex or non-linear effects, and to extend these findings with single vowels to consonant classification as well as to more complex listening situations involving multiple talkers and concurrently presented phonemes. Once the underlying mechanisms of interference are understood, new auditory training or device reprogramming approaches can be used to reduce binaural interference and maximize speech perception in quiet and in noise with two ears.

Electronic supplementary material

Supplementary Figs. S1–S4. Vowel confusion matrices for CI20, CI25, CI30, and CI33, respectively. In each figure, the CI-only, bimodal CI (CI+HA), and HA-only results are shown in the top, middle, and bottom panels, respectively. Each row represents one vowel and each column represents one possible vowel response by the subject. The shading and number for each cell indicate how often the subject chose a particular vowel (column) in response to presentation of a particular stimulus (row). Responses on the diagonal indicate correct responses, and responses off the diagonal indicate confusions.

Supplementary Fig. S5. Additional example synthetic vowel classification functions for two subjects, CI33 (A) and CI56 (B), plotted as in Fig. 2.

Acknowledgments

The laboratory cochlear implant Freedom processor was provided by Cochlear, and the laboratory hearing aid was provided by Oticon. This research was supported by grants R01 DC013307, P30 DC010755, and P30 DC005983 from the National Institute on Deafness and Other Communication Disorders, National Institutes of Health.

References

- Albers GD, Wilson WH. Diplacusis. I. Historical review. Arch Otolaryngol. 1968;87(6):601–603. doi: 10.1001/archotol.1968.00760060603009. [DOI] [PubMed] [Google Scholar]

- Anstis S, Rogers B. Binocular fusion of luminance, color, motion, and flicker—two eyes are worse than one. Vis Res. 2012;53:47–53. doi: 10.1016/j.visres.2011.11.005. [DOI] [PubMed] [Google Scholar]

- Binda P, Bruno A, Burr DC, Morrone M. Fusion of visual and auditory stimuli during saccades: a Bayesian explanation for perisaccadic distortions. J Neurosci. 2007;27(32):8525–8532. doi: 10.1523/JNEUROSCI.0737-07.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Blamey PJ, Maat B, Baskent D, Mawman D, Burke E, Dillier N, Beynon A, Klein-Punte A, Govaerts P, Skarzynski PH, Huber AM, Sterkers-Artieres F, Van de Heynin P, O’Leary S, Fraysse B, Green K, Sterkers O, Venail F, Skarzynski H, Vincent C, Truy E, Dowell R, Bergeron F, Lazard D. A retrospective multicenter study comparing speech perception outcomes for bilateral implantation and bimodal rehabilitation. Ear Hear. 2015;36:408–416. doi: 10.1097/AUD.0000000000000150. [DOI] [PubMed] [Google Scholar]

- Carter AS, Noe CM, Wilson RH. Listeners who prefer monaural to binaural hearing aids. J Am Acad Audiol. 2001;12:261–272. [PubMed] [Google Scholar]

- Cheung SM, Bonham BH, Schreiner CE, Godey B, Copenhaver DA. Realignment of interaural cortical maps in asymmetric hearing loss. J Neurosci. 2009;29(21):7065–7078. doi: 10.1523/JNEUROSCI.6072-08.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ching TY, van Wanrooy E, Dillong H. Binaural-bimodal fitting or bilateral implantation for managing severe to profound deafness: a review. Trends Amplif. 2007;11(3):161–192. doi: 10.1177/1084713807304357. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cox RM, Schwartz KS, Noe CM, Alexander GC. Preference for one or two hearing aids among adult patients. Ear Hear. 2011;32(2):181–197. doi: 10.1097/AUD.0b013e3181f8bf6c. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cutting JE. Auditory and linguistic process in speech perception: inferences from six fusions in dichotic listening. Psychol Rev. 1976;83:114–140. doi: 10.1037/0033-295X.83.2.114. [DOI] [PubMed] [Google Scholar]

- Dorman MF, Gifford RH. Combining acoustic and electric stimulation in the service of speech recognition. Int J Audiol. 2010;49(12):912–919. doi: 10.3109/14992027.2010.509113.

- Efron B, Tibshirani R. An introduction to the bootstrap. New York: Chapman and Hall; 1993.

- Eggermont JJ. The role of sound in adult and developmental auditory cortical plasticity. Ear Hear. 2008;29:819–829. doi: 10.1097/AUD.0b013e3181853030.

- Fitzpatrick EM, Leblanc S. Exploring the factors influencing discontinued hearing aid use in patients with unilateral cochlear implants. Trends Amplif. 2010;14(4):199–210. doi: 10.1177/1084713810396511.

- Folstein MF, Folstein SE, McHugh PR. “Mini-mental state”: a practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

- Fowler JR, Eggleston JL, Reavis KM, McMillan GP, Reiss LAJ. Effects of removing low-frequency electric information on speech perception with bimodal hearing. J Speech Lang Hear Res. 2016.

- Grose JH, Mamo SK. Processing of temporal fine structure as a function of age. Ear Hear. 2010;31(6):755–760. doi: 10.1097/AUD.0b013e3181e627e7.

- Guerit F, Santurette S, Chalupper J, Dau T. Investigating interaural frequency-place mismatches via bimodal vowel integration. Trends Hear. 2014;18:1–10. doi: 10.1177/2331216514560590.

- Halwes TG. Effects of dichotic fusion on the perception of speech [doctoral dissertation]. University of Minnesota; 1969. Dissertation Abstracts International. 1970;31:1565B. (University Microfilms No. 70-15,736)

- Hillenbrand J, Getty LA, Clark MJ, Wheeler K. Acoustic characteristics of American English vowels. J Acoust Soc Am. 1995;97(5 Pt 1):3099–3111.

- Hillis JM, Ernst MO, Banks MS, Landy MS. Combining sensory information: mandatory fusion within, but not between, senses. Science. 2002;298(5598):1627–1630. doi: 10.1126/science.1075396.

- Jerger J, Carhart R, Dirks D. Binaural hearing aids and speech intelligibility. J Speech Hear Res. 1961;4:137–148. doi: 10.1044/jshr.0402.137.

- Kong YY, Braida LD. Cross-frequency integration for consonant and vowel identification in bimodal hearing. J Speech Lang Hear Res. 2011;54(3):959–980. doi: 10.1044/1092-4388(2010/10-0197).

- Kong YY, Stickney GS, Zeng FG. Speech and melody recognition in binaurally combined acoustic and electric hearing. J Acoust Soc Am. 2005;117:1351–1361. doi: 10.1121/1.1857526.

- Litovsky RY, Johnstone PM, Godar S, Agrawal S, Parkinson A, Peters R, Lake J. Bilateral cochlear implants in children: localization acuity measured with minimum audible angle. Ear Hear. 2006;27(1):43–59. doi: 10.1097/01.aud.0000194515.28023.4b.

- McArdle RA, Killion M, Mennite MA, Chisolm TH. Are two ears not better than one? J Am Acad Audiol. 2012;23(3):171–181. doi: 10.3766/jaaa.23.3.4.

- McGurk H, MacDonald J. Hearing lips and seeing voices. Nature. 1976;264(5588):746–748. doi: 10.1038/264746a0.

- Odenthal DW. Perception and neural representation of simultaneous dichotic pure tone stimuli. Acta Physiol Pharmacol Neerl. 1963;12:453–496.

- Peterson GE, Barney HL. Control methods used in a study of the vowels. J Acoust Soc Am. 1952;24:175–184. doi: 10.1121/1.1906875.

- Popescu MV, Polley DB. Monaural deprivation disrupts development of binaural selectivity in auditory midbrain and cortex. Neuron. 2010;65(5):718–731. doi: 10.1016/j.neuron.2010.02.019.

- Reiss LA, Shayman CS, Walker EP, Bennett KO, Fowler JR, Hartling CL, Oh Y. Binaural pitch fusion is broader in hearing-impaired than normal-hearing listeners. Submitted to J Acoust Soc Am.

- Reiss LAJ, Turner CW, Erenberg SR, Gantz BJ. Changes in pitch with a cochlear implant over time. J Assoc Res Otolaryngol. 2007;8(2):241–257. doi: 10.1007/s10162-007-0077-8.

- Reiss LAJ, Gantz BJ, Turner CW. Cochlear implant speech processor frequency allocations may influence pitch perception. Otol Neurotol. 2008;29(2):160–167. doi: 10.1097/mao.0b013e31815aedf4.

- Reiss LA, Lowder ML, Karsten SA, Turner CW, Gantz BJ. Effects of extreme tonotopic mismatches between bilateral cochlear implants on electric pitch perception: a case study. Ear Hear. 2011;32(4):536–540. doi: 10.1097/AUD.0b013e31820c81b0.

- Reiss LA, Perreau AE, Turner CW. Effects of lower frequency-to-electrode allocations on speech and pitch perception with the Hybrid short-electrode cochlear implant. Audiol Neurootol. 2012;17(6):357–372. doi: 10.1159/000341165.

- Reiss LAJ, Ito RA, Eggleston JL, Liao S, Becker JJ, Lakin CE, Warren FM, McMenomey SO. Pitch adaptation patterns in bimodal cochlear implant users: over-time and after experience. Ear Hear. 2014;36(2):e23–34. doi: 10.1097/AUD.0000000000000114.

- Reiss LAJ, Ito RA, Eggleston JL, Wozny DR. Abnormal binaural spectral integration in cochlear implant users. J Assoc Res Otolaryngol. 2014;15(2):235–248. doi: 10.1007/s10162-013-0434-8.

- Reiss L, Shayman C, Walker E, O’Connell Bennett K, Fowler J. Binaural pitch fusion is broader in hearing-impaired listeners than normal-hearing listeners. 38th Midwinter Research Meeting of the Association for Research in Otolaryngology; February 2015; Baltimore, MD.

- Sagi E, Fu QJ, Galvin JJ 3rd, Svirsky MA. A model of incomplete adaptation to a severely shifted frequency-to-electrode mapping by cochlear implant users. J Assoc Res Otolaryngol. 2010;11(1):69–78. doi: 10.1007/s10162-009-0187-6.

- Souza PE, Boike KT, Witherall K, Tremblay K. Prediction of speech recognition from audibility in older listeners with hearing loss: effects of age, amplification, and background noise. J Am Acad Audiol. 2007;18:54–65. doi: 10.3766/jaaa.18.1.5.

- Van den Brink G, Sintnicolaas K, van Stam WS. Dichotic pitch fusion. J Acoust Soc Am. 1976;59(6):1471–1476. doi: 10.1121/1.380989.

- Walden TC, Walden BE. Unilateral versus bilateral amplification for adults with impaired hearing. J Am Acad Audiol. 2005;16(8):574–584. doi: 10.3766/jaaa.16.8.6.

- Wichmann FA, Hill NJ. The psychometric function: II. Bootstrap-based confidence intervals and sampling. Percept Psychophys. 2001;63(8):1314–1329. doi: 10.3758/BF03194545.

Supplementary Materials

Each figure shows vowel confusion matrices for CI20, CI25, CI30, and CI33. In each figure, the CI-only, bimodal CI (CI+HA), and HA-only results are shown in the top, middle, and bottom panels, respectively. Each row represents one vowel and each column represents one possible vowel response by the subject. The shading and number for each cell indicate how often the subject chose a particular vowel (column) in response to presentation of a particular stimulus (row). Responses on the diagonal indicate correct responses, and responses off the diagonal indicate confusions.

Additional example synthetic vowel classification functions for two subjects, CI33 (A) and CI56 (B), plotted as in Fig. 2.