Abstract

PURPOSE

Best-worst scaling (BWS) is a survey method for assessing individuals’ priorities. It identifies the extremes—best and worst items, most and least important factors, biggest and smallest influences—among sets. In this article, we demonstrate an application of BWS in a primary care setting to illustrate its use in identifying patient priorities for services.

METHODS

We conducted a BWS survey in 2014 in Boston, Massachusetts, to assess the relative importance of 11 previously identified attributes of Papanicolaou (Pap) testing services among women experiencing homelessness. Women were asked to evaluate 11 sets of 5 attributes of Pap services and to identify which attribute in each set would have the biggest and which the smallest influence on promoting uptake. We show how frequency analysis can be used to analyze the results.

RESULTS

In all, 165 women participated, a response rate of 72%. We identified the most and least salient influences on encouraging Pap screening based on how often each was chosen in our sample, with possible standardized scores ranging from +1.0 (biggest influence) to −1.0 (smallest influence). Most important was the availability of support for issues beyond health (+0.39), while least important was the availability of accommodations for personal hygiene (−0.27).

CONCLUSIONS

BWS quantifies patient priorities in a manner that is transparent and accessible. It is easily understood by patients and relatively easy to administer. Our application illustrates its use in a vulnerable population, showing that factors beyond those typically provided in health care settings are highly important to women in seeking Pap screening. This approach can be applied to other health care services where prioritization is helpful to guide decisions.

Keywords: stated preferences, conjoint analysis, methods, best-worst scaling, homeless, cervical cancer, vulnerable populations

INTRODUCTION

Best-worst scaling (BWS) is a survey method for assessing individuals’ priorities: what they view as best and worst among a set of items.1 BWS has been used to illuminate patients’ and clinicians’ priorities, such as which disease symptoms are most important to patients,2 what factors most influence patients in choosing a doctor3 or using a medication,4 and what is important for clinicians when deciding on treatments.5 BWS belongs to the conjoint analysis family of methods, which collectively serve to identify preferences and trade-offs that contribute to individuals’ choices with respect to “goods.”6 These methods have been noted for their cognitive and administrative simplicity,7 as well as their focus on the patient perspective.8,9 In this article, we present the BWS method in the context of cervical cancer screening priorities for homeless women.

Homeless women die from cervical cancer at a rate nearly 6 times that of the general population.10 The rate of guideline-adherent screening among homeless women has been reported to be approximately 50%, compared with 82.8% of the insured US population and 61.9% of the uninsured population.11–16 The literature identifies financial, transportation, and scheduling issues, and sources of care as critical obstacles for underserved women.17–19 BWS can distinguish among obstacles to identify those most salient for choice—improving on a list of important factors by quantifying the relative importance of each.

METHODS

Participants

We recruited adult women experiencing homelessness in Boston, Massachusetts, from 5 sheltered settings: a homeless medical respite center; a health care for the homeless outpatient medical clinic; an emergency (ie, overnight) shelter; a transitional housing program; and a residential substance use treatment program. Inclusion criteria were being aged 18 years or older, verbally proficient in English or Spanish, and awake and alert, as determined by the recruiter. We excluded women reporting a hysterectomy. We conducted recruitment at each site until the pool of eligible women was exhausted or the target quota for each site was achieved. Power calculations for this survey design are not yet available (see de Bekker-Grob et al20 for most recent guidance); we sought a minimum sample size of 150 women to provide reasonable precision in our estimates balanced with feasibility of recruitment.21

Measures

BWS identifies the relative importance of the attributes of a good.22 We used the BWS object case method, in which the importance of each attribute contributing to a decision is evaluated relative to that of all others.23 We asked women to evaluate previously identified attributes of a hypothetical Papanicolaou (Pap) smear screening intervention24 in terms of their relative influence on homeless women’s decision to be tested: women indicated which attribute they thought would have the biggest and which the smallest influence on screening decisions. The 11 attributes encompassed 4 categories: provider (choice of provider sex, provider kindness, and provider familiarity); setting (accepting setting and no-cost testing); procedure (convenient testing time/scheduling and time for explanations/questions); and personal fears/concerns (personal hygiene accommodations, testing not contingent on substance use, counseling about results, and a general category described as “support is provided around all issues the woman is facing”).24

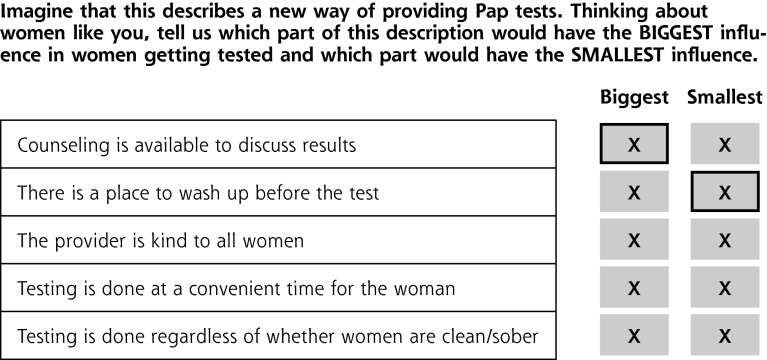

Interviewers showed women a sequence of 11 sets of 5 attributes each on a tablet computer. For each set, the woman indicated first which attribute would have the biggest influence and then which would have the smallest influence, for a total of 2 responses per set (11 biggest and 11 smallest influences, or 22 data points per respondent). BWS is considered superior to rating scales because it avoids common biases,25 and superior to rankings because identifying the extremes of a list is cognitively simpler than ordering the items in between: the best and worst items of a list are relatively easy to identify, whereas the middle items are more difficult to distinguish.7 Each set of 5 attributes was presented on its own; a sample question is given in Figure 1. The 11 sets were determined by an experimental design in which each attribute appeared 5 times in total and appeared together with every other attribute exactly twice (a balanced incomplete block design).26,27 The order of the 5 attributes within each set was randomly assigned before the questionnaire was administered; the order of the 11 sets was randomly assigned for each respondent.

Figure 1.

Sample screen shot of a question from the survey.

Pap = Papanicolaou.

Note: Respondents were shown 11 questions similar to this, each with a different set of 5 attributes (ie, “objects,” predefined per the experimental design, so that each was seen 5 times and in combination with each other attribute twice during the course of the survey). They were instructed to choose which attribute would have the biggest influence on women’s decision to get tested, and which would have the smallest. A button appeared highlighted with color when it was “clicked.” After choosing biggest and smallest, respondents proceeded to the next question until completion of the 11 total sets.
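The balanced incomplete block design described above can be checked mechanically. The authors generated their design with a SAS macro (reference 27), and the sketch below does not reproduce that design. As an illustrative assumption, it builds one (11, 5, 2) design by cyclically shifting the quadratic residues modulo 11, {1, 3, 4, 5, 9}, verifies that every attribute appears in 5 sets and every pair of attributes shares exactly 2 sets, and then applies the two randomization steps described in the text. All function names are hypothetical.

```python
import itertools
import random
from collections import Counter

N_ATTRIBUTES = 11
# Quadratic residues mod 11. This 5-element set is a (11, 5, 2) difference set,
# so shifting it cyclically yields a balanced incomplete block design.
BASE_SET = (1, 3, 4, 5, 9)


def cyclic_design(base=BASE_SET, v=N_ATTRIBUTES):
    """Set i contains attributes (b + i) mod v for each b in the base set."""
    return [sorted((b + i) % v for b in base) for i in range(v)]


def check_balance(sets, v=N_ATTRIBUTES):
    """Return (r, lam): appearances per attribute and co-appearances per pair.

    Raises ValueError if either count is not constant across attributes/pairs.
    """
    appearances = Counter(a for s in sets for a in s)
    together = Counter(p for s in sets for p in itertools.combinations(sorted(s), 2))
    r_values = {appearances[a] for a in range(v)}
    lam_values = {together[p] for p in itertools.combinations(range(v), 2)}
    if len(r_values) != 1 or len(lam_values) != 1:
        raise ValueError("not a balanced incomplete block design")
    return r_values.pop(), lam_values.pop()


def shuffle_within_sets(sets, rng):
    """Randomize attribute order inside each set once, before fielding."""
    return [rng.sample(s, len(s)) for s in sets]


def set_order_for_respondent(sets, rng):
    """Randomize the order of the sets independently for each respondent."""
    return rng.sample(sets, len(sets))


design = cyclic_design()
print(check_balance(design))  # (5, 2)
rng = random.Random(2014)  # arbitrary seed, for reproducibility only
fielded = shuffle_within_sets(design, rng)
print(set_order_for_respondent(fielded, rng)[0])
```

For these parameters the standard design identities hold: b × k = v × r (11 × 5 = 11 × 5) and λ(v − 1) = r(k − 1) (2 × 10 = 5 × 4).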

Demographic and health data were collected at the end of the questionnaire. The questionnaire was available in English or Spanish, for self-completion or with interviewer assistance. (It is available from the authors on request.) Participants were given a $10 gift card as remuneration. Verbal informed consent was obtained before the start of the survey.

Statistical Analysis

We analyzed BWS response data in 2 ways: as frequency counts of the number of times each attribute was chosen as biggest and as smallest across the 11 sets presented to each respondent, and as a standardized score for each attribute. The score was calculated as the number of times an attribute was chosen as biggest minus the number of times it was chosen as smallest, divided by its availability (the number of times it appeared across the design, or 5 × the number of respondents)23,26; that is, (frequency of biggest − frequency of smallest)/(5 × 165). We generated confidence intervals for the standardized scores by bootstrapping respondents: for the overall group, we drew 1,000 samples of 165 women with replacement and recalculated the scores in each.28 The standardized score indicates the relative strength of influence, or salience, of an attribute across all women in the sample. Standardized scores range from −1.0 to +1.0: in our design, scores toward +1.0 indicate salience as a biggest influence on testing, scores toward −1.0 indicate salience as a smallest influence, and a score of 0 indicates no salience to the decision to undergo testing. Scores have been shown to provide similar information to coefficients from a conditional logistic regression choice model, but with greater ease of calculation and interpretation.23,29,30 Finally, we conducted stratified analyses by factors hypothesized a priori to affect priorities: time since last Pap smear (within 12 months vs not) and self-reported addiction status (addicted vs not). Analyses were conducted using Stata 11 (StataCorp LP).
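The scoring and bootstrap steps described above can be sketched in Python. This is a minimal illustration, not the authors’ Stata code. It assumes respondent-level data stored as one (biggest, smallest) pair of attribute indices per set, and it forms percentile intervals from the 2.5th and 97.5th percentiles of the resampled scores, which is our assumption about how the intervals were constructed. Function and parameter names are hypothetical.

```python
import random
import statistics
from collections import Counter


def standardized_scores(respondents, n_attributes, appearances_per_attribute=5):
    """Biggest-minus-smallest score for each attribute.

    respondents: one list per respondent of (biggest, smallest) attribute
    indices, one pair per set; None marks a choice left blank.
    Denominator = availability = appearances per attribute x number of respondents.
    """
    biggest_counts = Counter()
    smallest_counts = Counter()
    for choices in respondents:
        for biggest, smallest in choices:
            if biggest is not None:
                biggest_counts[biggest] += 1
            if smallest is not None:
                smallest_counts[smallest] += 1
    availability = appearances_per_attribute * len(respondents)
    return [(biggest_counts[j] - smallest_counts[j]) / availability
            for j in range(n_attributes)]


def bootstrap_intervals(respondents, n_attributes, n_boot=1000, seed=0,
                        appearances_per_attribute=5):
    """Percentile 95% intervals from resampling whole respondents with replacement."""
    rng = random.Random(seed)
    draws = [[] for _ in range(n_attributes)]
    for _ in range(n_boot):
        resample = rng.choices(respondents, k=len(respondents))
        scores = standardized_scores(resample, n_attributes, appearances_per_attribute)
        for j, score in enumerate(scores):
            draws[j].append(score)
    intervals = []
    for values in draws:
        cut_points = statistics.quantiles(values, n=40)  # 39 cuts at 2.5% steps
        intervals.append((cut_points[0], cut_points[-1]))
    return intervals
```

Resampling whole respondents, rather than individual choices, keeps each woman’s 22 answers together, which respects the fact that choices from the same respondent are not independent.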

Although our data set was complete (all women completed all choice screens), this technique is robust to missing data because an unanswered choice is treated as no choice. An item that is not selected from a set is considered neither biggest nor smallest, so it contributes nothing to the numerator of the standardized score, yet it still contributes to the denominator by virtue of having been available for choice. A large amount of missing data from a single respondent might suggest miscomprehension of the task or a decision not to participate, and could warrant excluding that respondent from the analytic data set.
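The missing-data rule described above can be made concrete with a toy example. The sketch assumes a blank choice is recorded as None. The tiny design (one set per respondent, so each attribute is available once per respondent) and the 50% cutoff for flagging a respondent are hypothetical values chosen only for illustration, not thresholds the study used.

```python
from collections import Counter


def net_scores(respondents, n_attributes, appearances_per_attribute):
    """A blank (None) choice adds nothing to the numerator, but the denominator
    still counts every appearance of the attribute, because it was available."""
    net = Counter()
    for choices in respondents:
        for biggest, smallest in choices:
            if biggest is not None:
                net[biggest] += 1
            if smallest is not None:
                net[smallest] -= 1
    availability = appearances_per_attribute * len(respondents)
    return [net[j] / availability for j in range(n_attributes)]


def respondents_to_review(respondents, max_missing_share=0.5):
    """Hypothetical rule: list respondents who left more than max_missing_share
    of their biggest/smallest answers blank, for possible exclusion."""
    flagged = []
    for index, choices in enumerate(respondents):
        answers = [answer for pair in choices for answer in pair]
        n_missing = sum(answer is None for answer in answers)
        if answers and n_missing / len(answers) > max_missing_share:
            flagged.append(index)
    return flagged


complete = [[(0, 1)], [(0, 1)]]
one_blank = [[(0, 1)], [(0, None)]]
print(net_scores(complete, 2, 1))   # [1.0, -1.0]
print(net_scores(one_blank, 2, 1))  # [1.0, -0.5]
```

The single blank pulls attribute 1’s score toward 0 without dropping the respondent, because her set still counts toward that attribute’s availability.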

The study was approved by the Harvard T. H. Chan School of Public Health Institutional Review Board.

RESULTS

Participants

A total of 165 women completed the survey between February and June 2014 (72% response rate); reasons for declining included lack of interest, scheduling conflicts, and competing priorities at that moment. Demographic, health history, and questionnaire completion data are shown in Table 1. Of note, 90% of women reported having had a Pap test within the past 3 years.

Table 1.

Sample Characteristics and Questionnaire Completion (N = 165)

| Characteristic | Mean (SD) or No. (%) |
|---|---|
| Age, y | 43.1 (13.1) |
| Raceᵃ | |
| White | 69 (41.8) |
| African American or black | 57 (34.5) |
| Native Hawaiian or Pacific Islander | 1 (0.6) |
| Other | 20 (12.1) |
| Multiple | 4 (2.4) |
| Hispanic onlyᵇ | 14 (8.5) |
| Ethnicity: Latina/Hispanic | 45 (27.3) |
| Education | |
| <12 years | 36 (21.8) |
| High school diploma/GED | 57 (34.5) |
| Some college or college diploma | 68 (41.2) |
| Other type of degree/not sure | 4 (2.4) |
| Has childrenᶜ | 119 (72.6) |
| Reported ≥1 medical condition | 133 (80.6) |
| Reported condition(s)ᵈ | |
| Depression/mental health condition | 76 (46.1) |
| Addiction | 54 (32.7) |
| High blood pressure | 49 (29.7) |
| Asthma/lung disease | 46 (27.9) |
| Liver disease/hepatitis C | 30 (18.2) |
| Diabetes | 28 (17.0) |
| Cancer | 15 (9.1) |
| Heart disease | 8 (4.8) |
| Another condition/disease | 60 (36.4) |
| Time since last Pap smear | |
| ≤12 months | 103 (62.4) |
| >12 months to ≤3 years | 46 (27.9) |
| >3 years | 7 (4.2) |
| Never had one | 4 (2.4) |
| Don’t know | 5 (3.0) |
| Questionnaire completion | |
| Spanish version | 19 (11.5) |
| Self-completed or some interviewer assistanceᵉ | 91 (55.8) |
| Time taken, min | 13.2 (4.4) |

GED = General Educational Development Test; Pap = Papanicolaou.

Note: Percentages may not sum to 100 because of rounding.

ᵃ None reported Asian race.

ᵇ Indicated only ethnicity.

ᶜ Including children living elsewhere; data missing for 1 respondent.

ᵈ Of women reporting at least 1 condition.

ᵉ Data missing for 2 respondents.

Priorities

Women most frequently chose “support is available for all issues the woman is facing” as the biggest influence on the decision to pursue testing (chosen 370 times), followed by no-cost testing (277 times) (Table 2). Personal hygiene accommodations and the provider being familiar to the woman were most frequently chosen as having the smallest influence on the decision to pursue testing (309 and 262 times, respectively).

Table 2.

Subjective Priority of Attributes Influencing Acceptance of Pap Smears Among Homeless Women: Frequency Counts and Standardized Score

| Attribute | No. of Times Chosen as Biggest Influence | No. of Times Chosen as Smallest Influence | Standardized Scoreᵃ |
|---|---|---|---|
| Support is available for all issues the woman is facing | 370 | 51 | 0.39 |
| Testing is done at no cost | 277 | 96 | 0.22 |
| Testing is not contingent on substance use | 189 | 208 | −0.02 |
| Counseling is available to discuss results | 182 | 72 | 0.13 |
| Testing is done at convenient time | 174 | 119 | 0.07 |
| Choice of provider sex | 169 | 178 | −0.01 |
| Time during procedure for questions/explanations | 105 | 133 | −0.03 |
| Setting is accepting of homeless | 104 | 231 | −0.15 |
| Provider is kind | 88 | 156 | −0.08 |
| Personal hygiene accommodations | 83 | 309 | −0.27 |
| Provider is familiar to woman | 74 | 262 | −0.23 |

Pap = Papanicolaou.

ᵃ Number of times chosen as biggest influence minus number of times chosen as smallest influence, divided by the number of times the attribute was available to be selected per the experimental design (for this design, 5 × number of respondents). Standardized scores indicate the salience of an attribute on a scale from −1.0 to +1.0. Scores toward +1.0 indicate salience as a biggest influence on testing, scores toward −1.0 indicate salience as a smallest influence on testing, and a score of 0 indicates no salience to the decision to undergo testing.
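As a check on the arithmetic, the standardized scores in Table 2 can be recomputed from the published counts alone. The sketch below simply re-enters the table; the only other inputs are the design constant (each attribute appears in 5 sets) and the sample size of 165. Constant and function names are our own.

```python
N_RESPONDENTS = 165
APPEARANCES_PER_ATTRIBUTE = 5  # each attribute appears in 5 of the 11 sets

# (attribute, times chosen as biggest influence, times chosen as smallest), from Table 2
TABLE_2 = [
    ("Support is available for all issues the woman is facing", 370, 51),
    ("Testing is done at no cost", 277, 96),
    ("Testing is not contingent on substance use", 189, 208),
    ("Counseling is available to discuss results", 182, 72),
    ("Testing is done at convenient time", 174, 119),
    ("Choice of provider sex", 169, 178),
    ("Time during procedure for questions/explanations", 105, 133),
    ("Setting is accepting of homeless", 104, 231),
    ("Provider is kind", 88, 156),
    ("Personal hygiene accommodations", 83, 309),
    ("Provider is familiar to woman", 74, 262),
]


def standardized_score(biggest, smallest, n=N_RESPONDENTS, r=APPEARANCES_PER_ATTRIBUTE):
    """(biggest - smallest) / (r x n), bounded by -1.0 and +1.0."""
    return (biggest - smallest) / (r * n)


# Each respondent makes one biggest and one smallest choice in each of 11 sets,
# so each column of counts must total 11 x 165 = 1,815.
assert sum(b for _, b, _ in TABLE_2) == 11 * N_RESPONDENTS
assert sum(s for _, _, s in TABLE_2) == 11 * N_RESPONDENTS

ranked = sorted(TABLE_2, key=lambda row: standardized_score(row[1], row[2]), reverse=True)
for name, biggest, smallest in ranked:
    print(f"{standardized_score(biggest, smallest):+.2f}  {name}")
```

Because both columns total 1,815, the 11 scores sum to zero: each score expresses an attribute’s salience relative to the others rather than as an absolute rating.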

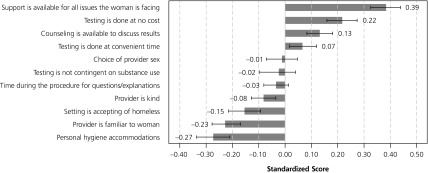

The relative priority assigned to each attribute is represented by the standardized scores depicted in Figure 2. For example, the score for “support is available for all issues the woman is facing” is computed as (370 − 51)/(5 × 165) = 0.39. The length of each bar represents the relative salience of the attribute for the screening decision, on a scale ranging from −1.0 to +1.0; the 95% confidence intervals indicate the precision of each estimated score (nonoverlapping intervals indicate a statistically significant difference, although overlapping intervals do not by themselves rule one out). Stratified analyses showed that personal hygiene accommodations were significantly less important for women who reported addiction, but no other attributes differed significantly across strata (results not shown).

Figure 2.

Standardized scores for the 11 hypothetical screening attributes.

Note: Standardized “biggest – smallest” scores and 95% confidence intervals for the 11 hypothetical screening attributes influencing homeless women’s decision to be tested. A total of 165 women participated, each of whom chose biggest and smallest attributes from 11 sets of 5 attributes each (3,630 total choices).
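The stratified comparisons reported above are not described in procedural detail. One plausible approach, offered here only as an assumption, is to bootstrap respondents separately within each stratum (for example, women reporting addiction vs not) and form a percentile interval for the difference in an attribute’s score between strata; an interval excluding 0 would suggest a difference. The sketch summarizes each respondent by how many times she chose the single attribute as biggest and as smallest (each between 0 and 5 in this design). Function names are hypothetical, and the counts in the usage line are made up.

```python
import random
import statistics


def stratum_score(counts, appearances=5):
    """counts: one (times_biggest, times_smallest) pair per respondent for a
    single attribute. Returns the biggest-minus-smallest standardized score."""
    net = sum(biggest - smallest for biggest, smallest in counts)
    return net / (appearances * len(counts))


def bootstrap_difference(group_a, group_b, n_boot=1000, seed=0, appearances=5):
    """Point estimate and percentile 95% interval for score(A) - score(B),
    resampling respondents with replacement within each stratum."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_boot):
        resample_a = rng.choices(group_a, k=len(group_a))
        resample_b = rng.choices(group_b, k=len(group_b))
        diffs.append(stratum_score(resample_a, appearances)
                     - stratum_score(resample_b, appearances))
    cut_points = statistics.quantiles(diffs, n=40)  # cuts at 2.5% steps
    point = stratum_score(group_a, appearances) - stratum_score(group_b, appearances)
    return point, (cut_points[0], cut_points[-1])


# Made-up counts for one attribute in two strata, for illustration only.
stratum_1 = [(0, 3), (1, 2), (0, 4), (0, 2), (1, 3)]
stratum_2 = [(1, 1), (2, 1), (0, 1), (1, 2), (2, 0)]
print(bootstrap_difference(stratum_1, stratum_2))
```

Resampling within each stratum keeps the stratum sizes fixed, mirroring a comparison of two separately sampled groups.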

Interviewers used their judgment to assess participant comprehension and cooperation, and we conducted analyses with and without the 5 women who did not meet the criteria for comprehension and cooperation. We found no difference in results and therefore included all women in our analytic data set.

DISCUSSION

BWS offers a simple, transparent, patient-centered method to assess the relative importance of factors that guide decisions. The method is feasible for both patients and investigators, and it yields priorities that can directly inform the allocation of resources by indicating what should be done first among a list of possibilities.

Homeless women were willing and able to complete our survey, as indicated by our response rate and minimal missing data. Our results differed from the literature in some respects: characteristics of providers and settings were relatively low in priority for the women in our sample compared with those reported in the literature,17,19,31–33 as were provider sex and perceived barriers to care due to substance use.17,24,34 Our illustration shows how BWS can rank by importance the many factors identified as barriers or facilitators in decisions, which is critical for policy decisions and informative for intervention design.

The limitations of BWS impose some constraints on results. First, this method prioritizes only the attributes included in the task, so results are relative among those attributes and say nothing about factors omitted from the inquiry. Second, good practice dictates that BWS attributes be derived empirically from qualitative research and that the descriptors be pretested for comprehension.35 Nevertheless, as qualitative descriptors, attributes are subject to respondent interpretation: they may be interpreted differently than intended, or differently across respondents. Our most important attribute, “support is available for all issues the woman is facing,” was derived from a compilation of concerns expressed by women in our focus groups (such as housing, emotional support, transportation, and child care) and was intended to capture things not typically provided in a health care setting.24 The broad scope of this attribute may explain its high relative importance: women may have interpreted it differently, and it bundles multiple factors into a single attribute. BWS surveys can be designed to offer finer distinctions among attributes to address this limitation. The object case that we used measures priorities across attributes defined categorically, for example, support vs cost vs hygiene accommodations.23 The “profile” case extends the design to allow for levels within attributes that are ordinal or continuous: a little or a lot of support; low, moderate, or high cost.23 Adding levels within each attribute increases the number of possible combinations of choices and, hence, the sample size required to collect data on all combinations.
We could have further distinguished among the components of “support for all issues” to illuminate what within that category was important to women, but our intent was to distinguish between factors internal and external to the health care system. The BWS design can be selected to assess the specific trade-offs of interest to the investigator, and pretesting can inform interpretation of the attribute labels.

A third limitation is that population-based samples are rarely available for survey research, so selection bias is often a concern. Ninety percent of the women in our sample reported having had a Pap smear within the past 3 years (the current recommended screening interval36), far above the 51.4% screening rate for the entire Boston Health Care for the Homeless Program.37 We recruited women exclusively from sheltered locations and excluded women who were not awake and alert, and thereby may have selected women who are screened at a higher rate. Moreover, our qualitative data revealed some confusion among women in distinguishing between pelvic examinations and Pap smears, which may have led to overreporting of Pap smears in our survey.24 One approach to addressing selection bias is to frame questions in terms of a general population to which respondents belong, or to ask respondents to consider policy options; both approaches steer respondents away from reporting only their personal experience. We framed our question by asking respondents to consider the priorities of “women like them” to encourage population-level insights that might extend beyond the screening history of the women in our sample. Nevertheless, the external validity of our results is limited.

Finally, BWS assumes that preferences are sufficiently similar across individuals in the sample that the mean is representative for the group. This assumption may not be reasonable for all samples, and methods have been proposed to explore subgroup differences (eg, data segmentation and latent class analysis38,39).

In conclusion, BWS quantifies patient priorities in a manner that is transparent and accessible. It is easily understood by survey participants and relatively simple to administer. Our application illustrates its use in a vulnerable population, showing that factors beyond those typically provided in health care settings are highly important to women in seeking Pap tests. This approach can be applied to other health care areas where prioritization is helpful to guide decisions.

Acknowledgments

The authors gratefully acknowledge the assistance of Adrianna Saada, MPH, and Emely Santiago in data collection. We also thank the organizations that generously allowed us to collect data from their patients and clients. Finally, we are most appreciative of the women who participated in the study, without whom this research would have been impossible.

Footnotes

Conflicts of interest: authors report none.

Funding support: Research reported in this publication was supported by the National Cancer Institute of the National Institutes of Health under award number R21CA164712.

Disclaimer: The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The funding agreement guaranteed the authors’ independence in the research.

Previous presentations: Preliminary results were presented at the 36th Annual Meeting of the Society for Medical Decision Making; October 18–22, 2014; Miami, Florida.

References

1. Flynn TN, Louviere JJ, Peters TJ, Coast J. Best–worst scaling: What it can do for health care research and how to do it. J Health Econ. 2007;26(1):171–189.
2. Ungar WJ, Hadioonzadeh A, Najafzadeh M, Tsao NW, Dell S, Lynd LD. Quantifying preferences for asthma control in parents and adolescents using best-worst scaling. Respir Med. 2014;108(6):842–851.
3. Ejaz A, Spolverato G, Bridges JF, Amini N, Kim Y, Pawlik TM. Choosing a cancer surgeon: analyzing factors in patient decision making using a best-worst scaling methodology. Ann Surg Oncol. 2014;21(12):3732–3738.
4. Ross M, Bridges JF, Ng X, et al. A best-worst scaling experiment to prioritize caregiver concerns about ADHD medication for children. Psychiatr Serv. 2015;66(2):208–211.
5. Najafzadeh M, Lynd LD, Davis JC, et al. Barriers to integrating personalized medicine into clinical practice: a best-worst scaling choice experiment. Genet Med. 2012;14(5):520–526.
6. Lancsar E, Louviere J. Conducting discrete choice experiments to inform healthcare decision making: a user’s guide. Pharmacoeconomics. 2008;26(8):661–677.
7. Gallego G, Bridges JF, Flynn T, Blauvelt BM, Niessen LW. Using best-worst scaling in horizon scanning for hepatocellular carcinoma technologies. Int J Technol Assess Health Care. 2012;28(3):339–346.
8. Ryan M, Farrar S. Using conjoint analysis to elicit preferences for health care. BMJ. 2000;320(7248):1530–1533.
9. Bridges JF, Kinter ET, Kidane L, Heinzen RR, McCormick C. Things are looking up since we started listening to patients: trends in the application of conjoint analysis in health 1982–2007. Patient. 2008;1(4):273–282.
10. Baggett TP, Chang Y, Porneala BC, Bharel M, Singer DE, Rigotti NA. Disparities in cancer incidence, stage, and mortality at Boston Health Care for the Homeless Program. Am J Prev Med. 2015;49(5):694–702.
11. National Center for Health Statistics. Health, United States, 2013. Hyattsville, MD: US Government Printing Office; 2014.
12. Chau S, Chin M, Chang J, et al. Cancer risk behaviors and screening rates among homeless adults in Los Angeles County. Cancer Epidemiol Biomarkers Prev. 2002;11(5):431–438.
13. Diamant AL, Brook RH, Fink A, Gelberg L. Use of preventive services in a population of very low-income women. J Health Care Poor Underserved. 2002;13(2):151–163.
14. Long HL, Tulsky JP, Chambers DB, et al. Cancer screening in homeless women: attitudes and behaviors. J Health Care Poor Underserved. 1998;9(3):276–292.
15. Teruya C, Longshore D, Andersen RM, et al. Health and health care disparities among homeless women. Women Health. 2010;50(8):719–736.
16. Weinreb L, Goldberg R, Lessard D. Pap smear testing among homeless and very low-income housed mothers. J Health Care Poor Underserved. 2002;13(2):141–150.
17. Ackerson K, Gretebeck K. Factors influencing cancer screening practices of underserved women. J Am Acad Nurse Pract. 2007;19(11):591–601.
18. Hogenmiller JR, Atwood JR, Lindsey AM, Johnson DR, Hertzog M, Scott JC Jr. Self-efficacy scale for Pap smear screening participation in sheltered women. Nurs Res. 2007;56(6):369–377.
19. Spadea T, Bellini S, Kunst A, Stirbu I, Costa G. The impact of interventions to improve attendance in female cancer screening among lower socioeconomic groups: a review. Prev Med. 2010;50(4):159–164.
20. de Bekker-Grob EW, Donkers B, Jonker MF, Stolk EA. Sample size requirements for discrete-choice experiments in healthcare: a practical guide. Patient. 2015;8(5):373–384.
21. Orme B. Getting Started with Conjoint Analysis: Strategies for Product Design and Pricing Research. Madison, WI: Research Publishers LLC; 2006.
22. Louviere J, Flynn T, Marley A. Best-Worst Scaling: Theory, Methods and Applications. Cambridge, UK: Cambridge University Press; 2015.
23. Flynn TN. Valuing citizen and patient preferences in health: recent developments in three types of best-worst scaling. Expert Rev Pharmacoecon Outcomes Res. 2010;10(3):259–267.
24. Wittenberg E, Bharel M, Saada A, Santiago E, Bridges JF, Weinreb L. Measuring the preferences of homeless women for cervical cancer screening interventions: development of a Best-Worst Scaling survey. Patient. 2015;8(5):455–467.
25. Soutar G, Sweeney J, McColl-Kennedy J. Best-worst scaling: an alternative to ratings data. In: Louviere J, Flynn T, Marley A, eds. Best-Worst Scaling: Theory, Methods and Applications. Cambridge, UK: Cambridge University Press; 2015:177–187.
26. Louviere JJ, Flynn TN. Using best-worst scaling choice experiments to measure public perceptions and preferences for healthcare reform in Australia. Patient. 2010;3(4):275–283.
27. The %MktBIBD Macro. SAS Institute Inc website. http://support.sas.com/rnd/app/macros/mktbibd/mktbibd.htm Accessed Feb 1, 2016.
28. Hollander M, Wolfe D, Chicken E. Nonparametric Statistical Methods. 3rd ed. Hoboken, NJ: John Wiley and Sons; 2013.
29. Finn A, Louviere JJ. Determining the appropriate response to evidence of public concern: the case of food safety. J Public Policy Mark. 1992;11(2):12–25.
30. Marley A, Louviere J. Some probabilistic models of best, worst, and best-worst choices. J Math Psychol. 2005;49(6):464–480.
31. Ackerson K, Preston SD. A decision theory perspective on why women do or do not decide to have cancer screening: systematic review. J Adv Nurs. 2009;65(6):1130–1140.
32. Baron RC, Rimer BK, Breslow RA, et al; Task Force on Community Preventive Services. Client-directed interventions to increase community demand for breast, cervical, and colorectal cancer screening: a systematic review. Am J Prev Med. 2008;35(1)(Suppl):S34–S55.
33. Task Force on Community Preventive Services. Recommendations for client- and provider-directed interventions to increase breast, cervical, and colorectal cancer screening. Am J Prev Med. 2008;35(1)(Suppl):S21–S25.
34. Zuckerman M, Navizedeh N, Feldman J, McCalla S, Minkoff H. Determinants of women’s choice of obstetrician/gynecologist. J Womens Health Gend Based Med. 2002;11(2):175–180.
35. Coast J, Horrocks S. Developing attributes and levels for discrete choice experiments using qualitative methods. J Health Serv Res Policy. 2007;12(1):25–30.
36. Moyer VA. Screening for cervical cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2012;156(12):880–891, W312.
37. HRSA Health Center Program. Clinical and financial performance measures. http://bphc.hrsa.gov/qualityimprovement/performancemeasures/index.html Accessed Oct 29, 2015.
38. Deal K. Segmenting patients and physicians using preferences from discrete choice experiments. Patient. 2014;7(1):5–21.
39. Fraenkel L, Lim J, Garcia-Tsao G, Reyna V, Monto A. Examining hepatitis C virus treatment preference heterogeneity using segmentation analysis: treat now or defer? J Clin Gastroenterol. 2016;50(3):252–257.