Abstract

Objectives. To study the effects of several survey features on response rates in a general population health survey.

Methods. In 2012 and 2013, 8000 households in British Columbia, Canada, were randomly allocated to 1 of 7 survey variants, each containing a different combination of survey features. Features compared included administration modes (paper vs online), prepaid incentive ($2 coin vs none), lottery incentive (instant vs end-of-study), questionnaire length (10 minutes vs 30 minutes), and sampling frame (InfoCanada vs Canada Post).

Results. The overall response rate across the 7 groups was 27.9% (range = 17.1–43.4). All survey features except the sampling frame were associated with statistically significant differences in response rates. The survey mode elicited the largest effect on the odds of response (odds ratio [OR] = 2.04; 95% confidence interval [CI] = 1.61, 2.59), whereas the sampling frame showed the least effect (OR = 1.14; 95% CI = 0.98, 1.34). The highest response was achieved by mailing a short paper survey with a prepaid incentive.

Conclusions. In a mailed general population health survey in Canada, a 40% to 50% response rate can be expected. Questionnaire administration mode, survey length, and type of incentive affect response rates.

Because of changing public opinion on survey participation, response rates from all forms of population-based data collection have declined consistently in recent decades.1–5 Poor response rates can give rise to nonresponse bias when respondents differ systematically from nonrespondents.6 This bias can lead to erroneous conclusions and limit the generalizability of findings.

A number of studies have examined the effects of survey design on response rates. In a meta-analysis of 115 studies, Yammarino et al. showed that survey length, monetary incentive, and repeated contact were associated with higher response rates in a mailed survey.7 In a Cochrane systematic review conducted by Edwards et al., factors associated with increased response rate to a mailed survey were shorter questionnaire, personalized cover letters, the use of colored ink, monetary incentives, prepaid incentive, first-class postage with tracking, inclusion of return postage with stamp, previous contact with participant, follow-up contact, and provision of a second questionnaire.8 In the same study, factors that were found to increase response rate in Web-based surveys included cash lotteries, shorter questionnaire, inclusion of visual elements, ease of login, and Internet speed.8,9

Several studies compared the response between mail and Web-based surveys, with findings consistently showing a higher response rate associated with mail surveys.10–13 Although online surveys present advantages such as reduced cost, convenience, geographic access, and improved timeliness compared with paper surveys, methodological issues exist, including restricted Internet access and lack of population-based sampling frames.

Incentive use in mail surveys is widely recognized as an effective means to increase response rate. Evidence suggests that a prepaid incentive may result in a higher response rate than a conditional incentive that is given only after the questionnaire has been completed.9,14 A 1993 meta-analysis of 38 studies showed that mail surveys that included a prepaid reward produced an average response increase of 19.1%, whereas surveys that contained a conditional reward showed an average increase of 7.9%.14 Lottery incentives have been deemed useful for providing incentives online.9,15,16 However, the use of an instant lottery to increase survey response is a more recent concept. Tuten et al. suggested that the effect of lottery incentives could be further improved when participants were notified of the results of the prize draw immediately upon survey completion.15 To date, we could find no study that has compared the effects of an instant lottery and a prepaid coin incentive on response rate.

The length of the questionnaire is another important factor to consider when one is implementing a general population survey. A number of studies reported that Web survey participants were more likely to respond to shorter questionnaires.17–19 Marcus et al. used a factorial design to examine the effect of topic salience and survey length on response rate.18 Results showed a statistically higher response rate associated with shorter survey length (30.8% vs 18.6%).

Inclusion of a personalized survey invitation has been noted as a worthwhile strategy to carry out when a low response rate is expected.19,20 Field et al. classified a number of survey methods as personalization. These include direct telephone contact, inclusion of handwritten notes, and personalization of the cover letter and envelope.21 The implication is that personalization invokes the necessary social exchange that can increase survey response.22 Results from previous studies suggest that personalization of the survey invitation is an important factor in the individual’s decision to participate in the survey.23,24

Lastly, it is crucial for survey respondents to reflect the characteristics of the target population because the usefulness and accuracy of the results rely on the coverage of its sampling frame. A biased sample that is systematically different in demographic characteristics compared with the intended population may produce inaccurate results. Therefore, selecting a sampling frame that maximizes coverage within the target population is key to collecting accurate public health information.

The objective of the current study was to determine the effect of several survey design factors, including survey mode, the use of monetary incentives, questionnaire length, and sampling frame, on response rates by using a general population health survey administered to British Columbian households.

METHODS

The British Columbia Health Survey (BCHS) was conducted between September 2012 and February 2013. This general population survey was designed to target all community-dwelling adults in British Columbia. The survey asked about general health, quality of life, and use of health services, with an emphasis on osteoarthritis. The InfoCanada sampling frame is updated annually with National Change of Address done each month. The Canada Post sampling frame is updated on a monthly basis.

Invitation letters were mailed to 8000 randomly selected households in British Columbia, which were randomly allocated to 1 of the 7 experimental groups (Table 1). The baseline group received the baseline survey without any incentives. The L incentive and C incentive group surveys contained the instant lottery (10 prizes of $100, odds of 1 in 800, and a grand prize of $1000, odds of 1 in 8000) and the $2 prepaid coin incentive, respectively. The LC incentive survey contained both the instant lottery and the prepaid coin incentive. The LC InfoCan participants were selected from the InfoCanada sampling frame as opposed to Canada Post. The LC short survey contained the shortened questionnaire (10 minutes, 39 items vs 30 minutes, 219 items). Lastly, the C short paper survey was administered via the paper mode instead of online.
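The allocation described above can be illustrated with a minimal sketch. It assumes that the 6000 Canada Post addresses were shuffled and split evenly across the 6 Canada Post groups and that all 2000 InfoCanada addresses formed the LC InfoCan group. The function name, the seed, and the integer address identifiers are hypothetical and are not taken from the study.

```python
import random

# Group labels follow Table 1; the six groups below draw on Canada Post addresses.
CANADA_POST_GROUPS = [
    "Baseline", "L incentive", "C incentive",
    "LC incentive", "LC short", "C short paper",
]

def allocate(canada_post_ids, infocanada_ids, seed=2012):
    """Randomly split Canada Post addresses into equal groups (illustrative only)."""
    rng = random.Random(seed)          # fixed seed so the allocation is reproducible
    shuffled = list(canada_post_ids)
    rng.shuffle(shuffled)
    size = len(shuffled) // len(CANADA_POST_GROUPS)
    groups = {
        name: shuffled[i * size:(i + 1) * size]
        for i, name in enumerate(CANADA_POST_GROUPS)
    }
    # Assumption: every InfoCanada address goes to the single InfoCanada group.
    groups["LC InfoCan"] = list(infocanada_ids)
    return groups

# Hypothetical identifiers: 0-5999 for Canada Post, 6000-7999 for InfoCanada.
groups = allocate(range(6000), range(6000, 8000))
for name, members in groups.items():
    print(f"{name:14s} n = {len(members)}")
```

Fixing the seed makes the allocation auditable; any real study would document its own randomization procedure.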

TABLE 1. Combinations of Survey Factors Included Within Mail-Out Groups: British Columbia, Canada, 2012–2013

| Variables | Baseline | L Incentive | C Incentive | LC Incentive | LC InfoCan | LC Short | C Short Paper |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Sample size | 1000 | 1000 | 1000 | 1000 | 2000 | 1000 | 1000 |
| Survey mode | Online | Online | Online | Online | Online | Online | Paper |
| Prepaid cash incentive | No | No | Yes | Yes | Yes | Yes | Yes |
| Instant lottery | No | Yes | No | Yes | Yes | Yes | No |
| Survey length | Long | Long | Long | Long | Long | Short | Short |
| Sampling frame | Canada Post | Canada Post | Canada Post | Canada Post | InfoCanada | Canada Post | Canada Post |

Note. C incentive = prepaid coin, long questionnaire, Canada Post sampling frame, Web-based survey; C short paper = prepaid coin, short questionnaire, Canada Post sampling frame, paper survey; LC incentive = prepaid coin, instant lottery, long questionnaire, Canada Post sampling frame, Web-based survey; LC InfoCan = prepaid coin, instant lottery, long questionnaire, InfoCanada sampling frame, Web-based survey; LC short = prepaid coin, instant lottery, short questionnaire, Canada Post sampling frame, Web-based survey; L incentive = instant lottery, long questionnaire, Canada Post sampling frame, Web-based survey.

Overall, Canada Post provided 6000 residential addresses and InfoCanada provided 2000. Household addresses provided by Canada Post were selected from an address database, whereas those provided by InfoCanada were obtained through a phone directory. Furthermore, letters sent to households obtained through InfoCanada were personally addressed, whereas letters to addresses provided by Canada Post were not; instead, a generic heading (“Dear British Columbia Resident”) was used. These letters asked the household adult (aged 18 years or older) with the most recent birthday to complete and return the survey. After the initial study invitation (November 11), 3 reminder mailings were sent at weeks 1, 3, and 5 (November 18, November 29, and December 13, respectively). The week-3 reminder included a second copy of the survey for the paper questionnaire group. Individuals who had already returned the survey were excluded from receiving subsequent reminders. For online surveys, different login keywords were provided for each group. Paper surveys were mailed together with the invitation letter and a prepaid return envelope. Participants who were unable or preferred not to complete the Web-based survey were offered a paper survey as an alternative. The reverse option (completing the paper survey online) was not offered.

Comparisons

Survey design features under examination included survey mode (paper vs online), provision of prepaid coin incentive (prepaid coin incentive vs no coin incentive), methods of lottery incentives (instant lottery vs poststudy lottery), survey length (10 minutes vs 30 minutes), and sampling frame (InfoCanada vs Canada Post).

We hypothesized that the use of various incentives and survey factors would generate higher response rates compared with the reference categories. Specific hypotheses were as follows:

(1) the use of a paper survey will generate a higher response rate than the online survey (LC short vs C short paper),

(2) a shorter questionnaire will result in a higher response rate than the longer questionnaire (LC incentive vs LC short),

(3) the inclusion of an instant $100 lottery will generate a higher response rate than an end-of-study lottery (baseline vs L incentive),

(4) the inclusion of a $2 prepaid coin incentive will generate a higher response rate than no coin incentive (baseline vs C incentive), and

(5) the survey group selected from the InfoCanada sampling frame will generate a higher response rate than those selected from the Canada Post sampling frame (LC incentive vs LC InfoCan).

Statistical Analysis

We examined demographic characteristics of respondents across all 7 groups. We examined mean age differences across the groups with 1-way analysis of variance, and we assessed gender and education-level differences by using the χ2 test for independence. We calculated the adjusted response rate by using the intention-to-treat principle.25 Participants who chose to complete the paper survey in place of the online survey were analyzed with their originally assigned groups.
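As a worked illustration of the intention-to-treat adjustment, the sketch below uses the raw and adjusted response counts reported in Table 2. It assumes that the difference between the two rows reflects respondents from online groups who completed the paper alternative and were counted back in their assigned group. That interpretation is an inference from the table, not a documented procedure.

```python
# (group, invited, raw responses, adjusted responses), copied from Table 2.
TABLE2 = [
    ("Baseline",      1000, 168, 171),
    ("L incentive",   1000, 198, 198),
    ("C incentive",   1000, 205, 208),
    ("LC incentive",  1000, 281, 282),
    ("LC InfoCan",    2000, 592, 601),
    ("LC short",      1000, 332, 337),
    ("C short paper", 1000, 455, 434),
]

total_invited = sum(n for _, n, _, _ in TABLE2)
total_raw = sum(raw for _, _, raw, _ in TABLE2)
total_adjusted = sum(adj for _, _, _, adj in TABLE2)

# Reassigning respondents to their allocated group moves counts between
# groups but cannot change the overall number of responses.
overall_rate = 100 * total_adjusted / total_invited

# Net change per group when respondents are analyzed as assigned.
reassigned = {group: adj - raw for group, _, raw, adj in TABLE2}

for group, n, raw, adj in TABLE2:
    print(f"{group:14s} raw {100 * raw / n:.1f}%  adjusted {100 * adj / n:.1f}%")
print(f"Overall: {total_adjusted}/{total_invited} = {overall_rate:.1f}%")
```

The counts gained by the 6 online groups sum to the count lost by the paper group, which is what one would expect if only the group labels changed.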

We made 6 a priori pairwise comparisons to examine whether the addition of specific survey factor(s) generated significant response rate differences. We calculated the χ2 test of independence with an α level of 0.05 for each comparison. We used a logistic regression model based on the pooled sample to determine the effect of each factor with control for other factors in the full sample. We completed all statistical analyses with RStudio (version 0.97.314, 2009–2015, RStudio Inc, Boston, MA).

RESULTS

Of the 8000 BCHS surveys sent, 2231 responses were received (27.9%). The mean age of BCHS survey groups varied from 50.4 years (C incentive) to 57.3 years (C short paper; Table 2). Differences in age across all groups were nonsignificant (P > .05), with the exception of higher age of the participants in LC InfoCan and C short paper (P < .01). There were more women than men in all groups (P > .05) except LC InfoCan (P < .001). Education levels did not differ significantly across the groups (P > .05; Table 2).

TABLE 2. Response Rates and Demographics of Sampling Groups: British Columbia, Canada, 2012–2013

| Survey Groups | Baseline, No. (%) or Mean ± SD | L Incentive, No. (%) or Mean ± SD | C Incentive, No. (%) or Mean ± SD | LC Incentive, No. (%) or Mean ± SD | LC InfoCan, No. (%) or Mean ± SD | LC Short, No. (%) or Mean ± SD | C Short Paper, No. (%) or Mean ± SD |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Sample size | 1000 | 1000 | 1000 | 1000 | 2000 | 1000 | 1000 |
| Response rate | 168 (16.8) | 198 (19.8) | 205 (20.5) | 281 (28.1) | 592 (29.6) | 332 (33.2) | 455 (45.5) |
| Adjusted response rate^a | 171 (17.1) | 198 (19.8) | 208 (20.8) | 282 (28.2) | 601 (30.1) | 337 (33.7) | 434 (43.4) |
| Age, y | 53.4 ± 16.0 | 51.0 ± 16.5 | 50.4 ± 16.4 | 51.9 ± 15.4 | 57.2 ± 14.5 | 51.2 ± 17.1 | 57.3 ± 17.1 |
| Gender | | | | | | | |
| Male | 57 (33.9) | 79 (40.0) | 86 (41.7) | 116 (41.3) | 346 (58.4) | 147 (44.3) | 189 (41.9)^b |
| Female | 111 (66.1) | 119 (60.0) | 119 (58.3) | 165 (58.7) | 246 (41.6) | 185 (55.7) | 262 (58.1)^b |
| Education | | | | | | | |
| No diploma | 25 (14.9) | 20 (10.1) | 19 (9.3) | 29 (10.3) | 66 (11.1) | 33 (9.9) | 40 (8.8) |
| Secondary | 27 (16.1) | 39 (19.6) | 32 (15.6) | 47 (16.7) | 91 (15.4) | 43 (13.0) | 83 (18.2) |
| Postsecondary | 83 (49.4) | 104 (52.5) | 117 (57.1) | 151 (53.7) | 320 (54.1) | 175 (52.7) | 247 (54.3) |
| Graduate level | 27 (16.1) | 28 (14.1) | 26 (12.7) | 46 (16.4) | 92 (15.5) | 55 (16.6) | 73 (16.0) |
| Not stated | 6 (3.6) | 7 (3.5) | 11 (5.4) | 8 (2.8) | 23 (3.9) | 26 (7.8) | 12 (2.6) |

Note. C incentive = prepaid coin, long questionnaire, Canada Post sampling frame, Web-based survey; C short paper = prepaid coin, short questionnaire, Canada Post sampling frame, paper survey; LC incentive = prepaid coin, instant lottery, long questionnaire, Canada Post sampling frame, Web-based survey; LC InfoCan = prepaid coin, instant lottery, long questionnaire, InfoCanada sampling frame, Web-based survey; LC short = prepaid coin, instant lottery, short questionnaire, Canada Post sampling frame, Web-based survey; L incentive = instant lottery, long questionnaire, Canada Post sampling frame, Web-based survey.

^a Adjusted response rate = intention-to-treat method.

^b Four responses of “not applicable” in C short paper group for gender.

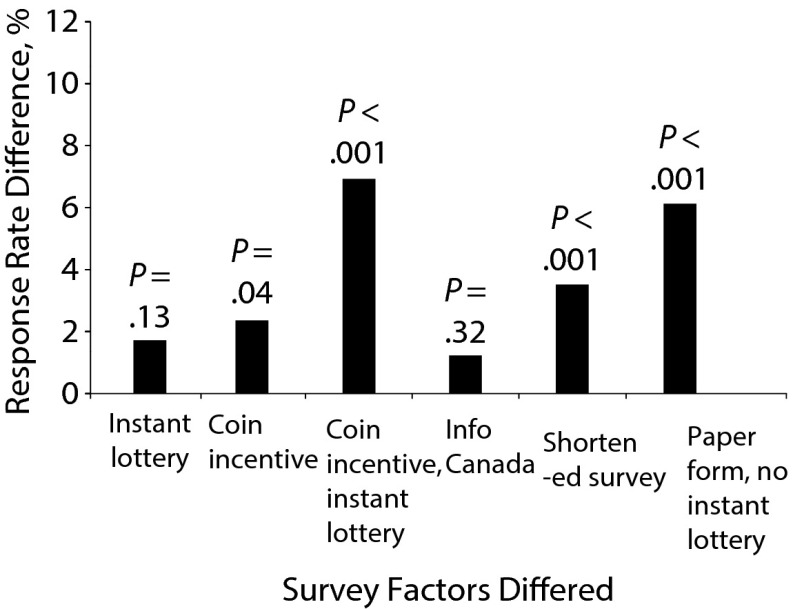

Adjusted response rates were 17.1% in the baseline group, 19.8% in the L incentive group, 20.8% in the C incentive group, 28.2% in the LC incentive group, 30.1% in the LC InfoCan group, 33.7% in the LC short group, and 43.4% in the C short paper group (Table 2). With the exceptions of instant lottery and InfoCanada sampling frame, all other factors in prespecified comparisons showed a significant impact on response rates (Figure 1).
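The pairwise comparisons can be approximated from the adjusted counts in Table 2. The following is a minimal sketch, not the authors' R code: it computes the Pearson χ2 statistic for a 2 × 2 table without continuity correction (an assumption, because the correction setting is not reported) and covers only the 5 comparisons named in the hypotheses. The function and variable names are illustrative.

```python
import math

# Adjusted (intention-to-treat) responders and invited households, from Table 2.
ADJUSTED = {
    "Baseline": (171, 1000),
    "L incentive": (198, 1000),
    "C incentive": (208, 1000),
    "LC incentive": (282, 1000),
    "LC InfoCan": (601, 2000),
    "LC short": (337, 1000),
    "C short paper": (434, 1000),
}

# Reference group first, comparison group second, as paired in the hypotheses.
COMPARISONS = {
    "paper vs online": ("LC short", "C short paper"),
    "short vs long": ("LC incentive", "LC short"),
    "instant lottery": ("Baseline", "L incentive"),
    "prepaid coin": ("Baseline", "C incentive"),
    "InfoCanada frame": ("LC incentive", "LC InfoCan"),
}

def chi_square_2x2(resp_a, n_a, resp_b, n_b):
    """Pearson chi-square (1 df, no continuity correction) for two response rates."""
    observed = [[resp_a, n_a - resp_a], [resp_b, n_b - resp_b]]
    rows = [n_a, n_b]
    cols = [resp_a + resp_b, n_a + n_b - resp_a - resp_b]
    total = n_a + n_b
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / total
            stat += (observed[i][j] - expected) ** 2 / expected
    # Upper tail of a chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value

results = {}
for label, (ref, new) in COMPARISONS.items():
    results[label] = chi_square_2x2(*ADJUSTED[ref], *ADJUSTED[new])
    stat, p = results[label]
    print(f"{label:18s} chi2 = {stat:6.2f}  p = {p:.4f}")
```

Under these assumptions, the instant lottery and InfoCanada comparisons fall above the 0.05 threshold and the other 3 fall below it, which agrees with the pattern described in the text.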

FIGURE 1. Differences in Response Rates Attributable to Survey Factors: British Columbia, Canada, 2012–2013

In multivariable analysis, paper mode (odds ratio [OR] = 2.04; 95% confidence interval [CI] = 1.61, 2.59), shorter questionnaire (OR = 1.35; 95% CI = 1.13, 1.62), instant lottery (OR = 1.35; 95% CI = 1.16, 1.58), and coin incentive (OR = 1.44; 95% CI = 1.23, 1.67) were associated with significantly higher response rates than were the respective reference categories (Table 3).

TABLE 3. Estimated Odds Ratios and 95% Confidence Intervals of Survey Factors Compared With Reference Categories: British Columbia, Canada, 2012–2013

| Survey Factors | OR (95% CI) |
| --- | --- |
| Sampling frame | |
| InfoCanada | 1.14 (0.98, 1.34) |
| Canada Post (Ref) | 1.00 |
| Lottery incentive | |
| Instant | 1.35 (1.16, 1.58) |
| End-of-study (Ref) | 1.00 |
| Survey length | |
| Short | 1.35 (1.13, 1.62) |
| Long (Ref) | 1.00 |
| Prepaid incentive | |
| Coin | 1.44 (1.23, 1.67) |
| No coin (Ref) | 1.00 |
| Administration mode | |
| Paper | 2.04 (1.61, 2.59) |
| Online (Ref) | 1.00 |

Note. CI = confidence interval; OR = odds ratio.
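The pooled logistic regression can be sketched from group-level data alone. The example below is an illustration under stated assumptions rather than the authors' analysis code: it rebuilds the design from Table 1, uses the adjusted counts from Table 2, includes main effects only, and fits the model by Newton-Raphson in plain Python. All function and variable names are hypothetical.

```python
import math

FACTORS = ["paper", "short", "instant_lottery", "coin", "infocanada"]

# (group, responders, invited, paper, short, instant_lottery, coin, infocanada)
GROUPS = [
    ("Baseline",      171, 1000, 0, 0, 0, 0, 0),
    ("L incentive",   198, 1000, 0, 0, 1, 0, 0),
    ("C incentive",   208, 1000, 0, 0, 0, 1, 0),
    ("LC incentive",  282, 1000, 0, 0, 1, 1, 0),
    ("LC InfoCan",    601, 2000, 0, 0, 1, 1, 1),
    ("LC short",      337, 1000, 0, 1, 1, 1, 0),
    ("C short paper", 434, 1000, 1, 1, 0, 1, 0),
]

def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def fit_logistic(groups, iterations=25):
    """Maximum likelihood fit for grouped binomial data (intercept + main effects)."""
    k = len(FACTORS) + 1
    beta = [0.0] * k
    for _ in range(iterations):
        grad = [0.0] * k
        hess = [[0.0] * k for _ in range(k)]
        for _, y, n, *x in groups:
            row = [1.0] + [float(v) for v in x]
            eta = sum(b * v for b, v in zip(beta, row))
            p = 1.0 / (1.0 + math.exp(-eta))
            weight = n * p * (1.0 - p)
            for i in range(k):
                grad[i] += row[i] * (y - n * p)       # score vector
                for j in range(k):
                    hess[i][j] += weight * row[i] * row[j]  # information matrix
        step = solve(hess, grad)
        beta = [b + s for b, s in zip(beta, step)]
    return beta

beta = fit_logistic(GROUPS)
odds_ratios = {name: math.exp(b) for name, b in zip(FACTORS, beta[1:])}
for name, value in odds_ratios.items():
    print(f"{name:16s} OR = {value:.2f}")
```

Because each household in a group shares the same covariates, fitting to the 7 group totals gives the same coefficient estimates as fitting to 8000 individual records. Confidence intervals could be obtained from the inverse of the final information matrix, which this sketch omits.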

DISCUSSION

Numerous studies have shown a consistent pattern of decreasing survey response rate over the recent decades.1–5 Dillman et al. observed a mean annual decline of 10% in response rate from the late 1980s to 1995.26 In this study, the overall response rate was 27.9%. We found a general pattern of increasing response rate as more survey design features were added. Online response rate was almost doubled (17.1% to 33.7%) with the use of monetary rewards in combination with a relatively short (10 minutes) questionnaire.

Consistent with previous studies,10–12 the paper survey achieved a significantly higher response rate than the online survey (43.4% vs 33.7%). According to Dillman et al., traditional mail surveys continue to be favored for a number of reasons.26 The issue of accessibility is especially prevalent within the elderly population. Recent research has shown that American seniors aged 65 years and older, despite being the fastest growing population in Internet and mobile phone use, continue to lag behind the rest of the population with regard to technology adoption and use.27 More complicated tasks such as login procedure, Web navigation, and troubleshooting may be challenging without clear instructions and timely support from the research staff. Trust also remains a major issue for Web survey participation. Respondents may be reluctant to take part because of fears of potential scams, invasion of privacy, or links containing computer viruses.

In contrast, most people are comfortable with opening a mailed envelope containing a paper questionnaire.28 Paper surveys nonetheless have disadvantages, such as the inability to use extensive skip logic, which lets an online survey automatically skip or fill in later questions on the basis of a previous response. As such, paper surveys may limit survey efficiency and the depth of questions asked. Online surveys may gain popularity by becoming more user-friendly, for example through easier login and a professional interface that includes logos of credible organizations.

When we combined data from all groups in the current study, results showed that participants who received an instant lottery incentive had 35% higher odds of response than those who received a poststudy lottery. In addition to increasing response rate, lotteries may also increase participants’ tendency to remain on the survey after arriving at the survey URL.15,16 There is currently a lack of literature on the implementation of an instant lottery as opposed to an end-of-study lottery. Given our finding, the use of an instant lottery may be a better alternative.
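A 35% increase in the odds of response is not the same as a 35% increase in the response rate. The short sketch below converts an odds ratio into an implied response rate, using the baseline adjusted rate of 17.1% purely as an illustrative starting point; the function name is hypothetical.

```python
def implied_rate(baseline_rate, odds_ratio):
    """Response rate implied by applying an odds ratio to a baseline rate."""
    baseline_odds = baseline_rate / (1.0 - baseline_rate)
    new_odds = baseline_odds * odds_ratio
    return new_odds / (1.0 + new_odds)

# Baseline adjusted response rate (Table 2) and instant lottery OR (Table 3).
rate_with_lottery = implied_rate(0.171, 1.35)
print(f"Implied response rate: {100 * rate_with_lottery:.1f}%")
```

At a baseline near 17%, an odds ratio of 1.35 corresponds to a gain of under 5 percentage points; the same odds ratio implies a different absolute gain at other baseline rates.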

Results of this study were in agreement with past literature that coin incentives are effective in increasing survey response rate.6,29 There are a number of advantages that prepaid coin incentives hold over postpaid monetary rewards. Because incentives encourage early response, cost may be saved from other aspects of the study such as mailing fees for reminder letters.30 In addition, the inclusion of a prepaid award may promote social exchange, establish survey legitimacy, and improve participant cooperation.14

It is well known that a long questionnaire is one of the most frequent reasons for poor response rates.31,32 Indeed, the current study shows that shortening the questionnaire significantly improved survey response. In a survey of unemployed residents in Croatia, response rate was significantly higher for a 10-minute survey than for a 30-minute survey (75% vs 63%, respectively).31 In another study, the response rate was significantly higher for an 8- to 9-minute survey than for a 20-minute survey (67.5% vs 63.4%, respectively).32 Aside from a decrease in response, questionnaires that are overly long may also produce lower-quality data. One study found that surveys longer than about 20 minutes may induce fatigue in respondents, leading to inaccurate response.33

The use of the InfoCanada sampling frame was accompanied by an invitation letter personally addressed to the head of each household (the individual listed in the database). Personalization resulted in a 1.9 percentage point absolute increase in response rate, which was not statistically significant compared with the reference category. This is in contrast to previous studies.20,34 Heerwegh et al. showed that personalization produced a significant 8.6% increase in response rate compared with the control group in a Web survey of students that used personalized salutation.34 Similarly, by using a personalized invitation letter, Joinson and Reips observed a 6.5% (OR = 1.40) increase in response rate compared with a generic heading.20 It was suggested that a personalized invitation letter reduces the participants’ “perception of anonymity” and encourages social exchange.20,22 The observed lack of effect of personalization in our study could be attributable to demographic differences between respondents in each sampling frame. InfoCanada favors older and male respondents (Table 2), which may lead to a decrease in response rate, thus dampening the effect of personalization.

There are a number of limitations of this study. We evaluated the effects of survey factors at specific levels. For example, we offered a $2 reward for the prepaid coin incentives and were not able to examine the effect of a $1 or $5 reward. The effects of different reward sizes and survey lengths on response rates may be of interest in future studies. Another limitation is the inability to identify reasons for nonparticipation, such as refusal versus inability to contact because of change of address, unopened mail, or delivery failure. If nonparticipation is because of the latter, the reported response rates may be underestimated. However, efforts were made to ensure that both sampling frames were up to date. Lastly, the BCHS survey was administered and collected between 2012 and 2013. Given the trend of decreasing response rate over the recent years, results from this study may not accurately reflect the survey response one would expect in 2016.

In conclusion, we assessed the effectiveness of different incentives designed to improve response rates and compared different sampling approaches for mail or online surveys. To the best of our knowledge, this is the first large-scale randomized study to assess the impact of combinations of various survey design methods in online and mail surveys of the general population. Given that the BCHS survey design largely followed the tailored design method of Dillman et al.,35 we believe that these results provide a realistic estimate of expected response rates in future self-administered health surveys in the Canadian general population. With the exception of the InfoCanada sampling frame, the use of paper mode, instant lottery, prepaid coin incentive, and shorter questionnaire all demonstrated significant improvement in response rate compared with the reference conditions. Nevertheless, because attitudes toward survey participation, the social environment, and technology keep changing, researchers must adapt by using appropriate survey methods to maximize the effects of survey design on response rate.

ACKNOWLEDGMENTS

This project was supported by The Canada Foundation for Innovation.

HUMAN PARTICIPANT PROTECTION

The administration and collection of British Columbia Health Survey data were reviewed and approved by the University of British Columbia Behavioral Research Ethics Board (H11-02246).

REFERENCES

1. Beebe TJ, Rey E, Ziegenfuss JY et al. Shortening a survey and using alternative forms of prenotification: impact on response rate and quality. BMC Med Res Methodol. 2010;10:50. doi: 10.1186/1471-2288-10-50.
2. Groves RM. Survey Errors and Survey Costs. New York, NY: John Wiley and Sons; 1989.
3. Loosveldt G, Storms V. Measuring public opinions about surveys. Int J Public Opin Res. 2007;20(1):74–89.
4. Berk ML, Schur CL, Feldman J. Twenty-five years of health surveys: does more data mean better data? Health Aff (Millwood). 2007;26(6):1599–1611. doi: 10.1377/hlthaff.26.6.1599.
5. Rossi P, Henry W, James D, Anderson AB. Sample Surveys: History, Current Practice, and Future Prospects. San Diego, CA: Academic Press; 2003.
6. Brealey SD, Atwell C, Bryan S et al. Improving response rates using a monetary incentive for patient completion of questionnaires: an observational study. BMC Med Res Methodol. 2007;7:12. doi: 10.1186/1471-2288-7-12.
7. Yammarino F, Skinner S, Childers T. Understanding mail survey response behaviour: a meta-analysis. Public Opin Q. 1991;55(4):613–639.
8. Edwards PJ, Roberts I, Clarke MJ et al. Methods to increase response to postal and electronic questionnaires. Cochrane Database Syst Rev. 2009;(3):MR000008. doi: 10.1002/14651858.MR000008.pub4.
9. Doerfling P, Kopec JA, Liang MH, Esdaile JM. The effect of cash lottery on response rates to an online health survey among members of the Canadian Association of Retired Persons: a randomized experiment. Can J Public Health. 2010;101(3):251–254. doi: 10.1007/BF03404384.
10. Leece P, Bhandari M, Sprague S et al. Internet versus mailed questionnaires: a randomized comparison (2). J Med Internet Res. 2004;6(3):e30. doi: 10.2196/jmir.6.3.e30.
11. Whitehead L. Methodological issues in Internet-mediated research: a randomized comparison of Internet versus mailed questionnaires. J Med Internet Res. 2011;13(4):e109. doi: 10.2196/jmir.1593.
12. Akl EA, Maroun N, Klocke RA, Montori V, Schunemann HJ. Electronic mail was not better than postal mail for surveying residents and faculty. J Clin Epidemiol. 2005;58(4):425–429. doi: 10.1016/j.jclinepi.2004.10.006.
13. Mavis BE, Brocato JJ. Postal surveys versus electronic mail surveys. The tortoise and the hare revisited. Eval Health Prof. 1998;21(3):395–408. doi: 10.1177/016327879802100306.
14. Church AH. Estimating the effect of incentives on mail survey response rates: a meta-analysis. Public Opin Q. 1993;57(1):62–79.
15. Tuten TL, Galesic M, Bosnjak M. Effects of immediate versus delayed notification of prize draw results on response behavior in Web surveys: an experiment. Soc Sci Comput Rev. 2004;22(3):377–384.
16. Bosnjak M, Tuten TL. Prepaid and promised incentives in Web surveys: an experiment. Soc Sci Comput Rev. 2003;21(2):208–217.
17. McCambridge J, Kalaitzaki E, White IR et al. Impact of length or relevance of questionnaires on attrition in online trials: randomized controlled trial. J Med Internet Res. 2011;13(4):e96. doi: 10.2196/jmir.1733.
18. Marcus B, Bosnjak M, Linder S, Pilischenko S, Schutz A. Compensating for low topic interest and long surveys: a field experiment on nonresponse in Web surveys. Soc Sci Comput Rev. 2007;25(3):372–383.
19. Sahlqvist S, Song Y, Bull F, Adams E, Preston J, Ogilvie D. Effect of questionnaire length, personalisation and reminder type on response rate to a complex postal survey: randomised controlled trial. BMC Med Res Methodol. 2011;11:62. doi: 10.1186/1471-2288-11-62.
20. Joinson AN, Reips U-D. Personalized salutation, power of sender and response rates to Web-based surveys. Comput Human Behav. 2007;23(3):1372–1383.
21. Field TS, Cadoret CA, Brown ML et al. Surveying physicians: do components of the “Total Design Approach” to optimizing survey response rates apply to physicians? Med Care. 2002;40(7):596–605. doi: 10.1097/00005650-200207000-00006.
22. Hill EW. A theological perspective on social exchange theory. J Relig Health. 1992;31(2):141–148. doi: 10.1007/BF00986792.
23. Dillman DA. Mail and Telephone Surveys: The Total Design Method. New York, NY: John Wiley and Sons; 1978.
24. Levy RM, Shapiro M, Halpern SD, Ming ME. Effect of personalization and candy incentive on response rates for a mailed survey of dermatologists. J Invest Dermatol. 2012;132(3 pt 1):724–726. doi: 10.1038/jid.2011.392.
25. Pfeffermann D. The role of sampling weights when modeling survey data. Int Stat Rev. 1993;61(2):317–337.
26. Dillman DA, Dolsen DE, Machlis GE. Increasing response to personally-delivered mail-back questionnaires. J Off Stat. 1995;11(2):129–139.
27. Zickuhr K, Madden M. Older adults and Internet use. Pew Research Center’s Internet and American Life Project. Washington, DC: Pew Research Center; 2012.
28. Couper MP. Technology trends in survey data collection. Soc Sci Comput Rev. 2005;23:486–501.
29. Simmons E, Wilmot A. Incentive payments on social surveys; a literature review. Soc Survey Methodol Bull. 2004;53:1–11.
30. Singer E. The use and effects of incentives in surveys. Oral presentation at: National Science Foundation; October 3–4, 2012; Washington, DC.
31. Galesic M, Bosnjak M. Effects of questionnaire length on participation and indicators of response quality in a Web survey. Public Opin Q. 2009;73(2):349–360.
32. Crawford SD, Couper MP, Lamias MJ. Web surveys perceptions of burden. Soc Sci Comput Rev. 2001;19(2):146–162.
33. MacElroy B. Variables influencing dropout rates in Web-based surveys. Quirk’s Marketing Research Review. 2000:2000071.
34. Heerwegh D, Loosveldt G, Vanhove T, Matthijs T. Effects of personalization on web survey data response rates and data quality. Int J Soc Res Methodol. 2004;8(2):85–99.
35. Dillman DA, Smyth JD, Christian LM. Mail and Internet Surveys: The Tailored Design Method. Hoboken, NJ: John Wiley and Sons; 2009.