Significance

Across the Organization for Economic Co-operation and Development countries, instruction time for 10 y olds varies by a factor of two, reflecting, at least in part, differences in beliefs about the value of additional instruction time. Because educational resources are typically not randomly allocated, it has proven to be a major challenge to determine how different educational resources, such as instruction time, affect student learning. We present evidence from a large-scale, randomized trial that increasing instruction time in school increases student learning. Importantly, a regime with no formal requirements on how the extra time is spent is at least as efficient as an increase in instruction time with a detailed teaching program.

Keywords: education, randomized controlled trial, school performance, school resources

Abstract

Increasing instruction time in school is a central element in the attempts of many governments to improve student learning, but prior research, mainly based on observational data, disputes the effect of this approach and points out the potential negative effects on student behavior. Based on a large-scale, cluster-randomized trial, we find that increasing instruction time increases student learning and that a general increase in instruction time is at least as efficient as an expert-developed, detailed teaching program that increases instruction by the same amount of time. These findings support the value of increased instruction time.

All governments responsible for school systems must consider the amount of instruction time that they should provide. The time that students spend in the classroom varies by a factor of two across the OECD (Organization for Economic Co-operation and Development) countries both in total compulsory instruction time and within specific subjects, such as reading, writing, and literature (1). These international differences have generated sustained debates about whether students benefit from having more instruction or, on the contrary, whether governments can cut spending on instruction time without negatively impacting student achievements (2). Increased instruction time has been an element in many educational reforms in the United States, Europe, and Japan (2–4).

However, the existing evidence of the effectiveness of increasing instruction time is deficient. A review of the research before 2009 concludes that there seems to be a neutral to small positive effect of extending school time on achievements; however, most studies are based on weak designs, and the effect remains disputed. Skeptics argue that longer school days generate behavioral problems caused by fatigue and boredom (2). More recently, studies based on observational data found positive effects on student achievements (4–6), and studies of the impact of increased instruction time in combination with other interventions (e.g., more effective teachers, data-driven instruction, ability tracking, or improved pedagogy) also found positive effects (7, 8). A randomized trial conducted in The Netherlands does not find significant effects of increased instruction time (3). Nonetheless, it should be noted that this trial had substantial noncompliance and was based on only seven schools, which seems low-powered (9).

Other than the methodological limitations of the existing evidence, there are two potential explanations for the lack of strong evidence for the effect of increasing instruction time. One explanation relates to the students, and the other relates to the teachers. First, to benefit from more instruction time, students may need to be motivated to sacrifice short-term pleasures to pay attention to the teaching and thereby, achieve long-term gains (10). This exercise, however, requires self-control. Self-control has been shown to be a scarce resource that is exhausted when used. When that happens, it is harder for students to control their thoughts, fix their attention, and manage their emotions, and they may become more aggressive (11). Thus, extending the school day may be ineffective, because students’ self-control is depleted, and they may have more trouble managing their emotions, become more aggressive, become hyperactive, and/or conflict with their classmates. Furthermore, previous research has shown that boys have less self-control capacity than girls (12), and immigrant children and children with low socioeconomic status also tend to have less self-control (13). [This finding does not imply that immigrants have less self-control or lower academic achievement, because they have a different cultural background. Their achievement may be strongly related to the lower socioeconomic status of immigrants on average. Also, there may be significant heterogeneity among immigrants with non-Western backgrounds.] More formally, children can be expected to maximize their learning in school relative to the effort that it requires. Because the cost of effort as well as the relationship between effort and learning may be different for boys and girls as well as immigrants and natives, it is worth examining the effect of increasing instruction time separately for each of these groups, even if the power of the study does not allow strong conclusions based on subgroup analyses.

Second, the effect of increasing school resources is likely to depend on how teachers spend the additional time, which relates to the instructional regime in the school (that is, the set of rules for how to regulate the interplay between assessment and instruction) (14). We compare two opposite instructional regimes. One type has formalized instruction in a teaching program. On the one hand, this format may have several advantages. If teachers do not know how to teach effectively or if they are satisfied with some level of student achievements and therefore, not motivated to use the additional time effectively, providing a detailed teaching program for the additional instruction time may help increase the effectiveness of this time. On the other hand, this instructional regime with a high level of formalized instruction leaves less room for assessment of the individual student’s responsiveness to the intervention. Therefore, it may not tailor the instruction sufficiently to the needs of the different groups of students. We compare this regime with another type that has no formalized prescriptions for how the instruction should take place. This high discretion treatment leaves more room for individual assessments and thereby, more tailored instruction. This tradeoff between high discretion, allowing frontline bureaucrats to use their expertise, and low discretion, ensuring a more specific policy implementation, is a classic but topical dilemma (15–17). However, there is very little evidence on whether high or low discretion affects policy outcomes (18).

Results

To (i) improve the methodological quality of the evidence on increasing instruction time, (ii) compare two different instructional regimes on how to regulate the use of additional time, and (iii) compare how they affect different groups of students in terms of reading skills and behavioral problems that may come with depleted self-control, we use a large-scale, cluster-randomized trial involving 90 schools and 1,931 fourth grade students in Denmark. Instruction time in reading, writing, and literature was increased by 3 h (four lessons) weekly over 16 wk, corresponding to a 15% increase in the weekly instruction time (correspondingly reducing the students’ spare time). The cost was approximately US $182 per student. In the first treatment condition, there were no requirements in terms of how teachers should spend the extra time. This instructional regime with high levels of teacher discretion allows teachers to accommodate their teaching to the specific needs of the students in the classroom across a broad range of outcomes. Conversely, a more detailed, expert-developed teaching program may better ensure high-quality teaching. In the second treatment condition, teachers had the same increase in instruction time but were required to follow a detailed program developed by national experts and aimed at improving general language comprehension. We compare the two treatments with a control group continuing with the same instruction time as usual. SI Methods has more details about the treatments.

To measure student achievement in reading, we use a national standardized, online, self-scoring adaptive reading test used by all of the schools in the country (19). The test is based on three subscales: language comprehension, decoding, and reading comprehension. The study was designed to test the effect on the combined measure, but we also examine the effects on the three subscales. Different versions of the test are developed for second and fourth grades. We use the results of the second grade test as a baseline test (along with a third grade math test) and the fourth grade test as an outcome measure. To measure behavioral problems, we use the student responses to the Strengths and Difficulties Questionnaire (SDQ), which is based on five subscales (emotional symptoms, conduct problems, peer relationship problems, hyperactivity/inattention, and prosocial behavior), of which the first four can be combined for a total difficulty score (20, 21). The SDQ responses are used as a second outcome (SI Methods has details about the assessments). Outcomes were measured at the end of the intervention period (see Fig. S1).
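The construction of the total difficulty score described above can be sketched in a few lines. This is a minimal illustration and not the authors' scoring code: the class name, field names, and example scores are hypothetical, and the only rule taken from the text is that the first four subscales are summed while prosocial behavior is kept separate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SDQSubscales:
    """Hypothetical container for one student's five SDQ subscale scores."""
    emotional_symptoms: int
    conduct_problems: int
    peer_relationship_problems: int
    hyperactivity_inattention: int
    prosocial_behavior: int


def total_difficulties(scores: SDQSubscales) -> int:
    """Sum the four difficulty subscales; prosocial behavior is not included."""
    return (
        scores.emotional_symptoms
        + scores.conduct_problems
        + scores.peer_relationship_problems
        + scores.hyperactivity_inattention
    )


# Made-up scores for a single student, for illustration only.
student = SDQSubscales(3, 2, 1, 4, 8)
print(total_difficulties(student))
```

The prosocial subscale is reported on its own, which matches the separate "Strengths" outcome in Table S4.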

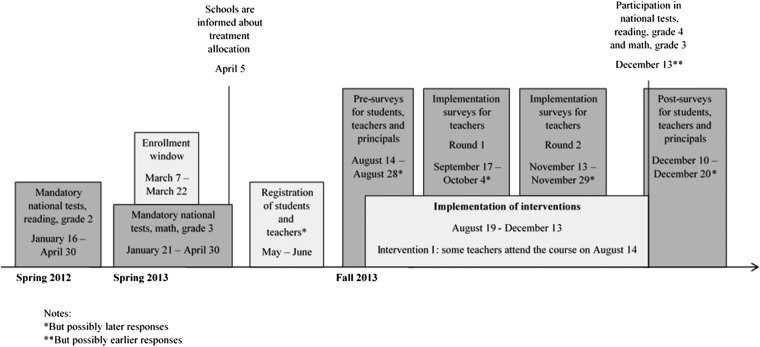

Fig. S1.

Timeline of the intervention with increased instruction time.

We find no significant differences in means of the baseline covariates between the control group and the treatment group without a teaching program. A formal F test of the null hypothesis that all baseline covariates are the same for the no teaching program treatment and the control group is not rejected (P = 0.71). The teaching program treatment group differs significantly from the control group on 2 baseline covariates (of 28), and the F test rejects the null hypothesis that all baseline covariates are the same (P < 0.01). SI Methods and Table S1 have more details about the baseline balance. Table S2 presents a formal attrition analysis.

Table S1.

Balancing of student characteristics

| Variable | 1: NOTP, mean | 1: NOTP, SD | 2: With a TP, mean | 2: With a TP, SD | 3: Control group, mean | 3: Control group, SD | Difference 1 − 3 | Difference 2 − 3 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Students | | | | | | | | |
| Test score, reading grade 2 | −0.18 | 1.07 | −0.14 | 0.94 | −0.23 | | 0.05 | 0.10 |
| Missing test score, reading grade 2 | 0.04 | | 0.02 | | 0.05 | | −0.01 | −0.03† |
| Test score, math grade 3 | −0.18 | 1.08 | −0.07 | 0.98 | −0.19 | | 0.00 | 0.11 |
| Missing test score, math grade 3 | 0.06 | | 0.04 | | 0.08 | | −0.02 | −0.04 |
| Non-Western background | 0.29 | | 0.22 | | 0.30 | | −0.01 | −0.08 |
| Girl | 0.50 | | 0.49 | | 0.51 | | −0.01 | −0.02 |
| Single-mother family | 0.27 | | 0.24 | | 0.23 | | 0.04 | 0.02 |
| No. of siblings | 1.46 | | 1.32 | | 1.48 | | −0.02 | −0.16 |
| First born | 0.40 | | 0.40 | | 0.40 | | 0.00 | −0.00 |
| Age 9 y or younger | 0.29 | | 0.26 | | 0.28 | | 0.01 | −0.02 |
| Age 10 y | 0.64 | | 0.68 | | 0.65 | | −0.01 | 0.02 |
| Age 11 y | 0.07 | | 0.07 | | 0.07 | | 0.01 | −0.00 |
| Born in the first quarter | 0.24 | | 0.25 | | 0.22 | | 0.02 | 0.03 |
| Born in the second quarter | 0.22 | | 0.23 | | 0.26 | | −0.03 | −0.03 |
| Born in the third quarter | 0.29 | | 0.30 | | 0.27 | | 0.02 | 0.03 |
| Born in the fourth quarter | 0.24 | | 0.22 | | 0.25 | | −0.01 | −0.03 |
| Mothers | | | | | | | | |
| Log earnings | 8.82 | 5.40 | 9.46 | 5.04 | 8.72 | 5.43 | 0.10 | 0.74 |
| Age, y | 36.00 | 5.28 | 35.60 | 5.12 | 35.62 | 5.38 | 0.38 | −0.02 |
| None or missing educational information | 0.10 | | 0.06 | | 0.09 | | 0.01 | −0.03 |
| High school | 0.30 | | 0.34 | | 0.34 | | −0.05 | −0.01 |
| Vocational education | 0.31 | | 0.31 | | 0.28 | | 0.03 | 0.03 |
| Higher education | 0.29 | | 0.29 | | 0.28 | | 0.01 | 0.01 |
| Fathers | | | | | | | | |
| Log earnings | 9.84 | 5.03 | 10.32 | 4.71 | 9.80 | 5.06 | 0.04 | 0.52 |
| Age, y | 39.11 | 6.14 | 38.36 | 5.67 | 39.07 | 6.38 | 0.05 | −0.71* |
| None or missing educational information | 0.12 | | 0.09 | | 0.10 | | 0.01 | −0.01 |
| High school | 0.30 | | 0.33 | | 0.34 | | −0.04 | −0.01 |
| Vocational education | 0.32 | | 0.31 | | 0.32 | | −0.01 | −0.01 |
| Higher education | 0.27 | | 0.27 | | 0.24 | | 0.03 | 0.04 |
| N schools (total = 90) | 31 | | 28 | | 31 | | 62 | 59 |
| N students (total = 1,931) | 634 | | 641 | | 656 | | 1,290 | 1,297 |

Observations with missing information are excluded from the table unless otherwise indicated. When covariates are included in regressions, relevant indicators for missing covariates are always included. All information related to parents and family is registered when students are 6 y old; t tests are corrected for clustering at the school level.

P < 0.1.

P < 0.01.

Table S2.

Attrition analysis

| Variable | 1: No test, coefficient | 1: No test, SE | 2: No test, coefficient | 2: No test, SE | 3: No SDQ, coefficient | 3: No SDQ, SE | 4: No SDQ, coefficient | 4: No SDQ, SE |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| NOTP | −0.001 | 0.063 | −0.002 | 0.061 | −0.098† | 0.049 | −0.096† | 0.047 |
| With a TP | −0.006 | 0.062 | 0.000 | 0.059 | −0.120† | 0.052 | −0.114† | 0.050 |
| Students | | | | | | | | |
| Test score, reading grade 2 | | | −0.039‡ | 0.015 | | | −0.028† | 0.012 |
| Missing test score, reading grade 2 | | | 0.174‡ | 0.059 | | | 0.058 | 0.048 |
| Test score, math grade 3 | | | 0.028* | 0.014 | | | −0.006 | 0.012 |
| Missing test score, math grade 3 | | | 0.153† | 0.063 | | | 0.123† | 0.050 |
| Non-Western background | | | −0.032 | 0.022 | | | −0.019 | 0.022 |
| Missing immigrant information | | | −0.000 | 0.002 | | | −0.003 | 0.002 |
| Missing immigrant information | | | −0.021 | 0.131 | | | −0.165 | 0.136 |
| Age 10 y | | | 0.008 | 0.019 | | | −0.011 | 0.021 |
| Age 11 y | | | 0.053 | 0.048 | | | 0.019 | 0.040 |
| Girl | | | 0.015 | 0.018 | | | 0.009 | 0.017 |
| Single-mother family | | | 0.046† | 0.021 | | | 0.051† | 0.022 |
| No. of siblings | | | −0.002 | 0.009 | | | −0.005 | 0.009 |
| First born | | | 0.007 | 0.017 | | | 0.027 | 0.019 |
| Born in the second quarter | | | −0.024 | 0.025 | | | −0.008 | 0.024 |
| Born in the third quarter | | | −0.027 | 0.028 | | | −0.032 | 0.023 |
| Born in the fourth quarter | | | −0.005 | 0.032 | | | −0.027 | 0.026 |
| Missing family information | | | 0.028* | 0.014 | | | −0.006 | 0.012 |
| Mothers | | | | | | | | |
| Log earnings | | | −0.000 | 0.002 | | | −0.003 | 0.002 |
| Age, y | | | 0.003 | 0.002 | | | −0.004 | 0.002 |
| High school | | | 0.009 | 0.040 | | | 0.002 | 0.044 |
| Vocational education | | | 0.015 | 0.039 | | | −0.018 | 0.046 |
| Higher education | | | −0.005 | 0.045 | | | −0.008 | 0.048 |
| Fathers | | | | | | | | |
| Log earnings | | | −0.001 | 0.002 | | | −0.002 | 0.002 |
| Age, y | | | −0.001 | 0.001 | | | 0.003 | 0.002 |
| High school | | | −0.001 | 0.044 | | | −0.016 | 0.048 |
| Vocational education | | | 0.004 | 0.045 | | | −0.036 | 0.047 |
| Higher education | | | 0.040 | 0.044 | | | −0.004 | 0.047 |
| Constant | 0.102† | 0.042 | 0.054 | 0.103 | 0.225‡ | 0.073 | 0.253† | 0.125 |
| Observations | 1,931 | | 1,931 | | 1,931 | | 1,931 | |
| Adjusted R2 | 0.155 | | 0.178 | | 0.108 | | 0.126 | |
| Stratum indicators | Yes | | Yes | | Yes | | Yes | |
| Covariates | No | | Yes | | No | | Yes | |

The propensity of not attending the test/answering the survey with SDQ is modeled by a linear probability model. Coefficients for selected covariates are displayed. Indicators for missing information and stratum fixed effects are also included. Cluster-robust SEs are shown.

*P < 0.1.

†P < 0.05.

‡P < 0.01.

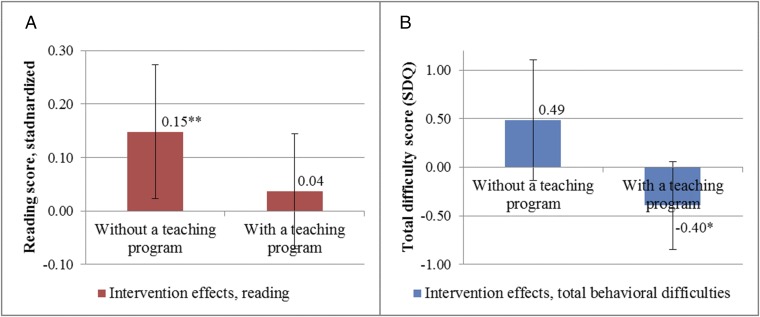

The estimated treatment effects on reading are presented in Fig. 1A. Increasing instruction time without a teaching program increases student achievement in reading by 0.15 SD (P = 0.02) compared with the control group. The effect of increasing instruction time with a teaching program is small and insignificant. However, the estimated effects on the reading subscales suggest that the teaching program (aimed at improving general language comprehension) has a statistically significant effect on the subscale language comprehension of 0.14 SD (P = 0.03), which would be expected, but not on the other two subscales (Table S3). The general increase in instruction time with no teaching program has significantly positive effects on both language comprehension and decoding. The differences between the two treatment groups are not statistically significant. It should be noted, however, that the statistical power of the trial does not allow us to detect minor differences between the treatment groups.
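The reported P values can be roughly cross-checked against the coefficients and cluster-robust SEs in Table S3. The sketch below uses a two-sided normal approximation, which is an assumption made here for illustration; the source does not state which reference distribution was used, and a t distribution with few clusters would give somewhat larger P values. The function name is hypothetical.

```python
import math


def two_sided_p_normal(coefficient: float, standard_error: float) -> float:
    """Two-sided P value for coefficient / SE under a standard normal reference."""
    z = abs(coefficient / standard_error)
    # erfc(z / sqrt(2)) equals 2 * (1 - Phi(z)) for the standard normal CDF Phi.
    return math.erfc(z / math.sqrt(2.0))


# Estimates taken from Table S3: NOTP on the combined reading score (panel A),
# and the teaching program on language comprehension (panel C).
p_notp_reading = two_sided_p_normal(0.148, 0.064)
p_tp_language = two_sided_p_normal(0.139, 0.062)

print(f"NOTP, reading: P = {p_notp_reading:.3f}")
print(f"TP, language comprehension: P = {p_tp_language:.3f}")
```

Both approximations land close to the values quoted in the text (P = 0.02 and P = 0.03), with the second slightly below the reported figure, as would be expected if the reported inference used a more conservative reference distribution.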

Fig. 1.

Effects of increasing instruction time on (A) student achievement in reading and (B) behavioral difficulties. Stratum indicators and baseline achievement in reading and math are included as controls. Error bars reflect 95% confidence intervals. SEs are corrected for clustering at the classroom level. *P < 0.1; **P < 0.05.

Table S3.

Intervention effects on student achievement

| Description | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Analysis | Raw difference in means | Raw difference in means | Primary analyses | Primary analyses | Primary analyses | Primary analyses | Primary analyses | Primary analyses |
| Panel A, main results. Outcome: | Reading, grade 4 | Reading, grade 4 | Reading, grade 4 | Reading, grade 4 | Reading, grade 4 | Reading, grade 4 | Reading, grade 4 | Reading, grade 4 |
| NOTP | 0.247† (0.106) | | 0.146* (0.075) | 0.148† (0.064) | 0.149† (0.061) | | | |
| With a TP | | 0.206* (0.109) | | | | 0.157† (0.060) | 0.037 (0.055) | 0.036 (0.052) |
| Constant | −0.563‡ (0.078) | −0.563‡ (0.078) | −1.192‡ (0.301) | −0.331* (0.166) | −0.092 (0.295) | −1.509‡ (0.038) | −0.587‡ (0.116) | −0.841‡ (0.291) |
| Observations | 1,023 | 1,049 | 1,023 | 1,023 | 1,023 | 1,049 | 1,049 | 1,049 |
| Adjusted R2 | 0.015 | 0.010 | 0.086 | 0.573 | 0.581 | 0.107 | 0.584 | 0.589 |
| Stratum indicators | No | No | Yes | Yes | Yes | Yes | Yes | Yes |
| Baseline achievement | No | No | No | Yes | Yes | No | Yes | Yes |
| Covariates | No | No | No | No | Yes | No | No | Yes |

| Panel B, robustness check. Outcome: | Reading, grade 4 | Reading, grade 4 | Reading, grade 4 | Reading, grade 4 | Reading, grade 4 | Reading, grade 4 |
| --- | --- | --- | --- | --- | --- | --- |
| NOTP | 0.181‡ (0.064) | 0.208‡ (0.051) | 0.219‡ (0.046) | | | |
| With a TP | | | | 0.143* (0.077) | 0.090 (0.057) | 0.096* (0.055) |
| Constant | −0.995‡ (0.191) | −0.301* (0.156) | −0.652† (0.300) | −1.335‡ (0.082) | −0.618‡ (0.101) | −1.241‡ (0.292) |
| Observations | 1,072 | 1,072 | 1,072 | 1,098 | 1,098 | 1,098 |
| Adjusted R2 | 0.084 | 0.608 | 0.623 | 0.078 | 0.611 | 0.625 |
| Stratum indicators | Yes | Yes | Yes | Yes | Yes | Yes |
| Baseline achievement | No | Yes | Yes | No | Yes | Yes |
| Covariates | No | No | Yes | No | No | Yes |

| Panel C. Learning domains of the reading score: | Language comprehension | Decoding | Reading comprehension | Language comprehension | Decoding | Reading comprehension |
| --- | --- | --- | --- | --- | --- | --- |
| NOTP | 0.152† (0.059) | 0.167‡ (0.055) | 0.072 (0.062) | | | |
| With a TP | | | | 0.139† (0.062) | 0.006 (0.049) | −0.048 (0.052) |
| Constant | −0.624‡ (0.192) | −0.114 (0.183) | −0.136* (0.074) | −0.838‡ (0.056) | −0.365‡ (0.076) | −0.348* (0.201) |
| Observations | 1,023 | 1,023 | 1,023 | 1,049 | 1,049 | 1,049 |
| Adjusted R2 | 0.444 | 0.459 | 0.464 | 0.452 | 0.462 | 0.482 |
| Stratum indicators | Yes | Yes | Yes | Yes | Yes | Yes |
| Baseline achievement | Yes | Yes | Yes | Yes | Yes | Yes |
| Covariates | No | No | No | No | No | No |

Panel A presents the results of our main analyses. Panel B presents the results when comparing the increasing instruction time interventions with the third (math) treatment arm. Panel C presents the results from our primary specification on the three domains of student reading achievement. Included covariates are in the table. Cluster-robust SEs are in parentheses.

*P < 0.1.

†P < 0.05.

‡P < 0.01.
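For orientation, the specification with stratum indicators and baseline achievement (columns 4 and 7 of panel A in Table S3) can be written as a single estimating equation. The notation below is illustrative and does not appear in the source; it assumes a linear model estimated separately for each treatment against the control group, as the table notes describe.

```latex
y_{is} = \alpha + \beta\, T_{s} + \mathbf{a}_{is}^{\top}\boldsymbol{\gamma} + \delta_{b(s)} + \varepsilon_{is}
```

Here $y_{is}$ is the grade 4 reading score of student $i$ in school $s$, $T_{s}$ indicates that the school was assigned to the treatment being compared with the control group (NOTP or TP), $\mathbf{a}_{is}$ collects baseline achievement in reading and math together with indicators for missing scores, $\delta_{b(s)}$ is an indicator for the stratum $b(s)$ of school $s$, and $\varepsilon_{is}$ is an error term with SEs clustered by school. The coefficient $\beta$ corresponds to the NOTP and With a TP rows of the table.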

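Fig. 1B suggests that the teaching program reduces student behavioral problems, although the estimate in the specification that controls for baseline achievement is significant only at the 10% level. Instruction time without a teaching program may increase behavioral problems, but that estimate is not statistically significant in the same specification (see Table S4 for details).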

Table S4.

Intervention effects on total behavioral difficulties and strengths

| Description | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Analysis | Raw difference in means | Raw difference in means | Primary analyses | Primary analyses | Primary analyses | Primary analyses | Primary analyses | Primary analyses |
| Panel A. Outcome: | Difficulties | Difficulties | Difficulties | Difficulties | Difficulties | Difficulties | Difficulties | Difficulties |
| NOTP | 0.081 (0.461) | | 0.563* (0.333) | 0.487 (0.317) | 0.500 (0.320) | | | |
| With a TP | | −0.286 (0.441) | | | | −0.562† (0.231) | −0.395* (0.232) | −0.545† (0.220) |
| Constant | 9.625‡ (0.325) | 9.625‡ (0.325) | 7.718‡ (1.994) | 5.819‡ (1.564) | 6.476† (2.604) | 10.623‡ (0.576) | 8.363‡ (0.967) | 10.352‡ (2.609) |
| Adjusted R2 | −0.001 | −0.000 | 0.026 | 0.101 | 0.105 | 0.037 | 0.113 | 0.129 |
| Panel B. Outcome: | Strengths | Strengths | Strengths | Strengths | Strengths | Strengths | Strengths | Strengths |
| NOTP | −0.102 (0.160) | | −0.050 (0.129) | −0.043 (0.129) | −0.004 (0.129) | | | |
| With a TP | | 0.044 (0.154) | | | | 0.048 (0.093) | 0.057 (0.093) | 0.051 (0.091) |
| Constant | 7.725‡ (0.119) | 7.725‡ (0.119) | 8.846‡ (0.121) | 8.923‡ (0.125) | 8.913‡ (0.531) | 8.464‡ (0.220) | 8.553‡ (0.246) | 8.713‡ (0.550) |
| Adjusted R2 | 0.000 | −0.001 | 0.041 | 0.042 | 0.059 | 0.060 | 0.064 | 0.070 |
| Observations | 1,062 | 1,075 | 1,062 | 1,062 | 1,062 | 1,075 | 1,075 | 1,075 |
| Stratum indicators | No | No | Yes | Yes | Yes | Yes | Yes | Yes |
| Baseline achievement | No | No | No | Yes | Yes | No | Yes | Yes |
| Covariates | No | No | No | No | Yes | No | No | Yes |

Panel A shows the results on the total difficulty score, whereas panel B shows the results on the prosocial score. The table shows included covariates. Cluster-robust SEs are in parentheses.

*P < 0.1.

†P < 0.05.

‡P < 0.01.

The study is not powered for multiple tests of effects on groups, but because of the concerns that increasing instruction time will be less beneficial for students with less capacity for self-control, it is worth examining results for subgroups, although it should be emphasized that these results are exploratory. Subgroup analyses presented in Table S5 suggest that students of non-Western origin do not seem to benefit from either of the two interventions. Increased instruction time without a teaching program may cause increased behavioral difficulties for boys, whereas it has no effect on girls. Conversely, increased instruction time with a teaching program seems to reduce behavioral difficulties for girls.

Table S5.

Heterogeneous intervention effects on student reading achievement (panel A) and total behavioral difficulties (panel B) by gender and country of origin

| Description | Sample 1 | Sample 2 | Sample 3 | Sample 4 |
| --- | --- | --- | --- | --- |
| Panel A. Outcome: Reading, grade 4 | Boy | Girl | Non-Western | Western |
| NOTP | 0.125 (0.076) | 0.143 | 0.013 (0.094) | 0.186 (0.065) |
| P value | 0.107 | 0.103 | 0.886 | 0.006‡ |
| Adjusted P value | >0.50 | >0.50 | >0.50 | 0.047† |
| Observations | 505 | 507 | 310 | 710 |
| With a TP | −0.095 (0.071) | 0.163 (0.060) | −0.097 (0.090) | 0.074 (0.063) |
| P value | 0.190 | 0.009‡ | 0.286 | 0.240 |
| Adjusted P value | >0.50 | 0.062* | >0.50 | >0.50 |
| Observations | 523 | 515 | 290 | 757 |
| Panel B. Outcome: Total difficulties | Boy | Girl | Non-Western | Western |
| NOTP | 0.954 (0.435) | 0.019 (0.529) | 0.363 (0.528) | 0.543 (0.386) |
| P value | 0.033† | 0.971 | 0.495 | 0.165 |
| Adjusted P value | 0.196 | >0.50 | >0.50 | >0.50 |
| Observations | 519 | 526 | 308 | 745 |
| With a TP | 0.477 (0.424) | −1.322 (0.468) | 0.147 (0.535) | −0.593 (0.269) |
| P value | 0.265 | 0.007‡ | 0.785 | 0.032† |
| Adjusted P value | >0.50 | 0.054* | >0.50 | 0.224 |
| Observations | 531 | 532 | 275 | 797 |
| Stratum indicators | Yes | Yes | Yes | Yes |
| Baseline achievement | Yes | Yes | Yes | Yes |
| Covariates | No | No | No | No |

The intervention effects of the two treatments are estimated in separate regressions. All specifications include stratum indicators and baseline achievement in reading and math. Observations with missing information on the relevant characteristic are dropped. Cluster-robust SEs are in parentheses.

*P < 0.1.

†P < 0.05.

‡P < 0.01.

SI Methods

A. Procedure.

In the autumn of 2013, a large-scale randomized, controlled trial in primary schools in Denmark was implemented. The Danish Ministry of Education funded the trial. A primary concern was to evaluate the interventions that could improve the outcomes—both academic and behavioral—of bilingual students with Danish as a second language. Therefore, only schools with a relatively high concentration of bilingual students were eligible.

Fig. S1 shows a timeline for the implementation of the trial and the relevant measurements. In March of 2013, all of the municipalities in Denmark received an email from the Minister of Education with information about the randomized trial. The email contained a brief description of the interventions and study design. The municipalities were given a little more than 2 wk to sign up and informed that all of the schools that expected to have at least 10% bilingual students in grade 4 in the following school year (August to June) were eligible to participate. The municipalities were encouraged to sign up all of their eligible schools, meaning that only public schools were eligible for participation. In 2013, about 81% of all Danish primary and lower secondary school students attended public schools (23).

Based on administrative records for grade 3 students in the 2012/2013 school year, ∼332 of 1,209 schools were eligible to participate, 126 of which enrolled in the trial. That is, an estimated 38% of the eligible schools chose to enroll in the trial.

After the enrollment deadline, the randomization was performed. The trial was designed as a two-stage, cluster-randomized trial with three treatment arms: two instruction time treatments, one with a teaching program (TP) and one without a teaching program (NOTP), and a third treatment not related to instruction time and not analyzed here. There were two levels of randomization: school and classroom. It was a block-randomized trial. The enrolled schools were divided into blocks (which we denote “strata”) based on administrative records on the share of students of non-Western origin in grade 2 in the school year 2011/2012 and the average performance of their prospective grade 4 students in the national grade 2 reading test. Specifically, schools were first placed in three groups based on their share of non-Western students: 8 schools with a share between 0.55 and 1, 56 schools with a share between 0.19 and 0.55, and, finally, 60 schools with a share between 0 and 0.19. Two schools with less than 10% students of non-Western origin were randomly excluded from the analysis. Within each of the three groups, the schools were ranked according to their average performance on the reading test and divided into strata accordingly. Each stratum consisted of four schools, and by random assignment, each of these schools was placed in one of the three treatment arms or the control group. In each school, one grade 4 classroom was randomly selected for participation in the trial by simple randomization. However, only classrooms that were expected to have at least 10% bilingual students were included in the randomization.
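The school-level stage of this procedure can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' randomization code: the function names, the group labels, the seeds, and the synthetic reading scores are all hypothetical, and only the group sizes (8, 56, and 60 schools), the stratum size of four, and the four experimental conditions come from the text. The classroom-level stage is not covered.

```python
import random
from collections import Counter

ARMS = ("NOTP", "TP", "third treatment", "control")
STRATUM_SIZE = 4


def block_randomize(schools, seed=0):
    """Assign schools to arms within strata of four.

    `schools` is a list of (school_id, share_group, mean_reading) tuples.
    Within each share group, schools are ranked by mean reading score, cut
    into consecutive blocks of four, and each block receives the four
    conditions in random order.
    """
    rng = random.Random(seed)
    assignment = {}
    stratum = 0
    for group in sorted({g for _, g, _ in schools}):
        ranked = sorted((s for s in schools if s[1] == group), key=lambda s: s[2])
        for start in range(0, len(ranked), STRATUM_SIZE):
            block = ranked[start:start + STRATUM_SIZE]
            arms = list(ARMS)
            rng.shuffle(arms)
            for (school_id, _, _), arm in zip(block, arms):
                assignment[school_id] = (stratum, arm)
            stratum += 1
    return assignment


def synthetic_schools(seed=1):
    """Made-up schools with the group sizes reported in the text."""
    rng = random.Random(seed)
    group_sizes = {"share 0.55-1": 8, "share 0.19-0.55": 56, "share 0-0.19": 60}
    return [
        (f"{group}/{k}", group, rng.gauss(0.0, 1.0))
        for group, n in group_sizes.items()
        for k in range(n)
    ]


assignment = block_randomize(synthetic_schools())
arm_counts = Counter(arm for _, arm in assignment.values())
n_strata = len({stratum for stratum, _ in assignment.values()})
print(arm_counts, n_strata)
```

Because every group size is a multiple of four, the 124 schools split into 31 complete strata, and each condition receives exactly one school per stratum, which is consistent with the 31 schools per condition reported in the text.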

The number of schools participating in the trial ended up being determined by the number of schools that could be recruited: 31 schools were eventually allocated to each of the experimental conditions. The number of participating schools limits the statistical power of the study. Notably, although we are able to detect a significant effect of the treatment with NOTP compared with the control group, we are not able to detect a significant difference between the two treatment groups (because the mean outcome in the other treatment group is higher than in the control group). Because of the limited power of the study, we are not able to conclude whether this lack of difference between the two treatments is caused by the fact that there is no substantial difference or the fact that we cannot estimate the difference precisely enough.

Although the trial consisted of three different interventions, we focus on the two that increased instruction time. We exclude schools that received the third treatment from our main analyses; they are only included as a robustness check in Table S3.

The Danish Ministry of Education reimbursed the participating schools for all of the costs associated with participation in the trial given that they implemented the intervention that they were allocated and participated in the data collection. All schools (in both the treatment and control conditions) were paid US $352 to have one classroom participate in the data collection. Schools in the treatment conditions were additionally paid US $3,924 to implement the treatment in one classroom.
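The source does not state how the per-student cost of roughly US $182 quoted in Results was derived. One reading that reproduces it, offered here only as an assumption, divides the implementation payment per treated classroom by the average number of students per treated classroom, using the student and school counts for the two instruction time arms in Table S1. The variable names are hypothetical.

```python
# Payment per treated classroom, as stated in the text (USD).
IMPLEMENTATION_PAYMENT_USD = 3924

# Counts from Table S1 for the NOTP and TP arms (assumed to be the relevant base).
students_treated = 634 + 641
schools_treated = 31 + 28  # one participating classroom per school

students_per_classroom = students_treated / schools_treated
cost_per_student = IMPLEMENTATION_PAYMENT_USD / students_per_classroom

print(f"students per classroom: {students_per_classroom:.1f}")
print(f"cost per student: US ${cost_per_student:.0f}")
```

The data collection payment of US $352 is left out because it was paid to control schools as well and is therefore not a cost of the additional instruction time itself.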

A smaller number of schools in the three treatment arms participated in case studies during the implementation of the trial. In June of 2013, information meetings were held that provided some practical information for the schools allocated to one of the three treatment arms. School representatives were later divided according to their treatment arm and provided with treatment-specific information. On these occasions, school representatives had the opportunity to pose questions related to the implementation of the interventions.

The first intervention was simply extra instruction time in Danish with high teacher discretion (i.e., NOTP). The classrooms in this treatment arm were given four extra (45-min) lessons per week for 16 wk during the autumn of 2013. If possible, the regular Danish teacher taught the lessons.* The teachers did not receive any explicit teaching material, only a 27-page report entitled “Evidence-based teaching strategies—an idea catalogue with evidence-based strategies for the planning of reading instruction of bilingual children.” The report was prepared by researchers and national experts in the instruction of bilingual children and based on an extensive, systematic literature review of research on effective methods and teaching strategies for teaching bilingual children with Danish as a second language. The teachers received the material for inspiration but were not required to use it. Data from implementation surveys show that the extent to which the teachers used the report was limited, and only 17% found it useful (SI Results, section D).

The second intervention was an expert-developed TP coupled with extra instruction time with lower teacher discretion. The classrooms in this treatment group were also given four extra (45-min) lessons per week for 16 wk during the autumn of 2013. If possible, the regular Danish teacher taught the lessons. The teachers were provided with very detailed teaching material containing different texts and classroom exercises for each week of the intervention. The material was developed and reviewed by national experts in language instruction with the aim of improving student performance, that of bilingual students with Danish as a second language in particular. The schools received four copies of the teaching material and were told not to distribute it during the trial. Teachers were also asked not to use the material in classrooms other than the one selected for participation in the trial. The teachers largely complied with the program. All but one teacher reported that they had used the material to some or great extent (SI Results, section D).

The control group schools were required to participate in the data collection to the same extent as those in the three treatment arms. The only difference was that the treatment arm schools were also subject to two implementation surveys during the autumn that were aimed at investigating whether schools implemented the interventions as intended. All of the schools were reimbursed for the costs of participating in the data collection.

When the schools enrolled in the trial, they were informed that it would run for two rounds. The first is described here and took place in the fall of 2013. The schools that were allocated to the control group in the first round would be allocated to a treatment arm in the second round. This feature was meant to encourage the control group schools to remain in the trial and may also have reduced any observer effects specific to being allocated to a treatment or control group. Furthermore, differences between the two treatment groups should not be the result of such observer effects.

In the 2012/2013 school year (the year before implementing the trial), the average number of hours of instruction at the grade 4 level was 818 h, corresponding to 20 h/wk or about 27 lessons. The average number of hours of Danish instruction was 191 h, corresponding to about 5 h/wk or about six lessons. The average number of hours of math instruction was 124 h, corresponding to about 3 h/wk or about four lessons. The remaining lessons at the grade 4 level would mainly be made up of lessons in English, history, religious studies, science, physical education, and creative subjects. The exact number of lessons can vary across schools and classrooms, but the Danish Ministry of Education sets a minimum for the number of instruction hours (24). On average, the intervention increased the yearly number of Danish lessons by 25%, whereas the total weekly instruction time was increased by 15% during the intervention. Information from implementation surveys confirms the intervention details.
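The percentages above follow from simple arithmetic on the figures quoted in this paragraph. The following is a minimal sketch in Python; the variable names are illustrative, and the weekly comparison assumes the approximate figure of 27 lessons per week.

```python
# Intervention dose, using only figures quoted in the text.
LESSON_MINUTES = 45
extra_lessons_per_week = 4
intervention_weeks = 16
yearly_danish_hours = 191      # grade 4 average in 2012/2013
weekly_lessons_total = 27      # approximate total lessons per week

# Total extra lessons and the corresponding clock hours.
extra_lessons = extra_lessons_per_week * intervention_weeks
extra_hours = extra_lessons * LESSON_MINUTES / 60

# Relative increases: yearly Danish instruction and weekly total instruction.
yearly_danish_increase = extra_hours / yearly_danish_hours
weekly_total_increase = extra_lessons_per_week / weekly_lessons_total

print(extra_lessons, extra_hours)
print(f"{yearly_danish_increase:.0%} {weekly_total_increase:.0%}")
```

The 64 extra lessons amount to 48 h, which is about one-quarter of the yearly Danish instruction, whereas four lessons on top of roughly 27 is about 15% more weekly instruction.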

Increasing the instruction time necessarily occurs at the expense of other spare time activities. Of the participating students, 57% were enrolled in after-school care in the autumn of 2013. The primary function of these care facilities is pedagogical rather than academic. The facilities are heavily subsidized, but the parents cover part of the costs.

Fig. S2 presents the average yearly instruction time in reading, writing, and literature for 10 y olds in OECD countries. Denmark is in the upper end of the distribution, markedly above the OECD average. Thus, the intervention increased the instruction time from an already relatively high level of lessons within the subject. Comparing the total instruction time for 10 y olds in compulsory education, Denmark is slightly below the OECD average, with around 800 h/y (1).

Fig. S2.

Yearly instruction time in reading, writing, and literature at age 10 y old across OECD countries.

B. Measures.

The primary outcomes of interest are student achievement in reading and measures of behavioral responses. Academic achievement was assessed using the Danish National Tests, an official, standardized testing system introduced in 2010. Public school students are subject to mandatory reading tests every second year from grade 2 onward. The mandatory testing period is carried out in each spring term (January to April) but with the extra feature that teachers can sign up their classes for up to two voluntary tests at the same level in the fall term before and/or after the mandatory tests. This feature is exploited to evaluate the reading achievements of participating students after the intervention. In 2013, the voluntary test period ran from October 21 to December 13. To ensure that students were only evaluated after the intervention period, participating schools were asked to book the voluntary test slots on the last day of testing, December 13, before other schools were allowed to sign up. We use the results from the corresponding mandatory second grade reading and mandatory third grade math tests as baseline achievement measures.

The National Tests are adaptive, meaning that the difficulty level of the questions is continuously updated to match the estimated ability of the student throughout the test (14). Both student ability and difficulty level are measured on a Rasch-calibrated logit scale. The test questions are randomly drawn from a large item bank. Although continuously updating the item bank is an important part of adaptive testing (25), all of the included test items are subject to pilot testing and strict analysis before inclusion in the item bank (19, 25).

During the reading test session, the student’s reading skills are evaluated within three separate cognitive domains simultaneously: language comprehension, decoding, and reading comprehension (19). The main outcome analyzed is an average standardized measure of the three achievement scores for each student. First, achievement scores are standardized to zero mean and unit variance within each cognitive domain. Second, a simple average is constructed across domains, and third, this measure is standardized once more. Because the analyzed test results are from the voluntary tests, the population of test takers in the fall of 2013 is likely to be a selected sample of students. Therefore, we use the distribution of the 2013 mandatory reading test for fourth graders in the standardizations (i.e., the cohort 1 y ahead of our intervention sample).† Generally, the means of test scores within a specific domain increase slightly over time, whereas the SDs remain more or less constant. We also include analyses based on the standardized achievement measures for the separate domains as outcomes.
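The three-step construction of the main outcome can be sketched as follows. This is an illustration rather than the code used in the study: the function names are hypothetical, and it assumes that the reference cohort (the 2013 mandatory test takers) supplies the mean and SD at both standardization steps.

```python
from statistics import mean, pstdev

def standardize(values, ref_mean, ref_sd):
    """Express values in SD units of a reference distribution."""
    return [(v - ref_mean) / ref_sd for v in values]

def composite_reading_score(sample, reference):
    """sample and reference map each domain name to a list of scores,
    with students in the same order across domains."""
    z_sample, z_reference = {}, {}
    # Step 1: standardize each domain against the reference cohort.
    for domain, ref_scores in reference.items():
        m, s = mean(ref_scores), pstdev(ref_scores)
        z_sample[domain] = standardize(sample[domain], m, s)
        z_reference[domain] = standardize(ref_scores, m, s)
    # Step 2: simple average across domains for each student.
    avg_sample = [mean(t) for t in zip(*z_sample.values())]
    avg_reference = [mean(t) for t in zip(*z_reference.values())]
    # Step 3: standardize the average once more (assumed: same reference cohort).
    return standardize(avg_sample, mean(avg_reference), pstdev(avg_reference))

# Toy reference data; applying the procedure to the reference cohort itself
# yields a composite with zero mean and unit variance.
reference = {
    "language comprehension": [1.0, 2.0, 3.0, 4.0],
    "decoding": [2.0, 4.0, 6.0, 8.0],
    "reading comprehension": [0.0, 1.0, 0.0, 1.0],
}
composite = composite_reading_score(reference, reference)
```

The second standardization is needed because an average of three correlated unit-variance scores generally has a variance below one.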

To measure student behavior and determine the possible behavioral effects of the intervention, the SDQ was administered to students after the intervention.‡ The SDQ is a validated survey instrument (20, 21). We administered the one-sided, self-rated SDQ for 11–17 y olds (26) in an electronic version approved by Youthinmind. Most of the students participating in the trial were actually only 10 y old at the time of the survey, but we faced a variety of tradeoffs in the choice of survey instrument and found this to be the preferred feasible option. The SDQ is a relatively brief, 25-item questionnaire. The questionnaire contains items on both problematic and positive behavior. All items are answered by marking one of three boxes, indicating whether the respondent finds the relevant statement “not true,” “somewhat true,” or “certainly true.” The respondent is asked to consider their situation for the past 6 mo when answering the questionnaire. The items are divided into five different subscales: emotional symptoms, conduct problems, hyperactivity/inattention, peer relationship problems, and prosocial behavior. The first four can be combined to a total difficulties score ranging from 0 to 40; higher scores indicate higher levels of difficulty. Prosocial behavior is evaluated separately on a 0–10 scale, with higher scores indicating better social behavior.
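The way the subscales combine into the two reported scores can be sketched as below. The dictionary keys and function name are hypothetical, and the sketch assumes that each subscale score lies between 0 and 10, which is what the stated 0 to 40 range for four subscales implies.

```python
# Subscales that enter the total difficulties score (prosocial is kept separate).
DIFFICULTY_SUBSCALES = ("emotional", "conduct", "hyperactivity", "peer")

def sdq_scores(subscales):
    """Return (total difficulties, prosocial) from five subscale scores."""
    for name in DIFFICULTY_SUBSCALES + ("prosocial",):
        if not 0 <= subscales[name] <= 10:
            raise ValueError(f"{name} score outside the assumed 0-10 range")
    total = sum(subscales[name] for name in DIFFICULTY_SUBSCALES)
    return total, subscales["prosocial"]

# Hypothetical respondent.
example = {"emotional": 3, "conduct": 2, "hyperactivity": 5, "peer": 1, "prosocial": 8}
total_difficulties, prosocial = sdq_scores(example)
```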

The postsurveys were administered electronically, and the teachers were responsible for ensuring that all students answered the survey. The teachers were encouraged to use a lesson and provide all of the students with access to a computer. They were allowed to help students understand the questionnaire items if they were unsure about wordings. The postintervention surveys were distributed at the end of the intervention period. Participants were asked to respond before December 20.

We conduct analyses both with and without student-specific covariates. The students in the participating classrooms were linked to administrative registers maintained by Statistics Denmark using unique personal identifiers. The data on individuals include information about their birth, country of origin, previous test results, and parental information (Table S1 shows a full list of covariates).

To avoid confounding factors caused by parental responses to treatment allocation, all of the information related to parents and family structure is measured in the year of the student’s sixth birthday, well before the trial. We were unable to match ∼1% of the participating students’ identifiers to register data. Where information on student characteristics and baseline achievement is missing, a dummy variable adjustment approach is used as recommended for group-randomized, controlled trials in the work in ref. 27.
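A dummy variable adjustment replaces each missing covariate value with a constant and adds an indicator that flags the replacement, so that the student stays in the estimation sample. The sketch below is illustrative only; the function name and the fill value of zero are assumptions, not details taken from the study.

```python
def dummy_variable_adjust(values, fill_value=0.0):
    """Return (filled values, missing indicators) for one covariate.

    Missing entries are represented as None. Both returned lists enter
    the regression as separate covariates.
    """
    filled = [fill_value if v is None else v for v in values]
    missing = [1 if v is None else 0 for v in values]
    return filled, missing

# Hypothetical baseline reading scores with one missing value.
baseline_reading = [0.3, None, -1.2]
filled, missing = dummy_variable_adjust(baseline_reading)
```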

C. Sample Characteristics, Comparability, and Attrition Analysis.

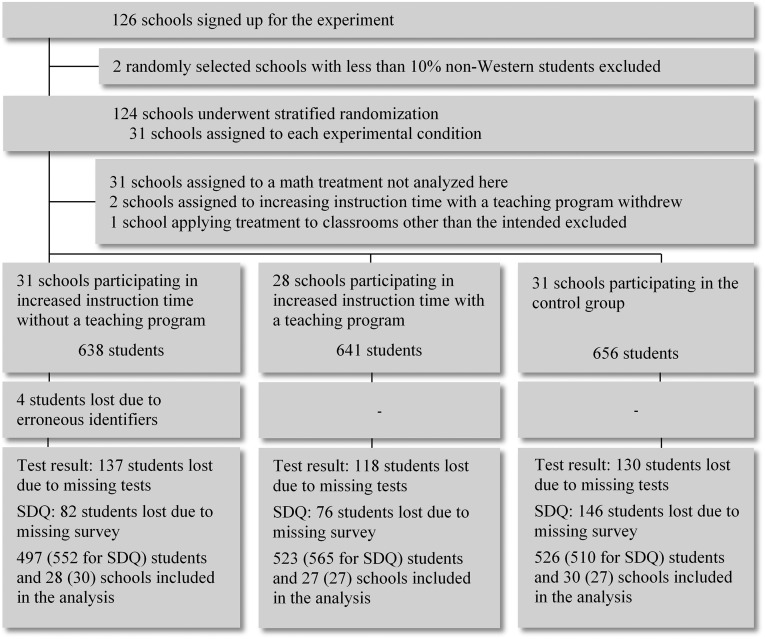

One hundred twenty-six schools signed up for the trial. Fig. S3 presents a flow diagram of the trial. To match the four experimental conditions, 124 schools were included in the stratified randomization. Before the stratified randomization, two schools with fewer than 10% non-Western students were randomly excluded. These two schools are not included in the analyses. Of the 124 randomized schools, 2 withdrew from the trial before implementation, and 31 were allocated to a third treatment group aimed at improving the math teachers’ ability to integrate Danish as a second language in the instruction. These schools are not included in the main analyses. Of the remaining 91 participating schools, 1 school participated fully in the intervention but with another classroom than assigned by the randomization and was, therefore, excluded from the sample, because we cannot measure the outcomes for the intended classroom. On the student level, four students who had been linked to invalid identifiers were also excluded. Finally, ∼20% of the sample was lost because of missing test scores (16% for SDQ scores).
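The school counts in this paragraph can be checked with a short tally. The sketch below uses only numbers reported in the text (the stratum size of four is taken from the Procedure section); the variable names are illustrative.

```python
# School-level flow, using the counts reported in the text.
enrolled = 126
excluded_before_randomization = 2
randomized = enrolled - excluded_before_randomization

# Strata of four schools each, one school per experimental condition.
strata = randomized // 4

withdrew_before_implementation = 2
third_treatment = 31
remaining = randomized - withdrew_before_implementation - third_treatment

# One school ran the intervention in a classroom other than the assigned one.
wrong_classroom = 1
schools_in_sample = remaining - wrong_classroom  # before test and survey attrition
```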

Fig. S3.

Flow diagram of schools and students participating in the randomized trial.

Descriptive characteristics of students are presented in Table S1. Here, the comparability of the sample across groups is also shown. The differences in means indicate that the randomization was successful in creating uniform samples across the intervention and control groups.

A few differences are observed across the treatment and control groups, but they are no more than what is to be expected by construction. Students in the treatment group with a TP were significantly less likely to be missing baseline reading scores compared with those in the comparison group. The pretests on student reading achievement are measured before randomization. Moreover, the fathers of students in the TP sample are marginally younger than those of students in the control group. Although the differences in means of the baseline achievement scores in math and reading are not significant, evidence from Table S1 suggests that the TP students may be a slightly positively selected sample compared with the students in the other groups.§

In the postintervention surveys, the treated students answered two questions about their participation in the extra lessons. Here, 4% of the students responded that they had formally been exempted from participation in the TP treatment, whereas 11% were formally exempt from participation in the NOTP treatment. Overall, 80% of the students assigned to either treatment reported that they had participated in all lessons. Around 90% reported having been present for more than one-half of the extra lessons. The results of our primary analyses are robust to excluding students who reported not having been present for a considerable fraction of the intervention.

As illustrated in Fig. S3, 20% of the students did not take the reading test in December, whereas 16% of the students were lost because of attrition in postintervention survey responses. In total, five whole classrooms did not complete the reading tests after the intervention, whereas six did not complete the postintervention survey.¶ Balance analyses across groups suggest that samples and treatment groups remain largely similar in terms of observables. A formal attrition analysis on both outcomes is presented in Table S2. In specifications 1 and 3 in Table S2, the probability of not attending the reading test/answering the postintervention survey is regressed only on indicators for treatment groups and stratum fixed effects. Specifications 2 and 4 in Table S2 further include available student characteristics as listed in Table S1. In terms of the postintervention reading test, specifications 1 and 2 in Table S2 reveal that there are no differences in the attrition rates across control and intervention groups. Disadvantaged students were less likely to attend the test; poorer performance on the second grade baseline reading test, missing the baseline tests, or having a single mother are strong predictors of missing the postintervention reading test. Additional analyses (not presented) suggest that NOTP students who do not attend the test may be marginally disadvantaged in terms of baseline attendance rates and reading scores compared with the control group students.

When considering the self-reported behavioral outcomes in specifications 3 and 4 in Table S2, students from both treatment groups are significantly less likely to attrite compared with the control students. Again, poorer baseline reading scores, not attending the baseline math test, and having a single mother are all associated with a higher probability of not answering the postintervention survey. We suspect that control group teachers (who are less interested in participating in the data collection, because they did not receive any treatment and may, therefore, not understand the purpose of the data collection) may cause the significantly higher attrition rate of the control group.

Because the trial focuses on students from schools with a considerable percentage of bilingual students with Danish as a second language, the sample is slightly negatively selected in terms of academic achievement, parental characteristics, and so forth compared with the general population of students in public schools. Thus, the distribution of grade 2 reading scores from the school year of 2011/2012 (one of the stratifying variables) of participating schools is to the left of that of the general population of students. Consistent with the participation requirement, 25% of the third grade students from 2012/2013 (who would attend grade 4 in the autumn of 2013) in the participating schools are of non-Western origin; the corresponding fraction is 8% for the average public school. Consequently, students in the participating schools have slightly less advantageous parental backgrounds, except that fewer come from single-mother homes. There were, however, no considerable differences in the instruction time. The average public school had slightly less yearly instruction time in Danish (190 h) than the participating schools (193 h) but with a smaller variance.

Auxiliary analyses show that, compared with other eligible public schools in Denmark, there are no significant differences in the baseline scores of the students or the yearly instruction time in Danish (193 h for both). Still, there are significantly more students of non-Western origin in the participating schools than in the eligible ones (on average 25% vs. 20%), and their mothers are generally slightly less educated.

Discussion

These results suggest that increasing instruction time does increase average student achievement. An effect of 0.15 SD in reading means that a student at the median of the reading score distribution moves to the 56th percentile. This effect is substantial given the relatively low cost of the intervention. The effect size per US $1,000 per student would be ∼0.82 SD. The results suggest that governments cannot reduce instruction time without the risk of adversely affecting student achievement, but this interpretation naturally depends on the generalizability of the results. First, for reasons explained above, the marginal effect of instruction time may be decreasing. OECD figures show that, in Denmark, where this study took place, instruction time in reading, writing, and literature at age 10 y old (which is the median age in this study) is above the average of OECD countries (Fig. S2). The total compulsory instruction time is very close to the OECD average (1). If marginal returns to instruction time are decreasing, the many countries that provide the same or less instruction time than Denmark would see effects that are at least as large. The effects for countries providing more instruction time are more uncertain.
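The percentile interpretation of a 0.15 SD effect earlier in this paragraph can be reproduced with the standard normal distribution function. The sketch below assumes that reading scores are approximately normally distributed; the function names are illustrative.

```python
from math import erf, sqrt

def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))

def percentile_of_median_student_after(effect_sd):
    """Percentile reached by a student who starts at the median (z = 0)
    and gains effect_sd standard deviations, assuming normal scores."""
    return 100.0 * normal_cdf(effect_sd)

new_percentile = percentile_of_median_student_after(0.15)
print(round(new_percentile))
```

A gain of 0.15 SD therefore moves a median student up by roughly six percentile points.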

Second, the effects of increasing instruction time may depend on the instructional regime (14). Decision-makers face a tradeoff between a high-discretion program allowing teachers to use their assessment of the individual students to tailor instruction vs. a more detailed teaching program that—based on the best available evidence—regulates the instruction more. The latter primarily affected one subscale of reading. The benefits of a detailed, expert-developed program may be outweighed by a narrower focus on a specific learning domain, and more detailed instructional regulation curtails teachers’ opportunities to differentiate their teaching to the needs of different students in the classroom. It is worth emphasizing that implementation survey data suggest that teachers generally had a positive attitude toward the teaching program: 96% of teachers reported that they used the material to some or a large extent, 83% of the teachers found that the teaching program was useful, and 88% believed that it was beneficial for the whole class.

The high-discretion instructional regime with no teaching program had a significant average treatment effect. This finding does not prove that high-discretion programs will be better than a more detailed, evidence-based program. However, it does suggest that there are some benefits of giving teachers good opportunities to differentiate their instruction. Survey data show that 90% of the teachers report that they used (parts of) the increased instruction time for working more with existing materials, and at the same time, 90% used new materials, which supports the notion that they use the high-discretion regime to adapt their teaching. The effect of increasing instruction time may also depend on other factors, such as the educational level of the teachers or other available school resources. No single study will, therefore, settle the debate. However, the very lightly regulated treatment tested in the no teaching program condition makes the results relatively applicable to other contexts.

However, the exploratory results of the high-discretion no teaching program raise two concerns. First, boys did benefit from the intervention in terms of their reading skills, but they may also have experienced increased behavioral problems. Boys have been found to have less self-control (12), and therefore, making them work longer during the day may exhaust their self-control and thereby create behavioral problems. Second, non-Western students seemed to show no or very little benefit of the intervention. Nevertheless, 73% of the teachers reported that they believed that the intervention also benefitted bilingual students. This issue points to the other aspect of the instructional regime besides the instruction itself, namely the assessment of the students’ progress. Teachers did not seem to notice when the non-Western students did not benefit from the instruction. Therefore, a high-discretion regime on the instruction side might be even more effective when combined with more regulation on the assessment side, thereby making teachers more aware of how their students respond to their teaching.

It should be emphasized that these considerations should be seen as hypotheses for future research, because the power of the trial does not allow strong inference about the differences between the student groups and treatment conditions. The results do confirm, however, that increasing instruction time in an instructional regime with little formalization has positive average treatment effects on the reading skills of the students.

Methods

Participants.

The randomized, controlled trial was approved and funded by the Danish Ministry of Education and Aarhus University. All schools volunteered to participate in the trial. Parents of students were informed about the content of the trial beforehand and told how to withdraw their child from the trial if they wished.

All interventions were implemented for students in grade 4 in the fall of 2013. Danish public schools that expected to have at least 10% bilingual students in grade 4 in the school year 2013/2014 were eligible to participate in the trial.

The participating schools were fully reimbursed for the costs associated with participation in the trial.

Procedure.

In March of 2013, the Minister of Education sent an email to all municipalities in Denmark informing them about the upcoming randomized trial, eligibility criteria, and enrollment procedures. The municipalities were invited to enroll all of their eligible schools in the trial; 126 schools enrolled in the trial. We estimate that this constitutes about 37% of the eligible schools.

The trial was a two-stage, cluster-randomized trial with three treatment arms. Fig. S3 shows a diagram of the flow of schools and students participating in the trial. The two levels of randomization were school and classroom. First, administrative records from the school year of 2011/2012 were used to divide schools into strata based on the share of students of non-Western origin in grade 2 and the average score on the national reading test in grade 2. Each stratum contained four schools, and allocation to one of four experimental conditions (the two treatment arms, the control group, and a third treatment not related to instruction time and not analyzed here) was random within the stratum. Second, one classroom in each school was selected to participate in the trial by simple randomization. Randomization assures an unbiased distribution of baseline characteristics between experimental conditions, although some imbalance will occur in any finite sample. We find no substantial imbalances between control and treatment groups (among 28 baseline student characteristics reported in Table S1, none of the mean values were significantly different across the treatment group without a teaching program and the control group, and only two means were significantly different across the treatment group with a teaching program and the control group). However, some minor imbalance occurs in baseline reading achievement between the control group and the teaching program group. To be conservative and because of a strong expected relationship between baseline achievement and outcomes, the effect estimates presented here are controlled for baseline achievement in reading and math. SI Methods and SI Results have more details about the balance of the experimental conditions and the robustness of the results to different model specifications.
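The two-stage allocation described above can be sketched as follows. This is an illustration under stated assumptions, not the procedure actually run for the trial: the strata are taken as given (the text does not say how schools were grouped into sets of four), and the function names, school identifiers, and seed are hypothetical.

```python
import random

CONDITIONS = ("NOTP", "TP", "third treatment", "control")

def assign_within_strata(strata, rng):
    """Stage 1: randomly allocate the four schools of each stratum
    to the four experimental conditions, one school per condition."""
    allocation = {}
    for schools in strata:
        if len(schools) != len(CONDITIONS):
            raise ValueError("each stratum must contain exactly four schools")
        shuffled = list(CONDITIONS)
        rng.shuffle(shuffled)
        for school, condition in zip(schools, shuffled):
            allocation[school] = condition
    return allocation

def pick_classroom(classrooms, rng):
    """Stage 2: select one classroom per school by simple randomization."""
    return rng.choice(classrooms)

# Hypothetical input: 31 strata of four schools (124 schools in total).
rng = random.Random(2013)
strata = [[f"school_{4 * k + i}" for i in range(4)] for k in range(31)]
allocation = assign_within_strata(strata, rng)
chosen = pick_classroom(["4A", "4B", "4C"], rng)
```

Because every stratum contributes exactly one school to each condition, the ex ante probability of each condition is the same for all schools.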

Although the trial included three treatment arms, the focus of this study is the two interventions that involved an increase in instruction time. Thus, we exclude schools that received the third treatment from our analyses. In the interventions that involved an increase in instruction time, the classrooms received four extra (45-min) lessons per week for 16 wk. In the first treatment arm, classrooms received extra instruction time in Danish with high teacher discretion (i.e., without a teaching program). Thus, the teachers were not provided with any explicit teaching material. In the second treatment arm, classrooms received extra instruction time in Danish with low teacher discretion (i.e., teachers were provided with very detailed teaching material containing texts and classroom exercises for each week of the intervention).

Analyses.

We estimate each of the two treatment effects separately (i.e., including only observations in the relevant treatment arm and the control group). The empirical analysis presented is based on a linear regression model that includes a treatment indicator, stratum fixed effects, and baseline test scores. The stratum fixed effects are included to take into account that treatment assignment was random within strata. We account for the hierarchical structure of the data (students within classrooms) by clustering SEs at the classroom level. Similar results are found using hierarchical linear modeling (Table S6), which would be expected based on simulations comparing hierarchical linear or multilevel models with models using clustered SEs (22). Because of attrition, outcome data are missing for some students. Test score attrition does not correlate with the treatment assignment, but participants in the control group were less likely to respond to the postintervention survey containing the SDQ outcomes (Table S2). We adjust for baseline achievement to present the more conservative estimates. Effect sizes are generally larger when we do not include baseline achievement (Table S3). We estimate the intention to treat effect, which is an estimate of the effect of assigning students to increased instruction time that does not impose assumptions about noncompliance. The intention to treat effect is of immediate relevance to policymakers, because it reflects the average treatment effect taking into account that not all students will comply with a policy that increases instruction time. For instance, students may transfer to a private school or other schools with no increase in instruction time.

Table S6.

Intervention effects on student reading achievement (panel A) and total behavioral difficulties (panel B) based on two-level hierarchical models

| Description | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| Panel A. Outcome: | Reading, grade 4 | Reading, grade 4 | Reading, grade 4 | Reading, grade 4 | Reading, grade 4 | Reading, grade 4 |
| NOTP | 0.150* (0.066) | 0.167† (0.055) | 0.166† (0.052) | | | |
| With a TP | | | | 0.157* (0.061) | 0.041 (0.049) | 0.039 (0.046) |
| Constant | −1.187† (0.172) | −0.321* (0.144) | −0.111 (0.270) | −1.509* (0.164) | −0.594† (0.131) | −0.829† (0.273) |
| Observations | 1,023 | 1,023 | 1,023 | 1,049 | 1,049 | 1,049 |
| No. of groups | 58 | 58 | 58 | 57 | 57 | 57 |
| Panel B. Outcome: | Difficulties | Difficulties | Difficulties | Difficulties | Difficulties | Difficulties |
| NOTP | 0.563 (0.355) | 0.487 (0.340) | 0.500 (0.340) | | | |
| With a TP | | | | −0.562 (0.364) | −0.395 (0.350) | −0.545 (0.347) |
| Constant | 7.718† (1.015) | 5.819† (0.998) | 6.476† (2.177) | 10.623† (0.985) | 8.363† (0.974) | 10.352† (2.263) |
| Observations | 1,062 | 1,062 | 1,062 | 1,075 | 1,075 | 1,075 |
| No. of groups | 57 | 57 | 57 | 54 | 54 | 54 |
| Stratum indicators | Yes | Yes | Yes | Yes | Yes | Yes |
| Baseline achievement | No | Yes | Yes | No | Yes | Yes |
| Covariates | No | No | Yes | No | No | Yes |

Random intercepts are included in all specifications. Panel A shows the results on reading achievement, whereas panel B shows the results on the total difficulty score. The table shows included covariates. SEs are in parentheses.

*P < 0.05.

†P < 0.01.

Additional details about the trial and the analyses are in SI Methods and SI Results.

SI Results

A. Preliminary Analyses.

Our preliminary analyses estimate the raw intervention effects as measured by the difference in the raw means between the two treatment groups and the control group. The results presented in columns 1 and 2 in Table S3 suggest that increasing instruction time has a significant positive effect of more than 0.2 SDs (P = 0.02 and P = 0.06, respectively) on student achievement for both interventions, whereas the effects on total behavioral difficulties are inconclusive (columns 1 and 2 in Table S4). These differences in raw means for reading achievement are somewhat larger than the results of our primary analyses (SI Results, section B), where stratum fixed effects are included in the models. Including stratum indicators reduces the estimated effect sizes to around 0.15 SD (P = 0.06 and P = 0.01, respectively), presumably reflecting a slight imbalance in terms of unequal proportions of treatment and control students across blocks. Although the ex ante probability of being assigned to an experimental condition is the same across strata, differing class sizes cause the ex post proportions of students in these conditions to differ. However, including stratum fixed effects ensures that only similar schools in terms of the stratification variables (baseline achievement in reading and share of non-Western students at the grade level) are compared in the analysis, thus more accurately—and more conservatively—depicting the true intervention effects. Lastly, we cannot reject that the intervention effects are the same when estimated with and without stratum indicators (P = 0.24 NOTP; P = 0.62 with TP). We, therefore, include stratum indicators in our primary analyses as suggested in the works in refs. 29 and 30.

B. Primary Analyses.

In our primary analyses, we estimate the effects of the interventions using ordinary least squares regression with SEs corrected for clustering within schools (in SI Results, section C, we also present evidence of the robustness of our results by reproducing them in a multilevel model to account for the nested structure of the data). The model specifies that student achievement is a function of baseline achievement and other student-specific characteristics. Thus,

$$y_{ijk} = \alpha + \beta T_{jk} + A_{ijk}'\gamma + X_{ijk}'\theta + \delta_k + \varepsilon_{ijk} \qquad \text{[S1]}$$

where $y_{ijk}$ denotes the outcome of interest for student i in school j of stratum k, and $T_{jk}$ is the treatment indicator. The model is estimated separately for the two treatment groups to increase flexibility (i.e., each treatment group is compared with the control group separately)#; $\beta$ represents the intention to treat (ITT) effect of the relevant treatment. The primary outcomes are student academic achievement in reading and total behavioral difficulties (SDQ). All models include stratum fixed effects ($\delta_k$). The stratum fixed effects are parameterized by including a dummy variable for each stratum (i.e., 30 stratum indicators). We estimate the above model both including and excluding baseline achievement and other baseline student characteristics. The vector $A_{ijk}$ contains the baseline achievement in reading (grade 2) and math (grade 3) as well as corresponding missing indicators. The vector $X_{ijk}$ includes child covariates (indicators for student age, quarter of birth, gender, birth order, being of non-Western origin,‖ and having a single mother), parental covariates (age, log of earnings, and a set of indicators for educational attainment), and relevant missing indicators (Table S1 shows a complete list of covariates).
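For the specification with only a treatment indicator and stratum fixed effects, the ordinary least squares coefficient on treatment equals the slope from regressing the stratum-demeaned outcome on the stratum-demeaned treatment indicator (the Frisch-Waugh-Lovell result). The sketch below illustrates that point estimate only; it omits baseline achievement, other covariates, and the cluster-robust SEs, and the function name and toy data are hypothetical.

```python
from collections import defaultdict

def itt_with_stratum_fe(outcomes, treated, strata):
    """OLS coefficient on a 0/1 treatment indicator in a model with a
    full set of stratum fixed effects, computed by within-stratum demeaning."""
    members = defaultdict(list)
    for i, stratum in enumerate(strata):
        members[stratum].append(i)
    numerator = denominator = 0.0
    for idx in members.values():
        y_bar = sum(outcomes[i] for i in idx) / len(idx)
        t_bar = sum(treated[i] for i in idx) / len(idx)
        for i in idx:
            numerator += (treated[i] - t_bar) * (outcomes[i] - y_bar)
            denominator += (treated[i] - t_bar) ** 2
    if denominator == 0:
        raise ValueError("no within-stratum variation in treatment")
    return numerator / denominator

# Toy data: outcome = stratum level + 0.15 for treated students.
strata = ["A", "A", "A", "B", "B", "B"]
treated = [1, 1, 0, 1, 0, 0]
outcomes = [1.15, 1.15, 1.00, 2.15, 2.00, 2.00]
itt = itt_with_stratum_fe(outcomes, treated, strata)
```

In the toy data, a naive difference in raw means would mix the treatment effect with the difference in stratum levels, whereas the within-stratum estimator recovers the 0.15 shift.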

Table S3, panel A presents the intervention effects on student reading achievement. Our preferred specifications 4 and 7 in Table S3 correspond to the results presented in the text and include stratum fixed effects and the baseline achievement measures. Specifications 3 and 6 in Table S3 include only stratum indicators, whereas specifications 5 and 8 in Table S3 also include covariates. Inclusion of covariates has little effect on the estimated effects. There are 58 and 57 clusters in the NOTP and TP samples, respectively. The average cluster sizes are 18 in both samples, and the median is 18 in the NOTP sample and 20 in the TP sample. Only nine clusters in total contain fewer than 12 students; 50 clusters of roughly equal size are often enough for cluster-robust SEs to provide valid inference (31).

Participating schools were asked to sign up for the reading test on the last day of testing (December 13). Thus, the estimated ITT effects presented here may be interpreted as an immediate ITT. As noted in the text, the results indicate considerable and significant effects of increasing instruction time (Table S3, panel A). Particularly, four extra high-discretion Danish lessons per week (NOTP) benefit students in terms of improved reading achievement.

Although the results for increased instruction time NOTP are robust to the inclusion of baseline achievement and other covariates, the results for increasing instruction time with the low discretion program are not. Controlling for baseline achievement, the overall effect of the TP treatment becomes small and statistically insignificant.** The marked drop in the estimated effect size from specification 6 to 7 in Table S3 suggests that the distribution of student baseline achievement across the control and treatment groups is slightly skewed. Overall, the TP treatment group students simply performed slightly better at the outset, although the differences in the means of the baseline achievements in reading and math were not significant. The text focuses on the specifications including the baseline achievement measures, which are more conservative.

To further elaborate on this issue, we test the effects of increasing instruction time against the effect of the third intervention, which aimed to enhance the skills of the classroom math teachers. The math teachers participated in a 5-d course designed by the Danish Ministry of Education and received a written proposal of an ideal lesson plan to better qualify them to integrate Danish as a second language in the math classes. This treatment is expected to affect student achievement in reading either not at all or only to a very limited extent. At the same time, the distributions of baseline achievement measures among the students in the TP treatment group are more comparable with those of the students in the math treatment group than with those of the control group, implying that the math treatment group is a plausible comparison group.†† Strictly speaking, the estimated effects will reflect how much increased instruction time increases student achievement relative to an upgrading of the math teacher. The results of this robustness analysis are shown in Table S3, panel B. The estimated effects in specifications 3–5 are on the same order of magnitude as those in Table S3, panel A, and they are still robust to the inclusion of baseline achievement measures. However, the inclusion of baseline achievement in specification 7 in Table S3 causes a less dramatic drop in the estimated effect than would be expected if the TP group is somewhat positively selected relative to the control group.

Furthermore, we analyze the intervention effects on the three domains of the reading test: language comprehension, decoding, and reading comprehension. Table S3, panel C presents the results where the three domains are considered as separate outcomes. All of the specifications include the baseline achievement to take into account the sensitivity of the effect of the TP intervention. The results are robust to the inclusion of covariates. The curriculum in the TP intervention was developed with a particular focus on language comprehension. Interestingly, language comprehension seems to be the only domain significantly affected by the TP intervention, whereas the NOTP intervention seems to impact a wider range of cognitive reading domains.‡‡

We conduct exploratory analyses of whether the effects of increased instruction time differ across identifiable subgroups of students defined by gender and country of origin. Table S5 presents the results of our main specification by subsamples: boys, girls, students of non-Western origin, and students of Western and Danish origin. Where information on the relevant characteristic is missing, the observation is dropped from the subsamples; thus, the sample sizes do not correspond to those in Tables S3 and S4. Unadjusted P values are shown. To control the familywise error rate associated with testing multiple hypotheses simultaneously, we apply the simple stepdown method proposed in ref. 32. Adjusted P values are shown. Although more powerful than the standard Bonferroni method, this correction assumes independence of the individual P values, and thus, we consider it conservative. We block 24 hypotheses in Table S5 into two families based on the outcome of choice [i.e., reading scores (Table S5, panel A) and SDQ scores (Table S5, panel B)]. Using two-sided tests and a familywise error rate of 0.1, we can still reject that increased instruction time NOTP has no effect on Danish students and students of Western origin and that the extra lessons with a TP have no effect for girls. In the SDQ block, the null hypothesis of no treatment effect of increased instruction time with the TP is rejected for girls.
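As an illustration of how a stepdown adjustment works within one family of hypotheses, the sketch below implements the Holm procedure. That the method of ref. 32 is of this type is an assumption made here for illustration, and the function name `holm_adjust` and the P values are hypothetical rather than taken from Table S5.

```python
def holm_adjust(p_values):
    """Return Holm stepdown adjusted P values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest P value is scaled by the number of hypotheses
        # still under consideration, m - k + 1 (rank is zero-based here).
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Adjusted P values must be non-decreasing in the sorted order.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Hypothetical unadjusted P values for one family of hypotheses
raw = [0.01, 0.04, 0.03]
adjusted = holm_adjust(raw)
rejected = [p <= 0.1 for p in adjusted]  # familywise error rate of 0.1
print(adjusted, rejected)
```

The smallest P value is multiplied by the full number of hypotheses, as in Bonferroni, but later ones are multiplied by progressively smaller factors, which is the source of the gain in power. In the setting described above, the adjustment would be applied separately to each of the two families of 12 hypotheses.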

C. Analyses Based on a Two-Level Hierarchical Model.

We investigate whether the nested structure of the data significantly affects our results in Table S3. A standard two-level hierarchical model of student achievement is considered to account for the sampling of students within classrooms. As before, all of the models include stratum fixed effects, and analyses both with and without baseline achievement and student-specific covariates were conducted. For the full models, the student level (level 1) includes all of the baseline achievement measures and covariates. The school-level model (level 2) includes indicators for treatment assignment and random effects for individual schools. Formally, we consider the following models.

Level 1 model (students):

Level 2 model (schools):

In the models, the coefficient on the treatment indicator in the level 2 model measures the ITT effect of each treatment in separate specifications. The model is estimated separately for the two treatment groups to increase flexibility. A more detailed description of the variables can be found in relation to the description of the model used for the primary analyses.
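For orientation, a generic two-level random-intercept model consistent with the verbal description in this section can be sketched as follows. The notation is an assumption chosen for illustration and is not necessarily the authors' exact specification: $y_{is}$ is the outcome of student $i$ in school $s$, $\mathbf{x}_{is}$ collects the baseline achievement measures and covariates, $T_s$ is the treatment indicator, $\mathbf{d}_s$ collects the stratum indicators, and $u_s$ is the school random effect.

```latex
\begin{align}
\text{Level 1 (students):}\quad
  & y_{is} = \beta_{0s} + \mathbf{x}_{is}'\boldsymbol{\beta} + \varepsilon_{is},
  & \varepsilon_{is} &\sim N(0, \sigma^2) \\
\text{Level 2 (schools):}\quad
  & \beta_{0s} = \gamma_0 + \gamma_1 T_s + \mathbf{d}_s'\boldsymbol{\lambda} + u_s,
  & u_s &\sim N(0, \tau^2)
\end{align}
```

Under this assumed notation, $\gamma_1$ is the ITT effect, and substituting the level 2 equation into the level 1 equation gives a single regression of $y_{is}$ on $T_s$, $\mathbf{d}_s$, and $\mathbf{x}_{is}$ with a composite error $u_s + \varepsilon_{is}$.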

The results from the two-level hierarchical models are presented in Table S6. The results are very similar to the results presented in Table S3, which is in line with previous findings that hierarchical linear models and linear models with clustered SEs produce similar results (22).

D. Analyses of Teachers’ Use of the Additional Instruction Time.

During the intervention period, two rounds of implementation surveys were administered to the two treatment groups: one in the last half of September and one in November (Fig. S1). In the following section, we focus on results from the latter, which is thought to better capture the overall implementation of the interventions.

All teachers in the NOTP treatment responded that they were the regular Danish teacher of the participating class; 77% of the teachers (two nonrespondents) reported the same in the TP sample.

In the remainder of this section, the implementation survey sample sizes are 30 for the NOTP sample (one nonrespondent) and 24 for the TP sample (four nonrespondents).

NOTP.

The NOTP teachers were free to spend the extra time as they pleased. This feature is reflected in the survey data; 90% of the responding teachers reported that they, to some or a great extent, spent the extra lessons covering the same material that they had already planned—but in greater detail. At the same time, most teachers also experimented with new material. In total, 90% responded that they, to some or a great extent, tried out new material and methods; 40% reported that they, to some or a great extent, spent the extra lessons on separate projects or bundled the lessons for project days.

The NOTP teachers received a 27-page report for inspiration on how to support bilingual students, but they were not required to use it in the treatment; 40% of the teachers reported that they used this material to a small extent, whereas 40% reported not having used it at all. Only 17% of the teachers found the report useful, whereas 47% found it useless or did not use it at all.

The teachers generally had a positive attitude toward the intervention; 77% of the NOTP teachers agreed that the high-discretion intervention was profitable for the class as a whole, whereas 73% agreed that the bilingual students profited. Eighty-three percent responded that they enjoyed teaching the extra lessons.

With a TP.

The TP teachers were presented with a very detailed TP to use. The survey data confirm a high compliance rate. In the implementation surveys, 96% of the responding TP teachers (all but one) stated that they had used the TP material to some or a large extent; 83% responded that they found the material useful. The remaining 17% were indifferent.

Here too, the teachers generally had a positive attitude toward the program; 88% agreed that the intervention with the TP was profitable for the class as a whole, whereas 83% agreed that it was profitable for bilingual students. Sixty-seven percent of the TP teachers enjoyed teaching the program in the extra lessons.

Acknowledgments

We thank Michael Rosholm for supporting the setup of the project, Rambøll for data collection assistance and support, and Jessica Goldberg for helpful comments and discussions. The implementation and evaluation of the randomized trial were funded by the Danish Ministry of Education.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission. J.L. is a guest editor invited by the Editorial Board.

Data deposition: The data used in this study are survey and administrative data hosted by Statistics Denmark. The data cannot be made publicly available because they are sensitive and contain individual-level information. Under the data security policy, access can be obtained from Aarhus only and currently requires approval by Statistics Denmark and completion of a test on data security policy. M.K.H. will provide assistance for researchers who are interested in getting access to the data.

*In Denmark, teachers are subject-specific but follow the same classroom across several grades. On average, the teachers in the trial had taught the students for 1.5 years before the trial started.

†Baseline test results are obtained by means of mandatory testing and standardized based on their own distributions.

‡A student survey was also administered at the beginning of the intervention period. Because this survey was conducted after randomization, which is not ideal, we do not use these data as baseline. Auxiliary analyses confirm that the propensity to answer the presurvey differed across treatment conditions.

§An F test confirms that mean baseline characteristics jointly differ across TP and control groups (P = 0.00; P values corrected for clustering at the school level). The F statistic tests the joint hypothesis that the coefficients of all covariates are equal to zero in a model regressing the treatment indicator on all covariates. In other words, if the mean of just one variable is significantly different between the treatment and control groups, the null hypothesis does not hold. Therefore, for the TP group, the significant F test confirms what can also be seen from the balance table, namely that a few variables are significantly related to treatment assignment. However, we fail to reject that differences in the baseline characteristics of the NOTP and control groups are jointly zero (P = 0.71; P values corrected for clustering at the school level). Thus, other than not being significantly related to the treatment indicator individually, the covariates are also not jointly significant. It should be noted, however, that small imbalances in variables strongly related to the outcome—such as baseline achievement—are more important to adjust for than statistically significant differences in variables weakly related to the outcome (28, 29).

¶As a consequence of the pairwise (four-way) stratification strategy, when outcomes are missing for an entire school, the other school in the stratum does not contribute to the point estimate of the intervention effect. Thus, the estimation sample is selected on attrition. Estimated effect sizes in models without strata indicators are larger than in the main specification with strata indicators (compare with raw differences in means in Table S3).

#We fail to reject the hypothesis that a pooled specification, where indicators of both treatments are included simultaneously, produces the same intervention effects. Eq. S1, however, has the advantage that it is less sensitive to potential imbalances in baseline covariates that may occur by chance across experimental conditions.

‖Non-Western origin includes students who are either immigrants or second-generation immigrants from non-Western countries. Definitions of immigrants and non-Western countries are provided by Statistics Denmark.

**The drop in the point estimate is unlikely to be driven by attrition because of missing outcomes. The gap in estimated effect sizes is similar when including an imputed version (nonstochastic regression imputation) of the missing fourth grade test results as an outcome.

††Mean baseline reading score for the math treatment group is −0.103, and the corresponding mean baseline math score is −0.169.

‡‡This analysis should be considered exploratory only; P values are not corrected for multiplicity.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1516686113/-/DCSupplemental.

References

- 1. OECD (2014) Education at a Glance (OECD Publishing, Paris)

- 2. Patall E, Cooper H, Allen A. Extending the school day or school year. Rev Educ Res. 2010;80(3):401–436.

- 3. Meyer E, van Klaveren C. The effectiveness of extended day programs: Evidence from a randomized field experiment in the Netherlands. Econ Educ Rev. 2013;36:1–11.

- 4. Kikuchi N. The effect of instructional time reduction on educational attainment. J Jpn Int Econ. 2014;32:17–41.

- 5. Jensen VM. Working longer makes students stronger? Educ Res. 2013;55(2):180–194.

- 6. Parinduri RA. Do children spend too much time in schools? Evidence from a longer school year in Indonesia. Econ Educ Rev. 2014;41:89–104.

- 7. Fryer RG. Injecting charter school best practices into traditional public schools: Evidence from field experiments. Q J Econ. 2014;129(3):1355–1407.

- 8. Cortes KE, Goodman JS. Ability-tracking, instructional time, and better pedagogy: The effect of double-dose algebra on student achievement. Am Econ Rev. 2014;104(5):400–405.

- 9. Schochet PZ. Statistical power for random assignment evaluations of education programs. J Educ Behav Stat. 2008;33(1):62–87.

- 10. Duckworth AL, Seligman MEP. Self-discipline outdoes IQ in predicting academic performance of adolescents. Psychol Sci. 2005;16(12):939–944. doi: 10.1111/j.1467-9280.2005.01641.x.