Significance

Placebo effects pose problems for some intervention studies, particularly those with no clearly identified mechanism. Cognitive training falls into that category, yet the role of placebos in cognitive interventions has not been critically evaluated. Here, we show clear evidence of placebo effects after a brief cognitive training routine that led to significant fluid intelligence gains. Our goal is to emphasize the importance of ruling out alternative explanations before attributing effects to interventions. Based on our findings, we recommend that researchers account for placebo effects before claiming treatment effects.

Keywords: placebo effects, cognitive training, brain training, fluid intelligence

Abstract

Although a large body of research shows that general cognitive ability is heritable and stable in young adults, there is recent evidence that fluid intelligence can be heightened with cognitive training. Many researchers, however, have questioned the methodology of the cognitive-training studies reporting improvements in fluid intelligence: specifically, the role of placebo effects. We designed a procedure to intentionally induce a placebo effect via overt recruitment in an effort to evaluate the role of placebo effects in fluid intelligence gains from cognitive training. Individuals who self-selected into the placebo group by responding to a suggestive flyer showed improvements after a single 1-h session of cognitive training; these improvements equate to a 5- to 10-point increase on a standard IQ test. Controls responding to a nonsuggestive flyer showed no improvement. These findings provide an alternative explanation for effects observed in the cognitive-training literature and the brain-training industry, revealing the need to account for confounds in future research.

What’s more, working memory is directly related to intelligence—the more you train, the smarter you can be.

NeuroNation (www.neuronation.com/, May 8, 2016)

The above quotation, like many others from the billion-dollar brain-training industry (1), suggests that cognitive training can make you smarter. However, the desire to become smarter may blind us to the role of placebo effects. Placebo effects are well known in the context of drug and surgical interventions (2, 3), but the specter of a placebo may arise in any intervention when the desired outcome is known to the participant—an intervention like cognitive training. Although a large body of research shows that general cognitive ability, g, is heritable (4, 5) and stable in young adults (6), recent research stands in contrast to this, indicating that intelligence can be heightened by cognitive training (7–12). General cognitive ability and IQ are related to many important life outcomes, including academic success (13, 14), job performance (15), health (16, 17), morbidity (18), mortality (18, 19), income (20, 21), and crime (13). In addition, the growing population of older people seeks ways to stave off devastating cognitive decline (22). Thus, becoming smarter or maintaining cognitive abilities via cognitive training is a powerful lure, raising important questions about the role of placebo effects in training studies.

The question of whether intelligence can be increased through training has generated a lively scientific debate. Recent research claims that it is possible to improve fluid intelligence (Gf: a core component of general cognitive ability, g) by means of working memory training (7–12, 23, 24); even meta-analyses support these claims (25, 26), concluding that improvements from cognitive training equate to an increase “…of 3–4 points on a standardized IQ test.” (ref. 25; but cf. ref. 27). However, researchers have yet to identify, test, and confirm a clear mechanism underlying fluid intelligence gains after cognitive training (28). One potential mechanism that has yet to be tested is that the observed effects are partially due to positive expectancy or placebo effects.

Researchers now recognize that placebo effects may potentially confound cognitive-training [i.e., “brain training” (29)] outcomes and may underlie some of the posttraining fluid intelligence gains (24, 27, 29–32). Specifically, it has been argued that “overt” recruitment methods in which the expected benefits of training are stated (or implied) may lead to a sampling bias in the form of self-selection, such that individuals who expect positive results will be overrepresented in any sample of participants (29, 33). If an individual volunteers to participate in a study entitled “Brain Training and Cognitive Enhancement” because he or she thinks the training will be effective, any effect of the intervention may be partially or fully explained by participant expectations.

Expectations regarding the efficacy of cognitive training may be rooted in beliefs regarding the malleability of intelligence (34). Dweck’s (34) work showed that people tend to hold strong implicit beliefs regarding whether or not intelligence is malleable and that these beliefs predict a number of learning and academic outcomes. Consistent with that work, there is evidence that individuals with stronger beliefs in the malleability of intelligence have greater improvements in fluid intelligence tasks after working-memory training (10). If individuals who believe that intelligence is malleable are overrepresented in a sample, the apparent effect of training may be related to the belief of malleability, rather than to the training itself.

The present study was motivated by concerns about overt recruitment and self-selection bias (29, 33), as well as by our own observation that few published articles on cognitive training provide details regarding participant recruitment. In fact, of the primary studies included in the meta-analysis of Au et al. (25), only two provided sufficient detail to determine whether participants were recruited overtly [e.g., “sign up for a brain training study” (10)] or covertly [e.g., “did not inform subjects that they were participating in a training study” (24)]. (We were able to assess 18 of the 20 studies.) We later emailed the corresponding authors of all of the studies in the Au et al. (25) meta-analysis for more detailed recruitment information. (This step was done at the suggestion of a reviewer and occurred after data collection was complete. We chose to place this information here instead of in the Discussion to accurately portray the current recruitment standards within the field.) All but one author responded. We determined that 17 (of 19) studies used overt recruitment methods that could have introduced a self-selection bias. Specifically, 17 studies explicitly mentioned “cognitive” or “brain” training. Of those 17, 11 further suggested the potential for improvement or enhancement. Only two studies mentioned neither (Table S1). A comparison of the effect sizes listed in the Au et al. (25) meta-analysis across these three methods of recruitment (i.e., overt, overt and suggestive, and covert) lends further credence to the possibility of a confounded placebo effect: for all of the studies that overtly recruited, Hedges’ g = 0.27; for all of the studies that overtly recruited and suggested improvement, Hedges’ g = 0.28; and for the studies that covertly recruited, Hedges’ g = 0.11.
Lastly, we searched the internet (via Google) for the terms “participate in a brain training study” and “brain training participate.” The top 10 results of each search revealed six separate laboratories that are actively and overtly recruiting individuals to participate in either a “brain training study” or a “cognitive training study.” Taken together, these findings provide clear evidence that suggestive recruitment methods are common and that such recruitment may contribute to the positive outcomes reported in the cognitive-training literature. We therefore hypothesized that overt and suggestive recruitment would be sufficient to induce positive posttraining outcomes.

Table S1.

Responses from authors when queried about recruitment methods

| No. | Response |
|---|---|
| 1 | “We were definitely explicit about cognitive training and the potential for improving intelligence. We wanted to stack the deck toward seeing effects in as many ways as possible.” |
| 2 | “Our advertisements did state the improvement of memory.” |
| 3 | “We informed them [participants]…that they may profit from the training.” |
| 4 | “Yes, the participants were informed that were participating in a cognitive training study.” |
| 5 | “The flyer said that we're looking for participants for a cognitive training study.” |
| 6 | “I don’t remember exactly the how announcement or posting were worded, but I’m pretty sure “working memory training” or “train your brain” were in there somewhere.” |
| 7 | “Thanks for your interest in my cognitive training experiments.” |
| 8 | “We never used the words brain training or cognitive training in describing the study.” |

Materials and Methods

We designed a procedure to intentionally induce a placebo effect via overt recruitment. Our recruitment targeted two populations of participants using different advertisements varying in the degree to which they evoked an expectation of cognitive improvement (Fig. 1). Once participants self-selected into the two groups, they completed two pretraining fluid intelligence tests followed by 1 h of cognitive training and then completed two posttraining fluid intelligence tests on the following day. Two individual difference metrics regarding beliefs about cognition and intelligence were also collected as potential moderators. The researchers who interacted with participants were blind to the goal of the experiment and to the experimental condition. Aside from their means of recruitment, all participants completed identical cognitive-training experiments. All participants read and signed an informed consent form before beginning the experiment. The George Mason University Institutional Review Board approved this research.

Fig. 1.

Recruitment flyers for placebo (Left) and control (Right) groups.

We recruited the placebo group (n = 25) with flyers overtly advertising a study for brain training and cognitive enhancement (Fig. 1, Left). The text “Numerous studies have shown working memory training can increase fluid intelligence” was clearly visible on the flyer. We recruited the control group (n = 25) with a visually similar flyer containing generic content that did not mention brain training or cognitive enhancement. We determined the sample sizes for both groups based on two a priori criteria: (i) previous training studies reporting significant effects had sample sizes of 25 or fewer (7, 8); and (ii) statistical power analyses (power ≥ 0.7) for between-group designs indicated a sample size of 25 per group for a moderate to large effect size (d ≥ 0.7). Our rationale for the first criterion was that we were trying to replicate previous training studies, but with the additional manipulation of a placebo that had been omitted in those studies. The second criterion gave us a good chance of detecting a reasonably large and important effect with the sample size we selected. In sum, the sample size allowed a close replication of prior studies while limiting us to detecting only effects large enough to be worth reporting. The final sample consisted of 19 males and 31 females, with an average age of 21.5 y (SD = 2.3). The groups (n = 50; 25 per condition) did not differ by age [t(48) = 0.18, P = 0.856] or by gender composition [χ²(1) = 0.76, P = 0.382].
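As a rough check on criterion (ii), the sketch below computes the power of a two-sided comparison between two groups of 25 under a normal approximation. The significance level (α = 0.05) and the use of the normal rather than the noncentral t distribution are our assumptions, since the text does not state how the power analysis was run; exact t-based power with df = 48 would typically come out slightly lower.

```python
from math import sqrt
from statistics import NormalDist


def approx_power_two_sample(d: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Normal-approximation power for a two-sided test of two equal-sized groups.

    d is the standardized mean difference (Cohen's d). alpha = 0.05 is an
    assumed default; the study does not report the alpha used.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)  # two-sided critical value
    shift = d * sqrt(n_per_group / 2)           # expected z statistic under the alternative
    # Probability that the test statistic falls in either rejection region.
    return (1 - std_normal.cdf(z_crit - shift)) + std_normal.cdf(-z_crit - shift)


for d in (0.7, 0.8, 1.0):
    print(f"d = {d}: approximate power with 25 per group = "
          f"{approx_power_two_sample(d, 25):.2f}")
```

Under these assumptions, d = 0.7 with 25 participants per group yields power close to 0.7, in line with the stated criterion, and power rises quickly for larger effects.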

After the pretests (Gf assessments described below), participants completed 1 h of cognitive training with an adaptive dual n-back task (SI Materials and Methods). We chose this task for two reasons: First, it is commonly used in cognitive training research, and, second, a high-face-validity task was required to maintain the credibility of the training regimen [compare placebo pain medication appearing identical to the real medication (35)]. In this task, participants were presented with two streams of information: auditory and visuospatial. There were eight stimuli per modality, presented at a rate of 3 s per stimulus. For each stream, participants decided whether the current stimulus matched the stimulus that was presented n items earlier. Our n-back task was an adaptive version in which the level of n changed as performance increased or decreased within each block.
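To make the matching rule concrete, here is a minimal sketch of scoring one dual n-back block and updating the level of n. Stimuli are coded as integers 0–7 to reflect the eight stimuli per modality. The per-trial accuracy score, the 80% advance and 50% fallback thresholds, and the choice to update n once per block are hypothetical simplifications for illustration, not the settings of the training software used in the study.

```python
from typing import List, Sequence


def nback_targets(stream: Sequence[int], n: int) -> List[bool]:
    """Mark each trial whose stimulus matches the stimulus presented n trials earlier."""
    return [i >= n and stream[i] == stream[i - n] for i in range(len(stream))]


def stream_accuracy(stream: Sequence[int], responses: Sequence[bool], n: int) -> float:
    """Share of scorable trials (index >= n) where the match / no-match response was correct."""
    targets = nback_targets(stream, n)
    scorable = range(n, len(stream))
    correct = sum(responses[i] == targets[i] for i in scorable)
    return correct / len(scorable)


def next_level(n: int, accuracy: float, advance: float = 0.8, fallback: float = 0.5) -> int:
    """Hypothetical adaptive rule: raise n after a strong block, lower it after a weak one."""
    if accuracy >= advance:
        return n + 1
    if accuracy < fallback:
        return max(1, n - 1)
    return n


# Toy 2-back block; each stream uses integer codes 0-7 for its eight stimuli.
positions = [3, 5, 3, 1, 3, 6, 2, 6]  # visuospatial stream
letters = [0, 4, 2, 4, 7, 4, 7, 1]    # auditory stream
visual_responses = nback_targets(positions, 2)  # responds perfectly to the visual stream
audio_responses = [False] * len(letters)        # never reports an auditory match
block_accuracy = (stream_accuracy(positions, visual_responses, 2)
                  + stream_accuracy(letters, audio_responses, 2)) / 2
print(block_accuracy, next_level(2, block_accuracy))
```

In this toy block the participant is perfect on the visuospatial stream but misses every auditory match, so block accuracy is 0.75; that falls between the two hypothetical thresholds, and the level stays at 2-back.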

SI Materials and Methods

Tasks.

RAPM.

Raven’s Advanced Progressive Matrices (RAPM) is a test of inductive reasoning (37, 38). Each problem has nine geometric patterns arranged in a 3 × 3 matrix according to an unknown set of rules. The bottom-right pattern is always missing, and the objective is to select the correct missing pattern from a set of eight options. We followed the protocol designed by Jaeggi et al. (10) to create parallel versions of the RAPM, resulting in the RAPM-A and -B, each consisting of 18 problems.

BOMAT.

The Bochumer Matrices Test (BOMAT) is similar to the RAPM but shows less of a ceiling effect among university participants (8, 10). Each problem has 15 geometric patterns arranged in a 5 × 3 matrix, and the missing pattern can occur in any location. Participants must choose the one correct pattern from six options. We followed the protocol designed by Jaeggi et al. (10) to create parallel versions of the test, resulting in the BOMAT-A and -B, each consisting of 27 problems.

Questionnaires.

Theories of Intelligence Scale.

The Theories of Intelligence Scale (34) measures beliefs regarding the malleability of intelligence. This six-point scale has eight questions that can be answered from strongly agree to strongly disagree.

Need for Cognition Scale.

The Need for Cognition Scale measures “the tendency for an individual to engage in and enjoy thinking” (41). This nine-point scale has 18 questions that can be answered from very strong agreement to very strong disagreement.

Training Task.

Dual n-back task.

The dual n-back task is commonly used in cognitive training research (7, 8, 10). In this task, participants were presented with two streams of information: auditory and visuospatial. There were eight stimuli per modality, presented at a rate of 3 s per stimulus. For each stream, participants decided whether the current stimulus matched the stimulus that was presented n items earlier. Our n-back task was an adaptive version in which the level of n changed as performance increased or decreased within each block. This task is freely available online at brainworkshop.sourceforge.net/.

Recruitment.

Participants self-selected into either the placebo or the control group by sending an email in response to one of the two flyers presented in the main text (Fig. 1). Flyers were placed throughout buildings on the George Mason University Fairfax campus and were evenly distributed within each building; that is, if a placebo flyer was placed in a building, a control flyer was also placed in it. Flyers were replaced weekly to ensure that pull tags were always available. We also responded to all emails in an identical manner. Specifically, when a participant emailed to say that they were interested in either study, we responded with the following:

“Thank you for your email and interest in participating. To complete this experiment, you will need to be available for two sessions that occur on back to back days (e.g., Monday and Tuesday). Please allow up to 3 hours for session one and up to 2 hours for session two. We currently have the following sets of sessions available over the next two weeks. Please let us know what sets of times work for you so that we can schedule you. If you have any questions, feel free to email us back.”

When participants emailed back with their available time, we then responded with this email:

“Thank you for agreeing to participate. You are scheduled to complete session one at [TIME AND DATE HERE] and session two at [TIME AND DATE HERE] in [BUILDING NAME AND ROOM NUMBER]. [RESEARCHER’S NAME] will be working with you at both sessions. Please arrive on time, use the restroom beforehand, and turn off your cell phone for the duration of the experiment. A break will be offered during session one. If you have any questions, please email us back. Thank you and have a nice day.”

Procedure.

All participants read and signed an informed consent form before beginning the experiment. Importantly, the consent form did not mention the goal of the study. Additionally, all researchers, who were already blind to the goal of the experiment, were instructed not to talk to the participants about anything other than the experiment itself (i.e., the instructions) so as not to introduce any additional biases. On day 1, participants completed the RAPM-A and BOMAT-A, took a short break, and then completed ∼1 h of the dual n-back training task. On day 2, participants completed the RAPM-B and BOMAT-B, then the Theories of Intelligence Scale, the Need for Cognition Scale, and a short demographic survey.

RAPM to IQ Conversion.

We used two different methods to determine that our RAPM improvement (d = 0.50) equated to a 5- to 10-point increase in IQ scores, and we widened the reported range to 5–10 points to allow for estimation error. (i) Following the approach of the Au et al. (2015; ref. 25) meta-analysis, we multiplied the SD increase of 0.50 by 15, the SD of common IQ tests: 0.50 × 15 = 7.5 IQ points. (ii) Using Table APM36 from the RAPM Manual (40), which maps RAPM scores to IQ scores, an increase of 0.50 SD units equated to an approximate increase of 6–8 IQ points.
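Method (i) can be reproduced from the summary statistics in Table S4. The sketch below assumes that d is the pre-to-post mean gain divided by the root mean square of the pretraining and posttraining SDs; the text does not state the denominator, but this choice recovers the reported d = 0.50 (RAPM) and d = 0.39 (BOMAT) for the placebo group.

```python
from math import sqrt

IQ_SD = 15  # SD of common IQ tests, as used in method (i)


def prepost_d(mean_pre: float, sd_pre: float, mean_post: float, sd_post: float) -> float:
    """Standardized pre-to-post gain; the averaged-variance denominator is an assumption."""
    return (mean_post - mean_pre) / sqrt((sd_pre ** 2 + sd_post ** 2) / 2)


# Placebo-group means (SDs) from Table S4.
d_rapm = prepost_d(14.72, 2.25, 15.76, 1.88)
d_bomat = prepost_d(18.04, 3.52, 19.32, 3.05)
iq_gain = d_rapm * IQ_SD  # method (i): SD gain times the IQ-scale SD

print(f"RAPM d = {d_rapm:.2f}, BOMAT d = {d_bomat:.2f}, RAPM gain = {iq_gain:.1f} IQ points")
```

The resulting 7.5-point estimate sits inside the 6–8 point range from method (ii), so a 5–10 point band covers both estimates.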

Results

All analyses were conducted by using mixed-effects linear regression with restricted maximum likelihood. As expected, both groups’ training performance improved over time [B = 0.016, SE = 0.002, t(48) = 10.5, P < 0.001]. All participants began at 2-back; 18% did not advance beyond 2-back, 68% finished training at 3-back, and 14% at 4-back. Training performance did not differ by group [B = −0.002, SE = 0.002, t(48) = −1.00, P = 0.321]: Both the placebo and control groups completed training with a similar degree of success. A placebo effect can occur in the absence of training differences between groups.

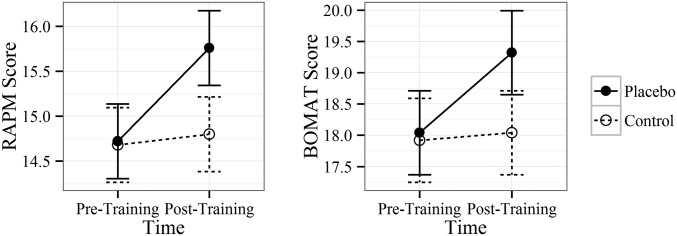

The placebo effect does, however, necessitate an effect on the outcome of interest. Pretraining and posttraining fluid intelligence were measured with Raven’s Advanced Progressive Matrices (RAPM) and the Bochumer Matrices Test (BOMAT), two tests of inductive reasoning widely used to assess Gf (36–38). No baseline differences were found between groups on either test [t(48) = −0.063, P = 0.939 and t(48) = −0.123, P = 0.938, respectively]. We observed a main effect of time on test performance, with scores on both intelligence tests increasing from pretraining to posttraining. These main effects of time on both intelligence measures, however, were qualified by an interaction with group [RAPM: B = 0.65, SE = 0.19, t(48) = 3.41, P = 0.0013, d = 0.98; and BOMAT: B = 0.82, SE = 0.18, t(48) = 4.63, P < 0.0001, d = 1.34]. Specific contrasts showed that these moderation effects were entirely driven by the participants in the placebo group, the only individuals in the study to score significantly higher on posttraining than on pretraining sessions for both the RAPM [B = −1.04, SE = 0.19, t(48) = −5.46, P < 0.0001, d = 0.50] and the BOMAT [B = −1.28, SE = 0.18, t(48) = −7.22, P < 0.0001, d = 0.39]. Extrapolating RAPM to IQ (25, 39, 40), these improvements equate to a 5- to 10-point increase on a standardized IQ test (mean = 100, SD = 15; SI Materials and Methods). In contrast, the pretraining and posttraining scores for participants in the control group were statistically indistinguishable, both for the RAPM [B = −0.12, SE = 0.19, t(48) = −0.63, P = 0.922] and for the BOMAT [B = −0.12, SE = 0.18, t(48) = −0.68, P = 0.905]. The results are summarized in Tables S2–S4 and depicted in Fig. 2. Interestingly, pooling the data across groups to form one sample (combining the placebo and control groups) revealed significant posttraining gains [B = 0.41, SE = 0.11, t(49) = 3.90, P = 0.0003, d = 0.28 (RAPM); and B = 0.50, SE = 0.15, t(49) = 4.69, P < 0.0001, d = 0.21 (BOMAT)]. That is, the effect in the placebo group was strong enough to overcome the null effect in the control group when the data were pooled.
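Because the design is balanced and complete (two groups of 25, each tested pretraining and posttraining), the fixed-effect estimates of a saturated time × group model reduce to the cell means, so the interaction equals the difference between the two groups’ mean pre-to-post gains. The sketch below recovers the interaction magnitudes in Tables S2 and S3 (0.92 for the RAPM, 1.16 for the BOMAT) from the Table S4 means. The sign in those tables depends on how time and group were coded, and the sketch does not reproduce SEs or t values, which require participant-level data.

```python
# Cell means (pretraining, posttraining) by test and group, from Table S4.
CELL_MEANS = {
    "RAPM": {"control": (14.68, 14.80), "placebo": (14.72, 15.76)},
    "BOMAT": {"control": (17.92, 18.04), "placebo": (18.04, 19.32)},
}


def mean_gain(pre_post: tuple) -> float:
    """Posttraining minus pretraining mean for one group."""
    pre, post = pre_post
    return post - pre


def interaction_estimate(groups: dict) -> float:
    """Difference in mean gains (placebo minus control): the time x group contrast."""
    return mean_gain(groups["placebo"]) - mean_gain(groups["control"])


for test, groups in CELL_MEANS.items():
    print(f"{test}: placebo gain {mean_gain(groups['placebo']):.2f}, "
          f"control gain {mean_gain(groups['control']):.2f}, "
          f"interaction {interaction_estimate(groups):.2f}")
```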

Table S2.

Regression results for RAPM predicted by time and group

| Time or group | B | SE | df | t | P |
|---|---|---|---|---|---|
| Intercept | 14.80 | 0.42 | 53.3 | 35.6 | <0.0001*** |
| Time | −0.12 | 0.19 | 48 | −0.63 | 0.5320 |
| Group | 0.96 | 0.59 | 53.3 | 1.63 | 0.1085 |
| Time × group | −0.92 | 0.27 | 48 | −3.41 | 0.0013** |

**P < 0.01; ***P < 0.001.

Table S4.

Means and SDs for RAPM and BOMAT

| Group | RAPM pretraining | RAPM posttraining | BOMAT pretraining | BOMAT posttraining |
|---|---|---|---|---|
| Control | 14.68 (2.27) | 14.80 (1.89) | 17.92 (3.38) | 18.04 (3.47) |
| Placebo | 14.72 (2.25) | 15.76 (1.88) | 18.04 (3.52) | 19.32 (3.05) |

Values are means with SDs in parentheses.

Fig. 2.

Estimated marginal means of the RAPM (Left) and BOMAT (Right) scores by time and group; error bars represent SEs.

Table S3.

Regression results for BOMAT predicted by time and group

| Time or group | B | SE | df | t | P |
|---|---|---|---|---|---|
| Intercept | 18.04 | 0.67 | 49.70 | 26.85 | <0.0001*** |
| Time | −0.12 | 0.18 | 48 | −0.68 | 0.50 |
| Group | 1.28 | 0.95 | 49.70 | 1.35 | 0.18 |
| Time × group | −1.16 | 0.25 | 48 | −4.63 | <0.0001*** |

***P < 0.001.

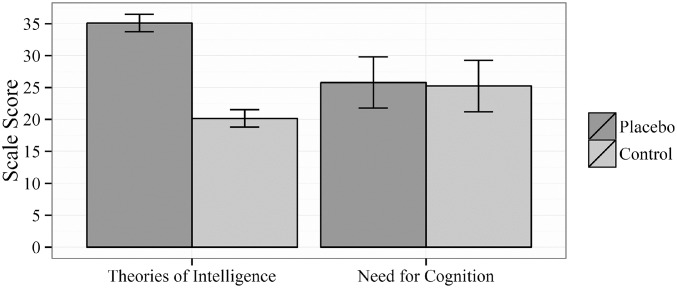

We also observed differences between groups for scores on the Theories of Intelligence scale, which measures beliefs regarding the malleability of intelligence (34). The participants in the placebo group reported substantially higher scores on this index compared with controls [B = 14.96, SE = 1.93, t(48) = 7.75, P < 0.0001, d = 2.15], indicating a greater confidence that intelligence is malleable. These findings indicate that our manipulation via recruitment flyer produced significantly different groups with regard to expectancy. We did not detect differences in Need for Cognition scores (41) [B = 0.56, SE = 5.67, t(48) = 0.10, P = 0.922] (Fig. 3). Together, these results support the interpretation that participants self-selected into groups based on differing expectations.

Fig. 3.

Estimated marginal means of the Theories of Intelligence and Need for Cognition scales by group; error bars represent SEs.

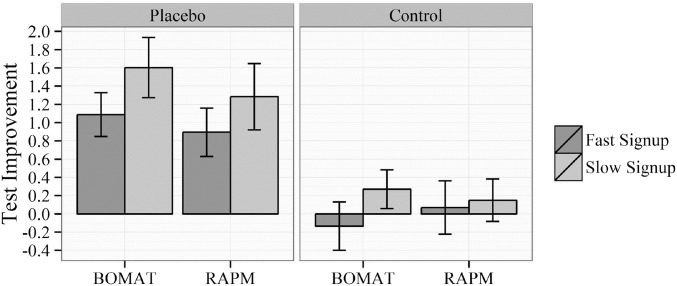

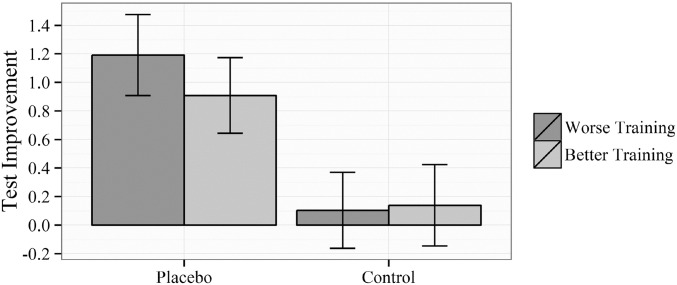

We also tested whether the time it took participants to volunteer for our study influenced the aforementioned findings. Specifically, we noticed that the placebo condition appeared to fill faster than the control condition did (366 vs. 488 h). It is possible that speed of sign-up represents another measure of, or perhaps gradations within, the strength of the placebo effect. However, volunteer response time did not have a significant effect on improvement on either the RAPM [B = 0.04, SE = 0.17, t(46) = 0.23, P = 0.819] or the BOMAT [B = 0.20, SE = 0.16, t(46) = 1.28, P = 0.201]. Volunteer response time also failed to explain the improvement observed within the placebo group alone, on the RAPM [B = 0.20, SE = 0.20, t(23) = 0.95, P = 0.341] and the BOMAT [B = 0.26, SE = 0.22, t(23) = 1.22, P = 0.237] (Fig. 4).

Fig. 4.

Improvement in test scores from pretraining to posttraining by group and speed of participant sign-up, split into fast and slow (z = −1 and z = 1 of minutes since experiment onset, respectively). Error bars represent SE.

Researchers have hypothesized that a training dosage effect may exist, such that the quality of performance on a training task is associated with the degree of subsequent skill transfer (7). However, as discussed previously, no pre–post improvements occurred within the control group, even though all participants performed equally well on the training task. Consequently, training performance did not predict subsequent performance improvement on its own [B = 0.017, SE = 0.20, t(46) = 0.09, P = 0.930], nor did it moderate the effect of group on the observed test performance improvements [B = −0.16, SE = 0.28, t(46) = −0.58, P = 0.567] (Fig. 5). Therefore, our data do not support the dosage-effect hypothesis.

Fig. 5.

Improvement in test scores from pretraining to posttraining by group and performance on the training task, split into low and high performance (z = −1 and z = 1 of training performance, respectively). Error bars represent SE.

Discussion

We provide strong evidence that placebo effects from overt and suggestive recruitment can affect cognitive training outcomes. These findings support the concerns of many researchers (24, 27, 29–32), who suggest that placebo effects may underlie positive outcomes seen in the cognitive-training literature. By capitalizing on the self-selecting tendencies of participants with strong positive beliefs about the malleability of intelligence, we were able to induce an improvement in Gf after 1 h of working memory training. We acknowledge that the flyer itself could have induced the positive beliefs about the malleability of intelligence. Either way, these findings present an alternative explanation for effects reported in the cognitive-training literature and in the brain-training industry, demonstrating the need to account for placebo effects in future research.

Importantly, we do not claim that our study revealed a population of individuals whose intelligence was truly changed by the training that they received in our study. It is extremely unlikely that individuals in the placebo group increased their IQ by 5–10 points with 1 h of cognitive training. Three elements of our design and results support this position. First, a single, 1-h training session is far less than the traditional 15 or more hours spread across weeks commonly used in training studies (8, 10, 23). We argue that the use of a very short training period was sufficient to avoid a true training effect. Second, we observed similar baseline scores on both of the fluid intelligence tests between groups, suggesting that both groups were equally engaged in the experiment. Thus, initial nonequivalence between groups or regression artifacts are likely absent from our design. Third, equivalent performance on the training task between groups suggests that the differences in posttraining intelligence were not the (direct) result of training. If groups showed dramatically different training effects on the dual n-back task, it might follow that one group showed higher posttraining scores on the test of general cognitive ability.

Therefore, to our knowledge, our study is the first to explicitly model the main effect of expectancy while controlling for the effect of training. That is, because our design was unlikely to have produced true training effects, our positive effects on Gf are solely the result of overt and suggestive recruitment. Although posttraining gains in fluid intelligence are typically discussed in terms of a main effect of training (7, 8, 10, 11), we argue that such studies cannot rule out an interaction between training and effects from overt and suggestive recruitment. Furthermore, based on the evidence we reviewed above, we are unaware of any previous studies that obtained a positive main effect of training in the absence of expectation or self-selection. Indeed, to our knowledge, the rigor of double-blind randomized clinical trials is nonexistent in this research area.

Moving forward, we suggest that researchers exercise care in their design of cognitive training studies. Our findings raise philosophical concerns and questions that merit discussion within the scientific field so that this area of inquiry can advance. We discuss two different schools of thought about how to recruit participants and design training studies. We hope that this work can begin a conversation leading to a consensus on how to best design future research in this field.

First, following in the tradition of randomized controlled trials used in medicine, one approach suggests that recruitment and study design should be as covert as possible (29, 32). Specifically, several research groups have argued for the need to remove study-specific information from the recruitment and briefing procedures, avoid providing the goals of the research to participants, and omit mention of any anticipated outcomes (29, 32, 33). The purpose of such a design would be to minimize any confounding effects (e.g., placebo or expectation). Our earlier review of the Au et al. (25) meta-analysis revealed two studies that followed this approach.

Alternatively, the second approach suggests that we should only recruit participants who believe that the training will work and that we should do this using overt methods. Such a screening process would eliminate participants whose prior beliefs would prevent an otherwise effective treatment from having an effect. That is, if a participant does not care about the training, puts little effort in, and/or is motivated solely by something else (e.g., money), they are not likely to improve with any intervention, including cognitive training. Although positive expectancies would be overrepresented in such an overtly recruited sample, proper use of active controls should allow for training effects to be isolated from expectation. This view is in line with some from the medical domain who argue that researchers can make use of participant expectation to better test treatment effects in randomized controlled trials (42). This view is also in line with some from the psychotherapy domain who argue that motivation is important for treatment effectiveness (43).

One interesting consideration is the likelihood that these two design approaches recruit from different subpopulations. Dweck (34) has shown that individuals hold implicit beliefs regarding whether or not intelligence is malleable and that these beliefs predict a number of learning and academic outcomes. Thus, it is possible that the benefits from cognitive training occur only in individuals who believe the training will be effective. That being said, this possibility is not applicable to our data because our design eliminated a main effect of training. It will be important in future work to investigate the relation between expectation and processes of learning during cognitive training.

Our data do not allow us to understand the field as a whole; instead, they allow us to understand existing limitations of current research that require further exploration. To wit, we identified expectancy as a major factor that needs to be considered for a fuller understanding of training effects. More rigorous designs, such as double-blind, block-randomized controlled trials that measure multiple outcomes, may offer a better “test” of these cognitive training effects. As Boot et al. (29) pointed out, blinding participants to cognitive training may be the biggest obstacle in these designs, because participants become aware of the goals of the study. Furthermore, assessing expectancy and personal theories of intelligence malleability (cf. ref. 34) before randomization, to ensure adequate representation in all groups, would allow us to better assess the true training effects and the potential for expectancy to produce effects alone or in interaction with training. Finally, researchers should use more measures of Gf to determine whether positive outcomes are the result of latent changes or changes in test-specific performance. We are aware of no study to date, including the present one, that uses these rigorous methods. (We include the present one by design: our goal was to determine whether a main effect of expectation existed using methods similar to those of published research.) By using such methods, we can begin to understand whether true training effects exist and are generalizable to samples (and perhaps populations) beyond those who expect to improve.

Conclusion

Our findings have important implications for cognitive-training research and the brain-training industry at large. Previous cognitive-training results may have been inadvertently influenced by placebo effects arising from recruitment or design. For the field of cognitive training to advance, future work should report recruitment information and include the Theories of Intelligence Scale (34) to determine the relation between observed effects of training and effects of expectancy. The brain-training industry may be advised to temper its claims until the role of placebo effects is better understood. Many commercial brain-training websites make explicit claims about the effectiveness of their training that are not currently supported by many in the scientific community (ref. 44; cf. ref. 45). Consistent with that concern, one of the largest brain-training companies in the world agreed in January 2016 to pay $2 million to settle Federal Trade Commission charges of deceptive advertising about the benefits of its programs (46). The deception (exaggerated claims of training efficacy) may be fueling a placebo effect that contaminates actual brain-training effects.

We argue that our findings also have broad implications for the advancement of the science of human cognition. In a recent replication effort published in Science, only 36% (35 of 97) of the psychological science studies (including those that fall under the broad category of neuroscience) were successfully replicated (47). Failure to control or account for placebo effects could have contributed to some of these failed replications. Our goal in any experiment should be to take every possible step to ensure that the effects we seek are the result of manipulated interventions, not of confounds that go unreported or undetected.

Acknowledgments

This work was supported by George Mason University; the George Mason University Provost PhD Awards; Office of Naval Research Grant N00014-14-1-0201; and Air Force Office of Scientific Research Grant FA9550-10-1-0385.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

Data deposition: The data have been archived on Figshare, https://figshare.com/articles/Placebo_csv/2062479.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1601243113/-/DCSupplemental.

References

- 1. Selk J. Amidst billion-dollar brain fitness industry, a free way to train your brain. Forbes. 2013. Available at www.forbes.com/sites/jasonselk/2013/08/13/amidst-billion-dollar-brain-fitness-industry-a-free-way-to-train-your-brain/#6b3457647d41. Accessed May 8, 2016.

- 2. Shapiro AK. Handbook of Psychotherapy and Behavior Change. Wiley; New York: 1971.

- 3. Turner JA, Deyo RA, Loeser JD, Von Korff M, Fordyce WE. The importance of placebo effects in pain treatment and research. JAMA. 1994;271(20):1609–1614.

- 4. Bouchard TJ, Jr, Lykken DT, McGue M, Segal NL, Tellegen A. Sources of human psychological differences: The Minnesota Study of Twins Reared Apart. Science. 1990;250(4978):223–228. doi: 10.1126/science.2218526.

- 5. Plomin R, Pedersen NL, Lichtenstein P, McClearn GE. Variability and stability in cognitive abilities are largely genetic later in life. Behav Genet. 1994;24(3):207–215. doi: 10.1007/BF01067188.

- 6. Larsen L, Hartmann P, Nyborg H. The stability of general intelligence from early adulthood to middle-age. Intelligence. 2008;36(1):29–34.

- 7. Jaeggi SM, Buschkuehl M, Jonides J, Perrig WJ. Improving fluid intelligence with training on working memory. Proc Natl Acad Sci USA. 2008;105(19):6829–6833. doi: 10.1073/pnas.0801268105.

- 8. Jaeggi SM, et al. The relationship between n-back performance and matrix reasoning—Implications for training and transfer. Intelligence. 2010;38(6):625–635.

- 9. Jaeggi SM, Buschkuehl M, Jonides J, Shah P. Short- and long-term benefits of cognitive training. Proc Natl Acad Sci USA. 2011;108(25):10081–10086. doi: 10.1073/pnas.1103228108.

- 10. Jaeggi SM, Buschkuehl M, Shah P, Jonides J. The role of individual differences in cognitive training and transfer. Mem Cognit. 2014;42(3):464–480. doi: 10.3758/s13421-013-0364-z.

- 11. Rudebeck SR, Bor D, Ormond A, O’Reilly JX, Lee AC. A potential spatial working memory training task to improve both episodic memory and fluid intelligence. PLoS One. 2012;7(11):e50431. doi: 10.1371/journal.pone.0050431.

- 12. Strenziok M, et al. Neurocognitive enhancement in older adults: Comparison of three cognitive training tasks to test a hypothesis of training transfer in brain connectivity. Neuroimage. 2014;85(Pt 3):1027–1039. doi: 10.1016/j.neuroimage.2013.07.069.

- 13. Neisser U, et al. Intelligence: Knowns and unknowns. Am Psychol. 1996;51(2):77–101.

- 14. Watkins MW, Lei P-W, Canivez GL. Psychometric intelligence and achievement: A cross-lagged panel analysis. Intelligence. 2007;35(1):59–68.

- 15. Schmidt FL, Hunter JE. The validity and utility of selection methods in personnel psychology: Practical and theoretical implications of 85 years of research findings. Psychol Bull. 1998;124(2):262–275.

- 16. Gottfredson LS. Intelligence: Is it the epidemiologists’ elusive “fundamental cause” of social class inequalities in health? J Pers Soc Psychol. 2004;86(1):174–199. doi: 10.1037/0022-3514.86.1.174.

- 17. Gottfredson LS, Deary IJ. Intelligence predicts health and longevity, but why? Curr Dir Psychol Sci. 2004;13(1):1–4.

- 18. Whalley LJ, Deary IJ. Longitudinal cohort study of childhood IQ and survival up to age 76. BMJ. 2001;322(7290):819. doi: 10.1136/bmj.322.7290.819.

- 19. O’Toole BI, Stankov L. Ultimate validity of psychological tests. Pers Individ Dif. 1992;13(6):699–716.

- 20. Ceci SJ, Williams WM. Schooling, intelligence, and income. Am Psychol. 1997;52(10):1051–1058.

- 21. Strenze T. Intelligence and socioeconomic success: A meta-analytic review of longitudinal research. Intelligence. 2007;35(5):401–426.

- 22. Willis SL, et al.; ACTIVE Study Group. Long-term effects of cognitive training on everyday functional outcomes in older adults. JAMA. 2006;296(23):2805–2814. doi: 10.1001/jama.296.23.2805.

- 23. Harrison TL, et al. Working memory training may increase working memory capacity but not fluid intelligence. Psychol Sci. 2013;24(12):2409–2419. doi: 10.1177/0956797613492984.

- 24. Redick TS, et al. No evidence of intelligence improvement after working memory training: A randomized, placebo-controlled study. J Exp Psychol Gen. 2013;142(2):359–379. doi: 10.1037/a0029082.

- 25. Au J, et al. Improving fluid intelligence with training on working memory: A meta-analysis. Psychon Bull Rev. 2015;22(2):366–377. doi: 10.3758/s13423-014-0699-x.

- 26. Karbach J, Verhaeghen P. Making working memory work: A meta-analysis of executive-control and working memory training in older adults. Psychol Sci. 2014;25(11):2027–2037. doi: 10.1177/0956797614548725.

- 27. Dougherty MR, Hamovitz T, Tidwell JW. Reevaluating the effectiveness of n-back training on transfer through the Bayesian lens: Support for the null. Psychon Bull Rev. 2016;23(1):306–316. doi: 10.3758/s13423-015-0865-9.

- 28. Greenwood PM, Parasuraman R. The mechanisms of far transfer from cognitive training: Review and hypothesis. Neuropsychology. November 16, 2015. doi: 10.1037/neu0000235.

- 29. Boot WR, Simons DJ, Stothart C, Stutts C. The pervasive problem with placebos in psychology: Why active control groups are not sufficient to rule out placebo effects. Perspect Psychol Sci. 2013;8(4):445–454. doi: 10.1177/1745691613491271.

- 30. Melby-Lervåg M, Hulme C. Is working memory training effective? A meta-analytic review. Dev Psychol. 2013;49(2):270–291. doi: 10.1037/a0028228.

- 31. Melby-Lervåg M, Hulme C. There is no convincing evidence that working memory training is effective: A reply to Au et al. (2014) and Karbach and Verhaeghen (2014). Psychon Bull Rev. 2016;23(1):324–330. doi: 10.3758/s13423-015-0862-z.

- 32. Shipstead Z, Redick TS, Engle RW. Is working memory training effective? Psychol Bull. 2012;138(4):628–654. doi: 10.1037/a0027473.

- 33. Boot WR, Blakely DP, Simons DJ. Do action video games improve perception and cognition? Front Psychol. 2011;2:226. doi: 10.3389/fpsyg.2011.00226.

- 34. Dweck C. Self-Theories: Their Roles in Motivation, Personality, and Development. Psychology Press; New York: 2000.

- 35. Evans FJ. The placebo response in pain reduction. Adv Neurol. 1974;4:289–296.

- 36. Hossiep R, Turck D, Hasella M. Bochumer Matrizentest (BOMAT) Advanced. Hogrefe; Goettingen, Germany: 1999.

- 37. Raven JC, Court JH, Raven J, Kratzmeier H. Advanced Progressive Matrices. Oxford Psychologists Press; Oxford: 1994.

- 38. Raven JC, Court JH. Raven’s Progressive Matrices and Vocabulary Scales. Oxford Psychologists Press; Oxford: 1998.

- 39. Frey MC, Detterman DK. Scholastic assessment or g? The relationship between the Scholastic Assessment Test and general cognitive ability. Psychol Sci. 2004;15(6):373–378. doi: 10.1111/j.0956-7976.2004.00687.x.

- 40. Raven J, Raven JC, Court JH. Manual for the Raven’s Progressive Matrices and Vocabulary Scales. Oxford Psychologists Press; Oxford: 1998.

- 41. Cacioppo JT, Petty RE. The need for cognition. J Pers Soc Psychol. 1982;42(1):116–131.

- 42. Torgerson DJ, Klaber-Moffett J, Russell IT. Patient preferences in randomised trials: Threat or opportunity? J Health Serv Res Policy. 1996;1(4):194–197. doi: 10.1177/135581969600100403.

- 43. Ryan RM, Lynch MF, Vansteenkiste M, Deci EL. Motivation and autonomy in counseling, psychotherapy, and behavior change: A look at theory and practice. Couns Psychol. 2010;39(2):193–260.

- 44. Stanford Center on Longevity. A consensus on the brain training industry from the scientific community. 2014. Available at longevity3.stanford.edu/blog/2014/10/15/the-consensus-on-the-brain-training-industry-from-the-scientific-community-2/. Accessed May 8, 2016.

- 45. Cognitive Training Data. Open letter response to the Stanford Center on Longevity. 2015. Available at www.cognitivetrainingdata.org/. Accessed May 8, 2016.

- 46. Federal Trade Commission. Lumosity to pay $2 million to settle FTC deceptive advertising charges for its “brain training” program. 2016. Available at https://www.ftc.gov/news-events/press-releases/2016/01/lumosity-pay-2-million-settle-ftc-deceptive-advertising-charges. Accessed May 8, 2016.

- 47. Open Science Collaboration. Estimating the reproducibility of psychological science. Science. 2015;349(6251):aac4716. doi: 10.1126/science.aac4716.